Open Access

Open Access

ARTICLE

Skill Optimization Algorithm: A New Human-Based Metaheuristic Technique

1 Department of Electrical Engineering, Shahreza Campus, University of Isfahan, Iran

2 Department of Technics, Faculty of Education, University of Hradec Kralove, Czech Republic

* Corresponding Author: Marie Hubalovska. Email:

Computers, Materials & Continua 2023, 74(1), 179-202. https://doi.org/10.32604/cmc.2023.030379

Received 24 March 2022; Accepted 16 May 2022; Issue published 22 September 2022

Abstract

Metaheuristic algorithms are widely used in solving optimization problems. In this paper, a new metaheuristic algorithm called Skill Optimization Algorithm (SOA) is proposed to solve optimization problems. The fundamental inspiration in designing SOA is human efforts to acquire and improve skills. Various stages of SOA are mathematically modeled in two phases, including: (i) exploration, skill acquisition from experts and (ii) exploitation, skill improvement based on practice and individual effort. The efficiency of SOA in optimization applications is analyzed through testing this algorithm on a set of twenty-three standard benchmark functions of a variety of unimodal, high-dimensional multimodal, and fixed-dimensional multimodal types. The optimization results show that SOA, by balancing exploration and exploitation, is able to provide good performance and appropriate solutions for optimization problems. In addition, the performance of SOA in optimization is compared with ten metaheuristic algorithms to evaluate the quality of the results obtained by the proposed approach. Analysis and comparison of the obtained simulation results show that the proposed SOA has a superior performance over the considered algorithms and achieves much more competitive results.Keywords

With the advancement of science and technology, the need for optimization techniques to address the applications and challenges of optimization and achieve optimal designs has become increasingly apparent. The goal in optimization is to find the best solution to a multi-solution problem (known as the optimization problem) [1]. Metaheuristic algorithms are one of the most powerful tools for solving optimization problems. These techniques, which are considered as random optimization approaches, are based on trial-and-error methods, random search, and the use of random operators. Advantages such as simplicity of understanding, ease of implementation, being independent of the type of problem, no need to derivation process, and capability to be used in nonlinear, non-convex, and NP-hard problems have led to the widespread utilization of metaheuristic algorithms [2].

Metaheuristic algorithms have the same optimization process in that they start by generating a number of candidate solutions in the problem-solving space at random. These solutions are then improved in an iterative process. Finally, the best improved candidate solution is introduced as the solution to the problem [3]. Due to the random nature of the search process in metaheuristic algorithms, the solutions obtained by these techniques are not guaranteed to be the global optimal solution [4]. However, these solutions are close to the global optimum. Therefore, these acceptable solutions are known as quasi-optimal solutions. A quasi-optimal solution is, at best, equal to the global optimum [5]. Attempts to find better quasi-optimal solutions have led to the introduction and design of numerous metaheuristic algorithms [6–11]. Metaheuristic algorithms have wide applications in various fields of science, including: Energy carriers [12,13], feature selection problem [14], parameter estimation [15], electrical engineering [16–21], protection [22], and energy management [23–26].

The main idea in designing metaheuristic algorithms is inspired by nature, wildlife, behaviors of animal, birds, insects, aquatic animals in nature, physical concepts and laws, biological sciences, game rules, human behaviors, and any type of process that has an evolutionary nature. The birds’ natural behaviors have been the main inspiration for Particle Swarm Optimization (PSO) algorithm [27]. Genetic Algorithm (GA) [28] was inspired by the reproduction process. The concept of gravity force in physics was utilized to obtain Gravitational Search Algorithm (GSA) [29]. The interactions between learners and teachers have been the main inspiration for Teaching-Learning Based Optimization (TLBO) [30], and Volleyball Premier League (VPL) method was inspired by the volleyball game [31].

The main research question is that despite the countless metaheuristic algorithms that have been designed so far, is there still a need to develop newer algorithms? There is the answer to this question in the concept of No Free Lunch (NFL) theorem [32], which states: The effective performance of an algorithm in solving a group of optimization problems does not guarantee the similar performance of that algorithm in solving other optimization problems. In fact, the NFL states that there is no presupposition as to whether or not an algorithm can be implemented successfully on an optimization problem, and that a particular algorithm cannot be claimed to be the best choice for all optimization applications. The NFL theorem motivates researchers to develop newer and more efficient algorithms in order to find better solutions for various optimization problems. The NFL theorem motivated the authors of this study to design a new algorithm to solve optimization problems more effectively to provide better quasi-optimal solutions.

Innovation and contribution of this paper is in designing a new metaheuristic algorithm called Skill Optimization Algorithm (SOA) to be utilized for solving optimization problems in various fields of science. The main inspiration employed in designing SOA is the human activities and efforts that seeks to acquire and improve skills. The steps of implementing SOA are mathematically modeled in two phases of exploration and exploitation, which are based on skill learning and individual practice, respectively. Twenty-three sets of standard benchmark functions of unimodal and multimodal types have been used for evaluating the ability of SOA to achieve optimal solutions. Moreover, the results obtained by SOA are compared with the performance of ten well-known metaheuristic algorithms.

The rest of this article is structured as follows: In Section 2, literature review is provided. The proposed SOA is introduced in Section 3. Simulation studies and results are presented in Section 4. Finally, conclusions and several suggestions for future studies are given in Section 5.

Metaheuristic algorithms are divided into five groups based on the source of inspiration used in the design: Swarm-based, evolutionary-based, physics-based, game-based, and human-based algorithms.

Swarm-based algorithms are inspired by nature, and the swarming behaviors of animals, birds, and other living things. PSO is one of the most popular optimization methods, which was developed based on modeling the movement of birds and fishes in search of food. The ability of ants to discover the shortest path between food sources and nests was a central idea in design of Ant Colony Optimization (ACO) technique [33]. The strategy of grey wolves in hunting and attacking prey was employed in the Grey Wolf Optimizer (GWO) design [34]. The humpback whale net-bubble hunting strategy was a major source of inspiration for designing Whale Optimization Algorithm (WOA) [35]. The strategy of pelicans in hunting and trapping prey was the main inspiration of Pelican Optimization Algorithm (POA) [36]. Some other swarm-based algorithms are: Cat and Mouse Based Optimizer (CMBO) [37], Good Bad Ugly Optimizer (GBUO) [38], Marine Predator Algorithm (MPA) [39], Tasmanian Devil Optimization (TDO) [40], Mutated Leader Algorithm (MLA) [41], Tunicate Search Algorithm (TSA) [42], Northern Goshawk Optimization (NGO) [43], Donkey Theorem Optimizer (DTO) [44], Rat Swarm Optimization (RSO) [45], All Members Based Optimizer (AMBO) [46], Red Fox Optimization (RFO) [47], Best Members Based Optimizer (MBMBO) [48], and Mixed Leader Based Optimizer (MLBO) [49].

Evolutionary-based algorithms are inspired by the concepts of natural selection, biology, and genetics. GA and Differential Evolution (DE) [50] are among the most widely used and well-known evolutionary algorithms, inspired by the process of reproduction, Darwin’s theory of evolution, and random operators such as selection, crossover, and mutation. Some other evolutionary-based algorithms are: Average and Subtraction-Based Optimizer (ASBO) [51], Genetic Programming (GP) [52], Search Step Adjustment Based Algorithm (SSABA) [53], Evolutionary Programming (EP) [54], Selecting Some Variables to Update-Based Algorithm (SSVUBA) [55], and Artificial Immune System (AIS) [56].

Physics-based algorithms are inspired by simulating processes, laws, and concepts in physics. Simulated Annealing (SA) is one of the most famous optimization algorithms that is inspired by the physical process of melting and cooling metals known as annealing [57]. Simulation of the law of curvature and spring force was the basis of Spring Search Algorithm (SSA) [58]. Simulation of momentum and collision of objects was the main inspiration of Momentum Search Algorithm (MSA) [59]. Considering gravitational force and laws of motion between objects at different distances, GSA was proposed. Some of the other physics-based algorithms are: Multi-Verse Optimizer (MVO) [60], Binary Spring Search Algorithm (BSSA) [61], Equilibrium Optimizer (EO) [62], and Henry Gas Solubility Optimization (HGSO) [63].

Game-based algorithms are inspired by modeling the rules of various individual and team games, the behavior of players, coaches, and referees, as well as holding competitions. Simulating the organization of the football league as well as the behavior of the players, and the interactions of the clubs with each other was the main idea in the design of Football Game Based Optimization (FGBO) [64]. The players’ effort to put the puzzle pieces together was the major inspiration in Puzzle Optimization Algorithm (POA) design [65]. Hide Object Game Optimizer (HOGO) is an optimization approach developed based on modeling players’ behavior to find a hidden object in the hide object game [66]. Some of other game-based algorithms are: VPL, Darts Game Optimizer (DGO) [67], Binary Orientation Search algorithm (BOSA) [68], Dice Game Optimizer (DGO) [69], Orientation Search algorithm (OSA) [70], and Ring Toss Game Based Optimizer (RTGBO) [71].

Human-based algorithms are designed based on human behaviors, the interactions of people in a community with each other, and the relationships between humans. TLBO is one of the most widely used optimization techniques that is designed based on modeling the space of a classroom with the presence of a teacher and a number of students in two phases of teaching and learning. Interactions between doctor and patients for examination, prevention, and treatment were the main inspiration of Doctor and Patient Optimization (DPO) [72]. Following the people of a society by the leader in order to develop that society was the source of inspiration in designing Following Optimization Algorithm (FOA) [73]. Some other human-based algorithms are: Teamwork Optimization Algorithm (TOA) [74], Group Optimization (GO) [75], Archery Algorithm (AA) [76], and Poor and Rich Optimization (PRO) algorithm [77].

3 Skill Optimization Algorithm

In this section, the proposed Skill Optimization Algorithm (SOA) is introduced and its mathematical modeling is presented. SOA is a population-based method, whose members are human beings who strive to learn and improve their skills. SOA population members are in fact candidate solutions to the given optimization problem. The positions of these members in the search space show the values of the problem decision variables. The positions of SOA members are randomly initialized at the start of the algorithm. The SOA population can be mathematically modeled using a matrix according to Eq. (1).

Here, X is the SOA’s population matrix,

Each member of the population is a candidate solution to the problem. In other words, by placing each member in the problem variables, a value for the objective function is evaluated. Therefore, the values obtained for the objective function can be mathematically modeled using a vector according to Eq. (2).

Here, F is the vector of obtained values for the objective function and

The process of updating population members in SOA is performed in two phases of exploration and exploitation. The exploration phase is based on simulating the process of skill learning from an expert. The exploitation phase is based on simulating skill improvement through individual efforts and activities. In SOA design, the update process is performed in two phases of exploration with the aim of global search in the problem-solving space, and the phase of exploitation with the aim of local search. In the exploration phase, SOA is designed so that SOA members move in the search space under the guidance of different members, and are prevented from moving only in the direction of the best member. This increases the algorithm’s exploration power in accurately scanning the search space and identifying the original optimal area. On the other hand, in the exploitation phase, based on the local search near each member of the population, the algorithm is able to converge to better possible solutions.

3.1 Phase 1: Skill Acquisition from Experts (Exploration)

In the first phase, each SOA member strives to acquire a skill based on the guidance of a community expert member. The quality of each population member is commensurate with the objective function value obtained by that member of the population. The expert member for an SOA member is the member who has better conditions based on the value of the objective function. For each SOA member, all members that have a better objective function value than that member are considered as “experts set”. Among the members of this set, one member is randomly selected as an expert to train the member in question. Therefore, the expert selected to guide the SOA member is not necessarily the best candidate solution. In fact, the best candidate solution is a permanent member of the experts set, for all SOA members. The expert member causes the member of the population to be guided to different positions in the search space by learning the skill, which means global search and exploration ability of the algorithm. The new position calculated for each member of the population is acceptable if it improves the value of the objective function. Therefore, the first phase of the update can be modeled according to the mentioned concepts using Eqs. (3) and (4).

Here,

3.2 Phase 2: Skill Improvement Based on Practice and Individual Effort (Exploitation)

In the second phase, each population member tries to improve the skills acquired in the first phase through individual practice and activity. In SOA, this concept is modeled as local search with the aim of increasing exploitation in such a way that each member, in the neighborhood of its position, seeks better conditions to improve the value of its objective function (which indicates the level of skill). Similar to previous phase, the newly calculated position in this phase is acceptable if it improves the objective function value. The concepts of this phase of SOA updating are mathematically modeled using Eqs. (5) and (6).

Here,

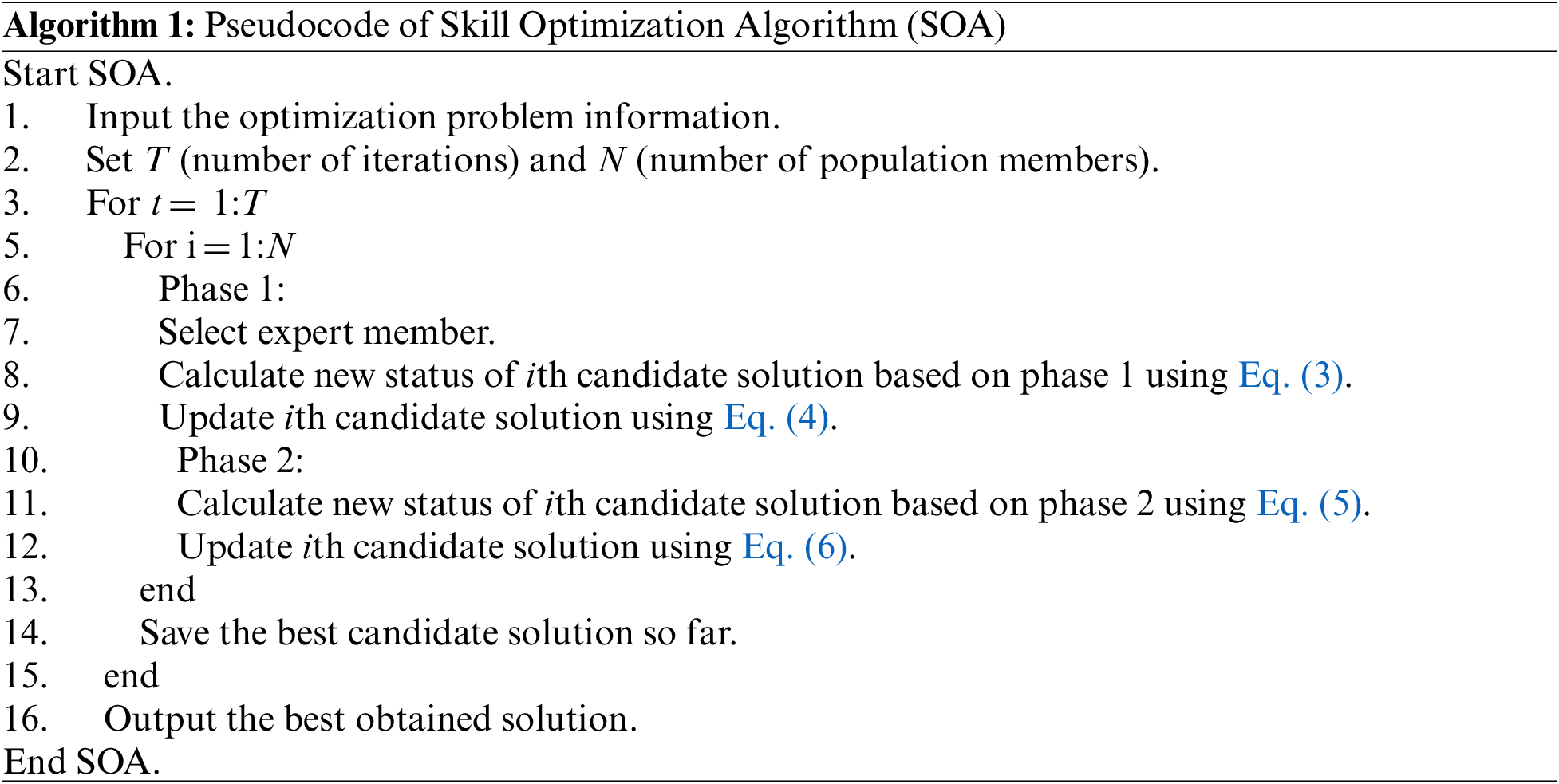

3.3 Repetition Process, Pseudocode, and Flowchart of SOA

The first iteration of SOA is completed after updating all members based on the first and second phases. The algorithm then enters the next iteration and the update process is repeated according to Eqs. (3) to (6). Once fully implemented, SOA puts the best candidate solution in the output. The SOA flowchart is presented in Fig. 1 and its pseudocode is presented in Alg. 1.

Figure 1: Flowchart of skill optimization algorithm (SOA)

3.4 Computational Complexity of SOA

In this subsection, the computational complexity of SOA is studied. The computational complexity of SOA initialization to create the initial population and initial evaluation of the objective function is equal to O(Nm), where N is the number of population members and m is the number of problem variables. The SOA member update process has two phases, while in each iteration and in each phase, the position of each updated member and the objective function are evaluated. Thus, the computational complexity of SOA in the upgrade process is equal to O(2NmT), where T is the maximum number of iterations. Thus, the total complexity of SOA is equal to O(Nm (1 + 2 T)).

4 Simulation Studies and Results

In this section, the performance of the proposed SOA in optimization applications is evaluated. For this purpose, SOA is implemented on a set of twenty-three standard objective functions. This set includes a variety of unimodal, high-dimensional multimodal, and fixed-dimensional multimodal functions [78]. The results obtained by the proposed SOA are compared with ten well-known metaheuristic algorithms including: GA, RFO, PSO, WOA, GWO, MVO, MPA, GSA, TSA, and TLBO. The algorithms selected for comparison are among the most popular metaheuristic algorithms published. The reason for choosing these algorithms for comparison is as follows. The most popular and widely used algorithms: GA and PSO. Highly cited algorithms: GSA, GWO, MVO, and WOA. Recently released and popular algorithms: RFO, MPA, and TSA. The setting values for the control parameters of competing algorithms are specified in Tab. 1. SOA and competing algorithms are employed in twenty independent implementations to optimize F1 to F23 functions, each of which contains 50,000 function evaluations. Optimization results are reported using six statistical indicators: Mean, best, worst, standard deviation (std), median, and rank.

4.1 Intuitive Analysis in Two-Dimensional Search Space

In order to observe the quality of SOA, the first experiment is performed on 10 objective functions including F1, and F3 to F11 in two dimensions, while the number of SOA population members is considered equal to 5. To show the SOA behavior in optimizing these functions, convergence curves, search history curves, and trajectory curves are presented in Fig. 2. These curves show the SOA’s behavior in search of the problem-solving space, solution-finding, and convergence process, how it achieves better solutions based on update processes, and decreases objective function values. Based on this analysis, it is determined that SOA, by improving the initial population, is able to increase the quality of candidate solutions and improve the accuracy of the quasi-optimal solution during the iterations of the algorithm.

Figure 2: Search history curves, trajectory curves, and convergence curves for optimization of different objective functions using SOA

4.2 Evaluation of Unimodal Functions

The first group of objective functions are selected from the unimodal type, including F1 to F7 functions. The results of implementing the proposed SOA and ten competing algorithms on these functions are reported in Tab. 2. Based on the analysis of the simulation results, it is clear that SOA has been able to provide the global optimal solution in optimizing F1, F3, F5, and F6. In optimizing F2, F4, and F7 functions, the SOA is the first best optimizer. Comparison of the optimization results shows that the proposed SOA offers superior and much more competitive performance against the ten mentioned algorithms and has the first rank of the best optimizer for optimizing F1 to F7 unimodal functions.

4.3 Evaluation of High-Dimensional Multimodal Functions

The second group of objective functions are selected from the high-dimensional multimodal category, including F8 to F13 functions. The optimization results of these functions using SOA and ten competing algorithms are presented in Tab. 3. The optimization results show that the proposed SOA has been able to provide the global optimal solution for F9, F10, and F11. In optimizing F8, F12, and F13 functions, the SOA is the first best optimizer. Analysis of the simulation results shows that the proposed SOA has presented superior and acceptable performance against ten compared algorithms and has the first rank of the best optimizer in solving F8 to F13 high-dimensional multimodal functions.

4.4 Evaluation of Fixed-Dimensional Multimodal Functions

The third group of objective functions is selected from the fixed-dimensional multimodal type, including F14 to F23 functions. The results of employing SOA and ten competing algorithms for optimizing these functions are given in Tab. 4. It can be inferred from the optimization results that the proposed SOA achieves the global optimal solution for F14 and F17. Moreover, SOA is the first best optimizer for F15, F21, and F22 functions. The analysis of the results also shows that in cases where the proposed SOA and some competing algorithms result in the same “mean” value, SOA has a lower value for the “std” criterion, which indicates that the performance of SOA is superior. Based on the results obtained by the proposed SOA and ten competing algorithms, one can confirm the effective ability of SOA in solving fixed-dimensional multimodal optimization problems.

The performance of competing algorithms and SOA in optimizing F1 to F23 is presented in the form of boxplots in Fig. 3.

Figure 3: Boxplots of performances for skill optimization algorithm (SOA) and competing algorithms

Reporting the results of implementing optimization algorithms on optimization problems through statistical indicators, provides useful information on the performance of these algorithms. In this subsection, a statistical analysis on the proposed algorithm and the mentioned ten competing algorithms is presented to determine whether the superiority of the proposed algorithm over competing algorithms is statistically significant or not. For this purpose, Wilcoxon rank sum test [79] which is a non-parametric statistical analysis is used. In this test, an index called “p-value” indicates whether there is a significant difference between the means of the two data samples.

The implementation results of this statistical analysis on the proposed algorithm and each of the competing algorithms are presented in Tab. 5. According to this table, in cases where the p-value is less than 0.05, the proposed SOA has a significant statistical superiority over the competing algorithm.

5 Conclusions and Future Works

In this paper, a new human-based metaheuristic algorithm called Skill Optimization Algorithm (SOA) was designed. Human effort to learn and improve skills is the main inspiration of SOA. The steps of implementing and updating SOA in two phases of exploration and exploitation were described and then mathematically modeled. A set of twenty-three standard objective functions of different types of unimodal and multimodal were considered to evaluate the optimization performance of SOA. The results of optimizing unimodal functions indicated the exploitation ability of SOA in local search. The results obtained by the optimization of high-dimensional multimodal functions showed the exploration ability of SOA in global search. The results of optimizing fixed-dimensional multimodal functions showed that SOA has an acceptable ability to balance the indicators of exploration and exploitation. In order to analyze the quality of the results achieved by SOA, its performance was compared with ten well-known metaheuristic algorithms. The simulation results confirmed the superiority of SOA performance over mentioned algorithms based on exploration and exploitation criteria. In other words, SOA can provide more effective solutions to optimization problems compared to the considered competing algorithms.

The proposed SOA can activate several research tasks for further studies. Development of binary and multi-objective versions of SOA are among the main proposals of this research. Employment of SOA for optimization purposes in various fields and real-world applications are other suggestions of the authors for future investigations.

Funding Statement: The research and article are supported by Specific Research project 2022 Faculty of Education, University of Hradec Kralove.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. M. Dehghani, Z. Montazeri, A. Dehghani, O. P. Malik, R. Morales-Menendez et al., “Binary spring search algorithm for solving various optimization problems,” Applied Sciences, vol. 11, no. 3, pp. 1286, 2021. [Google Scholar]

2. M. Dehghani, Z. Montazeri, G. Dhiman, O. Malik, R. Morales-Menendez et al., “A spring search algorithm applied to engineering optimization problems,” Applied Sciences, vol. 10, no. 18, pp. 6173, 2020. [Google Scholar]

3. M. Dehghani, Z. Montazeri, A. Dehghani, H. Samet, C. Sotelo et al., “DM: Dehghani method for modifying optimization algorithms,” Applied Sciences, vol. 10, no. 21, pp. 7683, 2020. [Google Scholar]

4. G. Dhiman, K. K. Singh, M. Soni, A. Nagar, M. Dehghani et al., “MOSOA: A new multi-objective seagull optimization algorithm,” Expert Systems with Applications, vol. 167, pp. 114150, 2021. [Google Scholar]

5. F. A. Zeidabadi, M. Dehghani and O. P. Malik, “RSLBO: Random selected leader based optimizer,” International Journal of Intelligent Engineering and Systems, vol. 14, no. 5, pp. 529–538, 2022. [Google Scholar]

6. M. Premkumar, P. Jangir, R. Sowmya and R. M. Elavarasan, “Many-objective gradient-based optimizer to solve optimal power flow problems: Analysis and validations,” Engineering Applications of Artificial Intelligence, vol. 106, pp. 104479, 2021. [Google Scholar]

7. S. Kumar, P. Jangir, G. G. Tejani, M. Premkumar and H. H. Alhelou, “MOPGO: A new physics-based multi-objective plasma generation optimizer for solving structural optimization problems,” IEEE Access, vol. 9, pp. 84982–85016, 2021. [Google Scholar]

8. R. -M. Devi, M. Premkumar, P. Jangir, M. -A. Elkotb, R. -M. Elavarasan et al., “IRKO: An improved runge-kutta optimization algorithm for global optimization problems,” Computers, Materials \& Continua, vol. 70, no. 3, pp. 4803–4827, 2022. [Google Scholar]

9. M. Premkumar, P. Jangir and R. Sowmya, “MOGBO: A new multiobjective gradient-based optimizer for real-world structural optimization problems,” Knowledge-Based Systems, vol. 218, pp. 106856, 2021. [Google Scholar]

10. M. Premkumar, P. Jangir, R. Sowmya, H. H. Alhelou, A. A. Heidari et al., “MOSMA: Multi-objective slime mould algorithm based on elitist non-dominated sorting,” IEEE Access, vol. 9, pp. 3229–3248, 2021. [Google Scholar]

11. S. Kumar, P. Jangir, G. G. Tejani and M. Premkumar, “MOTEO: A novel physics-based multiobjective thermal exchange optimization algorithm to design truss structures,” Knowledge-Based Systems, vol. 242, pp. 108422, 2022. [Google Scholar]

12. M. Dehghani, Z. Montazeri, A. Ehsanifar, A. R. Seifi, M. J. Ebadi et al., “Planning of energy carriers based on final energy consumption using dynamic programming and particle swarm optimization,” Electrical Engineering & Electromechanics, no. vol. 5, pp. 62–71, 2018. [Google Scholar]

13. Z. Montazeri and T. Niknam, “Energy carriers management based on energy consumption,” in Proc. of IEEE 4th Int. Conf. on Knowledge-Based Engineering and Innovation (KBEI), Tehran, Iran, pp. 0539–0543, 2017. [Google Scholar]

14. R. -M. Devi, M. Premkumar, P. Jangir, B. -S. Kumar, D. Alrowaili et al., “BHGSO: Binary hunger games search optimization algorithm for feature selection problem,” Computers, Materials \& Continua, vol. 70, no. 1, pp. 557–-579, 2022. [Google Scholar]

15. M. Premkumar, P. Jangir, R. Sowmya, R. M. Elavarasan and B. S. Kumar, “Enhanced chaotic JAYA algorithm for parameter estimation of photovoltaic cell/modules,” ISA Transactions, vol. 116, pp. 139–166, 2021/10/01/, 2021. [Google Scholar]

16. M. Dehghani, Z. Montazeri and O. Malik, “Optimal sizing and placement of capacitor banks and distributed generation in distribution systems using spring search algorithm,” International Journal of Emerging Electric Power Systems, vol. 21, no. 1, pp. 20190217, 2020. [Google Scholar]

17. M. Dehghani, Z. Montazeri, O. P. Malik, K. Al-Haddad, J. M. Guerrero et al., “A new methodology called dice game optimizer for capacitor placement in distribution systems,” Electrical Engineering & Electromechanics, no. vol. 1, pp. 61–64, 2020. [Google Scholar]

18. S. Dehbozorgi, A. Ehsanifar, Z. Montazeri, M. Dehghani and A. Seifi, “Line loss reduction and voltage profile improvement in radial distribution networks using battery energy storage system,” in Proc. of IEEE 4th Int. Conf. on Knowledge-Based Engineering and Innovation (KBEI), Tehran, Iran, pp. 0215–0219, 2017. [Google Scholar]

19. Z. Montazeri and T. Niknam, “Optimal utilization of electrical energy from power plants based on final energy consumption using gravitational search algorithm,” Electrical Engineering & Electromechanics, no. vol. 4, pp. 70–73, 2018. [Google Scholar]

20. M. Dehghani, M. Mardaneh, Z. Montazeri, A. Ehsanifar, M. J. Ebadi et al., “Spring search algorithm for simultaneous placement of distributed generation and capacitors,” Electrical Engineering & Electromechanics, no. vol. 6, pp. 68–73, 2018. [Google Scholar]

21. M. Premkumar, R. Sowmya, P. Jangir, K. S. Nisar and M. Aldhaifallah, “A new metaheuristic optimization algorithms for brushless direct current wheel motor design problem,” Computers, Materials & Continua, vol. 67, no. 2, pp. 2227–2242, 2021. [Google Scholar]

22. A. Ehsanifar, M. Dehghani and M. Allahbakhshi, “Calculating the leakage inductance for transformer inter-turn fault detection using finite element method,” in Proc. of Iranian Conf. on Electrical Engineering (ICEE), Tehran, Iran, pp. 1372–1377, 2017. [Google Scholar]

23. M. Dehghani, Z. Montazeri and O. P. Malik, “Energy commitment: A planning of energy carrier based on energy consumption,” Electrical Engineering & Electromechanics, no. 4, pp. 69–72, 2019. https://doi.org/10.20998/2074-272X.2019.4.10. [Google Scholar]

24. M. Dehghani, M. Mardaneh, O. P. Malik, J. M. Guerrero, C. Sotelo et al., “Genetic algorithm for energy commitment in a power system supplied by multiple energy carriers,” Sustainability, vol. 12, no. 23, pp. 10053, 2020. [Google Scholar]

25. M. Dehghani, M. Mardaneh, O. P. Malik, J. M. Guerrero, R. Morales-Menendez et al., “Energy commitment for a power system supplied by multiple energy carriers system using following optimization algorithm,” Applied Sciences, vol. 10, no. 17, pp. 5862, 2020. [Google Scholar]

26. H. Rezk, A. Fathy, M. Aly and M. N. F. Ibrahim, “Energy management control strategy for renewable energy system based on spotted hyena optimizer,” Computers, Materials & Continua, vol. 67, no. 2, pp. 2271–2281, 2021. [Google Scholar]

27. J. Kennedy and R. Eberhart, “Particle swarm optimization,” in Proc. of ICNN’95 - Int. Conf. on Neural Networks, Perth, WA, Australia, pp. 1942–1948, 1995. [Google Scholar]

28. D. E. Goldberg and J. H. Holland, “Genetic algorithms and machine learning,” Machine Learning, vol. 3, no. 2, pp. 95–99, 1988. [Google Scholar]

29. E. Rashedi, H. Nezamabadi-Pour and S. Saryazdi, “GSA: A gravitational search algorithm,” Information Sciences, vol. 179, no. 13, pp. 2232–2248, 2009. [Google Scholar]

30. R. V. Rao, V. J. Savsani and D. Vakharia, “Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems,” Computer-Aided Design, vol. 43, no. 3, pp. 303–315, 2011. [Google Scholar]

31. R. Moghdani and K. Salimifard, “Volleyball premier league algorithm,” Applied Soft Computing, vol. 64, pp. 161–185, 2018. [Google Scholar]

32. D. H. Wolpert and W. G. Macready, “No free lunch theorems for optimization,” IEEE Transactions on Evolutionary Computation, vol. 1, no. 1, pp. 67–82, 1997. [Google Scholar]

33. H. Givi, M. A. Noroozi, B. Vahidi, J. S. Moghani and M. A. V. Zand, “A novel approach for optimization of z-matrix building process using ant colony algorithm,” Journal of Basic and Applied Scientific Research, vol. 2, no. 9, pp. 8932–8937, 2012. [Google Scholar]

34. S. Mirjalili, S. M. Mirjalili and A. Lewis, “Grey wolf optimizer,” Advances in Engineering Software, vol. 69, pp. 46–61, 2014. [Google Scholar]

35. S. Mirjalili and A. Lewis, “The whale optimization algorithm,” Advances in Engineering Software, vol. 95, pp. 51–67, 2016. [Google Scholar]

36. P. Trojovský and M. Dehghani, “Pelican optimization algorithm: A novel nature-inspired algorithm for engineering applications,” Sensors, vol. 22, no. 3, pp. 855, 2022. [Google Scholar]

37. M. Dehghani, Š Hubálovský and P. Trojovský, “Cat and mouse based optimizer: A new nature-inspired optimization algorithm,” Sensors, vol. 21, no. 15, pp. 5214, 2021. [Google Scholar]

38. H. Givi, M. Dehghani, Z. Montazeri, R. Morales-Menendez, R. A. Ramirez-Mendoza et al., “GBUO: “the good, the Bad, and the ugly” optimizer,” Applied Sciences, vol. 11, no. 5, pp. 2042, 2021. [Google Scholar]

39. A. Faramarzi, M. Heidarinejad, S. Mirjalili and A. H. Gandomi, “Marine predators algorithm: A nature-inspired metaheuristic,” Expert Systems with Applications, vol. 152, pp. 113377, 2020. [Google Scholar]

40. M. Dehghani, Š Hubálovský and P. Trojovský, “Tasmanian devil optimization: A new bio-inspired optimization algorithm for solving optimization algorithm,” IEEE Access, vol. 10, pp. 19599–19620, 2022. https://doi.org.10.1109/ACCESS.2022.3151641. [Google Scholar]

41. F. A. Zeidabadi, S. -A. Doumari, M. Dehghani, Z. Montazeri, P. Trojovský et al., “MLA: A new mutated leader algorithm for solving optimization problems,” Computers, Materials \& Continua, vol. 70, no. 3, pp. 5631–5649, 2022. [Google Scholar]

42. S. Kaur, L. K. Awasthi, A. L. Sangal and G. Dhiman, “Tunicate swarm algorithm: A new bio-inspired based metaheuristic paradigm for global optimization,” Engineering Applications of Artificial Intelligence, vol. 90, pp. 103541, 2020. [Google Scholar]

43. M. Dehghani, Š. Hubálovský and P. Trojovský, “Northern goshawk optimization: A new swarm-based algorithm for solving optimization problems,” IEEE Access, vol. 9, pp. 162059–162080, 2021. https://doi.org.10.1109/ACCESS.2021.3133286. [Google Scholar]

44. M. Dehghani, M., Mardaneh, O. P. Malik and S. M. NouraeiPour, “DTO: Donkey theorem optimization,” in Proc. of Iranian Conference on Electrical Engineering (ICEE), Yazd, Iran, IEEE, pp. 1855–1859, 2019. [Google Scholar]

45. G. Dhiman, M. Garg, A. Nagar, V. Kumar and M. Dehghani, “A novel algorithm for global optimization: Rat swarm optimizer,” Journal of Ambient Intelligence and Humanized Computing, vol. 12, pp. 1–26, 2020. [Google Scholar]

46. F. A. Zeidabadi, S. A. Doumari, M. Dehghani, Z. Montazeri, P. Trojovský et al. “AMBO: All members-based optimizer for solving optimization problems,” Computers, Materials \& Continua, vol. 70, no. 2, pp. 2905–-2921, 2022. [Google Scholar]

47. D. Połap and M. Woźniak, “Red fox optimization algorithm,” Expert Systems with Applications, vol. 166, pp. 114107, 2021. [Google Scholar]

48. S. A. Doumari, F. A. Zeidabadi, M. Dehghani and O. P. Malik, “Mixed best members based optimizer for solving various optimization problems,” International Journal of Intelligent Engineering and Systems, vol. 14, no. 4, pp. 384–392, 2021. [Google Scholar]

49. F. A. Zeidabadi, S. A. Doumari, M. Dehghani and O. P. Malik, “MLBO: Mixed leader based optimizer for solving optimization problems,” International Journal of Intelligent Engineering and Systems, vol. 14, no. 4, pp. 472–479, 2021. [Google Scholar]

50. R. Storn and K. Price, “Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces,” Journal of Global Optimization, vol. 11, no. 4, pp. 341–359, 1997. [Google Scholar]

51. M. Dehghani, Š. Hubálovský and P. Trojovský, “A new optimization algorithm based on average and subtraction of the best and worst members of the population for solving various optimization problems,” PeerJ Computer Science, vol. 8, pp. e910, 2022. [Google Scholar]

52. W. Banzhaf, P. Nordin, R. E. Keller and F. D. Francone, “Genetic programming: An introduction: On the automatic evolution of computer programs and its applications,” in Library of Congress Cataloging-in-Publication Data, 1st Ed., vol. 27. San Francisco, CA, USA: Morgan Kaufmann Publishers, pp. 1–398, 1998. [Google Scholar]

53. F. -A. Zeidabadi, A. Dehghani, M. Dehghani, Z. Montazeri, Š Hubálovský et al., “SSABA: Search step adjustment based algorithm,” Computers, Materials \& Continua, vol. 71, no. 2, pp. 4237–4256, 2022. [Google Scholar]

54. L. J. Fogel, A. J. Owens and M. J. Walsh, “Artificial intelligence through simulated evolution,” 1966. [Google Scholar]

55. M. Dehghani and P. Trojovský, “Selecting some variables to update-based algorithm for solving optimization problems,” Sensors, vol. 22, no. 5, pp. 1795, 2022. [Google Scholar]

56. S. A. Hofmeyr and S. Forrest, “Architecture for an artificial immune system,” Evolutionary Computation, vol. 8, no. 4, pp. 443–473, 2000. [Google Scholar]

57. S. Kirkpatrick C. D. Gelatt, and M. P. Vecchi, “Optimization by simulated annealing,” Science, vol. 220, no. 4598, pp. 671–680, 1983. [Google Scholar]

58. M. Dehghani, Z. Montazeri, A. Dehghani and A. Seifi, “Spring search algorithm: A new meta-heuristic optimization algorithm inspired by hooke’s law,” in Proc. of IEEE 4th Int. Conf. on Knowledge-Based Engineering and Innovation (KBEI), Tehran, Iran, pp. 0210–0214, 2017. [Google Scholar]

59. M. Dehghani and H. Samet, “Momentum search algorithm: A new meta-heuristic optimization algorithm inspired by momentum conservation law,” SN Applied Sciences, vol. 2, no. 10, pp. 1–15, 2020. [Google Scholar]

60. S. Mirjalili, S. M. Mirjalili and A. Hatamlou, “Multi-verse optimizer: A nature-inspired algorithm for global optimization,” Neural Computing and Applications, vol. 27, no. 2, pp. 495–513, 2016. [Google Scholar]

61. M. Dehghani, Z. Montazeri, A. Dehghani, N. Nouri and A. Seifi, “BSSA: Binary spring search algorithm,” in Proc. of IEEE 4th Int. Conf. on Knowledge-Based Engineering and Innovation, Tehran, Iran, pp. 0220–0224, 2017. [Google Scholar]

62. A. Faramarzi, M. Heidarinejad, B. Stephens and S. Mirjalili, “Equilibrium optimizer: A novel optimization algorithm,” Knowledge-Based Systems, vol. 191, pp. 105190, 2020. [Google Scholar]

63. F. A. Hashim, E. H. Houssein, M. S. Mabrouk, W. Al-Atabany and S. Mirjalili, “Henry gas solubility optimization: A novel physics-based algorithm,” Future Generation Computer Systems, vol. 101, pp. 646–667, 2019. [Google Scholar]

64. M. Dehghani, M. Mardaneh, J. M. Guerrero, O. Malik and V. Kumar, “Football game based optimization: An application to solve energy commitment problem,” International Journal of Intelligent Engineering and Systems, vol. 13, no. 5, pp. 514–523, 2020. [Google Scholar]

65. F. A. Zeidabadi and M. Dehghani, “POA: Puzzle optimization algorithm,” International Journal of Intelligent Engineering and Systems, vol. 15, no. 1, pp. 273–281, 2022. [Google Scholar]

66. M. Dehghani, Z. Montazeri, S. Saremi, A. Dehghani, O. P. Malik et al., “HOGO: Hide objects game optimization,” International Journal of Intelligent Engineering and Systems, vol. 13, no. 4, pp. 216–225, 2020. [Google Scholar]

67. M. Dehghani, Z. Montazeri, H. Givi, J. M. Guerrero and G. Dhiman, “Darts game optimizer: A new optimization technique based on darts game,” International Journal of Intelligent Engineering and Systems, vol. 13, no. 5, pp. 286–294, 2020. [Google Scholar]

68. M. Dehghani, Z. Montazeri, O. P. Malik, G. Dhiman and V. Kumar, “BOSA: Binary orientation search algorithm,” International Journal of Innovative Technology and Exploring Engineering, vol. 9, no. 1, pp. 5306–5310, 2019. [Google Scholar]

69. M. Dehghani, Z. Montazeri and O. P. Malik, “DGO: Dice game optimizer,” Gazi University Journal of Science, vol. 32, no. 3, pp. 871–882, 2019. [Google Scholar]

70. M. Dehghani, Z. Montazeri, O. P. Malik, A. Ehsanifar and A. Dehghani, “OSA: Orientation search algorithm,” International Journal of Industrial Electronics,” Control and Optimization, vol. 2, no. 2, pp. 99–112, 2019. [Google Scholar]

71. S. A. Doumari, H. Givi, M. Dehghani and O. P. Malik, “Ring toss game-based optimization algorithm for solving various optimization problems,” International Journal of Intelligent Engineering and Systems, vol. 14, no. 3, pp. 545–554, 2021. [Google Scholar]

72. M. Dehghani, M. Mardaneh, J. M. Guerrero, O. P. Malik, R. A. Ramirez-Mendoza et al., “A new “doctor and patient” optimization algorithm: An application to energy commitment problem,” Applied Sciences, vol. 10, no. 17, pp. 5791, 2020. [Google Scholar]

73. M. Dehghani, M. Mardaneh and O. Malik, “FOA:‘following’optimization algorithm for solving power engineering optimization problems,” Journal of Operation and Automation in Power Engineering, vol. 8, no. 1, pp. 57–64, 2020. [Google Scholar]

74. M. Dehghani and P. Trojovský, “Teamwork optimization algorithm: A New optimization approach for function minimization/Maximization,” Sensors, vol. 21, no. 13, pp. 4567, 2021. [Google Scholar]

75. M. Dehghani, Z. Montazeri, A. Dehghani and O. P. Malik, “GO: Group optimization,” Gazi University Journal of Science, vol. 33, no. 2, pp. 381–392, 2020. [Google Scholar]

76. F. -A. Zeidabadi, M. Dehghani, P. Trojovský, Š Hubálovský, V. Leiva et al., “Archery algorithm: A novel stochastic optimization algorithm for solving optimization problems,” Computers,” Materials \& Continua, vol. 72, no. 1, pp. 399–416, 2022. [Google Scholar]

77. S. H. S. Moosavi and V. K. Bardsiri, “Poor and rich optimization algorithm: A new human-based and multi populations algorithm,” Engineering Applications of Artificial Intelligence, vol. 86, pp. 165–181, 2019. [Google Scholar]

78. X. Yao, Y. Liu and G. Lin, “Evolutionary programming made faster,” IEEE Transactions on Evolutionary Computation, vol. 3, no. 2, pp. 82–102, 1999. [Google Scholar]

79. F. Wilcoxon, “Individual comparisons by ranking methods,” Breakthroughs in Statistics, vol. 1, no. 6, pp. 196–202, 1992. [Google Scholar]

AppendixThe source codes of the proposed SOA are available at:

https://www.mathworks.com/matlabcentral/fileexchange/110675-skill-optimization-algorithm-soa.

Cite This Article

Copyright © 2023 The Author(s). Published by Tech Science Press.

Copyright © 2023 The Author(s). Published by Tech Science Press.This work is licensed under a Creative Commons Attribution 4.0 International License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Submit a Paper

Submit a Paper Propose a Special lssue

Propose a Special lssue View Full Text

View Full Text Download PDF

Download PDF Downloads

Downloads

Citation Tools

Citation Tools