Open Access

ARTICLE

IoMT-Based Healthcare Framework for Ambient Assisted Living Using a Convolutional Neural Network

1 Department of Computer Engineering, Mutah University, Al-Karak, Jordan; Higher Colleges of Technology, Dubai, UAE

2 Department of Computer Engineering, College of Engineering, Mutah University, Jordan

3 School of Information Technology, Skyline University College, University City Sharjah, Sharjah, UAE

4 Center for Cyber Security, Faculty of Information Science and Technology, UKM, 43600, Bangi, Selangor, Malaysia

* Corresponding Author: Taher M. Ghazal. Email:

Computers, Materials & Continua 2023, 74(3), 6867-6878. https://doi.org/10.32604/cmc.2023.034952

Received 02 August 2022; Accepted 01 November 2022; Issue published 28 December 2022

Abstract

In the age of universal computing, human life is becoming smarter owing to recent developments in the Internet of Medical Things (IoMT), wearable sensors, and telecommunication innovations, which provide more effective and smarter healthcare facilities. IoMT has the potential to shape the future of clinical research in the healthcare sector. Wearable sensors, patients, healthcare providers, and caregivers can connect through an IoMT network using software, information, and communication technology. Ambient assisted living (AAL) allows emerging innovations to be incorporated into the routine life events of patients. Machine learning (ML) teaches machines to learn from experience, using computer algorithms that “learn” information directly from data instead of relying on a predetermined model. As the sample size available for learning increases, the performance of the algorithms improves. This paper proposes a novel IoMT-enabled smart healthcare framework for AAL that monitors the physical actions of patients using a convolutional neural network (CNN) algorithm for fast analysis, improved decision-making, and enhanced treatment support. The simulation results showed that the prediction accuracy of the proposed framework is higher than those of previously published approaches.

Medical imaging is essential in modern medicine because it enables detailed, noninvasive observation of the human body’s internal architecture and metabolic processes. It also provides potentially valuable data for treating patient-specific diseases, supporting diagnosis, and planning treatment. The volume of healthcare imaging data is expanding quickly owing to advances in technology, population growth, cost reductions, and increased awareness of the utility of imaging modalities. Clinicians therefore face the problem of evaluating vast amounts of data from divergent sources. Research has shown that inter-observer variability can be significant in numerous clinical imaging tasks. As a result, there is a growing demand for diagnostic and decision-making tools [1]. Radiation has always been a part of the Earth’s evolution. In 1895, the German physicist Wilhelm Conrad Röntgen discovered X-rays, and the biological effects of radiation were subsequently identified; moreover, radioactivity was first demonstrated by the French physicist Henri Becquerel. In the early 20th century, ionizing radiation was first used to treat malignant (cancerous) and benign disorders. Henri Coutard, a French radiation oncologist, presented the first evidence that fractionated radiotherapy could treat advanced laryngeal cancer without significant negative side effects [2].

An intelligent healthcare system uses wearable devices, the Internet of Things, or the mobile internet to constantly acquire data; to link people, resources, and institutions involved in healthcare; and to intelligently regulate and respond to the demands of the medical ecosystem. Such healthcare systems are becoming more common owing to rapid changes in health information technology and machine-learning technologies. The wide range of patient health data can guide clinicians in decision making and can be used to train intelligent healthcare systems [3].

Machine learning (ML) is a subfield of artificial intelligence (AI). It allows an AI system to learn from its surroundings and utilize that knowledge to make intelligent judgments. Machine learning has become increasingly popular across various industries, including retail, media, agriculture, finance, and healthcare [4]. In healthcare 4.0, ML plays a critical role. This study developed an intelligent healthcare model that uses ML techniques to synergize medical imaging and radiotherapy.

Many researchers have developed intelligent healthcare models to synergize medical imaging and radiotherapy using ML techniques. In one such work, the authors reported that the European Medicines Agency and the United States Food and Drug Administration authorized the sale of the first two chimeric antigen receptor T-cell-based therapies (tisagenlecleucel and axicabtagene ciloleucel) in 2018. These therapies showed excellent clinical results in patients with B-cell acute lymphoblastic leukemia and diffuse large B-cell lymphoma that did not respond to treatment after two or more lines of therapy [5].

The author developed an eye movement desensitization and reprocessing therapy prototype. It is a three-module design with an Android interface that regulates the speed of the sequences, the type of music, and the strength of the vibration of the tactile module. The software is designed for psychotherapists, who may operate all three modules to follow a predetermined schedule or to develop a customized program for the patient. An Android device controls the sound module, whereas an IOIO board for Android (DEV-10748) controls the visual and tactile modules. In the visual treatment option, the red LEDs on the glasses are illuminated in various sequences. In the tactile treatment option, each hand receives pulses. The sound therapy option plays one of 10 distinct sounds in the patient’s left or right ear. Each type of therapy has two modes of operation: automated and manual. In manual mode, the psychotherapist can select the frequency of the sequence (from 0 to 6 s) and its display side [6]. The author used a cycle generative adversarial network (GAN) to generate counterfactual explanations by translating images in an unsupervised manner. By applying a translation between two classes in stages, the cycle GAN was able to amplify the differences between them. The performance of a linear support vector machine trained with features extracted from the newly identified image regions was comparable to that of a CNN. The approach was applied to retinal images for predicting diabetic macular edema.

A technique for detecting psychological issues in patients was presented. The authors analyzed patients’ posts on social media platforms to determine their depression and stress levels using a CNN, a recurrent neural network, and a bidirectional long short-term memory model. Furthermore, they developed an ontology-based recommendation scheme that sends text messages to patients to monitor the outcomes. The challenge, however, was how to monitor the patients continuously using data from their social interactions. Practical statistical analysis, data standardization, feature selection, and an ML model were thus necessary to forecast patient mood accurately [7].

A healthcare monitoring system needs access to the patient’s electronic health records (EHRs) to put the patient’s vital signs into context correctly when making suggestions or choices. However, patients’ medical records are frequently obtained from multiple medical institutions that do not communicate with each other. SAPHIRE uses a document-sharing framework to address this interoperability issue. EHR documents are kept in local EHR sources, but they are also registered in a document-sharing registry through a set of metadata so that they can be located and accessed regardless of where they are stored. This architecture addresses the discovery and access of relevant EHR documents. Still, for the clinical decision support system to leverage the data in these EHR documents, they must be stored in a machine-processable format. SAPHIRE therefore used HL7 Clinical Document Architecture level 3 documents, in which the entries and sections are annotated with coding schemes [8].

ML techniques [9], transfer learning [10], deep ensemble learning [11], computational intelligence methods [12], fuzzy inference systems [13,14], supervised ML [15], deep extreme ML [16], and soft computing techniques [17–20] have all been used in research to improve smart healthcare monitoring frameworks.

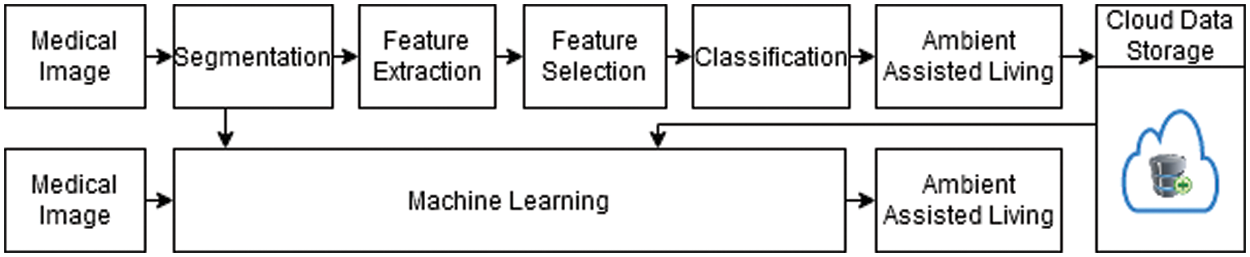

AAL aims to create advanced technical solutions and healthcare services. This paper describes a smart IoMT-based healthcare framework for AAL that monitors patients’ health, improves their quality of life, and reduces the costs associated with health and social care, using a CNN-based ML algorithm for faster decision making and better treatment. Machine learning, including CNNs, has great potential for applications in imaging and therapy. CNNs have now permeated almost every aspect of medical image analysis. However, conventional image analysis approaches are not considered a substitute for radiologists; they are used only as a supplementary tool. This paper proposes a smart IoMT-based healthcare framework for AAL using a CNN, which proves beneficial in the medical field. A flowchart of the proposed framework is shown in Fig. 1.

Figure 1: Flowchart of the proposed healthcare model

As shown in Fig. 1, the proposed framework obtains input images and performs segmentation, feature extraction, feature selection, and classification on them. The classified outcomes are then stored in the cloud. In the validation phase, real-time image data are taken as input, and the trained patterns are imported from the cloud to check for the presence of AAL.

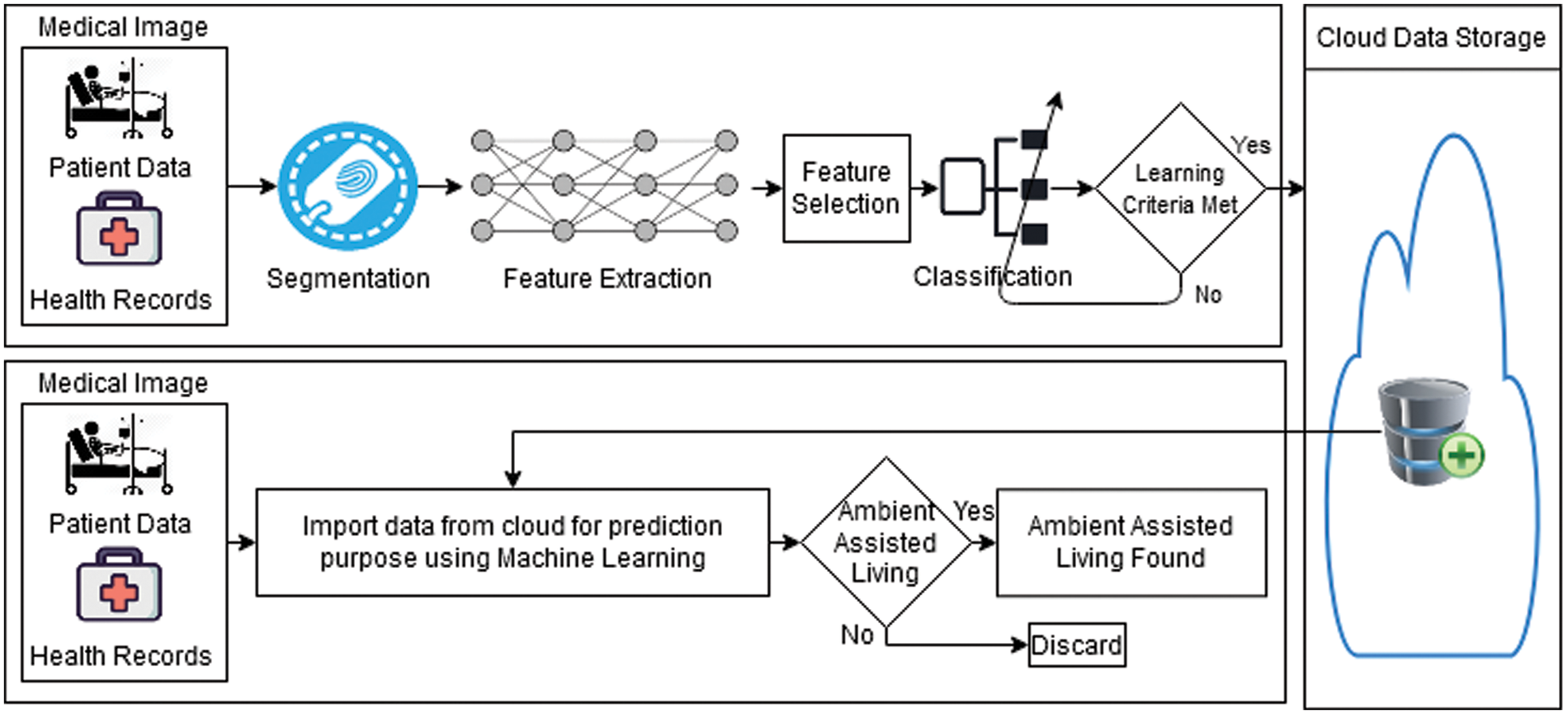

Fig. 2 provides a more detailed view of the framework in Fig. 1. The input is taken in image form and first passes through the segmentation process. Segmentation is the process of grouping the parts of an image that belong to the same object class; this procedure is also known as pixel-level classification. The feature extraction process then converts the raw or noisy data into numerical features while preserving the information in the original dataset, which yields better results than applying ML to the raw data directly. The feature selection process is based on a CNN algorithm that attempts to fit the given dataset and evaluates candidate feature combinations according to the selection criterion. Finally, the data are classified using the CNN algorithm.
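The feature extraction step can be illustrated with a minimal sketch of the basic CNN operations (convolution, ReLU activation, and max pooling). This is not the architecture used in the paper: the 5×5 input, the 2×2 edge kernel, and all function names are assumptions chosen only to show how raw pixels become a short numerical feature vector.

```python
# Minimal, illustrative sketch of CNN-style feature extraction.
# The image, kernel, and function names are assumptions, not the paper's model.

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

def relu(fmap):
    """Set negative responses to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool_2x2(fmap):
    """Non-overlapping 2x2 max pooling; a trailing odd row or column is dropped."""
    pooled = []
    for i in range(0, len(fmap) - 1, 2):
        row = []
        for j in range(0, len(fmap[0]) - 1, 2):
            row.append(max(fmap[i][j], fmap[i][j + 1],
                           fmap[i + 1][j], fmap[i + 1][j + 1]))
        pooled.append(row)
    return pooled

def extract_features(image, kernel):
    """Convolution -> ReLU -> pooling, flattened into a feature vector."""
    pooled = max_pool_2x2(relu(conv2d_valid(image, kernel)))
    return [v for row in pooled for v in row]

# Toy 5x5 image containing a bright vertical stripe.
image = [[0, 0, 1, 1, 0] for _ in range(5)]
# Kernel that responds to dark-to-bright transitions from left to right.
kernel = [[-1, 1], [-1, 1]]

features = extract_features(image, kernel)
print(features)  # [2.0, 0.0, 2.0, 0.0]
```

In a trained CNN the kernel values are learned from data rather than fixed by hand, and the resulting feature vector is passed to the fully connected classification layers.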

Figure 2: Illustration of the proposed healthcare model

The loss function in the proposed model is defined as

The SoftMax transformation is defined as

where

In Eq. (1),

Eq. (2) is derived as

Taking the common

With division, we have

As we know,

Therefore, Eq. (5) can be written as

The derivation of Eq. (3) with respect to

It can be written as

It is known that

Moreover,

Therefore, we can derive this as

By taking the derivative with respect to

where

It can be defined as

Then,

where

Here,

After relieving the values of

Eq. (11) signifies the loss derivative of the weights for the fully connected layer.
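For orientation, the standard softmax cross-entropy gradient for a fully connected layer is sketched here. The notation is an assumption: $z_j$ denotes the input to output unit $j$, $p_j$ its softmax output, $y_j$ the one-hot target, $a_m$ the activation of unit $m$ in the preceding layer, and $w_{jm}$ the connecting weight. The equation numbers used in the text are not reproduced.

$$
p_i = \frac{e^{z_i}}{\sum_{k} e^{z_k}}, \qquad
\mathcal{L} = -\sum_{i} y_i \log p_i
$$

$$
\frac{\partial p_i}{\partial z_j} = p_i\,(\delta_{ij} - p_j)
$$

$$
\frac{\partial \mathcal{L}}{\partial z_j}
= -\sum_{i} \frac{y_i}{p_i}\, p_i\,(\delta_{ij} - p_j)
= -y_j + p_j \sum_{i} y_i
= p_j - y_j
$$

$$
z_j = \sum_{m} w_{jm}\, a_m + b_j
\quad\Longrightarrow\quad
\frac{\partial \mathcal{L}}{\partial w_{jm}} = (p_j - y_j)\, a_m
$$

The third line uses $\sum_i y_i = 1$, which holds for one-hot targets. The final expression is the usual form of the loss derivative with respect to the weights of a fully connected output layer.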

After classification, the learning criteria are tested. If they are not met, the classification result is discarded; otherwise, the outcome is stored in the cloud. In the validation phase, the trained data are imported from the cloud and used for prediction with the CNN algorithm. The framework then checks whether AAL was found. If not, the operation is discarded; otherwise, a notification states that AAL was detected.
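The control flow of the training and validation phases can be sketched as follows. Everything here is hypothetical: an in-memory dictionary stands in for the cloud store, a minimum-accuracy threshold stands in for the learning criteria, and a toy rule stands in for the trained CNN.

```python
# Hypothetical in-memory stand-in for the cloud store described in the text.
cloud_store = {}

def store_if_criteria_met(name, classifier, accuracy, min_accuracy):
    """Store the trained classifier only when the learning criterion is met."""
    if accuracy < min_accuracy:
        return False  # criterion not met: the result is discarded
    cloud_store[name] = classifier
    return True

def validate(name, sample):
    """Import the stored classifier and return a notification, or None."""
    classifier = cloud_store.get(name)
    if classifier is None or not classifier(sample):
        return None  # nothing detected: the operation is discarded
    return "AAL detected"

# Toy classifier (assumption): flags a sample whose mean intensity exceeds 0.5.
def toy_classifier(sample):
    return sum(sample) / len(sample) > 0.5

stored = store_if_criteria_met("cnn_v1", toy_classifier,
                               accuracy=0.89, min_accuracy=0.85)
rejected = store_if_criteria_met("cnn_v0", toy_classifier,
                                 accuracy=0.60, min_accuracy=0.85)
notification = validate("cnn_v1", [0.9, 0.8, 0.7])
no_notification = validate("cnn_v1", [0.1, 0.2, 0.3])
```

Separating storage from validation mirrors the two phases in the flowchart: only models that pass the criterion are ever available for prediction.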

Modern medical imaging techniques are increasingly used in medical care to study the complex hierarchical structures of anatomic details in various tissues and organs. Medical image analysis is a critical component of computer-aided diagnosis (CAD). Recent ML methods have made it possible to predict the final classification labels directly from raw medical image pixels. The CNN algorithm is widely used in medical classification. A CNN is an excellent feature extractor; thus, using it to classify medical images may save time and money.

This paper proposes a smart IoMT-based healthcare framework for AAL using a CNN-based approach. The proposed CNN-based method was applied to a dataset of 35,250 samples gathered from the UCI ML data repository to synergize the medical images. The dataset was divided into training data (70%, 24,675 samples) and validation data (30%, 10,575 samples). Several statistical parameters were used to evaluate the performance; these were obtained using the formulas given in [17].

Table 1 shows the proposed framework’s results for determining the presence of AAL during training. The 24,675 training samples comprised 12,604 positive samples (no AAL) and 12,071 negative samples (AAL present). Of the 12,604 positive samples, 11,548 were correctly predicted as positive (true positives), whereas 1056 were incorrectly predicted as negative, that is, as indicating the presence of AAL. Of the 12,071 negative samples, 10,876 were correctly predicted as negative (true negatives), whereas 1195 were incorrectly predicted as positive, that is, as indicating no AAL despite its presence.
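The split sizes and the headline training figures can be checked with a short script. The metric definitions below are the standard textbook ones, which is an assumption, since the formulas taken from [17] are not reproduced here. The function name and the row/column convention (TP and FN from the positive samples, TN and FP from the negative samples) are likewise illustrative.

```python
def confusion_metrics(tp, fn, tn, fp):
    """Standard confusion-matrix metrics (textbook definitions, assumed)."""
    total = tp + fn + tn + fp
    accuracy = (tp + tn) / total
    return {
        "total": total,
        "accuracy": accuracy,
        "error_rate": 1 - accuracy,       # overall misclassification rate
        "sensitivity": tp / (tp + fn),    # true positive rate
        "specificity": tn / (tn + fp),    # true negative rate
        "precision": tp / (tp + fp),      # positive predictive value
        "npv": tn / (tn + fn),            # negative predictive value
    }

# 70/30 split of the 35,250 samples, using integer arithmetic.
total_samples = 35250
n_train = total_samples * 70 // 100
n_val = total_samples - n_train

# Training counts as described in the text for Table 1.
training = confusion_metrics(tp=11548, fn=1056, tn=10876, fp=1195)

print(n_train, n_val)                     # 24675 10575
print(round(training["accuracy"], 4))     # 0.9088
print(round(training["error_rate"], 4))   # 0.0912
```

The computed error rate of 0.0912 equals one minus the accuracy. The per-class values (sensitivity, specificity, precision) depend on which class is treated as positive and on how the table rows and columns are read, so they may differ from reported figures if a different convention is used.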

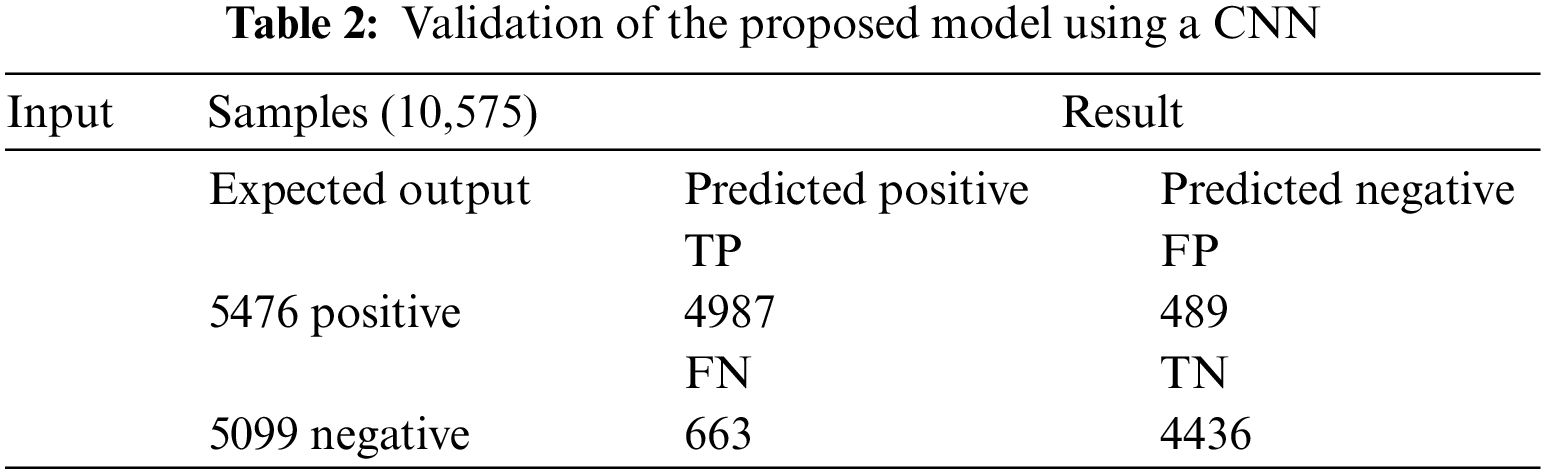

Table 2 shows the proposed framework’s results for determining the presence of AAL during the validation period. The 10,575 validation samples comprised 5476 positive samples (no AAL) and 5099 negative samples (AAL present). Of the 5476 positive samples, 4987 were correctly predicted as positive (true positives), whereas 489 were incorrectly predicted as negative, that is, as indicating the presence of AAL. Of the 5099 negative samples, 4436 were correctly predicted as negative (true negatives), whereas 663 were incorrectly predicted as positive, that is, as indicating no AAL despite its presence.

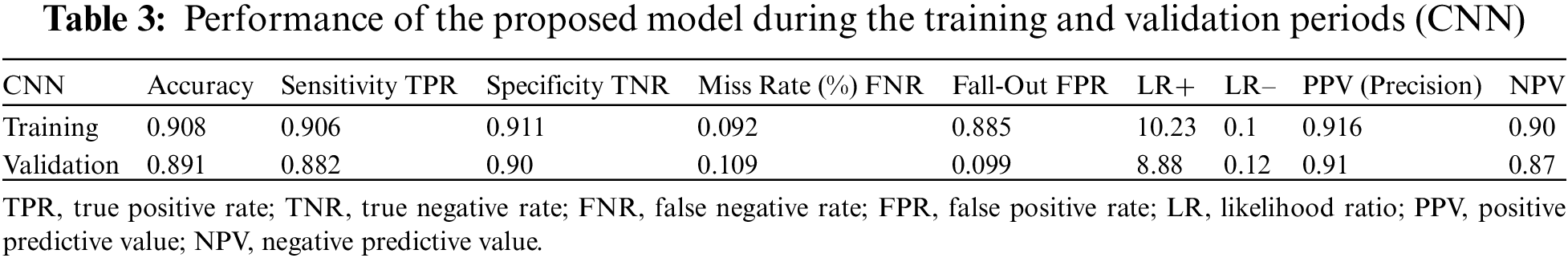

Table 3 (CNN) shows the performance of the framework. During the training period, the framework obtained an accuracy of 0.908, a sensitivity of 0.906, a specificity of 0.911, a miss rate of 0.0912, and a precision of 0.916. In the validation period, the proposed framework obtained accuracy, sensitivity, specificity, miss rate, and precision of 0.891, 0.882, 0.90, 0.1089, and 0.87, respectively. In addition, the proposed framework obtained a fall-out, positive likelihood ratio, negative likelihood ratio, and negative predictive value of 0.0885, 10.23, 0.1, and 0.90, respectively, during the training period, and 0.099, 8.88, 0.12, and 0.87 during the validation period.
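The fall-out and the likelihood ratios are linked to sensitivity and specificity by the standard relations below, assuming the usual definitions. Substituting the reported training values (sensitivity 0.906, specificity 0.911) gives figures close to those in the table; the small differences come from rounding of the inputs.

$$
\text{fall-out} = 1 - \text{specificity} = 1 - 0.911 = 0.089
$$

$$
LR^{+} = \frac{\text{sensitivity}}{1 - \text{specificity}} = \frac{0.906}{0.089} \approx 10.2
$$

$$
LR^{-} = \frac{1 - \text{sensitivity}}{\text{specificity}} = \frac{0.094}{0.911} \approx 0.10
$$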

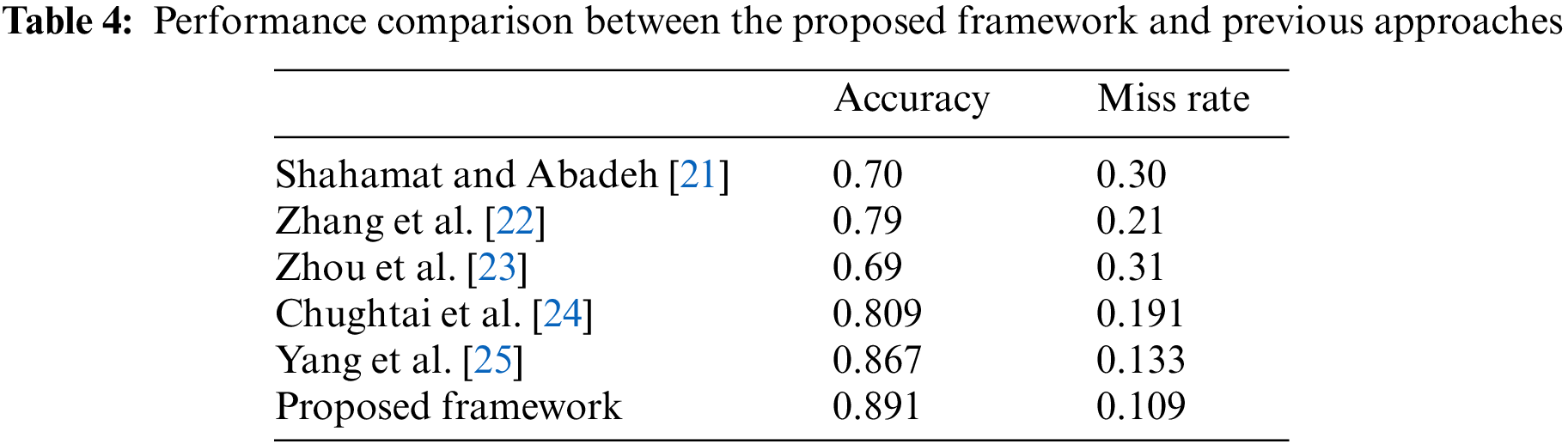

Table 4 compares the performance of the proposed healthcare framework for determining the presence of AAL using a CNN approach with those of previous methods. The results clearly show that the proposed framework is better than the earlier approaches regarding accuracy and miss rate.

Ambient assisted living (AAL) is an emerging field in which artificial intelligence enables new products, services, and processes that help provide safe, high-quality, and independent healthcare assistance to patients in their daily lives. Patients’ daily routines can become physically and psychologically challenging owing to their health issues. Technology can help patients with their daily interactions and can be integrated into their medical care, which is essential for ensuring their health and happiness. A CNN is valuable in this setting because it can help radiologists make more accurate diagnoses through statistical analysis of suspicious lesions and can reduce reading time through automatic report generation; these are among the advantages of AI that can be integrated into the clinical workflow. Thanks to advances in both imaging and computing, artificial intelligence can be used in various radiological imaging tasks, including risk assessment, identification, diagnosis, therapy response, and cross-disease discovery.

This paper proposes an intelligent IoMT-based healthcare framework for AAL using a CNN algorithm, which proves beneficial and effective for radiologists and patients. In the validation phase, the proposed approach obtained an accuracy of 0.891 and a miss rate of 0.1089, which are better than those of previous approaches [21–25].

Data Availability: The data used in this paper can be obtained from the corresponding author upon request.

Funding Statement: Self-funded; no external funding is involved in this study.

Conflicts of Interest: The authors declare no conflicts of interest concerning the publication of this work.

References

1. Z. Salahuddin, H. C. Woodruff, A. Chatterjee and P. Lambin, “Transparency of deep neural networks for medical image analysis: A review of interpretability methods,” Computers in Biology and Medicine, vol. 140, no. 75, pp. 105–111, 2022.

2. U. Busch, “Wilhelm Conrad Röntgen: Researcher and man,” Wilhelm Conrad Röntgen, vol. 8, no. 2, pp. 29–64, 2021.

3. R. Xie, I. Khalil, S. Badsha and M. Atiquzzaman, “An intelligent healthcare system with data priority based on multi vital biosignals,” Computer Methods and Programs in Biomedicine, vol. 18, pp. 105–126, 2020.

4. M. Allen, “Big healthcare data analytics in internet of medical things,” American Journal of Medical Research, vol. 7, no. 1, pp. 48–54, 2021.

5. N. Zozaya, J. Villaseca, F. Abdalla, M. A. Calleja, J. L. D. Martín et al., “A strategic reflection for the management and implementation of CAR-T therapy in Spain: An expert consensus paper,” Clinical and Translational Oncology, vol. 14, no. 4, pp. 1–13, 2022.

6. H. Shan, X. Jia, P. Yan, Y. Li, H. Paganetti et al., “Synergizing medical imaging and radiotherapy with deep learning,” Machine Learning: Science and Technology, vol. 1, no. 2, pp. 1–21, 2020.

7. F. Ali, S. E. Sappagh, S. R. Islam, A. Ali, M. Attique et al., “An intelligent healthcare monitoring framework using wearable sensors and social networking data,” Future Generation Computer Systems, vol. 114, no. 4, pp. 23–43, 2021.

8. O. Nee, A. Hein, T. Gorath, N. Hülsmann, G. B. Laleci et al., “SAPHIRE: Intelligent healthcare monitoring based on semantic interoperability platform: Pilot applications,” IET Communications, vol. 2, no. 2, pp. 192–201, 2012.

9. T. Batool, S. Abbas, Y. Alhwaiti, M. Saleem, M. Ahmad et al., “Intelligent model of ecosystem for smart cities using artificial neural networks,” Intelligent Automation and Soft Computing, vol. 30, no. 2, pp. 513–525, 2021.

10. T. M. Ghazal, S. Abbas, S. Munir, M. A. Khan, M. Ahmad et al., “Alzheimer disease detection empowered with transfer learning,” Computers, Materials & Continua, vol. 70, no. 3, pp. 5005–5019, 2022.

11. B. Ihnaini, M. A. Khan, T. A. Khan, S. Abbas, M. S. Daoud et al., “A smart healthcare recommendation system for multidisciplinary diabetes patients with data fusion based on deep ensemble learning,” Computational Intelligence and Neuroscience, vol. 7, no. 3, pp. 1–16, 2021.

12. A. H. Khan, M. A. Khan, S. Abbas, S. Y. Siddiqui, M. A. Saeed et al., “Simulation, modeling, and optimization of intelligent kidney disease predication empowered with computational intelligence approaches,” Computers, Materials & Continua, vol. 67, no. 2, pp. 1399–1412, 2021.

13. M. Saleem, M. A. Khan, S. Abbas, M. Asif, M. Hassan et al., “Intelligent FSO link for communication in natural disasters empowered with fuzzy inference system,” in Int. Conf. on Electrical, Communication, and Computer Engineering, Pakistan, pp. 1–6, 2019.

14. S. A. Fatima, N. Hussain, A. Balouch, I. Rustam, M. Saleem et al., “IoT enabled smart monitoring of coronavirus empowered with fuzzy inference system,” International Journal of Advance Research, Ideas and Innovations in Technology, vol. 6, no. 1, pp. 188–194, 2020.

15. M. A. Khan, S. Abbas, A. Atta, A. Ditta, H. Alquhayz et al., “Intelligent cloud based heart disease prediction system empowered with supervised machine learning,” Computers, Materials & Continua, vol. 65, no. 1, pp. 139–151, 2020.

16. M. A. Khan, S. Abbas, K. M. Khan, M. A. Ghamdi and A. Rehman, “Intelligent forecasting model of COVID-19 novel coronavirus outbreak empowered with deep extreme learning machine,” Computers, Materials & Continua, vol. 64, no. 3, pp. 1329–1342, 2020.

17. M. Asif, S. Abbas, M. A. Khan, A. Fatima, M. A. Khan et al., “MapReduce based intelligent model for intrusion detection using machine learning technique,” Journal of King Saud University-Computer and Information Sciences, vol. 12, no. 1, pp. 1–9, 2021.

18. M. Saleem, S. Abbas, T. M. Ghazal, M. A. Khan, N. Sahawneh et al., “Smart cities: Fusion-based intelligent traffic congestion control system for vehicular networks using machine learning techniques,” Egyptian Informatics Journal, vol. 23, no. 3, pp. 417–426, 2022.

19. T. M. Ghazal, A. U. Rehman, M. Saleem, M. Ahmad, S. Ahmad et al., “Intelligent model to predict early liver disease using machine learning technique,” in 2022 Int. Conf. on Business Analytics for Technology and Security, UAE, pp. 1–5, 2022.

20. M. Salman and M. A. Rasool, “A systematic review: Explainable artificial intelligence based disease prediction,” International Journal of Advanced Sciences and Computing, vol. 1, no. 1, pp. 1–6, 2022.

21. H. Shahamat and M. S. Abadeh, “Brain MRI analysis using a deep learning based evolutionary approach,” Neural Networks, vol. 126, pp. 218–234, 2020.

22. M. Zhang, G. S. Young, H. Chen, J. Lia, L. Qin et al., “Deep-learning detection of cancer metastases to the brain on MRI,” Journal of Magnetic Resonance Imaging, vol. 52, no. 4, pp. 1227–1236, 2020.

23. L. Zhou, Z. Zhang, Y. C. Chen, Z. Y. Zhao, X. D. Yin et al., “A deep learning-based radiomics model for differentiating benign and malignant renal tumors,” Translational Oncology, vol. 12, no. 2, pp. 292–300, 2019.

24. A. Chughtai, Z. Malik and S. Chugtai, “Brain tumor image generations using deep convolutional generative adversarial networks,” International Journal of Computational and Innovative Sciences, vol. 1, no. 3, pp. 1–12, 2022.

25. Y. Yang, L. F. Yan, X. Zhang, Y. Han, H. Y. Nan et al., “Glioma grading on conventional MR images: A deep learning study with transfer learning,” Frontiers in Neuroscience, vol. 12, no. 4, pp. 1–18, 2018.

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
