Open Access

ARTICLE

Optimized Identification with Severity Factors of Gastric Cancer for Internet of Medical Things

1 Faculty of Computing, Universiti Teknologi Malaysia, Johor Bahru, 81310, Malaysia

2 Faculty of Science and Technology, Shaheed Benazir Bhutto University Sanghar Campus, Pakistan

3 Faculty of Computing and Information Technology, Sule Lamido University, Kafin Hausa, P.M.B.048, Nigeria

* Corresponding Author: Fatima Tul Zuhra. Email:

Computers, Materials & Continua 2023, 75(1), 785-798. https://doi.org/10.32604/cmc.2023.034540

Received 20 July 2022; Accepted 17 November 2022; Issue published 06 February 2023

Abstract

The Internet of Medical Things (IoMT) emerged from the vision of the Wireless Body Sensor Network (WBSN) to improve health monitoring systems, and it has an enormous impact on healthcare by recognizing levels of risk/severity factors (early diagnosis, treatment, and supervision of chronic diseases such as cancer) via wearable/electronic health sensors such as the wireless endoscopic capsule. AI-assisted endoscopy plays a very significant role in the detection of gastric cancer. Convolutional Neural Networks (CNNs) have been widely used to diagnose gastric cancer based on various feature extraction models; however, this limits identification and categorization performance with respect to the cancerous stages and grades associated with each type of gastric cancer. This paper proposes an optimized AI-based approach to diagnose and assess the risk factor of gastric cancer based on its type, stage, and grade in endoscopic images for smart healthcare applications. The proposed method consists of five phases: image pre-processing, Four-Dimensional (4D) image conversion, image segmentation, K-Nearest Neighbour (K-NN) classification, and multi-grading and staging of image intensities. The performance of the proposed method was evaluated on two datasets consisting of color and black-and-white endoscopic images. The simulation results verified that the proposed approach detects gastric cancer with 88.09% sensitivity, 95.77% specificity, and 96.55% overall accuracy.

The Internet of Medical Things (IoMT), also known as the Wearable Internet of Things (WIoT), is a promising technology that modernizes existing healthcare systems by fusing the Internet of Things (IoT), Artificial Intelligence (AI), and Wireless Body Sensor Networks (WBSNs) into a smart healthcare system. IoMT is attracting tremendous research interest for providing remote healthcare anytime and anywhere, with special attention to emergencies and to avoiding unfortunate incidents. The concept of IoT originated with Kevin Ashton in 1999, i.e., “uniquely identifiable interoperable connected things/objects with Radio Frequency Identification (RFID) technology” [1]. Typically, IoT provides a Machine-to-Machine (M2M) network infrastructure through which a wide variety of things with different sizes and abilities communicate autonomously with each other to make more informed decisions about the provision of services. In IoT, smart devices such as watches, door locks, motorbikes, wheelchairs, fire alarms, mobile phones, fitness trackers, vehicles, medical sensors, electronic appliances, computers, security systems, and many more are interconnected via the Internet. The infrastructure of IoMT includes a range of WBSNs with various sensor nodes, a base station (laptop), a medical server, and smart services interconnected via the Internet to collect patient-related data, provide remote aid, and support the pharmaceutical industry, as shown in Fig. 1. IoMT emerged from the vision of WBSN to improve health monitoring systems. In a hospital environment, especially in non-critical situations, various biosensors collect physiological data and transmit it to the medical server, so that doctors receive the patient information along with the physiological data [2–4]. In a critical/emergency situation, however, family members and medical staff are notified via alarms or warning signals so that immediate treatment and rescue operations can be carried out.
Moreover, in a home environment, WBSNs are deployed to monitor elderly patients, which minimizes healthcare costs and avoids unnecessary hospitalization.

Figure 1: Infrastructure of the IoMT

A wireless endoscopic capsule acts as a biosensor node consisting of a micro-imaging camera with a wireless circuit and localization software, while a data recorder attached to the human body acts as a sink node. As the capsule passes through the gastrointestinal tract, it captures 50,000 frames/images in eight hours and transmits them to the base station via the recorder. Although this produces a huge amount of patient data, processing it and making intelligent, feasible decisions from it are time-consuming. Scientists and researchers have been trying to imitate the capabilities of the human brain through AI techniques and models in order to create autonomous, intelligent applications that make decisions without human intervention. Existing AI techniques are used for automated resource provisioning and for extracting hidden knowledge from raw data, identifying regular patterns that support better predictions and critical decisions in medical diagnosis. In addition, IoMT has turned the idea of a smarter world into reality, together with a massive range of services. It helps caregivers and patients improve quality of life, understand health risks, and diagnose, treat, and manage chronic diseases early (for instance, heart/cardiovascular abnormalities, diabetes, and cancer of the brain, stomach, lungs, skin, etc.) via wearable health sensors (pacemakers, endoscopic capsules, etc.) with a wireless link to a hub (recorder) placed on the waist or near the body. Human life can therefore be improved if disorders and diseases are predicted at a preliminary stage, by recognizing vital signs, before they become worse and unsafe. The scientific contributions of this paper are summarized as follows.

• We critically analyze various types of cancer, with a focus on gastric cancer, along with the most relevant literature.

• We propose an optimized AI-based approach for smart healthcare applications of IoMT to diagnose and discriminate the types, stages, and grades of gastric cancer in endoscopic images.

• We evaluate the efficacy of the proposed approach in terms of specificity, sensitivity, and overall accuracy, compare it with existing state-of-the-art methods, and show that the proposed method achieves superior performance.

The rest of the paper is organized as follows. Section 2 introduces the basic concept of cancer, investigates and summarizes existing gastric cancer detection approaches, and highlights their limitations. Section 3 describes the proposed optimized AI-based approach for smart healthcare applications in depth. Section 4 presents the experimental results. Finally, Section 5 concludes the paper and outlines future directions.

The human body is composed of cells that grow and divide to form new cells. When cells become damaged or grow old, they die and new cells replace them. When cancer arises, this normal cycle is completely disrupted: cancer cells keep multiplying without stopping and can invade surrounding tissues anywhere in the body. Gastric cancer is a dangerous, usually slow-growing tumor of the stomach, which is part of the gastrointestinal tract running from the oral cavity to the rectum, where food is expelled. The main function of this tract is to break food down into nutrients through a series of processes, and a gastrointestinal disorder or disease impairs this function. It is therefore very important to identify gastric cancer at an early stage.

In this context, medical imaging (including segmentation) plays a vital role and has a great impact on medicine and on the identification and treatment of multidisciplinary diseases. The examination of cancer images depends entirely on professional doctors with high expertise and clinical experience, and the growing amount of medical imaging data has created additional challenges for radiologists. Deep and machine learning techniques are now making remarkable progress in the gastric cancer domain thanks to their learning capacity and computational power. These methods provide an effective solution for automatic classification and segmentation to achieve high-precision intelligent identification of cancers [5]. AI-based diagnosis has accordingly been applied to endoscopy using Convolutional Neural Networks (CNNs) [6], Computerized Tomography (CT) [7], and pathology [8]. In [9], various machine learning algorithms are used for feature selection to reduce the feature space and data dimensionality, and a K-NN algorithm is used to classify lymph node versus non-lymph node metastasis in gastric cancer. Furthermore, a Computer-Aided Diagnosis (CAD) system consisting of multiple CNN-based classification models has been proposed for the classification of pathological sites [10]. In this CAD system, the endoscopic images are pre-classified into negative and positive states according to evidence of cancer and non-cancer diseases, such as a primary tumor, that comprise multifaceted vascular information.

A modified CNN classification model has also been proposed for Gastric Intestinal Metaplasia (GIM) [11]. In that work, an identification system is developed from the data of 336 patients through a retrospective collection of 1880 endoscopic images from different hospitals, histologically confirmed as GIM or non-GIM. In addition, an AI-based system has been developed to predict the invasion depth of gastric cancer from images taken at different distances and angles using White-Light Imaging (WLI), Narrow-Band Imaging (NBI), and indigo-carmine dye contrast imaging (Indigo) [12]. More recently, an expert system has been proposed that uses three pre-trained CNN models, InceptionV3, VGG19, and ResNet50, for feature extraction [13], with supervised machine learning algorithms classifying the extracted features into bleeding and non-bleeding endoscopic images. The above literature shows that AI-assisted endoscopy plays a very significant role in the detection of gastric cancer. However, most of these methods diagnose gastric cancer via CNNs built on various feature extraction models, which limits identification and classification performance with respect to the cancerous stages and grades associated with each type of gastric cancer.

This section describes the overall procedure of the proposed optimized AI-based approach, which is based entirely on the identification and risk assessment of gastric cancer. The proposed method consists of five phases: image preprocessing, Four-Dimensional (4D) image conversion, image segmentation, K-Nearest Neighbour (K-NN) classification, and multi-grading and staging of image intensities. Two datasets of captured (unprocessed) endoscopic images are considered: (i) Dataset I, consisting of black-and-white images from the American Cancer Society (ACS), and (ii) Dataset II, consisting of color images from Kvasir [14,15]. Referring to Fig. 2 and Algorithm 1, the endoscopic images are first preprocessed and converted into 4D images; the 4D images are then segmented and classified with K-NN, and the histograms of image intensities are used to determine the stages, grades, and types of gastric cancer. Finally, the prevalence of the disease is calculated to obtain the overall accuracy together with the Matthews Correlation Coefficient (MCC), sensitivity, and specificity. The output of each phase serves as the input to the subsequent phase, and each phase is detailed in the following subsections.

Figure 2: Flowchart of the proposed approach

3.1 Image Preprocessing

This is the preliminary phase of the proposed approach, which aims to enhance image quality by eliminating redundant information and noise from the captured gastrointestinal endoscopic 3D (430 × 476) images of both Datasets I and II via a denoising method, i.e., transform-domain filtering (Gaussian filter). The unnecessary background is then removed with the GrabCut technique. Finally, the color endoscopic images (Dataset II) are converted to gray-scale before being passed to the next phase for further processing.
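A minimal sketch of the gray-scale conversion and Gaussian denoising steps is given below, assuming 8-bit RGB input held in a NumPy array. The ITU-R BT.601 luma weights, the `sigma` value, and the helper names (`to_gray`, `gaussian_smooth`, `preprocess`) are illustrative assumptions rather than the authors' MATLAB settings, and the GrabCut background removal is omitted (OpenCV's `cv2.grabCut` is one readily available implementation).

```python
import numpy as np


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 RGB image to gray-scale with ITU-R BT.601 luma weights."""
    return rgb[..., :3].astype(float) @ np.array([0.299, 0.587, 0.114])


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_smooth(gray: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Separable Gaussian blur: filter every row, then every column.

    Borders are handled by replicating edge pixels, so the output has the
    same shape as the input.
    """
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(gray.astype(float), r, mode="edge")

    def blur(v: np.ndarray) -> np.ndarray:
        # The kernel is symmetric, so convolution and correlation coincide.
        return np.convolve(v, k, mode="valid")

    rows = np.apply_along_axis(blur, 1, padded)  # shape (H + 2r, W)
    return np.apply_along_axis(blur, 0, rows)    # shape (H, W)


def preprocess(img: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Gray-scale conversion (color input only) followed by Gaussian denoising.

    Both steps are linear, so applying them in the opposite order, as the text
    describes, gives the same result up to floating-point rounding.
    """
    gray = to_gray(img) if img.ndim == 3 else img.astype(float)
    return gaussian_smooth(gray, sigma)
```

A larger `sigma` suppresses more noise but also blurs small lesion boundaries, so in practice it would be tuned on the data.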

3.2 Four Dimensional Image Conversion

In this phase, the preprocessed endoscopic images are converted into 4D (440 × 510) spatial images to obtain a clearer view and the precision needed to detect minute, early-stage tumors in the stomach. For this purpose, the FMRIB Software Library (FSL) tool is used to increase the resolution and pixel dimensions (pixel size 360 mm × 360 mm, distance between slices 1 mm, and slice width 6 mm), targeting the flow of pixels.
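The resolution increase from 430 × 476 to 440 × 510 pixels can be approximated with plain bilinear interpolation, as sketched below. This is an assumption for illustration only: it does not reproduce the FSL processing or the 4D conversion itself, and `resize_bilinear` is a hypothetical helper.

```python
import numpy as np


def resize_bilinear(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Resample a 2-D image to (out_h, out_w) with bilinear interpolation.

    Output corners map exactly onto input corners ("align corners" convention).
    """
    in_h, in_w = img.shape
    ys = np.linspace(0, in_h - 1, out_h)  # input row coordinate of each output row
    xs = np.linspace(0, in_w - 1, out_w)  # input column coordinate of each output column
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None]  # vertical interpolation weights, shape (out_h, 1)
    wx = (xs - x0)[None, :]  # horizontal interpolation weights, shape (1, out_w)
    img = img.astype(float)
    # Interpolate along x on the two neighbouring rows, then along y.
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy
```

For example, `resize_bilinear(gray, 440, 510)` upsamples a 430 × 476 gray-scale image to the 440 × 510 size stated above.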

3.3 Image Segmentation

In this phase, the 4D endoscopic images are partitioned into meaningful sections or regions based on criteria such as color, intensity, texture, and their combinations in order to identify tumors. A non-flexible transformation approach based on a Gaussian mixture model is used to increase the signal-to-noise ratio (the square root of the number of photons in the brightest part of the image) of the concurrent 4D spatial/phonological endoscopic images and to obtain the required deformation matrices. Moreover, edge-based and location-based detection are performed on the endoscopic images to consider the pixels and their boundaries in terms of the position and region of the tumor cells in the stomach, respectively. Each pixel in a region is measured against the boundaries of the tumor cells. A local segmentation is then performed on the specific cancerous part to improve the visual effect of the endoscopic images. Finally, a threshold value is defined for pixel intensity: using the histogram, pixels below the threshold are converted to black (bit value 0) and pixels above it are converted to white (bit value 1).
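The final thresholding step can be sketched as follows. The text does not state how the threshold is chosen; as an assumption, this sketch picks it from the image histogram with Otsu's method, which maximizes the between-class variance of the black and white classes. The input is assumed to be an 8-bit gray-scale NumPy array, and the function names are hypothetical.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Pick a threshold t in 0..255 from the histogram with Otsu's method.

    Class 0 holds pixels <= t, class 1 holds pixels > t. Expects uint8 input.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()                   # normalized histogram
    omega = np.cumsum(p)                    # probability of class 0 for each t
    mu = np.cumsum(p * np.arange(256))      # cumulative mean of class 0
    mu_t = mu[-1]                           # global mean intensity
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu_t * omega - mu) ** 2 / denom  # between-class variance
    sigma_b2[denom == 0] = 0.0              # thresholds that leave one class empty
    return int(np.argmax(sigma_b2))


def binarize(gray: np.ndarray, t: int) -> np.ndarray:
    """Pixels above t become 1 (white); pixels at or below t become 0 (black)."""
    return (gray > t).astype(np.uint8)
```

Usage: `mask = binarize(gray, otsu_threshold(gray))`. A fixed, hand-chosen threshold can be passed to `binarize` instead if that is what the method calls for.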

3.4 K-Nearest Neighbor Classification

In this phase, K-NN, a supervised machine learning algorithm that can solve both classification and regression problems, is used. It stores all available labelled cases and classifies new cases based on a similarity measure (e.g., distance functions). The condition shown in an endoscopic image is classified by a majority vote over the tumor cells closest to it under the calculated distance. The variables Xi, Yi, and q are used to categorize the cancer stages and grades; the Histogram of Oriented Gradients (HOG) exposes the detailed distance or location of the cancer in the stomach as an object from one point to another and indicates the specific value of the cancer in solid form with maximum intensity (frequency of occurrence), which is used to measure the grade and stage of the cancer. Each new case is assigned the most common class among its K nearest neighbors; if K equals one, it simply takes the class of its single nearest neighbor. The distances, which are valid for continuous variables, are calculated by Eqs. (1)–(3).
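The K-NN decision rule can be sketched as below. Because Eqs. (1)–(3) do not survive in this text, the sketch assumes they are the usual Euclidean, Manhattan, and Minkowski distances between feature vectors Xi and Yi, with q the Minkowski order (q = 2 gives Euclidean, q = 1 Manhattan). The feature vectors, labels, and function names are hypothetical.

```python
from collections import Counter

import numpy as np


def minkowski(a, b, q: float = 2.0) -> float:
    """Minkowski distance of order q (q=2: Euclidean, q=1: Manhattan)."""
    diff = np.abs(np.asarray(a, dtype=float) - np.asarray(b, dtype=float))
    return float(np.sum(diff ** q) ** (1.0 / q))


def knn_predict(X_train, y_train, x, k: int = 3, q: float = 2.0):
    """Return the majority label among the k training samples nearest to x."""
    dists = np.array([minkowski(xi, x, q) for xi in X_train])
    nearest = np.argsort(dists, kind="stable")[:k]  # indices of the k closest samples
    votes = Counter(y_train[i] for i in nearest)
    # most_common keeps first-encountered order for equal counts, and the loop
    # visits neighbours from nearest to farthest, so a tie goes to the label
    # whose closest member is nearest to x.
    return votes.most_common(1)[0][0]
```

With k = 1 the rule reduces to copying the label of the single nearest sample, matching the special case described above.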

The simplest way to determine the optimal value of K is to first inspect the data. In general, a larger K is more reliable because it reduces the effect of noise, although there is no guarantee. Furthermore, cross-validation on an independent dataset has been performed to determine a suitable value of K.
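Choosing K by cross-validation can be illustrated with leave-one-out validation, one common variant. The text does not say which scheme is used, so this choice, the candidate K values, and the Euclidean distance are assumptions. Each sample is classified by its K nearest other samples, and the K with the highest accuracy is kept.

```python
import numpy as np


def loocv_accuracy(X, y, k: int) -> float:
    """Leave-one-out accuracy of a k-NN classifier (Euclidean distance, majority vote)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    # Pairwise distance matrix; the diagonal is set to infinity so that a sample
    # is never counted as its own neighbour.
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)
    correct = 0
    for i in range(len(X)):
        nearest = np.argsort(d[i], kind="stable")[:k]
        labels, counts = np.unique(y[nearest], return_counts=True)
        # Ties are broken in favour of the smallest label in sorted order.
        correct += int(labels[np.argmax(counts)] == y[i])
    return correct / len(X)


def choose_k(X, y, candidates=(1, 3, 5, 7)):
    """Return the candidate K with the best leave-one-out accuracy, plus all scores."""
    scores = {k: loocv_accuracy(X, y, k) for k in candidates}
    best = max(scores, key=scores.get)  # on a tie, the earliest candidate wins
    return best, scores
```

Odd candidate values avoid voting ties in two-class problems, and a K that is too large relative to the smallest class drags every prediction toward the other classes.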

3.5 Multi-Grading and Staging of Image Intensities

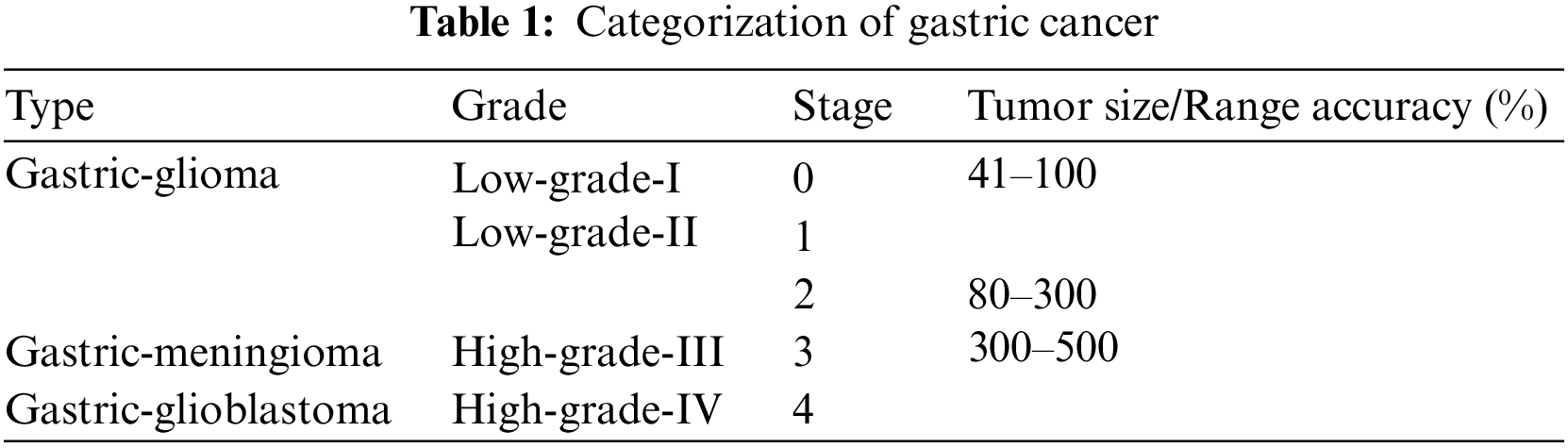

The cancer classification reflects the irregular shape of cancer cells and tissues. In this phase, gastric cancer is categorized according to its type, grade, and stage. The types, grades, and stages of cancer, along with their ranges, are defined in Table 1.


4.1 The Classification Performance of Gastric Cancer

Using the method described in Section 3, experiments were conducted in MATLAB to identify gastric cancer and measure the classification performance in terms of grading and staging. For initial testing, 150 endoscopic images were taken from each dataset (I and II). Of these 300 images, 250 contain stage 0–4 anomalies and 50 contain inflammatory anomalies. Fig. 3 shows sample 3D (430 × 476) endoscopic images, and Fig. 4 shows the experimental results in terms of 4D image segmentation with binary transformation on the black-and-white (B/W) and color (C) endoscopic images. Fig. 5 shows the K-NN classification and histogram (HOG) of each grade and stage, based on the statistical values of tumor size and image intensity. The gastric cancer stages shown in endoscopic images 4D_im1 to 4D_im12 in Figs. 4 and 5 are as follows.

■ 4D_im1 and 4D_im7 represent the first stage and low-grade-I of Glioma cancer with the size of 74 cm (B/W and C) and maximum intensity B/W: 8945 and C: 5520.

■ 4D_im2 and 4D_im8 represent the second stage and low-grade-II of Glioma cancer with the size of 254 cm (B/W) and 95 cm (C), and maximum intensity B/W: 64502 and C: 8945.

■ 4D_im3 represents the third stage and high-grade-III of Meningioma cancer with the size of 360 cm (B/W) and the maximum intensity B/W: 129672.

■ 4D_im4 represents the fourth stage and high-grade-IV of Glioblastoma cancer with the size of 380 cm (B/W) and maximum intensity B/W: 144348.

■ 4D_im5 and 4D_im6 represent the second stage and low-grade-II of Glioma cancer with the size of 85 and 144 cm (B/W) with maximum intensity B/W: 7288 and 20855.

■ 4D_im9 represents the second stage and high-grade-II of Glioma cancer with the size of 95 cm (C) and the maximum intensity C: 12672.

■ 4D_im10 represents the second stage and high-grade-II of Glioma cancer with the size of 210 cm (C) and maximum intensity C: 43914.

■ 4D_im11 and 4D_im12 represent the fourth stage and high-grade-IV of Glioblastoma cancer with the size of 383 and 469 cm (C) and maximum intensity C: 146455 and 219658.

Figure 3: (3D_im1–3D_im12) 3D endoscopic images

Figure 4: (4D_im1–4D_im12) 4D converted endoscopic images, (r1–r12) segmented regions and (b1–b12) binary transformation results of image segmentation of the endoscopic images

Figure 5: (k-mean_im1–k-mean_im12) K-NN classification and histogram results of the endoscopic image intensities

4.2 Prevalence of Gastric Cancer

The prevalence of gastric cancer is evaluated by sensitivity, specificity, MCC, and overall accuracy. Sensitivity and specificity are statistical measures of the performance of a binary classification test and are widely used in medicine. The measures are defined as follows:

■ Sensitivity is the true positive rate: the proportion of actual positives (affected cases) that are correctly identified as affected.

■ Specificity is the true negative rate: the proportion of actual negatives (unaffected cases) that are correctly identified as unaffected.

■ MCC measures the quality of binary classification as a balanced indicator, estimating the correlation between the predicted and actual disease classes.

■ Overall accuracy is the proportion of all cases classified correctly, computed from the True Positives (TP), False Negatives (FN), False Positives (FP), and True Negatives (TN) of the proposed method.

We use sensitivity (Se, also called recall), the TP rate; specificity (Sp), the TN rate; accuracy (Acc), the proportion of correctly classified cases; and the F-score, which measures classification performance in terms of recall and precision (positive predictive value). All metrics are defined by Eqs. (4)–(7).
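The standard definitions of these measures are sketched below from the confusion-matrix counts TP, FN, FP, and TN, assuming Eqs. (4)–(7) follow the usual textbook forms. Precision is included because the F-score needs it, and MCC is added for completeness; the function name is hypothetical and the counts in the usage note are made up, not the paper's results.

```python
import math


def binary_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Standard confusion-matrix metrics (assumes no denominator is zero)."""
    se = tp / (tp + fn)                    # sensitivity / recall / true positive rate
    sp = tn / (tn + fp)                    # specificity / true negative rate
    acc = (tp + tn) / (tp + fn + fp + tn)  # overall accuracy
    ppv = tp / (tp + fp)                   # precision / positive predictive value
    f1 = 2 * ppv * se / (ppv + se)         # harmonic mean of precision and recall
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den    # Matthews correlation coefficient in [-1, 1]
    return {"Se": se, "Sp": sp, "Acc": acc, "PPV": ppv, "F1": f1, "MCC": mcc}
```

For example, `binary_metrics(40, 10, 5, 45)` gives Se = 0.8, Sp = 0.9, and Acc = 0.85. Unlike accuracy, MCC stays informative when the positive and negative classes are very unequal in size.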

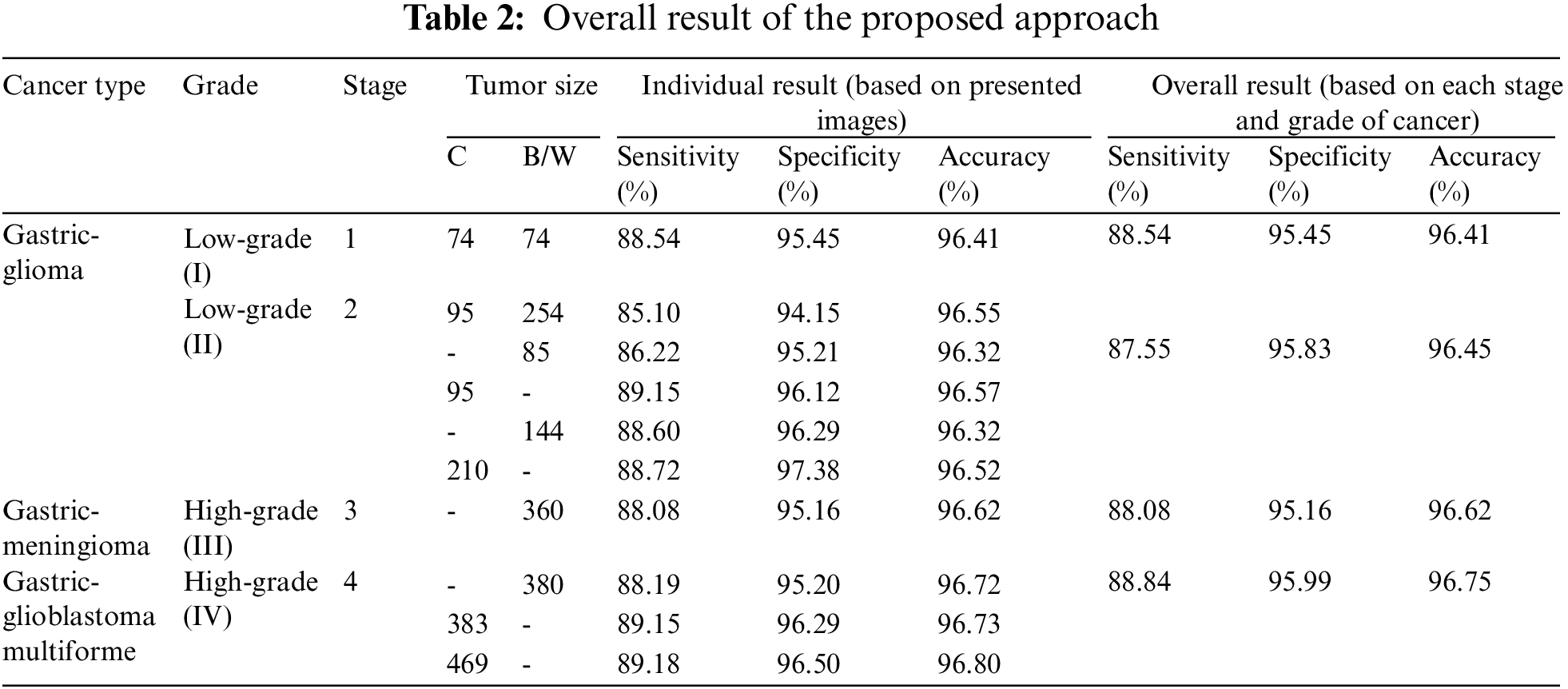

The proposed method is also compared with existing methods in terms of overall accuracy. The existing methods rely on feature extraction of gastric cancer for other purposes. In [7], a CT-based radiomic model built with a machine learning method creates a predictive radiomic signature with 83.1% accuracy, while in [9], a simple K-NN-based diagnosis method distinguishes lymph node metastasis from non-lymph node metastasis with 96.33% accuracy. Table 2 shows the overall results of the proposed method, which include the types, grades, and stages based on the size of gastric cancer, with 96.55% overall accuracy.

In this paper, we have proposed an optimized AI-based approach that identifies and assesses the severity factors of gastric cancer to improve the overall identification and classification results for endoscopic images. For this purpose, we examined two datasets of color and black-and-white endoscopic images and categorized gastric cancer by type, grade, and stage. The experimental results demonstrate the effectiveness of the proposed method in making accurate decisions about the four grades and stages of gastric cancer. The simulation results verified that the proposed method achieves an aggregated sensitivity of 88.09%, specificity of 95.77%, and accuracy of 96.55%. In future work, we aim to extend the current work to a detailed classification of each grade by investigating light field tools with the K-NN algorithm to achieve a balance between efficiency and accuracy.

Acknowledgement: The authors would like to thank Universiti Teknologi Malaysia, for providing the environment to conduct this research work. In addition, one of the authors of this paper would like to also thank Sule Lamido University, Kafin Hausa, Nigeria, for generous support to pursue his postgraduate studies.

Funding Statement: The authors extend their appreciation to the Universiti Teknologi Malaysia for funding this research work through the Project Number Q.J130000.2409.08G77.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. S. Li, L. D. Xu and S. Zhao, “The internet of things: A survey,” Information Systems Frontiers, vol. 17, pp. 243–259, 2015.

2. F. T. Zuhra, K. A. Bakar, A. Ahmed and M. A. Tunio, “Routing protocols in wireless body sensor networks: A comprehensive survey,” Journal of Network and Computer Applications, vol. 99, no. 1, pp. 73–97, 2017.

3. F. T. Zuhra, K. B. A. Bakar, A. A. Arain, K. M. Almustafa, T. Saba et al., “LLTP-QoS: Low latency traffic prioritization and QoS-aware routing in wireless body sensor networks,” IEEE Access, vol. 7, no. 1, pp. 152777–152787, 2019.

4. F. T. Zuhra, K. B. A. Bakar, A. Ahmed, U. A. Khan and A. R. Bhangwar, “MIQoS-RP: Multi-constraint intra-BAN, QoS-aware routing protocol for wireless body sensor networks,” IEEE Access, vol. 8, no. 1, pp. 99880–99888, 2020.

5. P. -H. Niu, L. -L. Zhao, H. -L. Wu, D. -B. Zhao and Y. -T. Chen, “Artificial intelligence in gastric cancer: Application and future perspectives,” World Journal of Gastroenterology, vol. 26, no. 1, pp. 5408, 2020.

6. Y. Horiuchi, K. Aoyama, Y. Tokai, T. Hirasawa, S. Yoshimizu et al., “Convolutional neural network for differentiating gastric cancer from gastritis using magnified endoscopy with narrow band imaging,” Digestive Diseases and Sciences, vol. 8, no. 65, pp. 1355–1363, 2020.

7. W. Zhang, M. Fang, D. Dong, X. Wang, X. Ke et al., “Development and validation of a CT-based radiomic nomogram for preoperative prediction of early recurrence in advanced gastric cancer,” Radiotherapy and Oncology, vol. 145, no. 1, pp. 13–20, 2020.

8. F. Leon, M. Gelvez, Z. Jaimes, T. Gelvez and H. Arguello, “Supervised classification of histopathological images using convolutional neuronal networks for gastric cancer detection,” in Proc. XXII Symp. on Image, Signal Processing and Artificial Vision (STSIVA), Bucaramanga, Colombia, pp. 1–5, 2019.

9. C. Li, S. Zhang, H. Zhang, L. Pang, K. Lam et al., “Using the K-nearest neighbor algorithm for the classification of lymph node metastasis in gastric cancer,” Computational and Mathematical Methods in Medicine, vol. 2012, no. 8, pp. 1–11, 2012.

10. D. T. Nguyen, M. B. Lee, T. D. Pham, G. Batchuluun, M. Arsalan et al., “Enhanced image-based endoscopic pathological site classification using an ensemble of deep learning models,” Sensors, vol. 20, no. 1, pp. 5982, 2020.

11. T. Yan, P. K. Wong, I. C. Choi, C. M. Vong and H. H. Yu, “Intelligent diagnosis of gastric intestinal metaplasia based on convolutional neural network and limited number of endoscopic images,” Computers in Biology and Medicine, vol. 126, no. 1, pp. 104026, 2020.

12. S. Nagao, Y. Tsuji, Y. Sakaguchi, Y. Takahashi, C. Minatsuki et al., “Highly accurate artificial intelligence systems to predict the invasion depth of gastric cancer: Efficacy of conventional white-light imaging, nonmagnifying narrow-band imaging, and indigo-carmine dye contrast imaging,” Gastrointestinal Endoscopy, vol. 92, no. 1, pp. 866–873, 2020.

13. A. Caroppo, A. Leone and P. Siciliano, “Deep transfer learning approaches for bleeding detection in endoscopy images,” Computerized Medical Imaging and Graphics, vol. 88, no. 101852, pp. 101852, 2021.

14. F. R. Lucchesi and N. D. Aredes, “Radiology data from the cancer genome atlas stomach adenocarcinoma [TCGA-STAD] collection,” The Cancer Imaging Archive, vol. 10, pp. K9, 2016.

15. K. Pogorelov, K. R. Randel, C. Griwodz, S. L. Eskeland, T. D. Lange et al., “Kvasir: A multi-class image dataset for computer aided gastrointestinal disease detection,” in Proc. 8th ACM on Multimedia Systems Conf., Taipei, Taiwan, pp. 164–169, 2017.

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
