Open Access

ARTICLE

Enhancing Military Visual Communication in Harsh Environments Using Computer Vision Techniques

1 School of Built Environment, Engineering and Computing, Leeds Beckett University, Leeds, LS6 3HF, UK

2 Department of Computer Science and Engineering, Chennai Institute of Technology, Chennai, 600069, India

3 Centre for Research Impact & Outcome, Chitkara University Institute of Engineering and Technology, Chitkara University, Rajpura, 140401, India

4 Department of Electronics and Communication Engineering, Panimalar Engineering College, Poonamallee, Chennai, 600123, India

5 Computer Skills, Department of Self-Development Skill, Common First Year Deanship, King Saud University, Riyadh, 11362, Saudi Arabia

6 Computer Science Department, College of Computer and Information Sciences, King Saud University, Riyadh, 12372, Saudi Arabia

7 Chitkara Centre for Research and Development, Chitkara University, Rajpura, 140401, India

8 Division of Research & Innovation, Uttaranchal University, Dehradun, 248007, India

* Corresponding Author: Shitharth Selvarajan. Email:

(This article belongs to the Special Issue: Advances in Object Detection: Methods and Applications)

Computers, Materials & Continua 2025, 84(2), 3541-3557. https://doi.org/10.32604/cmc.2025.064394

Received 14 February 2025; Accepted 04 June 2025; Issue published 03 July 2025

Abstract

This research investigates the application of digital images in military contexts by utilizing analytical equations to augment human visual capabilities. A comparable filter is used to improve the visual quality of the photographs by reducing truncations in the existing images. Furthermore, the collected images are processed using histogram gradients and a flexible threshold value that may be adjusted in specific situations. Thus, it is possible to reduce the occurrence of overlapping circumstances in collective picture characteristics by substituting grey-scale photos with colorized factors. The proposed method offers more robust feature representations by imposing a limiting factor that reduces overall scattering values; this is achieved by visualizing a graphical function. Moreover, to derive valuable insights from a series of photos, both the separation and inversion processes are conducted, and the comparison results are analyzed across four different scenarios. The results of the comparative analysis show that the proposed method reduces the time and space complexities to 1 s and 3%, respectively, whereas the existing strategy exhibits higher complexities of 3 s and 9.1%, respectively.

Identification of various circumstances with visualized patterns is mostly conducted via image processing techniques, particularly in cases where a distinct block division is not evident. In this scenario, it is crucial to employ a vision technology that accurately detects suitable boundaries while eliminating hazy circumstances to ensure the complete separation of blocks. Although many image processing algorithms have been used to identify objects in challenging situations, the accuracy of identification significantly decreases after scaling. Therefore, the suggested methodology utilizes computer vision algorithms to enhance the precision and security of collected images, enabling the acquisition of comprehensive shape features through classification techniques. Furthermore, utilizing an image processing tool is crucial in facilitating comprehensive surveillance and monitoring of the surrounding items. This tool enables the real-time separation of recognized objects through the implementation of a series of voice commands. Moreover, the application of computer vision algorithms enables real-time authentication, hence ensuring secure access to all blocks within each image with improved pixel quality. Nevertheless, the attributes of photographs acquired in challenging circumstances stay the same even when the images are scaled, enabling intelligent identification for both damage evaluation and defense tactics. Given the increased volume of data created in military applications, it becomes feasible to achieve comprehensive situational awareness through the use of visual patterns. In this scenario, the entire context can be effectively deployed by deploying centralized node processing units. Fig. 1 illustrates the block diagram of the proposed computer vision approach for detecting different objects collected in challenging situations.

Figure 1: Block diagram of computer vision measures in harsh environments

Fig. 1 presents the overall architecture of the proposed computer vision-based enhancement framework tailored for military applications in harsh environments. The system initiates with the image acquisition module, where real-time images affected by environmental distortions are captured. These images are passed through a visualized filtering stage, which isolates key edges and suppresses noise while preserving structural details. Next, graphical enhancement functions are applied to address truncations and illumination inconsistencies, improving clarity in low-visibility conditions. The histogram gradient module then identifies edge orientations and spatial intensity variations, aiding in precise object delineation. Following this, adaptive thresholding adjusts the local contrast and suppresses overlapping features. The final stages involve image separation and inversion mechanisms, which ensure robust segmentation of objects across multiple frequencies and lighting conditions. Together, these interconnected blocks form a cohesive pipeline for real-time, distortion-resilient visual communication.

1.1 Background and Related Works

The analysis of vision approaches is crucial for obtaining significant insights, as their implementation necessitates real-time processing while mitigating external influences on scaled images. Therefore, in this section, a comprehensive review of relevant works is conducted using multiple objective patterns, and a modernization solution is attained by enhancing essential criteria. In addition, the key functions of image processing techniques are examined to manage intricate security-related challenges. The existence of several feature sets in the processing of colorized image sets is seen to yield the most important metric in transmission systems [1]. The application of color segment embedding enables the comprehensive identification of various effects inside underwater systems, hence facilitating the establishment of a cohesive framework with interconnected mechanisms. While it is evident that underwater systems may be identified using color scaling mechanisms in real time, the application of homomorphic and multiple filter techniques is necessary to incorporate specific traits. This integration adds complexity to the process of obtaining unambiguous visualization. The retrieval network facilitates the analysis of complete picture features by leveraging neural networks, which are processed at a debauched rate [2]. This process involves the conversion of low-mapped features into high-spectral characteristics. It has been found that a greater number of localized picture features are required during these conversions to divide the entire block and distribute semantic information. However, as the images are reshaped, the local details are eliminated, necessitating the use of gradient mapping features that are not available in retrieval networks. Both of the above-mentioned processes focus on determining color variations and do not address information concerning grayscale spectrums, resulting in higher error rates.

In contrast, Ref. [3] predicts a greater quantity of data created for military applications by leveraging more comprehensive information from the entire image set. The observed outcomes in such forecasts are subject to interpretations influenced by the presence of normalization factors. If the threshold values are eliminated, the system will no longer retain the border requirements for the image collection, rendering direct sources unsuitable for military applications. Therefore, the mistake rate during identification increases due to the influence of interpretation values, and achieving precise mapping with utility features for improved decision-making is not possible. The estimation of acquired images in different contexts is conducted in [4] using distinct histogram gradients. These gradients are used to boost the current bands that are active in the resized photos. When gradients are raised, there is an increase in periodicity, resulting in compression rates that exceed the boundary limitations. This phenomenon gives rise to the occurrence of many mistakes. Furthermore, this study presents a scene discrimination mechanism that is applicable to a wide range of applications. This mechanism involves the real-time identification of important targets utilizing infrared patterns [5]. The presence of such infrared patterns is widely recognized as a fundamental approach in the early stages of picture analysis. When defining infrared patterns, it is necessary to construct two types of scenes, each with critical feature points. This creates a sophisticated system that separates each block. Furthermore, to address challenging environments, it is necessary to remove unclear images at designated target areas, requiring the use of multiple spectral scales. This approach effectively reduces the overall running time in this scenario. 
In addition to the integration procedure, the aforementioned strategic considerations can only be applied to underwater systems where it is significantly challenging to eliminate complete roughness in the entire image.

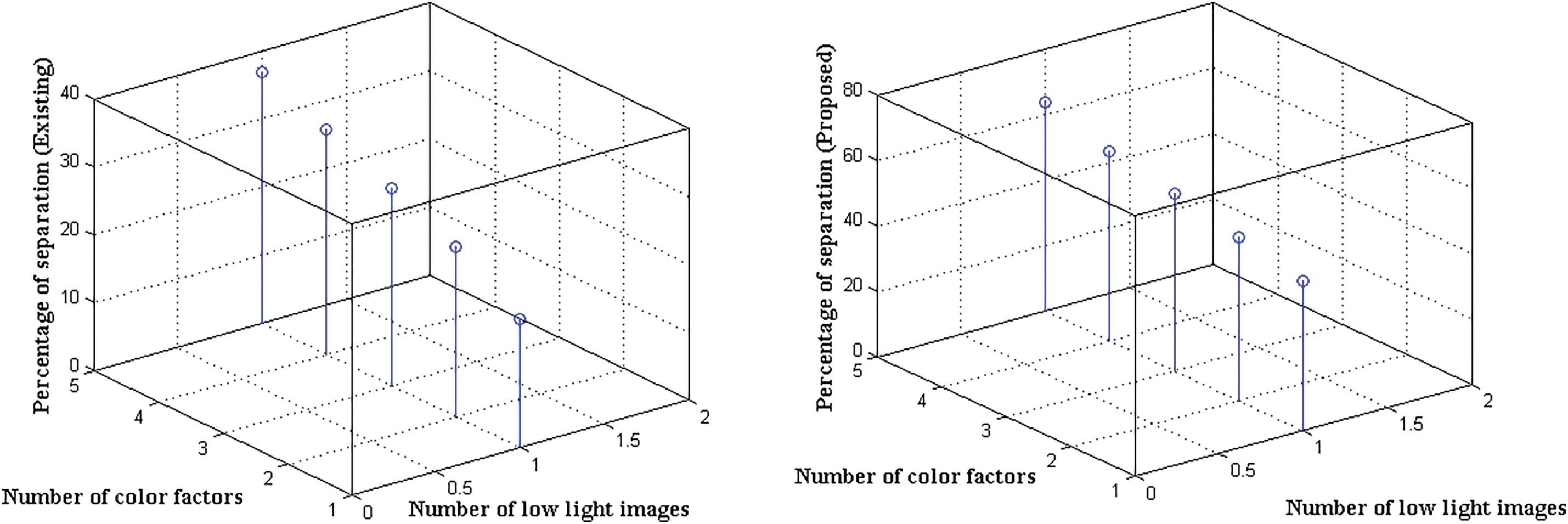

The use of a specialized device is employed in [6] to aid in the identification of image blocks within the near-infrared areas, where an edge detection operation is carried out. Throughout this process, the effect on the marginal sequence can be observed for all progressive values, resulting in the definition of complete sinusoidal functions in an appropriate manner. Furthermore, the extraction process in this scenario is straightforward compared to morphological separations, allowing for the integration of additional filter requirements with homomorphic features. Should advanced functionality in marginal distributions be necessary, machine vision techniques can be utilized to address uncontrollable concerns in specific applications. Various feature enhancement models are subsequently identified using deep learning algorithms, leading to improved performance visions accompanied by suitable countermeasures [7]. If any issues arise during image processing within block separation units, auxiliary networks may be employed to assist in separating different blocks after resizing the image sequence. In this system, the addition of auxiliary units will disrupt the initial image set, resulting in decreased classification accuracy due to the larger structure. In the context of computer vision measurements, it is possible to implement a retention mechanism when an image set is rejected. This method involves using image-enhancing features, such as a multiple-channel fusion procedure, in conjunction with adaptive color mechanisms [8]. By incorporating diverse color elements, it becomes possible to classify images accurately. However, in challenging circumstances, if the background does not match the appropriate color components, vision tasks are likely to fail. In addition, incorporating weighted multi-scale features can enhance the contrast of an image. 
This contrast enhancement relies on reliable parameters for the reconstruction procedures [9]. In this scenario, all reliable factors must collectively contribute to improving visual acuity across all forms of irradiance, thereby eliminating any low-frequency components at the receiver. Table 1 presents a comparison of similar works that utilize objective functions.

1.2 Research Gap and Motivation

Various objective patterns are observed in different ways when visualization is processed using optimized algorithms, as indicated in Table 1. Each traditional approach examines the processing strategy by segregating different blocks, resulting in significantly fewer circumstantial detections. A further significant deficiency in the current system is the absence of defined filters, which reduces the visualization factors and hence the restoration factors. Therefore, the suggested approach is designed to address the limitations of existing methods by answering the following questions.

RG1: Can the integration of suitable filters for picture enhancements be achieved through the utilization of control factor measurements?

RG2: Is it possible to reduce scattering measurements by observing graphical functions with defined restriction settings?

RG3: Can pictures be effectively separated using suitable restoration and inversion factors?

To address the limitations of current methodologies, the proposed strategy introduces a robust computer vision framework that leverages histogram gradients and adaptive thresholds for visual communication in harsh military environments. The key contributions of this work are as follows:

• Integration of a precision-driven filtering mechanism that enhances the visual quality of degraded military images using well-defined persuasive functions.

• Development of a graphical function-based visualization model that applies constraint-aware parameters to reduce image scattering and minimize truncation effects in low-light or complex environments.

• Implementation of a gradient-based restoration and inversion technique that improves image separability and enables accurate object recognition by applying adaptive inversion factors.

• Comprehensive evaluation using 928 real-time military images, demonstrating significant improvements over conventional methods, with processing time reduced to 1 s and enhancement error reduced to 3%.

This section explores the mathematical methodology employed for image enhancement, with the aim of effectively addressing challenging settings that require a conversion state for visual identification. Analytical representations play a significant role here, as they facilitate the classification and enhancement of extracted images and hence enable the recognition of comprehensive properties essential for various applications. The conversion probabilities from a low to a high state are monitored in real time using appropriate mathematical expressions, which are also represented by similar parametric expressions.

In order to attain a high level of precision in collected images within challenging military settings characterized by the disruption of diverse air conditions, a decomposition factor is employed to ensure the preservation of all image edges. The acquisition of detailed layers in current photos with high enhancement values is a direct consequence of the preservation process, as demonstrated by Eq. (1).

where,
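As an illustration of the layer decomposition idea, and independent of the exact form of Eq. (1), the following minimal Python sketch splits an image into a smooth base layer and a detail layer and then amplifies the detail layer. The function names `box_blur` and `enhance_details`, the `gain` and `radius` parameters, and the use of a plain mean filter are assumptions made for illustration only. A mean filter is not edge-preserving, so an edge-preserving smoother would be closer to the intent described above.

```python
def box_blur(img, radius=1):
    """Mean filter with edge replication; img is a list of rows of numbers."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp row index to the image
                    xx = min(max(x + dx, 0), w - 1)  # clamp column index to the image
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def enhance_details(img, gain=1.5, radius=1):
    """Return base + gain * detail, clamped to the 8-bit range [0, 255].

    base   = smoothed image (hypothetical stand-in for the decomposition)
    detail = img - base     (the layer that carries edges and texture)
    """
    base = box_blur(img, radius)
    return [
        [min(255.0, max(0.0, b + gain * (p - b))) for p, b in zip(row, base_row)]
        for row, base_row in zip(img, base)
    ]
```

With `gain = 1.0` the input is returned unchanged, while larger values increase the local contrast around edges.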

The existence of limited illumination in captured photos for military purposes will invariably hinder the attainment of comprehensive improvements, hence rendering the process exceedingly intricate in ascertaining precise resolutions. Hence, it is imperative to prevent low light circumstances by adjusting the appropriate parametric index, as specified in Eq. (2).

where,

In situations when low light conditions are present, it becomes imperative to emphasize specific qualities through the utilization of visualization functions. The proposed strategy aims to enhance the visualization factors by utilizing a two-degree value approach, resulting in the attainment of a persuaded function as illustrated in Eq. (3).

where,

In order to achieve comprehensive augmentation of cloud photographs obtained during diverse military operations, it is vital to incorporate radiance with the original image spectrum. In order to ensure accurate visualizations in challenging environments, it is necessary to measure the scattering values, as specified in Eq. (4).

where,

The increased level of light in contemporary photographs effectively captures all the information contained inside high-frequency representations. In wartime contexts, the presence of high frequency conditions is consistently required to effectively manage irradiations in images, hence enhancing visualization, as demonstrated by Eq. (5).

where,

In military applications, it is imperative to segregate images across multiple domains due to the significant variations in collected images across distinct frequency bands. Consequently, in this step of separation, the brightness of each picture will be reduced, resulting in an exponential transformation that increases the conditionality factors, as shown in Eq. (6).

where,

It is necessary to assess the extent of restoration in relation to both non-colorized and colorized image sets. In this scenario, dynamic ranges can be determined by employing scaling factors. Therefore, in this scenario, it is necessary to utilize the whole number of restored values along with their respective weighting functions, as specified in Eq. (7).

where,

In this scenario, it is necessary to partition the total pixel values into distinct border indications. This entails doing measurements along both the horizontal and vertical axis using inversion measures. Eq. (8) indicates that double-precision values are crucial for establishing the primary use of these inversion metrics.

where,

It is crucial to include a composite objective function for all the parametric observations listed above, as it allows for a multi-objective perspective with minimum and maximum values. Therefore, Eqs. (9) and (10) can be utilized to build distinct composite functions for altering picture weights without any predetermined alterations.

The utilization of composite objective functions with independent set functions is employed to determine optimal outcomes through the utilization of combinational sets, as denoted by Eq. (11).

In order to enhance the accuracy measures for each image set in challenging circumstances, it is imperative to combine the objective function in Eq. (11) with the computer vision algorithm. The following is a comprehensive explanation of vision algorithms.

To effectively understand all specified circumstances in acquired images, it is imperative to incorporate a computer vision algorithm that enables the generation of optimized solutions. In this particular scenario, all jobs will be identified and classified in order to facilitate a comparison with the underlying database. Deep learning algorithms are currently playing a crucial part in upgrading various photographs by arranging them in a sequential manner during the interpretation process. The utilization of computer vision algorithms is crucial in numerous instances since they are employed in conjunction with suitable training patterns to attain error-free circumstances. Consequently, these algorithms offer significant advantages in various military applications. In the context of computer vision algorithms, the recognition of linked patterns in images involves the identification of items on virtual screens.

The utilization of a descriptor in image processing offers significant benefits in the capture of images, enabling the recognition of diverse objects and facilitating the attainment of localized solutions with suitable orientations. The significance of histograms in image processing techniques lies in their ability to identify different gradients through the utilization of diverse edge detection systems. Hence, histogram gradients are crucial in defining each image in terms of pixel variations and feature recognition techniques. The process of picture differentiation can be effectively accomplished through several methods. However, histogram gradients offer valuable insights into both edges and forms. This is achieved by partitioning each image into smaller cell representations, resulting in simplified expressions. Fig. 2 indicates the histogram gradients for vision enhancements.

Figure 2: Histogram gradients for vision enhancements
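The cell-wise partitioning described above can be sketched in a few lines of Python. The sketch computes a gradient magnitude and orientation per pixel and accumulates a magnitude-weighted orientation histogram for one cell. The function names, the 4 x 4 cell size, the 9 orientation bins, and the unsigned 0 to 180 degree range are conventional choices assumed here for illustration; they are not values stated in this work.

```python
import math


def gradients(img):
    """Central-difference gradients with edge replication.

    Returns (magnitude, orientation) where orientation is in degrees, [0, 180).
    """
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            mag[y][x] = math.hypot(gx, gy)
            ang[y][x] = math.degrees(math.atan2(gy, gx)) % 180.0
    return mag, ang


def cell_histogram(mag, ang, y0, x0, cell=4, bins=9):
    """Magnitude-weighted orientation histogram of one cell (assumed layout)."""
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(y0, y0 + cell):
        for x in range(x0, x0 + cell):
            b = int(ang[y][x] // bin_width) % bins  # guard against rounding to 180
            hist[b] += mag[y][x]
    return hist
```

For a cell containing a single vertical edge, all of the gradient energy falls into the first orientation bin, which is what makes the descriptor informative about edges and shapes.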

An invariant matrix representation can be employed to enhance the reliability of object recognition by normalization determination. Therefore, the proposed method involves the implementation of normalization at each stage, utilizing magnitude test patterns. In this particular example, the cut version will be employed, as specified in Eq. (12).

where,

The mapping process is crucial in the use of vision for military photos captured in hard circumstances. It gives valuable information for detecting and classifying challenging images based on several separation characteristics. In this particular scenario, the magnitude of gradients exhibits a tendency to vary, as represented by Eq. (13).

where,

In order to identify the existence of objects, it is necessary to establish a regularization metric. This metric will define the square of each cell, which will then be used to display the vector outputs using appropriate representations. Therefore, Eq. (14) is utilized to represent the regularization pattern with an independent size block.

where,
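The clipped normalization and the regularized, squared-cell metric discussed around Eqs. (12) and (14) resemble the block normalization commonly paired with histogram-of-gradient descriptors. The sketch below shows one common form, assumed here and not necessarily the formulation used in this work: an L2 normalization with a small regularization constant, followed by clipping and renormalization. The name `l2_hys` and the default values of `eps` and `clip` are illustrative assumptions.

```python
import math


def l2_hys(block, eps=1e-6, clip=0.2):
    """Normalize a block of non-negative histogram values.

    1. Divide by sqrt(sum of squares + eps^2); eps avoids division by zero.
    2. Clip each value at `clip` to limit the influence of strong gradients.
    3. Renormalize the clipped vector in the same way.
    """
    norm = math.sqrt(sum(v * v for v in block) + eps * eps)
    clipped = [min(v / norm, clip) for v in block]
    norm2 = math.sqrt(sum(v * v for v in clipped) + eps * eps)
    return [v / norm2 for v in clipped]
```

Because the output depends only on the relative sizes of the block entries, a uniform change in illumination that scales all gradients by the same factor leaves the descriptor essentially unchanged.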

When photos are acquired in challenging situations with adequate illumination, it becomes crucial to prioritize the reduction of spatial fluctuations. This is because the entire image is classed using a three-dimensional spectrum. Therefore, an adaptive threshold approach is implemented to integrate the combined features of images in all local regions, thereby mitigating the influence of external factors on acquired images. While the adaptive threshold mechanism is commonly regarded as a sub-task of integrated components, it is important to note that in this particular example, it is a dynamic establishment specifically designed for images that have been divided using proper computational methods. Fig. 3 illustrates the adaptive thresholds for vision enhancements.

Figure 3: Adaptive thresholds for vision enhancements
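A local-mean threshold is one simple way to realize the adaptive behavior described above: each pixel is compared with the mean of its own neighborhood instead of a single global value. The following Python sketch is an illustrative assumption, not the thresholding rule defined in this work; the name `adaptive_threshold` and the `radius` and `offset` parameters are hypothetical.

```python
def adaptive_threshold(img, radius=1, offset=0.0):
    """Binarize img by comparing each pixel with its local neighborhood mean.

    A pixel becomes 1 when it is strictly brighter than (local mean - offset),
    otherwise 0. Windows are truncated at the image border.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            rows = range(max(0, y - radius), min(h, y + radius + 1))
            cols = range(max(0, x - radius), min(w, x + radius + 1))
            values = [img[yy][xx] for yy in rows for xx in cols]
            local_mean = sum(values) / len(values)
            out[y][x] = 1 if img[y][x] > local_mean - offset else 0
    return out
```

Since the decision is made per neighborhood, a bright object remains detectable even when the background brightness varies across the frame.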

An image threshold is defined by utilizing magnitude values obtained from gradient vectors to establish maximum and minimum limits. Therefore, the neighborhood values that surpass the overlapping pictures are noticed in this particular scenario, which is defined in arithmetic mode as specified in Eq. (15).

where,

The utilization of a linear threshold mechanism is employed in computer vision to provide optimized solutions within a constant time period, with a specific focus on border scenarios. The linear threshold employed in this method calculates the integral image set at both corners, resulting in the elimination of the entire overlap for individual pixels, as described in Eq. (16).

where,
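The constant-time behavior attributed to the integral image can be illustrated with a summed-area table: once the table is built, the sum over any rectangular region is obtained from its four corner entries. The sketch below is a generic illustration under that assumption and does not reproduce Eq. (16); the names `integral_image` and `box_sum` are hypothetical.

```python
def integral_image(img):
    """Summed-area table with one extra zero row and column.

    ii[y][x] holds the sum of img over rows < y and columns < x.
    """
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii, y0, x0, y1, x1):
    """Sum of img over rows y0..y1-1 and columns x0..x1-1 using four lookups."""
    return ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]
```

Dividing `box_sum` by the window area gives the local mean needed for a threshold decision, so the cost per pixel no longer depends on the window size.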

3.2.3 Image Volume Maintenance

Given that each cell is partitioned into many blocks, it is imperative to ensure that the volume within each individual block remains at a minimum. Consequently, the overall volume of the image is determined by measuring narrow image bands in this scenario. By maintaining distinct picture sets, the accuracy rate is increased, hence preventing non-uniform cell measurements, as indicated by Eq. (17).

where,

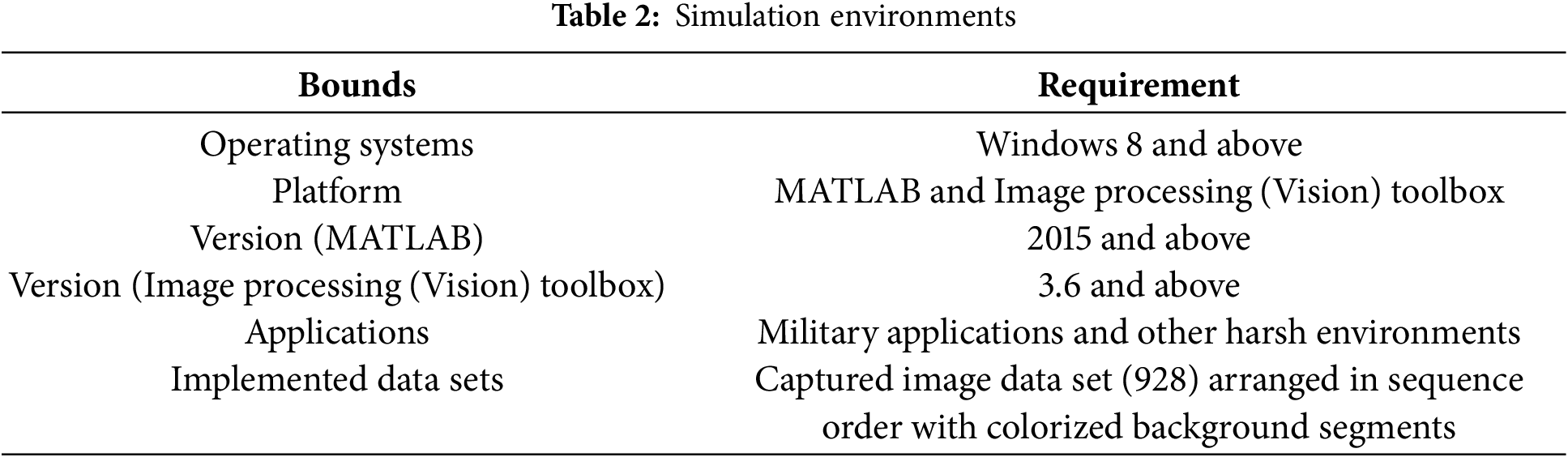

This section presents a real-time investigation of computer vision through the examination of a sequence of 928 photographs taken under various challenging circumstances. Most of the photos considered for analysis are sourced from military applications, where background segments are recognized with enhanced accuracy. To evaluate the results of the proposed method, the collected image set is combined with gradient characteristics, ensuring non-overlapping identification. Additionally, this scenario presents a feature set that has been colorized, along with normalizations that accurately describe the limiting factors. By incorporating a limiting factor, a control unit is linked to analyze a sequence of images within a shorter time frame. This facilitates the precise identification of histograms with high and low-value measurements, thereby improving detection accuracy. After providing the gradients for all images, the threshold level for individual blocks is determined to eliminate any additional overlaps. Conversely, the proposed method involves observing the 928 images using a filter that assesses the current photos while considering specific control parameters established for border segments. As the projected model analyses the acquired photographs in challenging conditions, any borders that disrupt the entire image set are removed, resulting in a higher maintenance rate. Subsequently, any scattered observations where the image set overlaps with background features are identified using both horizontal and vertical distributions. The replacement of half of the size part in the sequence image set with computer vision features allows for the display of individual blocks with reduced restoration values. This approach achieves the original results without the requirement for any external distributions. Four scenarios are developed to analyze the parametric outcomes, and the significance of all scenarios is listed in Table 2.

Discussions

The role of simulation environments is crucial in determining the outcomes of the proposed method, as it involves the real-time processing of recorded images using predetermined parameters and simulation units. The operating system is linked to visual processing technologies, with MATLAB serving as the principal platform for processing image sequences. In this scenario, all photographs are presented uniformly, resulting in sequential arrangements. Additionally, the configuration needs are established automatically. After capturing the photos, separate processing units with minimal restoration rates are employed, leading to a total reduction of external distribution rates. Furthermore, to enhance the grey-scale factors through the use of analogous color representations, horizontal blocks are designated with threshold values, limited to a factor set at 1. Table 2 indicates the simulation environments for conducting various scenarios. A comprehensive overview of enhancement techniques, ranging from spatial domain methods like contrast stretching and histogram equalization to frequency domain approaches utilizing various filtering mechanisms, is discussed in [17]. To address tactical deployment scenarios, communication models inspired by the Internet of Battle Things architecture are considered [18]. Furthermore, immersive experiential learning methods using extended reality technologies are interfaced with simulation designs [19]. Additionally, explainable AI concepts are considered to enhance transparency and trust in the decision-making components of the system [20,21]. Therefore, these classical methods serve as a baseline for understanding more advanced algorithms applied in challenging environments. Since the proposed method is carried out using image processing techniques, it is necessary to avoid overlapping images. Therefore, at the output, it is possible to enhance the images as indicated in Fig. 4. The comparison of Fig. 4 with the existing image set indicates that the projected military visual communications can be achieved only if images are enhanced with high quality.

Figure 4: Comparisons of visual communications in harsh environments. (a) Existing; (b) Proposed

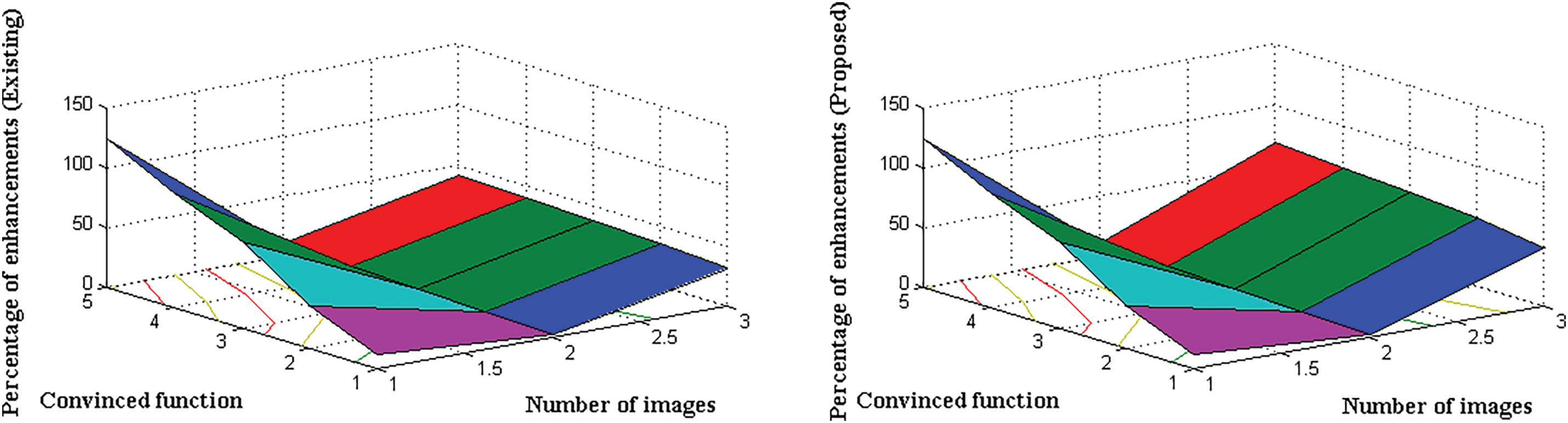

Scenario 1: Enactment of visualized filter and consistent enhancement features

The present situation involves the observation of comprehensive enhancement features for a sequence of images subsequent to the application of appropriate filter configurations. Typically, picture enhancement merely improves the contrast of background pixels. However, the suggested method increases the contrast of each data block connected to the main blocks, allowing for uninterrupted identification of objects. By establishing control factors that are equivalent to the current images, it becomes possible to assign limiting values to all normalized segments. This allows for the possibility of reconnecting any problems that may arise with divided blocks or blocks that are unnecessarily removed by the visualized filter to the same image set in the future. Therefore, the primary benefit in this particular situation is not only in the augmentation of photos but also in the ability to detect unattached images that have been altered using specific range values. The enhancing aspects of the proposed and existing approach are illustrated in Fig. 5.

Figure 5: Image functions for enhancements with convinced rate

Fig. 5 demonstrates that the proposed strategy consistently improves acquired photos compared to the previous approach [6]. The inclusion of similar control elements in filters enables the manipulation of external effects in photographs, even when captured under challenging environmental conditions. Consistency is also achieved through the utilization of normalized values, wherein the spreading factor of the image set is expanded to encompass 360 degrees in the proposed methodology. To assess the results of consistent improvements, batches of 12, 36, 72, 96, and 124 photographs are reviewed, with the function set to 3, 5, 6, 8, and 10 for the respective batches. The existing method achieves consistent enhancements of 32%, 36%, 37%, 39%, and 41% for these batches. In contrast, the projected model achieves consistent enhancements of 49%, 57%, 61%, 64%, and 69% for the same images. Therefore, by utilizing visualization filters, it is possible to eliminate superfluous block elements entirely in the proposed method. Additionally, consistency may be attained once the number of photos in the collection exceeds 100.
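The reported enhancement rates can be compared batch by batch with simple arithmetic. The short Python sketch below uses only the figures quoted above; the variable names are chosen for illustration.

```python
# Batch sizes and enhancement rates (in %) as reported for Scenario 1.
batch_sizes = [12, 36, 72, 96, 124]
existing = [32, 36, 37, 39, 41]
proposed = [49, 57, 61, 64, 69]

# Absolute gain of the proposed method over the existing one, in percentage points.
gains = [p - e for p, e in zip(proposed, existing)]
# gains == [17, 21, 24, 25, 28]

# Average gain across the five batches, in percentage points.
mean_gain = sum(gains) / len(gains)
# mean_gain == 23.0
```

The gain grows from 17 to 28 percentage points as the batch size increases, which is consistent with the observation that larger collections benefit more from the visualized filter.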

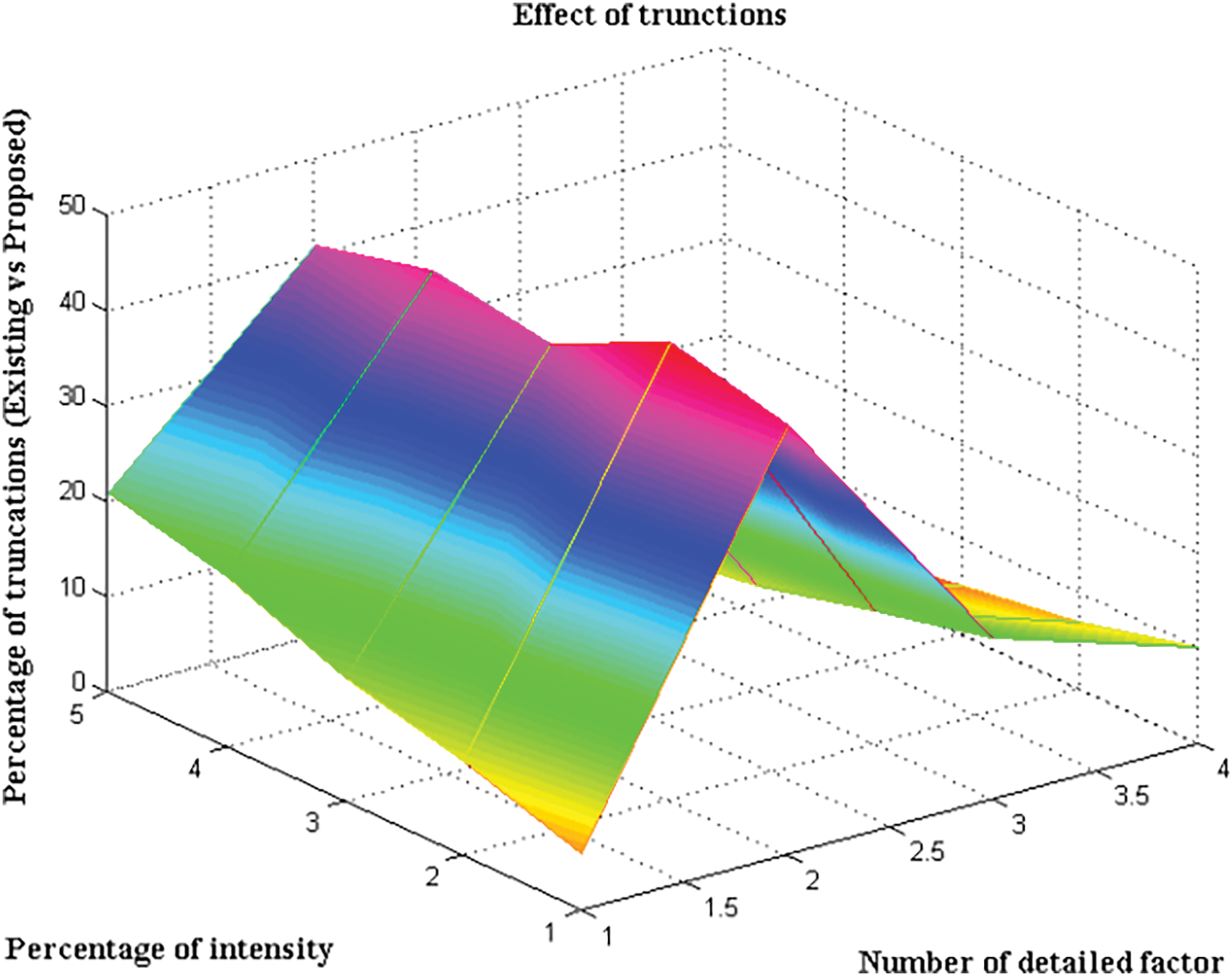

Scenario 2: Visualizations with graphical units

In order to enhance the analytics component for all visualization units, it is important to have graphical representations available. Therefore, in this particular situation, the phenomenon of total scattering is observed in each set of images, with each set being represented by unique graphical units. A border limit is established to prevent truncations. Furthermore, the presence of low-light in the background poses a significant challenge in addressing several associated problems. Consequently, doing a thorough analysis of the specific components involved in this scenario can facilitate the elimination of low light conditions, thereby reducing the occurrence of scattered measurement values.

Given that photographs are captured in diverse and challenging circumstances, significantly elevated haze levels can compromise the overall intensity of the images to the point that they cannot be repaired in subsequent stages. Therefore, in the initial state, it is necessary to identify and eliminate all scattering values, even if visualized filters are configured with certain constraints. Only by using truncated visualization can a clear view be obtained for improved object recognition. Fig. 6 shows the truncations for high-intensity images.

Figure 6: Truncations for high-intensity images

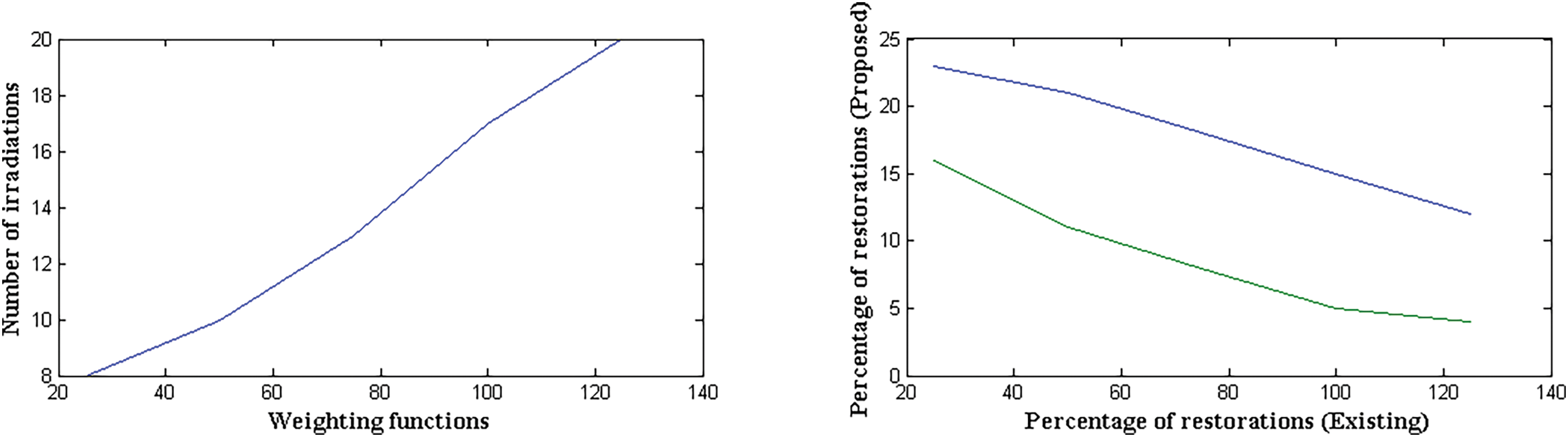

Scenario 3: Visualization brilliance and restoration units

The enhancement procedure for gray-scale photos is challenging, necessitating the inclusion of analogous color representations. This is necessary to distinguish background images from segmented blocks and accurately identify different items. Therefore, in this particular scenario, an analysis is conducted on restoration units for all photos, focusing on the visualization brightness and the observation of reflection elements in the sequence of images. In the field of image processing, restoration refers to the procedure of eliminating noisy and corrupted formats, resulting in the creation of normal images that retain only the original blocks. The proposed method involves adding a colorized unit with a low reflection outcome to each block instead of removing noise. This enhances the accuracy of irradiation measurements, which may then be further decreased to replace poor values. Restoration units enable the identification of all images in hard settings by assigning specific weighting factors as shown in Fig. 7, resulting in the formation of acceptable pixels in clear states.

Figure 7: Individual weighting functions with minimized restorations

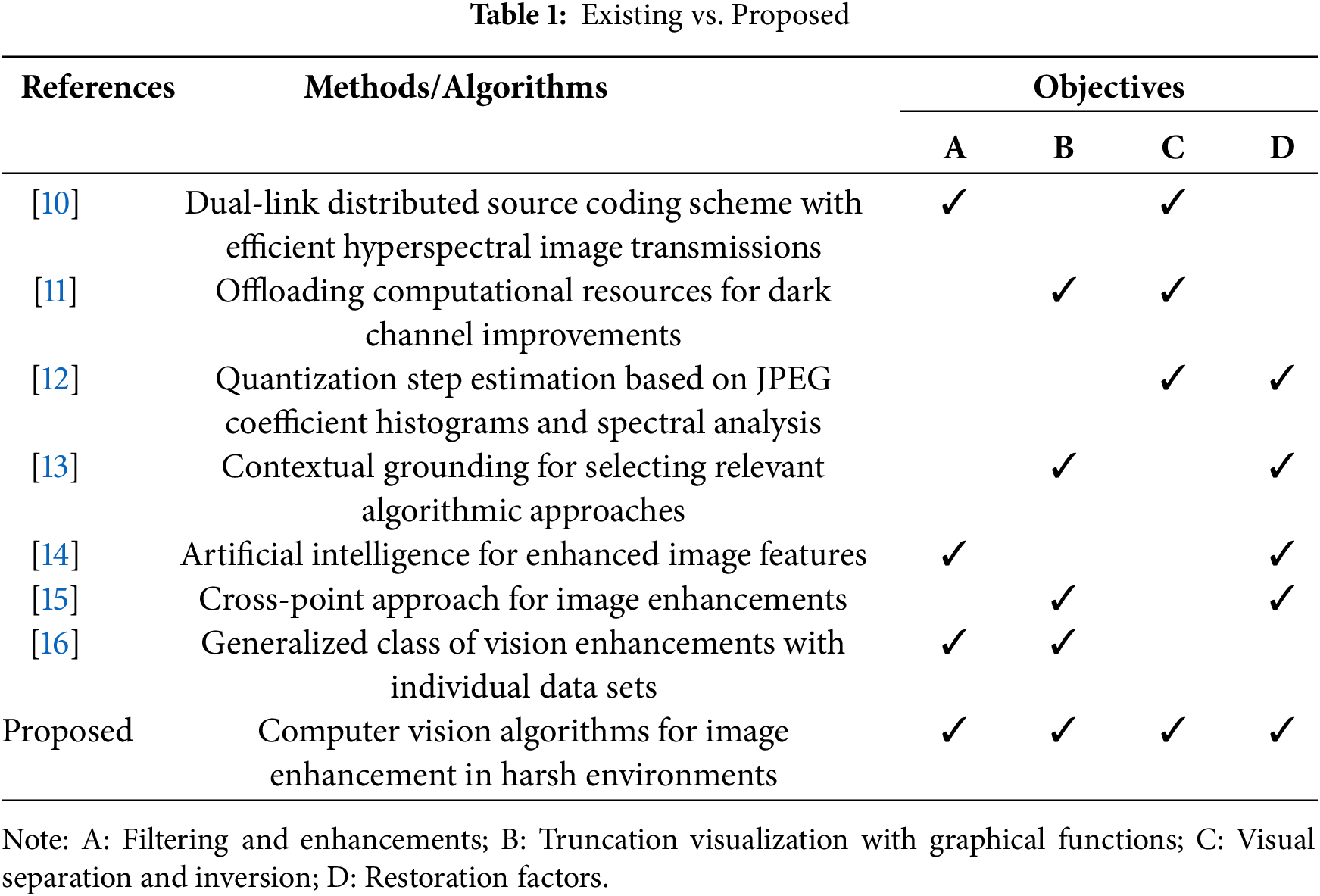
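The idea of replacing gray levels with weighted color values can be illustrated with a minimal sketch. The paper does not give the weighting function at this point, so the function name `colorize`, the per-channel weights, and the sample pixel values below are all hypothetical and serve only to show how a weighting factor turns a gray block into a colorized one.

```python
from typing import List, Tuple

Pixel = Tuple[int, ...]  # one (R, G, B) triple per pixel


def colorize(gray: List[List[int]], weights: Tuple[float, float, float]) -> List[List[Pixel]]:
    """Replace each 8-bit gray level with an RGB triple scaled by per-channel weights.

    The weights are illustrative assumptions, not values taken from the paper.
    """
    def clamp(value: float) -> int:
        # Keep every channel inside the valid 8-bit range.
        return max(0, min(255, round(value)))

    return [[tuple(clamp(w * g) for w in weights) for g in row] for row in gray]


# Hypothetical 2x2 gray-scale block and weighting factors.
gray_block = [[0, 100], [200, 255]]
colored_block = colorize(gray_block, weights=(1.0, 0.8, 0.4))
```

Lower weights on a channel reduce its contribution, so under these assumed values the blue channel is the most strongly attenuated.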

Scenario 4: Inversions and separations

In this scenario, complete image inversions are observed with equal-distance separations, taking both vertical and horizontal pixel blocks into account. Recognizing analogous color blocks and mapping each light block to a dark one is crucial for distinguishing distinct objects and for obtaining more precise measurements through image inversion. When such mappings are created, changes are made at each boundary, followed by curve step procedures.

Separating images accurately with appropriate pixel values makes it possible to identify them correctly during the inversion step, eliminating any crucial measurements. The key measurement in this situation is obtained by calculating the differences between the acquired and original image sets, which allows the exponential ranges of each image to be monitored. The comparative simulation outcomes of the proposed and existing approaches in terms of inversions and separations are presented in Fig. 8.

Figure 8: Color factor in the presence of low light conditions
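The inversion and separation steps of Scenario 4 can be sketched in a few lines. This is an illustration under stated assumptions rather than the authors' implementation: images are taken to be 8-bit gray-scale grids, inversion is the standard `255 - pixel` mapping, the block size is fixed at 2, and the mean absolute difference stands in for the unspecified difference measure between acquired and original images. All function names and sample values are hypothetical.

```python
from typing import List

Image = List[List[int]]  # 8-bit gray-scale image, row-major


def invert(image: Image, max_value: int = 255) -> Image:
    """Map every light pixel to its dark counterpart and vice versa."""
    return [[max_value - p for p in row] for row in image]


def split_blocks(image: Image, block: int) -> List[Image]:
    """Separate the image into equal-sized blocks, top to bottom, left to right."""
    height, width = len(image), len(image[0])
    blocks = []
    for top in range(0, height, block):
        for left in range(0, width, block):
            blocks.append([row[left:left + block] for row in image[top:top + block]])
    return blocks


def mean_abs_difference(a: Image, b: Image) -> float:
    """Average absolute pixel difference between two equally sized images."""
    total = sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))
    count = sum(len(row) for row in a)
    return total / count


# Hypothetical 4x4 image with two dark and two light 2x2 regions.
original = [
    [10, 20, 200, 210],
    [30, 40, 220, 230],
    [250, 240, 50, 60],
    [245, 235, 70, 80],
]
inverted = invert(original)
inverted_blocks = split_blocks(inverted, block=2)
original_blocks = split_blocks(original, block=2)
differences = [mean_abs_difference(i, o) for i, o in zip(inverted_blocks, original_blocks)]
```

Comparing blocks rather than whole images keeps the difference measure local, so a block that changes strongly under inversion is not averaged away by the rest of the image.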

Image enhancement in visual processing is of substantial importance in contemporary identification applications, particularly in military processing units, where establishing a secure environment is imperative. Most military actions along borders take place in challenging settings, where it is especially difficult to identify territorial crowds composed of similar groups. Climatic conditions pose a further challenge: most of the territorial population experiences either high- or low-temperature climates, which complicates the identification procedure. To reduce the complexity of photos taken in difficult situations, they must therefore be processed with computer vision measures for accurate image identification.

The proposed approach employs a visualized filter to establish a controlling factor that is subject to specific limits. This control factor is implemented by dividing the entire image set into horizontal and vertical blocks. To enhance visual acuity, two criteria, conviction and definiteness, are employed with identical values, so the results are derived from a two-degree set of images. The visualized filter minimizes the total number of truncations and yields precise information about the image set while ensuring that appropriate limiting settings are applied. All collected photos are processed sequentially using a histogram gradient and an adaptive threshold mechanism, which effectively manages overlaps at the image boundaries.

Four scenarios and two performance measures are used to verify the suggested mechanisms and procedures with equal analytical representation, and the resulting metrics are compared with those of the previous technique. The comparison shows that the proposed method consistently achieves a 69% improvement in picture enhancement, whereas the conventional methodology achieves only 41% in challenging settings. The proposed model also reduces the proportion of truncations to 1%, compared with 6% for the existing model. The need for picture restoration is correspondingly reduced to 4% for the proposed method, compared with 12% for the traditional method. In the future, artificial intelligence systems could be used to interpret photographs acquired in hostile environments automatically.

Acknowledgement: This work is financially supported by the Ongoing Research Funding Program (ORF-2025-846), King Saud University, Riyadh, Saudi Arabia.

Funding Statement: This work is financially supported by the Ongoing Research Funding Program (ORF-2025-846), King Saud University, Riyadh, Saudi Arabia.

Author Contributions: The authors confirm contribution to the paper as follows: study conception and design: Shitharth Selvarajan, Hariprasath Manoharan; data collection: Subhav Singh, Taher Al-Shehari, Nasser A Alsadhan; analysis and interpretation of results: Subhav Singh, Shitharth Selvarajan, Hariprasath Manoharan; draft manuscript preparation: Shitharth Selvarajan, Hariprasath Manoharan. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data that support the findings of this study are available from the corresponding author, Shitharth Selvarajan, upon reasonable request.

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

References

1. Ibrahim RW, Jalab HA, Karim FK, Alabdulkreem E, Ayub MN. A medical image enhancement based on generalized class of fractional partial differential equations. Quant Imaging Med Surg. 2022;12(1):172–83. doi:10.21037/qims-21-15. [Google Scholar] [PubMed] [CrossRef]

2. Gopalan S, Arathy S. A new mathematical model in image enhancement problem. Procedia Comput Sci. 2015;46:1786–93. doi:10.1016/j.procs.2015.02.134. [Google Scholar] [CrossRef]

3. Žigulić N, Glučina M, Lorencin I, Matika D. Military decision-making process enhanced by image detection. Information. 2024;15(1):11. doi:10.3390/info15010011. [Google Scholar] [CrossRef]

4. Wang J, Yuan Y, Li G. Multifeature contrast enhancement algorithm for digital media images based on the diffusion equation. Adv Math Phys. 2022;2022:1982555. doi:10.1155/2022/1982555. [Google Scholar] [CrossRef]

5. Yang R, Chen L, Zhang L, Li Z, Lin Y, Wu Y. Image enhancement via special functions and its application for near infrared imaging. Glob Chall. 2023;7(7):2200179. doi:10.1002/gch2.202200179. [Google Scholar] [PubMed] [CrossRef]

6. Li P, Gu X. An image enhancement method based on partial differential equations to improve dark channel theory. IOP Conf Ser Earth Environ Sci. 2021;769(4):042112. doi:10.1088/1755-1315/769/4/042112. [Google Scholar] [CrossRef]

7. Zhang H, Gong L, Li X, Liu F, Yin J. An underwater imaging method of enhancement via multi-scale weighted fusion. Front Mar Sci. 2023;10:1150593. doi:10.3389/fmars.2023.1150593. [Google Scholar] [CrossRef]

8. He K, Tao D, Xu D. Adaptive colour restoration and detail retention for image enhancement. IET Image Process. 2021;15(14):3685–97. doi:10.1049/ipr2.12223. [Google Scholar] [CrossRef]

9. Zhang P. Image enhancement method based on deep learning. Math Probl Eng. 2022;2022(1):6797367. doi:10.1155/2022/6797367. [Google Scholar] [CrossRef]

10. Hagag A, Omara I, Chaib S, Ma G, El-Samie FEA. Dual link distributed source coding scheme for the transmission of satellite hyperspectral imagery. J Vis Commun Image Represent. 2021;78:103117. doi:10.1016/j.jvcir.2021.103117. [Google Scholar] [CrossRef]

11. Jiang Y, Dong L, Liang J. Image enhancement of maritime infrared targets based on scene discrimination. Sensors. 2022;22(15):5873. doi:10.3390/s22155873. [Google Scholar] [PubMed] [CrossRef]

12. Yao H, Wei H, Qiao T, Qin C. JPEG quantization step estimation with coefficient histogram and spectrum analyses. J Vis Commun Image Represent. 2020;69(3):102795. doi:10.1016/j.jvcir.2020.102795. [Google Scholar] [CrossRef]

13. Galán JJ, Carrasco RA, LaTorre A. Military applications of machine learning: a bibliometric perspective. Mathematics. 2022;10(9):1397. doi:10.3390/math10091397. [Google Scholar] [CrossRef]

14. Peng X, Zhang X, Li Y, Liu B. Research on image feature extraction and retrieval algorithms based on convolutional neural network. J Vis Commun Image Represent. 2020;69(6):102705. doi:10.1016/j.jvcir.2019.102705. [Google Scholar] [CrossRef]

15. Li C, Anwar S, Hou J, Cong R, Guo C, Ren W. Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Trans Image Process. 2021;30:4985–5000. doi:10.1109/TIP.2021.3076367. [Google Scholar] [PubMed] [CrossRef]

16. Lozano-Vázquez LV, Miura J, Rosales-Silva AJ, Luviano-Juárez A, Mújica-Vargas D. Analysis of different image enhancement and feature extraction methods. Mathematics. 2022;10(14):2407. doi:10.3390/math10142407. [Google Scholar] [CrossRef]

17. Gonzalez RC, Woods RE. Digital image processing. 4th ed. London, UK: Pearson Education; 2018. [Google Scholar]

18. Kufakunesu R, Myburgh H, De Freitas A. The Internet of battle things: a survey on communication challenges and recent solutions. Discov Internet Things. 2025;5(1):3. doi:10.1007/s43926-025-00093-w. [Google Scholar] [CrossRef]

19. Garcia Estrada J, Prasolova-Førland E, Kjeksrud S, Themelis C, Lindqvist P, Kvam K, et al. Military education in extended reality (XRlearning troublesome knowledge through immersive experiential application. Vis Comput. 2024;40(10):7249–78. doi:10.1007/s00371-024-03339-w. [Google Scholar] [CrossRef]

20. e Oliveira E, Rodrigues M, Pereira JP, Lopes AM, Mestric II, Bjelogrlic S. Unlabeled learning algorithms and operations: overview and future trends in defense sector. Artif Intell Rev. 2024;57(3):66. doi:10.1007/s10462-023-10692-0. [Google Scholar] [CrossRef]

21. Wood NG. Explainable AI in the military domain. Ethics Inf Technol. 2024;26(2):29. doi:10.1007/s10676-024-09762-w. [Google Scholar] [CrossRef]

Copyright © 2025 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
