Open Access

Open Access

REVIEW

Transformers for Multi-Modal Image Analysis in Healthcare

1 Department of Biomedical Engineering, KMCT College of Engineering for Women, Kerala, 683104, India

2 MCA Department, Federal Institute of Science and Technology, Kerala, 683104, India

3 ECE Department, National Institute of Technology Calicut, Kerala, 683104, India

4 Electrical Engineering Department, College of Engineering, King Khalid University, Abha, 61421, Saudi Arabia

* Corresponding Author: Sameera V Mohd Sagheer. Email:

Computers, Materials & Continua 2025, 84(3), 4259-4297. https://doi.org/10.32604/cmc.2025.063726

Received 22 January 2025; Accepted 16 June 2025; Issue published 30 July 2025

Abstract

Integrating multiple medical imaging techniques, including Magnetic Resonance Imaging (MRI), Computed Tomography, Positron Emission Tomography (PET), and ultrasound, provides a comprehensive view of the patient health status. Each of these methods contributes unique diagnostic insights, enhancing the overall assessment of patient condition. Nevertheless, the amalgamation of data from multiple modalities presents difficulties due to disparities in resolution, data collection methods, and noise levels. While traditional models like Convolutional Neural Networks (CNNs) excel in single-modality tasks, they struggle to handle multi-modal complexities, lacking the capacity to model global relationships. This research presents a novel approach for examining multi-modal medical imagery using a transformer-based system. The framework employs self-attention and cross-attention mechanisms to synchronize and integrate features across various modalities. Additionally, it shows resilience to variations in noise and image quality, making it adaptable for real-time clinical use. To address the computational hurdles linked to transformer models, particularly in real-time clinical applications in resource-constrained environments, several optimization techniques have been integrated to boost scalability and efficiency. Initially, a streamlined transformer architecture was adopted to minimize the computational load while maintaining model effectiveness. Methods such as model pruning, quantization, and knowledge distillation have been applied to reduce the parameter count and enhance the inference speed. Furthermore, efficient attention mechanisms such as linear or sparse attention were employed to alleviate the substantial memory and processing requirements of traditional self-attention operations. For further deployment optimization, researchers have implemented hardware-aware acceleration strategies, including the use of TensorRT and ONNX-based model compression, to ensure efficient execution on edge devices. These optimizations allow the approach to function effectively in real-time clinical settings, ensuring viability even in environments with limited resources. Future research directions include integrating non-imaging data to facilitate personalized treatment and enhancing computational efficiency for implementation in resource-limited environments. This study highlights the transformative potential of transformer models in multi-modal medical imaging, offering improvements in diagnostic accuracy and patient care outcomes.Keywords

Contemporary medical practice relies extensively on diagnostic imaging techniques, which play a crucial role in providing vital insights into the anatomical structures of patients and their disease states. These imaging modalities encompass a range of technologies including Magnetic Resonance Imaging (MRI), Computed Tomography (CT), and Positron Emission Tomography (PET), each offering unique advantages in the diagnostic process. According to Zaidi et al. [1], MRI produces detailed images of soft tissues; CT is particularly effective for examining bone structures and identifying abnormalities; and PET captures metabolic activity for functional imaging. The integration of these complementary methods, referred to as multi-modal imaging, enables a comprehensive diagnostic assessment. This method improves the precision and effectiveness of medical decision making by offering a more comprehensive view of the health status of the patient. The analysis of multi-modal medical images poses numerous obstacles. Variations in resolution, contrast, and acquisition parameters among different modalities complicate direct comparisons. Furthermore, Wenderott et al. [2] highlighted that the immense quantity of information produced in medical environments surpasses the capabilities of the conventional analytical techniques and human interpreters. Artificial Intelligence (AI) has emerged as a promising approach for addressing these issues. By streamlining and enhancing image interpretation, AI can assist in overcoming the constraints of manual analysis, thus enhancing diagnostic accuracy and alleviating the workload of medical professionals [3–5].

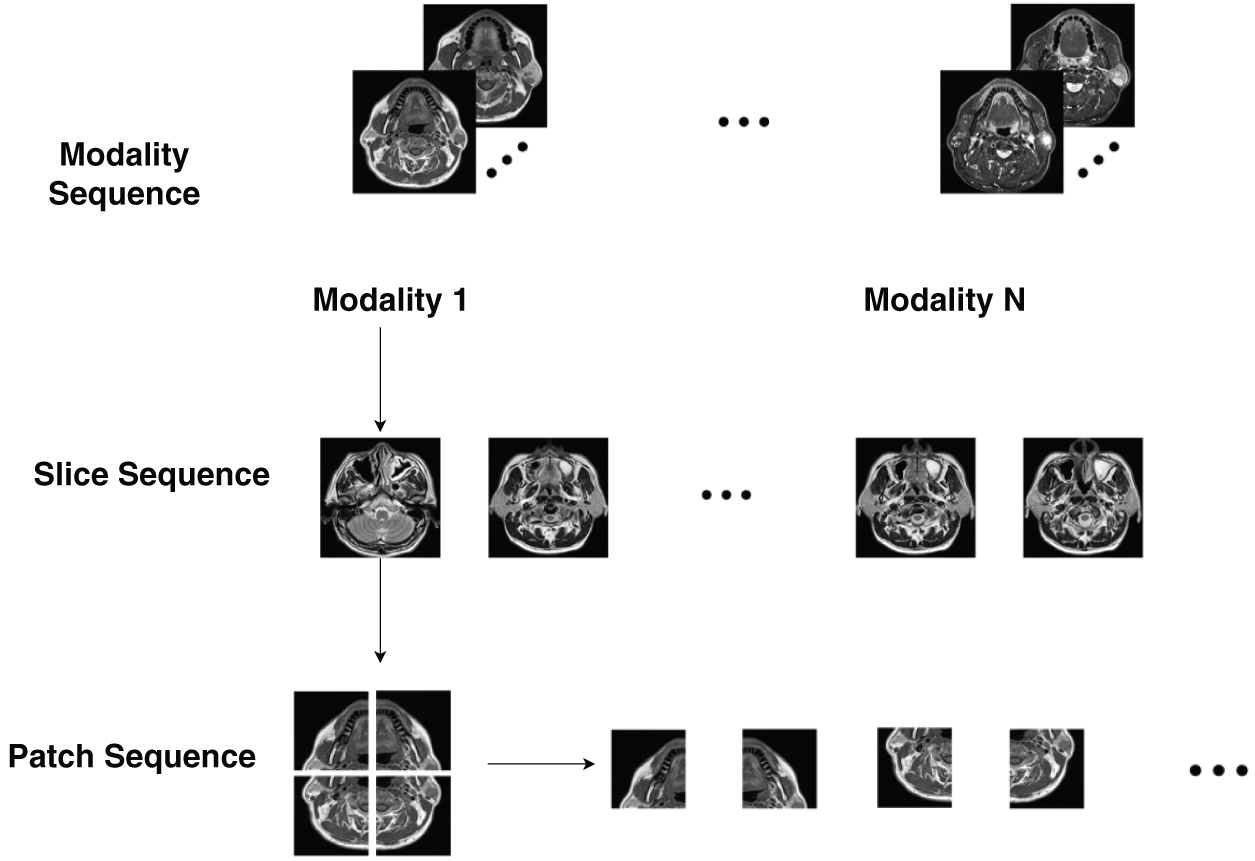

In medical imaging, distinct sequences often capture crucial long-distance relationships and semantic contents, as shown in Fig. 1. These sequences are essential for accurately depicting the structural and functional aspects of the human organs. Consistency among organs ensures that medical images maintain an inherent structure, facilitating uniform visual interpretation. Disrupting or modifying these sequences can significantly impair the model performance by hindering the extraction of meaningful patterns. Consequently, maintaining the integrity of these sequences is crucial for achieving reliable and effective results in the analysis of medical images.

Figure 1: Comparison between natural and multi-modal medical images (adapted from Dai et al. [6])

CNNs have emerged as a crucial element of deep learning in the field of AI-driven medical imaging. These sophisticated networks demonstrate exceptional performance in various tasks, including the classification and segmentation of images, as well as the identification of anomalies. CNNs have become an essential tool in advancing AI applications within the medical imaging domain. Nevertheless, Li et al. [7] point out that CNNs have fundamental shortcomings in capturing long-distance relationships and comprehensive contextual information, which are essential for analyzing images across multiple modalities. The limited receptive field of CNNs, determined by their convolutional kernels, impedes their ability to detect relationships between spatially distant parts of an image. This limitation hampers capacity of CNNs to fully leverage the complementary aspects of multi-modal data. Advancements in interpretable AI, biomedical signal analysis, and biomechanics have contributed to improved medical imaging, gene selection, and interaction recognition [8–12]. The implementation of these denoising methods can enhance the robustness of multimodal medical-image analysis, thereby increasing the applicability of transformers in practical clinical environments [13–17]. Recent progress in areas such as skeleton-based human pose prediction, enhancing the resolution of retinal fundus images, improving the sensitivity of spin-exchange relaxation-free magnetometers, assessing image quality without reference using transformers, and parsing complex electronic medical records has made a substantial impact on the domains of computer vision, medical imaging, and biomedical signal processing [18–22]. Recent studies have explored deep learning-based approaches for biomedical signal processing, including Electrocardiogram (ECG) denoising, ultrasound imaging, dental plaque segmentation, and muscle fatigue detection [23–27].

Initially designed for Natural Language Processing (NLP) tasks, transformers have recently become increasingly popular in the field of computer vision owing to their ability to effectively model global relationships. Unlike CNNs, transformers employ a self-attention mechanism to process all input parts simultaneously, enabling them to capture the complex relationships between various image regions. This characteristic makes transformers particularly well-suited for multi-modal image analysis, where understanding spatial and semantic relationships across multiple imaging modalities is essential [28–31]. Recent progress in transformer-based models has demonstrated their capability to manage noisy data in medical imaging. For example, Naqvi et al. [32] investigated how transformers can improve image quality by minimizing noise, which aligns with our discussion on the resilience of the model to variations in image quality.

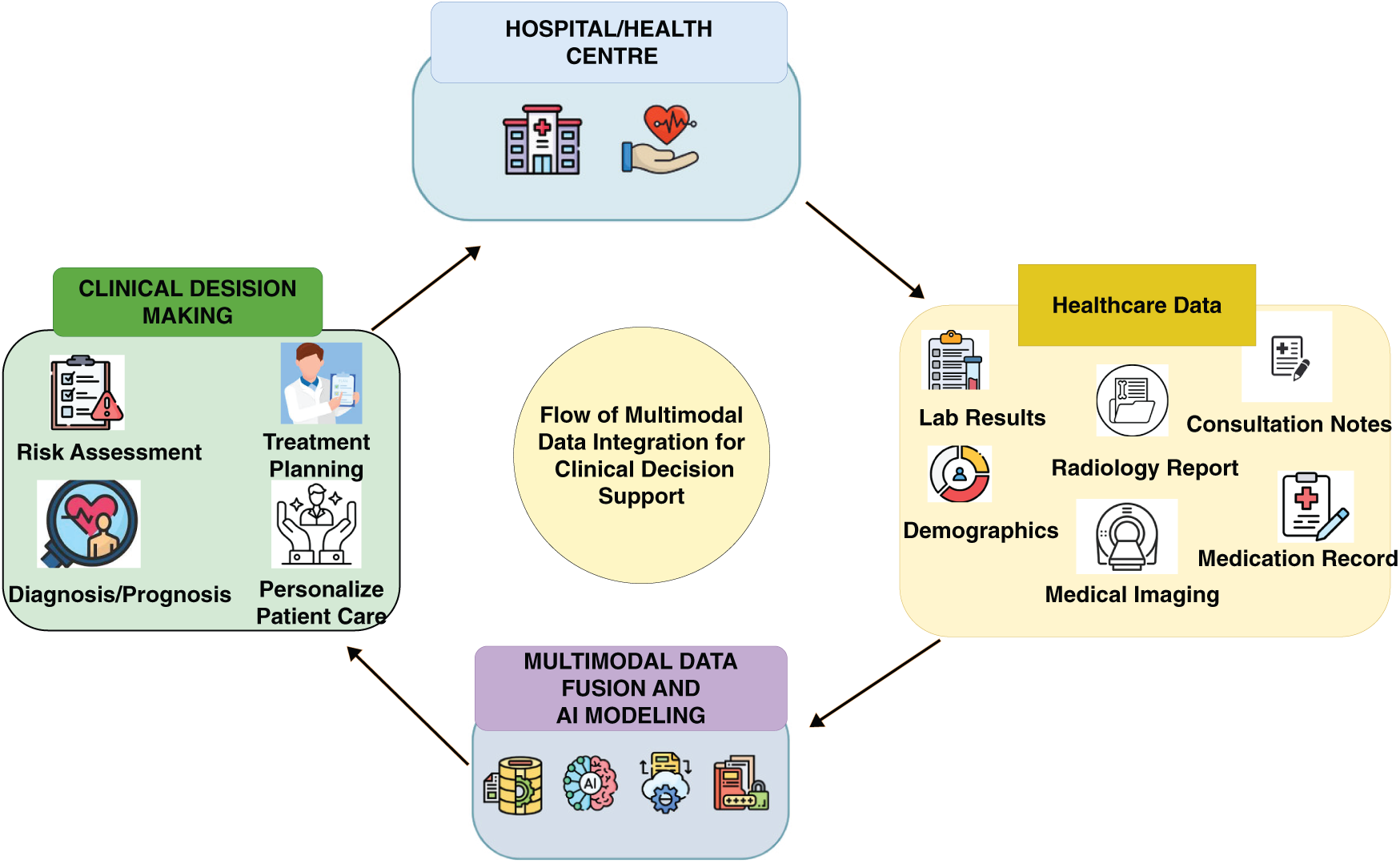

Transformers have shown significant potential in the field of medical imaging. Studies have revealed their effectiveness in various applications, including segmenting images, identifying diseases, and pinpointing anomalies. Through the use of self-attention mechanisms, transformers can more effectively learn and merge features from multiple modalities compared to conventional methods. For example, Lai et al. [33] explained tumor segmentation tasks: transformers can concurrently consider anatomical information from MRI and metabolic activity from PET, resulting in more precise tumor boundary delineation. This ability not only improves the precision of diagnoses but also offers crucial information about disease progression and response to treatments. Transformer-based models offer scalability and adaptability, which are vital in healthcare settings. These models can undergo initial training on large-scale datasets and subsequently be refined for particular applications using minimally labeled data, which is a typical situation in medical imaging because of the substantial costs and specialized knowledge required for data labeling. Additionally, Alsaad et al. [34] highlighted that the flexible architecture of transformers facilitates the seamless integration of various data types, including medical histories of the patients, laboratory test results, and genetic information. This adaptability opens up possibilities for developing comprehensive healthcare solutions that are centered around individual patients. The proposed framework for improving the clinical decision support through the integration of multimodal data is presented in Fig. 2. The process is initiated with the collection of information from diverse healthcare facilities, which is then consolidated using multimodal data-fusion techniques. Subsequently, AI modeling was applied to the aggregated data to derive crucial insights encompassing diagnosis, prognosis, risk evaluation, and treatment strategies. These valuable insights are then relayed to healthcare professionals, empowering them to make informed and effective decisions regarding patient care. Recent progress in compensating for magnetic fields in optically pumped magnetometers, guiding hematoma evacuation with imaging, employing multi-wavelength microscopy, classifying speech imagery using Electroencephalogram (EEG), and reconstructing visual stimuli from EEG signals has greatly enhanced biomedical imaging and neurological applications [35–38].

Figure 2: Flow of multimodal data integration for clinical decision support (adapted from Teoh et al. [39])

The interpretability of transformer models is another significant advantage. In clinical environments, it is crucial for AI systems to provide explainable results that clinicians can comprehend and trust. As referenced by Alshehri et al. [40] the attention maps generated by transformers highlight the input areas on which the model focuses, offering transparency in the decision-making processes. This interpretability not only aids in validating model predictions but also fosters confidence among healthcare professionals in adopting AI-assisted diagnostic tools.

Although transformer-based models offer numerous benefits, their implementation in clinical settings presents several hurdles. Transformers face significant constraints owing to their quadratic scaling with the input size, especially when processing high-resolution medical images, which results in considerable computational requirements. Researchers are currently developing more efficient transformer architectures such as Swin Transformers and Vision Transformers (ViTs) to reduce computational costs while maintaining performance levels, as noted by Xu et al. [41]. Furthermore, ensuring that these models can be applied across diverse patient groups and imaging protocols is essential for their widespread adoption in health care. Recent developments, such as the GLoG-CSUnet framework, enhance ViTs by incorporating flexible radiomic features, such as Gabor and Laplacian of Gaussian (LoG) filters. This method boosts the segmentation precision by capturing intricate anatomical details, highlighting the potential of feature-enhanced transformer models in medical image analysis [42–45].

This study investigated the potential of transformer-based models for analyzing multimodal images in healthcare. As mentioned by Dai et al. [6], we offer a thorough examination of their applications, emphasizing their capacity to integrate and examine data from various imaging modalities. It is important to note that this work does not present original experimental contributions but instead provides a comprehensive review and analysis of existing literature on transformer-based models in multimodal medical imaging.

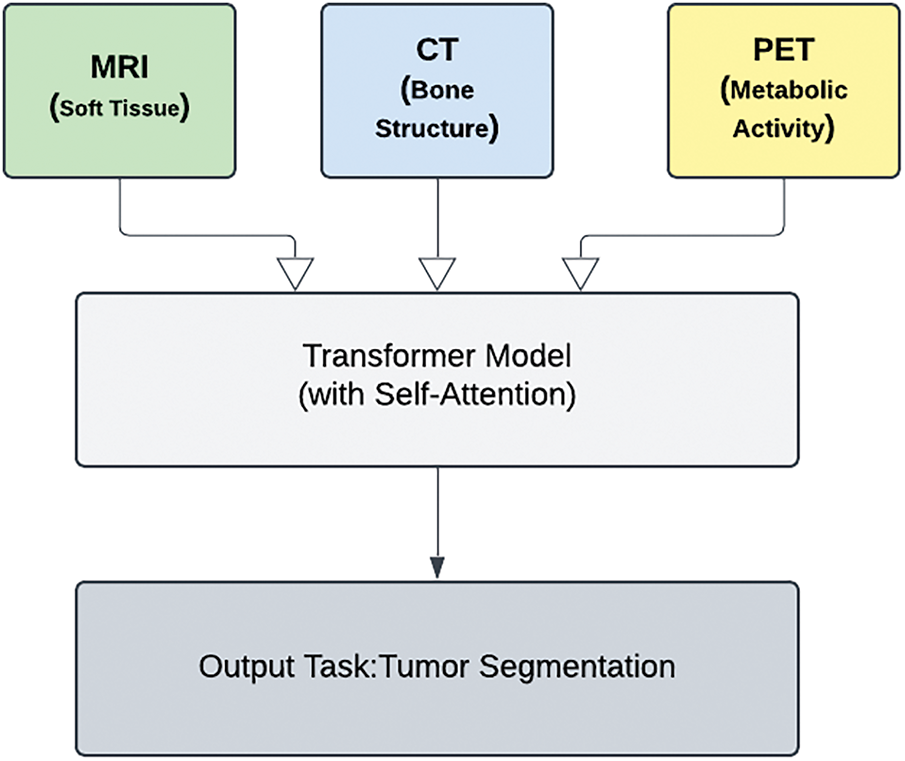

The framework proposed in Fig. 3 utilizes transformer models featuring self-attention to analyze the inputs from various imaging modalities. These include MRI for soft tissue visualization, CT for bone structure examination, and PET for metabolic activity assessment. The ultimate goal of this multimodal approach is to achieve an accurate tumor segmentation.

Figure 3: Transformer-based models in multi-modal medical imaging

Transformers represent a major advancement in the field of medical image analysis. By leveraging their distinctive attributes, healthcare systems can attain unparalleled levels of precision and effectiveness in diagnostics, ultimately resulting in improved patient outcomes, as discussed by Li et al. [46]. As this field continues to advance, future research should concentrate on optimizing transformer architectures for medical applications and integrating them with other AI-driven tools to create comprehensive healthcare ecosystems [47–49].

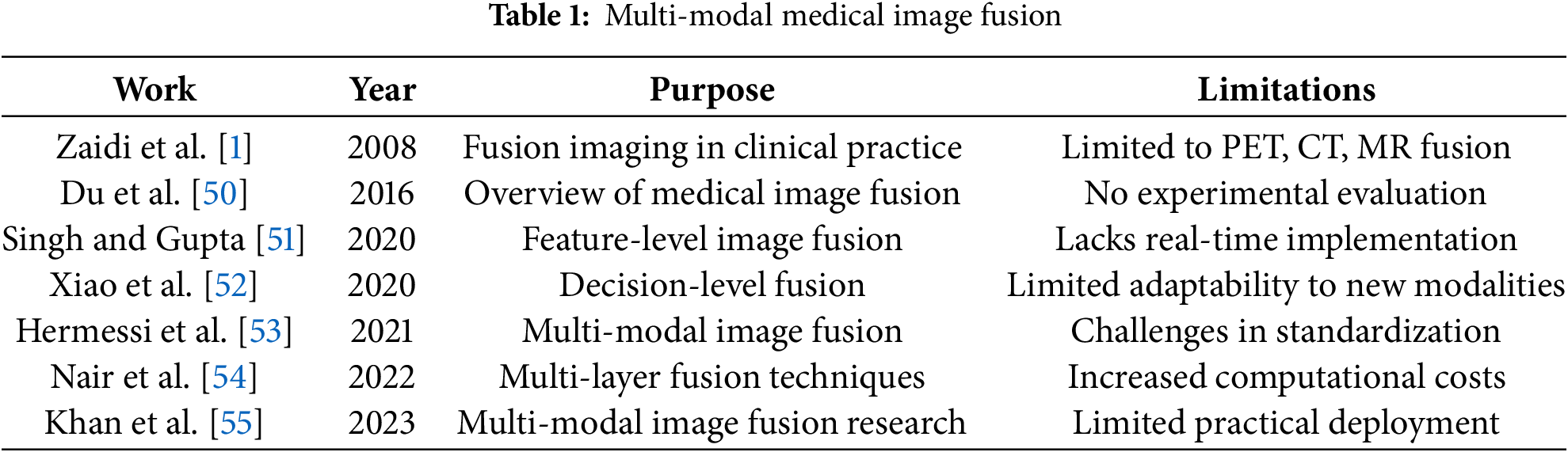

Table 1 presents an overview of various multi-modal medical image fusion techniques, highlighting their objectives and associated limitations.

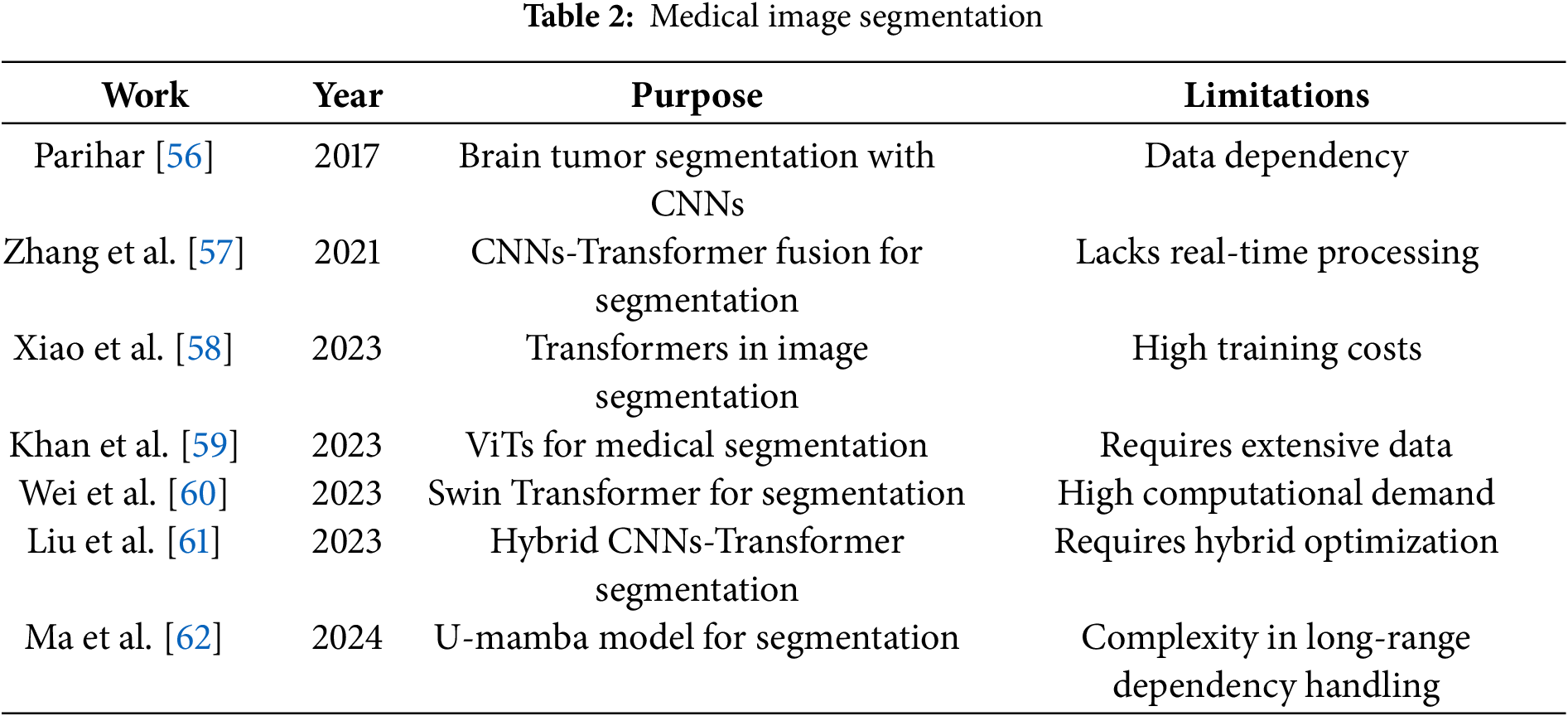

Table 2 summarizes notable works on medical image segmentation, focusing on different deep learning models and their constraints.

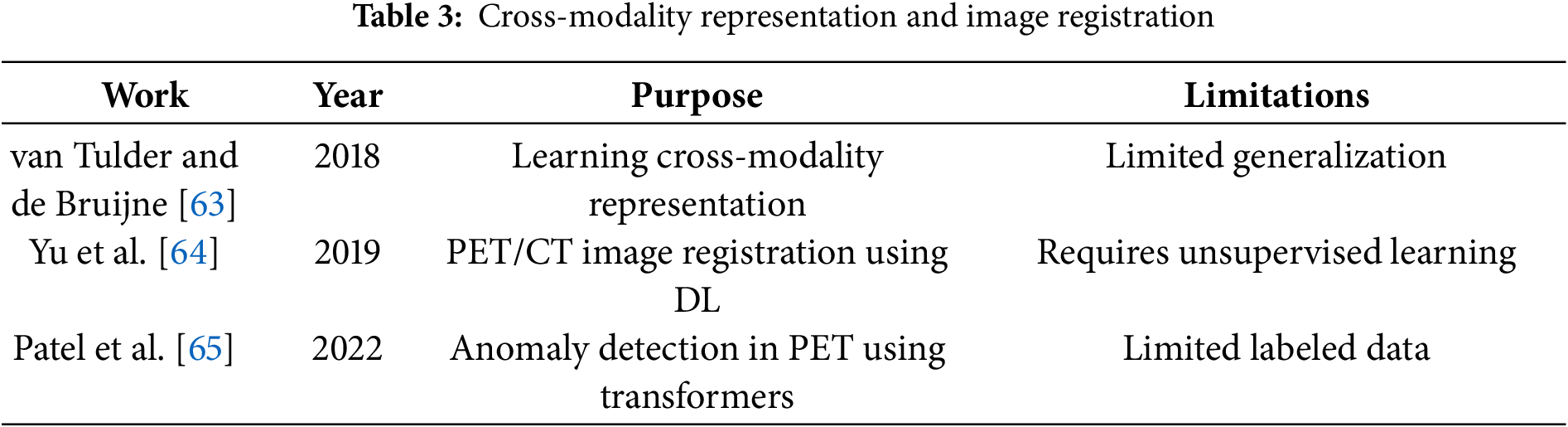

Table 3 outlines key studies on cross-modality representation learning and image registration in medical imaging.

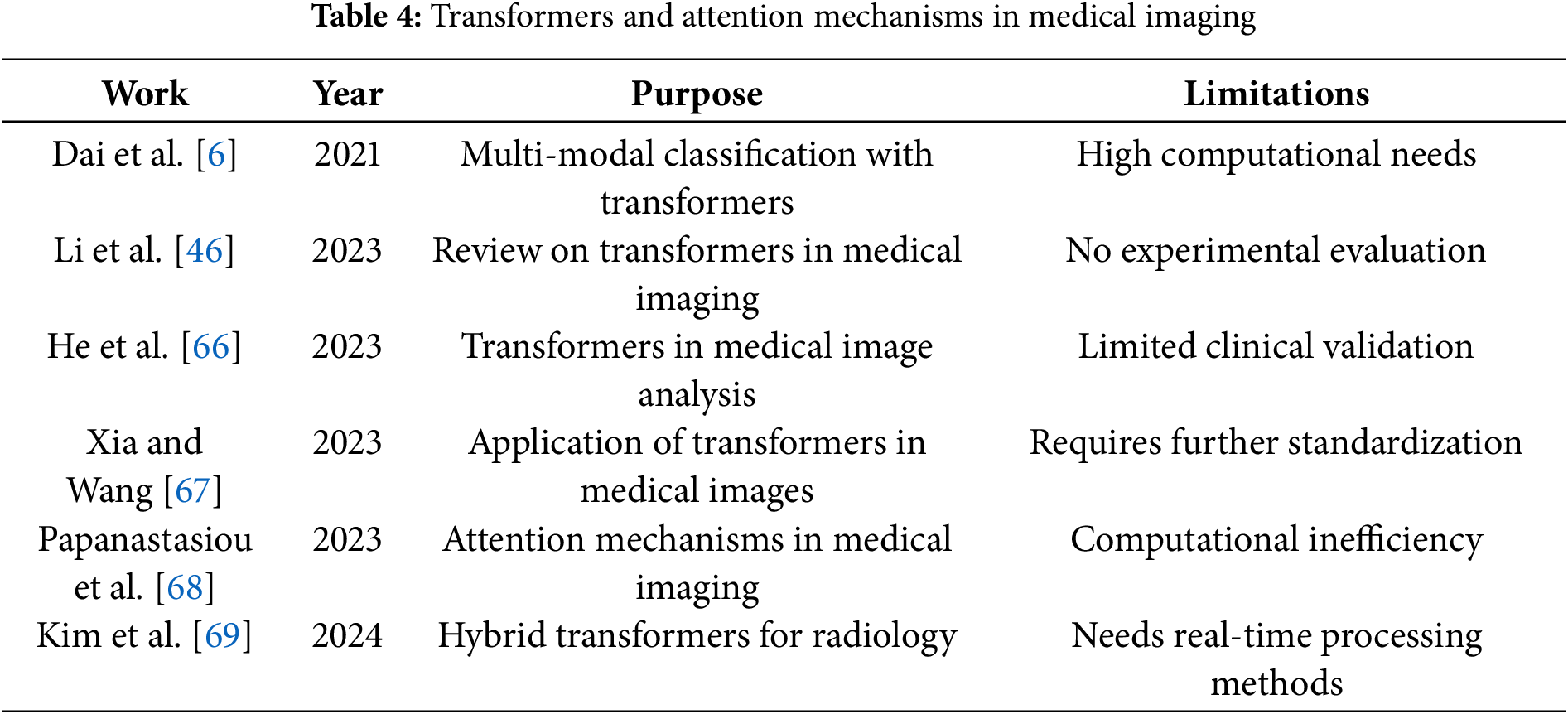

Table 4 provides insights into the use of transformers and attention mechanisms in medical imaging, emphasizing their challenges.

Healthcare has undergone significant changes owing to the incorporation of AI, which has revolutionized many diagnostic and therapeutic processes. Transformer-based models have emerged as particularly noteworthy among AI innovations, owing to their remarkable capacity to process and synthesize intricate, multidimensional data. This section delves into the essential concepts required to comprehend their functions in multimodal medical image analysis. It encompasses the examination of various medical imaging techniques, conventional image analysis methods, the emergence of transformers, and their implementation in multimodal medical imaging contexts [36].

2.1 Medical Imaging Modalities

In contemporary healthcare, medical imaging serves a crucial function by providing an in-depth understanding of the anatomical and functional conditions of the body. Various imaging techniques can be used to capture specific aspects of human physiology.

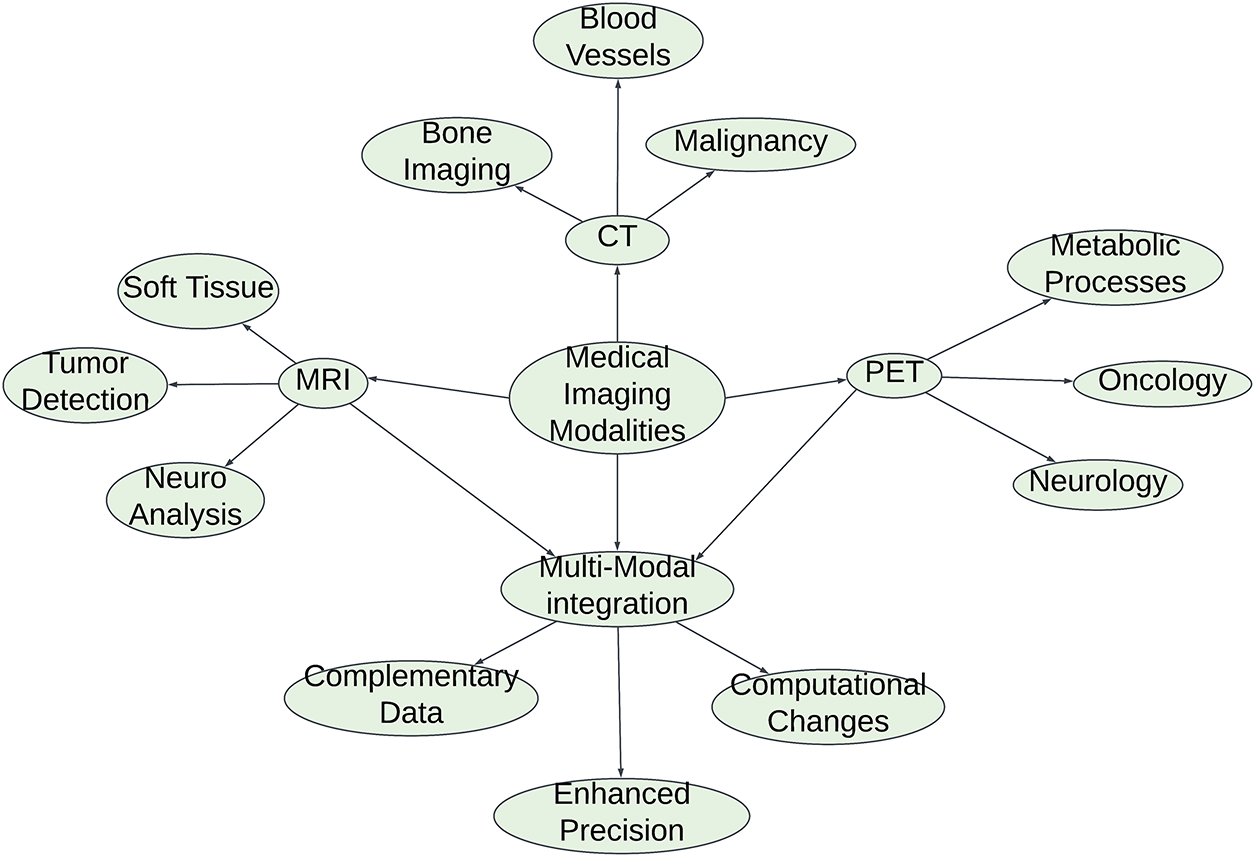

• MRI (Magnetic Resonance Imaging): MRI generates highly detailed, contrast-rich images of soft tissues that are crucial for detecting and evaluating various health issues, including neoplasms and disorders affecting the nervous system.

• CT (Computed Tomography): High-resolution cross-sectional imagery is particularly useful for identifying bone breaks, malignancies, and disorders affecting the blood vessels.

• PET (Positron Emission Tomography): Visualization of metabolic processes through functional imaging is commonly used in the fields of oncology and neurology.

Although individual modalities are effective on their own, integrating them improves the diagnostic precision by offering complementary data. Nevertheless, this multimodal strategy presents difficulties in merging and interpreting information and requires sophisticated computational techniques. Fig. 4 illustrates that, while individual modalities perform well on their own, combining them creates a multimodal approach that improves diagnostic precision by utilizing complementary information. Nevertheless, this method presents challenges in terms of data integration and interpretation, requiring sophisticated computational techniques.

Figure 4: Medical imaging modalities and multi-modal integration

2.2 Traditional Approaches in Medical Image Analysis

Historically, medical image interpretation relied on hand-crafted features and traditional machine learning techniques, and the emergence of CNNs has marked a significant breakthrough, as these systems automated feature extraction processes and exhibited remarkable effectiveness in a range of applications, including:

• Image Classification: Assigning diagnostic labels based on visual patterns.

• Segmentation: Identifying and outlining specific anatomical components or diseased areas.

• Object Detection: Identifying and localizing specific abnormalities.

Despite the impressive achievements of CNNs, they have inherent limitations. The limited scope of their receptive fields hinders their capacity to detect long-distance relationships, which is essential for processing multimodal information. Furthermore, CNNs struggle to effectively combine diverse data types, as they handle each modality independently, thus constraining their effectiveness in tasks that require the comprehensive integration of multiple modalities [70–74].

2.3 Transformers: A Paradigm Shift

Originally designed for NLP, Transformers have brought about a significant shift in the field of AI by addressing the shortcomings of traditional models. Unlike CNNs, transformers utilize self-attention mechanisms to examine global connections throughout entire datasets. This ability allows them to recognize intricate relationships among diverse components of input data, making them exceptionally suitable for tasks demanding a thorough grasp of information. In the realm of computer vision, transformer models such as ViTs and Swin Transformers have demonstrated exceptional performance for

• Image Classification: Competing with or surpassing CNNs in accuracy.

• Object Detection and Segmentation: Providing enhanced precision by leveraging global context.

• Anomaly Detection: Identifying subtle, context-dependent irregularities.

These advancements have created new opportunities in the field of medical imaging, where it is essential to comprehend the spatial and semantic connections across various modalities [75–77].

2.4 Transformers in Multi-Modal Medical Imaging

The intricate nature of multimodal medical imaging data necessitates the development of models that can synthesize various types of information. In this field, transformers have shown exceptional performance by utilizing self-attention mechanisms to capture cross-modal relationships efficiently. This approach has resulted in notable progress in several areas including:

• Tumor Segmentation: Combining anatomical data from MRI scans with functional information from PET imaging to accurately determine tumor boundaries.

• Disease Detection: Improving diagnostic accuracy, especially for intricate disorders such as neurodegenerative conditions, by incorporating diverse types of input data.

• Anomaly Localization: Identifying abnormalities by integrating information across modalities.

Advanced models such as TransUNet and MedT, which integrate transformer-based architectures, have demonstrated remarkable performance in medical image segmentation. These advanced systems leverage self-attention mechanisms to extract multiscale contextual information, leading to more accurate and robust image analysis. Moreover, transformer models can be trained on large-scale datasets and then fine-tuned for specific applications using small amounts of labeled data, thereby addressing a common challenge in medical imaging. The modular design of these systems enables smooth integration of additional patient data, including electronic health records (EHRs), lab results, and genetic information. This integration establishes the foundation for personalized medical approaches [78].

2.5 Challenges and Future Directions

Despite their potential, transformer-based models face challenges in clinical deployment:

• High Computational Complexity: Processing high-resolution medical images using transformers requires a substantial amount of computational power and resources.

• Generalizability: To ensure broad adoption, it is essential to verify that the model functions effectively in diverse patient populations and across various imaging modalities.

Future studies will address these obstacles by streamlining transformer designs for greater efficiency and improving their ability to adapt to various clinical environments. The combination of transformers with other AI technologies is expected to result in holistic, patient-focused healthcare systems [79].

This literature review offers a comprehensive analysis of ongoing studies and methodologies pertaining to the application of transformer-based models in multimodal image analysis within healthcare. By exploring the current landscape, this section sheds light on the existing knowledge deficits, challenges, and prospective developments in the field. To methodically explore fundamental works, methodologies, comparative studies, and emerging trends, this review is structured into separate subsections [38].

This section discusses the approaches utilized to implement transformer-based models in multimodal medical image analysis. We aimed to provide a comprehensive analysis of the techniques, procedures, and key architectural decisions involved in developing and implementing transformer-based systems for medical image analysis. Our goal is to present a detailed exploration of the fundamental elements and factors to be considered when building these sophisticated analytical frameworks for healthcare imaging [80–83].

3.1.1 Transformer Architectures for Medical Image Analysis

In medical imaging, transformers demonstrate exceptional performance by capturing intricate patterns across different modalities and modeling global relationships. Their self-attention mechanisms allow for accurate segmentation and classification, thus outperforming conventional models. The following sections examine the influence of key architectures, including ViTs, swine transformers, and hybrid models, on the field of medical imaging.

ViTs (ViTs)

ViTs are one of the most significant architectures in image analysis, showcasing their capability in both classifying and segmenting medical images, which involves splitting images into patches and processing them using multihead self-attention mechanisms. This holistic method enables ViTs to recognize far-reaching connections, which is crucial for analyzing the complex patterns present in medical imagery. Li et al. [84] employed a dual-stream Vision Transformer to analyze gait using only a single, affordable RGB camera, highlighting the capability of transformers to derive medical insights from limited data. ViTs have demonstrated superior capabilities in certain image analysis tasks compared to CNNs, primarily due to their capacity to model relationships between distant pixels [66].

Swin Transformers (Swin-T)

Swin Transformers, developed as a model for extracting hierarchical features, excel in processing high-resolution imagery. In contrast to conventional ViTs, which use a fixed patch size for image analysis, Swin-T employs self-attention mechanisms based on windows to control the computational complexity. This design has shown remarkable effectiveness in medical imaging applications, such as segmenting tumors and classifying diseases, owing to its ability to process large-scale, high-resolution medical images efficiently [58]. Zhang et al. [85] introduced an innovative method to address the difficulties of segmenting brain tumors when MRI modalities are unavailable. Achieving precise segmentation of brain tumors using MRI is crucial for integrated analysis of multimodal images. Nonetheless, in clinical settings, obtaining a full set of MRIs is not always feasible, leading to significant performance drops in current multimodal segmentation techniques owing to missing modalities. In this study, the authors introduced the first approach to leverage the transformer for multimodal brain tumor segmentation, which remains effective regardless of the combination of available modalities. Specifically, the authors proposed a new multimodal Medical Transformer (mmFormer) designed for learning from incomplete multimodal data, featuring three key components: hybrid modality-specific encoders that connect a convolutional encoder with an intra-modal transformer to capture both local and global contexts within each modality; an intermodal transformer that establishes and aligns long-range correlations across modalities to derive modality-invariant features with global semantics related to the tumor region; and a decoder that progressively upsamples and merges these modality-invariant features to produce reliable segmentation. Additionally, auxiliary regularizers are incorporated into both the encoder and decoder to bolster the resilience of the model to missing modalities. The authors performed comprehensive experiments using the public BraTS 2018 dataset for brain tumor segmentation. The findings reveal that the proposed mmFormer surpasses the leading methods for incomplete multimodal brain tumor segmentation across nearly all subsets of missing modalities [85–87].

Hybrid Models Combining CNNs and Transformers

The integration of CNNs and transformers offers a synergistic approach: CNNs excel at efficient local feature extraction, whereas transformers excel at handling global dependence. This combined architecture has proven particularly valuable in multimodal medical image analysis, where integrating various data types, such as MRI, CT, and PET scans, is crucial. By merging these two architectural styles, significant enhancements were achieved in both segmentation and classification tasks [69].

3.1.2 Pre-Processing Techniques for Multi-Modal Medical Images

Multimodal medical imaging is based on a combination of diverse diagnostic imaging methods, including MRI, CT, and PET scans. Preparing the data through preprocessing is crucial for ensuring proper alignment, standardization, and overall quality improvement. This step addresses various issues, including misalignment, variations in intensity, and scarcity of labeled data, ultimately leading to enhanced model performance and improved diagnostic precision. Critical preprocessing techniques involve image registration, normalization, and data augmentation, which prepare the data for subsequent tasks, such as segmentation and feature extraction.

Image Registration

Image registration is a crucial preliminary step in multimodal medical image analysis. This method synchronizes different medical imaging modalities, including MRI, CT, and PET scans, with a common reference frame. By doing so, it ensures that anatomical structures identified in each modality correspond accurately, facilitating effective data integration. Various registration techniques, such as rigid and non-rigid methods, are employed based on the types of images and the level of precision required. The image registration process contributes to an improved model performance in multimodal fusion tasks by providing consistently aligned data for analysis. Accurate image alignment enables more precise interpretations from the combined data, which is vital for the efficacy of medical diagnostic tools. The alignment depicted in Fig. 5 guarantees that anatomical structures observed across different modalities correspond accurately, facilitating smooth integration of data [64].

Figure 5: Medical imaging modalities and multi-modal integration

Normalization and Standardization

Different modalities in multimodal medical imaging often have distinct intensity distributions, which create challenges when integrating data from various sources. To overcome this obstacle, normalization methods are frequently utilized to harmonize the pixel intensity across different modalities. For instance, MRI scans, which typically display lower intensity values than PET images, require normalization to ensure that the features from diverse imaging techniques are comparable. The standardization procedure is not just a technical necessity but also a vital element in ensuring that subsequent steps in feature extraction function efficiently and precisely across different modalities. It aids in creating consistent and comparable data for the model to learn from, thereby enabling the development of robust and high-performance models [63,88,89].

Data Augmentation for Medical Imaging

Data augmentation has become an essential strategy for overcoming challenges associated with the scarcity of labeled medical imagery. In healthcare, the shortage of labeled data remains a significant hurdle because acquiring a substantial amount of high-quality annotated images is often costly and time intensive. Various data augmentation techniques, including rotation, flipping, and scaling, have been employed to artificially increase the size of datasets. This expansion improves the model’s ability to effectively generalize. Through data augmentation, the models become more resilient to different image transformations and exhibit improved performance on unseen data. These augmentation techniques are specifically adapted for medical image datasets to ensure diverse representations of the anatomical structures and abnormalities. This modification is crucial for enhancing the resilience of the model and its ability to apply knowledge to diverse real-world situations [90]. The transformer-based framework for multimodal medical imaging was trained through a two-step process: pre-training followed by fine-tuning. Initially, during the pretraining stage, the model was exposed to a large-scale medical imaging dataset to acquire generalizable feature representations across various imaging modalities. This phase ensures that the transformer adeptly captures both the modality-specific and cross-modal relationships. In the fine-tuning stage, a diverse, task-specific dataset is employed to tailor the model for the intended medical imaging application. This dataset encompasses a broad spectrum of cases with different imaging conditions, anatomical structures, and pathological manifestations, thereby ensuring the robustness and generalizability of the model. Furthermore, domain-specific augmentation techniques and optimization strategies to boost model performance while mitigating overfitting. Additional details regarding the dataset size, diversity, and pre-training configurations can be found in the Methodology section.

3.1.3 Fusion Strategies for Multi-Modal Data

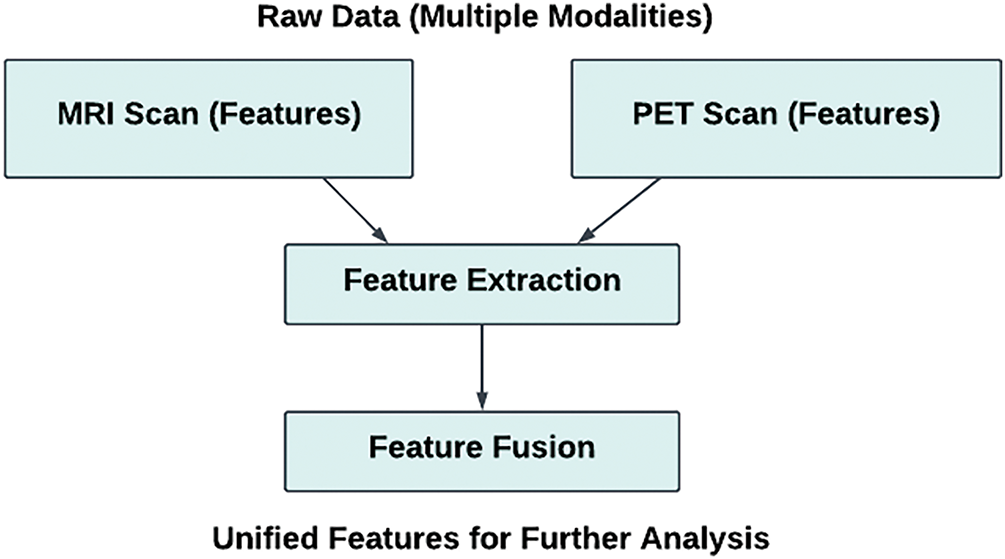

Data fusion techniques merge information from various sources to improve the model outcomes. One approach, known as early fusion, incorporates features from different data types during the initial processing stage, thus enabling the model to examine complementary information. Although this method is powerful for intricate tasks, it requires meticulous alignment and standardization. Another technique, late fusion, aggregates results from independent networks trained on single data types, providing ease of implementation and adaptability but potentially overlooking interactions between different modalities. To improve the interpretability of the transformer-based model for clinical use, attention-based visualization techniques to examine the decision-making process. specifically used attention heatmaps and Grad-CAM-like methods tailored for transformer architectures to pinpoint the most significant areas in the input data during predictions. These visual tools enable us to evaluate how the model focuses on various modalities and anatomical structures, thereby offering insights into its reasoning. By utilizing these techniques, it is ensured that the prediction of the model met clinical expectations, thereby enhancing transparency and trust among healthcare professionals. Furthermore, attention distributions across different layers to comprehend how interactions specific to each modality and cross-modal interactions contribute to the final decision. These visual explanations are essential to confirm the reliability of the model in practical medical applications. A detailed discussion of the interpretability and visualization outcomes is included in the Results section. Early fusion, a technique illustrated in Fig. 6, combines features from diverse data types during the initial processing phase. This approach enables the model to examine complementary data from the beginning.

Figure 6: Feature-level fusion of MRI and PET scan data

Early Fusion (Feature-Level Fusion)

Early fusion, also known as feature-level fusion, combines features extracted from multiple modalities during the initial phases of processing before the network analyzes them. This method enables the model to concurrently evaluate the complementary attributes from multiple sources. Although this approach offers benefits, it also presents challenges. Sophisticated techniques for alignment and normalization are necessary to address issues stemming from modality-specific variations such as disparities in image quality, intensity, or spatial alignment. Nevertheless, early fusion remains a viable option when it’s crucial to incorporate diverse information from multiple modalities from the outset. For intricate challenges such as multi-modal segmentation or multi-class classification, this approach proves especially valuable. Integrating various data sources enables a more thorough understanding of the medical conditions being examined [51,73,91].

Late Fusion (Decision-Level Fusion)

In contrast to early fusion, late fusion combines the results or outputs from separate networks, each of which has been trained on different modalities that are processed through their own networks, with the results combined at the final stage using methods such as weighted averaging or majority voting. This approach, which is simpler and potentially advantageous when aligning modalities is difficult, has the drawback of not fully exploiting intermodal interactions during feature extraction. The simplicity of late fusion makes it a viable option when modal alignment is particularly challenging or when computational resources are constrained. However, learning complex relationships between modalities may not be optimal [52].

3.1.4 Self-Attention and Cross-Attention Mechanisms in Transformers

The self-attention mechanism in transformer models allows the system to focus on different parts of an image, effectively capturing long-range dependencies that are crucial for applications, such as tumor detection. Cross-attention, when analyzing data from multiple sources, enables the model to link relevant information across various modalities by integrating MRI and PET scan data. This combination improves the accuracy and effectiveness of the model in tasks such as defining tumor margins. Abidin et al. [92] underscored the significance of integrating various MRI modalities to enhance brain tumor segmentation. By combining different MRI sequences, a more comprehensive and precise depiction of tumors and adjacent brain structures can be achieved, which is vital for effective segmentation. Multi-modal MRI allows researchers to assess the efficacy of different segmentation algorithms and compare their outcomes. These comparative analyses have fostered the creation of new techniques, ultimately improving the precision of brain tumor segmentation. The Brain Tumor Segmentation (BraTS) Challenge dataset is a crucial benchmark for evaluating segmentation performance. This dataset comprises multiple MRI modalities, including T1, T2, T1ce, and FLAIR, along with meticulously annotated tumor-segmentation masks. It remains a vital resource for researchers and clinicians involved in glioma segmentation and brain tumor diagnosis.

Self-Attention in ViTs

Transformer models are characterized by their key component, namely self-attention. This capability enables the model to focus on different parts of an input image or sequence, regardless of their spatial connections, making it particularly useful for analyzing complex medical imagery. Self-attention facilitates the model’s ability to discern connections between distant image regions, thereby capturing essential long-range dependencies that are crucial for the precise segmentation or classification of complex structures in medical data. This capability is especially advantageous for tasks such as tumor identification, which requires comprehension of the interrelationships among diverse anatomical areas. The self-attention mechanism allows transformers to efficiently process these relationships, enabling a more precise and dependable analysis of medical imagery [68,75,93].

The attention mechanism between different input vectors is computed as follows [6]:

where

Cross-Attention for Multi-Modal Data

Cross-attention is a vital function of multimodal image analysis. In a multimodal framework, the model must grasp the interrelationships between different modalities, such as MRI and PET, which provide complementary information. Transformer models utilize cross-attention mechanisms to concentrate on the pertinent aspects of one modality while processing the other. This enables improved data integration because the model can correlate structural elements from one modality with functional or metabolic information from another. For example, in tumor segmentation, cross-attention enables the model to merge structural details from MRI with metabolic activity patterns from PET scans, resulting in more precise segmentation and diagnosis. A key advantage of transformers in multimodal medical image analysis is their capacity to capture and align relationships efficiently across different modalities [65].

3.1.5 Challenges and Future Directions in Transformer-Based Models for Healthcare

In the healthcare sector, transformer models encounter several obstacles, including intensive computational requirements, medical data inconsistencies, and limited applicability in various clinical environments. Ongoing studies aim to enhance these models by focusing on three key areas: boosting operational efficiency, strengthening resilience against data irregularities, and improving versatility. To overcome these obstacles and enhance the capabilities of transformer models in medical contexts, scientists are utilizing techniques like data augmentation and transfer learning.

Computational Efficiency and Scalability

The implementation of transformer models for high-resolution medical imaging presents significant computational hurdles. These models typically require extensive memory and processing capabilities, especially when processing large 3D medical datasets, which can be computationally demanding. Ongoing research is aimed at enhancing the computational efficiency of transformers. Strategies such as model pruning, distillation, and hybrid architectures are under investigation to decrease the parameter count while preserving high performance, while streamlining transformer architectures for deployment on hardware with limited resources, such as edge computing systems and mobile devices. This requires careful balancing of the model size with inference speed. These advancements are essential for enabling the implementation of transformer-based models in real-world healthcare settings, where computational resources may be constrained [38,67,79].

Data Quality and Noise Robustness

Noise and artifacts frequently degrade medical images, potentially hampering the effectiveness of the models trained on such data. To ensure practical applications in healthcare settings, transformer models must be able to withstand these imperfections. Current studies have explored strategies, such as adversarial training and noise reduction, to enhance model resilience when faced with noisy data. Researchers have strived to develop more dependable and stable solutions for real-world clinical applications by improving the model resistance to common imaging flaws. The widespread adoption of transformer models largely depends on their ability to handle noise because actual medical data often contain imperfections that must be considered during model development and implementation [94].

Generalizability Across Clinical Scenarios

A key issue is ensuring that transformer models can function effectively in various clinical settings. Bias in medical image datasets stemming from factors such as patient demographics and imaging techniques poses a significant challenge. Overcoming these obstacles is vital for models to perform consistently in different healthcare environments, and scientists are exploring methods such as data augmentation, domain adaptation, and transfer learning to mitigate biases in AI models. These efforts aimed to improve the flexibility of transformer models, allowing them to be used in a variety of clinical settings. This would enable the wider adoption and increase the impact of AI in the medical field. The success of AI systems in diverse real-world healthcare environments depends on their ability to effectively generalize [95–97].

The application of transformer-based models for multimodal medical image analysis has gained increasing attention in recent years. This section examines the primary insights from the existing research, emphasizing the efficacy, obstacles, and potential opportunities associated with incorporating transformers into healthcare applications [98].

3.2.1 Advancements in Medical Image Classification and Segmentation Using Transformers

ViTs have demonstrated exceptional performance in the realm of medical image analysis, particularly for tasks such as classification and segmentation. These capabilities are essential for interpreting various types of medical-data formats. Early studies have underscored the effectiveness of ViTs in detecting illnesses and outlining anatomical structures. Studies have shown that ViTs can effectively capture long-range pixel relationships by breaking down images into smaller segments and utilizing multihead self-attention mechanisms. The ability to recognize connections between distant elements is particularly advantageous in the field of medical imaging, where understanding the interrelations of spatially separated components is crucial [59].

Studies have shown that ViTs demonstrate superior performance compared with conventional CNNs in certain medical imaging tasks. This advantage is particularly evident in areas such as tumor segmentation and organ detection, especially when processing intricate images such as those obtained from MRI and CT scans. Unlike CNNs, which rely on localized receptive fields, the global attention mechanism employed by transformers enables them to detect intricate patterns across various image sections, which is a crucial feature in medical image analysis. Furthermore, numerous studies have shown that ViTs trained on large datasets demonstrate enhanced generalization capabilities when encountering unfamiliar images, highlighting their adaptability to different medical imaging modalities. In the field of medical image analysis, ViTs demonstrate a notable advantage owing to their ability to recognize intricate patterns across various parts of an image [66,79,99].

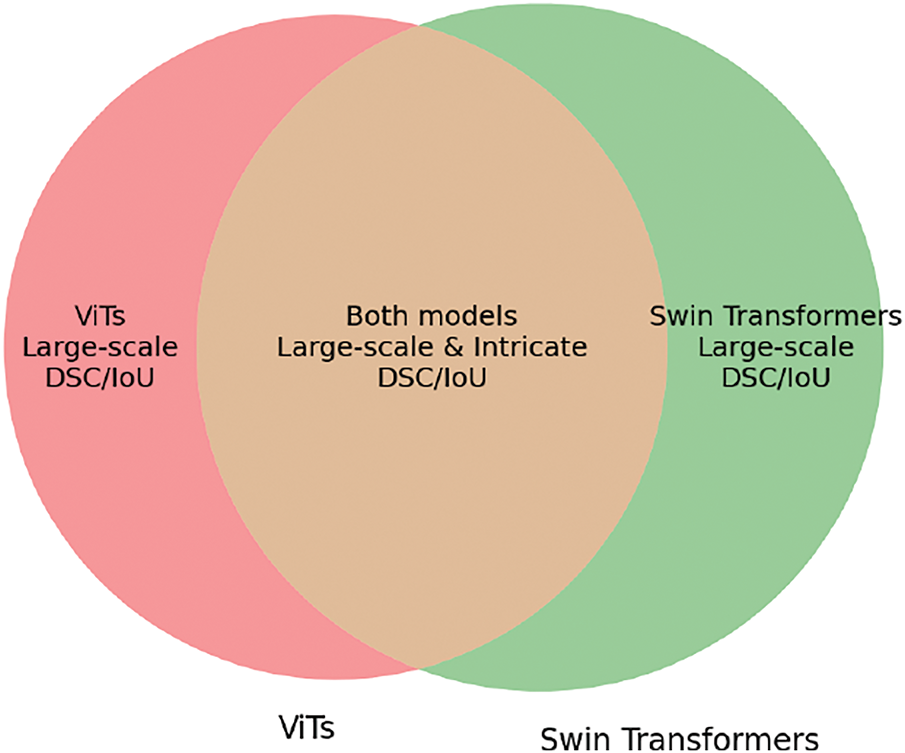

3.2.2 The Role of Swin Transformers in Handling High-Resolution Medical Images

Current studies have demonstrated the remarkable capabilities of swine transformers (Swin-T) in medical image analysis, especially for high-resolution applications. The hierarchical attention mechanism is a key strength of the Swin-T. Unlike ViTs, which use static patch sizes, Swin-T employs a window-based self-attention method that dynamically adjusts patch sizes, as shown in Fig. 7. This enables Swin-T to process high-resolution images, such as those from MRI and CT scans, more effectively. The hierarchical structure of Swin-T allows it to efficiently manage the computational requirements of high-resolution data while preserving essential spatial information [60].

Figure 7: Flowchart: Swin-T vs. ViTs in medical image analysis

Studies have shown that Swin-T outperforms traditional models across a range of medical imaging applications such as identifying lesions, outlining tumors, and categorizing diseases. The ability of the model to process high-resolution images efficiently makes it particularly well suited for analyzing medical images in real time. This capability is crucial in clinical settings where both speed and precision are vital factors to consider. Hussain et al. [100] advanced the segmentation of biomedical images by incorporating DenseNet-based attention mechanisms that focus on spatial and semantic channel guidance. This method enhances the extraction of features and the precision of segmentation, overcoming the shortcomings of conventional encoder-decoder models. Utilizing such sophisticated architectures can boost the model performance in medical imaging applications.

3.2.3 Hybrid Models Combining CNNs and Transformers for Enhanced Performance

Recent studies have highlighted a growing trend in merging CNNs and transformers. This combination leverages the advantages of both structural designs: CNNs are adept at identifying localized patterns, while transformer models are particularly effective in recognizing broader contextual relationships. Research has shown that these hybrid models can surpass the performance of individual CNNs or transformers when analyzing multimodal medical images.

Combining CNNs with transformers has shown remarkable success in applications that require the synthesis of information from multiple modalities, particularly when merging data from diverse medical imaging sources, such as MRI, CT, and PET scans. In this combined approach, CNNs efficiently extract localized features, whereas transformers process broader connections across different modalities. This strategy has led to notable advancements in applications such as multi-modal tumor segmentation and disease classification, where effectively combining complementary information from diverse modalities is crucial. The combined structural approach offers a promising avenue for improving both the accuracy and robustness of techniques used in medical image analysis [101–103].

3.2.4 Multi-Modal Data Fusion Strategies for Improved Diagnostic Accuracy

The successful integration of multimodal medical images plays a crucial role in improving diagnostic precision. Several strategies have been identified for incorporating multimodal data into transformer-based models, such as early, late, and intermediate fusion techniques. These methodologies are essential for effectively merging the information from diverse medical imaging modalities [55].

Early Fusion (Feature-Level Fusion)

Studies have demonstrated that early fusion–a technique for combining features from diverse modalities in the initial phase–enables models to develop integrated representations of complementary characteristics across various data types. This method is especially advantageous when working with heterogeneous data sources such as MRI and PET scans. Nevertheless, meticulous preprocessing is required to address the issues related to modality misalignment and intensity normalization. Although early fusion can be computationally intensive, it has been proven to improve model performance by facilitating acquisition of more comprehensive and complex representations of medical data [50].

Late Fusion (Decision-Level Fusion)

Late fusion is a widely used approach in multimodal medical image analysis, particularly when aligning the different modalities is difficult. This method involves processing each modality independently using separate neural networks before merging their final outputs. Current studies indicate that late fusion is particularly advantageous in situations with constrained computational resources, or when modality alignment is not feasible. However, this technique may not fully exploit the potential of the intermodal connections. Despite its simplicity, late fusion has shown efficacy in certain applications such as disease identification and classification, where the interplay between various modalities is less important [54].

Intermediate Fusion (Layer-Level Fusion)

Recently, a more sophisticated approach, called intermediate fusion, has gained prominence. This technique involves integrating features from diverse modalities within the middle layers of the neural networks. Models based on transformers employing self-attention mechanisms are particularly adept at implementing this fusion method. Studies have shown that intermediate fusion effectively captures the interrelationships between various modalities across different levels of abstraction, resulting in improved performance in classification and segmentation tasks. This method achieves a middle ground between the computational advantages of late fusion and the extensive feature representations of early fusion, offering a promising technique for examining multi-modal medical imagery [53,55,104].

3.2.5 Advancements in Self-Attention and Cross-Attention Mechanisms

Two key mechanisms are employed in multimodal image analysis using transformer models: self-attention and cross-attention. These methods allow the model to prioritize the key aspects of the input information, either within a single mode (self-attention) or between different modes (cross-attention). Using these techniques, the model can effectively prioritize and process important information from the input [105].

Self-Attention

Self-attention mechanisms in transformers enable the recognition and processing of extensive connections within medical images, which is crucial for the accurate segmentation and classification of anatomical components. Research has shown that this self-attention capability enhances transformers’ capacity to examine complex medical imagery, where relationships between distant regions are important. This characteristic makes transformers particularly adept at analyzing noncontiguous structures, such as detecting tumors or identifying anatomical features that span large portions of an image [106].

Cross-Attention

Cross-attention mechanisms have been demonstrated to be remarkably effective in multimodal frameworks. The transformer’s capabilities allow it to prioritize the crucial elements of one imaging modality while analyzing another, facilitating the integration of complementary data from various imaging techniques. For example, this approach can merge structural details from MRI with metabolic information from PET scans. The implementation of cross-attention has notably enhanced the precision of multimodal tasks such as tumor segmentation. In this scenario, various modalities provide essential but distinct insights into tumor size, location, and metabolic activity [107].

3.2.6 Challenges in Computational Efficiency and Scalability

Scientists have encountered notable obstacles when attempting to apply transformer-based models to high-resolution medical images, primarily because of the issues related to computational complexity and scalability. Despite their proven effectiveness, these models require substantial computing resources, particularly for processing three-dimensional medical imaging data. As illustrated in Fig. 8, current research efforts are focused on enhancing transformer efficiency using various methods, including model pruning, distillation, and the creation of hybrid architectures. The goal of these methods is to reduce the number of parameters and memory demands, thereby enhancing the efficiency and practical use of these models in medical imaging applications [7,108–110].

Figure 8: Challenges and strategies for improving Transformer models in medical imaging

Scientists are striving to improve transformer models for deployment in clinical settings, where real-time data analysis is essential. Studies have also explored the use of edge computing and mobile technologies to enable the deployment of transformer-based models in resource-constrained environments. Addressing these challenges is crucial to ensuring that transformer models are feasible and can be expanded for broad implementation across the healthcare industry.

3.2.7 Data Quality, Noise, and Robustness

Challenges related to data quality and noise continue to pose significant hurdles in medical image analysis. Current research has focused on enhancing the resilience of transformer models when confronted with defective or noisy datasets. Imperfections in medical images, such as noise generated by scanners and artifacts caused by motion, are common and can negatively impact the effectiveness of the algorithms used for image analysis. Research has demonstrated that transformer models are particularly vulnerable to these data imperfections, particularly when implemented in real-world clinical settings [111].

Researchers have explored multiple approaches to address these issues, such as adversarial training, noise reduction methods, and robust loss functions, with the goal of improving the ability of transformer models to handle noisy data. The objective is to improve the durability and dependability of these systems when implemented in healthcare settings where incomplete or distorted data are prevalent. Enhancing the robustness of transformers is a critical step toward their successful implementation in practical healthcare settings [75,112,113].

Evaluating the relative strengths of transformer-based models in multimodal medical image analysis has emerged as a crucial component in determining their efficacy and constraints compared with conventional deep learning techniques, particularly CNNs. This section provides a comparative evaluation of transformer architectures, CNNs-based methods, and hybrid solutions, focusing on their effectiveness in critical medical image analysis tasks including classification, segmentation, and multimodal data fusion. In addition, we investigate the trade-offs between these approaches in terms of computational performance, robustness, and versatility across various scenarios [114].

3.3.1 Transformer Models vs. CNNs in Medical Image Analysis

Transformers excel at managing tasks that require extensive context and long-range connections, whereas CNNs are more effective in handling localized features and processing smaller images. Although Transformers enhance segmentation capabilities, they require additional computational resources.

Performance Comparison in Image Classification

Transformers have demonstrated significant improvements over traditional CNNs in various image-classification tasks, particularly in situations requiring extensive context and long-range dependencies. CNNs have been widely adopted for medical image analysis, including applications in tumor detection, organ segmentation, and disease classification. However, these architectures have limitations. Although CNNs are adept at extracting localized features through convolution operations, they face challenges in capturing comprehensive relationships between distant image regions. This capability is particularly crucial in medical imaging, in which spatial correlations among structures can extend across substantial portions of an image. By contrast, transformers have shown promise in addressing these limitations and providing a more holistic approach to image analysis in medical applications [115]. Utilizing advanced frameworks such as Hussain & Shouno [116] can significantly improve the performance of medical image segmentation tasks. MAGRes-UNet introduces a multi-attention-gated residual U-net structure that incorporates multi-attention-gated residual blocks using activation functions such as Mish and ReLU, along with optimization techniques such as AdamW and Adam. This architecture overcomes the limitations of traditional encoder-decoder networks by effectively merging information from feature maps and capturing fine-scale contextual details, thus enhancing segmentation precision. The statistical significance analysis verifies that these improvements are not merely due to random fluctuations, but are a result of the superior capability of the model to capture global dependencies and multimodal relationships. A detailed breakdown of the performance metrics and significance tests is provided in the results section.

Conversely, ViTs operate by dividing images into patches and utilizing multihead self-attention mechanisms, allowing them to detect global connections across the entire image. Research comparing ViTs and CNNs has demonstrated that ViTs frequently surpass CNNs in tasks that require the recognition of patterns across distant areas, such as identifying large tumors or categorizing diseases with intricate patterns. Despite advancements in other techniques, CNNs remain more computationally efficient, particularly when processing images of smaller size or lower resolution, where the overall context is less important [57]. Transformer-based models have found successful applications beyond medical image analysis, particularly in various vision-based healthcare-monitoring systems. A significant example is their use in analyzing electrocardiograms (ECGs) to detect heart disease. In the research [117], scientists employed Vision Transformer architectures such as Google-ViT, Microsoft-Beit, and Swin-Tiny to classify ECG images. These models achieved impressive classification outcomes, underscoring the potential of transformers in interpreting ECG data to diagnose heart conditions [118,119].

Integrating Transformer models into vision-based healthcare monitoring systems provides notable benefits, including the ability to capture long-range dependencies and model global relationships within the visual data. These features can enhance the diagnostic precision and streamline monitoring processes across a range of healthcare applications.

Segmentation Tasks: Transformers vs. CNNs

In the field of image segmentation, where accurate identification of anatomical features or abnormalities is crucial, both transformers and CNNs exhibit distinct advantages. In the field of medical image segmentation, techniques based on CNNs, especially Fully Convolutional Networks (FCNs) and U-Net, have shown exceptional performance and effectiveness. These approaches excel in tasks such as delineating brain tumors, outlining organs, and lesion detection. CNNs excel in their capacity to effectively detect and process local patterns and formations, making them ideally suited for intricate segmentation tasks that demand precise boundary identification [56].

By contrast, transformers have become increasingly popular for segmentation tasks because of their capacity to model relationships across long distances. Vision Transformer and Swin Transformers have demonstrated enhanced segmentation accuracy by capturing contextual information from the remote areas of an image. This capability is particularly beneficial when segmenting intricate structures, such as the brain or organs, where transformers can identify spatial connections that are challenging for CNNs to detect, especially in cases involving large or irregularly shaped structures. Nevertheless, the computational demands of transformers, particularly ViTs, can be substantial when processing high-resolution images, potentially limiting their practical applications [62]. The Adversarial Vision Transformer framework boosts the segmentation of medical images by combining adversarial training with the transformers. This method enhances segmentation precision, particularly when annotations are scarce, by improving feature learning and strengthening the generalization [120–122].

3.3.2 Hybrid CNNs-Transformer Models

Architectures combining CNNs and transformers take advantage of the strengths of both components. These hybrid models utilize CNNs for their proficiency in detecting local patterns while simultaneously harnessing strength of the transformers in capturing broader contextual information. These hybrid architectures demonstrate exceptional performance in applications, such as multimodal tumor segmentation and disease classification. However, their high computational demands and complex training procedures necessitate careful optimization strategies.

Combining CNNs and Transformers for Enhanced Performance

Architectures that combine CNNs and transformers seek to leverage the strengths of both approaches. CNNs excel at identifying local patterns, whereas transformers are particularly skilled at recognizing long-distance connections within the data. Architectures such as the CNNs-Transformer and U-Net-Transformer strive to merge proficiency of the CNNs in local feature detection with the transformer’s ability to comprehend the global context. These hybrid approaches seek to combine the strengths of both neural network types to enhance overall performance [61].

Studies have demonstrated that combining CNNs and transformers into hybrid models can yield superior results compared with using either approach alone for certain medical imaging tasks. This involves examining multimodal medical imagery, which combines different imaging methods such as MRI, CT, and PET scans. In these scenarios, hybrid models excel by employing CNNs to efficiently extract localized features from each imaging modality, while simultaneously using transformers to capture global relationships across different modalities. This approach has proven particularly effective in applications such as multimodal tumor segmentation and disease classification, in which both localized details and broader contextual information play crucial roles [123].

Nevertheless, combining CNNs and transformers in hybrid models can be resource-intensive, necessitating careful network design to optimize the performance of both components. Moreover, integrating these distinct architectural approaches may present difficulties in model training and convergence, which presupposes that Fig. 9 demonstrates or exemplifies a hybrid CNNs-Transformer model or its implementation. If the image pertains to something else, the citation can be modified as needed.

Figure 9: Hybrid CNNs-Transformer model

3.3.3 Comparing Multi-Modal Data Fusion Techniques

Feature integration in multimodal networks can occur at various levels. One approach, known as early fusion, merges the features from various modalities at the input stage of the network. This method offers a comprehensive representation but requires advanced techniques for effectively aligning different modalities. Conversely, late fusion merges the outputs of separate networks, thereby offering computational simplicity at the cost of reduced precision. Striking a balance between these methods, intermediate fusion blends data at the middle layers, effectively capturing intermodal relationships while mitigating the drawbacks of both early and late fusion strategies.

Early Fusion vs. Late Fusion

The analysis of multimodal medical images often requires combining data from diverse imaging techniques, including MRI, CT, and PET scans. Researchers have suggested several integration approaches, with early fusion (at the feature level) and late fusion (at the decision level) being the most prevalent approaches. Early fusion combined features extracted from multiple modalities in the initial stages of the neural network, enabling the model to develop unified representations of complementary data. By contrast, late fusion merges the outputs of separate networks trained on individual modalities at a later point in the process [124].

Research comparing different approaches has demonstrated that early fusion can yield superior results in scenarios where fine-grained integration of complementary features from multiple modalities is necessary, such as multimodal tumor segmentation. Nevertheless, early fusion requires advanced techniques for alignment and normalization to address modality-specific variations, including differences in resolution, intensity, and spatial alignment.

In contrast, late fusion, which is computationally less complex and less affected by alignment issues, may not fully capitalize on the interactions between modalities. Research indicates that late fusion tends to be more effective when dealing with highly dissimilar modalities such as combining structural and functional imaging data. However, it may not achieve the same level of precision as early fusion when modalities are more complementary in nature [125].

Intermediate Fusion: A Balanced Approach

Intermediate fusion, which integrates features from various modalities in the mid-level layers of neural networks, is becoming increasingly popular as a balanced method. This approach offers the benefit of identifying cross-modal relationships without inundating the network with unprocessed data in its initial stages. Transformer-based architectures, which incorporate self-attention mechanisms, excel at intermediate fusion due to their capacity to identify complex relationships between modalities across various levels of abstraction [126].

Current studies have shown that intermediate fusion can achieve similar outcomes in multimodal applications, such as tumor segmentation and disease classification, effectively bridging the gap between early and late fusion approaches. This strategy is particularly effective when there is a need to capture both minute details and overarching dependencies in the merged data, as exemplified in multimodal imaging for tumor characterization [91,127,128].

3.3.4 Comparative Evaluation of Performance Metrics

Key metrics, such as accuracy, precision, recall, DSC, and IoU, were used to assess model performance. In tasks involving segmentation, CNNs show high precision and recall, whereas transformers perform well in intricate situations but struggle with smaller objects or structures. Hybrid approaches enhance both precision and recall and strike a balance between effectiveness and efficiency [129].

Accuracy, Precision, and Recall

To evaluate the efficacy of different models for analyzing medical images, crucial metrics include accuracy, precision, and recall. Although accuracy offers a broad overview of correct predictions, precision and recall are particularly crucial in healthcare settings because of the potentially serious implications of false positives and negatives [130].

For segmentation tasks, especially those involving the identification of specific anatomical structures, such as tumors or organs, CNNs typically demonstrate high precision and recall. By contrast, transformers may face challenges in achieving high recall, particularly when dealing with small or indistinct structures that are difficult to differentiate from the surrounding tissue [131].

Combining CNNs and Transformers in hybrid models has shown promising results in improving both accuracy and sensitivity. These combined methods employ CNNs to extract fine-grained local features while leveraging transformers to capture more comprehensive contextual information. Consequently, these hybrid models are more adept at delivering precise predictions while remaining responsive to smaller or less-obvious structures [132–134].

Dice Similarity Coefficient (DSC) and Intersection over Union (IoU)

In evaluating segmentation tasks, the Dice Similarity Coefficient (DSC) and Intersection over Union (IoU) are frequently employed as measures to evaluate the correspondence between predicted and ground truth masks. As illustrated in Fig. 10, transformer-based architectures, particularly ViTs and Swin Transformers, have demonstrated remarkable DSC and IoU results in specific segmentation contexts, especially when identifying large or complex structures [135].

Figure 10: Segmentation performance of Transformer models in DSC and IoU

Nevertheless, the DSC and IoU performances of transformer models can be significantly influenced by the input image resolution and the effectiveness of their attention mechanisms. In scenarios involving high-resolution images, the computational demands of transformers may restrict their capacity to handle large data volumes, potentially affecting their overall segmentation accuracy.

3.3.5 Computational Efficiency and Scalability

Owing to their quadratic complexity, the computational demands of transformers restrict their application in settings with limited resources. Although CNNs are more efficient, they struggle to capture the overall context. Hybrid models combine both architectures, achieving a compromise between the effectiveness and computational demands. This makes them well suited for analyzing medical images in real time [136].

Computational Complexity of Transformers

Despite their remarkable performance in numerous medical imaging tasks, transformer-based models continue to face significant challenges due to their high computational demands. Self-attention of the transformers component operates with quadratic complexity, resulting in significant resource consumption, particularly when processing extensive datasets or high-resolution three-dimensional images. For instance, ViTs and swine transformers demand considerable memory and processing capabilities, which may restrict their use in settings with limited resources, such as mobile devices or real-time clinical systems [137].

However, CNNs are more computationally efficient and can be utilized with less powerful hardware. The widespread use of CNNs in medical image analysis persists owing to their computational efficiency despite their limitations in capturing global relationships. To overcome these shortcomings, researchers developed hybrid models that combine CNNs with transformers. These integrated approaches aim to strike a balance between processing speed and effectiveness, thereby providing a viable option for analyzing medical images in real time [138–140].

3.4 Limitations of Transformer-Based Models in Medical Image Analysis

Although transformer-based models have shown remarkable progress and promise in medical image analysis, several obstacles hinder their widespread implementation and optimal use. These challenges encompass various aspects including computational efficiency, data needs, model resilience, and adaptability across different clinical contexts. This section delves into these limitations, offering a comprehensive examination of the hurdles that must be overcome to improve the effectiveness of transformers in medical imaging applications. This study investigates the intricacies of tackling these challenges to improve the real-world applicability of such models in medical environments [141].

3.4.1 Computational Complexity and Resource Demands

A major drawback of transformer architectures, especially ViTs and Swin Transformers, is their substantial computational demands; unlike CNNs, which employ localized receptive fields and are comparatively computationally efficient, transformer models rely on self-attention mechanisms that process every pair of positions within the input sequence. This results in a quadratic time complexity relative to the number of input elements, such as image patches. For substantial images, including 3D medical scans or high-resolution images, the computational load becomes increasingly challenging [142].

This computational expense also translates into substantial memory requirements. Transformers require considerable memory to store intermediate activations and attention maps during both training and inference phases. This can quickly become a constraining factor, particularly in scenarios involving large datasets or real-time processing. This issue is further amplified in medical imaging applications, where datasets may comprise 3D volumes containing hundreds or thousands of slices per image. Although numerous strategies have been employed to minimize complexity, including the use of efficient attention mechanisms and hierarchical methods, such as Swin Transformers, the substantial computational requirements continue to pose a major challenge to the widespread implementation of these technologies in clinical environments [143–146].

Solutions and Ongoing Research

Current research efforts are directed towards enhancing transformer models for use in medical imaging. Scientists are investigating various strategies to decrease the memory and processing demands of these models, including techniques such as model pruning, distillation, and low-rank approximation. Additionally, increasing attention is being paid to combined approaches that merge the benefits of CNNs and transformer architectures. These combined approaches enable efficient extraction of local features while leveraging the global contextual understanding provided by transformers [147].

3.4.2 Data Requirements and Labeling Challenges

A significant drawback of transformer models is their reliance on extensive high-quality datasets for training. CNNs can perform adequately with moderate amounts of labeled data through transfer learning. Transformers typically require vast quantities of labeled information to achieve comparable results, which presents a specific difficulty in the field of medical image analysis, where annotated datasets are often scarce and expensive to obtain, owing to the need for expert-provided labels [148].

Furthermore, medical image datasets frequently suffer from imbalances, with certain conditions or abnormalities being underrepresented. Similar to other deep learning architectures, transformers are prone to overfitting when faced with limited data, owing to their extensive number of trainable parameters. In the absence of sufficient data, transformers may struggle to generalize effectively, potentially resulting in suboptimal performance when applied in real-world clinical environments [149].

Data Augmentation and Synthetic Data Generation

Researchers are tackling the challenge of limited data by exploring techniques for data augmentation and creating synthetic data. To artificially expand the training datasets, methods such as rotation, scaling, and translation can be utilized, which helps improve the model’s capacity for generalization. Additionally, scientists are examining the potential of generative adversarial networks (GANs) and other approaches for creating synthetic data to produce lifelike medical images that can be used to train transformer models. Nevertheless, the production of high-quality synthetic data that accurately capture the diversity of real-world medical images remains a formidable challenge [20,150,151].

3.4.3 Overfitting and Generalization Issues

The versatility and strength of transformer models render them susceptible to overfitting, particularly when trained on small datasets. When a model learns to mimic training data instead of identifying general patterns, overfitting occurs, leading to suboptimal performance on unseen data. This problem is particularly significant in the field of medical image analysis, where there is a considerable diversity in patient characteristics, imaging protocols, and disease presentations [152].

Furthermore, transformer models often face challenges in adapting to new clinical environments or different medical facilities. As an example, a model developed using data from a single healthcare facility may not function effectively when applied to another facility with differing imaging techniques or patient demographics. The inability to generalize across various clinical settings poses a substantial obstacle to the practical implementation of transformer models in healthcare [36,153].

Transfer Learning and Domain Adaptation

Transfer learning is a commonly employed technique for addressing overfitting and improving the model generalization. This approach involves refining a model trained on one dataset using another. In the context of medical image analysis, this typically consists of initially training transformer models on large, publicly available datasets such as ImageNet or other medical image collections, and then fine-tuning them on smaller, more specialized datasets. Although transfer learning has shown promising results in some cases, its efficacy is largely dependent on the level of similarity between the source and target domains. To further enhance the adaptability of transformer models across various clinical contexts, researchers are investigating domain-adaptation techniques, such as adversarial training and domain-invariant feature learning [113].

3.4.4 Model Interpretability and Explainability

Transformer models face a major hurdle in terms of their lack of transparency and interpretability. Despite their effectiveness in identifying complex data patterns, the opaque nature of their decision-making process makes it challenging for healthcare professionals to rely on and comprehend model outputs. In medical imaging, this concern is especially crucial because comprehending the rationale behind a model’s decision is essential to safeguard patient health and facilitate informed clinical decision-making [154].

However, conventional approaches, such as CNNs, offer somewhat better interpretability, especially when used in conjunction with visualization methods, such as saliency maps or Grad-CAM. These methods emphasize the regions in the images that are most influential in determining the outputs of the model. Such interpretability approaches are essential for healthcare professionals who rely on transparent reasoning to make informed decisions based on a model’s findings [155].

Efforts in Enhancing Explainability