Open Access

REVIEW

Evaluation of State-of-the-Art Deep Learning Techniques for Plant Disease and Pest Detection

1 Department of AKCSIT, University of Calcutta, Kolkata, 700106, India

2 Department of Computer Science, Illinois Institute of Technology, Chicago, IL 60616, USA

3 Department of Mathematics, SNEC, University of Calcutta, Kolkata, 700009, India

4 Department of Computer Science, SNEC, University of Calcutta, Kolkata, 700009, India

* Corresponding Author: Himadri Nath Saha. Email:

Computers, Materials & Continua 2025, 85(1), 121-180. https://doi.org/10.32604/cmc.2025.065250

Received 07 March 2025; Accepted 24 June 2025; Issue published 29 August 2025

Abstract

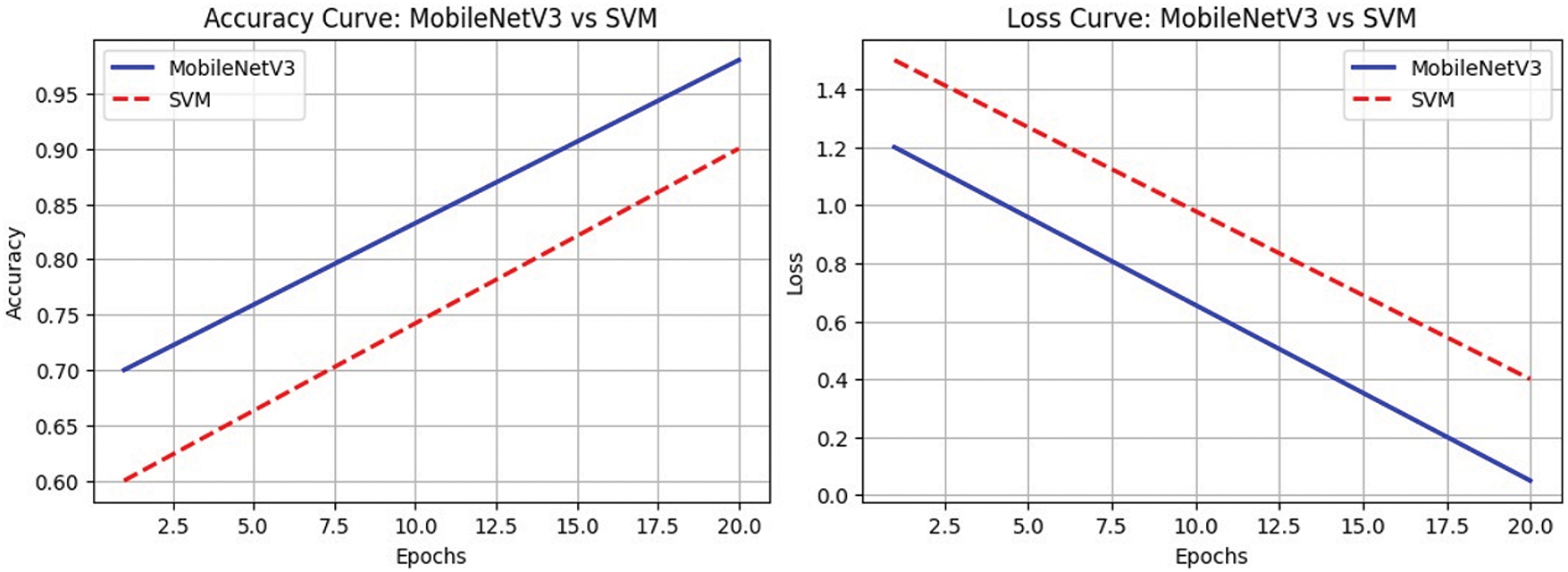

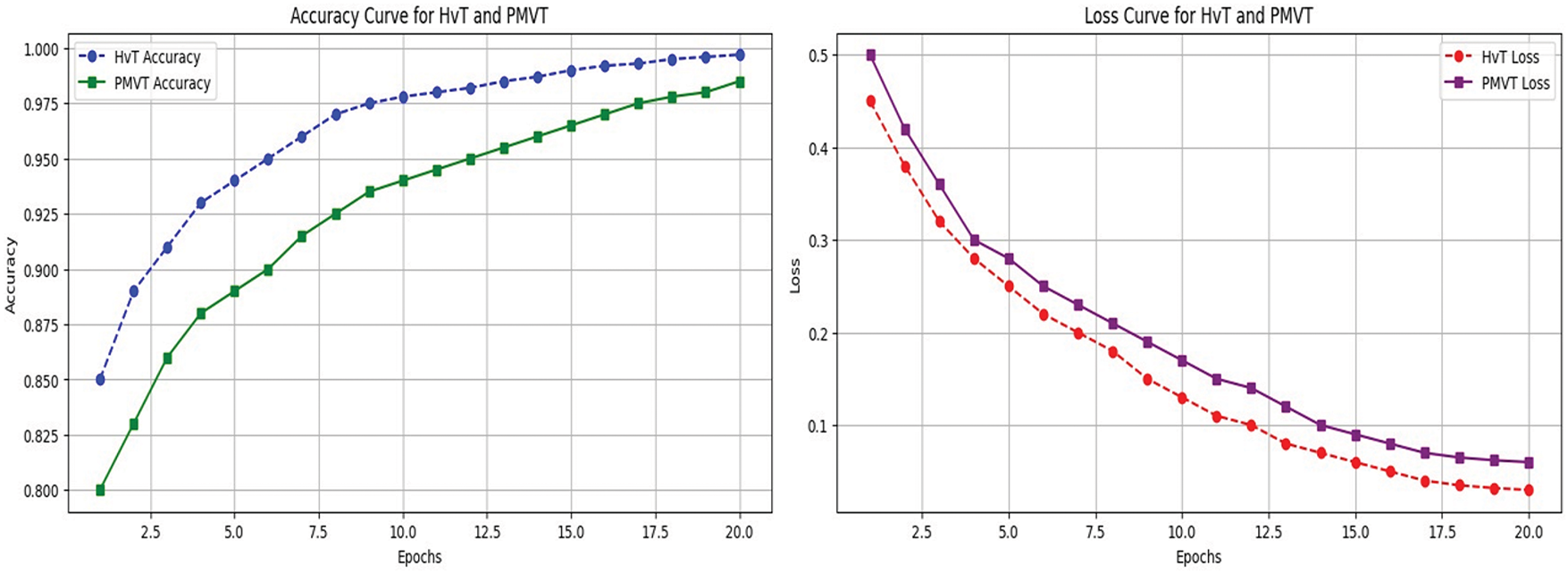

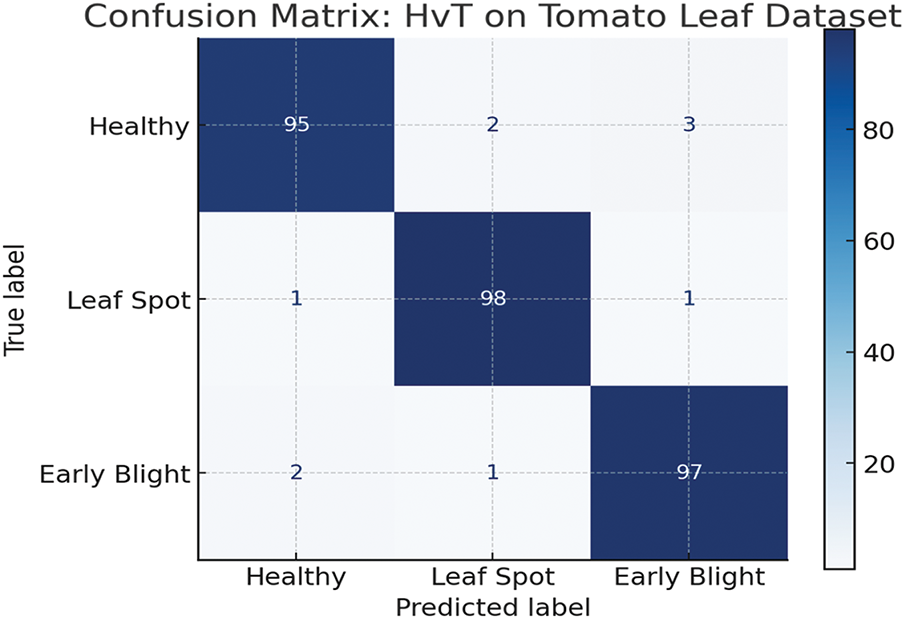

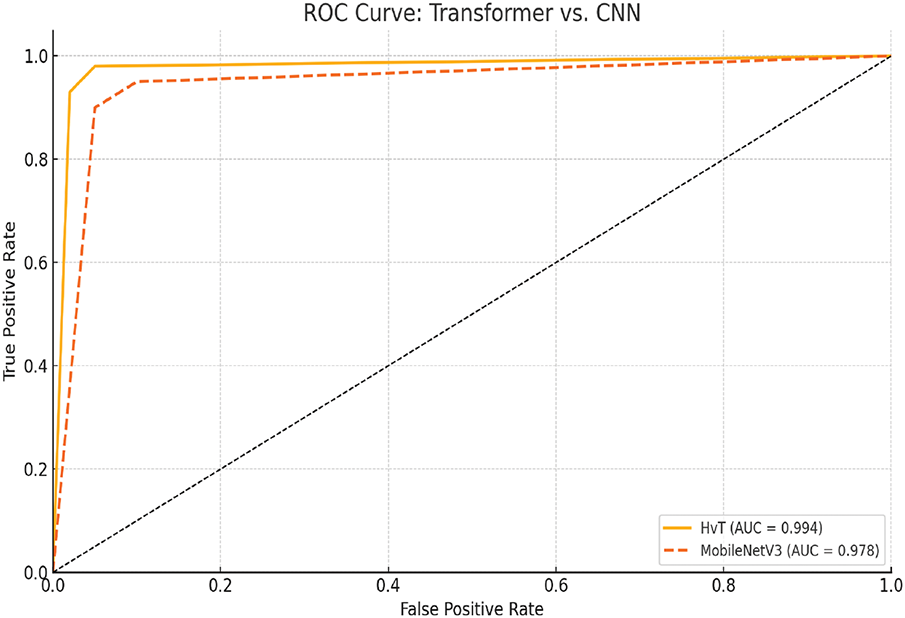

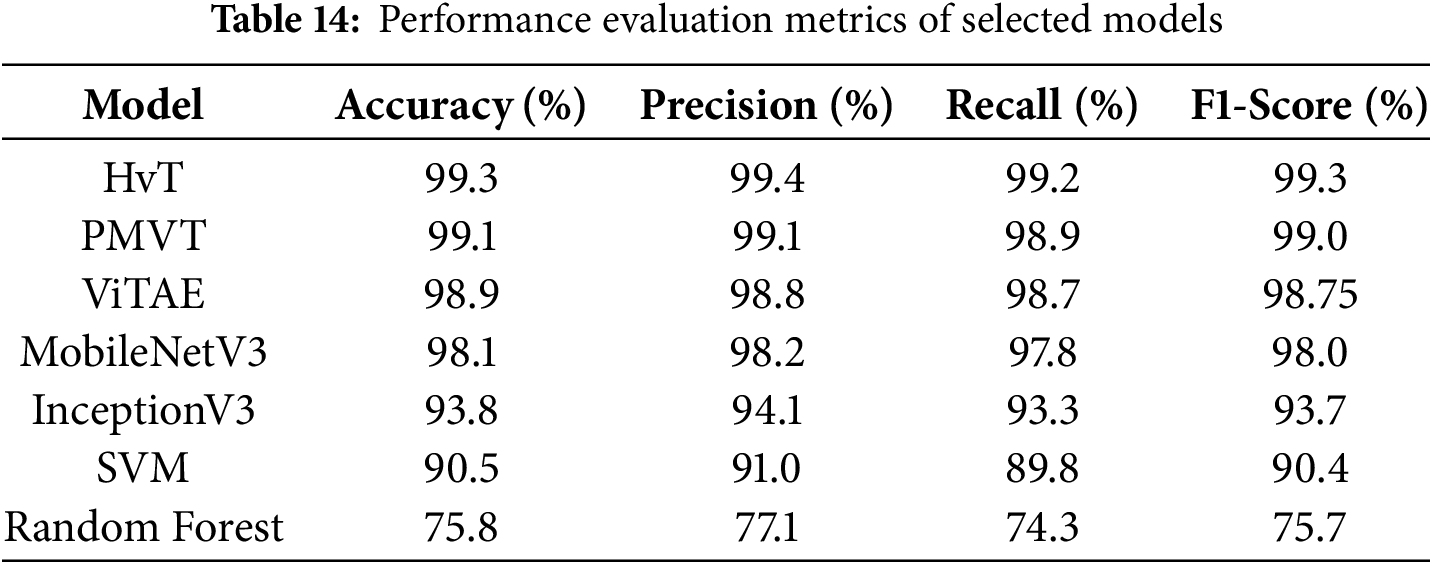

Addressing plant diseases and pests is crucial for enhancing crop production and preventing economic losses. Recent advancements in artificial intelligence, machine learning, and deep learning have revolutionised the precision and efficiency of this process, overcoming the limitations of manual identification. This study comprehensively reviews modern computer-based techniques, including recent advances in artificial intelligence, for detecting diseases and pests from images. This paper uniquely categorises methodologies into hyperspectral imaging, non-visualisation techniques, visualisation approaches, modified deep learning architectures, and transformer models, helping researchers gain a detailed understanding of each category. The exhaustive survey of recent works and comparative studies in this domain guides researchers in selecting appropriate state-of-the-art methods for plant disease and pest detection. Additionally, this paper highlights the consistent superiority of modern AI-based approaches, which often outperform older image analysis methods in terms of speed and accuracy. Further, this survey examines the efficiency of vision transformers against well-known deep learning architectures such as MobileNetV3, showing that the Hierarchical Vision Transformer (HvT) can achieve accuracy above 99.3% in plant disease detection. The study concludes by addressing the challenges of designing such systems, proposing potential solutions, and outlining directions for future research in this field.

1 Introduction

By 2050, the world’s population is predicted to reach 9.1 billion [1]; to meet the food needs of this rapidly expanding population, agricultural output must increase by around 70%. According to the Food and Agriculture Organization (FAO), plant pests and diseases cause the loss of up to 40% of worldwide crop production each year, negatively affecting food security, rural livelihoods, and agricultural trade [2]. Early identification and control of diseases and pests are therefore agronomic requirements and vital facilitators of food system resilience. Emerging technologies such as artificial intelligence and deep learning directly support global food security goals by providing scalable and precise solutions that enable prompt intervention, reduced chemical use, and sustainable agricultural practices [2–6].

Most plant diseases (around 85%) are caused by fungal or fungal-like organisms. Most of the remaining diseases are linked to bacterial or viral pathogens, and only a small number are caused by certain worms [7]. According to recent research, pathogen-induced stress significantly alters chloroplast function and reduces photosynthetic efficiency, resulting in slower plant development and lower potential yield [8]. Wheat rust diseases, which include stem rust (“Puccinia graminis”), stripe rust (“P. striiformis”), and leaf rust (“P. triticina”), are among the most damaging fungal threats to global food security. Stripe rust alone can cause total crop failure under conditions favourable to the disease, and these rusts can cause catastrophic crop losses, with yearly economic costs estimated at US $5 billion worldwide [9]. Pests also cause considerable losses in agricultural production [4], as insects often feed on leaves and disrupt photosynthesis. Furthermore, pests serve as vectors for various diseases, contributing to significant crop losses worldwide [3]. For example, the bug “L. hesperus” severely damages cotton crops and is therefore a common target of pesticide applications [10,11].

“Smart farming” refers to farm management that uses contemporary information and communication technologies to maximize production and quality while minimizing labour requirements [12]; it is crucial for improving the yield, ecological impact, food safety, and resilience of agricultural production [13]. Smart farming enables continuous monitoring of plants to detect diseases and pests at an early stage, allowing timely interventions. When an infection is detected, smart farming systems can help farmers classify the disease or pest based on factors such as the plant and crop species, the age of the plants, and external conditions [14–16]. Based on the identified threats, such systems can recommend appropriate countermeasures, such as pesticides, fungicides, or herbicides, to mitigate these risks. As a result, farmers can act early and stop diseases and pests from spreading across large areas of their fields, while significantly reducing the use of toxic chemicals. For these reasons, fast, automated, accurate, and cost-effective approaches to identifying crop diseases and pests are of significant practical importance [17,18].

Approaches based on the extraction and analysis of plant image data, such as computer vision, machine learning (ML), and deep learning (DL), have successfully identified plant diseases and pests, often with both high accuracy and high speed. Rehman et al. achieved accuracies of 99.6% and above on apple, grape, peach, and cherry diseases using Darknet53 while proposing an improved swarm-based butterfly optimisation algorithm [19]. Khan et al. [20] achieved 97.2% accuracy in detecting apple diseases with their proposed feature extraction method. Hassan et al. proposed a novel deep learning model based on inception layers and residual connections, which was trained and tested on three plant disease datasets, obtaining 99.39% accuracy on the PlantVillage dataset, 99.66% on the rice disease dataset, and 76.59% on the cassava dataset [21]. The symptoms of pest attack or disease infection differ in shape, texture, colour, and size depending on the nature of the causal agent, such as pathogens, viruses, or fungi. Deep learning is used to assess image surface features such as texture, colour, and structure [22]. Machine learning, a branch of artificial intelligence, is increasingly used to mimic human cognitive processes such as perception, categorization, and judgment in complex, data-rich settings [23]. In particular, convolutional neural networks (CNNs) are frequently used in object identification and image classification applications [24–27]. These models can be trained on images of diseased plants gathered from public online sources, agricultural farms, and laboratories. Several image processing-based segmentation, feature extraction, and clustering methods are applied to input images for accurate disease and pest detection.
Some of the CNN models used in this domain include AlexNet [28], Inception-v3 [29], Inception-v4 [30], VGGNet [31], Microsoft ResNet [32], and DenseNet [33].
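The CNN architectures listed above all stack the same basic operations: convolution, a ReLU non-linearity, pooling, and (in many modern designs) global average pooling. The following pure-Python sketch illustrates these operations on a toy single-channel image; it does not reproduce any of the named architectures. The 6 × 6 image and the hand-crafted edge kernel are assumptions for illustration, since real models learn their kernels and operate on multi-channel tensors.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding), stride-1 2D cross-correlation, as computed by a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Element-wise ReLU: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; leftover rows/columns that do not fill a window are dropped."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def global_average_pool(fmap: Matrix) -> float:
    """Collapse a whole feature map into one number (its mean)."""
    values = [v for row in fmap for v in row]
    return sum(values) / len(values)


# Toy 6 x 6 grayscale patch: dark left half, bright right half (a vertical edge).
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
# Hand-crafted vertical-edge kernel; a trained CNN learns many such kernels.
kernel = [[-1.0, 0.0, 1.0] for _ in range(3)]

feature_map = relu(conv2d(image, kernel))      # 4 x 4, every row [0, 3, 3, 0]
pooled = max_pool(feature_map)                 # [[3, 3], [3, 3]]
descriptor = global_average_pool(feature_map)  # 1.5
```

The strong responses appear only in the columns where the window straddles the edge, which is the same mechanism that lets learned kernels respond to lesion borders or texture changes on a leaf.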

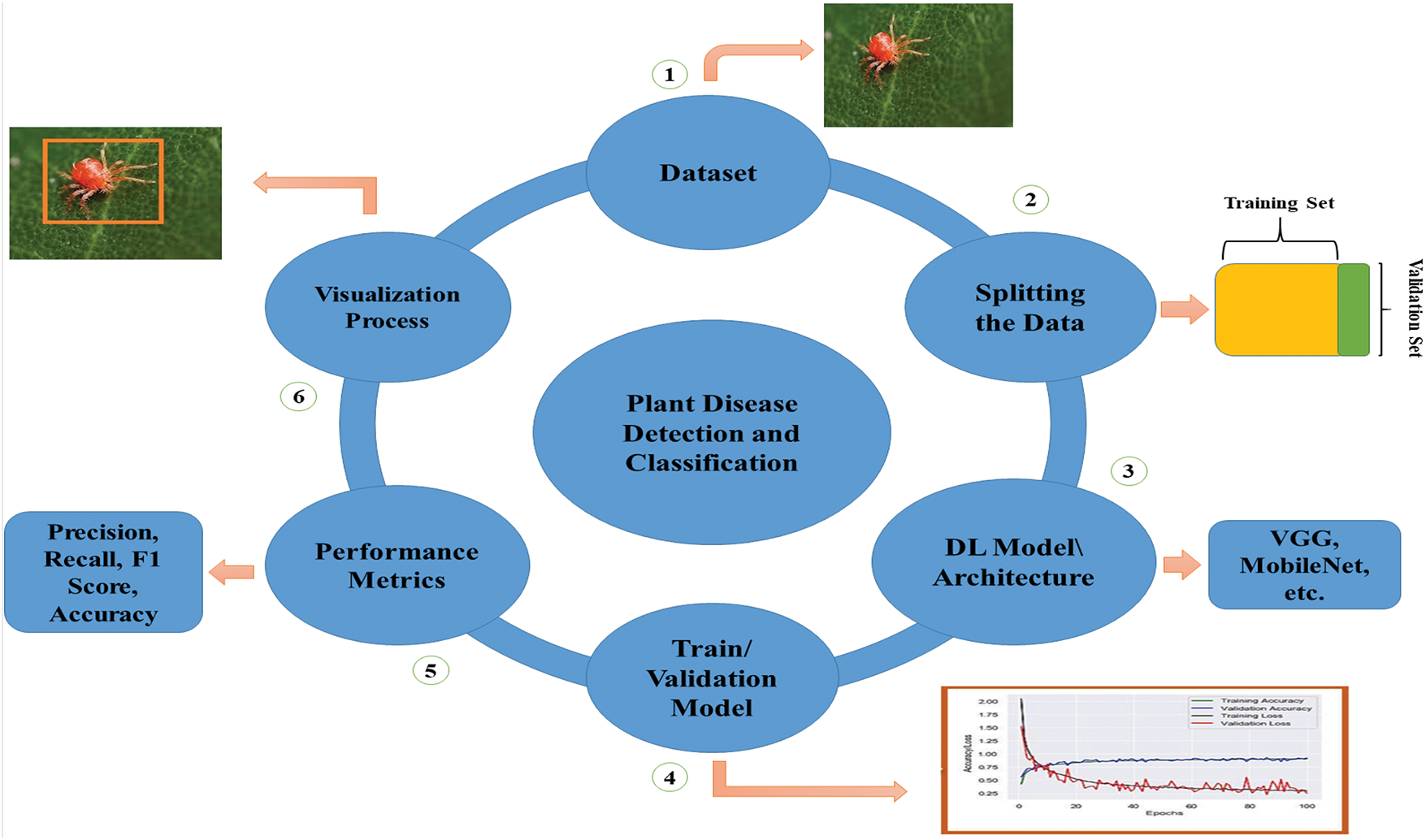

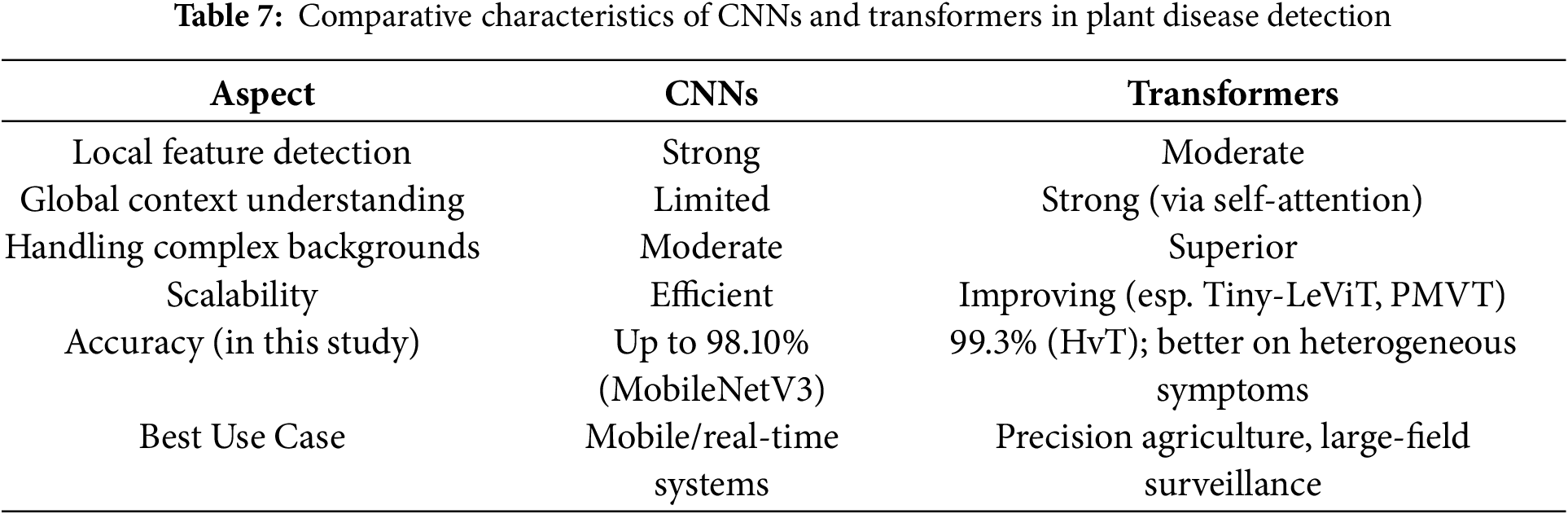

Our review paper uniquely categorises and evaluates methodologies for identifying plant pests and diseases using deep learning models. Unlike existing literature, which often consolidates various methods into a single section, this structured categorization allows researchers to efficiently identify and compare the deep learning techniques most suitable for their specific pest and disease detection objectives. Additionally, we examine modified deep learning and transformer models, incorporating the latest research to reflect current advancements in the field. We evaluated vision transformers against well-established models on the tomato leaf dataset from PlantVillage [34]; the results demonstrated that vision transformers perform better than convolutional neural network (CNN) architectures. This analysis provides insights that can guide researchers in selecting suitable methods and advancing the field. Fig. 1 shows a general flow diagram for implementing deep learning models to detect and visualize plant diseases and pests. The process begins with dataset acquisition, followed by division of the dataset into two parts (typically training and testing subsets). The deep learning architectures are then trained, either from scratch or via transfer learning, and training/validation graphs are used to assess the models. The following stage applies assessment metrics tailored for image classification tasks, particularly for identifying pest and plant disease varieties. Finally, mapping and visualization strategies are applied to support image classification, localization, and identification. This visual depiction illustrates the sequential workflow of a deep learning model, giving readers a clear understanding of its structure, implementation procedure, and general operation.
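The dataset-division step of this workflow is often done with a stratified split, so that rare disease classes appear in both subsets. The sketch below is a minimal pure-Python version under stated assumptions: the 80/20 ratio, the fixed seed, and the class names are hypothetical choices for illustration, not settings taken from the works reviewed here.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(
    labels: Sequence[str], test_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Split sample indices into train/test lists, keeping each class's
    share roughly equal in both subsets."""
    by_class: Dict[str, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # fixed seed -> reproducible split
    train: List[int] = []
    test: List[int] = []
    for label in sorted(by_class):
        indices = list(by_class[label])
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


# Hypothetical labels for 15 tomato-leaf images.
labels = ["healthy"] * 10 + ["late_blight"] * 5
train_idx, test_idx = stratified_split(labels, test_fraction=0.2)
# 2 healthy + 1 late_blight image go to the test set; the other 12 are for training.
```

Splitting per class rather than over the whole list avoids the case where a small class ends up entirely in one subset.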

Figure 1: A comprehensive flow diagram illustrating the developmental stages of a typical deep learning model

This survey work offers several contributions to plant pest and disease detection:

• Different types of traditional and modified deep learning architectures for identifying and classifying pests and diseases of plants are described.

• CNN models for plant pest and disease detection are compared, helping researchers select an appropriate deep learning model for realistic, real-world plant pest and disease identification.

• Visualization methodologies show the localization of infected spots on plants and can provide farmers with the necessary prevention suggestions about the pest or disease, without requiring the involvement of agricultural professionals.

• An overview of hyperspectral imaging with deep learning methodologies is provided, along with its applications in both laboratory and field settings for classifying and identifying the early stages of pest attacks and disease lesions in plants.

• The paper consolidates the advantages and limitations of the techniques above, providing users with a comprehensive understanding to inform their selection of the most appropriate methodology for their specific applications.

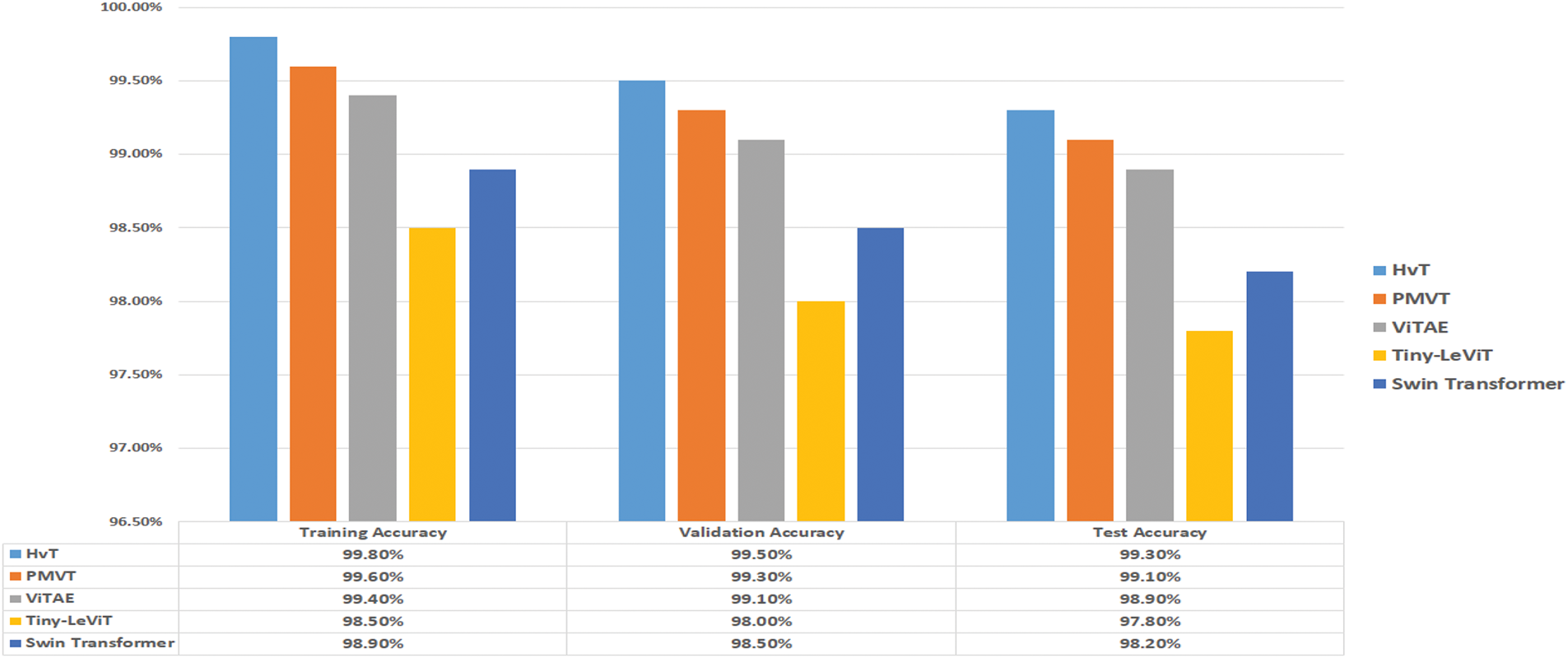

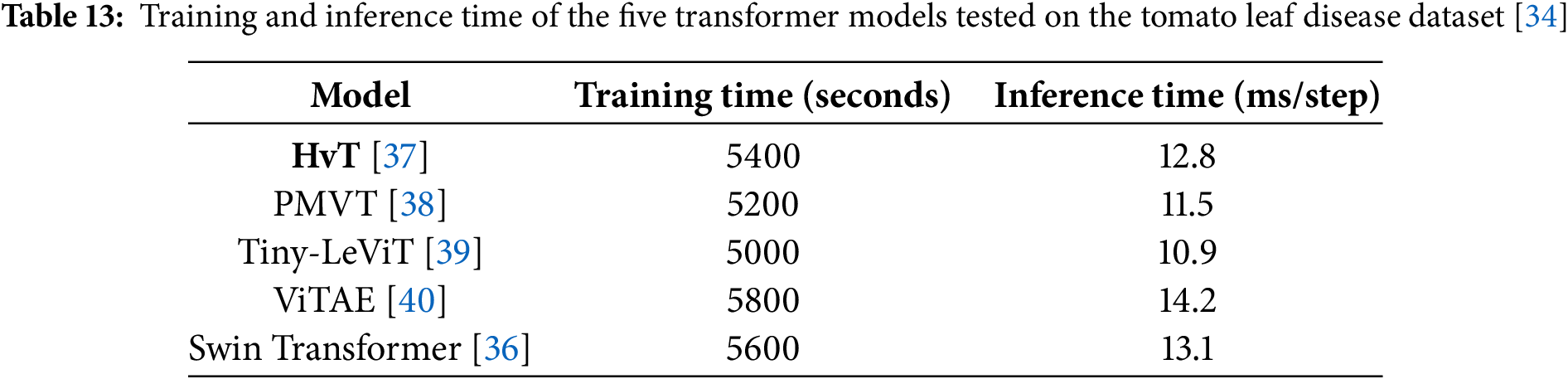

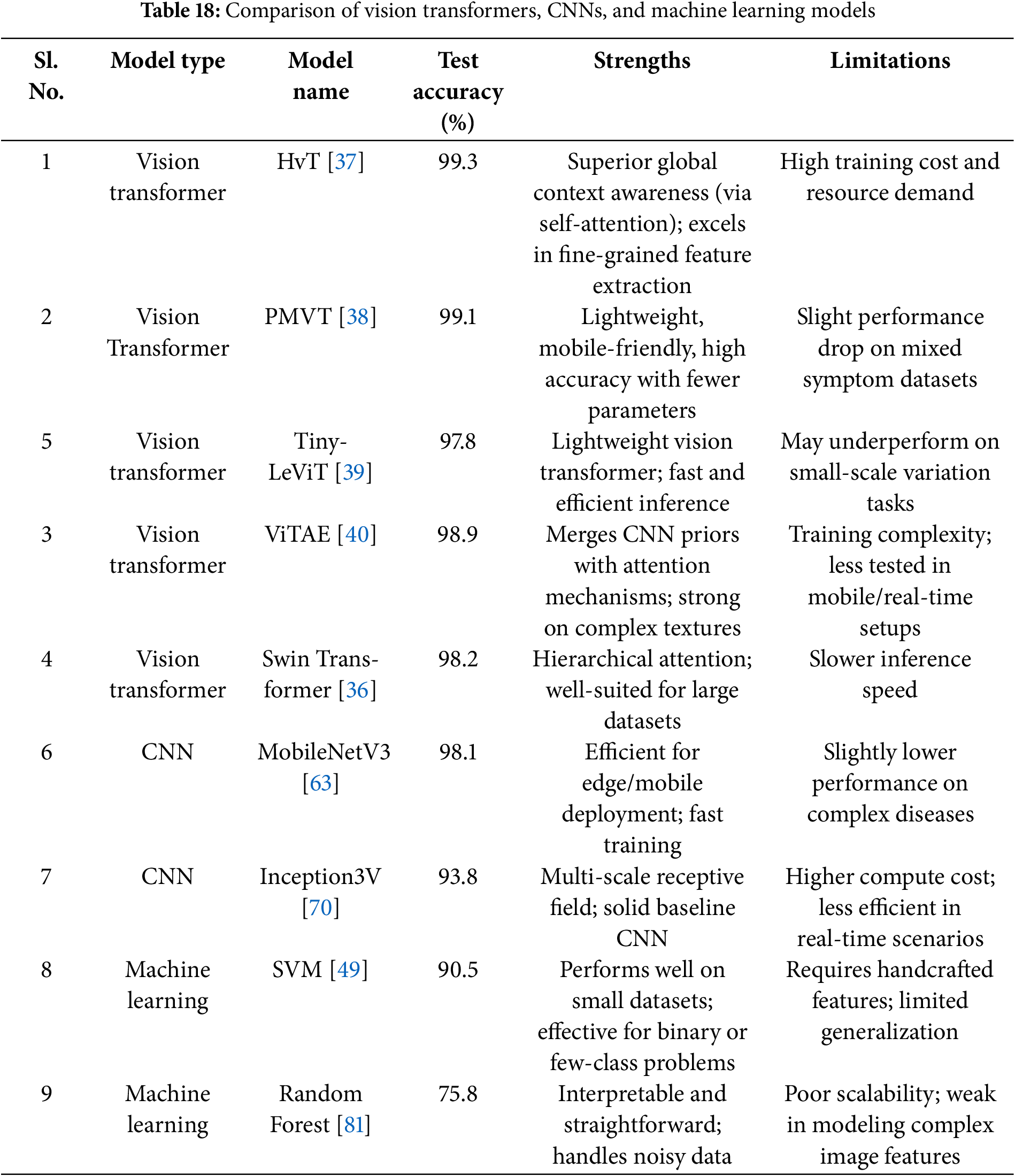

• This manuscript highlights the architecture of Vision Transformer (ViT) models and their suitability for visual identification tasks [35]. The performance of the transformer-based architectures Swin Transformer [36], HvT [37], PMVT [38], Tiny-LeViT [39], and ViTAE [40] is evaluated on the Tomato Leaf dataset from PlantVillage [34]. The results demonstrated that the vision transformers performed on par with or better than machine learning and CNN models in test and validation accuracy.

• Machine learning, deep learning, and vision transformers are analyzed quantitatively in terms of precision, computing efficiency, and visualization capabilities.

• The scope of future research on pest and disease identification in agriculture, including potential improvements, general limitations, and challenges, is summarized.
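The transformer models mentioned above begin by turning an image into a sequence of patch tokens. A minimal pure-Python sketch of that first step in the basic ViT design follows. The 224 × 224 input and 16 × 16 patch size are common ViT settings used here as an assumption, not the configuration of this survey's experiments; the learned linear projection, position embeddings, and the hierarchical patch merging used by models such as Swin Transformer are omitted.

```python
from typing import List

Image = List[List[List[float]]]  # height x width x channels


def num_patches(height: int, width: int, patch: int) -> int:
    """Number of non-overlapping patch tokens for a ViT-style encoder."""
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    return (height // patch) * (width // patch)


def patchify(image: Image, patch: int) -> List[List[float]]:
    """Flatten each patch x patch x C block (row-major order) into one token vector."""
    height, width = len(image), len(image[0])
    num_patches(height, width, patch)  # raises if the sizes do not divide evenly
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tokens.append([
                value
                for row in image[top:top + patch]
                for pixel in row[left:left + patch]
                for value in pixel
            ])
    return tokens


# A 224 x 224 input with 16 x 16 patches gives a sequence of 196 tokens.
n_tokens = num_patches(224, 224, 16)

# Toy 4 x 4 RGB image whose pixel values encode (row, col, 0).
toy = [[[float(r), float(c), 0.0] for c in range(4)] for r in range(4)]
tokens = patchify(toy, patch=2)  # 4 tokens, each of length 2 * 2 * 3 = 12
```

Because self-attention cost grows with the square of the token count, the patch size directly trades spatial detail against compute, which is one reason lightweight variants are attractive for field devices.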

2 Structural Design of the Survey Work

The bibliography-based analysis of plant pest and disease detection and classification involves two steps: (a) accumulation of existing related research works and (b) a comprehensive evaluation of these works. Journal articles and conference papers were collected from the scientific knowledge bases IEEE Xplore [41] and ScienceDirect [42] using the following search keywords: “agriculture”, “deep learning”, “plant pest and disease detection”, and “convolutional neural network”.

We selected papers that describe traditional and modified deep learning models applied to pest and disease detection in agriculture. We initially collected over a hundred papers covering all the methodologies relevant to this work. In the next phase, each selected paper was evaluated individually, focusing on characteristics such as accuracy and time, as well as the working principles of the technologies involved.

The rest of this paper is structured as follows:

• Section 3 outlines typical deep learning models with and without visualization techniques, other modified architectures, and state-of-the-art transformer models for identifying plant pests and diseases.

• Section 4 explains hyperspectral imaging with deep learning models.

• Section 5 offers the research challenges and possible solutions in this domain.

• Section 7 provides a nuanced comparison between traditional machine learning, CNNs and contemporary vision transformers.

• Section 8 concludes the paper and provides future recommendations for improving the visualization and identification of plant diseases and pests.

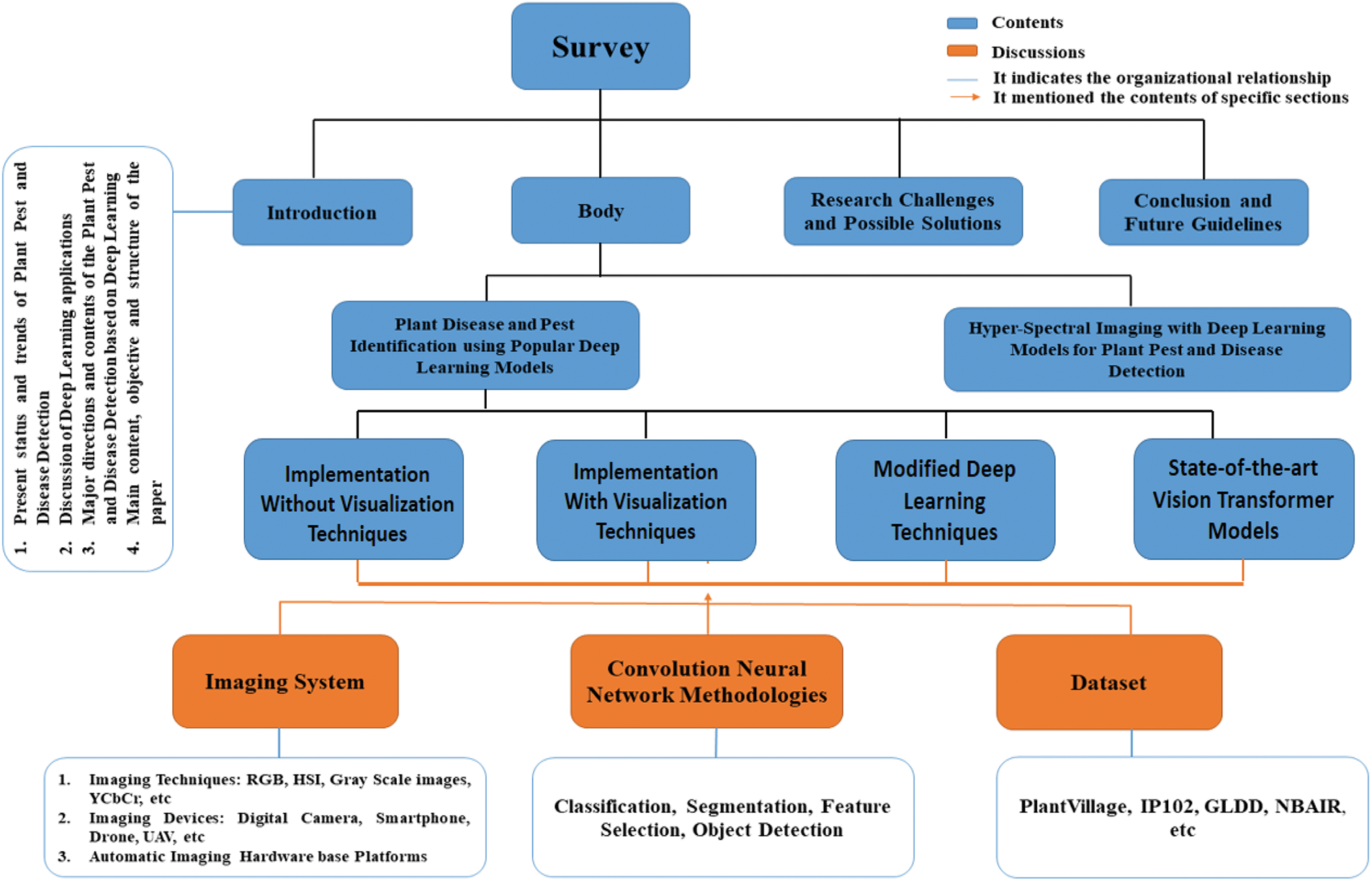

In Fig. 2, the organization of the paper is presented.

Figure 2: The organisation of the paper

3 Detection of Plant Disease and Pests Employing Popular Deep Learning Models

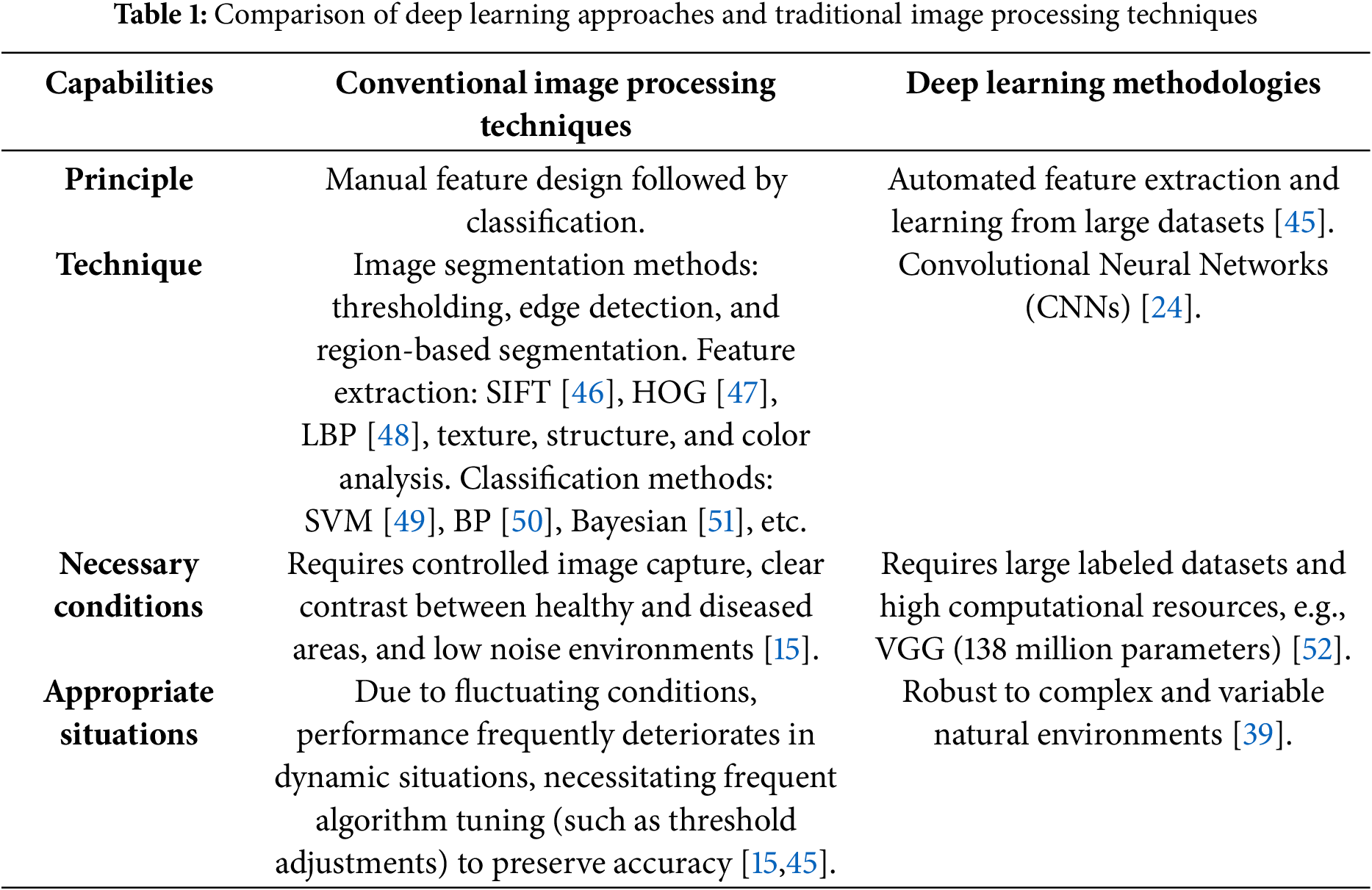

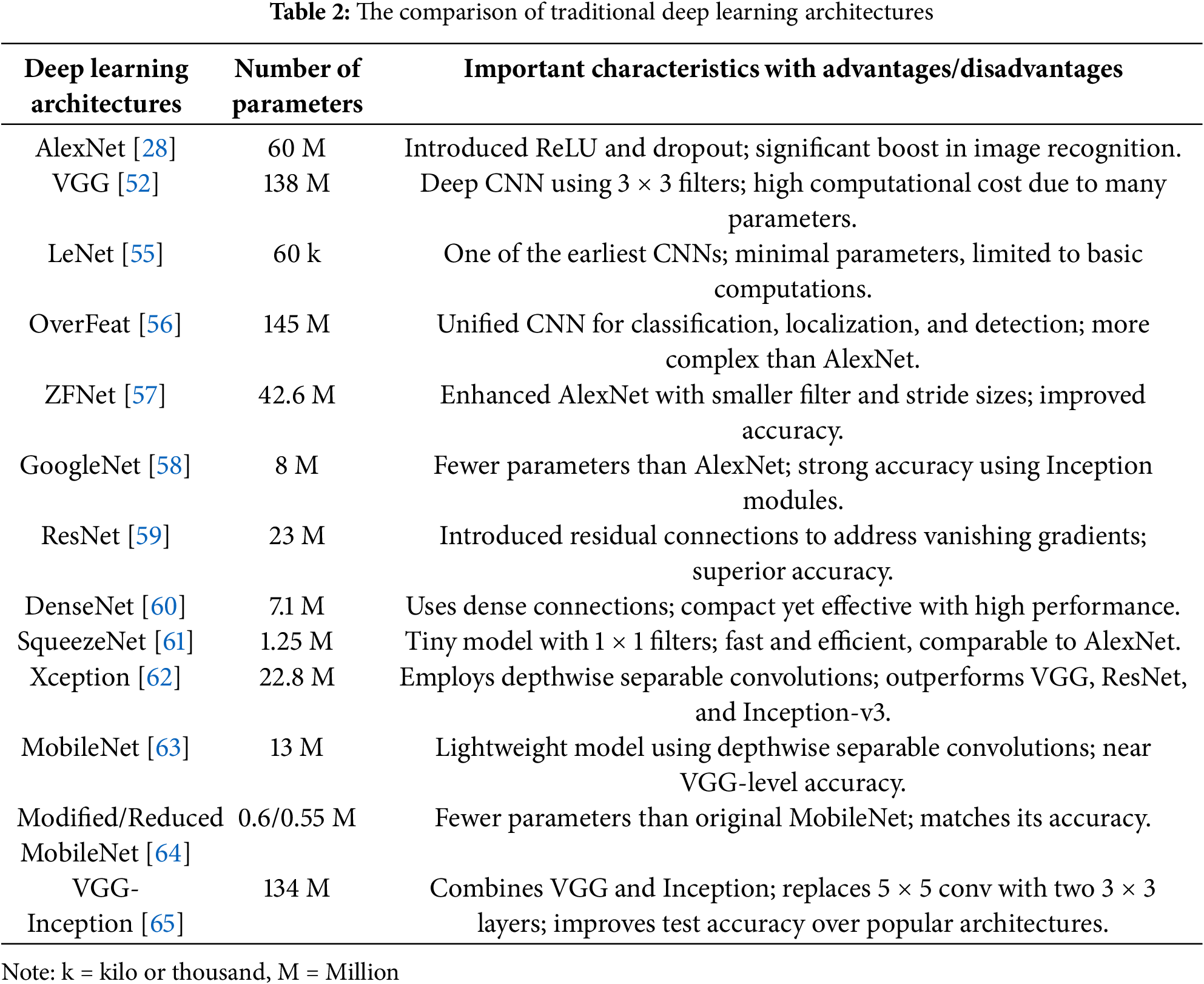

The concept of deep learning was introduced by Hinton et al. in 2006 [43]. Table 1 contrasts conventional image processing and machine learning approaches with deep learning methodologies. Many traditional deep learning architectures have been developed since the introduction of AlexNet [28]. For instance, Patel et al. demonstrated that state-of-the-art EfficientNet architectures achieve 98.3% disease classification accuracy while requiring 8.4 times fewer parameters than conventional CNNs [44].

Deep learning techniques are employed to identify, segment, and categorise images. This section covers well-known deep learning-based research efforts for plant disease and pest identification and classification in the agricultural domain. Notably, Lee and Wang recently showed that some transformer-based models can outperform CNNs in cross-species pest identification using attention mechanisms [53]. Additionally, these works apply various performance metrics to evaluate the designated deep learning models, as illustrated in Section 3.1.
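The most common of these metrics (accuracy, precision, recall, and F1-score) follow directly from counts of correct and incorrect predictions. The pure-Python sketch below computes them for one "positive" disease class; the class names and predictions are hypothetical toy data, and individual works may average per-class scores differently (e.g., macro or weighted averaging).

```python
from typing import Dict, Sequence


def classification_metrics(
    y_true: Sequence[str], y_pred: Sequence[str], positive: str
) -> Dict[str, float]:
    """Accuracy over all classes plus precision/recall/F1 for one positive class."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false alarms
    fn = sum(t == positive and p != positive for t, p in pairs)  # missed cases
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical ground truth and predictions for five leaf images.
y_true = ["rust", "rust", "healthy", "healthy", "rust"]
y_pred = ["rust", "healthy", "healthy", "rust", "rust"]
metrics = classification_metrics(y_true, y_pred, positive="rust")
# accuracy = 3/5 = 0.6; precision = recall = f1 = 2/3 for the "rust" class
```

Reporting precision and recall alongside accuracy matters on imbalanced plant datasets, where a model can score high accuracy while missing most samples of a rare disease.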

3.1 Implementation of Deep Learning Models

Understanding the decision-making processes of deep learning architectures is essential. Based on the properties of plant images, we organize the reviewed studies into two approaches. First, we discuss deep learning architectures with and without visualisation techniques. Next, we present modified deep learning architectures for plant pest and disease identification. Table 2 briefly explains the categories of deep learning models frequently employed to recognise and categorise plant diseases and pests.

3.1.1 Without Visualization Technique

The classification of diseases affecting the maize plants was performed using a CNN, and the model’s usefulness was determined using histogram approaches [54].

The extraction of pertinent visual features enabled by this technique significantly improved categorization accuracy. Additionally, the model showed consistent performance across a range of imaging conditions, indicating that it is robust enough for field-level applications. Tomato plants are highly vulnerable to a variety of diseases that can reduce their quality and yield. To detect various tomato plant diseases, the standard CNN architectures “AlexNet”, “ResNet”, and “GoogleNet” were applied in [66]; according to the experimental results, “ResNet” performed better than the other CNN models. In [67], the “LeNet” architecture identified banana leaf diseases with 92.88% accuracy on RGB images and 85.94% on grayscale images. Different deep learning models, including “VGG”, “AlexNet”, “AlexNetOWTbn”, “GoogLeNet”, and “Overfeat”, were applied to recognise plant diseases and lesions [68]. In [69], eight different plant diseases were identified using machine learning classifiers, including “SVM”, “KNN”, and “Extreme Learning Machine (ELM)”, as well as deep learning architectures such as “GoogleNet”, “ResNet-50”, “InceptionResNetv2”, and “SqueezeNet”. Among these, “ResNet-50” combined with the “Support Vector Machine” classifier outperformed the other trained models.

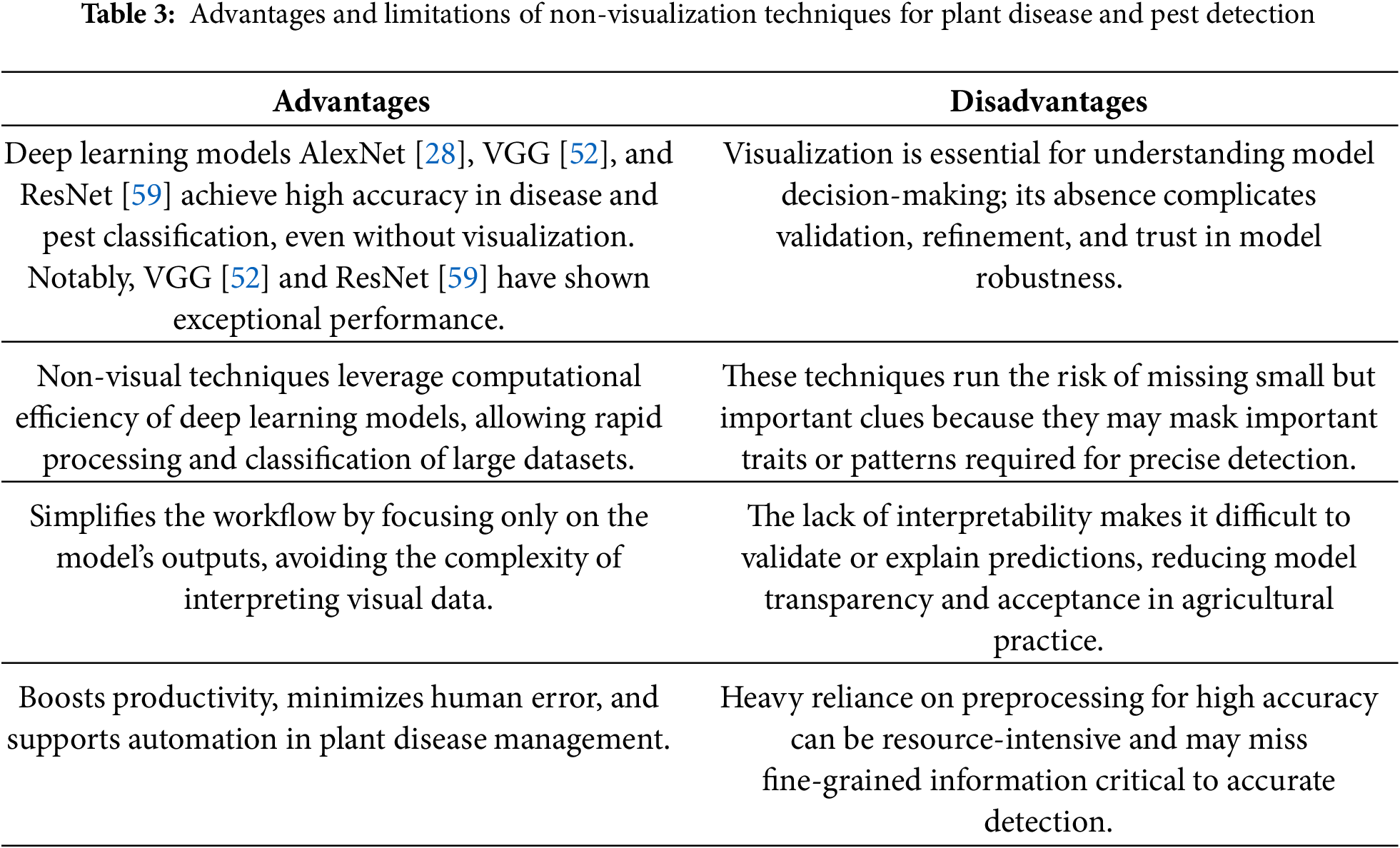

In [70], three diseases and two pest infections in a cassava leaf dataset were identified by applying transfer learning with the “Inception-v3” architecture. In [71], the authors’ own convolutional neural network and a 4-fold cross-validation approach were used to construct an autonomous and reliable plant disease identification model, which achieved an average precision of 82.3%. In [72], four potato plant diseases were categorised using the VGG16 and VGG19 models; VGG16 obtained an average accuracy of 91%, slightly higher than the 90% average achieved by VGG19. In [73], four tomato plant diseases were identified and categorised using a hybrid of a CNN and the “Learning Vector Quantization (LVQ)” technique, with an average classification accuracy of 86%. In [74], a comparative study was presented to select the most suitable deep learning architecture for identifying plant diseases. Furthermore, in [75], the PlantVillage dataset was used to train “VGG16” and “AlexNet”, which attained classification accuracies of 97.29% and 97.49%, respectively, on the healthy class and six leaf-disease classes of tomato plants. None of the methodologies mentioned above uses visualisation methods such as Grad-CAM or Score-CAM to localize plant disease infections and pest lesions. Table 3 summarises the key advantages and limitations of non-visualisation techniques in deep learning models for plant disease and pest detection.
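The 4-fold cross-validation used in [71] trains and evaluates a model k times, each time holding out a different fold. A minimal pure-Python sketch of the index bookkeeping follows; it assumes the samples were shuffled beforehand and does not reproduce the exact protocol of [71], which is not detailed here.

```python
from typing import Iterator, List, Tuple


def k_fold_indices(n_samples: int, k: int = 4) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, validation) index lists for k contiguous folds.

    Assumes the samples were shuffled beforehand; fold sizes differ by at
    most one when n_samples is not divisible by k.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("need 2 <= k <= n_samples")
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size


folds = list(k_fold_indices(10, k=4))  # validation fold sizes: 3, 3, 2, 2
```

In use, a fresh model is trained on each `train` list and scored on the matching `val` list, and the reported figure (such as an average precision) is the mean over the k folds, so every sample is used for validation exactly once.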

3.1.2 With Visualization Technique

Several deep learning architectures have been used to better visualize and understand plant pest and disease symptoms. Saliency maps [76] and occlusion approaches [77] have highlighted disease-affected areas, making them easier for farmers to interpret. In [78], an Ultra-Lightweight Efficient Network (ULEN) outperformed SVM with a classification accuracy of 96.30%. Nine tomato leaf diseases were classified using ResNet-50, Xception, MobileNet, ShuffleNet, and DenseNet121_Xception in [79], with early convolution layers successfully identifying the affected areas. In [80], an enhanced LeNet used edge mapping to localize olive plant diseases. Reference [81] proposed a deep model for cucumber plants that outperformed SVM, RF, and AlexNet in accuracy. When object detection models such as SSD, YOLOv4, and Faster R-CNN were tested for localizing scale pests, YOLOv4 produced the most favourable results [82]. In [83], a novel teacher-student framework enabled region-level visualization and precise lesion identification.

The percentage of plant regions affected by disease has been accurately determined using deep learning models combined with different detectors [84]. Combinations of Faster R-CNN, R-FCN, and SSD with backbone networks including AlexNet, VGG, ZFNet, ResNet-50, and ResNeXt-101 were tested, and the most efficient results were obtained with R-FCN + ResNet-50. The use of bounding boxes allowed for accurate disease localization. In [85], banana leaf diseases were identified using ResNet-50, Inception-V2, and MobileNet-V1 combined with Faster R-CNN and SSD. Similarly, a CNN-based pipeline for maize detected northern leaf blight (NLB) lesions, which were annotated using CyVerse tools and evaluated using receiver operating characteristic (ROC) curves [67]. Accurate in-situ detection of rice leaf disease was made possible by Faster R-CNN with an improved Region Proposal Network (RPN) architecture, which produced high-quality regions of interest [86].
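Bounding-box detectors such as Faster R-CNN, R-FCN, and SSD are usually scored by Intersection over Union (IoU), the overlap between a predicted box and an annotated lesion box divided by the area of their union. The pure-Python sketch below computes it; the box coordinates are hypothetical, and the 0.5 match threshold is a common evaluation convention assumed here rather than a value reported by the cited works.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned bounding boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


predicted = (0, 0, 10, 10)         # hypothetical detector output
annotated = (5, 5, 15, 15)         # hypothetical ground-truth lesion box
score = iou(predicted, annotated)  # 25 / 175, about 0.143
is_match = score >= 0.5            # below the assumed 0.5 threshold, so not a match
```

Metrics such as mAP are built on top of this test: a detection counts as a true positive only when its IoU with an unmatched ground-truth box exceeds the chosen threshold.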

Many deep learning models have been successfully used to detect pests and plant diseases. A VGG-based CNN and fully convolutional networks visualized wheat disorders [87], while LeNet correctly recognized soybean diseases [88]. In [89], radish regions were divided into healthy and Fusarium wilt-affected areas using the VGG model, with bacterial patches being identified using K-means clustering. Similar deep learning techniques were used to visualize plant symptoms in [90], and UNet and PSPNet with ResNet-50 were used to classify the severity and stress of coffee leaves based on disease spots [91]. An improved YOLOv3 adjusted its hidden neurons for pest detection using Adaptive Energy-based Harris Hawks Optimization (AE-HHO) [92]. Tiny YOLOv3 detected the locations of multi-class insects on sticky traps [93], while CNNs indicated areas of rice crops affected by infection [94].

To guarantee color stability, target spots were identified from segmented images using a hotspot-based technique with descriptors for bacterial color characteristics and scorch-spot textures [95]. In [96], field crop insects were classified using AlexNet, ResNet, GoogLeNet, and a proposed DCNN model, with the DCNN demonstrating good reliability. To efficiently identify and categorize multi-class pests, “PestNet”, a region-based end-to-end architecture first presented in [97], incorporates a Channel-Spatial Attention (CSA) unit, a Region Proposal Network (RPN), Fully Connected (FC) layers, and a Position-Sensitive Score Map (PSSM). A hybrid model combining ZFNet with an RPN and Non-Maximum Suppression (NMS) performed better than conventional models such as AlexNet and ResNet for localizing and counting insects [98]. In [99], fruit crop diseases were automatically segmented and recognized using visualization techniques based on VGG-16 and AlexNet. The real-time model “Faster DR-IACNN” successfully identified grape leaf diseases in [100], whereas LeNet, combined with color spaces and vegetation indices, successfully identified grape plant diseases in [101].
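Non-Maximum Suppression, used in [98] for localizing and counting insects, removes duplicate detections of the same object. The sketch below shows the standard greedy variant in pure Python; the exact NMS configuration of [98] is not given here, so the 0.5 IoU threshold, the boxes, and the scores are hypothetical, and counting insects as the number of surviving boxes is an illustrative simplification.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def _iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(
    boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5
) -> List[int]:
    """Greedy NMS: repeatedly keep the highest-scoring box and discard the
    remaining boxes that overlap it by more than iou_threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if _iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


# Two overlapping detections of the same insect and one separate insect.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = non_max_suppression(boxes, scores)  # [0, 2]; box 1 overlaps box 0 (IoU ~0.68)
insect_count = len(kept)                   # 2
```

Without this step, a detector that fires several times on one insect would inflate the count, which is why NMS matters for trap-counting applications.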

By combining dilated convolution and global pooling, the Global Pooling Dilated Convolutional Neural Network (GPDCNN) successfully recognized six prevalent cucumber leaf diseases in a different study [102].
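The benefit of combining dilated convolution with global pooling can be stated with standard receptive-field arithmetic; this is a general property of these operations, not a result reported in [102]. For a $k \times k$ kernel with dilation rate $d$, the effective kernel size grows while the number of weights stays $k^2$, and global average pooling reduces each $H \times W$ feature map $F_c$ to a single value $z_c$:

```latex
k_{\mathrm{eff}} = k + (k - 1)(d - 1), \qquad
k = 3:\; d = 1 \Rightarrow 3,\quad d = 2 \Rightarrow 5,\quad d = 3 \Rightarrow 7

z_c = \frac{1}{HW} \sum_{i=1}^{H} \sum_{j=1}^{W} F_c(i, j)
```

Larger effective kernels let the network see whole lesions at no extra parameter cost, and replacing large fully connected layers with global average pooling is a commonly cited way to reduce the parameter count.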

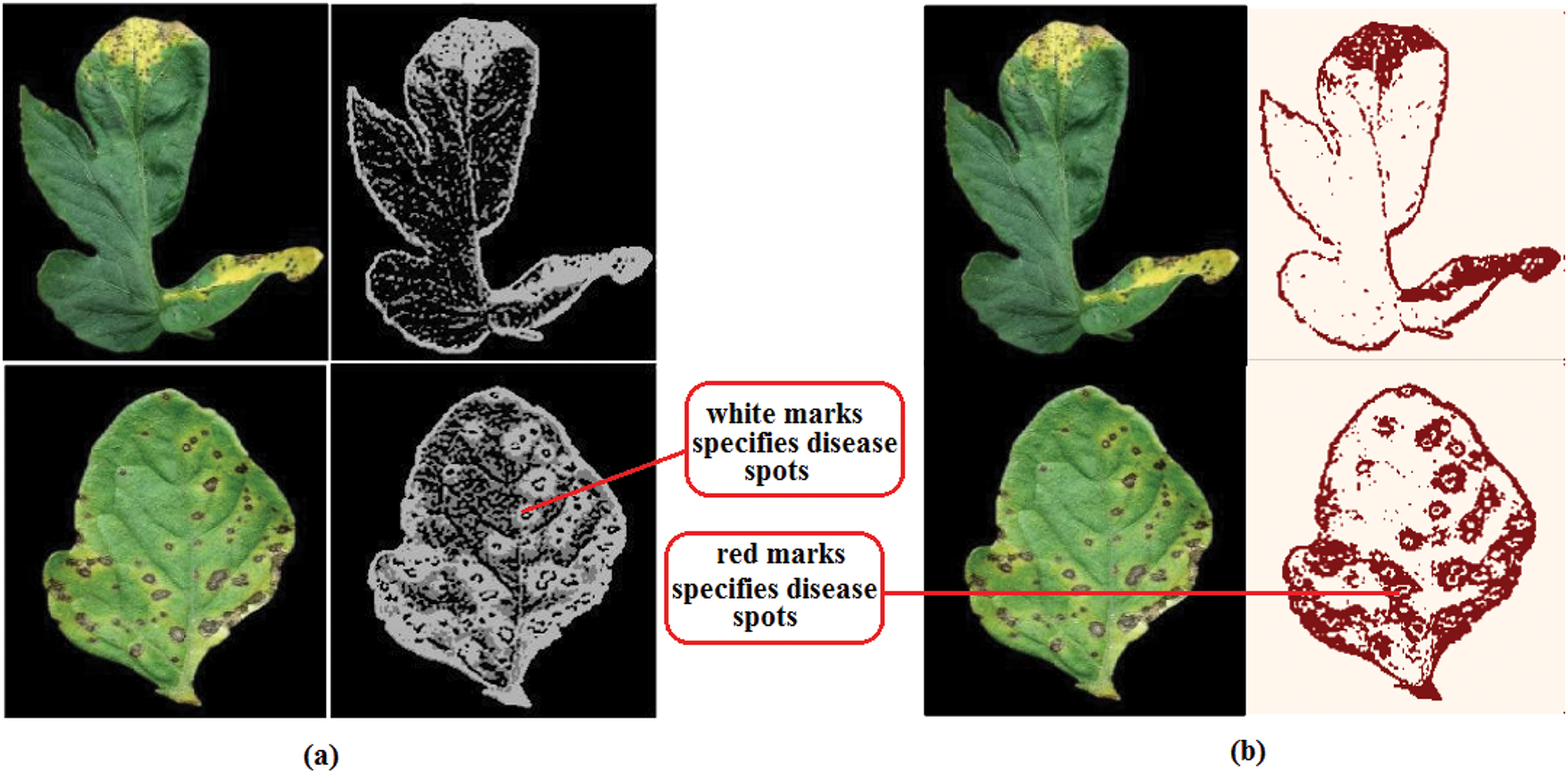

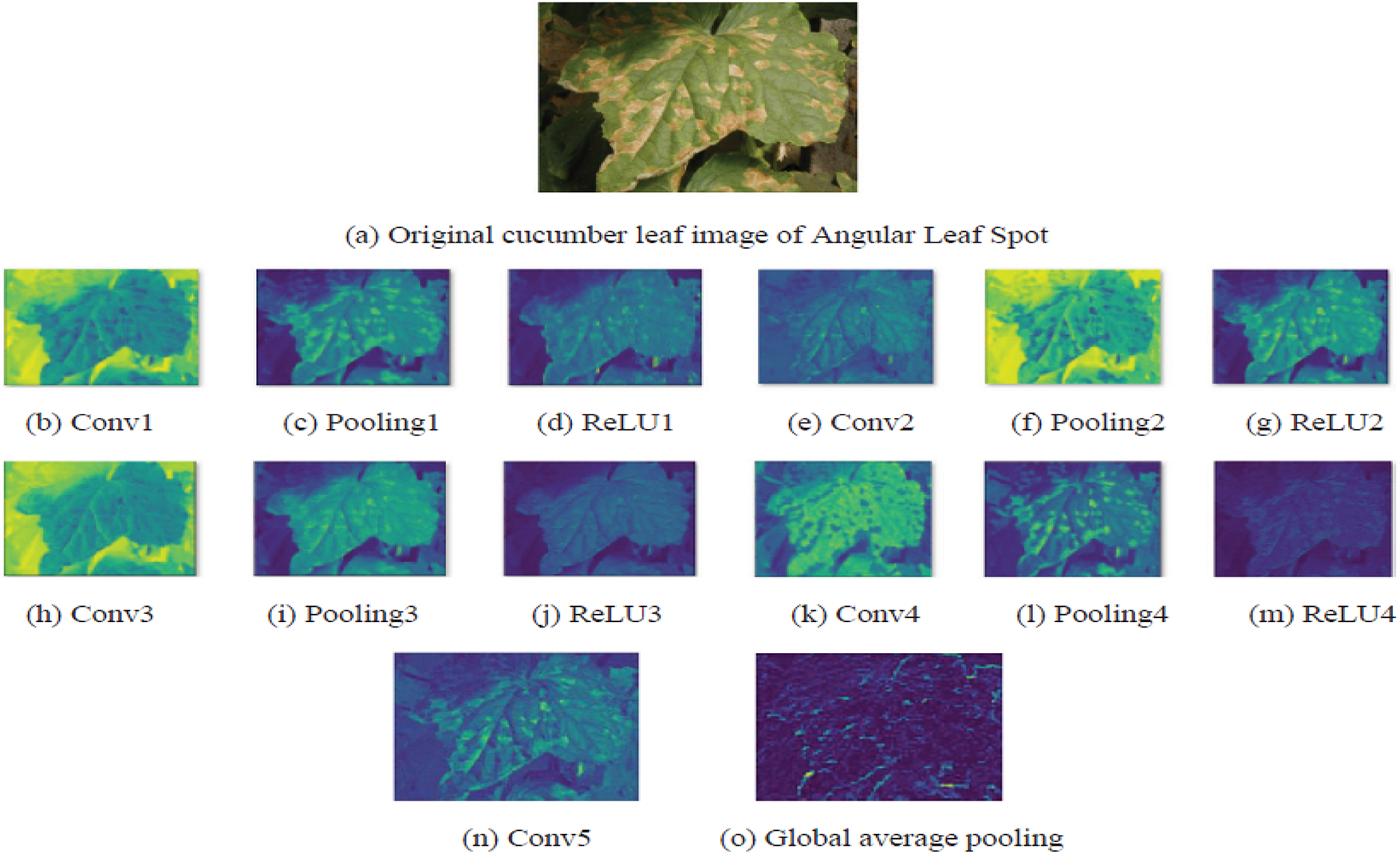

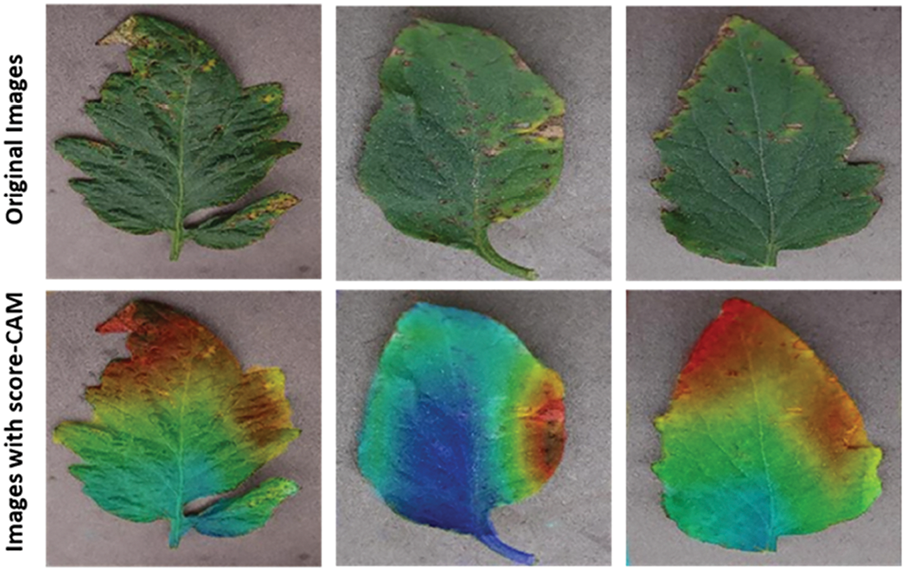

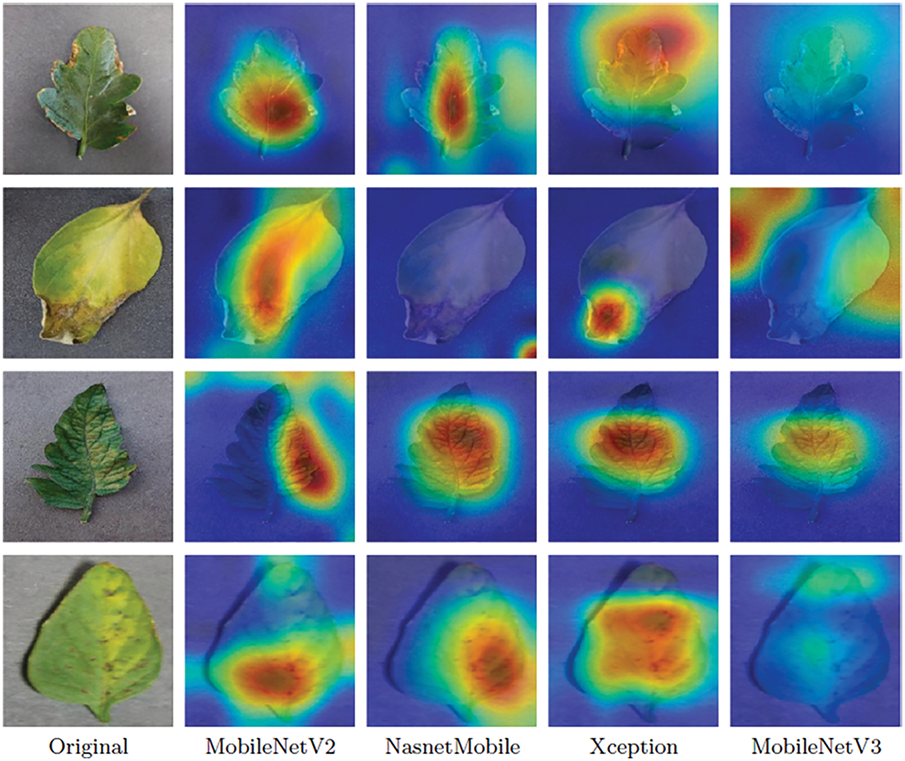

To assess how well deep learning architectures identify plant diseases and pests in practice, experimental observations must account for real-world conditions. Because most of the approaches covered above rely on datasets gathered under controlled or typical environmental conditions [50,65–67,77–80,94], their application to practical disease detection and classification is limited. Only a small number of studies have explicitly addressed real-time field conditions [66,69,70,84,87,89,102]. The visualization techniques of several of these works are depicted in Figs. 3–11.

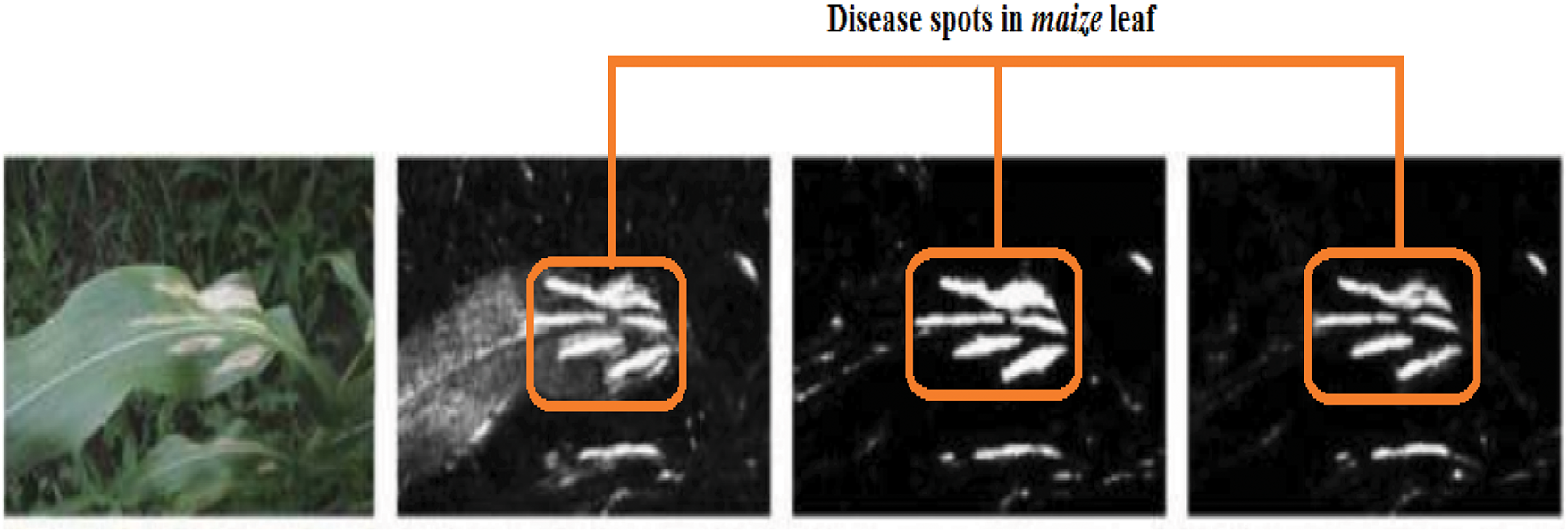

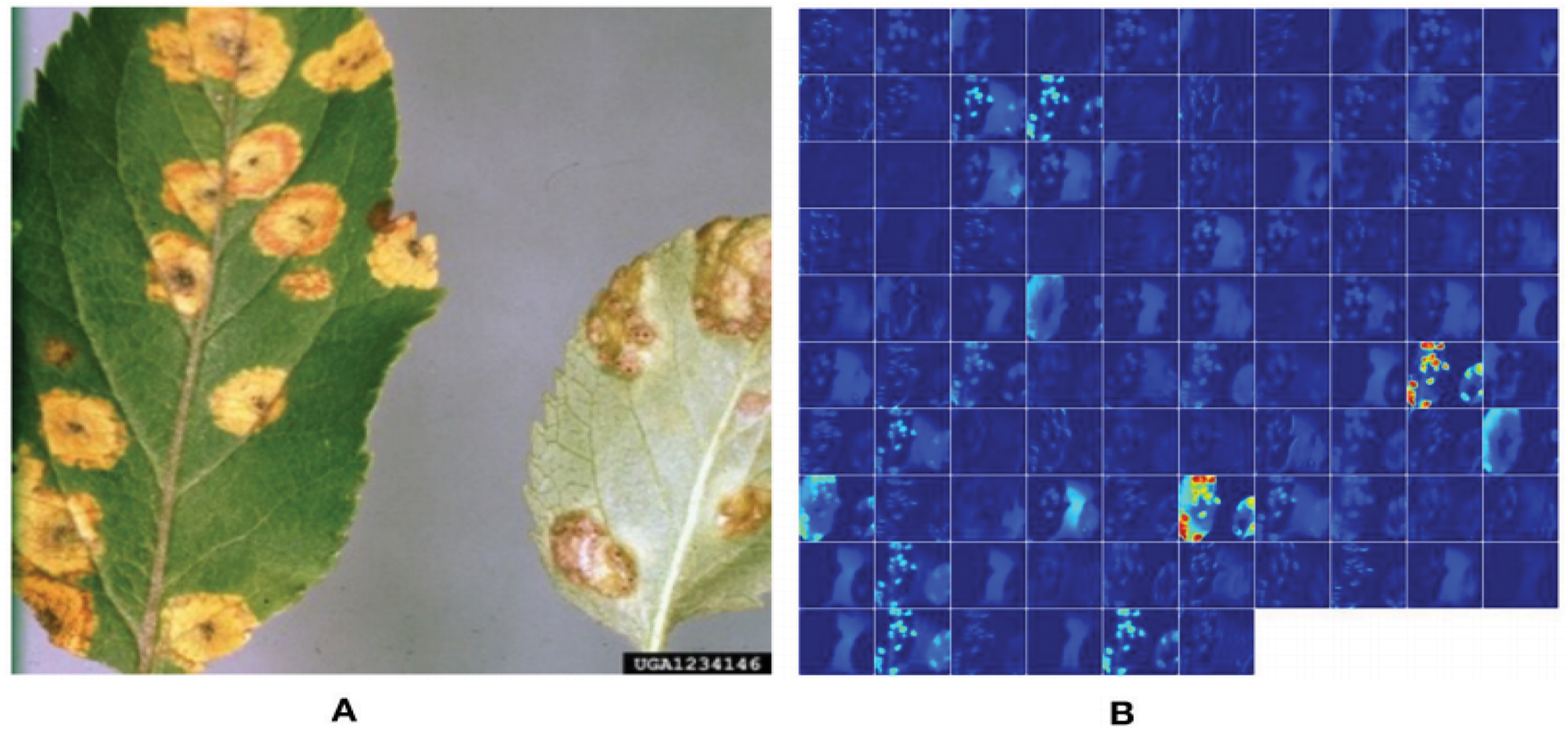

Figure 3: Identification of maize disease (specified by orange rectangles) by heat map [45]

Figure 4: Activations in the first layers of an AlexNet model, showing that it has learned to respond strongly to the affected areas of the target leaf image of “Apple Cedar Rust” (a deep blue circle indicates the diseased patches) [79]

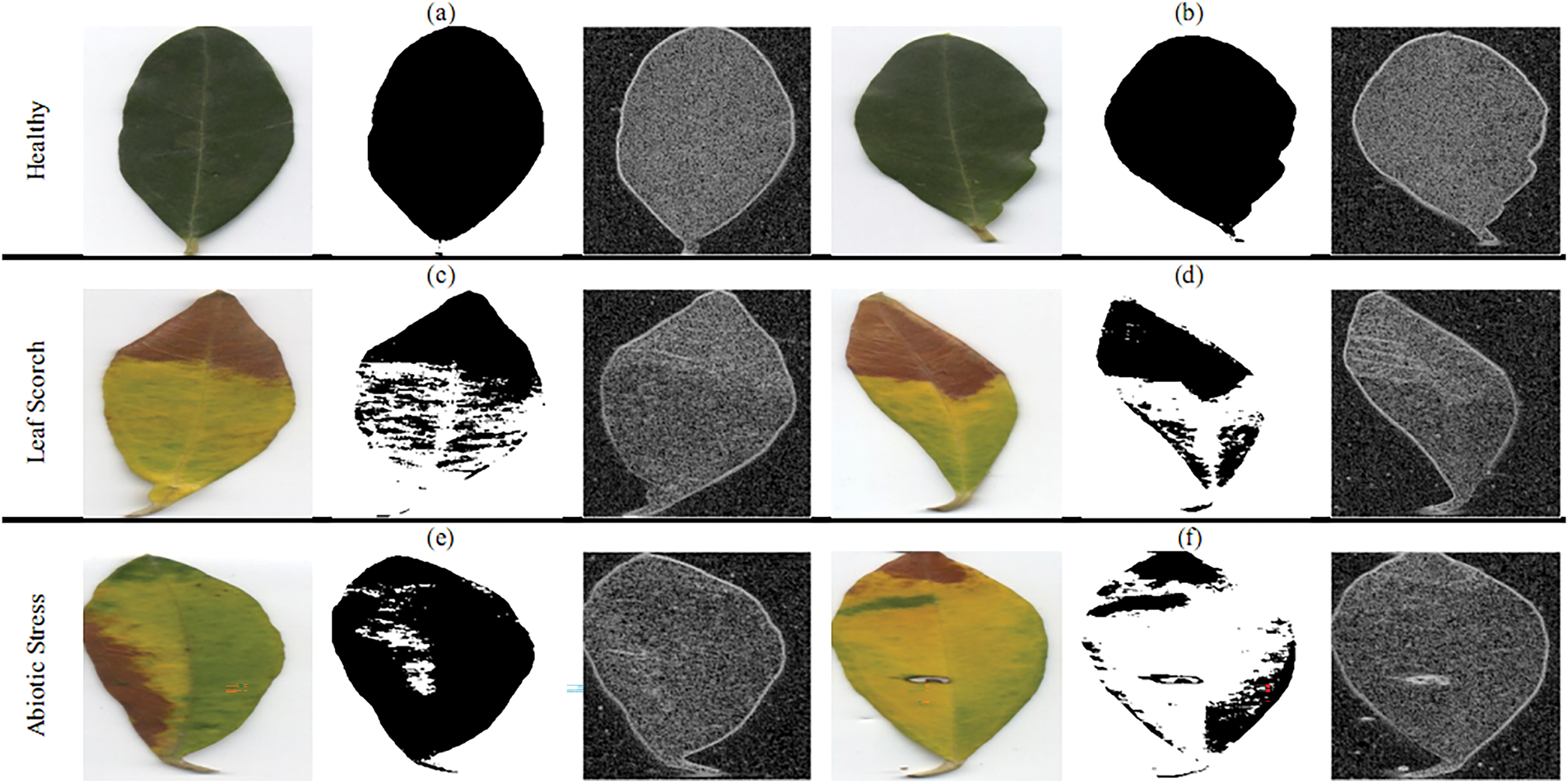

Figure 5: Olive leaf disease identification using segmentation and edge map [80]

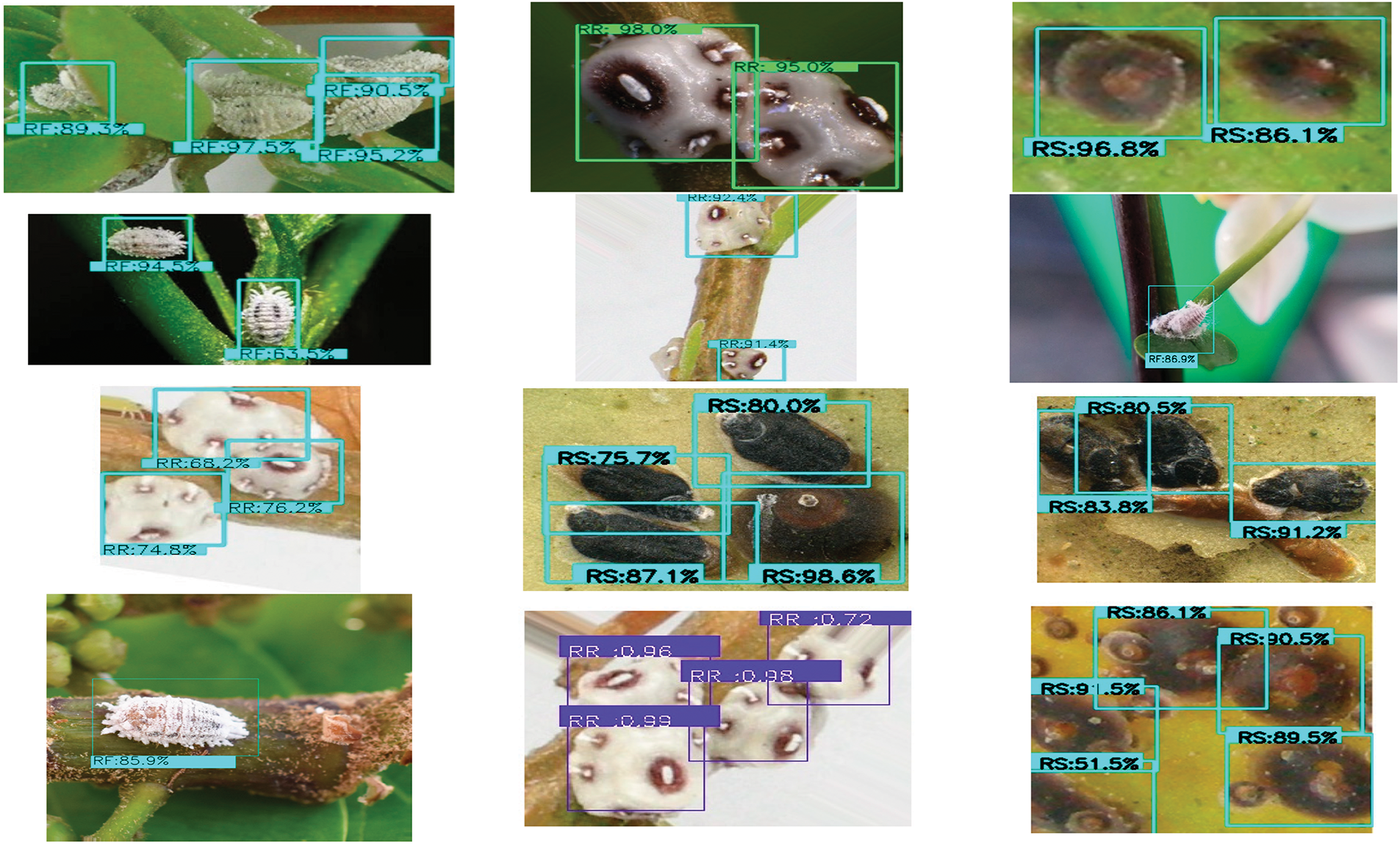

Figure 6: Bounding boxes mark the predicted outcomes of the object detection model to identify three specific pests [82]

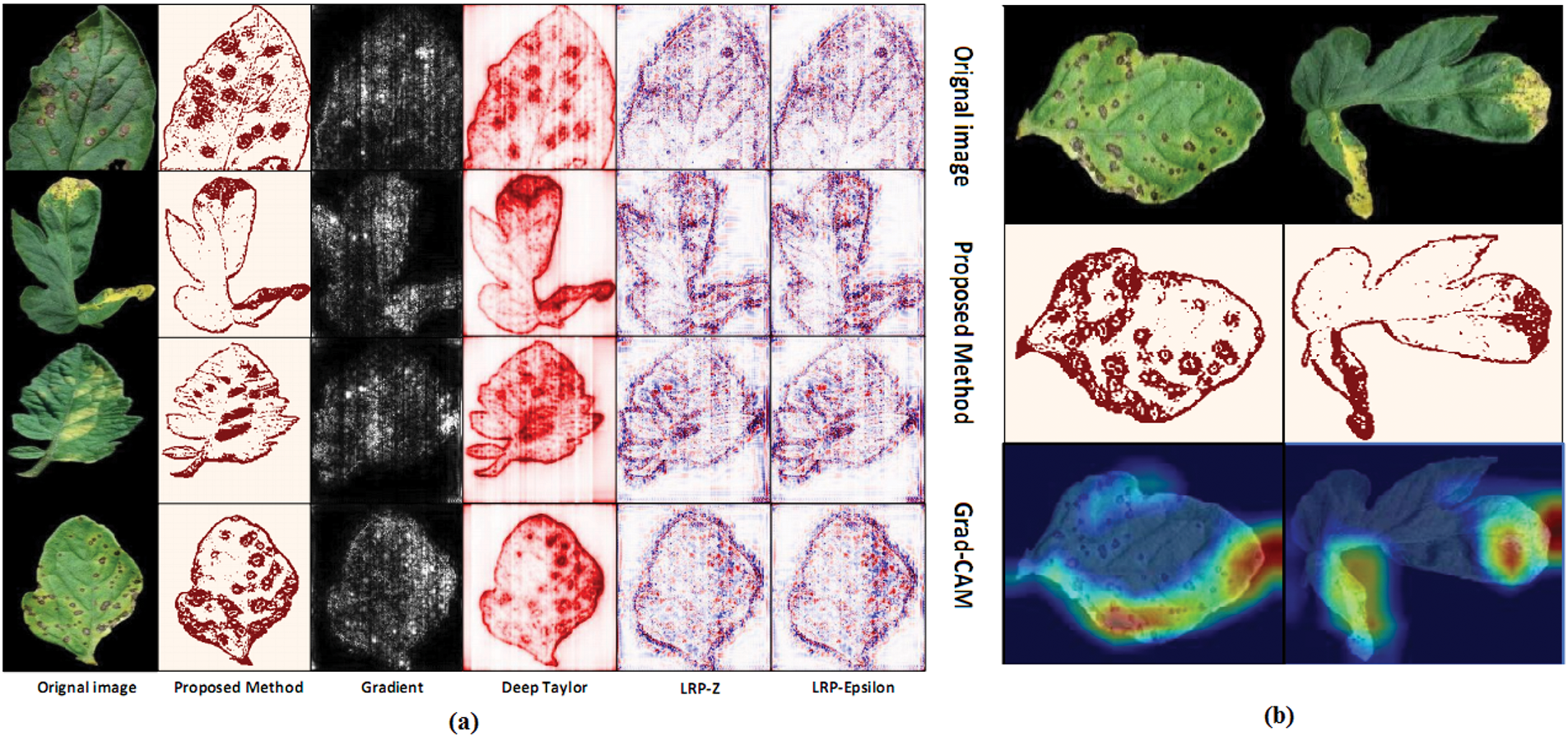

Figure 7: (a) Visualization algorithm results are compared; (b) Comparison of Grad-CAM [82]

Figure 8: (a) A novel “Teacher/student” framework; (b) “Binary threshold algorithm” for fragmentation [83]

Figure 9: Feature maps representation after the convolution layers applied to an image [102]

Figure 10: Visualization of tomato leaf pictures using Score-CAM with accurate classification: sliced leaves with a score-CAM heat map over the original leaves [103]

Figure 11: Saliency maps for “Bacterial spot,” “Late blight,” “Leaf mold,” and “Yellow leaf curl virus” are shown in the first, second, third, and fourth rows, respectively [104]

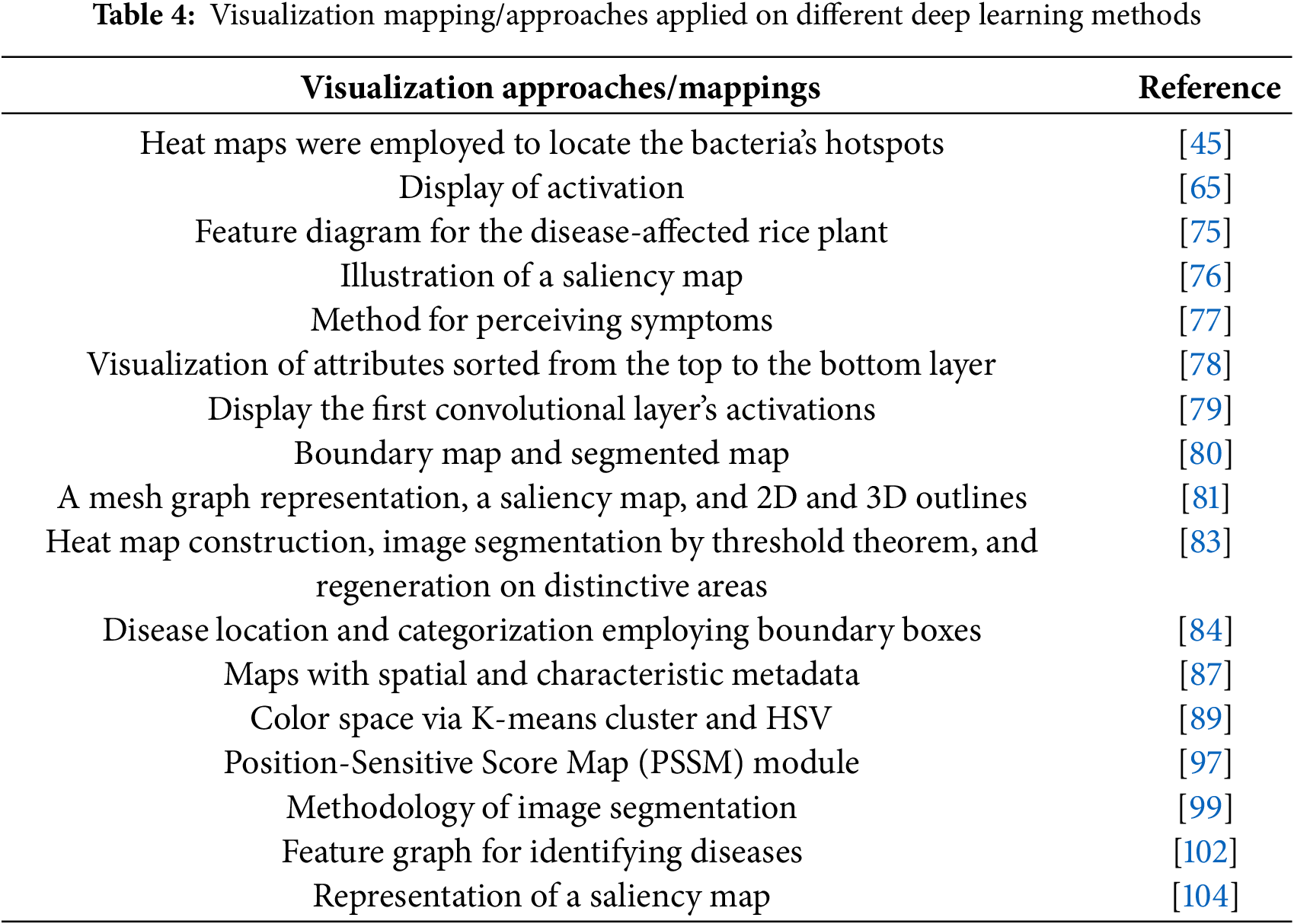

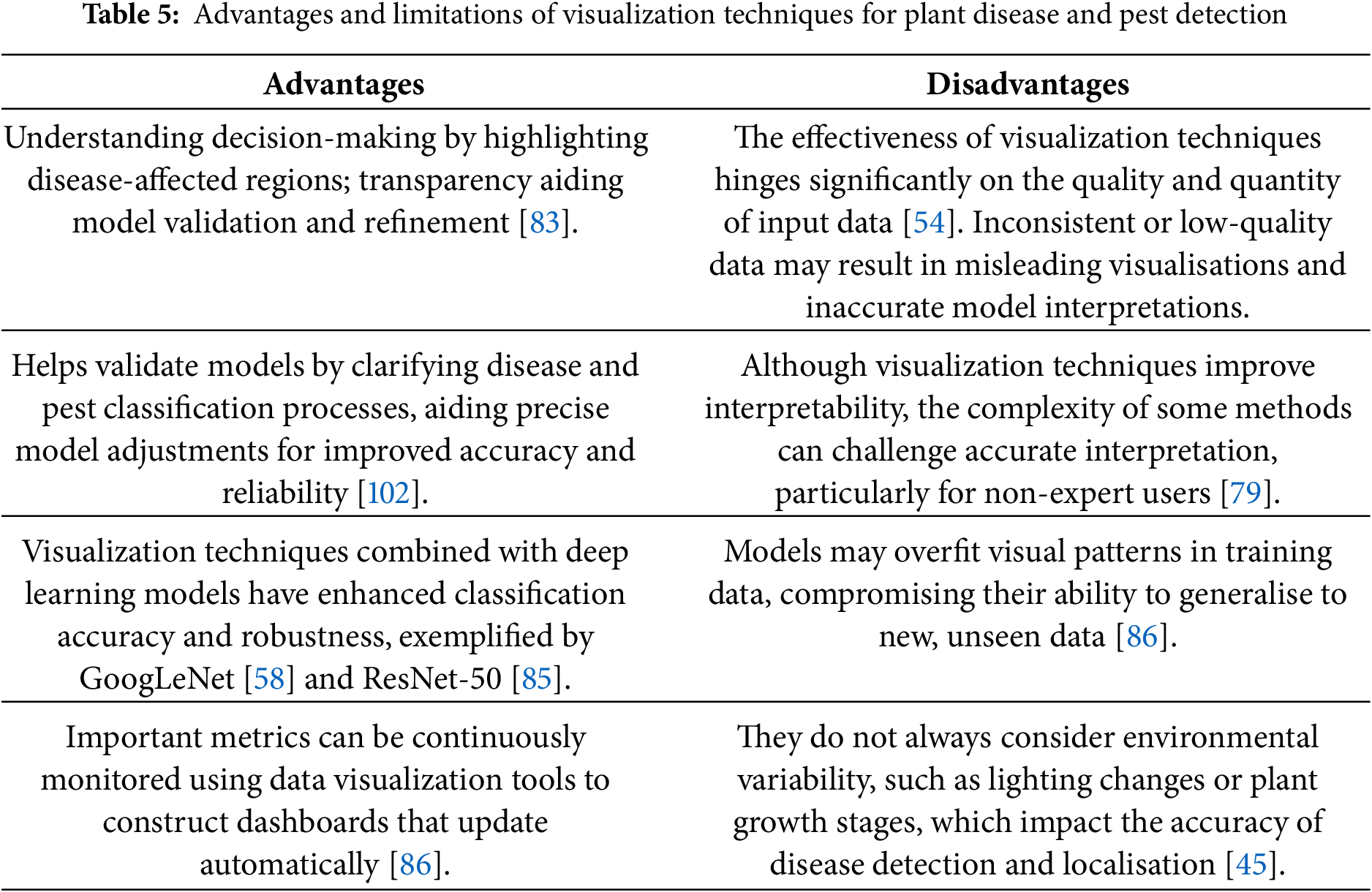

Fig. 3 identifies infected maize plants using a heat map. Each location of the selected image is first assigned a score that can be interpreted as the probability that it contains a disease lesion. These probabilities are then arranged in matrix form, indicating the model’s outcome for each region of the target image [45]. The matrix thus reveals the probabilistic occurrence of lesions across spatial areas; by combining these probabilities over time, such heat maps may help improve severity assessment, allowing customized treatment as opposed to uniform spraying. The feature maps in Fig. 4 were obtained to understand the importance of CNN topologies in discriminating between plant diseases; the output demonstrates the reasonable efficiency of the “AlexNet” architecture in detecting plant diseases [79]. This figure also illustrates how features are extracted by shallower convolutional neural networks (CNNs). Compared to deeper models such as ResNet, the coarse and less selective activations of AlexNet may hamper performance in fine-grained disease classification. In Fig. 5, plant diseases are detected using segmentation and edge maps. In the segmentation map, the yellow region appears as a white surface to highlight the lesion portion of the input leaf [80]. Unlike bounding boxes, segmentation provides distinct boundaries, improving the quality of training data and the prediction of disease severity, and edge-enhanced segmentation further helps separate clustered leaf symptoms. In Fig. 6, three pest classes are detected: RF refers to “mealybugs”, RR to “Coccidae”, and RS to “Diaspididae”. Each image contains multiple predictions [82], and class-wise detections, including mealybugs and Coccidae, are depicted in the figure. Visually, the detections appear accurate, but no metrics are displayed. 
A detailed comparison shows that SSD achieves 82.5% mAP on this dataset, with an accuracy of 89% for mealybugs and 76% for Coccidae. A novel visualisation approach is demonstrated in [83], as presented in Figs. 7a,b and 8a,b. In Fig. 8a, the selected imagery is reconstructed using the “student/teacher” model [83], and in Fig. 8b, a single-channel heat map is created to segment significant portions of the image. A straightforward binary threshold method then reliably reveals signs of severe plant infection. This design combines the efficiency of a student model with high-level semantics from a teacher model, and the figure illustrates how distillation sharpens the student model’s focus, enhancing interpretability and mitigating overfitting on the training data. Fig. 7a compares different visualisation approaches. Heat maps created using approaches such as “Gradient”, “LRP-Z”, and “LRP-Epsilon” are hazy and difficult to interpret. The Deep Taylor technique achieves better results but highlights only a few disease spots on the leaf, and the Grad-CAM technique localises the disease spots poorly. The proposed method addresses this issue by employing a decoder, as shown in Fig. 7b [82]. In summary, Deep Taylor and LRP focus on pixel-level relevance, whereas Grad-CAM emphasises broader regions. Although LRP provides superior noise reduction, it may overlook contextual cues. According to a user assessment, agronomy specialists rated Grad-CAM as the most interpretable. Fig. 9 shows how convolution, ReLU, and pooling operations process an image so that different features are extracted at different layers. 
Every feature map is depicted, and ultimately the Global Average Pooling (GAP) layer captures several noteworthy attributes [102]. This figure also illustrates the progressive abstraction of features in a CNN: early layers capture low-level information, such as edges and textures, while deeper layers identify intricate disease patterns, such as lesions or pest forms. This layered abstraction is essential for reliable categorization across a variety of illumination and occlusion scenarios. Figs. 10 and 11 illustrate two distinct visualization techniques: heat maps and saliency maps. In Fig. 10, the Score-Weighted Visual Explanations for CNNs (Score-CAM) heat map marks infections in the source images as red dots [103]. Score-CAM, a class-specific activation mapping technique, generates more concentrated attention maps than saliency maps, and this figure illustrates how it can enhance field-level diagnostic accuracy by distinguishing between overlapping disease symptoms. Saliency maps help reveal the active pixels affecting the model’s prediction, but noisy backgrounds can make them difficult to interpret; they are faster than CAM-based techniques, although frequently less accurate in pinpointing symptom locations. A saliency map technique presented in [104] effectively detects the disease-affected spots on tomato plants, as shown in Fig. 11. Table 4 summarises the visualisation mapping techniques discussed in this section. These methods, such as Grad-CAM, saliency maps, and feature attribution, highlight the key image regions behind a prediction and thus improve the interpretability of deep learning models. In crucial applications such as plant disease and pest detection, this kind of visualization helps determine which regions the model focuses on, fostering trust and facilitating validation. 
Table 5 summarizes the key advantages and limitations of visualization techniques in deep learning models for plant disease and pest detection.
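To make the Grad-CAM computation discussed above concrete: each channel $k$ of the last convolutional feature maps $A^k$ is weighted by the spatial mean of the class-score gradient, $\alpha_k^c$, and the map is $\mathrm{ReLU}(\sum_k \alpha_k^c A^k)$. The pure-Python sketch below implements only this combination step, followed by normalization and a simple binary threshold in the spirit of the threshold-based segmentation described for Fig. 8b. The activations and gradients are hand-written toy values (in practice they come from a framework's automatic differentiation), the 0.5 cutoff is an assumption, and upsampling the map to image resolution is omitted; this is not the method of [83] itself.

```python
from typing import List

FeatureMap = List[List[float]]


def grad_cam(activations: List[FeatureMap], gradients: List[FeatureMap]) -> FeatureMap:
    """Grad-CAM map: ReLU(sum_k alpha_k * A^k), where alpha_k is the spatial
    mean of the class-score gradient with respect to channel k."""
    height, width = len(activations[0]), len(activations[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for act, grad in zip(activations, gradients):
        alpha = sum(sum(row) for row in grad) / (height * width)  # channel weight
        for i in range(height):
            for j in range(width):
                cam[i][j] += alpha * act[i][j]
    return [[max(0.0, v) for v in row] for row in cam]  # keep positive evidence only


def normalize(cam: FeatureMap) -> FeatureMap:
    """Scale to [0, 1] by the peak value (an all-zero map stays zero)."""
    peak = max(max(row) for row in cam)
    return [[v / peak if peak > 0 else 0.0 for v in row] for row in cam]


def binary_mask(cam: FeatureMap, threshold: float = 0.5) -> List[List[bool]]:
    """Mark locations whose normalized relevance reaches the threshold."""
    return [[v >= threshold for v in row] for row in cam]


# Hypothetical 2-channel, 2 x 2 activations and gradients for one disease class.
activations = [[[2.0, 1.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
gradients = [[[1.0, 1.0], [1.0, 1.0]], [[-0.5, -0.5], [-0.5, -0.5]]]
heat = normalize(grad_cam(activations, gradients))  # [[1.0, 0.5], [0.0, 0.0]]
mask = binary_mask(heat)                             # [[True, True], [False, False]]
```

The second channel has a negative weight, so its evidence is suppressed; the ReLU step is what keeps only the regions that increase the class score, which is why Grad-CAM highlights broad supporting regions rather than individual pixels.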

3.1.3 Modified Deep Learning Architectures for Plant Pests and Diseases Identification

Several works have applied new deep learning architectures to achieve superior and clearer identification of plant diseases. For instance, reference [105] provided an improved “GoogLeNet” architecture that significantly outperforms the “AlexNet” and “VGG” architectures; these updated versions of traditional deep learning models achieved 98.9% accuracy. In [106], a new combination of “DenseNet” and an “Xception”-based “DCNN” is proposed for accurate recognition and early diagnosis of apple tree leaf diseases; the approach is 98.82% accurate in identifying five apple leaf diseases. To identify, categorise, and count pests, a unique “entire-and-partial feature transfer learning” approach is presented in [107], which reports a detection frequency of 90.2% for pest movement. In this approach, fine-tuned feature maps are reinforced using the “entire-feature transfer learning” weight matrix within the “partial-feature transfer learning” approach, and the cross-layer and multi-scale convolution layers of the whole-feature network are then integrated. In [108], transfer learning techniques are employed in conjunction with the deep learning models “Inception-v3”, “VGG-19”, “Resnet-50”, and “Xception” to categorise soybean pests. The soybean dataset comprises 5000 images captured in real time, and the ResNet-50 model achieves the highest classification accuracy, at 93.82%. Haridasan et al. [109] conducted a study on the automatic detection and analysis of rice crop diseases, incorporating various pre-processing techniques and using Principal Component Analysis for dimensionality reduction. Abbas et al. [110] introduced an enhanced Cuckoo Search algorithm termed the Hybrid Improved Cuckoo Newton Raphson Optimization (HICNRO). 
This approach not only diminishes computational time but also eliminates redundant features and enhances accuracy. These selected features are then utilised to train ten distinct machine learning classifiers, resulting in notable accuracies of 100%, 92.9%, and 99.2% on Tomato, Potato, and Cucumber Leaf datasets. Khattak et al. [111] proposed a CNN model with a test accuracy of 94.55 %, making it a valuable decision support tool for farmers to classify citrus fruit/leaf diseases.

In [112], LeafNet, a new CNN model, is employed for tea leaf disease classification and achieves higher accuracy than the “Multi-Layer Perceptron (MLP)” and “Support Vector Machine (SVM)” models. In [113], a “deep convolutional generative adversarial network (DCGAN)” was trained on a segmented and filtered PlantVillage dataset of 56,000 images of 19 crops spanning 38 classes. A “MobileNet” CNN deployed on smartphones classified plant diseases with 92% test accuracy, compared with 88.6% for a modified “Inceptionv3” model. Two deep learning architectures, the reduced “MobileNet” and the modified “MobileNet”, are proposed in [64]; their classification accuracy is similar to that of the “VGG” architecture, with the reduced “MobileNet” reaching 98.34%. Because it has far fewer parameters than the VGG model, this model significantly reduces training time. In [65], a hybrid CNN architecture, the “VGG-Inception” model, is used to identify and classify five types of diseases in apple plants. This model outperformed several deep learning models, including AlexNet, GoogLeNet, VGG, and specific variants of ResNet. It also offers inter-object and class identification and activation visualisation, clearly highlighting disease-affected spots on plants. In a different study, Liu et al. [114] developed an enhanced Faster R-CNN with feature pyramid fusion (FPF-RCNN) that achieved 79.2% mAP on the Citrus Pest and Disease Dataset (CPDD) while maintaining a detection speed of 21.5 FPS. Furthermore, Amin et al. [115] used two pre-trained convolutional neural networks (CNNs), EfficientNetB0 and DenseNet121, to extract deep features from corn plant images, achieving a classification accuracy of 98.56%.
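Much of the parameter saving that lets MobileNet-style models train faster than VGG comes from depthwise separable convolutions, which MobileNet uses in place of most standard convolutions. The sketch below compares the weight counts of one standard 3 × 3 layer and its depthwise separable counterpart; the 256-channel layer size is a hypothetical example rather than a layer taken from [64], and bias terms are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias terms ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical VGG-style layer: 3 x 3 kernel, 256 input and 256 output channels.
std = standard_conv_params(3, 256, 256)
sep = depthwise_separable_params(3, 256, 256)
print(std, sep, round(std / sep, 2))  # 589824 67840 8.69
```

For this layer the separable version needs roughly 8.7 times fewer weights; the ratio k² · c_out / (k² + c_out) approaches k² = 9 as the number of output channels grows.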

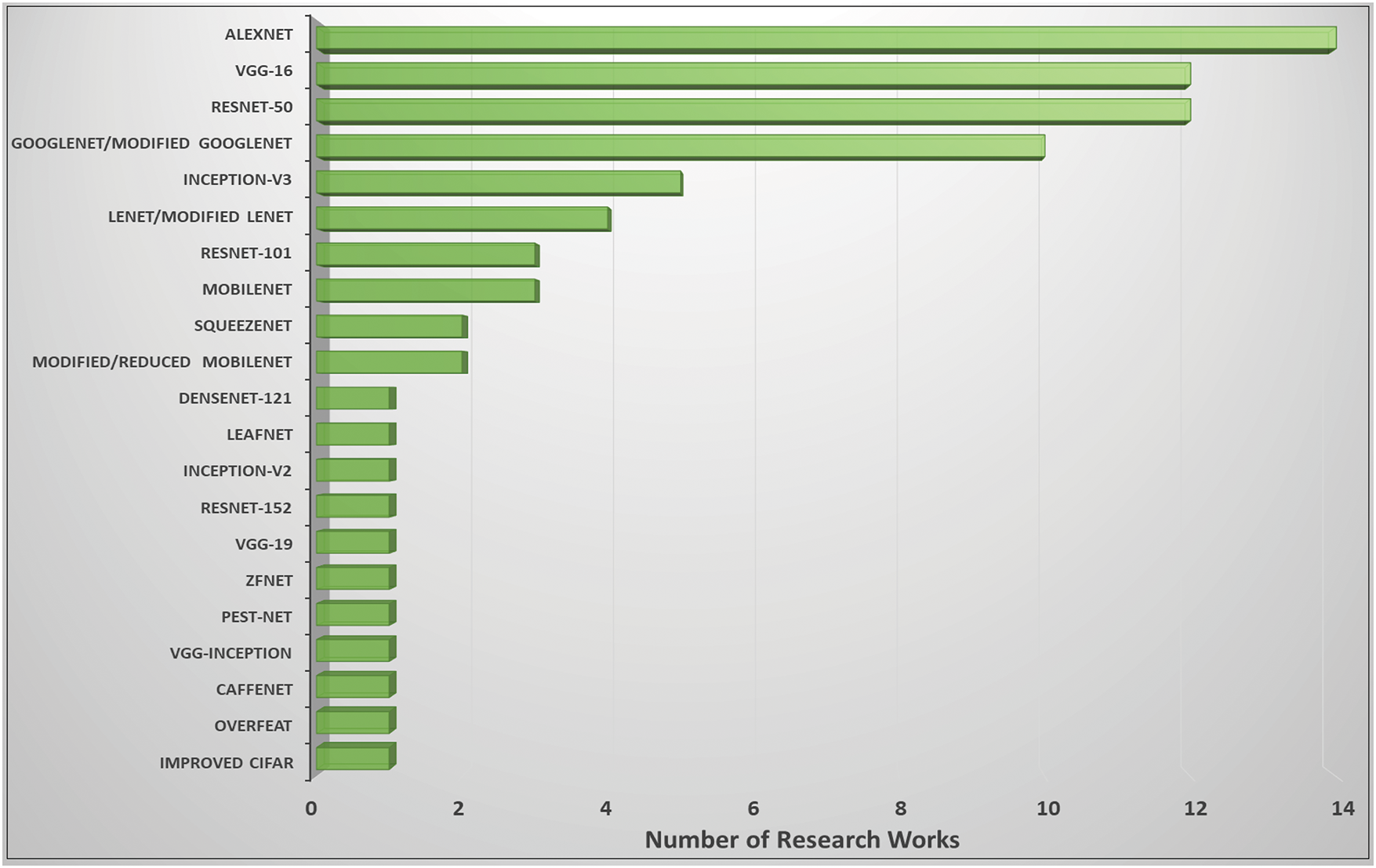

Fig. 12 shows a bar chart of the most prominent deep learning algorithms for accurate plant infection identification and categorisation. AlexNet [28] is the most frequently applied model, followed by ResNet-50 [85], VGG-16 [75], and GoogLeNet [58]. Various hybrid and modified architectures, such as VGG-Inception [65], reduced or modified MobileNet [64], updated LeNet [80], and modified GoogLeNet [105], are also employed to identify pests and diseases in plants. In [116], Pacal et al. (2024) conducted a comprehensive review of 160 studies on deep learning applications in plant disease detection, covering classification, detection, and segmentation tasks. The review highlights the effectiveness of deep learning in early disease detection and discusses challenges such as dataset limitations and model generalization.

Figure 12: Deep learning architectures most frequently used to detect plant infections and pests

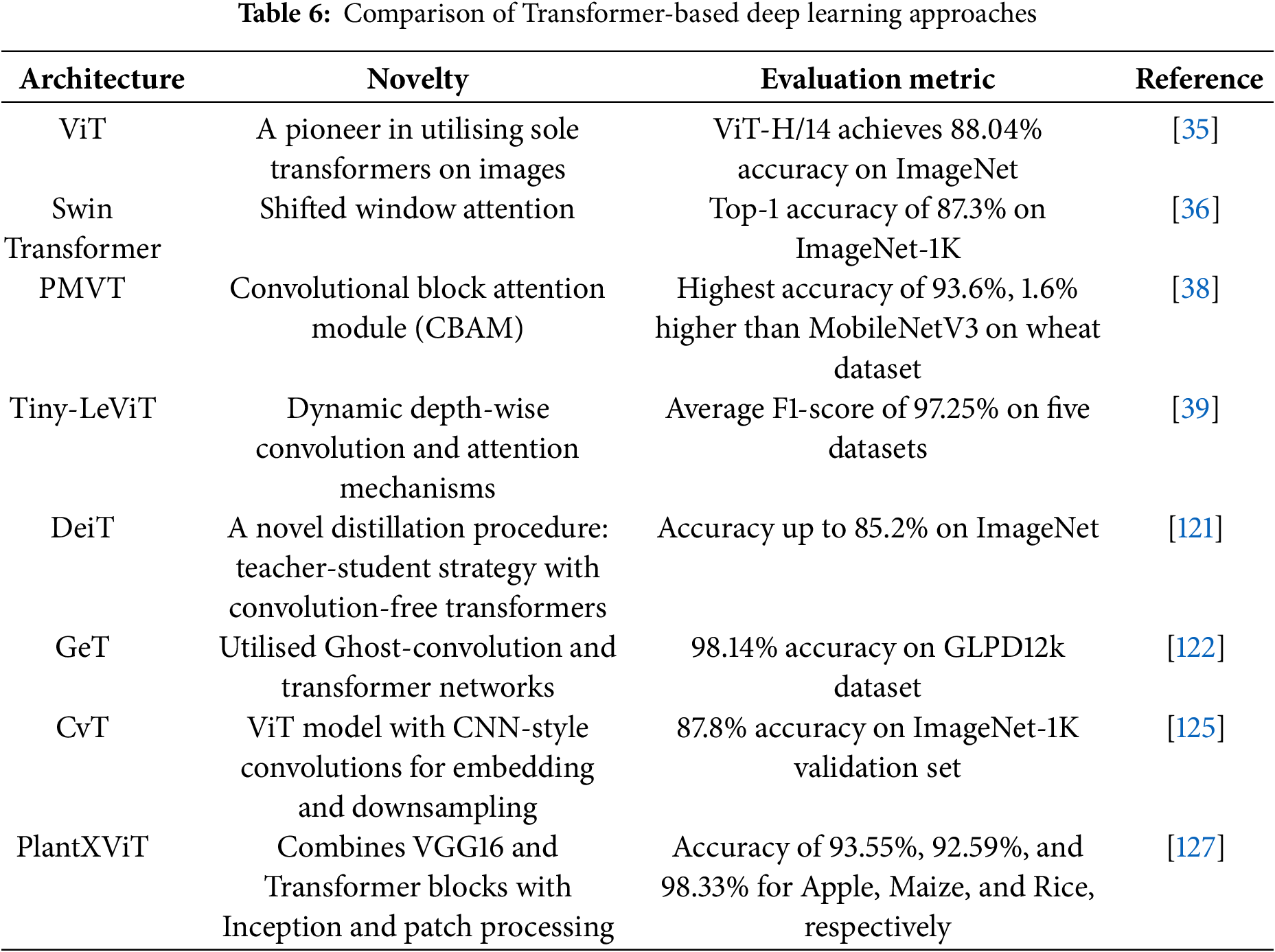

3.1.4 State-of-the-Art Transformer Architectures for Plant Pests and Diseases Identification

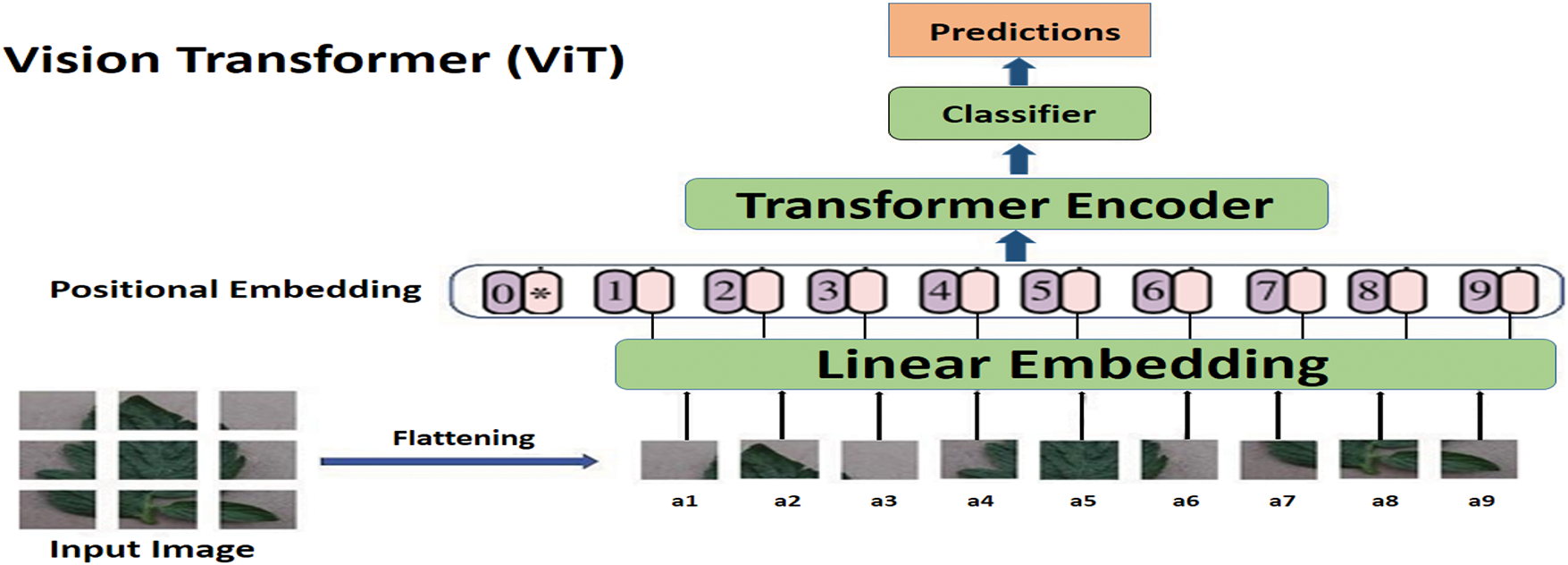

Vision transformers (ViTs), a recent advancement in deep learning, apply the transformer architecture, originally designed for natural language processing (NLP), to image analysis. Introduced in [35], the ViT splits an image into small, fixed-size patches and treats them as tokens in a sequence, unlike conventional convolutional neural networks (CNNs), which scan images using localized filters. This allows the model to capture global context and long-range dependencies within an image. It also marks a departure from traditional sequence-to-sequence models, which often rely on recurrent neural networks (RNNs), such as Long Short-Term Memory (LSTM) [117], predominantly employed in NLP applications. For images and other visual data, where inherent spatial and temporal coherence prevails, transformers represent a paradigm shift in how contextual information is captured, and they have been used successfully in image segmentation [118,119], image classification [120], and other tasks. Nevertheless, ViTs frequently face substantial memory requirements, compounded by inherent limitations in receptive fields, local context understanding, and weight sharing.
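The patch-tokenization step can be sketched in NumPy as follows. This is a minimal illustration under assumed settings (a 224 × 224 RGB image, 16 × 16 patches, and a hypothetical 64-dimensional embedding whose projection weights are random stand-ins for learned ones); it omits the class token, position embeddings, and the transformer encoder that a full ViT adds.

```python
import numpy as np


def image_to_patch_tokens(image: np.ndarray, patch: int, proj: np.ndarray) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patch x patch tiles, flatten
    each tile, and project it linearly: one token embedding per patch."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    # (H/p, p, W/p, p, C) -> (H/p, W/p, p, p, C): group the pixels of each tile together
    tiles = image.reshape(h // patch, patch, w // patch, patch, c).transpose(0, 2, 1, 3, 4)
    flat = tiles.reshape(-1, patch * patch * c)  # (num_patches, patch * patch * C)
    return flat @ proj                           # (num_patches, embed_dim)


rng = np.random.default_rng(0)
img = rng.random((224, 224, 3))
embed_dim = 64  # hypothetical embedding size
proj = rng.normal(scale=0.02, size=(16 * 16 * 3, embed_dim))  # random stand-in for learned weights
tokens = image_to_patch_tokens(img, 16, proj)
print(tokens.shape)  # (196, 64)
```

A 224 × 224 image thus becomes a sequence of (224 / 16)² = 196 tokens, over which self-attention then operates globally.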

Substantial research has been conducted to overcome the challenges of typical transformer-based computer vision models. These works can be broadly classified into two categories: hybrid transformers, which typically use convolutional networks to overcome limitations in understanding local context, and pure transformers, which rely exclusively on self-attention mechanisms to capture long-range dependencies and global contextual information. The ViT of Dosovitskiy et al. [35] marks a pioneering milestone as the first to leverage transformers for computer vision tasks. Li et al. [38] introduce the PMVT transformer model, a novel approach that replaces the convolution block in MobileViT with an inverted residual structure that uses a 7

A lightweight model, Tiny-LeViT, based on the hybrid transformer-CNN in [39], was trained on five distinct datasets and achieved at least 9% higher frame rates. Lu et al. [122] introduced an efficient approach for grape leaf diagnosis using ghost convolution [123] and transformer networks. They curated the GLDP12k dataset, which comprises 11 classes and 12,615 images. Pre-trained on the ImageNet dataset and then transfer-learned, their Ghost Enlightened Transformer (GeT) model outperformed other models, offering 98.14% accuracy, 1.7 times faster processing (180 frames per second), and a 3.6 times lighter model (1.16 million parameters) than MobileNetV3_large [124]. Wu et al. [125] present CvT, a model that incorporates convolutions into the ViT framework through a convolutional token embedding layer and a convolutional transformer block, combining the shift, scale, and distortion invariance of CNNs with the dynamic attention and global context of transformers. It adopts a hierarchical structure that progressively reduces the number of tokens while increasing the token width, emulating the spatial downsampling of CNNs. Its CvT-W24 variant obtained a top-1 accuracy of 87.7% on the ImageNet-1k validation set.
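Ghost convolution [123] lowers cost by producing only a fraction of the output feature maps with an ordinary convolution and generating the remaining “ghost” maps with cheap depth-wise operations. The weight-count sketch below assumes the generic ghost-module formulation with ratio s = 2 and 3 × 3 cheap operations; the channel sizes are hypothetical and do not reproduce the GeT configuration.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of an ordinary k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_module_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Weights of a ghost module: a primary k x k convolution yields c_out / s
    intrinsic maps, and (s - 1) cheap d x d depth-wise operations per intrinsic
    map generate the remaining ghost maps. Assumes c_out is divisible by s."""
    intrinsic = c_out // s
    primary = c_in * intrinsic * k * k
    cheap = (s - 1) * intrinsic * d * d
    return primary + cheap


standard = standard_conv_params(128, 256, 3)
ghost = ghost_module_params(128, 256, 3)
print(standard, ghost, round(standard / ghost, 2))  # 294912 148608 1.98
```

With s = 2 the module needs roughly half the weights of the ordinary convolution; larger ratios save more, at the cost of relying on more cheaply generated features.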

Li et al. [126] propose LocalViT, which introduces locality by integrating depth-wise convolution into the feed-forward network of each transformer block. Depth-wise convolution operates on each input channel separately, capturing local relationships among pixels within a confined neighbourhood; this differs from self-attention, which captures long-range dependencies across all elements of the input. Guo et al. [127] present the CMT block, comprising a Local Perception Unit (LPU), a Lightweight Multi-head Self-Attention (LMHSA) module, and an Inverted Residual Feed-Forward Network (IRFFN). The LPU uses depth-wise convolution to capture local information while preserving translation invariance. Within the LMHSA module, depth-wise convolution reduces the computational load of self-attention, and relative position biases are introduced to improve performance. The IRFFN adapts the original ViT feed-forward network (FFN) by incorporating an inverted residual block with carefully placed shortcut connections, which improves results.
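A minimal NumPy sketch of the LocalViT idea follows: tokens are reshaped back to their 2-D grid, passed through a 3 × 3 depth-wise convolution inside the feed-forward network, and flattened again. The ReLU activations, the grid and channel sizes, and the random weights are assumptions made for illustration; the published model’s activation functions and dimensions may differ.

```python
import numpy as np


def depthwise_conv2d(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """x: (C, H, W); kernels: (C, k, k) with one filter per channel.
    Stride 1 and zero padding, so the output keeps the (C, H, W) shape."""
    c, h, w = x.shape
    k = kernels.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((c, h, w))
    for i in range(k):
        for j in range(k):
            # Each channel is filtered only by its own kernel: no cross-channel mixing.
            out += kernels[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out


def local_feed_forward(tokens, h, w, w1, dw_kernels, w2):
    """LocalViT-style FFN sketch. tokens: (H*W, C) in row-major order;
    w1: (C, hidden); dw_kernels: (hidden, k, k); w2: (hidden, C)."""
    hidden = np.maximum(tokens @ w1, 0.0)            # per-token linear expansion (a 1 x 1 conv)
    grid = hidden.T.reshape(-1, h, w)                # restore the 2-D token layout
    grid = np.maximum(depthwise_conv2d(grid, dw_kernels), 0.0)  # mix neighbouring tokens locally
    return grid.reshape(grid.shape[0], -1).T @ w2    # flatten back and project to (H*W, C)


rng = np.random.default_rng(0)
h, w, c, hidden_dim = 4, 4, 8, 16
tokens = rng.normal(size=(h * w, c))
out = local_feed_forward(
    tokens, h, w,
    rng.normal(size=(c, hidden_dim)),
    rng.normal(size=(hidden_dim, 3, 3)),
    rng.normal(size=(hidden_dim, c)),
)
print(out.shape)  # (16, 8)
```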

In [128], the patch-embedding stem of the ViT was replaced with a minimal convolutional stem. This change to the ViT’s early visual processing accelerated model convergence and offered valuable insights into optimising the training dynamics of ViT models. The convolutional stem design employed stacked 3
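The exact stem configuration is not specified here, so the following is only an illustrative sketch under an explicit assumption: a stem of four stacked stride-2 3 × 3 convolutions with padding 1. It applies the standard convolution output-size formula to show that such a stem reaches the same 14 × 14 token grid as the usual 16 × 16, stride-16 patchify stem on a 224 × 224 input, but downsamples gradually instead of in a single step.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


# Standard ViT patchify stem: one 16 x 16 convolution with stride 16.
patchify = conv_output_size(224, kernel=16, stride=16, padding=0)

# Assumed convolutional stem: four stacked 3 x 3 convolutions, stride 2, padding 1.
sizes = []
size = 224
for _ in range(4):
    size = conv_output_size(size, kernel=3, stride=2, padding=1)
    sizes.append(size)

print(patchify, sizes)  # 14 [112, 56, 28, 14]
```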

3.2 Computational and Hardware Demands for Agricultural AI Systems

When deploying deep learning models in real agricultural environments, hardware capabilities, energy limitations, and the scalability of computational resources must all be carefully considered. Dataset size, model complexity, and real-time processing demands in field settings significantly affect the viability of deployment.

High-End Server/Cloud-Based Inference:

For training or running large-scale inference using heavy models such as VGG-19, DenseNet, or ViT:

• Recommended Hardware:

These setups are suitable for:

Drawback: Requires consistent connectivity and is expensive to maintain in remote areas.

Edge Devices for On-Field Deployment:

Lightweight models such as Reduced MobileNet, Tiny-LeViT, and SqueezeNet are preferable for field deployment (e.g., on drones, smartphones, and smart sensors).

• Recommended Edge Devices:

These devices can run models with <10 M parameters and offer real-time predictions with low latency.

Benefits:

Cost-effective and portable

No internet dependency

Real-time feedback for pest/disease alerts

Model Optimization Techniques for Deployment:

• Quantization and pruning: Reduce model size and computation for faster inference.

• Knowledge distillation: Transfer knowledge from larger models to smaller student models.

• ONNX/TFLite Conversion: Convert models to lightweight formats for mobile and embedded systems deployment.
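The first two optimization techniques above can be illustrated with a short NumPy sketch: symmetric per-tensor int8 post-training quantization, magnitude pruning, and the standard temperature-scaled knowledge distillation loss. The temperature T = 4 and weighting alpha = 0.5 are assumed values; production pipelines would instead use the quantization, pruning, and conversion tooling of their framework (for example, TensorFlow Lite or ONNX Runtime).

```python
import numpy as np


def quantize_int8(w: np.ndarray):
    """Symmetric per-tensor post-training quantization: w is approximated by q * scale."""
    scale = float(np.abs(w).max()) / 127.0
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any scale works
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float32) * scale


def magnitude_prune(w: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero out (at least) the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(sparsity * w.size)
    if k == 0:
        return w.copy()
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]
    return np.where(np.abs(w) > threshold, w, 0.0)


def softmax(z: np.ndarray, t: float = 1.0) -> np.ndarray:
    z = z / t
    z = z - z.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def distillation_loss(student_logits, teacher_logits, labels, t=4.0, alpha=0.5):
    """alpha * T^2 * soft-target cross-entropy + (1 - alpha) * hard-label cross-entropy."""
    p_teacher = softmax(teacher_logits, t)
    log_p_student = np.log(softmax(student_logits, t) + 1e-12)
    soft = -(p_teacher * log_p_student).sum(axis=-1).mean() * t * t
    p_hard = softmax(student_logits)[np.arange(len(labels)), labels]
    hard = -np.log(p_hard + 1e-12).mean()
    return alpha * soft + (1.0 - alpha) * hard


rng = np.random.default_rng(0)
weights = rng.normal(size=(64, 64))
q, scale = quantize_int8(weights)
pruned = magnitude_prune(weights, 0.5)
loss = distillation_loss(rng.normal(size=(8, 5)), rng.normal(size=(8, 5)), rng.integers(0, 5, size=8))
print(q.dtype, float((pruned == 0).mean()), loss)
```

Storing int8 instead of float32 weights cuts weight memory by a factor of four; for example, a 10 M-parameter model shrinks from about 40 MB to about 10 MB.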

Scalability Considerations:

• In large-scale operations covering hundreds of acres, distributed sensing and computing systems are needed.

• Integration with UAVs (drones), IoT sensors, and robotic arms requires:

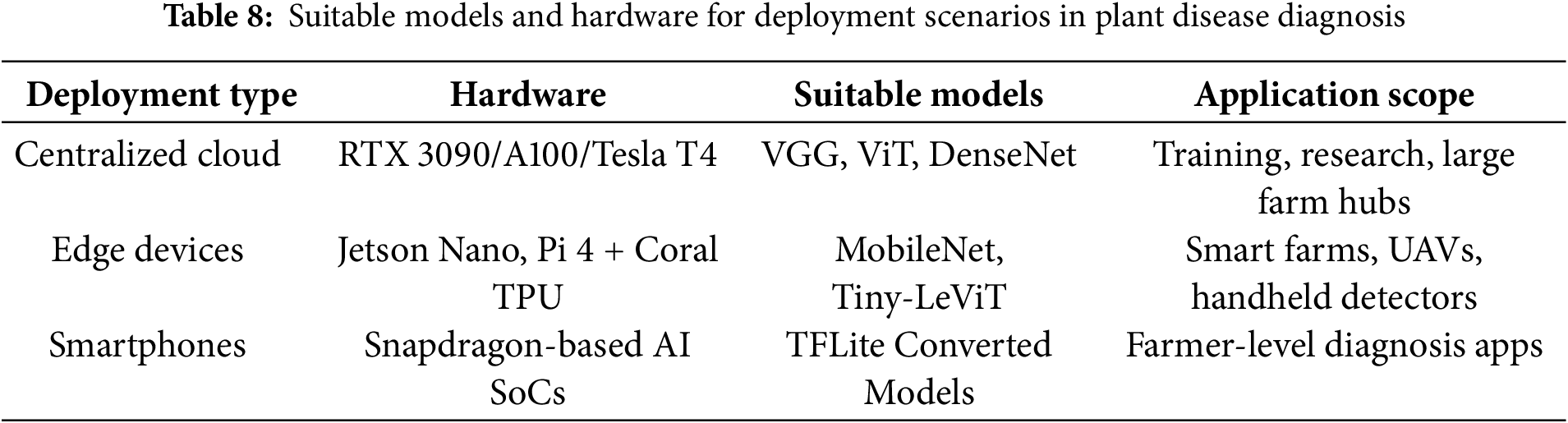

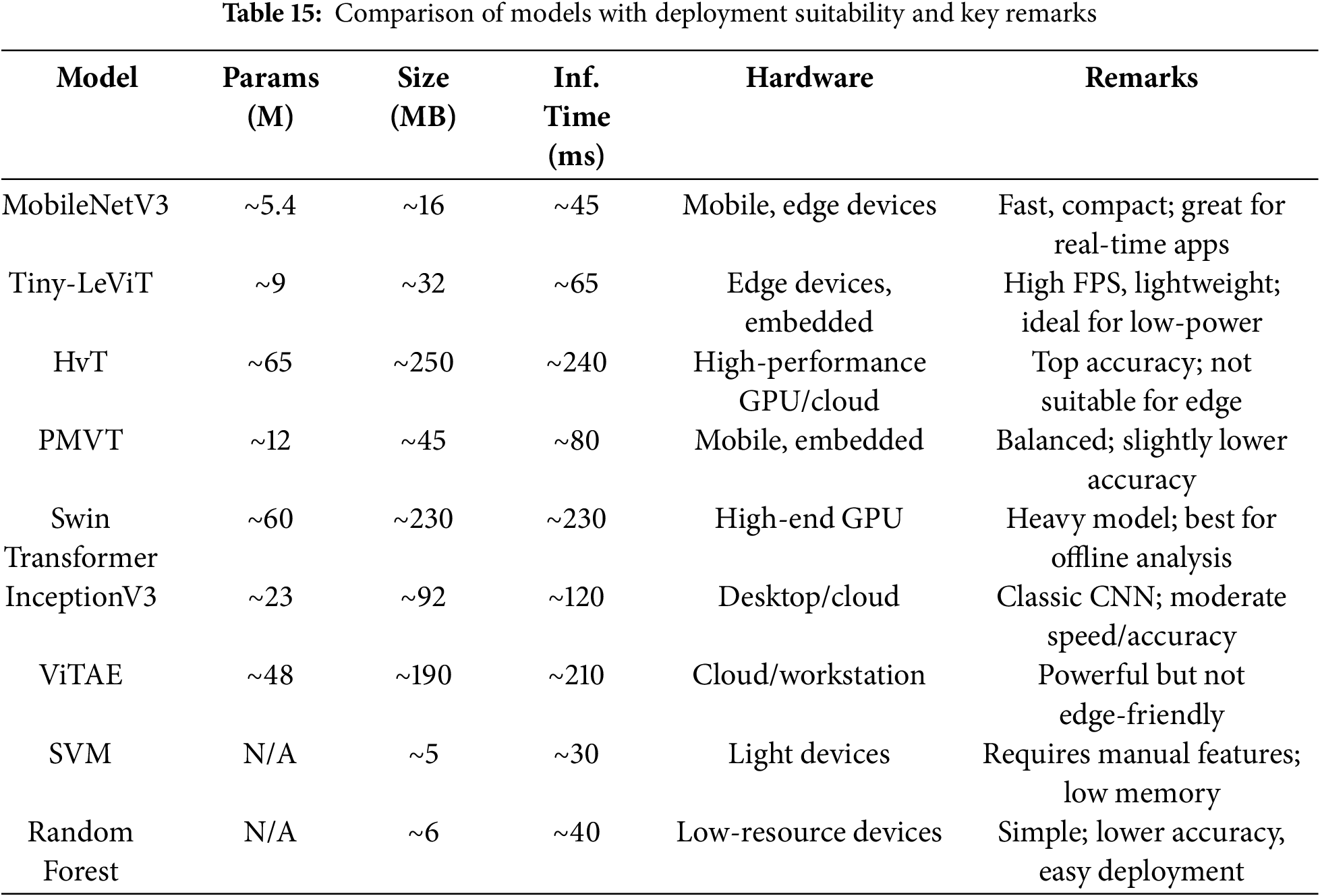

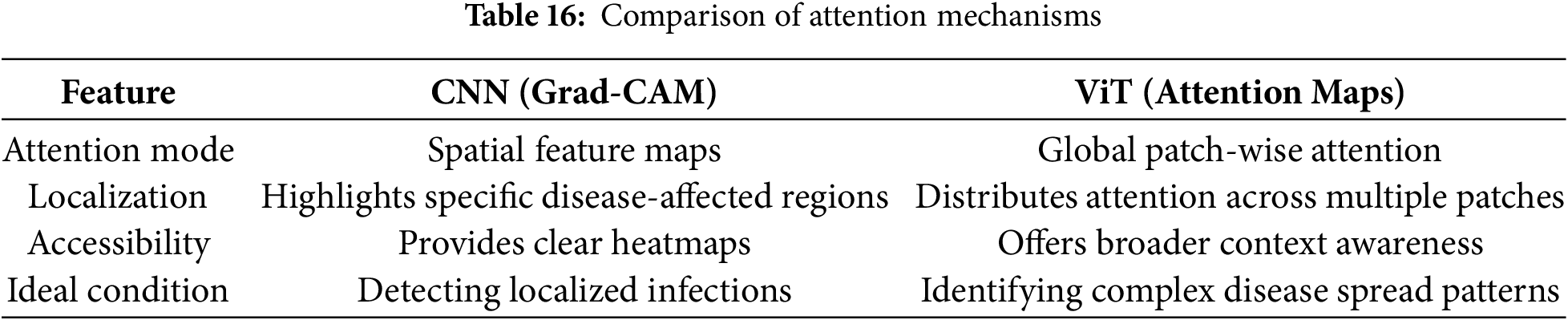

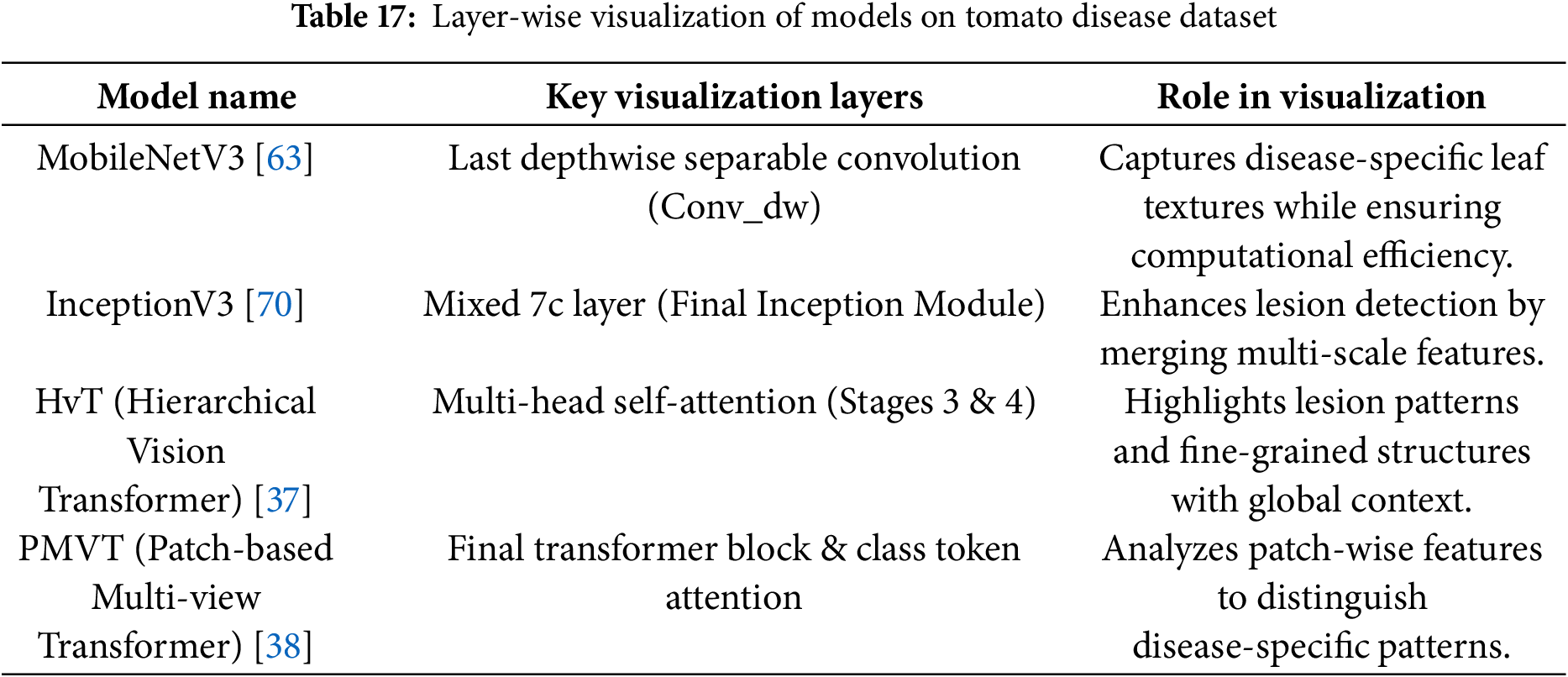

As summarized in Table 8, cloud-based systems support large models and centralized decision-making but require steady connectivity, whereas lightweight models allow offline, real-time inference on smartphones and edge devices. Every platform involves trade-offs among accuracy, latency, and power efficiency, so deployment decisions should take field conditions and scale into account.

4 Hyper-Spectral Imaging for Plant Pest and Disease Identification Using Deep Learning Architectures

Several imaging techniques have been developed for the early recognition of infections and pests that affect plants, including spatial and spectral (multispectral) imaging [130], infrared imaging, and hyperspectral imaging [131]. Hyperspectral imaging (HSI) acquires images that store information over hundreds of contiguous, narrow spectral bands, covering a range much wider than the human eye can see. Its ability to reveal minute changes in plant tissue, such as in moisture content, chlorophyll content, or disease symptoms, makes it particularly useful for detecting plant diseases and insect infestations early. For example, in [132], “Tomato Spotted Wilt Virus (TSWV)” was detected in capsicum plants using hyperspectral imaging (VNIR and SWIR) together with several machine learning algorithms. In another work [133], tomato plant diseases were identified with hyperspectral imaging by first determining the “Region of Interest (RoI)”; a “feature ranking-KNN (FR-KNN)” architecture then achieved acceptable results in distinguishing healthy from diseased plants. More recently, HSI was employed for apple disease identification, where the “Orthogonal Subspace Projection” method, an unsupervised feature selection approach, resolved the redundancy problem among spectral bands [134]. A novel hyperspectral imaging-based methodology was introduced in [135] to detect anthracnose in tea plants. By evaluating spectral sensitivity, its experimental analysis located the disease-sensitive bands at 542, 686, and 754 nanometers, and these bands were used to derive two novel disease indices: (a) the “Tea Anthracnose Ratio Index (TARI)” and (b) the “Tea Anthracnose Normalized Index (TANI)”. In addition, “unsupervised classification” and “adaptive two-dimensional thresholding” techniques based on an optimal set of wavelet coefficients were formulated for identifying disease scab. Another study [136] demonstrated that, across varying severities of mangrove pests and diseases, sensitive spectral and textural features can be precisely retrieved from HSI leaf data using the Successive Projection Algorithm (SPA) and Random Forest (RF) techniques.
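The exact definitions of TARI and TANI are not reproduced here, so the sketch below only illustrates the general pattern behind such spectral indices: a normalized difference between the reflectance of two bands, computed per pixel from a hyperspectral cube. The band pair (542 nm and 686 nm), the 2 nm band spacing, and the synthetic reflectance values are hypothetical choices, not the published index.

```python
import numpy as np


def nearest_band(wavelengths: np.ndarray, target_nm: float) -> int:
    """Index of the band whose centre wavelength is closest to target_nm."""
    return int(np.argmin(np.abs(wavelengths - target_nm)))


def normalized_difference_index(cube, wavelengths, nm_a, nm_b):
    """cube: (H, W, B) reflectance. Returns (R_a - R_b) / (R_a + R_b) for every pixel."""
    ra = cube[..., nearest_band(wavelengths, nm_a)].astype(float)
    rb = cube[..., nearest_band(wavelengths, nm_b)].astype(float)
    return (ra - rb) / (ra + rb + 1e-12)  # small epsilon avoids division by zero


wavelengths = np.arange(400, 1001, 2)  # hypothetical 2 nm sampling from 400 to 1000 nm
cube = np.full((2, 2, wavelengths.size), 0.3)
cube[..., nearest_band(wavelengths, 542)] = 0.6
cube[..., nearest_band(wavelengths, 686)] = 0.2
ndi = normalized_difference_index(cube, wavelengths, 542, 686)
print(ndi)
```

Such an index reduces each pixel’s spectrum to a single value that can then be thresholded or fed to a classifier.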

Hence, combining HSI with machine learning approaches is advantageous. For example, in [137], machine learning methodologies were designed for HSI to solve several problems in the agricultural domain. In [138], a remote sensing methodology detected “citrus canker” on Sugar Belle leaves and immature (green) fruit under laboratory conditions. The asymptomatic, intermediate, and late stages of symptom development were examined using a hyperspectral imaging system (400–1000 nm), and the “radial basis function (RBF)” and “K-nearest neighbour (KNN)” classification approaches were employed. An identical imaging system mounted on a UAV also detected “citrus canker” on tree canopies on a large farm. Moreover, an HSI-based multi-step method was introduced in [139] to differentiate several plant stresses. The process involved: (1) a “Continuous Wavelet Analysis (CWA)” based feature extraction process to detect and discriminate tea plant stresses; (2) “SVM” and “K-means clustering” methods to detect lesion regions on tea leaves; and (3) a Random Forest (RF) based system to identify and distinguish three tea plant stresses: “tea green leafhopper”, “anthracnose”, and sunburn (a disease-like stress). For wheat disease detection, a multispectral imaging method based on an RF predictor achieved an overall accuracy of 89.3% [140]. In [141], a machine learning approach based on HSI (380–1020 nanometres) identified the asymptomatic, early, intermediate, and late growth phases of “powdery mildew” in squash plants. An HSI system based on a “Support Vector Machine” (SVM) detected different plant diseases with 86% overall accuracy [142]. Javidan et al. [143] used the PlantVillage dataset to identify and classify grape leaf diseases, including black measles, leaf blight, and black rot. They delineated affected regions with K-means clustering and extracted features from three colour models: L*a*b, HSV, and RGB. Using multi-class support vector machines (SVMs), their method obtained 98.71% accuracy with grey-level co-occurrence matrix (GLCM) features and 98.97% accuracy with principal component analysis (PCA) applied to the extracted features.
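Grey-level co-occurrence matrix (GLCM) texture features, such as those used by Javidan et al. [143], count how often pairs of grey levels occur at a fixed pixel offset. A minimal NumPy sketch for a single horizontal offset is shown below; the offset, the number of grey levels, and the toy patch are assumptions, and in practice scikit-image’s `graycomatrix` and `graycoprops` provide a fuller implementation.

```python
import numpy as np


def glcm(img: np.ndarray, levels: int, dx: int = 1, dy: int = 0) -> np.ndarray:
    """Symmetric, normalised grey-level co-occurrence matrix for one offset (dx, dy >= 0).
    img must contain integer grey levels in [0, levels)."""
    h, w = img.shape
    ref = img[0:h - dy, 0:w - dx]      # reference pixels
    nbr = img[dy:h, dx:w]              # neighbours at the given offset
    m = np.zeros((levels, levels))
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1.0)  # count each (reference, neighbour) pair
    m = m + m.T                        # symmetric: count pairs in both directions
    return m / m.sum()


def glcm_features(p: np.ndarray) -> dict:
    """Common Haralick-style texture descriptors of a normalised GLCM."""
    i, j = np.indices(p.shape)
    return {
        "contrast": float((p * (i - j) ** 2).sum()),
        "homogeneity": float((p / (1.0 + (i - j) ** 2)).sum()),
        "energy": float(np.sqrt((p ** 2).sum())),
    }


# Hypothetical 4 x 4 leaf patch already quantized to 4 grey levels.
patch = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 2, 2, 2],
                  [2, 2, 3, 3]])
p = glcm(patch, levels=4)
print(glcm_features(p))
```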

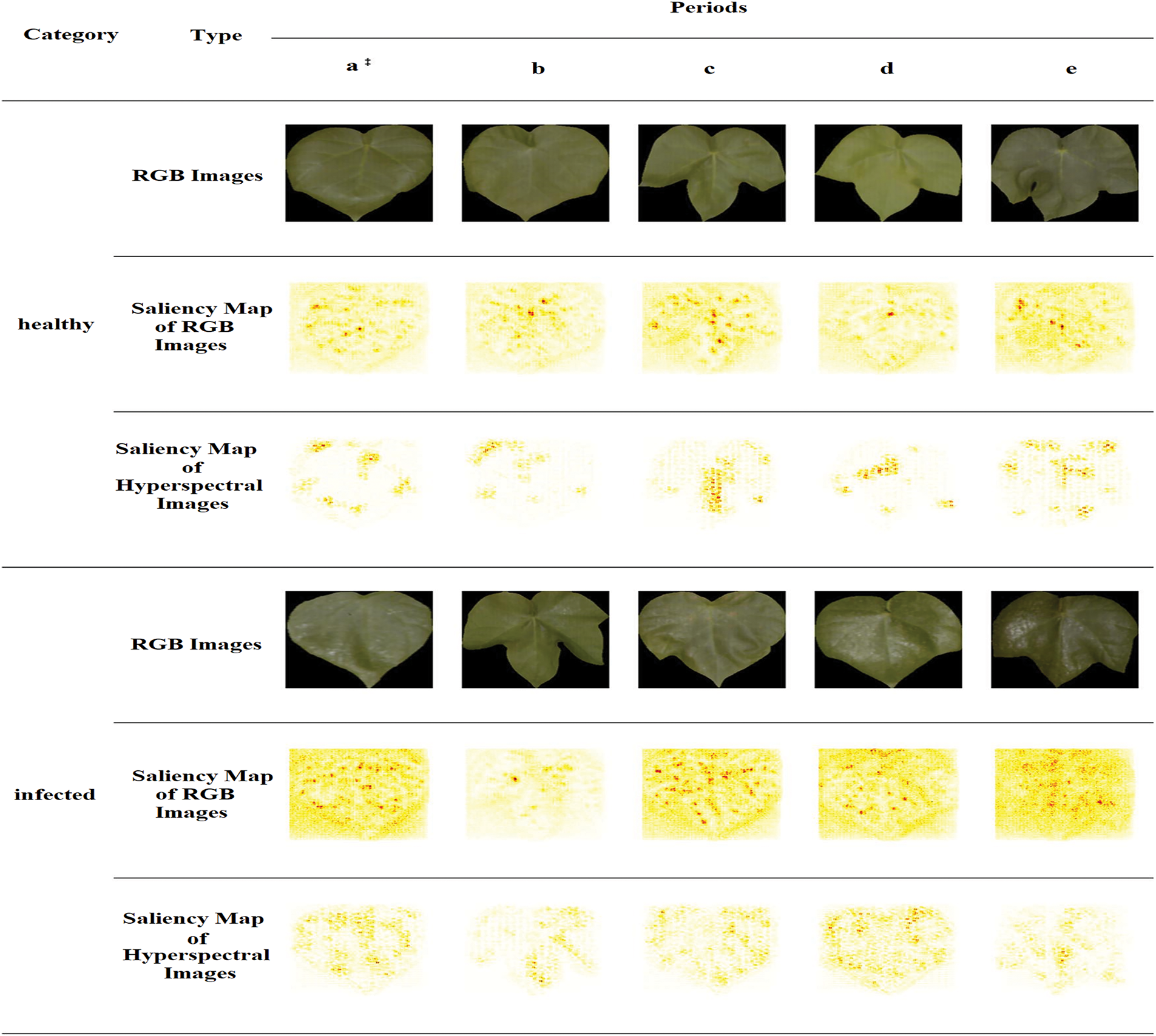

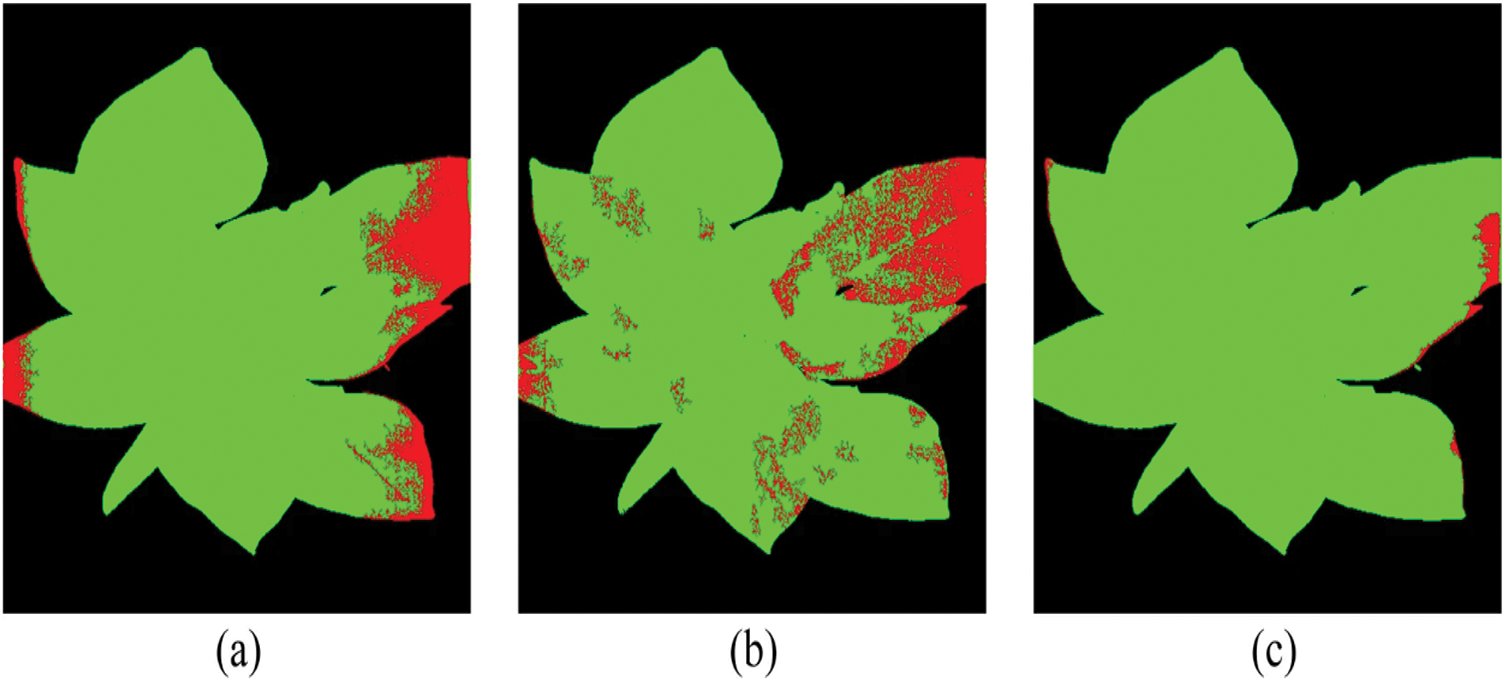

Several studies on plant pest and disease monitoring have employed deep learning designs based on hyperspectral imaging (HSI) to clearly visualise the symptoms caused by plant diseases and pest attacks. To classify hyperspectral images, a hybrid methodology combining deep convolutional neural networks (DCNN), logistic regression (LR), and principal component analysis (PCA) has been developed; it outperformed previous methods on classification tasks. In [144], a “3D-CNN” architecture identified charcoal rot disease in soybean plants from hyperspectral images collected at 240 wavelengths in the range of 383–1032 nanometers. A saliency map was applied to the trained model to visualise the most sensitive pixel locations, making the classification process easier to interpret. Moreover, in [145], CNNs were applied to visible/near-infrared (Vis/NIR) hyperspectral imaging systems (376–1044 nm) to detect aphid-attacked lesions in cotton leaves. The study compared RGB and HSI specimens head-to-head, as well as the proposed 1D-CNN and 2D-CNN, on a test set of RGB and hyperspectral images of healthy and infested leaves; Fig. 13 shows saliency maps for test samples of two classes over five periods, with the label of every sample predicted correctly. Overfitting was examined in [146] through a detailed comparison of deep learning methodologies: 1D- and 2D-CNN (2D-CNN performs better), “Long Short-Term Memory (LSTM)” and “Gated Recurrent Unit (GRU)” (both overfit), and “2D-CNN-LSTM/GRU” (which still overfits). Hence, a model combining a convolutional network with a “bi-directional gated recurrent network” (termed “2D-CNN-BidLSTM/GRU”) was applied to the HSI images to address overfitting, ultimately yielding an accuracy of 0.73 and an F1-score of 0.75 for wheat disease identification [147]. In [148], “OR-AC-GAN” (Outlier Removal Auxiliary Classifier Generative Adversarial Nets), a novel hyperspectral proximal-sensing method based on generative adversarial networks (GANs), detected infections in tomato plants before clinical disease signs manifested (Fig. 14). The green regions in the figure indicate healthy plant tissue, while the red areas highlight lesions. To identify diseases and pest-induced infections in tomato plants, [149] proposed a novel deconvolutional network for feature visualisation to examine the effects of colour and spectral information in images of tomato leaves. Another deep learning-based hybrid strategy, combining terahertz imaging with near-infrared hyperspectral imaging, was used to identify rice seeds resistant to bacterial blight.
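Principal component analysis, used in the hybrid DCNN, LR, and PCA pipeline mentioned above, compresses hundreds of correlated spectral bands into a few components before classification. The NumPy sketch below assumes a cube of shape (height, width, bands) and a hypothetical choice of three components; it illustrates the technique and is not the pipeline’s actual preprocessing.

```python
import numpy as np


def hyperspectral_pca(cube: np.ndarray, n_components: int):
    """Project every pixel spectrum of an (H, W, B) cube onto its first principal components.
    Returns the (H, W, n_components) score image and the explained-variance ratios."""
    h, w, b = cube.shape
    x = cube.reshape(-1, b).astype(float)   # one row per pixel, one column per band (a copy)
    x -= x.mean(axis=0)                     # centre each band
    _, s, vt = np.linalg.svd(x, full_matrices=False)
    scores = x @ vt[:n_components].T        # coordinates along the top components
    explained = s ** 2 / np.sum(s ** 2)
    return scores.reshape(h, w, n_components), explained[:n_components]


rng = np.random.default_rng(0)
cube = rng.random((10, 12, 240))  # hypothetical cube with 240 bands, as in [144]
scores, ratio = hyperspectral_pca(cube, 3)
print(scores.shape, ratio)
```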

Figure 13: Saliency maps of RGB and hyperspectral images: 2D CNN saliency maps are shown for RGB images and 3D CNN saliency maps for hyperspectral images [145]

Figure 14: Test results obtained on plants with the new “OR-AC-GAN”. “Tomato spotted wilt virus (TSWV)” pixels are marked red, and healthy pixels are marked green [148]

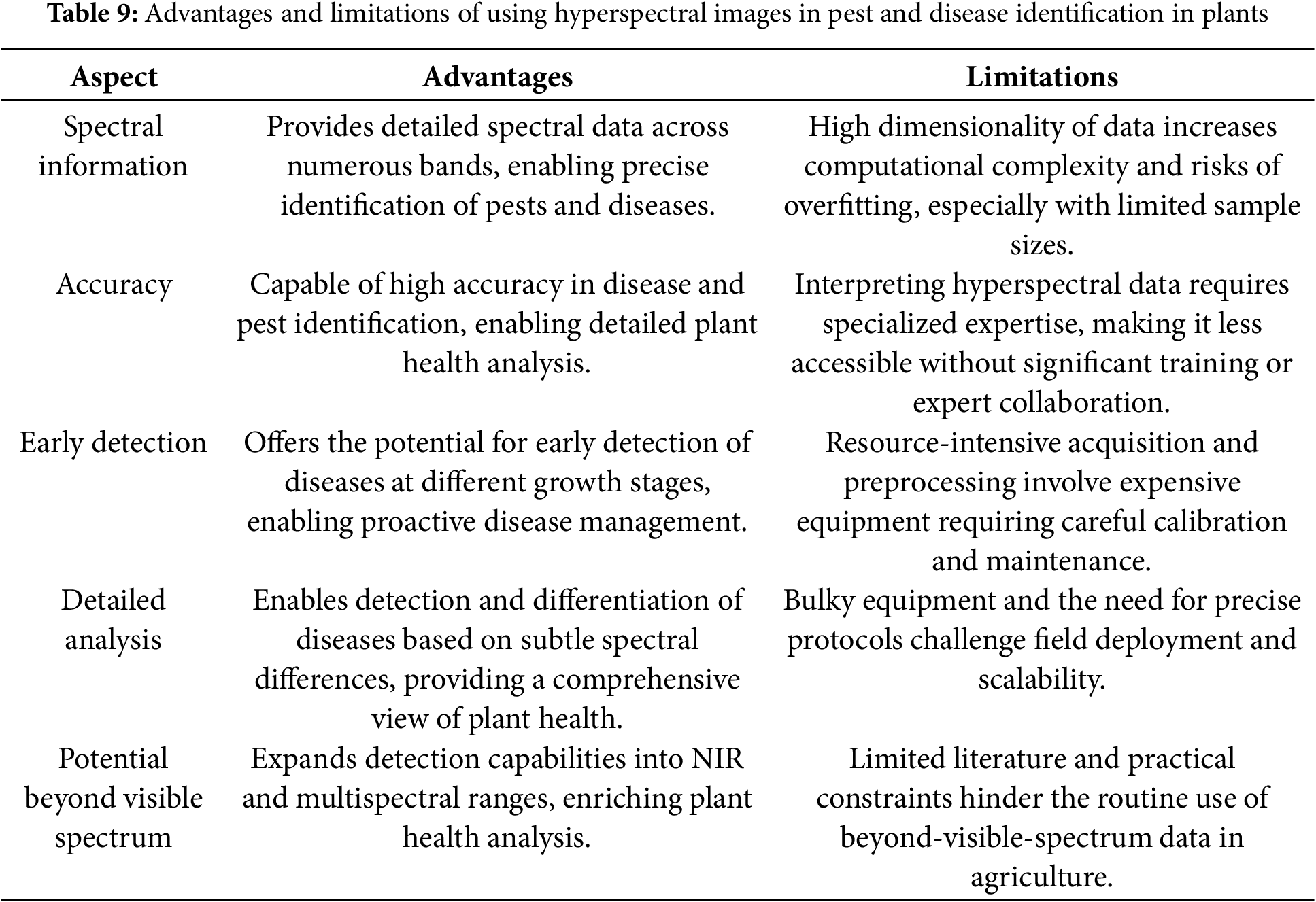

Despite the emergence of several HSI-based deep learning architectures for plant disease diagnosis, significant research gaps remain in handling environmental variability. Research such as [150] aims to make disease diagnosis more robust under challenging conditions, such as changes in illumination and spectral reflectance and occlusion in the field. Table 9 outlines the advantages and limitations of hyperspectral imaging in disease and pest detection in crops and plants.

Additionally, Table 10 provides a comprehensive summary of recent research using several deep learning architectures for the diagnosis of plant diseases and pests. This analysis reveals that ResNet, VGG, and DenseNet are the most widely used models, primarily because of their robustness across a diverse range of crop varieties and environmental conditions. MobileNet and its lightweight variants are preferred for real-time detection on edge devices. To increase generalizability, augmentation techniques such as flipping, rotation, and contrast enhancement are often employed. Notably, transformer-based methods are becoming increasingly accurate and powerful alternatives, particularly when complex symptoms or extensive field data must be modelled.

5 Research Challenges and Possible Solutions

In this section, we discuss research gaps and potential solutions for detecting plant pests and diseases. After thoroughly analysing each research work covered in this manuscript, we observed several major challenges:

• Dataset Size & Preprocessing Issue

• Early disease and pest attack detection

• Detection performance under the illumination and occlusion conditions

• Detection Speed

• Overfitting and Generalizability in Deep Learning

• Computational Challenges of Transformers

• Model Deployment Strategies for Real-World Agricultural Applications

• Integration of Deep Learning Models into Agricultural Pest Management Systems

Finally, the following subsection discusses the significant strides that deep learning has made in identifying plant diseases and pests:

• Recent Breakthroughs and Emerging Trends of Deep Learning in Plant Pest and Disease Detection

5.1 Dataset Size & Preprocessing Issue

Plant disease and pest identification is a specialised area of the agricultural domain, and the available plant disease and pest datasets for this task are not yet extensive or comprehensive enough. Self-collected datasets often lack the thoroughness, accessibility, scale, and labelling quality of publicly available datasets. For example, some plant diseases are rare, and collecting samples of affected plants is expensive and time-consuming. Because only a few training images are often available, the efficacy of deep learning for plant disease detection and pest recognition is restricted. Three types of solutions are discussed here:

5.1.1 Data Augmentation and Generation

Data augmentation is a major element of deep learning model training. Augmentation strategies enlarge the training data by adding modified copies of existing data.

Some popular data augmentation techniques used in the plant pest and disease detection domain are:

(a) Rotation: To replicate various orientations, rotate images by 90, 180, or 270 degrees, etc.

(b) Flipping: To create the illusion of mirror reflections, flip images vertically or horizontally.

(c) Scaling: Images can be scaled up or down to represent diverse distances or resolutions.

(d) Translation: To simulate various locations, translate images vertically or horizontally.

Data augmentation can substantially influence the effectiveness of deep learning models under varying illumination, occlusion, and other environmental conditions in several ways:

(i) Variations in Lighting:

• Colour jittering: By incorporating images with varying lighting conditions (such as brightness, contrast, and saturation), models may be trained to be invariant to lighting changes.

• Gamma correction: Applying gamma correction simulates various lighting situations, strengthening models against lighting fluctuations.

(ii) Occlusion:

• Random cropping: Models can learn to focus on pertinent features by simulating occlusions through random image cropping.

• Occlusion simulation: Overlaying shapes such as rectangles or polygons to mask parts of an image helps models learn to recognize partially hidden objects. Occlusion issues are discussed comprehensively in Section 5.3, “Detection Performance under the Illumination and Occlusion Conditions”.

(iii) Environmental Factors:

• Weather simulation: Models can learn to identify objects in various environmental situations by simulating weather conditions (such as rain, snow, and fog).

• Texture and pattern augmentation: Models can be trained to identify objects despite differences in surface appearance by adding images with various textures and patterns.
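Several of the augmentations listed above can be sketched directly on NumPy image arrays (height × width × channels, values in [0, 1]). The parameter ranges below are hypothetical; scaling and weather simulation are omitted for brevity, and libraries such as torchvision or Albumentations provide tested implementations of these transforms.

```python
import numpy as np


def rotate90(img, k):
    """Rotate by k * 90 degrees in the image plane."""
    return np.rot90(img, k, axes=(0, 1))


def flip(img, horizontal=True):
    return img[:, ::-1] if horizontal else img[::-1, :]


def translate(img, dy, dx):
    """Shift content by (dy, dx) pixels; uncovered pixels become 0. Requires |dy| < H, |dx| < W."""
    h, w = img.shape[:2]
    assert abs(dy) < h and abs(dx) < w
    out = np.zeros_like(img)
    src = img[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    out[max(0, dy):h + min(0, dy), max(0, dx):w + min(0, dx)] = src
    return out


def color_jitter(img, brightness, contrast):
    """Scale contrast around the mean, then add a brightness offset."""
    mean = img.mean()
    return np.clip((img - mean) * contrast + mean + brightness, 0.0, 1.0)


def gamma_correct(img, gamma):
    """gamma < 1 brightens, gamma > 1 darkens (for values in [0, 1])."""
    return np.clip(img, 0.0, 1.0) ** gamma


def random_erase(img, size, rng):
    """Occlusion simulation: zero out a random size x size square."""
    h, w = img.shape[:2]
    y = rng.integers(0, h - size + 1)
    x = rng.integers(0, w - size + 1)
    out = img.copy()
    out[y:y + size, x:x + size] = 0.0
    return out


def augment(img, rng):
    """Apply one random combination of the transforms above (hypothetical ranges)."""
    img = rotate90(img, rng.integers(0, 4))
    if rng.random() < 0.5:
        img = flip(img)
    img = translate(img, rng.integers(-8, 9), rng.integers(-8, 9))
    img = color_jitter(img, rng.uniform(-0.1, 0.1), rng.uniform(0.8, 1.2))
    img = gamma_correct(img, rng.uniform(0.7, 1.5))
    return random_erase(img, 16, rng)


rng = np.random.default_rng(42)
leaf = rng.random((64, 64, 3))
print(augment(leaf, rng).shape)  # (64, 64, 3)
```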

Data augmentation has several advantages. Here are some of the key benefits:

• Lowers the cost of collecting and labelling data.

• Improves the model’s prediction accuracy.

• Mitigates data scarcity, helping to achieve optimal results.

• Reduces the risk of overfitting.

• Increases data variability and model flexibility.

• Helps resolve class imbalance in classification.

Moreover, “Generative Adversarial Networks (GANs)” [151] and “Variational Autoencoders (VAEs)” [152] can generate synthetic samples to enrich plant disease and pest datasets.

To improve readability and facilitate reproducibility, Table 11 systematically presents the most popular preprocessing methods, describing their particular uses and citing studies that have applied them to detect pests and plant diseases.

5.1.2 The Use of Fine-Tuning and Transfer Learning

Transfer learning mitigates the limitations of small datasets. Its goal is to transfer knowledge learned from a large dataset to a smaller dataset of a roughly similar nature. Rather than learning all of a deep learning model’s parameters from scratch, transfer learning reuses previously learned parameters; this can be extremely helpful for accurately identifying plant diseases and pests, makes training the model more affordable, and enables a CNN to adapt to a small dataset. Transfer learning has the following benefits:

• Transfer learning increases learning speed.

• Transfer learning helps a deep learning model achieve higher performance and more accurate results.

Vallabhajosyula et al. employed a deep ensemble neural network (DENN) to detect plant infections, using transfer learning to optimise the model’s parameters and outperforming other state-of-the-art methods [153]. A transfer-learned model is proposed in [154] for detecting diseases and pests in hot peppers. Huang et al. applied a deep CNN to categorise eight species of tomato plant pests, where transfer learning effectively reduced the training time [155]. In [156], a transfer learning approach based on feature extraction is developed to identify pearl millet mildew, using transfer learning to address the problem of a small dataset.
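The feature-extraction style of transfer learning described above (for example, in [156]) can be sketched as follows: a pretrained backbone is kept frozen and only a small classifier head is trained on the target data. To stay self-contained, the sketch replaces the pretrained CNN with a hypothetical fixed random layer and uses synthetic two-class data; in practice, the backbone would be a network pretrained on a large dataset such as ImageNet.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a backbone pretrained on a large source dataset.
# Its weights are frozen: nothing below ever updates them.
BACKBONE_W = rng.normal(scale=1 / np.sqrt(20), size=(20, 32))


def extract_features(x):
    """Frozen feature extractor (a single ReLU layer here, a deep CNN in practice)."""
    return np.maximum(x @ BACKBONE_W, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_head(feats, y, lr=0.1, steps=300):
    """Train only a logistic-regression head on standardized frozen features."""
    mu, sd = feats.mean(axis=0), feats.std(axis=0) + 1e-8
    f = (feats - mu) / sd
    w, b = np.zeros(f.shape[1]), 0.0
    losses = []
    for _ in range(steps):
        p = sigmoid(f @ w + b)
        losses.append(-np.mean(y * np.log(p + 1e-12) + (1 - y) * np.log(1 - p + 1e-12)))
        grad = p - y  # gradient of the log-loss with respect to the logits
        w -= lr * f.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b, mu, sd, losses


# Small hypothetical target dataset: 100 samples per class.
X = np.vstack([rng.normal(-2.0, 1.0, size=(100, 20)), rng.normal(2.0, 1.0, size=(100, 20))])
y = np.r_[np.zeros(100), np.ones(100)]
backbone_before = BACKBONE_W.copy()
w, b, mu, sd, losses = train_head(extract_features(X), y)
pred = sigmoid(((extract_features(X) - mu) / sd) @ w + b) > 0.5
accuracy = float((pred == y).mean())
print(round(losses[0], 3), round(losses[-1], 3), accuracy)
```

Because only the head’s 33 parameters are trained, this setup needs far less data and compute than training the whole network, which is the practical benefit the cited studies exploit.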

5.1.3 Real-Time Detection Model Design

A real-time detection model can reduce the dataset requirements for plant pest and disease detection. For example, Fuentes et al. created a real-time deep learning architecture for identifying tomato plant diseases that takes environmental issues such as illumination effects and background and weather variations into account [157]. An optimized Inception-v3 architecture is demonstrated in [158] for identifying tomato diseases at early stages; using auxiliary classifiers and factorized convolutions, it achieves 96.4% accuracy on datasets gathered in the field. The model’s computational efficiency makes real-time deployment in precision agriculture possible (14.8 FPS on smartphones and 32.5 FPS on UAVs), and it outperforms conventional CNNs in speed and diagnostic reliability across a range of illumination conditions.

5.2 Early Disease and Pest Attack Detection

To sustain healthy production, it is also crucial to identify and treat plant diseases and pest lesions promptly. Research studies have identified several challenges in early-stage detection.

Small Lesion Area Detection

Repeated downsampling in deep neural networks can cause small lesions to be overlooked, and small lesion areas are particularly difficult to separate in low-resolution images. Additionally, background noise in the collected images may lead to false identification. Modern attention modules, including the Convolutional Block Attention Module (CBAM) combined with spatial transformers, have been shown to significantly improve object localization in agricultural images, which can help detect small-scale disease symptoms in crops [159]. The principle of the attention mechanism is to quickly identify the region of interest and ignore insignificant areas of the target image. A weighted sum with weighting coefficients is then applied to the features, suppressing background noise in the image. To form a new feature combination that reduces distortion, the softmax function is used to merge the weighted feature map with the initial feature map. In this way, the attention mechanism can produce a salient representation of the image that isolates small-scale objects and disregards the background. In [160], a Recurrent Neural Network (RNN) with an “attention mechanism” automatically detects lesion areas and extracts key characteristics for disease classification.
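The weighting steps described above can be illustrated with a simple softmax spatial-attention sketch: each location of a feature map receives a relevance score, the scores are normalised with softmax, and the resulting weights are used both for a weighted sum and to re-weight the original map. This is a simplified illustration, not CBAM itself (CBAM uses pooled descriptors and sigmoid gating); the scoring vector `query` is hypothetical and would be learned in a real model.

```python
import numpy as np


def spatial_softmax_attention(features: np.ndarray, query: np.ndarray):
    """features: (C, H, W) feature map; query: (C,) scoring vector.
    Returns attention weights (H, W), a weighted-sum context vector (C,),
    and the re-weighted feature map (C, H, W)."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    scores = query @ flat                          # one relevance score per spatial location
    scores = scores - scores.max()                 # numerical stability
    alpha = np.exp(scores) / np.exp(scores).sum()  # softmax: weights sum to 1
    context = flat @ alpha                         # weighted sum of location features
    # Scale by H*W so that a uniform attention map leaves the features unchanged.
    reweighted = features * alpha.reshape(1, h, w) * (h * w)
    return alpha.reshape(h, w), context, reweighted


# Toy map: a small "lesion" response at row 2, column 3 of channel 0.
fmap = np.zeros((4, 5, 6))
fmap[0, 2, 3] = 5.0
alpha, context, reweighted = spatial_softmax_attention(fmap, np.array([1.0, 0.0, 0.0, 0.0]))
peak = tuple(int(i) for i in np.unravel_index(alpha.argmax(), alpha.shape))
print(peak)  # (2, 3)
```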

5.3 Detection Performance under the Illumination and Occlusion Conditions

Environmental conditions, such as illumination and occlusion, also influence the detection accuracy.

Variable illumination significantly reduces the detection accuracy of deep learning models. Most research efforts use existing datasets containing images collected under predetermined lighting conditions, which simplifies the image processing stages; however, these images differ from those acquired in live field situations. Because natural light changes frequently, colour distortion sometimes occurs. Differences in angle and distance during image capture also affect image features, which can lead to poor visual recognition of pests and diseases.

The possible solutions are given below:

• Develop algorithms that adapt to changing illumination conditions by dynamically correcting colour and brightness variations in real time.

• Augment existing datasets with synthetic images generated under diverse lighting scenarios, providing the model with a more comprehensive understanding of varying illumination conditions.

• Explore the use of multi-spectral or hyperspectral imaging technologies, which can capture a broader spectrum of light and potentially mitigate the impact of fluctuations in natural light.

• Expand datasets with real images captured at varying illumination levels, enabling the model to learn and adapt to more diverse lighting conditions.
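One simple way to correct the colour casts caused by changing illumination is gray-world white balancing, which assumes that the average colour of a scene is neutral grey and rescales each channel accordingly. The NumPy sketch below is illustrative only, and the reddish cast is a hypothetical example: because field images are often dominated by green foliage, the gray-world assumption can itself distort colours, so learned or reference-based corrections may be preferable in practice.

```python
import numpy as np


def gray_world_balance(img: np.ndarray) -> np.ndarray:
    """img: (H, W, 3) RGB in [0, 1]. Scale each channel so that its mean
    equals the mean over all channels (the gray-world assumption)."""
    channel_means = img.reshape(-1, 3).mean(axis=0)
    gains = channel_means.mean() / np.maximum(channel_means, 1e-8)
    return np.clip(img * gains, 0.0, 1.0)


rng = np.random.default_rng(1)
scene = rng.random((8, 8, 3)) * 0.5
warm_cast = scene * np.array([1.4, 1.0, 0.7])  # hypothetical reddish lighting
balanced = gray_world_balance(warm_cast)
print(balanced.reshape(-1, 3).mean(axis=0))    # channel means are now equal
```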

Most research works in the plant pest and disease detection area have avoided the occlusion problem and rarely considered occluded images, so detection accuracy under occlusion is typically low. Yet occlusion occurs frequently in real field settings. Occlusions caused by branches, leaves, lighting, and other factors happen at random in various plant parts. Occlusion introduces overlapping noise and removes features from images, which directly reduces recognition accuracy and produces false or missed detections.

Advanced deep learning algorithms have significantly improved the diagnosis of plant diseases and pests under various environmental conditions, including occlusion [161]. Such studies make deep learning techniques more effective in natural settings. In the future, deep learning architectures should be further improved to reduce occlusion issues.

The potential fixes are given here:

• Utilizing multi-view imaging approaches involves capturing multiple perspectives of the same scene. By integrating images from various angles, the impact of occlusion is mitigated, enabling the model to obtain more comprehensive information about the target.

• Algorithms specialized in recognizing partially occluded objects improve feature identification despite obstructions.

• GANs can be employed to generate synthetic images that simulate occlusion scenarios.

• Incorporating temporal information from consecutive frames in video sequences can contribute to occlusion resolution. By analyzing the movement and interactions of objects over time, the model gains insights that help overcome occlusion challenges.

• Using depth sensors, such as lidar or stereo cameras, to provide 3D information and reduce the effects of occlusion.
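The multi-view approach in the first item can be realised with simple late fusion: a classifier scores each view separately and the per-view class probabilities are averaged, so a view in which the lesion is hidden is outvoted by views in which it is visible. The logits below are hypothetical, and equal view weights are an assumption; confidence-based or learned weights are common alternatives.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def fuse_views(view_logits, weights=None):
    """view_logits: (V, K) class logits from V views of the same plant.
    Returns the (weighted) mean of the per-view probabilities, shape (K,)."""
    probs = softmax(np.asarray(view_logits, dtype=float))
    weights = np.ones(len(probs)) if weights is None else np.asarray(weights, dtype=float)
    return (weights / weights.sum()) @ probs


# Classes: [healthy, diseased]. View 1 is occluded and leans "healthy";
# views 2 and 3 see the lesion.
logits = [[0.5, 0.0], [0.0, 2.0], [0.0, 1.5]]
fused = fuse_views(logits)
print(fused.round(3), int(fused.argmax()))
```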

Although deep learning is more accurate than conventional techniques in identifying diseases and pests, its high processing requirements may cause latency, which could delay prompt action in real field situations. Such delays are unacceptable for real-time applications. Speed can be increased by reducing computation (for example, by using smaller models), but often at the cost of accuracy. Effective models must therefore strike a careful balance between speed and accuracy.
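This speed-accuracy trade-off can be made explicit by measuring latency and then selecting the most accurate model that fits a latency budget; for example, a 25 FPS target leaves 1000 / 25 = 40 ms per frame. The sketch below is a minimal illustration under assumptions: `predict` stands for any hypothetical inference callable, and the list of (name, accuracy, latency) tuples would come from the user's own benchmarks.

```python
import time

def measure_latency_ms(predict, sample, warmup=3, runs=20):
    """Average wall-clock latency of predict(sample) in milliseconds."""
    for _ in range(warmup):  # discard first calls (caches, lazy initialisation)
        predict(sample)
    start = time.perf_counter()
    for _ in range(runs):
        predict(sample)
    return (time.perf_counter() - start) * 1000.0 / runs

def pick_model(candidates, max_latency_ms):
    """Return the most accurate candidate that meets the latency budget.

    candidates: iterable of (name, accuracy, latency_ms) tuples.
    Returns None if no candidate is fast enough.
    """
    feasible = [c for c in candidates if c[2] <= max_latency_ms]
    return max(feasible, key=lambda c: c[1]) if feasible else None
```

Latency should be measured on the target hardware, since timings from a workstation GPU say little about an embedded device.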

Plant disease and pest identification pipelines have three main stages:

• Data collection and labeling

• Model training

• Model interpretation

Real-time pest and disease detection relies heavily on model interpretability: understanding the justification behind predictions promotes trust and informed decision-making, and it also supports the primary objective of achieving high predictive accuracy in real-world agricultural applications. Therefore, more attention should be given to both the interpretability and the efficiency of models. In [162], a custom "CenterNet" architecture built on DenseNet-77 is proposed for classifying plant diseases; according to the experimental results, it outperforms standard deep learning models currently in use. Recent developments in explainable AI also make more transparent pest detection systems possible. In [163], a "Grad-CAM++ enhanced YOLOv5" architecture outperformed conventional CNNs in speed (28 FPS) and interpretability measures, achieving 96.2% classification accuracy while offering visual explanations of disease localization.
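The study in [163] uses Grad-CAM++, whose weighting scheme is more involved. To illustrate how such heatmaps are formed, the sketch below implements the simpler original Grad-CAM computation, not Grad-CAM++. It assumes that the feature maps of one convolutional layer and the gradients of the target class score with respect to them have already been extracted (for example, through framework hooks); the function name is hypothetical.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap for one convolutional layer.

    activations: feature maps A with shape (K, H, W).
    gradients:   dy_c/dA for the target class score y_c, same shape.
    Returns an (H, W) map scaled to [0, 1].
    """
    A = np.asarray(activations, dtype=np.float64)
    G = np.asarray(gradients, dtype=np.float64)
    weights = G.mean(axis=(1, 2))  # alpha_k: global-average-pooled gradients
    # Weighted sum over channels, then ReLU keeps positive evidence only
    cam = np.maximum(np.tensordot(weights, A, axes=1), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

In practice the (H, W) map is upsampled to the input resolution and overlaid on the leaf image to show which regions drove the prediction.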

5.5 Overfitting and Generalizability in Deep Learning for Agricultural Pest and Disease Detection

Although deep learning models can accurately classify plant diseases and pests, they often encounter two significant issues: overfitting and poor generalizability to real-world scenarios.

Overfitting:

Overfitting occurs when models learn noise and dataset-specific artifacts rather than generalizable patterns. Regarding the identification of plant diseases:

• Much research relies on curated datasets, such as PlantVillage, which contain high-quality, low-noise images captured under controlled conditions. When models trained on these datasets are presented with real-world images that have different backgrounds, lighting, occlusion, or environmental noise, they frequently perform poorly.

• When there is a shortage of labeled agricultural data, deep models like VGG16, ResNet-50, or DenseNet are susceptible to overfitting because of their high parameter counts.

Mitigation Strategies:

• Rotation, flipping, brightness modulation, and other data augmentation techniques are used to increase robustness.

• Overfitting can be decreased during training by utilising dropout layers, batch normalisation, and regularisation strategies.

• One popular method of addressing data scarcity is transfer learning from models pre-trained on large-scale datasets (such as ImageNet).
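The augmentation strategies listed above (rotation, flipping, brightness modulation) can be sketched with NumPy alone. This is a minimal illustration assuming 8-bit H x W x C images; the function name and the brightness range of 0.7 to 1.3 are hypothetical choices, not values from the cited studies. Dropout, batch normalisation, and transfer learning are normally configured inside a deep learning framework and are not shown.

```python
import numpy as np

def augment(image, rng):
    """Random 90-degree rotation, horizontal flip, and brightness scaling."""
    # Rotate in the height-width plane by 0, 90, 180, or 270 degrees
    out = np.rot90(image, k=int(rng.integers(0, 4)), axes=(0, 1))
    if rng.random() < 0.5:
        out = out[:, ::-1]  # horizontal flip
    factor = rng.uniform(0.7, 1.3)  # brightness modulation
    out = np.clip(out.astype(np.float32) * factor, 0, 255)
    return out.astype(np.uint8)
```

Calling `augment` with a fresh draw from the generator for every training batch yields a different variant of each image per epoch, which is what discourages the network from memorising dataset-specific artifacts.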

Generalizability:

Generalization refers to a model's ability to maintain its performance under unseen conditions, such as:

• Different crop species, geographic locations, and imaging modalities (e.g., smartphone images vs. hyperspectral images).

• Disease symptoms that vary with plant growth stage or environmental conditions (e.g., soil type, humidity).

Challenges:

• Deep learning models trained on region- or crop-specific datasets may not generalize to other crops or conditions.

• Many currently used models assume uniform input image quality and crop types.

Potential Solutions:

• Developing and sharing large, diverse, annotated datasets that cover various crops, disease stages, and conditions.

• Investigating domain adaptation and few-shot learning, which allow models to adapt to new domains with less labeled data.

• Exploring transformer architectures and attention mechanisms, which are promising because they can model complex features and long-range dependencies across varied scenarios, although they are still under investigation.
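One widely used few-shot technique is the prototypical network, which classifies a query by its distance to the mean embedding ("prototype") of each class's few labelled examples. The sketch below shows only this inference step; it assumes embeddings have already been produced by a pre-trained backbone and omits episodic training. The function name and the class labels in the usage are hypothetical.

```python
import numpy as np

def prototype_classify(support_emb, support_labels, query_emb):
    """Nearest-prototype classification as in prototypical networks.

    support_emb:    (N, D) embeddings of the few labelled examples.
    support_labels: (N,) class labels.
    query_emb:      (M, D) embeddings to classify.
    """
    support_emb = np.asarray(support_emb, dtype=np.float64)
    support_labels = np.asarray(support_labels)
    query_emb = np.asarray(query_emb, dtype=np.float64)
    classes = np.unique(support_labels)
    # Prototype = mean embedding of each class's support examples
    protos = np.stack([support_emb[support_labels == c].mean(axis=0) for c in classes])
    # Squared Euclidean distance from every query to every prototype
    d2 = ((query_emb[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    return classes[np.argmin(d2, axis=1)]
```

Adding a new disease class then only requires a handful of labelled images to form its prototype, with no retraining of the backbone.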

Future Research Directions: Several directions could benefit the field:

• Benchmarking models on real-world field datasets using criteria that go beyond accuracy (such as robustness and uncertainty).

• Investigating explainable AI strategies to understand why models fail in particular scenarios.

• Combining deep learning with expert-driven rules or multimodal sensing (visual and hyperspectral) to improve reliability.
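As an example of a criterion beyond accuracy, expected calibration error (ECE) measures how well a model's confidence matches its actual accuracy. The sketch below is one common formulation with equal-width confidence bins; the function name and the default of 10 bins are illustrative assumptions, and other uncertainty measures could serve equally well.

```python
import numpy as np

def expected_calibration_error(confidences, correct, n_bins=10):
    """Bin predictions by confidence, then average |accuracy - confidence|
    per bin, weighted by the fraction of samples that fall in the bin."""
    conf = np.asarray(confidences, dtype=np.float64)
    corr = np.asarray(correct, dtype=np.float64)  # 1 = correct, 0 = wrong
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (conf > lo) & (conf <= hi)
        if in_bin.any():
            gap = abs(corr[in_bin].mean() - conf[in_bin].mean())
            ece += in_bin.mean() * gap  # weight by bin population
    return ece
```

A model that reports 90% confidence but is right only 60% of the time on field images would show a large ECE even if its laboratory accuracy looked excellent.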

5.6 Computational Challenges of Transformers

The strong ability of transformer-based models to capture global features has recently drawn interest in agricultural image analysis. However, their application comes with certain limitations. When trained on large datasets, these models demand large memory footprints, longer training times, and substantial computational resources. It is critical to understand the resource implications and practical challenges of training and deploying these models in agricultural settings before exploring their potential. The main obstacles to training transformer models on large datasets are summarized below:

• High Computational Cost: The cost of self-attention in Vision Transformers (ViTs) grows quadratically with the number of input tokens (image patches), so ViTs demand more computational resources than CNNs. They are therefore demanding in terms of GPU memory, training duration, and energy usage.

• Data-Hungry Nature: Transformers frequently perform poorly on small or moderately sized datasets unless they are extensively augmented or pre-trained on large corpora (like ImageNet). Without enough data, they may overfit or fail to generalize, whereas CNNs, owing to built-in inductive biases such as locality, can perform reasonably well with less supervision.

• Training Complexity: Transformers are more difficult for inexperienced practitioners to optimize since they usually require longer convergence times and meticulous hyperparameter adjustment (such as learning rate warm-up, attention dropout, and positional encoding techniques).

• Limited Edge Deployment: Unless compressed or hybridized (e.g., using Tiny-LeViT or PMVT), most transformer models are unsuitable for edge devices or low-power situations due to their huge parameter sizes and longer inference times.

• Ongoing Research: Current research aims to reduce computational costs while maintaining accuracy by making transformers lighter and faster using token pruning, sparse attention, or hybrid CNN-transformer designs.
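Two of the points above can be checked with a little arithmetic. A ViT splits an image into patches, so a square image of side S with patch size P yields N = (S/P)^2 tokens, and the attention score and weighted-sum steps cost on the order of 2 * N^2 * d multiply-adds for embedding width d. The sketch below computes this count (ignoring projections and the MLP block) and also shows a linear learning-rate warm-up of the kind mentioned under training complexity. Function names, the 16-pixel patch, and the width of 768 (the ViT-Base setting) are assumptions for illustration; real schedules usually add a decay phase after warm-up.

```python
def vit_attention_cost(image_size, patch_size, dim):
    """Token count and approximate multiply-adds of one self-attention layer.

    Counts only Q K^T and the attention-weighted sum of V (2 * N^2 * d);
    the Q/K/V projections and the MLP block are ignored.
    """
    n_tokens = (image_size // patch_size) ** 2
    attn_macs = 2 * n_tokens ** 2 * dim
    return n_tokens, attn_macs

def warmup_lr(step, base_lr, warmup_steps):
    """Linear learning-rate warm-up to base_lr, then constant (no decay)."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    return base_lr
```

With 16-pixel patches, a 224 x 224 image gives 196 tokens, while 448 x 448 gives 784: doubling the resolution quadruples the token count and multiplies the attention cost by 16, which is why high-resolution field imagery quickly becomes expensive for plain ViTs.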

5.7 Model Deployment Strategies for Real-World Agricultural Applications

The practical implementation in actual agricultural settings presents a distinct set of challenges, even though model creation and accuracy metrics are essential to advancing research. High-performing models are necessary for successful deployment, but so is careful integration with the hardware and infrastructure limitations of agricultural settings. The adaptation of various model designs for deployment across edge devices, mobile-based applications, and centralized cloud platforms is examined in this section. We aim to bridge the gap between theoretical performance and real-world field utility by evaluating deployment feasibility, benefits, and constraints. This will provide recommendations for the practical implementation of AI-driven systems for monitoring plant diseases and pests.

Centralized Cloud-Based Deployment:

How it is used: Models hosted on cloud servers (e.g., AWS, Google Cloud, Azure) are accessible via APIs or mobile/web interfaces.

Benefits:

*High processing power for complex models (such as Swin Transformer and HvT).

*Simple scalability and integration with analytics tools and big datasets.

*Ideal for large-scale disease surveillance and teaching.

Limitations:

*Requires continuous internet access, which may not always be available in rural areas.

*Higher latency makes it unsuitable for field settings requiring real-time inference.

*Large-scale operations may raise concerns about data privacy and transfer costs.

Edge Computing (Smart Cameras, Embedded Systems, UAVs):

How it is used: Devices such as the Jetson Nano, Raspberry Pi, or field-deployed UAVs run lightweight models, including MobileNetV3 and Tiny-LeViT.

Benefits:

*Inference in real time without the need for connectivity.

*Lowers data transfer costs through local processing.

*Allows for precise spraying or targeted treatment.

Limitations:

*Model size and complexity are constrained by low memory and computing power.

*Requires hardware-aware model design (e.g., quantization, pruning).

*Rain, heat, and dust can degrade device performance.
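Quantization, one of the hardware-aware techniques listed above, stores weights as 8-bit integers instead of 32-bit floats, cutting memory by a factor of four. The sketch below shows plain affine (asymmetric) per-tensor quantization of a weight array; it is a simplified illustration, and deployment toolchains apply their own, more elaborate schemes. The function names are hypothetical.

```python
import numpy as np

def quantize_uint8(weights):
    """Affine 8-bit quantization of a float tensor.

    Returns uint8 codes plus the scale and zero point needed to dequantize.
    """
    w = np.asarray(weights, dtype=np.float32)
    # The range must include 0 so that zero is exactly representable
    w_min = min(float(w.min()), 0.0)
    w_max = max(float(w.max()), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # avoid zero scale for all-zero tensors
    zero_point = int(round(-w_min / scale))
    q = np.clip(np.round(w / scale) + zero_point, 0, 255).astype(np.uint8)
    return q, scale, zero_point

def dequantize_uint8(q, scale, zero_point):
    """Map uint8 codes back to approximate float values."""
    return (q.astype(np.float32) - zero_point) * scale
```

Each weight is recovered to within about half a quantization step, so the accuracy loss is usually small, although it should always be re-measured on the target device.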

Mobile Device-Based Implementation:

Deployment method: Models are converted into formats such as ONNX or TensorFlow Lite and then distributed through Android and iOS applications.

Benefits:

*Highly accessible to field technicians and farmers.

*Portable and easy to use, with built-in cameras.

*Uses preloaded models and operates offline.

Limitations:

*Limited inference accuracy and speed due to device constraints.

*For non-technical users, the design of the app must be intuitive.

*Results may vary depending on the lighting and camera quality.

5.8 Integration of Deep Learning Models into Agricultural Pest Management Systems

The usefulness of deep learning in agriculture depends on both model performance and the models' ability to be integrated into current frameworks for managing pests and diseases. By connecting data-driven diagnostics with decision-making tools, integration provides farmers, agronomists, and policymakers with actionable information. This section examines the main routes for incorporating deep learning models into agricultural processes, emphasizing their advantages, practical limitations, and strategic considerations for implementation.

Key Integration Pathways:

• Field Scouting and Mobile Apps: Farmers can use trained models integrated into mobile apps to help them with regular field inspections. For example, Android apps have used MobileNet-based models to identify tomato diseases in real time.

• Integration with Decision Support Systems (DSS): Model outputs can be fed into DSS platforms, which generate treatment recommendations based on the identified pest species and symptoms together with environmental factors and crop stage.

• Automated Monitoring Systems: Drones, IoT-enabled cameras, and robotic vehicles enable continuous monitoring of large farms, with geo-tagged, time-stamped detections supporting spatial analytics.

• Feedback Loop for Continuous Learning: Cloud systems integrate real-time detections to refine models, augment datasets, and generate region-specific detectors.

• Agricultural Extension Services: AI-assisted technologies can be utilised by governments and advisory programs to enhance outreach and provide smallholder farmers with timely diagnoses.
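The geo-tagged, time-stamped detections used by automated monitoring systems can be aggregated into a simple spatial grid to locate hotspots. The sketch below assumes a hypothetical `Detection` record (latitude, longitude, Unix timestamp, label) and counts detections per grid cell; the record layout, cell size, and labels are illustrative assumptions, not a format used by any specific system.

```python
import math
from collections import Counter
from dataclasses import dataclass

@dataclass
class Detection:
    # Hypothetical record emitted by a drone or IoT camera
    lat: float
    lon: float
    timestamp: float  # Unix seconds
    label: str

def grid_counts(detections, cell_deg=0.001, label=None):
    """Count detections per (lat, lon) grid cell, optionally for one label.

    cell_deg is the cell size in degrees (0.001 degree of latitude is about 111 m).
    """
    counts = Counter()
    for d in detections:
        if label is not None and d.label != label:
            continue  # skip other pests or diseases
        cell = (math.floor(d.lat / cell_deg), math.floor(d.lon / cell_deg))
        counts[cell] += 1
    return counts
```

Because a degree of longitude shrinks toward the poles, a degree-based grid is only approximate; a projected coordinate system would give equal-area cells. Filtering by `timestamp` before counting would add the temporal dimension.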

Advantages of Integration:

• Early Intervention: Timely detection makes early intervention possible, reducing yield loss and disease spread.

• Precision Intervention: Localized treatment reduces pesticide usage and environmental impact.

• Scalability: Adaptable to different farming scales, areas, and crops.

• Empowerment: Improves smallholder farmers’ and experts’ ability to make decisions.

• Data-Driven Insights: Enables centralized data aggregation for long-term monitoring and policy planning.

Limitations and Restrictions:

• Connectivity and Infrastructure: The utilization of cloud-based functionality and real-time updates is restricted in many rural locations due to inadequate internet connectivity.

• Hardware Restrictions: The limited processing power of smartphones and edge devices constrains the speed and complexity of the models.

• Data Security and Privacy: Centralized systems that handle user data need to make sure that privacy laws are followed.

• Model Generalization: When applied to unseen data from different locations or environmental conditions, many models perform poorly.

• User Adoption and Literacy: In low-resource environments, farmers may need training to use AI-powered products efficiently.

6 Recent Breakthroughs and Emerging Trends of Deep Learning in Plant Pest and Disease Detection

Recent developments have significantly enhanced the identification of plant pests and diseases using deep learning, yielding innovative solutions that improve precision, effectiveness, and scalability. Among the significant developments are:

• AI-Assisted Disease Identification in Tea Farming: In tea farming, researchers have used advanced YOLO models, including YOLOv10s, to accurately identify pests and diseases on tea leaves [164]. This method improves yield quality and reduces crop loss by facilitating early intervention.

• Frameworks for Multimodal Deep Learning: Integrating natural language processing with image processing improves pest detection accuracy. For example, TinyBERT combined with R-CNN and ResNet-18 has been used to analyse textual and visual data [165]. This multimodal strategy addresses some of the drawbacks of conventional CNN-based techniques.

• Software for Hybrid Image Segmentation and Machine Learning: Using video footage, tools such as "Plant Doctor" combine algorithms such as YOLOv8, DeepSORT, and DeepLabV3Plus to diagnose urban street plants autonomously [166]. This technology tracks and identifies individual leaves, evaluating their health and identifying bacterial, fungal, and insect damage.