Open Access

ARTICLE

Traffic Vision: UAV-Based Vehicle Detection and Traffic Pattern Analysis via Deep Learning Classifier

1 Department of Computer Science, College of Computer and Information Sciences, Jouf University, Sakaka, 72388, Saudi Arabia

2 Faculty of Computing and AI, Air University, E-9, Islamabad, 44000, Pakistan

3 Department of Information Systems, College of Computer and Information Sciences, Princess Nourah bint Abdulrahman University, Riyadh, 11671, Saudi Arabia

4 Department of Cyber Security, College of Humanities, Umm Al-Qura University, Makkah, 24382, Saudi Arabia

5 Department of Computer Sciences, Faculty of Computing and Information Technology, Northern Border University, Rafha, 91911, Saudi Arabia

6 Department of Computer Science and Engineering, College of Informatics, Korea University, Seoul, 02841, Republic of Korea

7 Department of Computer Engineering, Tech University of Korea, 237 Sangidaehak-ro, Siheung-si, 15073, Gyeonggi-do, Republic of Korea

* Corresponding Author: Jeongmin Park. Email:

(This article belongs to the Special Issue: Advances in Object Detection and Recognition)

Computers, Materials & Continua 2026, 86(3), 7 https://doi.org/10.32604/cmc.2025.071804

Received 12 August 2025; Accepted 26 September 2025; Issue published 12 January 2026

Abstract

This paper presents a unified Unmanned Aerial Vehicle-based (UAV-based) traffic monitoring framework that integrates vehicle detection, tracking, counting, motion prediction, and classification in a modular and co-optimized pipeline. Unlike prior works that address these tasks in isolation, our approach combines You Only Look Once (YOLO) v10 detection, ByteTrack tracking, optical-flow density estimation, Long Short-Term Memory-based (LSTM-based) trajectory forecasting, and hybrid Speeded-Up Robust Feature (SURF) + Gray-Level Co-occurrence Matrix (GLCM) feature engineering with VGG16 classification. Validated on the UAVDT and UAVID datasets, the framework achieved a detection accuracy of 94.2%, and it reached 92.3% detection accuracy in a real-time UAV field validation. Our comprehensive evaluations, including multi-metric analyses, ablation studies, and cross-dataset validations, confirm the framework’s accuracy, efficiency, and generalizability. These results highlight the novelty of integrating complementary methods into a single framework, offering a practical solution for accurate and efficient UAV-based traffic monitoring.

Traffic monitoring systems play a critical role in cities with continuously growing populations. They are essential for managing traffic congestion, assisting traffic law enforcement, supporting urban planning and infrastructure development, and enhancing road safety. While traditional traffic monitoring systems can be highly accurate at specific locations, they still suffer from limitations such as restricted coverage and high infrastructure cost. To overcome these limitations, Unmanned Aerial Vehicles (UAVs) have emerged as an alternative to traditional methods. UAV-based traffic monitoring systems offer several advantages: cost-effective, flexible, and dynamic monitoring; increased accuracy through real-time aerial imagery and video; and coverage of wider areas and different environments. However, UAV-based traffic monitoring systems face several key challenges, including high computational cost [1], changes of camera perspective [2], and difficulty detecting small or occluded objects [3]. In addition, real-time object detection on UAVs involves challenging design choices: selecting the right sensors to capture data, deciding between edge and cloud computing, and choosing a suitable detection algorithm [4].

The rapid growth of urban populations has underscored the critical need for effective traffic monitoring systems. Traditional methods, while accurate, are often limited by high infrastructure costs and restricted coverage. UAV-based traffic monitoring offers several advantages, such as flexibility, cost-efficiency, and the ability to monitor dynamic and expansive environments in real time. However, despite these benefits, many existing UAV-based traffic monitoring systems have typically focused on isolated tasks, such as vehicle detection, tracking, or classification. These systems often fail to integrate these tasks into a unified framework, limiting their applicability and performance in complex, real-world urban settings.

This work addresses this gap by proposing a unified UAV-based traffic monitoring framework that integrates multiple tasks into a single, co-optimized pipeline. By combining these functions, the framework ensures more accurate, efficient, and robust traffic monitoring in dynamic aerial environments. The novelty of this approach lies in the seamless integration and co-design of complementary modules, including preprocessing, detection, tracking, counting, prediction, and classification. We also introduce a hybrid feature engineering strategy that combines SURF and GLCM descriptors, along with Recursive Feature Elimination-Support Vector Machine (RFE–SVM) optimization, to enhance the robustness of aerial vehicle classification. Furthermore, cross-dataset evaluations confirm its strong generalization capability, underscoring the robustness of the system under diverse aerial conditions. The remainder of the paper is structured as follows: Section 2 reviews the literature on UAV-based traffic monitoring systems. Section 3 discusses the proposed framework and methodology. Section 4 presents the experimental setup and evaluation. Section 5 outlines the research limitations and challenges, while Section 6 concludes with key findings and suggestions for future research.

UAV-based traffic monitoring has rapidly evolved into a critical enabler of intelligent transportation systems, offering flexible deployment and large-area coverage in complex urban environments. Despite these advances, prior research has predominantly addressed isolated subtasks, such as detection, tracking, or classification, leaving many UAV-specific challenges unresolved.

2.1 Vehicle Detection in Aerial Imagery

Accurate detection underpins all downstream traffic analytics but remains a formidable task in UAV imagery, where occlusions, scale variations, and background clutter are common. Early handcrafted feature-based models lacked resilience to aerial complexities, paving the way for deep learning’s dominance. Srivastava et al. [5] surveyed detection methods, emphasizing the trade-off between accuracy and computational efficiency on UAV platforms. Pu et al. [6] further advanced urban detection by pairing CNN-based segmentation with pyramid pooling and Kalman filters, reaching over 93% accuracy in dense traffic [7]. Yet most detectors remain constrained by difficulties in segmenting visually complex scenes, differentiating small or partially occluded vehicles, and functioning as part of unified pipelines capable of integrating real-time tracking, counting, and classification. These limitations persist even as detection architectures evolve toward transformer-driven segmentation.

2.2 Advances in Vehicle Tracking

Tracking ensures temporal continuity, enabling trajectory analysis, predictive modeling, and a reliable understanding of vehicle behavior in aerial surveillance. UAVs amplify the inherent difficulties of multi-object tracking due to camera motion, motion blur, and frequent occlusions. Guido et al. [8] provided early UAV-based trajectory extraction through GPS-calibrated video processing. Chen et al. [9] improved identity preservation by combining YOLO-based detection with depth-aware features and re-identification algorithms, minimizing association errors in dynamic environments. Zhai et al. [10] underscored the value of physical modeling through a 3D vehicle–infrastructure dynamics framework. At the same time, Kelechi et al. [11] addressed UAV communication stability with MIMO beamforming, replacing mechanical steering for robust signal alignment. Despite these efforts, most tracking methods remain vulnerable to occlusion-heavy, high-density traffic, with frequent identity switches.

2.3 Vehicle Classification for Intelligent Traffic Systems

Vehicle classification is fundamental for predictive traffic management, enforcement, and intelligent urban planning. However, aerial classification remains constrained by occlusions, varying scales, and arbitrary viewpoints. Shokravi et al. [12] addressed some limitations through VANET-enabled systems that collect continuous kinematic data (e.g., type, speed, and direction), extending coverage beyond fixed sensor networks. Montanari et al. [13] demonstrated the capacity of high-resolution UAV imagery for precise vehicle type discrimination in surveillance contexts, while Meng and Tia [14] leveraged transfer learning with InceptionV3, ResNet101, and VGG16 to achieve recalls exceeding 96% on UAV datasets, validating deep learning’s effectiveness under limited data conditions. Yet existing models mostly rely on semantic features alone, so they struggle with occluded traffic and fail to generalize across diverse aerial environments.

3 Proposed Framework & Methodology

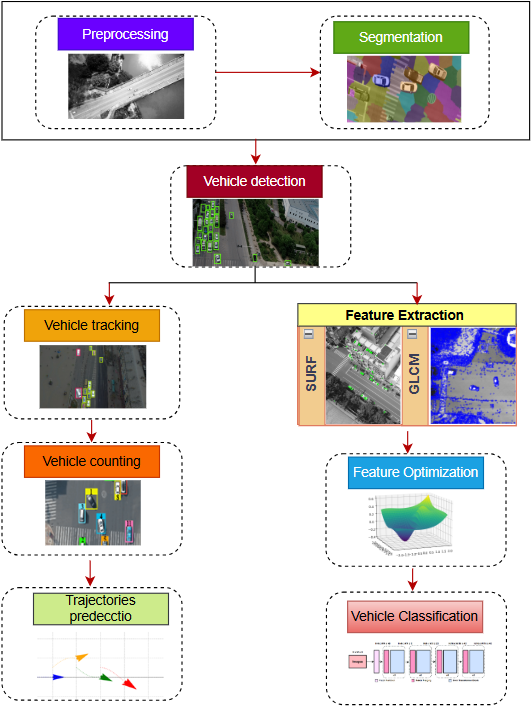

The proposed framework initiates with wavelet-based denoising to enhance image quality, followed by graph-cut segmentation for accurate vehicle isolation. The refined frames are then processed by YOLOv10 for precise vehicle detection, even under occlusion, with the resulting bounding boxes serving as inputs for both tracking and classification. ByteTrack ensures robust multi-object tracking by maintaining vehicle identities across frames, while its outputs also provide displacement vectors for optical-flow–based vehicle counting and temporal sequences for LSTM-driven trajectory prediction. In parallel, detected vehicle patches are passed to the SURF + GLCM feature extraction stage, where features are optimized via RFE–SVM and subsequently classified using a VGG16 network. As illustrated in Fig. 1, the modules are interconnected in a sequential yet resource-aware manner: intermediate detections and feature maps are reused across stages within GPU memory to minimize redundancy and ensure efficient execution. This design enables the framework to achieve high accuracy while remaining suitable for flexible, efficient, and real-time UAV-based traffic monitoring.

Figure 1: Overall architecture of the proposed UAV-based traffic monitoring framework

3.1 Noise-Resilient Preprocessing via Wavelet Denoising

Preprocessing is a crucial step in UAV-based traffic monitoring because aerial images are often degraded by noise from sensor limitations, atmospheric conditions, and motion distortion. To improve image quality while retaining significant structural information, wavelet transform-based denoising is used as the preprocessing step [15]. Unlike conventional filtering methods, which tend to blur fine details, it thresholds the high-frequency coefficients so that noise is suppressed with minimal loss of essential edge and texture information. Such high-frequency noise is common in aerial data because of UAV motion and compression. The outcome of the preprocessing step, showing enhanced image clarity and reduced noise, appears in Fig. 2. The denoising procedure has three steps. First, the input UAV image is decomposed into wavelet sub-bands by a discrete wavelet decomposition, which separates the low-frequency approximation coefficients from the high-frequency detail coefficients. Second, an adaptive thresholding algorithm is applied to the high-frequency coefficients to suppress noise while retaining important structural information. Third, the denoised image is reconstructed by applying the inverse wavelet transform to the retained coefficients. To enhance the thresholding process, we propose a new adaptive shrinkage function, which is given by:

where

Figure 2: Preprocessing via wavelet denoising (a) before and (b) after preprocessing

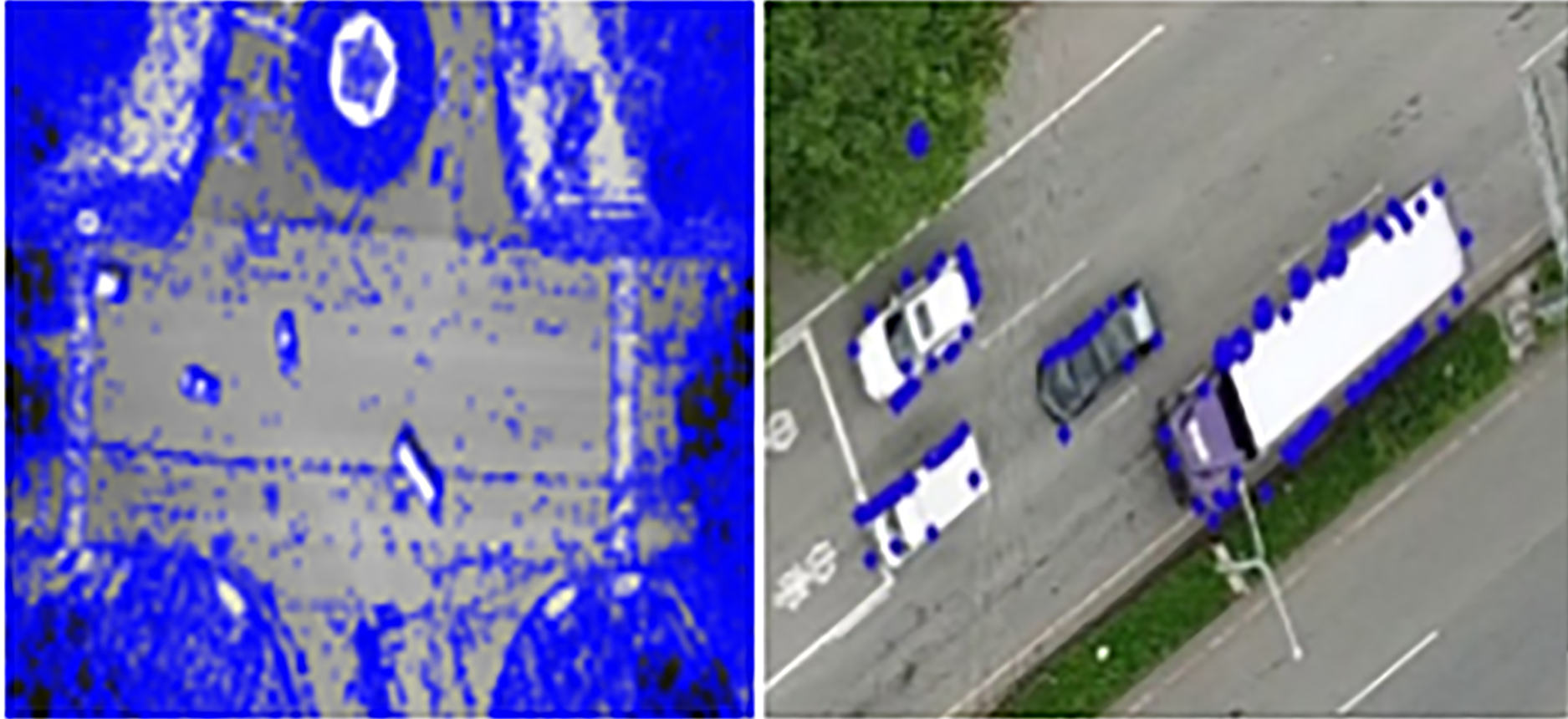
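The three denoising steps (decompose, threshold, reconstruct) can be illustrated with a minimal sketch. It assumes a single-level Haar wavelet applied to one image row and a standard soft-threshold rule with a fixed threshold `tau`; this is a stand-in for illustration only and is not the adaptive shrinkage function proposed in this work. All function names are hypothetical.

```python
import math

def haar_forward(signal):
    """One level of the orthonormal Haar transform (even-length input)."""
    s = 1.0 / math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal), 2)]
    return approx, detail

def haar_inverse(approx, detail):
    """Inverse of haar_forward: rebuild the signal from both sub-bands."""
    s = 1.0 / math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.append((a + d) * s)
        out.append((a - d) * s)
    return out

def soft_threshold(coeffs, tau):
    """Shrink each coefficient toward zero by tau (assumed fixed, not adaptive)."""
    return [math.copysign(max(abs(c) - tau, 0.0), c) for c in coeffs]

def denoise(signal, tau):
    """Decompose, threshold only the high-frequency detail band, reconstruct."""
    approx, detail = haar_forward(signal)
    return haar_inverse(approx, soft_threshold(detail, tau))

# One synthetic image row: a dark flat region, a sharp edge, a bright flat region.
row = [10.0, 10.4, 9.8, 10.1, 50.0, 50.3, 49.7, 50.2]
clean = denoise(row, tau=0.5)
```

In this toy row the small fluctuations inside each flat region are smoothed, while the large step between the fourth and fifth samples is carried by the approximation band and is left intact. A practical pipeline would use a 2D multi-level transform and a data-driven threshold.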

3.2 Precision Vehicle Segmentation via Graph Cut

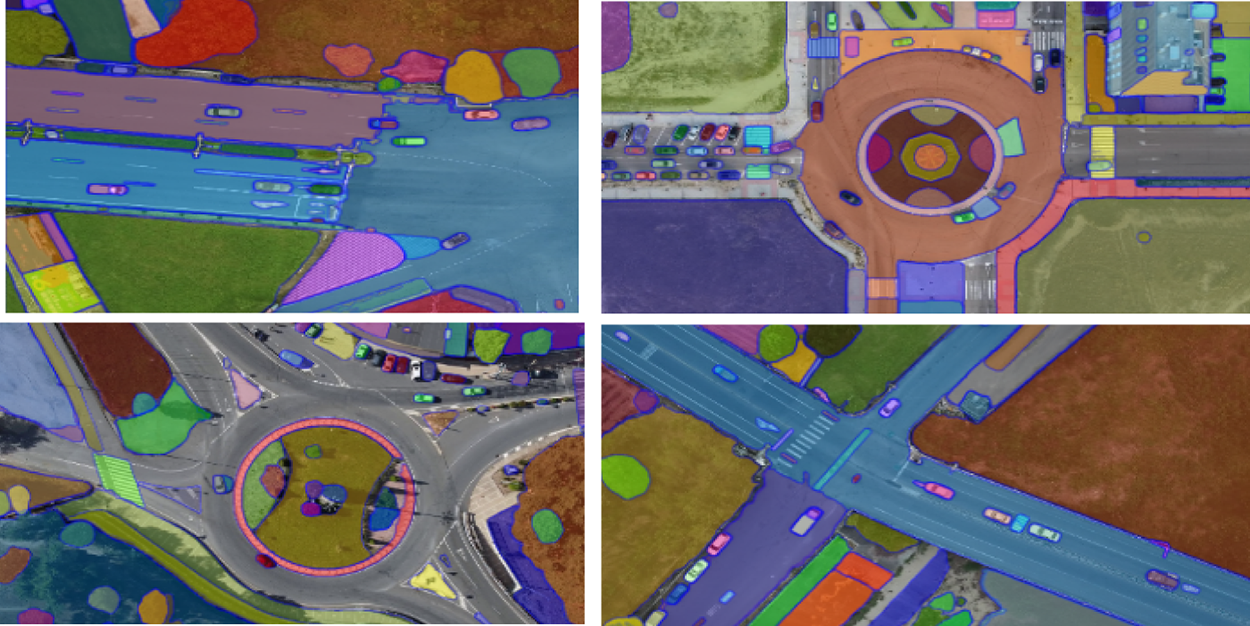

Graph cut segmentation is a crucial step in UAV-based traffic monitoring, ensuring precise isolation of vehicles from complex urban environments. By representing an image as a graph, where pixels act as nodes and their relationships are defined by weighted edges, this method formulates segmentation as an energy minimization problem. It effectively balances data fidelity, which preserves pixel characteristics, and smoothness constraints, which maintain spatial consistency. Unlike conventional methods, graph cut segmentation adapts well to varying lighting conditions, occlusions, and cluttered backgrounds, making it particularly effective for aerial imagery [16]. This approach refines object boundaries with high precision, leading to improved detection and tracking accuracy. The segmentation framework is formalized by constructing a graph G = (V, E), where V denotes the set of pixels and E comprises the edges that encapsulate the similarity between neighboring pixels. The energy function guiding the segmentation is expressed as:

$$\mathcal{E}(L) = \sum_{p \in V} \varphi(p, L_p) + \mu \sum_{(p,q) \in E} W_{pq}\, I(L_p \neq L_q)$$

In this equation, φ(p, Lp) represents the data term that quantifies the cost of assigning label Lp (vehicle or background) to pixel p; μ is a regularization parameter that balances the influence of the smoothness term; Wpq is a weight reflecting the similarity between adjacent pixels p and q; and I(Lp ≠ Lq) is an indicator function that penalizes label discontinuities between neighboring pixels. This energy minimization framework ensures that segmentation not only adheres to the statistical properties of the image but also maintains sharp and accurate boundaries for vehicle regions. The effectiveness of this approach is demonstrated in Fig. 3, which illustrates both the principle of the graph cut segmentation method and its application results on UAV imagery, highlighting precise vehicle isolation even in complex urban environments. In practice, our implementation applies graph cut on refined regions rather than full-resolution frames, thereby reducing its overhead. As validated by the efficiency analysis, this design maintains real-time suitability while providing improved boundary precision for downstream modules.

Figure 3: Graph cut-based segmentation for precise vehicle isolation
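To make the roles of the data term, the smoothness term, and μ concrete, the following sketch evaluates a segmentation energy of the form "sum of per-pixel label costs plus μ times the weighted number of label changes between neighbors" on a four-pixel strip. The costs, weights, and function names are hypothetical. The minimum is found by brute force over all labelings, which is only feasible for tiny examples; an actual graph cut obtains the same minimum with a max-flow/min-cut solver.

```python
import itertools

def segmentation_energy(data_cost, labels, edges, weights, mu):
    """Data term plus mu times the weighted count of label discontinuities."""
    data_term = sum(data_cost[p][labels[p]] for p in range(len(labels)))
    smooth_term = sum(w for (p, q), w in zip(edges, weights)
                      if labels[p] != labels[q])
    return data_term + mu * smooth_term

def brute_force_minimum(data_cost, edges, weights, mu):
    """Exhaustively search all binary labelings (illustration only)."""
    n = len(data_cost)
    return min(itertools.product((0, 1), repeat=n),
               key=lambda labels: segmentation_energy(
                   data_cost, labels, edges, weights, mu))

# Four pixels in a row; data_cost[p] = [cost of background, cost of vehicle].
data_cost = [[0.1, 0.9], [0.6, 0.4], [0.8, 0.2], [0.9, 0.1]]
edges = [(0, 1), (1, 2), (2, 3)]      # neighboring pixel pairs
weights = [1.0, 1.0, 1.0]             # similarity weights for each pair

weak_smoothing = brute_force_minimum(data_cost, edges, weights, mu=0.5)
strong_smoothing = brute_force_minimum(data_cost, edges, weights, mu=2.0)
```

With weak smoothing the first pixel keeps its background label, giving one boundary; with strong smoothing the boundary becomes too expensive and the whole strip is labeled as vehicle. This is the trade-off that μ controls.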

3.3 High Accuracy Vehicle Detection via YOLOv10

After segmentation, the refined image

where

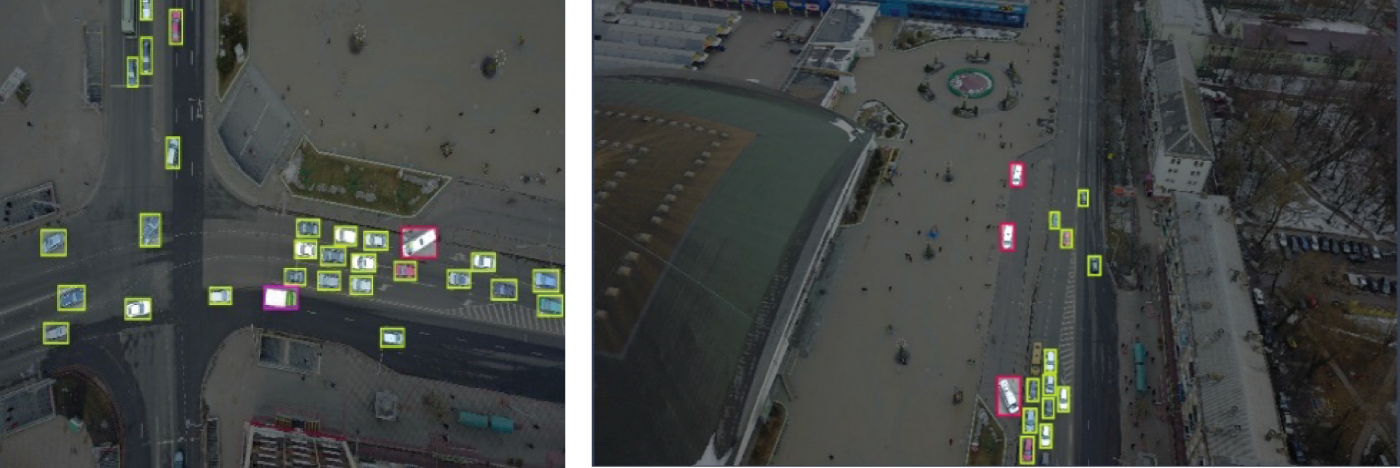

Figure 4: Vehicle detection via YOLOv10 over aerial images

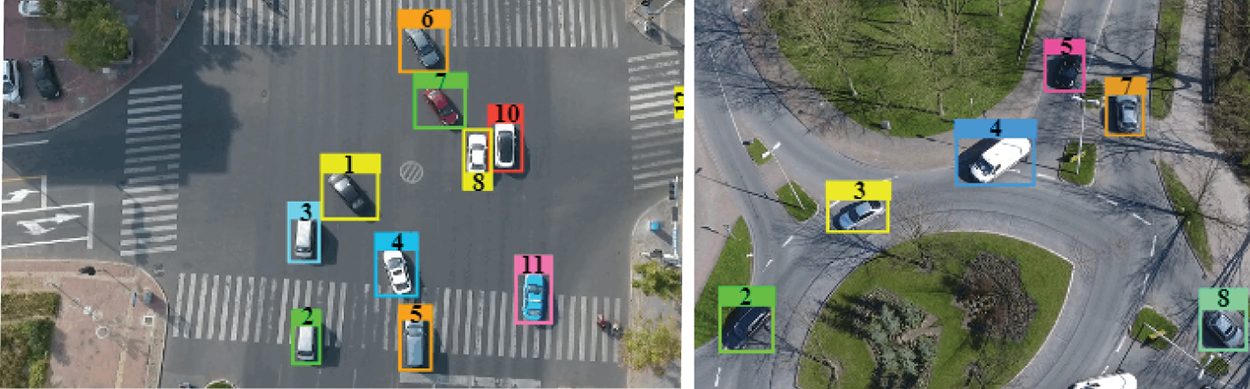

3.4 Identity-Preserving Tracking Using ByteTrack

Following the detection stage, the framework employs a robust tracking module to maintain consistent vehicle identities across successive frames, which is critical for reliable trajectory analysis in UAV-based traffic monitoring. Leveraging ByteTrack, a state-of-the-art multi-object tracking algorithm, the system integrates motion prediction with appearance-based association to manage challenges such as occlusion, abrupt maneuvers, and detection uncertainties [18]. This module continuously updates vehicle trajectories by correlating spatial and visual features extracted from segmented and detected regions, ensuring that each vehicle is persistently tracked over time. The tracking performance, which underscores the system’s capability to sustain accurate vehicle identities in complex aerial scenarios, is illustrated in Fig. 5. The tracking process is formalized by an association strategy that jointly minimizes spatial discrepancies and appearance dissimilarities between predicted tracks and current detections. For a given frame t, the updated tracking state T_{t+1} is determined as follows:

Figure 5: ByteTrack-based vehicle tracking in dense traffic scenes

Here, for each pair (Ti, Dj), the distance term quantifies the spatial distance between the predicted track Ti and the detection Dj, the similarity term represents the score based on appearance features, and λ is a balancing coefficient that modulates the influence of spatial vs. visual cues. This formulation ensures a robust association, thereby minimizing mismatches and enhancing tracking reliability in dynamic UAV-captured traffic scenes. ByteTrack exhibited occasional identity switches and short track fragmentations under extreme occlusion. These occurrences were infrequent and did not substantially affect the aggregate tracking metrics, likely because segmentation refinement and high-precision detections reduced spurious associations.

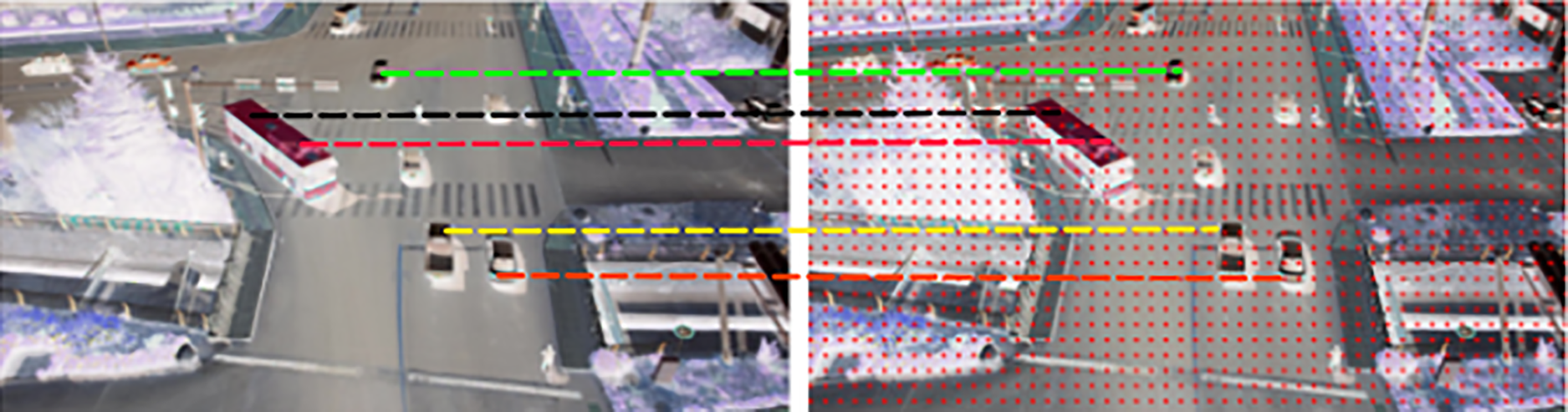
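The idea of combining spatial distance and appearance similarity through a balancing coefficient λ can be sketched as follows. The cost form used here, center distance plus λ times (1 minus cosine similarity), is an assumption made for illustration, as are all names and data; it is neither the exact formulation of this work nor the ByteTrack reference implementation, which associates boxes by IoU in two confidence-based stages. The assignment is found by brute force, which is adequate only for a handful of objects.

```python
import itertools
import math

def centre_distance(a, b):
    """Euclidean distance between two box centers."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

def cosine_similarity(u, v):
    """Appearance similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm

def associate(tracks, detections, lam):
    """Return the track-to-detection pairs minimizing the summed cost.

    Assumes len(tracks) <= len(detections).
    """
    cost = [[centre_distance(t["centre"], d["centre"])
             + lam * (1.0 - cosine_similarity(t["feature"], d["feature"]))
             for d in detections] for t in tracks]
    best_perm, best_cost = None, math.inf
    for perm in itertools.permutations(range(len(detections)), len(tracks)):
        total = sum(cost[i][j] for i, j in enumerate(perm))
        if total < best_cost:
            best_perm, best_cost = perm, total
    return list(enumerate(best_perm)), best_cost

# Two nearby vehicles whose detections are spatially ambiguous.
tracks = [{"centre": (10.0, 10.0), "feature": (1.0, 0.0)},
          {"centre": (12.0, 10.0), "feature": (0.0, 1.0)}]
detections = [{"centre": (11.0, 10.0), "feature": (0.0, 1.0)},
              {"centre": (11.2, 10.0), "feature": (1.0, 0.0)}]

spatial_only, _ = associate(tracks, detections, lam=0.0)
with_appearance, _ = associate(tracks, detections, lam=5.0)
```

With λ = 0 the matcher follows proximity alone and swaps the two identities; with a larger λ the appearance term resolves the ambiguity. For realistic numbers of vehicles, the brute-force search would be replaced by the Hungarian algorithm.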

3.5 Motion-Adaptive Optical Flow-Based Vehicle Counting

After successful vehicle tracking, the next stage in our framework is vehicle counting, which provides quantitative insights into traffic density and flow dynamics. This stage harnesses an optical flow-based approach to capture the subtle motion characteristics of vehicles across sequential frames. By analyzing the displacement vectors of tracked vehicles, the system distinguishes meaningful movements from spurious noise, thereby accurately enumerating vehicles as they traverse a specified monitoring zone. This method not only accounts for vehicles entering and exiting the scene but also mitigates errors caused by environmental disturbances and background motion. The effectiveness of this counting mechanism, integrated into our overall framework, is demonstrated in Fig. 6. The vehicle counting process is mathematically formalized through a novel thresholding strategy that evaluates the average displacement of each vehicle track over a fixed number of frames. Specifically, the vehicle count C is computed as:

Figure 6: Vehicle counting through optical flow-based motion analysis

here, N represents the total number of candidate vehicle tracks,
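A minimal sketch of displacement-based counting is given below, under stated assumptions: each track is a list of (x, y) centers, a track is counted only if it covers a full window of frames, and its mean per-frame displacement must exceed a fixed threshold. The threshold, window length, and function names are hypothetical and are not the values used in this work.

```python
import math

def mean_displacement(track):
    """Average per-frame displacement magnitude of a track [(x, y), ...]."""
    steps = [math.hypot(x1 - x0, y1 - y0)
             for (x0, y0), (x1, y1) in zip(track, track[1:])]
    return sum(steps) / len(steps) if steps else 0.0

def count_vehicles(tracks, threshold, window):
    """Count tracks whose mean displacement over the last `window` frame
    transitions exceeds `threshold`; shorter tracks are ignored."""
    count = 0
    for track in tracks:
        recent = track[-(window + 1):]
        if len(recent) == window + 1 and mean_displacement(recent) > threshold:
            count += 1
    return count

tracks = [
    [(0.0, 0.0), (3.0, 0.0), (6.0, 0.0), (9.0, 0.0)],   # steadily moving vehicle
    [(5.0, 5.0), (5.2, 5.0), (5.0, 5.0), (5.1, 5.0)],   # jitter from background motion
    [(0.0, 0.0), (4.0, 0.0)],                           # too short to evaluate
]
vehicle_count = count_vehicles(tracks, threshold=1.0, window=3)
```

Only the first track is counted: the second moves less than the threshold on average, and the third does not span the full window.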

3.6 LSTM-Driven Vehicle Trajectories Prediction

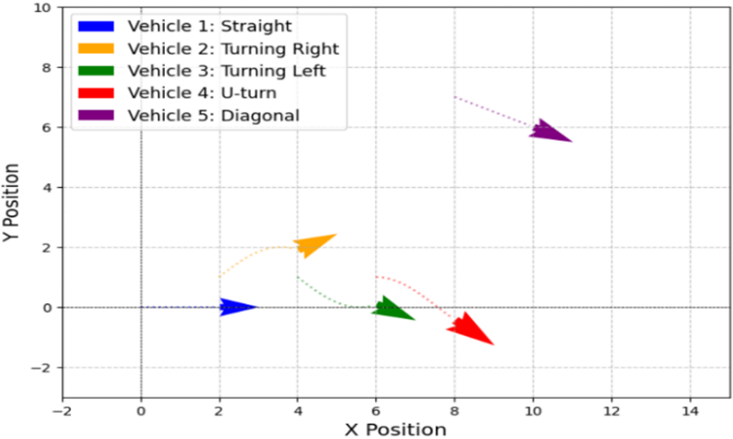

Trajectory prediction plays a pivotal role in forecasting the future motion paths of vehicles. Leveraging the temporal sequence of vehicle positions obtained from the tracking module, this step employs a Long Short-Term Memory (LSTM) network to capture both short-term and long-term motion dynamics. The LSTM-based model assimilates historical positional data and inherent motion patterns to predict the vehicles’ future locations, thereby enabling proactive traffic management and collision avoidance in dynamic urban environments. The predictive capability of this module not only enhances situational awareness but also supports strategic planning. The predicted vehicle trajectories are shown in Fig. 7, where vehicles are marked with a cursor for better visualization. The trajectory prediction process is formalized by modeling the future position y_{t+1} as a function of the current position y_t and an internal hidden state h_t that summarizes prior motion information:

$$y_{t+1} = f(y_t, h_t; \Theta)$$

Figure 7: Vehicle trajectories analysis graph

In this equation, yt represents the vehicle’s current spatial coordinates, ht embodies the temporal dependencies learned from historical data, and Θ denotes the trainable parameters of the LSTM network. The LSTM is applied as a short-term, step-by-step predictor that refreshes with each new frame, ensuring reliable near-term forecasts while avoiding long-horizon error accumulation.
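The step-by-step use of the predictor can be sketched with a single LSTM cell written in plain Python. Everything here is an illustrative assumption: the hidden size, the random untrained weights, the linear read-out that adds a predicted displacement to the current position, and all names. Because the weights are untrained, the output shows only the data flow (position and hidden state in, next position out) and not a meaningful forecast.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def lstm_step(y_t, h_prev, c_prev, params):
    """One LSTM step: input, forget, output gates and candidate from [y_t, h_prev]."""
    z = y_t + h_prev  # list concatenation of input and previous hidden state
    gates = {}
    for name in ("i", "f", "o", "g"):
        pre = [a + b for a, b in zip(matvec(params["W_" + name], z),
                                     params["b_" + name])]
        gates[name] = [math.tanh(p) if name == "g" else sigmoid(p) for p in pre]
    c_t = [f * c + i * g for f, c, i, g in
           zip(gates["f"], c_prev, gates["i"], gates["g"])]
    h_t = [o * math.tanh(c) for o, c in zip(gates["o"], c_t)]
    return h_t, c_t

def predict_next(y_t, h_t, params):
    """Assumed read-out: current position plus a linear function of h_t."""
    delta = [a + b for a, b in zip(matvec(params["W_out"], h_t), params["b_out"])]
    return [y + d for y, d in zip(y_t, delta)]

def init_params(input_dim, hidden_dim, seed=0):
    """Small random weights; a trained model would load learned values instead."""
    rng = random.Random(seed)
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    params = {}
    for name in "ifog":
        params["W_" + name] = mat(hidden_dim, input_dim + hidden_dim)
        params["b_" + name] = [0.0] * hidden_dim
    params["W_out"] = mat(input_dim, hidden_dim)
    params["b_out"] = [0.0] * input_dim
    return params

params = init_params(input_dim=2, hidden_dim=4)
h, c = [0.0] * 4, [0.0] * 4
track = [[0.0, 0.0], [1.0, 0.5], [2.0, 1.0]]   # tracked positions, one per frame
for y in track:                                 # refresh the state with each frame
    h, c = lstm_step(y, h, c, params)
y_next = predict_next(track[-1], h, params)     # one-step-ahead forecast
```

Calling `lstm_step` once per incoming frame and predicting only one step ahead mirrors the short-horizon usage described above, where each new observation refreshes the state instead of the model rolling forward on its own predictions.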

3.7 Hybrid Feature Engineering for Robust Classification

Feature extraction transforms raw measurements into meaningful data for deeper analysis. We adopt a hybrid approach that uses SURF for precise edge detection and the Gray-Level Co-occurrence Matrix (GLCM) for detailed texture analysis. These descriptors are necessary for improving classification accuracy and for supporting reliable tracking even under difficult conditions such as varying scales and viewing angles.

3.7.1 SURF-Based Keypoint Feature Extraction

SURF (Speeded-Up Robust Feature) is a robust and efficient method for generating distinctive image features. SURF not only speeds up keypoint detection but also provides invariance to scale, rotation, and moderate illumination changes, which are important properties for precisely describing vehicles in dynamic UAV-acquired scenes. SURF accomplishes this by finding interest points and encoding their local orientations into robust descriptors. The improved SURF response is given as:

here,

Figure 8: SURF-based keypoint feature extraction

3.7.2 Gray-Level Co-Occurrence Matrix (GLCM) Feature Extraction

GLCM is a statistical method that characterizes texture by analyzing the spatial relationships between pixel intensities in an image. For drone-based traffic monitoring, it is important for distinguishing subtle textural patterns of vehicles and their surroundings, which are often obscured by changing illumination and complex urban landscapes. By counting how often particular intensity pairs occur at a predetermined offset, GLCM yields key descriptors such as contrast, homogeneity, energy, and correlation that help discriminate vehicle surfaces from the background. These texture descriptors strengthen the overall feature extraction pipeline and complement the other methods in the system. The results of this extraction are shown in more detail in Fig. 9. A compact formula that summarizes GLCM-based texture analysis is the contrast measure, given by:

$$\text{Contrast} = \sum_{i}\sum_{j} (i - j)^2 \, P(i, j)$$

Figure 9: Texture feature extraction using Gray-Level Co-occurrence Matrix (GLCM)

In the above equation, P(i, j) represents the normalized probability of pixel pairs with intensities i and j occurring at a specified spatial relationship. This short formulation effectively captures the intensity variation and texture complexity within the image, providing a robust metric that reinforces vehicle feature discrimination.
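As a worked illustration of the contrast measure, the sketch below builds a normalized co-occurrence matrix P(i, j) for a given pixel offset and then sums (i − j)² P(i, j). The tiny images, the number of gray levels, and the function names are hypothetical.

```python
def glcm(image, dx, dy, levels):
    """Normalized co-occurrence matrix P(i, j) for pixel pairs offset by (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
                total += 1
    return [[n / total for n in row] for row in counts]

def contrast(P):
    """Sum of squared intensity differences weighted by pair probability."""
    return sum((i - j) ** 2 * p
               for i, row in enumerate(P) for j, p in enumerate(row))

flat = [[1, 1, 1],
        [1, 1, 1]]            # uniform surface, e.g. smooth road
stripes = [[0, 3, 0],
           [3, 0, 3]]         # strongly alternating texture

flat_contrast = contrast(glcm(flat, dx=1, dy=0, levels=4))
stripe_contrast = contrast(glcm(stripes, dx=1, dy=0, levels=4))
```

The uniform patch has zero contrast because every horizontal pair has equal intensities, whereas the striped patch has a contrast of 9, since every pair differs by three gray levels.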

3.8 Optimized Feature Selection via RFE-SVM

To boost the discriminative capability of the UAV-based traffic monitoring system, we optimize the combined SURF and GLCM feature set. After feature extraction, redundant or less informative features are eliminated using Recursive Feature Elimination (RFE) with a Support Vector Machine (SVM) classifier. This procedure iteratively ranks features by their impact on the SVM margin. The optimization ensures that the most important features, those that distinguish vehicles within a category in complex aerial scenes, are retained for further analysis, which reduces computational complexity and improves discrimination. In our implementation, the importance of each feature is measured by the absolute value of its SVM weight. Let wi be the weight of the ith feature. The feature selection criterion is then given as:

$$c_i = |w_i|$$

At each iteration, the feature with the smallest |wi| is eliminated, and the SVM is retrained on the reduced feature set. Although we do not provide explicit numerical quantification of redundancy, the improvement in system accuracy after RFE-SVM pruning confirms that the optimization step effectively mitigates overlapping features introduced by SURF and GLCM.
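The elimination loop can be sketched as follows. The linear SVM here is a simple hinge-loss subgradient trainer without a bias term, written only to keep the example self-contained; the hyperparameters, the toy data, and the function names are assumptions, and a practical implementation would typically rely on an established SVM library.

```python
def train_linear_svm(X, y, epochs=50, lr=0.1, lam=0.01):
    """Hinge-loss subgradient descent for a linear SVM (no bias term)."""
    w = [0.0] * len(X[0])
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            w = [wj * (1.0 - lr * lam) for wj in w]        # L2 shrinkage
            if margin < 1.0:                               # inside the margin
                w = [wj + lr * yi * xj for wj, xj in zip(w, xi)]
    return w

def rfe(X, y, n_keep):
    """Repeatedly drop the feature with the smallest |w_i| and retrain."""
    active = list(range(len(X[0])))
    eliminated = []
    while len(active) > n_keep:
        X_active = [[row[j] for j in active] for row in X]
        w = train_linear_svm(X_active, y)
        worst = min(range(len(active)), key=lambda k: abs(w[k]))
        eliminated.append(active.pop(worst))
    return active, eliminated

# Toy data: feature 0 separates the classes strongly, feature 1 is a weaker
# copy of it, and feature 2 is constant and carries no information.
X = [[2.0, 0.5, 0.0],
     [3.0, 0.75, 0.0],
     [-2.0, -0.5, 0.0],
     [-4.0, -1.0, 0.0]]
y = [1, 1, -1, -1]

kept, dropped = rfe(X, y, n_keep=1)
```

On this toy set the constant feature is removed first, followed by the weaker copy, so only the strongly discriminative feature remains. Retraining after every removal matters because the remaining weights change once a feature is dropped.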

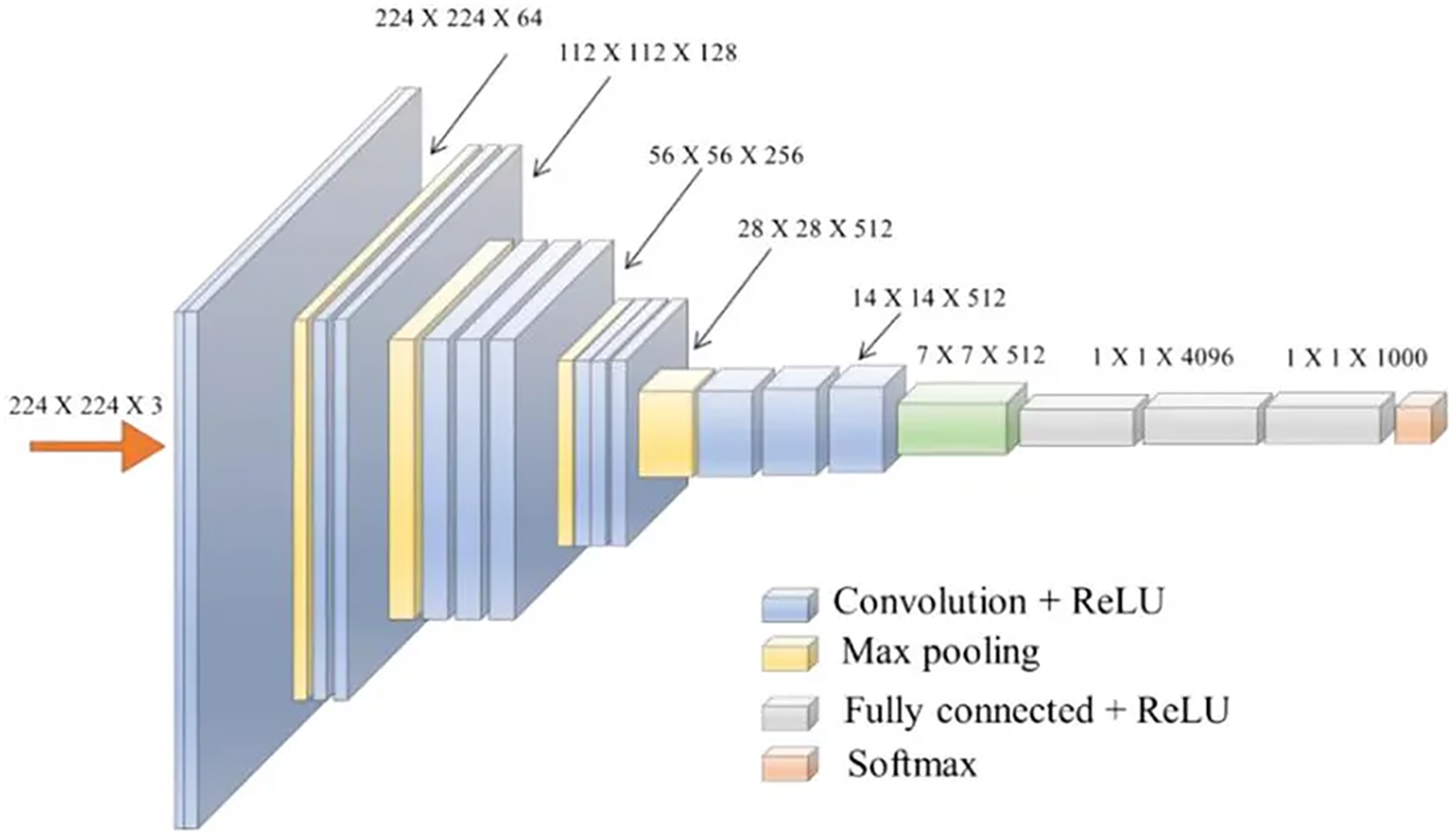

3.9 VGG-16 Powered Vehicle Classification

The final major phase is vehicle classification, which aims to assign each detected vehicle to its correct class based on its characteristic features. This step uses a convolutional neural network built on the VGG16 architecture, which is pre-trained on large-scale datasets and then trained further on our customized feature set. The high-level semantic information extracted by VGG16 enables discrimination between vehicle classes even under demanding aerial conditions, i.e., varied scale, orientation, and background clutter. The classification process is realized through a softmax function that converts the network’s output logits into probabilistic predictions for each vehicle category. Specifically, the probability Pi for class i is computed as:

$$P_i = \frac{e^{z_i}}{\sum_{j=1}^{C} e^{z_j}}$$

where zi is the logit corresponding to class i, and C is the total number of vehicle classes. This equation, coupled with cross-entropy loss during training, ensures that the classifier is both accurate and robust. The performance of this VGG16-based vehicle classification module is evidenced by high accuracy on benchmark datasets. The detailed layer-wise architecture of the VGG-16 model is illustrated in Fig. 10.

Figure 10: Layer-wise architecture of the VGG16 CNN classifier
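The conversion from logits to class probabilities, and the cross-entropy loss used during training, can be written in a few lines. The sketch below uses the standard numerically stable form that subtracts the largest logit before exponentiation; the example logits and the three class names are hypothetical.

```python
import math

def softmax(logits):
    """Convert logits z_1..z_C into probabilities that sum to one."""
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target):
    """Negative log-probability assigned to the true class index."""
    return -math.log(probs[target])

classes = ["car", "truck", "bus"]         # hypothetical label set
logits = [2.0, 0.5, -1.0]                 # hypothetical network outputs
probs = softmax(logits)
predicted = classes[probs.index(max(probs))]
loss_if_car = cross_entropy(probs, target=0)
loss_if_bus = cross_entropy(probs, target=2)
```

The largest logit receives the largest probability, and the loss is small when the true class is the one the network favors and large otherwise, which is what drives training toward confident correct predictions.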

4 Experimental Setup & Evaluation

This section provides a complete assessment of the proposed framework. We first describe the benchmark datasets used for training and evaluation. We then compare the performance of our vehicle detection system with existing state-of-the-art methods. Finally, various performance measures are used to evaluate the accuracy and efficiency of the system across tasks such as detection, tracking, and classification.

4.1 Benchmark Dataset Descriptions

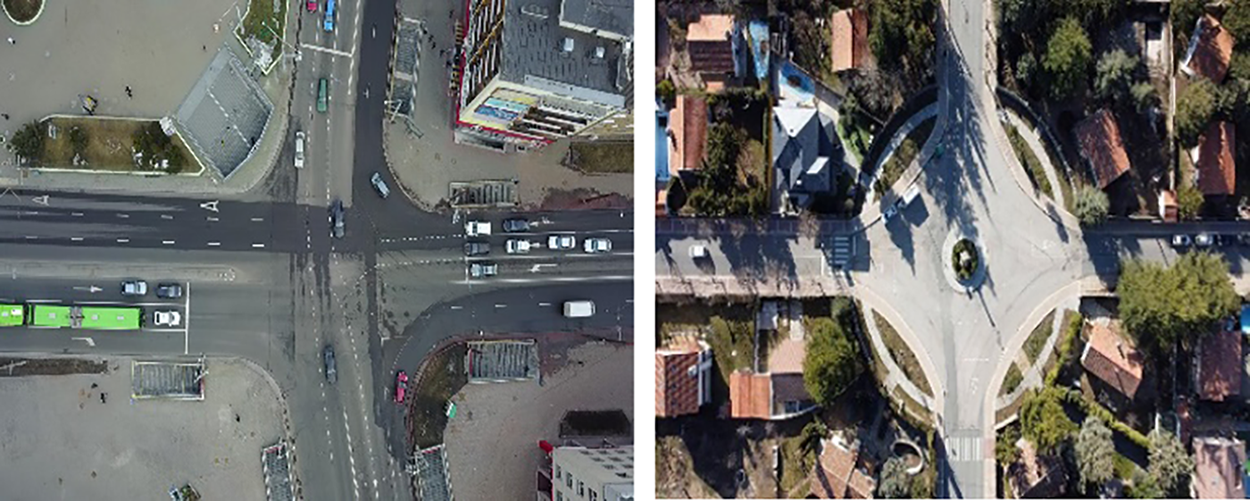

To validate the efficiency of the proposed UAV-based traffic monitoring framework, we employed two widely used benchmark datasets: UAVDT and UAVID. Sample images of both datasets are shown in Fig. 11. These datasets were used in their original distributions without explicit re-balancing, in line with prior works. To ensure transparency regarding category-wise behavior, we report per-class precision, recall, and F1-scores, which highlight performance across both majority and minority vehicle classes. While transfer learning with VGG-16 and feature optimization through RFE–SVM provide some robustness to uneven class frequencies, we acknowledge that more formal imbalance mitigation strategies remain a valuable direction for future research. In addition to benchmark testing, a proof-of-concept UAV field validation was conducted under clear daytime conditions at an altitude of 50–70 m, with scenes typically containing 15–25 vehicles. This validation achieved a detection accuracy of 92.3%, further supporting the applicability of the proposed framework to real-world UAV operations.

Figure 11: Sample images of the UAVID and UAVDT dataset

4.2 Computational Efficiency Analysis

We assessed the computational efficiency of the UAV-based traffic monitoring system through execution-time and memory analysis of all key modules, i.e., preprocessing, segmentation, detection, tracking, counting, feature extraction, and classification. Tuned for UAV images, the system processes both datasets on the fly. The tests were carried out on an Intel i7-12700K (3.6 GHz) with 32 GB RAM and an NVIDIA RTX 3090 GPU, running Ubuntu 20.04 (Python, PyTorch).

4.3 Quantitative Results on UAVDT and UAVID

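In this experiment, our framework was thoroughly evaluated on the UAVDT and UAVID datasets. Performance was assessed using precision, recall, F1-score, quality, and standard deviation (σ), providing a comprehensive measure of accuracy. Because detection errors involve false positives (FP) and false negatives (FN), the quality metric offers a more nuanced evaluation by accounting for both types of misclassification. The quantities are computed by the following formulas:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}, \qquad \text{Quality} = \frac{TP}{TP + FP + FN}$$

$$\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left(x_i - \bar{x}\right)^2}$$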

here, TP denotes true positives, FP represents false positives, FN indicates false negatives, N is the total number of observations,
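The metrics can be computed directly from the error counts, as in the sketch below. It assumes the standard definitions of precision, recall, and F1-score, takes quality to be TP / (TP + FP + FN), and uses the population form of the standard deviation (division by N). The counts and per-run values are made-up numbers for illustration and are not results from this work.

```python
import math

def detection_metrics(tp, fp, fn):
    """Precision, recall, F1-score, and quality from raw detection counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    quality = tp / (tp + fp + fn)     # penalizes both FP and FN at once
    return {"precision": precision, "recall": recall,
            "f1": f1, "quality": quality}

def std_dev(values):
    """Population standard deviation over N observations."""
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))

metrics = detection_metrics(tp=90, fp=10, fn=10)    # hypothetical counts
spread = std_dev([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
```

For these counts precision, recall, and F1-score are all 0.9, while quality is lower (90/110) because it places both error types in the denominator together. This is why quality is the stricter summary of detection performance.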

4.4 Performance Metrics and Experimental Outcome

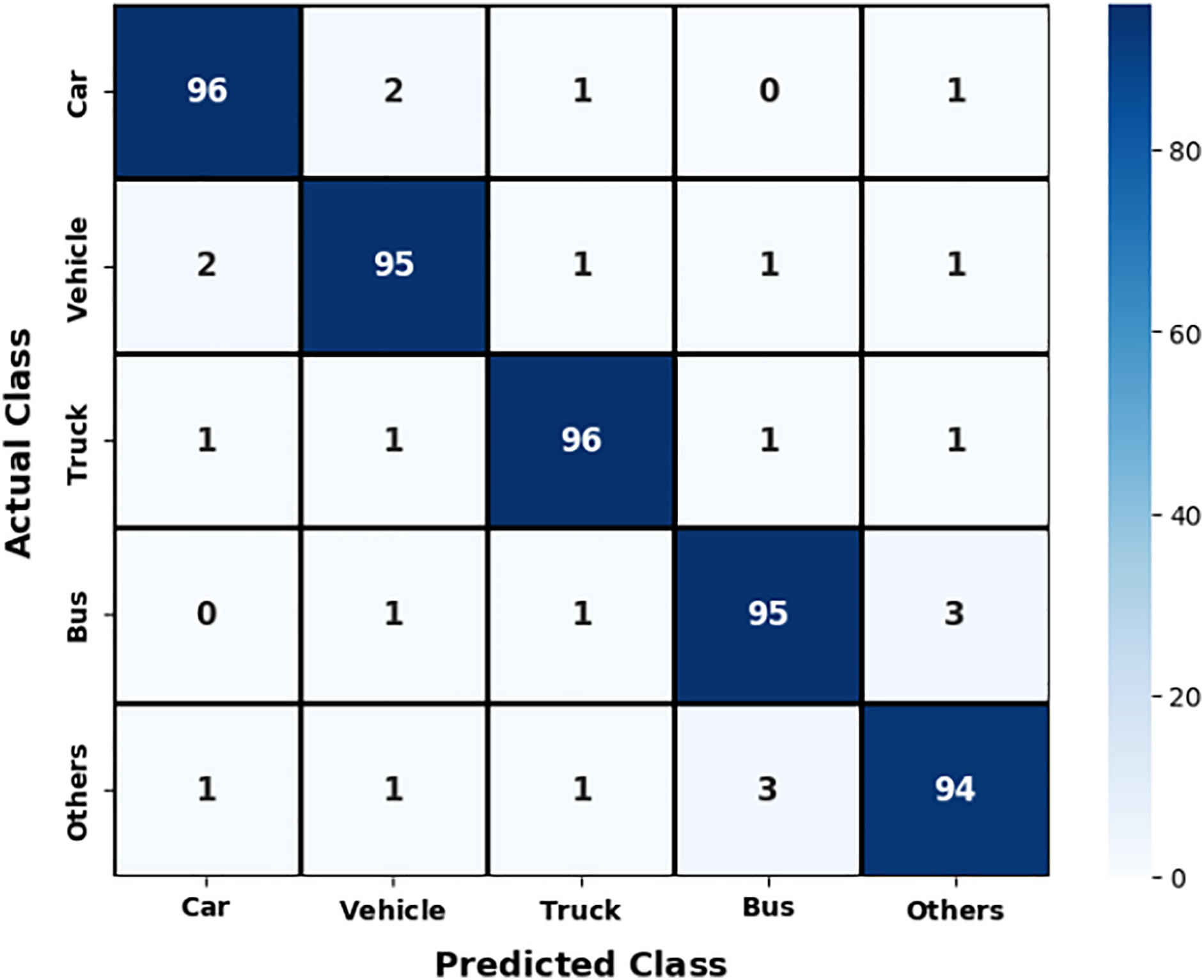

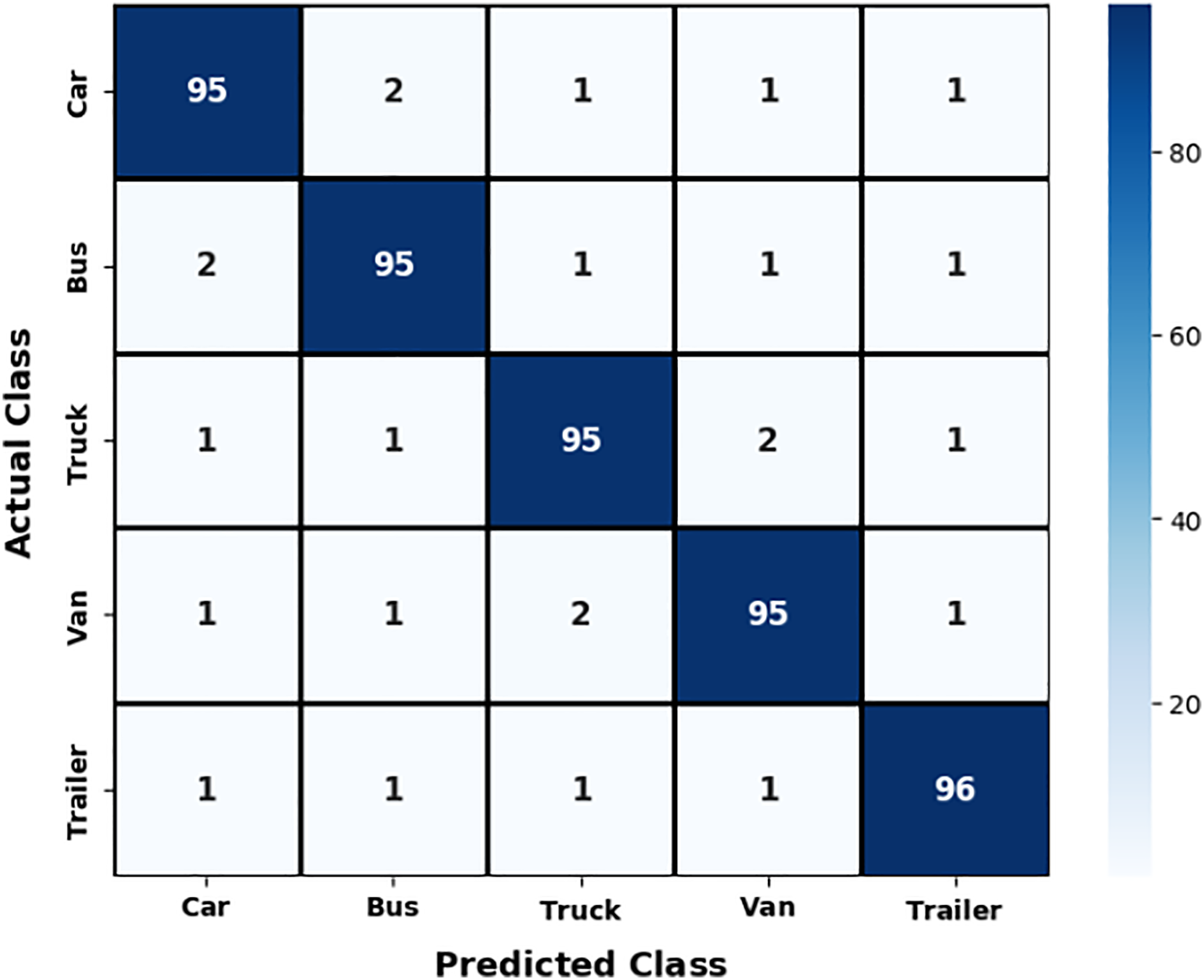

Our proposed UAV-based traffic monitoring framework was rigorously evaluated across all essential modules (detection, tracking, counting, and classification) on the benchmark UAVDT and UAVID datasets. Results demonstrate that our unified pipeline balances accuracy, efficiency, and robustness, maintaining high performance even under severe occlusion and variable altitudes. Classification performance of the proposed framework is shown in Fig. 12, which presents the confusion matrix for vehicle classification on the UAVDT dataset, highlighting the effectiveness of our hybrid semantic–geometric feature representation and VGG16-based classification. To further validate the robustness of the proposed framework, we conducted paired t-tests on per-class detection and tracking metrics against state-of-the-art baselines. Results confirm that our method consistently outperforms alternatives with statistical significance (p < 0.05).

Figure 12: Confusion matrix for vehicle classification over the UAVDT dataset

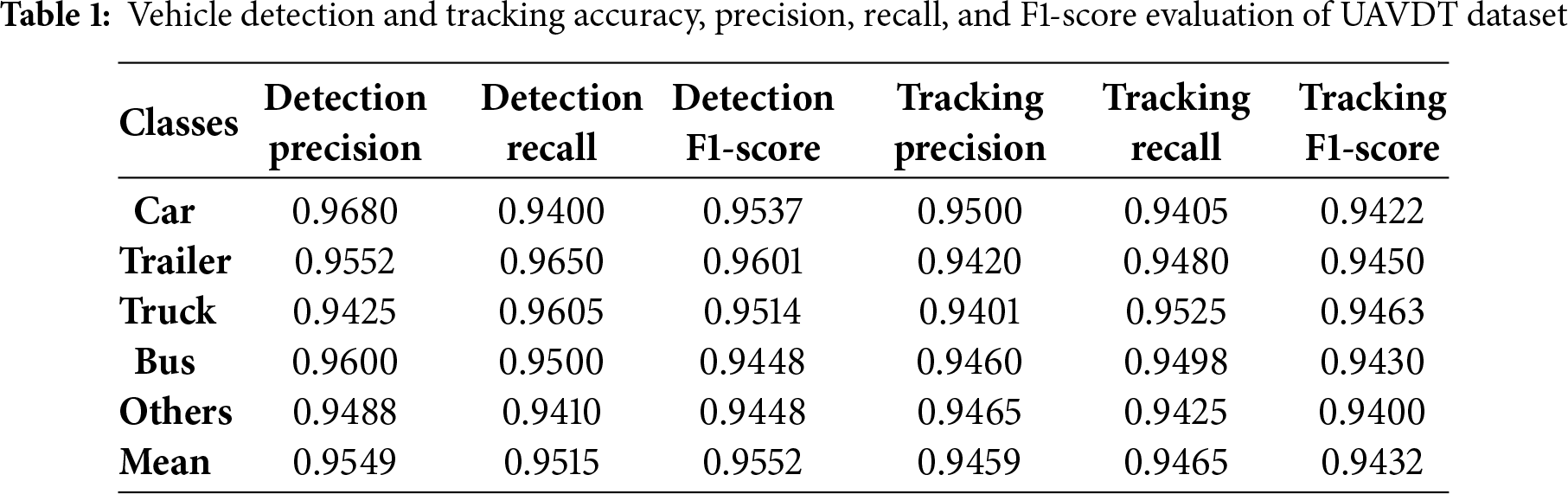

Table 1 reports the quantitative evaluation of the proposed vehicle detection and tracking algorithm on the UAVDT dataset, demonstrating the accuracy and reliability of the framework under a challenging aerial traffic scenario.

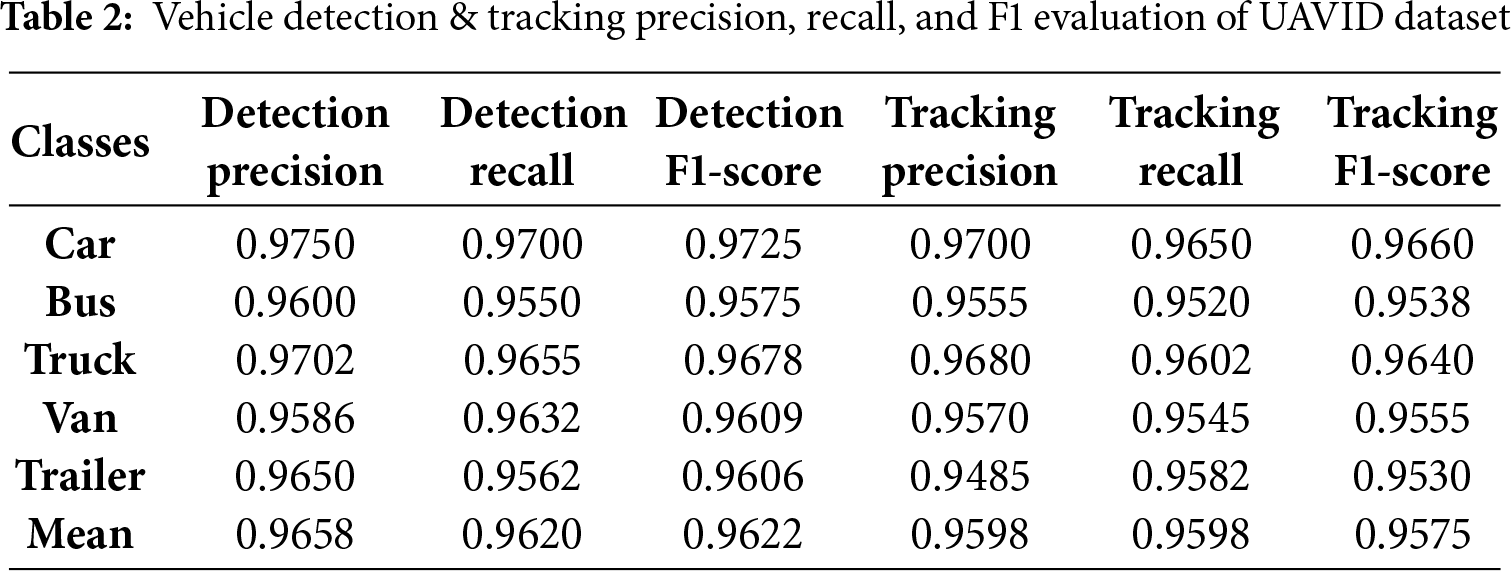

Fig. 13 presents the confusion matrix for the UAVID dataset, while Table 2 summarizes the performance metrics for both vehicle detection and tracking accuracy on the UAVID dataset.

Figure 13: Confusion matrix for vehicle classification over UAVID dataset

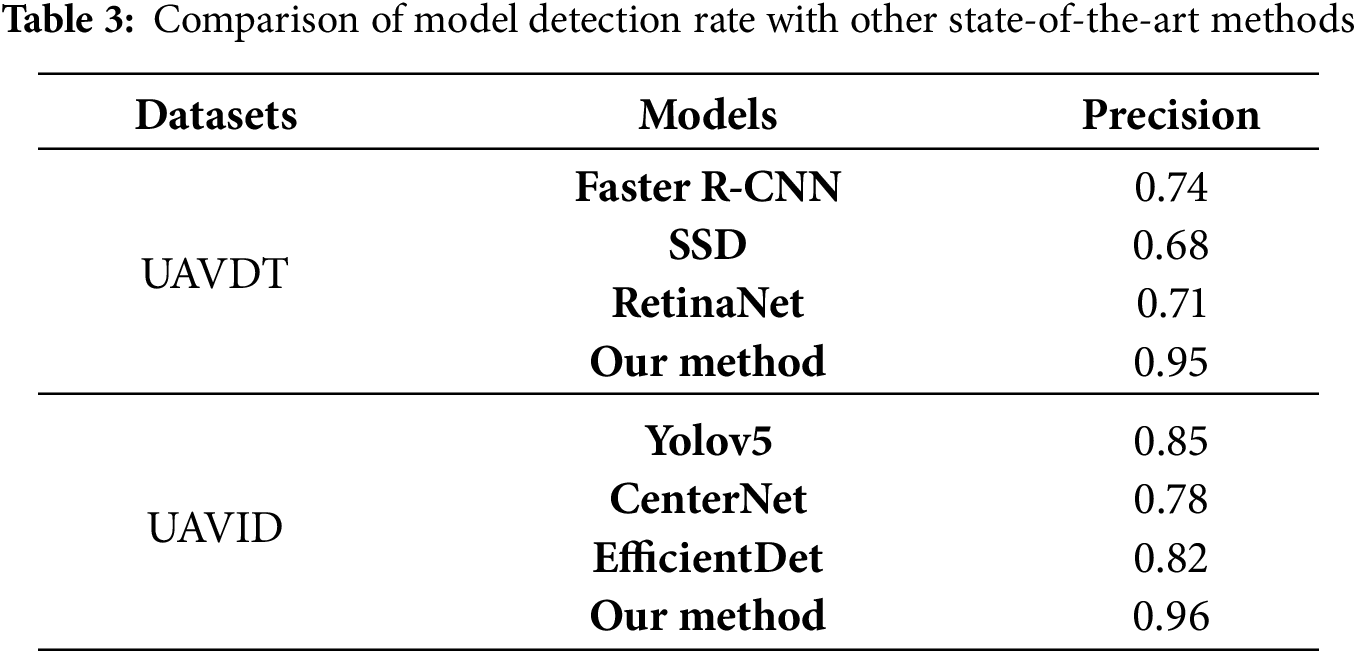

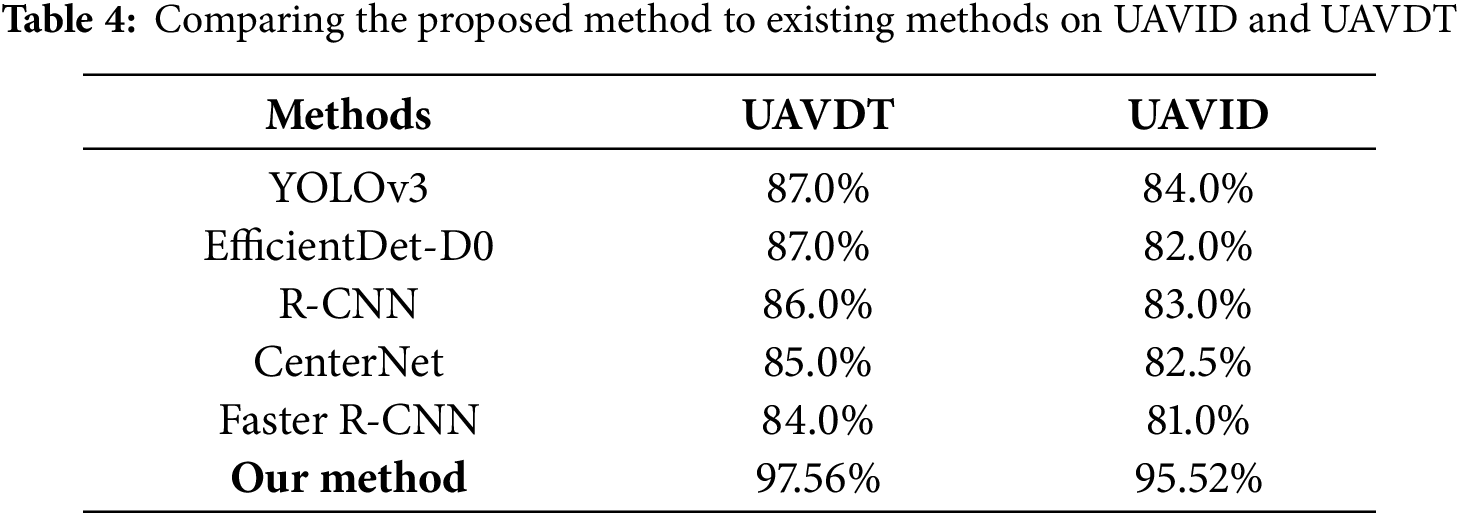

Table 3 compares the model’s detection rate with other state-of-the-art methods. Table 4 provides a comparative analysis of the proposed classification method against established techniques.

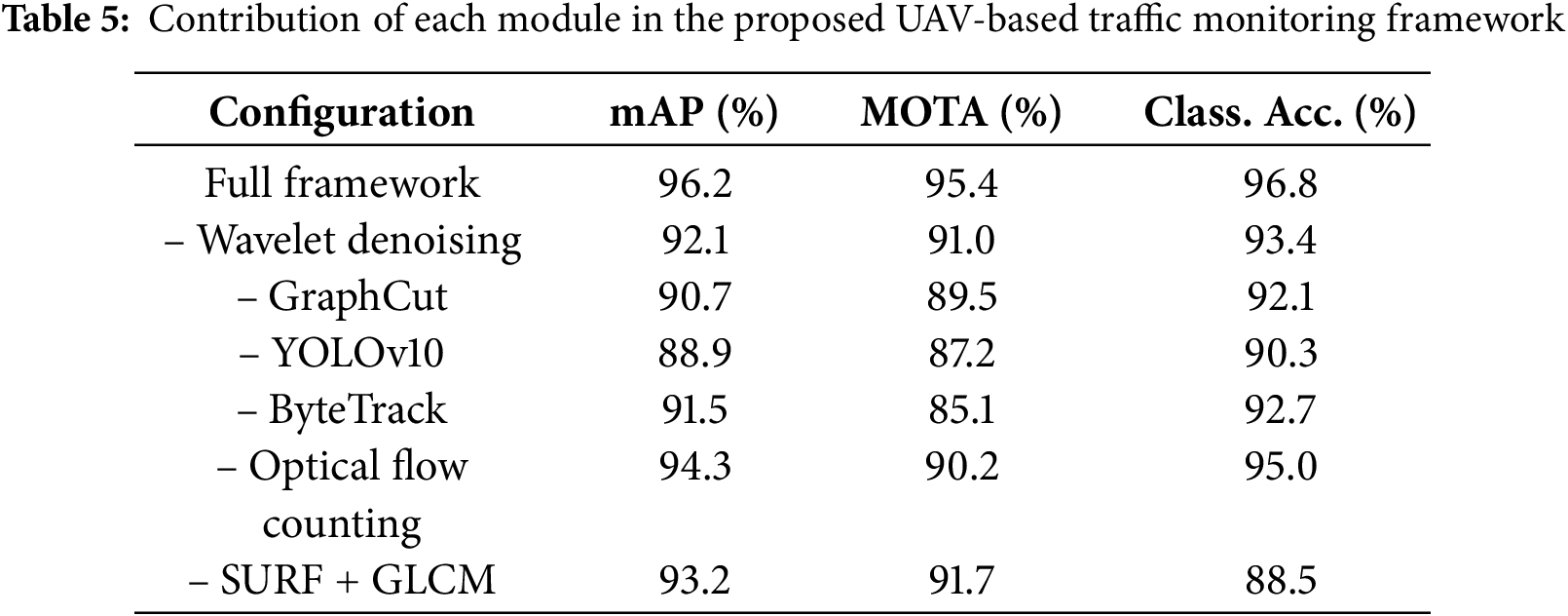

4.5 Ablation Study: Impact of Individual Modules

To quantify the importance of each module in our pipeline, an ablation study was conducted. Table 5 summarizes the impact of progressively removing individual components. A clear drop in detection mAP, tracking MOTA, and classification accuracy is observed whenever any single stage is omitted, confirming that the co-designed, end-to-end architecture is indispensable for achieving state-of-the-art performance.

Removing wavelet denoising decreases detection mAP by ~4%, confirming its critical role in suppressing aerial noise while preserving fine structural details. Excluding graph cut segmentation results in a further ~5.5% performance drop, underscoring its importance for precise vehicle boundary isolation in cluttered urban scenes. Omitting YOLOv10 leads to the sharpest detection decline (−7.3%), validating its robustness in capturing small and occluded vehicles. Similarly, removing ByteTrack reduces tracking MOTA by over 10%, demonstrating its effectiveness in maintaining vehicle identity consistency under occlusion and motion blur. Eliminating optical-flow–based counting decreases reliability (−5.2%), as it provides complementary validation to trajectory-based counts and ensures robustness in dense traffic. The largest drop in classification accuracy occurs when omitting SURF + GLCM (−8.3%). This is because these handcrafted geometric and texture descriptors provide complementary information to CNN-based semantics, particularly under small object sizes, variable scales, and complex viewpoints. Their removal reduces class separability, thereby validating the necessity of hybrid feature fusion in our design.

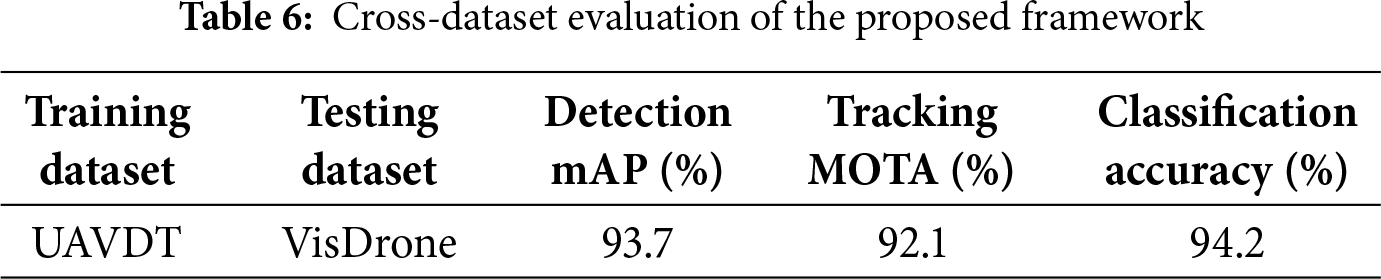

4.6 Cross-Dataset Generalization

To establish the robustness of our UAV-based traffic monitoring framework, cross-dataset evaluations were conducted. Table 6 summarizes the model’s detection, tracking, and classification performance when trained on UAVDT and evaluated across multiple benchmark datasets, demonstrating consistent accuracy and adaptability under varied aerial conditions.

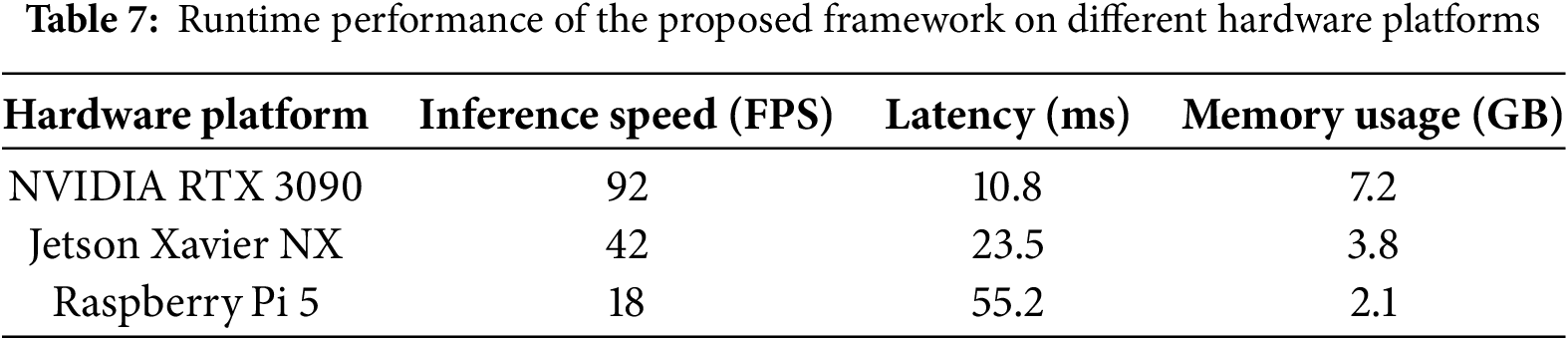
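One way to organize a cross-dataset protocol of this kind is sketched below. It assumes a hypothetical `evaluate(dataset_name)` callable that returns a single score for the already-trained model; the stub and its scores are placeholders, not results from Table 6. The retention ratio (target score divided by in-domain score) is an illustrative summary and is not claimed to be the metric used in the paper.

```python
from typing import Callable, Dict, Iterable


def cross_dataset_report(
    evaluate: Callable[[str], float],
    source: str,
    targets: Iterable[str],
) -> Dict[str, Dict[str, float]]:
    """Evaluate one trained model on its source dataset and on each target
    dataset, reporting the score and the fraction of in-domain score retained."""
    in_domain = evaluate(source)
    if in_domain <= 0:
        raise ValueError("in-domain score must be positive to compute retention")
    report = {source: {"score": in_domain, "retention": 1.0}}
    for name in targets:
        score = evaluate(name)
        report[name] = {"score": score, "retention": round(score / in_domain, 3)}
    return report


# Stub evaluator with placeholder scores (hypothetical, for illustration only).
_placeholder_scores = {"UAVDT": 0.90, "UAVID": 0.81}


def evaluate_stub(dataset_name: str) -> float:
    return _placeholder_scores[dataset_name]


report = cross_dataset_report(evaluate_stub, source="UAVDT", targets=["UAVID"])
print(report)
```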

4.7 Edge Deployment Performance

To verify the suitability of our framework for real-world UAV operations, we evaluated its runtime efficiency on different hardware configurations, including high-end GPUs and UAV-compatible edge devices. Table 7 reports inference speed, latency, and memory consumption across all core modules.
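A runtime measurement of this kind can be sketched as below. This is a minimal harness under stated assumptions, not the benchmarking setup used in the paper: `process_frame` is a hypothetical stand-in for any pipeline module, and `tracemalloc` only tracks Python heap allocations, so GPU or native-library memory would need a device-specific tool instead.

```python
import time
import tracemalloc
from statistics import mean
from typing import Callable, Dict, Iterable


def benchmark(
    process_frame: Callable[[object], object],
    frames: Iterable[object],
    warmup: int = 3,
) -> Dict[str, float]:
    """Measure mean per-frame latency, throughput, and peak Python heap usage."""
    frames = list(frames)
    for frame in frames[:warmup]:
        process_frame(frame)  # warm-up runs are excluded from timing
    timed = frames[warmup:]
    if not timed:
        raise ValueError("need more frames than warm-up iterations")

    latencies = []
    tracemalloc.start()  # note: tracing adds some overhead to the timings
    for frame in timed:
        start = time.perf_counter()
        process_frame(frame)
        latencies.append(time.perf_counter() - start)
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    mean_latency = mean(latencies)
    return {
        "latency_ms": mean_latency * 1e3,
        "fps": 1.0 / mean_latency if mean_latency > 0 else float("inf"),
        "peak_mem_mb": peak_bytes / 2**20,
    }


def fake_module(frame):
    """Hypothetical stand-in for a detector or tracker call."""
    return sum(x * x for x in frame)


stats = benchmark(fake_module, [list(range(5000)) for _ in range(20)])
print(stats)
```

Excluding warm-up iterations matters on real hardware, where the first few calls often include one-off costs such as model loading or kernel compilation.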

Despite the strong performance of the proposed framework, several limitations remain. Heavy occlusions, extreme illumination changes, and variations in UAV altitude or camera orientation can still affect detection and classification accuracy. Real-time processing of high-resolution aerial video requires significant computational resources, which may limit deployment on lightweight edge devices. Adverse weather conditions such as heavy rain, fog, or haze can obscure vehicles and degrade overall system reliability. The use of multi-modal feature extraction (SURF and GLCM), while improving robustness, introduces redundancy and computational overhead. Although Recursive Feature Elimination (RFE) mitigates this by selecting the most informative features, further optimization is needed to achieve faster and more resource-efficient inference for large-scale, real-time UAV traffic monitoring.

This work introduces a unified UAV-based traffic monitoring framework integrating wavelet-based preprocessing, graph cut segmentation, YOLOv10 detection, ByteTrack tracking, optical flow counting, and LSTM trajectory prediction. Hybrid feature extraction (SURF and GLCM) with RFE optimization enhances VGG16-based classification, achieving superior detection, tracking, and classification performance across UAVDT and UAVID benchmarks. Comprehensive evaluations, including multi-metric analyses, ablation studies, and cross-dataset validations, confirm the framework’s accuracy, efficiency, and generalizability. Future research will focus on improving night-time and adverse-weather performance via thermal/infrared sensing, leveraging transformer-based attention to handle occlusion, and optimizing computational efficiency for real-time UAV edge deployment.

Acknowledgement: Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R410), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Funding Statement: This work was supported by the IITP (Institute of Information & Communications Technology Planning & Evaluation)-ICAN (ICT Challenge and Advanced Network of HRD) (IITP-2025-RS-2022-00156326, 50) grant funded by the Korea government (Ministry of Science and ICT). This research is supported and funded by Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R410), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Author Contributions: Study conception and design: Ghulam Mujtaba and Mohammed Alnusayri; data collection: Nouf Abdullah Almujally and Shuoa S. AItarbi; analysis and interpretation of results: Asaad Algarni and Jeongmin Park; draft manuscript preparation: Ahmad Jalal and Jeongmin Park. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: All datasets used in this study are publicly available.

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

References

1. Tilon S, Nex F, Vosselman G, Sevilla de la Llave I, Kerle N. Towards improved unmanned aerial vehicle edge intelligence: a road infrastructure monitoring case study. Remote Sens. 2022;14(16):4008. doi:10.3390/rs14164008. [Google Scholar] [CrossRef]

2. Seifert E, Seifert S, Vogt H, Drew D, van Aardt J, Kunneke A, et al. Influence of drone altitude, image overlap, and optical sensor resolution on multi-view reconstruction of forest images. Remote Sens. 2019;11(10):1252. doi:10.3390/rs11101252. [Google Scholar] [CrossRef]

3. Song Z, Zhang Y, Abu Ebayyeh AARM. EDNet: edge-optimized small target detection in UAV imagery-faster context attention, better feature fusion, and hardware acceleration. In: Proceedings of the 2024 IEEE Smart World Congress (SWC); 2024 Dec 2–7; Nadi, Fiji. Piscataway, NJ, USA: IEEE; 2025. p. 829–38. doi:10.1109/SWC62898.2024.00141. [Google Scholar] [CrossRef]

4. Cao Z, Kooistra L, Wang W, Guo L, Valente J. Real-time object detection based on UAV remote sensing: a systematic literature review. Drones. 2023;7(10):620. doi:10.3390/drones7100620. [Google Scholar] [CrossRef]

5. Srivastava S, Narayan S, Mittal S. A survey of deep learning techniques for vehicle detection from UAV images. J Syst Archit. 2021;117(11):102152. doi:10.1016/j.sysarc.2021.102152. [Google Scholar] [CrossRef]

6. Pu Q, Zhu Y, Wang J, Yang H, Xie K, Cui S. Drone data analytics for measuring traffic metrics at intersections in high-density areas. Transp Res Rec J Transp Res Board. 2025;2679(5):361–80. doi:10.1177/03611981241311566. [Google Scholar] [CrossRef]

7. Bakirci M. Vehicular mobility monitoring using remote sensing and deep learning on a UAV-based mobile computing platform. Measurement. 2025;244(3):116579. doi:10.1016/j.measurement.2024.116579. [Google Scholar] [CrossRef]

8. Guido G, Gallelli V, Rogano D, Vitale A. Evaluating the accuracy of vehicle tracking data obtained from unmanned aerial vehicles. Int J Transp Sci Technol. 2016;5(3):136–51. doi:10.1016/j.ijtst.2016.12.001. [Google Scholar] [CrossRef]

9. Chen Y, Zhao D, Er MJ, Zhuang Y, Hu H. A novel vehicle tracking and speed estimation with varying UAV altitude and video resolution. Int J Remote Sens. 2021;42(12):4441–66. doi:10.1080/01431161.2021.1895449. [Google Scholar] [CrossRef]

10. Zhai W, Wang K, Cai C. Fundamentals of vehicle-track coupled dynamics. Veh Syst Dyn. 2009;47(11):1349–76. doi:10.1080/00423110802621561. [Google Scholar] [CrossRef]

11. Kelechi AH, Alsharif MH, Oluwole DA, Achimugu P, Ubadike O, Nebhen J, et al. The recent advancement in unmanned aerial vehicle tracking antenna: a review. Sensors. 2021;21(16):5662. doi:10.3390/s21165662. [Google Scholar] [PubMed] [CrossRef]

12. Shokravi H, Shokravi H, Bakhary N, Heidarrezaei M, Rahimian Koloor SS, Petrů M. A review on vehicle classification and potential use of smart vehicle-assisted techniques. Sensors. 2020;20(11):3274. doi:10.3390/s20113274. [Google Scholar] [PubMed] [CrossRef]

13. Montanari R, Tozadore DC, Fraccaroli ES, Romero RAF. Ground vehicle detection and classification by an unmanned aerial vehicle. In: Proceedings of the 2015 12th Latin American Robotics Symposium and 2015 3rd Brazilian Symposium on Robotics (LARS-SBR); 2015 Oct 29–31; Uberlandia, Brazil. Piscataway, NJ, USA: IEEE; 2016. p. 253–8. doi:10.1109/LARS-SBR.2015.64. [Google Scholar] [CrossRef]

14. Meng W, Tia M. Unmanned aerial vehicle classification and detection based on deep transfer learning. In: Proceedings of the 2020 International Conference on Intelligent Computing and Human-Computer Interaction (ICHCI); 2020 Dec 4-6; Sanya, China. Piscataway, NJ, USA: IEEE; 2021. p. 280–5. doi:10.1109/ICHCI51889.2020.00067. [Google Scholar] [CrossRef]

15. Choi H, Jeong J. Despeckling images using a preprocessing filter and discrete wavelet transform-based noise reduction techniques. IEEE Sens J. 2018;18(8):3131–9. doi:10.1109/JSEN.2018.2794550. [Google Scholar] [CrossRef]

16. Yi F, Moon I. Image segmentation: a survey of graph-cut methods. In: Proceedings of the 2012 International Conference on Systems and Informatics (ICSAI2012); 2012 May 19–20; Yantai, China. Piscataway, NJ, USA: IEEE; 2012. p. 1936–41. doi:10.1109/ICSAI.2012.6223428. [Google Scholar] [CrossRef]

17. Sapkota R, Calero MF, Qureshi R, Badgujar C, Nepal U, Poulose A, et al. YOLO advances to its genesis: a decadal and comprehensive review of the you only look once (YOLO) series. arXiv:2406.19407. 2024. [Google Scholar]

18. Zhang Q, Yang F, Li F, Fei Z, Xie Y, Deng D. Automated pedestrian tracking based on improved ByteTrack. In: Proceedings of the 2023 IEEE 23rd International Conference on Communication Technology (ICCT); 2023 Oct 20–22; Wuxi, China. [Google Scholar]

Copyright © 2026 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
