Open Access

ARTICLE

MDMOSA: Multi-Objective-Oriented Dwarf Mongoose Optimization for Cloud Task Scheduling

1 Department of Computer Science, Faculty of Computing, Universiti Teknologi Malaysia, Skudai, Johor Bahru, 81310, Malaysia

2 Information Technology Services Department, Gateway (ICT) Polytechnic Saapade, Remo North, 121116, Nigeria

* Corresponding Author: Olanrewaju Lawrence Abraham. Email:

Computers, Materials & Continua 2026, 86(3), 89 https://doi.org/10.32604/cmc.2025.072279

Received 23 August 2025; Accepted 24 September 2025; Issue published 12 January 2026

Abstract

Task scheduling in cloud computing is a multi-objective optimization problem, often involving conflicting objectives such as minimizing execution time, reducing operational cost, and maximizing resource utilization. However, traditional approaches frequently rely on single-objective optimization methods, which are insufficient for capturing the complexity of such problems. To address this limitation, we introduce MDMOSA (Multi-objective Dwarf Mongoose Optimization with Simulated Annealing), a hybrid algorithm that integrates multi-objective optimization for efficient task scheduling in Infrastructure-as-a-Service (IaaS) cloud environments. MDMOSA harmonizes the exploration capabilities of the biologically inspired Dwarf Mongoose Optimization (DMO) with the exploitation strengths of Simulated Annealing (SA), achieving a balanced search process. The algorithm aims to optimize task allocation by reducing makespan and financial cost while improving system resource utilization. We evaluate MDMOSA through extensive simulations using the real-world Google Cloud Jobs (GoCJ) dataset within the CloudSim environment. Comparative analysis against benchmarked algorithms such as SMOACO, MOTSGWO, and MFPAGWO reveals that MDMOSA consistently achieves superior performance in terms of scheduling efficiency, cost-effectiveness, and scalability. These results confirm the potential of MDMOSA as a robust and adaptable solution for resource scheduling in dynamic and heterogeneous cloud computing infrastructures.

1 Introduction

Cloud computing represents a transformative computing paradigm that provides ubiquitous, convenient, and on-demand network access to a shared pool of configurable computing resources. These resources include networks, servers, storage systems, applications, and services that can be rapidly provisioned and released with minimal administrative overhead or direct interaction with the service provider. By abstracting the underlying hardware and infrastructure, cloud computing enables users to access computational resources as a service, rather than owning or managing physical systems [1,2]. This model facilitates the delivery of a broad spectrum of services over the internet, classified into three primary service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). IaaS provides virtualized computing resources such as virtual machines, storage, and networks; PaaS offers a development and deployment environment for applications without the complexity of managing the underlying infrastructure; and SaaS delivers software applications on a subscription basis, accessible through web browsers without local installation.

One of the defining characteristics of cloud computing is its elasticity. Users can scale resources up or down dynamically based on workload demands, which supports efficient resource utilization and cost-effectiveness [3]. This elasticity, combined with a pay-as-you-go pricing model, allows organizations to optimize operational costs and reduce capital expenditures, making cloud services attractive for both startups and large enterprises. Furthermore, cloud computing supports multiple deployment models tailored to different organizational strategies and compliance requirements. Public clouds are operated by third-party providers and deliver services over the public internet, offering high scalability and cost efficiency. Private clouds, on the other hand, are dedicated to a single organization and typically hosted on-premises or in a dedicated data center, providing enhanced control, security, and customization. Hybrid clouds combine elements of both public and private clouds, enabling data and application portability across environments to balance flexibility, performance, and regulatory compliance [4].

A vital component of cloud resource management is task scheduling, which involves allocating user-submitted tasks to suitable virtual resources based on various criteria [5]. Task scheduling is critical from both the cloud provider’s and the user’s perspectives. For cloud service providers, it involves the automatic and efficient distribution of a diverse and continuously growing set of tasks across the available infrastructure. These tasks often vary in size, computational complexity, and runtime requirements, making it impractical to assign all tasks to a single type of virtual machine. Consequently, selecting the right resource for each task becomes a complex yet essential challenge.

The difficulty of scheduling in a cloud environment arises from its dynamic and heterogeneous nature. As the number of users and the size of their computational workloads increase, the task scheduling problem becomes increasingly difficult to manage [6]. This problem is further amplified by the need to ensure scalability, responsiveness, and fairness across a wide range of applications. Task scheduling in the cloud is widely recognized as a Non-deterministic Polynomial-time hard (NP-hard) problem. Existing scheduling strategies often struggle to cope with the evolving demands of large-scale cloud infrastructures, leading to inefficiencies such as increased execution times, underutilized resources, and potential service-level violations. To tackle this problem, researchers have developed a wide range of heuristic and metaheuristic algorithms designed to produce near-optimal solutions within a reasonable computational time. In earlier approaches, heuristic techniques [7,8] were proposed to perform task scheduling by applying predefined rules or policies. While heuristics can be efficient for smaller or more predictable workloads, they tend to struggle with scalability and adaptability when faced with large-scale or highly dynamic cloud environments. These methods often rely heavily on specific rule sets, which limits their ability to generalize across diverse types of scheduling scenarios.

Metaheuristic algorithms have proven to be more versatile and effective in addressing the complexities of real-world scheduling problems [9–13]. Despite their success, these algorithms are not without limitations. Issues such as sensitivity to parameter tuning, premature convergence to local optima, and high memory consumption can hinder their performance in certain conditions. Researchers have also explored hybrid approaches that combine the strengths of multiple heuristics and metaheuristics [14,15]. By integrating different techniques, hybrid algorithms aim to balance exploration and exploitation more effectively, improve convergence speed, and enhance solution quality. This direction continues to be a promising area of research for developing more robust and adaptive task scheduling strategies in cloud computing environments.

In response to task scheduling challenges, numerous task scheduling algorithms have been developed, each aiming to satisfy specific constraints and performance objectives. The design of a scheduling strategy can follow either a single-objective or a multi-objective approach, depending on whether the scheduler aims to optimize one criterion or multiple, often conflicting, performance metrics [16]. Single-objective task scheduling focuses on achieving a single dominant goal in the scheduling process. For instance, the scheduler may be designed solely to minimize makespan, which is the total time required to complete all tasks. In other scenarios, the goal might be to minimize execution cost or to maximize resource utilization. This approach is often used in controlled or static environments where one performance metric is clearly prioritized over others.

In contrast, multi-objective task scheduling considers multiple performance criteria simultaneously. In a cloud environment, common objectives include minimizing makespan, reducing energy consumption, lowering operational cost, maximizing throughput, and improving load balancing [17,18]. These objectives are often interrelated and conflicting. For example, reducing energy consumption might increase execution time, while minimizing cost could compromise availability or service quality. A multi-objective scheduler seeks a balance among competing goals to deliver the best possible outcomes for both the cloud service provider and the user. A key outcome of multi-objective scheduling is the generation of a set of trade-off solutions rather than a single optimal one. For instance, the scheduler might provide a set of scheduling options where each represents a different balance between execution time and energy usage. This allows cloud providers to deliver more reliable and cost-effective services while giving users a higher degree of satisfaction and performance consistency.

Cloud task scheduling is an NP-hard optimization problem for which no known exact method scales efficiently [14]. Although many meta-heuristics exist [5,18–20], they often suffer from premature convergence, sensitivity to initialization, or bias toward a single objective. In this work, we adapt and enhance the DMO algorithm, which has been successfully utilized in other optimization domains such as engineering design, power and control engineering, intrusion detection, feature selection, and image classification. By embedding a simulated annealing local search and introducing improvements such as heuristic seeding, elitism, controlled mutation, and a combined makespan–cost objective, we tailor DMO specifically for the NP-hard problem of cloud task scheduling. These targeted enhancements address recognized weaknesses in existing methods and result in measurable improvements in makespan, cost, throughput, and load balancing. Experiments in CloudSim show that the proposed MDMOSA achieves superior performance compared with baseline techniques, demonstrating its potential as a practical scheduling tool in dynamic cloud environments. Through this study, we explore the potential of DMO to minimize makespan and execution cost and to enhance overall resource management in cloud environments.

The key contributions of this study are outlined as follows:

• We propose an improved task scheduling algorithm, MDMOSA, for cloud computing by enhancing the DMO framework with SA. The enhanced algorithm leverages the adaptive search behavior of DMO and the probabilistic acceptance mechanism of SA to strike a better balance between exploration and exploitation.

• To overcome premature convergence and enhance global search capabilities, SA is embedded into the exploitation phase of DMO. This integration introduces a controlled randomization mechanism that allows the algorithm to escape local optima and explore more diverse regions of the search space, especially under complex, multi-objective scheduling conditions.

• The proposed algorithm, MDMOSA, is designed specifically to address multi-objective task scheduling challenges in cloud computing. It simultaneously optimizes key performance metrics, which include makespan and execution cost.

• We implement and evaluate the proposed algorithm using the real-world Google Cloud Jobs (GoCJ) dataset within the CloudSim environment. The results are compared against several recent methods to assess performance in terms of solution quality, convergence speed, and scalability.

• Our findings demonstrate that the improved DMO algorithm offers a robust and efficient solution for task scheduling in dynamic cloud infrastructures. By effectively mapping tasks to virtual resources, it enhances both provider-side system performance and end-user satisfaction, contributing to the development of more reliable and cost-effective cloud computing services.
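
The probabilistic acceptance mechanism of SA mentioned in the contributions above can be illustrated with a minimal sketch. It assumes a schedule is encoded as a list of VM indices (one per task), a single-task reassignment as the neighbor move, and a geometric cooling schedule; the function name `sa_refine` and the parameter values are hypothetical and are not the settings used in MDMOSA.

```python
import math
import random


def sa_refine(assignment, cost_fn, n_vms, t0=1.0, cooling=0.95, steps=200, rng=None):
    """Refine a task-to-VM assignment with simulated annealing.

    assignment: list where assignment[i] is the VM index of task i.
    cost_fn: callable mapping an assignment to a scalar to minimize.
    t0, cooling, steps: illustrative values, not the paper's settings.
    """
    rng = rng or random.Random()
    current = list(assignment)
    current_cost = cost_fn(current)
    best, best_cost = list(current), current_cost
    temperature = t0
    for _ in range(steps):
        # Neighbor move (assumed): reassign one random task to a random VM.
        candidate = list(current)
        candidate[rng.randrange(len(candidate))] = rng.randrange(n_vms)
        candidate_cost = cost_fn(candidate)
        delta = candidate_cost - current_cost
        # Metropolis criterion: always accept improvements; accept a worse
        # candidate with probability exp(-delta / temperature).
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = list(current), current_cost
        temperature *= cooling  # geometric cooling schedule (assumed)
    return best, best_cost
```

Because worse candidates are occasionally accepted while the temperature is high, the search can leave a local optimum early on and then behaves increasingly greedily as the temperature falls.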

This paper presents a focused study on optimizing task scheduling in cloud computing environments through a hybrid metaheuristic approach. We propose an enhanced version of the DMO algorithm by incorporating the SA technique to improve its exploration capabilities and avoid premature convergence. The algorithm aims to efficiently allocate tasks to virtual machines while optimizing objectives such as makespan, execution cost, and resource utilization. The rest of the paper is structured as follows. Section 2 reviews existing research on task scheduling in cloud computing, with particular emphasis on metaheuristic techniques and their effectiveness in handling multi-objective optimization problems. It also highlights the limitations of current approaches and the need for more adaptive scheduling solutions. Section 3 provides the definition of the task scheduling problem along with its formulation as a multi-objective optimization problem. Section 4 describes the proposed enhanced algorithm, outlining the original structure of DMO and detailing the enhancements introduced by integrating simulated annealing. The section explains the scheduling model, the algorithm’s components, and how it addresses challenges in dynamic cloud environments. In Section 5, we present the experimental setup, including workload characteristics, benchmark scenarios, and algorithm parameters. This section also provides a comparative performance analysis of the proposed method against state-of-the-art algorithms. Section 6 concludes the study by summarizing the contributions and discussing the broader implications for task scheduling in cloud computing. We also propose potential future work, including real-time deployment, adaptive parameter tuning, and integration with other intelligent optimization methods.

2 Related Work

Task scheduling in cloud computing has attracted significant research attention due to its critical role in optimizing resource utilization and ensuring quality of service in highly dynamic and distributed environments. Over the years, a wide range of strategies has been proposed to address this challenge, including heuristic-based methods, metaheuristic approaches, and hybrid techniques. Numerous variants of meta-heuristics such as Particle Swarm Optimization (PSO) [21], Ant Colony Optimization (ACO) [9], Genetic Algorithms (GA) [22], and Symbiotic Organism Search (SOS) [23] have been applied to cloud scheduling. PSO, while powerful, is known to be sensitive to parameter settings and prone to premature convergence in high-dimensional spaces, leading to suboptimal schedules. Consequently, many variants and hybridizations of different scheduling techniques have been published to overcome these drawbacks. Dwarf Mongoose Optimization (DMO) [24], a newer swarm-based method, has been shown in benchmark studies to outperform PSO and GA on certain high-dimensional problems due to its stronger exploration ability and reduced tendency to stagnate in local optima. Our work builds on this foundation by hybridizing DMO with simulated annealing and multi-objective enhancements, thereby tailoring it to the specific requirements of cloud task scheduling.

In this section, we highlight recent algorithms in the field of cloud task scheduling that have demonstrated impressive performance in solving multi-objective problems.

In the study [25], the author proposed the grey wolf optimizer for task scheduling (GWOTS) as a solution to the challenges of multi-objective task scheduling in cloud computing environments. Unlike traditional methods that reduce multi-objective problems into a single-objective framework, this approach directly addresses multiple scheduling objectives. By maintaining the distinct nature of each objective, the algorithm is better suited to real-world cloud scenarios where trade-offs between factors like makespan, energy consumption, and cost must be carefully managed. The GWOTS algorithm introduces discrete encoding for representing task-to-resource mappings, ensuring accurate modeling within cloud infrastructures. It further enhances its decision-making process through a weighted sorting technique that prioritizes high-quality solutions based on multiple evaluation criteria. This research incorporates iterative search mechanisms and Pareto front construction to maintain diversity in the solution set and to identify balanced trade-offs among objectives. However, a noted limitation of the study is the algorithm’s reliance on fixed weight parameters during solution ranking, which may reduce adaptability in dynamic cloud environments where task requirements and resource availability frequently change.

In the paper [26], a multi-objective cat swarm optimization for fault-tolerant load balancing (MCSOFLB) algorithm was presented, specifically designed to address task scheduling and load balancing challenges in multi-cloud environments. The algorithm applies the principles of cat swarm behavior to perform a multi-objective optimization process, targeting several critical metrics such as makespan, execution cost, resource utilization, and task success rate. A notable aspect of the study is the integration of a rescue workflow technique, which contributes to the system’s fault tolerance. This mechanism ensures that workflows continue to execute even when some tasks fail, by attempting to recover and reassign failed tasks without immediately terminating the entire scheduling process. A limitation of this study lies in the increased computational overhead introduced by the fault-tolerant mechanisms and multi-objective computations. As task volumes and system scale increase, the algorithm’s complexity may impact real-time responsiveness, making it less suitable for latency-sensitive applications without further optimization.

The study in [27] proposes a cuckoo optimization algorithm (COA)-based framework for addressing the task scheduling problem in cloud computing environments. The method leverages the exploration-exploitation balance of cuckoo search behavior to improve task distribution and resource utilization across virtual machines, particularly in systems experiencing workload imbalances. By mimicking the brood parasitism behavior of cuckoos, the algorithm is able to explore a wide solution space and converge toward optimal task-to-resource mappings. This not only enhances system performance but also mitigates the risk of resource bottlenecks and underutilization. The study frames the scheduling challenge as a multi-objective optimization problem, targeting both task processing time and overall response time as primary performance indicators. Additionally, the algorithm supports dynamic resource allocation, adapting task assignments in real time according to system load and task requirements. However, a noted limitation is the sensitivity of the algorithm to parameter settings such as discovery rate and step size, which can influence convergence behavior and overall solution quality. Fine-tuning these parameters is necessary for optimal deployment in large-scale or time-critical cloud environments.

The authors in [28] propose an adaptive strategy for task scheduling in cloud computing, particularly suited to the demands of big data applications. The approach is built on the adaptive Tasmanian devil optimization (ATDO) algorithm, a bio-inspired method modeled after the dual feeding behaviors of Tasmanian devils: scavenging (exploration) and active hunting (exploitation). This behavioral modeling allows the algorithm to efficiently navigate the search space and refine potential solutions iteratively. To further enhance diversity and avoid premature convergence, the ATDO algorithm incorporates opposition-based learning (OBL). This strategy improves the convergence rate by considering opposite candidate solutions, thus maintaining a well-distributed population and encouraging exploration of under-sampled areas in the search space. Experimental results show that the ATDO algorithm performs well in terms of execution efficiency, scalability, and adaptability, especially in big data processing contexts where computational loads are high and resources are heterogeneous. However, the study acknowledges that the performance of ATDO can be sensitive to its control parameters, and further research is suggested to explore adaptive parameter tuning techniques for real-time cloud environments.
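
As a generic illustration of opposition-based learning (not the specific ATDO implementation, whose details are not given here), the opposite of a value x in a range [lb, ub] is lb + ub - x. The sketch below applies this to a hypothetical discrete encoding in which each task holds a VM index between 0 and `n_vms - 1`; the function names are illustrative.

```python
def opposite_assignment(assignment, n_vms):
    """Return the opposition-based counterpart of a discrete assignment.

    For a variable x in [lb, ub], the opposite point is lb + ub - x.
    Here lb = 0 and ub = n_vms - 1 for every VM index.
    """
    return [(n_vms - 1) - vm for vm in assignment]


def keep_better(assignment, n_vms, cost_fn):
    """Keep whichever of a candidate and its opposite has the lower cost."""
    opposite = opposite_assignment(assignment, n_vms)
    return min((assignment, opposite), key=cost_fn)
```

Evaluating both a candidate and its opposite doubles the number of cost evaluations per individual, which is the price paid for the additional coverage of the search space.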

In [29], the author proposes a solution for cloud task scheduling using the modified reptile search algorithm (MRSA). The reptile search algorithm (RSA) is a nature-inspired metaheuristic that simulates the encircling and hunting behaviors of crocodiles to explore complex solution spaces. Due to its gradient-free design, RSA is particularly well-suited for addressing the nonlinear and high-dimensional characteristics of task scheduling in cloud environments. To improve upon the original RSA, the MRSA incorporates a distribution estimation strategy, which uses the positional data of high-performing individuals within the population. Additionally, MRSA introduces a shifted distribution mechanism that utilizes global best positional information to generate new offspring populations. This helps the algorithm progressively move toward optimal scheduling configurations through iterative refinement. The study emphasizes a multi-objective optimization perspective, addressing key scheduling concerns such as resource utilization, energy consumption, and execution cost. The proposed algorithm was implemented and evaluated using the CloudSim simulation toolkit under various workload conditions. A limitation noted in the study is that MRSA may require additional tuning of its distribution parameters to adapt effectively to rapidly changing cloud workloads. Without appropriate parameter adjustment, the algorithm’s responsiveness and performance consistency could be affected in real-time scheduling scenarios.

In the study [30], a multi-objective optimization framework aimed at solving the task scheduling problem in computational grid environments was presented. The proposed approach simultaneously addresses several conflicting scheduling objectives, including minimization of turnaround time, execution cost, and communication cost, as well as maximization of overall grid resource utilization. A notable feature of the framework is the use of multiple greedy scheduling strategies, each designed to target specific objectives. For example, one greedy scheduler focuses on reducing turnaround and communication costs while maximizing grid utilization. Other variants aim to minimize execution and communication costs by optimizing task distribution across available computational nodes. These strategies allow the system to respond flexibly to different scheduling priorities and workload characteristics. The framework also applies a multi-attribute decision-making (MADM) method that ranks scheduling alternatives based on their proximity to an ideal solution, facilitating more informed and effective task allocation under complex conditions. While the framework demonstrates effectiveness in managing multiple objectives and supporting flexible scheduling decisions, a limitation lies in its dependence on predefined heuristic rules. The performance and adaptability of the schedulers are closely tied to how well these rules align with the current system state and task demands, which may limit the algorithm’s robustness in highly dynamic or unpredictable grid environments.

The paper [31] presents a multi-objective load balancing and task scheduling methodology based on the adaptive osprey optimization algorithm (AO2). The primary objective of the study is to efficiently schedule user tasks across virtual machines in a way that balances the computational load while simultaneously optimizing multiple conflicting performance metrics. The AO2 algorithm is inspired by the hunting and adaptive behavior of ospreys and is used here to predict the workload on each VM. Based on this prediction, tasks are assigned in a manner that ensures efficient resource utilization and reduces the risk of overloading or underutilizing specific virtual machines. The algorithm operates within a multi-objective optimization framework, targeting key goals such as minimizing energy consumption, reducing execution time, and lowering operational costs. However, in highly unpredictable environments, deviations between predicted and actual loads may impact the algorithm’s scheduling effectiveness, suggesting a need for further refinement in predictive modeling components.

The author in [32] presents a task scheduling strategy for cloud computing environments based on the whale optimization algorithm (WOA). The proposed WOA-Scheduler employs a multi-objective optimization framework aimed at simultaneously improving several critical performance metrics, including cost efficiency, execution time, and load balancing. The study addresses the inherent challenges of task scheduling in cloud systems, where workload diversity and dynamic conditions require adaptive and intelligent solutions. A notable feature of the WOA-Scheduler is its support for user-defined weighting of objectives. Comparative evaluations included in the study demonstrate that the WOA-based scheduler outperforms traditional single-objective scheduling methods, particularly in scenarios requiring trade-offs among multiple conflicting goals. The algorithm achieves improved scheduling quality, better load distribution, and lower resource consumption, highlighting its effectiveness in managing the complexities of cloud computing environments. Despite its strengths, the study acknowledges that the performance of the WOA-Scheduler may be influenced by the tuning of algorithmic parameters and the selection of weight values. Inappropriate parameter settings could limit the algorithm’s ability to converge efficiently or balance objectives effectively under certain workloads.

Overall, the reviewed bio-inspired and swarm-based optimization techniques have demonstrated significant potential in addressing the task scheduling challenges within cloud computing environments. These methods have shown effectiveness in handling complex, multi-objective scenarios; however, they still face limitations, particularly in achieving an optimal balance between exploration and exploitation. To address these shortcomings and enhance scheduling efficiency, this paper introduces an optimization algorithm designed specifically for multi-criteria task scheduling in cloud computing systems. The proposed method aims to integrate the strengths of existing approaches while mitigating their inherent weaknesses, thus enabling more robust and scalable scheduling solutions.

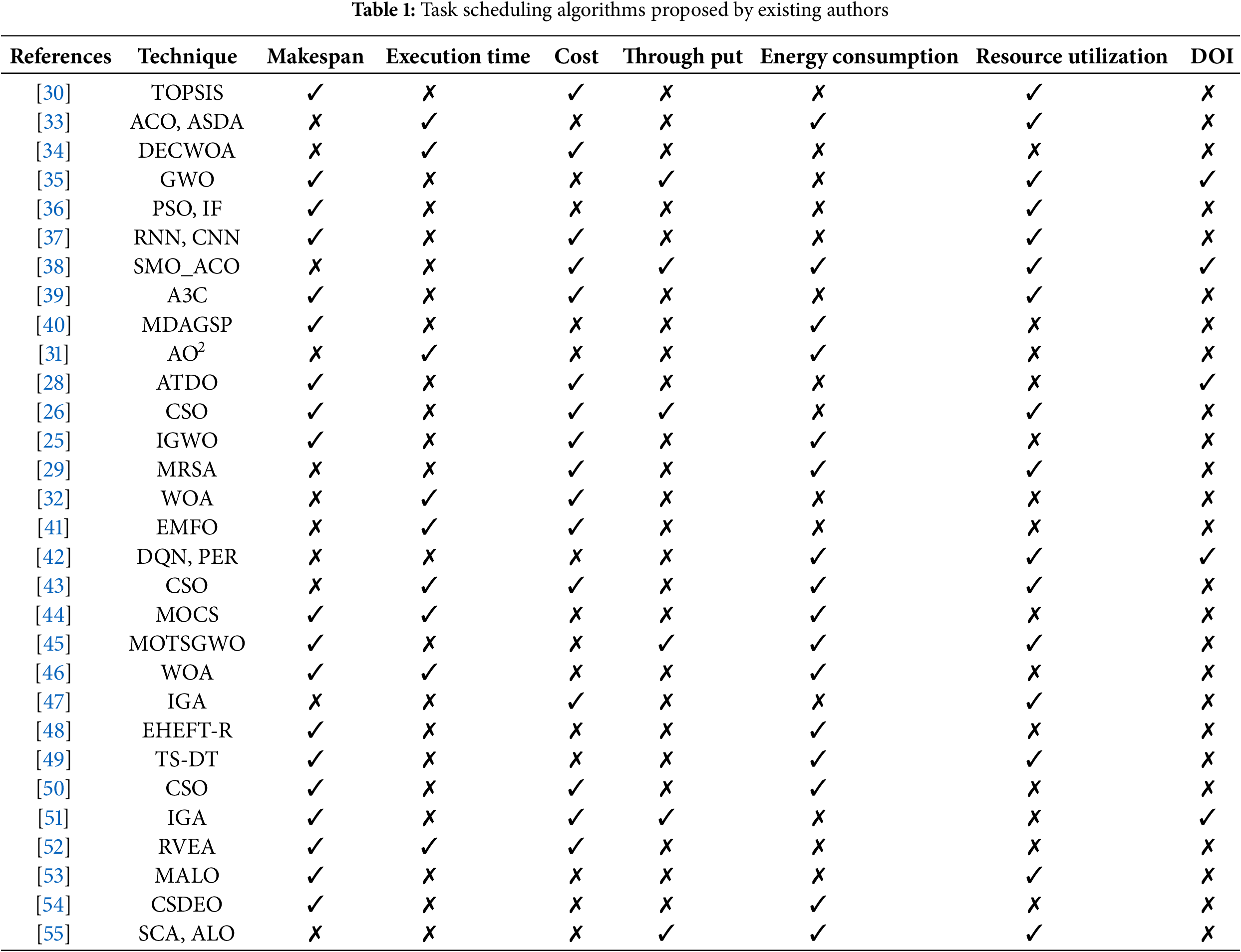

From the analysis presented in Table 1, it is evident that researchers have proposed a wide range of heuristic, metaheuristic, machine learning (ML), and deep learning (DL)-based approaches to address the task scheduling problem in cloud computing. These studies have aimed to optimize key performance indicators such as makespan, throughput, degree of imbalance, execution cost, energy consumption, and resource utilization. This comparative overview highlights both the diversity of optimization goals and the performance trade-offs inherent in current scheduling strategies.

3 Problem Description and Formulation

As previously discussed, the task scheduling problem in cloud computing involves assigning user-submitted tasks, often referred to as cloudlets, to a set of available virtual machines within a cloud environment. The infrastructure of a typical cloud data center consists of a pool of physical servers hosting both homogeneous and heterogeneous resources. These servers support multiple virtual machines through a virtualization layer that abstracts the physical hardware and provides clients with flexible and scalable computing resources.

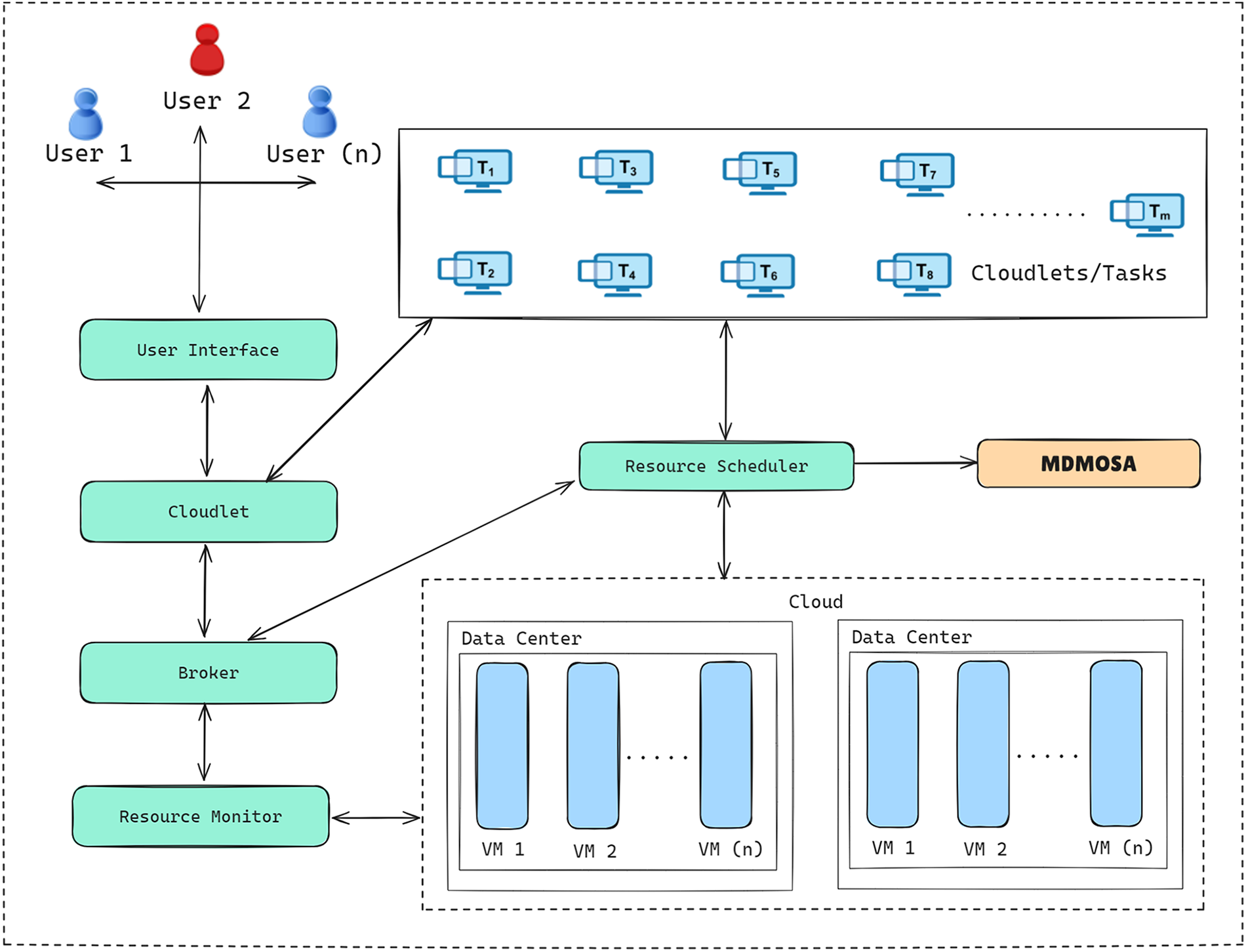

Fig. 1 illustrates the architectural framework for multi-objective task scheduling in a cloud computing environment. The process begins with multiple users submitting task requests (cloudlets) via a user interface. These tasks are managed by the cloudlet module and forwarded to the broker, which coordinates with the resource monitor to obtain real-time information on the status of virtual machines across data centers. Tasks are queued and passed to the resource scheduler, which leverages the proposed MDMOSA algorithm to determine optimal task-to-VM mappings. The scheduler considers key objectives such as minimizing makespan and execution cost. Virtual machines are hosted within one or more data centers and represent the execution environment for user tasks. The interaction among components ensures efficient, dynamic, and scalable task allocation in the cloud infrastructure.

Figure 1: The framework model for task scheduling in the cloud environment

The task scheduling problem addressed in this study focuses on minimizing both makespan and financial cost associated with executing large-scale tasks in an IaaS cloud computing environment. Efficient task scheduling is critical to improving resource utilization and maintaining quality of service in such large-scale, dynamic systems. This section introduces the foundational models that support the formulation of the proposed scheduling algorithm. These include the IaaS cloud data center model, the task execution model, and the formal definition of the task scheduling problem. Together, these models establish the basis for applying the MDMOSA algorithm to optimize scheduling decisions across multiple objectives in heterogeneous cloud environments.

An IaaS cloud data center provides computing resources to users through virtual machines, where each active virtual machine is referred to as an instance. IaaS providers typically offer various instance series, each representing a range of instance types that differ in combinations of CPU, memory, storage, and bandwidth configurations. In this study, CPU capacities are primarily used to estimate the expected execution time of tasks. It is assumed that the IaaS provider offers an unlimited pool of instances, denoted by the set

where

In IaaS-based cloud computing, service providers typically charge users based on the lease duration of virtual machine instances. The billing is usually calculated per unit time, though the pricing strategy varies between providers and over time. For example, Amazon EC2 has traditionally used a per-hour billing model, where users are charged for each full hour of instance usage, with fractional hours rounded up to the next full hour. In contrast, Microsoft Azure has offered a per-minute billing model, allowing finer-grained usage tracking and potentially more cost-effective resource consumption for short-duration tasks. Due to these varying pricing schemes, the proposed scheduling model adopts a generalized cost formulation that can accommodate multiple pricing strategies across different providers. Let
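
The effect of billing granularity described above can be sketched as follows. This is an illustration only, assuming usage is rounded up to whole billing units; the function name `lease_cost` and the prices are hypothetical and do not reproduce the paper's cost formulation.

```python
import math


def lease_cost(duration_s, price_per_unit, unit_s):
    """Cost of leasing one instance for duration_s seconds.

    Usage is rounded up to whole billing units of unit_s seconds each,
    so unit_s=3600 models per-hour billing and unit_s=60 per-minute billing.
    """
    if duration_s <= 0:
        return 0.0
    units = math.ceil(duration_s / unit_s)
    return units * price_per_unit


# A 61-minute task at a hypothetical rate of 0.12 per hour:
hourly = lease_cost(61 * 60, 0.12, 3600)         # billed as 2 full hours
per_minute = lease_cost(61 * 60, 0.12 / 60, 60)  # billed as 61 minutes
```

Under the same nominal rate, the per-hour scheme charges for two hours while the per-minute scheme charges for 61 minutes, which is why short tasks tend to be cheaper under finer-grained billing.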

3.2 Multi-Objective Task Scheduling Formulation

Given a set of independent tasks

here,

To support task-to-resource allocation decisions in heterogeneous environments, two matrices are used: the expected time to completion (ETC) matrix and the expected cost to completion (ECC) matrix. The ETC matrix, denoted

Similarly, the ECC matrix,

Both matrices are of dimension
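
A minimal sketch of how the two matrices could be built is given below. It assumes that task sizes are given in million instructions (MI), VM speeds in MIPS, and prices per second of use, and that both matrices have one row per task and one column per VM; all names and numbers are hypothetical.

```python
def build_etc(task_lengths_mi, vm_mips):
    """ETC[i][j]: expected execution time (s) of task i on VM j."""
    return [[length / mips for mips in vm_mips] for length in task_lengths_mi]


def build_ecc(etc, vm_price_per_s):
    """ECC[i][j]: expected cost of running task i on VM j."""
    return [[t * price for t, price in zip(row, vm_price_per_s)] for row in etc]


task_lengths_mi = [4000, 12000, 8000]  # hypothetical task sizes (MI)
vm_mips = [1000, 2000]                 # hypothetical VM speeds (MIPS)
vm_price_per_s = [0.01, 0.03]          # hypothetical prices per second

etc = build_etc(task_lengths_mi, vm_mips)  # 3 tasks x 2 VMs
ecc = build_ecc(etc, vm_price_per_s)
```

In this toy instance the faster VM halves every execution time but costs three times as much per second, so the two matrices pull the scheduler in opposite directions.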

The overall makespan is determined as the maximum finish time among all tasks as shown in Eq. (6):

The cost is computed by summing up the lease cost of the instances used for each task, as shown in Eq. (7):
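
The two objectives can be evaluated for a given schedule as sketched below. The sketch assumes that each VM runs its assigned tasks back to back starting at time zero, so the latest task finish time equals the largest per-VM load, and that the lease cost of a task is read from an ECC-style matrix; function names and values are hypothetical.

```python
def makespan(assignment, etc, n_vms):
    """Latest finish time, assuming each VM runs its tasks back to back."""
    vm_finish = [0.0] * n_vms
    for task, vm in enumerate(assignment):
        vm_finish[vm] += etc[task][vm]
    return max(vm_finish)


def total_cost(assignment, ecc):
    """Sum of the per-task lease costs under the given assignment."""
    return sum(ecc[task][vm] for task, vm in enumerate(assignment))


etc = [[4.0, 2.0], [12.0, 6.0], [8.0, 4.0]]       # hypothetical times (s)
ecc = [[0.04, 0.06], [0.12, 0.18], [0.08, 0.12]]  # hypothetical costs
assignment = [0, 1, 0]  # task 0 -> VM 0, task 1 -> VM 1, task 2 -> VM 0

ms = makespan(assignment, etc, n_vms=2)
cost = total_cost(assignment, ecc)
```

Here VM 0 is busy for 12 seconds and VM 1 for 6 seconds, so the makespan is 12 seconds, while the cost is the sum of the three selected ECC entries.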

In this study, for simplicity, we assume that only one instance series and one pricing model are considered, and the instances are compute intensive. The multi-objective task scheduling problem is formally stated in Eq. (8) as:
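
The formal statement is not reproduced in this text. One formulation consistent with the verbal descriptions of the makespan and cost objectives above is sketched here. The notation is an assumption rather than the authors' own: $x_{ij} = 1$ if task $i$ is assigned to instance $j$ and $0$ otherwise, $FT_i$ is the finish time of task $i$, $ECC_{ij}$ is the expected cost of task $i$ on instance $j$, and $n$ and $m$ are the numbers of tasks and instances.

```latex
\begin{aligned}
\text{minimize}\quad & F(x) = \bigl(\mathit{Makespan}(x),\ \mathit{Cost}(x)\bigr) \\
\text{where}\quad & \mathit{Makespan}(x) = \max_{1 \le i \le n} FT_i, \qquad
                    \mathit{Cost}(x) = \sum_{i=1}^{n} \sum_{j=1}^{m} x_{ij}\, ECC_{ij} \\
\text{subject to}\quad & \sum_{j=1}^{m} x_{ij} = 1 \quad \forall i \in \{1, \dots, n\}, \qquad
                         x_{ij} \in \{0, 1\}
\end{aligned}
```

The constraint states that every task is mapped to exactly one instance, which matches the assumption of independent, non-split tasks.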

4 Framework of Dwarf Mongoose Optimization (DMO) Algorithm

The present study builds upon the DMO algorithm by refining and adapting it to address the NP-hard problem of task scheduling in cloud environments. The proposed enhancements include strategies for improving initialization, mechanisms to preserve high-quality solutions during evolution, an embedded simulated annealing local search to strengthen exploitation, a balanced objective function that jointly considers makespan and cost, and the maintenance of a Pareto archive for multi-objective evaluation. These refinements make MDMOSA more suitable for dynamic cloud environments and enable it to achieve consistent improvements in makespan, cost, and resource utilization when compared with baseline approaches.
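To make the idea of a balanced objective concrete, the sketch below evaluates a task-to-VM assignment against ETC and ECC matrices and combines the two objectives with a normalized weighted sum. It is an illustration under stated assumptions, not the exact fitness function of MDMOSA: the matrix layout (rows are tasks, columns are VMs), the reference values used for normalization, and the weight `w` are all hypothetical.

```python
from typing import List, Sequence, Tuple


def evaluate(schedule: Sequence[int],
             etc: List[List[float]],
             ecc: List[List[float]]) -> Tuple[float, float]:
    """Return (makespan, total cost), where schedule[i] is the VM index of task i."""
    n_vms = len(etc[0])
    vm_finish = [0.0] * n_vms
    total_cost = 0.0
    for task, vm in enumerate(schedule):
        vm_finish[vm] += etc[task][vm]   # tasks on the same VM run back to back
        total_cost += ecc[task][vm]      # cost of running this task on this VM
    return max(vm_finish), total_cost


def weighted_fitness(makespan: float, cost: float,
                     ref_makespan: float, ref_cost: float,
                     w: float = 0.5) -> float:
    """Normalized weighted sum; w is an assumed trade-off weight in [0, 1]."""
    return w * makespan / ref_makespan + (1.0 - w) * cost / ref_cost


# Three tasks, two VMs; all numbers are illustrative.
etc = [[4.0, 2.0], [6.0, 3.0], [8.0, 4.0]]
ecc = [[1.0, 2.0], [1.5, 3.0], [2.0, 4.0]]
makespan, cost = evaluate([0, 1, 1], etc, ecc)
fitness = weighted_fitness(makespan, cost, ref_makespan=7.0, ref_cost=8.0)
```

Dividing each objective by a reference value keeps makespan and cost on comparable scales before weighting, so neither dominates the sum merely because of its units.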

The DMO algorithm draws inspiration from the unique behavioral patterns of the dwarf mongoose (Helogale spp.), a small but socially advanced species. Their collective behaviors form a rich blueprint for solving complex computational problems, particularly in dynamic, resource-constrained environments such as cloud task scheduling. Dwarf mongooses live in tightly coordinated groups that rely on clear communication and well-defined roles. The alpha female leads the group, initiates foraging activities, and controls reproductive rights, symbolizing centralized decision-making in optimization. Meanwhile, subgroups such as scouts and babysitters support operations, mirroring agent roles in swarm-based algorithms. A central survival strategy is their semi-nomadic foraging behavior, which alternates between intensive local searches and extensive wide-range exploration. This naturally maps to the core exploration and exploitation balance in metaheuristics. Furthermore, the group frequently relocates their territory or mound in response to resource depletion or danger, representing the algorithm’s capacity to dynamically adapt to shifting search landscapes. Territorial boundaries are enforced via scent-marking, which provides safety for group members and deters intruders. Algorithmically, this mechanism inspires the use of memory boundaries and solution space constraints to keep search agents focused and effective. Additionally, their population control strategy, which prefers shrinking group size during resource scarcity, informs how resource consumption and task load can be balanced efficiently. Finally, their aggressive cooperative defense behavior, especially in response to predators, highlights a collective mechanism for responding to suboptimal or violated solutions. This encourages the swarm to redirect or correct itself for better global outcomes. 
These nuanced survival strategies of the dwarf mongoose, ranging from social hierarchy and communication to adaptive mobility and foraging, are abstracted into the DMO algorithm to enhance its performance in solving multi-objective task scheduling problems in cloud environments.

The DMO algorithm is inspired by the natural behavior and social structure of the dwarf mongoose. The model draws on the unique organization of the population, which includes distinct subgroups such as alpha leaders, scouts, and babysitters. These behavioral roles are abstracted into computational processes in which the exploration and exploitation phases of optimization are represented by foraging and group relocation activities, respectively. During the exploration phase, scout mongooses leave the mound to search for food, while babysitters remain behind to protect and care for the young. This behavioral distinction reflects a division of labor that maintains group stability and supports adaptive decision-making. When scouts identify a new food source, the entire group relocates to a new mound. This relocation is modeled as the exploitation phase of the optimization process, where the algorithm intensifies its search around promising solutions. The model also supports the dynamic reassignment of roles, particularly the exchange of babysitters among scout members. This exchange facilitates flexibility and diversity in agent behavior, helping to prevent premature convergence and maintain the balance between global and local search capabilities. The simulation of mound discovery adds further realism to the model by representing how dwarf mongooses adaptively shift their search regions based on environmental feedback.

The mathematical foundation of the DMO algorithm is based on modeling the behavioral patterns of the dwarf mongoose population. The population consists of individuals representing candidate solutions, categorized into subgroups including alpha, scouts, and babysitters. The entire population is denoted by a position matrix

The alpha subgroup, particularly the alpha female

The scout group, responsible for exploration and identifying new regions of the solution space, is updated based on a movement strategy that considers group coordination and volatility. The new position of a scout is calculated using the conditional rule described in Eq. (11):

In this equation,

In addition to group movement, individual scout and babysitter members also update their positions based on alpha influence and randomness. This process, which incorporates the alpha female’s guidance, is expressed in Eq. (12):

here, the parameter

This measure enables the algorithm to evaluate whether a change in position has led to a better solution, thereby influencing subsequent movement and adaptation.
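As an illustration of such a measure, the sketch below uses the sleeping-mound formulation commonly given in the DMO literature, in which the change in fitness is divided by the larger of the two absolute fitness values. Whether the present study uses exactly this form is an assumption, and the function names are hypothetical.

```python
from typing import Sequence


def sleeping_mound(fit_new: float, fit_old: float) -> float:
    """Relative fitness change after a position update (assumed DMO-style measure)."""
    denom = max(abs(fit_new), abs(fit_old))
    if denom == 0.0:
        return 0.0  # both fitness values are zero, so nothing changed
    return (fit_new - fit_old) / denom


def mean_sleeping_mound(new_fits: Sequence[float], old_fits: Sequence[float]) -> float:
    """Average of the measure over the population."""
    values = [sleeping_mound(n, o) for n, o in zip(new_fits, old_fits)]
    return sum(values) / len(values)


# Under minimization, a negative value means the move improved the solution.
improved = sleeping_mound(90.0, 100.0)
worsened = sleeping_mound(110.0, 100.0)
average = mean_sleeping_mound([90.0, 110.0], [100.0, 100.0])
```

Because the measure is relative, it can be compared across individuals whose fitness values differ widely in magnitude.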

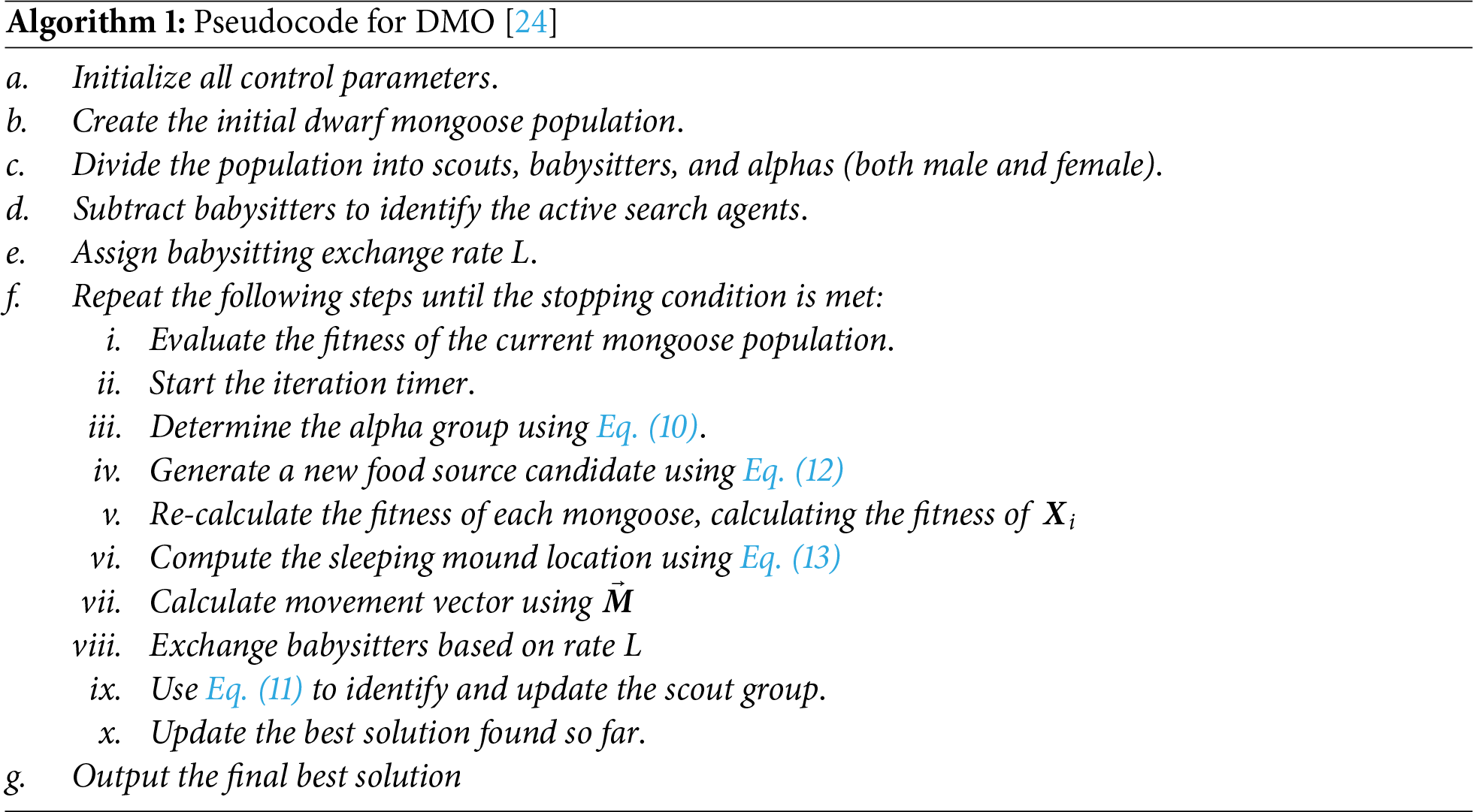

The pseudocode for the algorithm is given in Algorithm 1.

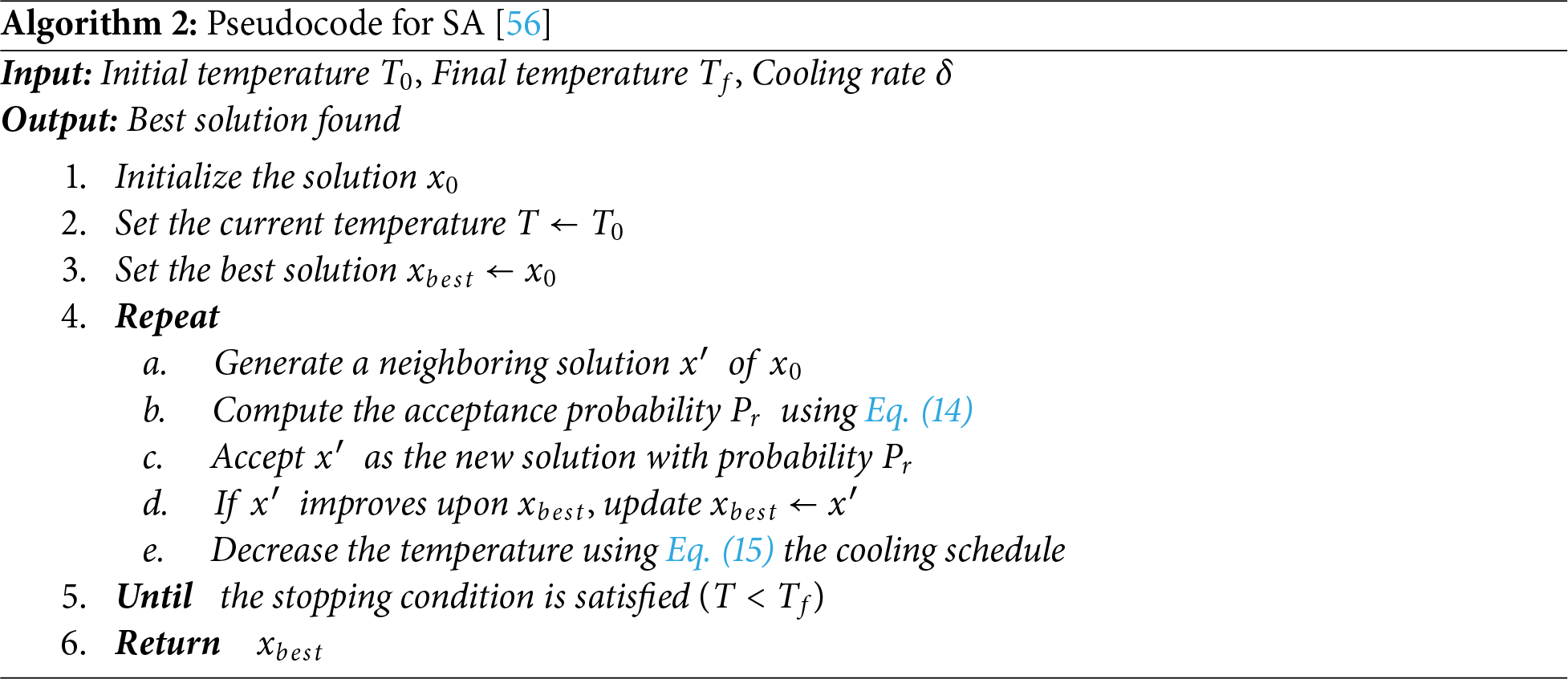

Simulated Annealing (SA) is a robust and probabilistic optimization method inspired by the annealing process in physical systems. In metallurgy, annealing involves heating a solid material to a high temperature and then slowly cooling it. As the temperature rises, the internal energy and molecular disorder increase; when the system is gradually cooled, particles settle into a low-energy, stable configuration. This principle is mapped into optimization by treating the solution’s quality as internal energy and controlling the search behavior using a temperature parameter. In optimization contexts, the SA algorithm begins with an initial solution

here,

where

The basic structure of SA algorithm is presented as Algorithm 2.

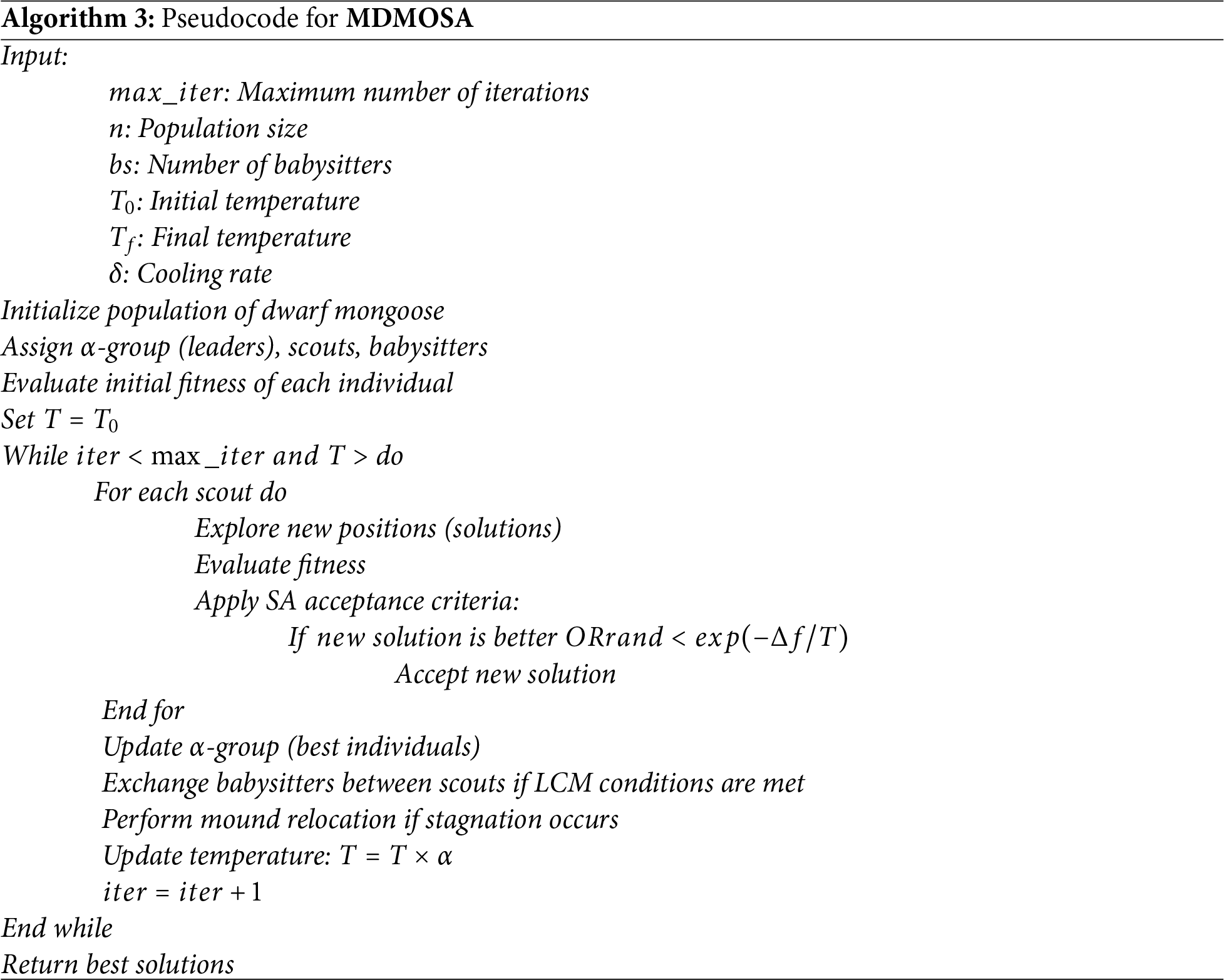
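The acceptance rule and cooling process described above can be sketched in a few lines. The version below is a generic SA loop, not a transcription of Algorithm 2: it assumes the Metropolis acceptance criterion and a geometric cooling schedule, and the parameter names `t0`, `t_min`, and `alpha` and their default values are illustrative.

```python
import math
import random


def acceptance_probability(delta: float, temperature: float) -> float:
    """Metropolis rule: always accept improvements, sometimes accept worse moves."""
    if delta <= 0:
        return 1.0
    return math.exp(-delta / temperature)


def simulated_annealing(cost, neighbor, initial,
                        t0=100.0, t_min=1e-3, alpha=0.95, rng=None):
    """Minimize cost, starting from initial and cooling geometrically (assumed)."""
    rng = rng or random.Random()
    current, current_cost = initial, cost(initial)
    best, best_cost = current, current_cost
    t = t0
    while t > t_min:
        candidate = neighbor(current, rng)
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        if rng.random() < acceptance_probability(delta, t):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = current, current_cost
        t *= alpha  # lower the temperature after every trial move
    return best, best_cost


# Toy usage: minimize (x - 3)^2 starting far from the optimum.
best_x, best_val = simulated_annealing(
    cost=lambda x: (x - 3.0) ** 2,
    neighbor=lambda x, rng: x + rng.uniform(-1.0, 1.0),
    initial=10.0,
    rng=random.Random(0),
)
```

At high temperature almost every move is accepted, which favors exploration; as the temperature falls, the loop accepts worse moves less often and behaves increasingly like hill climbing.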

4.4 Multi-Objective Dwarf Mongoose Optimization for Task Scheduling Optimization Algorithm

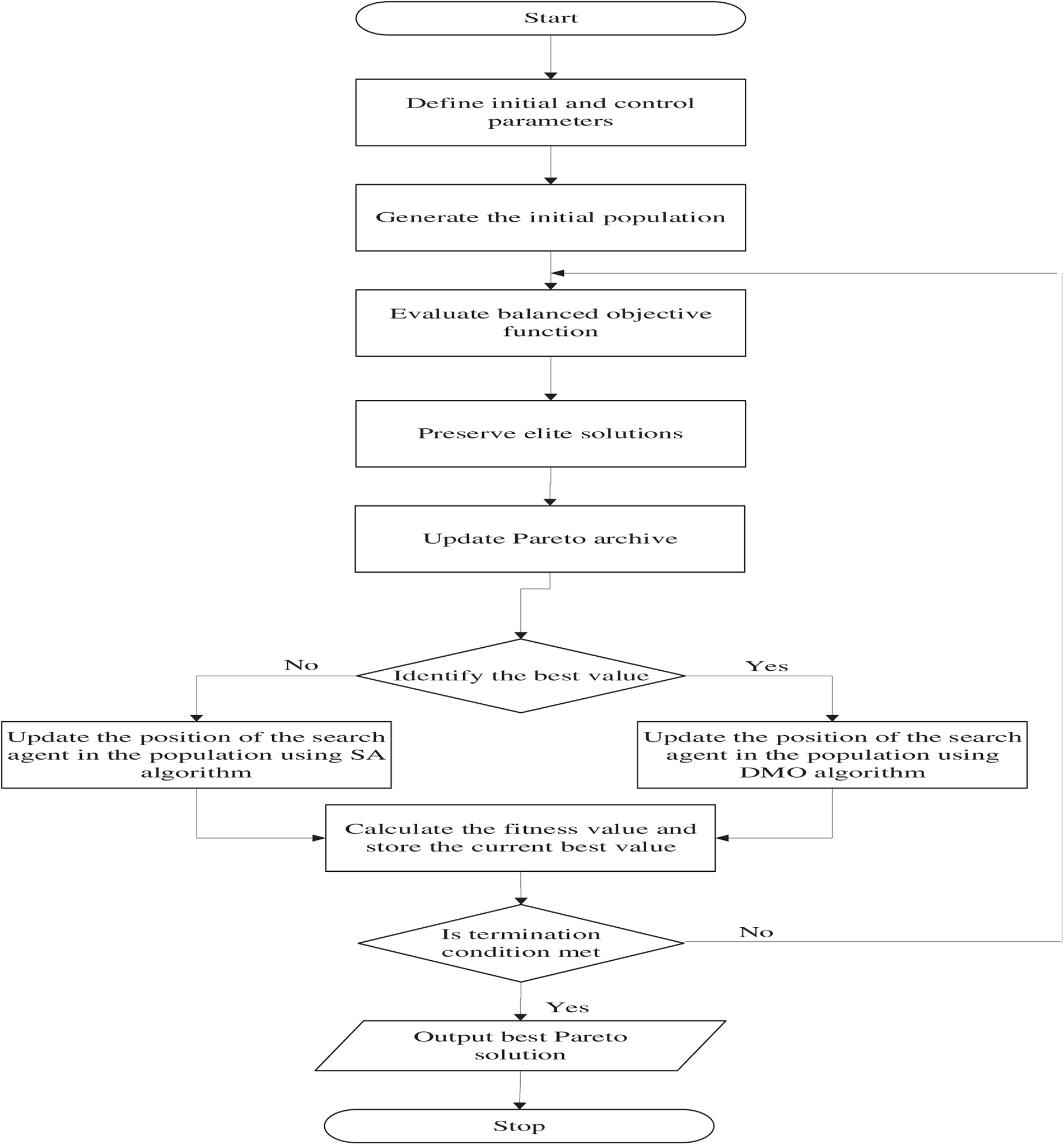

While the basic DMO algorithm exhibits strong global search capabilities through its biologically inspired exploration-exploitation behaviors, it faces certain limitations. In particular, DMO in its basic form may converge prematurely to local optima, especially in complex and high-dimensional search spaces such as multi-objective task scheduling in cloud environments. To address these limitations, this study introduces MDMOSA (multi-objective dwarf mongoose optimization with simulated annealing), a hybrid optimization algorithm that enhances the original DMO with the adaptive local search strategy of SA. The integration is designed to reinforce the algorithm’s exploration-exploitation balance, allowing it to escape local optima while steadily converging toward global solutions. In MDMOSA, the global exploration conducted by the scout and alpha-based behaviors of the mongoose population is complemented by the SA mechanism, which probabilistically accepts inferior solutions based on a temperature schedule. This enables the algorithm to maintain population diversity and refine solutions that would otherwise be overlooked. The synergy between DMO’s natural modeling and SA’s stochastic hill-climbing is intended to improve convergence stability, solution quality, and optimization robustness in multi-objective scenarios involving cost, makespan, and resource utilization. The pseudocode of the MDMOSA algorithm is outlined in Algorithm 3, while the flowchart in Fig. 2 illustrates the overall workflow and interactions among its key components.

Figure 2: Flowchart of MDMOSA technique

5 Performance Evaluation and Results Analysis

To evaluate the performance, scalability, and robustness of the proposed MDMOSA algorithm for multi-objective task scheduling in cloud computing environments, a series of simulations was conducted using the CloudSim 3.0.3 toolkit, a widely adopted discrete-event simulator designed specifically for modeling and experimenting with cloud computing infrastructures and services. CloudSim provides a flexible and extensible platform to simulate virtualized data centers, resource provisioning policies, and application workloads under controlled and repeatable conditions.

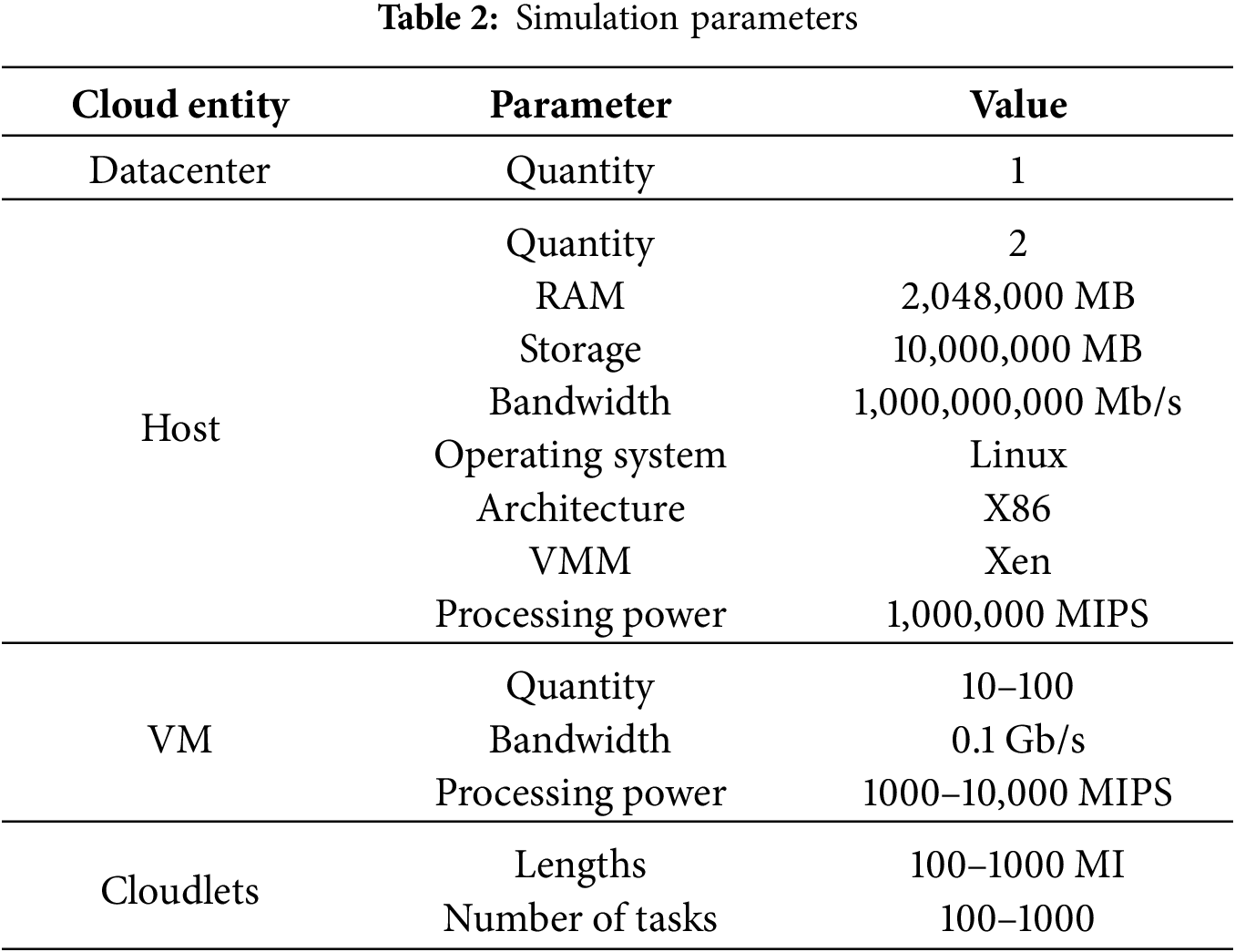

The simulations were executed on a machine running Windows 11, equipped with a 12th Generation Intel(R) Core(TM) i7-12700H processor operating at 2.70 GHz and 16 GB of RAM. All competing algorithms were executed for a fixed number of 100 iterations, which served as the stopping criterion. This choice ensures a fair and consistent basis for comparison. The specification of users, cloudlets, hosts, virtual machines, and data centers used for the computational results and significance analysis is summarized in Table 2. These configurations are consistent with recent studies and adopted standards in the field. To assess the scalability and robustness of the proposed MDMOSA algorithm, a diverse range of cloudlet counts was utilized. Larger cloudlet sets were introduced to simulate complex workloads and gain insight into the performance of the algorithms under increasing problem scales and moderate user demands.

In real-world cloud computing systems, numerous virtual machines are typically employed to manage complex services and execute large-scale computational tasks. As such, evaluating task scheduling algorithms solely on synthetic or simulated datasets may not provide an accurate measure of their practical performance. To overcome this limitation, the proposed MDMOSA algorithm is also evaluated using a real-world benchmark known as the Google Cloud Jobs (GoCJ) dataset [57]. The GoCJ dataset captures realistic workload behavior by incorporating task size information derived from actual Google cluster traces and MapReduce execution logs. It includes 21 text files, each containing task sizes represented in millions of instructions (MI). These files are systematically labeled as “GoCJDatasetXXX.txt,” where “XXX” denotes the number of tasks within that file. For example, the file “GoCJDataset400.txt” includes task specifications for 400 jobs. By leveraging this dataset, the performance of the MDMOSA algorithm can be assessed under more practical conditions that closely mirror real-life cloud environments. This enhances the reliability and credibility of the evaluation results.
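The naming convention described above makes it straightforward to recover the task count from a file name and to load the task sizes. The sketch below assumes that each file lists one integer task size (in MI) per line; that layout, the helper names, and the sample values are assumptions for illustration rather than a description of the dataset's actual contents.

```python
import re
from typing import List


def task_count_from_name(filename: str) -> int:
    """Extract XXX from a name of the form GoCJDatasetXXX.txt."""
    match = re.fullmatch(r"GoCJDataset(\d+)\.txt", filename)
    if match is None:
        raise ValueError(f"unexpected GoCJ file name: {filename}")
    return int(match.group(1))


def parse_task_sizes(text: str) -> List[int]:
    """Parse task sizes in MI, assuming one integer per non-empty line."""
    return [int(line) for line in text.splitlines() if line.strip()]


# Illustrative content only; real files would be read from disk.
sample = "15000\n59000\n\n101000\n"
sizes = parse_task_sizes(sample)
n_tasks = task_count_from_name("GoCJDataset400.txt")
```

A simple consistency check when loading a real file is to compare the number of parsed sizes with the count encoded in its name.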

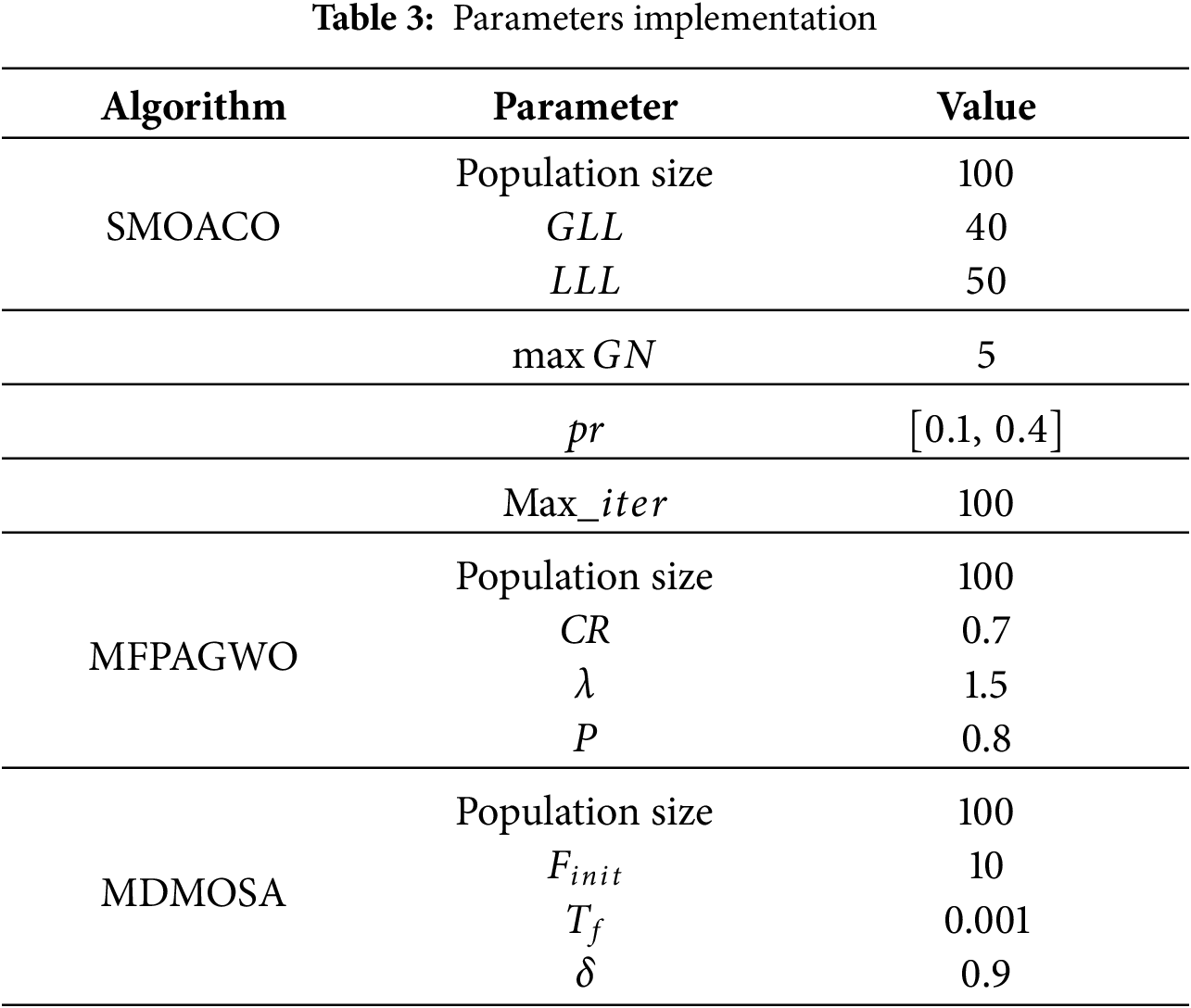

For comparative evaluation, the proposed MDMOSA algorithm is benchmarked against three recent multi-objective scheduling algorithms: hybridized Spider Monkey Optimization and Ant Colony Optimization (SMOACO) [38], Grey Wolf Optimization (MOTSGWO) [45], and hybridized Flower Pollination Algorithm and Grey Wolf Optimizer (MFPAGWO) [35]. The parameter settings for each of these algorithms were chosen based on established configurations in the literature to ensure a fair and reliable comparison, as outlined in Table 3. Each algorithm was evaluated using key performance metrics, including makespan and execution cost in the context of IaaS cloud computing environments. These metrics are particularly relevant for assessing the effectiveness of task scheduling strategies in optimizing computational efficiency and economic cost in virtualized resource allocation.

To ensure statistical validity, each simulation was executed 20 times under the same conditions. The mean, standard deviation, and best value of each metric were recorded and analyzed. This approach helps capture the consistency and reliability of the algorithms across multiple runs, revealing not only the average performance but also the variability and potential extremes in the outcomes. The comprehensive analysis allows for a deeper understanding of the comparative advantages and limitations of each scheduling technique under varying workload intensities.
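The per-metric summary described above can be computed directly from the values recorded over the repeated runs. The sketch below is a minimal illustration; the run values are made up, and the use of the sample standard deviation (rather than the population form) is an assumption, since the text does not specify which was used.

```python
import statistics
from typing import Dict, Sequence


def summarize_runs(values: Sequence[float]) -> Dict[str, float]:
    """Mean, sample standard deviation, and best (lowest) value over repeated runs."""
    return {
        "mean": statistics.mean(values),
        "std": statistics.stdev(values),   # sample form, n - 1 in the denominator
        "best": min(values),               # lower is better for makespan and cost
    }


# Illustrative makespan values from five hypothetical runs.
runs = [342.0, 345.5, 340.2, 343.1, 341.7]
summary = summarize_runs(runs)
```

Reporting the standard deviation alongside the mean is what allows stability across runs to be compared, not just average quality.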

5.4 Results Analysis and Discussion

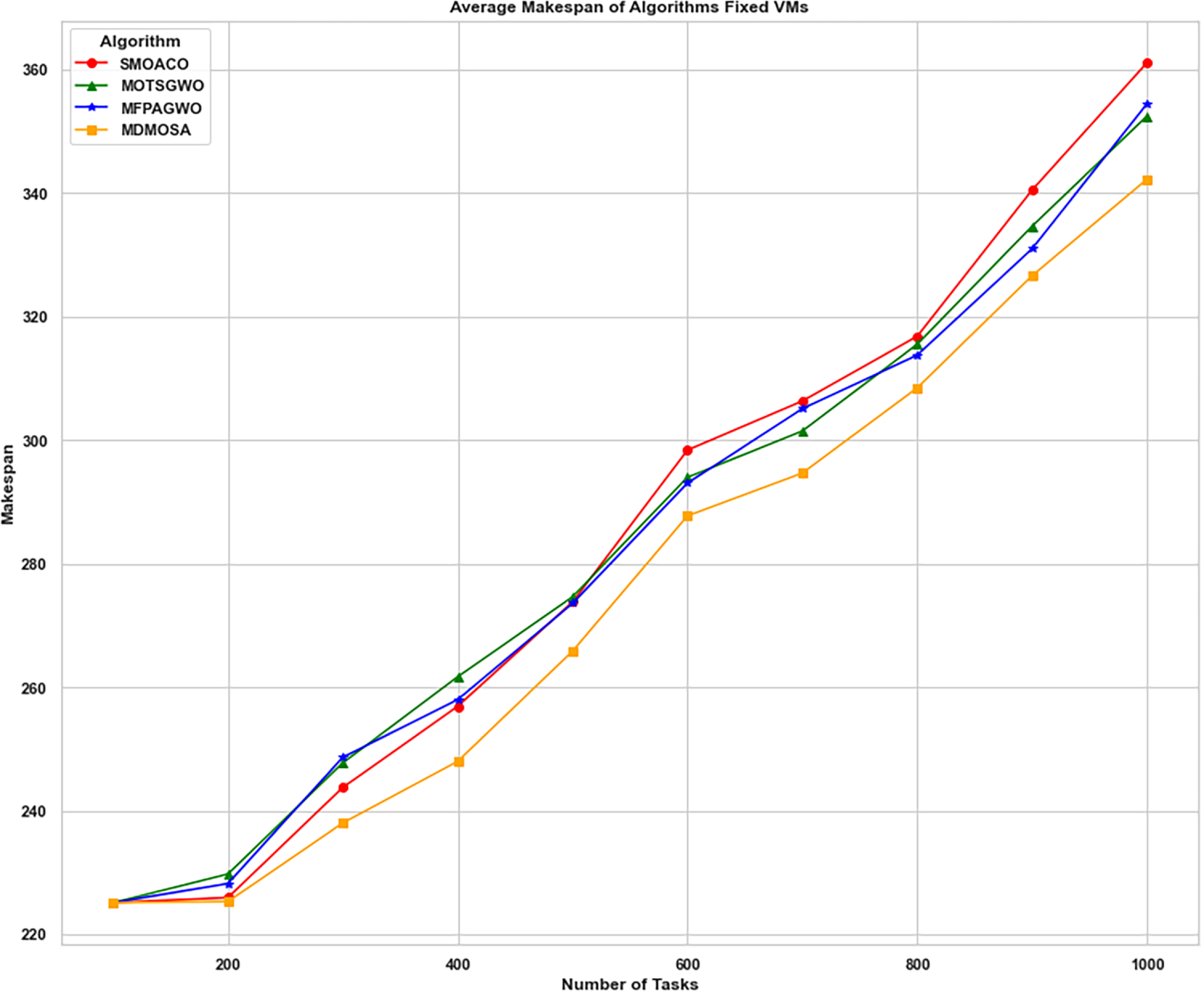

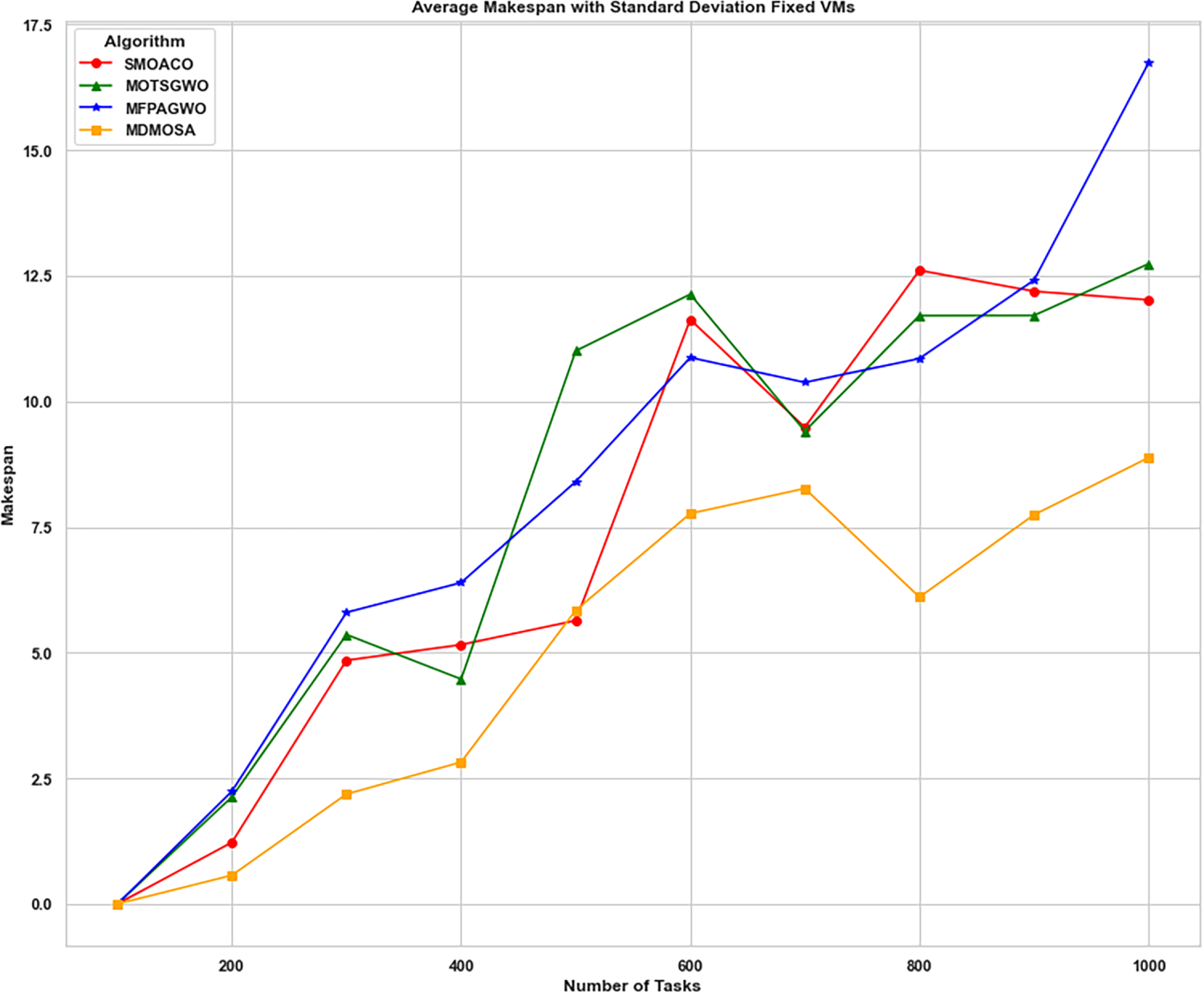

This section presents and analyzes the computational results obtained from the simulation, with a focus on statistical significance to evaluate the effectiveness of the proposed MDMOSA algorithm for multi-objective task scheduling in an IaaS cloud computing environment. Key performance metrics including makespan, execution cost, and resource utilization are assessed and visualized to determine the efficiency of the scheduling approach, as shown in Figs. 3–12.

Figure 3: Average makespan per dataset with fixed VMs

Figure 4: Standard deviation of makespan per dataset with fixed VMs

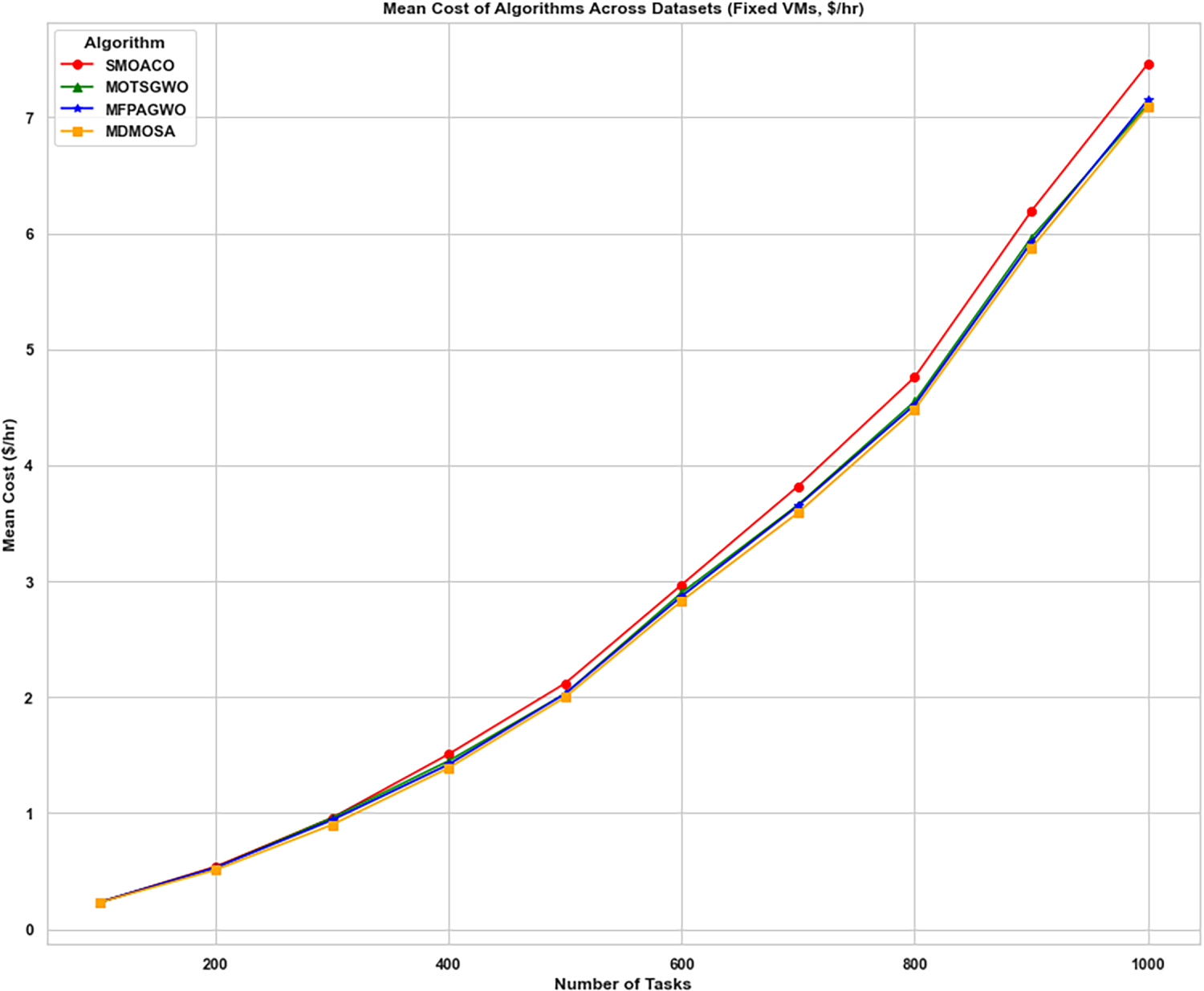

Figure 5: Average cost per dataset with fixed VMs

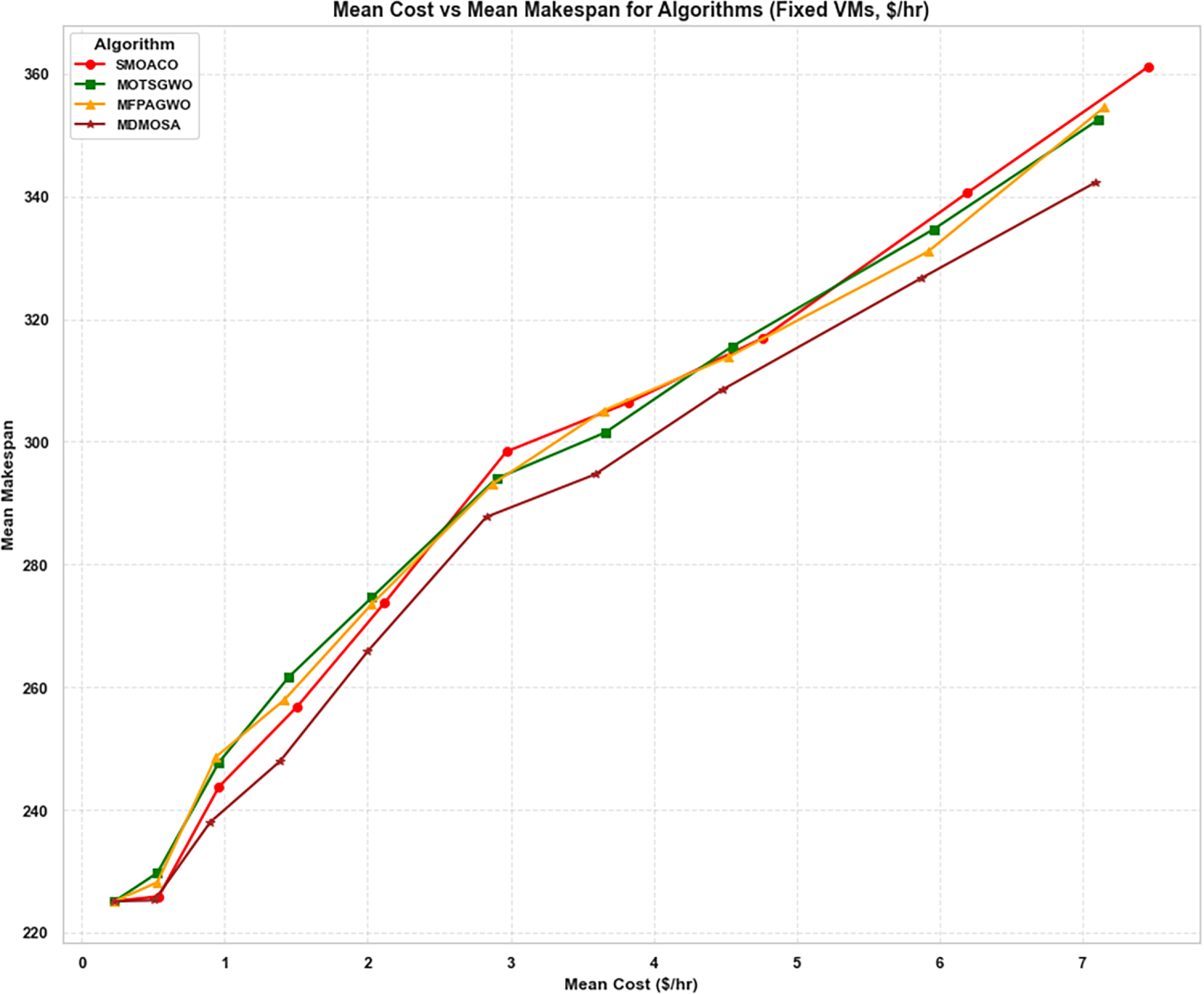

Figure 6: Trade-off between cost and makespan across fixed VMs

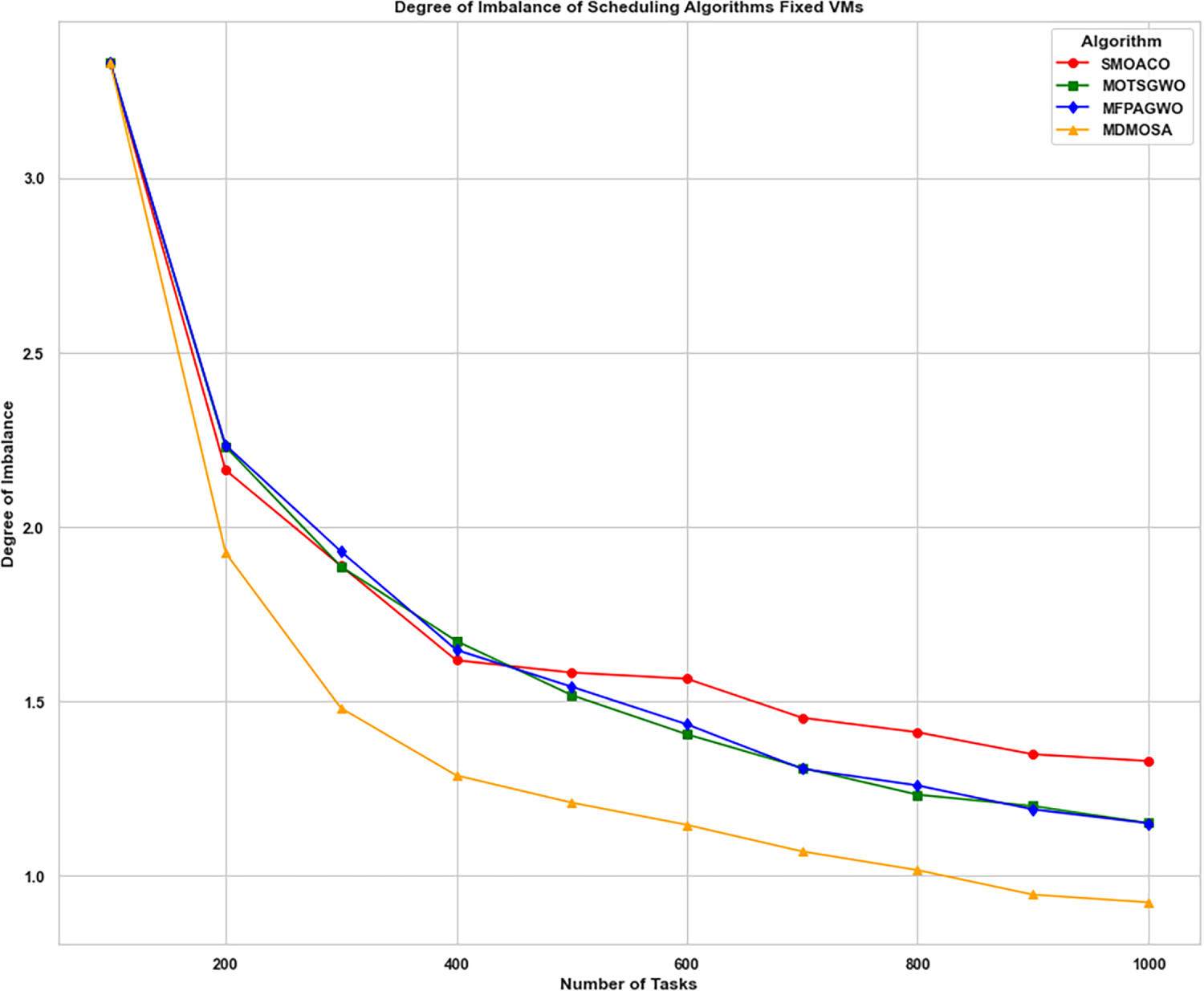

Figure 7: Degree of imbalance per dataset with fixed VMs

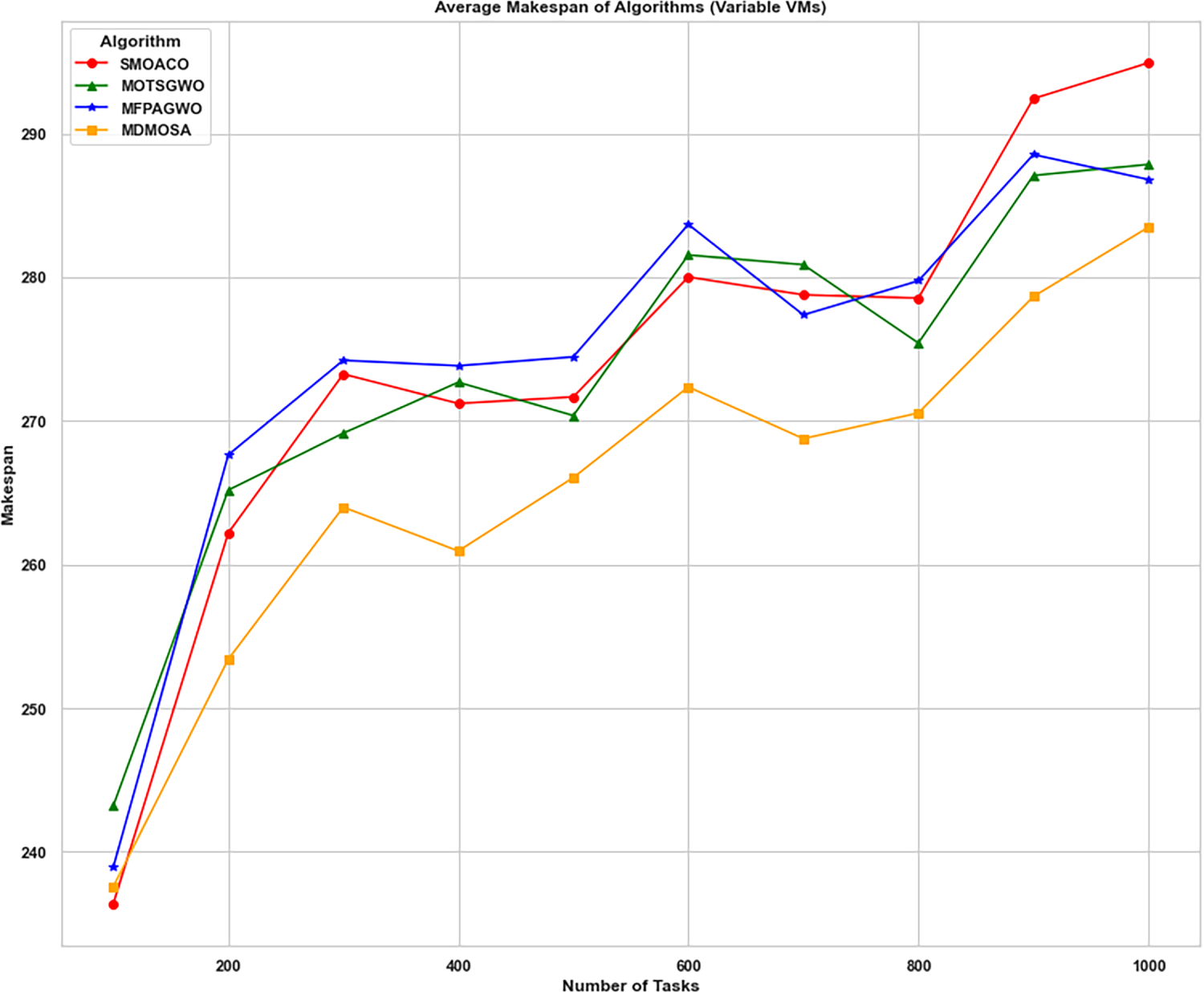

Figure 8: Average makespan per dataset with variable VMs

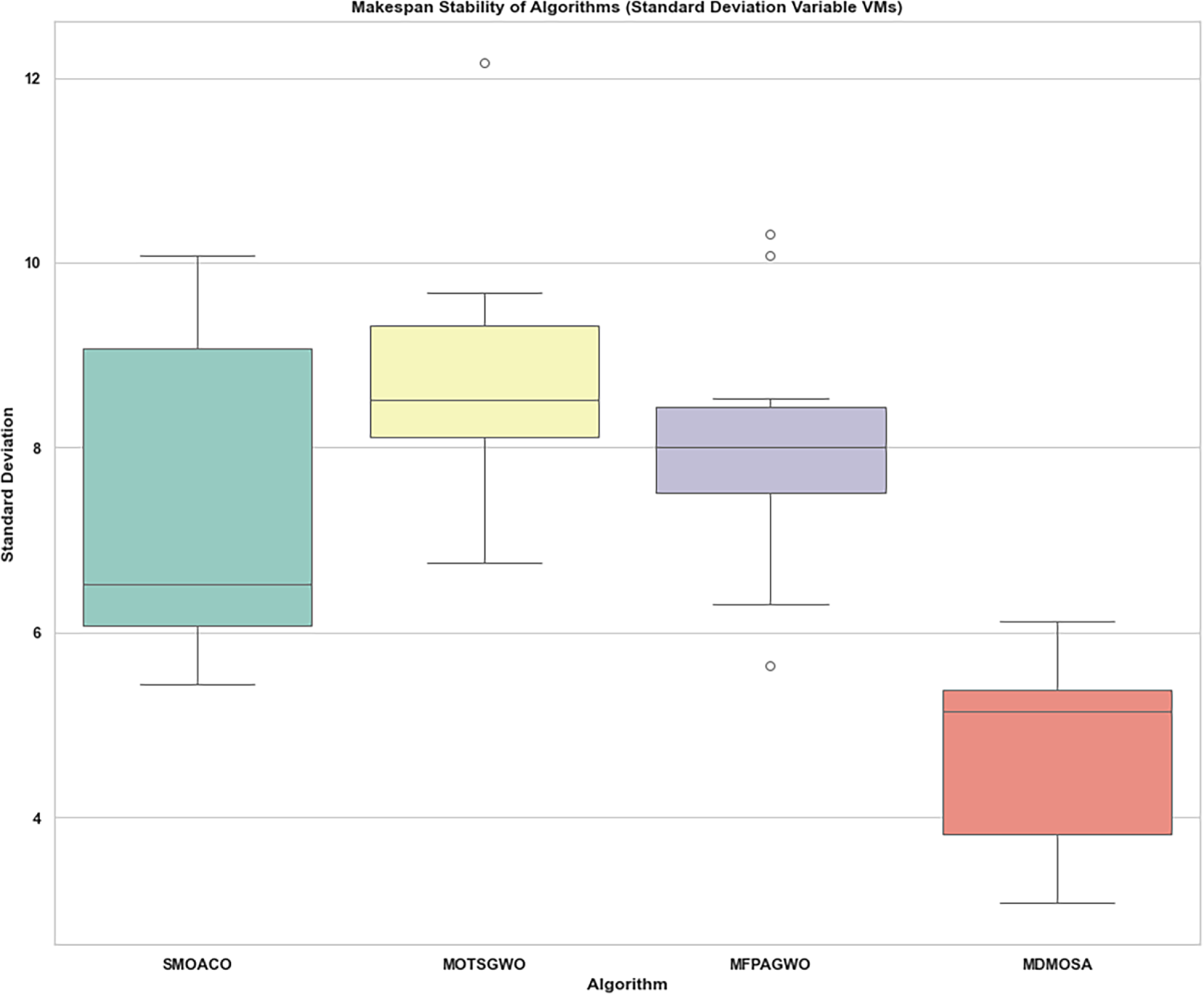

Figure 9: Boxplot analysis of makespan stability under variable VMs

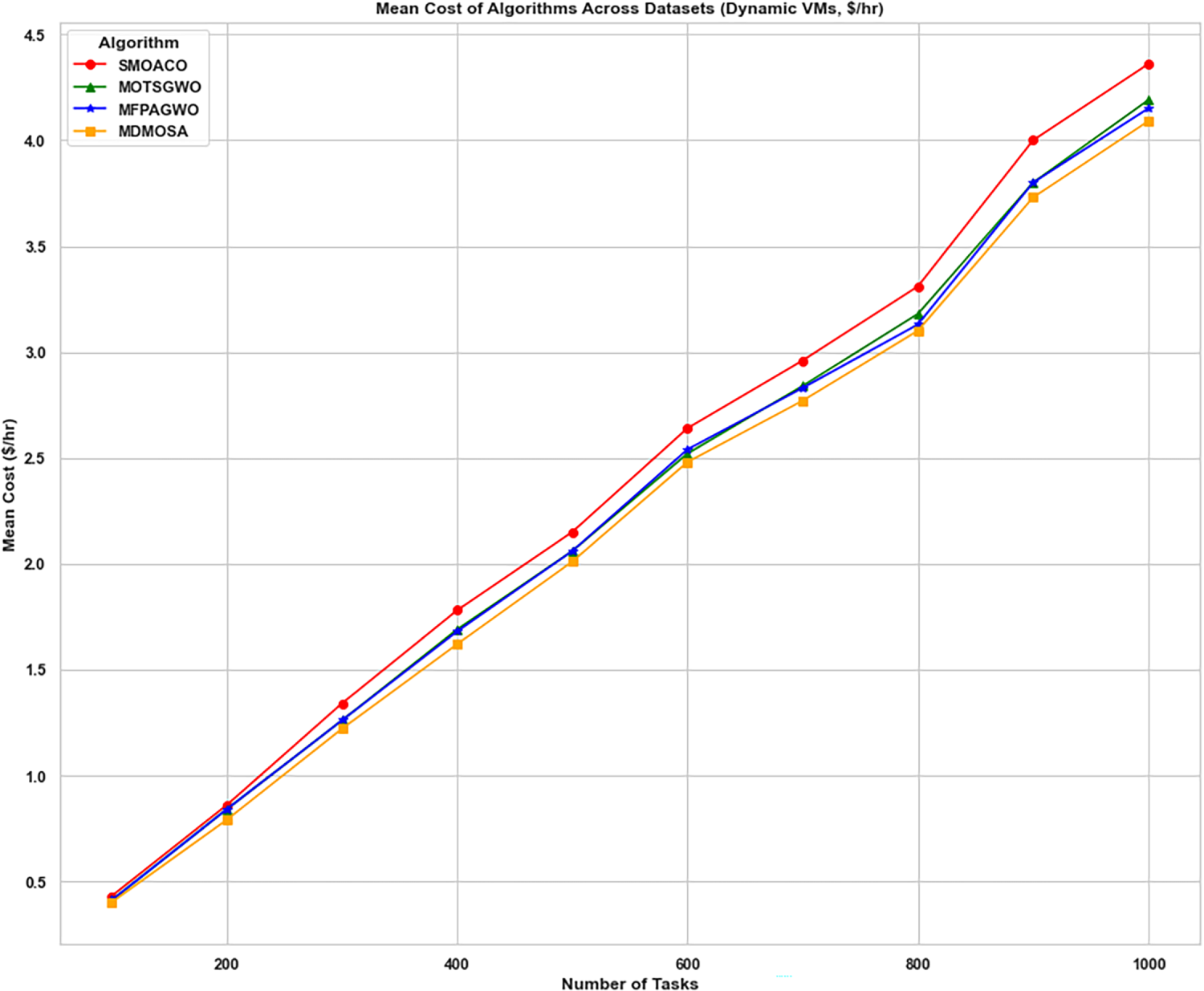

Figure 10: Average cost under variable VMs

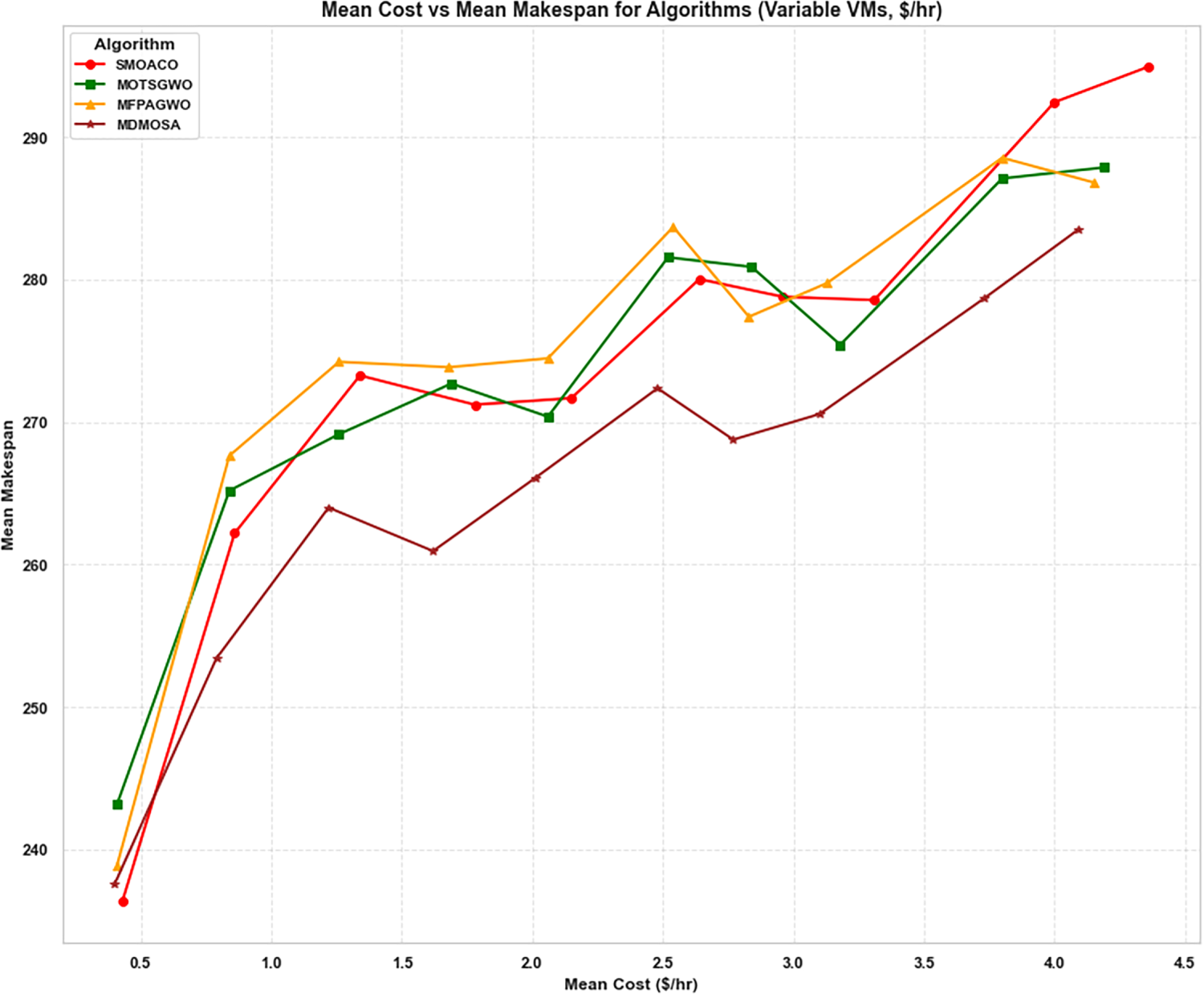

Figure 11: Trade-off between mean cost and mean makespan across variable VMs

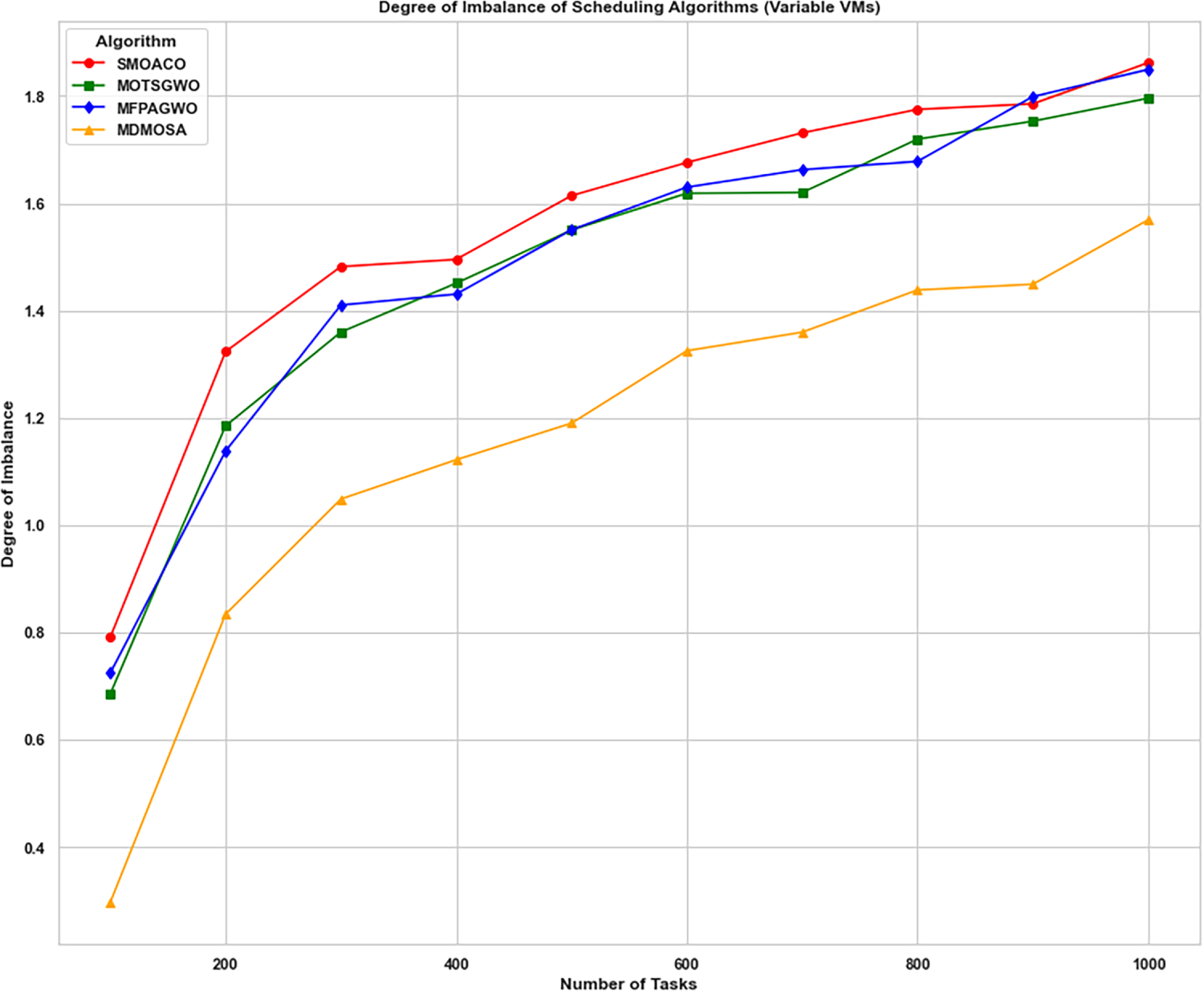

Figure 12: Degree of Imbalance per dataset with variable VMs

5.4.1 Comparison of Performance under a Fixed Number of VMs

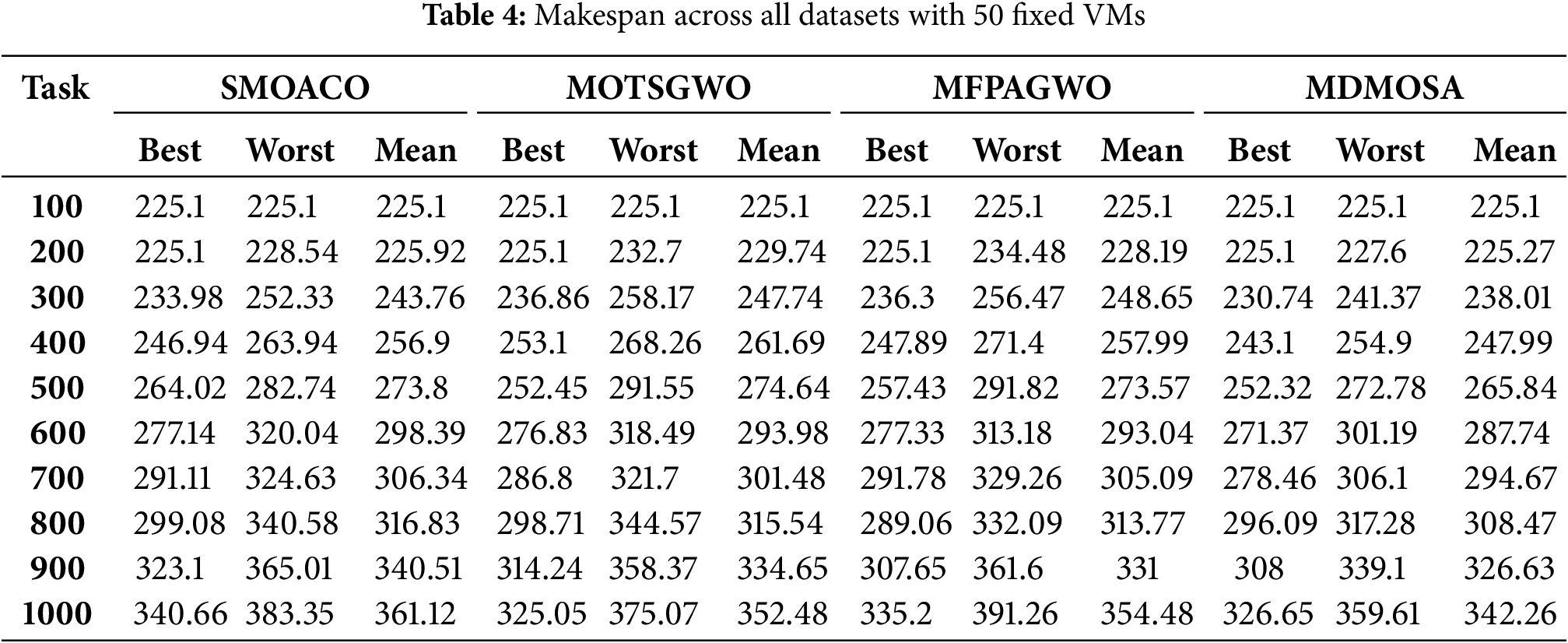

Table 4 presents the makespan results for four task scheduling algorithms, SMOACO, MOTSGWO, MFPAGWO, and MDMOSA, evaluated across task sizes ranging from 100 to 1000 on a fixed set of 50 virtual machines. The results include the Best, Worst, and Mean values obtained from 20 independent runs for each algorithm. For the smallest workload of 100 tasks, all algorithms achieved identical performance, each recording a mean makespan of 225.1 with zero variation. At 200 tasks, the differences were still minimal, with mean values clustered between 225.1 and 229.74. As the workload increased, clearer distinctions emerged. At 300 tasks, MDMOSA achieved the lowest mean makespan of 238.01, outperforming SMOACO (243.76), MOTSGWO (247.74), and MFPAGWO (248.65). This trend continued for larger workloads: with 600 tasks, MDMOSA maintained a lower mean makespan of 287.74, compared to 298.39 for SMOACO, 293.98 for MOTSGWO, and 293.04 for MFPAGWO. At the largest workload of 1000 tasks, MDMOSA again preserved solution quality most effectively, recording a mean makespan of 342.26, lower than 361.12 for SMOACO, 352.48 for MOTSGWO, and 354.48 for MFPAGWO. Overall, the results show that while all algorithms perform comparably for smaller workloads, MDMOSA achieves the lowest average makespan as task sizes grow, highlighting its scalability and robustness in handling complex scheduling scenarios.
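To put these differences in relative terms, the short calculation below converts the mean makespan values quoted above for 1000 tasks into percentage reductions. Only the four mean values come from the text; the helper name `improvement_pct` is hypothetical.

```python
def improvement_pct(baseline: float, proposed: float) -> float:
    """Relative reduction of the proposed value with respect to the baseline, in percent."""
    return (baseline - proposed) / baseline * 100.0


# Mean makespan at 1000 tasks on 50 VMs, as quoted in the text.
mdmosa = 342.26
baselines = {"SMOACO": 361.12, "MOTSGWO": 352.48, "MFPAGWO": 354.48}
gains = {name: round(improvement_pct(value, mdmosa), 2)
         for name, value in baselines.items()}
```

For these values the reductions are roughly 5.2% relative to SMOACO, 2.9% relative to MOTSGWO, and 3.4% relative to MFPAGWO.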

Fig. 3 presents the makespan results for four task scheduling algorithms, SMOACO, MOTSGWO, MFPAGWO, and the proposed MDMOSA, evaluated across various task sizes ranging from 100 to 1000 cloudlets. The experiments were conducted using a consistent setup of 50 virtual machines across all scenarios. Fig. 3 shows that all four algorithms perform almost identically for small workloads (100–200 tasks), with makespan around 225–230. As the number of tasks increases, the differences become clearer: MDMOSA consistently achieves lower makespan compared to other algorithms. The gap becomes more pronounced at higher workloads (600–1000 tasks), where MDMOSA maintains better scalability, while SMOACO shows the highest makespan. At 1000 tasks, MDMOSA remains the most efficient at about 342, compared to 350–360 for the others, confirming its stronger ability to handle growing workload complexity under fixed VM resources.

In terms of variability, as shown in Fig. 4, MDMOSA records the lowest standard deviation in most cases, indicating more stable and reliable performance across runs. In contrast, MFPAGWO and MOTSGWO fluctuate more heavily, especially beyond 600 tasks, while SMOACO remains moderate but still less consistent than MDMOSA.

At the largest workload of 1000 tasks, MFPAGWO shows the highest instability, while MDMOSA remains the most stable. MDMOSA therefore not only achieves lower makespan but also delivers greater consistency, making it the most robust method under fixed VM scenarios.

In terms of cost, Fig. 5 shows that MDMOSA consistently incurs the lowest or near-lowest cost across all task volumes. Notably, SMOACO yields the highest cost throughout, with a sharper rise observed from 600 to 1000 tasks. This trend indicates that SMOACO’s scheduling mechanism likely leads to inefficient VM usage or extended makespan, thereby increasing resource leasing time. MFPAGWO and MOTSGWO show improved cost efficiency over SMOACO but still fall short of MDMOSA, especially under heavier task loads. MDMOSA’s cost curve remains the flattest, reflecting its ability to allocate tasks more effectively and minimize idle or overused resources.

Fig. 6 provides insight into the trade-off dynamics between execution time and cost efficiency. The key observation from this plot is that MDMOSA achieves a better balance, consistently maintaining lower makespan values at comparable or lower cost levels than the competing algorithms. This trend confirms MDMOSA’s strength in simultaneously minimizing both objectives, which is critical in real-world cloud environments where performance and cost both matter. SMOACO, on the other hand, follows a consistently higher trajectory, indicating that it incurs both higher makespan and higher cost for the same workloads. This implies inefficiencies in both its task distribution and resource utilization strategies. MOTSGWO and MFPAGWO show moderate performance, often overlapping, but they still lag behind MDMOSA in most scenarios. Their plots reveal that while they occasionally approach MDMOSA’s cost efficiency, they do not reduce makespan to the same extent. The slight curvature of the MDMOSA line suggests that as cost increases, the algorithm is more effective at translating that cost into meaningful reductions in execution time. In contrast, the other methods spend more without proportionally improving performance. Fig. 6 thus highlights the superior cost-to-performance ratio of the proposed MDMOSA model, supporting its effectiveness in multi-objective optimization for cloud task scheduling.

From Fig. 7, MDMOSA consistently outperforms the other algorithms, achieving the lowest imbalance across all dataset sizes. This shows that MDMOSA effectively utilizes available VMs, minimizing resource underutilization and avoiding task clustering on select machines. As the number of tasks increases, the imbalance decreases further, indicating improved load distribution as the scheduling scale grows. An important observation is that the reduction in imbalance is non-linear, with sharper improvements at lower task counts. This reflects the challenge of achieving balance with fewer tasks, which becomes more manageable as the task pool increases, providing the scheduler with more flexibility to distribute workloads evenly. Fig. 7 further highlights that MDMOSA exhibits superior load-balancing performance, making it more reliable for scalable and efficient resource usage in cloud environments. This ability to maintain uniform task distribution is critical for preventing VM overload and maximizing overall system throughput.
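For readers unfamiliar with the metric, the sketch below computes the degree of imbalance using a definition that is common in the cloud scheduling literature: the spread between the busiest and least busy VM divided by the average VM load. That this is the exact definition used in the present study is an assumption, and the function name and sample loads are illustrative.

```python
from typing import Sequence


def degree_of_imbalance(vm_times: Sequence[float]) -> float:
    """(T_max - T_min) / T_avg over per-VM total execution times (assumed definition)."""
    avg = sum(vm_times) / len(vm_times)
    if avg == 0:
        return 0.0  # no load at all, so nothing is imbalanced
    return (max(vm_times) - min(vm_times)) / avg


balanced = degree_of_imbalance([10.0, 10.0, 10.0])   # perfectly even load
skewed = degree_of_imbalance([5.0, 10.0, 15.0])      # one VM carries three times another
```

A value of zero corresponds to a perfectly even load, and larger values indicate that some VMs finish much later than others.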

5.4.2 Comparison of Performance under Varying Number of VMs

To evaluate the scalability and responsiveness of the proposed algorithm under changing resource availability, an additional set of experiments was conducted using a variable number of virtual machines for each task dataset. Specifically, for each dataset size, the number of VMs was set to 10% of the total number of tasks, resulting in VM counts ranging from 10 to 100. This experimental setup was designed to analyze how the algorithms perform as the workload and the resource pool grow together at a fixed ratio of ten tasks per VM. In doing so, it offers insight into how well each approach adapts to resource constraints, particularly in terms of makespan and financial cost, under conditions that more closely resemble real-world dynamic cloud environments.

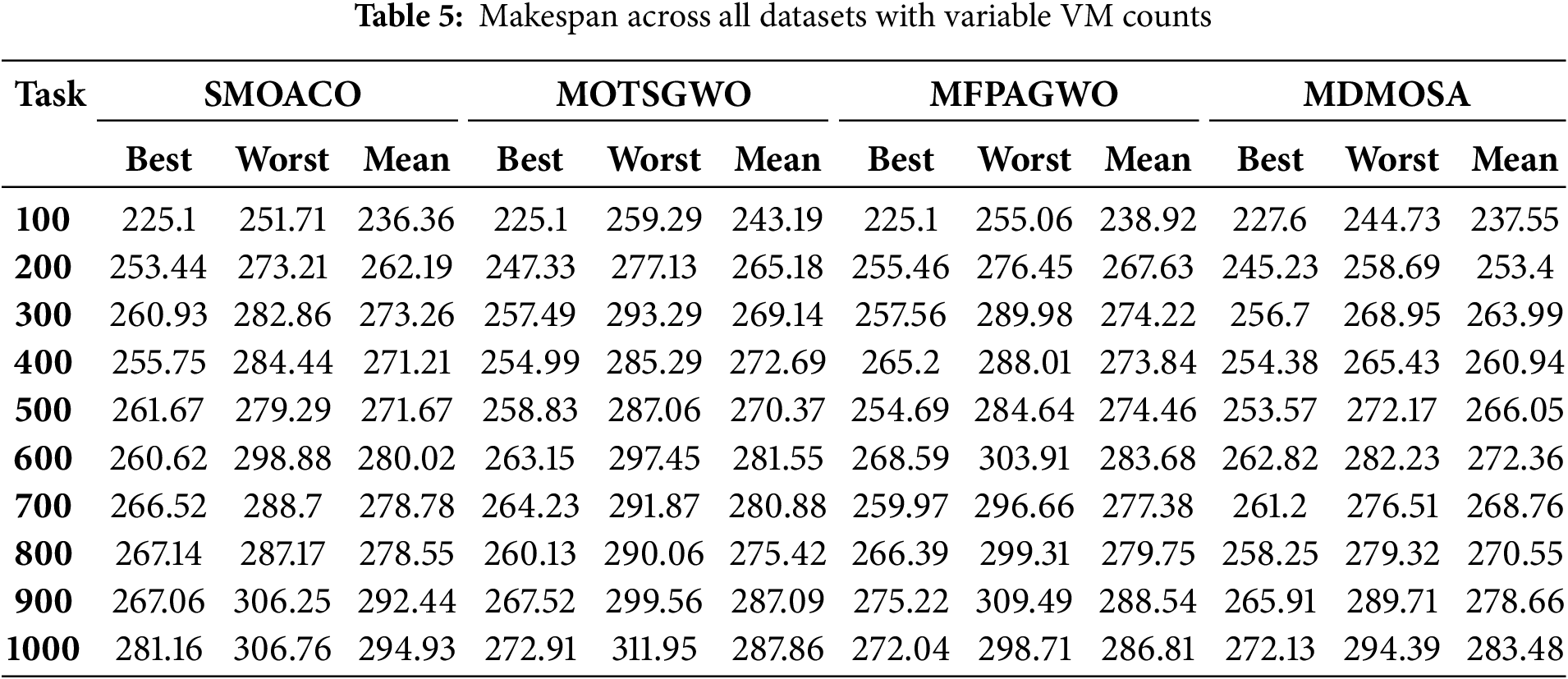

Table 5 reports the makespan results for the four scheduling algorithms evaluated across workloads ranging from 100 to 1000 tasks when the number of virtual machines scaled with the workload. At 100 tasks, MDMOSA is already competitive, recording a mean makespan of 237.55, which is slightly above SMOACO (236.36) but below MOTSGWO (243.19) and MFPAGWO (238.92). A clear advantage emerges as the number of tasks increases. At 500 tasks, MDMOSA reaches a mean of 266.05, which is lower than the competing algorithms, all of which exceed 270. The superiority of MDMOSA becomes even clearer at higher workloads.

With 800 tasks, it achieves a mean makespan of 270.55, outperforming SMOACO (278.55), MOTSGWO (275.42), and MFPAGWO (279.75). Finally, at the maximum tested workload of 1000 tasks, MDMOSA maintains its lead with a mean of 283.48, while the alternatives SMOACO (294.93), MOTSGWO (287.86), and MFPAGWO (286.81) lag behind. These results indicate that by leveraging variable VM configurations, MDMOSA consistently delivers better scheduling outcomes, scaling efficiently with workload growth. The algorithm not only reduces execution time but also sustains performance advantages across a wide spectrum of task sizes and resource scenarios, highlighting its robustness and adaptability compared to other methods.

From Fig. 8, MDMOSA achieves the lowest makespan across almost all task sizes, highlighting its efficiency in dynamic environments where VM availability varies with workload. The gap becomes more apparent as workloads increase: at 1000 tasks, SMOACO, MOTSGWO, and MFPAGWO reach roughly 287 to 295, whereas MDMOSA remains lower at around 283, demonstrating its efficiency in leveraging dynamic VM availability.

The stability of MDMOSA’s trend in Fig. 8 suggests that it adapts more effectively to different task loads by utilizing available resources more judiciously, which is crucial in real-world cloud scenarios where VM availability is not fixed. This underscores MDMOSA’s scalability and robustness, making it more suitable for real-world applications where workload and resources often fluctuate simultaneously.

Fig. 9 presents a boxplot analysis of the makespan stability for each algorithm under variable VM conditions, measured by standard deviation. This figure offers insight into the consistency and reliability of scheduling performance across multiple simulation runs. The proposed MDMOSA algorithm demonstrates the most stable performance, with the lowest standard deviation range and minimal outlier presence. This reflects high robustness and repeatability, indicating that MDMOSA can reliably maintain efficient task execution times even when the resource availability fluctuates. In contrast, MOTSGWO and SMOACO exhibit wider interquartile ranges and more significant outliers, suggesting higher variability in performance. These inconsistencies could be problematic in environments where predictability and timing guarantees are crucial. Similarly, while MFPAGWO performs better than SMOACO, it still shows greater dispersion compared to MDMOSA. Overall, this boxplot reinforces MDMOSA’s superiority in delivering not just optimal, but consistent results, making it a dependable option for task scheduling in dynamic cloud environments.

Fig. 10 illustrates the mean cost incurred by each algorithm across varying task sizes under a dynamic VM setup, where the number of virtual machines scales with task demand. MDMOSA consistently incurs the lowest cost across all task loads, demonstrating superior cost-efficiency under dynamic resource conditions. Its performance curve remains below those of the other algorithms, indicating its ability to balance task allocation with optimal VM utilization. By contrast, SMOACO results in the highest cost, especially as task volumes approach 1000. This suggests suboptimal VM distribution or over-provisioning, leading to increased operational expenses. MOTSGWO and MFPAGWO follow similar trajectories, closely tracking each other and showing moderate cost performance, but they fall short of MDMOSA’s efficiency.

Fig. 11 presents a comparative visualization of the trade-off between mean cost and mean makespan for the evaluated algorithms under a dynamic VM configuration. From the chart, it is seen that MDMOSA consistently achieves a better balance, maintaining a lower makespan at a moderate cost level. Although MDMOSA does not produce the absolute lowest makespan, its curve remains lower and more stable across increasing cost values, suggesting improved scheduling stability and efficiency. SMOACO, on the other hand, although it occasionally dips in makespan, does so at the highest cost, indicating inefficient scheduling in terms of monetary expense. MDMOSA demonstrates the most favorable cost-to-performance ratio, making it well-suited for dynamic environments where both cost control and time efficiency are critical.

Fig. 12 illustrates the degree of imbalance across scheduling algorithms when executed under variable VM configurations, with the number of tasks ranging from 100 to 1000. MDMOSA exhibits the lowest and most stable imbalance values across all task volumes. This performance suggests superior task allocation and adaptability to changes in VM availability, which is critical in real-world dynamic cloud environments. The gradual and controlled increase in imbalance indicates that MDMOSA maintains equitable resource utilization even as workload scales up. SMOACO, MOTSGWO, and MFPAGWO show significantly higher and steeper imbalance trends. Notably, SMOACO consistently records the highest imbalance, implying a tendency to overburden or underutilize certain VMs. MOTSGWO and MFPAGWO perform moderately better but still lag behind MDMOSA, especially as task sizes increase. MDMOSA delivers a more balanced and scalable scheduling solution, crucial for optimizing cloud infrastructure utilization under fluctuating workload and VM availability conditions.

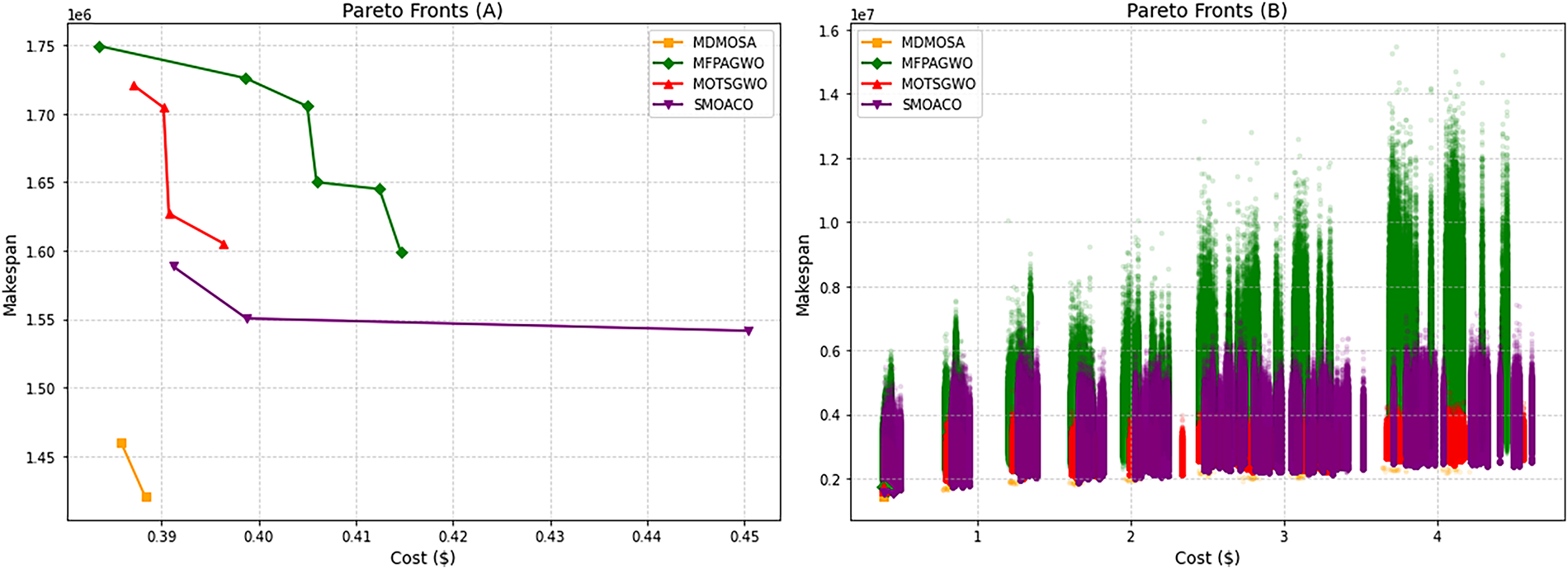

The Pareto front results shown in Fig. 13 highlight the superiority of MDMOSA in balancing makespan and cost. In Fig. 13A, its Pareto front lies clearly below those of SMOACO, MOTSGWO, and MFPAGWO, achieving both lower execution times and reduced costs. Fig. 13B reinforces this advantage, with MDMOSA’s solutions clustering tightly in the lower region, showing stability and consistency, whereas the other algorithms display wider, less efficient spreads, particularly MFPAGWO, which produces many dominated solutions. From a hypervolume (HV) perspective, MDMOSA’s dominance in covering more of the desirable objective space implies higher HV values, further confirming its ability to deliver better trade-offs. These findings confirm that MDMOSA not only delivers superior solutions but also maintains robustness across multiple runs, making it the most effective algorithm for multi-objective scheduling under varying workload and cost conditions.

Figure 13: Pareto fronts of scheduling algorithms
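The notions of dominance, Pareto front, and hypervolume used in this discussion can be illustrated for the two-objective case (makespan, cost), with both objectives minimized. The sketch below is a generic illustration with made-up points and a hypothetical reference point; it is not the procedure used to generate Fig. 13.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (makespan, cost), both to be minimized


def dominates(a: Point, b: Point) -> bool:
    """a dominates b if it is no worse in both objectives and better in at least one."""
    return a[0] <= b[0] and a[1] <= b[1] and (a[0] < b[0] or a[1] < b[1])


def pareto_front(points: Sequence[Point]) -> List[Point]:
    """Non-dominated subset, sorted by the first objective."""
    unique = sorted(set(points))
    return [p for p in unique if not any(dominates(q, p) for q in unique)]


def hypervolume_2d(front: Sequence[Point], ref: Point) -> float:
    """Area dominated by the front and bounded by the reference point."""
    hv = 0.0
    prev_y = ref[1]
    for x, y in sorted(front):
        if x >= ref[0] or y >= prev_y:
            continue  # point adds no new area inside the reference box
        hv += (ref[0] - x) * (prev_y - y)
        prev_y = y
    return hv


# Illustrative solutions; (3.0, 3.0) and (2.5, 2.5) are dominated by (2.0, 2.0).
solutions = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0), (3.0, 3.0), (2.5, 2.5)]
front = pareto_front(solutions)
hv = hypervolume_2d(front, ref=(4.0, 4.0))
```

A front that lies closer to the origin covers more of the area below the reference point, which is why a larger hypervolume indicates a better set of trade-offs.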

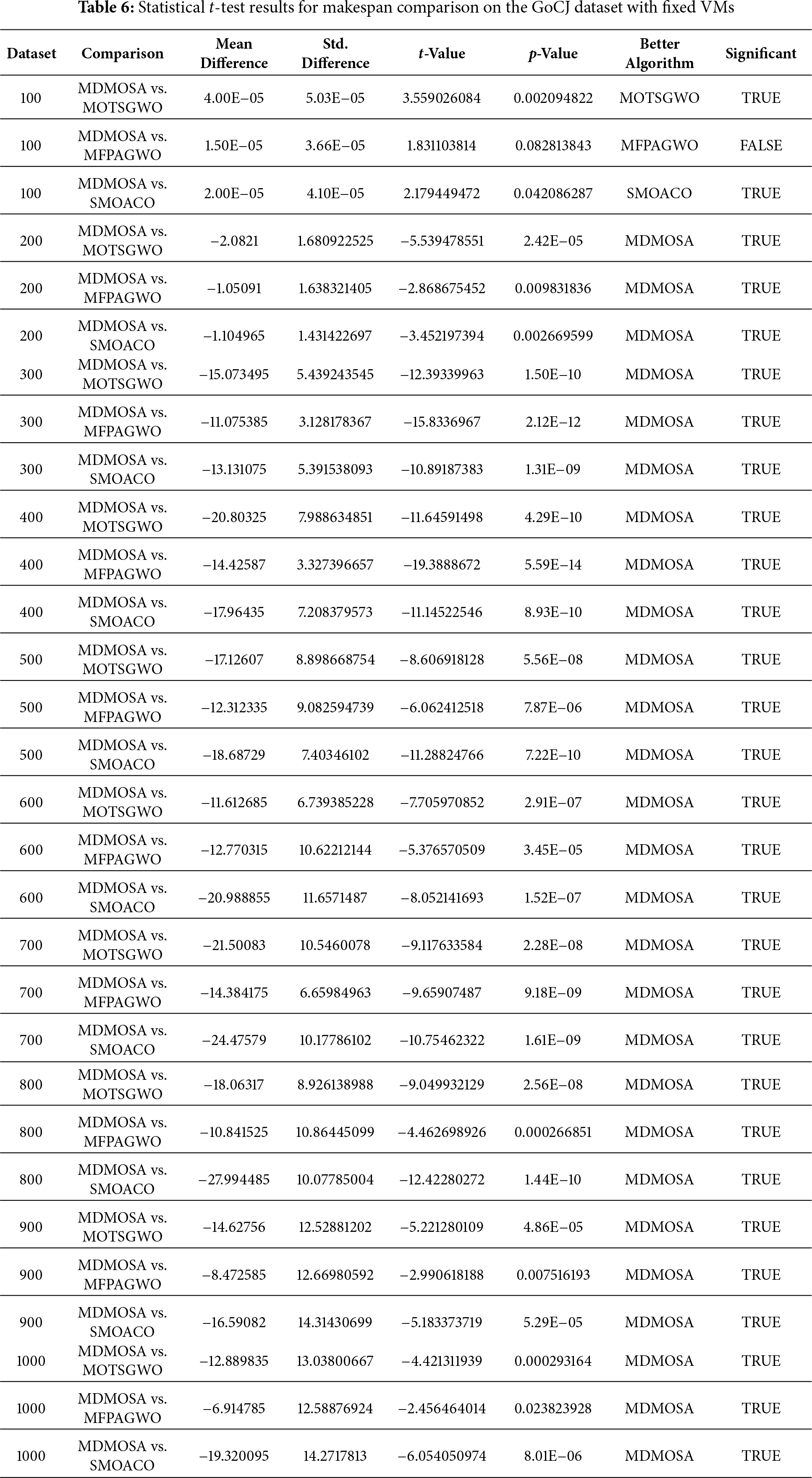

Table 6 presents the t-test results for the makespan performance of MDMOSA compared to SMOACO, MOTSGWO, and MFPAGWO on the GoCJ dataset across different task sizes. A one-sided t-test was carried out to examine whether the makespan obtained by MDMOSA is significantly lower than that of the competing algorithms across all task instances under the same stopping criterion. The analysis was conducted at a significance level of α = 0.05, with the critical t-value being 2.093. The majority of p-values are well below the 0.05 significance level, indicating that the null hypothesis that MDMOSA does not outperform the compared algorithms can be rejected in most cases. This confirms that MDMOSA achieves statistically significant improvements in makespan over the benchmark algorithms for nearly all task sizes. Exceptions occur in smaller workloads, such as the comparison with MFPAGWO at 100 tasks, where the p-value exceeds 0.05 and the result is not statistically significant. The results indicate that MDMOSA’s advantage is most evident as workload size increases, yielding lower makespan values with high statistical confidence.
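The mechanics of such a test can be sketched as follows. The text does not state whether the runs were paired; the sketch assumes a paired design, which for 20 runs gives 19 degrees of freedom, the value with which the quoted critical t of 2.093 is associated in standard t tables. The function name and the sample data are illustrative only.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t_statistic(baseline: Sequence[float],
                       proposed: Sequence[float]) -> Tuple[float, int]:
    """t statistic and degrees of freedom for H1: proposed < baseline (paired runs)."""
    diffs = [b - p for b, p in zip(baseline, proposed)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)          # sample standard deviation of differences
    t_stat = mean_d / (sd_d / math.sqrt(n))
    return t_stat, n - 1


# Four hypothetical paired makespan values; a real analysis would use all 20 runs.
baseline_runs = [10.0, 12.0, 11.0, 13.0]
proposed_runs = [9.0, 10.0, 10.0, 10.0]
t_stat, dof = paired_t_statistic(baseline_runs, proposed_runs)
```

The null hypothesis is rejected when the statistic exceeds the critical value for the chosen significance level and degrees of freedom, or equivalently when the corresponding p-value falls below α.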

In this study, a novel hybrid algorithm, multi-objective dwarf mongoose optimization with simulated annealing (MDMOSA), was proposed to address the complex challenge of task scheduling in Infrastructure-as-a-Service (IaaS) cloud computing environments. By combining the biologically inspired behavior of the dwarf mongoose optimization (DMO) algorithm with the local search refinement capabilities of simulated annealing (SA), the proposed method aimed to achieve an optimal balance between global exploration and local exploitation during task-to-resource mapping. The effectiveness of MDMOSA was evaluated using both synthetic task distributions and a real-world workload dataset (GoCJ). Simulation experiments were conducted in the CloudSim toolkit under both fixed and variable VM scenarios. Results across multiple metrics, including makespan, execution cost, resource utilization, load balancing, and stability, consistently demonstrated that MDMOSA outperforms existing baseline algorithms such as SMOACO, MOTSGWO, and MFPAGWO. Notably, MDMOSA achieved superior load distribution and computational efficiency while maintaining lower deviation levels, making it a robust solution for dynamic and large-scale scheduling environments.

While the proposed MDMOSA demonstrates significant gains in makespan, cost, and resource utilization, we recognize that simulation-based evaluation has limitations. Future work will focus on extending the scheduler with energy-aware and fault-tolerant capabilities and exploring hybridization with deep reinforcement learning to further enhance adaptability in real-world deployments. One promising avenue involves the integration of advanced parameter optimization techniques to enable the algorithm to self-adjust in response to dynamic and heterogeneous cloud scenarios. This will allow for improved responsiveness and generalization across varying workloads. Additionally, there is a need to extend the scalability of the algorithm to handle significantly larger datasets and cloud infrastructures, ensuring performance remains robust under high task and resource loads. A theoretical investigation into the convergence properties of the algorithm will also be essential, offering formal guarantees on performance and deepening the understanding of its optimization behavior. Moreover, incorporating objectives beyond makespan and cost, such as energy efficiency, reliability, and QoS, will allow the model to be tailored for mission-critical and energy-sensitive applications. Finally, validating the algorithm in real-world cloud environments and across diverse use cases will be a crucial step toward assessing its practical effectiveness and refining its deployment for industry-scale cloud resource management.

Acknowledgement: During the preparation of this manuscript, the authors utilized ChatGPT (GPT-5) for linguistic editing. The authors have carefully reviewed and revised the output and accept full responsibility for all content.

Funding Statement: The authors received no specific funding for this study.

Author Contributions: The authors confirm contribution to the paper as follows: conceptualization: Olanrewaju Lawrence Abraham, Md Asri Ngadi, Johan Bin Mohamad Sharif, and Mohd Kufaisal Mohd Sidik; methodology: Olanrewaju Lawrence Abraham, Md Asri Ngadi, and Johan Bin Mohamad Sharif; software: Olanrewaju Lawrence Abraham and Mohd Kufaisal Mohd Sidik; validation: Md Asri Ngadi and Johan Bin Mohamad Sharif; formal analysis: Olanrewaju Lawrence Abraham, Md Asri Ngadi, Johan Bin Mohamad Sharif, and Mohd Kufaisal Mohd Sidik; data curation: Olanrewaju Lawrence Abraham, Md Asri Ngadi, Johan Bin Mohamad Sharif, and Mohd Kufaisal Mohd Sidik; writing (original draft preparation): Olanrewaju Lawrence Abraham and Mohd Kufaisal Mohd Sidik; writing (review and editing): Md Asri Ngadi and Johan Bin Mohamad Sharif; visualization: Olanrewaju Lawrence Abraham; supervision: Md Asri Ngadi and Johan Bin Mohamad Sharif; project administration: Olanrewaju Lawrence Abraham. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data are available from the corresponding author upon reasonable request.

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest regarding the present study.

References

1. Houssein EH, Gad AG, Wazery YM, Suganthan PN. Task scheduling in cloud computing based on meta-heuristics: review, taxonomy, open challenges, and future trends. Swarm Evol Comput. 2021;62(3):100841. doi:10.1016/j.swevo.2021.100841. [Google Scholar] [CrossRef]

2. Ghafari R, Kabutarkhani FH, Mansouri N. Task scheduling algorithms for energy optimization in cloud environment: a comprehensive review. Clust Comput. 2022;25(2):1035–93. doi:10.1007/s10586-021-03512-z. [Google Scholar] [CrossRef]

3. Prity FS, Gazi MH, Aslam Uddin KM. A review of task scheduling in cloud computing based on nature-inspired optimization algorithm. Clust Comput. 2023;26(5):3037–67. doi:10.1007/s10586-023-04090-y. [Google Scholar] [CrossRef]

4. Maniah, Soewito B, Lumban Gaol F, Abdurachman E. A systematic literature Review: risk analysis in cloud migration. J King Saud Univ Comput Inf Sci. 2022;34(6):3111–20. doi:10.1016/j.jksuci.2021.01.008. [Google Scholar] [CrossRef]

5. Rama Krishna MS, Mangalampalli S. A systematic review on various task scheduling algorithms in cloud computing. EAI Endorsed Trans IoT. 2023;10:1–6. doi:10.4108/eetiot.4548. [Google Scholar] [CrossRef]

6. Hosseini Shirvani M. A survey study on task scheduling schemes for workflow executions in cloud computing environment: classification and challenges. J Supercomput. 2024;80(7):9384–437. doi:10.1007/s11227-023-05806-y. [Google Scholar] [CrossRef]

7. Krishnasamy KG, Periasamy S, Periasamy K, Prasanna Moorthy V, Thangavel G, Lamba R, et al. A pair-task heuristic for scheduling tasks in heterogeneous multi-cloud environment. Wirel Pers Commun. 2023;131(2):773–804. doi:10.1007/s11277-023-10454-9. [Google Scholar] [CrossRef]

8. Boroumand A, Hosseini Shirvani M, Motameni H. A heuristic task scheduling algorithm in cloud computing environment: an overall cost minimization approach. Clust Comput. 2024;28(2):137. doi:10.1007/s10586-024-04843-3. [Google Scholar] [CrossRef]

9. Zhao S, Yan H, Lin Q, Feng X, Chen H, Zhang D. Hybrid hierarchical particle swarm optimization with evolutionary artificial bee colony algorithm for task scheduling in cloud computing. Comput Mater Contin. 2024;78(1):1135–56. doi:10.32604/cmc.2024.045660. [Google Scholar] [CrossRef]

10. Chandrashekar C, Krishnadoss P, Poornachary VK, Ananthakrishnan B. MCWOA scheduler: modified chimp-whale optimization algorithm for task scheduling in cloud computing. Comput Mater Contin. 2024;78(2):2593–616. doi:10.32604/cmc.2024.046304. [Google Scholar] [CrossRef]

11. Krishnadoss P, Kedalu Poornachary V, Krishnamoorthy P, Shanmugam L. Improvised seagull optimization algorithm for scheduling tasks in heterogeneous cloud environment. Comput Mater Contin. 2023;74(2):2461–78. doi:10.32604/cmc.2023.031614. [Google Scholar] [CrossRef]

12. Yu N, Zhang AN, Chu SC, Pan JS, Yan B, Watada J. Innovative approaches to task scheduling in cloud computing environments using an advanced willow catkin optimization algorithm. Comput Mater Contin. 2025;82(2):2495–520. doi:10.32604/cmc.2024.058450. [Google Scholar] [CrossRef]

13. Hamed AY, Kh Elnahary M, Alsubaei FS, El-Sayed HH. Optimization task scheduling using cooperation search algorithm for heterogeneous cloud computing systems. Comput Mater Contin. 2023;74(1):2133–48. doi:10.32604/cmc.2023.032215. [Google Scholar] [CrossRef]

14. Prity FS. Nature-Inspired optimization algorithms for enhanced load balancing in cloud computing: a comprehensive review with taxonomy, comparative analysis, and future trends. Swarm Evol Comput. 2025;97(9):102053. doi:10.1016/j.swevo.2025.102053. [Google Scholar] [CrossRef]

15. Kathole AB, Vhatkar K, Lonare S, Kshirsagar AP. Optimization-based resource scheduling techniques in cloud computing environment: a review of scientific workflows and future directions. Comput Electr Eng. 2025;123(3):110080. doi:10.1016/j.compeleceng.2025.110080. [Google Scholar] [CrossRef]

16. Abraham OL, Asri Bin Ngadi M, Bin Mohamad Sharif J, Kufaisal Mohd Sidik M. Task scheduling in cloud environment–techniques, applications, and tools: a systematic literature review. IEEE Access. 2024;12(2):138252–79. doi:10.1109/ACCESS.2024.3466529. [Google Scholar] [CrossRef]

17. Zhang SW, Wang JS, Zhang SH, Xing YX, Sui XF, Zhang YH. Task scheduling in cloud computing systems using multi-objective honey badger algorithm with two hybrid elite frameworks and circular segmentation screening. Artif Intell Rev. 2024;58(2):48. doi:10.1007/s10462-024-11032-6. [Google Scholar] [CrossRef]

18. Abraham OL, Ngadi MAB, Bin Mohamad Sharif J, Sidik MKM. Multi-objective optimization techniques in cloud task scheduling: a systematic literature review. IEEE Access. 2025;13:12255–91. doi:10.1109/ACCESS.2025.3529839. [Google Scholar] [CrossRef]

19. Jamil B, Ijaz H, Shojafar M, Munir K, Buyya R. Resource allocation and task scheduling in fog computing and Internet of everything environments: a taxonomy, review, and future directions. ACM Comput Surv. 2022;54(11s):1–38. doi:10.1145/3513002. [Google Scholar] [CrossRef]

20. Devi N, Dalal S, Solanki K, Dalal S, Lilhore UK, Simaiya S, et al. A systematic literature review for load balancing and task scheduling techniques in cloud computing. Artif Intell Rev. 2024;57(10):276. doi:10.1007/s10462-024-10925-w. [Google Scholar] [CrossRef]

21. Pradhan A, Bisoy SK, Das A. A survey on PSO based meta-heuristic scheduling mechanism in cloud computing environment. J King Saud Univ Comput Inf Sci. 2022;34(8):4888–901. doi:10.1016/j.jksuci.2021.01.003. [Google Scholar] [CrossRef]

22. Tabary KA, Motameni H, Barzegar B, Akbari E, Shirgahi H, Mokhtari M. QoS aware task scheduling using hybrid genetic algorithm in cloud computing. IEEE Access. 2025;13(1):51603–16. doi:10.1109/ACCESS.2024.3520412. [Google Scholar] [CrossRef]

23. Abdullahi M, Ngadi MA, Dishing SI, Abdulhamid SM. An adaptive symbiotic organisms search for constrained task scheduling in cloud computing. J Ambient Intell Humaniz Comput. 2023;14(7):8839–50. doi:10.1007/s12652-021-03632-9. [Google Scholar] [CrossRef]

24. Agushaka JO, Ezugwu AE, Abualigah L. Dwarf mongoose optimization algorithm. Comput Meth Appl Mech Eng. 2022;391(10):114570. doi:10.1016/j.cma.2022.114570. [Google Scholar] [CrossRef]

25. Khan MA, Rasool RU. A multi-objective grey-wolf optimization based approach for scheduling on cloud platforms. J Parallel Distrib Comput. 2024;187(2):104847. doi:10.1016/j.jpdc.2024.104847. [Google Scholar] [CrossRef]

26. Suresh P, Keerthika P, Manjula Devi R, Kamalam GK, Logeswaran K, Sadasivuni KK, et al. Optimized task scheduling approach with fault tolerant load balancing using multi-objective cat swarm optimization for multi-cloud environment. Appl Soft Comput. 2024;165(2):112129. doi:10.1016/j.asoc.2024.112129. [Google Scholar] [CrossRef]

27. Mondal B, Choudhury A. Multi-objective cuckoo optimizer for task scheduling to balance workload in cloud computing. Computing. 2024;106(11):3447–78. doi:10.1007/s00607-024-01332-8. [Google Scholar] [CrossRef]

28. Mishra AK, Mohapatra S, Sahu PK. Adaptive Tasmanian Devil Optimization algorithm based efficient task scheduling for big data application in a cloud computing environment. Multimed Tools Appl. 2025;84(23):26977–96. doi:10.1007/s11042-024-19887-1. [Google Scholar] [CrossRef]

29. Ding H, Zhang M, Zhou F, Ding X, Chu S. Multi-objective scheduling of cloud tasks with positional information-enhanced reptile search algorithm. Int J Interact Des Manuf Ijidem. 2024;18(7):4715–28. doi:10.1007/s12008-024-01745-x. [Google Scholar] [CrossRef]

30. Hegde SN, Srinivas DB, Rajan MA, Rani S, Kataria A, Min H. Multi-objective and multi constrained task scheduling framework for computational grids. Sci Rep. 2024;14(1):6521. doi:10.1038/s41598-024-56957-8. [Google Scholar] [PubMed] [CrossRef]

31. Panneerselvam K, Nayudu PP, Banu MS, Rekha PM. Multi-objective load balancing based on adaptive osprey optimization algorithm. Int J Inf Technol. 2024;16(6):3871–8. doi:10.1007/s41870-024-01823-z. [Google Scholar] [CrossRef]

32. Gupta S, Singh RS. User-defined weight based multi objective task scheduling in cloud using whale optimization algorithm. Simul Model Pract Theory. 2024;133(2):102915. doi:10.1016/j.simpat.2024.102915. [Google Scholar] [CrossRef]

33. Hemanth SV, Kirubha D, Reddy SR, Chelladurai T, Soundari AG, Amirthayogam G. Multi objective ant colony optimization technique for task scheduling in cloud computing. In: Proceedings of the 2024 3rd International Conference on Applied Artificial Intelligence and Computing (ICAAIC); 2024 Jun 5–7; Salem, India. p. 830–5. doi:10.1109/ICAAIC60222.2024.10575423. [Google Scholar] [CrossRef]

34. Cui X. Multi-objective task scheduling in cloud data centers: a differential evolution chaotic whale optimization approach. Int J Interact Des Manuf Ijidem. 2025;19(6):4417–27. doi:10.1007/s12008-024-02078-5. [Google Scholar] [CrossRef]

35. Malti AN, Hakem M, Benmammar B. A new hybrid multi-objective optimization algorithm for task scheduling in cloud systems. Clust Comput. 2024;27(3):2525–48. doi:10.1007/s10586-023-04099-3. [Google Scholar] [CrossRef]

36. Mohammad Hasani Zade B, Mansouri N, Javidi MM. Multi-objective task scheduling based on PSO-Ring and intuitionistic fuzzy set. Clust Comput. 2024;27(8):11747–802. doi:10.1007/s10586-024-04561-w. [Google Scholar] [CrossRef]

37. Khan AR. Dynamic load balancing in cloud computing: optimized RL-based clustering with multi-objective optimized task scheduling. Processes. 2024;12(3):519. doi:10.3390/pr12030519. [Google Scholar] [CrossRef]

38. Amer DA, Attiya G, Ziedan I. An efficient multi-objective scheduling algorithm based on spider monkey and ant colony optimization in cloud computing. Clust Comput. 2024;27(2):1799–819. doi:10.1007/s10586-023-04018-6. [Google Scholar] [CrossRef]

39. Mangalampalli SS, Karri GR, Mohanty SN, Ali S, Khan MI, Abdullaev S, et al. Multi-objective prioritized task scheduler using improved asynchronous advantage actor critic (a3c) algorithm in multi cloud environment. IEEE Access. 2024;12:11354–77. doi:10.1109/ACCESS.2024.3355092. [Google Scholar] [CrossRef]

40. Hao Y, Zhao C, Li Z, Si B, Unger H. A learning and evolution-based intelligence algorithm for multi-objective heterogeneous cloud scheduling optimization. Knowl Based Syst. 2024;286(11):111366. doi:10.1016/j.knosys.2024.111366. [Google Scholar] [CrossRef]

41. Cui Z, Zhao T, Wu L, Qin AK, Li J. Multi-objective cloud task scheduling optimization based on evolutionary multi-factor algorithm. IEEE Trans Cloud Comput. 2023;11(4):3685–99. doi:10.1109/TCC.2023.3315014. [Google Scholar] [CrossRef]

42. Chraibi A, Ben Alla S, Touhafi A, Ezzati A. A novel dynamic multi-objective task scheduling optimization based on Dueling DQN and PER. J Supercomput. 2023;79(18):21368–423. doi:10.1007/s11227-023-05489-5. [Google Scholar] [CrossRef]

43. Srichandan S, Jena RK. Multi-objective task scheduling in cloud data center using cat swarm optimization framework. In: Proceedings of the 2023 Asia Conference on Power, Energy Engineering and Computer Technology (PEECT); 2023 Jul 21–23; Qingdao, China. p. 73–8. doi:10.1109/PEECT59566.2023.00020. [Google Scholar] [CrossRef]

44. Shetty P, Veeraiah V, Khidse SV, Rai M, Gupta A, Dhabliya D. Enhancing task scheduling in cloud computing: a multi-objective cuckoo search algorithm approach. In: Proceedings of the 2023 3rd International Conference on Advancement in Electronics & Communication Engineering (AECE); 2023 Nov 23–24; Ghaziabad, India. p. 869–74. doi:10.1109/AECE59614.2023.10428205. [Google Scholar] [CrossRef]

45. Mangalampalli S, Karri GR, Kumar M. Multi objective task scheduling algorithm in cloud computing using grey wolf optimization. Clust Comput. 2023;26(6):3803–22. doi:10.1007/s10586-022-03786-x. [Google Scholar] [CrossRef]

46. Mangalampalli S, Karri GR, Kose U. Multi objective trust aware task scheduling algorithm in cloud computing using whale optimization. J King Saud Univ Comput Inf Sci. 2023;35(2):791–809. doi:10.1016/j.jksuci.2023.01.016. [Google Scholar] [CrossRef]

47. Li J, Zhang R, Zheng Y. QoS-aware and multi-objective virtual machine dynamic scheduling for big data centers in clouds. Soft Comput. 2022;26(19):10239–52. doi:10.1007/s00500-022-07327-x. [Google Scholar] [CrossRef]

48. Zhang H, Wu Y, Sun Z. EHEFT-R: multi-objective task scheduling scheme in cloud computing. Complex Intell Syst. 2022;8(6):4475–82. doi:10.1007/s40747-021-00479-7. [Google Scholar] [CrossRef]

49. Mahmoud H, Thabet M, Khafagy MH, Omara FA. Multiobjective task scheduling in cloud environment using decision tree algorithm. IEEE Access. 2022;10(3):36140–51. doi:10.1109/ACCESS.2022.3163273. [Google Scholar] [CrossRef]

50. Mangalampalli S, Swain SK, Mangalampalli VK. Multi objective task scheduling in cloud computing using cat swarm optimization algorithm. Arab J Sci Eng. 2022;47(2):1821–30. doi:10.1007/s13369-021-06076-7. [Google Scholar] [CrossRef]

51. Devi KL, Valli S. Multi-objective heuristics algorithm for dynamic resource scheduling in the cloud computing environment. J Supercomput. 2021;77(8):8252–80. doi:10.1007/s11227-020-03606-2. [Google Scholar] [CrossRef]

52. Xu J, Zhang Z, Hu Z, Du L, Cai X. A many-objective optimized task allocation scheduling model in cloud computing. Appl Intell. 2021;51(6):3293–310. doi:10.1007/s10489-020-01887-x. [Google Scholar] [CrossRef]

53. Abualigah L, Diabat A. A novel hybrid antlion optimization algorithm formulti-objective task scheduling problems in cloud computing environments. Clust Comput. 2021;24(1):205–23. doi:10.1007/s10586-020-03075-5. [Google Scholar] [CrossRef]

54. Chhabra A, Singh G, Kahlon KS. Multi-criteria HPC task scheduling on IaaS cloud infrastructures using meta-heuristics. Clust Comput. 2021;24(2):885–918. doi:10.1007/s10586-020-03168-1. [Google Scholar] [CrossRef]

55. Gharehpasha S, Masdari M. A discrete chaotic multi-objective SCA-ALO optimization algorithm for an optimal virtual machine placement in cloud data center. J Ambient Intell Humaniz Comput. 2021;12(10):9323–39. doi:10.1007/s12652-020-02645-0. [Google Scholar] [CrossRef]