Open Access

ARTICLE

HATLedger: An Approach to Hybrid Account and Transaction Partitioning for Sharded Permissioned Blockchains

School of Computer Science & Technology, Beijing Institute of Technology, Beijing, 100081, China

* Corresponding Author: Zhiwei Zhang. Email:

(This article belongs to the Special Issue: Recent Advances in Blockchain Technology and Applications)

Computers, Materials & Continua 2026, 86(3), 67 https://doi.org/10.32604/cmc.2025.073315

Received 15 September 2025; Accepted 04 November 2025; Issue published 12 January 2026

Abstract

With the development of sharded blockchains, high cross-shard rates and load imbalance have emerged as major challenges. Account partitioning based on hashing and real-time load faces the issue of high cross-shard rates. Account partitioning based on historical transaction graphs is effective in reducing cross-shard rates but suffers from load imbalance and limited adaptability to dynamic workloads. Meanwhile, because of the coupling between consensus and execution, a target shard must receive both the partitioned transactions and the partitioned accounts before initiating consensus and execution. However, we observe that transaction partitioning and subsequent consensus do not require actual account data but only need to determine the relative partition order between shards. Therefore, we propose a novel sharded blockchain, called HATLedger, based on Hybrid Account and Transaction partitioning. First, HATLedger builds a future transaction graph to detect upcoming hotspot accounts and partitions accounts more precisely to reduce transaction cross-shard rates. In the event of an impending overload, the source shard employs simulated partition transactions to specify the partition order across multiple target shards, thereby rapidly partitioning the pending transactions. The target shards can reach consensus on received transactions without waiting for account data. The source shard subsequently sends the account data to the corresponding target shards in the order specified by the previously simulated partition transactions. Based on real transaction history from Ethereum, we conducted extensive sharding scalability experiments. By maintaining low cross-shard rates and a relatively balanced load distribution, HATLedger achieves throughput improvements of 2.2x, 1.9x, and 1.8x over SharPer, Shard Scheduler, and TxAllo, respectively, significantly enhancing efficiency and scalability.

Blockchains [1–3] have garnered sustained attention from both academia and industry. Various sharded blockchains [4–6] have been proposed to improve system performance. In SharPer [4], each account is managed by only one shard. Each shard

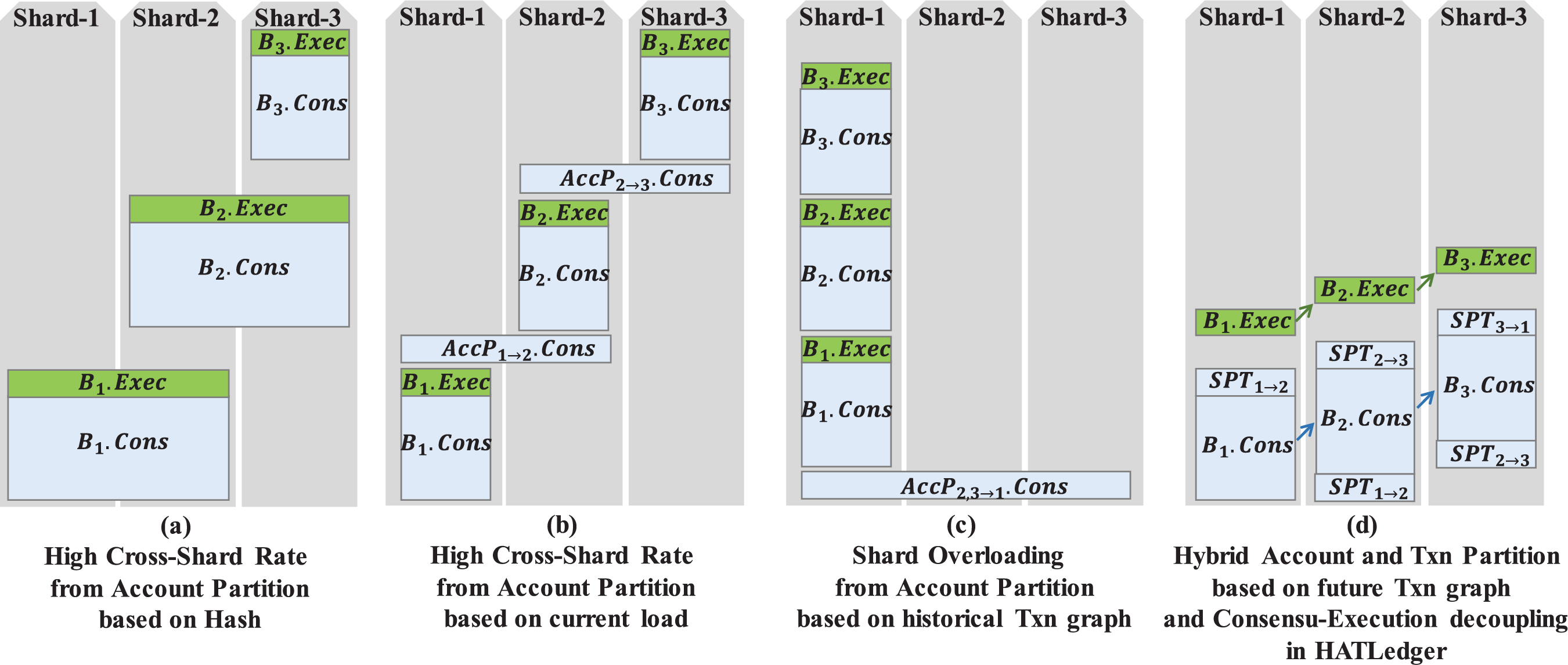

As shown in Fig. 1, the hash-based account partitioning method [4] is limited by high cross-shard transaction rates. Shard Scheduler [8] partitions transactions and their associated accounts across low-load shards based on the current load distribution, achieving better load balancing but still encountering high cross-shard transaction rates. In contrast, TxAllo [9] constructs a hotspot account graph based on historical transactions and assigns hotspot accounts to the same shard to reduce cross-shard transaction rates. However, in TxAllo the shard holding the hotspot accounts becomes overloaded, while other shards remain underutilized.

Figure 1: Account partition in different systems: (a) Account partition in SharPer [4]; (b) Account partition in Shard Scheduler [8]; (c) Account partition in TxAllo [9]; (d) Hybrid account and transaction partition in our HATLedger

As shown in Fig. 1a, the hash-based account partitioning method SharPer [4] distributes accounts randomly across shards, forcing

We analyze the causes of high cross-shard transaction rates and shard overloading. Based on [8,10,11], the workload in blockchains can be divided into transaction consensus load and transaction execution load. Additionally, account partition can be regarded as a special type of consensus load. In a sharded blockchain system, the consensus load requires relevant nodes to reach consensus through multi-round communication protocols, such as PBFT [12]. In contrast, the transaction execution load only requires each node to locally execute and commit transactions in the consensus order. Therefore, the transaction consensus load is significantly higher than the transaction execution load. As shown in Fig. 1a–c, existing account partitioning and transaction partitioning are limited by Consensus-Execution coupling. Therefore, the target shard can only begin reaching consensus on the partitioned transactions after receiving both transactions and accounts.

However, we observe that transaction consensus only requires the relative partition order between shards, whereas transaction execution relies on account data. Moreover, the overhead associated with consensus among multiple nodes is considerably higher than that of local transaction execution on individual nodes. This creates an opportunity to decouple consensus and execution during partition. As shown in Fig. 1d, in HATLedger, the source shard can partition

A sharding approach aimed at lowering the cross-shard rate and mitigating load imbalance faces several challenges: (1) How to select appropriate accounts for partitioning to effectively reduce the cross-shard transaction rate. As the system load fluctuates dynamically, existing methods that select accounts based on historical transactions react with significant latency and therefore fail to reduce the cross-shard transaction rate effectively. (2) How to decouple consensus and execution during transaction partitioning, thereby effectively mitigating load imbalance. (3) How to ensure the security of the system in the presence of faulty nodes.

To address the aforementioned challenges, we propose a sharded blockchain ledger based on the Hybrid Account and Transaction partitioning, denoted as HATLedger. First, at the beginning of each epoch, we propose constructing a future transaction graph based on the pending transactions in each shard. The proposed future transaction graph can more effectively identify upcoming hotspot accounts. Hotspot accounts are detected, and an account sharding strategy is developed for this epoch, thereby effectively reducing the cross-shard transaction rate. Next, we propose Simulated Partition Transactions to decouple consensus and execution. When an overload is imminent, the source shard first constructs two blocks,

In summary, this paper provides the following contributions:

Contribution 1: Compared to outdated hotspot accounts detected from historical transaction graphs, we propose constructing a future transaction graph based on pending transactions. By leveraging the identified upcoming hotspot accounts, we achieve more precise account partitioning, which effectively reduces the cross-shard transaction rate.

Contribution 2: We propose simulated partition transactions to decouple consensus and execution. We implement a sharded blockchain ledger based on the hybrid account and transaction partitioning, denoted as HATLedger. In the event of an impending overload, the source shard quickly partitions pending transactions to target shards. The target shard can simultaneously initiate consensus on the partitioned blocks without additional waiting for account data.

Contribution 3: We provide a detailed analysis showing that HATLedger ensures both safety and liveness even in the presence of malicious attacks from faulty nodes.

Contribution 4: We conduct extensive scalability experiments under real workloads. The results show that HATLedger’s hybrid account and transaction partitioning effectively reduces cross-shard transaction rates and mitigates load imbalance, thereby leading to significant performance gains.

Existing research [4–6,8,9,13] has made extensive attempts to explore sharding structures in sharded blockchains. SharPer [4], Shard Scheduler [8], and TxAllo [9] adopt a one-to-one account-to-shard mapping, where each account is managed by exactly one shard. Zilliqa [14] and LMChain [5] employ sharding technology based on a beacon chain. While each account is managed by a single shard, the beacon chain in the system acts as a scheduler, synchronizing the global state across all shards. BrokerChain [6] and SharDAG [13] adopt a one-to-many account-to-shard mapping, where some accounts can be replicated or distributed across multiple shards for concurrent processing. Therefore, to ensure greater generality and adaptability, this work focuses on the one-to-one sharding structure. Next, we introduce the system model and assumptions under the one-to-one sharding structure.

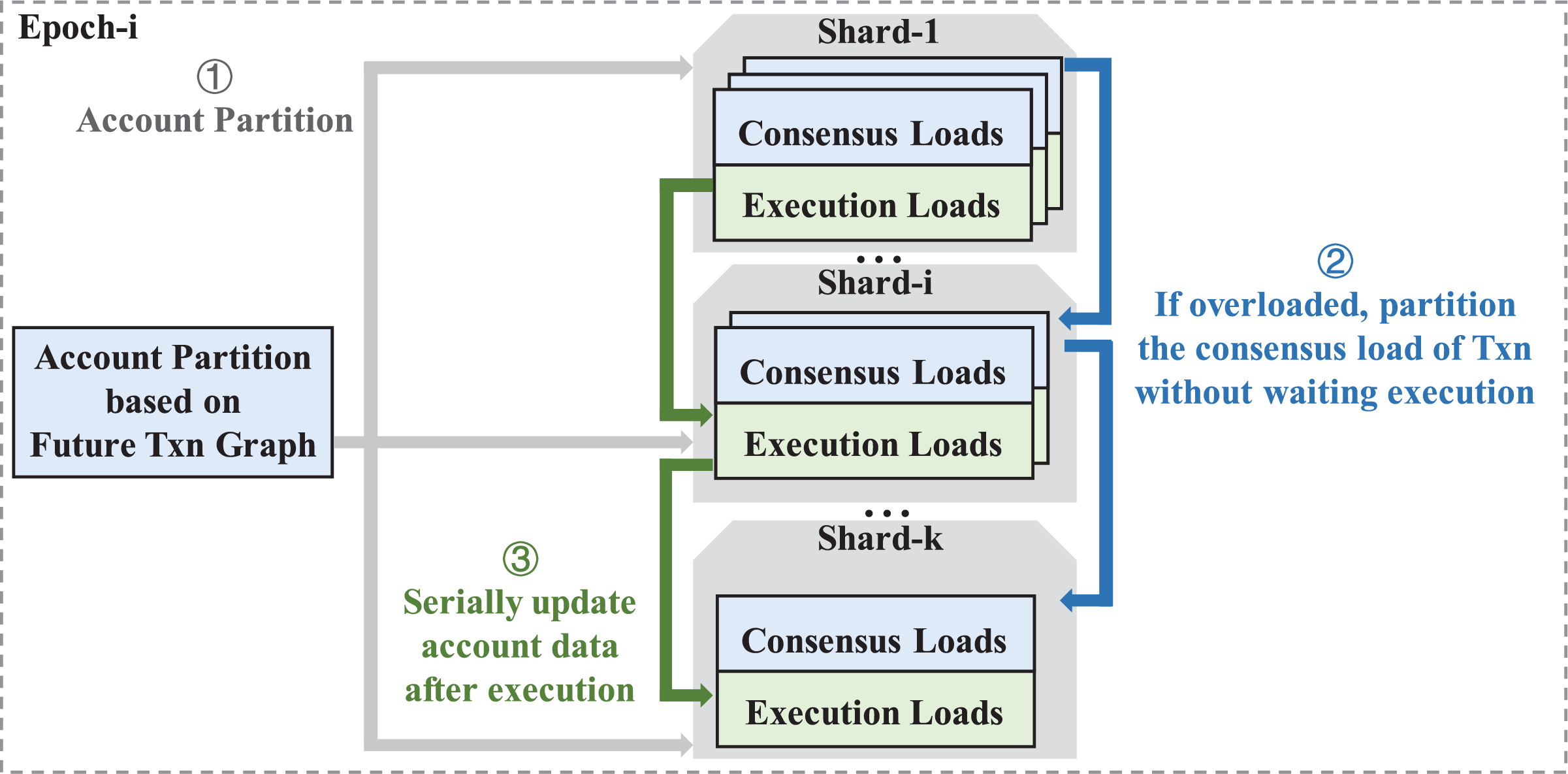

Table 1 summarizes existing works in one-to-one blockchain sharding models. In SharPer [4], accounts are evenly distributed across shards based on account hashes. Under ideal conditions with uniformly distributed account access, the Hash-based partition strategy achieves load balancing. However, when account access becomes skewed, the shard containing hotspot accounts may experience overloading. Shard Scheduler [8] allocates pending transactions and new accounts to low-load shards based on the current load of each shard. This ensures load balancing across shards even under skewed account access by leveraging account partition and transaction partition. Nevertheless, both SharPer and Shard Scheduler face the limitation of high cross-shard transaction rates due to account dispersion. On the other hand, TxAllo [9] constructs a historical transaction graph from historical transactions and applies community detection algorithms to identify hotspot accounts, which are then assigned to the same shard. While this approach reduces the cross-shard transaction rate for transactions involving hotspot accounts, it inevitably leads to overloading of shards due to the concentration of hotspot accounts within the same shard.

By analyzing the real transaction history on Ethereum, existing studies [5,8–10] consistently reveal that a small portion of hotspot accounts dominates the majority of transactions, highlighting the skewed distribution of account access. Hu et al. [5] pointed out that approximately 14.3% of accounts are involved in nearly 90% of transactions, while the set of accounts that makes up this top 14.3% changes over time. Therefore, as the load dynamically changes, outdated hotspot accounts identified from the historical transaction graph cannot effectively reduce the cross-shard transaction rate in future workloads.
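The skew described above can be measured directly from a transaction list. The following is a minimal sketch, not the procedure used in [5]: the function name `top_account_share` and the toy transactions are assumptions made for illustration only.

```python
from collections import Counter

def top_account_share(transactions, fraction):
    """Share of transactions that touch at least one of the most active accounts.

    `fraction` is the portion of accounts treated as hotspots (e.g. 0.143).
    """
    # Count, for every account, the number of transactions it takes part in.
    counts = Counter(a for tx in transactions for a in set(tx))
    k = max(1, round(len(counts) * fraction))
    top = {account for account, _ in counts.most_common(k)}
    touched = sum(1 for tx in transactions if top & set(tx))
    return touched / len(transactions)

# Hypothetical toy data: 7 accounts, one of which ("hub") is a hotspot.
toy_transactions = [
    ("hub", "u1"), ("hub", "u2"), ("hub", "u3"), ("hub", "u4"), ("hub", "u5"),
    ("hub", "u1"), ("hub", "u2"), ("hub", "u3"), ("hub", "u4"), ("u5", "u6"),
]
share = top_account_share(toy_transactions, 0.143)  # top 1 of 7 accounts
print(share)
```

Running the same function over consecutive time windows would show whether the top set stays the same, which is the property the paragraph says does not hold on Ethereum.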

Next, we provide a detailed analysis of how hotspot account distribution affects sharding performance. The distribution of hotspot accounts impacts system performance primarily by influencing the cross-shard transaction rate and the workload balance among shards. (1) When hotspot accounts are uniformly distributed across shards, shard workloads become balanced. However, because most transactions involve hotspot accounts stored in different shards, the cross-shard transaction rate increases substantially. Consequently, the frequency of cross-shard consensus operations—characterized by high latency and communication overhead—rises significantly, thereby degrading overall system performance. (2) When hotspot accounts are partitioned within a single shard, most transactions access hotspot accounts located in that shard. As a result, the cross-shard transaction rate drops sharply, and intra-shard consensus—characterized by low latency and minimal communication overhead—becomes dominant. However, because most transactions are processed within the same shard, the limited processing capacity of that shard leads to overload, while other shards remain idle. Consequently, overall performance deteriorates due to inefficient resource utilization.
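The two cases can be reproduced on a toy workload. The sketch below is illustrative only: the four hotspot accounts, the two placements `spread` and `packed`, and the rule that a cross-shard transaction adds load to every shard it touches are assumptions, not measurements from the paper.

```python
from collections import Counter

def cross_shard_rate(transactions, shard_of):
    """Fraction of transactions whose accounts live in more than one shard."""
    cross = sum(1 for tx in transactions if len({shard_of[a] for a in tx}) > 1)
    return cross / len(transactions)

def shard_loads(transactions, shard_of, num_shards):
    """Transactions handled per shard; a cross-shard tx loads every shard it touches."""
    loads = Counter()
    for tx in transactions:
        for shard in {shard_of[a] for a in tx}:
            loads[shard] += 1
    return [loads[s] for s in range(num_shards)]

# Toy workload: four hotspot accounts that only trade with each other (60 txs).
hot = ["h0", "h1", "h2", "h3"]
transactions = [(hot[i], hot[j]) for i in range(4) for j in range(i + 1, 4)] * 10

spread = {a: i for i, a in enumerate(hot)}  # case (1): one hotspot per shard
packed = {a: 0 for a in hot}                # case (2): all hotspots in shard 0

spread_rate = cross_shard_rate(transactions, spread)
packed_rate = cross_shard_rate(transactions, packed)
spread_loads = shard_loads(transactions, spread, 4)
packed_loads = shard_loads(transactions, packed, 4)
print(spread_rate, spread_loads)
print(packed_rate, packed_loads)
```

In this toy setting the spread placement is perfectly balanced but every transaction is cross-shard, while the packed placement has no cross-shard transactions but leaves three shards idle.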

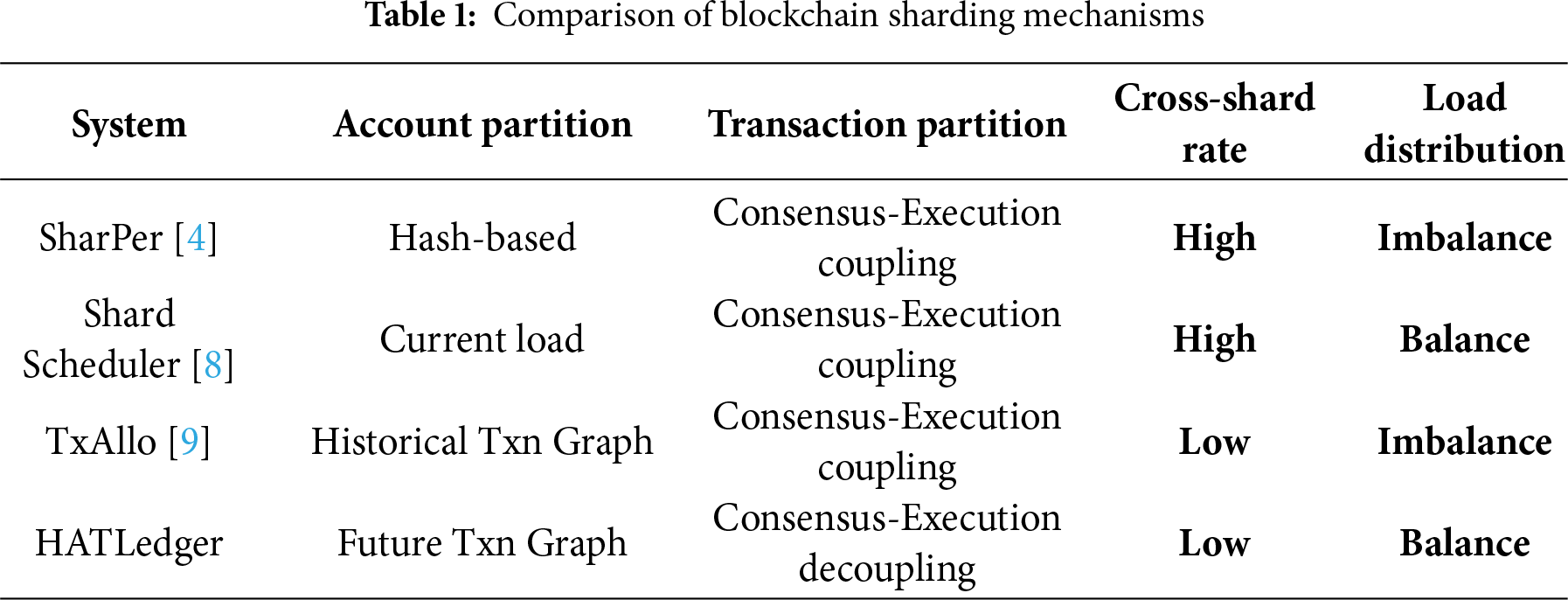

As shown in Table 1, by comparing existing studies, we further analyze the causes that limit system performance and identify potential opportunities for improvement. As shown in Fig. 2a, we observe that the transaction partition with Consensus-Execution Coupling in existing works typically follows the sequence: Transaction Consensus Loads and Execution Loads in the Source Shard

Figure 2: Transaction partition in different systems: (a) Transaction partition with consensus-execution coupling in existing works; (b) Transaction partition with consensus-execution decoupling in HATLedger

We propose HATLedger, a sharded blockchain ledger based on the Hybrid Account and Transaction partitioning, to further enhance system performance.

System Model and Assumptions: The system model and assumptions used in this work, HATLedger, are consistent with those of SharPer [4]. HATLedger consists of a series of distributed nodes. Each shard

Transaction Processing Workflow: When a transaction accesses accounts within a single shard, it is classified as an intra-shard transaction and is processed solely within that shard for both consensus and execution. When a transaction accesses accounts across multiple shards, it is classified as a cross-shard transaction. For cross-shard transactions, multiple leaders from the involved shards compete to become the proposer of the transaction. This proposer coordinates the involved shards to reach consensus on the cross-shard transaction using the PBFT protocol. Existing studies [4,11] have thoroughly explored the workflows and security of transaction processing for intra-shard and cross-shard transactions. As this work focuses on account partitioning, transaction processing is beyond the scope of this paper.

Design Goals: HATLedger proposes a novel hybrid partitioning approach to address the challenges of dynamic hotspot accounts and shard overload in dynamic workloads.

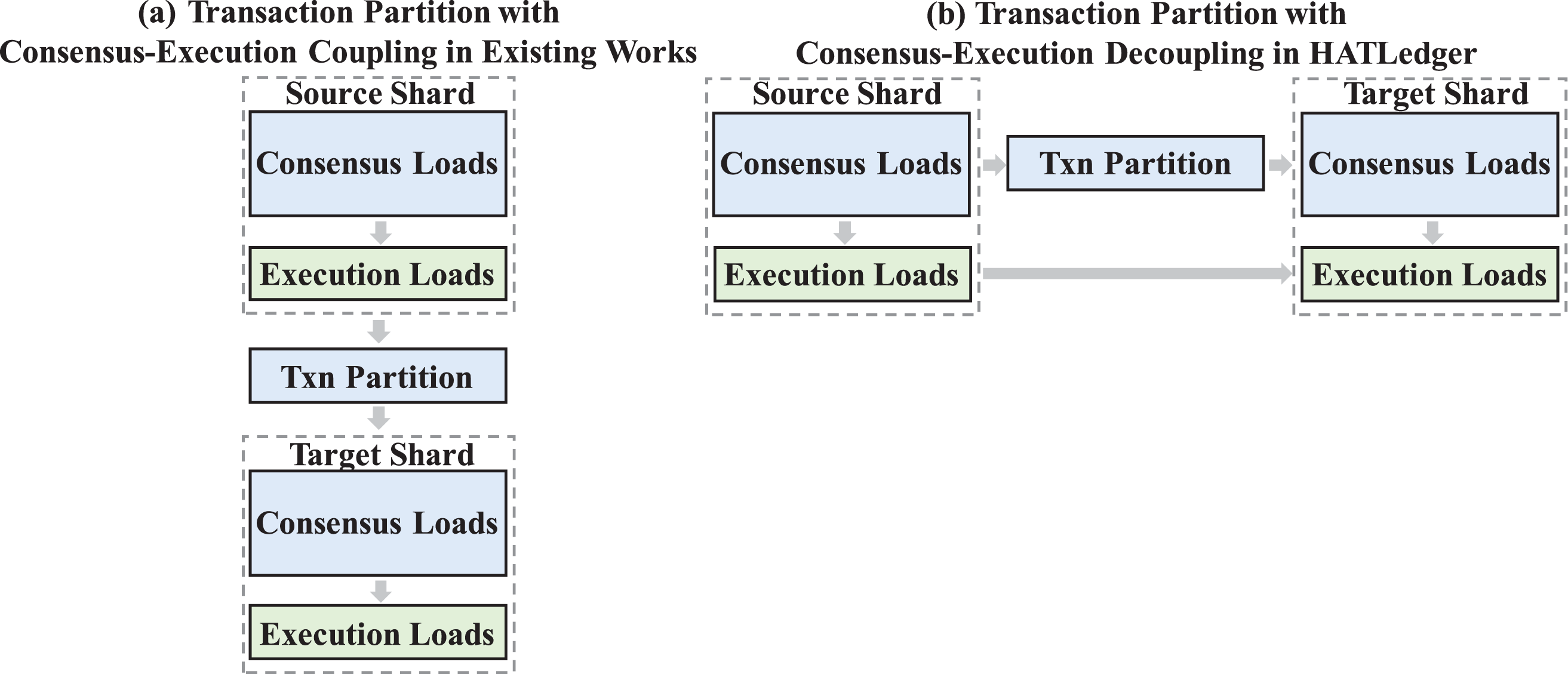

System Workflow: As shown in Fig. 3, HATLedger operates in epochs. The workflow of HATLedger is as follows:

Figure 3: System workflow of HATLedger

(1) At the beginning of a new epoch, HATLedger constructs a future transaction graph based on the pending transactions of all shards. Using this graph, HATLedger detects the upcoming hotspot accounts and repartitions all accounts across shards accordingly, thereby reducing the cross-shard transaction rate.

(2) Each shard then reaches consensus on transactions under the new account partition, executes the transactions, and updates the account data. When a shard detects an impending overload, it can further repartition the numerous pending transactions of hotspot accounts to different underloaded shards. Upon receiving these transactions, the underloaded shards proactively initiate intra-shard concurrent consensus.

(3) Once the source shard completes processing all relevant transactions, it transfers the updated account data to the underloaded shards. The underloaded shard then utilizes the received account data to serially execute transactions that have already achieved consensus.
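Step (1) of the workflow can be sketched as follows. This is a simplified stand-in and not the paper's Algorithm 1: the graph is built from pending (sender, receiver) pairs, hotspots are ranked by weighted degree, and accounts are placed with a greedy affinity heuristic instead of a community detection algorithm. All function names and the sample data are hypothetical.

```python
from collections import defaultdict

def build_future_transaction_graph(pending):
    """Undirected weighted graph: graph[a][b] = number of pending txs between a and b."""
    graph = defaultdict(lambda: defaultdict(int))
    for sender, receiver in pending:
        graph[sender][receiver] += 1
        graph[receiver][sender] += 1
    return graph

def hotspot_accounts(graph, top_k):
    """Rank accounts by weighted degree, i.e. the pending txs they take part in."""
    degree = {a: sum(nbrs.values()) for a, nbrs in graph.items()}
    return sorted(degree, key=lambda a: (-degree[a], a))[:top_k]

def greedy_partition(graph, num_shards):
    """Place each account in the shard that already holds most of its neighbours."""
    degree = {a: sum(nbrs.values()) for a, nbrs in graph.items()}
    shard_of, sizes = {}, [0] * num_shards
    for account in sorted(degree, key=lambda a: (-degree[a], a)):
        affinity = [0] * num_shards
        for nbr, weight in graph[account].items():
            if nbr in shard_of:
                affinity[shard_of[nbr]] += weight
        # Prefer high affinity; break ties by choosing the emptiest shard.
        best = max(range(num_shards), key=lambda s: (affinity[s], -sizes[s]))
        shard_of[account] = best
        sizes[best] += 1
    return shard_of

# Hypothetical pending transactions collected from all shards.
pending = [("A", "B")] * 5 + [("A", "C")] * 3 + [("D", "E")] * 4 + [("B", "C")]
ftg = build_future_transaction_graph(pending)
hotspots = hotspot_accounts(ftg, 2)
partition = greedy_partition(ftg, 2)
print(hotspots, partition)
```

With this input, accounts A, B and C end up in one shard and D and E in the other, so none of the pending transactions is cross-shard. The sketch also shows the downside discussed in the text: the shard that holds the hotspots receives most of the load, which is what steps (2) and (3) address.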

3.2 Account Partitioning Based on Future Transaction Graph

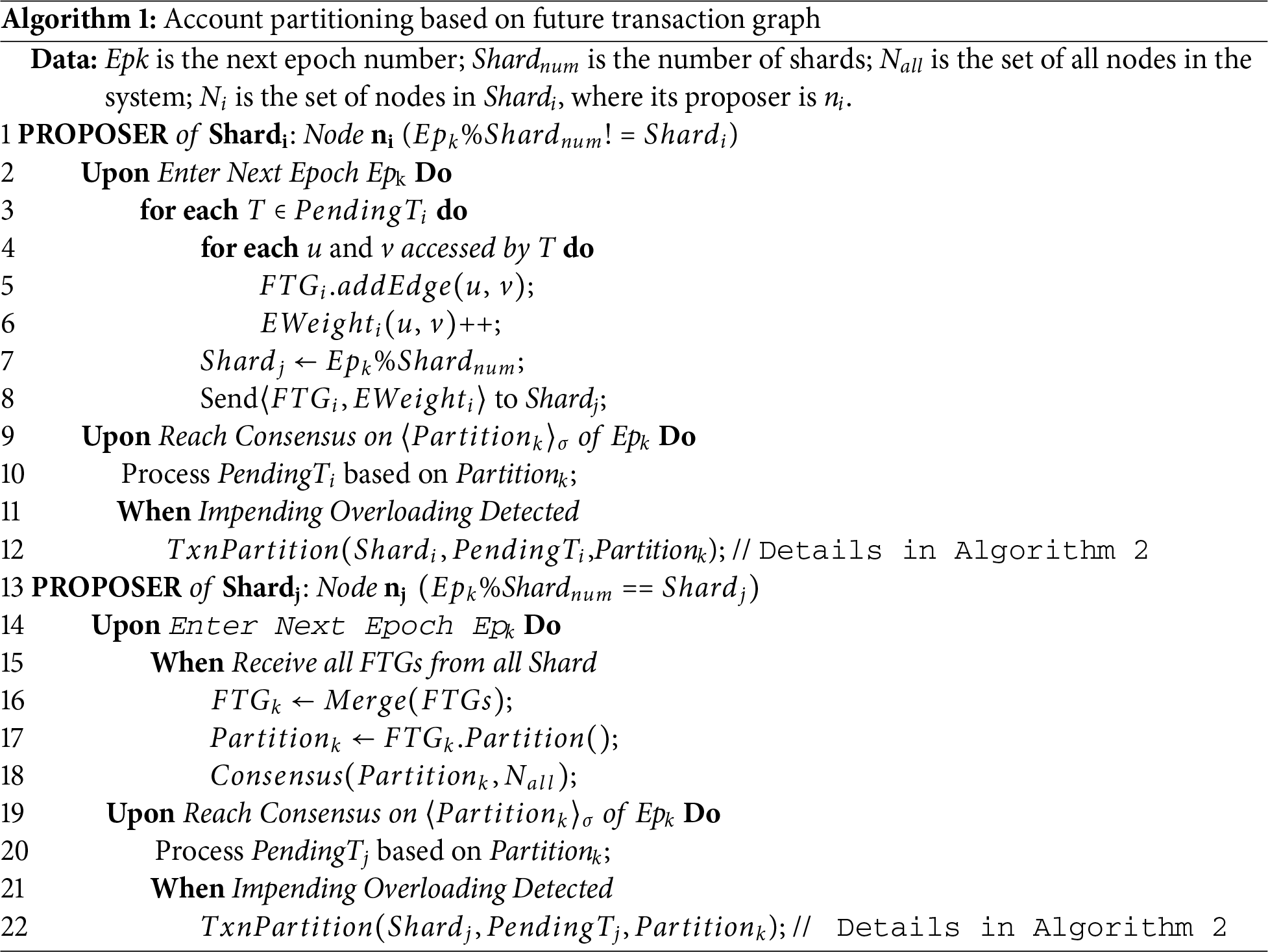

As shown in Algorithm 1, at the beginning of a new epoch

The sharding model guarantees that each account is managed exclusively by a single shard. Suppose accounts

Analysis: (1) The account partition based on the future transaction graph has the same cost and security guarantees as TxAllo’s method, which uses the historical transaction graph. Through global consensus, all shards adopt a consistent account partition. Moreover, the future transaction graph enables more precise detection of upcoming hotspot accounts, leading to more effective account partitioning and significantly reducing the cross-shard transaction rate. Experiments in Section 5 validate this observation. (2) HATLedger maintains both security and liveness even in the presence of malicious behavior. The potential malicious actions include incomplete FTGs sent by proposers from other shards and inappropriate account partitioning schemes proposed by the coordinator shard’s proposer. However, consensus protocols such as PBFT [12] ensure that all shards reach an agreement on a consistent account partitioning scheme, guaranteeing the system’s security. Additionally, the coordinator shard rotates the coordinator role and repartitions accounts at the beginning of each new epoch, effectively mitigating the long-term impact of inappropriate partitioning and enhancing system robustness. While a malicious proposer from the coordinator shard may cause temporary performance degradation by proposing an inappropriate partitioning scheme, other shards can promptly identify and report such behavior. Furthermore, the transaction partitioning techniques introduced in the next section effectively address shard overloading caused by inappropriate partitioning schemes. Therefore, HATLedger maintains both security and liveness.

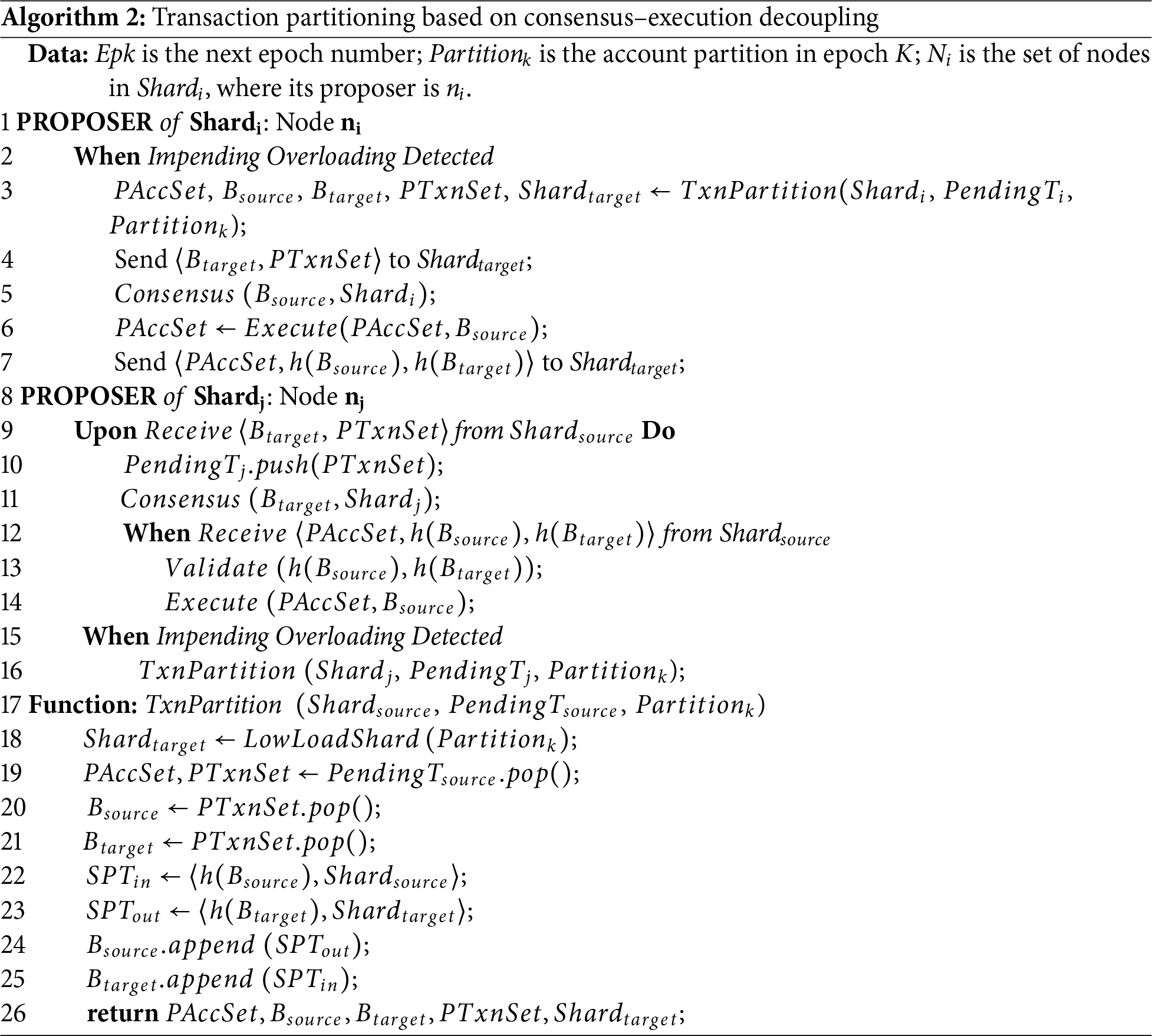

3.3 Transaction Partitioning Based on Consensus–Execution Decoupling

As discussed in Section 2, assigning hotspot accounts to the same shard effectively reduces the cross-shard transaction rate. However, it introduces a critical issue: overloading the associated shard and underutilizing other shards. To address this issue, we propose a transaction partitioning approach with Consensus–Execution decoupling.

As described in [8,9], the transaction partitioning in existing works, which is based on Consensus–Execution coupling, is as follows: consensus and execution can begin in a shard only when it holds both the partitioned account data and the corresponding assigned pending transactions.

In contrast, our proposed transaction partitioning in HATLedger, which is based on Consensus–Execution decoupling, is as follows: upon receiving the partitioned pending transactions, a shard promptly initiates consensus without the corresponding account data. The shard then executes the agreed transactions once the corresponding account data becomes available.
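The difference between the two approaches can be illustrated with a small model. This is a sketch under strong simplifications: consensus is replaced by appending to an ordered log (a stand-in for PBFT), the account is a single integer, and the `add`/`mul` operations are hypothetical transactions chosen only so that execution order matters.

```python
def apply_tx(state, tx):
    """Execute one transaction on an account value; order-sensitive on purpose."""
    op, amount = tx
    return state + amount if op == "add" else state * amount

class TargetShard:
    def __init__(self):
        self.ordered_log = []      # transactions that have passed consensus
        self.account_state = None  # account data, absent until the source sends it

    def reach_consensus(self, txs):
        # Stand-in for PBFT: "consensus" here only fixes the order of the batch.
        self.ordered_log.extend(txs)

    def receive_account(self, state):
        self.account_state = state

    def execute(self):
        if self.account_state is None:
            raise RuntimeError("execution needs account data")
        for tx in self.ordered_log:
            self.account_state = apply_tx(self.account_state, tx)
        self.ordered_log.clear()
        return self.account_state

source_txs = [("add", 5), ("mul", 2)]       # processed by the source shard
partitioned_txs = [("add", 1), ("mul", 3)]  # moved to the target shard

def source_result(initial):
    state = initial
    for tx in source_txs:
        state = apply_tx(state, tx)
    return state

# Coupled: the target waits for the account data before starting consensus.
coupled = TargetShard()
coupled.receive_account(source_result(10))
coupled.reach_consensus(partitioned_txs)
coupled_final = coupled.execute()

# Decoupled: the target orders the transactions first and executes them later.
decoupled = TargetShard()
decoupled.reach_consensus(partitioned_txs)
decoupled.receive_account(source_result(10))
decoupled_final = decoupled.execute()

print(coupled_final, decoupled_final)
```

Both variants execute the same ordered log on the same account state, so they end with the same value. In the decoupled variant, the consensus step no longer sits behind the transfer of account data.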

Therefore, we present the following theorem:

Theorem 1: Both the transaction partitioning in existing works, which is based on Consensus–Execution coupling, and the transaction partitioning in HATLedger, which is based on Consensus–Execution decoupling, achieve equivalent results for transaction consensus and execution, thereby ensuring system consistency.

Proof: Assume the source shard contains a partitioned account

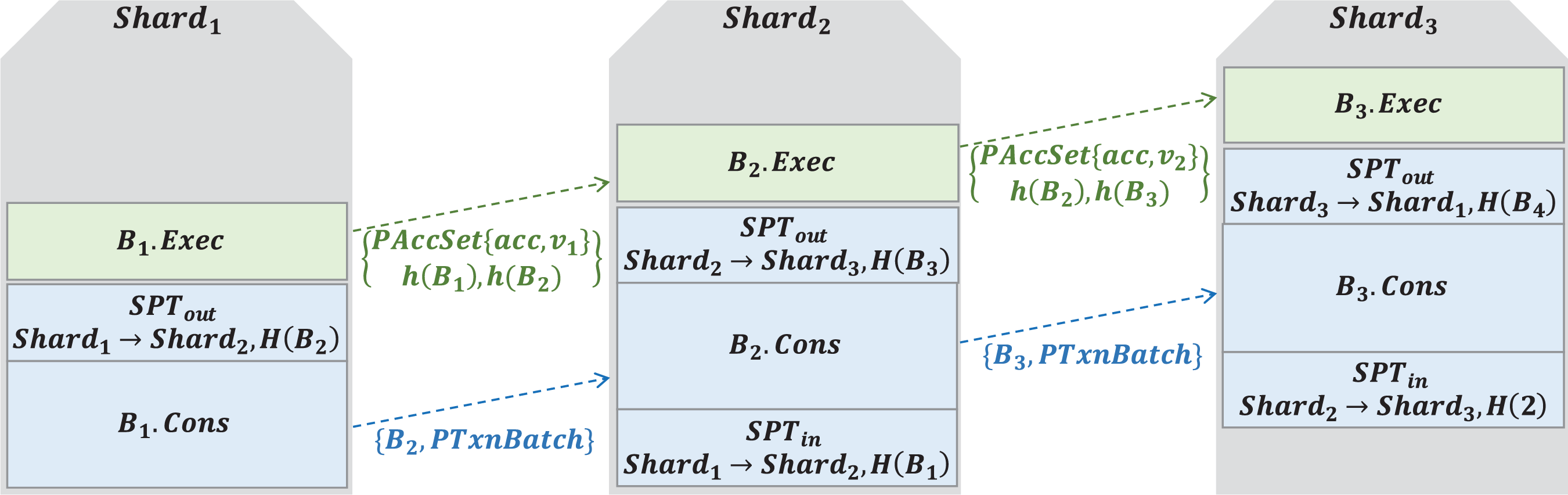

Therefore, as shown in Fig. 4, when

Figure 4: Transaction partitioning based on consensus–execution decoupling in HATLedger

In Algorithm 2, we present the details of transaction partitioning upon detecting an impending overload. We propose using simulated partition-out transactions

Subsequently, the source shard sends the partitioned block

As a result, source and target shards can leverage the simulated partition to begin consensus on related transactions without waiting for the execution involving actual account data. This innovative transaction partitioning strategy allows HATLedger to mitigate shard overloading while significantly improving transaction throughput and overall system efficiency.

Analysis: (1) In terms of performance, HATLedger achieves a lower cross-shard transaction rate while effectively addressing shard overloading. Specifically, HATLedger constructs a future transaction graph to better identify upcoming hotspot accounts, enabling more effective account partitioning to reduce the cross-shard transaction rate. When impending overload is detected, the shard performs fine-grained transaction partitioning to efficiently reach consensus on pending transactions without requiring the source and target shards to wait on each other. (2) In terms of security, both the source shard and the target shard independently reach consensus on the simulated partition-out and partition-in transactions, ensuring consistency in the transaction partition order and the hashes of

In this section, we analyze the safety and liveness of HATLedger. Specifically, HATLedger adopts the same system model and assumptions, as well as the intra-shard and cross-shard transaction processing workflows of SharPer [4]. Consequently, it inherits the same level of security and liveness as SharPer in these aspects. Next, we analyze whether the solutions proposed by HATLedger—account partitioning based on the future transaction graph and transaction partitioning based on Consensus–Execution decoupling—may be impacted by malicious behavior and how they may affect its safety and liveness.

When faulty nodes act as followers in shards, Byzantine fault-tolerant protocols such as PBFT [12] are able to maintain the safety and liveness of HATLedger. Consequently, this discussion emphasizes scenarios where shard proposers are faulty nodes and examines their potential impact on the system.

Safety: (1) The account partitioning based on the future transaction graph does not affect the safety of HATLedger. This is because the account partitioning scheme proposed by the coordinator shard’s proposer is agreed upon by all nodes in all shards through the consensus protocol. (2) To mitigate shard overloading, we propose the transaction partitioning method. In this process, we construct a block

Liveness: Consistent with existing sharded blockchain systems [4,8,9], when a malicious proposer intentionally delays initiating a proposal, HATLedger ensures liveness through the timeout detection mechanism of the consensus protocol’s view-change process, which promptly replaces the proposer. At the beginning of a new epoch, if the proposer of the coordinator shard is a faulty node and proposes an inappropriate account partitioning scheme, it does not compromise the security of HATLedger, as previously discussed. However, it may lead to overloading in certain shards. To address this, the proposed transaction partitioning method effectively mitigates the impact of shard overloading. Furthermore, when a shard detects that its performance is significantly constrained by the inappropriate account partitioning, it can preemptively request a transition to the next epoch. Entering a new epoch involves a different shard serving as the coordinator, which proposes a new account partitioning scheme based on the future transaction graph. This mechanism guarantees that any adverse effects of inappropriate account partitioning are transient, ensuring long-term system stability and liveness. Therefore, HATLedger ensures liveness.

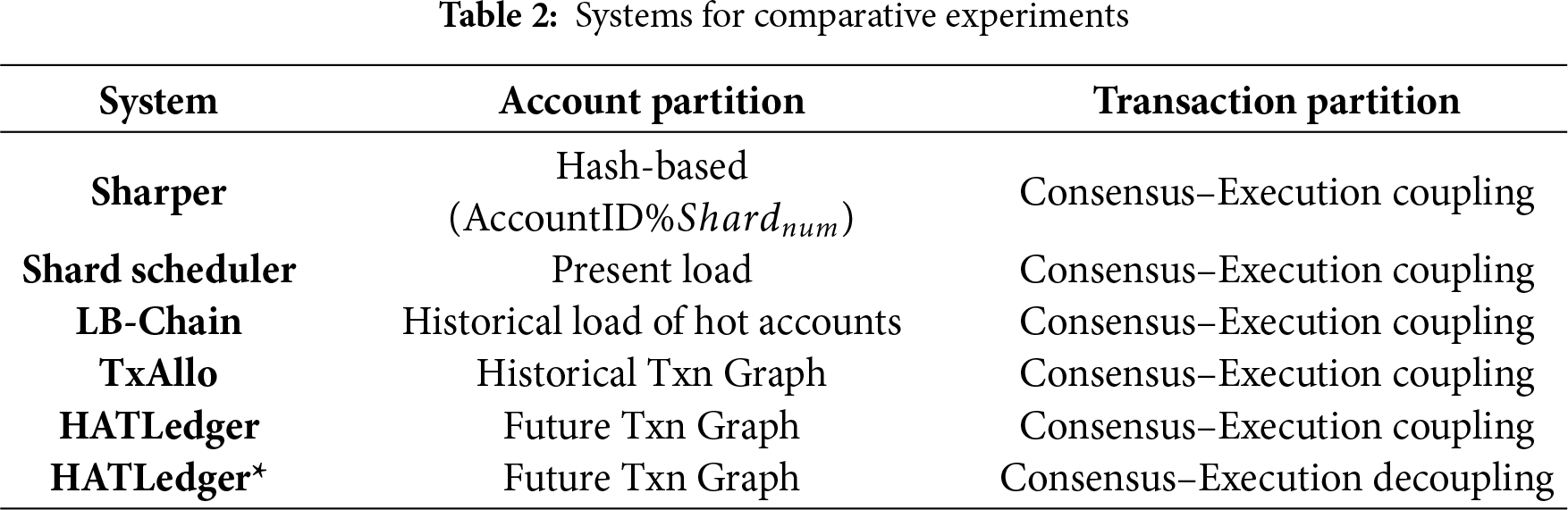

System: We implement HATLedger in C++. As shown in Table 2, we compare the following systems: SharPer [4], Shard Scheduler [8], TxAllo [9], HATLedger, and HATLedger*. Specifically, SharPer, Shard Scheduler, TxAllo, and HATLedger adopt different account partitioning strategies under Consensus–Execution Coupling. Compared to these systems, HATLedger* employs transaction partitioning based on Consensus–Execution decoupling, as described in Section 3.3.

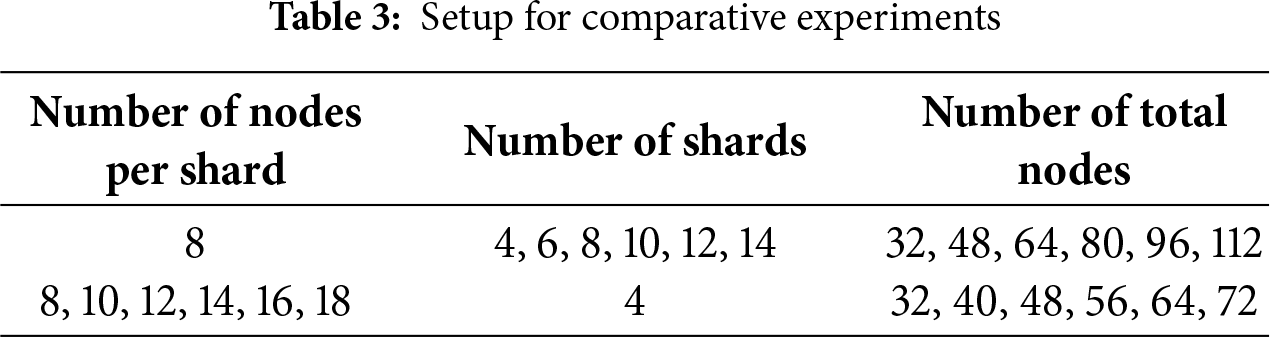

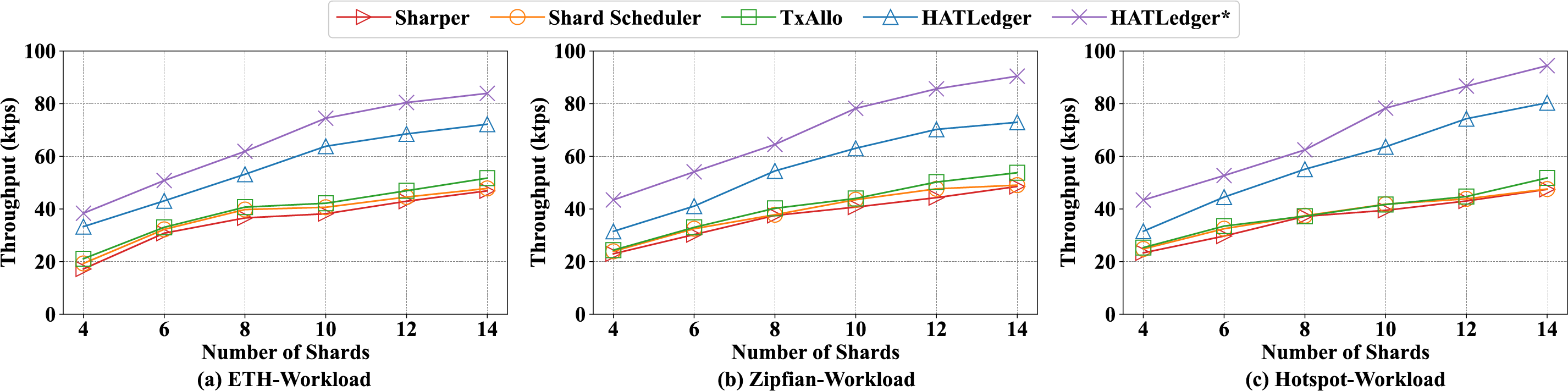

Setup: Our experiments are conducted on Alibaba Cloud, where the default cluster consists of 8 clients and 4 shards. Each shard is composed of 8 nodes, totaling 32 nodes across the 4 shards. The parameters for the scalability experiments are listed in Table 3, where Number of total nodes = Number of nodes per shard * Number of shards. Each node is equipped with a 4-core Intel Xeon (Sapphire Rapids) Platinum 8475B processor and 16 GB memory. The operating system is 64-bit Ubuntu 20.04 LTS. We adopt a transaction-count-based epoch configuration, where the coordinating shard (

Workload: Consistent with the workloads used in existing studies [4,9,11], we employ three types of workloads: ETH-Workload, Zipfian-Workload, and Hotspot-Workload. By default, all systems create 100,000 accounts. Specifically, ETH-Workload uses the Ethereum transactions between block heights of 13M to 13.2M (Aug.–Oct. 2021), as accounts were particularly active during this period [17]. The default parameter for the Zipfian-Workload (Zipf = 0.99) reflects common workload distributions in distributed systems. For the Hotspot-Workload, 1% of the accounts are designated as hotspot accounts, and 90% of the transactions access these hotspot accounts, following a distribution similar to that described in [5]. For each test, we repeat the experiment five times and take the average as the final result.
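The two synthetic workloads can be approximated with a few lines of Python. This is a sketch of one plausible generator, not the authors' benchmark driver: the function names, the choice to draw sender and receiver independently, and the reduced account count in the example are assumptions.

```python
import random

def zipfian_weights(num_accounts, s=0.99):
    """Unnormalised Zipf weights: the account of rank r gets weight 1 / r**s."""
    return [1.0 / (rank ** s) for rank in range(1, num_accounts + 1)]

def zipfian_workload(num_accounts, num_txs, s=0.99, seed=0):
    """Sender and receiver are drawn independently from the same Zipf distribution."""
    rng = random.Random(seed)
    weights = zipfian_weights(num_accounts, s)
    accounts = range(num_accounts)
    senders = rng.choices(accounts, weights=weights, k=num_txs)
    receivers = rng.choices(accounts, weights=weights, k=num_txs)
    return list(zip(senders, receivers))

def hotspot_workload(num_accounts, num_txs, hot_fraction=0.01,
                     hot_tx_fraction=0.9, seed=0):
    """Accounts 0..num_hot-1 are hotspots; about 90% of txs have a hotspot sender."""
    rng = random.Random(seed)
    num_hot = max(1, round(num_accounts * hot_fraction))
    txs = []
    for _ in range(num_txs):
        if rng.random() < hot_tx_fraction:
            sender = rng.randrange(num_hot)
            receiver = rng.randrange(num_accounts)
        else:
            # Cold transaction: neither side is a hotspot account.
            sender = rng.randrange(num_hot, num_accounts)
            receiver = rng.randrange(num_hot, num_accounts)
        txs.append((sender, receiver))
    return txs

# Smaller than the paper's 100,000 accounts to keep the example fast.
zipf_txs = zipfian_workload(1000, 20000)
hot_txs = hotspot_workload(1000, 20000)
hot_share = sum(1 for s, r in hot_txs if s < 10 or r < 10) / len(hot_txs)
print(round(hot_share, 3))
```

A generated transaction may have the same account as sender and receiver; a real driver would likely filter such pairs out.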

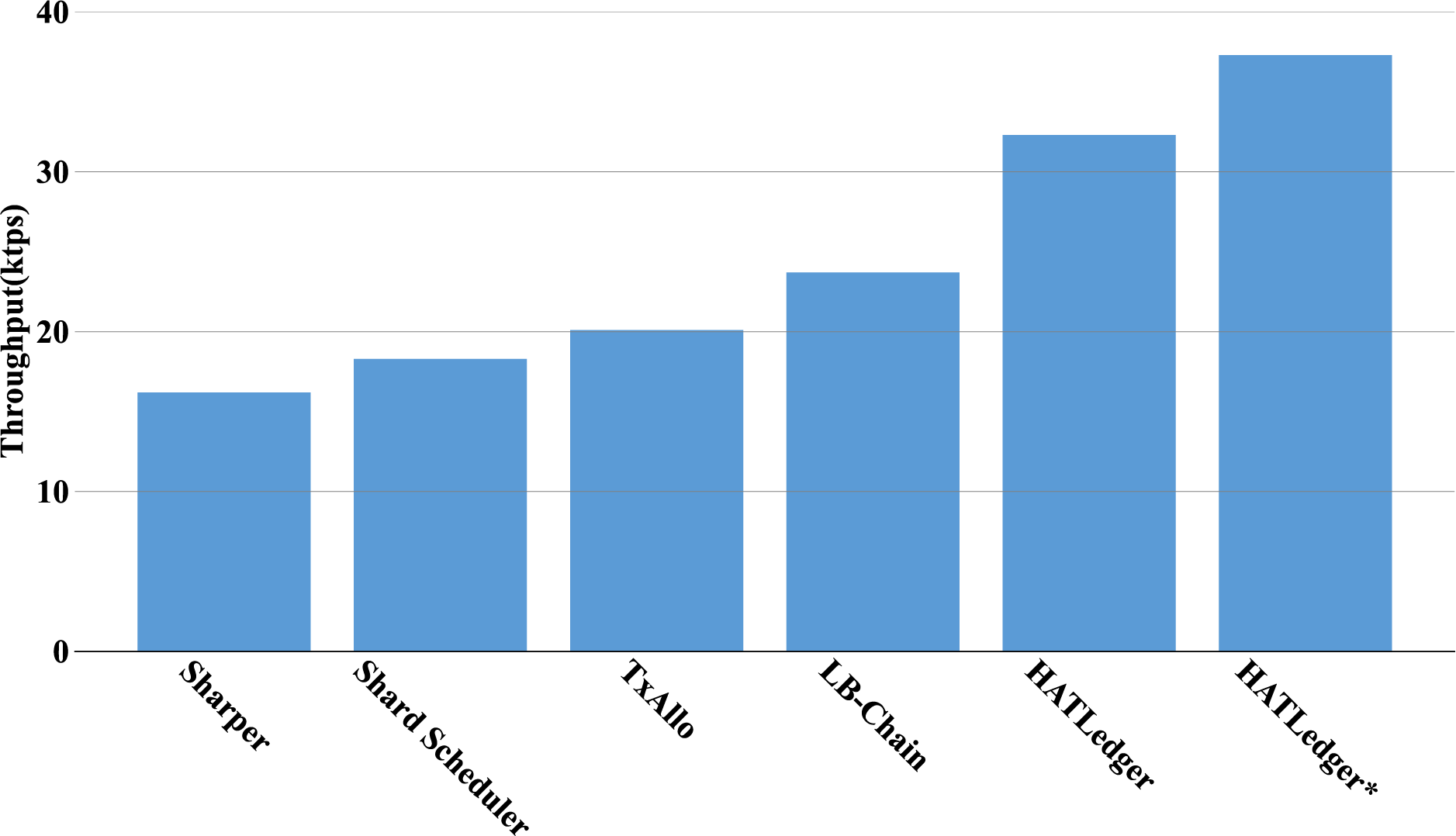

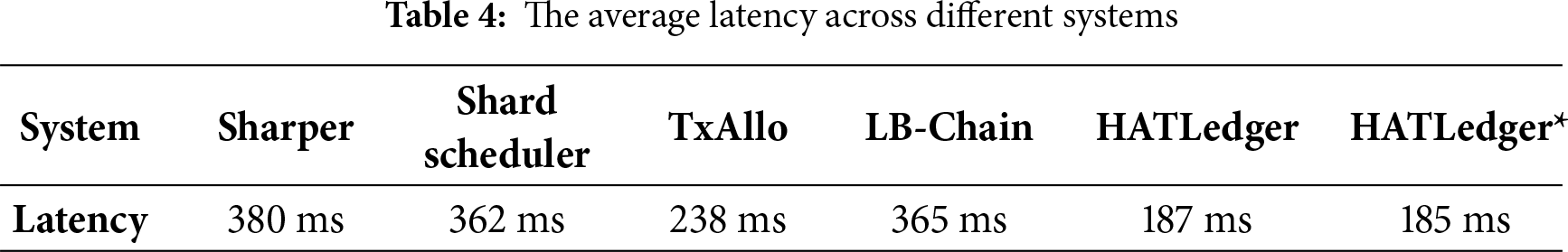

As shown in Table 3, we conducted extensive sharding scalability experiments to compare the performance of different systems. Using the default parameters underlined in Table 3 and the ETH-Workload, we evaluated the overall performance of all systems. As illustrated in Fig. 5, HATLedger* achieved the highest throughput of 37,398 tps, followed by HATLedger (32,317 tps), LB-Chain (23,703 tps), TxAllo (20,771 tps), Shard Scheduler (19,675 tps), and SharPer (16,929 tps). Compared to SharPer, Shard Scheduler, TxAllo, and LB-Chain, HATLedger* demonstrated significant throughput improvements of 2.2x, 1.9x, 1.8x, and 1.6x, respectively. Meanwhile, as shown in Table 4, HATLedger and HATLedger* exhibited the lowest average latency, attributable to the significant reduction in the cross-shard transaction rate. Across all experiments (Figs. 6–9), HATLedger* consistently achieved the best performance. Additionally, the results of the ablation experiments validated the efficiency of the two techniques proposed in HATLedger*: account partitioning based on the future transaction graph and transaction partitioning based on Consensus–Execution decoupling.
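The reported improvement factors follow directly from the throughput figures above. The short check below recomputes them; the dictionary and function names are illustrative.

```python
# Throughput values (tps) as reported for the default configuration.
throughput_tps = {
    "HATLedger*": 37398,
    "HATLedger": 32317,
    "LB-Chain": 23703,
    "TxAllo": 20771,
    "Shard Scheduler": 19675,
    "SharPer": 16929,
}

def speedup_over(baseline, system="HATLedger*"):
    """Throughput of `system` divided by throughput of `baseline`."""
    return throughput_tps[system] / throughput_tps[baseline]

speedups = {
    name: round(speedup_over(name), 1)
    for name in throughput_tps
    if not name.startswith("HATLedger")
}
print(speedups)
```

Rounded to one decimal place, the ratios match the 2.2x, 1.9x, 1.8x, and 1.6x stated in the text.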

Figure 5: The overall performance of different systems

Figure 6: The throughput with different numbers of shards under (a) ETH-Workload, (b) Zipfian-Workload, and (c) Hotspot-Workload

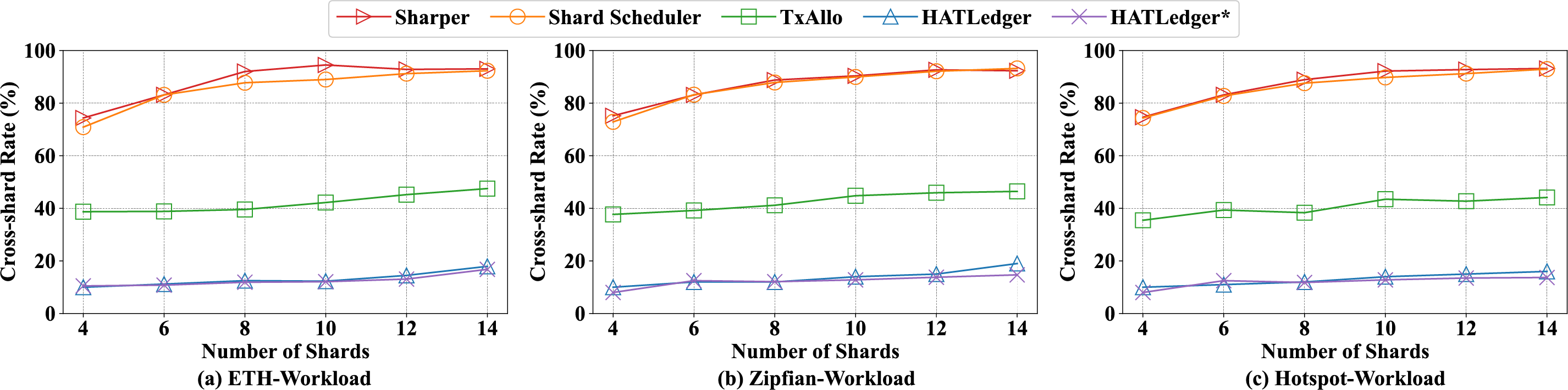

Figure 7: The cross-shard rate with different numbers of shards under (a) ETH-Workload, (b) Zipfian-Workload, and (c) Hotspot-Workload

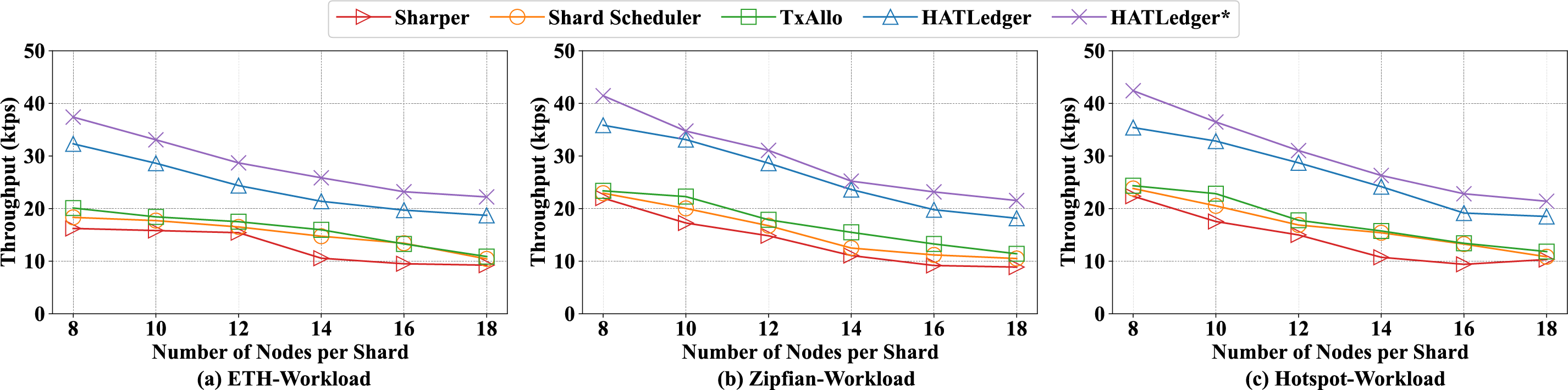

Figure 8: The throughput with different numbers of nodes per shard under (a) ETH-Workload, (b) Zipfian-Workload, and (c) Hotspot-Workload

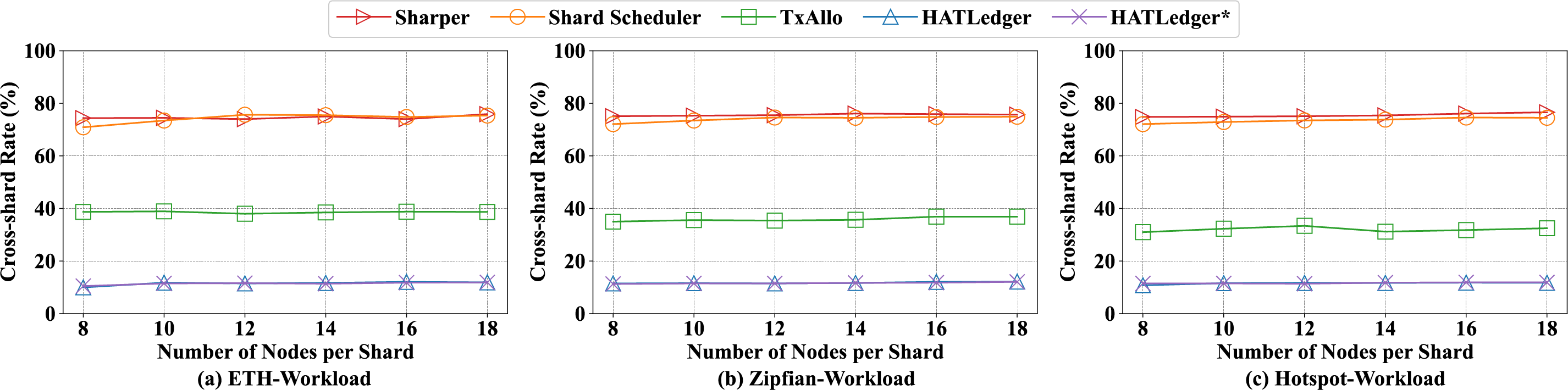

Figure 9: The cross-shard rate with different numbers of nodes per shard under (a) ETH-Workload, (b) Zipfian-Workload, and (c) Hotspot-Workload

5.3 The Impact of Different Numbers of Shards

First, we investigate the impact of varying the number of shards on system performance. As shown in Table 3, we fix the number of nodes per shard at 8 and incrementally increase the number of shards from 4 to 14. Under the ETH-Workload, the throughput of HATLedger* increases from 37,398 to 83,927 tps as the number of shards grows, while HATLedger’s throughput rises from 32,317 to 72,196 tps. In contrast, other systems exhibit a smaller improvement, with throughput increasing from roughly 20,000 to 50,000 tps. Moreover, the cross-shard transaction rates of HATLedger and HATLedger* remain around 10%, significantly lower than those of TxAllo (40%) and other systems (80%). These results demonstrate that both HATLedger and HATLedger* enhance performance by accurately identifying upcoming hotspot accounts through future transaction graph analysis, thereby reducing the cross-shard rate. Additionally, HATLedger* outperforms HATLedger due to its Consensus–Execution decoupled transaction partitioning strategy.

As the number of shards increases, the probability that the accounts accessed by a transaction are distributed across different shards also rises. The results in Fig. 7 validate this observation. As the number of shards increases, the cross-shard transaction rates of SharPer and Shard Scheduler rise sharply from 70% to 90%. This trend occurs because SharPer partitions accounts by hash without considering which accounts transact with each other, while Shard Scheduler allocates transactions to currently underloaded shards, achieving load balance at the expense of the cross-shard rate. In comparison, TxAllo’s cross-shard rate increases more gradually from 40% to 50%, as it detects hotspot accounts using historical transaction graphs and groups them into the same shard to reduce cross-shard transactions. However, hotspot detection based on historical graphs adapts to workload changes with a delay. In contrast, HATLedger and HATLedger* exploit the future transaction graph to more precisely identify forthcoming hotspot accounts, thereby maintaining a stable cross-shard rate between 10% and 20%.
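The observation that more shards raise the chance of a cross-shard transaction can be checked with a back-of-the-envelope model. The sketch assumes that each transaction accesses two accounts and that accounts are placed uniformly and independently at random, as an idealised hash-based partition would do. Real workloads are skewed, so measured rates will differ from these values.

```python
def expected_cross_shard_rate(num_shards, accounts_per_tx=2):
    """P(the accounts of a tx do not all land in one shard) under uniform placement."""
    same_shard = (1.0 / num_shards) ** (accounts_per_tx - 1)
    return 1.0 - same_shard

rates = {k: round(expected_cross_shard_rate(k), 3) for k in (4, 8, 14)}
print(rates)
```

Under these assumptions the expected rate grows from 75% with 4 shards to about 93% with 14 shards, which is in the same range as the 70% to 90% reported for SharPer and Shard Scheduler.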

All systems perform slightly better under the Zipfian-Workload and Hotspot-Workload than under the ETH-Workload. Because the hotspot distribution and transactions in the ETH-Workload are more random, all systems show a small performance drop on it.

5.4 The Impact of Different Numbers of Nodes per Shard

As shown in Table 3, we fixed the system at four shards and gradually increased the number of nodes per shard from 8 to 18. As shown in Fig. 8, when the number of shards remains constant but the number of nodes per shard increases, all systems exhibit noticeable performance degradation. The throughput of HATLedger* declines from 37,398 to 22,173 tps, while HATLedger drops from 32,317 to 18,721 tps. SharPer’s throughput decreases from 16,929 to 9,251 tps. During this process, the cross-shard transaction rates of all systems remain stable (Fig. 9). This result indicates that increasing the number of nodes per shard only increases the consensus cost without affecting the cross-shard rate. Moreover, since cross-shard consensus incurs higher overhead than intra-shard consensus, SharPer, which has the highest cross-shard rate, suffers the most significant performance decline. In contrast, HATLedger* effectively alleviates shard overload through transaction partitioning based on Consensus–Execution decoupling, achieving the most stable performance among all systems.
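The growth in consensus cost can be estimated from the message pattern of PBFT. The sketch below counts only the three normal-case phases (pre-prepare, prepare, commit) for a single request and ignores client messages, checkpoints, and view changes. It is a simplified count for intuition, not a model of the paper's implementation.

```python
def pbft_normal_case_messages(n):
    """Messages exchanged among n replicas to agree on one request."""
    pre_prepare = n - 1           # primary sends to every backup
    prepare = (n - 1) * (n - 1)   # each backup sends to all other replicas
    commit = n * (n - 1)          # every replica sends to all other replicas
    return pre_prepare + prepare + commit

def max_faulty(n):
    """Largest f such that n >= 3f + 1."""
    return (n - 1) // 3

for nodes in (8, 18):
    print(nodes, max_faulty(nodes), pbft_normal_case_messages(nodes))
```

Going from 8 to 18 nodes per shard raises the count from 112 to 612 messages per request, a factor of about 5.5, which is consistent with the throughput decline observed for all systems.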

5.5 The Workload Distribution of Different Systems

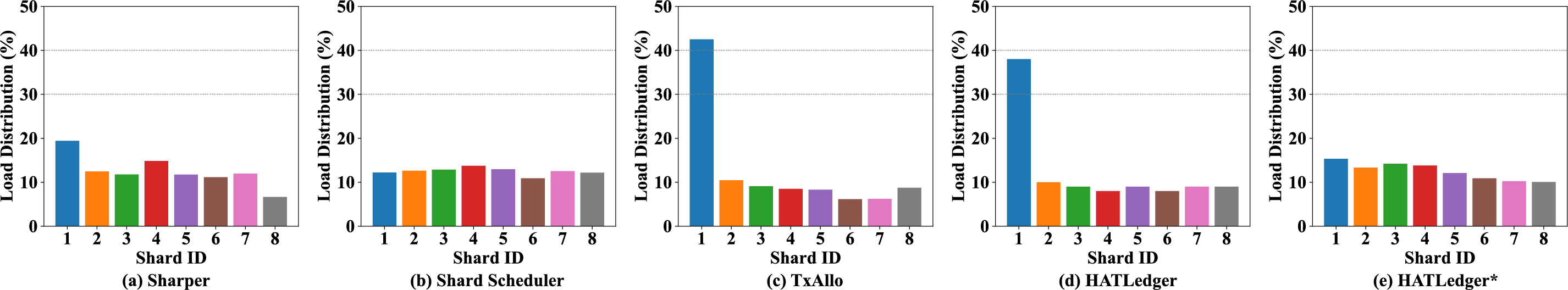

Each shard consists of 8 nodes, and we evaluated load distribution across different systems in a setup with 8 shards. As shown in Fig. 10c,d, both TxAllo and HATLedger partition hotspot accounts to the same shard (Shard 1), which reduces the cross-shard transaction rate but leads to workload imbalance, as Shard 1 becomes significantly overloaded. In contrast, as shown in Fig. 10e, HATLedger* achieves a more balanced workload distribution across all shards. This improvement is attributed to the Consensus–Execution decoupled transaction partitioning mechanism, which redistributes pending transactions from Shard 1 to other relatively idle shards. As a result, the load across shards in HATLedger* remains relatively balanced.
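The effect of redistributing pending transactions can be sketched with a simple balancing rule. Everything below is hypothetical: the load values are invented and are not taken from Fig. 10, and the rule of filling the least loaded shards up to the mean load is only one possible policy, not the one specified by the paper's Algorithm 2.

```python
def imbalance(loads):
    """Maximum load divided by mean load; 1.0 means perfectly balanced."""
    return max(loads) / (sum(loads) / len(loads))

def rebalance(loads, source):
    """Move pending work out of `source` until it is down to the mean load (rounded up)."""
    loads = list(loads)
    target_level = -(-sum(loads) // len(loads))  # ceiling of the mean load
    moved = {}
    # Visit the least loaded shards first.
    for shard in sorted(range(len(loads)), key=lambda s: loads[s]):
        if shard == source or loads[source] <= target_level:
            continue
        room = target_level - loads[shard]
        amount = min(room, loads[source] - target_level)
        if amount > 0:
            loads[shard] += amount
            loads[source] -= amount
            moved[shard] = amount
    return loads, moved

# Invented pending-transaction counts for 8 shards; index 0 holds the hotspots.
example_loads = [700, 50, 50, 50, 50, 40, 30, 30]
balanced_loads, moved = rebalance(example_loads, source=0)
print(imbalance(example_loads), imbalance(balanced_loads))
print(moved)
```

In this example the imbalance ratio drops from 5.6 to 1.0 after 575 pending transactions are moved out of the overloaded shard.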

Figure 10: The workload distribution of each shard in (a) SharPer, (b) Shard Scheduler, (c) TxAllo, (d) HATLedger, and (e) HATLedger*

5.6 The Performance under Overloads

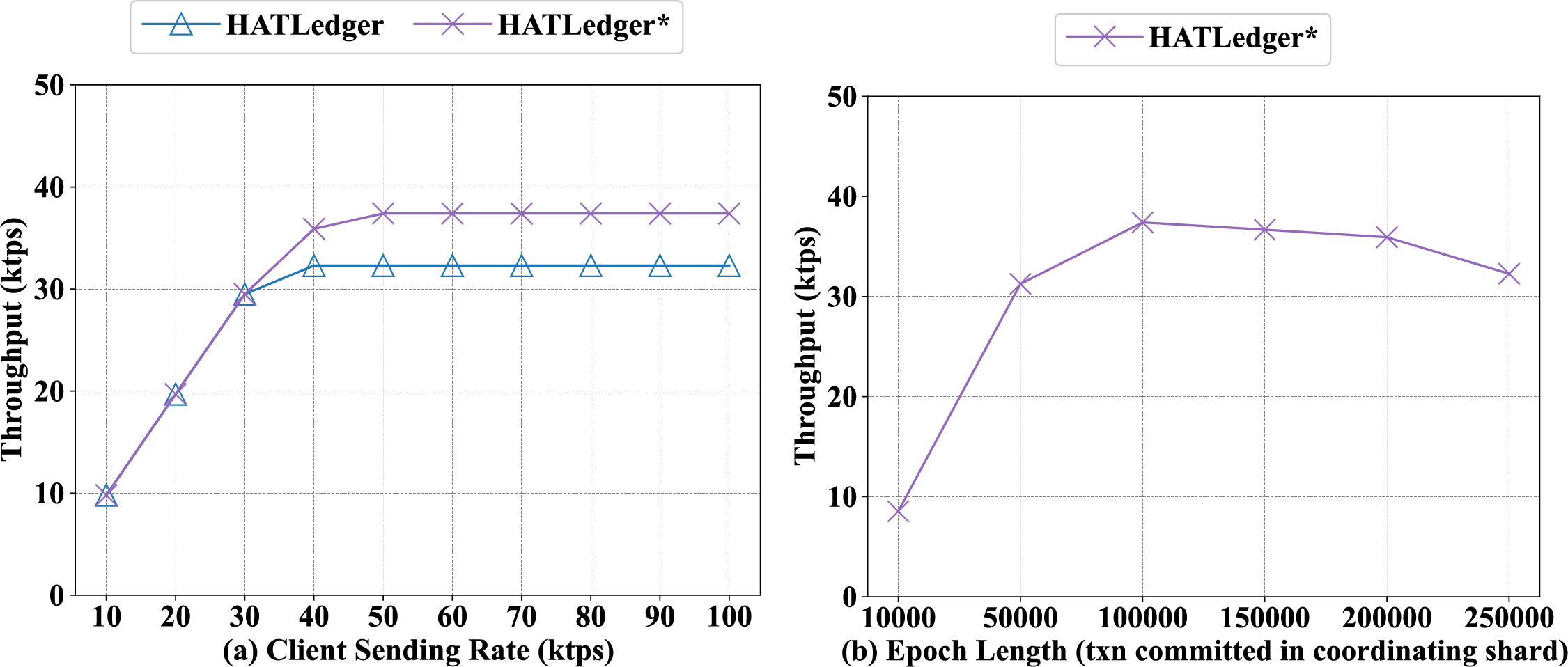

We simulated an overload DDoS attack by increasing the client transaction sending rate from 10,000 to 100,000 tps. In Fig. 11a, the throughput of HATLedger* and HATLedger initially increases with the growth of the sending rate and eventually stabilizes at 37,398 and 32,317 tps, respectively. When the sending rate is below 30,000 tps, HATLedger* and HATLedger exhibit similar performance because both systems have sufficient capacity to process all transactions. When the sending rate exceeds 30,000 tps, the performance of HATLedger approaches saturation. HATLedger* reaches saturation when the sending rate exceeds 40,000 tps. This demonstrates that HATLedger* handles sudden overloads more effectively through its Consensus–Execution decoupled transaction partitioning mechanism, thereby providing better resilience against DDoS attacks.
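The saturation behaviour can be summarised with an idealised model in which a system processes every submitted transaction until it reaches its peak capacity. The sketch uses the stabilised throughput values from the text as capacities. The `min` rule is an assumption that ignores queueing and latency effects.

```python
# Stabilised throughput (tps) reported in the text, used here as peak capacity.
capacity_tps = {"HATLedger*": 37398, "HATLedger": 32317}

def modelled_throughput(system, sending_rate):
    """Idealised throughput: everything is processed until capacity is reached."""
    return min(sending_rate, capacity_tps[system])

for rate in (10000, 30000, 40000, 100000):
    print(rate, {name: modelled_throughput(name, rate) for name in capacity_tps})
```

At 30,000 tps both systems keep up with the clients. At 40,000 tps and above, each is capped at its own capacity, and the gap between them equals the difference in peak throughput.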

Figure 11: The performance under different scenarios: (a) The performance under different client sending rates; (b) The performance under different epoch lengths

5.7 The Performance under Different Epoch Lengths

We adopt a transaction-count-based epoch configuration, in which the epoch length is controlled by the number of transactions committed in the coordinating shard (

Liu et al. [18] provided a comprehensive review of existing consensus protocols and proposed the Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS) to systematically assess their respective advantages and limitations. Luo et al. [19] proposed the Symbiotic Blockchain Consensus (SBC), an energy-efficient sharding mechanism designed for wireless networks to satisfy the low-power demands of 6G systems. Xiong et al. [20] proposed a group-based approach to improve the efficiency of blockchains. Chien et al. [21] argued that effective prediction is important for resource allocation. A more advanced step was made by OmniLedger [7], which introduced state sharding so that each shard only manages a portion of the global ledger. RapidChain [22] was developed to substantially mitigate high reconfiguration costs. SharPer [4] targets scalability by dynamically redistributing shards across network clusters. Shard Scheduler [8] partitions transactions and their associated accounts to low-load shards based on the current load distribution, achieving better load balancing but still suffering from high cross-shard transaction rates. In contrast, TxAllo [9] constructs a hotspot account graph based on historical transactions and partitions hotspot accounts to the same shard, thereby reducing cross-shard transaction rates but leading to load imbalance.

HATLedger is an efficient sharded blockchain ledger built on Hybrid Account and Transaction partitioning. Leveraging the future transaction graph, HATLedger identifies upcoming hotspot accounts and effectively reduces the cross-shard transaction rate. To address potential shard overloading, HATLedger supports transaction partitioning based on Consensus-Execution decoupling, eliminating the need for waiting between source and target shards. Experimental results demonstrate that HATLedger achieves up to a 2.2

Acknowledgement: We sincerely thank Beijing Institute of Technology for providing support for the completion of this research.

Funding Statement: This research was funded by the National Key Research and Development Program of China (Grant No. 2024YFE0209000) and the NSFC (Grant No. U23B2019).

Author Contributions: Shuai Zhao: Investigation, Methodology, Software, Validation, Visualization, Writing—original draft; Zhiwei Zhang: Formal analysis, Funding acquisition, Resources, Writing—review & editing; Junkai Wang: Data curation, Formal analysis, Writing—review & editing; Ye Yuan: Funding acquisition, Project administration; Guoren Wang: Funding acquisition, Project administration, Resources. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data that support the findings of this study are openly available in the transaction history of Ethereum with block heights of 13M to 13.2M (Aug.–Oct. 2021) at https://ethereum.org (accessed on 12 October 2025).

Ethics Approval: Not applicable.

Conflicts of Interest: Prof. Guoren Wang, one of the co-authors, currently holds the position of Editor-in-Chief at Computers, Materials & Continua. The submission was made via the journal’s regular online system. In line with the author guidelines, manuscripts involving an editor of the journal as a co-author are overseen by a different editor who has minimal potential conflicts of interest, thereby ensuring an impartial review process. Furthermore, since this manuscript is submitted to a special issue, we confirm that no conflicts of interest exist with any of the guest editors associated with this issue.

References

1. Bitcoin Project [Internet]. 2024 [cited 2025 Aug 26]. Available from: http://bitcoin.org.

2. Ethereum Foundation [Internet]. 2025 [cited 2025 Aug 26]. Available from: https://ethereum.org.

3. Hyperledger Project [Internet]. 2024 [cited 2025 Aug 26]. Available from: http://github.com/hyperledger/fabric.

4. Amiri MJ, Agrawal D, Abbadi AE. SharPer: sharding permissioned blockchains over network clusters. In: Li G, Li Z, Idreos S, Srivastava D, editors. SIGMOD ’21: international conference on management of data. New York, NY, USA: ACM; 2021. p. 76–88. doi:10.1145/3448016.3452807.

5. Hu D, Wang J, Liu X, Li Q, Li K. LMChain: an efficient load-migratable beacon-based sharding blockchain system. IEEE Trans Comput. 2024;73(9):2178–91. doi:10.1109/TC.2024.3404057.

6. Huang H, Peng X, Zhan J, Zhang S, Lin Y, Zheng Z, et al. Brokerchain: a cross-shard blockchain protocol for account/balance-based state sharding. In: IEEE INFOCOM 2022-IEEE conference on computer communications. Piscataway, NJ, USA: IEEE; 2022. p. 1968–77. doi:10.1109/INFOCOM48880.2022.9796859.

7. Kokoris-Kogias E, Jovanovic P, Gasser L, Gailly N, Syta E, Ford B. Omniledger: a secure, scale-out, decentralized ledger via sharding. In: 2018 IEEE symposium on security and privacy (SP). Piscataway, NJ, USA: IEEE; 2018. p. 583–98. doi:10.1109/SP.2018.000-5.

8. Król M, Ascigil O, Rene S, Sonnino A, Al-Bassam M, Rivière E. Shard scheduler: object placement and migration in sharded account-based blockchains. In: Proceedings of the 3rd ACM Conference on Advances in Financial Technologies; 2021 Sep 26–28; Arlington, VA, USA. New York, NY, USA: ACM. p. 43–56. doi:10.1145/3479722.3480989.

9. Zhang Y, Pan S, Yu J. Txallo: dynamic transaction allocation in sharded blockchain systems. In: 2023 IEEE 39th international conference on data engineering (ICDE). Piscataway, NJ, USA: IEEE; 2023. p. 721–33. doi:10.1109/ICDE55515.2023.00390.

10. Li M, Wang W, Zhang J. LB-Chain: load-balanced and low-latency blockchain sharding via account migration. IEEE Trans Parallel Distrib Syst. 2023;34(10):2797–810. doi:10.1109/TPDS.2023.3238343.

11. Ruan P, Dinh TTA, Loghin D, Zhang M, Chen G, Lin Q, et al. Blockchains vs. distributed databases: dichotomy and fusion. In: Proceedings of the 2021 international conference on management of data. New York, NY, USA: ACM; 2021. p. 1504–17. doi:10.1145/3448016.3452789.

12. Castro M, Liskov B. Practical byzantine fault tolerance. In: OSDI ’99: proceedings of the third symposium on operating systems design and implementation. Berkeley, CA, USA: USENIX Association; 1999. p. 173–86.

13. Cheng F, Xiao J, Liu C, Zhang S, Zhou Y, Li B, et al. Shardag: scaling dag-based blockchains via adaptive sharding. In: 2024 IEEE 40th international conference on data engineering (ICDE). Piscataway, NJ, USA: IEEE; 2024. p. 2068–81. doi:10.1109/ICDE60146.2024.00165.

14. The ZILLIQA Team. The ZILLIQA Technical Whitepaper [Internet]. 2017 [cited 2025 Aug 26]. Available from: https://docs.zilliqa.com/whitepaper.pdf.

15. Dwork C, Lynch N, Stockmeyer L. Consensus in the presence of partial synchrony. J ACM. 1988;35(2):288–323. doi:10.1145/42282.42283.

16. Karypis G, Kumar V. METIS: A Software Package for Partitioning Unstructured Graphs, Partitioning Meshes, and Computing Fill-reducing Orderings of Sparse Matrices. Technical Report. Minneapolis, MN, USA: University of Minnesota. 1997 [cited 2025 Aug 26]. No. 97-061. Available from: https://hdl.handle.net/11299/215346.

17. InPlusLab. Ethereum BlockChain Datasets [Internet]. 2025 [cited 2025 Aug 26]. Available from: https://xblock.pro/#/.

18. Liu J, Liu C, Lin M, Xu G. Comprehensive survey of blockchain consensus mechanisms: analysis, applications, and future trends. Comput Netw. 2025;272:111661. doi:10.1016/j.comnet.2025.111661.

19. Luo H, Sun G, Chi C, Yu H, Guizani M. Convergence of symbiotic communications and blockchain for sustainable and trustworthy 6G wireless networks. IEEE Wireless Commun. 2025;32(2):18–25. doi:10.1109/mwc.001.2400245.

20. Xiong H, Jin C, Alazab M, Yeh KH, Wang H, Gadekallu TR, et al. On the design of blockchain-based ECDSA with fault-tolerant batch verification protocol for blockchain-enabled IoMT. IEEE J Biomed Health Inform. 2021;26(5):1977–86. doi:10.1109/JBHI.2021.3112693.

21. Chien WC, Lai CF, Chao HC. Dynamic resource prediction and allocation in C-RAN with edge artificial intelligence. IEEE Trans Ind Inform. 2019;15(7):4306–14. doi:10.1109/TII.2019.2913169.

22. Zamani M, Movahedi M, Raykova M. Rapidchain: scaling blockchain via full sharding. In: Proceedings of the 2018 ACM SIGSAC conference on computer and communications security. New York, NY, USA: ACM; 2018. p. 931–48. doi:10.1145/3243734.3243853.

Copyright © 2026 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
