Open Access

Open Access

ARTICLE

Quantum Fuzzy Support Vector Machine for Binary Classification

1 School of Cybersecurity, Chengdu University of Information Technology, Chengdu, 610225, China

2 Sichuan Key Laboratory of Advanced Cryptography and System Security, Chengdu, 610225, China

3 International Business Machines Corporation (IBM), New York, 14201, USA

* Corresponding Author: Shibin Zhang. Email:

Computer Systems Science and Engineering 2023, 45(3), 2783-2794. https://doi.org/10.32604/csse.2023.032190

Received 10 May 2022; Accepted 01 August 2022; Issue published 21 December 2022

Abstract

In the objective world, how to deal with the complexity and uncertainty of big data efficiently and accurately has become the premise and key to machine learning. Fuzzy support vector machine (FSVM) not only deals with the classification problems for training samples with fuzzy information, but also assigns a fuzzy membership degree to each training sample, allowing different training samples to contribute differently in predicting an optimal hyperplane to separate two classes with maximum margin, reducing the effect of outliers and noise, Quantum computing has super parallel computing capabilities and holds the promise of faster algorithmic processing of data. However, FSVM and quantum computing are incapable of dealing with the complexity and uncertainty of big data in an efficient and accurate manner. This paper research and propose an efficient and accurate quantum fuzzy support vector machine (QFSVM) algorithm based on the fact that quantum computing can efficiently process large amounts of data and FSVM is easy to deal with the complexity and uncertainty problems. The central idea of the proposed algorithm is to use the quantum algorithm for solving linear systems of equations (HHL algorithm) and the least-squares method to solve the quadratic programming problem in the FSVM. The proposed algorithm can determine whether a sample belongs to the positive or negative class while also achieving a good generalization performance. Furthermore, this paper applies QFSVM to handwritten character recognition and demonstrates that QFSVM can be run on quantum computers, and achieve accurate classification of handwritten characters. When compared to FSVM, QFSVM’s computational complexity decreases exponentially with the number of training samples.Keywords

Support Vector Machine (SVM) is a machine learning algorithm that can overcome the local minimum and curse of dimensionality in traditional machine learning algorithms. It is based on the rule of Vapnik-Chervonenkis theory and structural risk minimization principle in statistical learning theory. It is a binary classification technique that uses the training dataset to predict an optimal hyperplane in an n-dimensional space. This hyperplane is used to classify new data sets. There are currently several types of SVM, such as twin SVM [1], Gaussian SVM [2], multi-kernel SVM [3] and so on. Furthermore, SVM has been widely applied in a variety of fields, for example, handwritten hindi character recognition [4], face recognition [5], network intrusion detection [6] and breast cancer diagnosis [7], and so on. Although SVM is a common algorithm for classification problems that treats all samples equally and ignores the effect of outliers and noise on the construction of optimal hyperplanes, it fails to perform well when classifying new sets of data with fuzzy information.

To address this issue, some researchers incorporate fuzzy set theory into SVM and propose fuzzy support vector machine (FSVM) algorithms. Inoue et al. [8] proposed the first FSVM algorithm in 2001, which introduces a fuzzy membership function and uses the FSVM classification technique to eliminate the inseparable area. To reduce the influence of noise or outliers, Taiwan scholars Lin et al. [9] combined fuzzy set theory and proposed an FSVM algorithm in 2002, which assigns a fuzzy membership grade to each training sample to make different training samples have different contributions in predicting an optimal hyperplane in an n-dimensional space. Following that, FSVM was rapidly developed. Wang et al. [10] proposed a bilateral-weighted fuzzy support vector machine (B-FSVM), which improves generalization by assigning different memberships to each training sample. Li et al. [11] proposed a regularized monotonic fuzzy support vector machine model that takes into account the various contributions of each training data as well as prior knowledge of monotonicity. Tao et al. [12] proposed an affinity and class probability-based fuzzy support vector machine technique (ACFSVM) that has a better generalization performance when dealing with imbalanced data set classification problems. The key to the FSVM is to construct the fuzzy membership function and choose a suitable fuzzy membership function, which can effectively reduce the effects of outliers and noise when solving the classification problem. Tang et al. [13] proposed a new fuzzy membership function combined with the concept of the k-nearest neighbor algorithm, in which the distance between each training sample and the center of classification, as well as sample affinity, are considered concurrently. Tang [14] proposed a new fuzzy membership function based on the structural information of two classes in the input space and feature space that can effectively distinguish the support vectors and the outliers. To deal with classification problems with outliers or noises, Yang et al. [15] proposed a kernel fuzzy c-means clustering-based fuzzy SVM algorithm (KFCM-FSVM). Currently, the FSVM algorithm has demonstrated superior generalization performance in a variety of fields, including bankruptcy prediction [16], short-term load forecasting [17], and Breast cancer diagnosis [18]. Despite the fact that the FSVM algorithm can deal with a set of data with fuzzy information, it cannot efficiently deal with the complexity and uncertainty of big data.

Due to the high parallelism of quantum computing, some researchers have set out to combine quantum computing and machine learning algorithms and propose quantum machine learning algorithms [19–22]. Rebentrost et al. [23] proposed a quantum support vector machine (QSVM) algorithm in 2014, which uses a swap test [24] to solve inner products and the HHL algorithm [25] to solve matrix inversion. This work demonstrated the feasibility of executing QSVM in a near-term quantum computer by implementing an experimental realization of QSVM for handwriting recognition on a four-qubit NMR test [26]. Ding et al. [27] proposed a quantum-inspired classical algorithm for the least-square support vector machine (LS-SVM) using an improved fast sampling technique, which was inspired by the QSVM algorithm. Lin et al. [28] proposed a novel quantum algorithm for simplifying quantum LS-SVM, as well as a hybrid quantum-classical version for sparse solutions of LS-SVM. Although QSVM is a generalization of the traditional SVM and it can hold the promise of faster algorithmic processing of data, it cannot deal with classification problems for training samples with fuzzy information, so research into QSVM with fuzzy training samples is very meaningful. Moreover, there are many uncertainties in the objective world, how to deal with the ambiguity of big data efficiently and accurately is the premise and key to the SVM algorithm.

Based on the analysis presented above, this paper proposes a novel quantum fuzzy support vector machine for binary classification, paving the way for dealing with the complexity and uncertainty of big data efficiently and accurately. This paper uses the fact that quantum computing can efficiently process big data and FSVM is easy to deal with the complexity and uncertainty problems to research and propose an efficient and accurate QFSVM algorithm. The core idea of the proposed algorithm is to use the least-squares method to convert the quadratic programming problem in FSVM into a linear equation and then solve the linear equation using the HHL algorithm, which can effectively improve the computational complexity of FSVM. The proposed QFSVM algorithm can efficiently deal with classification problems for training samples with fuzzy information, and it can determine whether a sample belongs to the positive or negative class. Moreover, it is applied to the handwritten characters and the experimental results show that the proposed QFSVM algorithm can achieve accurate classification of handwritten characters, and that executing QFSVM in a near-term quantum computer is feasible.

The rest of this paper is structured as follows. A fuzzy support vector machine is introduced in Section 2. The proposed quantum fuzzy support vector machine for binary classification is described in Section 3. Experimental realization of quantum fuzzy support vector machine is given in Section 4. Discussion and conclusion are contained in Section 5.

2 Fuzzy Support Vector Machine

Classical set theory: crisp set A of X is defined by the characteristic function

where

Fuzzy Set Theory [29]: If the set X denotes a collection of objects defined by x, then a fuzzy set A in X can be formulated as a set of ordered pairs:

where

2.2 Fuzzy Support Vector Machine

The data points of FSVM are given by

where

The FSVM algorithm, like the SVM algorithm, seeks an optimal separate hyperplane

where

To solve the above optimization problem, we construct the following Lagrangian function:

where

we differentiate Eq. (5) to

Substituting Eq. (6) into Eq. (4), maximizing

where

To solve the quadratic programming problem, we can get

where

3 Quantum Fuzzy Support Vector Machine for Binary Classification

3.1 Fuzzy Membership Function Based on K-Nearest Neighbor Algorithm

The fuzzy membership function based on K-nearest neighbor algorithm is described in Algorithm 1.

From Eq. (11), it can be seen that when

3.2 Quantum Fuzzy Support Vector Machine

The solution to the quadratic programming problem of Eq. (4) has high computational complexity. By introducing the least squares method, Eq. (4) is transformed into the following optimization problem to solve the quadratic programming problem:

To solve the above quadratic programming problem, we construct the following Lagrangian function:

We differentiate the Lagrangian function

After eliminating variables

where

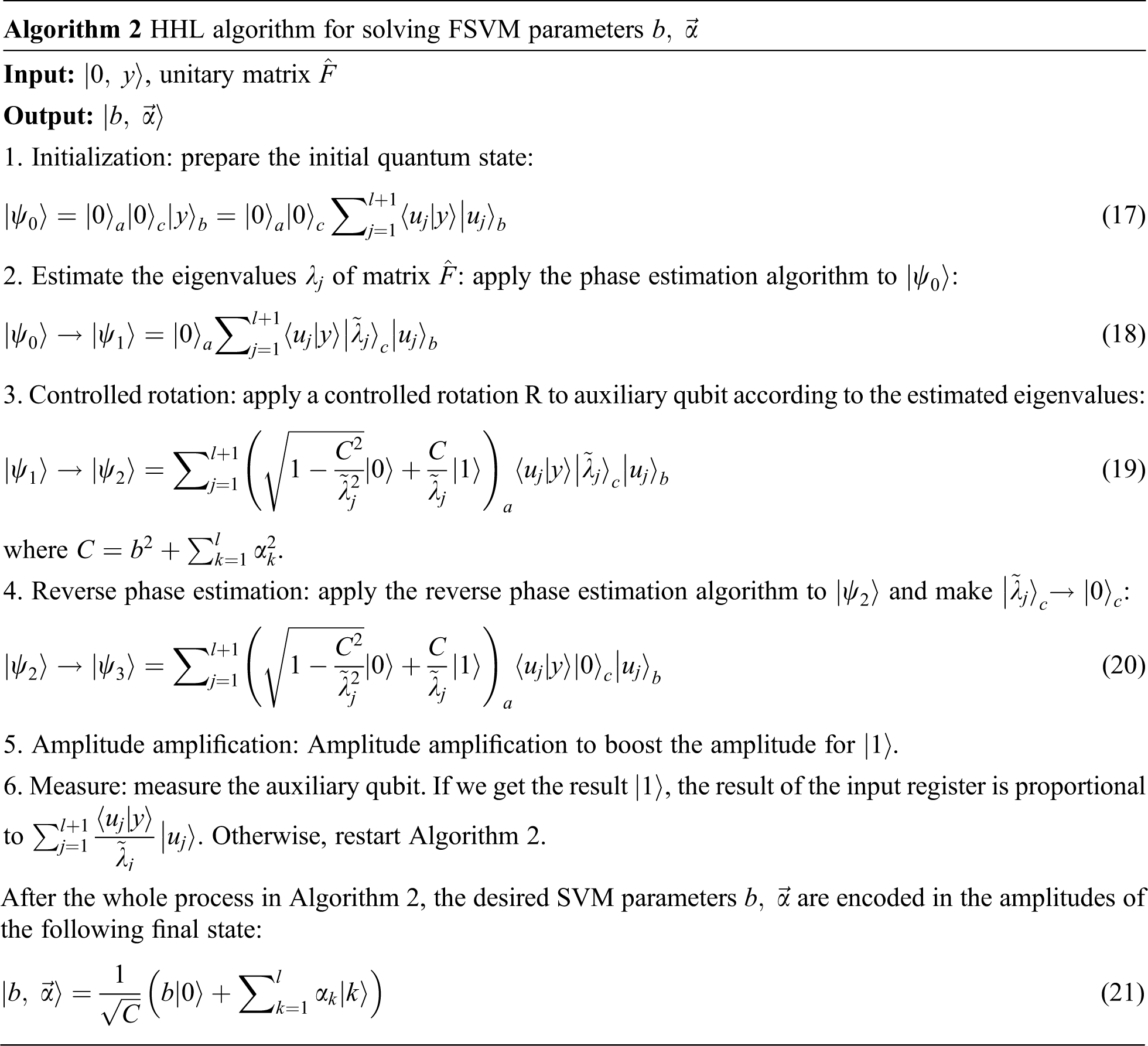

FSVM parameters

Therefore, the SVM parameters are determined schematically by

A step-by-step procedure for obtaining the SVM parameters

The final state in Eq. (21) can be used for classifying the new data x, and determine whether it belongs to the positive or negative class (

where

The classification process is shown in Algorithm 3.

The flowchart of the proposed QFSVM algorithm can be seen in Fig. 1.

Figure 1: The flowchart of the proposed QFSVM algorithm

For nonlinear FSVM, a kernel function is introduced, a nonlinear mapping

Assuming that the kernel function is a polynomial function

Solving the nonlinear function is equivalent to the inner product of

The inner product can be calculated by swap test algorithm. Arbitrary polynomial kernels can be constructed using this trick. The polynomial kernel in the original space is converted into a linear hyperplane optimization in the d-times tensor product space.

The whole quantum circuit of QFSVM for binary classification can be seen in Fig. 2 below.

Figure 2: Quantum circuit of QFSVM for binary classification. H denotes the Hadamard gate, SWAP denotes the swap gate, S denotes the phase gate,

This section demonstrates the experimental realization of a quantum fuzzy support vector machine for binary classification. The proposed QFSVM algorithm is trained with the handwritten characters “d” and “q”, and then eight handwritten characters “d” and “q” chosen from the Modified National Institute of Standards and Technology database (MNIST database) are divided into two-character groups by performing the algorithm. It is worth noting that each handwritten character should be preprocessed, including resizing the pixels and calculating the features. In our experiment, the feature values of the handwritten character are chosen as the horizontal (HR) and vertical ratios (VR), which can be calculated from the pixels in the left (upper) half over the right (lower) half. For the handwritten character picture, calculating its horizontal ratio (HR) and vertical ratio (VR), its angle value

As an excellent classifier, the train points in (quantum) support vector machines must be specific sets, and the QSVM and SVM cannot deal with the classification problems for training samples with fuzzy information. In comparison to QSVM and SVM, the proposed QFSVM is a generalization of FSVM, and it can not only deal with the training samples with fuzzy information efficiently and accurately, but also assign a fuzzy membership degree to each training sample to reduce the effect of outliers and noise in constructing an optimal hyperplane. In comparison to FSVM, which has the computational complexity of

In conclusion, a quantum fuzzy support vector machine for binary classification is proposed in this paper. It is derived from the fuzzy support vector machine algorithm and can process training samples with fuzzy membership efficiently and accurately, as well as deal with the complexity and uncertainty of big data efficiently and accurately. The proposed algorithm is applied to the handwritten characters, and experimental results show that the proposed QFSVM has good classification accuracy and that executing QFSVM in a near-term quantum computer is feasible. More importantly, it opens up a new path for processing large amounts of data with fuzzy information. In the future, we will focus on the quantum fuzzy support vector machine for multiclass classification and the quantum fuzzy support vector machine for privacy protection.

Funding Statement: This work is supported by the National Natural Science Foundation of China (No. 62076042), the Key Research and Development Project of Sichuan Province (No. 2021YFSY0012, No. 2020YFG0307, No. 2021YFG0332), the Science and Technology Innovation Project of Sichuan (No. 2020017), the Key Research and Development Project of Chengdu (No. 2019-YF05-02028-GX), the Innovation Team of Quantum Security Communication of Sichuan Province (No. 17TD0009), the Academic and Technical Leaders Training Funding Support Projects of Sichuan Province (No. 2016120080102643).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. A. Qing, Y. Kang and A. Wang, “A novel semi-supervised multi-label twin support vector machine,” Intelligent Automation and Soft Computing, vol. 27, no. 1, pp. 205–220, 2021. [Google Scholar]

2. K. S. Bhuvaneshwari, J. Uma, K. Venkatachalam, M. Masud, M. Abouhawwash et al., “Gaussian support vector machine algorithm based air pollution prediction,” Computers, Materials & Continua, vol. 71, no. 1, pp. 683–695, 2022. [Google Scholar]

3. R. Sujatha, J. M. Chatterjee, N. Jhanjhi, T. A. Tabbakh and Z. A. Almusaylim, “Heart failure patient survival analysis with multi kernel support vector machine,” Intelligent Automation & Soft Computing, vol. 32, no. 1, pp. 115–129, 2022. [Google Scholar]

4. S. Dhankhar, M. K. Gupta, F. H. Memon, S. Bhatia, P. Dadheech et al., “Support vector machine based handwritten hindi character recognition and summarization,” Computer Systems Science and Engineering, vol. 43, no. 1, pp. 397–412, 2022. [Google Scholar]

5. S. Guo, S. Chen and Y. Li, “Face recognition based on convolutional neural network and support vector machine,” in IEEE Int. Conf. on Information and Automation (ICIA), Ningbo, China, IEEE, pp. 1787–1792, 2016. [Google Scholar]

6. Y. Chang, W. Li and Z. Yang, “Network intrusion detection based on random forest and support vector machine,” in IEEE Int. Conf. on Computational Science and Engineering (CSE) and IEEE Int. Conf. on Embedded and Ubiquitous Computing (EUC), Guangzhou, China, IEEE, vol. 1, pp. 635–638, 2017. [Google Scholar]

7. G. Jayandhi, J. Leena Jasmine and S. Mary Joans, “Mammogram learning system for breast cancer diagnosis using deep learning svm,” Computer Systems Science and Engineering, vol. 40, no. 2, pp. 491–503, 2022. [Google Scholar]

8. T. Inoue and S. Abe, “Fuzzy support vector machines for pattern classification,” in IJCNN'01. Int. Joint Conf. on Neural Networks. Proc. (Cat. No. 01CH37222), Washington, D.C., USA, IEEE, vol. 2, pp. 1449–1454, 2001. [Google Scholar]

9. C. F. Lin and S. D. Wang, “Fuzzy support vector machines,” IEEE Transactions on Neural Networks, vol. 13, no. 2, pp. 464–471, 2002. [Google Scholar]

10. Y. Wang, S. Wang and K. K. Lai, “A new fuzzy support vector machine to evaluate credit risk,” IEEE Transactions on Fuzzy Systems, vol. 13, no. 6, pp. 820–831, 2005. [Google Scholar]

11. S. T. Li and C. C. Chen, “A regularized monotonic fuzzy support vector machine model for data mining with prior knowledge,” IEEE Transactions on Fuzzy Systems, vol. 23, no. 5, pp. 1713–1727, 2014. [Google Scholar]

12. X. Tao, Q. Li, C. Ren, W. Guo, Q. He et al., “Affinity and class probability-based fuzzy support vector machine for imbalanced data sets,” Neural Networks, vol. 122, pp. 289–307, 2020. [Google Scholar]

13. H. Tang and L. Qu, “Fuzzy support vector machine with a new fuzzy membership function for pattern classification,” in Int. Conf. on Machine Learning and Cybernetics, Kunming, China, IEEE, vol. 2, pp. 768–773, 2008. [Google Scholar]

14. W. M. Tang, “Fuzzy SVM with a new fuzzy membership function to solve the two-class problems,” Neural Processing Letters, vol. 34, no. 3, pp. 209–219, 2011. [Google Scholar]

15. X. Yang, G. Zhang, J. Lu and J. Ma, “A kernel fuzzy c-means clustering-based fuzzy support vector machine algorithm for classification problems with outliers or noises,” IEEE Transactions on Fuzzy Systems, vol. 19, no. 1, pp. 105–115, 2010. [Google Scholar]

16. A. Chaudhuri and K. De, “Fuzzy support vector machine for bankruptcy prediction,” Applied Soft Computing, vol. 11, no. 2, pp. 2472–2486, 2011. [Google Scholar]

17. J. Yang, Y. Tang and H. Duan, “Application of fuzzy support vector machine in short-term power load forecasting,” Journal of Cases on Information Technology (JCIT), vol. 24, no. 5, pp. 1–10, 2022. [Google Scholar]

18. B. Zheng, S. W. Yoon and S. S. Lam, “Breast cancer diagnosis based on feature extraction using a hybrid of K-means and support vector machine algorithms,” Expert Systems with Applications, vol. 41, no. 4, pp. 1476–1482, 2014. [Google Scholar]

19. S. Lloyd, M. Mohseni and P. Rebentrost, “Quantum algorithms for supervised and unsupervised machine learning,” arXiv preprint arXiv, 1307.0411, 2013. [Google Scholar]

20. S. Lloyd, M. Mohseni and P. Rebentrost, “Quantum principal component analysis,” Nature Physics, vol. 10, no. 9, pp. 631–633, 2014. [Google Scholar]

21. M. Schuld, I. Sinayskiy and F. Petruccione, “Prediction by linear regression on a quantum computer,” Physical Review A, vol. 94, no. 2, pp. 022342, 2016. [Google Scholar]

22. J. Biamonte, P. Wittek, N. Pancotti, P. Rebentrost, N. Wiebe et al., “Quantum machine learning,” Nature, vol. 549, no. 7671, pp. 195–202, 2017. [Google Scholar]

23. P. Rebentrost, M. Mohseni and S. Lloyd, “Quantum support vector machine for big data classification,” Physical Review Letters, vol. 113, no. 13, pp. 130503, 2014. [Google Scholar]

24. H. Buhrman, R. Cleve, J. Watrous and R. D. Wolf, “Quantum fingerprinting,” Physical Review Letters, vol. 87, no. 16, pp.167902, 2001. [Google Scholar]

25. A. W. Harrow, A. Hassidim and S. Lloyd, “Quantum algorithm for linear systems of equations,” Physical Review Letters, vol. 103, no. 15, pp.150502, 2009. [Google Scholar]

26. Z. Li, X. Liu, N. Xu and J. Du, “Experimental realization of a quantum support vector machine,” Physical Review Letters, vol. 114, no. 4, pp. 140504, 2015. [Google Scholar]

27. C. Ding, T. Y. Bao and H. L. Huang, “Quantum-inspired support vector machine,” arXiv preprint arXiv, 1906.08902, 2019. [Google Scholar]

28. J. Lin, D. B. Zhang, S. Zhang, T. Li, X. Wang et al., “Quantum-enhanced least-square support vector machine: Simplified quantum algorithm and sparse solutions,” Physics Letters A, vol. 384, no. 25, pp. 126590, 2020. [Google Scholar]

29. H. J. Zimmermann, “Fuzzy set theory,” Wiley Interdisciplinary Reviews: Computational Statistics, vol. 2, no. 3, pp. 317–332, 2010. [Google Scholar]

Cite This Article

Copyright © 2023 The Author(s). Published by Tech Science Press.

Copyright © 2023 The Author(s). Published by Tech Science Press.This work is licensed under a Creative Commons Attribution 4.0 International License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Submit a Paper

Submit a Paper Propose a Special lssue

Propose a Special lssue View Full Text

View Full Text Download PDF

Download PDF Downloads

Downloads

Citation Tools

Citation Tools