Open Access

ARTICLE

Fusing Satellite Images Using ABC Optimizing Algorithm

1 University of Information and Communication Technology, Thai Nguyen University, Thai Nguyen, 240000, Vietnam

2 Faculty of Computer Science and Engineering, Thuyloi University, 175 Tay Son, Dong Da, Hanoi, 010000, Vietnam

* Corresponding Author: Nguyen Tu Trung. Email:

Computer Systems Science and Engineering 2023, 46(3), 3901-3909. https://doi.org/10.32604/csse.2023.032311

Received 13 May 2022; Accepted 27 June 2022; Issue published 03 April 2023

Abstract

Fusing satellite (remote sensing) images is an active topic in satellite image processing. The fused image is obtained by combining information from multispectral and panchromatic images for sharpening. In this paper, a new algorithm based on the artificial bee colony (ABC) algorithm with peak signal-to-noise ratio (PSNR) index optimization is proposed for fusing remote sensing images. First, the wavelet transform is used to split the input images into components in the high and low frequency domains. Then, two fusing rules are used to obtain the fused image. The first rule is “the high frequency components are fused by using the average values”. The second rule is “the low frequency components are fused by using the combining rule with parameter”. The parameter for fusing the low frequency components is determined by the ABC algorithm, which optimizes the PSNR index. The experimental results on different input images show that the proposed algorithm performs better than some recent methods.

Several earth observation satellites have dual-resolution sensors. These satellites provide multispectral images of low spatial resolution and panchromatic images of high spatial resolution [1,2]. Image fusion methods are therefore useful for producing a fused image with both high spatial and high spectral resolution.

Pan sharpening fuses a higher-resolution panchromatic image with a lower-resolution multiband raster image [3]. The result is a multiband raster image that has the resolution of the panchromatic image wherever the two raster images fully overlap. Image providers supply higher-resolution panchromatic images and lower-resolution multiband images of the same scene. Pan sharpening increases the spatial resolution and provides a multiband image with better visualization.

Some image fusion techniques based on the Brovey transform (BT), intensity-hue-saturation (IHS) or principal component analysis (PCA) provide multispectral images with visually high resolution, but they ignore the requirement of high-quality fusion of spectral information [1,4]. Techniques based on the wavelet transform have also been proposed [5–7]. High-quality fusion of spectral information is very important for most remote sensing applications that rely on spectral information [8].

There are two main approaches to image fusion: the spatial domain approach and the transform domain approach [9]. In the spatial domain approach, the fused image is assembled from regions or pixels of the input images without any transformation [10]. This approach includes region-based [9] and pixel-based [11] methods. Transform domain techniques fuse the corresponding transform coefficients and then apply the inverse transform to produce the fused image. One popular family of fusion techniques uses multi-scale transforms. Various multi-scale transforms have been used, based on the discrete wavelet transform [12], the complex wavelet transform [13], the contourlet transform [14–16] or sparse representation [17]. Mishra et al. [18] presented an image fusion method based on the discrete wavelet transform (DWT). In [19] and [20], the authors presented a method of fusing images using principal component analysis (PCA).

In [21], an image fusion method based on PCA and a high-pass filter was proposed. In [22], a method based on spatial weighted neighbor embedding was presented for the fusion of panchromatic and multispectral images. A multi-sensor image fusion technique for pan sharpening in remote sensing was introduced by Ehlers et al. [23]. In [24], Wu Shulei et al. presented smart-city-oriented satellite image fusion methods based on spatial transformation and convolution sampling.

Fusion methods that use the wavelet transform usually apply the average selection rule to the low frequency components and the max selection rule to the high frequency components. This makes the resulting image considerably grayer than the input image, because the grayscale values of the frequency components of the input images differ greatly. In addition, using the max rule, or a similar maximum-based rule, for the high frequency components can cause horizontal and vertical streaks to appear, even forming grid cells that distort the image. To overcome these limitations, a novel algorithm for fusing satellite images is proposed in this paper. This algorithm uses parameter optimization to choose the most suitable parameters for image merging.

The main contributions of this article include:

• A parameter optimization method, based on the ABC algorithm, for combining the low frequency components.

• A novel algorithm for fusing panchromatic and multispectral satellite images based on the wavelet transform and combining rules whose parameters are found by the optimization method.

The rest of the article is structured as follows. Some related works are presented in Section 2. The proposed algorithm for image fusion is presented in Section 3. Section 4 presents experiments with our algorithm and other related algorithms on selected images. Conclusions and future research directions are given in Section 5.

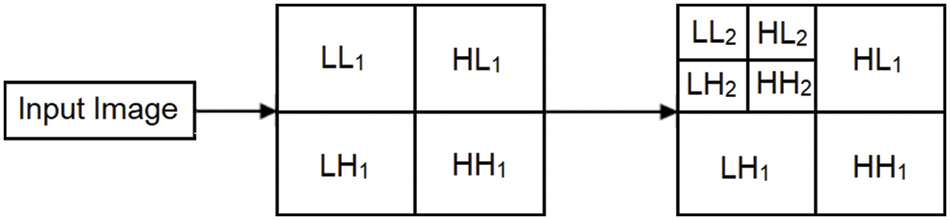

The wavelet transform (WT) is a mathematical tool [25] used to represent images at multiple resolutions. The transform yields wavelet coefficients; for remote sensing images, they can be obtained with the discrete wavelet transform (DWT). The most important content lies in the low frequencies: it keeps most of the features of the input image, while its size is reduced by a factor of four. Applying the low-pass filter in both directions gives the approximation image (LL).

In our research, DWT is mainly used to decompose the input images into separate parts according to frequency. The input of DWT is a one-band image, for example a gray image. For a multi-band image, DWT is applied to each band of the input image.

Each time DWT is performed, the LL image of the previous stage is four times larger than the LL image of the current stage. Therefore, if the input image is decomposed over 3 levels, the input image is 64 times larger than the final approximation. The wavelet transform of an image is illustrated in Fig. 1.

Figure 1: Image decomposition using DWT
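The size relation described above can be checked with a small sketch. It assumes the Haar wavelet and a plain list-of-lists image; the wavelet family, the function names `haar_dwt2` and `decompose`, and the LH/HL naming are illustrative choices, not details taken from the paper.

```python
def haar_dwt2(image):
    """One level of a 2-D Haar DWT on a list-of-lists image with even side lengths.

    Returns (LL, LH, HL, HH); each sub-band has half the rows and half the columns.
    The LH/HL naming convention varies between authors and is an assumption here.
    """
    LL, LH, HL, HH = [], [], [], []
    for r in range(0, len(image), 2):
        ll_row, lh_row, hl_row, hh_row = [], [], [], []
        for c in range(0, len(image[0]), 2):
            a, b = image[r][c], image[r][c + 1]
            d, e = image[r + 1][c], image[r + 1][c + 1]
            ll_row.append((a + b + d + e) / 2.0)  # low-pass along rows and columns
            lh_row.append((a + b - d - e) / 2.0)  # difference between the two rows
            hl_row.append((a - b + d - e) / 2.0)  # difference between the two columns
            hh_row.append((a - b - d + e) / 2.0)  # diagonal difference
        LL.append(ll_row)
        LH.append(lh_row)
        HL.append(hl_row)
        HH.append(hh_row)
    return LL, LH, HL, HH


def decompose(image, levels):
    """Apply the transform repeatedly to the LL band and return the final LL."""
    approx = image
    for _ in range(levels):
        approx, _lh, _hl, _hh = haar_dwt2(approx)
    return approx


image = [[float(8 * r + c) for c in range(8)] for r in range(8)]  # toy 8x8 band
ll3 = decompose(image, 3)
# Ratio of pixel counts between the input and the level-3 approximation.
size_ratio = (len(image) * len(image[0])) // (len(ll3) * len(ll3[0]))
```

For the 8×8 toy band, three levels leave a 1×1 approximation, so `size_ratio` reflects the factor of 4 per level compounded three times.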

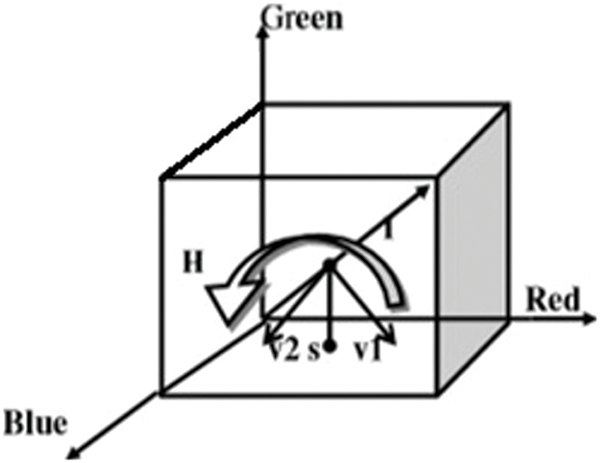

The RGB color space is well suited to displaying images on electronic screens. However, it does not correspond well to how the human eye perceives color. Therefore, to better match the human visual system, the IHS color system (Fig. 2) is introduced:

• I denotes intensity, the characteristic property of luminous intensity.

• H denotes hue, the property related to the dominant wavelength in a mixture of light wavelengths. It is perceived as the characteristic tint of the dominant color.

• S denotes saturation, which characterizes relative purity. Saturation depends on the width of the light spectrum and represents the amount of white mixed with the chroma.

The conversions from RGB to IHS and back are presented in [26].

Figure 2: Color spaces IHS and RGB

The ABC algorithm is an optimization algorithm proposed by Karaboga in 2005. It simulates the foraging behavior of honey bees [27] and belongs to the family of swarm intelligence algorithms. It has been applied successfully to various practical problems.

A swarm of honey bees accomplishes tasks through social cooperation. Accordingly, the ABC algorithm has three types of bees: employed bees, onlooker bees and scout bees. The employed bees find food sources according to their memory and then share this information with the onlooker bees. Next, the onlooker bees select good food sources from those found by the employed bees. Food sources with higher fitness (quality) have a larger chance of being selected by the onlooker bees. The scout bees are derived from a few employed bees and have the task of finding new food sources.

The employed bees make up the first half of the bee swarm, and the onlooker bees make up the second half. The number of solutions in the swarm equals the number of employed bees, which is also the number of onlooker bees. The initial population of food sources (solutions) is generated randomly.

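Let $X_i = (x_{i1}, x_{i2}, \ldots, x_{iD})$ denote the $i$-th solution (food source) in the swarm, where $D$ is the dimension of the problem. In the standard ABC formulation [27], each employed bee generates a new nomination (candidate) solution $V_i$ in the neighborhood of $X_i$:

$$v_{ij} = x_{ij} + \phi_{ij}\,(x_{ij} - x_{kj})$$

where $X_k$ is a randomly selected solution with $k \neq i$, $j$ is a randomly chosen dimension index and $\phi_{ij}$ is a random number in $[-1, 1]$. If $V_i$ has a better fitness than $X_i$, it replaces $X_i$. An onlooker bee selects the food source $X_i$ with probability

$$p_i = \frac{fit_i}{\sum_{n=1}^{SN} fit_n}$$

where $fit_i$ is the fitness value of the $i$-th solution and $SN$ is the number of food sources. A food source that cannot be improved within a predefined number of trials is abandoned, and a scout bee replaces it with a random solution

$$x_{ij} = lb_j + rand(0,1)\,(ub_j - lb_j)$$

where $lb_j$ and $ub_j$ are the lower and upper bounds of the $j$-th dimension.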
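The three bee phases described in this section can be sketched as follows. This is a minimal illustration of the standard ABC scheme, not the authors' implementation; the function name `abc_maximize` and the parameters `n_sources`, `limit`, `max_iter` and `seed` are hypothetical, and the fitness function is assumed to return positive values so that fitness-proportional selection is well defined.

```python
import random


def abc_maximize(fitness, lower, upper, n_sources=10, limit=20, max_iter=100, seed=0):
    """Minimal ABC sketch: maximise a positive `fitness` over the box [lower, upper]."""
    rng = random.Random(seed)
    dim = len(lower)

    def random_source():
        # Random food source inside the bounds (also used by scout bees).
        return [lower[j] + rng.random() * (upper[j] - lower[j]) for j in range(dim)]

    def neighbour(i):
        # Standard ABC move: v_ij = x_ij + phi * (x_ij - x_kj), one random j, k != i.
        k = rng.choice([s for s in range(n_sources) if s != i])
        j = rng.randrange(dim)
        candidate = list(sources[i])
        phi = rng.uniform(-1.0, 1.0)
        candidate[j] += phi * (sources[i][j] - sources[k][j])
        candidate[j] = min(max(candidate[j], lower[j]), upper[j])  # stay in bounds
        return candidate

    def try_improve(i):
        # Greedy selection: keep the candidate only if it is fitter.
        candidate = neighbour(i)
        value = fitness(candidate)
        if value > values[i]:
            sources[i], values[i], trials[i] = candidate, value, 0
        else:
            trials[i] += 1

    sources = [random_source() for _ in range(n_sources)]
    values = [fitness(s) for s in sources]
    trials = [0] * n_sources
    best_value = max(values)
    best = list(sources[values.index(best_value)])

    for _ in range(max_iter):
        for i in range(n_sources):                      # employed bee phase
            try_improve(i)
        for _ in range(n_sources):                      # onlooker bee phase
            i = rng.choices(range(n_sources), weights=values)[0]
            try_improve(i)
        for i in range(n_sources):                      # scout bee phase
            if trials[i] > limit:
                sources[i] = random_source()
                values[i] = fitness(sources[i])
                trials[i] = 0
        top = max(values)
        if top > best_value:                            # remember the best source
            best_value, best = top, list(sources[values.index(top)])
    return best, best_value


# Toy usage: a one-dimensional objective with its maximum at x = 0.3.
best, best_value = abc_maximize(lambda x: 1.0 / (1.0 + (x[0] - 0.3) ** 2), [0.0], [1.0])
```

In an image fusion setting, the fitness would be a quality index of the fused image (for example PSNR) evaluated for a candidate fusion parameter; the toy objective here only stands in for that evaluation.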

3.1 The New Satellite Image Fusion Algorithm

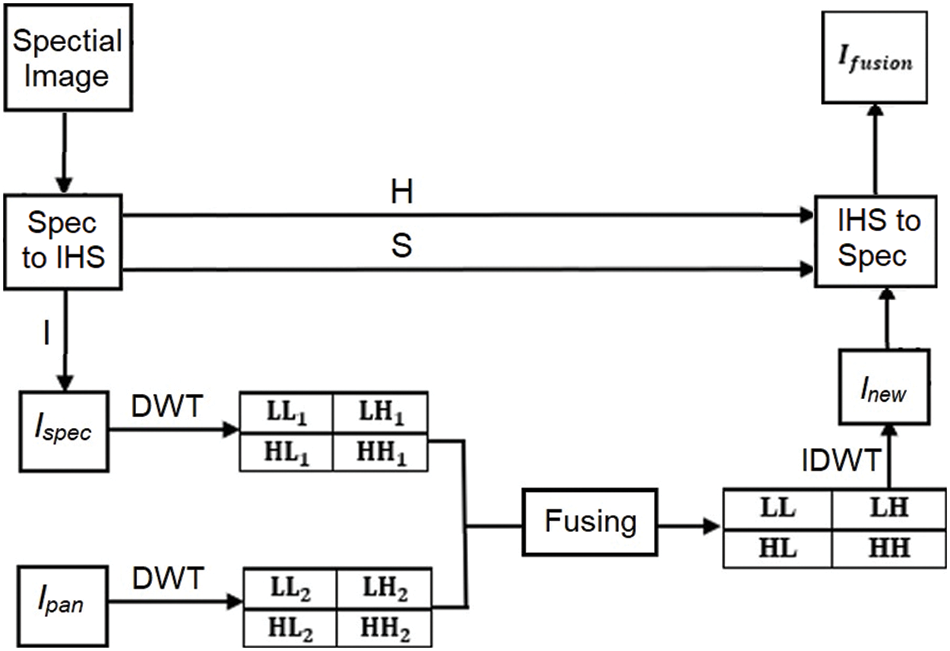

A new algorithm for fusing remote sensing images based on ABC optimization (abbreviated as FRSIAO) is proposed in this section. The general framework of the FRSIAO algorithm is given by the flowchart in Fig. 3.

Figure 3: The flowchart of the satellite image fusing algorithm (FRSIAO)

As shown in the diagram above, the algorithm includes five main steps:

• Step 1: Convert the image img1 from the RGB color space to the IHS color space to get Ispec, Hspec and Sspec. The I channel, which is processed in the next steps, is calculated by the formula:

• Step 2: Apply DWT to Ispec and Ipan (the PAN image is a gray image) to get LL1, LH1, HL1, HH1 and LL2, LH2, HL2, HH2.

• Step 3: Fuse the components (LL1, LH1, HL1, HH1 and LL2, LH2, HL2, HH2) to get LL, LH, HL and HH as follows:

- Fuse the high frequency components HH1, HL1, LH1 and HH2, HL2, LH2 to get HH, HL and LH using the average rule:

$$HH = \frac{HH_1 + HH_2}{2}, \quad HL = \frac{HL_1 + HL_2}{2}, \quad LH = \frac{LH_1 + LH_2}{2}$$

- Fusing the low frequency components LL1 and LL2 to get LL using the following rule:

where,

• Step 4: Transform the components LL, LH, HL and HH into Inew using the inverse DWT (IDWT).

• Step 5: Convert the components Inew, Hspec and Sspec from the IHS color space to the RGB color space to obtain the output fused image.
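The two fusing rules of Step 3 can be sketched on toy sub-bands. The averaging rule follows the description in the abstract. The low frequency rule is written here as a convex combination with a weight `alpha` in [0, 1]; that form, the name `alpha` and the function names are assumptions made for illustration, and the rule used by FRSIAO may differ.

```python
def average_fuse(band1, band2):
    """High frequency rule: element-wise average of two sub-bands."""
    return [[(a + b) / 2.0 for a, b in zip(row1, row2)]
            for row1, row2 in zip(band1, band2)]


def weighted_fuse(ll1, ll2, alpha):
    """Low frequency rule (assumed form): alpha * LL1 + (1 - alpha) * LL2."""
    return [[alpha * a + (1.0 - alpha) * b for a, b in zip(row1, row2)]
            for row1, row2 in zip(ll1, ll2)]


# Toy sub-bands standing in for the DWT outputs of Ispec and Ipan.
hh = average_fuse([[2.0, 4.0]], [[4.0, 8.0]])   # [[3.0, 6.0]]
ll = weighted_fuse([[10.0]], [[20.0]], 0.25)    # [[17.5]]
```

Under this assumed form, `alpha` is the single parameter an optimizer such as ABC would tune, with the quality index of the resulting fused image as its fitness.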

3.2 Some Advantages of the Proposed Algorithm

The proposed algorithm has some advantages as follows:

i) Combining the low frequency components with an adaptive fusing parameter obtained by using the ABC algorithm.

ii) Proposing an algorithm, named FRSIAO, for fusing panchromatic and multispectral satellite images.

The input data were downloaded from https://www.l3harrisgeospatial.com and https://opticks.org. On this dataset, the proposed algorithm is compared with other available methods: wavelet-based image fusion (WaveletIF) [18], PCA-based image fusion (PCAIF) [19,20] and CSST [24].

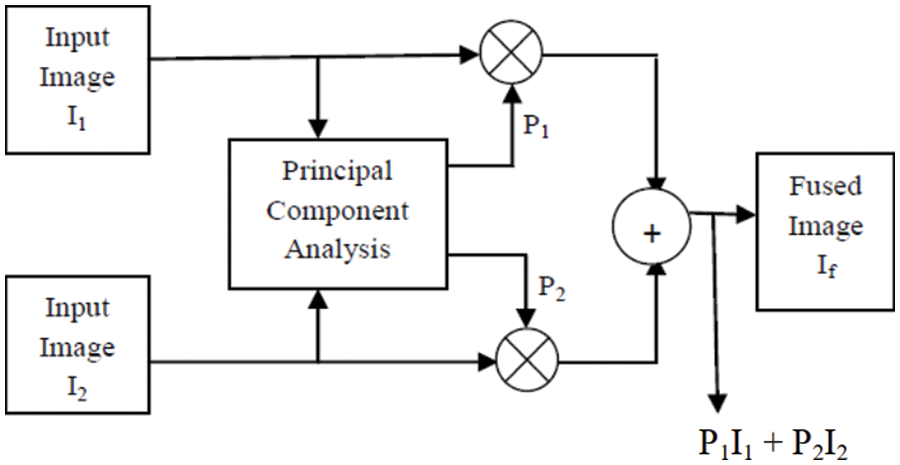

PCAIF is a method of fusing images using PCA. The diagram of PCAIF is shown in Fig. 4.

Figure 4: Image fusion by PCA

The eigenvalues and eigenvectors are calculated. The normalized components P1 and P2 are calculated from the eigenvector. The fused image is defined by:
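A minimal sketch of a PCA-based weighting is given here, assuming the common scheme in which P1 and P2 are the components of the principal eigenvector of the 2×2 covariance matrix, normalized to sum to one, and the fused image is the weighted sum P1·I1 + P2·I2. The function names are hypothetical, and the sketch assumes the two input images are positively correlated.

```python
import math


def pca_weights(img1, img2):
    """Return (P1, P2) from the principal eigenvector of the 2x2 covariance matrix."""
    x = [v for row in img1 for v in row]
    y = [v for row in img2 for v in row]
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    a = sum((v - mx) ** 2 for v in x) / n                    # var(img1)
    c = sum((v - my) ** 2 for v in y) / n                    # var(img2)
    b = sum((u - mx) * (v - my) for u, v in zip(x, y)) / n   # cov(img1, img2)
    # Largest eigenvalue of the symmetric matrix [[a, b], [b, c]].
    lam = (a + c) / 2.0 + math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    if b != 0.0:
        v1, v2 = b, lam - a                                  # matching eigenvector
    else:
        v1, v2 = (1.0, 0.0) if a >= c else (0.0, 1.0)
    total = v1 + v2   # assumes b >= 0, so both components are non-negative
    return v1 / total, v2 / total


def pca_fuse(img1, img2):
    """Assumed fusion rule: P1 * img1 + P2 * img2, element-wise."""
    p1, p2 = pca_weights(img1, img2)
    return [[p1 * u + p2 * v for u, v in zip(row1, row2)]
            for row1, row2 in zip(img1, img2)]


img_a = [[1.0, 2.0], [3.0, 4.0]]
img_b = [[2.0, 4.0], [6.0, 8.0]]        # same pattern with twice the contrast
p1, p2 = pca_weights(img_a, img_b)      # the higher-variance image gets more weight
```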

The measures PSNR, FMI (Feature Mutual Information) [28] and SSIM (Structural Similarity Index) are used to assess the image quality.
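As an illustration of the first measure, PSNR can be computed from the mean squared error (MSE) between a reference image and a test image as 10·log10(MAX² / MSE). The sketch assumes 8-bit images (MAX = 255) stored as lists of lists; which image serves as the reference in the experiments is not specified here, so the `reference` argument is a placeholder.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized gray images."""
    squared_error = 0.0
    count = 0
    for ref_row, test_row in zip(reference, test):
        for ref_pixel, test_pixel in zip(ref_row, test_row):
            squared_error += (ref_pixel - test_pixel) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0.0:
        return math.inf          # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [[10.0, 20.0], [30.0, 40.0]]
shifted = [[11.0, 21.0], [31.0, 41.0]]   # every pixel differs by 1, so MSE = 1
value = psnr(reference, shifted)         # 10 * log10(255^2), about 48.13 dB
```

A higher PSNR means the test image is closer to the reference, which is why it can serve as the fitness to be maximized when tuning a fusion parameter.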

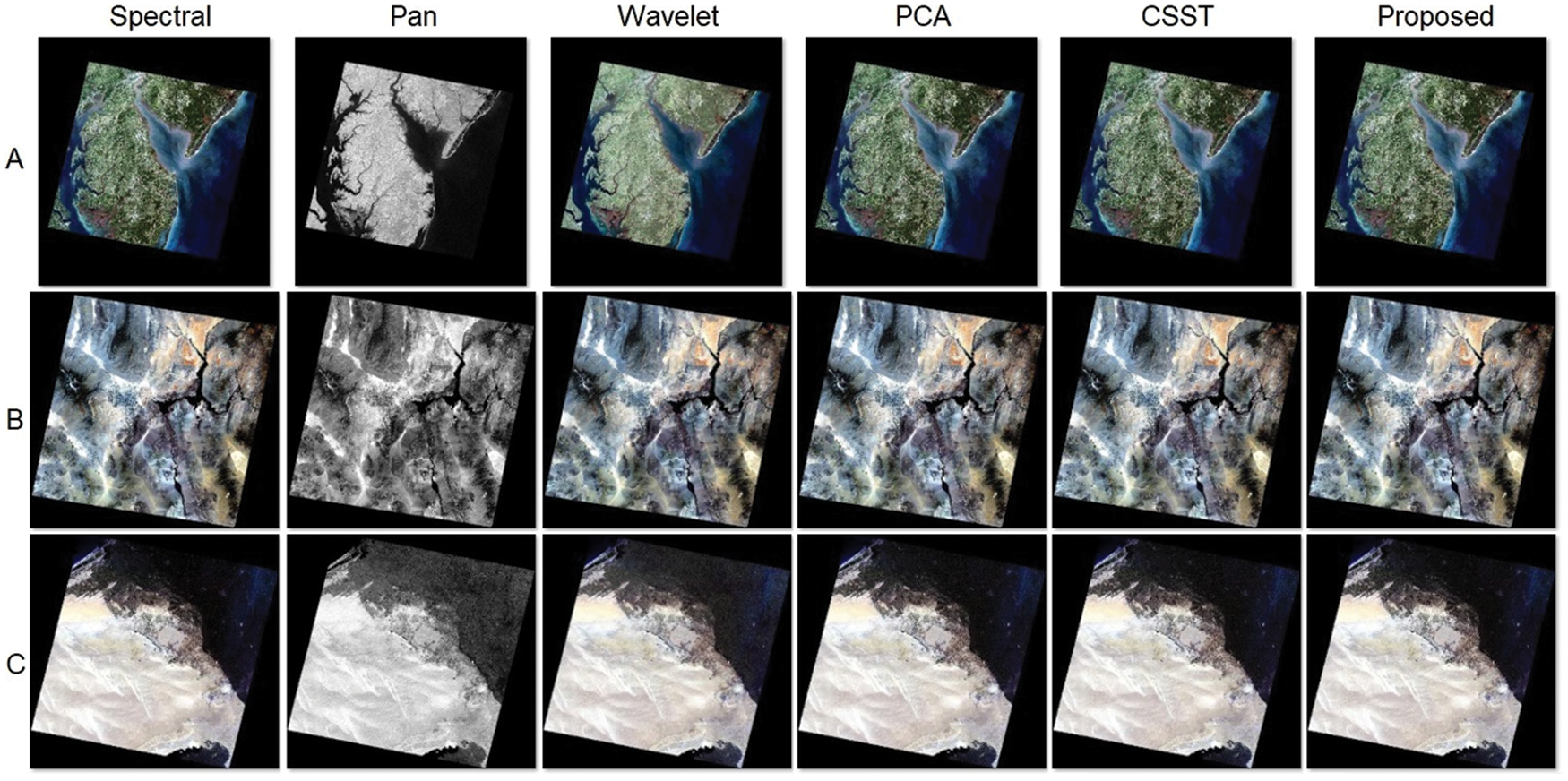

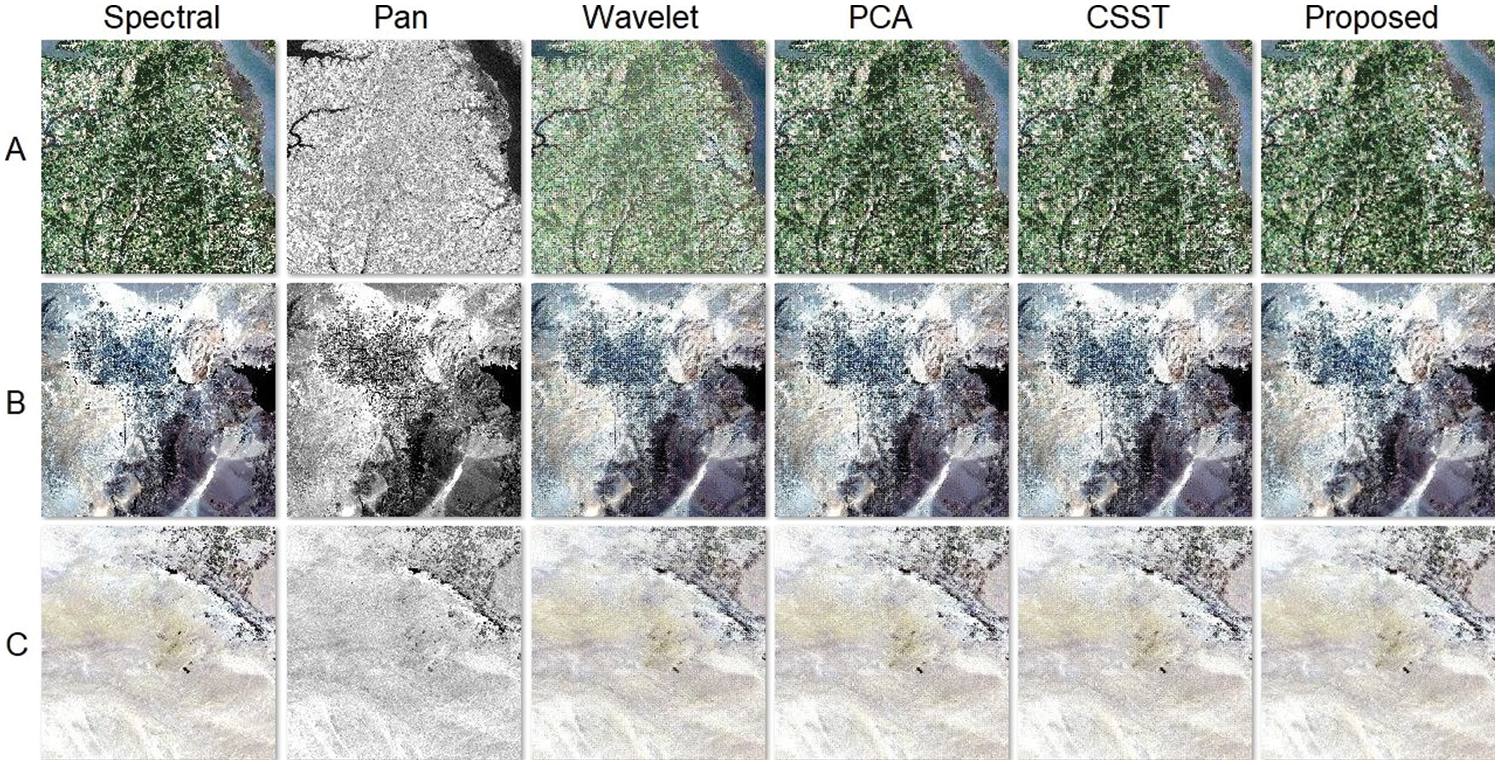

Fig. 5 illustrates the experiment with three examples (A, B and C). It shows the input images and the output images obtained by applying WaveletIF, PCAIF, CSST and our algorithm, respectively. Fig. 6 shows a region of the experiment images in Fig. 5.

Figure 5: Input and output images of the fusion methods

Figure 6: A part of the input and output images of the fusion methods

From a visual inspection of the output images of the four methods in Fig. 5, some characteristics of the results can be summarized as follows:

• The image fused by the Wavelet method is greatly modified because the average rule is used for the LL components of the spectral and PAN images.

• All three methods, WaveletIF, PCAIF and CSST, create small grids on the resulting images due to the use of the max rule.

• The fused image obtained by the proposed method is neither distorted nor marked by grid cells, unlike the images produced by the other selected methods.

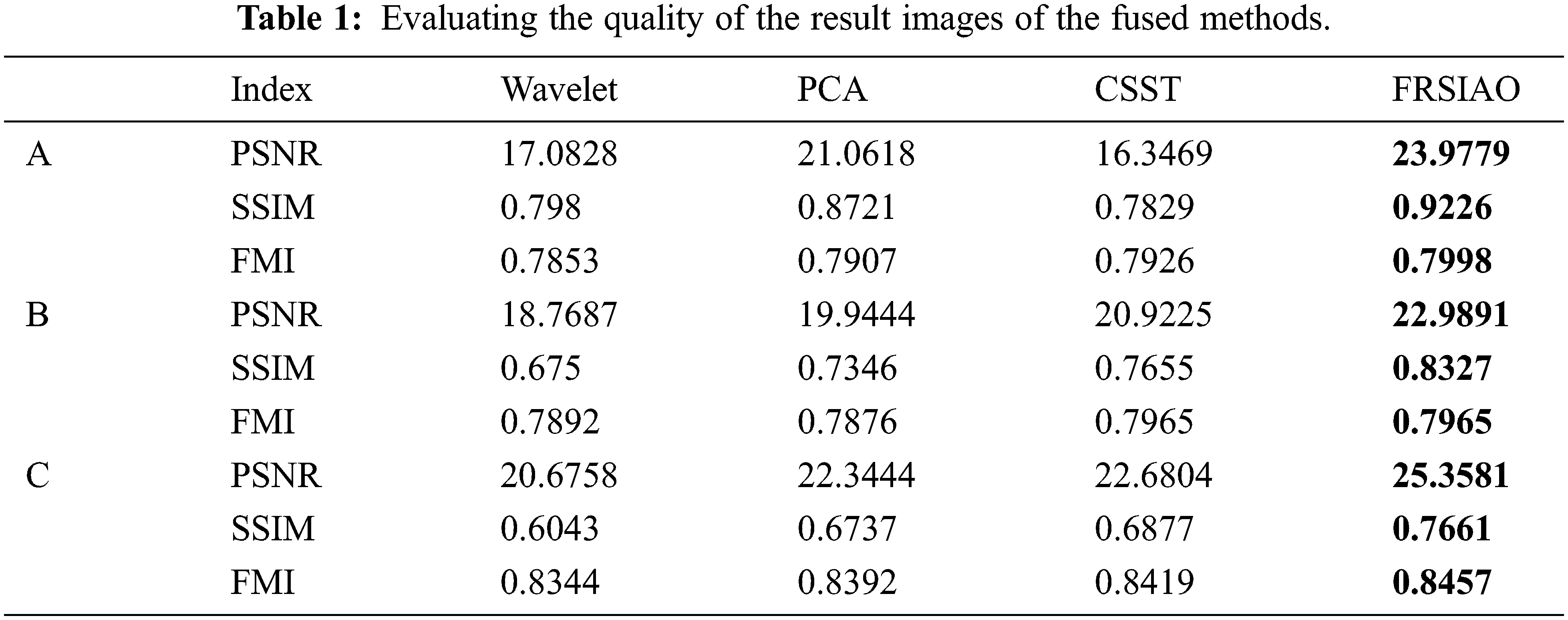

For the quality evaluation, the values of measures of the output images, generated by the fusion methods, are calculated and given in Table 1 below.

The results in Table 1 show that:

- In experiment A, the CSST method gave the smallest PSNR and SSIM indices, but its FMI index was larger than those of the WaveletIF and PCAIF methods. Meanwhile, the proposed method gives the largest values for all 3 indices.

- In experiments B and C, the WaveletIF method gives the smallest PSNR and SSIM indices. For the FMI index, the PCAIF method gives the smallest value. The CSST method gives values for all 3 indices that are larger than those of the WaveletIF and PCAIF methods. Meanwhile, the proposed method gives the largest values for all 3 indices.

These results indicate that, on the tested images, FRSIAO produces fused images of better quality than the compared methods.

5 Conclusions and Future Works

This paper introduces a new algorithm for fusing remote sensing images based on the ABC optimization algorithm (denoted as FRSIAO). The proposed method has several advantages, such as adaptability in combining the high frequency components and high performance in combining the low frequency components based on the weighted parameter obtained by the ABC algorithm. In addition, the proposed method overcomes the limitations of wavelet transform based approaches. The experimental results show that the proposed method performs better than the other related methods. In future work, we intend to integrate parameter optimization into image processing and to apply the improved method to other problems. Further, FRSIAO will be compared with other optimization techniques.

Funding Statement: Our work is partially supported by Thuyloi University.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Z. Wang, D. Ziou, C. Armenakis, D. Li and Q. Li, “A comparative analysis of image fusion methods,” IEEE Transactions on Geoscience and Remote Sensing, vol. 43, no. 6, pp. 1391–1402, 2005.

2. Y. Yang, C. Han, X. Kang and D. Han, “An overview on pixel-level image fusion in remote sensing,” in Proc. ICAL, Jinan, China, pp. 2339–2344, 2007.

3. https://pro.arcgis.com/en/pro-app/latest/help/analysis/raster-functions/fundamentals-of-pan-sharpening-pro.htm

4. X. Otazu, M. González-Audícana, O. Fors and J. Núñez, “Introduction of sensor spectral response into image fusion methods. Application to wavelet based methods,” IEEE Transactions on Geoscience and Remote Sensing, vol. 10, no. 10, pp. 2376–2385, 2005.

5. S. T. Li, J. T. Kwok and Y. N. Wang, “Using the discrete wavelet frame transform to merge Landsat TM and SPOT panchromatic images,” Information Fusion, vol. 3, no. 1, pp. 17–23, 2002.

6. W. Shi, C. Q. Zhu, Y. Tian and J. Nichol, “Wavelet-based image fusion and quality assessment,” International Journal of Applied Earth Observation & Geoinformation, vol. 6, no. 3–4, pp. 241–251, 2005.

7. S. Wu, Z. Zhao and H. Chen, “An improved algorithm of remote sensing image fusion based on wavelet transform,” Journal of Computational Information Systems, vol. 8, pp. 8621–8628, 2012.

8. J. G. Liu, “Smoothing filter-based intensity modulation: A spectral preserve image fusion technique for improving spatial details,” International Journal of Remote Sensing, vol. 21, no. 18, pp. 3461–3472, 2000.

9. S. Li, X. Kang, L. Fang, J. Hu and H. Yin, “Pixel-level image fusion: A survey of the state of the art,” Information Fusion, vol. 33, no. 6583, pp. 100–112, 2017.

10. H. Li, H. Qiu, Z. Yu and B. Li, “Multifocus image fusion via fixed window technique of multiscale images and non-local means filtering,” Signal Processing, vol. 138, no. 3, pp. 71–85, 2017.

11. M. Zribi, “Non-parametric and region-based image fusion with Bootstrap sampling,” Information Fusion, vol. 11, no. 2, pp. 85–94, 2010.

12. Y. Yang, “A novel DWT based multi-focus image fusion method,” Procedia Engineering, vol. 24, no. 1, pp. 177–181, 2011.

13. B. Yu, B. Jia, L. Ding and Z. Cai, “Hybrid dual-tree complex wavelet transform and support vector machine for digital multi-focus image fusion,” Neurocomputing, vol. 182, no. 11, pp. 1–9, 2016.

14. S. Yang, M. Wang, L. Jiao, R. Wu and Z. Wang, “Image fusion based on a new contourlet packet,” Information Fusion, vol. 11, no. 2, pp. 78–84, 2010.

15. F. Nencini, A. Garzelli, S. Baronti and L. Alparone, “Remote sensing image fusion using the curvelet transform,” Information Fusion, vol. 8, no. 2, pp. 143–156, 2007.

16. H. Li, H. Qiu, Z. Yu and Y. Zhang, “Infrared and visible image fusion scheme based on NSCT and low-level visual features,” Infrared Physics and Technology, vol. 76, no. 8, pp. 174–184, 2016.

17. H. Li, H. Qiu, Z. Yu and Y. Zhang, “Multifocus image fusion and restoration with sparse representation,” IEEE Transactions on Instrumentation and Measurement, vol. 59, no. 4, pp. 884–892, 2010.

18. H. O. S. Mishra and S. Bhatnagar, “MRI and CT image fusion based on wavelet transform,” International Journal of Information and Computation Technology, vol. 4, no. 1, pp. 47–52, 2014.

19. S. Mane and S. Sawant, “Image fusion of CT/MRI using DWT, PCA methods and analog DSP processor,” Int. Journal of Engineering Research and Applications, vol. 4, no. 2, pp. 557–563, 2014.

20. S. Deb, S. Chakraborty and T. Bhattacharjee, “Application of image fusion for enhancing the quality of an image,” Computer Science & Information Technology (CS & IT), vol. 6, pp. 215–221, 2012.

21. R. M. Mohamed, A. H. Nasr, S. F. A. Osama and S. El-Rabaie, “Image fusion based on principal component analysis and high-pass filter,” in Proc. ICComputerES, Cairo, Egypt, pp. 63–70, 2010.

22. Z. Kai, Z. Feng and Y. Shuyuan, “Fusion of multispectral and panchromatic images via spatial weighted neighbor embedding,” Remote Sensing, vol. 11, no. 5, pp. 2033–2055, 2019.

23. E. Manfred, K. Sascha, A. Par and R. Pablo, “Multisensor image fusion for pansharpening in remote sensing,” International Journal of Image and Data Fusion, vol. 1, no. 1, pp. 25–45, 2010.

24. W. Shulei and C. Huandong, “Smart city oriented remote sensing image fusion methods based on convolution sampling and spatial transformation,” Computer Communications, vol. 157, pp. 444–450, 2020.

25. S. G. Mallat, “A theory for multi resolution signal decomposition, the wavelet representation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 11, no. 7, pp. 674–693, 1989.

26. R. Haydn, G. W. Dalke and J. Henkel, “Application of the IHS color transform to the processing of multisensor data and image enhancement,” in Proc. ISRSASL, Cairo, pp. 599–616, 1982.

27. D. Karaboga, “An idea based on honey bee swarm for numerical optimization,” Technical Report-TR06. Technical Report, Erciyes University, 2005.

28. M. B. A. Haghighat, A. Aghagolzadeh and H. Seyedarabia, “A non-reference image fusion metric based on mutual information of image features,” Computers & Electrical Engineering, vol. 37, no. 5, pp. 744–756, 2011.

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.