Open Access

ARTICLE

Ash Detection of Coal Slime Flotation Tailings Based on Chromatographic Filter Paper Sampling and Multi-Scale Residual Network

1 School of Mechatronic Engineering and Automation, Foshan University, Foshan, 528000, China

2 China Coal Technology Engineering Group Tangshan Research Institute, Tangshan, 063000, China

* Corresponding Author: Zhengjun Zhu. Email:

Intelligent Automation & Soft Computing 2023, 38(3), 259-273. https://doi.org/10.32604/iasc.2023.041860

Received 09 May 2023; Accepted 09 November 2023; Issue published 27 February 2024

Abstract

The detection of ash content in coal slime flotation tailings using deep learning can be hindered by various factors such as foam, impurities, and changing lighting conditions that disrupt the collection of tailings images. To address this challenge, we present a method for ash content detection in coal slime flotation tailings. This method utilizes chromatographic filter paper sampling and a multi-scale residual network, which we refer to as MRCN. Initially, tailings are sampled using chromatographic filter paper to obtain static tailings images, effectively isolating interference factors at the flotation site. Subsequently, the MRCN, consisting of a multi-scale residual network, is employed to extract image features and compute ash content. Within the MRCN structure, tailings images undergo convolution operations through two parallel branches that utilize convolution kernels of different sizes, enabling the extraction of image features at various scales and capturing a more comprehensive representation of the ash content information. Furthermore, a channel attention mechanism is integrated to enhance the performance of the model. The combination of the multi-scale residual structure and the channel attention mechanism within MRCN results in robust capabilities for image feature extraction and ash content detection. Comparative experiments demonstrate that this proposed approach, based on chromatographic filter paper sampling and the multi-scale residual network, exhibits significantly superior performance in the detection of ash content in coal slime flotation tailings.

Flotation is a critical process in coal production that plays a significant role in separating coal from impurities. The ash content of the final product is a crucial indicator of its quality, necessitating real-time adjustments during production. Visual detection methods, which combine machine vision and deep learning, are increasingly used for assessing the ash content in coal slime flotation tailings. Ash content detection based on visual inspection requires acquiring tailings images. However, during the flotation process, the constantly agitated and turbulent liquid surface in the flotation cell can lead to irregular foam and impurities on the coal slime water surface, affecting image sampling. Additionally, varying lighting conditions at industrial sites can disrupt the collection of tailings images, thereby impacting the detection of ash content.

A coal slime flotation tailings ash content detection method is proposed based on chromatographic filter paper sampling and a multi-scale residual network. Initially, chromatographic filter paper is used to sample the tailings, enabling the acquisition of static tailings images from the turbulent coal slime water. Subsequently, a multi-scale residual network is constructed to extract features from the tailings images. The use of residual blocks at different scales allows for obtaining more comprehensive ash content information for the detection task. To enhance the network’s feature extraction capabilities and improve ash content detection, a channel attention mechanism is incorporated into the residual structure, resulting in the network model named MRCN. A control group, denoted as MRCN-noca, is created by removing the channel attention mechanism. Additionally, several classic deep learning models are selected for comparative experiments alongside MRCN. The results indicate that MRCN exhibits the fastest convergence during training and achieves the highest accuracy in ash content detection during testing. This suggests that the proposed method, based on chromatographic filter paper sampling and the multi-scale residual network, can effectively handle the challenges posed by bubbles, impurities, and lighting variations in the coal slime flotation field, and outperforms other models in tailings ash content detection.

The coal slime flotation tailings ash content detection method, based on chromatographic filter paper sampling and multi-scale residual network, features three noteworthy innovations:

1. Mitigation of interference from the flotation site through the application of chromatographic filter paper for tailings sampling. This method transforms the observation of tailings samples, eliminating adverse factors such as foam, impurities, and lighting conditions. Consequently, the extraction of ash content features from images becomes more convenient.

2. Utilization of multi-scale residual networks to extract image ash content information. Features in tailings images carry different information at different scales, so extracting them with multi-scale residuals enables a more comprehensive analysis of tailings image characteristics than a single-scale residual approach, which facilitates ash content computation.

3. Integration of a channel attention mechanism to optimize the model. This mechanism assigns different weights to the importance of various feature outputs, uncovering key features beneficial for ash content detection. This augmentation significantly improves the accuracy and robustness of the model.

In order to ensure accurate guidance for coal preparation production, ash detection needs to be fast, accurate, and timely. However, conventional coal quality inspection in China is predominantly manual, involving on-site management, high labor intensity, and significant human intervention factors. Furthermore, the inspection results are substantially delayed compared to production. For instance, the fast ash experiment involving burning tailings typically takes over an hour from coal sample collection to test results, resulting in time-consuming and environmentally unsustainable processes incompatible with modern industrial production and management needs.

In addition to manual detection, there has been development of a flotation ash detection technology based on ionizing radiation. Presently, X-rays or γ-rays are primarily utilized for online detection of ash content in flotation feed, flotation clean coal, and flotation tailings [1,2]. However, the use of ionizing radiation introduces new safety concerns to coal preparation plant production, and the associated detection equipment is relatively costly. As a result, its implementation in coal preparation plants has not been widespread.

Traditional ash measurement methods have their limitations, and the visual ash detection method, with its advantages of being fast, safe, and low cost, has garnered significant attention from both domestic and international researchers. Due to the complexity of the coal flotation process, there exists a non-linear relationship between tailing ash content and visual features, posing challenges in establishing an accurate correlation model through direct mechanism analysis. However, the production process of flotation exhibits a complex correlation mechanism with rich visual features, making the use of historical label data for data-driven modeling via the visual ash measurement method a viable approach.

A considerable amount of air bubbles is generated during flotation, prompting researchers to explore the relationship between air bubbles and tailings ash content. Cao et al. utilized a semi-supervised clustering method based on the Gaussian mixture model to cluster morphological feature samples of mixed sign foam images, resulting in various clusters [3]. The labeled foam image morphological feature sample information was then mapped to the unlabeled foam image morphological feature samples within different clusters. Building upon this research, they further employed the maximum correlation minimum redundancy (MRMR) algorithm to select optimal features and constructed a semi-supervised Gaussian mixture model (SSGMM) [4]. This combined classification model enhances the accuracy of ash identification using foam characteristics. In 2020, Tan et al. reviewed recent advances in machine vision-based mineral flotation monitoring technology and discussed the current application status of flotation foam monitoring systems [5]. In the same year, Bai proposed a two-stage coal flotation foam image processing system based on 5G Industrial Internet of Things (IIoT) and edge computing [6]. In 2022, Ding et al. extracted features such as bubble color, bubble collapse, bubble size and shape, and bubble moving speed, and used the support vector regression algorithm to develop an ash prediction system based on flotation foam image recognition [7]. In the same year, Yang et al. constructed the CAPNet model by combining the classic CNN model and an attention mechanism. The hyperparameters of CAPNet were optimized using the orthogonal experimental design method. Extensive comparisons with baseline models showed that CAPNet outperformed the other methods in accuracy and stability when detecting ash content from foam images [8]. Despite the many studies on air bubbles, several limitations exist.
Compared to traditional ash detection methods such as chemical analysis or physical sieving, studying flotation froth and calculating ash allows for non-destructive measurement that does not require complex sample handling or destructive sampling. Monitoring the foam characteristics in the flotation process enables the real-time acquisition of ash content information for coal slime, facilitating timely adjustments of flotation process parameters or process control. However, the accuracy of calculating ash content through flotation foam may be somewhat limited due to various factors that affect the ash content in coal slime, such as ore composition, particle size distribution, and changes in ore properties. Additionally, the coal slime flotation process, being a complex physical and chemical process, is influenced by numerous factors, including the pH value of the suspension and the type and quantity of reagents used.

In 2017, Guo et al. utilized a vector consisting of the ash content distribution of tailings images, incident light intensity, and reflected light intensity of tailings as input to establish a prediction model for ash identification using the I-ELM method. The model was compared to models established by the BP neural network and the fixed extreme learning machine [9]. Subsequently, Guo et al. proposed a dosage prediction method for flotation chemicals based on the GRNN algorithm, demonstrating that the GRNN model was faster, more accurate, and more suitable for predicting flotation chemicals compared to the BP neural network [10]. In 2020, Wang et al. developed an ash prediction model based on GA-SVMR, employing four input parameters: average gray value, energy, skewness, and coal slurry concentration [11]. In 2021, Zhou et al. established a tailings ash prediction model called GSA-SVR, which utilized grayscale and color features extracted from images [12]. In 2023, Fan et al. conducted research on online detection technology for flotation ash by fusing multi-source heterogeneous information. Real-time flotation tailings images and flotation process variable data were combined to form 11 features as input variables, and the ash content of flotation clean coal was predicted using the IGWO-BP model [13]. Machine learning has played a crucial role in various fields [14], serving as an excellent tool for industrial intelligence. Although these methods have achieved certain levels of tailings ash detection using machine learning, these algorithms typically require manual design and feature extraction. Additionally, the representation ability of machine learning algorithms is limited, preventing them from fully extracting and utilizing complex features and relationships in data [15]. 
The ash detection problem in coal slime flotation tailings involves nonlinear and high-dimensional data relationships, making it challenging for machine learning algorithms to capture these complexities. Deep learning models, on the other hand, possess powerful representation capabilities and can learn abstract feature representations through multi-layer nonlinear transformations. This enables deep learning models to effectively capture complex data relationships when detecting ash in coal slime flotation tailings.

With the rapid development of deep learning, it has found increasing applications in various fields such as face recognition [16], intelligent driving [17], traffic prediction [18], and intelligent robots [19], playing an irreplaceable role. Furthermore, the introduction of deep learning has enhanced the effectiveness of ash visual measurement, leading to its wider utilization in tailing ash detection [20]. For instance, in 2017, Horn et al. employed deep convolutional neural network modeling to extract visual features from flotation images and predict ash content [21]. Likewise, in 2018, Fu and Aldrich’s team proposed a similar method to investigate and validate the performance advantages of deep networks compared to other shallow learning methods [22]. In the domain of general flotation field control problems, Jiang presented an improved online reinforcement learning model for data flow learning, which involved connecting the model and neural network in series [23]. Building on their previous work, Fu and Aldrich’s team further expanded their research and demonstrated that deep learning networks can achieve superior predictive performance [24]. Additionally, Nakhaei extensively explored the research on visual ash measurement in the flotation industry and emphasized the need for online updates based on real-time data to maintain model prediction performance due to the influence of changes in flotation feed composition and production process drift [25]. In 2020, Guo et al. developed an ash detection method for coal slime flotation tailings utilizing deep convolutional neural networks. Their study involved the construction of an eight-layer neural network and achieved satisfactory results through laboratory training and testing [26]. In a separate study on gray detection, Zhang introduced separable convolution (SC) and attention modules into the multi-branch (MB) block of the backbone. SC was utilized to fuse spatial and channel information, while the attention module enhanced feature extraction. This approach inspired us to consider implementing the attention mechanism to enhance network performance [27].

Several models have attempted to address tailings ash detection, but machine vision-based ash content measurement depends heavily on tailings images as the primary data source. During coal slime flotation, the coal slime and water mixture in the tailings tank is continuously tumbled and stirred, generating substantial foam and impurities on the liquid surface. Additionally, the lighting conditions at the flotation site can interfere with the capture of clear images of the coal slime. Consequently, images obtained directly from the tailings tank often lack distinct feature information and display an unclear relationship with the ash content.

Therefore, addressing the issue of data acquisition is crucial for achieving intelligent ash detection of coal slime flotation tailings. This study presents a novel method for tailings ash detection based on chromatographic filter paper sampling and a multi-scale residual network. By employing chromatographic filter paper to sample tailings, dynamic slime water samples can be transformed into static images for observation. The use of chromatographic filter paper results in clear image textures and rich details in the sampled tailings. Subsequently, a deep learning-based multi-scale residual network is constructed, where the sample image undergoes convolution to extract feature information. The residual module within the multi-scale residual network adopts a two-branch structure, with each branch using a convolution kernel of a different size to perform convolution operations on the image’s feature information. Furthermore, the channel attention mechanism is employed to enhance the network’s ability to extract essential information. Through training, this network model can effectively accomplish the task of tailings ash detection, with the multi-scale residual and channel attention mechanism structures displaying robust ash calculation capability. The ash detection operation is efficient and rapid, and the detection method demonstrates stability and reliability, remaining unaffected by the complex working conditions of the coal slime flotation industrial site.

3 Chromatographic Filter Paper Sampling

Ash content detection in coal slime involves quantifying the mass percentage of inorganic solids that remain after complete combustion relative to the mass of coal. The slow ashing method is the universally recognized standard for ash content detection. This method involves subjecting the coal sample to controlled heating in a high-temperature muffle furnace, gradually increasing the temperature to (815 ± 10) °C. The sample is then burned until the mass stabilizes. Finally, the ratio of the mass of residual ash to the initial mass of the coal sample is determined and considered as the ash content.
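The mass-ratio definition above can be illustrated with a minimal sketch. The function name and the example weighing values are hypothetical and are not taken from the experiments in this paper.

```python
def ash_content_percent(residual_ash_mass_g: float, sample_mass_g: float) -> float:
    """Ash content as the mass percentage of residue left after complete combustion."""
    if sample_mass_g <= 0:
        raise ValueError("sample mass must be positive")
    if not 0 <= residual_ash_mass_g <= sample_mass_g:
        raise ValueError("residual ash mass must lie between 0 and the sample mass")
    return 100.0 * residual_ash_mass_g / sample_mass_g


# Hypothetical weighing: 1.000 g of dried tailings leaves 0.262 g of ash.
print(round(ash_content_percent(0.262, 1.000), 1))  # 26.2
```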

The filter paper is composed of cotton fibers and features numerous small surface holes, enabling the filtration of liquids while retaining larger solid particles. This characteristic facilitates the separation of mixed liquid and solid substances. To swiftly separate water and slime particles in the slime aqueous solution, high-quality chromatographic filter paper can be used. The separated slime particles adhere to the filter paper, facilitating their transfer and subsequent observation. The preliminary work of this study resulted in an invention patent (patent name: Static Coal Slime Flotation Image Ash Detection Method Based on Chromatography Filter Paper Sampling, patent number: ZL202211330473.9) that elucidates how tailings with varying ash contents exhibit distinct physical properties, which can be further discerned through filtration using chromatographic filter paper. Hence, it is proposed to employ this method for capturing images of tailings samples and transforming dynamic slime water into static samples. Chromatographic filter paper sampling was conducted on tailings samples with ash content levels of 26.2%, 32.5%, and 38.7%, with the results depicted in Fig. 1.

Figure 1: Tailings samples with different ash contents

After conducting filter paper sampling on tailings, it was observed that tailings samples with different ash contents exhibit distinct appearances. The aggregation of tailings particles displays noticeable textures, and the overall color distribution of samples with varying ash content differs significantly. To conduct a more detailed examination of the particles, a high-definition industrial camera is utilized. Fig. 2 showcases the enhanced clarity and richness of the tailings particles under the camera lens. Comparing samples with different ash content reveals significant variances in texture characteristics.

Figure 2: Details of tailings samples with different ash contents

The proposed approach for detecting ash content in coal slime flotation tailings involves capturing static images through chromatographic filter paper sampling. In the laboratory, a comprehensive collection of images with varying ash contents was acquired, and a deep convolutional network model was trained for ash content detection in coal slime flotation images. The experimental process is illustrated in Fig. 3. Coal slime tailings samples with different ash contents were carefully prepared and sampled using chromatographic filter paper. Subsequently, a residual network model was established, and the collected tailings images were transformed into a dataset for model training and testing. Finally, the experimental results were analyzed.

Figure 3: Ash detection experiment process

4.1 Multi-Scale Residual Network

This paper proposes a multi-scale residual network with a channel attention mechanism (MRCN), which modifies the ResNet-34 architecture [28] to improve the extraction of image features. A two-branch structure is introduced into the residual block, with each branch using convolutional kernels of a different size and the branches cross-sharing feature information, so that the network can detect feature information at different scales. This multi-scale residual structure provides a wider range of receptive fields, giving a better understanding of the image’s structure and feature information. A conventional residual structure transfers information only between adjacent layers or across skip connections, whereas the multi-scale residual structure can also transfer information between features of different scales. As a result, the network can better capture multi-scale features and optimize the flow of information. The integration of multi-scale residuals also yields richer feature representations through convolution operations and feature fusion: features at different scales complement each other and enhance the model’s ability to analyze and extract complex information. In addition, a channel attention mechanism at the end of the module adaptively assigns scaling weights to all channel features, enhancing the network’s ability to recognize important features [29]. The modified structure, referred to as the multi-scale residual block with channel attention (MRCB), is illustrated in Fig. 4. The overall structure of the proposed MRCN is depicted in Fig. 5.

Figure 4: Multi-scale residual with channel attention mechanism block (MRCB) structure

Figure 5: Multi-scale residual network with channel attention mechanism (MRCN) structure

As the primary feature extraction module of the network, the MRCB comprises two parts: multi-scale feature fusion and a channel attention mechanism. The multi-scale feature fusion uses 3 × 3 and 5 × 5 convolutional layers in parallel in the first and second layers of the module. After passing the input

Channel attention mechanism: To increase the network’s focus on the most informative features, the channel attention mechanism adaptively weights the multi-scale features of the previous layer’s output. This is expressed by the following formula:

In order to improve the network’s performance and achieve faster convergence, the output feature map of the channel attention mechanism is summed with the module input using a local residual connection to obtain the module output:

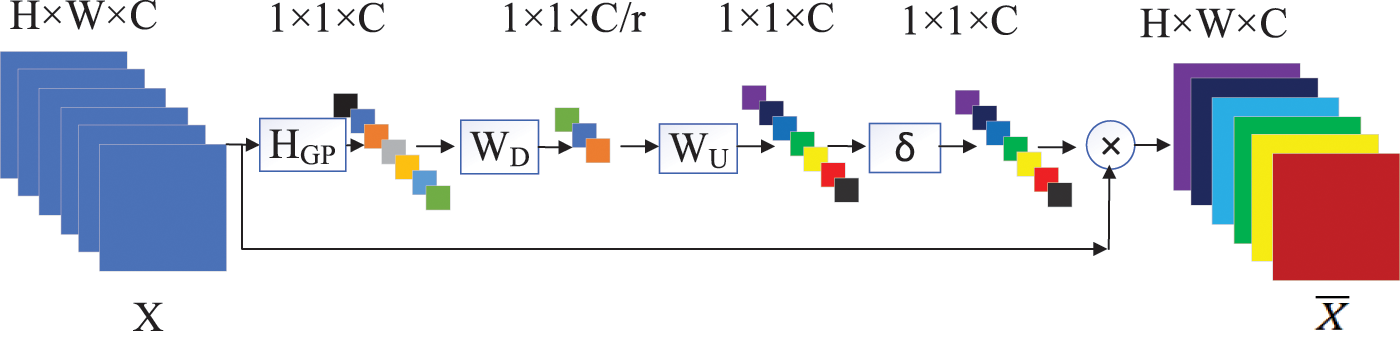
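The idea of two parallel branches with 3 × 3 and 5 × 5 kernels whose fused output is added back to the module input can be illustrated with a toy, single-channel sketch in plain Python. This is not the MRCB itself. The fusion by averaging, the fixed box kernels, and all function names are assumptions made for illustration only. The cross-sharing between branches, the second convolution layer, the learned weights, and the channel attention step are omitted.

```python
def conv2d_same(image, kernel):
    """Zero-padded 'same' 2D cross-correlation on a list-of-lists image."""
    height, width = len(image), len(image[0])
    size = len(kernel)
    pad = size // 2
    out = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            acc = 0.0
            for di in range(size):
                for dj in range(size):
                    y, x = i + di - pad, j + dj - pad
                    if 0 <= y < height and 0 <= x < width:  # zero padding outside
                        acc += image[y][x] * kernel[di][dj]
            out[i][j] = acc
    return out


def relu(feature_map):
    """Element-wise rectified linear unit."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def multi_scale_residual(image, kernel3, kernel5):
    """Two parallel branches (3x3 and 5x5), fused by averaging (assumed),
    then summed with the input through a local residual connection."""
    branch3 = relu(conv2d_same(image, kernel3))
    branch5 = relu(conv2d_same(image, kernel5))
    return [
        [x + 0.5 * (a + b) for x, a, b in zip(row_x, row_a, row_b)]
        for row_x, row_a, row_b in zip(image, branch3, branch5)
    ]


# Hypothetical input: a constant 7x7 patch and simple box (mean) kernels.
patch = [[1.0] * 7 for _ in range(7)]
box3 = [[1 / 9] * 3 for _ in range(3)]
box5 = [[1 / 25] * 5 for _ in range(5)]
out = multi_scale_residual(patch, box3, box5)
print(round(out[3][3], 6), round(out[0][0], 6))  # centre pixel, corner pixel
```

At the corner the 5 × 5 branch sees more zero padding than the 3 × 3 branch, so the two branches respond differently to the same location, which is the sense in which the receptive fields differ.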

4.2 Channel Attention Mechanism

The channel attention mechanism models the interdependencies among the channel features of the previous layer. It assigns different weights to the feature outputs according to their importance, so that key features that are more conducive to image recognition are emphasized. The structure is shown in Fig. 6.

Figure 6: Channel attention structure
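A common way to realize such per-channel weighting is a squeeze-and-excitation style module: global average pooling per channel, two small fully connected layers, and a sigmoid that produces one scaling weight per channel. The sketch below assumes this form. The exact layers used in MRCN are not reproduced here, and the function name, weight matrices, and input values are hypothetical.

```python
import math


def channel_attention(features, w_reduce, w_expand):
    """features: list of C channels, each an H x W list of lists.
    w_reduce: (C/r) x C weights, w_expand: C x (C/r) weights (biases omitted)."""
    # Squeeze: one descriptor per channel via global average pooling.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in features]
    # Excitation: fully connected -> ReLU -> fully connected -> sigmoid.
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w_reduce]
    weights = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w_expand
    ]
    # Scale: multiply every value in a channel by that channel's weight.
    scaled = [[[v * s for v in row] for row in ch] for ch, s in zip(features, weights)]
    return scaled, weights


# Hypothetical two-channel 2x2 feature map and hand-picked weights.
features = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
w_reduce = [[1.0, 1.0]]    # 2 channels -> 1 hidden unit
w_expand = [[0.0], [1.0]]  # 1 hidden unit -> 2 channel logits
scaled, weights = channel_attention(features, w_reduce, w_expand)
print([round(w, 4) for w in weights])
```

With these weights the first channel receives a neutral weight of 0.5 while the second is passed through almost unchanged, showing how the module can emphasize one channel over another.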

The overall mapping relationship of the channel attention mechanism can be expressed as:

Five slime water samples with different ash contents were prepared in the experiment, as shown in Fig. 7, with ash contents of 26.2%, 32.5%, 38.7%, 44.1%, and 50.9%, respectively. The experiment utilized Whatman 3MM cellulose chromatography filter paper, which has a thickness of 0.34 mm and a higher adsorption capacity than ordinary filter paper. Images of the samples were captured using the HY2307 HDMI industrial camera, resulting in a total of 2000 image samples.

Figure 7: Sample images of five ash contents

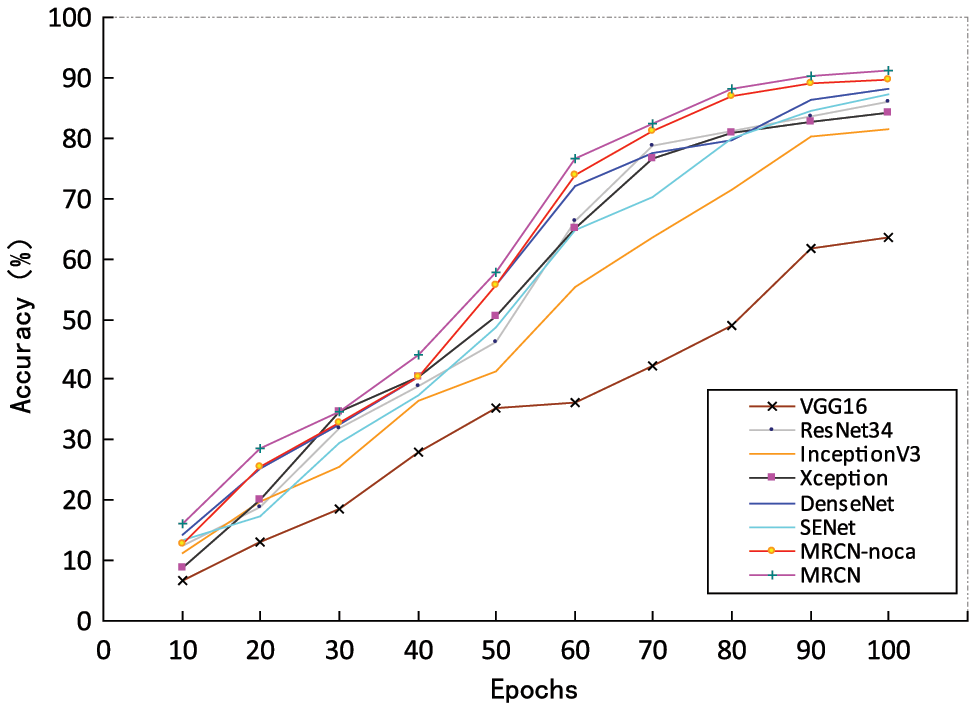

The MRCN model was implemented using the PyTorch framework, with the CrossEntropyLoss serving as the loss function and the Adam optimizer for parameter optimization via backpropagation. The model was trained on the training dataset, starting with an initial learning rate of 0.1. Every 20 epochs, the learning rate was halved. To evaluate the effectiveness of the introduced channel attention mechanism, the attention mechanism in the MRCN model was removed, resulting in the MRCN-noca model. Furthermore, classic deep learning models including VGG16, ResNet34, InceptionV3, Xception, DenseNet, and SENet were employed as a control group to compare the performance of the MRCN network in ash detection. After 100 epochs of training, a comparative analysis was conducted among these network models.
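The learning-rate schedule described above (initial rate 0.1, halved every 20 epochs) can be written as a short function. This is a sketch assuming zero-indexed epochs, and the function name is hypothetical. In PyTorch the same decay pattern is available as `torch.optim.lr_scheduler.StepLR` with `step_size=20` and `gamma=0.5`, although the text does not state which scheduler was actually used.

```python
def step_lr(epoch: int, initial_lr: float = 0.1, step: int = 20, factor: float = 0.5) -> float:
    """Learning rate at a given zero-indexed epoch under step decay."""
    return initial_lr * factor ** (epoch // step)


# Learning rate at the start of each 20-epoch stage and at the final epoch.
for epoch in (0, 20, 40, 60, 80, 99):
    print(epoch, step_lr(epoch))
```

Over 100 epochs the rate is therefore halved four times, ending at 0.1 × 0.5⁴ = 0.00625.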

The training process and results are presented in Fig. 8 and Table 1, respectively. The MRCN network model achieved an accuracy of 91.3%, indicating its superior ability to extract and learn image features. In comparison, the accuracy rates of the MRCN-noca, ResNet34, InceptionV3, Xception, DenseNet, and SENet models were 89.7%, 86.1%, 81.5%, 84.3%, 88.2%, and 87.3%, respectively. Among these, VGG16 exhibited the lowest accuracy, reaching only 63.4%. These results suggest that as the training iterations increase, the accuracy of the MRCN network model gradually improves, consistently outperforming other network models and demonstrating a satisfactory training effect.

Figure 8: Training accuracy

Once trained, the network models were evaluated on the test dataset, and the results are presented in Table 2. The accuracy of MRCN-noca, which excludes the attention mechanism, is slightly lower than that of MRCN but still significantly better than that of the other network models. This suggests that the multi-scale residual structure of the MRCB is effective in extracting tailings image features for ash content calculation, resulting in superior classification performance. MRCN achieves the highest accuracy, indicating that incorporating the channel attention mechanism into the MRCB and assigning varying weights to different feature outputs further enhances the network model’s ability to extract image features.

In terms of model complexity, MRCN is not the most lightweight model: neither its parameter count (Params) nor its floating-point operations (FLOPs) are the smallest. Nonetheless, it surpasses the other seven models in overall accuracy and kappa coefficient, which indicates a robust and well-balanced detection ability for samples with varying ash content. The conventional ash test remains prevalent at coal slime flotation industrial sites despite being time-consuming, because its accuracy is reliable. Accuracy is therefore of paramount importance in ash detection and should be prioritized when designing detection methods. The MRCN model achieves a total accuracy of 84.2% and a kappa coefficient of 0.812, underscoring its advantage in accurately detecting ash content.

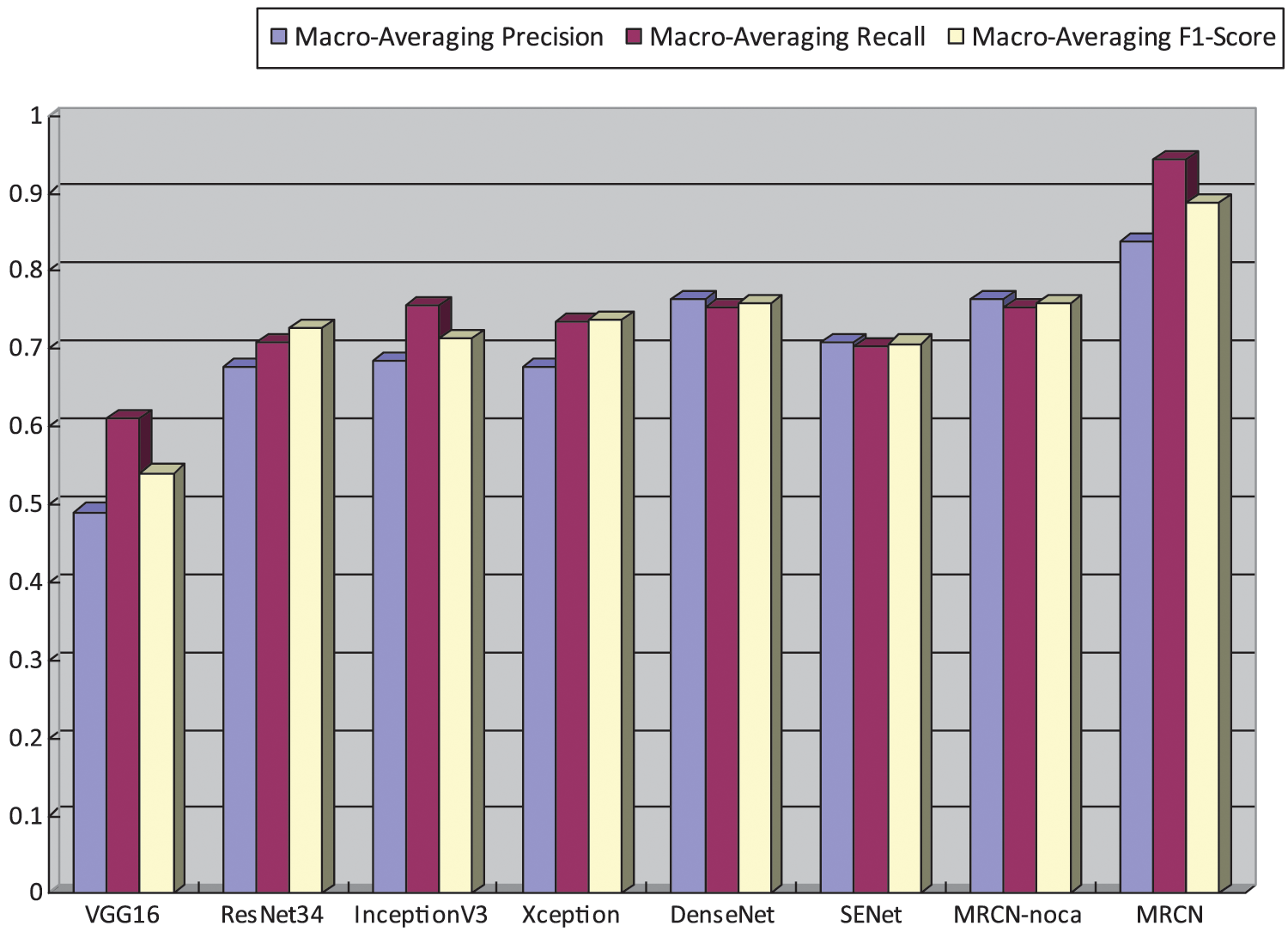
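Overall accuracy and the kappa coefficient are both derived from a confusion matrix. The sketch below assumes that the kappa coefficient is Cohen's kappa and that rows are true classes and columns are predicted classes. The function name and the small two-class matrix are hypothetical and are not the experimental results of this paper.

```python
def overall_accuracy_and_kappa(confusion):
    """Overall accuracy and Cohen's kappa from a square confusion matrix
    (rows: true class, columns: predicted class)."""
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    observed = sum(confusion[i][i] for i in range(k)) / n
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa


# Hypothetical two-class example with 20 test samples.
accuracy, kappa = overall_accuracy_and_kappa([[8, 2], [1, 9]])
print(round(accuracy, 3), round(kappa, 3))  # 0.85 0.7
```

Because kappa subtracts the agreement expected by chance, it is always lower than the raw accuracy for an imperfect classifier, which is why the two values are reported side by side.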

The test results were further compared using three metrics: Precision, Recall, and F1-Score. Since the testing experiment involved a five-class classification task, Macro-Averaging Precision, Macro-Averaging Recall, and Macro-Averaging F1-Score were utilized to evaluate the model’s performance. Macro-Averaging Precision assessed the model’s average accuracy in predictions across each class. Macro-Averaging Recall measured the model’s average capability to capture positives within each class. Macro-Averaging F1-Score, as a composite metric of Precision and Recall, considered both the model’s accuracy and its ability to capture positives. The specific results are presented in Fig. 9.

Figure 9: Performance metrics visualization
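The macro-averaged metrics can be computed from a confusion matrix as sketched below. The sketch assumes rows are true classes and columns are predicted classes, and it takes the macro F1-Score as the mean of the per-class F1 values, which is one of two common conventions. The function name and the small example matrix are hypothetical.

```python
def macro_metrics(confusion):
    """Macro-averaged precision, recall and F1 from a square confusion matrix
    (rows: true class, columns: predicted class)."""
    k = len(confusion)
    precisions, recalls, f1s = [], [], []
    for c in range(k):
        tp = confusion[c][c]
        predicted = sum(confusion[i][c] for i in range(k))  # column total
        actual = sum(confusion[c])                          # row total
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)
    # Macro averaging: every class counts equally, regardless of its size.
    return sum(precisions) / k, sum(recalls) / k, sum(f1s) / k


# Hypothetical two-class example; a five-class matrix is handled the same way.
precision, recall, f1 = macro_metrics([[8, 2], [1, 9]])
print(round(precision, 4), round(recall, 4), round(f1, 4))
```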

MRCN performs well on all three metrics in the ash content detection test experiments. The accuracy of MRCN in detecting each of the five ash content categories of tailings samples is illustrated in Fig. 10. This demonstrates that MRCN possesses a well-balanced detection capability for the ash content of tailings, maintaining consistently high accuracy across the ash content categories.

Figure 10: Accuracy of detection with different ash contents

This innovative approach, which combines chromatographic filter paper sampling and a multi-scale residual network for ash detection in coal slime flotation tailings, showcases both uniqueness and practicality. It is important to highlight that the network parameters and model complexity of MRCN are not optimal. This realization motivates us to not only strive for higher precision in future work, but also to further optimize the lightweight design of the network model.

In this study, we employed chromatographic filter paper to sample flotation tailings from coal slime, thereby obtaining static images of the tailings. Subsequently, we developed a network model called MRCN that combines a multi-scale residual structure with a channel attention mechanism to enhance the network’s capability to extract features from the images. The network models were trained and tested using image datasets to achieve fast and accurate detection of the ash content in coal slime flotation tailings. A comparative analysis with seven other models showed that MRCN outperformed them in both extracting features from tailings images and detecting ash content in this experiment. In our future work, we will prioritize enhancing the accuracy and lightweight design of the network model. The coal industry holds promising prospects for intelligent development, particularly in the advancement of ash detection technology for coal slime flotation tailings. The fusion of machine vision and deep learning techniques presents significant potential for researchers and practitioners in the field of slime flotation.

Acknowledgement: Throughout the writing of this dissertation, I have received a great deal of support and assistance from various individuals and sources. I would like to express my heartfelt gratitude to my colleagues H.L. and J.W. for their continuous encouragement and insightful discussions during the course of this research. I am also deeply thankful to my friend Y.H. for their invaluable guidance and expertise throughout this journey. Lastly, I would like to extend my appreciation to my family for their unwavering support and understanding during this academic pursuit.

Funding Statement: This work was supported by National Natural Science Foundation of China: Grant No. 62106048. Funding for this research activity was provided to W.Z., and more information about the funding organization can be found at https://www.nsfc.gov.cn.

Author Contributions: All authors contributed to the preparation of the manuscript. W.Z., N.L. and Z.Z. developed the concept and manuscript; Z.Z., H.L., W.F. and X.Z. supervised and reviewed the manuscript. All authors have read and approved the final submitted version.

Availability of Data and Materials: Not applicable.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Z. Z. Qiao, W. Liu, J. L. Hu and E. F. Peng, “Application of X-ray coal quality online detection system in Harwusu coal preparation plant,” Coal Engineering, vol. 52, no. 2, pp. 51–54, 2020.

2. D. Cheng, X. D. Tang, F. H. Li and Y. Dai, “Research on optimization method of measurement accuracy of γ-ray backscattering ash analyzer,” Journal of Hunan University (Natural Science Edition), vol. 46, no. 2, pp. 92–96, 2019 (In Chinese).

3. W. Y. Cao, R. F. Wang, M. Q. Fan, X. Fu and Y. L. Wang, “A classification method for coal slime flotation foam images based on semi-supervised clustering,” Industrial and Mine Automation, vol. 45, no. 7, pp. 38–42+65, 2019.

4. W. Y. Cao, R. F. Wang, M. Q. Fan, X. Fu and Y. L. Wang, “MRMR and SSGMM joint classification model for slurry condition image recognition of coal slime flotation system,” Control Theory and Application, vol. 38, no. 12, pp. 2045–2058, 2021.

5. L. P. Tan, Z. B. Zhang, W. Zhao, R. H. Zhang and K. M. Zhang, “Research progress in mineral flotation froth monitoring based on machine vision,” Mining Research and Development, vol. 40, no. 11, pp. 123–130, 2020.

6. Y. Bai, “5G industrial IoT and edge computing based coal slime flotation foam image processing system,” IEEE Access, vol. 8, pp. 137606–137615, 2020.

7. J. J. Ding, F. Y. Bai, X. Y. Ren and M. Q. Fan, “Clean coal ash prediction system based on flotation foam image recognition,” Coal Preparation Technology, vol. 50, no. 4, pp. 89–93, 2022.

8. X. L. Yang, K. F. Zhang, C. Ni, H. Cao, J. Thé et al., “Ash determination of coal flotation concentrate by analyzing froth image using a novel hybrid model based on deep learning algorithms and attention mechanism,” Energy, vol. 260, pp. 125027, 2022.

9. Z. P. Guo and J. M. Yang, “Application of incremental extreme learning machine in tailings ash detection,” Machinery Design and Manufacturing, vol. 318, no. 8, pp. 17–19, 2017.

10. X. J. Guo and X. T. Chen, “Prediction of flotation dosage based on generalized regression neural network,” Coal Technology, vol. 36, no. 2, pp. 286–288, 2017.

11. G. H. Wang, T. He, Y. L. Kuang and Z. Lin, “Optimization of soft-sensing model for ash content prediction of flotation tailings by image features tailings based on GA-SVMR,” Physicochemical Problems of Mineral Processing, vol. 56, no. 4, pp. 590–598, 2020.

12. B. W. Zhou, R. F. Wang and X. Fu, “Experimental research on ash detection based on flotation tailing coal image,” Mining Research and Development, vol. 41, no. 8, pp. 172–177, 2021.

13. F. B. Fan, R. F. Wang and X. Fu, “Research and application of online detection technology for flotation clean coal ash based on multi-source heterogeneous information fusion,” Coal Technology, vol. 42, no. 2, pp. 233–238, 2023.

14. M. Shafiq, Z. H. Tian, A. K. Bashir, X. J. Du and M. Guizani, “CorrAUC: A malicious Bot-IoT traffic detection method in IoT network using machine-learning techniques,” IEEE Internet of Things Journal, vol. 8, no. 5, pp. 3242–3254, 2021.

15. C. Janiesch, P. Zschech and K. Heinrich, “Machine learning and deep learning,” Electronic Markets, vol. 31, pp. 685–695, 2021.

16. I. Adjabi, A. Ouahabi, A. Benzaoui and A. Taleb-Ahmed, “Past, present, and future of face recognition: A review,” Electronics, vol. 9, no. 8, pp. 1188, 2020.

17. Y. F. Cai, L. Dai, H. Wang, L. Chen, Y. C. Li et al., “Pedestrian motion trajectory prediction in intelligent driving from far shot first-person perspective video,” IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 6, pp. 5298–5313, 2022.

18. G. X. Xu and X. T. Hu, “Multi-dimensional attention based spatial-temporal networks for traffic forecasting,” Wireless Communications and Mobile Computing, vol. 2022, pp. 1358535, 2022.

19. F. J. Ren and Y. W. Bao, “A review on human-computer interaction and intelligent robots,” International Journal of Information Technology & Decision Making, vol. 19, no. 1, pp. 5–47, 2020.

20. X. H. Zhao, M. M. Zhang and Z. P. Wen, “Application of intelligent control technology in coal slime flotation process control,” Coal Preparation Technology, vol. 50, no. 3, pp. 85–91, 2022.

21. Z. C. Horn, L. Auret, J. T. McCoy, C. Aldrich and B. M. Herbst, “Performance of convolutional neural networks for feature extraction in froth flotation sensing,” IFAC-PapersOnLine, vol. 50, no. 2, pp. 13–18, 2017.

22. Y. Fu and C. Aldrich, “Using convolutional neural networks to develop state-of-the-art flotation froth image sensors,” IFAC-PapersOnLine, vol. 51, no. 21, pp. 152–157, 2018.

23. Y. Jiang, J. L. Fan, T. Y. Chai, J. N. Li and F. L. Lewis, “Data-driven flotation industrial process operational optimal control based on reinforcement learning,” IEEE Transactions on Industrial Informatics, vol. 14, no. 5, pp. 1974–1989, 2017.

24. Y. Fu and C. Aldrich, “Flotation froth image recognition with convolutional neural networks,” Minerals Engineering, vol. 132, pp. 183–190, 2019.

25. F. Nakhaei, M. Irannajad and S. Mohammadnejad, “A comprehensive review of froth surface monitoring as an aid for grade and recovery prediction of flotation process. Part A: Structural features,” Energy Sources, Part A: Recovery, Utilization, and Environmental Effects, vol. 45, no. 1, pp. 2587–2605, 2023.

26. X. J. Guo, L. A. Wei and C. B. Yang, “Research on ash detection method of coal slime flotation tailings based on deep convolutional network,” Coal Technology, vol. 39, no. 2, pp. 144–146, 2020.

27. K. Zhang, W. Wang, Z. Lv, L. Jin, D. Liu et al., “A CNN-based regression framework for estimating coal ash content on microscopic images,” Measurement, vol. 189, pp. 110589, 2022.

28. K. M. He, X. Y. Zhang, S. Q. Ren and J. Sun, “Deep residual learning for image recognition,” in Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, pp. 770–778, 2016.

29. J. Hu, L. Shen and G. Sun, “Squeeze-and-excitation networks,” in Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, pp. 7132–7141, 2018.

30. K. M. He, X. Y. Zhang, S. Q. Ren and J. Sun, “Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification,” in Proc. of the IEEE Int. Conf. on Computer Vision (ICCV), Santiago, Chile, pp. 1026–1034, 2015.

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
