Open Access

REVIEW

An Overview of Double JPEG Compression Detection and Anti-detection

Nanjing University of Information Science and Technology, Nanjing, 210044, China

* Corresponding Author: Kun Wan. Email:

Journal of Information Hiding and Privacy Protection 2022, 4(2), 89-101. https://doi.org/10.32604/jihpp.2022.039764

Received 02 February 2023; Accepted 06 March 2023; Issue published 17 April 2023

Abstract

JPEG (Joint Photographic Experts Group) is currently the most widely used image format on the Internet. Existing cases show that many tampering operations are carried out on JPEG images. In the typical workflow, the JPEG file is first decompressed and modified in the spatial domain, and the tampered image is then compressed and saved in JPEG format again, so the tampered image may be compressed several times. Therefore, double compression detection of JPEG images can be an important clue for determining whether an image has been tampered with, and the study of double JPEG compression anti-detection can in turn advance detection work. In this paper, we review the recent literature on double JPEG compression detection from two aspects, namely, the case where the quantization table remains unchanged and the case where the quantization table is inconsistent between the two compressions. We also introduce some representative recent methods of double JPEG anti-detection. Finally, we analyze the open problems in the field of double JPEG compression and give an outlook on future development directions.

As information technology advances, computers and smartphones have become an indispensable part of our lives. Online communication often involves the exchange of images, which may undergo various types of editing, leading to differences between the original and processed images. Image manipulation tools such as Photoshop and smartphone editing software make it easier for people to alter images. In addition, thanks to deep learning, deepfake generation and style transfer have come into wide use, which helps people edit images even more effectively. However, such artificially modified images can hardly be recognized by the human eye, leaving people vulnerable to manipulation. To address these problems, image forensics has been extensively studied. It aims to verify the authenticity of images and usually studies the traces left by tampering such as copy-move [1], splicing [2], retouching [3], and deepfakes [4].

Incidents of image tampering abound, permeating all areas of our daily lives. Against this background, a new discipline, digital image forensics, has been born. JPEG (Joint Photographic Experts Group) is currently the most widely used image format on the Internet and plays a very important role in our daily life. Currently, most of the images captured by cell phones and digital cameras are stored in JPEG format. Therefore, the analysis and forensics of JPEG images are undoubtedly of great importance. In reported cases, many tampering operations are carried out on JPEG images. The basic process is to first decompress the JPEG file, tamper with it in the spatial domain, and then save the tampered image in JPEG format again, so that the tampered image may be compressed twice or even more times. Therefore, the detection of double JPEG compression can be an important basis for determining whether an image has been tampered with, and it is currently an important branch of digital image tampering detection.

This paper reports a series of achievements in the field of double JPEG compression detection and anti-detection in recent years. It is organized as follows. First, we give a brief introduction to the JPEG format. We then describe in detail the algorithms and ideas for double compression detection when the two quantization tables are inconsistent and when they are consistent, together with a brief performance analysis and evaluation. Next, we introduce recent progress in double JPEG compression anti-detection. Finally, the existing problems of double JPEG compression detection are analyzed, and future development directions are discussed.

2 Double JPEG Compression Detection

2.1 The JPEG Compression and Decompression of Color Images

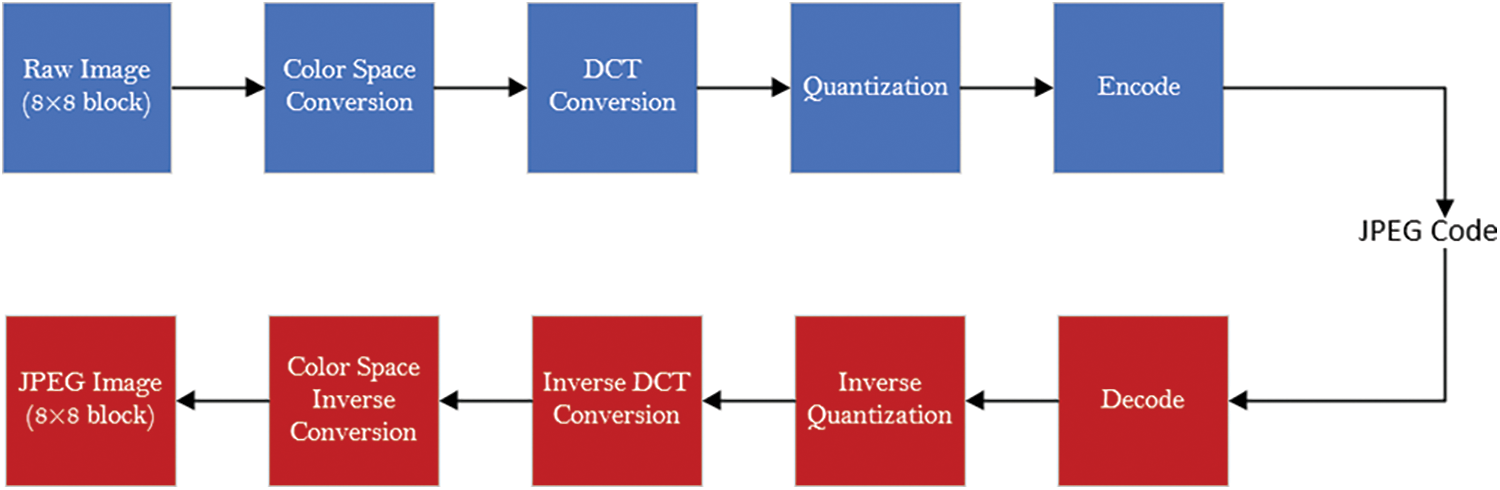

To make the following discussion clearer, we first introduce the JPEG compression and decompression process of color images. JPEG compression of a color image mainly consists of four steps: color space conversion, DCT, quantization, and encoding. The decompression process applies the inverse operations, consisting of decoding, dequantization, inverse DCT, and inverse color space conversion. All steps operate on 8 × 8 blocks. The JPEG compression and decompression of color images are shown in Fig. 1.

Figure 1: The process of JPEG compression and decompression

Color space conversion refers to converting an image from the RGB space to the YCbCr space to facilitate better compression, and it includes a chroma downsampling step. In order to preserve as much information of the original picture as possible, the 4:4:4 sampling format is adopted by default in this paper. Afterwards, the image is mapped from the spatial domain to the DCT domain by the DCT. Quantization is the most important step in JPEG compression, as it effectively reduces the space required for image storage. Quantization first calculates the quantization step according to the quality factor and then quantizes and rounds the DCT coefficients. Eq. (1) expresses the relationship between the quantization step and the quality factor, where QF represents the quality factor, q(i, j) represents the corresponding entry in the base quantization table, Q(i, j) is the quantization step, and floor(·) represents rounding down. Because there is no information loss in the encoding and decoding processes in JPEG compression, we will omit these two processes in the experiment. The process of JPEG decompression is exactly the opposite of the compression process.
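The relationship between the quality factor and the quantization steps can be illustrated with a short sketch. Since the equation itself is not reproduced in this text, the code below assumes the scaling rule used by the widely deployed IJG libjpeg encoder and the example luminance table from Annex K of the JPEG standard; the paper's Eq. (1) may differ in detail. The function name `quantization_table` is chosen for illustration only.

```python
import numpy as np

# Example luminance base table q(i, j) from Annex K of the JPEG standard.
BASE_LUMA = np.array([
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
], dtype=np.int64)


def quantization_table(qf, base=BASE_LUMA):
    """Return the quantization steps Q(i, j) for quality factor qf (1..100).

    Assumption: IJG libjpeg-style scaling, with steps clamped to [1, 255].
    """
    qf = min(max(int(qf), 1), 100)
    scale = 5000 // qf if qf < 50 else 200 - 2 * qf
    # Integer floor division plays the role of floor(.) in the text.
    table = (base * scale + 50) // 100
    return np.clip(table, 1, 255)


q50 = quantization_table(50)    # equals the base table under this rule
q75 = quantization_table(75)    # finer steps, higher quality
q100 = quantization_table(100)  # every step clamps to 1
```

Under this rule a higher QF yields smaller steps, which is why two compressions with different quality factors generally use different quantization matrices.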

According to whether the block grids of the two compressions are aligned, double compression detection can be divided into aligned and non-aligned detection. According to the quantization steps of the two JPEG compressions, it can be divided into two classes: detection of double JPEG compression with different quantization matrices (DJDQM) and detection of double JPEG compression with the same quantization matrix (DJSQM). In DJDQM, the two compressions use different quantization steps; in DJSQM, they use the same quantization steps.

2.2 Aligned Detection of Double JPEG Compression

2.2.1 Double JPEG Compression with Different Quantization Matrix

As introduced above, the JPEG compression process starts with a block-wise DCT, followed by quantization and rounding of the block DCT coefficients. In a single JPEG compressed image (let the quantization matrix be Q1), the histogram of the quantized DCT coefficients $D_{i,j}^{k}$ (1 ≤ k ≤ N), obtained by quantizing the coefficients at any frequency (i, j) (0 ≤ i, j ≤ 7) in the 8 × 8 sub-blocks, can usually be fitted with a generalized Gaussian distribution [5], where N denotes the number of 8 × 8 sub-blocks in the image. When a single JPEG compressed image (quantization matrix Q1) is decompressed into the spatial domain and then compressed again with another quantization matrix Q2 (Q2 ≠ Q1), the resulting histogram of the quantized DCT coefficients will usually no longer follow the generalized Gaussian distribution.

Lukáš et al. [6] and Pevny et al. [7] were among the first to study double JPEG compression. They pointed out that if different quantization tables are used in the first and second compressions, the histogram of quantized DCT coefficients of the double compressed JPEG image shows obvious anomalies such as “zeros” and “double peaks”. Fig. 2 shows the histogram of quantized DCT coefficients at frequency (0, 1) after two JPEG compressions, where the horizontal axis indicates the quantized DCT coefficient value and the vertical axis indicates the number of occurrences of that value. As can be seen in Fig. 2, when the same quantization step is chosen for both JPEG compressions, the DCT coefficient histogram does not change significantly, as shown in Fig. 2a; when the quantization step for the first compression is 8 and that for the second is 4, the frequency of odd DCT coefficient values (e.g., 1, 3, 5) in the double compressed JPEG image is zero, as shown in Fig. 2b; when the first quantization step is 6 and the second is 4, the histogram shows local double peaks at the positions corresponding to the coefficient pairs 1 ↔ 2, 4 ↔ 5, 7 ↔ 8, etc., as shown in Fig. 2c; when the first quantization step is 3 and the second is 4, the histogram differs significantly from the original histogram, but the local double peaks are not very obvious, as shown in Fig. 2d. Fig. 2 thus shows that different combinations of the two quantization steps leave different traces in the DCT coefficient histogram of the double compressed image, and this observation is the main idea behind subsequent detection methods.

Figure 2: Histogram of quantized DCT coefficients at frequency (i, j) = (0, 1) of doubly compressed JPEG image
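The “zeros” effect described for the step pair (8, 4) can be reproduced with a small simulation. This is a simplified sketch, not the procedure used to produce Fig. 2: it assumes Laplacian-distributed unquantized coefficients (the scale of 20 is arbitrary) and ignores the rounding and truncation that occur in the spatial domain between the two compressions. The function name `double_quantize` is illustrative.

```python
import numpy as np


def double_quantize(coeffs, q1, q2):
    """Quantize with step q1, dequantize, then quantize again with step q2."""
    first = np.round(coeffs / q1)          # first compression
    dequantized = first * q1               # decompression (idealized)
    return np.round(dequantized / q2).astype(np.int64)


rng = np.random.default_rng(0)
# Hypothetical stand-in for the unquantized DCT coefficients at one frequency.
coeffs = rng.laplace(loc=0.0, scale=20.0, size=100_000)

single = np.round(coeffs / 4).astype(np.int64)   # compressed once with step 4
double = double_quantize(coeffs, q1=8, q2=4)     # step 8 followed by step 4

odd_single = int(np.sum(single % 2 != 0))
odd_double = int(np.sum(double % 2 != 0))
print("odd-valued coefficients, single compression:", odd_single)
print("odd-valued coefficients, double compression:", odd_double)
```

After the first quantization every dequantized value is a multiple of 8, so dividing by 4 can only give even integers; the odd bins of the histogram are therefore empty in this idealized setting, whereas a single compression with step 4 populates them.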

At the same time, Popescu et al. [8] showed that first and second compressions with different quantization steps introduce anomalous patterns in the histogram of DCT coefficients at some specific frequencies. These patterns exhibit periodic characteristics after a Fourier transform, thus allowing effective detection of double JPEG compression. Feng et al. [9] also pointed out that the periodic effect, histogram discontinuities and other features that may be introduced by the double compression process can be used to detect double JPEG compression. In [10], Fu et al. showed that the distribution of the first digits of the DCT coefficients of a single JPEG compressed image follows the generalized Benford’s law [11], while the first significant digits of the DCT coefficients of a double JPEG compressed image no longer follow it because of the artificial perturbations introduced. In 2008, Li et al. [12] showed that fitting the first-digit distribution of the DCT coefficients with the generalized Benford’s law at some specific frequencies can further improve the detection performance for double JPEG compression. Dong et al. [13] proposed a machine learning-based scheme that extracts a 324-dimensional feature set consisting of Markov transition probabilities from a given JPEG image to characterize the intra- and inter-block correlations of the DCT coefficients; with the help of a Support Vector Machine (SVM), it can effectively distinguish between single and double JPEG compressed images. In 2011, Chen et al. [14] further investigated the intra- and inter-block correlation of DCT coefficients by extracting neighboring joint density features and marginal density features from the DCT coefficients and then classifying these features with an SVM, achieving double JPEG compression detection with improved performance compared with the method of Chen et al. In 2016, Liu et al. [15], building on the method of Shang et al. [16], proposed to divide the image into texture-complex regions and smooth regions and to characterize the intra- and inter-block correlations of the DCT coefficients with a high-order Markov transition probability matrix, which further improves the performance of the algorithm. To effectively capture the intra- and inter-block correlation of the DCT coefficients of JPEG images, Li et al. [17] proposed to first sort the DCT coefficients in zigzag order and then apply row scanning and column scanning in a specific order, combined with Markov transition probability matrices, which further improves performance.
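The first-digit feature used by the Benford-based detectors above can be sketched as follows. The parametric form p(x) = N · log10(1 + 1/(s + x^q)) is the generalized Benford model as commonly stated in this line of work; the parameter names `n_norm`, `q` and `s` and both function names are chosen here for illustration, and fitting the parameters to real data is left out.

```python
import numpy as np


def first_digit_distribution(coeffs):
    """Empirical distribution of the first significant digit (1..9)
    of the non-zero quantized DCT coefficients."""
    digits = np.abs(np.asarray(coeffs, dtype=np.int64))
    digits = digits[digits > 0]
    # Strip trailing digits until only the leading digit remains.
    while np.any(digits >= 10):
        digits = np.where(digits >= 10, digits // 10, digits)
    counts = np.bincount(digits, minlength=10)[1:10]
    return counts / counts.sum()


def generalized_benford(n_norm, q, s):
    """Generalized Benford model p(x) = N * log10(1 + 1 / (s + x**q))."""
    x = np.arange(1, 10, dtype=float)
    return n_norm * np.log10(1.0 + 1.0 / (s + x ** q))


# Toy input; in practice these would be quantized AC coefficients of an image.
empirical = first_digit_distribution([1, 23, -456, 0, 9, 1000])
classic = generalized_benford(1.0, 1.0, 0.0)  # reduces to the classic Benford law
```

A detector in this family would compare the empirical distribution with the fitted model, for example through a goodness-of-fit statistic, and treat a poor fit as evidence of double compression.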

Recently, Taimori et al. [18] performed a statistical analysis of single compressed and double compressed JPEG images with different quantization factors and found that single compressed and double compressed data with different JPEG encoder settings form a finite number of coherent clusters in the feature space. They proposed a targeted learning strategy to detect double JPEG compression with a classifier; the method has low complexity and good detection performance. In the image tampering process, if the original image is a JPEG image, it needs to be decompressed first, then some regions are tampered with, and the image is compressed a second time afterwards. Obviously, the non-tampered region is compressed twice, so “zero values” and “double peaks” appear in the DCT coefficient histogram of that region. If the tampered region comes from an uncompressed image (or from a JPEG image whose 8 × 8 grid was not aligned with the 8 × 8 grid of the original image when the region was pasted), then there is no “zero value” or “double peak” effect in this region. Using this property, tampered regions can be detected and localized. For example, in [19], He et al. use this property to detect whether a JPEG image has been tampered with and to further localize the tampered region.

2.2.2 Double JPEG Compression with Same Quantization Matrix

From the JPEG compression and decompression process, it follows that if the same quantization table is used in both compressions and the 8 × 8 sub-blocks are kept aligned between them, there should in theory be no difference between the second compressed image and the original JPEG image. For quite some time after the double JPEG compression problem was introduced, most researchers believed that in this case the double compressed image would be identical to the single compressed image and therefore could not be detected effectively. However, JPEG is a lossy compression technique, and three different kinds of errors may occur during compression and decompression. The first is the quantization error, which arises during the compression phase. The DCT coefficients are floating point numbers before quantization and are rounded to the nearest integer after division by the quantization step; the resulting difference is referred to as the quantization error. The second and third types of error arise during decompression. Applying the IDCT to the dequantized DCT coefficients yields a series of floating point numbers in the spatial domain. To reconstruct the image data in the spatial domain, values less than 0 are truncated to 0 and values greater than 255 are truncated to 255, which results in the truncation error. In addition, the remaining floating point values must be rounded to the nearest integer, which results in the rounding error.
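The three error types can be made concrete for a single 8 × 8 block. The sketch below is an illustration under simplifying assumptions: one grayscale block, an orthonormal DCT built directly with NumPy, a level shift of 128, and a uniform quantization step of 10 in the example. The names `dct_matrix` and `jpeg_cycle_errors` are hypothetical helpers, not part of any cited method.

```python
import numpy as np


def dct_matrix(n=8):
    """Orthonormal DCT-II matrix C, so that the 2-D DCT of X is C @ X @ C.T."""
    k = np.arange(n).reshape(-1, 1)
    m = np.arange(n).reshape(1, -1)
    c = np.sqrt(2.0 / n) * np.cos((2 * m + 1) * k * np.pi / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c


C = dct_matrix()


def jpeg_cycle_errors(block, q):
    """Run one compress/decompress cycle on an 8x8 block and return the
    quantization, truncation and rounding errors (each an 8x8 array)."""
    coeffs = C @ (block.astype(float) - 128.0) @ C.T
    quantized = np.round(coeffs / q)
    quantization_error = coeffs - quantized * q       # DCT domain

    recon = C.T @ (quantized * q) @ C + 128.0         # float pixels after IDCT
    truncated = np.clip(recon, 0.0, 255.0)
    truncation_error = recon - truncated              # non-zero only outside [0, 255]
    rounded = np.round(truncated)
    rounding_error = truncated - rounded              # at most 0.5 in magnitude
    return quantization_error, truncation_error, rounding_error


rng = np.random.default_rng(1)
block = rng.integers(0, 256, size=(8, 8))
q = np.full((8, 8), 10.0)
q_err, t_err, r_err = jpeg_cycle_errors(block, q)
```

Because the truncation and rounding errors change the pixel values that the second compression sees, a recompression with the same quantization table does not in general reproduce exactly the same quantized coefficients.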

Huang et al. [20] conducted an early study of the case in which the quantization table is kept constant. In Fig. 3, the horizontal axis indicates the number of compressions and the vertical axis indicates the number of DCT coefficients that differ between two successive compressed JPEG files with the same quantization table. As can be seen in Fig. 3, the number of DCT coefficient changes between two successive compressions decreases monotonically as the number of compressions increases. Based on this observation, Huang et al. [20] proposed a method based on randomly perturbing the quantized DCT coefficients and evaluated it on the UCID database [21]; it can effectively solve the problem of double compression detection when the quantization table remains constant and the 8 × 8 block grid remains aligned. In 2019, Niu et al. [22] showed that perturbing only the quantized DCT coefficients with a value of ±1 can further improve the detection performance of the method of Huang et al. Lai et al. [23] proposed a new block convergence analysis method that can detect JPEG recompressed images with a quality factor of 100 and can estimate the number of compressions more accurately. Yang et al. [24] obtained an error image (which mainly contains truncation and rounding errors) by calculating the difference between the values obtained after the IDCT and the pixel values in the reconstructed image, then proposed a set of features to characterize the statistical difference between the error images obtained after single and double JPEG compression, and used an SVM to identify whether a given JPEG image was double compressed. To address the low detection accuracy of existing algorithms at low quality factors, Wang et al. [25] proposed a detection algorithm based on spherical coordinates. By analyzing the effect of JPEG compression on the color space conversion, they introduced the notion of conversion errors, classified truncation errors and rounding errors at the pixel level, and then projected them onto spherical coordinates to obtain more distinctive features while avoiding the mixing of errors. The experimental results show that the detection accuracy of this method on the UCID database is on average 5% better than that of Yang et al. [24] for quality factors less than 80, and significantly better for quality factors of 80 and 85. Deshpande et al. [26] proposed a feature that describes the difference in quantized DCT coefficients based on the number of quantized DCT coefficients changed in the subsequent compression stage, and combined it with the feature proposed by Yang et al. [24] to classify single and double JPEG compressed images using a multi-layer perceptron (MLP), which achieves better performance for smaller image sizes and lower quality factors.

Figure 3: Variation tendency about the average values of Dn (n = 1, 2, …, 10) for different image QFs
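The quantity plotted in Fig. 3, the number of quantized DCT coefficients that change between successive compressions with the same quantization table, can be computed for one block as in the sketch below. It is a toy illustration only: it works on a single grayscale 8 × 8 block with an orthonormal NumPy DCT and a uniform step, whereas the cited work measures whole images. All function names are hypothetical.

```python
import numpy as np


def dct_matrix(n=8):
    """Orthonormal DCT-II matrix C, so that the 2-D DCT of X is C @ X @ C.T."""
    k = np.arange(n).reshape(-1, 1)
    m = np.arange(n).reshape(1, -1)
    c = np.sqrt(2.0 / n) * np.cos((2 * m + 1) * k * np.pi / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c


C = dct_matrix()


def compress(pixels, q):
    """Level shift, DCT, then quantize with the step matrix q."""
    return np.round(C @ (pixels.astype(float) - 128.0) @ C.T / q)


def decompress(quantized, q):
    """Dequantize, IDCT, then round and truncate to the range [0, 255]."""
    pixels = C.T @ (quantized * q) @ C + 128.0
    return np.clip(np.round(pixels), 0, 255)


def changed_coefficient_counts(pixels, q, n_rounds=10):
    """Count how many quantized coefficients differ between compression
    n and compression n + 1, for n = 1..n_rounds."""
    counts = []
    previous = compress(pixels, q)
    for _ in range(n_rounds):
        current = compress(decompress(previous, q), q)
        counts.append(int(np.sum(current != previous)))
        previous = current
    return counts


rng = np.random.default_rng(2)
q = np.full((8, 8), 3.0)
counts_random = changed_coefficient_counts(rng.integers(0, 256, size=(8, 8)), q)
counts_flat = changed_coefficient_counts(np.full((8, 8), 128), q)
```

Averaging such counts over all blocks of many images gives curves of the kind described for Fig. 3; a single block may become stable after only a few rounds.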

With the impressive performance of deep learning in image processing, some scholars have in recent years also introduced deep learning methods to double JPEG compression detection with the same quantization table. Unlike traditional methods that extract only one specific type of feature, deep learning-based algorithms can learn double JPEG compression features through network training. Huang et al. [27] exploited the advantage of error images in double JPEG compression detection by using the error images obtained through pre-processing as the input to a convolutional neural network (CNN). To counter vanishing gradients, dense connections were introduced between the layers, and a CNN architecture consisting of eight convolutional layers was proposed to detect JPEG recompressed images. In addition, for JPEG images compressed three times or more, Bakas et al. [28] proposed a deep convolutional neural network model that can detect images that have been JPEG compressed twice or more.

2.3 Non-Aligned Detection of Double JPEG Compression

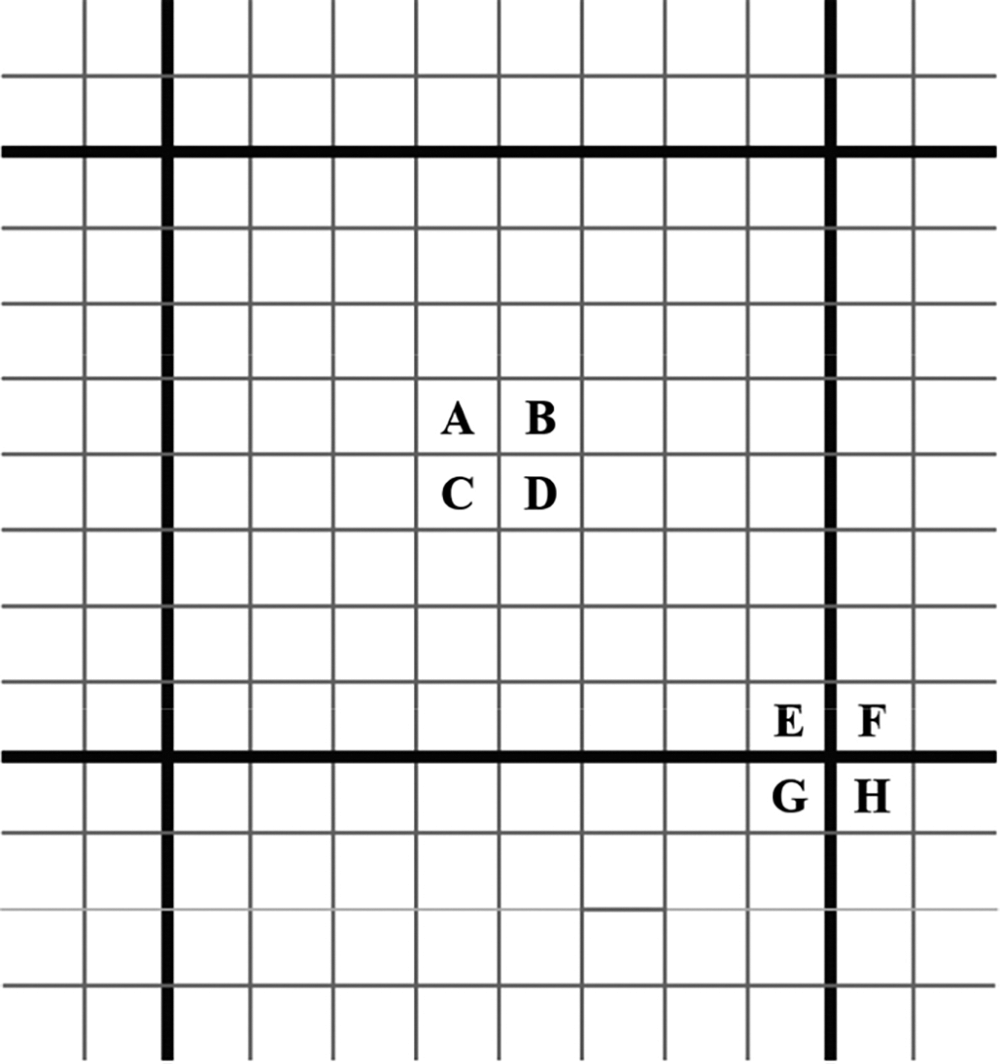

An important starting point for double JPEG compression detection in the non-aligned case is the fact that JPEG images are compressed block by block, so in general there is a significant blocking artifact (block effect) in JPEG images, especially when the quality factor is low. As shown in Fig. 4, the bold black lines represent the 8 × 8 block grid, with A, B, C and D representing four pixels inside a sub-block and E, F, G and H representing four pixels at the edges of the sub-blocks. By comparing Z′ = A − B − C + D with Z″ = E − F − G + H, it is possible to effectively discriminate whether the original image has been JPEG-compressed or not.

Figure 4: Block effect of JPEG compression
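The comparison of Z′ and Z″ can be sketched as follows for a grayscale image. Several details are assumptions made for illustration: the four interior pixels are taken at the centre of each block, the four edge pixels at the junction where four blocks meet, and absolute values are used so that the two quantities can be averaged and compared. The function name `block_effect` is hypothetical.

```python
import numpy as np


def block_effect(gray, block=8):
    """Return |Z'| (2x2 neighbourhoods at block centres) and
    |Z''| (2x2 neighbourhoods straddling block corners)."""
    g = np.asarray(gray, dtype=np.int64)
    h, w = g.shape

    def second_difference(rows, cols):
        r = rows[:, None]
        c = cols[None, :]
        return np.abs(g[r, c] - g[r, c + 1] - g[r + 1, c] + g[r + 1, c + 1])

    # Top-left corners of the 2x2 neighbourhoods (zero-based indices).
    inner_rows = np.arange(block // 2 - 1, h - 1, block)   # 3, 11, 19, ...
    inner_cols = np.arange(block // 2 - 1, w - 1, block)
    edge_rows = np.arange(block - 1, h - 1, block)         # 7, 15, 23, ...
    edge_cols = np.arange(block - 1, w - 1, block)

    z_inner = second_difference(inner_rows, inner_cols)
    z_edge = second_difference(edge_rows, edge_cols)
    return z_inner, z_edge


# Synthetic extreme case: every 8x8 block is constant, as after very
# coarse quantization that keeps only the DC coefficient.
block_values = np.arange(16).reshape(4, 4) ** 2
blocky = np.kron(block_values, np.ones((8, 8), dtype=np.int64))
z_inner, z_edge = block_effect(blocky)
```

For an image that has never been JPEG-compressed the two sets of values are expected to have similar statistics, while a compressed image shows larger values across block boundaries than inside blocks.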

Based on this, Luo et al. [29] proposed a blocking artifact characteristics matrix (BACM). Chen et al. [30] proposed to first calculate the block period of the whole image in the spatial domain, then transform the period model to the frequency domain, and extract statistical features from the frequency-energy distribution to detect double JPEG compression with the help of support vector machines. In [31], the authors assumed that all source images were in JPEG format, modelled the possible periodicity of JPEG images in the spatial domain and the transform domain, and on this basis designed a robust method to detect aligned or non-aligned double JPEG compression. Luo et al. [32] proposed to use the RID (refined intensity difference) to measure the block effect in JPEG-compressed images. To avoid the influence of image texture on this measure, the authors also proposed a new calibration strategy that effectively removes it. To address the problem of missing feature information and block effect detection in color images, Wang et al. [33,34] proposed a non-aligned double JPEG compression detection algorithm based on the quaternion discrete cosine transform (QDCT) domain fine Markov chain. In [35], the authors also proposed a method for automatic detection and localization of tampered regions based on the estimation of double compression offsets of JPEG images. The nearest neighbour algorithm is used to initially match the extracted feature vectors. To handle false matches caused by inconsistent color information, the authors introduced the hue saturation intensity (HSI) color feature. Then, the RANSAC (random sample consensus) algorithm is used to estimate the affine transformation parameters between the matched pairs, eliminate mismatches, and determine the complete copy-paste region. Finally, the copied and tampered regions are determined and distinguished according to the different double compression offsets of each region. The experimental results show that the method can effectively locate the copy-tampered regions of JPEG images and is robust to geometric transformations of the copied regions and to common post-processing operations.

In addition, although non-aligned double JPEG compression does not produce the obvious double-peak and zero-value effects in the quantized DCT coefficient histogram, the double compression process still leaves traces in that histogram. Some authors have therefore proposed detection schemes based on the properties of the DCT coefficients. For example, Qu et al. [36] showed that non-aligned double JPEG compression breaks the symmetry of the IVM (independent value map) based on the block DCT coefficients, and thus extracted 13 features to detect non-aligned double JPEG compression from the perspective of blind source separation. Bianchi et al. [37,38] found that the DCT coefficients exhibit significant periodicity when recompressed under alignment, and that the non-uniformity of the integer periodicity map (IPM) can be used to detect the 8 × 8 grid offset between the two compressions. However, subsequent studies showed that this IPM-based detection method can easily be misled. In 2019, Mandal et al. [39] found that the IPM can be easily modified without affecting the visual quality of the image and proposed a counter-detection method. It uses a statistical DC coefficient model of a single compressed JPEG image to generate an estimated image (which is not affected by the quantization artifacts present in the IPM) and then recompresses the estimated image with non-aligned JPEG, which can mislead the JPEG double compression detection method proposed by Bianchi et al. [37,38].

Recently, Wang et al. [40] proposed a JPEG double compression detection algorithm based on a convolutional neural network (CNN). Considering the difference in the DCT coefficient histogram between the single-compressed and double-compressed regions after JPEG compression, the authors used the DCT coefficient histogram of the image as input to achieve effective detection of non-aligned double JPEG compression. The experimental results show that the algorithm performs well when the first compression quality factor is higher than the second. Luo et al. [41] used manually extracted features, the original image and the denoised image, respectively, as the input of the CNN for training, and showed that the manual feature-based CNN achieved better accuracy in the case of aligned double JPEG compression. Li et al. [42] proposed a double domain CNN-based algorithm for non-aligned JPEG double compression detection, using the DCT coefficients of the JPEG image and the pixels of the decompressed image as the input of the network. Verma et al. [43] proposed a deep convolutional neural network based on the DCT domain, which extracts histogram-based features from the image as input to the network for training and can effectively classify double JPEG compression. Li et al. [44] proposed a multi-branch CNN-based JPEG double compression detection scheme, which takes the original DCT coefficients of JPEG images as input and does not require histogram feature extraction or manual preprocessing operations. In 2019, Zeng et al. [45] proposed an improved DenseNet (densely connected convolutional network) for detecting the compression history of a given image, with a special filtering layer at the front end of the network to help the subsequent layers recognize the image more easily. The method achieves high performance on images with low quality factors. Alipour et al. [46] proposed to segment JPEG block boundaries by training a deep convolutional neural network that can accurately detect block boundaries associated with various JPEG compressions. Niu et al. [47] presented a comprehensive end-to-end system for image block-based compression. Their approach involves estimating the original quantization matrix, clustering, and refining the outcomes via morphological reconstruction. The method demonstrated excellent performance in diverse settings, such as detecting double JPEG compression whether or not the images were aligned.

Compared to the large number of detection methods, there are relatively few anti-detection methods for double JPEG compression. There is still a need for better anti-detection methods for double JPEG compression.

Sutthiwan et al. [48] resized images using bilinear interpolation, aiming to destroy the JPEG grid structure while maintaining good image quality. Given a double compressed JPEG image, the image is modified by decompressing, shrinking and zooming. It is bilinearly interpolated before one more JPEG compression, which uses the same quality factor as the given image. This degrades the accuracy of double JPEG compression detectors, especially when the first quality factor is lower than the second quality factor.

Li et al. [49] proposed an anti-detection method targeting detectors of DJSQM. The method slightly modifies the DCT coefficients in order to obscure the artifacts introduced by double JPEG compression with the same quantization matrix. By studying the relationship between the DCT coefficients of the first and second compressions, the amount of modification is determined by constructing a linear model. In addition, to improve the concealment of the anti-forensics, the method adaptively selects the locations of the modifications according to the complexity of the image texture. This leaves fewer other detectable statistical artefacts while maintaining high visual quality.

Wang et al. [50] examined the impact of tampering with JPEG header files on JPEG images and highlighted the use of header file modifications for both anti-forensics and forensics. To achieve anti-forensics, the quantization step in the header file is increased while the number of modified steps is limited to prevent differences noticeable to the human eye. This leads to a significant reduction in the forensic performance of various algorithms. Conversely, to enhance forensic performance, the quantization step in the header file is reduced. Specifically, a quantization step of 1 enables the concentration of B, E, and C values in the distribution of single and double compressed images, thereby allowing for easier feature extraction and improved forensic performance. As a result, the performance of different algorithms has been enhanced to some degree, and adaptive forensics has become a broader issue.

Also, the anti-detection of JPEG compression needs to be able to deceive both the JPEG compression detector and the double JPEG compression detector. The JPEG compression anti-detection method proposed by Fan et al. [51] is therefore also applicable to double JPEG compression anti-detection.

JPEG is the most widely used image format on the Internet, and the detection of double JPEG compressed images is of great importance in the field of image tampering forensics. This paper presents a review of the literature in the field of JPEG recompression detection and anti-detection in recent years, and introduces some representative methods in this field. In general, a series of meaningful results have been achieved in the field of JPEG recompression, but there are still some problems:

1) Most of the existing methods are aimed at grayscale image recompression detection, and the features of each channel in color JPEG images are less considered for color image recompression detection.

2) There are few successful cases of applying double JPEG compression detection to tampering forensics for specific tampering problems. In particular, when the tampered area is small, it cannot be detected accurately.

3) There is relatively little existing work on double JPEG compression anti-detection, so JPEG recompression detection remains poorly robust and difficult to put into practical applications.

In addition, with the rapid development of research in information technology and computer science, fields related to double compression detection are also being renewed. For example, the current rapid development of deep learning provides a series of new means for JPEG double compression detection. However, while deep learning has made great breakthroughs, it has also brought many security risks. How to design new and more efficient detection and anti-detection algorithms is a problem worthy of attention. Only by properly addressing both issues can double JPEG compression detection be made more robust, so that the results achieved can be pushed into practical engineering applications.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. X. Chen, Z. Zhang, A. Qiu, Z. Xia and N. N. Xiong, “Novel coverless steganography method based on image selection and StarGAN,” IEEE Transactions on Network Science and Engineering, vol. 9, no. 1, pp. 219–230, 2022. https://doi.org/10.1109/TNSE.2020.3041529

2. H. Yang, H. He, W. Zhang and X. Cao, “FedSteg: A federated transfer learning framework for secure image steganalysis,” IEEE Transactions on Network Science and Engineering, vol. 8, no. 2, pp. 1084–1094, 2021. https://doi.org/10.1109/TNSE.2020.2996612

3. R. Meng, Q. Cui, Z. Zhou, Q. Wu and X. Sun, “High-capacity steganography using object addition-based cover enhancement for secure communication in networks,” IEEE Transactions on Network Science and Engineering, vol. 9, no. 2, pp. 848–862, 2022.

4. B. Wang, S. Jiawei and W. Wang, “Image copyright protection based on blockchain and zero-watermark,” IEEE Transactions on Network Science and Engineering, vol. 9, no. 4, pp. 2188–2199, 2022. https://doi.org/10.1109/TNSE.2022.3157867

5. R. Reininger and J. Gibson, “Distributions of the two-dimensional DCT coefficients for images,” IEEE Transactions on Communications, vol. 31, no. 6, pp. 835–839, 1983.

6. J. Lukáš and J. Fridrich, “Estimation of primary quantization matrix in double compressed JPEG images,” in Proc. Digital Forensic Research Workshop, Cleveland, Ohio, pp. 5–8, 2003.

7. T. Pevny and J. Fridrich, “Detection of double-compression in JPEG images for applications in steganography,” IEEE Transactions on Information Forensics and Security, vol. 3, no. 2, pp. 247–258, 2008. https://doi.org/10.1109/TIFS.2008.922456

8. A. C. Popescu and H. Farid, “Statistical tools for digital forensics,” in Information Hiding: 6th Int. Workshop, Toronto, Canada, pp. 128–147, 2004.

9. X. Feng and G. Doërr, “JPEG recompression detection,” in Media Forensics and Security II, San Jose, USA, vol. 7541, pp. 188–199, 2010.

10. D. Fu, Y. Q. Shi and W. Su, “A generalized Benford’s law for JPEG coefficients and its applications in image forensics,” in Security, Steganography, and Watermarking of Multimedia Contents IX, San Jose, USA, vol. 6505, pp. 574–584, 2007.

11. S. Newcomb, “Note on the frequency of use of the different digits in natural numbers,” American Journal of Mathematics, vol. 4, no. 1, pp. 39–40, 1881. https://doi.org/10.2307/2369148

12. B. Li, Y. Q. Shi and J. Huang, “Detecting doubly compressed JPEG images by using mode based first digit features,” in 2008 IEEE 10th Workshop on Multimedia Signal Processing, Cairns, Australia, pp. 730–735, 2008.

13. L. Dong, X. Kong and B. Wang, “Double compression detection based on Markov model of the first digits of DCT coefficients,” in 2011 Sixth Int. Conf. on Image and Graphics, Anhui, China, pp. 234–237, 2011.

14. C. Chen, Y. Q. Shi and W. Su, “A machine learning based scheme for double JPEG compression detection,” in 2008 19th Int. Conf. on Pattern Recognition, Tampa, Florida, pp. 1–4, 2008.

15. Q. Liu, A. H. Sung and M. Qiao, “A method to detect JPEG-based double compression,” in Advances in Neural Networks–ISNN 2011: 8th Int. Symp. on Neural Networks, ISNN 2011, Guilin, China, May 29–June 1, 2011, Proceedings, Part II 8, Berlin, Heidelberg, Springer, pp. 466–476, 2011.

16. S. Shang, Y. Zhao and R. Ni, “Double JPEG detection using high order statistic features,” in 2016 IEEE Int. Conf. on Digital Signal Processing (DSP), Beijing, China, pp. 550–554, 2016.

17. J. Li, W. Lu and J. Weng, “Double JPEG compression detection based on block statistics,” Multimedia Tools and Applications, vol. 77, no. 24, pp. 31895–31910, 2018. https://doi.org/10.1007/s11042-018-6175-2

18. A. Taimori, F. Razzazi, A. Behrad, A. Ahmadi and M. Babaie-Zadeh, “A part-level learning strategy for JPEG image recompression detection,” Multimedia Tools and Applications, vol. 80, no. 8, pp. 12235–12247, 2021. https://doi.org/10.1007/s11042-020-10200-4

19. J. He, Z. Lin and L. Wang, “Detecting doctored JPEG images via DCT coefficient analysis,” in Proc. Computer Vision-ECCV 2006: 9th European Conf. on Computer Vision, Graz, Austria, pp. 423–435, 2006.

20. F. Huang, J. Huang and Y. Q. Shi, “Detecting double JPEG compression with the same quantization matrix,” IEEE Transactions on Information Forensics and Security, vol. 5, no. 4, pp. 848–856, 2010. https://doi.org/10.1109/TIFS.2010.2072921

21. G. Schaefer and M. Stich, “UCID: An uncompressed color image database,” in Proc. Storage and Retrieval Methods and Applications for Multimedia 2004, San Jose, USA, vol. 5307, pp. 472–480, 2003.

22. Y. Niu, X. Li and Y. Zhao, “An enhanced approach for detecting double JPEG compression with the same quantization matrix,” Signal Processing: Image Communication, vol. 76, pp. 89–96, 2019.

23. S. Y. Lai and R. Böhme, “Block convergence in repeated transform coding: JPEG-100 forensics, carbon dating, and tamper detection,” in Proc. 2013 IEEE Int. Conf. on Acoustics, Speech and Signal Processing, British Columbia, Canada, pp. 3028–3032, 2013.

24. J. Yang, J. Xie, G. Zhu, S. Kwong and Y. Q. Shi, “An effective method for detecting double JPEG compression with the same quantization matrix,” IEEE Transactions on Information Forensics and Security, vol. 9, no. 11, pp. 1933–1942, 2014. https://doi.org/10.1109/TIFS.2014.2359368

25. J. Wang, H. Wang and J. Li, “Detecting double JPEG compressed color images with the same quantization matrix in spherical coordinates,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 30, no. 8, pp. 2736–2749, 2019. https://doi.org/10.1109/TCSVT.2019.2922309

26. A. U. Deshpande, A. N. Harish and S. Singh, “Neural network based block-level detection of same quality factor double JPEG compression,” in Proc. 2020 7th Int. Conf. on Signal Processing and Integrated Networks, Noida, India, pp. 828–833, 2020.

27. X. Huang, S. Wang and G. Liu, “Detecting double JPEG compression with same quantization matrix based on dense CNN feature,” in Proc. 2018 25th IEEE Int. Conf. on Image Processing (ICIP), Athens, Greece, pp. 3813–3817, 2018.

28. J. Bakas, S. Ramachandra and R. Naskar, “Double and triple compression-based forgery detection in JPEG images using deep convolutional neural network,” Journal of Electronic Imaging, vol. 29, no. 2, pp. 023006, 2020.

29. W. Luo, Z. Qu, J. Huang and G. Qiu, “A novel method for detecting cropped and recompressed image block,” in 2007 IEEE Int. Conf. on Acoustics, Speech and Signal Processing, Honolulu, Hawaii, pp. II-217, 2007.

30. Y. Chen and C. T. Hsu, “Image tampering detection by blocking periodicity analysis in JPEG compressed images,” in 2008 IEEE 10th Workshop on Multimedia Signal Processing, Cairns, Australia, pp. 803–808, 2008.

31. Y. Chen and C. T. Hsu, “Detecting recompression of JPEG images via periodicity analysis of compression artifacts for tampering detection,” IEEE Transactions on Information Forensics and Security, vol. 6, no. 2, pp. 396–406, 2011. https://doi.org/10.1109/TIFS.2011.2106121

32. W. Luo, Z. Qu, J. Huang and G. Qiu, “Detecting non-aligned double JPEG compression based on refined intensity difference and calibration,” in Digital-Forensics and Watermarking: 12th Int. Workshop, Auckland, New Zealand, pp. 169–179, 2014.

33. J. Wang, W. Huang and X. Luo, “Double JPEG compression detection based on Markov model,” in Digital Forensics and Watermarking, Melbourne, Australia, pp. 141–149, 2020.

34. J. Wang, W. Huang and X. Luo, “Non-aligned double JPEG compression detection based on refined Markov features in QDCT domain,” Journal of Real-Time Image Processing, vol. 17, no. 1, pp. 7–16, 2020. https://doi.org/10.1007/s11554-019-00929-z

35. J. Zhao, J. Guo and Y. Zhang, “Automatic detection and localization of image forgery regions based on offset estimation of double JPEG compression,” Journal of Image and Graphics, vol. 20, no. 10, pp. 1304–1312, 2015.

36. Z. Qu, W. Luo and J. Huang, “A convolutive mixing model for shifted double JPEG compression with application to passive image authentication,” in 2008 IEEE Int. Conf. on Acoustics, Speech and Signal Processing, Las Vegas, Nevada, pp. 1661–1664, 2008.

37. T. Bianchi and A. Piva, “Analysis of non-aligned double JPEG artifacts for the localization of image forgeries,” in 2011 IEEE Int. Workshop on Information Forensics and Security, Tenerife, Spain, pp. 1–6, 2011.

38. T. Bianchi and A. Piva, “Detection of nonaligned double JPEG compression based on integer periodicity maps,” IEEE Transactions on Information Forensics and Security, vol. 7, no. 2, pp. 842–848, 2012. https://doi.org/10.1109/TIFS.2011.2170836

39. A. B. Mandal and T. K. Das, “Anti-forensics of a NAD-JPEG detection scheme using estimation of DC coefficients,” in Information Systems Security: 15th International Conference, Hyderabad, India, pp. 307–323, 2019.

40. Q. Wang and R. Zhang, “Double JPEG compression forensics based on a convolutional neural network,” EURASIP Journal on Information Security, vol. 2016, no. 1, pp. 1–12, 2016.

41. W. Luo, Z. Qu, J. Huang and G. Qiu, “Aligned and non-aligned double JPEG detection using convolutional neural networks,” Journal of Visual Communication and Image Representation, vol. 49, pp. 153–163, 2017. https://doi.org/10.1016/j.jvcir.2017.09.003

42. B. Li, H. Zhang, H. Luo and S. Tan, “Detecting double JPEG compression and its related anti-forensic operations with CNN,” Multimedia Tools and Applications, vol. 78, no. 7, pp. 8577–8601, 2019. https://doi.org/10.1007/s11042-018-7073-3

43. V. Verma, N. Agarwal and N. Khanna, “DCT-domain deep convolutional neural networks for multiple JPEG compression classification,” Signal Processing: Image Communication, vol. 67, pp. 22–33, 2018.

44. B. Li, H. Luo and H. Zhang, “A multi-branch convolutional neural network for detecting double JPEG compression,” arXiv preprint arXiv:1710.05477v1, 2017.

45. X. Zeng, G. Feng and X. Zhang, “Detection of double JPEG compression using modified Dense Net model,” Multimedia Tools and Applications, vol. 78, no. 7, pp. 8183–8196, 2019. https://doi.org/10.1007/s11042-018-6737-3

46. N. Alipour and A. Behrad, “Semantic segmentation of JPEG blocks using a deep CNN for non-aligned JPEG forgery detection and localization,” Multimedia Tools and Applications, vol. 79, no. 11, pp. 8249–8265, 2020. https://doi.org/10.1007/s11042-019-08597-8

47. Y. Niu, B. Tondi and Y. Zhao, “Image splicing detection, localization and attribution via JPEG primary quantization matrix estimation and clustering,” arXiv preprint arXiv:2102.01439, 2021.

48. P. Sutthiwan and Y. Q. Shi, “Anti-forensics of double JPEG compression detection,” in Proc. of the Int. Workshop on Digital Watermarking, Berlin, Heidelberg, Springer, pp. 411–424, 2011.

49. H. Li, W. Luo and J. Huang, “Anti-forensics of double JPEG compression with the same quantization matrix,” Multimedia Tools and Applications, vol. 74, no. 17, pp. 6729–6744, 2015. https://doi.org/10.1007/s11042-014-1927-0

50. H. Wang, J. Wang and X. Luo, Modify the Quantization Table in the JPEG Header File for Forensics and Anti-forensics. Beijing, China: Springer International Publishing, pp. 72–86, 2022.

51. W. Fan, K. Wang and F. Cayre, “JPEG anti-forensics with improved tradeoff between forensic undetectability and image quality,” IEEE Transactions on Information Forensics and Security, vol. 9, no. 8, pp. 1211–1226, 2014. https://doi.org/10.1109/TIFS.2014.2317949

Copyright © 2022 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
