Open Access

REVIEW

Stochastic Fractal Search: A Decade Comprehensive Review on Its Theory, Variants, and Applications

1 Department of Mathematics, College of Science and Humanities in Al-Kharj, Prince Sattam bin Abdulaziz University, Al-Kharj, 11942, Saudi Arabia

2 Faculty of Science and Technology, Hassan II University of Casablanca, Mohammedia, 28806, Morocco

3 School of Engineering and Technology, Sunway University Malaysia, Petaling Jaya, 27500, Malaysia

4 Centre for Research Impact & Outcome, Chitkara University Institute of Engineering and Technology, Chitkara University, Rajpura, 140401, Punjab, India

5 Faculty of Engineering, Helwan University, Cairo, 11792, Egypt

6 Applied Science Research Center, Applied Science Private University, Amman, 11937, Jordan

* Corresponding Author: Anas Bouaouda. Email:

(This article belongs to the Special Issue: Swarm and Metaheuristic Optimization for Applied Engineering Application)

Computer Modeling in Engineering & Sciences 2025, 142(3), 2339-2404. https://doi.org/10.32604/cmes.2025.061028

Received 15 November 2024; Accepted 10 February 2025; Issue published 03 March 2025

Abstract

With rapid advances in science and technology, optimization theory and algorithms have become increasingly important. Many real-world problems can be formulated as optimization problems, and meta-heuristic algorithms have proven effective at solving them across diverse domains, such as machine learning, process control, and engineering design. The Stochastic Fractal Search (SFS) algorithm is one of the most popular meta-heuristic optimization methods, inspired by the fractal growth patterns of natural materials. Since its introduction by Hamid Salimi in 2015, SFS has garnered significant attention from researchers and has been applied to diverse optimization problems across multiple disciplines. Its popularity can be attributed to several factors, including its simplicity, practical computational efficiency, ease of implementation, rapid convergence, high effectiveness, and ability to address single- and multi-objective optimization problems, often outperforming other established algorithms. This review paper offers a comprehensive and detailed analysis of the SFS algorithm, covering its standard version, modifications, hybridization, and multi-objective implementations. The paper also examines several SFS applications across diverse domains, including power and energy systems, image processing, machine learning, wireless sensor networks, environmental modeling, economics and finance, and numerous engineering challenges. Furthermore, the paper critically evaluates the SFS algorithm’s performance, benchmarking its effectiveness against recently published meta-heuristic algorithms. In conclusion, the review highlights key findings and suggests potential directions for future developments and modifications of the SFS algorithm.

Nomenclature

| Abbreviation | Definition |
| --- | --- |
| ANN | Artificial Neural Network |
| CSFS | Chaotic Stochastic Fractal Search |
| DE | Differential Evolution |
| FDB | Fitness-Distance Balance |
| FS | Fractal Search |
| GA | Genetic Algorithm |
| GWO | Grey Wolf Optimizer |
| MAE | Mean Absolute Error |
| ORPD | Optimal Reactive Power Dispatch |
| PID | Proportional-Integral-Derivative |
| PSO | Particle Swarm Optimization |
| RESs | Renewable Energy Sources |
| RMSE | Root Mean Square Error |
| SFS | Stochastic Fractal Search |
| WOA | Whale Optimization Algorithm |
| WSN | Wireless Sensor Networks |

Optimization aims to determine the optimal values for decision variables that minimize or maximize objective functions while satisfying specific constraints. Numerous real-world optimization problems present significant challenges, such as high computational costs, nonlinear constraints, non-convex search spaces, dynamic or noisy objective functions, and extensive solution spaces [1]. These factors influence the choice between exact and approximate methods for addressing complex problems. While exact methods can accurately identify the global optimum, their computational time typically increases exponentially with the number of variables [2]. In contrast, stochastic optimization algorithms can effectively find near-optimal or optimal solutions within a practical timeframe. Heuristic and meta-heuristic methods are among the most effective approximate algorithms for solving complex optimization problems [3].

Heuristic algorithms are techniques employed to find near-optimal solutions efficiently. They generate feasible solutions within a predefined number of iterations, demonstrating adaptability to the problem being addressed. Heuristics typically employ a trial-and-error approach to explore potential solutions, aiming to do so within reasonable computational time and resource constraints. However, their inherent tendency towards local exploration can lead to convergence at local optima, which may prevent the attainment of the global optimum [3].

On the other hand, meta-heuristic algorithms were developed to address the limitations of heuristic algorithms. Each meta-heuristic employs distinct strategies to guide the search process, aiming for a comprehensive search space exploration to identify near-optimal solutions. These algorithms encompass diverse techniques, ranging from simple local exploration to sophisticated learning methods [4]. Meta-heuristics are often considered problem-independent as they do not rely on the specific characteristics of the problem being solved. Furthermore, their non-greedy approach enables a comprehensive search space exploration, potentially resulting in improved solutions that may sometimes align with global optima [5]. Consequently, meta-heuristic algorithms can uncover superior solutions for many optimization problems while avoiding local optima. These robust and adaptable algorithms tackle many challenges, including path planning, feature selection, wireless sensor networks, image processing, and neural network optimization [6].

Several vital attributes contribute to the appeal of meta-heuristic algorithms, such as simplicity, flexibility, black-box nature, and the capability to avoid local optima traps [6]. Unlike heuristic algorithms, meta-heuristics effectively manage the balance between exploration and exploitation, two essential components of the optimization process [7]. Exploration focuses on discovering solutions in unexplored regions of the search space, while exploitation aims to identify optimal solutions among the current candidate solutions. By employing iterative and efficient processes that effectively balance these components, meta-heuristic algorithms guide the search towards optimal or near-optimal solutions [7].

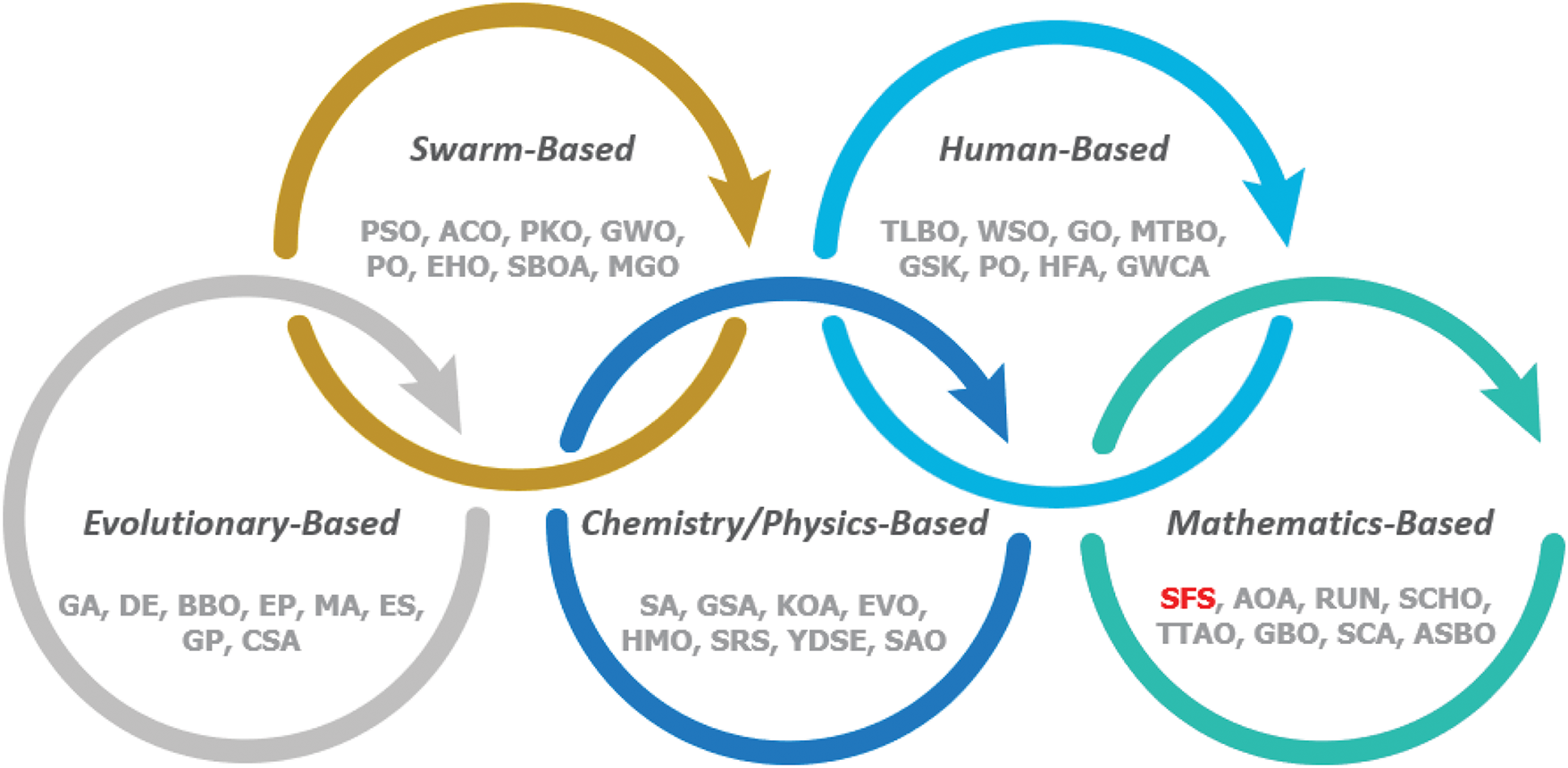

Numerous meta-heuristic algorithms have been developed over the past few decades [8]. Fig. 1 illustrates the five primary categories of meta-heuristic algorithms, which include evolutionary-based, swarm-based, human-inspired, chemistry/physics-based, and mathematics-based methods.

Figure 1: A brief classification of meta-heuristic techniques

The first class encompasses evolutionary techniques inspired by the biological principle of survival of the fittest [9]. The most well-known evolutionary algorithm is the Genetic Algorithm (GA), developed by Holland [10]. GA emulates Darwin’s theory of evolution by generating improved solutions through mating the fittest parents using the crossover mechanism, which enhances exploitation by producing offspring similar to the parents. On the other hand, the mutation operator helps maintain diversity by introducing new genetic material into the population, facilitating the exploration of different regions in the search space. Another notable evolutionary algorithm is Differential Evolution (DE) [11], introduced by Storn and Price. DE addresses optimization problems by refining candidate solutions through an effective evolutionary process that includes mutation, crossover, and selection. Other developed evolutionary algorithms, such as Biogeography Based Optimization (BBO) [12], Evolutionary Programming (EP) [13], Memetic Algorithm (MA) [14], Genetic Programming (GP) [15], Clonal Selection Algorithm (CSA) [16], Evolution Strategy (ES) [17], have demonstrated significant effectiveness in addressing several optimization challenges.
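The mutation, crossover, and selection steps of DE described above can be illustrated with a minimal sketch of one generation. It assumes the common DE/rand/1/bin variant; the values of `F` and `CR`, the function names, and the `sphere` test objective are illustrative choices, not settings taken from [11].

```python
import random


def sphere(x):
    """Toy objective (hypothetical test function): minimum 0 at the origin."""
    return sum(v * v for v in x)


def de_generation(pop, fitness, f, F=0.5, CR=0.9, rng=random):
    """One DE/rand/1/bin generation for minimization (illustrative sketch)."""
    n, dim = len(pop), len(pop[0])
    new_pop, new_fit = [], []
    for i in range(n):
        # Mutation: combine three distinct individuals other than i.
        a, b, c = rng.sample([k for k in range(n) if k != i], 3)
        # Binomial crossover: j_rand guarantees at least one mutated component.
        j_rand = rng.randrange(dim)
        trial = []
        for j in range(dim):
            if rng.random() < CR or j == j_rand:
                trial.append(pop[a][j] + F * (pop[b][j] - pop[c][j]))
            else:
                trial.append(pop[i][j])
        # Selection: the trial replaces its parent only if it is not worse.
        f_trial = f(trial)
        if f_trial <= fitness[i]:
            new_pop.append(trial)
            new_fit.append(f_trial)
        else:
            new_pop.append(pop[i])
            new_fit.append(fitness[i])
    return new_pop, new_fit
```

Because selection is greedy, no individual's fitness can get worse from one generation to the next, which is the property the accompanying check relies on.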

The second class comprises swarm intelligence techniques inspired by the collective behaviors observed in natural swarms, such as those of social animals. Group intelligence emerges from the interactions among simple individuals within the group, allowing the emergence of specific intelligent traits without centralized control [9]. A prominent example is Particle Swarm Optimization (PSO) [18], which simulates the foraging behavior of birds to guide the search process. PSO effectively utilizes individual and collective information during the search, typically achieving optimal solutions through collaboration and information sharing among individuals within the population. Another noteworthy algorithm is Ant Colony Optimization (ACO) [19], which mimics how ants find the shortest paths while foraging. In ACO, ants deposit pheromones as they traverse paths, leading to a positive feedback mechanism where more ants gradually favor shorter routes over time. This process allows the ant colony to determine the shortest paths. Other significant swarm-based algorithms include the Pied Kingfisher Optimizer (PKO) [20], Grey Wolf Optimizer (GWO) [21], Puma Optimizer (PO) [22], Elk Herd Optimizer (EHO) [23], Secretary Bird Optimization Algorithm (SBOA) [24], Mountain Gazelle Optimizer (MGO) [25], White Shark Optimizer (WSO) [26], and others.

The third class encompasses chemistry/physics-based algorithms replicating physical or chemical phenomena. These algorithms simulate the interactions among search agents based on fundamental principles from physics or chemistry [9]. The most popular physics-based approach is Simulated Annealing (SA) [27], inspired by the annealing process of metals. In SA, the metal is initially heated to a molten state and then gradually cooled, allowing it to form an optimal crystal structure. Another notable algorithm is the Gravitational Search Algorithm (GSA) [28], which models Newton’s laws of gravity by treating search agents as a group of masses that interact according to the laws of gravity and motion, facilitating the search for an optimal solution. Several other chemistry/physics-based algorithms have been introduced, including Kepler Optimization Algorithm (KOA) [29], Energy Valley Optimizer (EVO) [30], Homonuclear Molecules Optimization (HMO) [31], Special Relativity Search (SRS) [32], Young’s Double-Slit Experiment (YDSE) optimizer [33], Snow Ablation Optimizer (SAO) [34], Artificial Chemical Reaction Optimization Algorithm (ACROA) [35], Chemical Reaction Optimization (CRO) [36], and so on.

The fourth class includes human-inspired techniques inspired by human societal activities [9]. An example is Teaching-Learning-Based Optimization (TLBO) [37], which draws inspiration from the interaction between teachers and learners. TLBO mimics the traditional classroom teaching method, encompassing teacher and learner phases. In the teacher phase, each student learns from the highest-performing individuals, while in the learner phase, students acquire knowledge randomly from their peers. Other notable examples of human-inspired algorithms include War Strategy Optimization (WSO) [38], Growth Optimizer (GO) [39], Mountaineering Team-Based Optimization (MTBO) [40], Gaining Sharing Knowledge based algorithm (GSK) [41], Political Optimizer (PO) [42], Human Felicity Algorithm (HFA) [43], Great Wall Construction Algorithm (GWCA) [44], Coronavirus herd immunity optimizer (CHIO) [45], and more.

Finally, mathematics-based algorithms represent a contemporary category of meta-heuristic algorithms that do not imitate specific phenomena or behaviors but instead rely on mathematical formulations. A well-known example is the Sine Cosine Algorithm (SCA) [46], inspired by the properties of the transcendental functions sine and cosine. Another notable algorithm, the Arithmetic Optimization Algorithm (AOA) [47], utilizes the distribution behavior of the four basic arithmetic operators. Recently developed mathematics-based algorithms include the Average and Subtraction-Based Optimizer (ASBO) [48], RUNge Kutta optimizer (RUN) [49], Geometric Mean Optimizer (GMO) [50], Sinh Cosh Optimizer (SCHO) [51], Triangulation Topology Aggregation Optimizer (TTAO) [52], and Gradient-Based Optimizer (GBO) [53], among others. Although mathematics-based algorithms are less prevalent than the previously mentioned categories, they should not be overlooked. They offer a different perspective for developing effective search strategies, distinguishing themselves from conventional metaphor-based approaches through their mathematical formulations.

The Stochastic Fractal Search (SFS) algorithm is an example of a robust mathematics-based meta-heuristic method developed by Salimi [54] in 2015 based on the mathematical concept of fractals. SFS leverages the diffusion properties observed in random fractals, enabling its particles to navigate the search space more effectively. The SFS algorithm process involves two main stages: diffusion and updating [54]. Each particle disperses from its initial position during diffusion, promoting local exploitation. In the updating stage, SFS utilizes statistical techniques to refine the position of each agent, enabling the exploration of more promising areas within the search space. This dynamic allows for efficient information sharing among particles, facilitating rapid convergence towards optimal solutions [54].

The SFS algorithm has effectively addressed many complex optimization problems, including engineering design, machine learning, deep learning, energy optimization, feature selection, and image segmentation. The exceptional performance of SFS has garnered significant attention from researchers, leading to the development of numerous advanced variants. These variants can effectively tackle single- and multi-objective problems across discrete and continuous search spaces, addressing challenges associated with unknown and potentially complex landscapes. Despite the sustained interest and ongoing research in SFS, a comprehensive literature review is still lacking. We believe that such a review is crucial for several reasons. First, it would offer researchers a clear and concise summary of the latest advancements in SFS. Second, it would provide valuable insights and inspiration for exploring future research directions in this domain.

Therefore, this paper provides a comprehensive review of the existing literature on the SFS algorithm, aiming to identify the most commonly studied problems, significant modifications to its framework, and prevalent hybridization techniques. Our objective is to assess the current state of SFS research, highlighting its strengths and weaknesses across diverse problem variants while offering an extensive overview of its applications. In summary, the primary contributions of this review paper are as follows:

• A detailed overview of SFS, including its mathematical foundations and research trends;

• An examination of all SFS variants to demonstrate the algorithm’s adaptability across several contexts;

• A presentation of SFS applications in diverse optimization scenarios, as well as its integration with other optimization algorithms;

• A discussion of the challenges associated with the SFS algorithm, aiding researchers in recognizing key issues;

• A roadmap of future research directions aimed at guiding subsequent studies toward critical gaps and emerging trends.

The subsequent sections of the paper are organized as follows: Section 2 provides a concise overview of the classical SFS algorithm and its core concepts. Section 3 explores the evolution of the SFS algorithm and its spread in the literature, highlighting the volume of relevant papers and citations published over the past decade. Section 4 focuses on analyzing and evaluating diverse SFS variants. Section 5 presents a selection of studies that apply the SFS algorithm across diverse domains. Section 6 introduces open-source software and online resources related to SFS. Section 7 offers a comparative performance analysis of SFS against the latest published meta-heuristic algorithms using the CEC’2022 benchmark test. Section 8 identifies ongoing challenges and potential future research directions for users of the SFS algorithm. Finally, Section 9 concludes the review paper by summarizing the study and its key findings.

2 Fundamentals of the SFS Algorithm

This section discusses the origins, core theoretical foundations, fundamental structure, shared characteristics, computational complexity, advantages, and limitations of the SFS algorithm.

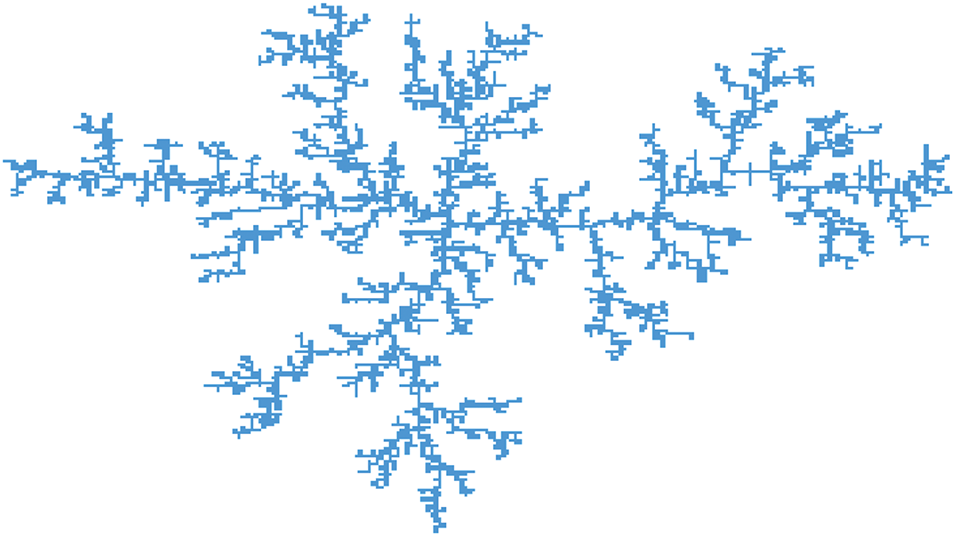

The SFS algorithm is a mathematics-based meta-heuristic algorithm inspired by the fractal growth patterns observed in natural materials, introduced by Salimi in 2015 [54]. Unlike many existing meta-heuristic algorithms, such as ACO and PSO, which rely on the collective behavior of organisms, SFS bases its optimization principle on the mathematical concept of fractals. Fractals are complex geometric shapes exhibiting self-similarity across different scales, a concept first introduced by Benoît Mandelbrot in 1975 [55]. Common methods for generating fractal shapes include Random fractals [56], Finite subdivision rules [57], L-systems [58], Strange attractors [59], and Iterated function systems [60]. Among the random-fractal approaches, Diffusion Limited Aggregation (DLA) [61] is a widely used method. Salimi was the first to apply DLA to the Fractal Search (FS) algorithm by modeling DLA growth using Lévy flights and Gaussian walks [54]. Fig. 2 illustrates a random fractal generated using the DLA method.

Figure 2: Diffusion-limited aggregation fractal

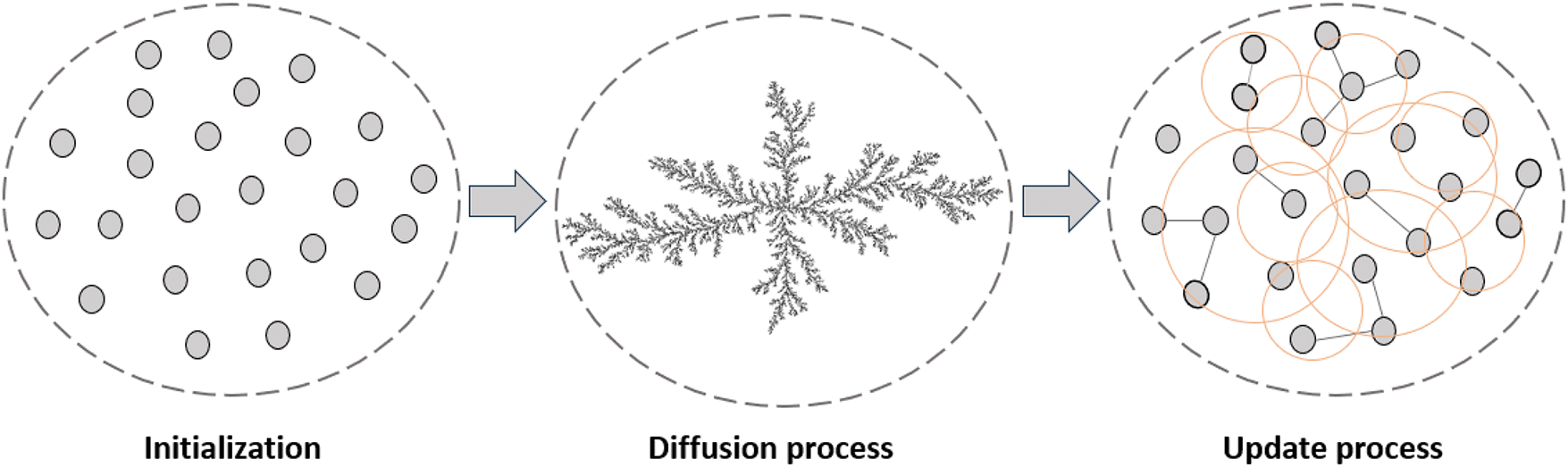

The SFS algorithm comprises two primary processes: diffusion and updating [54]. Inspired by the FS algorithm, the diffusion process aims to intensify or exploit the search space by allowing each particle to diffuse around its current position. This characteristic is crucial for enhancing the likelihood of locating the global optimum while minimizing the risk of being trapped in local optima. Unlike the basic FS algorithm, the diffusion process in SFS remains static, preventing a significant increase in active particles [54]. Only the best-generated particle during the diffusion phase is retained, while the others are discarded. In contrast, the updating process serves as an exploration phase, during which each particle modifies its position based on the locations of other chosen particles within the group [54].

The mathematical model of the SFS algorithm comprises three main procedures: initialization, diffusion, and updating, as illustrated in Fig. 3. Initially, a group of particles is generated, each assigned a predetermined level of electrical potential energy. The diffusion process focuses on exploiting the search within promising areas, while the updating process prioritizes exploring new regions of the solution space. These procedures are mathematically defined as follows:

Figure 3: Main processes of the SFS algorithm

Like other optimization algorithms, the SFS begins by initializing a set of particles representing a collection of initial solutions. The population size, denoted as N, defines the number of particles in the system. Each particle is described as a

where

During this process, each particle diffuses around its current location to exploit the search space, increasing the likelihood of discovering better solutions and avoiding local minima. This is achieved by generating new points using the Gaussian walk, which is mathematically represented by the following equations [54]:

where

where

This process involves two statistical procedures designed to explore the search space effectively. In the initial procedure, all points are ranked based on their fitness values, and a probability value,

where

where

where

2.3 Framework of the SFS Algorithm

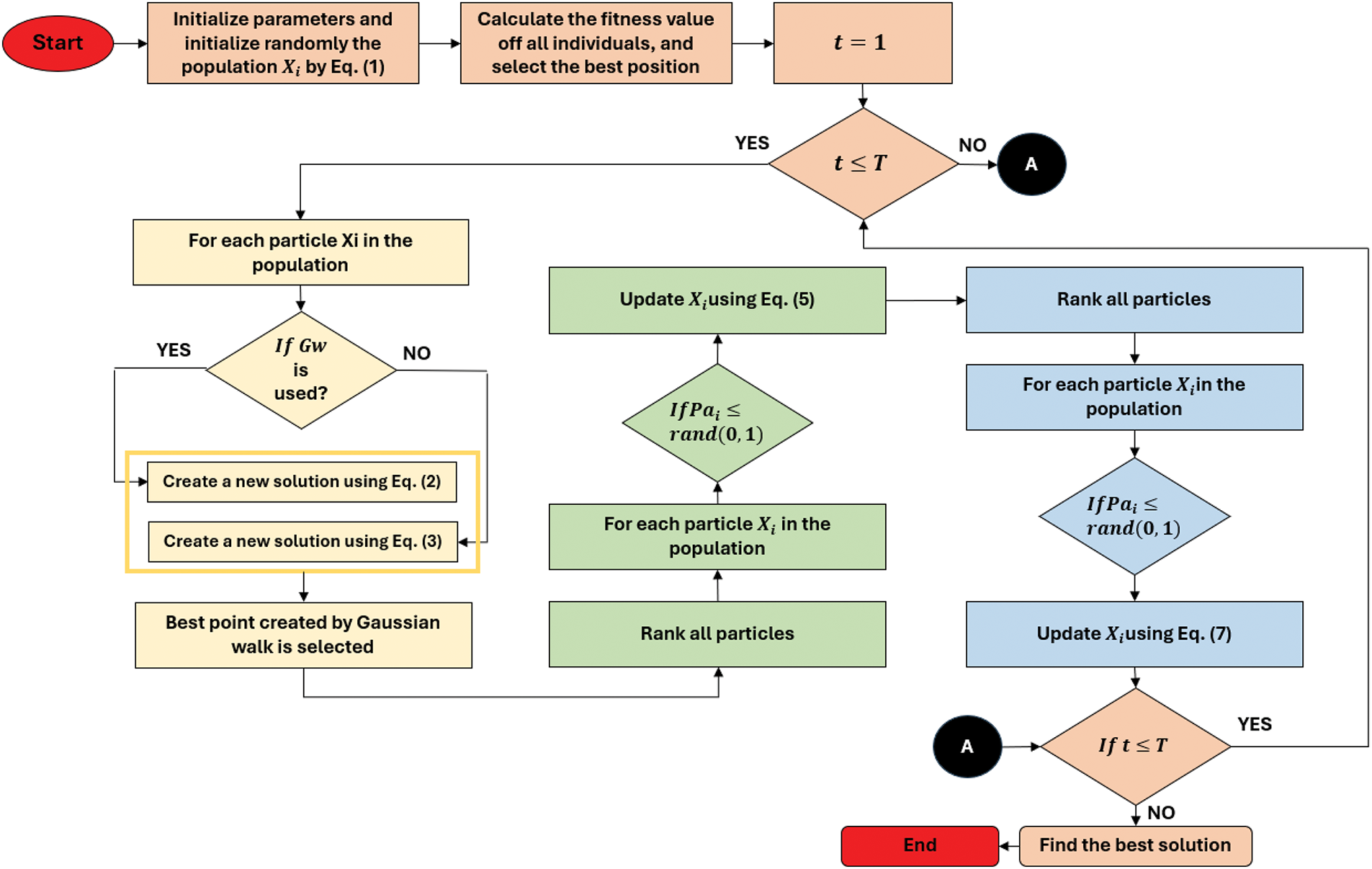

Fig. 4 illustrates the flowchart of SFS, which comprises four distinct stages: initialization, diffusion, and two update processes. Each stage plays a critical role in the overall search process. The initialization phase randomly establishes the initial population, while the diffusion process focuses on local exploitation. In contrast, the two update processes prioritize global exploration, enhancing solution accuracy and robustness. The steps for implementing the SFS are detailed as follows:

Figure 4: Flowchart of the SFS algorithm

Step 1: Define the relevant parameters and initialize the population using Eq. (1).

Step 2: Compute and evaluate the fitness values of all individuals in the population, then record the optimal individual.

Step 3: Each individual disperses around its current position. Eqs. (2) and (3) represent the diffusion equations.

Step 4: Determine the updated probability for all individuals in the population using Eq. (6). If

Step 5: The updated probability for the new individual is recalculated using Eq. (6). Likewise, if

Step 6: Continue repeating steps 2 to 5 until the loop’s termination condition is met.
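The steps above can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: it follows the commonly cited formulation of SFS from [54] (Gaussian walks with a standard deviation scaled by log(g)/g, rank-based probabilities Pa_i = rank(P_i)/N, and two update procedures). The greedy acceptance rule, the `walk` parameter that selects between the two Gaussian walks, the default parameter values, and all function names are assumptions introduced for this example.

```python
import math
import random


def sphere(x):
    """Toy objective (hypothetical test function): minimum 0 at the origin."""
    return sum(v * v for v in x)


def rank_probabilities(fitness):
    """Pa_i = rank(P_i) / N, with the best (lowest-fitness) point ranked N."""
    n = len(fitness)
    worst_first = sorted(range(n), key=lambda i: fitness[i], reverse=True)
    pa = [0.0] * n
    for rank, i in enumerate(worst_first, start=1):
        pa[i] = rank / n
    return pa


def sfs_minimize(f, dim, lb, ub, n=20, mdn=1, iters=200, walk=1.0, seed=0):
    rng = random.Random(seed)

    def clip(x):
        return [min(max(v, lb), ub) for v in x]

    # Steps 1-2: uniform random initialization and first evaluation.
    pop = [[lb + rng.random() * (ub - lb) for _ in range(dim)] for _ in range(n)]
    fit = [f(p) for p in pop]
    b = min(range(n), key=lambda i: fit[i])
    best, best_fit = pop[b][:], fit[b]

    def try_replace(i, cand):
        # Greedy acceptance (assumption): keep a candidate only if it improves.
        nonlocal best, best_fit
        cand = clip(cand)
        fc = f(cand)
        if fc < fit[i]:
            pop[i], fit[i] = cand, fc
        if fc < best_fit:
            best, best_fit = cand[:], fc

    for g in range(1, iters + 1):
        shrink = math.log(g) / g  # step-size factor that decays over generations

        # Step 3: diffusion (exploitation) through Gaussian walks.
        for i in range(n):
            for _ in range(mdn):
                e1, e2 = rng.random(), rng.random()
                use_gw1 = rng.random() < walk
                cand = []
                for j in range(dim):
                    sigma = abs(shrink * (pop[i][j] - best[j]))
                    if use_gw1:  # walk centred on the best point
                        cand.append(rng.gauss(best[j], sigma)
                                    + e1 * best[j] - e2 * pop[i][j])
                    else:        # walk centred on the point itself
                        cand.append(rng.gauss(pop[i][j], sigma))
                try_replace(i, cand)

        # Step 4: first update, component-wise; poorly ranked points change more.
        pa = rank_probabilities(fit)
        snap = [p[:] for p in pop]
        for i in range(n):
            cand = snap[i][:]
            for j in range(dim):
                if pa[i] < rng.random():
                    r, t = rng.randrange(n), rng.randrange(n)
                    cand[j] = snap[r][j] - rng.random() * (snap[t][j] - snap[i][j])
            try_replace(i, cand)

        # Step 5: second update, moving whole points relative to the best or peers.
        pa = rank_probabilities(fit)
        snap = [p[:] for p in pop]
        for i in range(n):
            if pa[i] < rng.random():
                r, t = rng.randrange(n), rng.randrange(n)
                e = rng.random()
                if rng.random() <= 0.5:
                    cand = [snap[i][j] - e * (snap[t][j] - best[j]) for j in range(dim)]
                else:
                    cand = [snap[i][j] + e * (snap[t][j] - snap[r][j]) for j in range(dim)]
                try_replace(i, cand)

    return best, best_fit
```

A call such as `sfs_minimize(sphere, dim=5, lb=-10.0, ub=10.0)` returns the best point found and its objective value. Note that the best-ranked point has Pa = 1 and is therefore never altered by either update procedure, so the incumbent solution is preserved between generations.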

Understanding algorithmic complexity is crucial for researchers to demonstrate an algorithm’s practicality and effectiveness. While some algorithms may achieve minimal error rates, extended computation times limit their applicability. High computational complexity often diminishes an algorithm’s appeal, impacting its usability and efficiency. Therefore, an algorithm’s effectiveness is not solely defined by error or convergence metrics; convergence speed and computational efficiency are equally vital considerations. In the SFS algorithm, computational complexity is primarily influenced by the initialization phase, the diffusion process, and the first and second update stages. The total computational complexity of SFS can be represented as follows:

where T denotes the maximum number of generations, D represents the dimensionality of the problem, N denotes the population size, and MDN represents the maximum diffusion number.

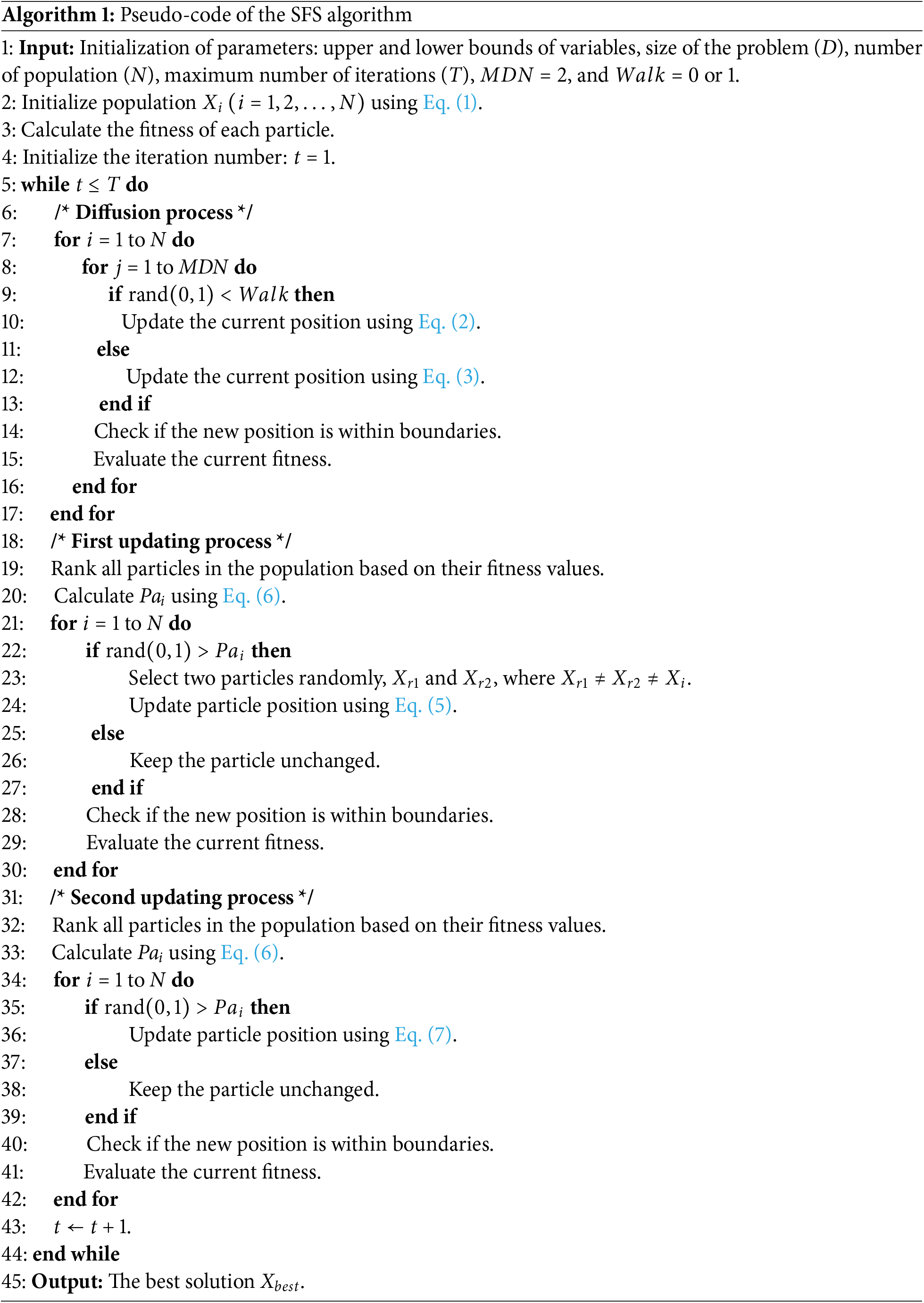

Algorithm 1 gives the pseudo-code of the SFS algorithm.

The effectiveness of meta-heuristic algorithms in addressing optimization challenges is significantly influenced by the strategies employed for generating new solutions and the associated parameter settings. An adaptive approach to parameter tuning is crucial for optimizing algorithm performance, especially when dealing with complex problems characterized by non-separability, poor conditioning, multimodality, or high dimensionality. While the SFS algorithm reduces the number of parameters inherent in basic FS, it still relies on fixed parameter values throughout generations. This reliance on static values can limit the algorithm’s adaptability, as optimal parameter settings can vary significantly across different problem types [62]. Furthermore, knowledge sharing between stages within the SFS algorithm remains limited. An adaptive scheme, leveraging optimal values from high-performing individuals, could enhance the generation of new solutions and improve the algorithm’s overall performance [63].

Additionally, the update process facilitates exploration, while the diffusion process focuses on exploitation. Achieving an optimal balance between these two stages in terms of function evaluations remains challenging. Specifically, the diffusion phase requires

3 The Popularity and Growth of the SFS Algorithm in the Literature

The SFS algorithm has garnered considerable attention in recent years due to its simplicity and versatility. This growing interest is reflected in a noticeable increase in published research and citations related to the SFS algorithm. To explore this trend, we conducted a comprehensive literature review using the Scopus database. Scopus offers advanced search capabilities, including regular expressions and complex query design, allowing for precise searches based on authors, article titles, and publication dates. The following criteria guided the search in the Scopus database:

• The search query was “Stochastic Fractal Search”.

• Only papers published in English were considered for inclusion.

• The timeframe for the search was restricted to articles published between 2015 and 2024.

• The data were collected on 01 October 2024.

In summary, the comprehensive search query executed in the Scopus database is as follows: (TITLE-ABS-KEY(“Stochastic Fractal Search”) AND PUBYEAR > 2014 AND PUBYEAR < 2025 AND (LIMIT-TO (LANGUAGE,“English”))). The results of this search are discussed below:

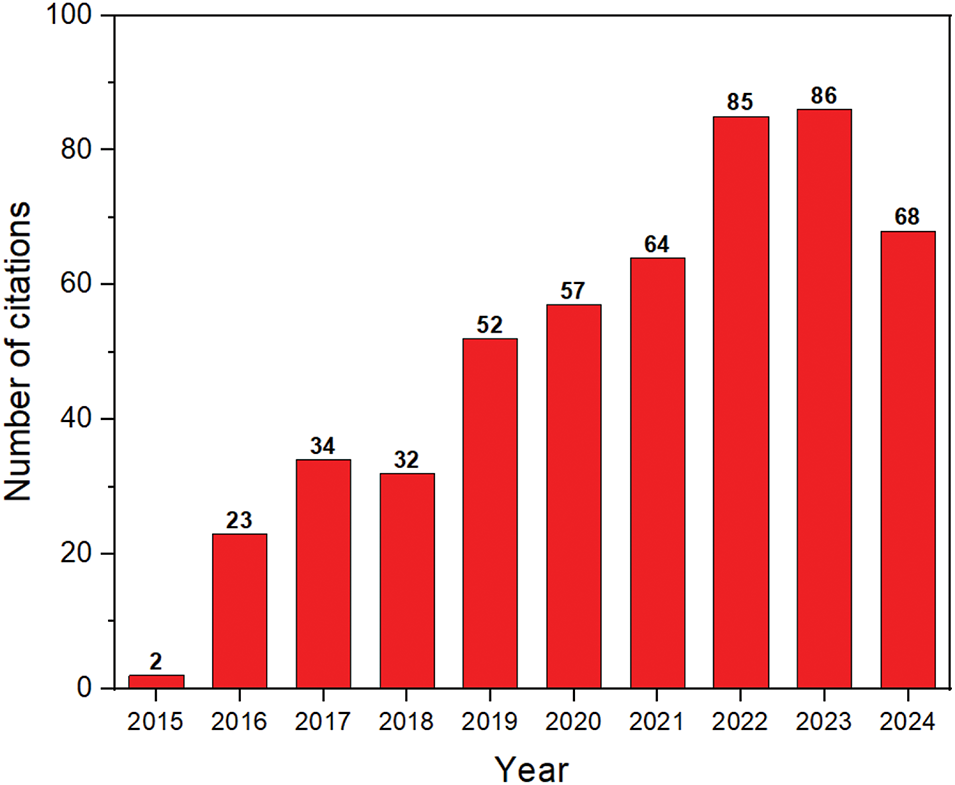

Based on statistical analysis of data from the Scopus database, Fig. 5 illustrates the number of citations per year of the foundational SFS paper from 2015 to 2024. The annual citation count started at 2 in 2015 and rose to 23 in 2016, followed by continued growth in subsequent years. By 2019, the annual count had reached 52, reflecting the growing recognition and application of the SFS algorithm across numerous domains. The peak was observed in 2023, with 86 citations, highlighting the algorithm’s significant impact on research. While the citation count for 2024 is projected to exceed 68, the overall trend indicates sustained interest and ongoing research into the algorithm’s applications.

Figure 5: Number of citations of SFS algorithm per year

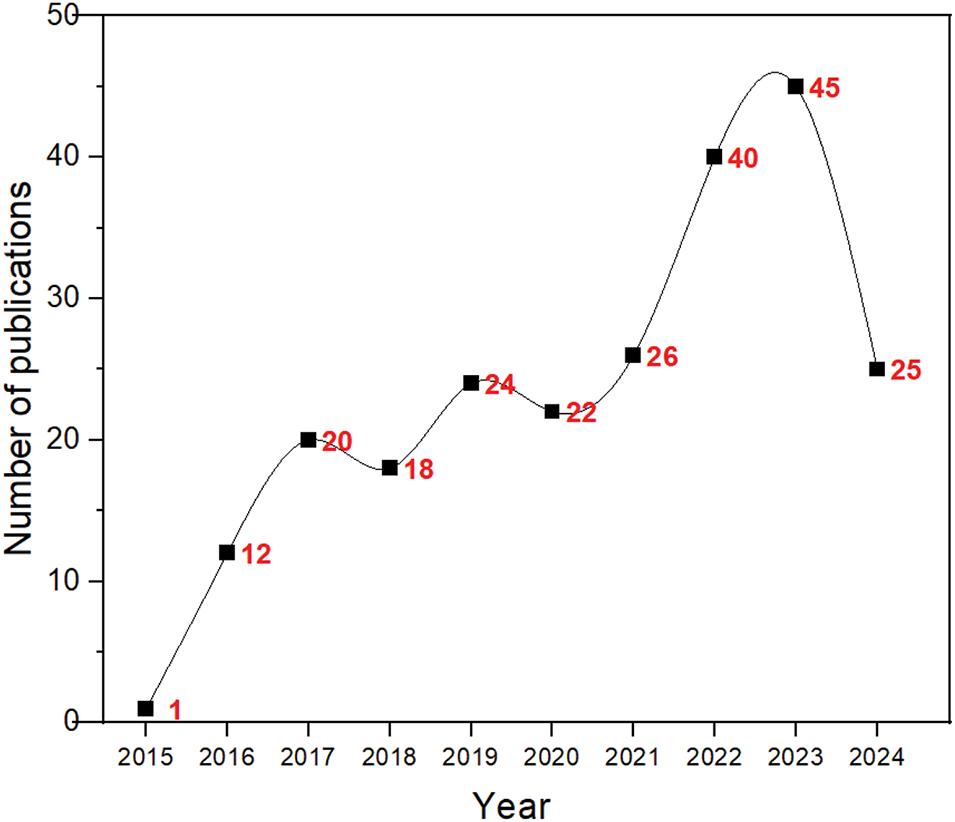

Fig. 6 illustrates the significant increase in publications related to the SFS algorithm from 2015 to 2024. Starting with only one publication in 2015, the number of SFS-related publications rose to 12 in 2016 and doubled to 24 by 2019, reflecting growing acceptance within the scientific community. In subsequent years, the SFS algorithm continued to gain momentum, reaching 40 publications in 2022 and peaking at 45 by the end of 2023. This growth underscores the algorithm’s applicability across diverse fields. The projected publication count for 2024 remains strong at 25, indicating sustained interest and ongoing research into the SFS algorithm.

Figure 6: Number of SFS algorithm publications per year

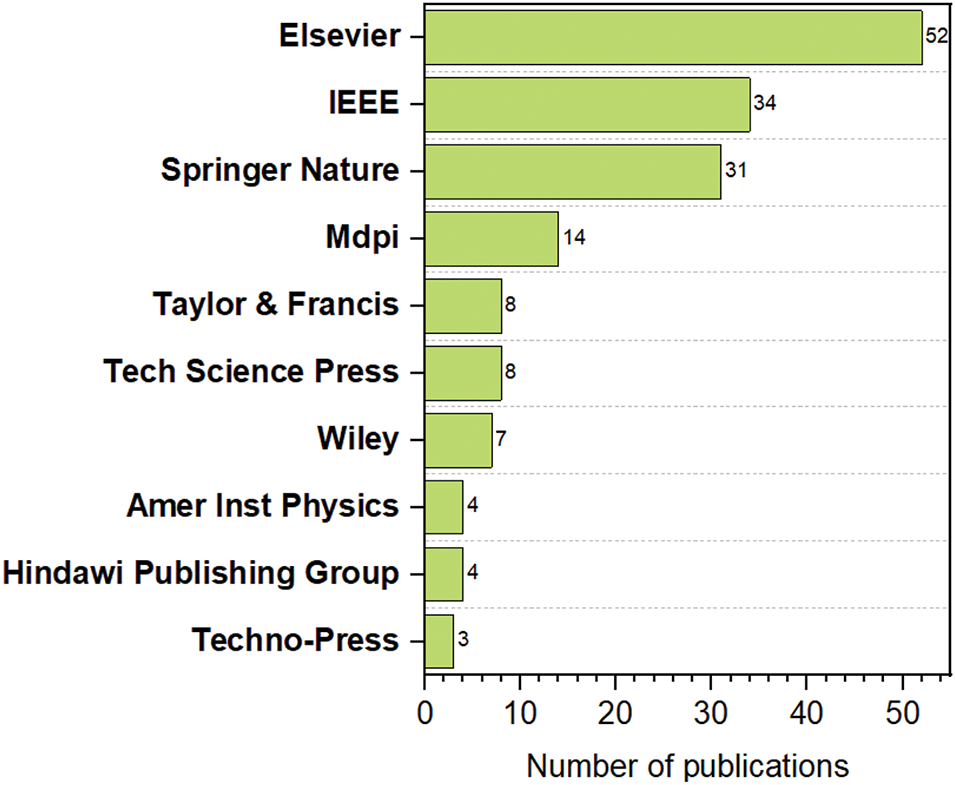

Fig. 7 shows the leading publishers of studies on the SFS algorithm. Elsevier leads the list with 52 published articles, followed by IEEE with 34 and Springer with 31. MDPI has contributed 14 papers, while the remaining publications are distributed among other publishers, as depicted in Fig. 7. The prominent presence of SFS research in reputable publishing outlets such as Elsevier, IEEE, and Springer underscores the algorithm’s strong theoretical foundation and notable attributes.

Figure 7: Number of SFS algorithm publications per publisher

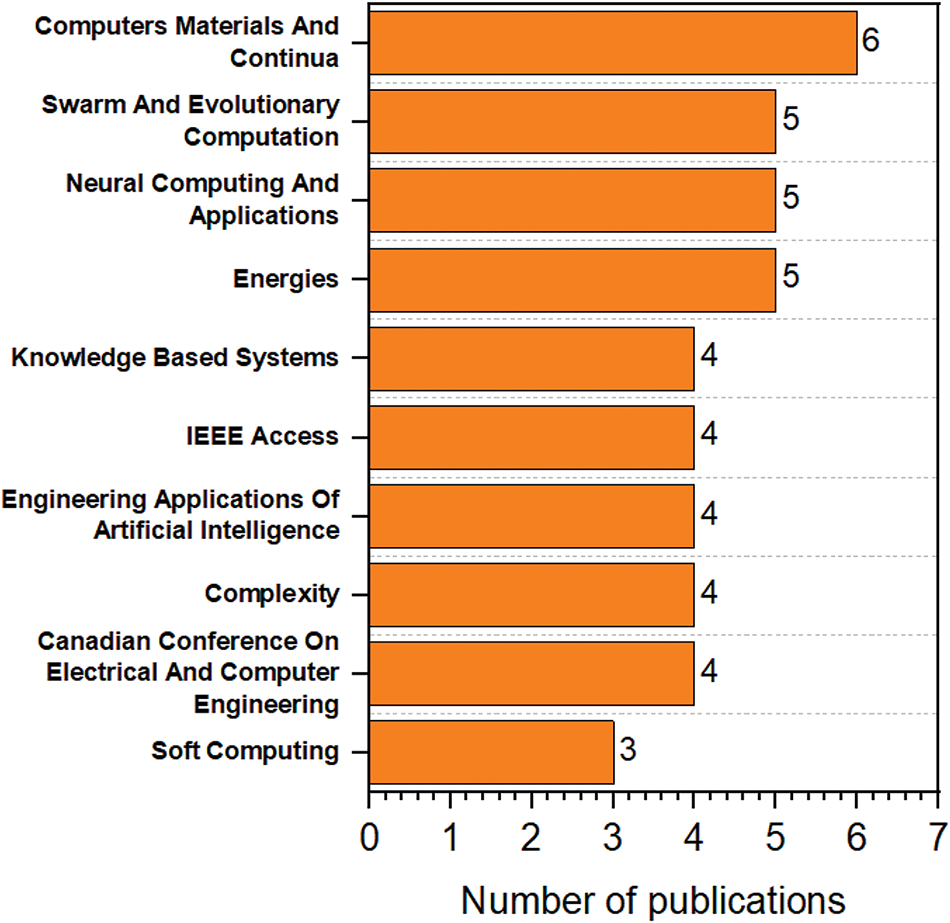

Among publication venues, the “Computers Materials and Continua” journal has published the most SFS-related articles, totaling six publications. Meanwhile, the “Swarm and Evolutionary Computation”, “Neural Computing and Applications”, and “Energies” journals each have five articles, as depicted in Fig. 8. Additionally, “Knowledge Based Systems”, “IEEE Access”, “Engineering Applications of Artificial Intelligence”, “Complexity”, and the “Canadian Conference on Electrical and Computer Engineering” have each contributed four articles focused on applying the SFS algorithm to numerous optimization problems. On the other hand, the “Soft Computing” journal has published three articles.

Figure 8: Number of SFS algorithm publications per journal

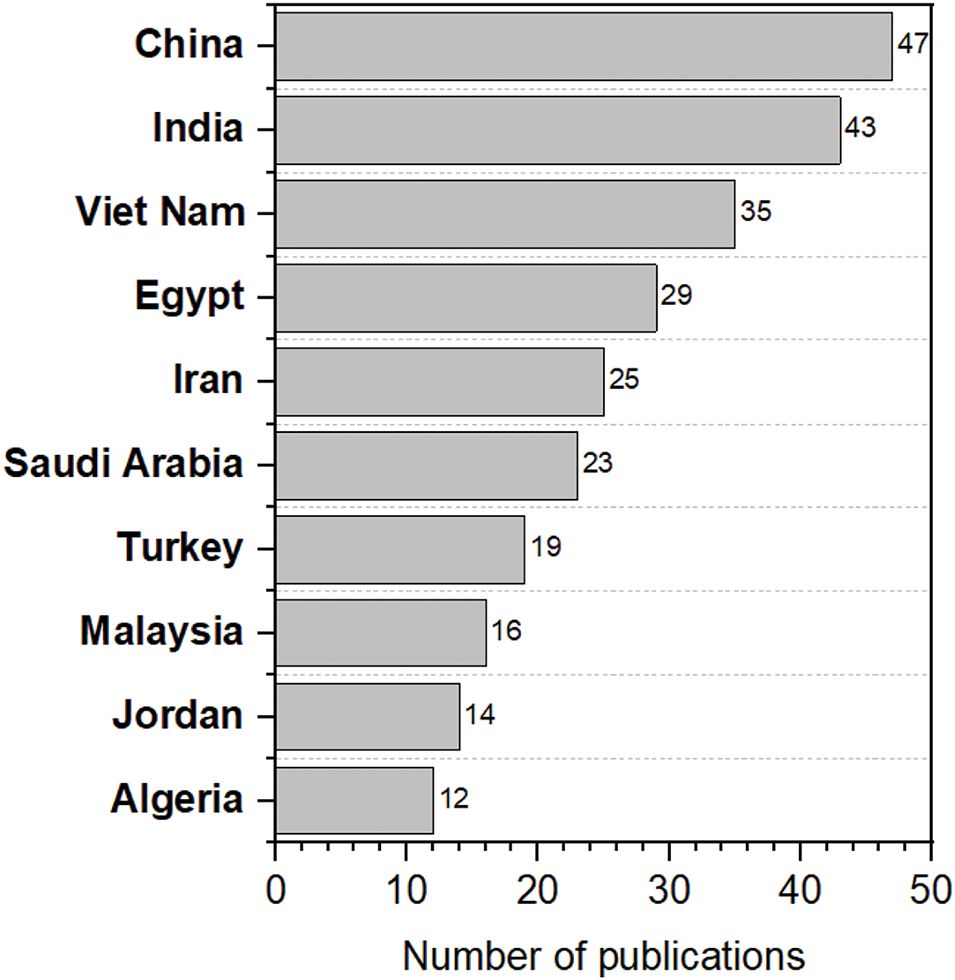

Chinese researchers are the most prolific contributors to the SFS algorithm literature, with 47 published articles. They are closely followed by Indian researchers with 43 publications and Vietnamese researchers with 35 articles. Egyptian researchers have published 29 articles, while Iranian and Saudi Arabian researchers have 25 and 23 publications, respectively. Furthermore, Turkish, Malaysian, and Jordanian researchers have published 19, 16, and 14 articles, respectively, with Algerian researchers contributing 12, as illustrated in Fig. 9.

Figure 9: Number of SFS algorithm publications per country

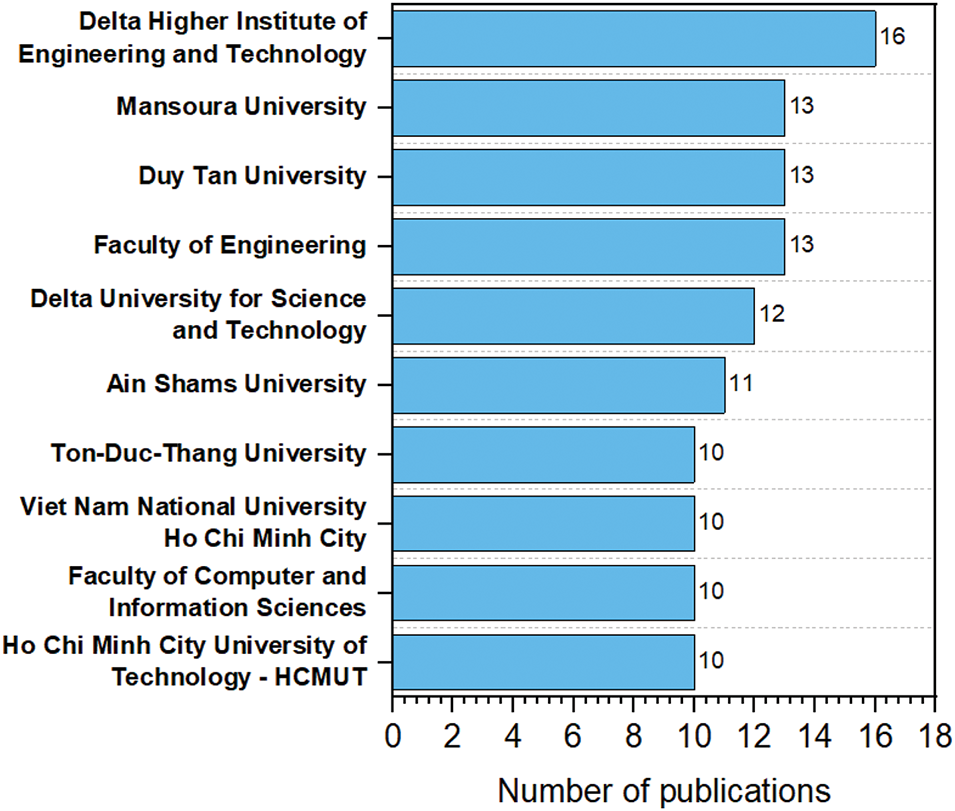

Regarding SFS publications by affiliation, researchers from the “Delta Higher Institute of Engineering and Technology” have published over 16 studies related to the SFS algorithm, as illustrated in Fig. 10. Researchers from “Mansoura University,” “Duy Tan University,” and the “Faculty of Engineering” have each published more than 13 research papers on SFS. Researchers from “Delta University for Science and Technology” and “Ain Shams University” have contributed 12 and 11 SFS-related studies, respectively. In contrast, the remaining affiliations listed in Fig. 10 have each published ten or fewer articles.

Figure 10: Number of SFS algorithm publications per affiliation

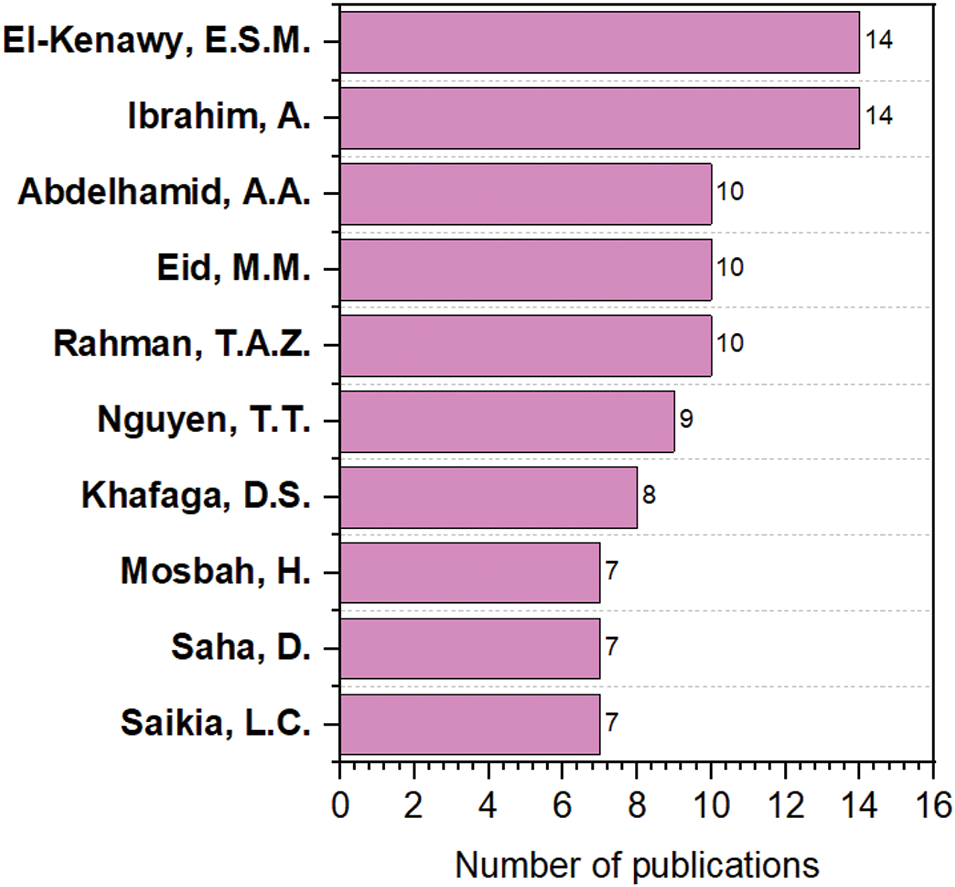

Fig. 11 presents the top 10 most prolific researchers applying the SFS algorithm and its variants to optimization problems. “Ibrahim, A.” and “El-Kenawy, E.S.M.” are the leading contributors, each with 14 publications. They are followed by “Eid, M.M.”, “Abdelhamid, A.A.”, and “Rahman, T.A.Z.”, each of whom has contributed 10 articles. The remaining authors listed in the figure have published nine or fewer articles.

Figure 11: Number of SFS algorithm publications per author

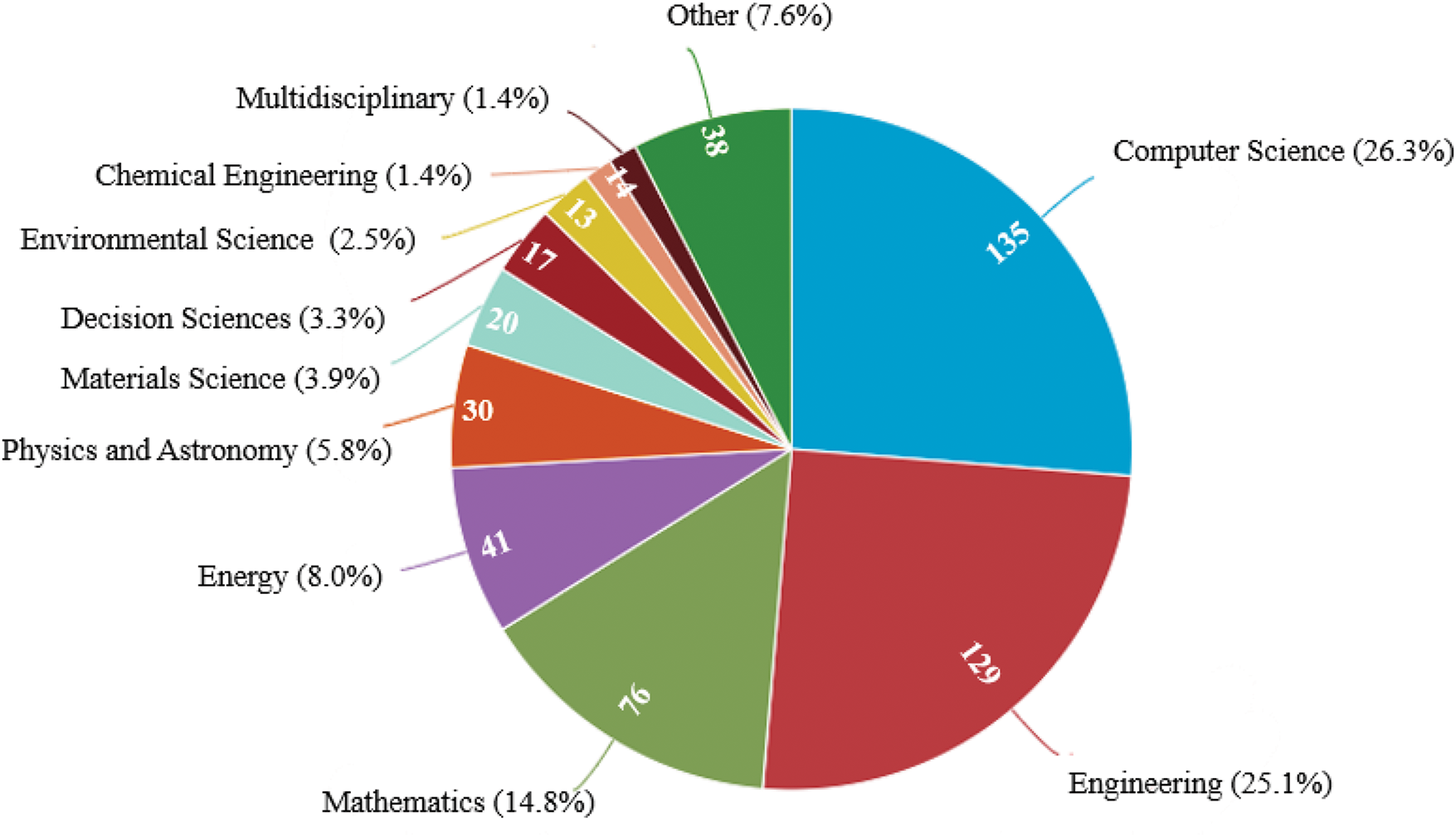

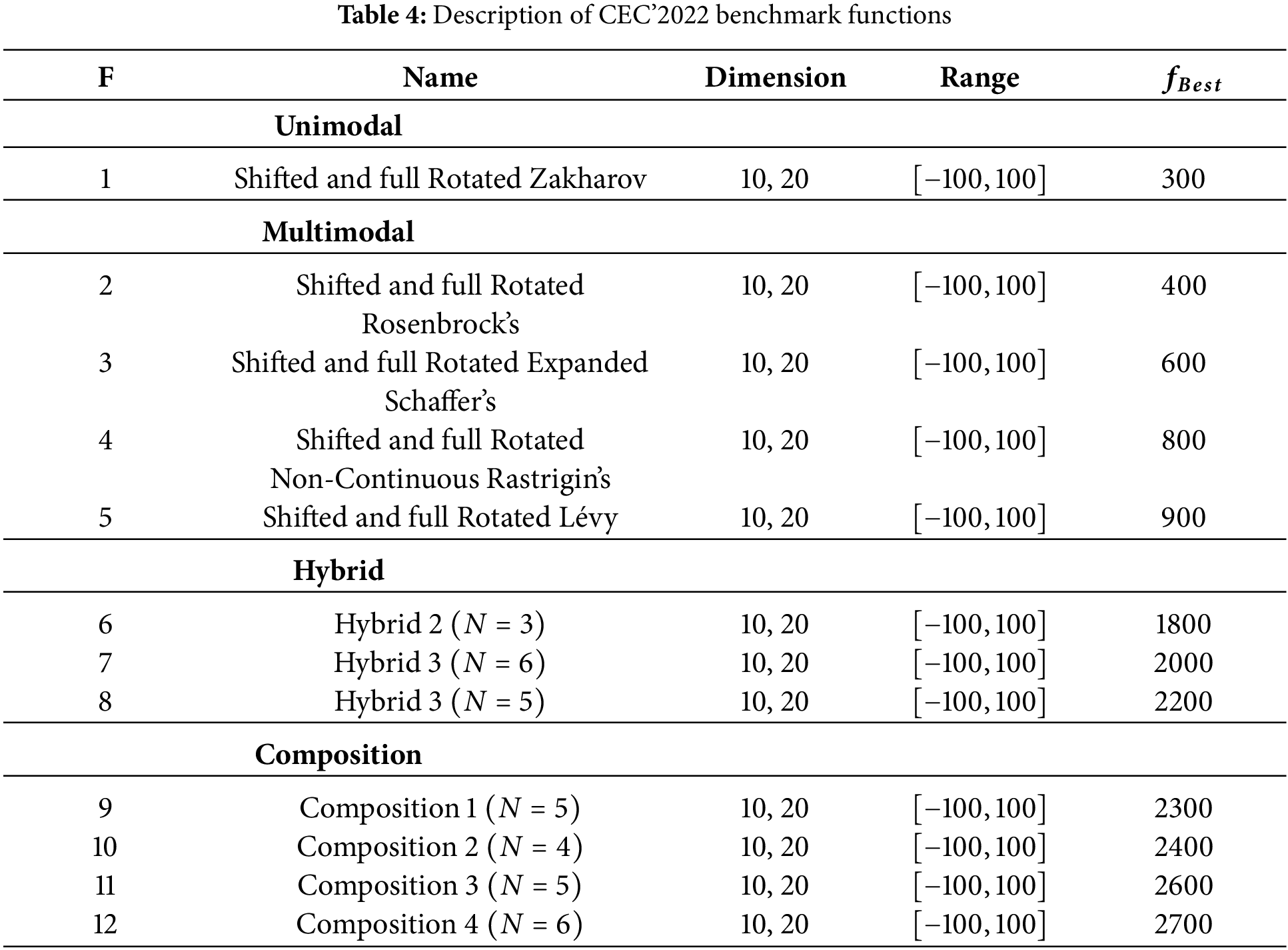

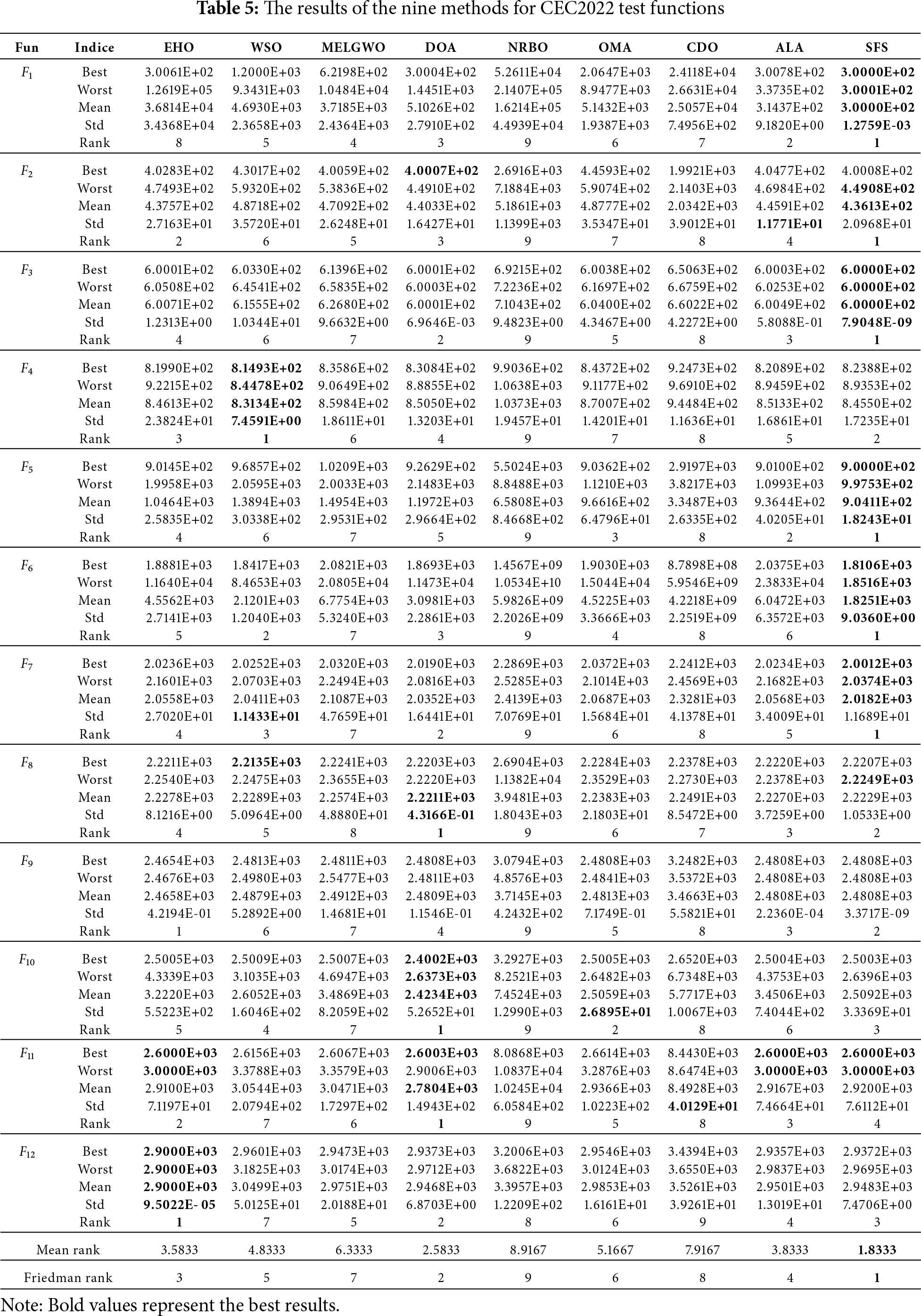

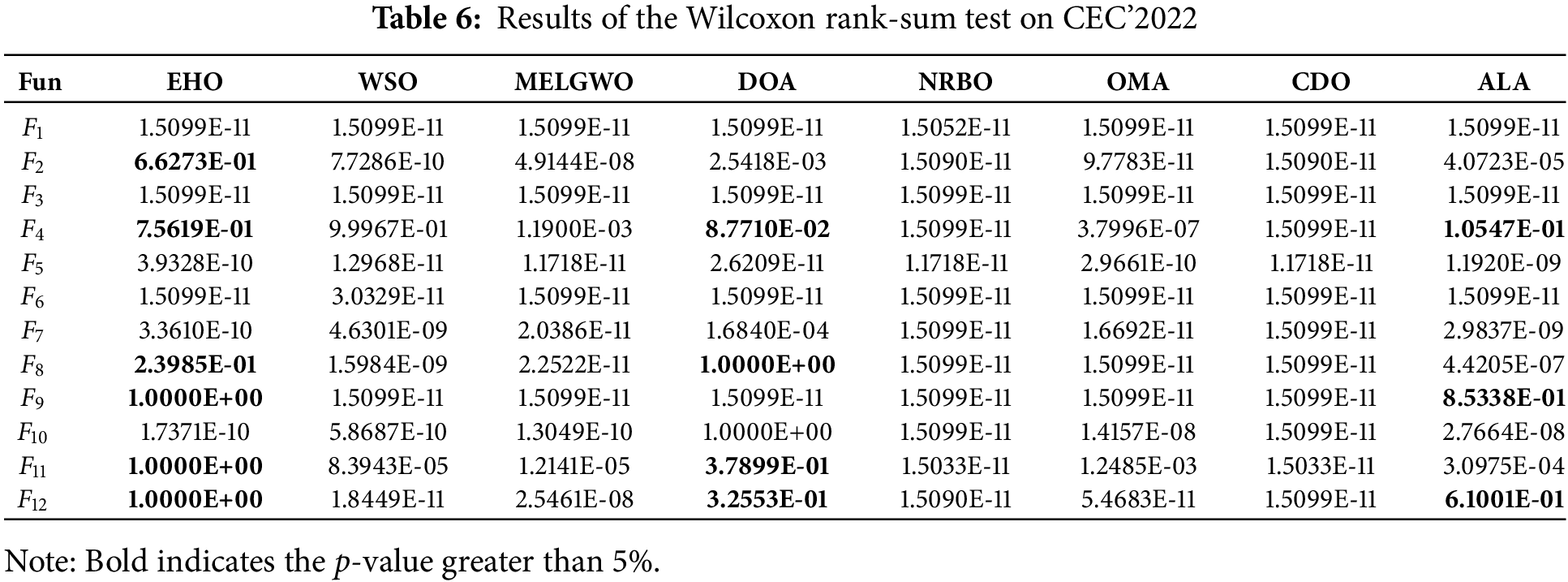

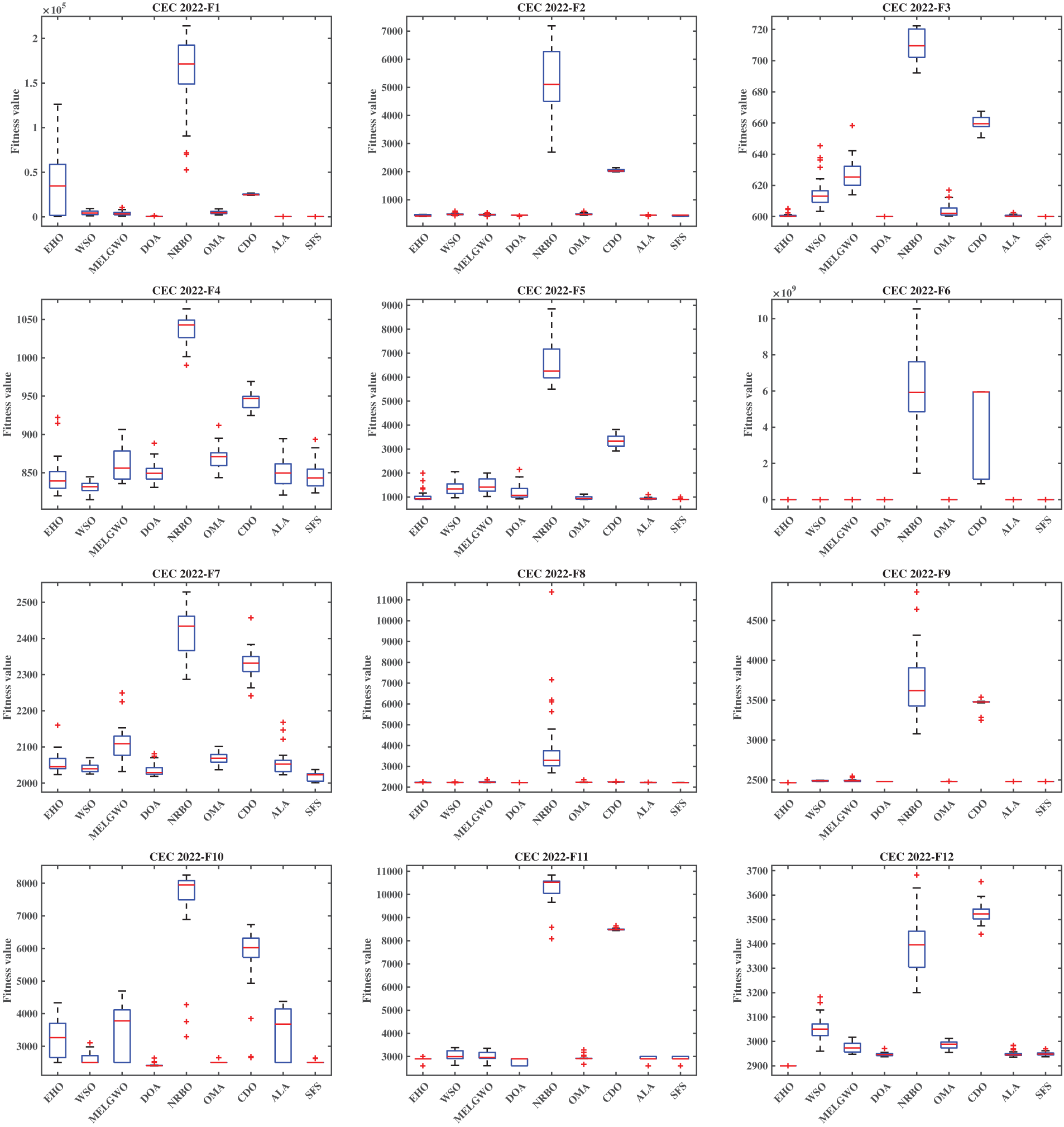

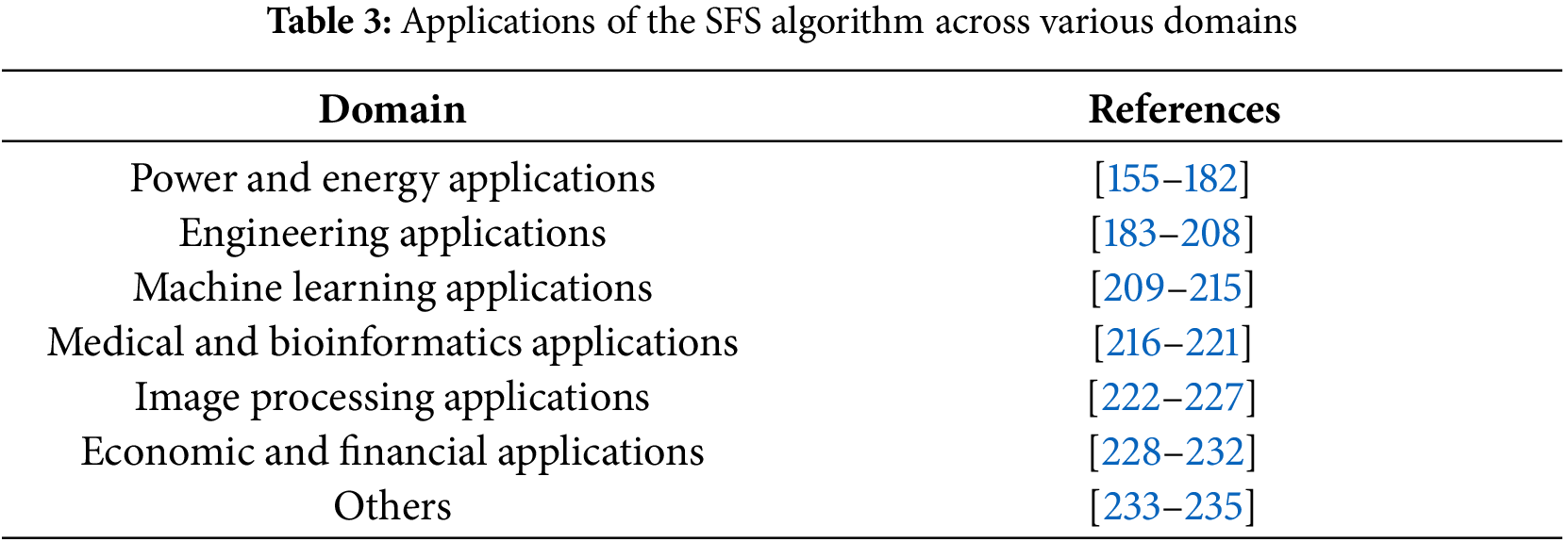

Finally, the SFS algorithm has been successfully applied to diverse optimization problems. Fig. 12 categorizes these applications into ten primary research areas. According to the Scopus dataset, over 50% of SFS-related articles are concentrated in engineering and computer science, with the algorithm employed 135 times for computer science challenges and 129 times for engineering-related issues. Furthermore, the SFS algorithm has been applied 76 times to mathematical problems and 41 times to energy-related challenges. Other research domains, including chemical engineering, environmental science, decision sciences, materials science, physics and astronomy, and multidisciplinary studies, have fewer than 30 publications each.

Figure 12: Distribution of SFS algorithm application per domain

4 Variants of the SFS Algorithm

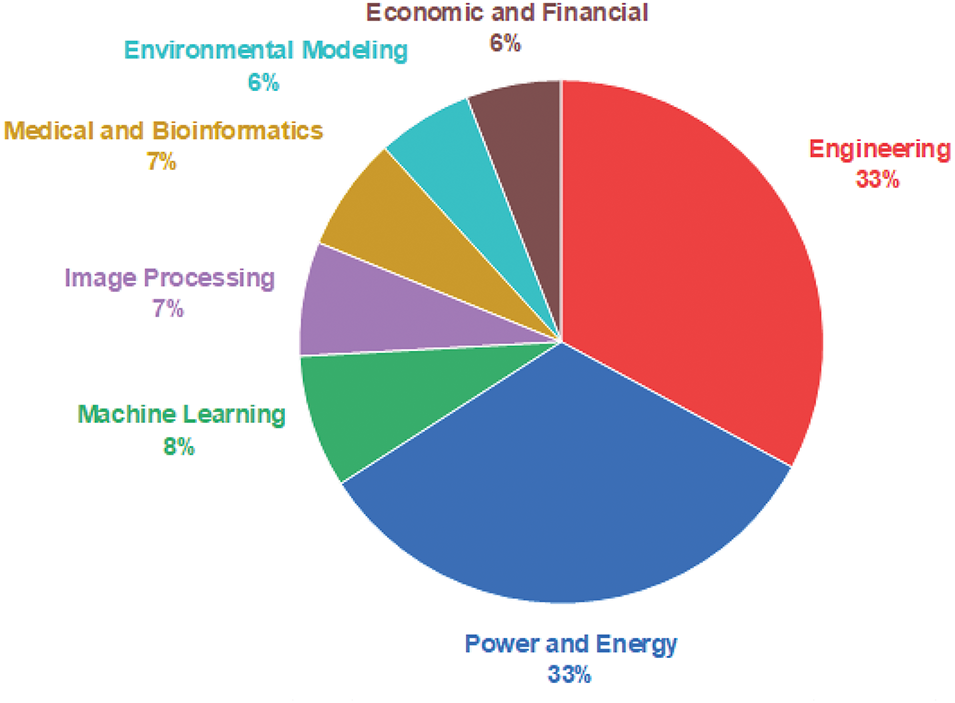

The original SFS algorithm was developed to tackle numerical optimization problems, with its performance evaluated on standard benchmark functions, similar to other meta-heuristic approaches. Several enhancements have been introduced to adapt SFS for problems with distinct characteristics or complex structures, including modified, hybridized, and multi-objective versions. A concise summary of each version and relevant examples from the literature are provided below.

4.1 Modified Versions of the SFS Algorithm

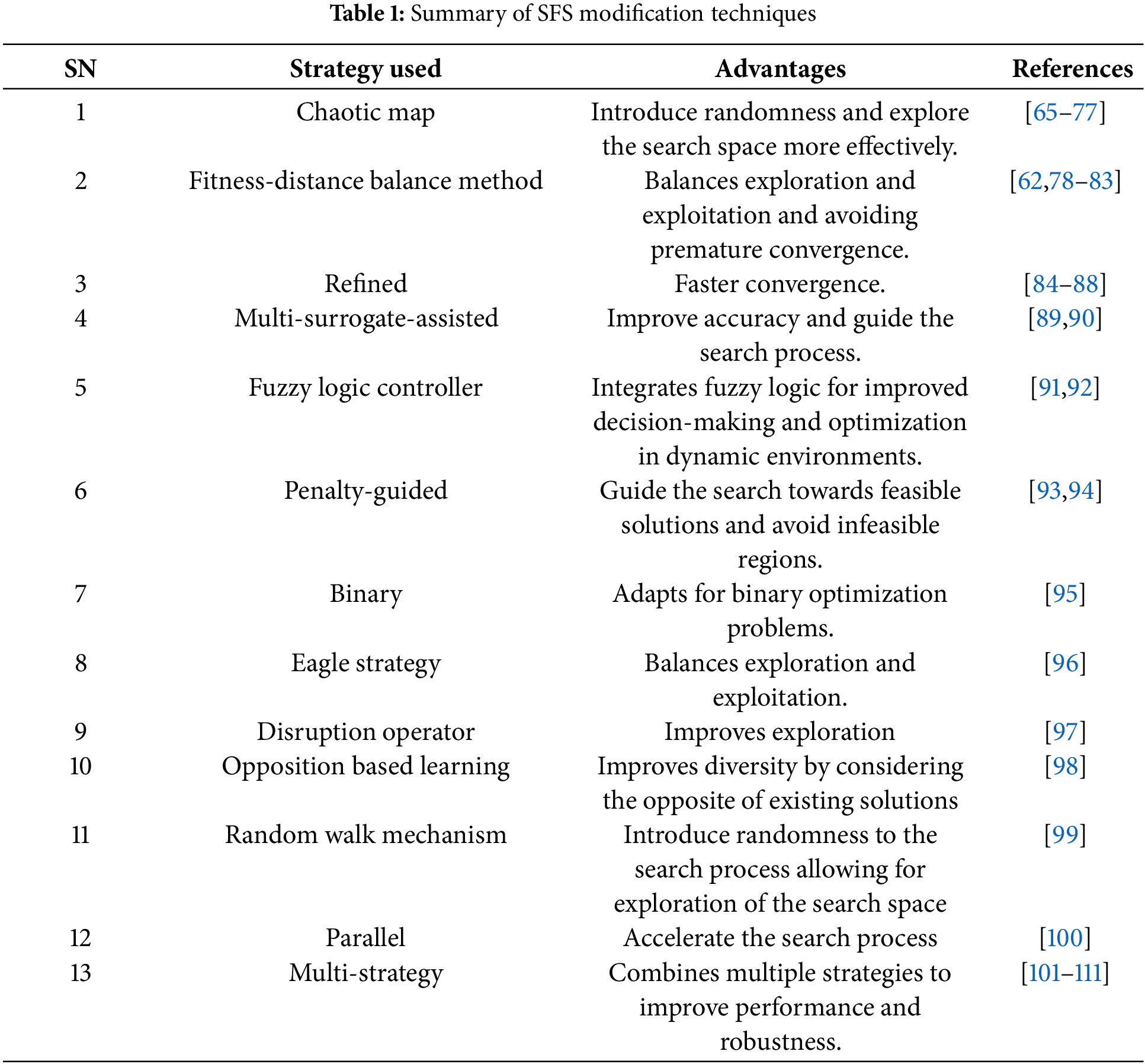

The effectiveness and robustness of the SFS algorithm in addressing diverse optimization problems depend significantly on the complexity and intricacies of the search space. To navigate these challenges, researchers have introduced modifications to the fundamental framework of the SFS algorithm. Table 1 summarizes these modification approaches, which are discussed in detail in the subsequent sections.

Rahman et al. [65] proposed several modifications to the SFS algorithm to improve its convergence speed and accuracy for diverse optimization problems. The authors enhanced the localized search through Gaussian jump adjustments by integrating five chaotic maps, particularly the Gauss/Mouse map. Later, Rahman et al. [66] introduced the Chaotic SFS (CSFS) algorithm to optimize an AutoRegressive exogenous (ARX) model for a Twin Rotor System (TRS) operating in hovering mode. The CSFS algorithm outperformed other SFS variants primarily due to its enhanced diffusion and update processes.

The CSFS algorithm was further employed by Rahman et al. [67] to optimize the parameters of Support Vector Machines (SVM) for diagnosing ball-bearing conditions. Utilizing vibration data from the Case Western Reserve University Bearing Data Center, their approach significantly improved the classifier’s convergence speed and accuracy.

Expanding their research, Rahman et al. [68] applied the CSFS algorithm to active vibration control of flexible beam structures. The CSFS algorithm achieved substantial vibration suppression when integrated with a PID controller. Furthermore, it demonstrated enhanced optimization capabilities for PID and Proportional-Derivative (PD) fuzzy logic controllers in a twin-rotor system [69].

In 2019, Rahman et al. [70] utilized the CSFS algorithm to model a flexible beam structure with Feedforward Neural Networks (FNNs) for active vibration control. This method achieved superior convergence rates and accuracy compared to other meta-heuristic algorithms. They also explored the application of the CSFS algorithm for training FNNs in the Structural Health Monitoring (SHM) of aircraft structures [71], employing experimental spectral testing and Principal Component Analysis (PCA) to enhance vibration data analysis.

Furthermore, Rahman et al. [72] introduced the CSFS algorithm to optimize an ANN’s weight and bias parameters for predicting glove transmissibility to the human hand. Through experimental data collection, their method achieved an impressive average accuracy of 97.67% in estimating the human hand’s apparent mass.

In another study, Çelik et al. [73] introduced an improved SFS (ISFS) that integrates chaos-based search mechanisms and a modified cost function to address automatic generation control (AGC) challenges in power systems. The study optimizes the gains of a PID controller for models such as a two-area non-reheat thermal system and a three-area hydro-thermal power plant, minimizing a cost function that includes integral time absolute error (ITAE) along with frequency and tie-line power deviations. The results show that the ISFS-tuned PID controller outperforms the traditional SFS in terms of settling times and oscillations, demonstrating improved tuning capabilities and convergence speed, marking a significant advancement in power system optimization.

Moreover, Bingöl et al. [74] enhanced the SFS algorithm by integrating chaotic map values to improve accuracy and convergence speed. The authors evaluated the improved SFS against the original using seven classical mathematical benchmark functions. The main improvement involved modifying the Gaussian walk function in the diffusion process by adding chaotic map values to optimize the step length for a better local search. Simulation results showed that seven of the ten chaotic maps tested significantly enhanced the performance of the original SFS, demonstrating superior convergence and accuracy.
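The idea of injecting chaotic map values into the Gaussian walk can be illustrated with a minimal sketch. It assumes the commonly cited form of the first Gaussian walk of standard SFS, in which a new point is drawn around the best point with a standard deviation proportional to log(g)/g, and it replaces the two uniform random weights with successive logistic map values. The function names, the choice of the logistic map, and the use of the best point itself as the mean are illustrative assumptions, not the exact scheme of [74].

```python
import numpy as np


def logistic_map(x, r=4.0):
    """One iteration of the logistic map x <- r * x * (1 - x)."""
    return r * x * (1.0 - x)


def chaotic_gaussian_walk(point, best, generation, chaos, rng):
    """Diffuse `point` around `best` using chaotic weights (illustrative).

    Assumed base form (first Gaussian walk of standard SFS):
        new = N(best, sigma) + (eps1 * best - eps2 * point)
        sigma = |log(g) / g * (point - best)|
    eps1 and eps2 come from a logistic map instead of U(0, 1).
    Returns the new point and the updated chaotic state.
    """
    g = max(generation, 2)  # log(1) = 0 would give a zero step size
    sigma = np.abs(np.log(g) / g * (point - best))
    eps1 = logistic_map(chaos)
    eps2 = logistic_map(eps1)
    new_point = rng.normal(best, sigma) + (eps1 * best - eps2 * point)
    return new_point, eps2
```

The chaotic state returned by the function would be passed to the next call, so that the weights follow one deterministic chaotic sequence across the whole run.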

Following this, Nguyen et al. [75] developed the CSFS algorithm to optimize the sizing, placement, and quantity of distributed generation (DG) units in electrical distribution systems. The method aims to minimize power losses while meeting constraints such as power balance and voltage limits. By incorporating chaotic maps into the traditional SFS, the CSFS improves solution accuracy and convergence speed, using ten chaotic variants to determine the best strategy. Validation on the IEEE 33/69/118-bus systems demonstrated that CSFS outperformed the original SFS and other optimization methods, showcasing its effectiveness for optimal DG placement.

Additionally, Tran et al. [76] introduced the CSFS algorithm to address the reconfiguration problem in distribution systems, focusing on minimizing power loss and enhancing voltage profiles. This method improves the traditional SFS algorithm by using a Gaussian walk for point generation and an update mechanism to refine particle positions. The CSFS enhances diffusion efficiency by incorporating chaos theory, accelerating convergence and solution discovery. Validation on several test systems, including the 33/84/119/136-bus networks, demonstrated that CSFS outperforms existing optimization methods, establishing it as a promising approach for solving the reconfiguration problem in electrical distribution systems.

Finally, Duong et al. [77] proposed the Chaotic Maps Integrated SFS (CMSFS) algorithm to address the optimal distributed generation placement (ODGP) problem in radial distribution networks. The primary objective was to minimize real power losses while adhering to the operational constraints of the distributed generation (DG) units and the network. CMSFS significantly improved solution quality and convergence rates by enhancing the standard SFS with chaotic maps. Experimental validations on the IEEE 33/69/118-bus networks demonstrated power loss reductions of 99.21%, 99.43%, and 92.36%, respectively, with optimally placed DGs having non-unity power factors. The results indicate that CMSFS outperforms several existing optimization methods, positioning it as a promising solution for ODGP challenges in radial distribution networks.

4.1.2 Fitness-Distance Balance-Based SFS Algorithm

Aras et al. [62] introduced the Fitness-Distance Balance SFS (FDBSFS), an enhanced version of the SFS algorithm designed to improve the balance between exploration and exploitation. By integrating a novel diversity operator based on the Fitness-Distance Balance method, FDBSFS effectively addresses the diversity issues present in the original SFS. Experimental evaluations involving thirty-nine meta-heuristic algorithms and several test functions demonstrated that FDBSFS significantly mitigated premature convergence and outperformed other algorithms, showcasing its superior capabilities in solving complex optimization problems.
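To make the selection idea concrete, the following sketch scores each candidate by combining its normalized fitness with its normalized distance from the current best solution and then picks the highest score. It assumes a minimization problem, Euclidean distance, and an equal weight `w = 0.5`; these choices and the function name `fdb_select` are illustrative assumptions rather than the exact operator of [62].

```python
import numpy as np


def fdb_select(population, fitness, w=0.5):
    """Return (index, scores) of the candidate with the highest FDB-style score.

    Minimization is assumed: a lower cost gives a higher normalized fitness.
    """
    population = np.asarray(population, dtype=float)
    fitness = np.asarray(fitness, dtype=float)

    # Distance of every candidate from the current best solution.
    best = population[np.argmin(fitness)]
    dist = np.linalg.norm(population - best, axis=1)

    def normalize(v):
        span = v.max() - v.min()
        return np.zeros_like(v) if span == 0 else (v - v.min()) / span

    norm_fit = 1.0 - normalize(fitness)  # good (low) cost -> close to 1
    norm_dist = normalize(dist)          # far from best -> close to 1
    score = w * norm_fit + (1.0 - w) * norm_dist
    return int(np.argmax(score)), score
```

A candidate that is both reasonably fit and far from the best solution receives a high score, which is how such a selection rule can counteract loss of diversity.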

Furthermore, Dalcali et al. [78] developed a hybrid model combining multiple linear regression with a feedforward artificial neural network (MLR-FFANN) to enhance electricity consumption forecasting in Bursa, Turkey, particularly during the COVID-19 pandemic. The model optimizes polynomial coefficients and neural network parameters using multiple algorithms, including the SFS-based Fitness Distance Balance (SFSFDB), slime mold algorithm, equilibrium optimizer, and adaptive guided differential evolution. The optimized MLR-FFANN model was evaluated using metrics such as root mean square error (RMSE) and mean absolute error (MAE), showing that the SFSFDB-optimized model outperformed others, effectively forecasting energy demand during the pandemic and improving resource management in the energy sector.

In another work, Ramezani et al. [79] introduced a Markovian process-based method to enhance the Reliability-Redundancy Allocation Problem (RRAP), focusing on the reliability of cold-standby systems with imperfect switching mechanisms. The authors adapted the Fitness-Distance Balance SFS (FDBSFS) algorithm to address this NP-hard optimization problem. Numerical experiments on benchmark problems demonstrated the algorithm’s effectiveness, and a practical case study involving a pump system in a chemical plant further highlighted its applicability in real-world scenarios.

Moreover, Duman et al. [80] introduced the Adaptive Fitness-Distance Balance Selection-based SFS (AFDB-SFS) algorithm to solve the complex optimal power flow (OPF) problem in power systems with renewable energy resources (RESs). Evaluated under diverse scenarios involving tidal, small hydro, solar, wind, and thermal systems, the AFDB-SFS algorithm effectively addressed load demand uncertainties. Statistical comparisons showed that AFDB-SFS achieved cost improvements ranging from 0.0954% to 7.6244% over other algorithms. It also converged faster to optimal solutions, highlighting its effectiveness in enhancing efficiency and reliability in modern power systems.

Following this, Bakir et al. [81] introduced the Fitness-Distance Balance-based SFS (FDB-SFS) algorithm to improve solar PV system performance by accurately estimating the electrical parameters of solar cells. Compared with PSO, SPBO, and AGDE, the FDB-SFS achieved the lowest RMSE values, demonstrating superior accuracy. This study highlights FDB-SFS as an effective method for parameter extraction, enhancing efficiency in solar energy applications.

In a related study, Bakir et al. [82] introduced an optimal power flow (OPF) model that integrates renewable energy sources with voltage source converter-based multiterminal direct current (VSC-MTDC) transmission lines. To tackle the OPF problem, the authors utilized several meta-heuristic algorithms, including atom search optimization, marine predators algorithm, adaptive guided differential evolution, SFS algorithm, and FDB-SFS. Their study on a modified IEEE 30-bus power network revealed that FDB-SFS outperformed SFS and other algorithms in minimizing fuel costs, emissions, voltage deviation, and power loss. Statistical tests validated FDB-SFS’s superior performance and robustness for OPF optimization.

Finally, Kahraman et al. [83] introduced an enhanced SFS algorithm with a dynamic fitness-distance balance (dFDB) selection method to improve optimization capabilities. Six dFDB-SFS variants were developed to balance exploration and exploitation, replicating natural selection processes. Tested on CEC 2020 benchmark functions, the best-performing variant was identified and applied to parameter estimation for PV modules. The dFDB-SFS outperformed existing algorithms in accuracy and robustness for single and double-diode models, demonstrating its potential in PV system design and engineering applications.

Nguyen et al. [84] proposed a modified SFS algorithm (MSFS) to solve the optimal reactive power dispatch (ORPD) problem by minimizing the L-index, voltage deviation, and power loss. Key enhancements include simplifying the diffusion process and refining solution updates, resulting in fewer solutions per iteration and improved execution time and solution quality. Tests on IEEE 30/118-bus systems demonstrated that MSFS outperformed other optimization methods, such as PSO and GA, confirming its robustness and efficiency in solving ORPD problems.

Furthermore, Pham et al. [85] introduced the MSFS to tackle the economic load dispatch (ELD) problem, addressing complexities such as prohibited operating zones and valve-point effects. Key modifications include a new strategy for generating solutions and an update mechanism prioritizing the worst solutions first. Testing on 3/6/10/20-unit configurations showed that MSFS outperformed conventional SFS and other methods in terms of solution quality, stability, and convergence speed, highlighting its potential as a robust optimization technique for ELD and related electrical engineering applications.

In another work, Nguyen et al. [86] introduced an improved version of the SFS algorithm (ISFSA) to solve the optimal power flow (OPF) problem for five single objectives while satisfying key system constraints. ISFSA improves upon the original SFS by refining its diffusion and update processes, enhancing the algorithm’s ability to find optimal solutions. Tested on three standard IEEE power systems, ISFSA outperformed conventional SFS and other existing methods in terms of solution quality, speed, and success rate. The results indicate that ISFSA effectively reduces fuel costs, power losses, and emissions while improving voltage profiles, making it a recommended approach for high-voltage power systems.

Following this, Van et al. [87] introduced an improved SFS optimization algorithm (ISFSOA) to tackle the ORPD problem, minimizing total voltage deviation and total power loss while enhancing voltage stability. By improving the diffusion process of the original SFS algorithm, ISFSOA achieves more effective exploration of large search spaces and better exploitation of local zones. Comparative analysis using the IEEE 30-bus system demonstrated that ISFSOA outperforms standard SFSOA and other methods in terms of solution quality, stability, and robustness, making it highly suitable for complex engineering optimization tasks.

Finally, Xu et al. [88] developed a modified SFS algorithm to accurately estimate the unknown parameters of solar cell models, including single- and double-diode models. Key enhancements include adjustments to the diffusion and update processes and a mechanism to reduce population size, thereby improving implementation and performance. The modified algorithm was tested on three benchmark cases and compared with seven advanced algorithms, demonstrating superior accuracy, faster convergence, and enhanced stability. It consistently achieved optimal solutions with low root mean square error (RMSE) values across several photovoltaic modules, highlighting its effectiveness for parameter estimation in solar energy applications.

4.1.4 Multi-Surrogate-Assisted SFS Algorithm

Cheng et al. [89] introduced a multi-surrogate-assisted SFS (MSASFS) algorithm to address high-dimensional, computationally intensive problems. Key enhancements include an improved surrogate-assisted differential evolution (SDE) updating mechanism combining coordinate systems for better exploration and a pre-screening strategy using a Gaussian process model to identify promising solutions. Additionally, two surrogate models are employed to enhance robustness. Numerical experiments demonstrated that MSASFS outperforms other surrogate-assisted algorithms, particularly in high-dimensional problems and chaotic system parameter estimation.

In another study, Cheng et al. [90] introduced the scale-free network-based multi-surrogate-assisted SFS (SF-MSASFS) algorithm. This variant integrates multiple surrogate models, such as Radial Basis Function (RBF) and Kriging, to boost robustness and efficiency. Leveraging a scale-free network topology, SF-MSASFS enhances particle interaction and offspring generation. An adaptive mechanism with three update strategies based on reward values further increases adaptability. Tested against advanced surrogate-assisted meta-heuristic algorithms, SF-MSASFS demonstrated superior performance on high-dimensional expensive optimization problems.
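The pre-screening role of a surrogate model can be sketched as follows: a cheap model is fitted to already evaluated points, and only the candidates it predicts to be most promising are passed to the expensive objective. The sketch uses a Gaussian radial basis function interpolant because it needs only NumPy; the Kriging or Gaussian process models mentioned above are not reproduced. The names `fit_rbf` and `prescreen`, the kernel width `gamma`, and the small `ridge` term are illustrative assumptions.

```python
import numpy as np


def fit_rbf(x_train, y_train, gamma=2.0, ridge=1e-8):
    """Fit a Gaussian RBF interpolant and return a prediction function."""
    x_train = np.asarray(x_train, dtype=float)
    y_train = np.asarray(y_train, dtype=float)
    # Squared distances between all pairs of training points.
    d2 = ((x_train[:, None, :] - x_train[None, :, :]) ** 2).sum(axis=2)
    phi = np.exp(-gamma * d2)
    # A small ridge term keeps the linear system well conditioned.
    weights = np.linalg.solve(phi + ridge * np.eye(len(x_train)), y_train)

    def predict(x_new):
        x_new = np.asarray(x_new, dtype=float)
        d2_new = ((x_new[:, None, :] - x_train[None, :, :]) ** 2).sum(axis=2)
        return np.exp(-gamma * d2_new) @ weights

    return predict


def prescreen(candidates, predict, keep):
    """Keep the `keep` candidates with the lowest predicted cost."""
    candidates = np.asarray(candidates, dtype=float)
    order = np.argsort(predict(candidates))
    return candidates[order[:keep]]
```

Only the candidates returned by `prescreen` would then be evaluated with the true objective, which is where the savings in expensive function evaluations come from.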

4.1.5 Fuzzy Logic Controller-Based SFS Algorithm

Lagunes et al. [91] developed the Stochastic Fractal Dynamic Search (SFDS) algorithm that incorporates a fuzzy inference system for the dynamic adjustment of the diffusion parameter within the SFS algorithm. This innovation enhances SFS’s adaptability and performance, as shown in tests against the CEC 2017 benchmark functions. The results demonstrated that SFDS significantly improved SFS’s optimization capabilities, highlighting its robustness and versatility for complex optimization tasks.

Similarly, Lagunes et al. [92] introduced the Dynamic SFS (DSFS), an enhanced version of the SFS algorithm that uses fuzzy logic to manage the diffusion parameter adaptively. The DSFS incorporates chaotic stochastic diffusion and type-1 and type-2 fuzzy inference models to dynamically adjust the diffusion parameter based on particle diversity and iteration. Tested on multimodal, hybrid, and composite benchmark functions, DSFS demonstrated improved adaptability and performance, effectively generating new fractal particles for complex optimization tasks.

4.1.6 Penalty-Guided SFS Algorithm

Mellal et al. [93] introduced the penalty-guided SFS algorithm for reliability optimization problems, tested on ten case studies, including redundancy and reliability allocation. The results showed that this approach outperformed previous methods in terms of solution accuracy, robustness, and computational efficiency. With smaller standard deviations and fewer function evaluations, the penalty-guided SFS proved highly effective in optimizing system reliability across diverse scenarios.

In another work, Mellal et al. [94] introduced the penalty-guided SFS (PSFS) algorithm to address the reliability-redundancy allocation problem. Compared to GA and cuckoo optimization with penalty functions (PFCOA), PSFS demonstrated superior performance in system reliability and execution efficiency. The use of dynamic penalty factors in PSFS was a key advantage, enhancing its effectiveness for this optimization problem, particularly in systems with ten and thirty subsystems in series.
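As a rough illustration of how a dynamic penalty factor steers the search toward feasible solutions, the sketch below adds a penalty that grows with the iteration counter to the objective value. It is written for a generic minimization problem with constraints of the form g_j(x) <= 0; the penalty form and the parameters `c`, `alpha`, and `beta` are one common textbook choice and are assumptions here, not the factors used in [93,94].

```python
def penalized_fitness(objective, constraints, x, iteration,
                      c=0.5, alpha=2.0, beta=2.0):
    """Objective plus a penalty that grows with the iteration counter.

    Each constraint g is assumed to be satisfied when g(x) <= 0, so only
    positive values of g(x) contribute to the violation term.
    """
    violation = sum(max(0.0, g(x)) ** beta for g in constraints)
    return objective(x) + (c * iteration) ** alpha * violation
```

Early in the run the penalty is mild, so infeasible points can still guide exploration; later it dominates, so the surviving points are pushed into the feasible region.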

Hosny et al. [95] introduced an algorithm for classifying galaxy images that leverages quaternion polar complex exponential transform moments (QPCET) to capture color information. The authors used a binary SFS algorithm combined with an Extreme Learning Machine (ELM) to optimize feature selection, enhancing classification accuracy. Testing on the EFIGI galaxy dataset demonstrated that this method outperformed existing approaches, achieving high accuracy.
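A binary variant of a continuous optimizer typically needs a rule for mapping real-valued positions to 0/1 feature masks. The sketch below uses a sigmoid transfer function followed by a random threshold, which is a widely used convention; whether [95] uses exactly this rule is an assumption, and the function name `binarize` is hypothetical.

```python
import numpy as np


def binarize(position, rng):
    """Map a continuous position to a 0/1 mask with a sigmoid transfer function.

    Each component is set to 1 with probability sigmoid(component), so
    large positive values almost always select the feature.
    """
    prob = 1.0 / (1.0 + np.exp(-np.asarray(position, dtype=float)))
    return (rng.random(prob.shape) < prob).astype(int)
```

The resulting mask selects the columns of the feature matrix that are passed to the classifier, and the classifier's error can then serve as the fitness of the underlying continuous position.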

4.1.8 Eagle Strategy-Based SFS Algorithm

Das et al. [96] introduced a fuzzy clustering methodology that integrates a two-stage Eagle Strategy with SFS for better segmentation of white blood cells (WBC) in Acute Lymphoblastic Leukemia (ALL) images. This method overcomes the limitations of classical clustering techniques, such as Fuzzy C-means and K-means, which are sensitive to noise and local optima. By incorporating morphological reconstruction, the approach enhances noise immunity and cluster robustness. Comparative experiments demonstrated that the ES-SFS-based method outperformed traditional clustering techniques in terms of accuracy, computational efficiency, and robustness.

4.1.9 Disruption Operator-Based SFS Algorithm

Xu et al. [97] introduced an Improved SFS algorithm (ISFS) for optimizing the joint operation of cascade reservoirs, aiming to improve flood control and maximize hydropower generation. ISFS effectively manages the complex, high-dimensional challenges of long-term hydropower operations by incorporating a disruption operator. Tested on 13 benchmark functions, ISFS demonstrated superior optimization capabilities, and simulations on the Yangtze River cascade reservoirs showed faster convergence and higher solution quality, enhancing overall power generation.

4.1.10 Opposition-Based Learning SFS Algorithm

Gonzalez et al. [98] introduced a hybrid optimization technique that combines the SFS algorithm with an opposition-based learning strategy to design high-quality substitution boxes (S-boxes) for cryptographic systems. The method uses a sequential model algorithm configuration for parameter tuning, focusing on maximizing nonlinearity. Validated against cryptographic criteria such as bijectivity and the strict avalanche criterion, the designed S-boxes demonstrated superior performance, enhancing cryptographic security and robustness.
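The core of opposition-based learning can be shown in a few lines: for a point x in the box [lower, upper], the opposite point is lower + upper - x, and the better half of the combined set of points and opposites is kept. The sketch below does this for a continuous minimization problem; the S-box design in [98] works on a different representation, so the box bounds, the objective, and the function name are illustrative assumptions.

```python
import numpy as np


def opposition_step(population, lower, upper, objective):
    """Evaluate each point and its opposite, then keep the best half."""
    population = np.asarray(population, dtype=float)
    opposite = lower + upper - population  # mirror through the box centre
    combined = np.vstack([population, opposite])
    costs = np.array([objective(x) for x in combined])
    keep = np.argsort(costs)[: len(population)]
    return combined[keep], costs[keep]
```

The step doubles the number of objective evaluations for one generation, so it is usually applied at initialization or only with some probability during the run.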

4.1.11 Random Walk Mechanism-Based SFS Algorithm

Pham et al. [99] applied the SFS algorithm to the economic load dispatch (ELD) problem, proposing two variants: SFS-Gauss, which uses a Gaussian random walk, and SFS-Lévy, which employs a Lévy flight random walk. Tested on three systems with varying numbers of units, both variants outperformed standard, modified, and hybrid optimization methods, delivering high solution quality and faster convergence for the ELD problem.
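The practical difference between the two random walks lies in the step-length distribution: Gaussian steps stay close to the current point, whereas Lévy steps are heavy-tailed and occasionally produce very long jumps. The sketch below draws Lévy-distributed steps with Mantegna's algorithm, a standard way to approximate them; the stability index `beta = 1.5` and the function names are assumptions and are not taken from [99].

```python
import math

import numpy as np


def gaussian_step(size, rng, sigma=1.0):
    """Zero-mean Gaussian step lengths."""
    return rng.normal(0.0, sigma, size)


def levy_step(size, rng, beta=1.5):
    """Heavy-tailed step lengths generated with Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma_u = (num / den) ** (1 / beta)
    u = rng.normal(0.0, sigma_u, size)
    v = rng.normal(0.0, 1.0, size)
    return u / np.abs(v) ** (1 / beta)
```

In a diffusion step, either function would supply the displacement added to a point; the rare long Lévy jumps help a walk leave a local basin, while Gaussian steps favour fine local refinement.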

Najmi et al. [100] introduced the parallel SFS algorithm to solve the reliability-redundancy allocation problem (RRAP) within a realistic framework that accommodates heterogeneous components and tailored redundancy strategies for each subsystem. Using an exact Markov model to calculate subsystem reliability, the approach was tested on three benchmark cases, demonstrating significant improvements over existing models and proving effective in enhancing system reliability through stochastic fractal search.

4.1.13 Multi-Strategy SFS Algorithm

Lin et al. [101] proposed a hybrid SFS (HSFS) algorithm for parameter identification in fractional-order chaotic systems. This algorithm integrates several advanced techniques to enhance optimization performance, such as the opposition-based learning method to improve population diversity and accelerate convergence, and the differential evolution algorithm for better exploitation in the search process. Additionally, a re-initialization mechanism is employed to avoid local optima. The HSFS was tested on three fractional-order chaotic systems, and numerical simulations showed that it outperforms existing methods in global optimization.

Furthermore, Pang et al. [102] developed an adaptive decision-making framework to optimize conflict resolution strategies for unmanned aircraft systems (UAS) in urban environments. The framework addresses low-altitude air traffic management through a double-layer problem incorporating rerouting, speed adjustment, and scheduling. It enhances the SFS algorithm with a conflict penalty-guided fitness function that prioritizes solutions with fewer flight conflicts. It also incorporates an exploration and exploitation balance strategy to improve search diversity. Simulation results demonstrate significant optimization of 4D flight routes, reducing operational costs, flight conflicts, and delays while showcasing the effectiveness of the improved SFS algorithm across varying traffic densities.

In another study, Zhou et al. [103] developed an improved Stochastic Fractal Search algorithm (ISFS) to solve the protein structure prediction (PSP) problem, which is crucial for biological research and drug development. By transforming the prediction into a non-linear programming problem using an AB off-lattice model, ISFS addresses the limitations of traditional SFS by incorporating Lévy flight strategies and internal feedback mechanisms to enhance search efficiency. Simulations on Fibonacci and real peptide sequences showed that ISFS significantly improved performance, demonstrating greater robustness in finding global minima while avoiding local traps, highlighting its potential for complex optimization challenges in biology.

Moreover, Mosbah et al. [104] developed a modified SFS (M-SFS) technique to enhance accuracy and computational efficiency in power system state estimation (PSSE). Key modifications include replacing the logarithmic function in the diffusion process with benchmark functions and incorporating chaotic maps instead of uniform distribution parameters during the diffusion and updating phases. These enhancements significantly improved algorithm performance. Validated on IEEE 30/57/118-bus systems, M-SFS demonstrated superior accuracy and faster computational times than the original SFS technique.

Following this, Lin et al. [105] introduced an improved SFS (ISFS) algorithm to address the complex multi-area economic dispatch (MAED) problem, characterized by high non-linearity and non-convexity. The ISFS enhances the exploration-exploitation balance through opposition-based learning for population initialization and generation jumping. It incorporates a hybrid diffusion process using differential evolution to improve local search capabilities. A repair-based penalty approach is also integrated to generate feasible solutions efficiently. Tested on systems with 16 to 120 generating units, ISFS outperformed state-of-the-art algorithms in solving MAED problems effectively and robustly.

Additionally, Chen et al. [106] proposed the perturbed SFS (pSFS) algorithm to improve parameter estimation in PV systems, addressing challenges related to non-linearity and multi-modality. This innovative meta-heuristic integrates unique searching operators to balance global exploration with local exploitation. Furthermore, pSFS employs a chaotic elitist perturbation strategy to enhance search performance. Demonstrated across three PV estimation scenarios, experimental results showed that pSFS significantly outperformed several recent algorithms, achieving higher estimation accuracy and robustness in solar PV modeling.

In other research, Nguyen et al. [107] introduced the Improved SFS (ISFS) algorithm, incorporating Chaotic Local Search (CLS) and Quasi-opposition-Based Learning (QOBL) mechanisms to tackle the optimal capacitor placement (OCP) problem in radial distribution networks (RDNs). This approach minimizes the total annual cost while meeting operational constraints. Tested on IEEE 69/119/152-bus RDNs, ISFS outperformed the original SFS and other methods, particularly in large-scale, complex networks, demonstrating high-quality solutions and robust performance.

Similarly, Kien et al. [108] introduced a modified SFS (MSFS) algorithm to optimize capacitor bank placement and sizing in distribution systems comprising 33/69/85 buses. To minimize power loss and total costs, MSFS incorporates three enhancements, including an innovative diffusion approach and two update strategies to improve the standard SFS. The results showed that MSFS achieved up to a 3.98% reduction in power loss and more significant cost savings, converging to global optima faster than SFS and other methods, underscoring its effectiveness in capacitor optimization for power distribution systems.

In another study, Huynh et al. [109] proposed an Improved SFS (ISFS) algorithm to enhance PV power generation by accurately estimating key PV module parameters. This method frames parameter estimation as an RMSE minimization problem. It incorporates two modifications to improve SFS, including replacing the logarithmic function with an exponential function to enhance exploration and using a sine map instead of a uniform distribution for optimized diffusion and update processes. The results show that ISFS outperforms traditional meta-heuristic algorithms, including the original SFS and PSO, in achieving accurate model parameters and maximum power points (MPPs) for PV optimization.

Besides that, Alkayem et al. [110] introduced the SA-QSFS algorithm for structural health monitoring and damage identification. This approach combines a triple modal-based objective function and an improved self-adaptive framework to enhance damage detection accuracy, alongside quasi-oppositional learning to strengthen exploration at diverse stages. The SA-QSFS algorithm showed superior performance in diverse damage scenarios under noisy conditions and proved effective for continuous optimization in structural damage assessment.

Finally, Isen et al. [111] introduced the FDB-NSM-SFS-OBL algorithm, which enhances parameter extraction for renewable energy sources by incorporating Opposition-Based Learning (OBL), Fitness Distance Balance (FDB), and Natural Survivor updating Mechanism (NSM) within the SFS framework. Experimental results demonstrated improved accuracy in optimizing parameters for photovoltaic cells, PEMFCs, and Li-Ion batteries.

4.2 Hybrid Versions of the SFS Algorithm

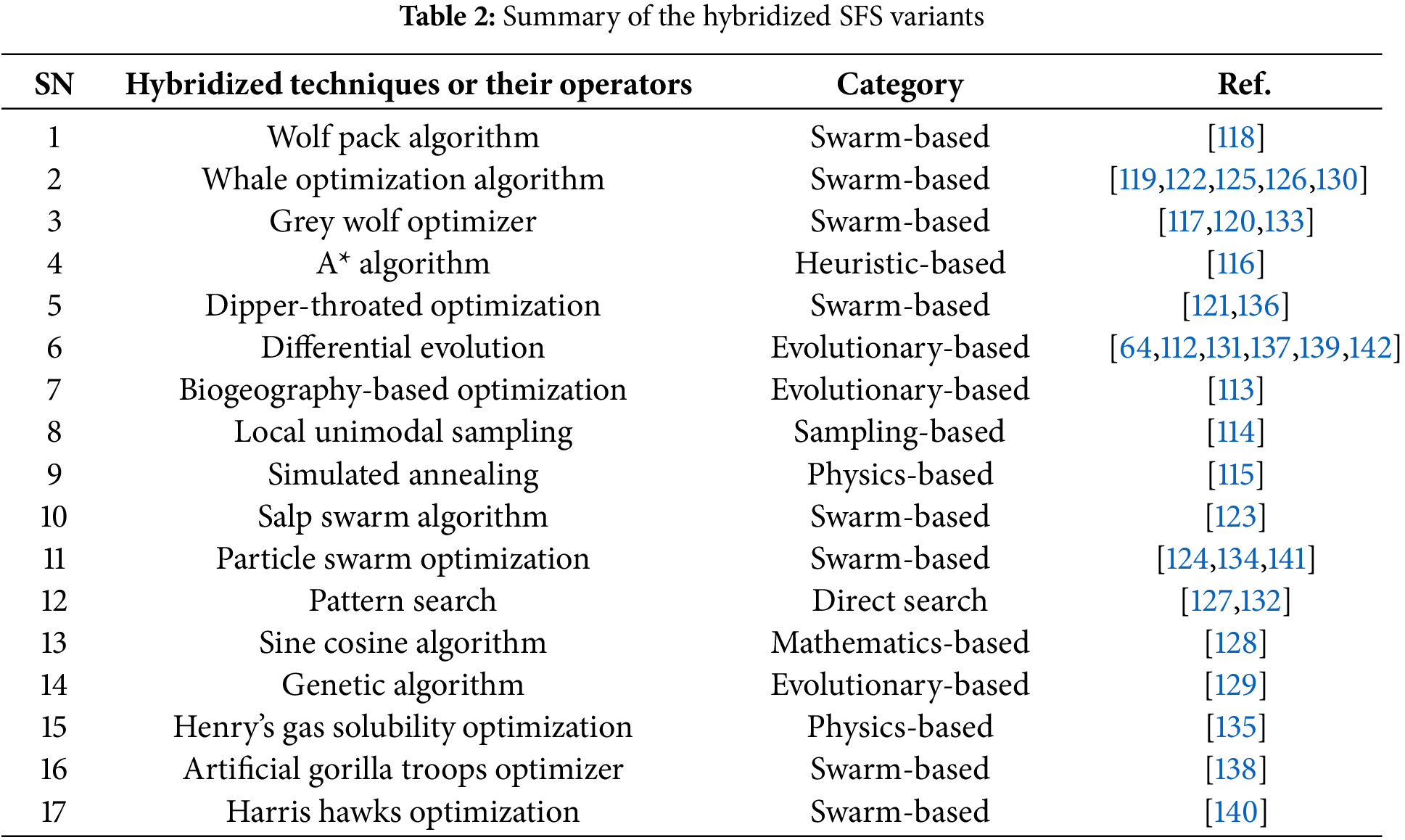

A hybrid algorithm combines two or more algorithms to solve a given problem, aiming to mitigate weaknesses and enhance computational speed and accuracy. Researchers have improved the performance of SFS by integrating it with other established heuristics and meta-heuristic algorithms. Table 2 provides an overview of hybridized SFS variants, with the following sections offering detailed insights into integrating SFS with diverse meta-heuristic and local search algorithms.

Awad et al. [64] introduced a hybrid approach that combines Differential Evolution (DE) with the SFS algorithm to enhance optimization performance. This method leverages the diffusion properties of fractal search and incorporates two innovative update processes to improve exploration. The algorithm enhances exploration by replacing random fractals with a DE-based diffusion process while reducing computational costs. Validated against 30 benchmark functions from the IEEE CEC2014 competition, the hybrid algorithm outperformed the original SFS and other contemporary techniques, particularly in hybrid and composite test functions.
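What a DE-based diffusion step might look like can be sketched with the classic DE/rand/1/bin operator: a mutant is built from three distinct population members and mixed with the parent through binomial crossover. This is a generic DE operator used for illustration; the scale factor `f`, the crossover rate `cr`, and the function name are assumptions, and the specific scheme of [64] may differ.

```python
import numpy as np


def de_diffusion(population, i, rng, f=0.5, cr=0.9):
    """Generate one trial point for member `i` with DE/rand/1/bin."""
    n, d = population.shape
    # Three distinct members, all different from the parent i.
    candidates = [k for k in range(n) if k != i]
    a, b, c = rng.choice(candidates, size=3, replace=False)
    mutant = population[a] + f * (population[b] - population[c])

    # Binomial crossover; one component is always taken from the mutant.
    mask = rng.random(d) < cr
    mask[rng.integers(d)] = True
    return np.where(mask, mutant, population[i])
```

The trial point would replace the parent only if it has a better objective value, which keeps the greedy acceptance rule that DE and the SFS update process both rely on.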

In another study, Awad et al. [112] introduced an enhanced evolutionary algorithm that combines the DE algorithm with the SFS framework, termed SFS-DPDE-GW, to tackle complex optimization challenges. This approach improves the traditional SFS diffusion process by integrating DE and Gaussian Walks, enhancing search efficiency. Evaluated using 30 functions from the CEC’2014 test suite, SFS-DPDE-GW outperformed the standard SFS and other well-known algorithms, demonstrating its potential for solving diverse optimization problems.

Furthermore, Ashraf et al. [113] developed a hybrid biogeography-based optimization (BBO) algorithm enhanced with SFS to tackle vendor-managed inventory (VMI) systems with various supplier-retailer configurations, aiming to minimize total costs. The algorithm significantly improved search and exploitation capabilities by integrating SFS’s diffusion process into BBO. The hybrid algorithm outperformed conventional methods in multiple VMI configurations, effectively addressing this complex nonlinear optimization problem.

Moreover, Sivalingam et al. [114] introduced a hybrid optimization technique that combines the SFS algorithm with Local Unimodal Sampling (LUS) for tuning a multistage Proportional Integral Derivative (PID) controller to improve Automatic Generation Control (AGC) in power systems. Initially, SFS optimized parameters for a single-area, multi-source system, outperforming methods like DE and TLBO. The addition of LUS further enhanced performance. When extended to complex multi-area systems, the approach demonstrated the superiority of the hybrid SFS-LUS algorithm in managing nonlinearities such as generation rate constraints and time delays.

Following this, Mosbah et al. [115] introduced a hybrid approach that combines the SFS algorithm with Simulated Annealing (SA) to solve distributed multi-area state estimation (SE) problems. The method operates at two levels: local SE using SFS for individual area data, and coordination SE using SA to exchange boundary information for overall state estimation. Tested on the IEEE 118-bus system divided into four areas, the approach significantly reduced computational time, demonstrating its efficiency in distributed SE.

Additionally, Yu et al. [116] proposed a hybrid approach for path planning in autonomous unmanned vehicles by integrating the A* algorithm with the SFS algorithm. This method considers the vehicle’s front-wheel-drive model and mechanical constraints to enhance path calculation. The A* algorithm identifies the shortest path on a raster map, which SFS then refines to generate the vehicle’s trajectory based on status information. Simulation results in MATLAB R2012a show that this composite algorithm effectively addresses path-planning challenges in complex urban environments.

In other research, El-Kenawy et al. [117] introduced a Modified Binary Grey Wolf Optimizer (MbGWO) that integrates the SFS algorithm to enhance feature selection by balancing exploration and exploitation. MbGWO features an exponential iteration scheme to expand the search space and incorporates crossover and mutation operations to increase population diversity. SFS’s diffusion mechanism employs a Gaussian distribution for random walks to refine the solutions, converting continuous values into binary for feature selection. Tested on nineteen UCI machine learning datasets using K-Nearest Neighbor (KNN), the MbGWO-SFS algorithm outperformed several state-of-the-art optimization techniques, with results found to be statistically significant at the 0.05 level.

Similarly, Chen et al. [118] proposed a novel method for task allocation in heterogeneous multi-UAV systems by integrating an enhanced SFS mechanism into a modified Wolf Pack Algorithm (WPA). The authors categorized complex combat tasks into reconnaissance, strike, and evaluation, enabling a tailored task allocation model based on UAV load capacities. The chaotic Wolf Pack Algorithm with enhanced SFS (MSFS-CWPA) employs Gaussian walking optimized through chaos to improve adaptive task assignment. Performance evaluations show that MSFS-CWPA outperforms the standard WPA and other variants in convergence accuracy, highlighting the robustness of SFS in multi-UAV task allocation strategies.

In another study, Alsawadi et al. [119] introduced an advanced method for skeleton-based action recognition using the BlazePose system, which models human body skeletons as spatiotemporal graphs. They enhanced the Spatial-Temporal Graph Convolutional Network (STGCN) by integrating the SFS-Guided Whale Optimization Algorithm (GWOA), leveraging SFS’s diffusion process for optimization. This approach achieved accuracies of 93.14% on X-View and 96.74% on X-Sub using the Kinetics and NTU-RGB+D datasets, surpassing existing techniques.

Additionally, Bharani et al. [120] proposed a novel method for exoplanet detection using the Kepler dataset, combining the Grey Wolf Optimizer (GWO) with an Enhanced SFS Algorithm (ESFSA) for feature selection. This hybrid approach reduced input features and classified exoplanets into False Positive, Not Detected, and Candidate categories using a Random Forest (RF) model. The GWO-ESFSA method outperformed existing techniques, achieving high performance across recall, specificity, accuracy, sensitivity, precision, and F1-score.

In addition, El-Kenawy et al. [121] proposed a novel digital image watermarking method that combines the SFS algorithm, Dipper-Throated Optimization (DTO), Discrete Wavelet Transform (DWT), and Discrete Cosine Transform (DCT). The technique uses DWT to decompose the cover image, followed by DCT for frequency-domain transformation. To optimize the scale factor for embedding, they introduced the DTOSFS algorithm. Rigorous evaluations using metrics like Image Fidelity (IF), Peak Signal-to-Noise Ratio (PSNR), and Normalized Cross-Correlation (NCC) demonstrated the method’s robustness against attacks and its superiority over traditional watermarking techniques, improving watermark quality and resilience.

In related research, El-Kenawy et al. [122] proposed a novel method for diagnosing COVID-19 using chest CT scans that integrates the SFS algorithm within a feature selection framework. The approach consists of three phases: extracting features from CT scans using the AlexNet Convolutional Neural Network (CNN), selecting relevant features with an enhanced Guided Whale Optimization Algorithm (Guided WOA) integrated with SFS, and balancing the features. A voting classifier, utilizing a PSO-based method, aggregates predictions from classifiers such as Decision Trees (DT), k-Nearest Neighbor (KNN), Neural Networks (NN), and Support Vector Machines (SVM). The framework achieved an area under the curve (AUC) of 0.995 on COVID-19 datasets, demonstrating the effectiveness of the SFS-Guided WOA in enhancing diagnostic processes.

Likewise, Zhang et al. [123] introduced a hybrid algorithm named GBSFSSSA, which combines the Gaussian Barebone SFS algorithm with the Salp Swarm Algorithm (SSA) to enhance image segmentation. This approach improves the balance between local and global search capabilities, addressing the limitations of the basic SSA. Tested on the CEC2017 benchmark functions and applied to COVID-19 CT image segmentation, GBSFSSSA outperformed other algorithms in PSNR, SSIM, and FSIM metrics, making it a reliable solution for multi-threshold image segmentation in medical imaging.

Furthermore, Xu et al. [124] introduced an improved SFS (ISFS) algorithm to optimize cascade reservoir operations in the Yalong River, considering the complexities of the Lianghekou Reservoir’s multiyear regulation capacity. Inspired by PSO, the ISFS algorithm integrates global and individual best points and employs an adaptive variation rate (Fw) to enhance convergence. The study evaluated power generation and water abandonment across three operational modes using inflow data from five years. Results indicated that ISFS outperformed traditional SFS and PSO in both speed and accuracy, with the global joint operation mode being most effective in high-inflow scenarios, highlighting the significance of operational strategies in reservoir management.

In another study, Salamai et al. [125] developed a hybrid algorithm combining the SFS algorithm and the Guided Whale Optimization Algorithm (Guided WOA) to optimize e-commerce sales forecasting. This method fine-tunes the parameter weights of Bidirectional Recurrent Neural Networks (BRNN) for time series demand forecasting. The hybrid algorithm outperformed other techniques, including PSO, WOA, and GA, achieving significantly lower RMSE, with results validated through ANOVA analyses.

Moreover, Eid et al. [126] introduced an innovative hybrid method combining the SFS algorithm and Guided WOA to optimize electroencephalography (EEG) channel selection for motor imagery classification and seizure detection. The SFS-Guided WOA effectively identifies relevant features, enhancing Brain-Computer Interface (BCI) systems by reducing dataset complexity and improving machine learning performance. The algorithm outperformed others in statistical tests, including ANOVA and the Wilcoxon rank-sum test.

Following this, Saini et al. [127] proposed a hybrid optimization approach that combines the SFS algorithm with Pattern Search (PS) to optimize PID controller parameters for DC motor speed control. Using the Integral of Time-multiplied Absolute Error (ITAE) as the objective function, the method leverages SFS’s diffusion property alongside PS’s derivative-free technique to enhance local search while maintaining robust global exploration. Simulation results demonstrated that the hybrid SFS-PID controller outperformed recent techniques, providing faster, smoother dynamic responses with reduced oscillations.
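To make the objective concrete, the sketch below evaluates the ITAE criterion, $\int_0^T t\,|e(t)|\,dt$, for a PID loop. It is only an illustration under stated assumptions: the motor is approximated by a hypothetical first-order model (`gain`, `tau`), the loop is integrated with a forward-Euler step, and none of the parameter values come from [127]. An optimizer such as SFS would call a function of this kind once per candidate gain set.

```python
def itae_for_pid(kp, ki, kd, setpoint=1.0, t_end=2.0, dt=0.001, gain=1.0, tau=0.5):
    """ITAE of a step response for a PID loop around a first-order motor model."""
    speed = 0.0
    integral = 0.0
    prev_error = setpoint - speed  # avoids a derivative kick at t = 0
    itae = 0.0
    steps = int(round(t_end / dt))
    for k in range(steps):
        t = k * dt
        error = setpoint - speed
        integral += error * dt
        derivative = (error - prev_error) / dt
        control = kp * error + ki * integral + kd * derivative
        # Forward-Euler step of: tau * d(speed)/dt = -speed + gain * control
        speed += dt * (-speed + gain * control) / tau
        prev_error = error
        itae += t * abs(error) * dt  # rectangle-rule approximation of the integral
    return itae


# Compare two hypothetical candidate gain sets; a lower ITAE is better.
open_loop_score = itae_for_pid(0.0, 0.0, 0.0)
tuned_score = itae_for_pid(2.0, 5.0, 0.0)
```

Because ITAE weights late errors more heavily than early ones, it penalizes slow settling and sustained oscillation more than the initial transient.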

Additionally, Eid et al. [128] proposed a weighted average ensemble model for predicting hemoglobin levels from blood samples, which is critical for diagnosing conditions like anemia. The authors used a Sine Cosine Algorithm combined with SFS (SCSFS) to optimize the ensemble weights. This hybrid method effectively fine-tuned the model parameters. In comparisons with traditional machine learning techniques such as Decision Trees, MLP, SVR, and Random Forest, the SCSFS model significantly outperformed existing models, providing accurate hemoglobin level estimates and highlighting the effectiveness of SFS in optimizing ensemble learning for medical applications.
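A weighted average ensemble of this kind reduces to a small objective function that a meta-heuristic can minimize over the weight vector. The sketch below is a generic illustration, assuming non-negative weights with a positive sum and RMSE as the fitness measure; the function names and the toy numbers are hypothetical and do not reproduce the setup in [128].

```python
import math


def ensemble_predict(model_preds, weights):
    """Weighted average of per-model predictions; weights are normalized to sum to 1."""
    total = sum(weights)
    normalized = [w / total for w in weights]
    n_samples = len(model_preds[0])
    return [
        sum(w * preds[i] for w, preds in zip(normalized, model_preds))
        for i in range(n_samples)
    ]


def rmse(y_true, y_pred):
    """Root mean squared error between targets and predictions."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true))


def ensemble_objective(weights, model_preds, y_true):
    """Fitness that an optimizer would minimize over the weight vector."""
    return rmse(y_true, ensemble_predict(model_preds, weights))


# Toy data: two base models, two samples (values are illustrative only).
toy_preds = [[10.0, 12.0], [14.0, 12.0]]
toy_targets = [11.0, 12.0]
equal_score = ensemble_objective([1.0, 1.0], toy_preds, toy_targets)
skewed_score = ensemble_objective([3.0, 1.0], toy_preds, toy_targets)
```

In this toy case, weighting the first model more heavily lowers the error, which is the kind of improvement the weight search is meant to find.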

In other research, Cheraghalipour et al. [129] proposed optimizing a closed-loop agricultural supply chain with an eight-echelon logistics network, including suppliers, farms, and biogas centers. The authors developed a bi-level programming model to minimize costs and maximize profits from biogas and compost sales. To address the NP-hard nature of the problem, they used meta-heuristic algorithms, specifically SFS and GA, along with hybrid approaches (GA-SFS and SFS-GA). The SFS-GA hybrid outperformed other methods, demonstrating its effectiveness in supply chain optimization. The study highlights the benefits of incorporating biogas and compost production, reducing pollution while enhancing profitability in agricultural-dependent countries.

Similarly, Abdelhamid et al. [130] enhanced speech emotion recognition by augmenting datasets with noise and optimizing a CNN-LSTM deep learning model’s hyperparameters using a hybrid method that combines the SFS algorithm and Whale Optimization Algorithm (WOA). This approach improved recognition accuracy on four datasets (IEMOCAP, Emo-DB, RAVDESS, and SAVEE) to 98.13%, 99.76%, 99.47%, and 99.50%, respectively, outperforming existing methods.

In another study, Abdel-Nabi et al. [131] developed eMpSDE, a hybrid optimization framework combining the SFS algorithm with Success-History based Adaptive Differential Evolution (SHADE) to address local minima and premature convergence issues. The three-phase structure includes a local search to enhance solution quality and convergence speed. Evaluations across several optimization problems showed that eMpSDE is competitive with contemporary algorithms, demonstrating the effectiveness of integrating SFS with adaptive techniques for improved optimization performance.

Additionally, Saini et al. [132] introduced a hybrid SFS (HSFS) algorithm that combines SFS with pattern search optimization to tune a fractional-order proportional-integral-derivative (FOPID) controller for improved DC motor speed control. The HSFS algorithm optimizes the FOPID controller parameters by minimizing the Integral of Time-multiplied Absolute Error (ITAE). The HSFS-FOPID approach demonstrates enhanced performance in motor control, outperforming existing methods in metrics such as rise time, settling time, overshoot, and overall ITAE, highlighting its effectiveness in this application.

In related research, El-Kenawy et al. [133] proposed a hybrid model integrating Grey Wolf Optimization and SFS (GWO-SFS) with a Random Forest regressor to predict sunshine duration accurately. Using meteorological data and a pre-feature selection process, the model reduced prediction errors by over 20% and achieved correlation coefficients above 0.999, outperforming previous models in accuracy and precision.

In addition, Tarek et al. [134] developed an optimization method combining the SFS algorithm and PSO to enhance wind power generation predictions using an optimized Long Short-Term Memory (LSTM) network. The authors constructed several regression models and utilized a dataset of 50,530 instances. The SFS-PSO optimized LSTM model achieved a coefficient of determination (

Likewise, Zhang et al. [135] developed the SFS-HGSO algorithm, an enhanced version of Henry’s Gas Solubility Optimization (HGSO), to improve feature selection and engineering optimization. It incorporates SFS strategies, such as Lévy flight and Gaussian walk, to enhance search diversity and local search capabilities. The algorithm effectively addresses slow convergence issues and was validated on 20 UCI benchmark datasets, outperforming other algorithms such as Whale Optimization and Harris Hawks Optimization.
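The two step generators mentioned here can be sketched briefly. The Lévy step below uses Mantegna's algorithm, a widely used way to draw heavy-tailed step lengths; the way the step is applied relative to the best point, the `scale` factor, and all function names are assumptions for illustration and may differ from the update rules in [135].

```python
import math
import random


def mantegna_sigma(beta):
    """Standard deviation of the numerator Gaussian in Mantegna's algorithm."""
    numerator = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    denominator = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (numerator / denominator) ** (1 / beta)


def levy_step(beta, rng):
    """One heavy-tailed step length: mostly small moves with occasional long jumps."""
    u = rng.gauss(0.0, mantegna_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def levy_move(point, best, beta, scale, rng):
    """Global move: perturb each coordinate in proportion to its distance from the best point."""
    return [p + scale * levy_step(beta, rng) * (p - b) for p, b in zip(point, best)]


def gaussian_move(best, sigma, rng):
    """Local move: sample a new point in a Gaussian neighbourhood of the best point."""
    return [rng.gauss(b, sigma) for b in best]


rng = random.Random(1)
explored = levy_move([0.3, 1.7], [0.0, 1.0], beta=1.5, scale=0.01, rng=rng)
refined = gaussian_move([0.0, 1.0], sigma=0.05, rng=rng)
```

The heavy tail of the Lévy step supports diversification, while the Gaussian neighbourhood sample concentrates effort near the incumbent solution.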

Furthermore, Khafaga et al. [136] introduced a hybrid approach that combines Long Short-Term Memory (LSTM) units with the SFS algorithm and Dipper-Throated Optimization (DTO) to improve energy consumption forecasting. The dynamic DTO-SFS algorithm optimizes LSTM parameters, resulting in enhanced accuracy. Comparative analyses with five benchmark algorithms showed that the proposed model achieved an RMSE of 0.00013, indicating its effectiveness in energy prediction, further supported by statistical validation of its performance.

In another study, Abdel-Nabi et al. [137] introduced a hybrid algorithm called 3-sCHSL, which integrates the SFS algorithm with L-SHADE to tackle real-parameter, single-objective numerical optimization. This approach enhances diversity and reduces stagnation through a guided initialization strategy. Tested on the CEC 2021 benchmark suite, 3-sCHSL demonstrated superior performance in handling complex transformations and achieved competitive results in two engineering design problems, surpassing well-known optimization algorithms.

Moreover, Rahman et al. [138] developed the Chaotic SFS-GTO (CSGO) algorithm to solve flow-shop scheduling problems (FSP). Combining features from the SFS algorithm, the Artificial Gorilla Troops Optimizer (GTO), and the Marine Predators Algorithm (MPA) with chaotic operators, the algorithm aims to minimize processing costs on non-identical parallel machines. Tested on ten benchmark FSPs, CSGO demonstrated strong performance in lower-dimensional problems but faced challenges in scalability as problem dimensions increased, showing reduced accuracy compared to earlier algorithms.

Following this, Abdel-Nabi et al. [139] developed the Iterative Cyclic Tri-strategy with an adaptive Differential Stochastic Fractal Evolutionary Algorithm (Ic3-aDSF-EA) to solve numerical optimization problems. This novel evolutionary algorithm combines SFS with a DE variant to improve exploration and exploitation. Operating in iterative cycles, it focuses on the best-performing strategies while incorporating diverse contributions. The algorithm was tested on 43 benchmark problems, demonstrating high-quality solutions, scalability, and potential for addressing real-world optimization challenges.

Additionally, Zhang et al. [140] developed a framework for diagnosing Alzheimer’s disease (AD) and mild cognitive impairment (MCI) using MRI data. The authors enhanced a Fuzzy k-nearest neighbor (FKNN) model with a hybrid algorithm, SSFSHHO, which combines the Sobol sequence, Harris Hawks Optimizer (HHO), and SFS algorithm. This approach improves initial population diversity and helps avoid local optima. The SSFSHHO-FKNN model achieved high accuracy on the ADNI dataset, surpassing other algorithms and demonstrating its potential for early diagnosis.

In other research, Zaki et al. [141] developed a hybrid optimization method combining SFS and PSO to improve the K-Nearest Neighbors (KNN) algorithm in wireless sensor networks. This approach optimizes KNN by adjusting particle positions and velocities, significantly reducing the RMSE to 0.00894 and lowering the mean absolute error (MAE). The results highlight the effectiveness of SFS-PSO in enhancing KNN’s predictive performance for data collection applications.

In another study, Xu et al. [142] proposed an enhanced SFS algorithm to improve energy efficiency and security in clustering for mission-critical IoT-enabled WSNs. The authors introduced a multitrust fusion model using an entropy weight method (EWM) to evaluate sensor trustworthiness. The improved SFS algorithm employs differential and adaptive mutation factors to select optimal cluster heads based on residual energy, integrated trust, and distance to the base station. Experimental results show that this model significantly outperforms existing clustering protocols in energy efficiency and security.
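The entropy weight method itself is a standard procedure and can be sketched independently of the clustering protocol. The example below assumes a matrix whose rows are sensors and whose columns are non-negative trust indicators with positive column sums; the indicator values, the function names, and the simple weighted-sum fusion are hypothetical and are not taken from [142].

```python
import math


def entropy_weights(matrix):
    """Entropy weight method: indicators that vary more across rows receive larger weights."""
    n_rows = len(matrix)
    n_cols = len(matrix[0])
    diversities = []
    for j in range(n_cols):
        column = [row[j] for row in matrix]
        total = sum(column)
        entropy = 0.0
        for value in column:
            share = value / total
            if share > 0:
                entropy -= share * math.log(share)
        entropy /= math.log(n_rows)  # normalize so that entropy lies in [0, 1]
        diversities.append(max(0.0, 1.0 - entropy))
    diversity_sum = sum(diversities)
    if diversity_sum <= 1e-12:
        # All indicators are uninformative: fall back to equal weights.
        return [1.0 / n_cols] * n_cols
    return [d / diversity_sum for d in diversities]


def fused_trust(matrix, weights):
    """Weighted sum of indicators per sensor (a simple fusion rule assumed for illustration)."""
    return [sum(w * x for w, x in zip(weights, row)) for row in matrix]


# Three sensors, two trust indicators; the first indicator is identical for all sensors.
trust_matrix = [
    [0.5, 0.9],
    [0.5, 0.1],
    [0.5, 0.5],
]
trust_weights = entropy_weights(trust_matrix)
trust_scores = fused_trust(trust_matrix, trust_weights)
```

An indicator that is identical for every sensor carries no discriminating information, so it receives a weight close to zero and the fused score is driven by the indicator that actually separates the sensors.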

Besides that, Alsawadi et al. [143] developed an action recognition method integrating BlazePose skeletal data with a hybrid optimization technique combining the SFS algorithm and WOA. This approach optimizes the graph structure used in spatial-temporal graph convolutional networks, enhancing action recognition performance. The method was tested on the NTU-RGB+D and Kinetics datasets, showing competitive results compared to traditional techniques.

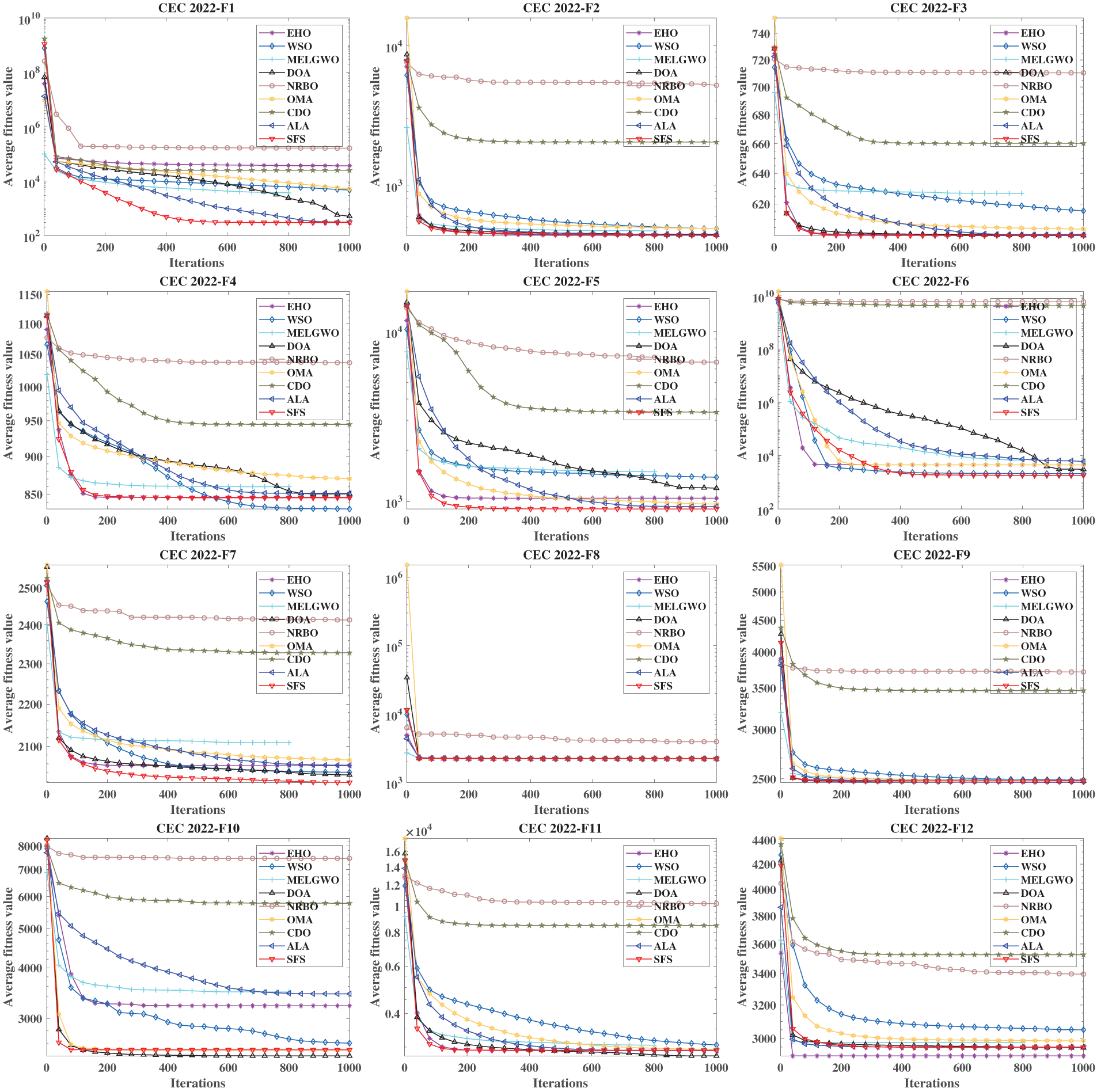

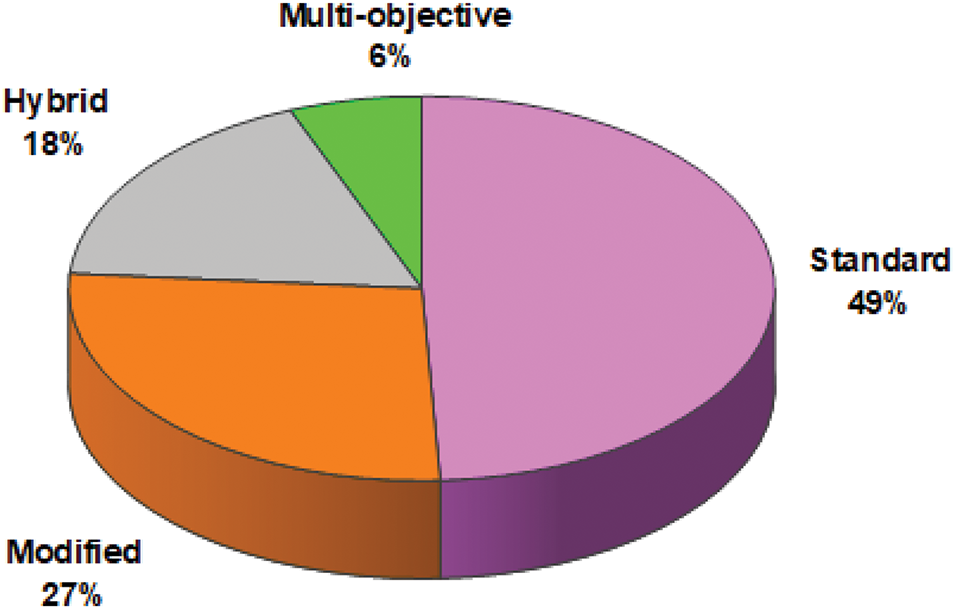

In another study, Bandong et al. [144] introduced a method to optimize gantry crane automation control by integrating the SFS algorithm with the Flower Pollination Algorithm (FPA) for PID controllers. The approach focused on enhancing position control and minimizing sway angle during crane operation, which is crucial for reducing damage and safety risks in port and construction settings. Results showed that the SFS-FPA method outperformed the PSO-based PID controller in terms of response speed and positional accuracy, although there were trade-offs in settling time.