Open Access

Open Access

REVIEW

A Comprehensive Review of Face Detection Techniques for Occluded Faces: Methods, Datasets, and Open Challenges

1 Department of Computer Systems Engineering, Arab American University, Jenin, P.O. Box 240, Palestine

2 Department of Computer Science, Birzeit University, Birzeit, P.O. Box 14, Palestine

3 Department of Computer Systems Engineering, Faculty of Engineering and Technology, Palestine Technical University–Kadoorie, Tulkarm, P.O. Box 7, Palestine

4 Information Systems and Business Analytics Department, A’Sharqiyah University (ASU), Ibra, 400, Oman

5 Department of Electrical Engineering and Computer Science, College of Engineering, A’Sharqiyah University (ASU), Ibra, 400, Oman

* Corresponding Author: Thaer Thaher. Email:

Computer Modeling in Engineering & Sciences 2025, 143(3), 2615-2673. https://doi.org/10.32604/cmes.2025.064857

Received 25 February 2025; Accepted 30 May 2025; Issue published 30 June 2025

Abstract

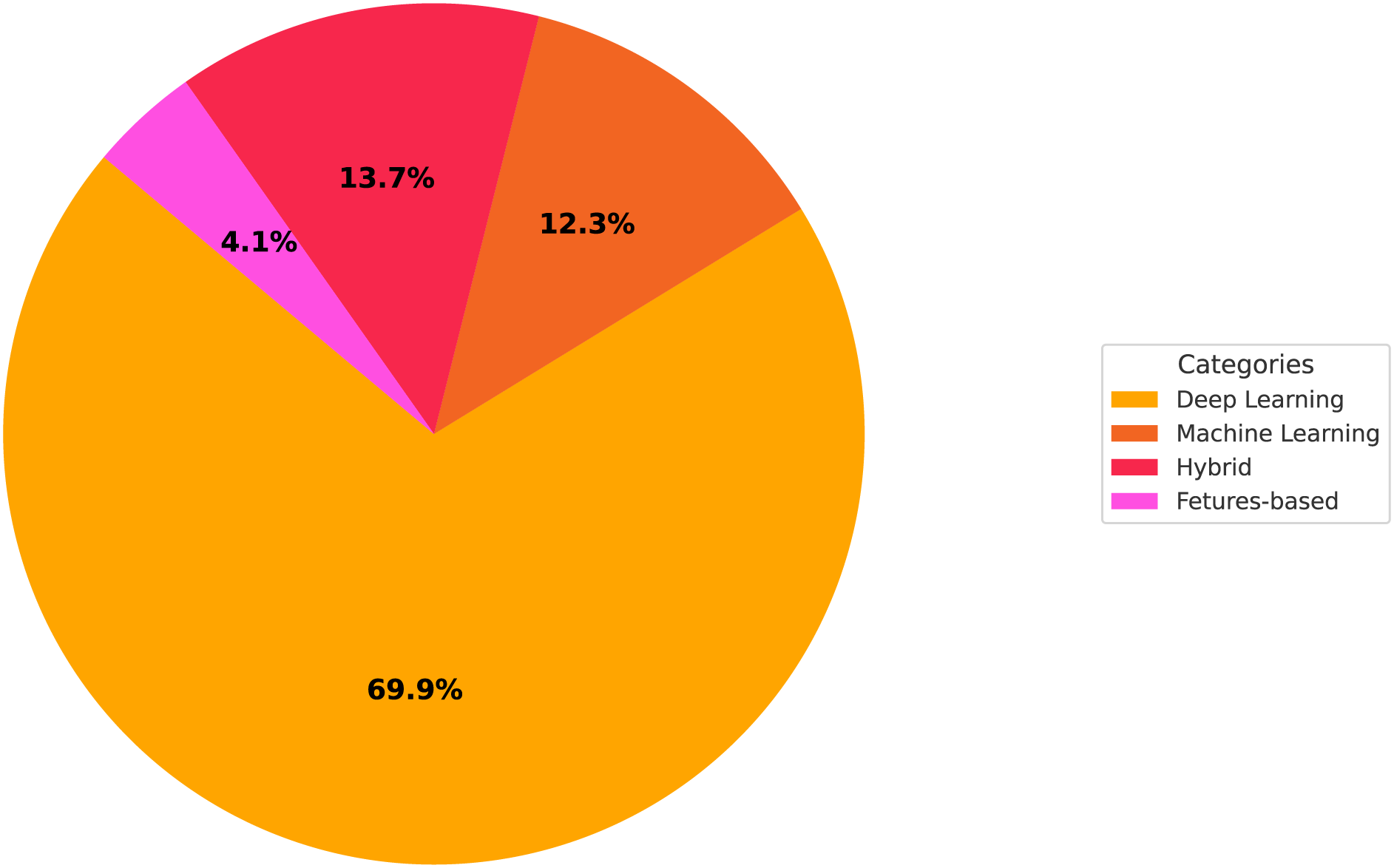

Detecting faces under occlusion remains a significant challenge in computer vision due to variations caused by masks, sunglasses, and other obstructions. Addressing this issue is crucial for applications such as surveillance, biometric authentication, and human-computer interaction. This paper provides a comprehensive review of face detection techniques developed to handle occluded faces. Studies are categorized into four main approaches: feature-based, machine learning-based, deep learning-based, and hybrid methods. We analyzed state-of-the-art studies within each category, examining their methodologies, strengths, and limitations based on widely used benchmark datasets, highlighting their adaptability to partial and severe occlusions. The review also identifies key challenges, including dataset diversity, model generalization, and computational efficiency. Our findings reveal that deep learning methods dominate recent studies, benefiting from their ability to extract hierarchical features and handle complex occlusion patterns. More recently, researchers have increasingly explored Transformer-based architectures, such as Vision Transformer (ViT) and Swin Transformer, to further improve detection robustness under challenging occlusion scenarios. In addition, hybrid approaches, which aim to combine traditional and modern techniques, are emerging as a promising direction for improving robustness. This review provides valuable insights for researchers aiming to develop more robust face detection systems and for practitioners seeking to deploy reliable solutions in real-world, occlusion-prone environments. Further improvements and the proposal of broader datasets are required to develop more scalable, robust, and efficient models that can handle complex occlusions in real-world scenarios.Keywords

Face detection is one of the most popular, fundamental, and practical tasks in computer vision. It involves detecting human faces in images and returning their spatial locations through bounding boxes [1], serving as a critical foundation for various advanced vision-based applications [2]. As a vital first step in facial analysis systems, face detection enables subsequent activities such as face alignment, recognition, verification, parsing, emotion detection, and biometric authentication. Its primary purpose is to determine the presence of faces in an image and, if detected, provide their location and extent for further analysis [3–6]. This preprocessing step reduces the amount of data to process and improves the accuracy of the next stages by concentrating on a smaller, relevant portion of the image. It is particularly helpful when dealing with images that have different backgrounds, lighting, and orientations [5]. By removing non-face data, face detection boosts both the speed and accuracy of recognition, making it ideal for large-scale, real-world applications.

Historically, the effectiveness of face recognition technologies has relied on improvements in face detection [7,8]. Early models, such as Viola-Jones [9], used basic features and simple classifiers, while today’s deep learning methods utilize advanced convolutional neural networks (CNNs) to achieve greater accuracy. This evolution highlights how progress in detection enhances the overall field of facial analysis. Fig. 1 provides representative examples of typical challenges encountered in face detection tasks. These diverse challenges include variations in scale, atypical poses, occlusions, exaggerated expressions, and extreme illumination. These challenges highlight the need for robust face detection models that can perform accurately in real-world, unconstrained environments. Accurate face detection in such unpredictable environments ensures high-quality images, allowing for enhanced feature extraction and better matching accuracy. This capability is crucial in high-security applications–such as surveillance, biometric identification, law enforcement, airport security, and access control systems–where reducing false positives and improving reliability are paramount [10].

Figure 1: Illustrative examples of face detection challenges including simple cases, variations in scale, atypical poses, occlusions, exaggerated expressions, and extreme illumination. These images, sourced from [1] (WIDER FACE dataset), are provided for illustration purposes and are not linked to any specific models reviewed in this paper

Face detection technology has improved a lot, but it still faces challenges in complex and unpredictable environments [11]. This is why ongoing research is so important to make it more reliable and valuable in practical use. Finding obscured faces is more challenging since important facial traits are sometimes obscure and difficult to identify. The difficulty is to identify faces without depending on clear landmarks, deal with differences in appearance, and even estimate missing elements of the face [12]. One main problem is that occlusions can obscure vital face traits as the lips, nose, or eyes. External objects, body parts, or ambient elements including clothes, hands, sunglasses, or masks [13] can all cause these obstructions (as illustrated in Fig. 2).

Figure 2: Example of occluded face images from the MAFA dataset [14]

Usually, depending on the evaluation of the complete face or particular landmarks, standard face detection techniques fail when these features are obscured, resulting in missed detections or large false positives [15]. For real-time applications like surveillance and security, where failing to identify obstructed faces can lead to major mistakes, this is particularly problematic. Accordingly, more advanced methods are required that can identify and infer facial features even in cases when significant portions of the face are covered in order to enhance occluded face detection.

Another big challenge in face detection is the way occlusions change a face’s appearance. In specific, the size, shape, and texture of an occlusion can be different even when it covers the same part of the face [13,16]. This makes it harder for traditional face detection systems, which expect faces to look consistent. Occlusions also cause confusion by altering the usual relationships between facial features. Deep learning models, especially CNNs, have been very effective in handling these challenges by learning patterns in both clear and occluded faces [17,18]. These models require large datasets of faces with different levels of occlusion for training, but there is one major problem: there is a lack of well-annotated datasets. As for the models, they fail to detect the occlusions from different angles and other conditions due to the insufficient variety of examples [6].

Furthermore, a crucial part in the case of an occluded input is to preserve the balance between the detection of the visible features and the comprehension of the entire face. Traditional methods are based on the recognition of the complete facial structures, and, in case of partial obfuscation, the models learn to complete the sequence based on the available information. Recent techniques such as attention mechanisms and region-based detection are applied to focus on the regions of interest and context to infer missing regions [19,20]. However, such approaches are computationally expensive and therefore infeasible for real-time applications [21].

These challenges highlight the unique difficulties of detecting occluded faces compared to regular face detection. Continued research in this area is essential for developing more robust face detection systems capable of handling diverse and unpredictable real-world conditions. The emphasis on occluded face detection has gained importance in the AI and computer vision domain due to the rising need for reliable detection in practical applications. Facial analysis technology, integrated into fields like healthcare, security, retail, and social media, must function effectively in unregulated environments where occlusions are common. The COVID-19 pandemic, with widespread mask usage, underscored the need for algorithms capable of efficient detection under partial visibility [22–24]. In security and surveillance, detecting occluded faces is critical, as individuals often obscure their faces with items like hats, scarves, sunglasses, or masks, whether intentionally or not [25,26]. Improved occluded face detection can enhance AI’s effectiveness in such critical applications [27]. In healthcare, occlusions from medical devices or environmental factors can obstruct critical facial regions, complicating tasks like emotion detection, gaze tracking, and diagnosing neurological disorders. Advances in AI-based facial analysis are contributing to more reliable telemedicine and assistive technologies, supporting consistent and accurate assessments even in challenging visual conditions [28]. Similarly, in social media, marketing, and retail, occluded face detection is vital for analyzing expressions, demographics, and engagement in dynamic situations. Retail environments often involve occlusions due to product displays or interactions, while social media platforms must detect faces obstructed by accessories or filters. Enhancing detection in these scenarios ensures AI systems perform accurately and fairly and improve their effectiveness across diverse use cases. These advancements are vital for addressing the challenges outlined across diverse applications, particularly in hidden face detection.

1.2 Objectives and Contributions of the Review

As highlighted earlier, the past few years have seen significant growth in research on face detection under occlusions. This increase reflects a growing demand to review and assess the impact of advancements in this field. The analogous challenges and notable progress in occluded face detection have motivated us to conduct this review study. The main objective of this study is to provide a comprehensive resource for researchers and practitioners interested in this topic. Face detection under occlusion remains critical for improving the reliability of real-world applications, such as surveillance, biometric authentication, and access control, where partial facial visibility is common. By providing an organized analysis of current methods, challenges, and datasets, this review aims to guide future research efforts and support practitioners in developing more robust face detection systems. To achieve this objective, we make the following key contributions:

1. We comprehensively review recent state-of-the-art approaches in the domain of occluded face detection, categorized into traditional feature-based methods, machine-learning-based approaches, advanced deep learning techniques, and hybrid methodologies.

2. We highlight key advancements, persistent challenges, and gaps in the field of occluded face detection, providing valuable insights into utilizing emerging technologies for diverse research directions.

3. We summarize and compare the reviewed approaches under varying conditions, offering a clear understanding of their strengths and limitations.

4. We analyze and compare benchmarking datasets commonly used to evaluate the performance of face detection systems under occlusions, emphasizing their characteristics and applicability.

5. We outline current challenges and promising research directions, inspiring further innovation and progress in this important area.

This paper focuses exclusively on face detection techniques, distinguishing them from face recognition methods. Specifically, it addresses the unique challenges and methodologies associated with detecting faces under occlusions. By narrowing the scope to this critical task, the review offers an in-depth analysis of improved detection approaches, contributing to the broader domain of face analysis. A specialized review paper on detecting occluded faces is essential, as most existing review studies on face detection and recognition approaches overlook the challenges posed by occlusions. Current review articles primarily concentrate on general face detection techniques or face recognition methodologies, with few explicitly addressing obstructed faces. For instance, some research examines face recognition algorithms in the context of occlusion [14,29–32], but their main focus is on identity verification rather than the foundational task of identifying the existence and location of occluded faces. Reviews on general face detection, such as [5,12,33–35], often assume full facial visibility and inadequately address the unique challenges of partial visibility or feature masking caused by occlusions. Our review paper, as a recent contribution to the field, addresses this gap by examining both past and recent studies on occluded face detection. It provides a focused resource delivering insights into detection strategies designed to solve diverse occlusion difficulties, offering a timely and essential reference for advancing this critical area of research. To sum up, the main contributions of this timely study are as follows.

The rest of this paper is organized as follows: Section 2 explains the detection of faces under occlusion, focusing on the source, type, and level of occlusions. Section 3 presents the structured methodology used to prepare this review study. Section 4 analyzes, categorizes and compares state-of-the-art methods for the detection of occluded faces. Section 5 lists and compares the benchmark datasets used for occluded face detection. The challenges and future directions in the detection of occluded faces are highlighted in Sections 6 and 7, respectively. Finally, Section 8 concludes the study.

2 Background and Foundations of Occluded Face Detection

Before presenting a detailed review of detection techniques, it is important to first establish the necessary background and terminology that will be used throughout the rest of the paper. Section 2.1 clarifies the differences between face detection and face recognition. Section 2.2 discusses the various sources of occlusions, and Section 2.3 presents the types and levels of occlusions. Understanding these sources, types, and severity levels is crucial for accurately assessing and comparing face detection methods.

2.1 Face Detection vs. Face Recognition

Face detection and face recognition are two distinct but interrelated activities within the realm of computer vision. Face detection is the process of recognizing and localizing faces in an image or video frame, typically preceding additional facial analysis [7]. Detection systems focus on precisely identifying the facial region, regardless of identity, pose, or expression, and are essential for applications such as security monitoring, photo tagging, and human-computer interaction [15,36]. Face detection can be represented as a function. Given an input image

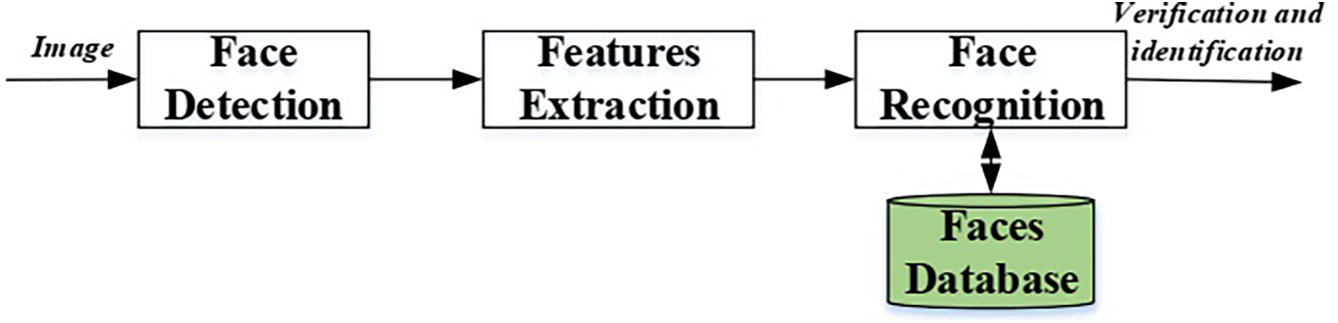

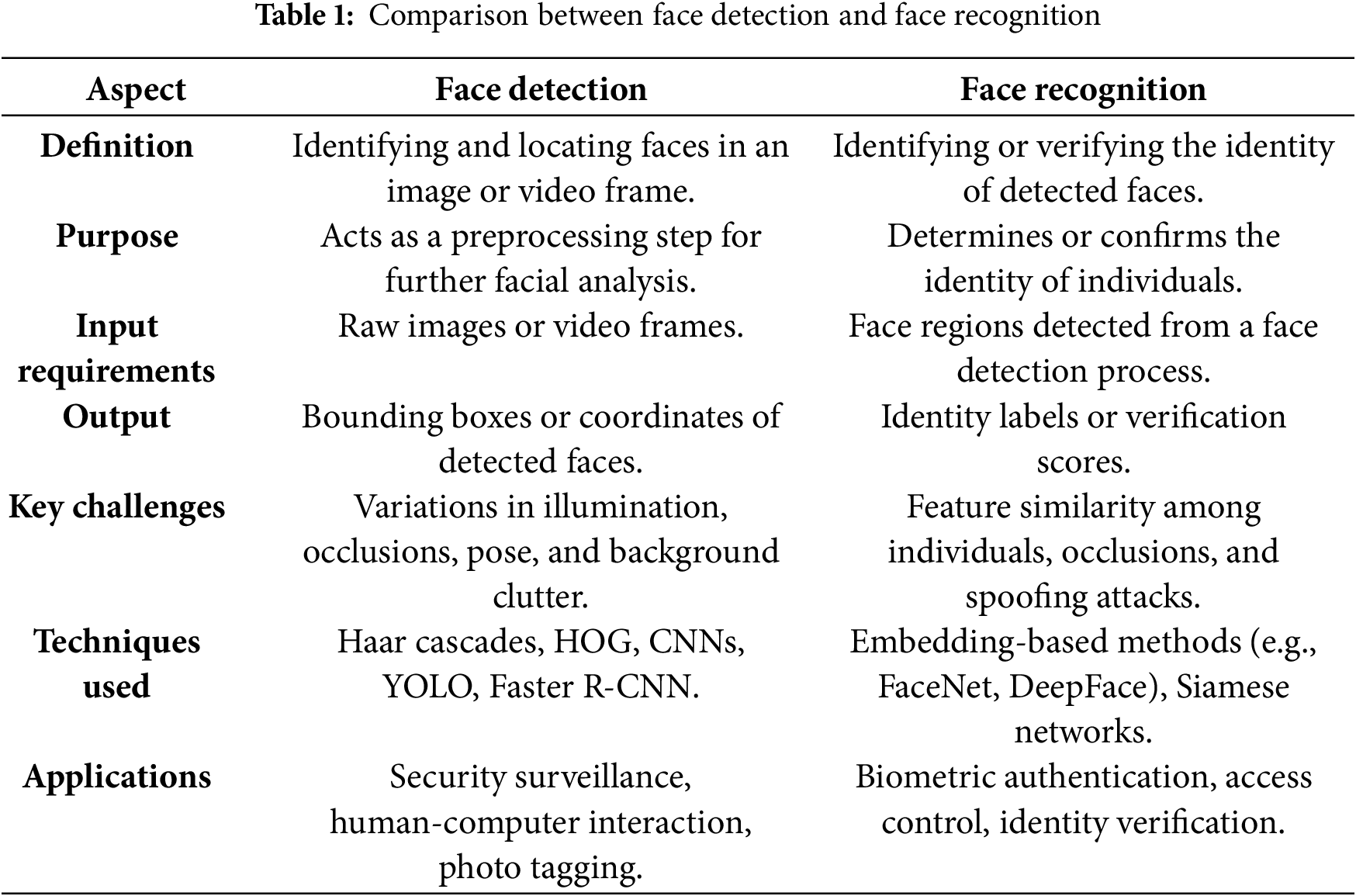

In contrast, face recognition involves the identification or verification of the identity of a recognized face. Recognition jobs often depend on extracting robust features from the identified face to compare it with established identities in a database [38]. Although face recognition is based on detection, its objectives and challenges are significantly different. Recognition systems emphasize feature extraction and comparison, frequently utilizing methods such as feature embedding [39], while detection systems concentrate on rapid localization and generalization under diverse situations, including variations in illumination and occlusions. Fig. 3 illustrates the general face analysis pipeline, highlighting the steps of detection, feature extraction, and recognition. To clarify the difference between face detection and recognition, Table 1 presents a comprehensive comparison of the two tasks across various aspects.

Figure 3: General pipeline illustrating the difference between face detection and face recognition [27]

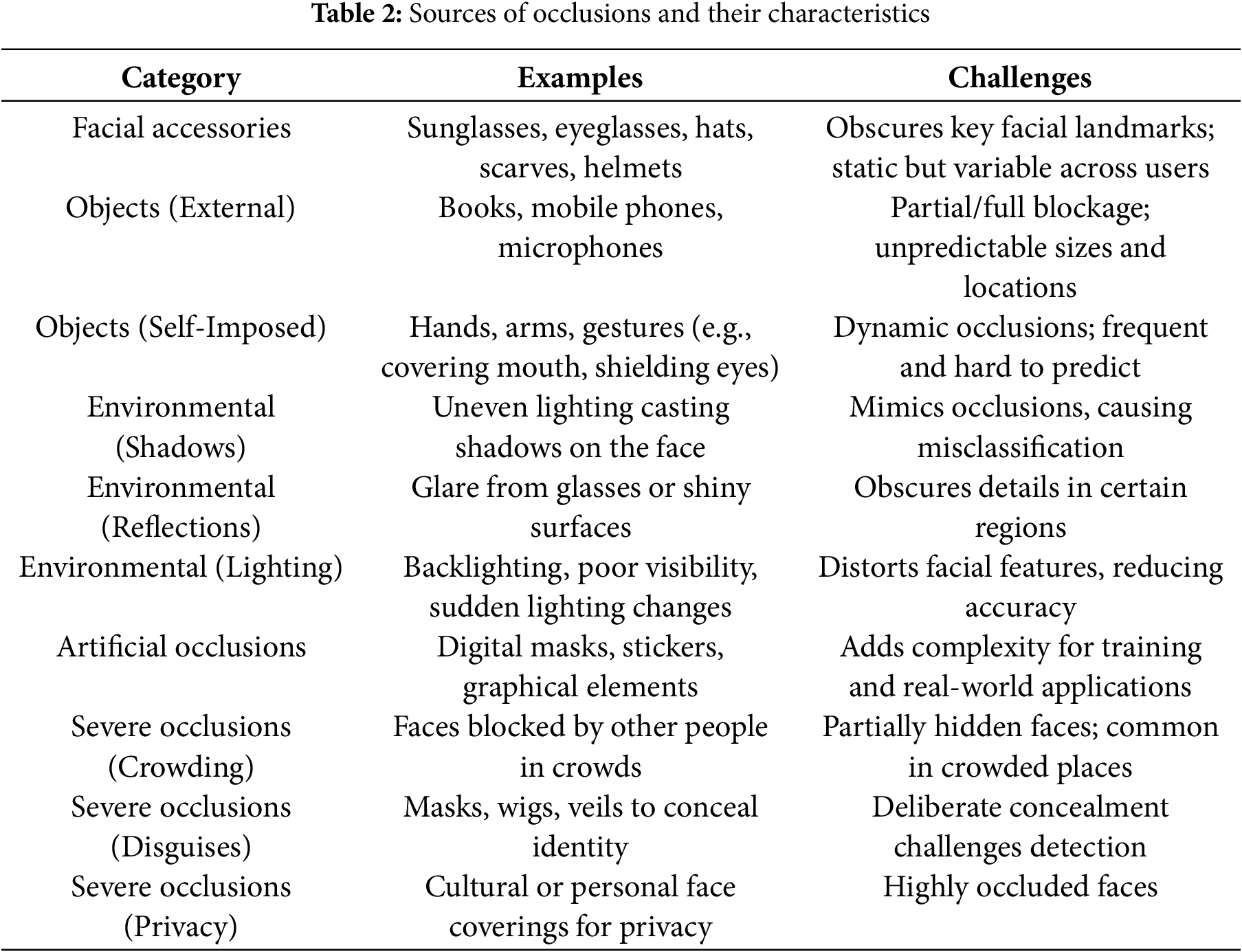

Occlusions in face detection arise from a variety of sources, each introducing unique challenges for detection algorithms [14]. To organize these challenges, occlusions can be broadly categorized based on their source and context. These categories reflect whether the obstruction arises from personal accessories, physical objects, environmental conditions, artificially created elements, or severe real-world challenges such as disguises or crowding. These sources, summarized in Table 2, are detailed as follows:

1. Facial accessories: Everyday accessories such as sunglasses, eyeglasses, hats, scarves, and helmets often block important facial features. For instance, sunglasses obscure the eyes, while masks cover the nose and mouth, disrupting algorithms that depend on these features for detection [40,41]. Although these occlusions are typically static, their variety across individuals makes them challenging to handle.

2. Objects: Occlusions caused by objects can be divided into two types:

• External objects: Items like books, phones, or microphones can partially or fully block the face, especially during activities like reading or speaking [12,42].

• Self-imposed obstructions: Hands, arms, or gestures, such as covering the mouth or shielding the eyes, create dynamic occlusions. These vary in size, shape, and location, making them particularly hard to predict and handle [16].

3. Environmental factors: The environment can create occlusions that interfere with detection systems in various ways:

• Shadows: Uneven lighting can cast shadows on the face, making it appear partially occluded [34].

• Reflections: Glare from glasses or shiny surfaces can hide important facial details [43].

• Lighting variations: Sudden changes in lighting, such as backlighting or low visibility, can distort facial features and lower detection accuracy [35].

4. Artificial occlusions: These are intentionally created occlusions, often used to test algorithms or ensure privacy. Examples include digital masks, stickers, or other graphical overlays on faces [14]. While useful for training models, artificial occlusions can make real-world detection more challenging.

5. Severe occlusions in specialized scenarios: Some occlusions are more extreme and deliberate, particularly in real-world contexts like security or surveillance [44]. These include:

• Crowding: Faces may be partially blocked by other people in crowded places like public transport or events.

• Disguises: Deliberate obstructions such as masks, wigs, or veils are often used to conceal identity.

• Privacy measures: Cultural or personal practices, such as wearing face coverings for religious or privacy reasons, can make detection systems less effective [45].

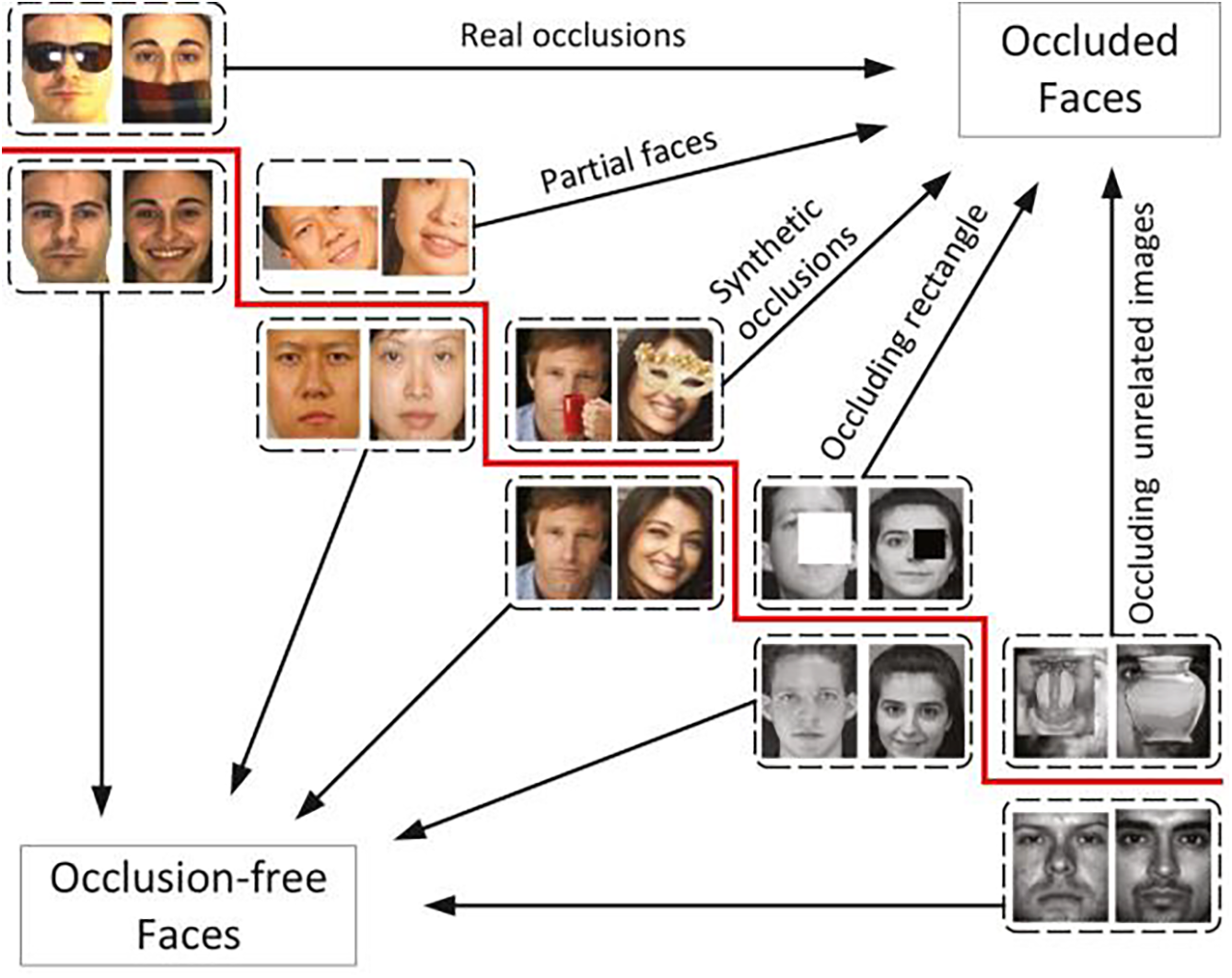

To better illustrate the different sources and forms of occlusions, Fig. 4 presents a range of occluded face examples, including real-world occlusions (e.g., sunglasses, scarves), synthetic occlusions (e.g., digital masks), and unrelated obstructing objects.

Figure 4: Examples of occlusion types commonly encountered in face detection tasks, including real-world occlusions, synthetic occlusions, partial faces, and unrelated occluding objects. Adapted from [14]

2.3 Face Occlusion Types and Levels

Handling occlusion in face detection requires a comprehensive understanding of the different types and degrees of occlusion. These elements directly affect the design and effectiveness of detection algorithms. This section examines the basic types of occlusion, their characteristics, and their consequences for detection methodologies, with reference to current literature. Occlusion can be categorized from two complementary perspectives: the overall level of coverage (partial and high occlusion) and the areas that are most impacted (spatial occlusion). Together, these classifications provide a comprehensive and practical foundation for addressing the various issues posed by occlusion.

2.3.1 Partial and High Occlusion

Occlusion can be categorized based on the degree of coverage into partial and high occlusion. For example, studies like [4,46] classify faces into three groups: non-occluded, partially occluded, and heavily occluded, depending on the percentage of the face area covered. Partial occlusion is defined as 1% to 30% coverage, while heavy occlusion exceeds 30%. Although this method offers a clear framework for classification, it may fail to account for extreme cases, such as fully obscured faces where 100% of the face is covered. Another study by [13] divides the face into four main regions: eyes, nose, mouth, and chin. They categorized occlusion levels based on how many regions are covered. Faces with one or two covered regions are classified as weakly occluded, three regions as medium occlusion, and all four as heavily occluded. While this approach adds more detail, it struggles to distinguish between different degrees of heavy occlusion, such as 70% vs. 100% coverage. To address this, reference [37] refines the classification by dividing the face into five regions: the forehead, two eyes, nose, mouth, and chin. This finer division helps differentiate between heavily occluded and fully occluded faces, which is particularly useful in scenarios like faces covered with niqabs (a cultural or religious head covering worn by some Muslim women [6]). By accounting for occlusion levels from 70% to 100%, this method provides a more detailed understanding of high occlusion.

According to the aforementioned studies, occlusions can be classified into partial occlusion and high occlusion, with additional distinctions based on the extent of covered and certain facial parts that are obscured.

• Partial occlusion arises when a segment of the face is obstructed, for instance, when the eyes are concealed by glasses or the mouth is obscured by a hand [47]. Partial occlusion disturbs the symmetry of face landmarks, upon which numerous algorithms depend for precise detection. Traditional feature-based approaches, such as Haar cascades and HOG, frequently struggle to generalize effectively under partial occlusions due to their reliance on the total visibility of essential face features.

• High occlusion denotes instances in which over 50% of the face is concealed. Typical instances encompass faces obscured by masks, scarves, or environmental obstacles such as foliage [13]. High occlusion is a considerable obstacle for conventional techniques and certain deep learning methodologies, as the visible areas may lack adequate information for dependable detection. Recent advancements in deep learning, including attention mechanisms and occlusion-aware models, have demonstrated the potential to tackle these scenarios.

In addition to the overall degree of coverage, occlusion can also be categorized based on the specific regions of the face that are obstructed. Spatial occlusion examines how particular areas of the face are obscured, which can have distinct impacts on detection algorithms. For example:

• Upper Occlusion: Obstructions of the forehead and eyebrows, such as those caused by hats or hair, can interfere with alignment algorithms that rely on these features.

• Lower Occlusion: Covering the mouth and chin, as with masks or scarves, poses challenges for recognition tasks that depend on these regions.

To better understand the impact of occlusion, it is essential to categorize it into levels:

• Low Occlusion: Less than 25% of the face is obscured. Examples include glasses or slight shadows.

• Medium Occlusion: Between 25% and 50% of the face is obscured. Examples include medical masks or objects partially blocking the face.

• High Occlusion: More than 50% of the face is obscured, further subdivided into:

– Heavily Occluded: 50% to 70% of the face is covered, such as by scarves or environmental barriers.

– Fully Occluded: 70% to 100% of the face is covered, as in cases like niqabs or full veils.

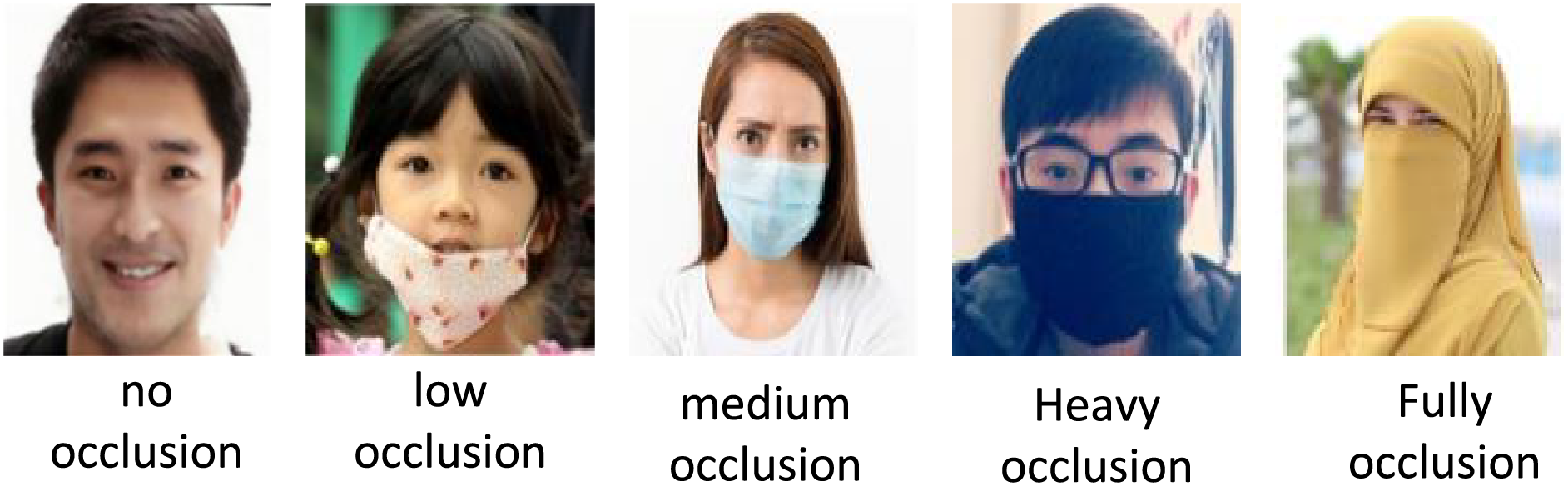

Fig. 5 illustrates examples of faces with varying degrees of occlusion, ranging from no occlusion to fully occluded faces. Occlusion levels in Fig. 5 are based on the approximate percentage of the facial area covered by occluding objects (e.g., hands, masks, glasses). High occlusion is defined as more than 50% of the face being obscured by objects such as masks, hands, or other barriers. The visualization highlights the progressive challenges introduced as occlusion levels increase, demonstrating the necessity for robust algorithms capable of handling each scenario effectively.

Figure 5: Examples illustrating different levels of occlusion, ranging from no occlusion to full occlusion. The images are sourced from publicly available benchmark datasets [6,13]. Low occlusion covers less than 25% of the face, medium occlusion covers 25%–50%, heavily occluded faces have 50%–70% coverage, and fully occluded faces exceed 70%. The images highlight the increasing challenge for face detection as occlusion levels rise

3 Review Scope and Methodology

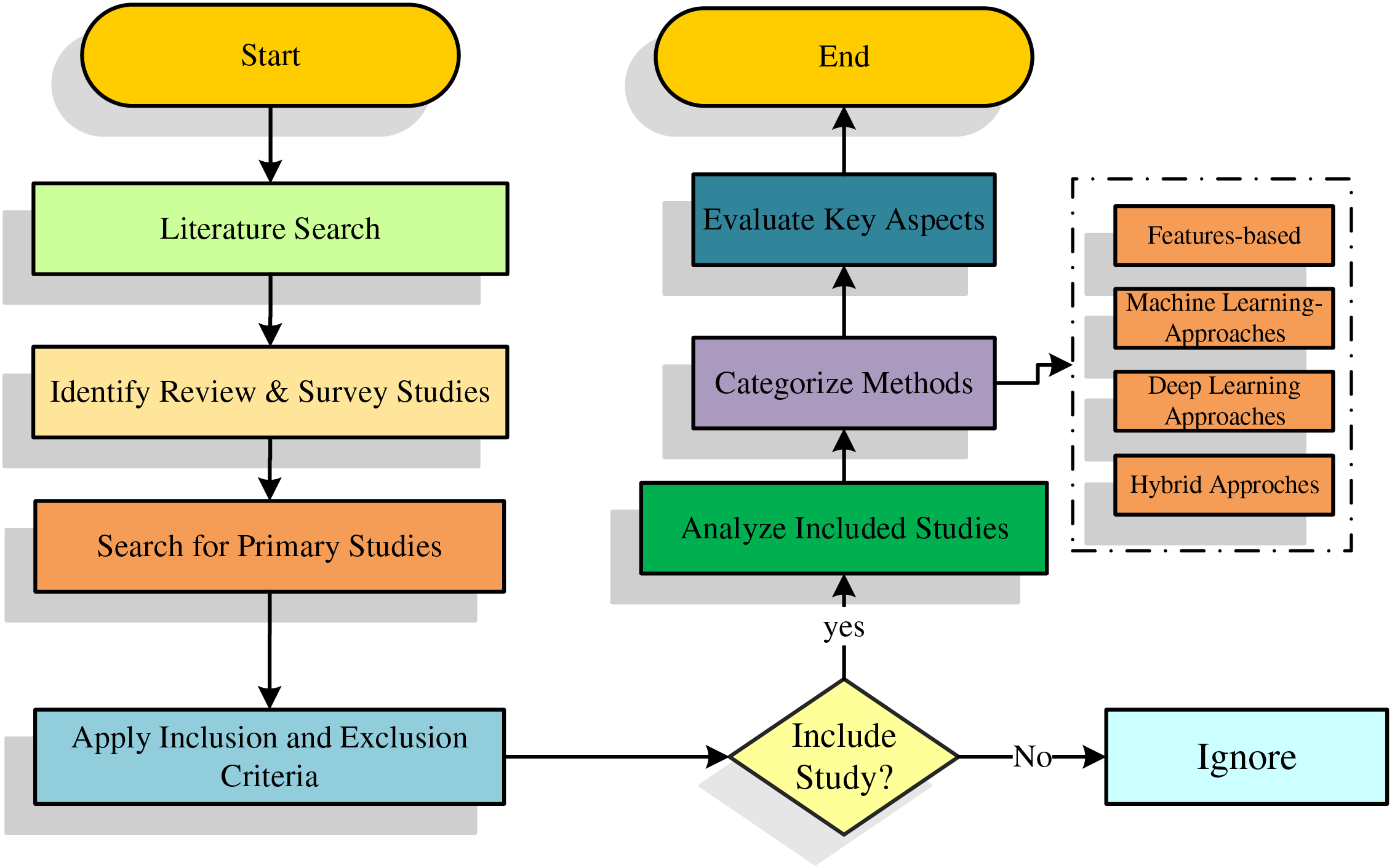

This section describes the methodology used to choose and evaluate the existing literature on face detection under occlusion. It also includes a summary and gap analysis of previously published review studies in this domain. This helps justify the need for the present review and clarify its distinct contributions. This review study followed a structured methodology to ensure a comprehensive evaluation of face detection techniques for occluded faces. The methodology was divided into several stages, as shown in Fig. 6, which illustrates the process from the literature search to classification and summarization of results.

Figure 6: Structured methodology for evaluating face detection techniques under occlusion

3.1 Search Strategy and Inclusion Criteria

To ensure comprehensive coverage, our review was conducted in two phases. In the first phase, we focused on identifying and analyzing existing review and survey studies related to face detection and recognition. After identifying gaps in previous surveys, we proceeded to the second phase, where a systematic search was conducted across multiple academic databases, including IEEE Xplore, SpringerLink, ScienceDirect, ACM Digital Library, and Google Scholar. Keywords used during the search included “face detection,” “face recognition,” “occlusion,” “occluded face detection,” “partially occluded faces,” “masked face detection,” “face detection with masks,” “face detection under unconstrained environments,” “survey,” and “review paper.” For the second phase, the scope included both partial and heavy occlusions to cover a wide range of scenarios. We applied specific criteria to include or exclude studies in our review. Studies published between 2010 and 2024 were considered. In addition, the inclusion criteria focused on studies that address face detection techniques under occlusion, papers proposing novel algorithms, frameworks, or datasets, and studies providing experimental evaluations using standard benchmarks or self-customized datasets. In contrast, the exclusion criteria eliminated studies that focused entirely on face detection or face recognition rather than handling occlusion scenarios. Studies focusing purely on face recognition or identity verification under occlusion were excluded unless they included detection components.

3.2 Categorization and Evaluation of Methods

Based on the analysis of the retrieved studies, the selected papers were grouped into four primary categories: feature-based approaches, traditional machine learning approaches, advanced deep learning-based approaches, and hybrid approaches. Each category was further divided into subcategories to capture the specific methodologies employed. These subcategories allowed us to highlight key differences in techniques, such as handcrafted feature extraction, statistical modeling, ensemble learning methods, CNNs, recent advancements based on attention mechanisms, transformer architectures, and Generative Adversarial Networks (GANs), and combinations of traditional and modern approaches.

To evaluate and compare the reviewed methods, we analyzed several key aspects across all studies. Firstly, we captured the proposed approaches and methodologies employed in each study. This involved identifying whether the methods relied on handcrafted features, machine learning algorithms, deep learning frameworks, or hybrid techniques. After that, we examined the validation datasets, including standard benchmarks and custom datasets designed for occlusion scenarios. Then, the occlusion levels that were considered in each study were evaluated in order to determine the adaptability of the proposed methods. The evaluation metrics used in the studies were also summarized, including precision, recall, mean average precision (mAP), F1 scores, and intersection over union (IoU). Finally, the advantages and disadvantages of each approach were evaluated, and its strengths were noted in a specific context, such as computational cost, generalization ability, and sensitivity to variations in occlusion type or severity. This methodology allowed us to determine the gaps and trends, especially in the complex scenarios of detecting covered faces. It also allowed us to distinguish the open problems, propose directions for detecting faces under partial and severe occlusions. It also enabled us to assess the potential of the reviewed techniques for real-time applications and challenging unconstrained environments.

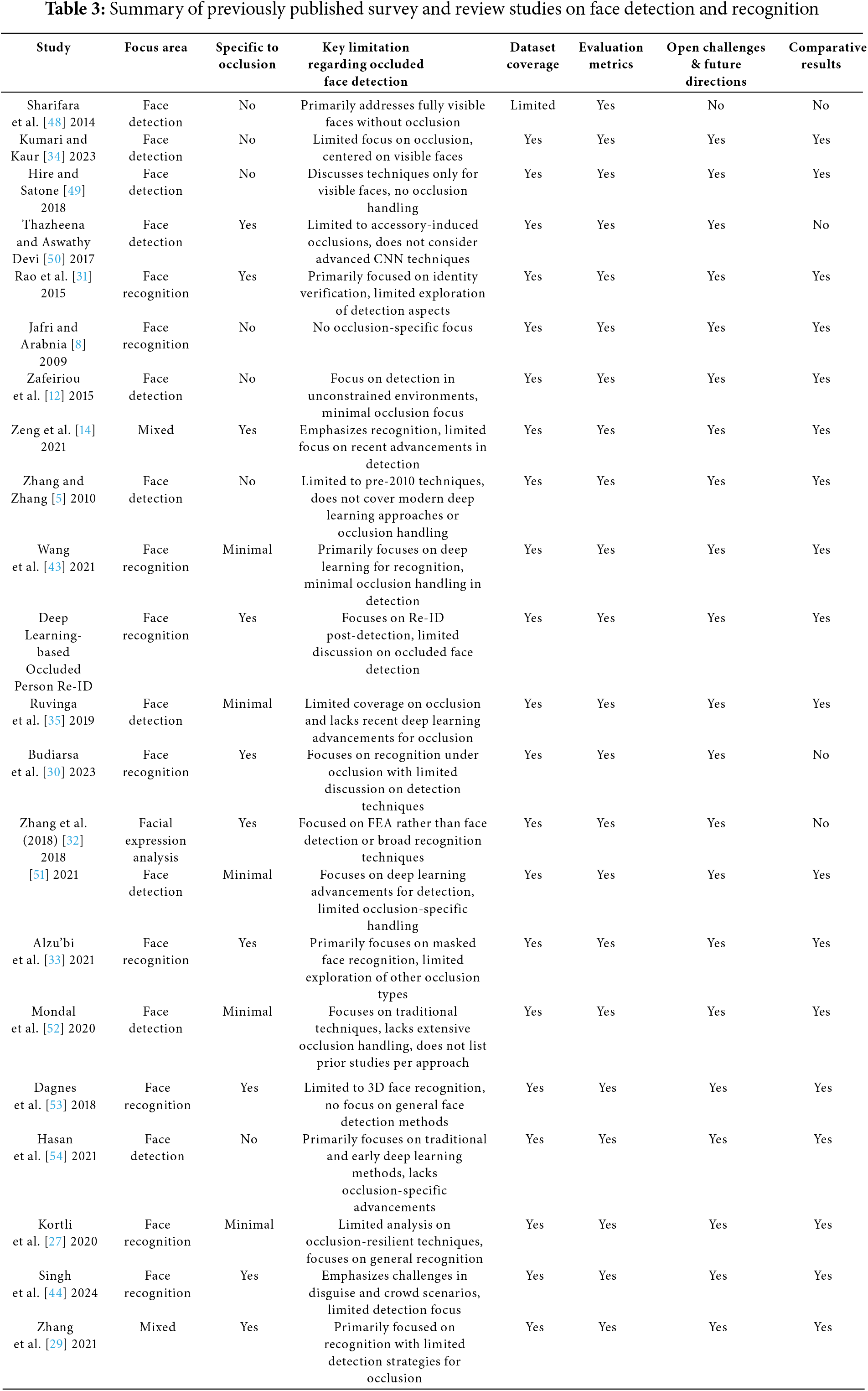

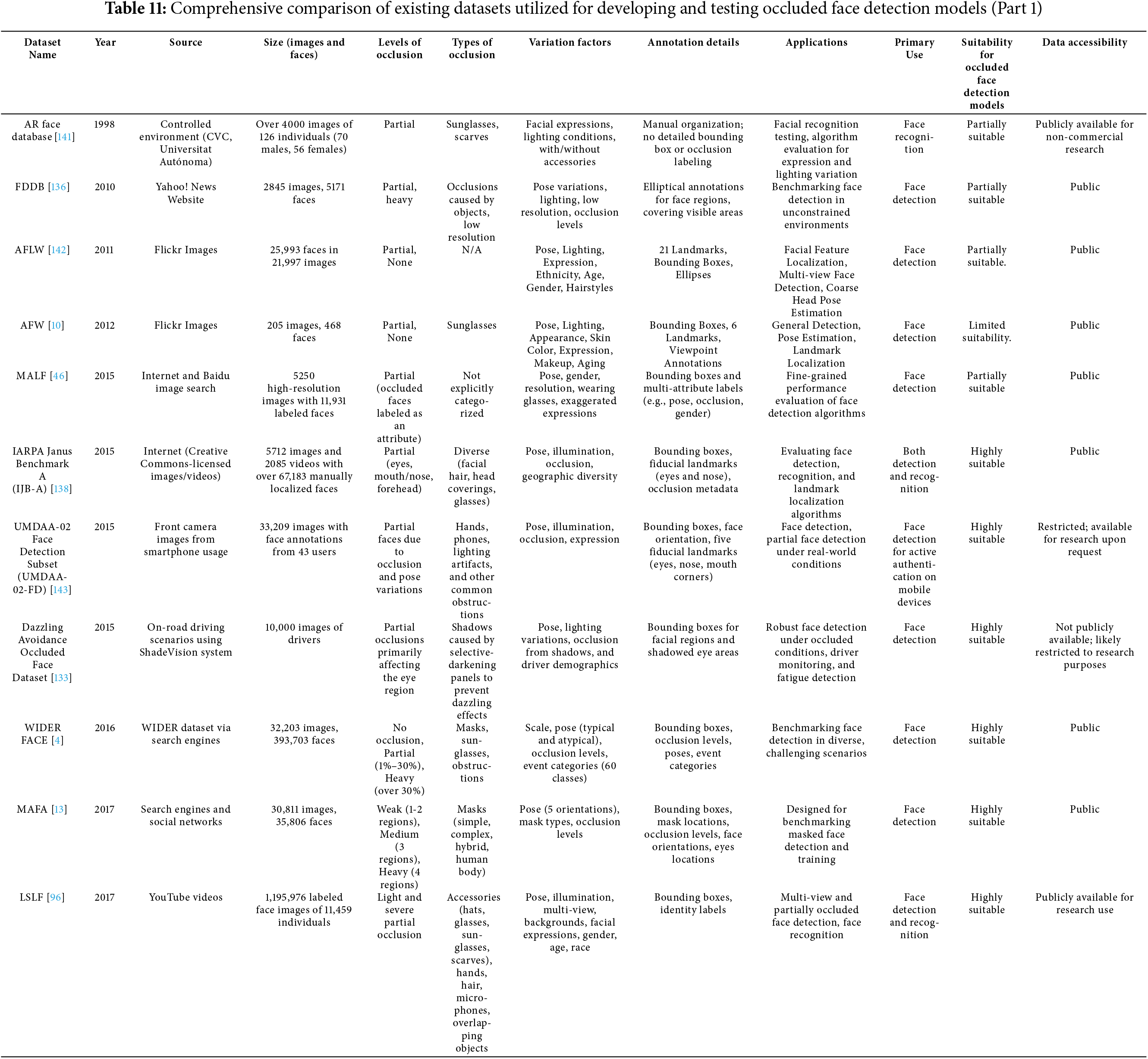

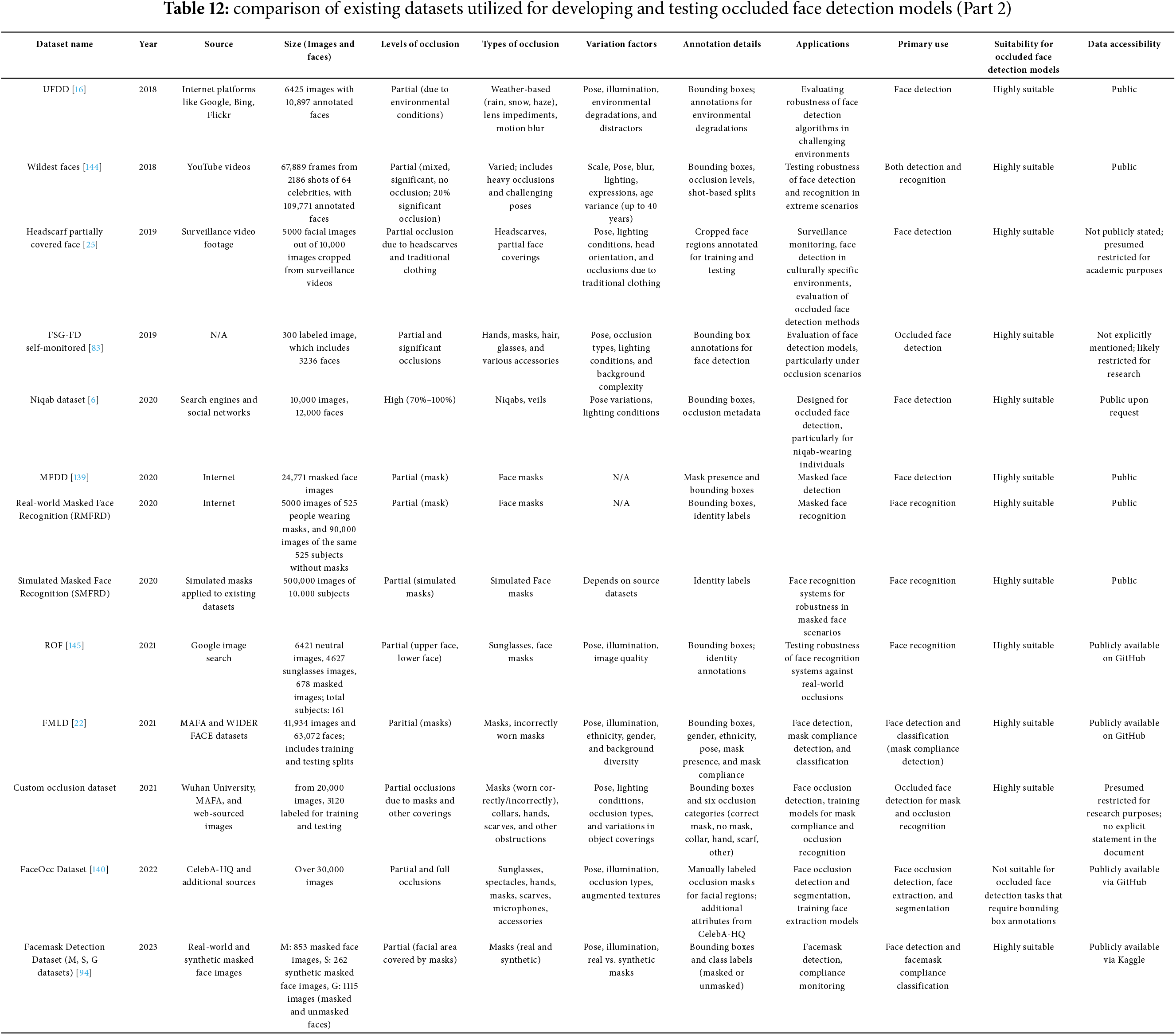

3.3 Summary of Existing Review and Survey Studies

This section examines and classifies existing review articles and surveys related to face detection, particularly those that have systematically examined progress in this field. Based on our analysis of the existing literature, review studies can be classified into three main groups: face detection, face recognition, and a combination of the two approaches. Table 3 presents a detailed summary of previously published review and survey studies related to face detection and recognition. It highlights each study’s focus area, specificity to occlusion challenges, dataset coverage, evaluation metrics, open challenges, and comparative results. This table helps to clearly identify the gaps in existing surveys, reinforcing the motivation for a focused and dedicated review of occluded face detection.

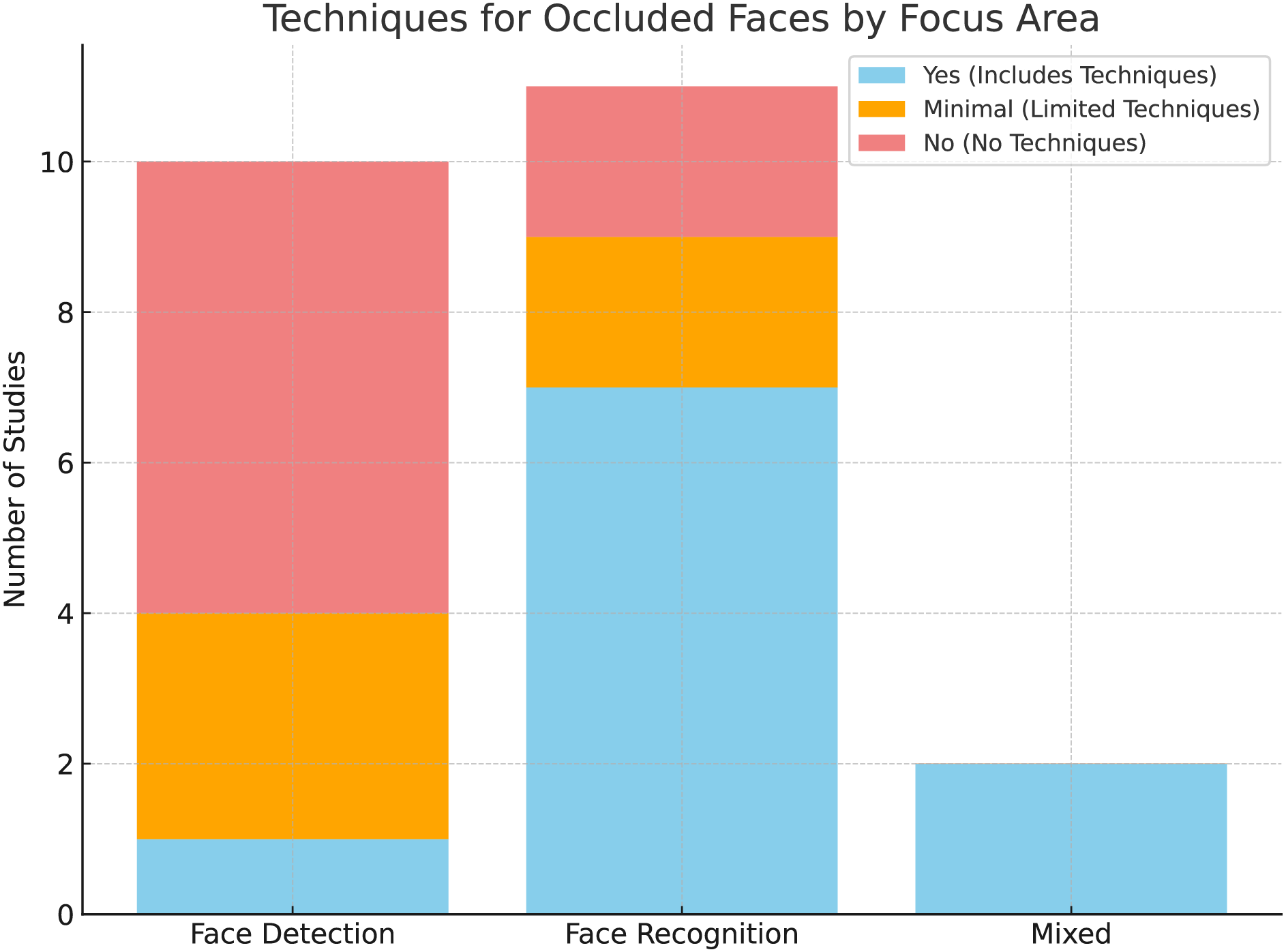

As summarized in Table 3 and highlighted in Fig. 7, most existing reviews do not specifically focus on occlusion-related techniques or provide only minimal insights. This highlights the need for a dedicated review of face detection under occlusion. Reviews such as those of [34,48,49], focus primarily on challenges such as pose, lighting, and background clutter, assuming full facial visibility. These studies offer limited insight into scenarios where occlusions significantly reduce detection accuracy. On the other hand, face recognition reviews, such as those of [30,31], focus on managing occlusions during identity verification. While these studies explore feature extraction and reconstruction techniques to mitigate occlusion effects, they do not address the critical challenge of detecting occluded faces. Similarly, mixed approaches, as shown by [14,29], touch on detection methods but lack an in-depth analysis of techniques specifically tailored to occluded face detection. In addition, many studies fail to adequately address data sets and evaluation metrics for occluded face detection. Furthermore, challenges such as reducing false positives caused by occlusion patterns, creating generalizable detection models, and handling different occlusion levels remain underexplored. Therefore, this review aims to address these gaps by providing a focused analysis of occluded face detection techniques, datasets, challenges, and future directions.

Figure 7: Distribution of review and survey studies on face detection and recognition techniques for occluded faces. The data is based on the studies summarized in Table 3 which are collected through manual database searches using relevant keywords

After defining the scope of existing review studies and confirming the necessity of dedicated occluded face detection analysis, the following section presents a thorough examination of current methodologies. The analysis groups detection methods according to their fundamental approaches, which range from traditional feature-based models to deep learning and hybrid strategies, including recent Transformer and GAN-based techniques.

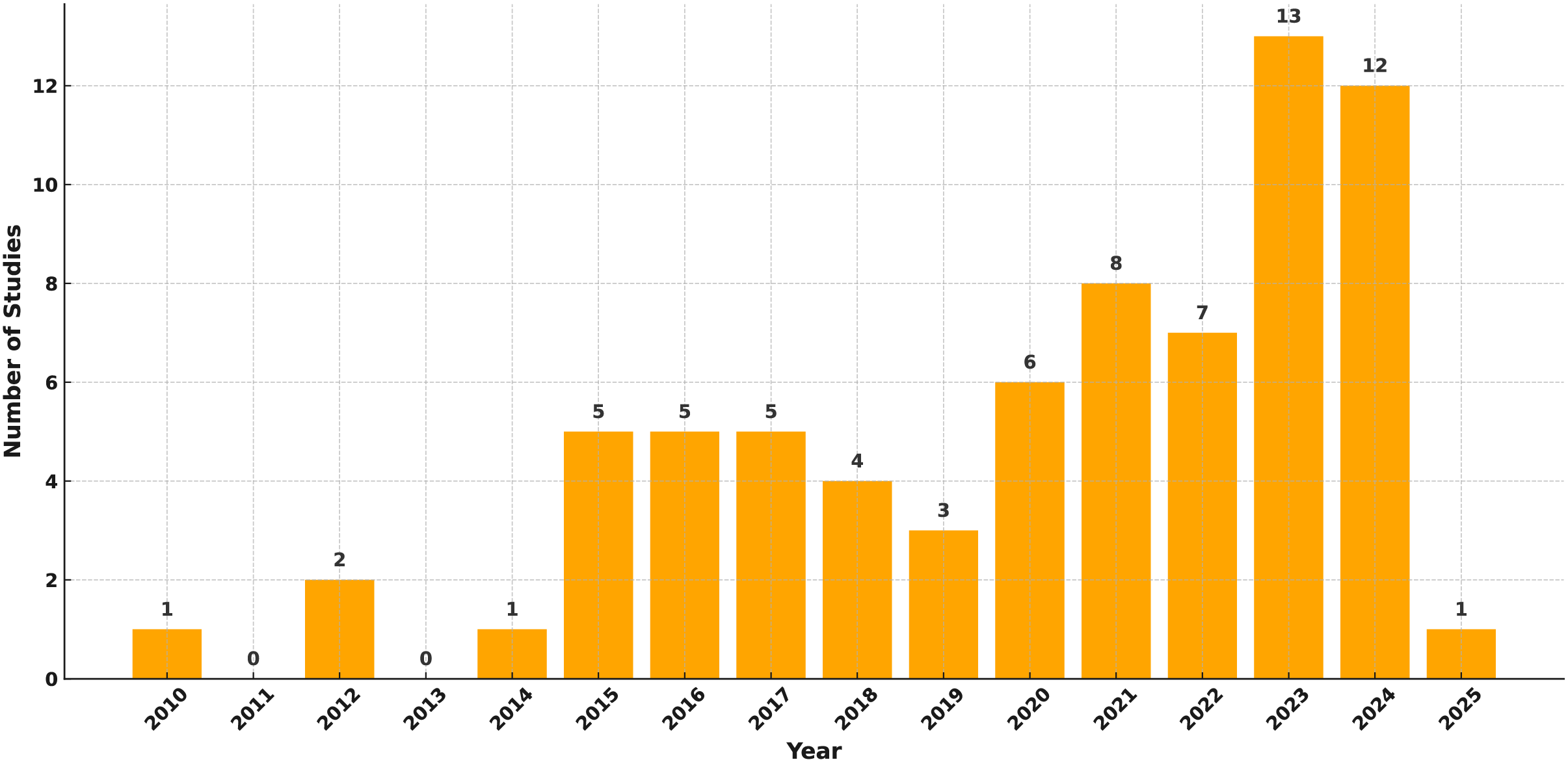

4 Review of Detection Methods for Occluded Faces

In this part, the current studies in detecting occluded faces are systematically and comprehensively reviewed. It analyses and groups several strategies according to their basic approaches. Separate subsections in the section are arranged such that each one corresponds with a main category of techniques. Based on the reviewed literature, methods are categorized into three main groups: (1) classical techniques, including handcrafted feature-based and traditional machine learning-based models; (2) advanced deep learning-based methods, further divided into CNN-based, attention-driven, transformer-based, and GAN-based models; and (3) hybrid methods that integrate traditional and modern strategies. Based on their approaches, applications, degrees of occlusion handled, used datasets, advantages, constraints, and evaluation criteria, a thorough review of the relevant studies is offered for every category. Each section ends with a summary table methodically demonstrating outcomes to enable simple, easy comparisons of approaches. Visualizations are given to highlight important trends and developments in the area, including the publication distribution over time, the relative popularity of every method category, and comparative performance measures across common datasets.

4.1 Classical Approaches for Occluded Face Detection

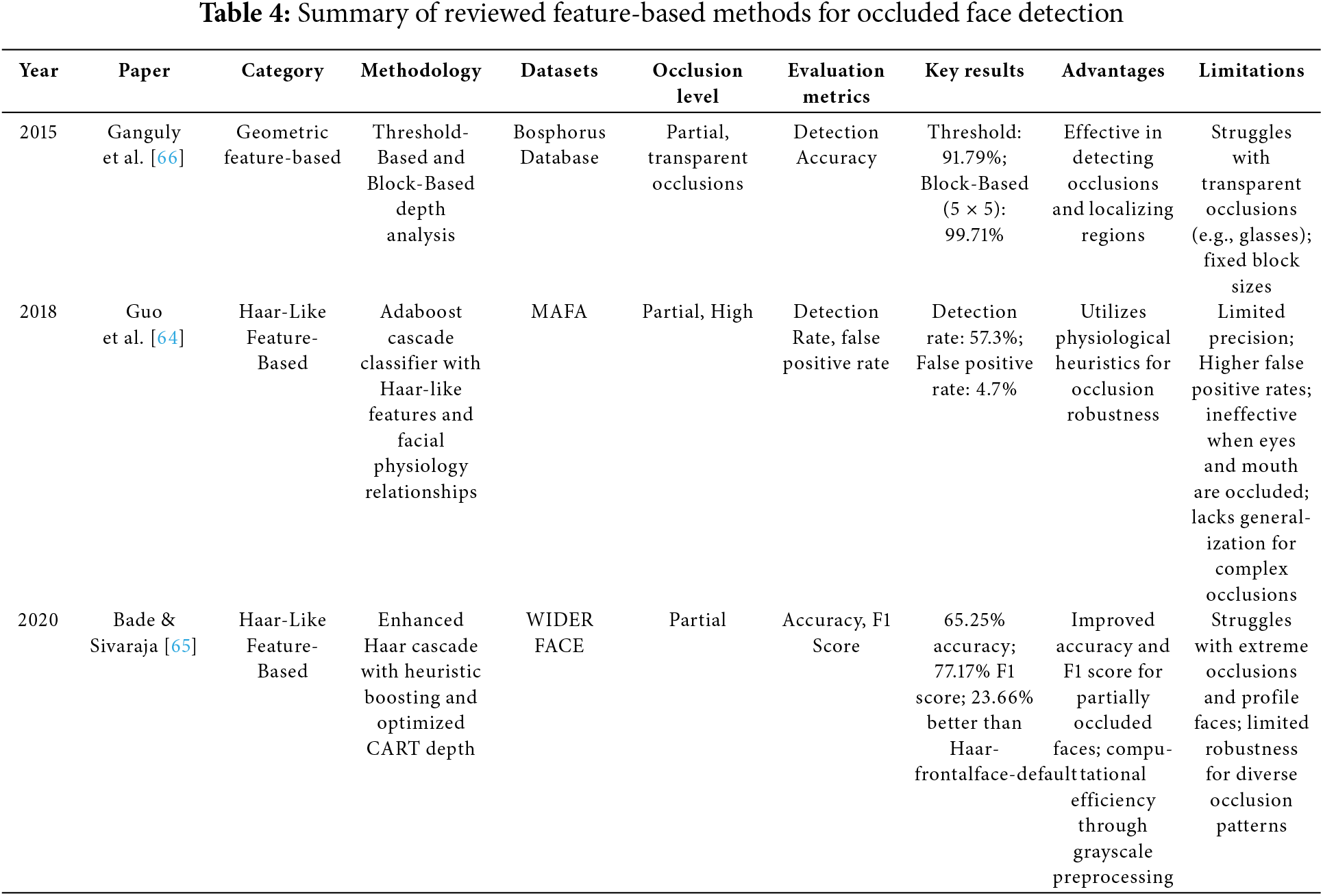

Before the advance of deep learning, face detection relied primarily on hand-crafted features and traditional machine learning [12,55,56]. These traditional methods were able to detect faces despite partial occlusion, but they struggled in complex real-world situations. This section briefly reviews the main types of these methods, including feature-based techniques and early learning models. Tables 4 and 5 present a comparative summary of the reviewed methods. It is worth mentioning that for traditional methods, the evaluation metrics are presented as reported in the original studies, whereas standardized benchmarks such as mAP are used where available for recent methods.

4.1.1 Traditional Feature-Based methods

Early approaches to face detection, such as those based on Haar-like features [9], Local Binary Patterns (LBP) [57], Histogram of Oriented Gradients (HOG) [58], edge-based techniques (e.g., Canny and Sobel filters) [59,60], and statistical models like Active Shape Models (ASM) [61] and Active Appearance Models (AAM) [62], relied heavily on manually designed features to extract facial patterns such as edges, textures, and geometric relationships [54]. These techniques are particularly helpful in cases when computational efficiency is more crucial, since they focus on identifying fundamental face components and apply predetermined descriptors to help in detection. Although computationally efficient and easy to implement [63], their performance significantly deteriorates in the presence of partial occlusions, lighting changes, and complex backgrounds. Some solutions attempted to enhance robustness through part-based or occlusion-aware models. For instance, Guo et al. [64] introduced an AdaBoost cascade classifier that utilized Haar-like features and facial proportion rules to detect occluded faces on the MAFA dataset. Similarly, Bade and Sivaraja [65] proposed enhancements to the Viola-Jones framework using heuristic boosting and decision tree tuning, showing improved results on partially occluded faces from WIDER FACE. On the other hand, Ganguly et al. [66] developed two geometric feature-based methods that employed 3D depth information to localize occlusions, achieving strong detection results on the Bosphorus database. Despite these efforts, traditional methods remain limited in flexibility and robustness, especially when handling transparent or irregular occlusion patterns, and have largely been surpassed by deep learning models. Table 4 presents a detailed summary of the reviewed feature-based methods for occluded face detection.

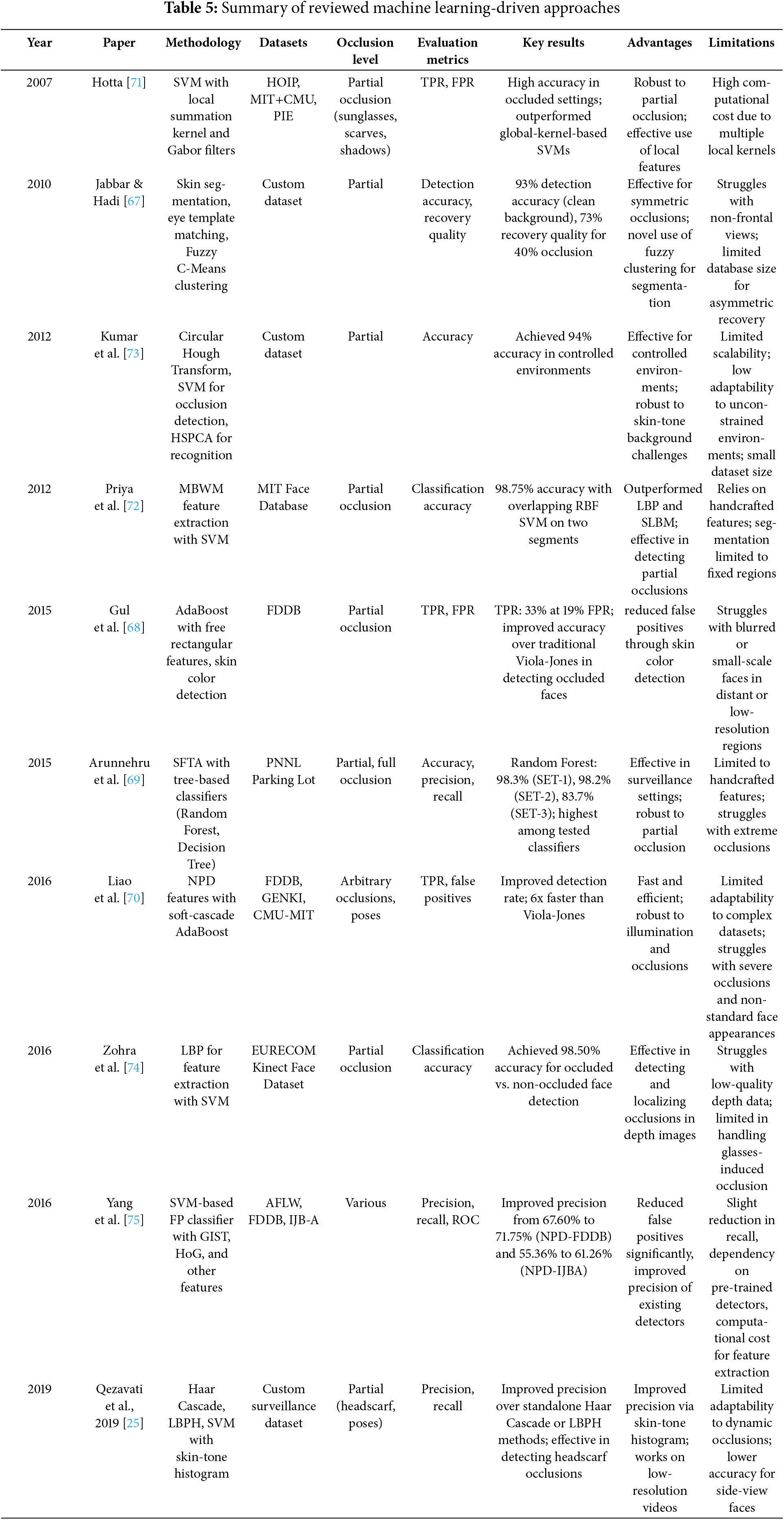

4.1.2 Traditional Machine Learning-Driven Methods

This section reviews traditional machine learning-based approaches used for occluded face detection. These approaches typically rely on predefined features extracted from facial images, followed by the application of classical machine learning algorithms to classify regions as faces or non-faces. Unlike feature-based methods, which depend solely on handcrafted features, machine learning-based methods combine feature extraction with learning algorithms to improve adaptability and accuracy. The studies in this category focus on techniques that learn patterns from labeled training data to detect occlusions and distinguish them from normal facial structures. These methods are particularly effective when paired with dimensionality reduction techniques, such as Principal Component Analysis (PCA). In this paper, the reviewed techniques are categorized into three main groups: (1) statistical and clustering models, (2) ensemble and boosting methods, and (3) Support Vector Machine (SVM)-based methods.

Statistical and clustering models, such as the method by Jabbar and Hadi [67], use fuzzy clustering, pixel similarities, or probabilistic analysis to segment occluded regions and reconstruct missing parts. Although this method was an early effort in addressing occluded face recovery, it is now considered outdated and is not applicable to modern real-time face detection systems. Ensemble and boosting methods, including the works of Gul and Farooq [68], Arunnehru et al. [69], and Liao et al. [70], improve detection by combining multiple weak classifiers using AdaBoost or decision tree ensembles to focus on hard-to-detect samples and manage occlusion and background complexity. Over time, researchers increasingly explored SVM-based techniques due to their strong discriminative power and ability to handle partial occlusions. These methods, such as those proposed by Hotta [71], Priya and Banu [72], SuvarnaKumar et al. [73], Zohra et al. [74], and Yang et al. [75], apply discriminative learning using handcrafted features, local descriptors, or depth-based cues to classify occluded regions. While these machine learning approaches showed improved detection over earlier basic feature-based methods, they still face challenges in handling diverse occlusion types and adapting to complex real-world settings due to their reliance on static feature representations. Table 5 summarizes the key characteristics of the reviewed machine learning-based techniques.

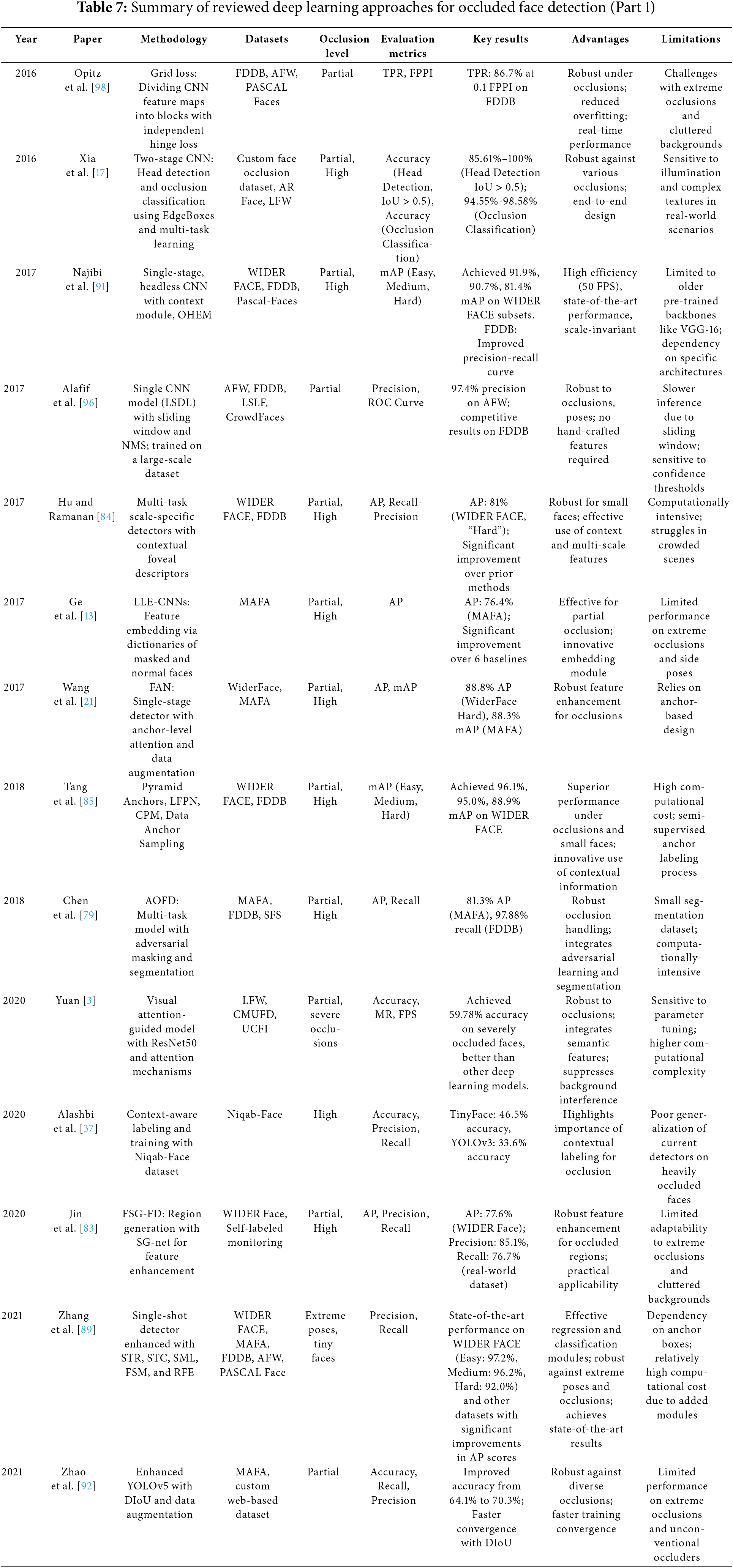

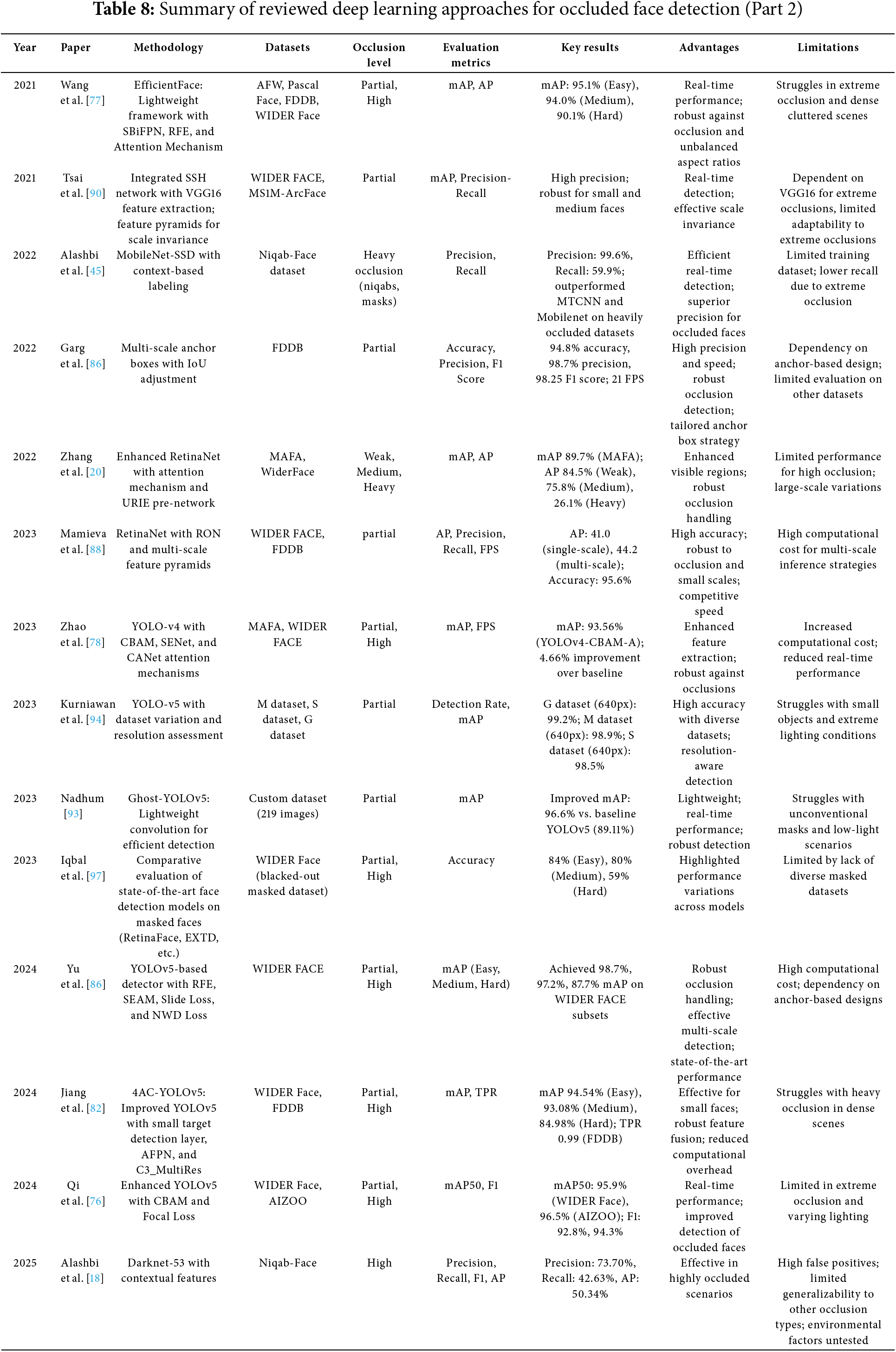

4.2 Advanced Deep Learning-Based Methods

This section reviews advanced deep learning methods for face detection under occlusion. It focuses on approaches that use CNNs and other deep learning architectures. Unlike traditional methods that depend on handcrafted features, deep learning techniques automatically learn patterns and representations from data, which makes them more flexible in handling complex occlusions, lighting variations, and pose changes. These methods have demonstrated strong performance, particularly in detecting occluded faces under unconstrained conditions. Many of the reviewed methods leverage pretrained networks like VGG16, ResNet, and YOLO, while others introduce custom architectures optimized specifically for occlusion scenarios. Several approaches incorporate enhancements such as attention mechanisms, multi-task learning, multi-scale learning, and context-aware processing to further improve accuracy and generalization across datasets. In addition to these enhancements, recent works have introduced Transformer-based and GAN-based models as distinct subcategories. These emerging directions expand the capabilities of deep learning methods in handling complex occlusion scenarios. To highlight key advancements, the studies in this section are categorized based on their architectures and methodologies as presented in Table 6. Meanwhile, Tables 7 and 8 present a detailed summary and comparison of reviewed advanced deep learning based studies.

4.2.1 Attention Mechanism-Based Approaches

Wang et al. [21] introduced the Face Attention Network (FAN), a single-stage face detector designed to handle occlusion challenges in face detection. FAN used an anchor-level attention mechanism to enhance features from facial regions while reducing focus on irrelevant areas, thus minimizing false positives. The model was built on the RetinaNet architecture and included a Feature Pyramid Network (FPN) to preserve both spatial resolution and semantic information which enables it to detect faces at different scales. Extensive data augmentation, including random cropping, was applied during training to simulate occluded faces. For evaluation, FAN was applied to WiderFace and MAFA datasets. It got 88.8% Average Precision (AP) on the hard subset of WiderFace, and 88.3% mean Average Precision (mAP) on MAFA, outperforming methods like Locally Linear Embedding Convolutional Neural Networks (LLE-CNNs) and Adversarial Occlusion-aware Face Detection (AOFD). The results showed that FAN effectively detects occluded faces while maintaining computational efficiency. However, its reliance on anchor-based methods could limit performance with complex occlusions in real-world scenarios. In 2022, Zhang et al. [20] proposed an improved RetinaNet model for detecting occluded faces by incorporating a Universal and Recognition-friendly Image Enhancement (URIE) module as a pre-network, along with an attention mechanism. The URIE network enhanced input images by highlighting visible facial regions and reducing distortions. The attention mechanism, meanwhile, boosted important features while keeping contextual information. The model was tested on the WiderFace and MAFA datasets. On MAFA, it attained a mAP of 89.7% surpassing techniques including FAN and LLE-CNNs. Strong performance was also shown across weak (84.5%), medium (75.8%), and moderate occlusion (26.1%). Although the technique maintained computing efficiency and handled occlusions well, problems with heavily occluded faces and large-scale variances were recognized as issues for further development. In another study, Qi et al. [76] suggested a modified form of YOLOv5 to identify mask-occluded faces in real-time applications. To highlight important facial features while reducing attention on irrelevant areas, the improvements included installing a Convolutional Block Attention Module (CBAM) to the backbone and neck of YOLOv5s. Moreover, focal Loss took the role of the binary cross-entropy loss function to solve sample imbalance and enhance identification in challenging scenarios. The model was investigated on the WIDER Face and AIZOO datasets. On Wider Face, it obtained a mAP50 of 95.9% and an F1-score of 92.8%; on AIZOO, it obtained a mAP50 of 96.5% and an F1-score of 94.3% surpassing the baseline YOLOv5s and other advanced approaches. The model also displayed enhanced sensitivity to finely occluded faces at small scales. Though it had advantages, the technique suffered with severe occlusions and needed further work on environmental conditions and changeable lighting.

Wang et al. [77] also presented EfficientFace, another attention-based model. EfficientFace is a lightweight deep-learning framework designed with a focus on occlusion handling, imbalanced aspect ratios, and feature representation difficulties. Three improvements were introduced: a Receptive Field Enhancement (RFE) module to handle facial aspect ratio variances, a Symmetrically Bi-directional Feature Pyramid Network (SBiFPN) to improve spatial accuracy and feature fusion, and an Attention Mechanism (AM) to concentrate on important areas for identifying occluded faces. Using only one-sixth of the computational resources compared to models like Dual Shot Face Detector (DSFD) and MogFace, EfficientFace achieved mAP scores of 95.1% (Easy), 94.0% (Medium), and 90.1% (Hard) evaluated on datasets including AFW, Pascal Face, FDDB, and WIDER Face. It also outperformed many state-of- the-art detectors. It highlighted areas for future development even with its great performance since it struggled with highly obscured faces and dense clusters in crowded settings.

Zhao et al. [78] proposed an attention-enhanced YOLOv4 framework to improve face mask detection under challenging conditions, such as occlusions and lighting variations. The approach incorporated three attention mechanisms: Convolutional Block Attention Module (CBAM), Squeeze-and-Excitation Networks (SENet), and Coordinate Attention Networks (CANet) into the feature fusion and detection layers. The best version, YOLOv4-CBAM-A, integrated CBAM modules at key points in the network, achieved a 93.56% mAP on the MAFA and WIDER FACE datasets with a 4.66% improvement over the baseline YOLOv4. The CBAM modules enhanced feature extraction by focusing on relevant regions while suppressing irrelevant features, particularly for small or occluded faces. While the model showed higher accuracy, it faced limitations in real-time processing speed due to the computational overhead introduced by the attention mechanisms. To improve face detection and recognition under occlusions, Yuan [3] introduced a visual attention guided model. The model used a visual attention mechanism for concentrating on the visible facial components and excluding the cluttered background. It employed a feature extraction network of ResNet50 size with a spatial and channel attention module for enhanced feature representation. The model treated face detection as a high-level semantic feature detection task and used activation maps for localizing the face and its scale. It was evaluated on datasets including LFW, CMUFD, and UCFI and was found to be more accurate and efficient than the existing methods. In the case of severely occluded faces, it achieved a detection rate of 59.78%, which is better than several deep learning-based methods. Although the model has some good properties, it had a higher computational complexity of the attention modules that increased the training time.

4.2.2 Multi-Task Learning Approaches

Xia et al. (2016) [17] presented an end-to-end framework for facial occlusion detection to enhance ATM security systems. The framework followed a coarse-to-fine strategy by employing two CNN models: one for head detection from upper-body images and another for categorizing occlusions in face parts (e.g., eyes, nose, and mouth). EdgeBoxes was used to create candidate areas; CNNs then were used for feature extraction and classification. Training on a bespoke face occlusion dataset, it was tested on extensively used datasets like LFW and AR. On the custom dataset, the framework attained high detection accuracy of 85.61%; on AR, 97.58%; and 100% for head detection with IoU > 0.5. It reported 94.55%, 98.58%, and 95.41%, respectively, for occlusion classification. However, the method struggled with illumination variations, complicated occlusion patterns, and head variability in real-world circumstances even if it was strong against many degrees of occlusion.

Ge et al. [13] proposed LLE-CNNs (Locally Linear Embedding CNNs), a deep learning framework for detecting masked faces. The authors addressed challenges like the lack of datasets and loss of facial cues caused by occlusions by introducing the MAFA dataset, which includes 30,811 images and 35,806 masked faces with varying orientations, occlusion levels, and mask types. The framework combined three main components. A proposal module first identified candidate facial regions using pre-trained CNNs. Next, an embedding module applied Locally Linear Embedding (LLE) to reconstruct missing facial cues and reduce occlusion noise. Finally, a verification module performed classification and regression to refine detections. Tested on the MAFA dataset, LLE-CNNs achieved an average precision (AP) of 76.4%, outperforming methods like Multi-task Cascaded Convolutional Neural Network (MTCNN) and Speeded Up Robust Features (SURF) Cascade by 15.6% or more. However, it struggled with extreme occlusions and side poses, achieving only 22.5% and 17.2% AP, respectively. The study demonstrated the value of combining feature refinement and contextual reasoning for occluded face detection and established the groundwork for future improvements in masked face detection.

Combining adversarial learning with segmentation, Chen et al. [79] presented a multi-task framework called AOFD to detect faces under significant occlusion. During training, the adversarial masking technique was applied to create occluded face features and push the detector to concentrate on visible facial areas. It also incorporated a segmentation branch to forecast blocked spots, which treated them as supplemental information rather than obstacles therefore enhancing feature extraction and detection accuracy. Outperformance of the model over FAN and LLE-CNNs was achieved with an 81.3% AP on MAFA. On FDDB at 1000 false positives, it attained a 97.89% recall rate, proving its robustness in identifying partially and highly occluded faces, even with low evident landmarks. Nevertheless, the approach depended on a limited manually labeled segmentation dataset (SFS), which restricts its scalability, and needed expensive CPU resources because of its segmentation technique. AOFD demonstrated in spite of these constraints the efficiency of adversarial learning and occlusion segmentation in enhancing occluded face detection.

In 2020, Deng et al. proposed RetinaFace [80], a single-stage, multi-task face detection framework that jointly predicts face bounding boxes, 2D landmarks, and 3D facial vertices. The overall architecture and multi-task design of RetinaFace are shown in Fig. 8. It introduces a unified regression target and enhances training with additional manual and semi-automatic annotations on datasets like WIDER FACE, AFLW, and FDDB. By combining these tasks into one inference process, RetinaFace improves detection robustness under occlusion, pose variations, and scale changes, while maintaining high efficiency.

Figure 8: Architecture of the RetinaFace model, including the feature pyramid network (left), cascade context head module (middle), and multi-task loss design (right), as proposed by Deng et al. [80]

Using a two-stage pipeline comprising face detection and classification to evaluate appropriate mask usage, Batagelj et al. [22] explored face-mask detection for COVID-19 compliance. To enable their investigations, the writers presented the Face-Mask Label Dataset (FMLD), constructed from the MAFA and Wider Face datasets. While the classification stage examined whether masks were worn correctly or incorrectly using CNN models, the detection stage concentrated on face identification and evaluation of mask effects on detection performance. Modern detectors struggled with masked faces, according to results; performance dropped by approximately 15% when compared to unmasked faces. RetinaFace emerged as the most robust detector, while ResNet-152 achieved the highest classification accuracy (over 98%) for identifying compliant and non-compliant mask placements. The combined pipeline achieved mAP values above 90%, outperforming baseline methods. Despite its effectiveness, the study faced limitations, including coarse dataset annotations that failed to capture varying occlusion levels, reliance on pre-trained models instead of custom architectures, and computational complexity, making real-time application challenging.

4.2.3 Multi-Scale Learning Approaches

To address the challenges of detecting faces with large variations in scale, pose, and occlusion, DSFD (Dual Shot Face Detector) was proposed by Li et al. [81] as an extension of the single-shot detectors (SSD) architecture. They introduced a Feature Enhance Module (FEM) and Progressive Anchor Loss (PAL) to improve multi-scale feature learning. An Improved Anchor Matching (IAM) method is also used for better training. Experiments on WIDER FACE and FDDB demonstrated that DSFD outperforms earlier methods like PyramidBox, especially under occlusion.

Jiang et al. [82] introduced 4AC-YOLOv5, an improved version of the YOLOv5 framework designed to detect small and occluded faces. The model featured three enhancements: a small target detection layer to capture low-level features for better detection of small faces, an Adaptive Feature Pyramid Network (AFPN) to dynamically adjust feature importance during multi-scale fusion, and a multi-scale residual module (C3_MultiRes) to improve multi-scale learning while maintaining efficiency. Tested on the WIDER Face and FDDB datasets, the model outperformed YOLOv5 and other methods, achieving mAP scores of 94.54% (Easy), 93.08% (Medium), and 84.98% (Hard) on WIDER Face, and a TPR of 0.99 at 1000 false positives on FDDB. While effective for occluded and small-scale faces, the model struggled with heavily occluded faces in dense scenes and required a balance between efficiency and accuracy. Jin et al. [83] proposed FSG-FD (Feature-Selective Generation for Face Detection), a deep learning model designed to detect occluded faces by combining multi-scale feature extraction and contextual information. The model introduced SG-net, a specialized feature-enhancement module that focuses on unoccluded regions while suppressing noise from occlusions. It then merges these enhanced features with high-level convolution outputs for classification and regression tasks. The model was evaluated on the WIDER Face dataset and a self-labeled surveillance dataset, achieving an average precision (AP) of 77.6% on WIDER Face, outperforming Faster R-CNN and other models. In real-world surveillance videos, it achieved a precision of 85.1% and a recall of 76.7%, demonstrating practical applicability. While the model showed effectiveness in multi-scale feature fusion and occlusion handling, its reliance on predefined feature generation limits adaptability to extreme occlusions and highly cluttered backgrounds.

Hu and Ramanan [84] proposed a face detection framework designed to handle small faces in complex environments. The method combined multi-scale representations, contextual reasoning, and a foveal descriptor that captured both local high-resolution features and global low-resolution context to improve detection accuracy. The framework used a multi-task model with scale-specific detectors trained on a coarse image pyramid, which enables it to detect faces across different scales. It leveraged large receptive fields to incorporate contextual information, which enhances performance for tiny faces as well as larger faces. Evaluated on the WIDER FACE and FDDB datasets, the method achieved state-of-the-art performance, with an AP of 81% on the WIDER FACE “hard” subset, outperforming earlier methods (29%–64% AP). It also showed robustness with respect to the scale, pose, and environmental conditions. Although the approach was effective, it had some drawbacks in terms of computational complexity and performance in crowded scenes, which point to further improvements for real-time applications. Tang et al. [85] proposed PyramidBox, a framework for detecting small, blurred, and partially occluded faces in complex scenarios. It enhanced the feature context by using pyramid anchors to include contextual information such as head and body contexts, which did not need any extra labels. The model incorporated Low-Level Feature Pyramid Networks (LFPN) to fuse the spatial detail information at different scales as coarse-level features and the semantic information as fine-level features to enhance the detection performance, especially for small faces. It also incorporated a Context Sensitive Prediction Module (CPM) to enhance the localization and classification performance. To enhance the training diversity and robustness, the authors proposed Data Anchor Sampling that involved face samples resizing and reshaping. The framework got the best results on the WIDER FACE and FDDB datasets, with mAP of 96.1% (easy), 95.0% (medium), and 88.9% (hard). However, there were some drawbacks to the method because it was costly and used semi-supervised anchor labeling.

To overcome the challenges of scale variation, occlusion, and imbalanced samples in training data, Yu et al. suggested an improved face detection algorithm based on YOLOv5 [86]. In this study, the model made several changes to boost the accuracy and robustness. The framework was also incorporated with a Receptive Field Enhancement (RFE) module that employed multi-branch dilated convolutions to deal with the multi-scale detection problem. To this end, it employed a Separated and Enhancement Attention Module (SEAM) to highlight the features in the occluded regions and a Repulsion Loss function to prevent the overlapping bounding boxes from affecting the detection performance in the case of occlusion. To address the sample imbalance, a Slide Loss was used to learn to dynamically rank hard samples; then, Normalized Wasserstein Distance (NWD) Loss was incorporated to improve the detection of small faces. The effectiveness of the model was validated by the experiments on the WIDER FACE dataset, and the mAP values of 98.7% (easy), 97.2% (medium), and 87.7% (hard) were achieved. However, the model proposed in this paper relied on anchor-based designs and had high computational costs, which may limit its applicability in environments with scarce resources.

Garg et al. [87] proposed a single-stage deep CNN for detecting partially occluded faces in video sequences. The approach used multi-scale anchor boxes to help capture the shapes and sizes of the facial regions. The approach reduced the computational costs by restricting the number of scales and the number of anchor boxes without sacrificing the accuracy. The network was designed to improve the detection performance in the occluded regions by using five max pooling layers for feature extraction and 22 convolutional layers for anchor-based identification of partially occluded faces. In this paper, the researchers have suggested a different Intersection-IoU threshold of 0.4 to eliminate the bounding boxes that are not relevant. The model was evaluated on the FDDB dataset and the results show that the model has an accuracy of 94.8%, precision of 98.7%, and F1-score of 98.25% at the frame rate of 21 fps. Its anchor-based approach, however, may limit the generality to non-standard face shapes or other datasets.

To enhance the accuracy and recall of face detection especially under occlusion, blurring, and at small scales, Mamieva et al. [88] presented a face detection method based on deep learning using the RetinaNet framework. The model design had a two-part architecture that consisted of a region-offering network (RON) to propose potential facial regions and a prediction network to further refine and classify these regions. To this end, the method adopted multi-scale features for robustness by employing a high and low feature generation pyramid that improves the ability to detect faces at different scales. The model was trained on the WIDER FACE dataset and fine-tuned on FDDB. It has an AP of 41.0 (single-scale) and 44.2 (multi-scale) and a detection accuracy of 95.6%. Though the method is powerful, it has a high computational cost, especially in multi-scale inference, which may be unfeasible in resource-constrained environments.

To improve the regression and classification performance in face detection from challenging poses and small sizes, Zhang et al. proposed a single-shot face detector [89]. Introducing five specialized modules; Selective Two-step Regression (STR) and Selective Two-step Classification (STC) to enhance bounding box localization and recall efficiency, Scale-aware Margin Loss (SML) to improve the scale, Feature Supervision Module (FSM) to improve feature alignment, and Receptive Field Enhancement (RFE) to increase the context for detection of faces at different scales. On WIDER FACE, MAFA, FDDB, AFW, and PASCAL Face datasets, the model achieved state-of-the-art results. At Video Graphics Array (VGA) resolution, using ResNet-18 as backbone, the detection rate and speed were very good with frame rate of 37.3 FPS. The model had some limitations, like reliance on anchor boxes and relatively high computational complexity due to extra modules, but it performed strongly.

In another interesting study, Tsai et al. [90] proposed a system based on SSH and feature extraction using VGG16 to detect and recognize partially-occluded faces. The SSH network had three detection branches, M1, M2, and M3, to detect small, medium, and large faces, respectively. It also incorporated a context module to improve feature maps by increasing the receptive field and feature pyramids for improved detection accuracy at low computational cost. The system was tested on the WIDER FACE and MS1M-ArcFace datasets and had very good accuracy for different levels of occlusion. However, it relied on VGG16, which might fail in extreme occlusion cases. Nevertheless, the method could process frames in real time with high precision for partially occluded faces.

4.2.4 Single-Stage Detection Approaches

Najibi et al. [91] proposed the SSH face detector, a single-stage, completely convolutional network for effective and accurate face detection. SSH was better than two-stage methods that rely on region proposals and classification because it performed classification and regression in a single pass, which reduces computational overhead while maintaining high precision. The model was able to work on all sizes and could find small, medium, and large faces by using many convolutional layers with different steps. It had a context module that, without an image pyramid, increased the receptive field to effectively capture contextual information. To increase robustness during training, SSH also employed online hard example mining (OHEM). Demonstration of modern performance on WIDER FACE, FDDB, and Pascal-Faces datasets. On Wider Face, it had mAP rates of 91.9% (easy), 90.7% (mid), and 81.4% (hard). It also increased mAP by 4% when coupled with an input pyramid. The model was also quite efficient, processing images GPU at 50 frames per second. However, there were some disadvantages: SSH was dependent on pre-trained backbones like VGG-16, which limited the ability to more recent architectures.

In 2020, Alashbi et al. [45] proposed the Niqab-Face-Detection model which is a deep learning framework for detecting mostly niqab-covered, highly occluded faces. The proposed framework, namely MobileNet-SSD, combines MobileNet for effective feature extraction and Single Shot Multiboxin Detector (SSD) for real-time detection. The approach of context-based labeling which pays attention to the context around the face rather than just the part of the face that is actually visible improves the detection accuracy. To improve performance, especially in challenging conditions, focus loss, and hard sample mining were employed. It was evident from the evaluation findings that current models including MTCNN, and MobileNet were outperformed by the proposed model with a precision of 99.6% and recall of 59.9%. The model however had a poor recall rate attributed to the small dataset and the high level of occlusion, which suggested the need for more data and optimization.

To improve the detection of partially-occluded faces, Zhao et al. [92] proposed an enhanced YOLOv5 framework. The method aimed at increasing the detection accuracy through changes in the loss function to replace the standard one with Distance Intersection over Union (DIoU) for faster convergence and better localization. In addition, it also applied data augmentation strategies such as flipping, scaling and brightness changes, and label smoothing to improve robustness. The model was trained on a large dataset which was collected from the MAFA dataset and web-sourced images, and it had six classes of occlusions: masks, collars, hands, scarves, objects, and no occlusions. The enhanced YOLOv5 had an accuracy of 70.3%, which is better than the initial YOLOv5 accuracy of 64.1%. Nonetheless, the model had some difficulties with severe occlusion and irregular objects which are the areas for further optimization in terms of datasets and sophisticated loss functions. Nadhum et al. [93] proposed Ghost-YOLOv5, an improved version of the YOLOv5 deep learning algorithm for real-time detection of faces with and without masks. The model enhanced efficiency and effectiveness through the application of Ghost Convolution instead of the conventional convolution to enhance computation time and performance. A self-collected dataset of 219 images of masked and unmasked faces was used to train and test the model. The model achieved a mean Average Precision (mAP) of 96.6% which is higher than the baseline YOLOv5 model that achieved 89.11%. The model also has a fast inference time which makes it suitable for real time applications. But the study has some limitations like inability to detect faces with uncommon masks and in low light conditions, and the authors thus recommended dataset enhancement and architectural improvement for future work as well.

Kurniawan et al. assessed the performance of the YOLOv5 model for detecting masked and unmasked faces across different image resolutions [94]. For this purpose, the study employed three datasets: the M dataset which has real-world masked faces, the S dataset which has synthetic faces with masks, and the G dataset which is a combination of the M and S datasets. The performances were evaluated at 320 pixels and 640 pixels to determine the costs and benefits of increasing the size of the input image during training. The results indicated that the image resolution (pixels) affected the accuracy of the detection and that high resolution (640 pixels) provided better results at the expense of increased training time. In addition to achieving detection rates of 99.2%, 98.9%, and 98.5% on the G, M, and S datasets, respectively, the model also had some constraints in detecting small objects and in poor illumination. The study concluded that to enhance the detection accuracy, it is essential to employ an appropriate dataset and optimal image resolution. YOLOv5 is suggested for application in public health surveillance.

Li et al. [95] proposed a face detection model for handling the occlusions with the help of a double-channel network architecture. The framework entailed an occlusion perceptron network that learned the features from unoccluded regions and a residual network to learn the features from the entire face for a more complete representation. The output of both the networks were combined using a weighted scheme to improve the feature learning of the occluded faces. In order to address the problems of data scarcity and overfitting, the model employed transfer learning to pre-train convolutional layers. It was evaluated on the AR dataset (sunglasses and scarves’ occlusions) and the MAFA dataset (diverse occlusions). On the AR dataset the model achieved 99.46% accuracy for sunglasses and 99.73% accuracy for scarves and on the MAFA dataset it achieved 80.2% accuracy with a frame rate of 39 FPS. But there were some issues e.g., high computational costs in training and the need to manually tune parameters for occlusion thresholds because they were not learned from the data.

In their paper, Alafif et al. [96] presented LSDL, a CNN based method for face detection in unconstrained environments with partial occlusions and pose variations, using a single CNN trained on a large scale dataset of occluded, posed and illuminated faces. First, it employs a sliding window approach for face localization and then uses a confidence score threshold and Non-Maximal Suppression (NMS) to further localize the detected faces. For training, the authors used four novel datasets (LSLF, LSLNF, CrowdFaces, and CrowdNonFaces) and used AFW and FDDB datasets for evaluation. The precision of AFW was 97.4% and it had a fairly good performance on FDDB. Nevertheless, LSDL was quite robust in detection but had some constraints such as slow inference time due to the sliding window and sensitivity to confidence thresholds that failed to detect in some instances.

Iqbal et al. [97] carried out a comparative analysis of the effectiveness of CNN-based face detection models for the detection of covered faces during the COVID-19 pandemic. The study established that most of the previous models that were trained on unmasked datasets were inefficient in detecting masked faces. The authors classified face detection models into two categories: Anchor-based vs. Anchor free and Single Stage vs. Two Stage architectures. Several models, RetinaFace, CenterFace, FaceBoxes, Extremely Tiny Face Detector (EXTD), TinyFaces, Light and Fast Face Detector (LFFD), and Multitask Cascaded Convolutional Networks (MTCCN) were evaluated for a modified WIDER Face dataset, in which the lower halves of the faces were erased to mimic masks. Of the evaluated models, it was observed that RetinaFace had the best performance at the Easy (84%) and Medium (80%) levels, while EXTD was the best at the Hard level (59%). The study also revealed that while the present models are appropriate for easy sets of data, their efficiency decreases when they are applied to difficult conditions, e.g., small, partially occluded, or complex images. Consequently, there is a requirement for new specific masked face datasets and models for occlusion scenarios.

To improve face detection under partial occlusion, Opitz et al. [98] proposed a grid loss function for CNNs. The method cuts the last convolutional layer’s feature map into spatial blocks and uses a hinge loss for each block, to ensure that even partially visible regions remain discriminative. The approach was tested on FDDB, AFW, and PASCAL Faces datasets. It achieved a TPR of 86.7% at 0.1 FPPI on FDDB, outperforming traditional CNNs. Also reduced overfitting, improved mid-level feature learning, and was efficient with smaller datasets. The model supported real-time detection, processing at 20 frames per second. However, the method had some difficulties with extreme occlusions and cluttered backgrounds.

4.2.7 Context-Aware Approaches

By using contextual information around occluded areas, Alashbi et al. [37] proposed a CNN-based method to identify highly occluded faces. The Niqab-Face dataset, which comprises 10,000 images with high levels of facial occlusion (i.e., faces covered by niqabs) was first introduced by the authors. This dataset was specifically annotated to enable CNN models to train on the visible facial parts and their surroundings to improve detection. The work was evaluated on the Niqab-Face dataset against MTCNN, MobileNet, TinyFace, and YOLOv3. Among the models, TinyFace gave the best accuracy of 46.5%, YOLOv3 followed with 33.6% accuracy while MTCNN and MobileNet had a low accuracy of 18% and 20%, respectively. These results showed that existing detectors have a challenge with extreme forms of occlusion. The authors argued that context-aware labeling is necessary to improve the detection but also that there is a need for better models that are specifically meant for highly occluded faces.

Recently, Alashbi et al. [18] proposed an Occlusion-Aware Face Detector (OFD) to localize covered faces with high levels of occlusion such as niqab covers. To enhance the feature learning process, the model utilized contextual information such as head pose and shoulders, and body aspects. The authors enhanced the Darknet-53 backbone architecture to include more layers to enhance the feature learning of occluded faces. The OFD model outperformed other models including YOLO-v3, Mobilenet-SSD, and TinyFace with a precision of 73.70%, recall of 42.63%, and F-measure of 54.02%. It also offered a 50.34% average precision (AP). However, the model had low generalization capacity to non-niqab occlusions and had high rates of false positives, particularly in complex settings. It also did not really solve the problems of cluttered environments and poor illumination. Furthermore, it did not solve challenges like small dataset size, imbalanced dataset, overfitting, computational expense, and inaccurate detections. These problems are likely to hinder the adoption of this model in real-world applications.

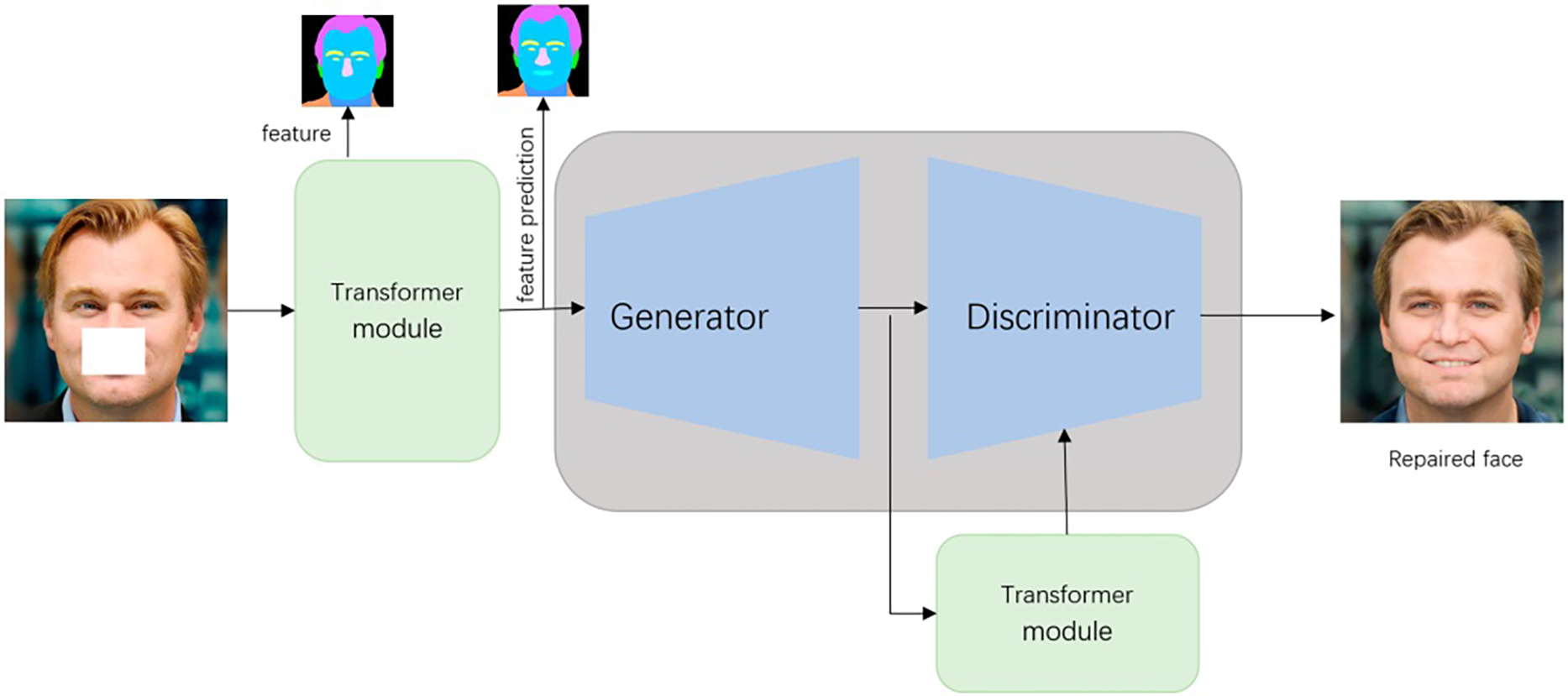

4.3 Transformer-Based Models for Occluded Face Detection

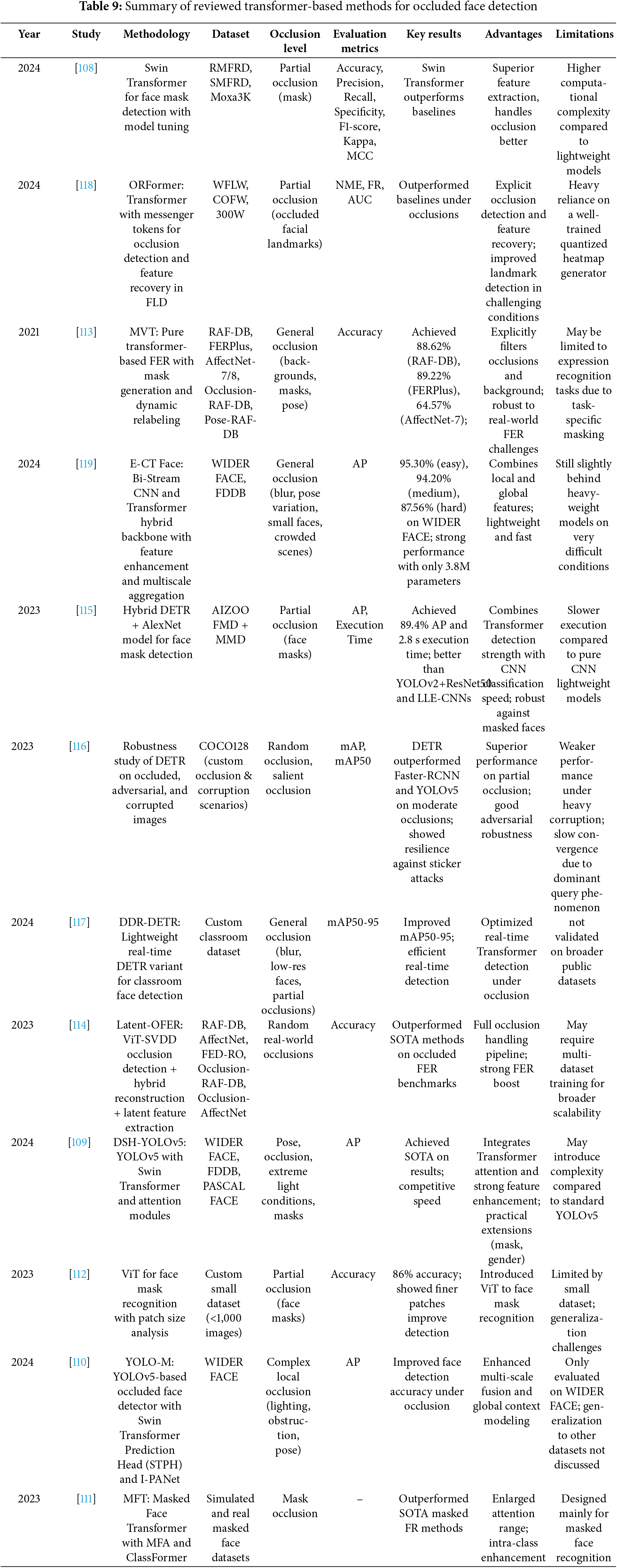

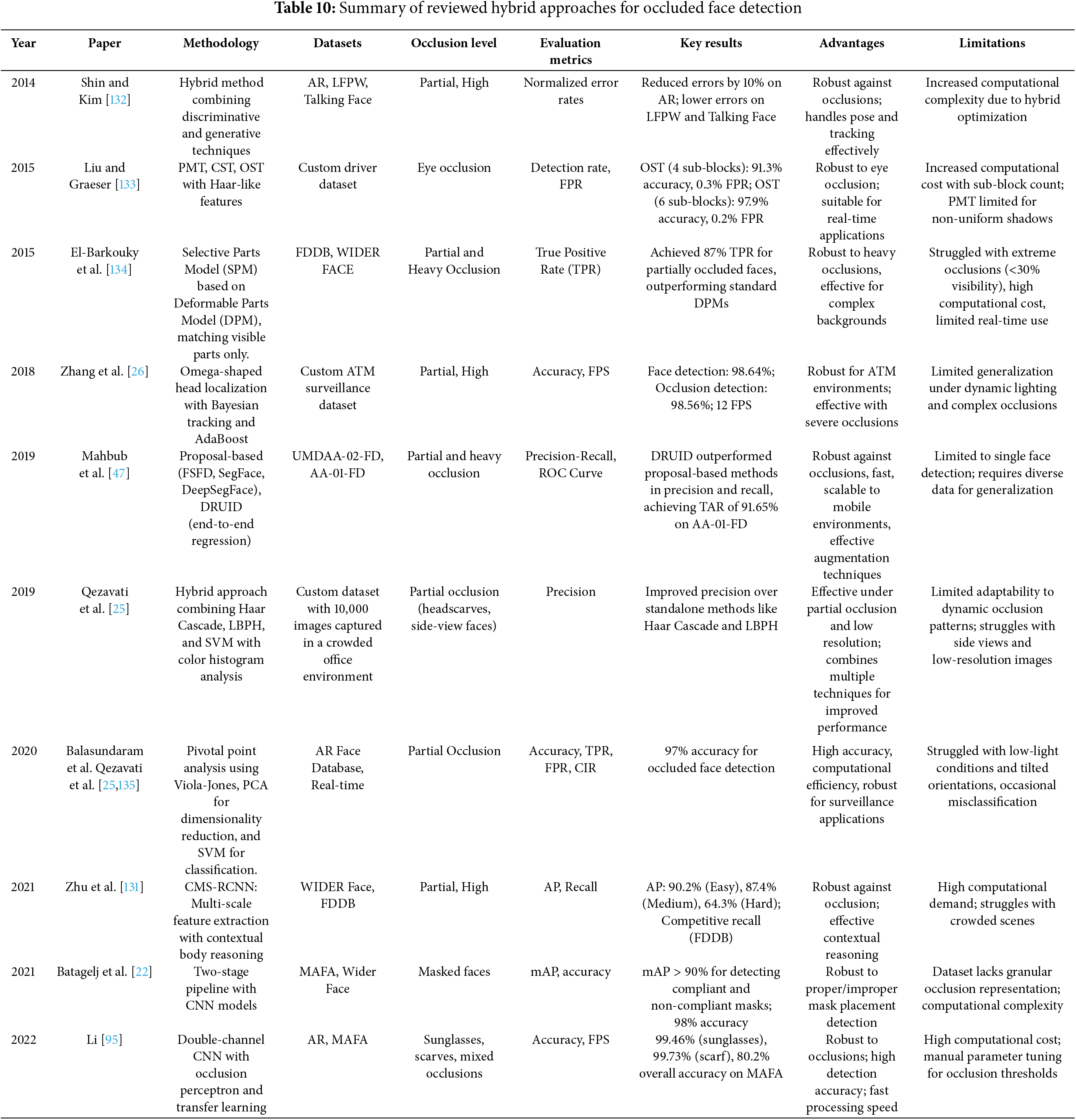

In recent years, transformer architectures [99] and generative adversarial networks (GANs) [79] have gained substantial attention in computer vision because of their impressive ability to capture long-range dependencies and generate realistic imagery. Notably, when addressing occluded face detection, these models effectively manage complex spatial relationships and reconstruct plausible facial structures in areas that are partially obscured, thereby enhancing overall detection performance. Transformer-based models, such as Vision Transformer (ViT) [100–102], Swin Transformer [103–105], and Detection Transformer (DETR) [106], have been widely explored for face-related tasks. For example, SwinFace [107] employs a Swin Transformer backbone to address various face analysis tasks such as face recognition, facial expression recognition, age estimation, and attribute prediction. Similarly, DETR introduces a fully end-to-end transformer-based framework for object detection, which has been adapted in recent studies to improve face detection performance, particularly in challenging scenarios involving occlusions. These approaches highlight the capability of transformer-based models to extract comprehensive facial features and spatial relationships, even under difficult real-world conditions. A summary of the Transformer-based models discussed in this subsection is presented in Table 9.

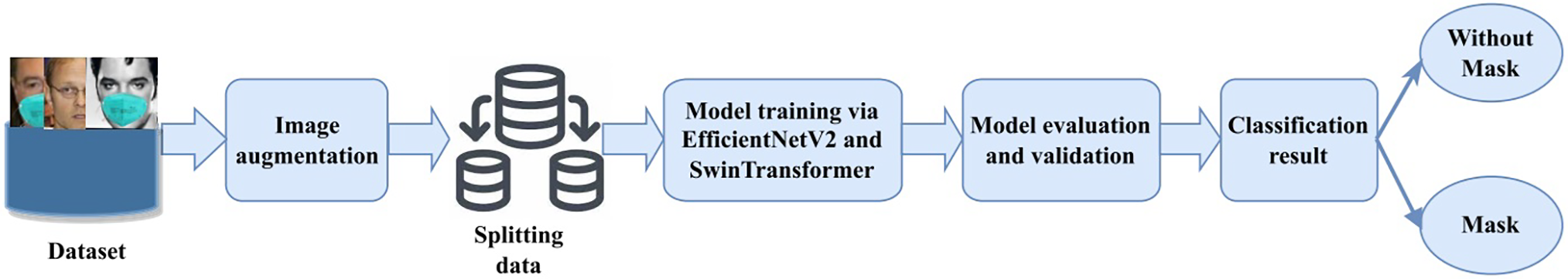

Several recent works have leveraged the Swin Transformer to improve face detection and recognition robustness. For instance, Mao et al. [108] utilized a Swin Transformer backbone to enhance masked face detection performance. Their model, optimized through hyperparameter tuning, demonstrated superior results over classical CNN models but required higher computational resources. The workflow of their proposed model is illustrated in Fig. 9. Building upon YOLOv5, Yuan et al. [109] integrated Swin Transformer layers within a customized detection head, resulting in the DSH-YOLOv5 model, which achieved strong performance on WIDER FACE, FDDB, and PASCAL FACE datasets while maintaining practical efficiency. In another line of work, Zhou [110] designed YOLO-M, embedding Swin Transformer prediction heads within the detection framework to better address local occlusion challenges, achieving noticeable improvements on the WIDER FACE dataset. Furthermore, Zhao et al. [111] addressed masked face recognition by proposing the Masked Face Transformer (MFT). Their approach introduced Masked Face-compatible Attention (MFA) and a ClassFormer module to enlarge attention range and enhance intra-class consistency, outperforming prior methods on masked datasets.

Figure 9: Schematic diagram of the Swin Transformer-based mask detection model [108]

Parallel to Swin Transformer research, other studies have investigated Vision Transformer (ViT) backbones. Pandya et al. [112] explored the use of ViT for face mask classification, achieving 86% accuracy on a small custom dataset. Their analysis highlighted that smaller patch sizes preserved finer facial details, enhancing classification robustness. Despite the promising results, the study acknowledged that the limited dataset size posed challenges to generalization and scalability. In the context of facial expression recognition, Li et al. [113] developed the Mask Vision Transformer (MVT), introducing a mask generation network and a dynamic relabeling strategy to explicitly filter occluded or irrelevant regions, leading to improved robustness on RAF-DB, FERPlus, and AffectNet datasets. However, MVT’s reliance on masking and relabeling strategies may limit its direct applicability to domains beyond expression recognition. In another application, Lee et al. [114] proposed Latent-OFER, a ViT-driven method for occluded Facial Expression Recognition (FER). By detecting and reconstructing occluded regions and extracting latent features via ViT and CNN hybrids, Latent-OFER achieved state-of-the-art results on occluded FER benchmarks. However, the authors noted that while the method showed strong performance, scalability across highly diverse datasets might require multi-dataset training strategies.

Detection Transformer (DETR) and its variants have also been adapted to handle occlusion challenges. Al-Sarrar and Al-Baity [115] combined a DETR face detector with an AlexNet-based mask classifier. Extensive experimental evaluations demonstrated that the proposed hybrid model surpassed previous CNN-based approaches. However, the model’s execution speed, while acceptable for real-time applications, remains slower than lightweight CNN-only models. Beyond application, Zhao et al. [116] systematically analyzed DETR’s behavior under occlusions and adversarial attacks. Their findings revealed DETR’s strong resilience to moderate occlusion but exposed performance degradation under severe occlusion and heavy corruption due to a “main query” imbalance in attention. For real-time detection, Li et al. [117] developed DDR-DETR by optimizing RT-DETR with modules such as StarNet and CGRLFPN. Their model achieved improved mAP50-95 in classroom settings, offering an efficient solution for detecting faces under blur and occlusion.