Open Access

REVIEW

A Comprehensive Survey on AI-Assisted Multiple Access Enablers for 6G and beyond Wireless Networks

1 Office of Research Innovation and Commercialization, University of Management and Technology, Lahore, 54770, Pakistan

2 Department of Electrical and Electronics Engineering, Faculty of Engineering, University of Lagos, Akoka, Lagos, 100213, Nigeria

3 Electrical and Electronic Engineering Department, School of Science and Technology, Pan-Atlantic University, Ibeju-Lekki, Lagos, 105101, Nigeria

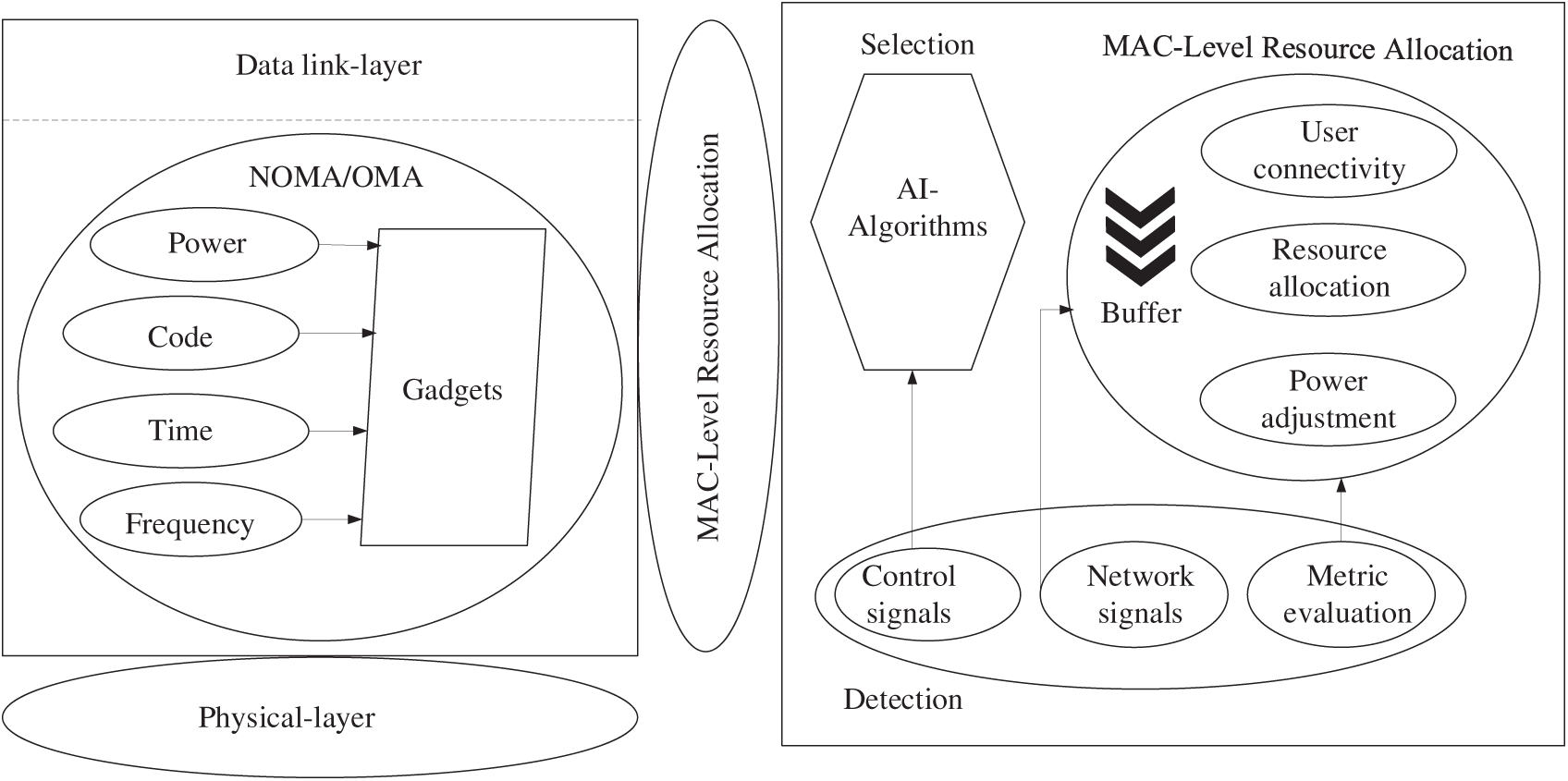

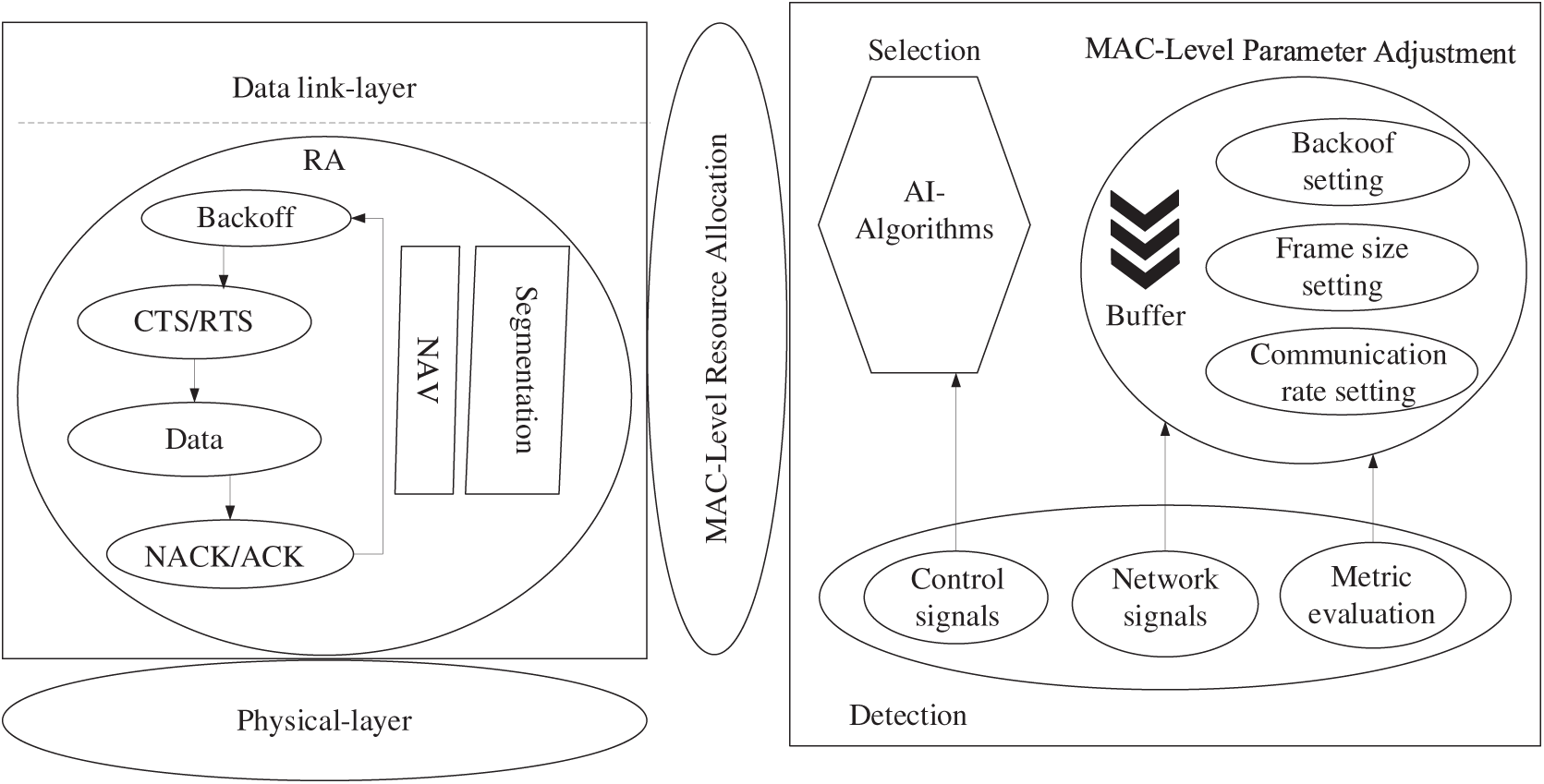

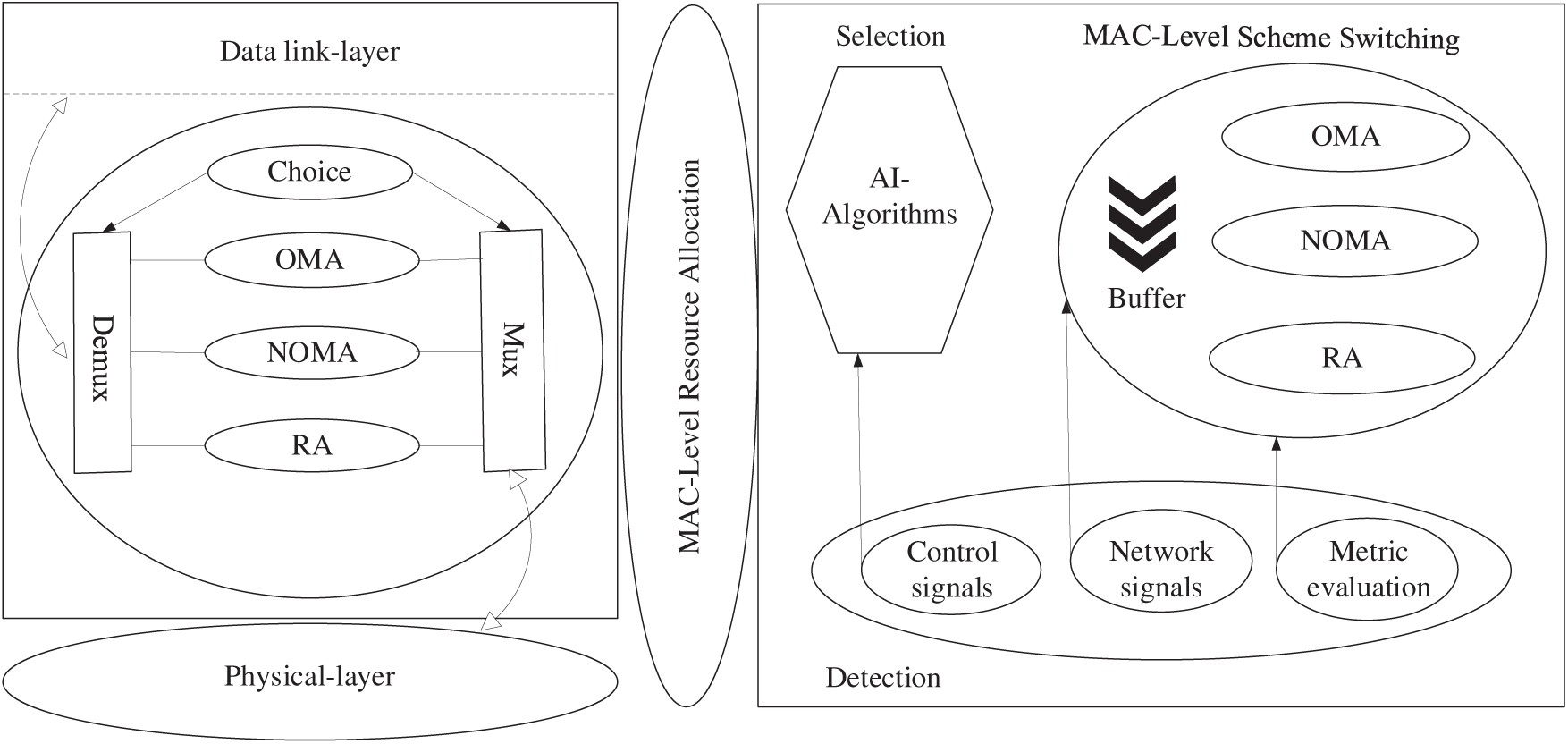

4 Department of Library and Information Science, Fu Jen Catholic University, New Taipei City, 242062, Taiwan

5 Department of Computer Science and Information Engineering, Asia University, Taichung City, 413305, Taiwan

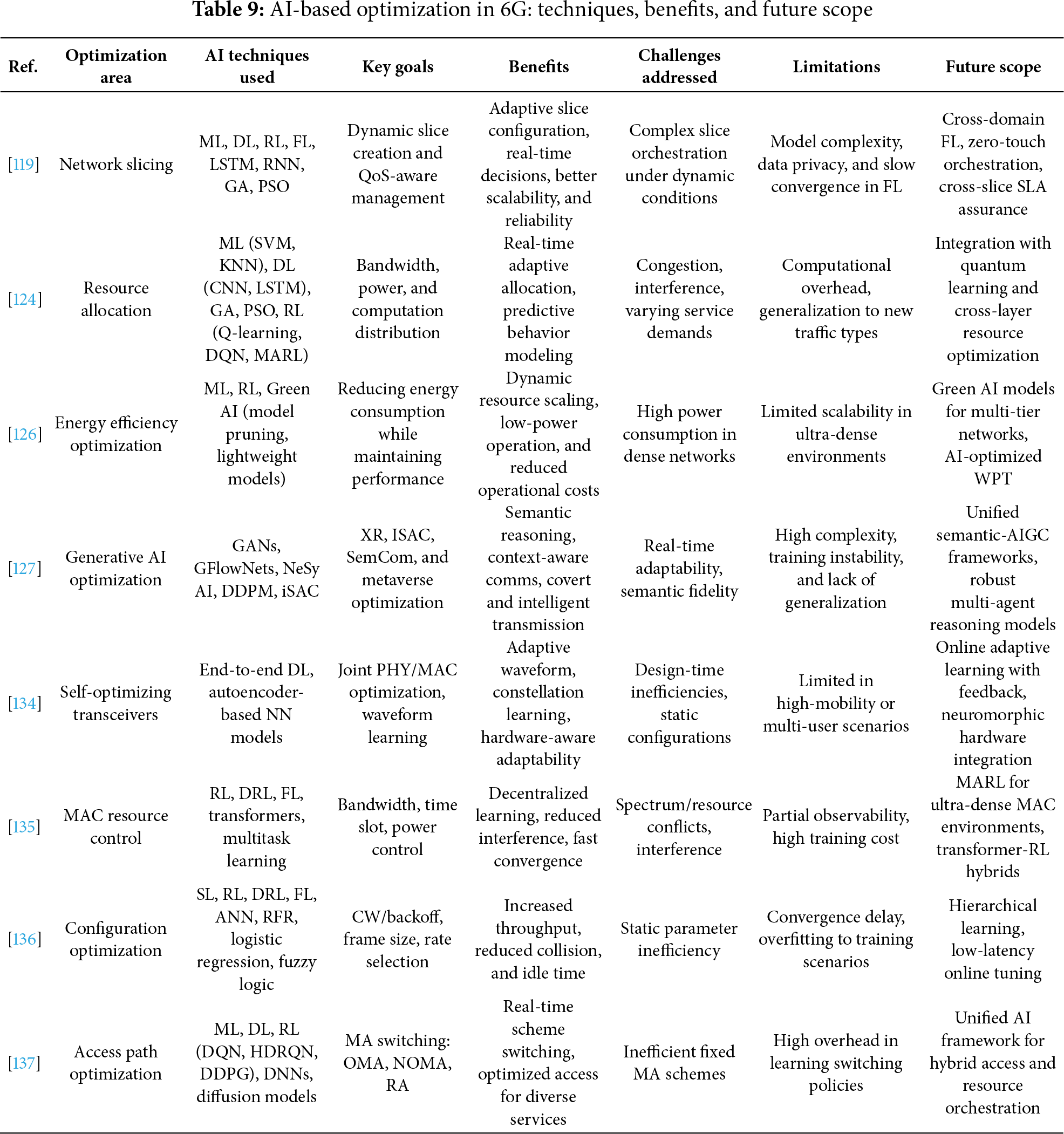

* Corresponding Authors: Agbotiname Lucky Imoize. Email: ; Cheng-Chi Lee. Email:

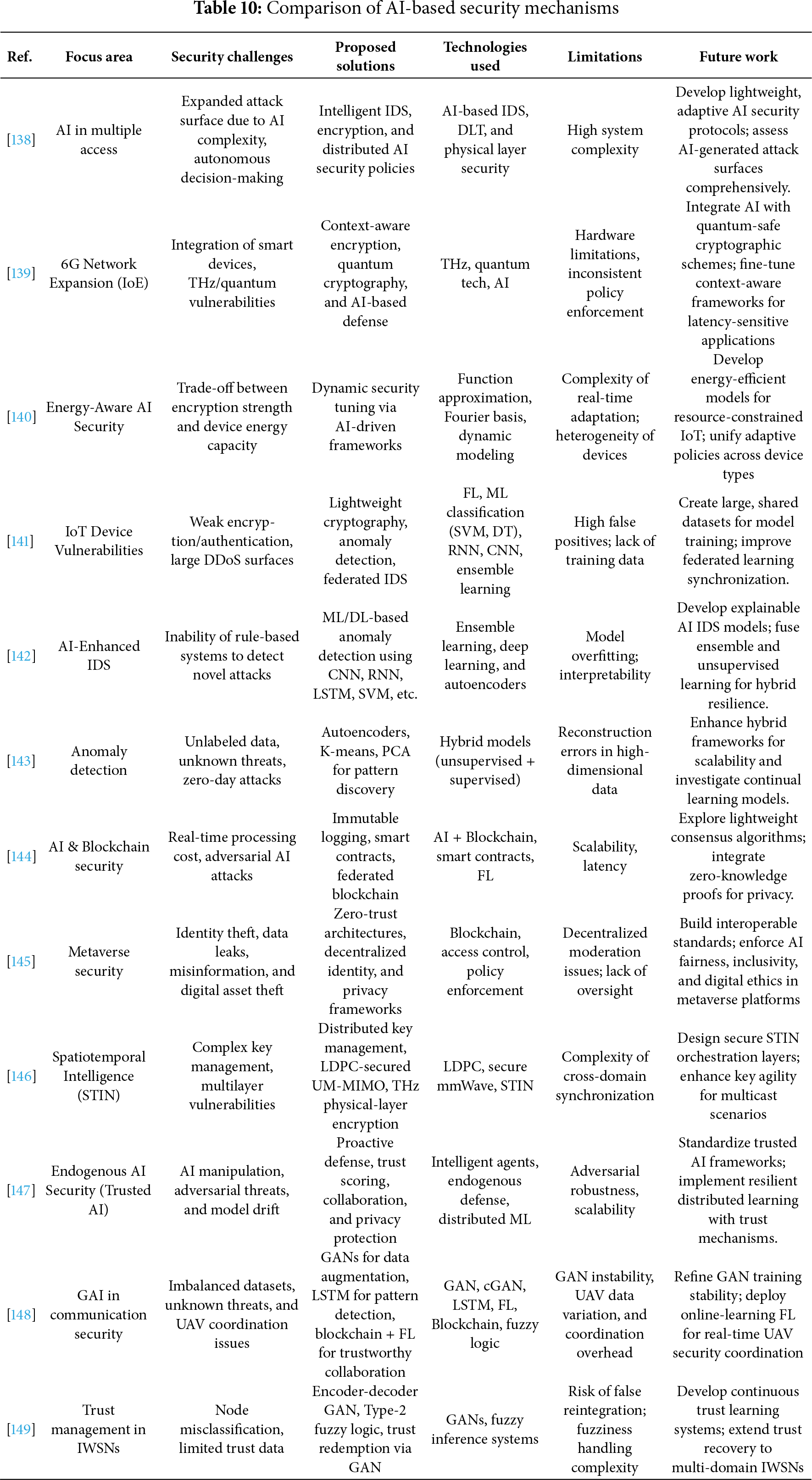

(This article belongs to the Special Issue: Artificial Intelligence for 6G Wireless Networks)

Computer Modeling in Engineering & Sciences 2025, 145(2), 1575-1664. https://doi.org/10.32604/cmes.2025.073200

Received 12 September 2025; Accepted 24 October 2025; Issue published 26 November 2025

Abstract

The envisioned 6G wireless networks demand advanced Multiple Access (MA) schemes capable of supporting ultra-low latency, massive connectivity, high spectral efficiency, and energy efficiency (EE), especially as the current 5G networks have not achieved the promised 5G goals, including the projected 2000 times EE improvement over the legacy 4G Long Term Evolution (LTE) networks. This paper provides a comprehensive survey of Artificial Intelligence (AI)-enabled MA techniques, emphasizing their roles in Spectrum Sensing (SS), Dynamic Resource Allocation (DRA), user scheduling, interference mitigation, and protocol adaptation. In particular, we systematically analyze the progression of traditional and modern MA schemes, from Orthogonal Multiple Access (OMA)-based approaches like Time Division Multiple Access (TDMA) and Frequency Division Multiple Access (FDMA) to advanced Non-Orthogonal Multiple Access (NOMA) methods, including power domain-NOMA, Sparse Code Multiple Access (SCMA), and Rate Splitting Multiple Access (RSMA). The study further categorizes AI techniques—such as Machine Learning (ML), Deep Learning (DL), Reinforcement Learning (RL), Federated Learning (FL), and Explainable AI (XAI)—and maps them to practical challenges in Dynamic Spectrum Management (DSM), protocol optimization, and real-time distributed decision-making. Optimization strategies, including metaheuristics and multi-agent learning frameworks, are reviewed to illustrate the potential of AI in enhancing energy efficiency, system responsiveness, and cross-layer RA. Additionally, the review addresses security, privacy, and trust concerns, highlighting solutions like privacy-preserving ML, FL, and XAI in 6G and beyond. By identifying research gaps, challenges, and future directions, this work offers a structured resource for researchers and practitioners aiming to integrate AI into 6G MA systems for intelligent, scalable, and secure wireless communications.

Graphic Abstract

Keywords

The sixth generation (6G) wireless communication systems represent a transformative leap beyond 5G, aiming to deliver ultra-high data rates (up to 1 Terabit-per-second (Tbps)), massive device connectivity (up to 10⁷ devices/km²), ultra-low latency (10–100 µs), and support for high mobility (up to 1000 km/h) [1]. These capabilities are designed to support advanced applications, including immersive Extended Reality (XR) and tactile internet, autonomous systems, and even space tourism [2]. A defining feature of 6G is its native integration with Artificial Intelligence (AI), enabling intelligent, autonomous, and adaptive networking. Technologies such as Machine Learning (ML), Deep Learning (DL), and Natural Language Processing (NLP) will enhance real-time decision-making, predictive maintenance, and DRA [3]. To support this, 6G will utilize a broad spectrum range, from sub-6 GHz and mmWave (28/39/60 GHz) to THz bands (above 100 GHz), as well as non-Radio Frequency (RF) domains such as Visible Light Communication (VLC) and quantum channels. However, efficient spectrum utilization remains a significant challenge [4]. Cloud-native and edge computing architectures will play a central role in 6G by reducing hardware dependency and enabling distributed real-time processing and network function virtualization. AI-driven functions, such as intelligent network slicing, will facilitate support for diverse and heterogeneous applications, including smart cities, Industry 4.0, and Internet of Things (IoT) ecosystems [5].

With the growing complexity and scale of 6G, security and privacy have become critical. AI-based Intrusion Detection Systems (IDS), Anomaly Detection (AD), and blockchain-enabled decentralized solutions are being developed to address emerging threats posed by expanded device ecosystems and dynamic architectures [6]. Still, these solutions present challenges, including ethical data handling, computational costs, and uncertainty in AI. Federated Learning (FL) and quantum AI are gaining traction to address scalability, privacy, and adaptability issues in distributed AI systems [7]. The convergence of AI and 6G is expected to redefine connectivity and enable novel digital-physical experiences that align with the goals for 2030–2040 [8].

The telecommunications industry is undergoing rapid decentralization, driven by the need for intelligent, high-speed networks capable of supporting massive machine-type communication (mMTC). Technologies such as Software-Defined Networking (SDN), virtualization, and heterogeneous architectures are being adopted, although they introduce new challenges in integration and standardization [9]. 6G is seen as the next significant advancement, leveraging Edge AI to manage intelligent systems and enable next-generation applications such as Autonomous Vehicles (AVs), smart environments, and the Internet of Everything [10].

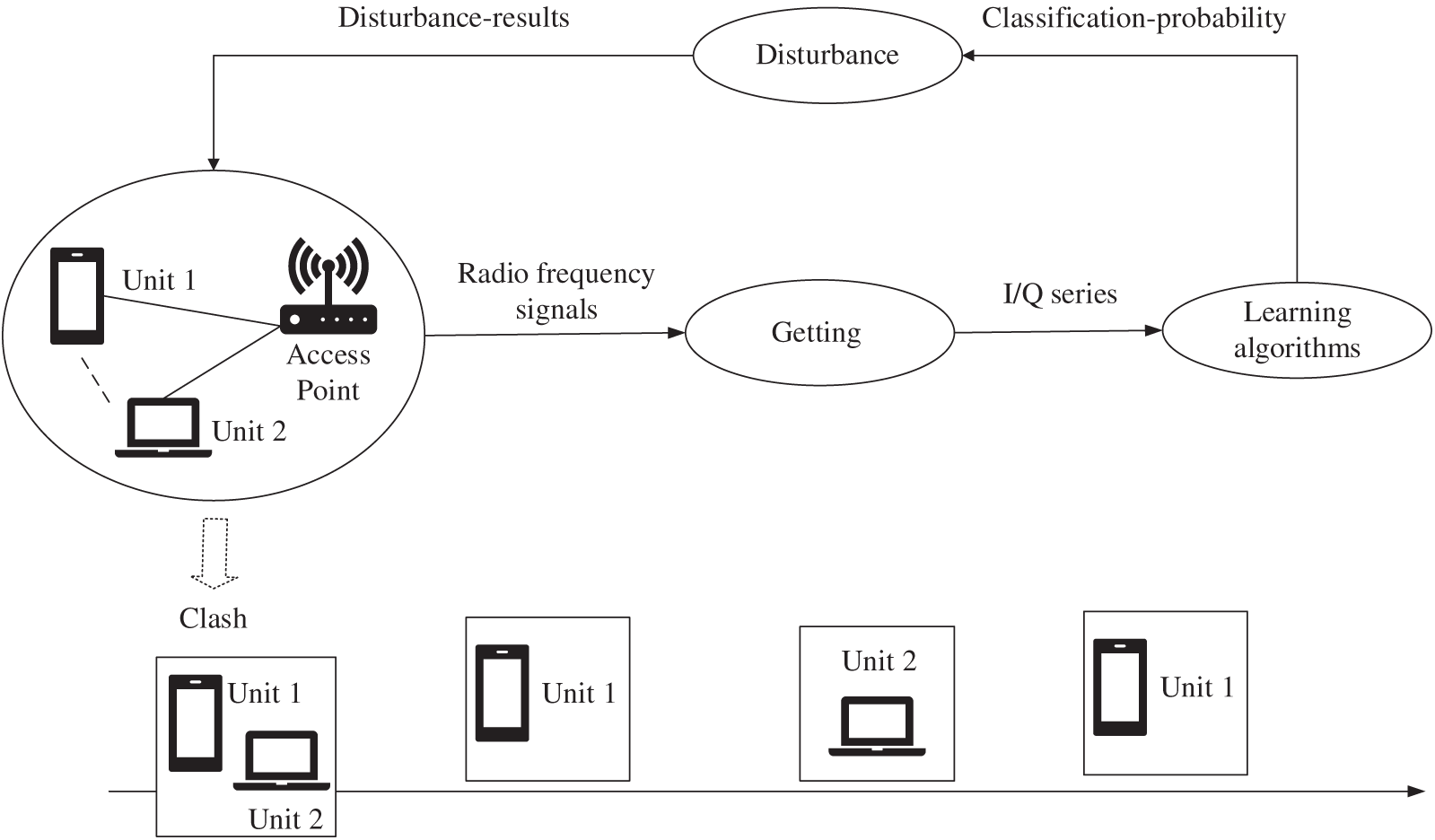

A significant hurdle for 6G is spectrum management. Traditional static allocation methods, regulated by agencies such as the FCC (U.S.) and the National Radio Administration (China), have led to severe underutilization. In some high-demand bands (hundreds of MHz to 3 GHz), utilization is reportedly as low as 5% across time and location [11]. To address this inefficiency, Dynamic Spectrum Access (DSA) and intelligent sharing models are essential. One promising approach is Cognitive Radio (CR), introduced by Joseph Mitola in 1999, which enables devices to sense, analyze, and adapt to their spectral environment [12]. The CR process comprises four stages: SS, analysis, decision-making, and reconstruction, with SS being the most critical for identifying unused frequencies in real time. CR is widely used in communications, Unmanned Aerial Vehicles (UAVs), radar, and transportation systems for tasks such as anti-jamming and obstacle avoidance. AI-enhanced SS has emerged as a key development. ML, DL, game theory, and optimization algorithms have been used to enhance sensing performance in dynamic environments [13].

Deep learning techniques, particularly Convolutional Neural Networks (CNNs) and Long Short-Term Memory (LSTM) networks, are highly effective in modeling nonlinear spectral characteristics and enhancing detection accuracy and speed. However, most existing DL-based SS systems are non-cooperative, which makes them sensitive to limited training data and less robust. Cooperative SS, in which multiple devices collaborate, has shown promise in improving accuracy and real-time adaptability. For example, Ref. [14] compared CNN architectures, such as LeNet, AlexNet, and VGG-16, on 2D signal data under various fusion rules. The study in [15] applied FL to cooperative SS, achieving a 98.78% detection rate at a −15 dB Signal-to-Noise Ratio (SNR) with a false-alarm rate of only 1%.
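To make the notion of a fusion rule concrete, the following Python sketch pairs a classical per-user energy detector with k-out-of-N hard-decision fusion (k = 1 is the OR rule, k = N the AND rule). It is a minimal illustration under stated assumptions, not the CNN- or FL-based detectors of [14,15]: complex Gaussian noise of known variance, a constant-modulus QPSK-like primary signal, and a detection threshold derived from a Gaussian (central-limit) approximation of the test statistic. All function names and SNR values are hypothetical.

```python
import math
import random
from statistics import NormalDist

def energy_statistic(samples):
    """Average energy T = (1/N) * sum |y[n]|^2 of complex baseband samples."""
    return sum(abs(y) ** 2 for y in samples) / len(samples)

def energy_threshold(noise_var, n_samples, target_pfa):
    """Threshold for a target false-alarm probability (Gaussian approximation).
    Under H0 (complex Gaussian noise only), T has mean noise_var and
    standard deviation noise_var / sqrt(N)."""
    q_inv = NormalDist().inv_cdf(1.0 - target_pfa)
    return noise_var * (1.0 + q_inv / math.sqrt(n_samples))

def observe(rng, n_samples, snr_db, noise_var=1.0, primary_on=True):
    """Hypothetical received samples: QPSK-like primary signal plus complex AWGN."""
    amp = math.sqrt(noise_var * 10 ** (snr_db / 10)) if primary_on else 0.0
    sigma = math.sqrt(noise_var / 2)  # per real/imaginary component
    samples = []
    for _ in range(n_samples):
        phase = math.pi / 4 + rng.randrange(4) * math.pi / 2
        signal = amp * complex(math.cos(phase), math.sin(phase))
        noise = complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
        samples.append(signal + noise)
    return samples

def fuse(local_decisions, k):
    """k-out-of-N hard fusion at the fusion centre."""
    return sum(local_decisions) >= k

def cooperative_sense(rng, snrs_db, n_samples, k, target_pfa=0.01, primary_on=True):
    """Each user runs its own energy detector; the fusion centre applies the k-out-of-N rule."""
    thr = energy_threshold(1.0, n_samples, target_pfa)
    local = [energy_statistic(observe(rng, n_samples, s, primary_on=primary_on)) > thr
             for s in snrs_db]
    return local, fuse(local, k)

rng = random.Random(7)
snrs = [-5.0, -7.0, -10.0, -12.0, -15.0]  # assumed per-user SNRs in dB
for rule, k in (("OR", 1), ("majority", 3), ("AND", len(snrs))):
    local, busy = cooperative_sense(rng, snrs, 2000, k)
    print(rule, local, "->", "occupied" if busy else "idle")
```

Smaller k favours detection of weak primary signals at the cost of more false alarms across the group; larger k does the opposite, which is the trade-off that learned fusion schemes try to manage adaptively.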

Simultaneously, Multiple Access (MA) techniques face growing limitations. Conventional schemes, such as Orthogonal Multiple Access (OMA), Space Division Multiple Access (SDMA), Non-Orthogonal Multiple Access (NOMA), and Distributed Coordination Function (DCF)-based access, struggle to meet 6G’s extreme requirements for latency, reliability, connectivity, and Energy Efficiency (EE). OMA suffers from low spectral efficiency; SDMA is impractical in dense urban or indoor environments due to high antenna complexity; NOMA increases system overhead; and random-access schemes like DCF are prone to collisions [16]. As billions of smart and bandwidth-intensive devices connect to 6G networks, emerging use cases such as smart cities, XR, AVs, and Industry 4.0 are placing immense demands on spectrum, power, and latency. To meet these demands, AI-driven MA techniques are being developed. AI-empowered next-generation MA solutions enable real-time learning and decision-making, addressing the limitations of conventional MA while improving Quality of Service (QoS) for latency-sensitive and bandwidth-intensive applications.
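The spectral-efficiency gap between OMA and NOMA noted above can be illustrated with the textbook two-user downlink rate expressions. The sketch below assumes a single-antenna base station, perfect Successive Interference Cancellation (SIC) at the near user, equal time sharing for OMA, and noise power normalised to one; the channel gains and power split are arbitrary illustrative values, and the function names are hypothetical. The extra SIC stage at the near user is one concrete source of the overhead attributed to NOMA.

```python
import math

def oma_rates(p, g_near, g_far, noise=1.0):
    """Two-user OMA (TDMA-style): each user gets half of the time at full power p."""
    r_near = 0.5 * math.log2(1 + p * g_near / noise)
    r_far = 0.5 * math.log2(1 + p * g_far / noise)
    return r_near, r_far

def noma_rates(p, g_near, g_far, a_far, noise=1.0):
    """Two-user downlink power-domain NOMA with perfect SIC (bit/s/Hz).
    a_far is the power fraction of the far (weak) user; the near user gets 1 - a_far.
    Far user: decodes its own signal, treating the near user's signal as noise.
    Near user: decodes and cancels the far user's signal (feasible because
    g_near > g_far), then decodes its own signal interference-free."""
    a_near = 1.0 - a_far
    r_far = math.log2(1 + a_far * p * g_far / (a_near * p * g_far + noise))
    r_near = math.log2(1 + a_near * p * g_near / noise)
    return r_near, r_far

# Illustrative values: the near user's channel is 20 dB stronger than the far user's
p, g_near, g_far = 1.0, 100.0, 1.0
print("OMA  (near, far):", oma_rates(p, g_near, g_far))
print("NOMA (near, far):", noma_rates(p, g_near, g_far, a_far=0.8))
```

With these values, both users obtain a higher rate under NOMA than under equal time sharing, because each user occupies the full time-frequency resource instead of half of it.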

This review examines how AI can be systematically incorporated into the formulation and optimization of MA schemes for 6G and beyond wireless networks. It outlines the motivations for adopting AI-based solutions, categorizes ongoing research trends, and assesses the effectiveness of specific approaches across various performance indicators toward the envisioned goals of 6G and future wireless networks. More precisely, our interest lies in how AI will enable SS, DRA, user scheduling and management, interference mitigation, and protocol adaptation. The key contributions of the paper are as follows:

• This paper provides a thorough and structured survey of AI-enabled MA techniques aimed at advancing 6G wireless networks. Specifically, the paper emphasizes critical elements of this evolution, including SS, intelligent protocol design, and optimization frameworks, which are essential for meeting the stringent requirements of 6G, such as ultra-low latency, massive device connectivity, and high spectral efficiency. The review begins by examining both fundamental and modern MA schemes, starting from OMA, including Time Division Multiple Access (TDMA) and Frequency Division Multiple Access (FDMA), to more sophisticated NOMA techniques such as power domain-NOMA, Sparse Code Multiple Access (SCMA), and Rate Splitting Multiple Access (RSMA). By analyzing their progression, strengths, and limitations, the paper offers valuable insights into how MA schemes must evolve to support next-generation wireless services and systems.

• The paper describes the main categories of AI learning, including Supervised Learning (SL), Unsupervised Learning (USL), and Reinforcement Learning (RL), that can be applied to MA in future 6G wireless networks. Moreover, recent studies on AI-based SS and MA protocol design are thoroughly examined, highlighting critical deficiencies and research gaps in existing work.

• A key contribution is the detailed mapping of a broad range of AI methods (ML, DL, RL, FL, and Explainable AI (XAI)) to practical problems in DSM and adaptive, smart protocol design. This structured classification is novel in connecting specific AI models to their roles in prediction, allocation, control, and optimization within complex, evolving 6G environments. The paper also focuses on core optimization approaches using metaheuristic and multi-objective frameworks, providing insights into how AI can enhance real-time, adaptive, and distributed decision-making in future wireless systems. Additionally, it offers a layered understanding of how these techniques can be integrated into spectrum-aware access mechanisms to make them more flexible, intelligent, and scalable.

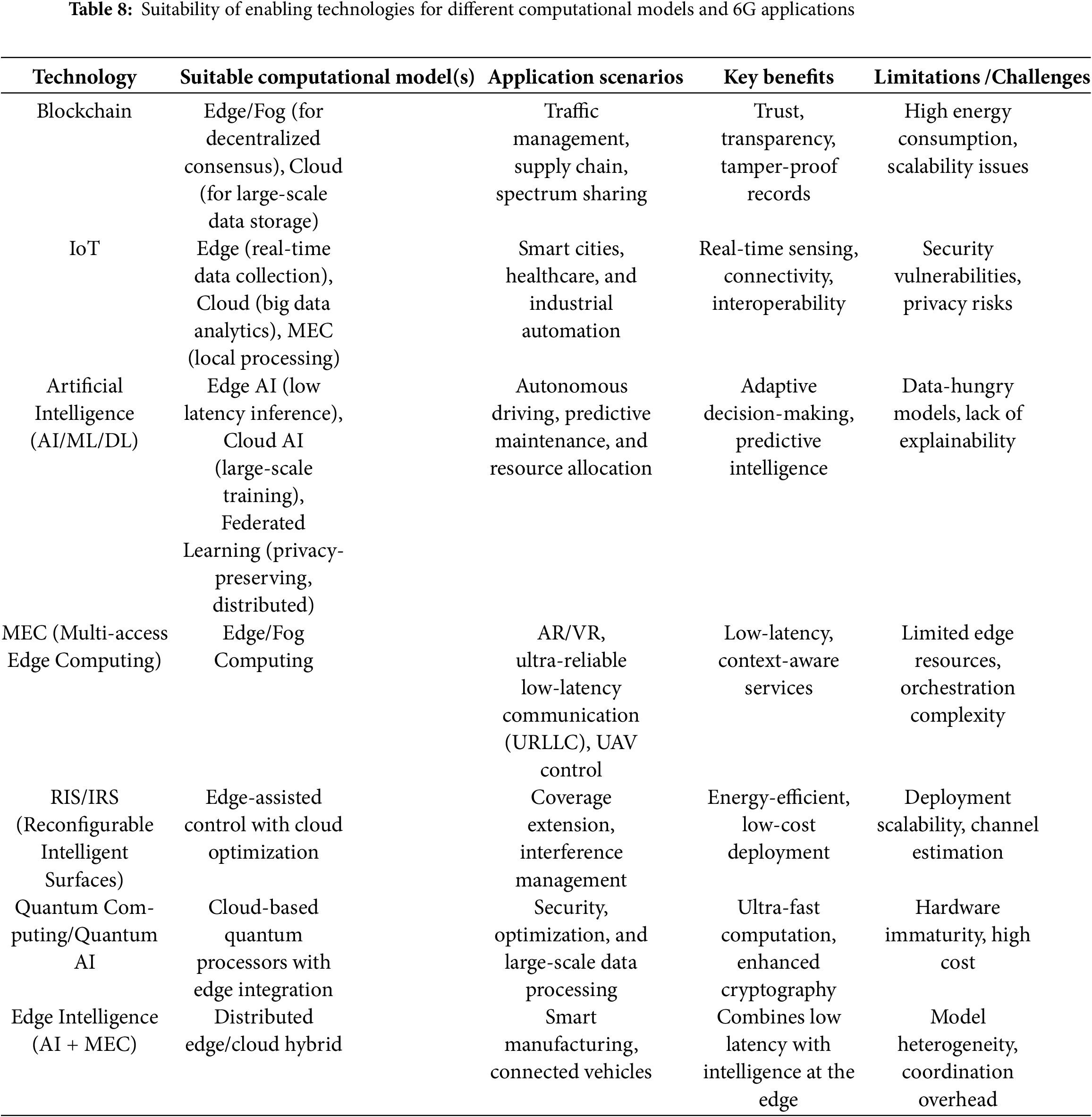

• The survey further delves into AI-based optimization strategies, highlighting metaheuristic methods like Genetic Algorithms (GA), Particle Swarm Optimization (PSO), and Ant Colony Optimization, alongside Deep Reinforcement Learning (DRL) and Multi-Agent Reinforcement Learning (MARL). These approaches are explored in the context of real-time, distributed decision-making, showcasing how AI can enhance cross-layer Resource Allocation (RA), energy efficiency, and system responsiveness. By presenting this comprehensive view, the paper provides a foundation for designing adaptive, low-latency, and resource-optimized 6G MA frameworks. Additionally, we map the enabling technologies to the most appropriate computational models and application scenarios in 6G networks.

• Another critical and often overlooked aspect addressed in this work is the role of AI in enhancing security, privacy, and trust in access control. This survey identifies potential vulnerabilities, including adversarial attacks and opaque AI decision-making, and presents possible solutions such as privacy-preserving ML, FL, and XAI to ensure trustworthy and transparent systems. The paper also outlines open research challenges, including the lack of empirical validation, data scarcity, hardware constraints, and the need for standardized and ethical deployment of AI in wireless communication systems. Overall, the present work can serve as a baseline resource for researchers and practitioners who seek to leverage AI to deliver secure, intelligent, and large-scale MA for the Next Generation (XG) of wireless networks.
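As a concrete reference point for the metaheuristics listed in these contributions, the following sketch implements a basic global-best Particle Swarm Optimization (PSO) loop with the widely used constriction-style coefficients (w ≈ 0.7298, c1 = c2 ≈ 1.496). The objective is a hypothetical two-variable quadratic with a known minimum at (1, −2), standing in for a real resource-allocation cost; in practice the objective would encode rate or energy terms plus constraint penalties. The function names are illustrative, not taken from any surveyed work.

```python
import random

def pso(objective, dim, lo, hi, n_particles=30, iters=200,
        w=0.7298, c1=1.49618, c2=1.49618, seed=0):
    """Global-best PSO minimising `objective` over the box [lo, hi]^dim."""
    rng = random.Random(seed)
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                      # each particle's best position so far
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]     # swarm's best position so far
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])   # cognitive pull
                             + c2 * r2 * (gbest[d] - pos[i][d]))      # social pull
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # stay inside the box
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:                  # asynchronous global-best update
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val

def toy_cost(x):
    """Hypothetical stand-in for an allocation cost, with minimum 0 at (1, -2)."""
    return (x[0] - 1.0) ** 2 + (x[1] + 2.0) ** 2

best, best_val = pso(toy_cost, dim=2, lo=-5.0, hi=5.0)
print(best, best_val)
```

The loop needs only objective evaluations, no gradients, which is why such metaheuristics are attractive for non-convex, non-differentiable allocation problems, at the price of many evaluations per decision.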

The rest of the paper is outlined as follows. Section 2 provides a general description of various access technologies and their development in 6G. Section 3 describes the research methodology (following the PRISMA 2020 guidelines). Section 4 introduces the fundamental concepts of 6G and MA technologies, including both traditional and modern schemes, and discusses their limitations in the 6G context. Section 5 presents a detailed survey of AI techniques—ML, DL, RL, and FL—relevant to wireless communication. Section 6 explores the application of AI in SS, including prediction models, sensing strategies, and DSA. Section 7 focuses on AI-driven protocol design, addressing intelligent Medium Access Control (MAC) scheduling, Resource Management (RM), and beamforming strategies. Additionally, Section 8 reviews AI-powered optimization techniques, including metaheuristics and multi-objective frameworks. Section 9 discusses emerging security and privacy challenges in AI-empowered MA systems. Section 10 identifies open research challenges and future directions, while summarizing key lessons learned and recent trends. Finally, Section 11 concludes the paper with a reflection on the transformative role of AI in shaping 6G MA systems.

The vision for 6G connectivity extends far beyond conventional communication paradigms, aiming to seamlessly integrate the digital, physical, and biological realms [17]. In the future network, end devices will no longer act as isolated units but will function as coordinated clusters that serve as man–machine interfaces. This transformation will enable ubiquitous computing across both edge and cloud infrastructures, facilitate knowledge systems that convert raw data into actionable intelligence, and incorporate advanced sensing and actuation capabilities to interact with and control the physical world. A key challenge in realizing this vision is efficiently placing services in Mobile Edge Computing (MEC) systems. Several studies have addressed this issue by optimizing various aspects of MEC operations. For example, the study [18] proposed a joint optimization model for service placement and edge server deployment, aiming to maximize the cumulative profit of edge servers while accounting for storage and computational constraints. Similarly, the work [19] applied Q-learning, an RL technique, to jointly manage task offloading and RA in environments characterized by uncertain computational demands and strict delay constraints.
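The Q-learning approach mentioned for [19] can be illustrated with a deliberately small tabular example. The environment below is entirely hypothetical: the state is a binary channel-quality indicator, the action is to compute locally or offload, the channel evolves independently of the action, and the cost values are invented stand-ins for a combined delay-plus-energy metric. It demonstrates only the Q-learning update Q(s,a) ← Q(s,a) + α[r + γ max Q(s',·) − Q(s,a)], not the formulation used in [19].

```python
import random

ACTIONS = ("local", "offload")

def cost(channel_good, action):
    """Hypothetical normalised delay-plus-energy cost (illustrative numbers only)."""
    if action == 0:                        # execute the task on the device
        return 1.0
    return 0.3 if channel_good else 2.0    # offload: cheap on a good channel, costly on a bad one

def train_q(steps=20000, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0], [0.0, 0.0]]           # q[state][action]; state 0 = bad channel, 1 = good
    state = rng.randint(0, 1)
    for _ in range(steps):
        # epsilon-greedy action selection
        if rng.random() < epsilon:
            action = rng.randint(0, 1)
        else:
            action = max((0, 1), key=lambda a: q[state][a])
        reward = -cost(state == 1, action)
        next_state = rng.randint(0, 1)     # channel assumed i.i.d. across slots
        # off-policy temporal-difference (Q-learning) update
        td_target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (td_target - q[state][action])
        state = next_state
    return q

def greedy_policy(q):
    """Map each channel state to the action with the highest learned Q-value."""
    return {("bad", "good")[s]: ACTIONS[max((0, 1), key=lambda a: q[s][a])] for s in (0, 1)}

q_table = train_q()
print(greedy_policy(q_table))
```

The agent learns from observed costs alone, without a model of the channel or server load, which is the property that makes RL attractive under the uncertain demands and delay constraints described above.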

Further innovations include an offloading framework proposed in [20], which reduces task delay while balancing load across servers, thereby enabling collaborative task execution between user devices and edge nodes. In addition, the work [21] focused on minimizing global energy consumption by optimizing offloading ratios and computing RA under strict delay constraints. However, many of these approaches assume that necessary services are pre-deployed on edge servers. This assumption is increasingly unrealistic in AI-driven systems, where models must adapt dynamically to changing data distributions. To address this, the authors of [22] proposed a strategy for broadcasting updated AI models to user devices, optimizing both service placement and RA to minimize energy and computation time. Complementing this, the study [23] developed a collaborative AI training framework in which multiple end users, coordinated by an edge server, work together to reduce latency and energy consumption. While these solutions focus on delay and EE, inference accuracy remains a critical and often overlooked metric. The current body of research has begun to address this by proposing AI service placement strategies that balance inference quality with system performance.
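Collaborative training in which an edge server coordinates several end users, as in [23], is commonly realised with federated averaging (FedAvg). The sketch below shows FedAvg on a one-parameter linear model: each hypothetical client runs a few local gradient steps on its private data, and the server averages the returned weights in proportion to each client's sample count. The data, learning rate, and round count are assumptions chosen for illustration; this is not claimed to be the framework of [23].

```python
def local_update(w, xs, ys, lr=1.0, epochs=5):
    """Full-batch gradient descent on the client's mean-squared error for y ≈ w * x."""
    for _ in range(epochs):
        grad = 2.0 * sum(x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w

def fedavg(clients, rounds=20, w_global=0.0):
    """Server loop: broadcast w_global, collect local models, average weighted by sample count."""
    n_total = sum(len(xs) for xs, _ in clients)
    for _ in range(rounds):
        local_models = [local_update(w_global, xs, ys) for xs, ys in clients]
        w_global = sum(len(xs) / n_total * w for (xs, _), w in zip(clients, local_models))
    return w_global

# Two hypothetical users whose private data follow y = 2x over different input ranges
xs_a = [0.1, 0.2, 0.3, 0.4, 0.5]
xs_b = [0.55, 0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95, 1.0]
clients = [(xs_a, [2 * x for x in xs_a]), (xs_b, [2 * x for x in xs_b])]
w = fedavg(clients)
print(f"global model after FedAvg: w = {w:.6f}")
```

Only model parameters cross the network in each round; the raw samples stay on the devices, which is the privacy and traffic argument usually made for this style of edge training.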

Incentive mechanisms have also become vital for promoting data offloading in edge systems. For example, Ref. [24] introduced a reward-based scheme using learning algorithms to manage resources and encourage node participation. However, these approaches often lack formal guarantees. To overcome this, the current study adopts an alternating direction method of multipliers-based optimization strategy, offering scalability and theoretical robustness for large-scale MEC environments. Future 6G networks must support multifunctionality and intelligence to realize their full potential, facilitating the connection of trillions of devices that sense, compute, connect, and analyze data ubiquitously [25]. Unlike previous generations (1G–5G), where users were primarily communication endpoints, users in 6G will also serve as sensing targets, energy receivers, AI nodes, and service consumers. Central to this paradigm shift is the design of advanced MA techniques that efficiently allocate resources across a heterogeneous user base.
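To show why the alternating direction method of multipliers (ADMM) suits large-scale MEC settings, the sketch below solves a consensus problem in scaled form: each hypothetical edge server i holds a private quadratic cost ½a_i(x − b_i)², and all servers must agree on a common decision z. Every iteration requires only closed-form local updates plus one averaging step. The cost model, penalty ρ, and iteration count are assumptions, and this is a generic ADMM illustration rather than the specific scheme referred to above; for this toy problem the optimum is the weighted mean Σa_i b_i / Σa_i, which gives an easy correctness check.

```python
def consensus_admm(a, b, rho=1.0, iters=300):
    """Scaled-form consensus ADMM for min sum_i 0.5*a_i*(x_i - b_i)^2 s.t. x_i = z for all i."""
    n = len(a)
    x = [0.0] * n
    u = [0.0] * n   # scaled dual variables, one per server
    z = 0.0
    for _ in range(iters):
        # 1) local x-updates: closed-form minimiser of 0.5*a_i*(x-b_i)^2 + (rho/2)*(x - z + u_i)^2,
        #    which each server can compute in parallel
        x = [(a[i] * b[i] + rho * (z - u[i])) / (a[i] + rho) for i in range(n)]
        # 2) global z-update: average of x_i + u_i (the only coordination step)
        z = sum(x[i] + u[i] for i in range(n)) / n
        # 3) dual updates accumulate each server's disagreement with the consensus
        u = [u[i] + x[i] - z for i in range(n)]
    return z, x

a = [1.0, 2.0, 4.0]    # hypothetical per-server cost weights
b = [3.0, -1.0, 2.0]   # hypothetical per-server preferred decisions
z_star, x_local = consensus_admm(a, b)
print(z_star, x_local)
```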

While SDMA and Orthogonal Frequency Division Multiple Access (OFDMA) have dominated past generations, growing interest is now directed toward NOMA schemes [26]. However, the traditional OMA vs. NOMA distinction is increasingly seen as insufficient for capturing the complexity of modern MA needs [27]. To address this, Ref. [28] proposed a new classification approach based on how multiuser interference is managed, introducing Rate-Splitting Multiple Access (RSMA) as a unifying and flexible MA strategy. RSMA integrates OMA, power-domain NOMA, SDMA, and physical-layer multicasting, offering practical advantages for deployment in diverse 6G scenarios [29].
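A minimal numerical sketch of one-layer RSMA for a two-user downlink with a two-antenna transmitter is given below. Each user first decodes a common stream while treating both private streams as noise, removes it by SIC, and then decodes its own private stream while treating the other user's private stream as noise; the common rate is limited by the weakest user. The channels and precoders are hypothetical, unoptimised values, noise power is normalised to one, and the split of the common rate between users is not modelled. Setting the common precoder to zero recovers linear-precoding SDMA, one concrete sense in which RSMA generalises other schemes.

```python
import math

def gain(h, p):
    """|h^H p|^2 for a channel vector h and a precoder p (lists of complex numbers)."""
    return abs(sum(hn.conjugate() * pn for hn, pn in zip(h, p))) ** 2

def rsma_rates(channels, p_common, p_private, noise=1.0):
    """One-layer RSMA: returns (common rate, [private rate per user]) in bit/s/Hz."""
    common, private = [], []
    for k, h in enumerate(channels):
        all_private = sum(gain(h, p) for p in p_private)
        # Step 1: decode the common stream, treating every private stream as noise
        common.append(math.log2(1 + gain(h, p_common) / (all_private + noise)))
        # Step 2: after SIC of the common stream, only other users' private streams interfere
        others = sum(gain(h, p) for j, p in enumerate(p_private) if j != k)
        private.append(math.log2(1 + gain(h, p_private[k]) / (others + noise)))
    # The common stream must be decodable by every user, so the weakest user limits it
    return min(common), private

# Hypothetical, partially correlated 2-antenna channels and unoptimised precoders
h1 = [1.0 + 0.0j, 0.8 + 0.2j]
h2 = [0.9 - 0.1j, 1.0 + 0.0j]
p_c = [0.42 + 0j, 0.42 + 0j]
p_1 = [0.5 + 0j, 0j]
p_2 = [0j, 0.5 + 0j]

r_c, r_p = rsma_rates([h1, h2], p_c, [p_1, p_2])
print(f"common {r_c:.3f}, private {[round(r, 3) for r in r_p]}, sum {r_c + sum(r_p):.3f} bit/s/Hz")

# Special case: no power on the common stream leaves SDMA-style private streams only
r_c0, r_p0 = rsma_rates([h1, h2], [0j, 0j], [p_1, p_2])
```

In a real design, the common and private precoders and the common-rate split would be optimised jointly under a power budget, which is where the learning-based methods surveyed here are typically applied.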

Several studies have examined the intersection of 6G and AI, focusing on network design and performance optimization. For example, Ref. [30] presents an in-depth overview of the 6G communication architecture and emphasizes the integration of AI across key areas such as TeraHertz (THz) communication, satellite networks, holographic communication, and quantum communication. The paper also identifies challenges like spectrum scarcity, EE, and ethical considerations, while underlining the importance of global standardization and multi-stakeholder collaboration. Expanding on this, the work [31] outlines a three-stage framework for AI integration in 6G networks: AI for network, which uses AI to enhance performance and efficiency; network for AI, which supports AI functions with enabling infrastructure; and AI as a service, where AI functionalities are embedded directly into the network. The study explores standardization efforts in this emerging area.

Furthermore, Ref. [32] provides a historical and architectural analysis of wireless networks, positioning 6G as a transformative leap enabled by technologies such as THz communication, ultra-massive Multiple-Input Multiple-Output (MIMO), quantum communication, and Reconfigurable Intelligent Surfaces (RIS). The role of AI and ML is again highlighted as central to achieving intelligent, self-optimizing networks, with applications ranging from smart cities and AVs to brain–computer interfaces. The study also addresses challenges such as regulatory complexity, security, and spectrum limitations, concluding that 6G is a foundational step toward even more advanced networks, such as 7G.

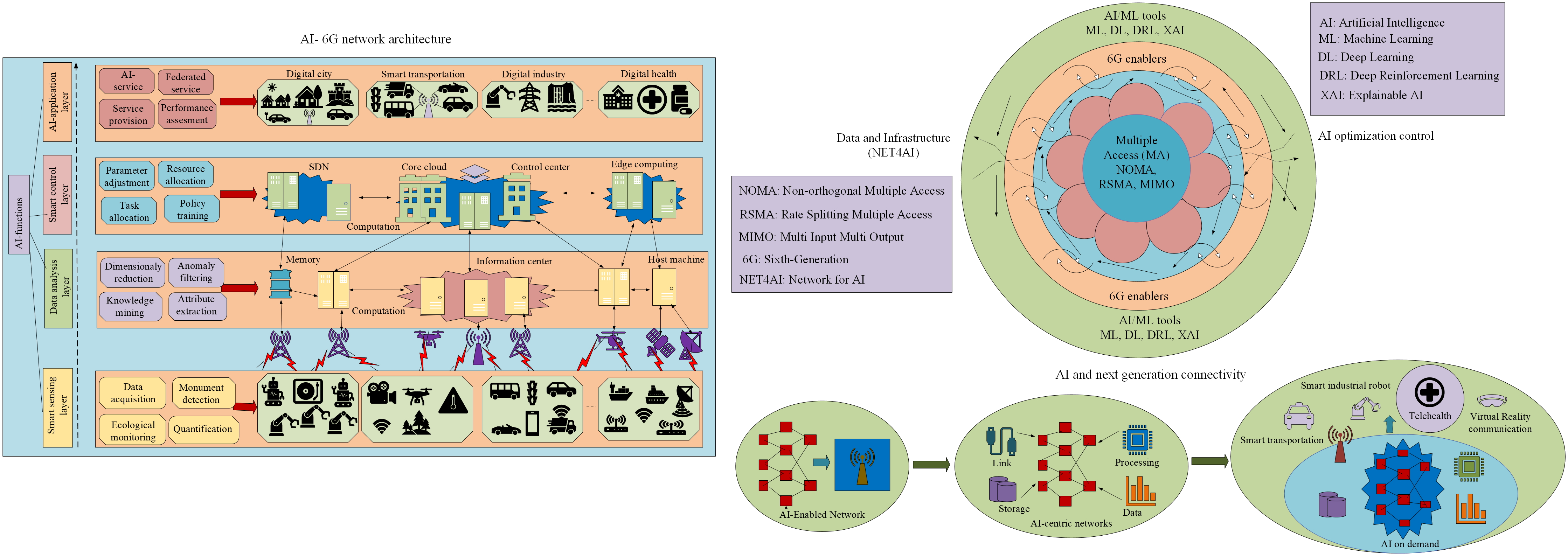

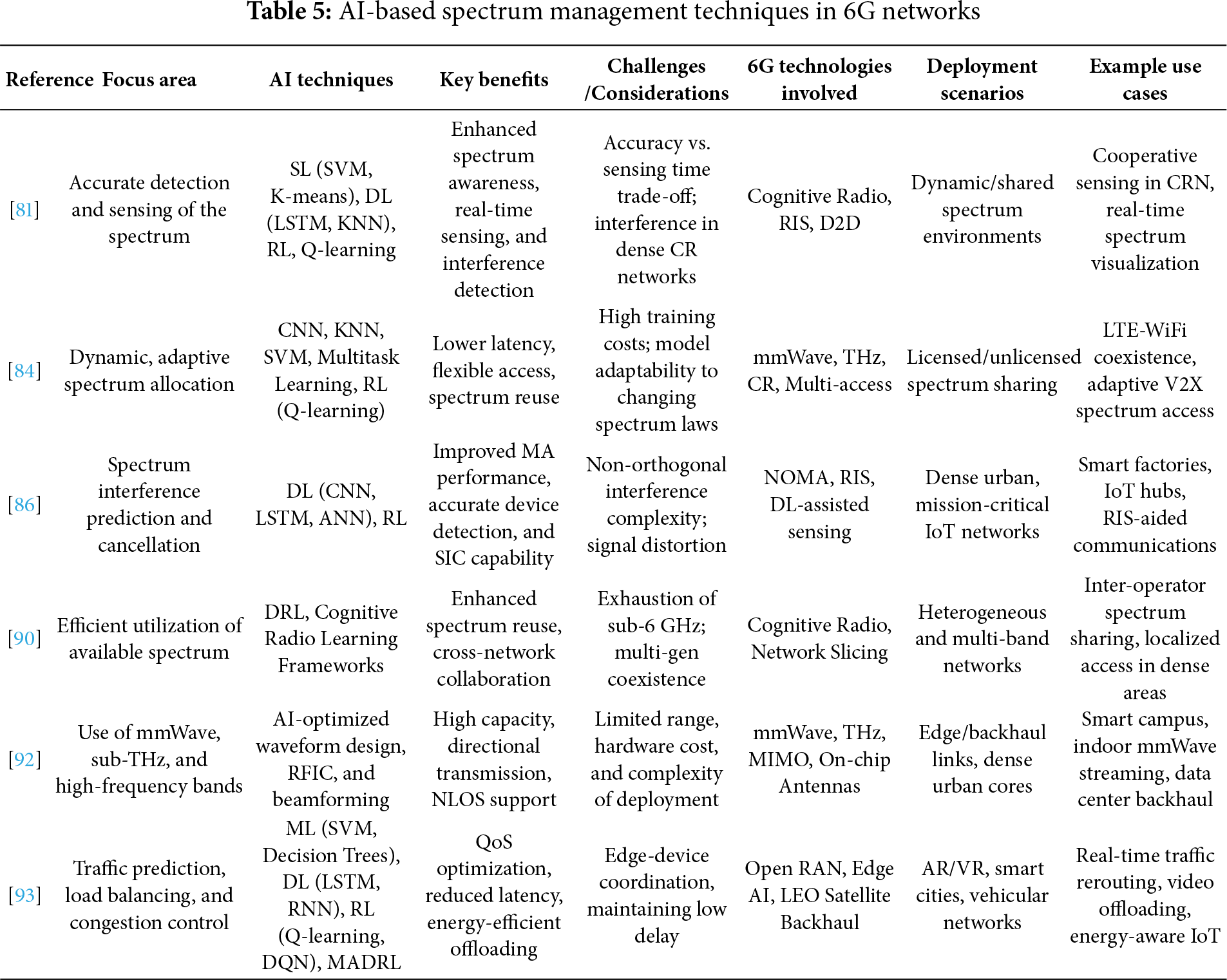

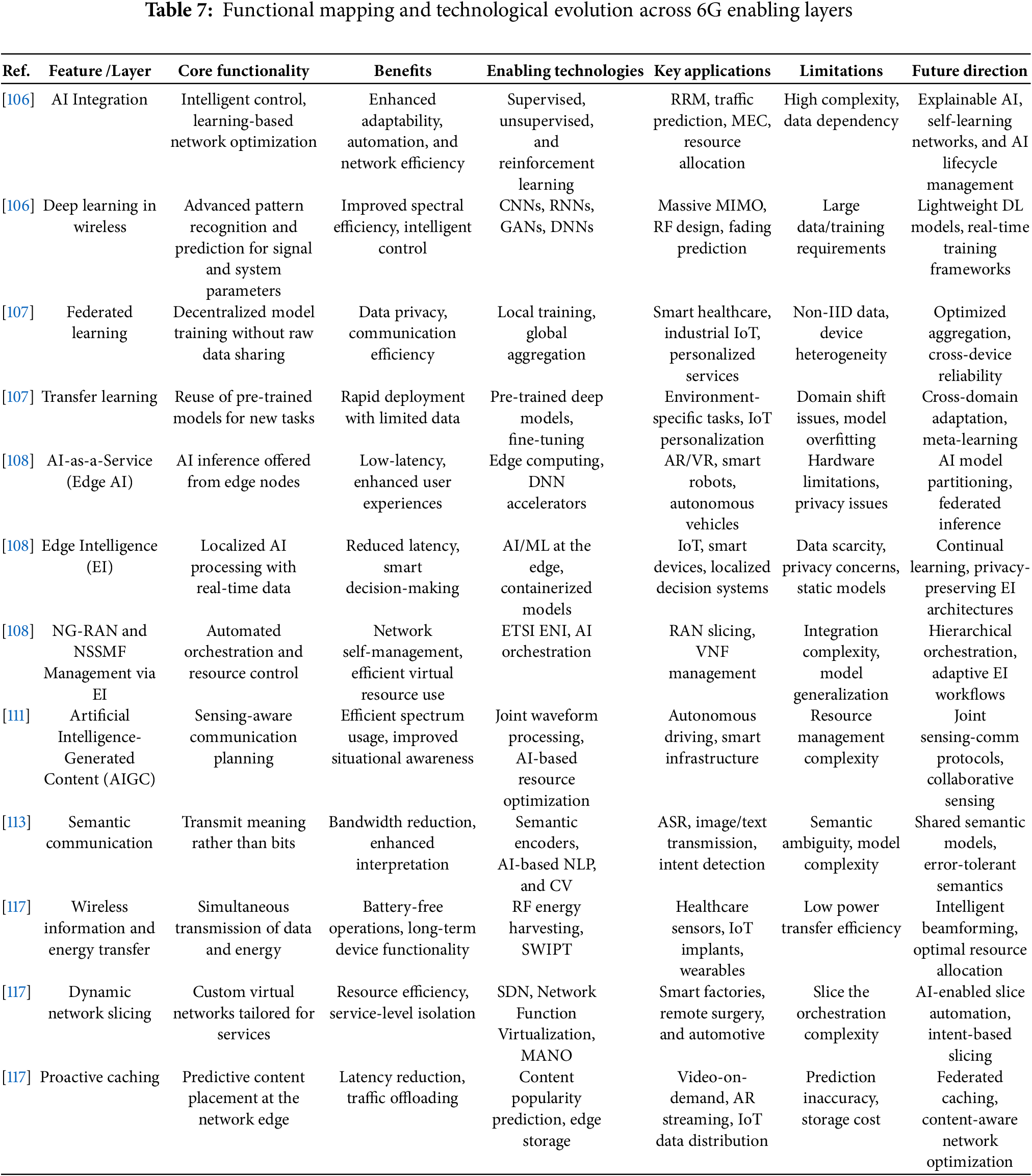

Among the new MA techniques gaining attention for 6G is Fluid Antenna Multiple Access (FAMA). As introduced in [33], FAMA uses fluid antennas to dynamically adjust antenna positions and maximize SNR with a single RF chain. The paper presents a detailed taxonomy of FAMA, examining its system architecture, channel modeling, diversity gain, and integration with other 6G technologies, including RIS, MIMO, THz communication, and AI. FAMA leverages the natural fading characteristics of wireless channels to manage interference, promising to be a valuable component of future 6G systems.

Ref. [34] reviews the role of AI in enhancing DSA in wireless networks, focusing on CR, SS, and RM using DL and RL. The paper highlights the potential of generative AI for future 6G systems while addressing key challenges, including data privacy, model complexity, scalability, and regulatory issues. The authors call for further research on AI reliability, ethical deployment, and real-time implementation in edge environments to support intelligent and adaptive wireless communication.

Table 1 presents a comparative review of the current literature on the integration of AI with 6G communication systems. It focuses on primary areas, including access technologies, DSA, AI-driven network optimization, and network architecture innovation. The table provides a concise summary of current innovations and remaining challenges in 6G and beyond, as well as in AI-powered wireless communication. For each paper, it also lists the main contributions, limitations, and prospective future directions.
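The single-RF-chain principle behind FAMA [33] can be approximated, for intuition only, as selecting the best of N antenna ports. The sketch below assumes independent Rayleigh-faded ports, which is a simplification: the ports of a real fluid antenna are closely spaced and spatially correlated, and the FAMA analysis also accounts for interference. Under the independence assumption, the mean SNR of the selected port equals the harmonic number 1 + 1/2 + … + 1/N (for unit mean SNR), illustrating the diversity gain obtained without additional RF chains. Function names are hypothetical.

```python
import random

def select_port(port_snrs):
    """Return (index, SNR) of the port with the highest instantaneous SNR."""
    best = max(range(len(port_snrs)), key=lambda i: port_snrs[i])
    return best, port_snrs[best]

def mean_selected_snr(n_ports, mean_snr=1.0, trials=20000, seed=0):
    """Monte Carlo average SNR after best-port selection."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # Rayleigh fading -> exponentially distributed instantaneous SNR (i.i.d. across ports, assumed)
        snrs = [rng.expovariate(1.0 / mean_snr) for _ in range(n_ports)]
        total += select_port(snrs)[1]
    return total / trials

for n in (1, 2, 4, 8, 16):
    print(n, round(mean_selected_snr(n), 3))
```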

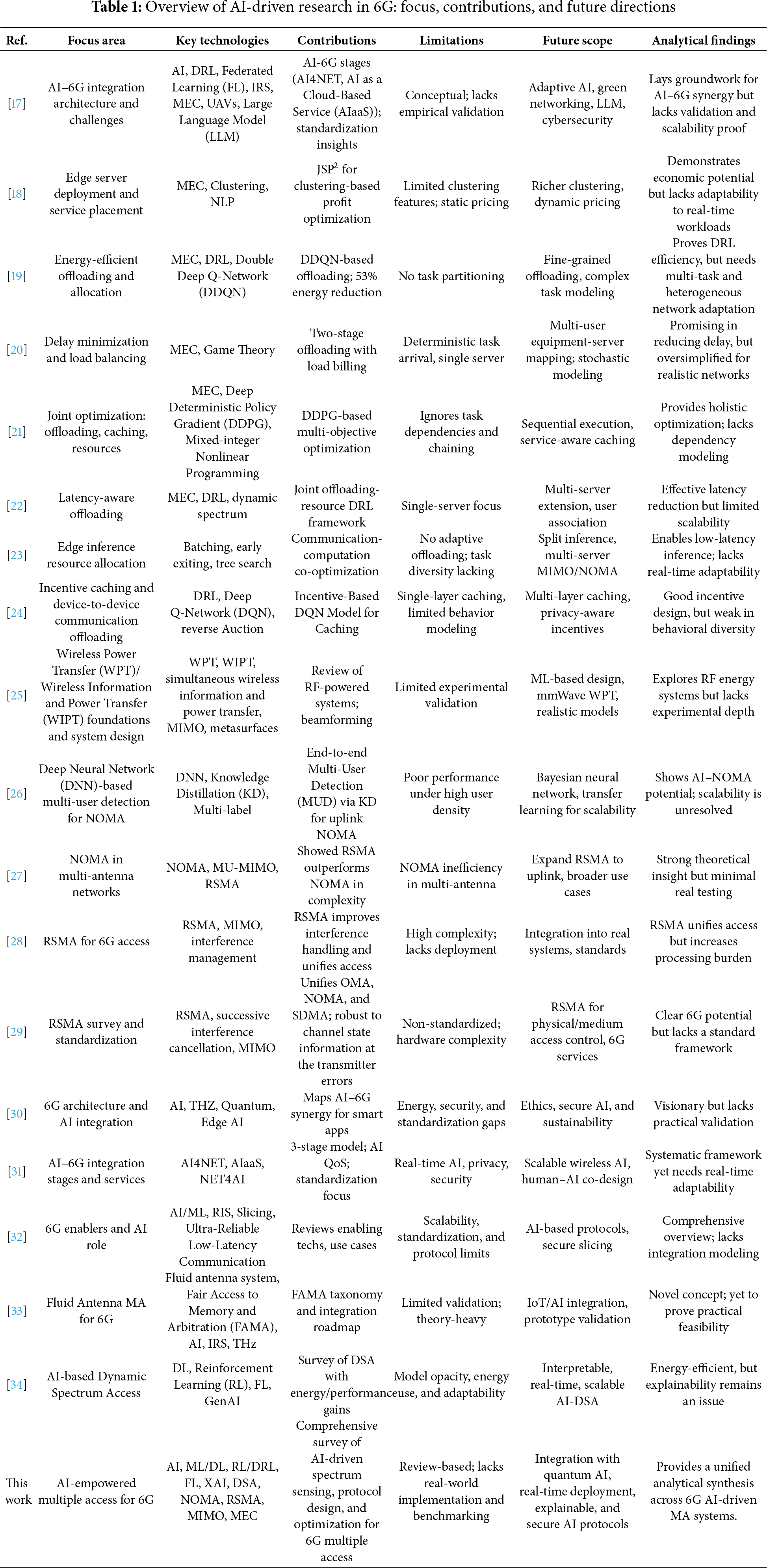

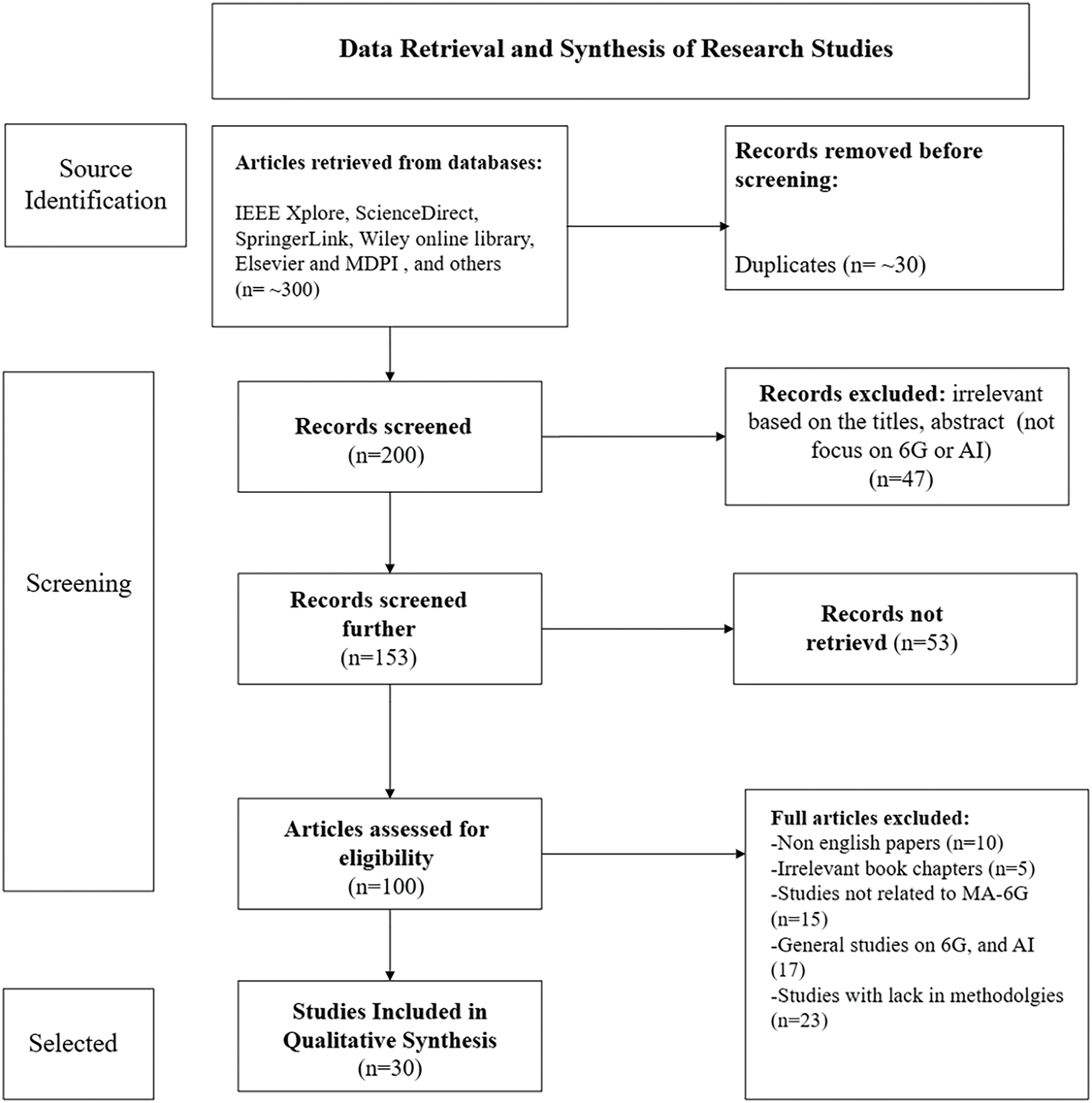

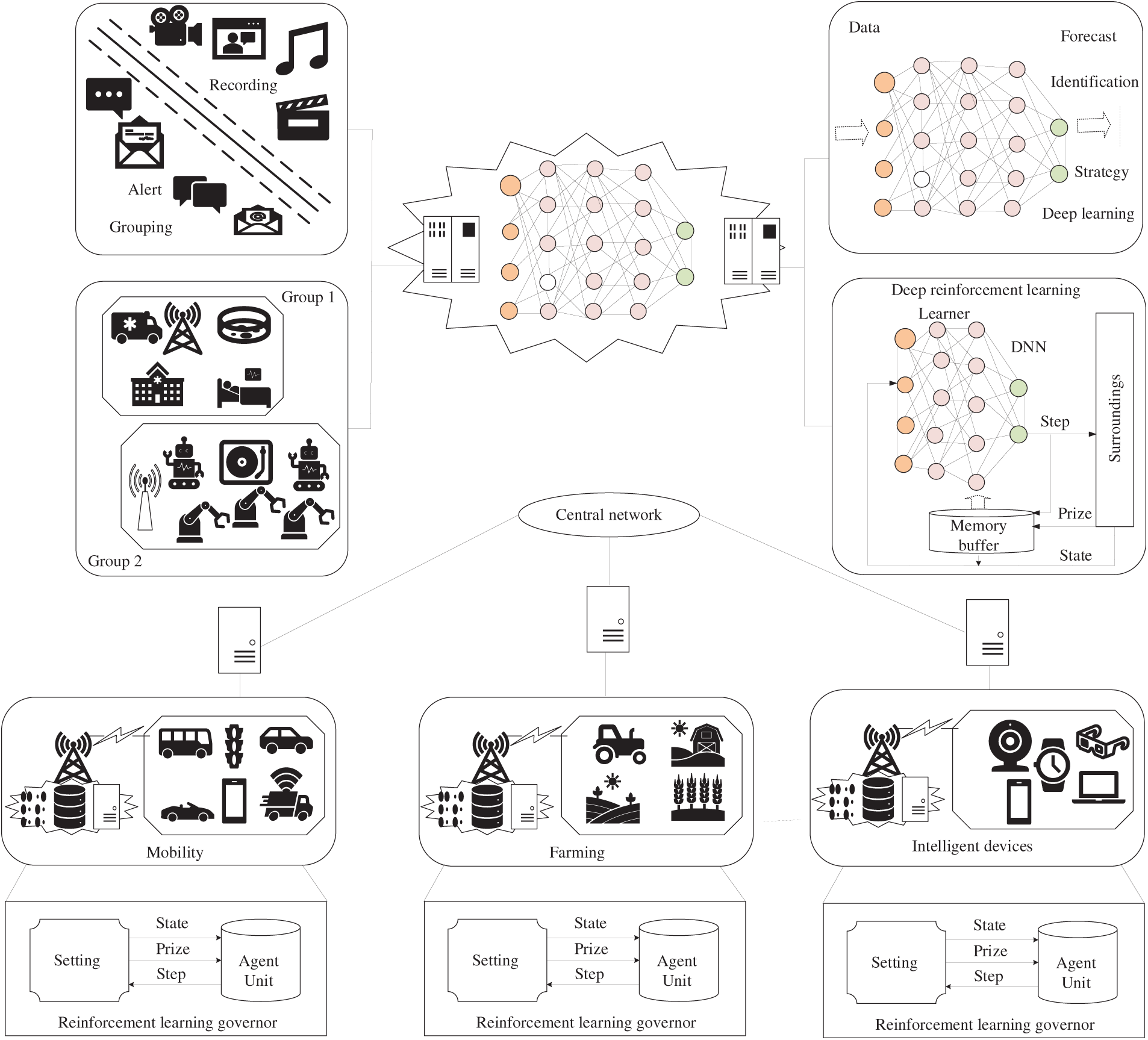

Fig. 1 presents a three-tier hierarchical model of the integration of intelligence and networking capabilities, which is the core of this research. The core layer comprises advanced MA techniques, including NOMA, RSMA, and massive MIMO. These physical-layer technologies govern Spectral Efficiency (SE) and enable massive connectivity, but they impose complex configuration problems (e.g., power allocation and beamforming-vector design). The outer layer, which serves as the intelligence engine, addresses these problems: AI/ML tools based on DL, and more specifically DRL, solve the dynamic, non-linear optimization problems of the core layer. Importantly, Explainable AI is included in this layer to make these complex control decisions trustworthy and transparent. The middle layer of 6G enablers, built on MEC, FL, and DSA, connects the two. The bidirectional flow reflects a symbiotic relationship. In AI for Network (AI4NET), DRL agents running on MEC servers dynamically optimize RSMA parameters to meet diverse quality-of-service needs. Conversely, in Network for AI (NET4AI), the network provides the low-latency data streams (e.g., via MIMO) and the distributed computing infrastructure (through FL/MEC) needed to continuously train and evolve the AI models. This structured integration allows the system to cope intelligently and dynamically with a highly complex communication environment.

Figure 1: Conceptual framework of AI-empowered multiple access for next-generation (6G) networks

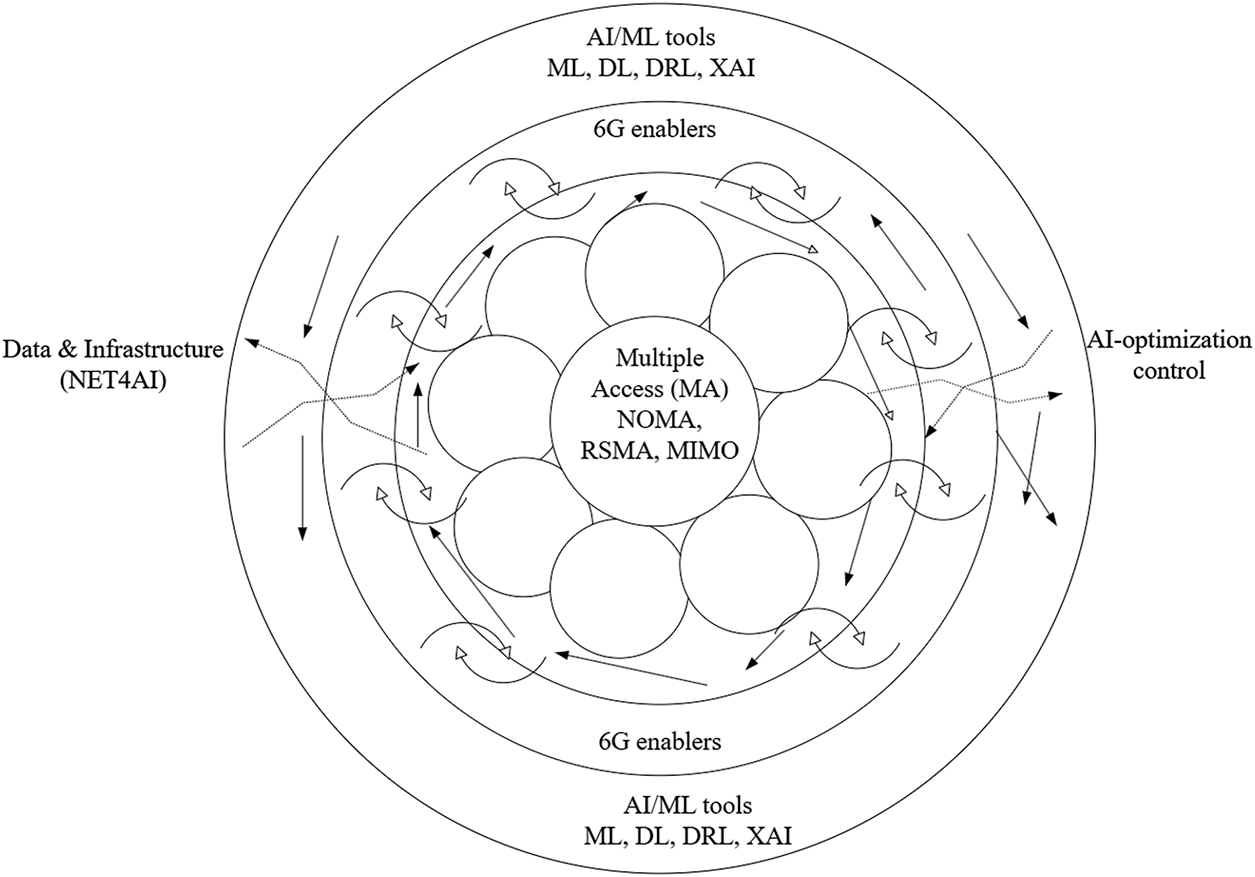

This study is based on a Systematic Literature Review (SLR) following the PRISMA 2020 guidelines to transparently identify, evaluate, and synthesize research on AI-enabled MA for 6G wireless networks. The review consolidates and categorizes the main trends in AI-based SS, dynamic resource allocation, protocol design, and the optimization strategies that 6G communication systems must address. The initial search yielded approximately 300 publications. After removing duplicates and applying inclusion/exclusion filters, 153 papers were shortlisted. Following quality assessment and full-text screening, 30 highly relevant studies were selected for detailed analysis, supplemented with benchmark works and standards to ensure completeness.

3.1 Research Question and Aims of the Review

The motivation for this review is the increasing use of AI in 6G wireless communication, where conventional MA schemes can no longer meet strict performance criteria such as ultra-low latency, massive connectivity, and high spectral and energy efficiency. Although extensive literature exists on AI in wireless communication, a structured, systematic review that explicitly examines AI-enabled MA mechanisms, such as SS, DSA, resource optimization, and smart protocol construction, has been lacking. Consequently, this SLR has the following objectives:

1. Determine and categorize the state-of-the-art AI-based MA schemes for 6G networks.

2. Assess the performance of AI techniques (ML, DL, RL, FL, and XAI) in SS, interference management, and protocol adaptation.

3. Highlight optimization methods, security issues, and emerging research to guide future 6G developments.

3.2 Scope and Research Questions

This review focuses on studies published after 2023 that address AI-assisted MA schemes in 6G and beyond wireless networks. It covers both theoretical and empirical publications on AI algorithms, network architecture, optimization schemes, and implementation issues. The following research questions (RQs) were developed to guide this study:

RQ1: What are the most essential AI-based solutions used in 6G multiple access design?

RQ2: How are AI techniques (ML, DL, RL, FL, and XAI) used in spectrum sensing, resource allocation, and protocol optimization?

RQ3: What computational models and optimization techniques are applied to enhance efficiency, scalability, and reliability in AI-enabled MA systems?

RQ4: What are the current research gaps, constraints, and outstanding challenges of implementing AI-based MA frameworks for 6G?

Studies were included if they: (i) focus on AI-based MA for 5G, 6G, or beyond networks; (ii) apply AI/ML/DL/RL/FL/XAI directly to SS, protocol design, optimization, or RA; (iii) are journal articles, conference papers, or review studies published between 2020 and 2025; and (iv) report performance enhancement, energy savings, security, or interference control in MA designs.

Studies were excluded if they: (i) were limited to physical-layer communication or non-AI-based MA methods; (ii) discussed AI in wireless communication without addressing MA or RM; (iii) were non-peer-reviewed sources (e.g., case studies, theses, white papers); or (iv) lacked experimental, simulation-based, or theoretical rigor on AI-driven MA.

3.4 Information Retrieval Methodology

Reputable academic databases, such as IEEE Xplore, ScienceDirect, SpringerLink, Wiley Online Library, Elsevier, and MDPI, were systematically searched and cross-checked using Google Scholar. The search strategy combined keywords with Boolean operators to retrieve all relevant studies: (“AI” OR “ML” OR “DL” OR “RL”) AND (MA) AND (NOMA) AND (RSMA) AND (SS) AND (DSA) AND (Protocol Design) AND (Optimization) AND (6G OR beyond 5G OR Next Generation Networks).

3.5 Data Retrieval and Synthesis

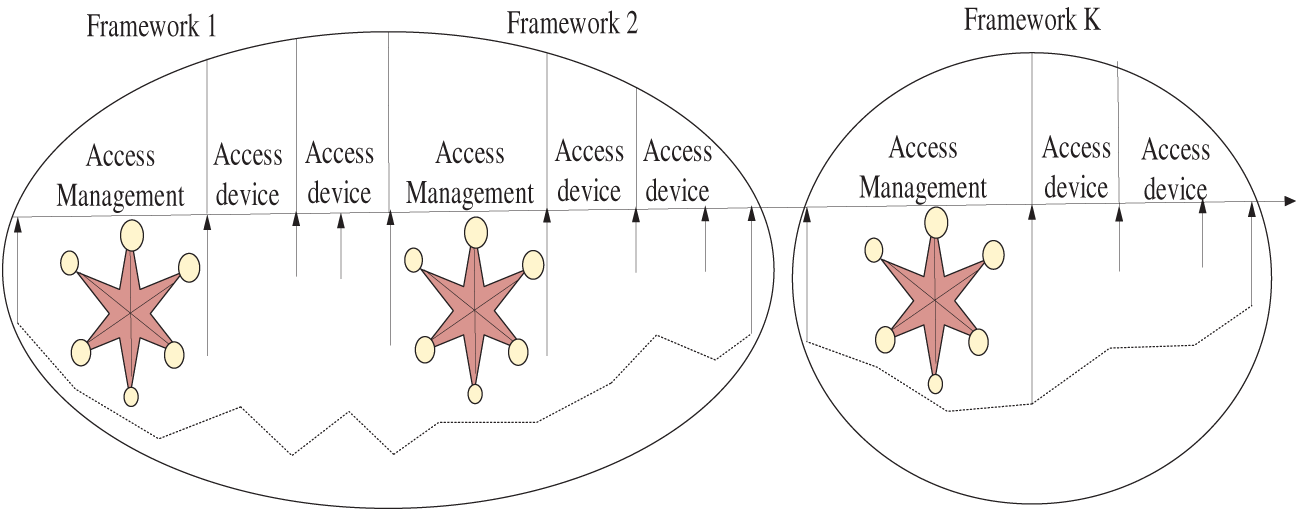

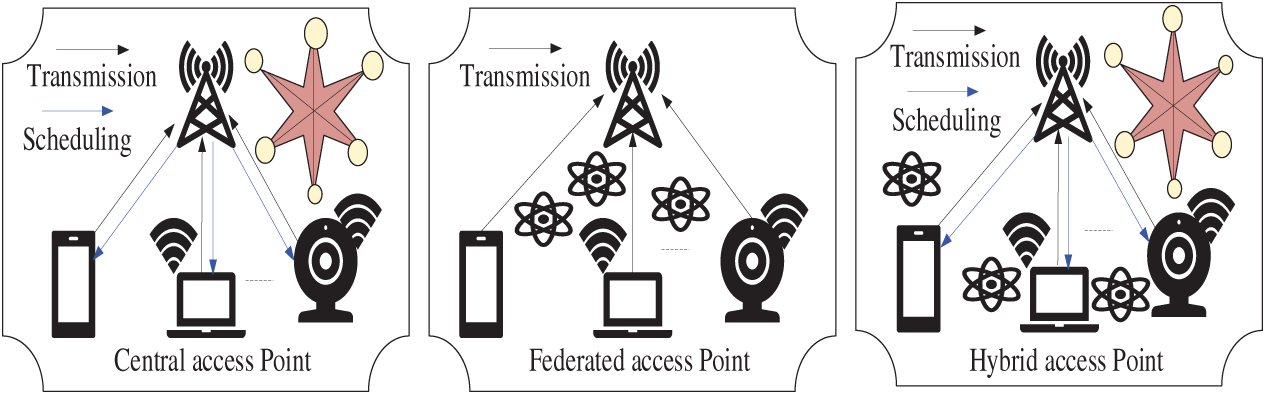

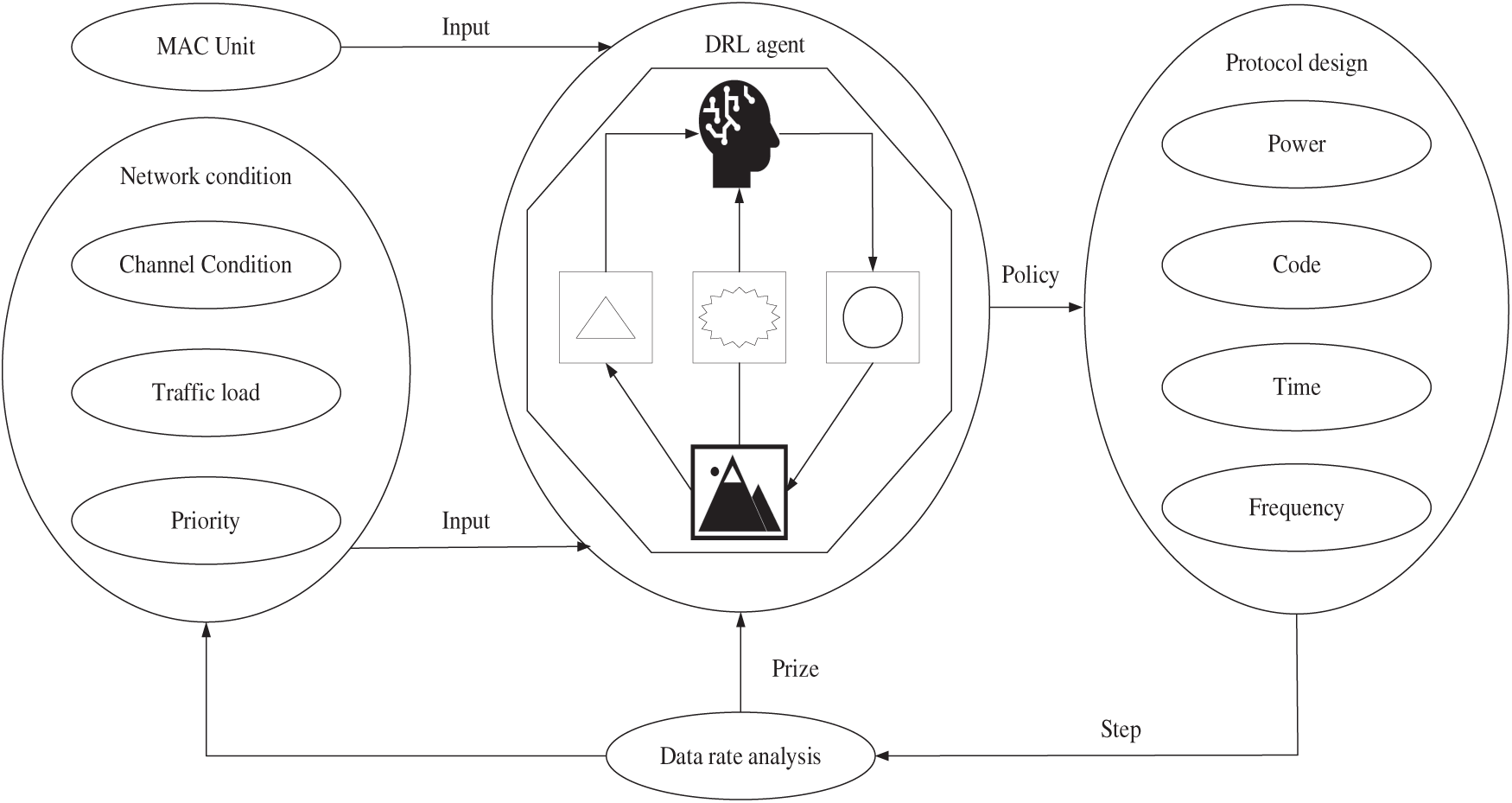

Information was systematically extracted and organized into a structured table, covering the following elements: publication year, research focus, AI method used, contributions, limitations, and future scope. The review used qualitative content analysis to identify trends across four dimensions: spectrum sensing and DSA based on AI; AI-assisted protocol design and scheduling; resource allocation and optimization through AI-based schemes; and AI-empowered MA system security, privacy, and explainability. The taxonomy of the reviewed literature is summarized in visual and tabular maps, as shown in Fig. 2, which illustrate the interrelationships among AI techniques, computational models, and real-world application examples.

Figure 2: PRISMA-style flow diagram of the study selection process

3.6 Novelty and Scope of the Survey

This study follows a methodologically rigorous protocol that supports reproducibility and academic validity. Its main contributions are:

1. A systematic review of AI-based MA studies on 6G, demonstrating the interdependences between access mechanisms and learning paradigms.

2. A new mapping of enabling technologies (NOMA, RSMA, RIS, MEC, ISAC, etc.) to appropriate computational models and areas of application.

3. An extensive taxonomy of AI approaches to multiple access, establishing their comparative advantages, constraints, and emerging roles in adaptive communication.

4. Identification of critical research gaps and a vision for constructing secure, explainable, and real-time AI-driven 6G MA frameworks.

4 Fundamentals of 6G and Multiple Access Technologies

6G envisages a paradigm shift beyond what 5G currently offers and is expected to provide wireless communication capabilities such as THz communications, holographic beamforming, ultra-massive connectivity, and sub-millisecond latency. These innovations are intended to meet the demanding needs of future applications, such as immersive XR, autonomous systems, and real-time digital twins. The key to meeting these objectives is advancing the MA technologies that govern how scarce spectrum is shared among users and devices. Although established OMA technologies, including TDMA, FDMA, and Code Division Multiple Access (CDMA), have been used successfully in previous generations, they have severe limitations in the more dynamic, dense conditions expected in 6G. As a result, alternative MA schemes have been proposed, with priority given to NOMA schemes (such as Power Domain NOMA, SCMA, and multi-user shared access). This section discusses MA techniques for 6G, along with their development, key distinctions, and limitations in meeting future connectivity requirements.
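The code-domain orthogonality on which CDMA relies can be shown with Walsh-Hadamard spreading codes. In the sketch below, three hypothetical users spread ±1 data symbols with different rows of an 8 × 8 Hadamard matrix, their chip streams are simply added (an ideal, synchronous, noise-free channel is assumed), and each receiver recovers its own bits by correlating with its code. Real systems must also cope with asynchrony, multipath, and the near-far problem, all of which erode this ideal orthogonality.

```python
def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n a power of two).
    Its rows are mutually orthogonal +/-1 Walsh codes."""
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    return h

def spread(bits, code):
    """Multiply each +/-1 data symbol by every chip of the user's code."""
    return [b * c for b in bits for c in code]

def despread(chips, code):
    """Correlate each symbol-length block with the code and take the sign."""
    n = len(code)
    out = []
    for i in range(0, len(chips), n):
        corr = sum(x * c for x, c in zip(chips[i:i + n], code)) / n
        out.append(1 if corr > 0 else -1)
    return out

codes = hadamard(8)
user_bits = {1: [1, -1, -1, 1], 2: [-1, -1, 1, 1], 3: [1, 1, -1, -1]}  # hypothetical data; code row = user id
# All users transmit at the same time and frequency, so their chip streams simply add up
tx = [spread(bits, codes[u]) for u, bits in user_bits.items()]
rx = [sum(chips) for chips in zip(*tx)]
recovered = {u: despread(rx, codes[u]) for u in user_bits}
print(recovered)
```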

4.1 Key Features of 6G Technology

To meet the stringent performance requirements of sixth-generation (6G) networks, particularly ultra-low latency, extreme data rates, and massive connectivity, recent research has focused on enhancing electromagnetic wave (EW) propagation characteristics, including reflection, refraction, and diffraction. In this context, Index Modulation (IM) techniques have gained significant attention for their ability to exploit reconfigurable antenna structures to transmit additional information, thereby improving SE. The study in [35] introduced a hybrid approach combining IM with Reconfigurable Intelligent Surfaces (RIS), where RIS elements were deployed not only at the receiver but also along the transmission path to enhance signal quality. Two novel modulation schemes, RIS-space shift keying (RIS-SSK) and RIS-spatial modulation (RIS-SM), were proposed, offering higher energy efficiency and greater structural simplicity than conventional massive MIMO systems. Moreover, the authors designed greedy and maximum-likelihood detection algorithms to further optimize detection performance.
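The index-modulation idea behind SSK and spatial modulation can be sketched as follows: the index of the active transmit antenna carries log2(Nt) bits, and in SM an M-ary symbol carries log2(M) additional bits. The example assumes Nt = 4 antennas, QPSK, a single receive antenna, and random complex Gaussian channel coefficients known at the receiver; it uses exhaustive maximum-likelihood detection. It is a conventional SM sketch, not the RIS-SSK/RIS-SM schemes or the greedy detector of [35], and the function names are hypothetical.

```python
import cmath
import itertools
import math
import random

QPSK = [cmath.exp(1j * (math.pi / 4 + k * math.pi / 2)) for k in range(4)]

def random_channels(n_tx, seed=0):
    """Assumed i.i.d. unit-power Rayleigh coefficients from each transmit antenna."""
    rng = random.Random(seed)
    s = math.sqrt(0.5)
    return [complex(rng.gauss(0, s), rng.gauss(0, s)) for _ in range(n_tx)]

def sm_receive(antenna, sym, channels, noise_std=0.0, rng=None):
    """Only one antenna is active, so y = h_antenna * s (+ optional complex AWGN)."""
    y = channels[antenna] * QPSK[sym]
    if noise_std > 0.0:
        y += complex(rng.gauss(0, noise_std), rng.gauss(0, noise_std))
    return y

def ml_detect(y, channels, symbols=QPSK):
    """Exhaustive ML search over (antenna, symbol): argmin |y - h_j * s|^2.
    Passing symbols=[1] gives SSK, where only the antenna index carries data."""
    return min(itertools.product(range(len(channels)), range(len(symbols))),
               key=lambda js: abs(y - channels[js[0]] * symbols[js[1]]) ** 2)

channels = random_channels(4)
bits_per_use = int(math.log2(len(channels))) + int(math.log2(len(QPSK)))
print("bits per channel use:", bits_per_use)
rng = random.Random(1)
errors = sum(ml_detect(sm_receive(j, s, channels, 0.1, rng), channels) != (j, s)
             for j in range(4) for s in range(4))
print("symbol errors out of 16 at noise_std=0.1:", errors)
```

Only one RF chain is active per channel use, which is the source of the hardware simplicity claimed for SSK/SM-type schemes relative to fully digital massive MIMO.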

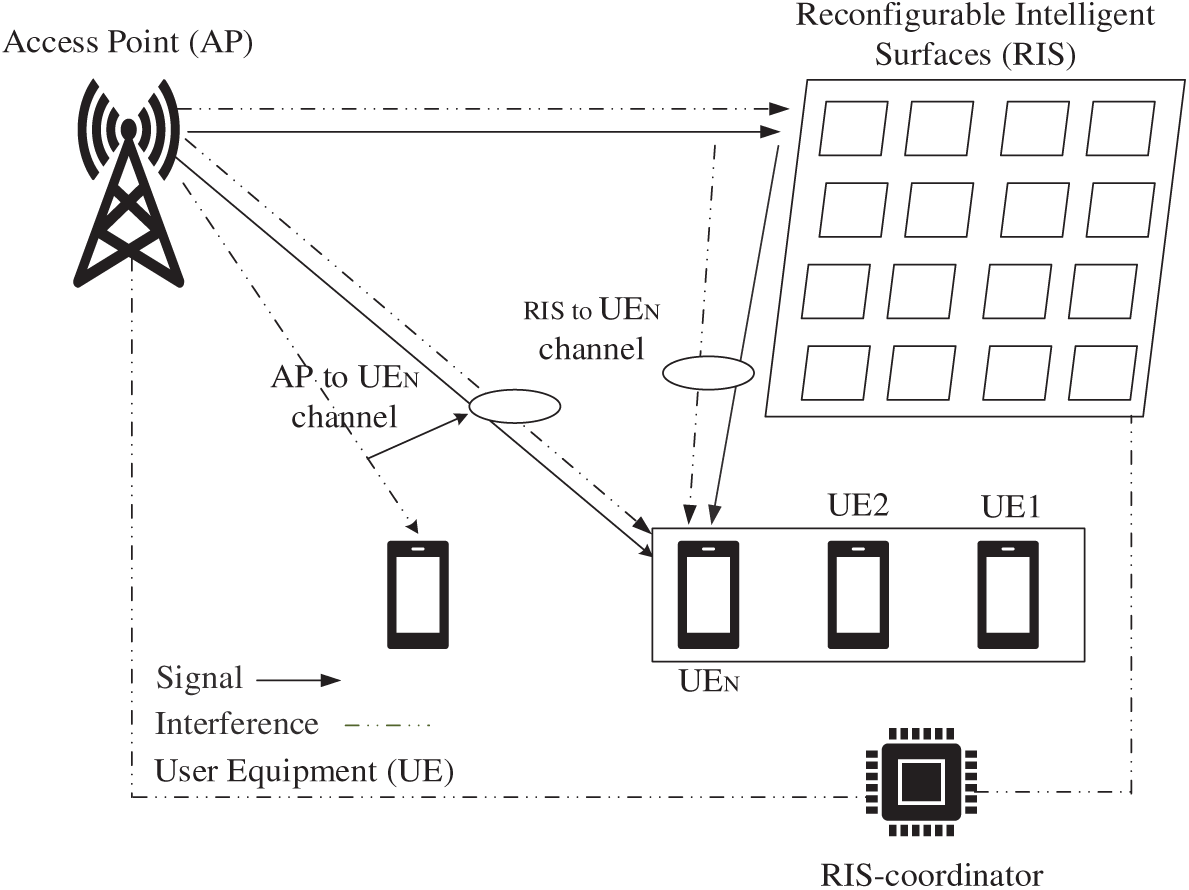

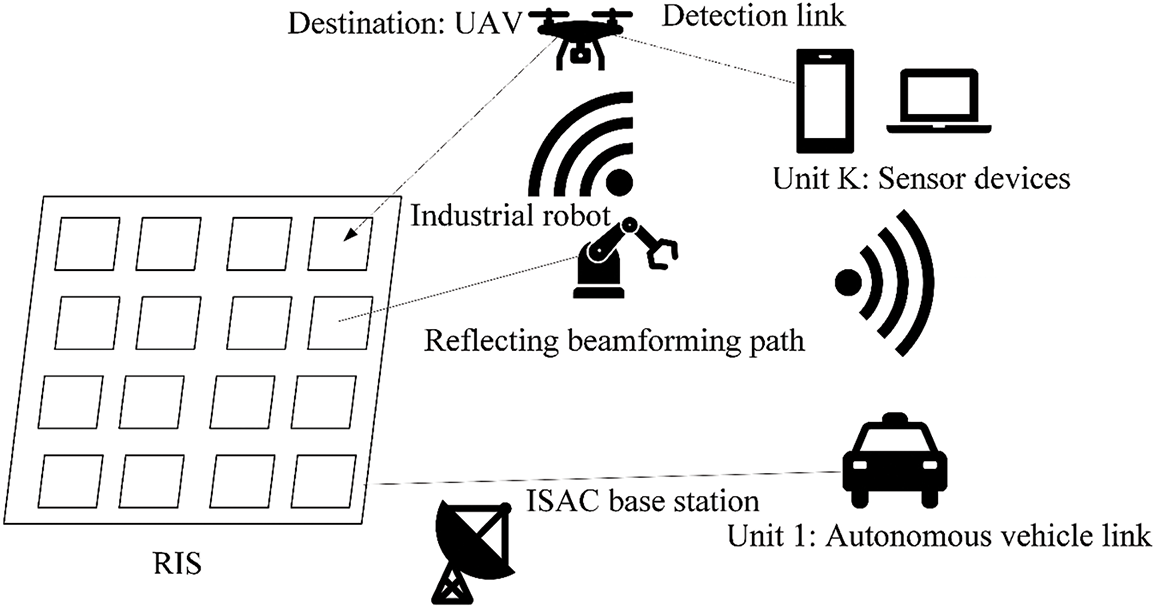

Similarly, the work in [36] proposed a non-orthogonal waveform (NOW) modulation technique based on faster-than-Nyquist signaling for DFT-s-OFDM systems. This approach improved SE and reduced the peak-to-average power ratio (PAPR) by 1.8–5.8 dB compared with traditional orthogonal waveforms, making it suitable for 5G and 6G systems. Fig. 3 depicts a wireless scenario in which an RIS improves the connection between an Access Point (AP) and several User Equipment (UEs). The system is designed to overcome signal blockages and enhance spectral efficiency when direct Line-of-Sight (LoS) links are obstructed. The intelligence of the system resides in the RIS controller: the RIS itself is not an active relay, but the controller dynamically computes and applies a set of phase-shift and amplitude reflection coefficients (Φ) for the many RIS elements. These coefficients are delivered over a dedicated low-rate control link (usually wired or short-range wireless). The main objective is joint optimization: the RIS's passive beamforming is configured to maximize the received signal at the target UE while placing nulls toward, or reducing interference at, non-target UEs and other interfering sources. This adaptive control can rely on channel state information (CSI) obtained through uplink probing, optionally combined with ML models running on the RIS controller or a centralized server, to achieve high transmission reliability and effective interference management across the coverage region without additional transmit power.

Figure 3: RIS-assisted signal enhancement for user connectivity
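For the single-user case, the passive beamforming objective described above has a well-known closed form: rotate each cascaded AP-RIS-UE path so that it adds coherently with the direct link. The sketch below assumes a single-antenna AP and UE, perfect CSI at the controller, unit-amplitude reflection, and continuous phase shifts; it ignores the multi-user nulling and ML-based control mentioned in the text, and the variable names are hypothetical.

```python
import numpy as np

def optimal_ris_phases(h_direct, h_ap_ris, h_ris_ue):
    """Rotate every cascaded AP-RIS-UE path so that it adds in phase with the direct AP-UE link."""
    cascaded = h_ap_ris * h_ris_ue
    return np.angle(h_direct) - np.angle(cascaded)

def received_gain(h_direct, h_ap_ris, h_ris_ue, theta):
    """|h_d + sum_n h_ap_ris[n] * exp(j*theta_n) * h_ris_ue[n]|^2 for unit-amplitude reflection."""
    effective = h_direct + np.sum(h_ap_ris * np.exp(1j * theta) * h_ris_ue)
    return float(np.abs(effective) ** 2)

rng = np.random.default_rng(1)

def rayleigh(size):
    return (rng.standard_normal(size) + 1j * rng.standard_normal(size)) / np.sqrt(2)

n_elements = 64
h_direct = 0.1 * rayleigh(1)[0]          # weak, partially blocked direct link (assumed)
h_ap_ris = rayleigh(n_elements)
h_ris_ue = rayleigh(n_elements)

theta_opt = optimal_ris_phases(h_direct, h_ap_ris, h_ris_ue)
theta_rand = rng.uniform(0, 2 * np.pi, n_elements)

gain_opt = received_gain(h_direct, h_ap_ris, h_ris_ue, theta_opt)
gain_rand = received_gain(h_direct, h_ap_ris, h_ris_ue, theta_rand)
upper_bound = (abs(h_direct) + np.sum(np.abs(h_ap_ris * h_ris_ue))) ** 2
print(f"optimized phases: {gain_opt:.1f}, random phases: {gain_rand:.1f}")
```

With random phases the cascaded paths add incoherently, so received power grows roughly linearly with the number of elements; with aligned phases it grows roughly quadratically, which is the main source of RIS beamforming gain.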

The millimeter-wave (mmWave) spectrum, first adopted in 5G New Radio (NR), remains a cornerstone of 6G communications because it spans frequencies up to 300 GHz and offers far wider channel bandwidths than sub-6 GHz bands. According to Shannon's capacity formula, C = B log2(1 + SNR), a wider bandwidth B increases channel capacity and enables ultra-high-speed data transmission, provided the SNR is maintained (for example, through beamforming gain), since noise power grows in proportion to B. Additionally, the shorter wavelengths of mmWave allow compact, high-gain antenna arrays that support directional beamforming, which benefits both secure communications and sensing applications. However, mmWave systems face challenges such as high path loss, LoS dependency, and mobility-induced fading, which call for advanced beam management and mobility solutions [37,38]. As data rates approach Tbps, the THz band (0.1–10 THz) has emerged as a promising option for ultra-high-speed wireless links, backhaul/fronthaul connectivity, and the Internet of Nano-Things (IoNT) [39]. Despite challenges such as severe propagation loss and limited transceiver power, recent advances, including distance-aware physical-layer designs, ultra-massive MIMO, and RIS-assisted THz links, have demonstrated communication ranges exceeding 100 m under both LoS and NLoS conditions.
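A short calculation makes the role of bandwidth in Shannon's formula concrete. The sketch assumes a fixed received power of -60 dBm, thermal noise of about -174 dBm/Hz, and no receiver noise figure. Because noise power scales with B, capacity grows sublinearly with bandwidth and approaches P/(N0 ln 2) as B grows without bound.

```python
import math

def shannon_capacity_bps(bandwidth_hz, rx_power_w, noise_psd_w_per_hz):
    """C = B * log2(1 + P / (N0 * B)); the noise power N0 * B grows linearly with bandwidth."""
    snr = rx_power_w / (noise_psd_w_per_hz * bandwidth_hz)
    return bandwidth_hz * math.log2(1 + snr)

noise_psd = 10 ** ((-174 - 30) / 10)   # about -174 dBm/Hz thermal noise density, converted to W/Hz
rx_power = 10 ** ((-60 - 30) / 10)     # assumed received power of -60 dBm, converted to W
bandwidths = [100e6, 1e9, 10e9]        # 100 MHz, 1 GHz, 10 GHz

capacities = [shannon_capacity_bps(b, rx_power, noise_psd) for b in bandwidths]
wideband_limit = rx_power / (noise_psd * math.log(2))   # limit of C as B -> infinity

for b, c in zip(bandwidths, capacities):
    print(f"B = {b / 1e9:5.1f} GHz -> C = {c / 1e9:6.2f} Gbit/s")
print(f"Limit as B grows: {wideband_limit / 1e9:.1f} Gbit/s")
```

With a fixed received power, a 100-fold bandwidth increase yields well under a 100-fold capacity gain, which is why mmWave and THz systems pair wide bandwidths with high-gain beamforming.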

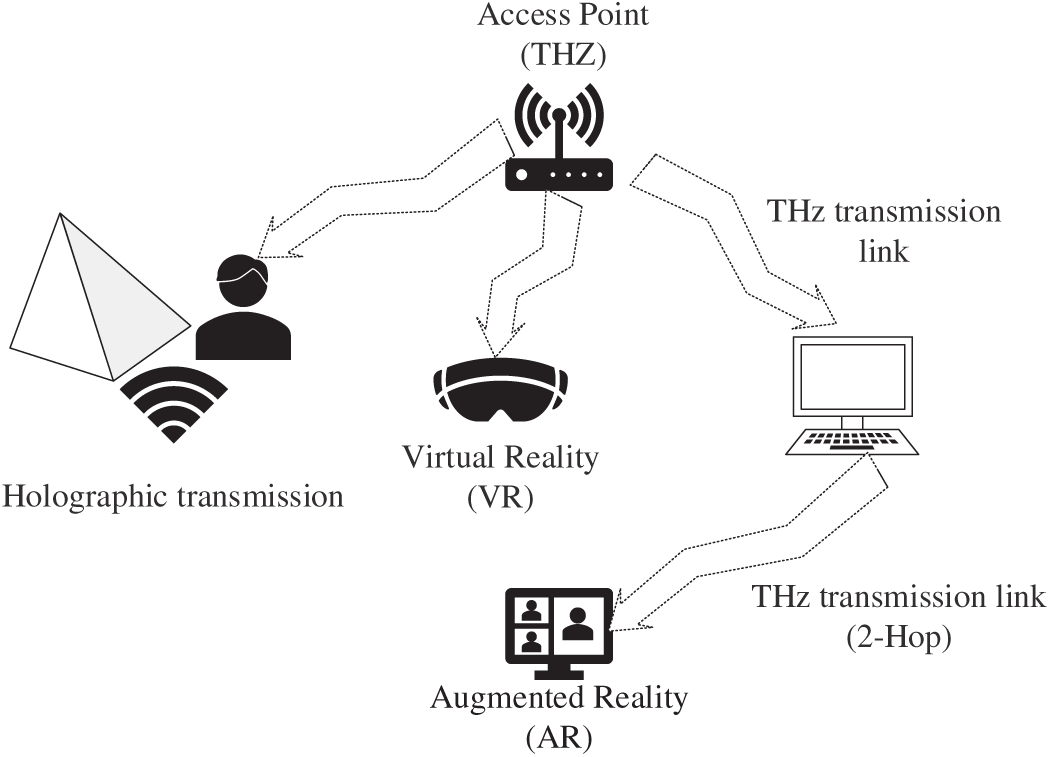

Looking ahead, 6G networks will integrate multiple enabling technologies, such as sub-THz and visible-light communication (VLC), ultra-dense networks, and aerial platforms, to achieve data rates from 100 Gbps to several Tbps. Initial deployments are expected to rely on sub-THz frequencies for short-range LoS communications, supported by multi-polarized high-gain antenna arrays. Nonetheless, hardware complexity and energy efficiency remain significant design challenges [40]. Fig. 4 shows several THz-based WLAN applications that exploit the vast unlicensed bandwidth available in the sub-THz and THz (100 GHz–10 THz) bands. This bandwidth is needed for Virtual/Augmented Reality (VR/AR) and holographic communication, which require peak data rates above 100 Gbps to deliver immersive, high-fidelity content with near-zero latency. The figure depicts short-range, high-gain THz links that use highly directional pencil-beam antennas to overcome the severe path loss and atmospheric absorption of the band. Moreover, the two-hop THz links highlight the need for multi-hop relaying and mesh topologies to extend coverage and maintain robust connectivity, because THz signals have a limited range.

Figure 4: Emerging WLAN applications
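The severity of THz path loss, and the reason pencil-beam antennas are used, can be estimated with the Friis free-space formula. The sketch below ignores molecular absorption (which adds frequency-selective loss in the THz band) and assumes ideal coherent array gain; the link distance and array sizes are illustrative assumptions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def free_space_path_loss_db(distance_m, frequency_hz):
    """Friis free-space path loss between isotropic antennas: 20*log10(4*pi*d*f/c)."""
    return 20 * math.log10(4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT)

def array_gain_db(num_elements):
    """Ideal coherent beamforming gain of an N-element array (no losses or pointing error)."""
    return 10 * math.log10(num_elements)

distance = 10.0  # m, short-range indoor link (assumed)
for f in (3e9, 28e9, 300e9):
    print(f"{f / 1e9:6.0f} GHz: FSPL = {free_space_path_loss_db(distance, f):6.1f} dB")

extra_loss = free_space_path_loss_db(distance, 300e9) - free_space_path_loss_db(distance, 3e9)
compensation = 2 * array_gain_db(256)   # 256-element arrays at both link ends (assumed)
print(f"Extra loss at 300 GHz vs 3 GHz: {extra_loss:.1f} dB; "
      f"two 256-element arrays recover {compensation:.1f} dB")
```

For a fixed physical aperture, an array at a higher frequency holds more elements, so the added free-space loss can in principle be offset by beamforming gain at both ends.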

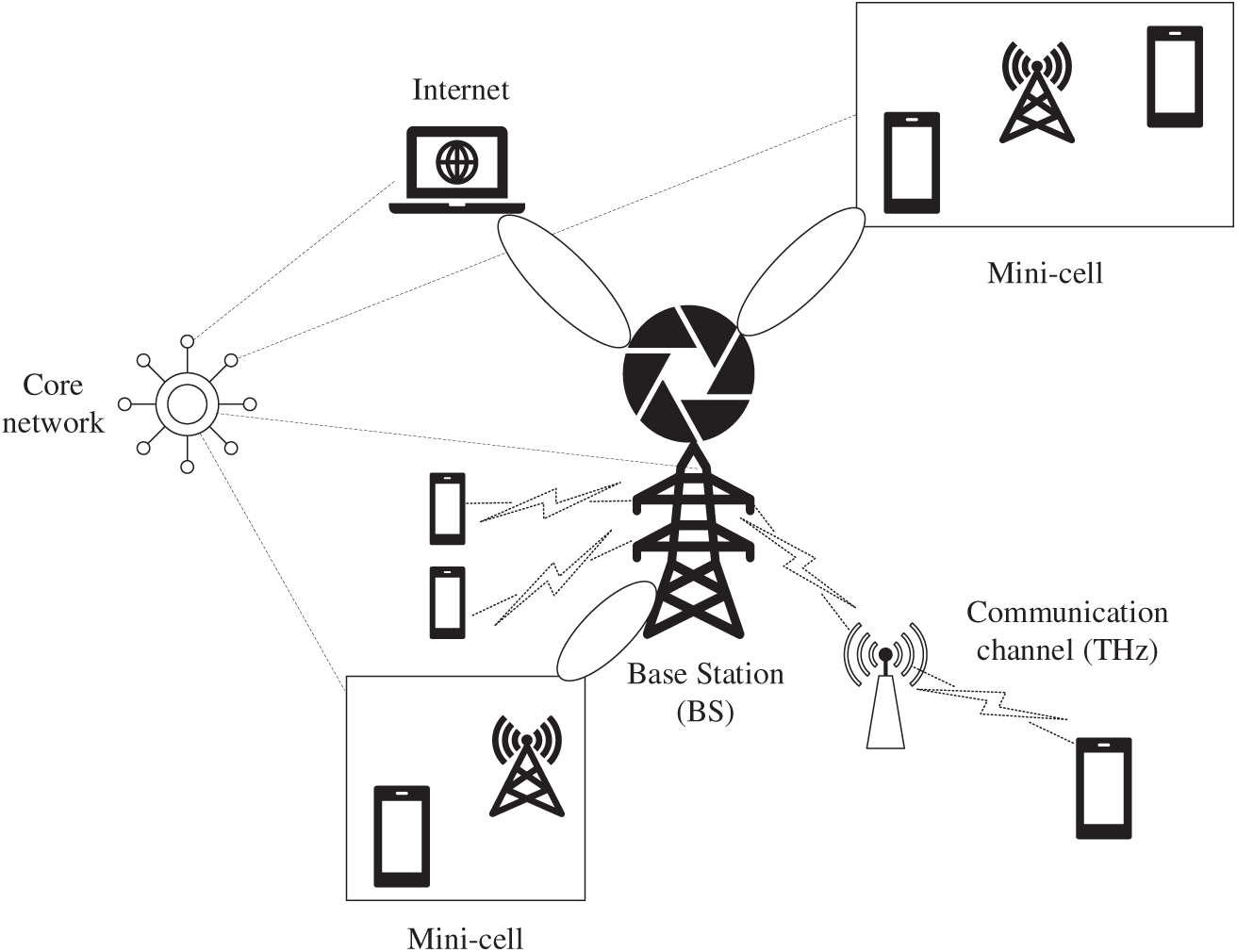

Fig. 5 presents the principal architectural characteristics of THz-based 6G networks, focusing on how THz functionality is incorporated at the network level. This requires deep integration with SDN and Network Function Virtualization (NFV) to dynamically control highly sporadic, bandwidth-intensive THz traffic. Data centers are shown explicitly to illustrate how THz links can provide high-speed, high-density intra-data-center connections. This approach goes beyond traditional fiber to meet the transfer and processing demands of the vast volumes of data generated by mMTC. Finally, the figure underscores the importance of THz in high-speed access and backhaul systems. In this role, THz links serve both as "wireless fiber" providing multi-gigabit-per-second last-mile access (e.g., small-cell fronthaul) and as high-capacity backhaul links connecting macro Base Stations (BSs) to the core network, exploiting the low latency and high throughput of the band.

Figure 5: THz-enabled 6G architecture with base stations, small cells, and relay links

Optical Wireless Communication (OWC) has also emerged as a strong complement to the THz spectrum, offering interference-free, high-capacity, and ultra-low-latency wireless links. The optical domain comprises infrared (IR) (760 nm–1 mm), visible light (360–760 nm), and ultraviolet (UV) (10–400 nm) bands, each serving distinct application scenarios [41]. Among these, Visible Light Communication (VLC) enables dual-purpose operation for both illumination and data transmission. Recent advancements in blue laser-based lighting have achieved data rates up to 26 Gbps, allowing high-speed light-based IoT applications [42,43]. Meanwhile, UV communication supports non-line-of-sight (NLoS) transmission through atmospheric scattering, though safety considerations remain crucial [44]. OWC technologies—including LiFi, VLC, Optical Camera Communication (OCC), Light Detection and Ranging (LiDAR), and Free Space Optics (FSO)—are enabling high-throughput connectivity across diverse domains, such as indoor, vehicular, underwater, and satellite systems. While OWC can provide up to 1000× more bandwidth than conventional RF systems, its performance is constrained by the bandwidth of optoelectronic components. Recent innovations in high-speed LEDs and silicon photomultipliers have achieved data rates exceeding 1 Gbps. To mitigate orientation sensitivity, techniques such as adaptive spatial modulation and multi-directional transmitters are being explored [45].
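The orientation sensitivity noted above follows from the widely used Lambertian line-of-sight channel model for VLC, sketched below. The optical filter and concentrator gains are set to 1, and the LED half-power semi-angle, receiver field of view, photodiode area, and geometry are illustrative assumptions.

```python
import math

def lambertian_order(half_power_semiangle_deg):
    """Lambertian emission order m = -ln 2 / ln(cos(Phi_1/2))."""
    return -math.log(2) / math.log(math.cos(math.radians(half_power_semiangle_deg)))

def vlc_los_dc_gain(distance_m, area_m2, irradiance_deg, incidence_deg,
                    half_power_deg=60.0, fov_deg=60.0):
    """LoS DC gain from a Lambertian LED to a photodiode (filter and concentrator gains taken as 1)."""
    if incidence_deg > fov_deg:
        return 0.0   # light arriving outside the receiver field of view is not detected
    m = lambertian_order(half_power_deg)
    return ((m + 1) * area_m2 / (2 * math.pi * distance_m ** 2)
            * math.cos(math.radians(irradiance_deg)) ** m
            * math.cos(math.radians(incidence_deg)))

area = 1e-4        # 1 cm^2 photodiode (assumed)
d = 2.0            # LED mounted 2 m directly above the receiver (assumed)
facing_up = vlc_los_dc_gain(d, area, 0.0, 0.0)
tilted_45 = vlc_los_dc_gain(d, area, 0.0, 45.0)    # receiver tilted by 45 degrees
tilted_70 = vlc_los_dc_gain(d, area, 0.0, 70.0)    # tilted beyond the 60-degree field of view
print(facing_up, tilted_45, tilted_70)
```

Tilting the receiver scales the gain by the cosine of the incidence angle and cuts the link entirely once the LED leaves the field of view, which is what multi-directional transmitters and adaptive spatial modulation try to counteract.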

In scenarios where fiber deployment is impractical or economically infeasible, Free Space Optics (FSO) offers a robust backhaul/fronthaul alternative, achieving fiber-like data rates and seamless integration with optical networks, which is particularly advantageous for micro-cellular and mobile backhaul systems [46]. Beyond terrestrial systems, three-dimensional (3D) network architectures integrating terrestrial, aerial (UAV), and satellite communications are being developed to achieve global coverage and ubiquitous connectivity. This unicellular network paradigm removes traditional cell boundaries, enabling seamless handovers and user-centric communication across heterogeneous technologies [46]. Spectrum scarcity and underutilization also remain major challenges in 6G systems. DSM and Cognitive Radio (CR) techniques will play pivotal roles in enhancing SE through listen-before-talk and AI-driven adaptive optimization [47,48]. Moreover, Symbiotic Radio (SR) extends the CR concept by combining it with ambient backscatter communication (AmBC), enabling low-power passive IoT connectivity. Emerging frameworks that integrate AI and blockchain are being explored to enable secure, autonomous, and transparent spectrum sharing, further reinforcing the intelligence and resilience of future wireless networks [49].
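Listen-before-talk and many spectrum-sensing schemes build on energy detection. The sketch below sets the detection threshold for a target false-alarm probability using a Gaussian approximation of the test statistic and checks it by Monte Carlo simulation. It assumes a perfectly known noise variance and a Gaussian primary-user signal; the SNR, sample count, and target values are illustrative and are not taken from any standard.

```python
import numpy as np
from statistics import NormalDist

def energy_detector(samples, noise_var, p_fa):
    """Declare the channel busy if the average sample energy exceeds a threshold chosen for a target
    false-alarm rate. Gaussian approximation: under noise only, the statistic has mean noise_var and
    variance noise_var**2 / N for complex Gaussian noise."""
    n = samples.size
    statistic = np.mean(np.abs(samples) ** 2)
    threshold = noise_var * (1 + NormalDist().inv_cdf(1 - p_fa) / np.sqrt(n))
    return bool(statistic > threshold)

def complex_gaussian(rng, n, var):
    return np.sqrt(var / 2) * (rng.standard_normal(n) + 1j * rng.standard_normal(n))

rng = np.random.default_rng(7)
noise_var, n_samples, target_pfa = 1.0, 1000, 0.1
snr_db = -5.0                          # primary-user signal 5 dB below the noise floor (assumed)
signal_var = noise_var * 10 ** (snr_db / 10)

trials = 2000
false_alarms = sum(energy_detector(complex_gaussian(rng, n_samples, noise_var), noise_var, target_pfa)
                   for _ in range(trials))
detections = sum(energy_detector(complex_gaussian(rng, n_samples, noise_var)
                                 + complex_gaussian(rng, n_samples, signal_var), noise_var, target_pfa)
                 for _ in range(trials))
p_fa_hat, p_d_hat = false_alarms / trials, detections / trials
print(f"empirical P_fa = {p_fa_hat:.3f}, empirical P_d = {p_d_hat:.3f}")
```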

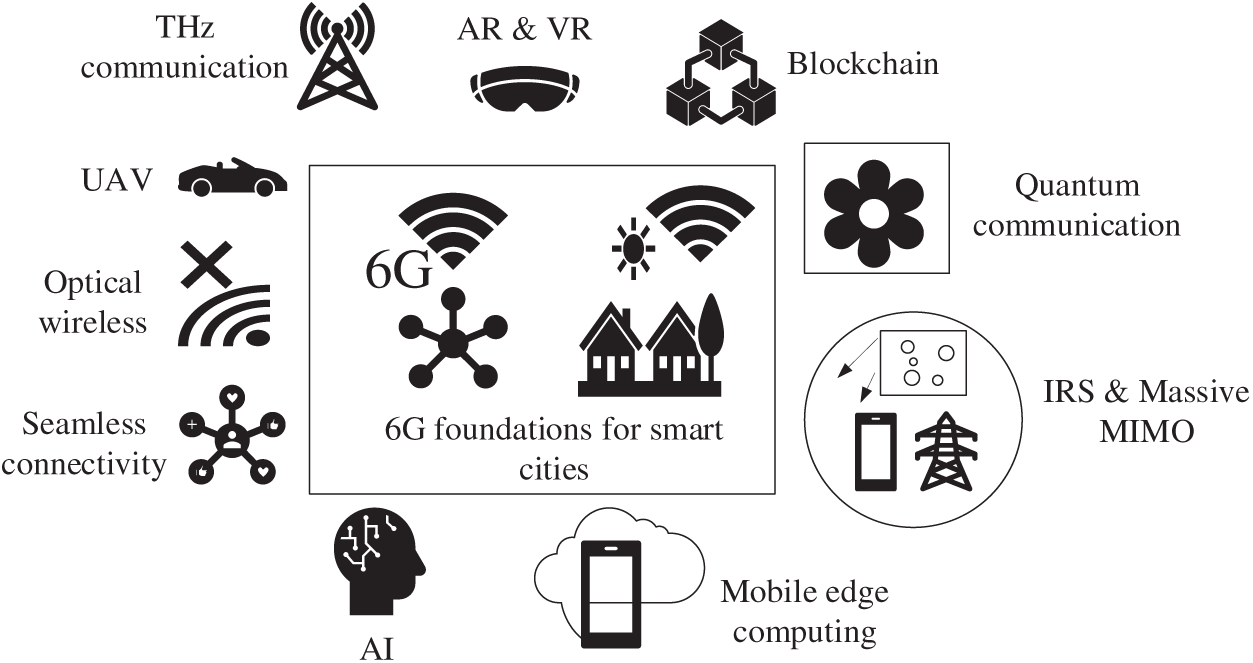

6G also extends the network vertically, using satellites and UAVs as BSs to offer unprecedented flexibility and continuous global coverage, reaching previously unserved areas such as oceans and mountainous regions through a decentralized design. Beyond spectral innovations and architectural changes, much of 6G's transformative power comes from intelligent technologies that connect the physical and digital worlds. The push for ultra-low latency and massive processing power requires a transition to edge computing, which places computational resources close to the User Equipment (UE) to minimize delay and supports real-time applications such as autonomous driving and industrial automation. This distributed intelligence, which frequently employs Federated Learning, is vital for both privacy and responsiveness [50]. Moreover, Digital Twins (DTs), which are high-fidelity virtual replicas of the network, enable continuous simulation, fault prediction, and dynamic resource allocation. This helps manage network complexity and improves real-time system performance [51]. Importantly, 6G is also designed to serve as the communication foundation of the Metaverse and high-resolution Extended Reality (XR) applications. These applications require Tb/s data rates and millisecond-level latency to deliver immersive, ubiquitous holographic experiences, a requirement that shapes one of the fundamental design principles of the 6G architecture. Finally, blockchain is incorporated to secure this hyper-connected ecosystem by providing a decentralized ledger. This establishes secure, transparent operational mechanisms, such as dynamic spectrum sharing and identity management, that enhance the trustworthiness and reliability of the 6G network [52].
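To illustrate how Federated Learning keeps raw data on devices, the sketch below implements the basic federated averaging (FedAvg) loop on a toy linear-regression task: clients run local gradient descent, and the server averages the resulting models weighted by local dataset size. The clients, learning rate, and round counts are hypothetical, and this is a generic FedAvg example rather than the specific frameworks cited in [50].

```python
import numpy as np

def local_gradient_descent(weights, X, y, lr=0.1, epochs=5):
    """Full-batch gradient descent on one client's private least-squares loss."""
    for _ in range(epochs):
        grad = 2 * X.T @ (X @ weights - y) / len(y)
        weights = weights - lr * grad
    return weights

def fedavg_round(global_weights, clients):
    """One FedAvg round: clients train locally; the server averages models weighted by sample count."""
    sizes = np.array([len(y) for _, y in clients], dtype=float)
    local_models = [local_gradient_descent(global_weights, X, y) for X, y in clients]
    return sum(n * w for n, w in zip(sizes, local_models)) / sizes.sum()

rng = np.random.default_rng(3)
true_w = np.array([2.0, -1.0])
clients = []
for n in (50, 120, 300):                       # unequal local dataset sizes (assumed)
    X = rng.standard_normal((n, 2))
    y = X @ true_w + 0.1 * rng.standard_normal(n)
    clients.append((X, y))                     # raw data never leaves the client; only weights are shared

w = np.zeros(2)
for _ in range(30):
    w = fedavg_round(w, clients)
print("global model:", np.round(w, 3))
```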

4.2 Analysis of Strengths and Limitations across 6G Technologies

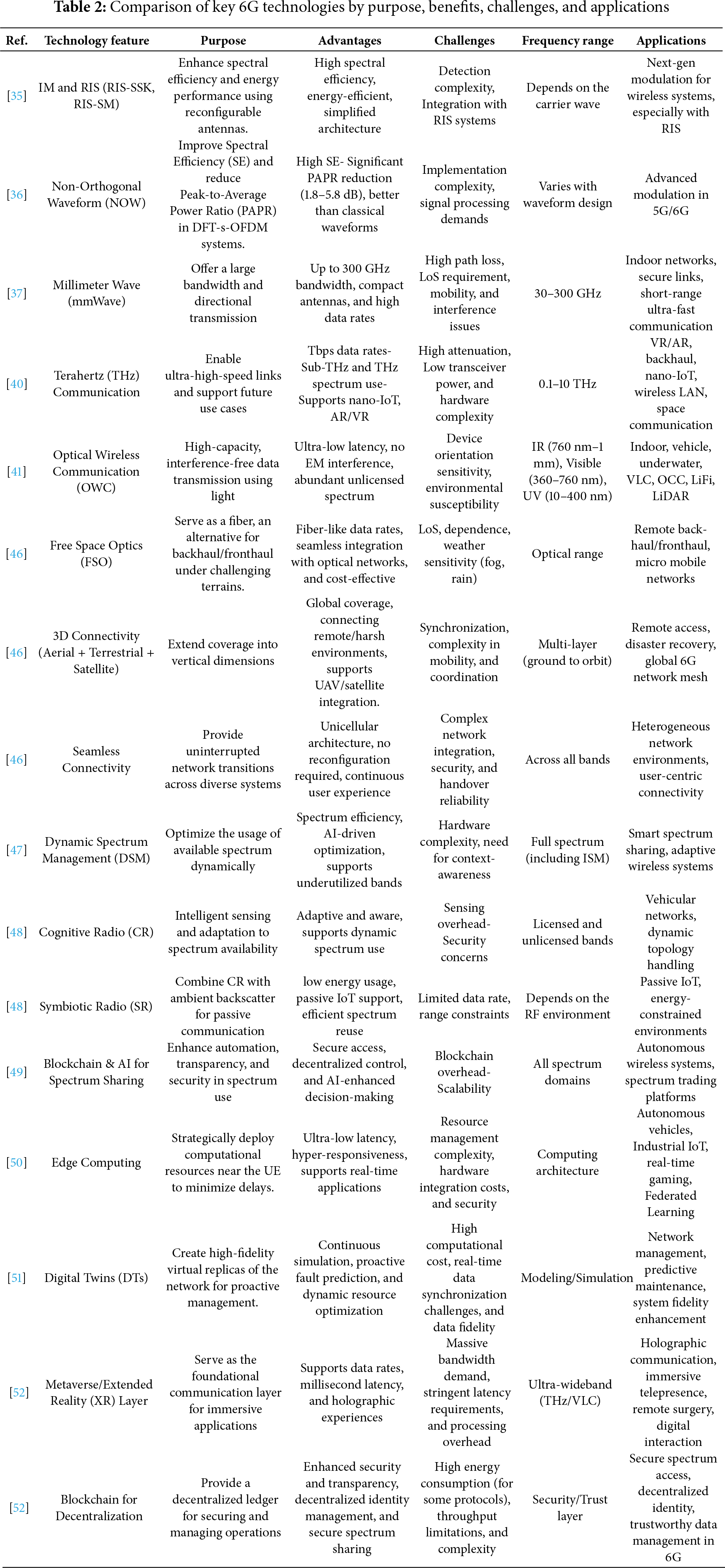

Achieving the promises of 6G wireless communication requires a wide range of cutting-edge technologies. Each of these technologies, whether high-frequency schemes in the mmWave and THz bands, OWC, RIS, or DSM, offers distinct benefits and addresses specific 6G challenges. However, their performance, applicability, and limitations depend heavily on the use case and deployment environment. This section therefore compares the most significant 6G enabling technologies on key metrics, including data rate, spectral efficiency, hardware complexity, coverage, and adaptability. The comparison in Table 2 clarifies how these innovations relate to one another and to the broader vision of global, intelligent, and ultra-fast wireless networks.

4.3 Overview of Traditional and Modern Multiple Access Techniques

Since the primary goal of 6G wireless networks is to reach new levels of connectivity, capacity, and intelligence, innovation at the PL is necessary. Future 6G systems must provide ultra-high data rates, massive device connectivity, improved spectral and energy efficiency, and robust signal handling under diverse and dynamic conditions. A range of sophisticated technologies is being considered to achieve these targets, including new modulation and coding strategies, NOMA, ultra-massive MIMO, and intelligent surfaces. Complementary techniques that improve spectrum utilization and system performance, such as in-band full-duplex communication, orbital angular momentum-based transmission, and Holographic Radio (HR), are also under investigation. Together, these technologies form the basis of a flexible, efficient, and intelligent PL that can support 6G's transformative capabilities.

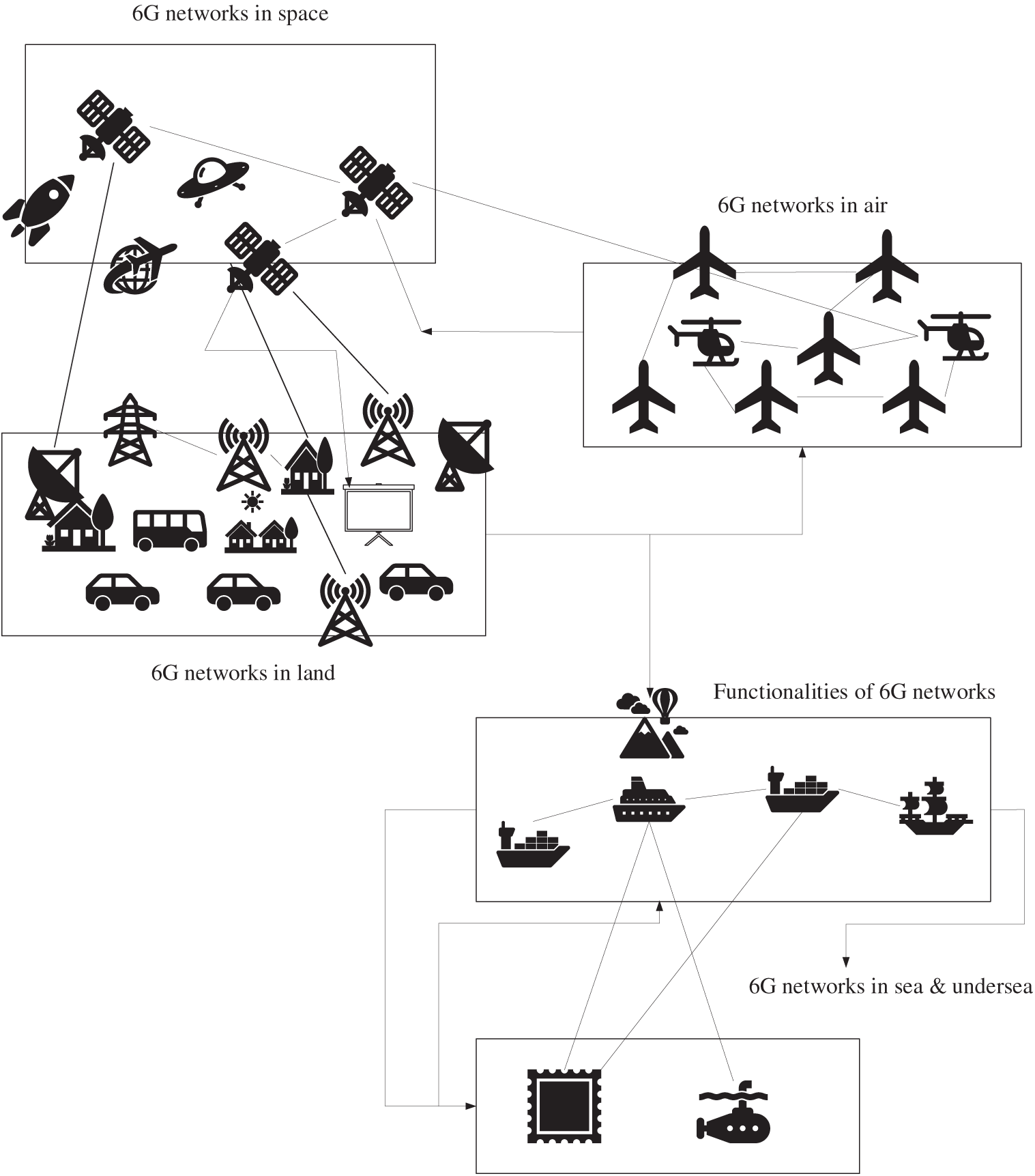

Fig. 6 illustrates the paradigm of ubiquitous 6G-enabled connectivity, highlighting seamless multi-layer networking between terrestrial and non-terrestrial networks (NTNs) for global data communication and sharing. The scenario leverages key 6G technologies, namely RIS, THz communication, and an integrated SDN core, to connect highly diverse environments. These include space networks (such as Low-Earth Orbit (LEO) satellites providing backhaul), airborne networks (UAVs and High-Altitude Platform Stations acting as aerial BSs), terrestrial networks (dense ground infrastructure), maritime networks (sea communication and sensing), and underwater networks (acoustic or specialized RF/optical links). Integration is achieved technically through network slicing and AI-based resource orchestration, which dynamically allocate and control connectivity across these heterogeneous domains. The resulting architecture provides global coverage not only for data sharing but also for real-time sensing, edge processing, and shared situational awareness, effectively turning the world into a single heterogeneous communication and computing platform.

Figure 6: 6G application scenarios across space, air, land, sea, and underwater domains

As 6G aims to enable comprehensive applications, utilize the whole spectrum, ensure global connectivity, support all sensory modalities, provide robust security, and drive complete digitalization, it must serve diverse and sophisticated application environments. Achieving terabit-per-second data rates, supporting massive connectivity, expanding coverage, and ensuring secure communication create new challenges, particularly in waveform and modulation design. Waveform design is a cornerstone of communication system performance and must be tailored to the specific requirements of different 6G use cases. While 5G systems rely primarily on multi-carrier waveforms such as OFDM for their high spectral efficiency, the unique demands of 6G call for more specialized waveform strategies. The higher-frequency bands expected in 6G introduce challenges such as increased path loss and the need for efficient broadband power amplification. To mitigate these issues, recent studies have examined low-PAPR single-carrier waveforms, which allow power amplifiers to operate with less back-off and therefore more efficiently.
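The PAPR advantage of single-carrier-like waveforms can be reproduced with a small simulation comparing OFDM with DFT-spread OFDM (DFT-s-OFDM), the latter also being an uplink option in 5G NR. The sketch assumes QPSK, 64 contiguous (localized) subcarriers, 4x oversampling, and no cyclic prefix or pulse shaping, so the absolute numbers are only indicative.

```python
import numpy as np

def papr_db(x):
    power = np.abs(x) ** 2
    return 10 * np.log10(power.max() / power.mean())

def qpsk(rng, n):
    bits = rng.integers(0, 2, size=(n, 2))
    return ((1 - 2 * bits[:, 0]) + 1j * (1 - 2 * bits[:, 1])) / np.sqrt(2)

def ofdm_time_signal(subcarrier_values, ifft_size):
    """Map values to contiguous subcarriers; zero-padding the IFFT oversamples (interpolates) the time signal."""
    grid = np.zeros(ifft_size, dtype=complex)
    grid[: subcarrier_values.size] = subcarrier_values
    return np.fft.ifft(grid)

def dfts_ofdm_time_signal(symbols, ifft_size):
    """DFT-s-OFDM: spread the symbols with a DFT before localized subcarrier mapping."""
    return ofdm_time_signal(np.fft.fft(symbols), ifft_size)

rng = np.random.default_rng(5)
n_data, ifft_size, n_symbols = 64, 64 * 4, 2000   # 64 occupied subcarriers, 4x oversampling
papr_ofdm = np.array([papr_db(ofdm_time_signal(qpsk(rng, n_data), ifft_size))
                      for _ in range(n_symbols)])
papr_dfts = np.array([papr_db(dfts_ofdm_time_signal(qpsk(rng, n_data), ifft_size))
                      for _ in range(n_symbols)])
print(f"99th-percentile PAPR: OFDM {np.percentile(papr_ofdm, 99):.1f} dB, "
      f"DFT-s-OFDM {np.percentile(papr_dfts, 99):.1f} dB")
```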

In highly mobile environments, orthogonal time-frequency space (OTFS) and other transform-domain waveforms offer advantages because they handle Doppler shifts and delay spreads effectively. Where maximizing data throughput is critical, methods such as overlapped multiplexing and SE-oriented frequency multiplexing have been explored to enhance SE [53]. Additionally, integrating ISAC technologies requires waveforms that support both data transmission and environmental sensing. Modulation also plays a central role in determining the performance and reliability of communication systems. While Quadrature Amplitude Modulation (QAM) remains dominant in 5G NR and Long Term Evolution (LTE), emerging alternatives have attracted attention for their potential advantages. These include group interpolation, asymmetric QAM, multidimensional and specific QAM, and IM, which have demonstrated benefits such as lower PAPR, improved robustness, and enhanced performance under diverse channel conditions.
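The Doppler robustness of OTFS stems from placing symbols on a delay-Doppler grid, where a Doppler shift becomes a simple shift rather than inter-carrier interference. The sketch below assumes a simplified OTFS model with rectangular pulses (so the ISFFT and Heisenberg transform reduce to an inverse DFT along the Doppler axis), an integer Doppler shift, and no delay spread or cyclic prefix.

```python
import numpy as np

def otfs_modulate(x_dd):
    """Simplified OTFS with rectangular pulses: an inverse DFT along the Doppler axis.
    x_dd has shape (M, N): M delay bins by N Doppler bins."""
    s = np.fft.ifft(x_dd, axis=1, norm="ortho")    # column n holds the M samples of time slot n
    return s.reshape(-1, order="F")                # serialize slot after slot

def otfs_demodulate(r, M, N):
    s = r.reshape(M, N, order="F")
    return np.fft.fft(s, axis=1, norm="ortho")

M, N = 16, 8
rng = np.random.default_rng(11)
x_dd = (rng.choice([-1, 1], size=(M, N)) + 1j * rng.choice([-1, 1], size=(M, N))) / np.sqrt(2)
tx = otfs_modulate(x_dd)

# Integer Doppler shift of k0 bins applied to the serialized time-domain signal.
k0 = 3
q = np.arange(M * N)
rx = tx * np.exp(2j * np.pi * k0 * q / (M * N))
y_dd = otfs_demodulate(rx, M, N)

# On the grid, the Doppler shift becomes a cyclic shift of k0 Doppler bins plus a known per-delay phase.
delay_phase = np.exp(2j * np.pi * k0 * np.arange(M) / (M * N))[:, None]
expected = delay_phase * np.roll(x_dd, k0, axis=1)
print("Doppler shift = cyclic shift on the grid:", np.allclose(y_dd, expected))
```

Because the channel acts as a known shift (plus a deterministic phase) on the delay-Doppler grid, equalization can work on a sparse, slowly varying representation of the channel.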

Robust channel coding is crucial for the reliability, throughput, and overall performance of modern communication systems. Error-Correcting Codes (ECCs) have evolved from algebraic to probabilistic constructions, significantly improving performance. The most widely adopted ECCs are Turbo, Low Density Parity Check (LDPC), and polar codes: Turbo codes protect the data channels in 4G LTE, while 5G NR uses LDPC codes for data channels and polar codes for control channels. Despite their different decoding techniques, these codes share a common Bayesian foundation and are viewed as strong contenders for the stringent 6G demands of ultra-low latency and EE. All three can be treated as linear block codes and decoded with Belief Propagation (BP)-type message passing. LDPC codes benefit from sparse parity-check matrices, Turbo decoding applies the Bahl, Cocke, Jelinek, and Raviv (BCJR) algorithm over trellis structures, and polar codes rely on Successive Cancellation (SC) decoding. For short block lengths, where polarization is incomplete, enhanced methods such as SC flip/list and BP flip/list decoding are needed to improve performance [54].
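To make polar encoding and SC decoding concrete, the sketch below implements Arikan's transform and a recursive SC decoder with the min-sum approximation. It assumes an (8, 4) code in natural bit order with information positions {3, 5, 6, 7} (the four most reliable positions for N = 8 under common reliability orderings), BPSK over AWGN, and exact channel LLRs; list and flip extensions are omitted.

```python
import numpy as np

def polar_encode(u):
    """x = u * F^{(x)n} over GF(2), with F = [[1, 0], [1, 1]] and natural (non-bit-reversed) ordering."""
    if u.size == 1:
        return u.copy()
    half = u.size // 2
    left, right = polar_encode(u[:half]), polar_encode(u[half:])
    return np.concatenate([left ^ right, right])

def sc_decode(llr, frozen):
    """Successive cancellation (min-sum f, exact g). Returns (u_hat, re-encoded codeword bits)."""
    if llr.size == 1:
        bit = 0 if frozen[0] or llr[0] >= 0 else 1
        u_hat = np.array([bit], dtype=np.uint8)
        return u_hat, u_hat.copy()
    half = llr.size // 2
    l1, l2 = llr[:half], llr[half:]
    f = np.sign(l1) * np.sign(l2) * np.minimum(np.abs(l1), np.abs(l2))   # LLRs of (left ^ right)
    u_left, v_left = sc_decode(f, frozen[:half])
    g = l2 + (1 - 2 * v_left.astype(float)) * l1                      # LLRs of right, given left
    u_right, v_right = sc_decode(g, frozen[half:])
    return np.concatenate([u_left, u_right]), np.concatenate([v_left ^ v_right, v_right])

N, info_positions = 8, [3, 5, 6, 7]
frozen = np.ones(N, dtype=bool)
frozen[info_positions] = False

rng = np.random.default_rng(2)
u = np.zeros(N, dtype=np.uint8)
u[info_positions] = rng.integers(0, 2, size=len(info_positions))
x = polar_encode(u)

sigma = 0.5                                                   # AWGN standard deviation (assumed)
y = (1 - 2 * x.astype(float)) + sigma * rng.standard_normal(N)  # BPSK: bit 0 -> +1, bit 1 -> -1
llr = 2 * y / sigma ** 2
u_hat, _ = sc_decode(llr, frozen)
print("decoded correctly:", np.array_equal(u_hat, u))
```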

Although their decoding principles are related, encoding improvements, such as optimized generator polynomials, have led to simpler factor graphs and more energy-efficient hardware implementations. For instance, 4G LTE decoders have used a partitioned Max-log-BCJR Turbo decoding scheme, whereas 5G NR implementations employ LDPC decoding with adaptive min-sum belief propagation and polar decoding via node-oriented successive cancellation. Moving toward a unified circuit-level ECC architecture is critical for 6G. Because 6G requires ultra-reliability and minimal latency, shorter code lengths are expected, which reduces the efficiency of traditional decoders owing to weaker polarization and randomness. Near-maximum-likelihood alternatives, including ordered-statistics decoding and guessing random additive noise decoding (GRAND), offer better performance at these short lengths. In addition, new coding strategies, such as polarization-adjusted convolutional codes and 2D spatiotemporal coding for massive MIMO, can deliver better reliability and throughput with low decoding delay, making them promising candidates for next-generation systems [55].
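GRAND is noise-centric: instead of decoding the codeword directly, it guesses noise patterns in decreasing order of likelihood and stops at the first guess that yields a valid codeword, so it needs only a codebook-membership test. The sketch below is a basic hard-decision version for a binary symmetric channel, where likelihood decreases with Hamming weight; the (7, 4) Hamming code is a toy stand-in for the short codes discussed in the text.

```python
import itertools
import numpy as np

# Parity-check matrix of the (7, 4) Hamming code; column j is the binary form of j + 1 (LSB in the top row).
H = np.array([[1, 0, 1, 0, 1, 0, 1],
              [0, 1, 1, 0, 0, 1, 1],
              [0, 0, 0, 1, 1, 1, 1]], dtype=np.uint8)

def grand_decode(received, H, max_weight=3):
    """Hard-decision GRAND: test noise patterns in order of increasing Hamming weight and return
    the first candidate with a zero syndrome."""
    n = received.size
    for weight in range(max_weight + 1):
        for positions in itertools.combinations(range(n), weight):
            noise_guess = np.zeros(n, dtype=np.uint8)
            noise_guess[list(positions)] = 1
            candidate = received ^ noise_guess
            if not np.any(H @ candidate % 2):      # zero syndrome: candidate is a codeword
                return candidate, noise_guess
    return None, None                              # give up after max_weight (declare a failure)

codeword = np.array([1, 1, 1, 0, 0, 0, 0], dtype=np.uint8)   # satisfies H @ codeword = 0 (mod 2)
received = codeword.copy()
received[4] ^= 1                                            # single bit flip introduced by the channel
decoded, guessed_noise = grand_decode(received, H)
print(decoded, guessed_noise)
```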

The evolution from OFDMA in LTE to the more flexible OFDM-based access of 5G NR reflects a continued reliance on Orthogonal Multiple Access (OMA). As 6G networks aim to support far higher connection densities than 5G, Non-Orthogonal Multiple Access (NOMA) is considered a promising solution to critical requirements, including ultra-low latency, cost-effectiveness, large-scale connectivity, and robust reliability [56]. First proposed by Nippon Telegraph and Telephone (NTT), NOMA departs from conventional OMA, which separates user terminals in time, frequency, or code, by allowing multiple users to share the same radio resources. It deliberately introduces controlled interference during transmission and relies on Successive Interference Cancellation (SIC) at the receiver to separate the users' signals. This approach improves SE and system capacity, reduces latency, and decreases dependency on CSI, at the cost of higher system complexity.
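The two-user downlink case shows how SIC lets power-domain NOMA share one resource block. In the sketch below, each SNR stands for transmit power times channel gain over noise, the power split is fixed rather than optimized, and the OMA baseline divides time (or bandwidth) equally; all numbers are illustrative assumptions.

```python
import math

def noma_two_user_rates(snr_strong, snr_weak, power_frac_strong):
    """Downlink power-domain NOMA with SIC (noise power normalized to 1).
    The weak user treats the strong user's signal as noise; the strong user first decodes and
    cancels the weak user's signal, then decodes its own signal free of interference."""
    a1, a2 = power_frac_strong, 1.0 - power_frac_strong
    rate_weak = math.log2(1 + a2 * snr_weak / (a1 * snr_weak + 1))
    # SIC feasibility: the strong user must also be able to decode the weak user's message.
    rate_weak_at_strong = math.log2(1 + a2 * snr_strong / (a1 * snr_strong + 1))
    if rate_weak_at_strong < rate_weak:
        raise ValueError("SIC ordering requires snr_strong >= snr_weak")
    rate_strong = math.log2(1 + a1 * snr_strong)
    return rate_strong, rate_weak

def oma_two_user_rates(snr_strong, snr_weak):
    """Orthogonal baseline: each user gets half of the time (or bandwidth) at full power."""
    return 0.5 * math.log2(1 + snr_strong), 0.5 * math.log2(1 + snr_weak)

snr_strong, snr_weak = 100.0, 1.0   # 20 dB and 0 dB (assumed)
noma = noma_two_user_rates(snr_strong, snr_weak, power_frac_strong=0.2)
oma = oma_two_user_rates(snr_strong, snr_weak)
print(f"NOMA rates (bit/s/Hz): strong {noma[0]:.2f}, weak {noma[1]:.2f}")
print(f"OMA rates (bit/s/Hz):  strong {oma[0]:.2f}, weak {oma[1]:.2f}")
```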

NOMA schemes include power-domain NOMA, code-domain NOMA (such as SCMA), and interleave-based NOMA. Integrating NOMA with other emerging wireless technologies has shown promising results; these include mmWave communications, massive MIMO, VLC, EH, PL security, CR, cooperative communication, and wireless caching. For example, research has combined NOMA with artificial noise in massive MIMO-NOMA systems to enhance secrecy and EE. Similarly, incorporating RIS into NOMA frameworks improves passive beamforming and deployment efficiency, and NOMA-assisted AmBC has been studied as a means of enhancing both spectral efficiency and EE while maintaining reliability and security. Despite significant academic and industrial interest, NOMA was not fully adopted in 5G networks owing to technical limitations. For 6G systems, effectively leveraging NOMA will require simpler yet robust multi-user interference suppression, strong security and reliability provisions, and a standardized NOMA framework [57].

Ultra-massive MIMO, building on the foundational work by Marzetta in 2009, extends the capabilities of massive MIMO—a cornerstone of 5G known for its SE gains. As 6G evolves, ultra-massive MIMO envisions deploying hundreds to thousands of antennas to further enhance EE, network flexibility, coverage, and precise positioning, particularly across broader frequency bands [58]. This technology promises significant improvements, such as advanced multiplexing, interference mitigation, energy savings, and expanded support for NTNs. Its superior spatial resolution enables accurate 3D positioning, particularly in complex environments. However, near-field and wideband effects, which introduce sparsity in the angle and delay domains, pose new challenges. To address them, research is actively progressing in channel modeling, beam management, codebook design, and beam training. As ultra-massive MIMO expands into high-frequency bands, such as THz and mmWave, ongoing investigations include modulation schemes, channel characteristics, and circuit design. An emerging trend is the integration of RIS, which offers passive alternatives to active antennas, thereby improving coverage, capacity, and energy efficiency. Additionally, distributed ultra-massive antenna systems—where antenna elements are spread across large areas—can maintain high SE, reduce power consumption, and provide consistent service quality [59].
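The near-field effects mentioned above arise because large apertures at short wavelengths push the near-field boundary far from the array. A common rule of thumb for this boundary is the Rayleigh distance d_R = 2D²/λ; the sketch below evaluates it for an assumed 0.5 m aperture at several carrier frequencies.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def rayleigh_distance_m(aperture_m, frequency_hz):
    """Common near-field/far-field boundary: d_R = 2 * D**2 / wavelength."""
    wavelength = SPEED_OF_LIGHT / frequency_hz
    return 2 * aperture_m ** 2 / wavelength

aperture = 0.5   # array aperture in meters (assumed)
for f in (3.5e9, 28e9, 100e9, 300e9):
    print(f"{f / 1e9:6.1f} GHz: near-field region extends to about "
          f"{rayleigh_distance_m(aperture, f):7.1f} m")
```

Users closer than d_R see spherical rather than planar wavefronts, so far-field (angle-only) codebooks and channel models lose accuracy, which motivates the near-field beam training research mentioned above.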

AI is increasingly being used to enhance ultra-massive MIMO operations, including beamforming, channel estimation, and user identification. Despite its potential, real-time deployment and data scarcity remain barriers. Looking ahead, ultra-massive MIMO is also being explored for its applicability in integrated space-air-ground-sea networks, including skywave, underwater acoustic, and satellite communications, broadening its impact beyond terrestrial wireless systems.

Coordinated Multi-Point (CoMP) is a communication strategy in which multiple access points cooperate to serve mobile users, effectively forming a network MIMO system. This approach extends spatial diversity beyond what conventional physical-layer MIMO techniques achieve. First introduced in 3rd Generation Partnership Project (3GPP) Release 11 for LTE-Advanced, CoMP has become increasingly important in 5G, especially for mitigating downlink inter-cell interference and supporting joint uplink user detection [60]. In 6G, as networks expand into spectrum above 10 GHz, CoMP becomes even more critical because of challenges such as signal blockage. By exploiting diversity at the BS level, CoMP complements antenna-level spatial techniques and enhances system reliability.

CoMP also supports the evolution toward cell-free Radio Access Networks (RANs), in which a UE can connect simultaneously to multiple BSs of the same radio access technology. In this architecture [61], a central processing unit manages coherent transmission across many distributed, single-antenna APs. Recent studies suggest that cell-free massive MIMO can outperform traditional cellular MIMO in fronthaul efficiency and overall system performance. Despite these advantages, CoMP faces key implementation challenges. Effective deployment depends on how BSs are clustered, which remains an active research area. Tight synchronization among cooperating BSs is also essential to prevent inter-symbol and inter-carrier interference, and coherent channel estimation and equalization across multiple BSs further increase computational demands.
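Centralized joint transmission in CoMP and cell-free networks can be illustrated with zero-forcing (ZF) precoding computed at the central processing unit. The sketch below assumes perfect CSI, ideal synchronization among the APs (the very requirement identified above as challenging), single-antenna APs and users, and a total rather than per-AP power constraint.

```python
import numpy as np

def zero_forcing_precoder(H, total_power):
    """Centralized ZF precoder W = H^H (H H^H)^{-1}, scaled to a total (not per-AP) power budget.
    H has one row per single-antenna user and one column per cooperating single-antenna AP."""
    W = H.conj().T @ np.linalg.inv(H @ H.conj().T)
    return W * np.sqrt(total_power) / np.linalg.norm(W, "fro")

rng = np.random.default_rng(4)
n_users, n_aps = 3, 6
H = (rng.standard_normal((n_users, n_aps)) + 1j * rng.standard_normal((n_users, n_aps))) / np.sqrt(2)
W = zero_forcing_precoder(H, total_power=1.0)

effective = H @ W                        # entry (k, j): gain from user j's stream to user k
interference = np.abs(effective - np.diag(np.diag(effective)))
print("max inter-user leakage:", interference.max())
```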

In-Band Full-Duplex (IBFD) is an emerging wireless communication technique that enables simultaneous transmission and reception on the same frequency band, offering a potential twofold increase in SE and greater flexibility in network access compared to conventional Frequency-Division Duplex (FDD) and Time-Division Duplex (TDD) systems. Although its foundational concept dates back to continuous-wave radar systems, the practical application of IBFD has gained momentum only in recent years, thanks to advances in interference cancellation.

Research efforts [62] now address a range of IBFD use cases, including relay communication, multi-node full-duplex systems, and joint radar-communication platforms, which promise gains in throughput, sensing, and simultaneous connectivity. However, a significant hurdle remains: the self-interference that arises when a device transmits and receives on the same channel. To address it, researchers are developing self-interference cancellation techniques, both electronic and optical, particularly for sub-6 GHz applications. As bandwidth increases, the problem becomes harder, especially in the THz and OWC bands targeted by 6G. Solutions under investigation include shared antenna structures, iterative interference suppression, and theoretical modeling of full-duplex systems. Notably, optical self-interference cancellation shows promise in high-frequency environments owing to its broad bandwidth and precision. These developments position IBFD as a transformative technology for future wireless systems, with ongoing research aimed at overcoming the technical barriers to real-world deployment.
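Digital self-interference cancellation, one stage of the cancellation chain discussed above, can be sketched as least-squares estimation of the self-interference (SI) channel from the device's own known transmit samples, followed by subtraction. The sketch assumes a linear, time-invariant multipath SI channel, no power-amplifier nonlinearity or phase noise, and no preceding analog cancellation; the SI level and sample counts are illustrative.

```python
import numpy as np

def complex_gaussian(rng, shape, var=1.0):
    return np.sqrt(var / 2) * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))

def delayed_copies(x, n_taps):
    """Columns are x delayed by 0..n_taps-1 samples, so X @ h is the linear convolution of x with h."""
    return np.column_stack([np.concatenate([np.zeros(k, dtype=complex), x[: x.size - k]])
                            for k in range(n_taps)])

rng = np.random.default_rng(9)
n, n_taps = 4000, 3
x_own = complex_gaussian(rng, n)                    # our own transmitted samples (known locally)
h_si = 100.0 * complex_gaussian(rng, n_taps)        # strong 3-tap SI channel (assumed)
desired = complex_gaussian(rng, n)                  # signal from the remote node
noise = complex_gaussian(rng, n, var=0.01)

X = delayed_copies(x_own, n_taps)
y = X @ h_si + desired + noise

h_hat, *_ = np.linalg.lstsq(X, y, rcond=None)       # least-squares estimate of the SI channel
y_clean = y - X @ h_hat                             # digital cancellation

si_power = np.mean(np.abs(X @ h_si) ** 2)
residual_power = np.mean(np.abs(X @ (h_si - h_hat)) ** 2)
suppression_db = 10 * np.log10(si_power / residual_power)
print(f"digital SI suppression: {suppression_db:.1f} dB")
```

In practical full-duplex radios, analog or RF cancellation usually precedes this step so that the strong SI does not saturate the analog-to-digital converter.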

Orbital Angular Momentum (OAM), a natural property of EW, introduces a new dimension to wireless communication by carrying information on helical (spiral-phase) wavefronts. Mutually orthogonal OAM modes can be transmitted simultaneously over the same frequency band using distinct antennas, significantly enhancing SE and channel capacity without additional bandwidth. Initially explored in optical systems, OAM has since expanded into the radio, acoustic, mmWave, and THz domains. Its potential is evident in applications such as FSO, optical fiber links, and acoustic channels, where it supports high-data-rate transmission. Combining OAM with MIMO architectures further boosts capacity; the study in [63] proposed two OAM-based MIMO frameworks that achieve improved throughput across diverse scenarios. However, practical deployment faces challenges such as beam divergence, alignment sensitivity, and limited performance in NLoS environments. Despite these obstacles, OAM has shown promise in emerging areas such as radar and microwave sensing, positioning it as a promising enabler for 6G. Real-world adoption will require improved beam control, refined system models, and progress on commercialization barriers.
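One common way to generate OAM radio beams is a uniform circular array (UCA) whose element phases advance by 2πℓ/N for mode ℓ. The sketch below shows why such modes can share one frequency band: under the idealized assumption of two perfectly aligned, identical coaxial UCAs, the channel matrix is circulant, and the OAM mode vectors are its eigenvectors. Beam divergence, misalignment, and NLoS effects, the practical challenges noted above, are not modeled.

```python
import numpy as np

def oam_mode_weights(n_elements, mode):
    """UCA excitation for OAM mode `mode`: the phase advances by 2*pi*mode/n_elements per element."""
    m = np.arange(n_elements)
    return np.exp(1j * 2 * np.pi * mode * m / n_elements) / np.sqrt(n_elements)

n_elements = 8
modes = np.arange(-3, 5)                                  # 8 distinct modes: -3 ... 4
A = np.column_stack([oam_mode_weights(n_elements, l) for l in modes])

# Idealized aligned LoS link between identical coaxial UCAs: the channel depends only on
# (receive index - transmit index) mod n, so the matrix is circulant (assumed geometry).
rng = np.random.default_rng(8)
c = rng.standard_normal(n_elements) + 1j * rng.standard_normal(n_elements)
H = np.array([[c[(i - j) % n_elements] for j in range(n_elements)] for i in range(n_elements)])

symbols = (rng.choice([-1, 1], modes.size) + 1j * rng.choice([-1, 1], modes.size)) / np.sqrt(2)
tx = A @ symbols                                         # all modes superimposed on one frequency
rx = H @ tx

# Each mode vector is an eigenvector of the circulant channel, so projecting onto it and
# dividing by its eigenvalue separates the streams without inter-mode interference.
eigenvalues = np.array([A[:, k].conj() @ H @ A[:, k] for k in range(modes.size)])
recovered = (A.conj().T @ rx) / eigenvalues
print("streams recovered:", np.allclose(recovered, symbols))
```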

As wireless communication moves beyond 10 GHz to meet growing data demands, challenges such as increased signal attenuation, reduced diffraction, and heightened interference emerge. Massive MIMO with active beamforming in the mmWave spectrum offers a partial solution, but it is power-intensive and complex. Researchers are therefore exploring alternatives such as RIS, which consists of programmable metasurfaces that passively manipulate EW by adjusting their reflection properties. Deploying RIS on surfaces such as walls and ceilings turns wireless environments into smart radio environments, improving signal strength with lower energy consumption than active massive MIMO systems. Unlike conventional massive MIMO arrays tailored to specific radio technologies, RIS designs have been proposed across a broad range of frequencies, including both RF and optical bands, making RIS an economical option for ultra-wideband 6G networks.

Despite its advantages, RIS implementation faces hurdles, including accurate near-field channel modeling and the complexity of integrating devices into third-party infrastructure not owned by mobile network operators. Standardized frameworks, interface agreements, and communication protocols are therefore crucial for widespread deployment across public and private domains [64]. RIS also shows promise in aerial networks. UAVs, which are becoming integral to 6G networks because of their mobility and coverage capabilities, often suffer from THz propagation impairments caused by motion and obstructions. Recent studies have explored AI-driven solutions, specifically attention-based models, for predicting 3D RIS beam configurations in UAV-assisted networks; these models have outperformed long short-term memory (LSTM) and gated recurrent unit (GRU) architectures.

HR introduces a novel approach to wireless communication by leveraging controlled interference from EW to shape and reconstruct the EW environment dynamically. Using spatially continuous microwave apertures enables fine-grained spatial multiplexing, achieving ultra-high spectral and EE, and supporting massive traffic loads and high-capacity demands. Holographic MIMO, a realization of this concept, represents the theoretical limit of multi-antenna systems confined to a finite surface. Rather than mitigating interference, HR exploits it as a constructive tool, enabling applications such as high-precision localization, wireless energy transfer, industrial automation, and Massive IoT (MIoT) connectivity. Additionally, it reduces the need for conventional CSI by capturing RF spectral holograms of transmitters via holographic interference.

Two primary methods are being explored for practical deployment: reconfigurable holographic surfaces, which use densely arranged subwavelength elements, and tightly integrated broadband antenna arrays driven by high-power uni-traveling-carrier photodetectors [65]. Both aim to deliver high performance while reducing power consumption and cost. Despite its promise, HR still faces notable challenges, including the absence of robust theoretical models, the need for accurate and reliable channel modeling, and the difficulty of processing vast volumes of data with low latency and high reliability. Addressing these hurdles is critical to realizing the full potential of HR in future 6G networks.

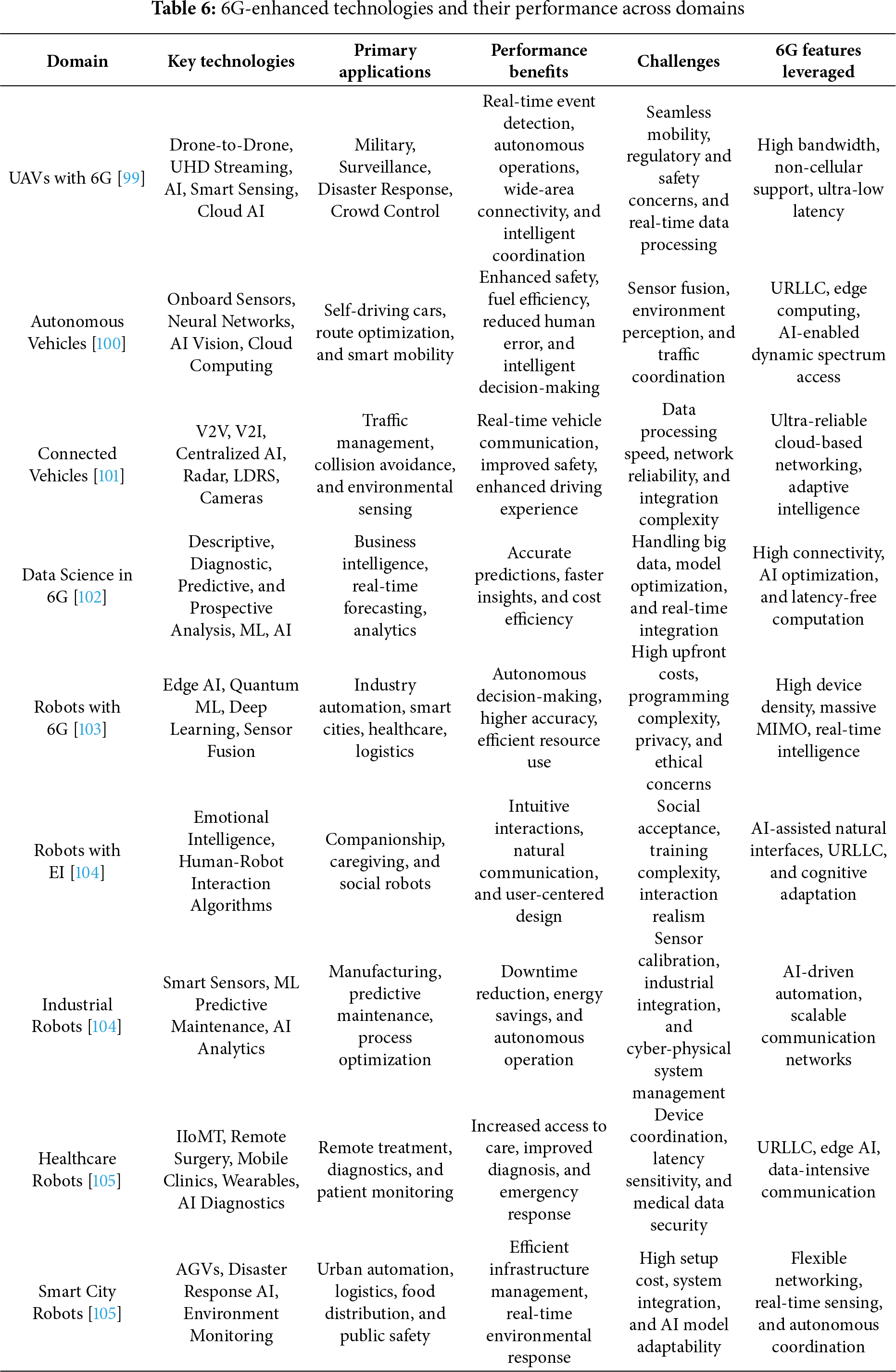

Physical-layer multicasting is a transmission technique in which a single coded bit stream carries multiple unicast messages for different users. In contrast to conventional multicasting (such as radio or television broadcasting), where every user receives the same content, physical-layer multicasting lets each user extract only the part of the stream intended for it. The approach also differs from OMA, where individual messages are sent over separate time, frequency, or code resources. Its main advantage is reliability: jointly encoding several medium-sized packets into a single longer codeword yields a coding gain, since longer codes operate closer to capacity. This is beneficial in NTNs such as geostationary satellite systems (e.g., DVB-S2X), where a single coded frame is shared by multiple users in each spot beam, thereby implementing a physical-layer multigroup multicast scheme [66].

Low latency is another advantage, as users can decode their messages in parallel rather than waiting for their own time slots, as in TDMA. Transmitting a single stream also avoids inter-user interference, improving overall link quality. However, physical-layer multicasting has drawbacks, the most notable being spectral inefficiency: the multicast stream must be decodable by every user regardless of channel conditions, so its rate is dictated by the weakest (e.g., cell-edge) user, and the extra capacity of stronger users is wasted.
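The weakest-user bottleneck can be quantified directly. The sketch below assumes three users with illustrative SNRs and capacity-achieving coding, and computes the rate at which a single jointly encoded stream can be decoded by every user.

```python
import math

user_snrs_db = [20.0, 10.0, 0.0]                     # strong, medium, and cell-edge user (assumed)
user_snrs = [10 ** (s / 10) for s in user_snrs_db]

# Every user must decode the whole jointly encoded stream, so the common rate is set by the weakest user.
multicast_rate = math.log2(1 + min(user_snrs))
individual_capacity = [math.log2(1 + s) for s in user_snrs]
unused = [c - multicast_rate for c in individual_capacity]

for snr_db, c, u in zip(user_snrs_db, individual_capacity, unused):
    print(f"SNR {snr_db:4.0f} dB: link supports {c:.2f} bit/s/Hz, {u:.2f} bit/s/Hz left unused")
print(f"common multicast rate: {multicast_rate:.2f} bit/s/Hz")
```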

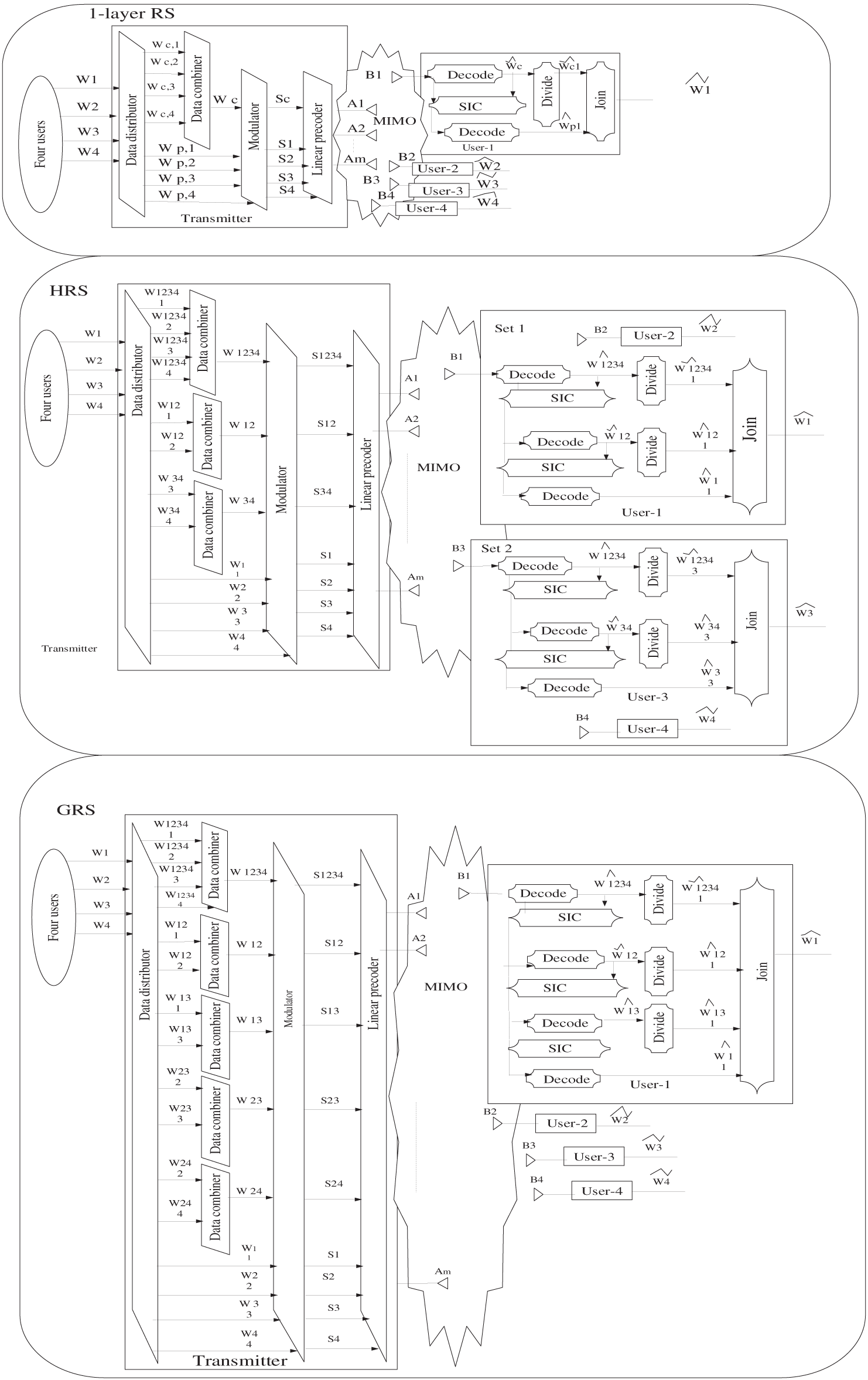

RSMA is a versatile and robust non-orthogonal transmission scheme for multi-antenna wireless networks that handles diverse network loads, user distributions, and imperfect channel knowledge. RSMA splits user messages into common and private parts, enabling flexible interference management by partially decoding interference and treating the remainder as noise. Variants such as one-layer rate splitting (1-layer RS), Hierarchical RS (HRS), and Generalized RS (GRS) offer increasing flexibility and performance, with GRS supporting layered message splitting and user grouping. RSMA generalizes, and can outperform, OMA, Space Division Multiple Access (SDMA), NOMA, and multicasting by adapting to network conditions, offering superior spectral efficiency and EE [67]. It also enhances reliability, fairness, security, coverage, and latency, especially under imperfect CSI. Its challenges include increased receiver complexity due to SIC, higher encoding and signaling overhead, and the need for complex joint optimization. Nevertheless, RSMA remains a leading candidate for 6G access owing to its performance and adaptability.
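The common/private structure of 1-layer RS can be evaluated with a short sum-rate calculation. The sketch below assumes a two-antenna transmitter, two users with highly correlated real-valued channels, fixed maximum-ratio-transmission (MRT) private precoders, and a fixed common-stream direction, and it sweeps only the power fraction assigned to the common stream. A fraction of zero recovers SDMA with interference treated as noise, and a fraction of one recovers pure multicasting; a complete RSMA design would jointly optimize the precoders and the split of the common rate, as noted in the text.

```python
import numpy as np

def rs_sum_rate(H, p_common, P_private, noise_power=1.0):
    """1-layer RS sum rate. Row k of H is user k's channel (y_k = H[k] @ x + n_k);
    column k of P_private is user k's private precoder."""
    private_gains = np.abs(H @ P_private) ** 2            # [k, j] = |h_k p_j|^2
    total_private = private_gains.sum(axis=1)
    # Common stream: decoded first by every user, with all private streams treated as noise.
    sinr_common = np.abs(H @ p_common) ** 2 / (total_private + noise_power)
    rate_common = np.log2(1 + sinr_common).min()          # must be decodable by all users
    # Private streams: decoded after SIC removes the common stream.
    own = np.diag(private_gains)
    sinr_private = own / (total_private - own + noise_power)
    return rate_common + np.log2(1 + sinr_private).sum()

total_power = 10.0                                          # 10 dB transmit SNR (assumed)
theta = 0.3                                                 # small angle: highly correlated users (assumed)
H = np.array([[1.0, 0.0], [np.cos(theta), np.sin(theta)]])
mrt = (H.conj() / np.linalg.norm(H, axis=1, keepdims=True)).T   # unit-norm MRT private directions
common_dir = H.conj().sum(axis=0) / np.linalg.norm(H.sum(axis=0))

def sum_rate_for_split(t):
    """Fraction t of the power goes to the common stream; the rest is split equally between private streams."""
    p_c = np.sqrt(t * total_power) * common_dir
    P_p = np.sqrt((1 - t) * total_power / 2) * mrt
    return rs_sum_rate(H, p_c, P_p)

splits = np.linspace(0.0, 1.0, 101)
rates = np.array([sum_rate_for_split(t) for t in splits])
best = splits[np.argmax(rates)]
print(f"SDMA (t=0): {rates[0]:.2f}, multicast only (t=1): {rates[-1]:.2f}, "
      f"best RS (t={best:.2f}): {rates.max():.2f} bit/s/Hz")
```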

Fig. 7 shows three rate-splitting strategies for MIMO systems: 1-layer RS, HRS, and GRS. They differ in how the transmitter splits and encodes user messages into common and private streams, which determines how interference is controlled. In 1-layer RS, a single common stream is transmitted to all users, while private streams carry user-specific data. Much as in NOMA, each user first decodes the common stream and removes it using SIC, and then decodes its own private stream, treating the remaining private streams as noise. HRS adds flexibility by introducing intermediate common messages intended for groups of users, which requires multi-stage SIC and allows a finer-grained balance between decoding interference and treating it as noise. GRS is the most general approach, systematically dividing each user's message into several common components, each decoded by a predetermined group of users, and a private component. GRS maximizes the available degrees of freedom but requires complex multi-layer SIC at the receivers, with precisely specified user groupings and decoding orders. This can considerably reduce inter-user interference, improving overall spectral efficiency and sum-rate performance.

Figure 7: Transmission frameworks for 1-layer RS, HRS, and GRS strategies in MIMO

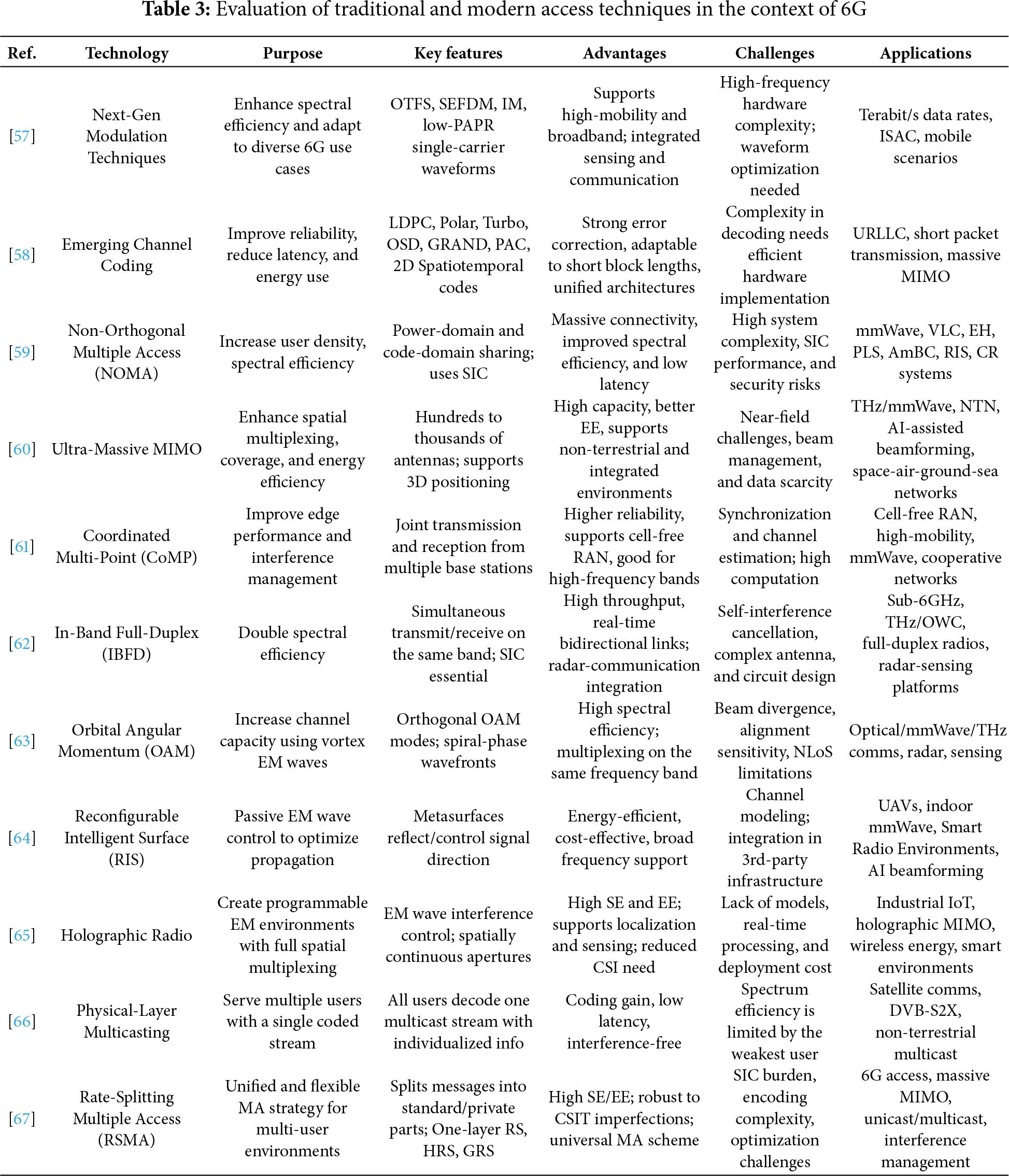
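The 1-layer RS decoding order described above (common stream first, SIC, then the private stream) can be sketched at the symbol level. This is an illustrative model, not the receiver of any specific work: it assumes BPSK symbols, a real-valued channel, hypothetical amplitudes with the common stream received strongest, residual interference from another user's private stream treated as noise, and Gaussian noise.

```python
import random

def bpsk(bit):
    """Map bit 0 -> -1.0 and bit 1 -> +1.0."""
    return 1.0 if bit else -1.0

def detect(y):
    """Hard BPSK decision."""
    return 1 if y >= 0 else 0

rng = random.Random(1)
A_COMMON, A_PRIVATE, A_INTERF, NOISE_STD = 2.0, 0.5, 0.2, 0.1  # hypothetical values
n = 1000
errors_common = errors_private = 0
for _ in range(n):
    b_common, b_private, b_other = (rng.randint(0, 1) for _ in range(3))
    y = (A_COMMON * bpsk(b_common) + A_PRIVATE * bpsk(b_private)
         + A_INTERF * bpsk(b_other) + rng.gauss(0.0, NOISE_STD))
    # Step 1: decode the common stream, treating all private streams as noise.
    b_common_hat = detect(y)
    # Step 2: SIC, i.e. subtract the re-modulated common signal.
    residual = y - A_COMMON * bpsk(b_common_hat)
    # Step 3: decode the own private stream, treating the other user's stream as noise.
    b_private_hat = detect(residual)
    errors_common += b_common_hat != b_common
    errors_private += b_private_hat != b_private
print(f"common BER {errors_common / n:.4f}, private BER {errors_private / n:.4f}")
```

If the common stream were decoded incorrectly, the subtraction in step 2 would add interference instead of removing it; this error propagation is one source of the SIC complexity and robustness concerns noted for RSMA.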

4.4 Comparison of Multiple Access Techniques for 6G

As 6G continues to push the boundaries of connectivity, efficiency, and intelligence, MA schemes are becoming increasingly central. To clarify how traditional and emerging MA strategies can help meet the performance objectives of 6G, Table 3 compares them, summarizing the main features, advantages, and shortcomings of OMA, NOMA, PL multicasting, RSMA, and complementary frameworks, including IBFD, ultra-massive MIMO, and HR. The methods are analyzed in terms of SE, EE, implementation complexity, latency, robustness, and suitability for dense, high-mobility, or heterogeneous networks. The comparison highlights both the merits of next-generation solutions, such as RSMA and OAM, and the weaknesses of legacy approaches with respect to the essential requirements of a 6G access architecture.

5 AI Techniques in 6G Wireless Communication Systems

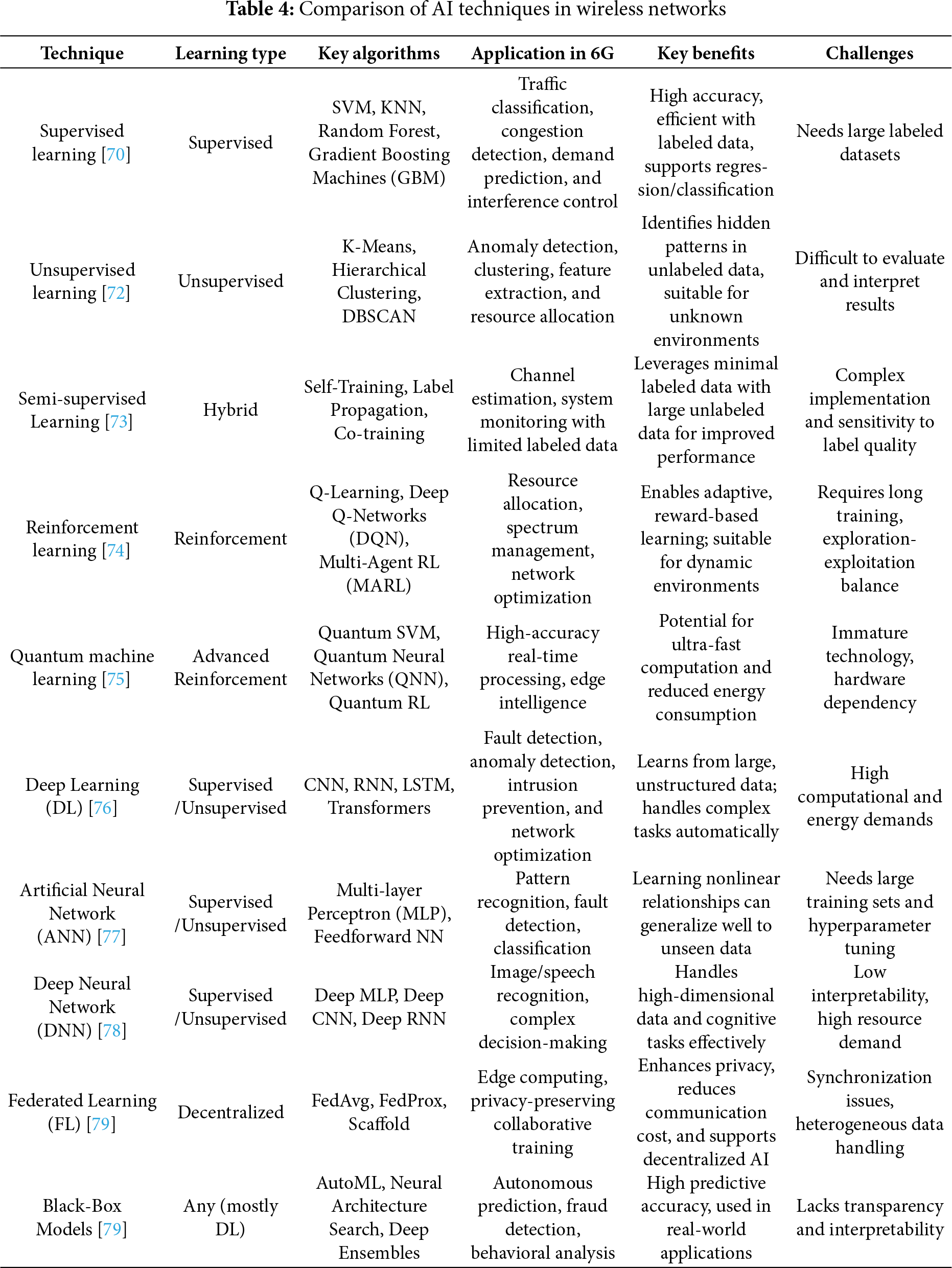

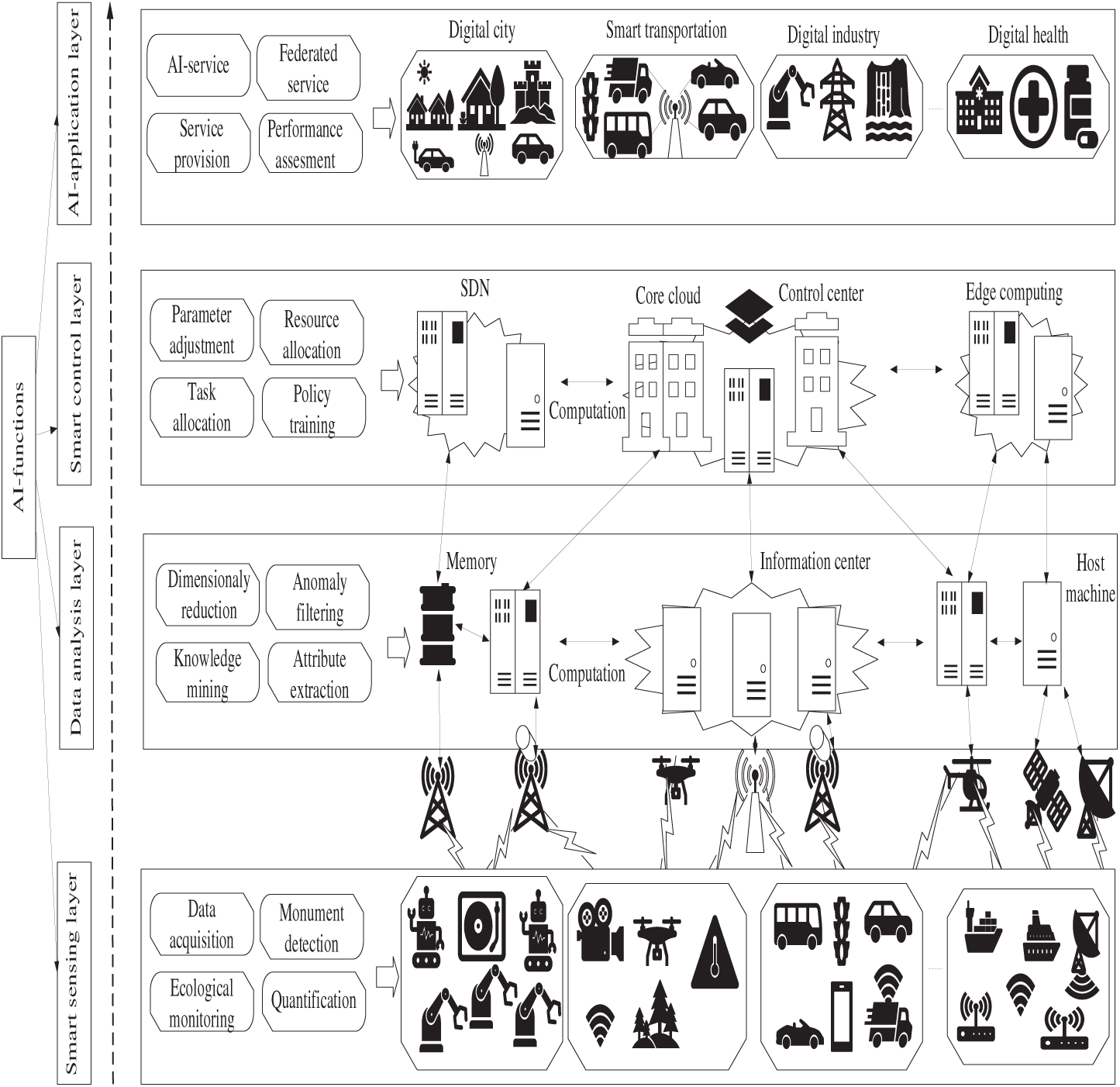

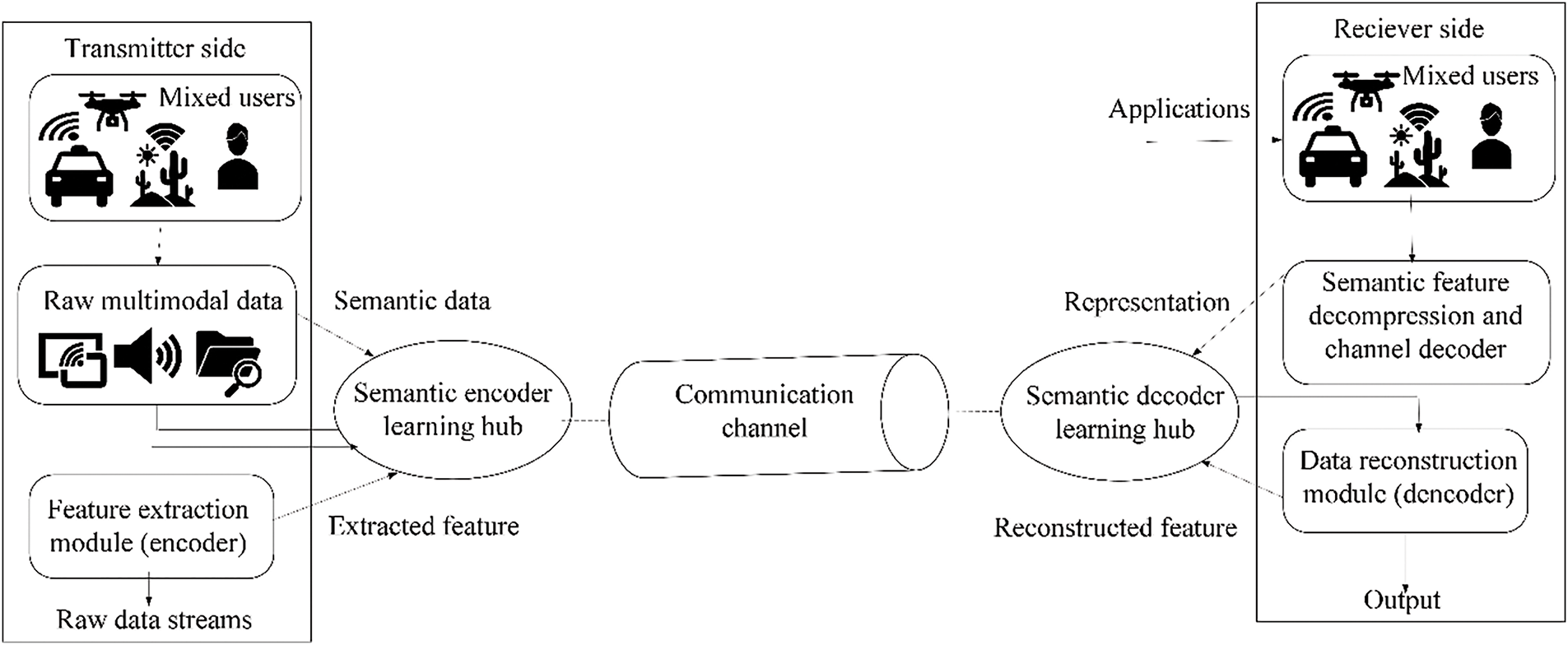

Future wireless networks are evolving into intelligent platforms that integrate communication, sensing, computing, intelligence, and storage to deliver personalized, adaptive services. AI is central to this transformation, offering powerful tools for managing massive, diverse data (expected to reach 491 exabytes daily by 2025 [68]) and enabling real-time decision-making, predictive maintenance, and dynamic resource optimization. Unlike conventional systems with fixed rules, AI learns from data to adapt to changing environments, improve reliability, and jointly optimize multiple network modules. It enhances performance in both the RAN (e.g., AI-driven scheduling and energy-saving handoffs) and the core network (e.g., intelligent QoS, traffic management, and edge computing). Standardization efforts by the International Telecommunication Union (ITU), notably within its International Mobile Telecommunications (IMT)-2030 framework, and by 3GPP are advancing AI integration, aiming to unlock its full potential in wireless communication infrastructure and services. Key AI technologies include ML (SL, USL, and RL), DL, optimization, game theory, and meta-heuristics, with ML and DL being the most widely applied in wireless networks.

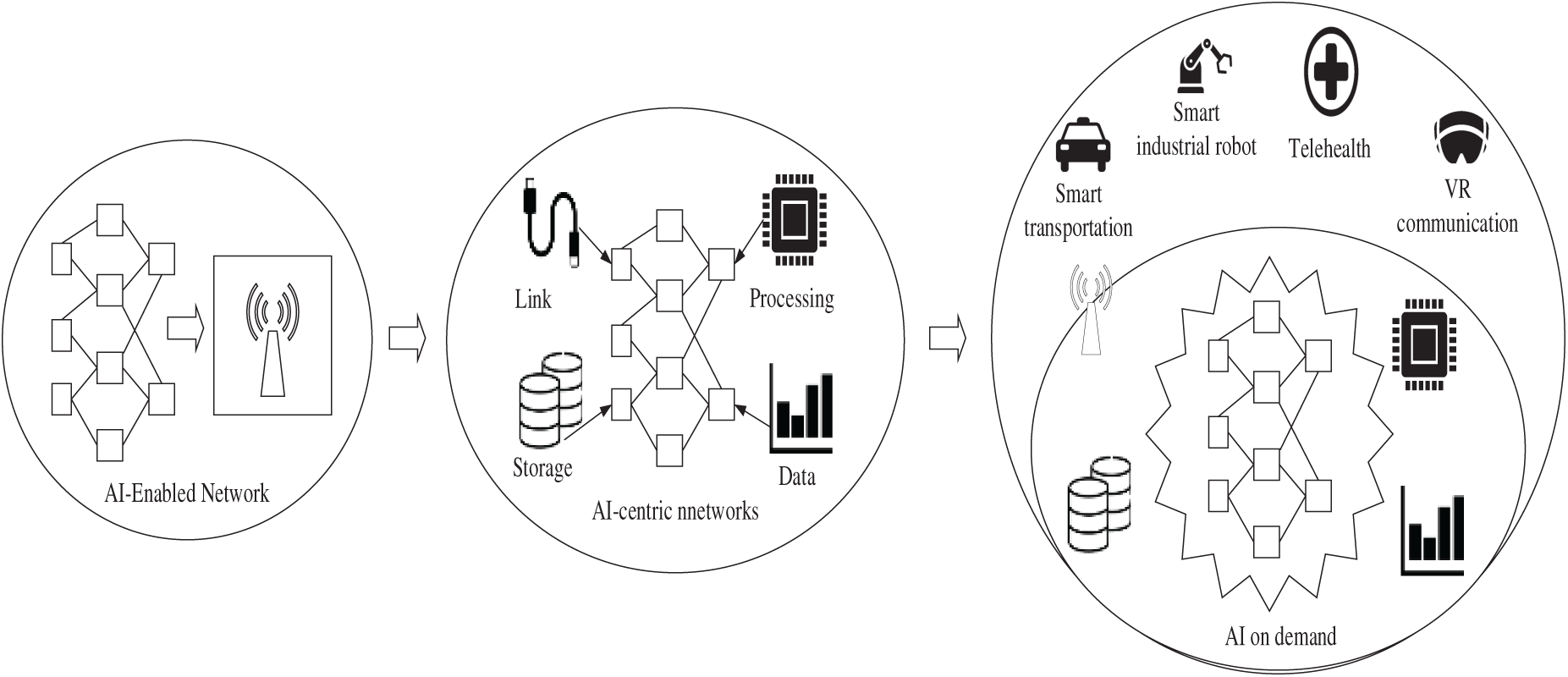

Fig. 8 illustrates the integration of three dimensions of AI in 6G networks, highlighting a mutually dependent feedback loop between intelligence and infrastructure that is essential for managing system complexity. The first dimension, AI that Optimizes Network Functions (AI4NET), deploys AI in the control plane, using DRL agents for autonomous functions such as real-time resource orchestration, physical-layer parameter optimization (e.g., massive MIMO beamforming and RIS phase shifts), and dynamic network slicing to improve spectral efficiency. The second dimension, Network Infrastructure that Enables AI Operations (NET4AI), provides the necessary MEC-based computational substrate to support low-latency inference, while the massive connectivity of 6G enables FL to train models privately and in a decentralized manner. The third dimension, AI as a Cloud-Based Service (AIaaS), leverages the low-latency 6G backbone to deliver complex AI services on demand to industry verticals (e.g., smart robotics and tactile health). This is made possible by abstracting AI algorithms through network function virtualization and SDN, enabling the commercialization of AI. Together, these dimensions create a fundamental functional interdependence: the infrastructure supplies the data and compute that AI requires, while AI provides the sophisticated intelligence needed to control and commercialize the heterogeneous 6G environment autonomously.

Figure 8: 6G–AI integration

ML is gaining widespread attention for its ability to learn patterns and system behavior through mathematical models, enabling tasks such as classification, regression, and decision-making in dynamic environments. The availability of advanced ML algorithms, vast datasets, and powerful computational resources enhances these capabilities. Once trained, ML models can perform inference with relatively few arithmetic operations and can be deployed within flexible, high-performance network infrastructures to support real-time data processing. The core ML types (SL, USL, and RL) are all applicable to 6G networks, enabling the efficient handling of massive volumes of data and metadata with reduced resource consumption. The ability of ML to predict and adapt to various constraints makes it essential for future communication systems, particularly as 6G aims to create intelligent, resource-efficient ecosystems. Beyond communications, ML affects numerous areas of daily life and plays a key role in building a socially beneficial, AI-driven future. Moreover, ML-powered business intelligence tools help organizations extract actionable insights by delivering timely, relevant data, and 6G further enhances this process through autonomous, intelligent operations that optimize decision-making and performance [69].

In SL, models are trained on labeled data, where inputs are paired with known outputs. The learning process estimates model parameters, such as regression coefficients, from previously collected inputs and their corresponding expected outputs. This approach is most effective when the joint distribution of input and output variables is well understood and can be derived from domain-specific knowledge [70]. For instance, in precipitation prediction, SL relies on historical input-output data to learn predictive patterns. In wireless communication, particularly at the PL, SL can optimize power allocation and manage interference by dynamically adjusting transmission parameters. Beyond the PL, SL also finds applications in the network, transport, and application layers, and 6G is expected to significantly broaden its use across all of these layers. SL algorithms such as Support Vector Machines (SVMs) and K-Nearest Neighbors (KNN) leverage historical and real-time network data for tasks such as traffic classification and demand prediction: SVMs are effective at identifying congestion states, while KNN can predict upcoming high-demand periods from past patterns. Ensemble methods such as Random Forests (RF) and Gradient Boosting Machines (GBM) further improve performance on high-dimensional datasets [71].
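A KNN demand predictor of the kind mentioned above can be sketched in a few lines. The features (hour of day and normalized cell load), the labeled history, and the helper `knn_predict` are all hypothetical; a practical system would use real measurements, scale the features, and tune `k`.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest labeled samples."""
    by_distance = sorted(train, key=lambda sample: math.dist(sample[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical labeled history: (hour of day, normalized cell load) -> demand class.
history = [
    ((8, 0.70), "high"), ((9, 0.80), "high"), ((18, 0.90), "high"),
    ((19, 0.85), "high"), ((2, 0.10), "low"), ((3, 0.15), "low"),
    ((14, 0.40), "low"), ((23, 0.30), "low"),
]
print(knn_predict(history, (18.5, 0.8)))   # evening peak
print(knn_predict(history, (2.5, 0.12)))   # night-time lull
```

Because the hour of day spans a much wider numeric range than the load, it dominates the Euclidean distance in this sketch; normalizing features before computing distances is usually advisable.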

In USL, models work without labeled data and instead identify patterns or groupings within the input data independently. In 6G networks, USL models are trained on input samples without predefined output labels, enabling tasks such as clustering, feature extraction, feature classification, distribution modeling, and generating samples from specific distributions. This approach [72] is particularly beneficial in complex scenarios such as vehicular communications, where the short channel coherence time at the PL demands faster, more adaptive decision-making. With the broad deployment of 6G, these methods will play a key role in higher-layer tasks such as node clustering, pairing, and efficient RA. USL techniques such as K-Means, hierarchical clustering, and Density-Based Spatial Clustering of Applications with Noise (DBSCAN) are effective for detecting anomalies and identifying underutilized resources in real-time traffic monitoring, since they uncover hidden patterns without requiring labels.
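Node clustering with K-Means can be sketched using Lloyd's algorithm, which alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. The user coordinates, the initial centroids, and the helper `kmeans` are hypothetical; in practice, initialization (e.g., k-means++) and the number of clusters would need to be chosen carefully.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Lloyd's k-means: assign points to the nearest centroid, then recompute means."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # New centroid = coordinate-wise mean; keep the old one if a cluster is empty.
        centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical user positions (metres) forming two spatial groups.
users = [(0, 0), (1, 0), (0, 1), (1, 1), (10, 10), (11, 10), (10, 11), (11, 11)]
centroids, clusters = kmeans(users, centroids=[(0, 0), (11, 11)])
print(centroids)
```

The resulting clusters could, for example, serve as candidate groups for node clustering or user pairing, although that mapping is an assumption of this sketch.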

Semi-supervised learning, by contrast, combines a small set of labeled data with a much larger set of unlabeled data, making it a practical solution when annotated 6G data is limited [73]. Unlike purely USL, it leverages the limited labels to improve model performance while still exploiting the abundant unlabeled data. In high-frequency 6G communication environments, semi-supervised learning can enhance channel equalization and system monitoring, improving performance metrics while reducing computational complexity. Ultimately, 6G technologies are expected to make USL and semi-supervised learning more adaptive and intelligent across network layers.
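One simple semi-supervised strategy, self-training with a nearest-centroid classifier, is sketched below; it is an illustrative choice rather than a method taken from [73]. Two labeled SNR samples seed the class centroids, the unlabeled samples receive pseudo-labels from the nearest centroid, and the centroids are then re-estimated from labeled and pseudo-labeled data. The SNR values, class names, and helper `self_train` are hypothetical.

```python
from statistics import mean

def self_train(labeled, unlabeled, iterations=10):
    """Self-training with a nearest-centroid classifier on 1-D features.

    labeled   : list of (feature, class) pairs
    unlabeled : list of features without labels
    """
    classes = sorted({label for _, label in labeled})
    pseudo = []  # pseudo-labels aligned with `unlabeled`; empty on the first pass
    for _ in range(iterations):
        samples = labeled + list(zip(unlabeled, pseudo))
        # Re-estimate each class centroid from labeled and pseudo-labeled samples.
        centroids = {c: mean(x for x, y in samples if y == c) for c in classes}
        # Pseudo-label each unlabeled sample with the class of the nearest centroid.
        pseudo = [min(classes, key=lambda c: abs(x - centroids[c])) for x in unlabeled]
    return centroids, pseudo

labeled = [(2.0, "bad"), (18.0, "good")]  # hypothetical link SNRs in dB
unlabeled = [1.0, 3.0, 4.0, 6.0, 14.0, 16.0, 17.0, 20.0]
centroids, pseudo = self_train(labeled, unlabeled)
print(centroids, pseudo)
```

Only two annotations are used, yet all ten samples contribute to the final class centroids, which is the essential benefit semi-supervised learning offers when labeled data is scarce.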

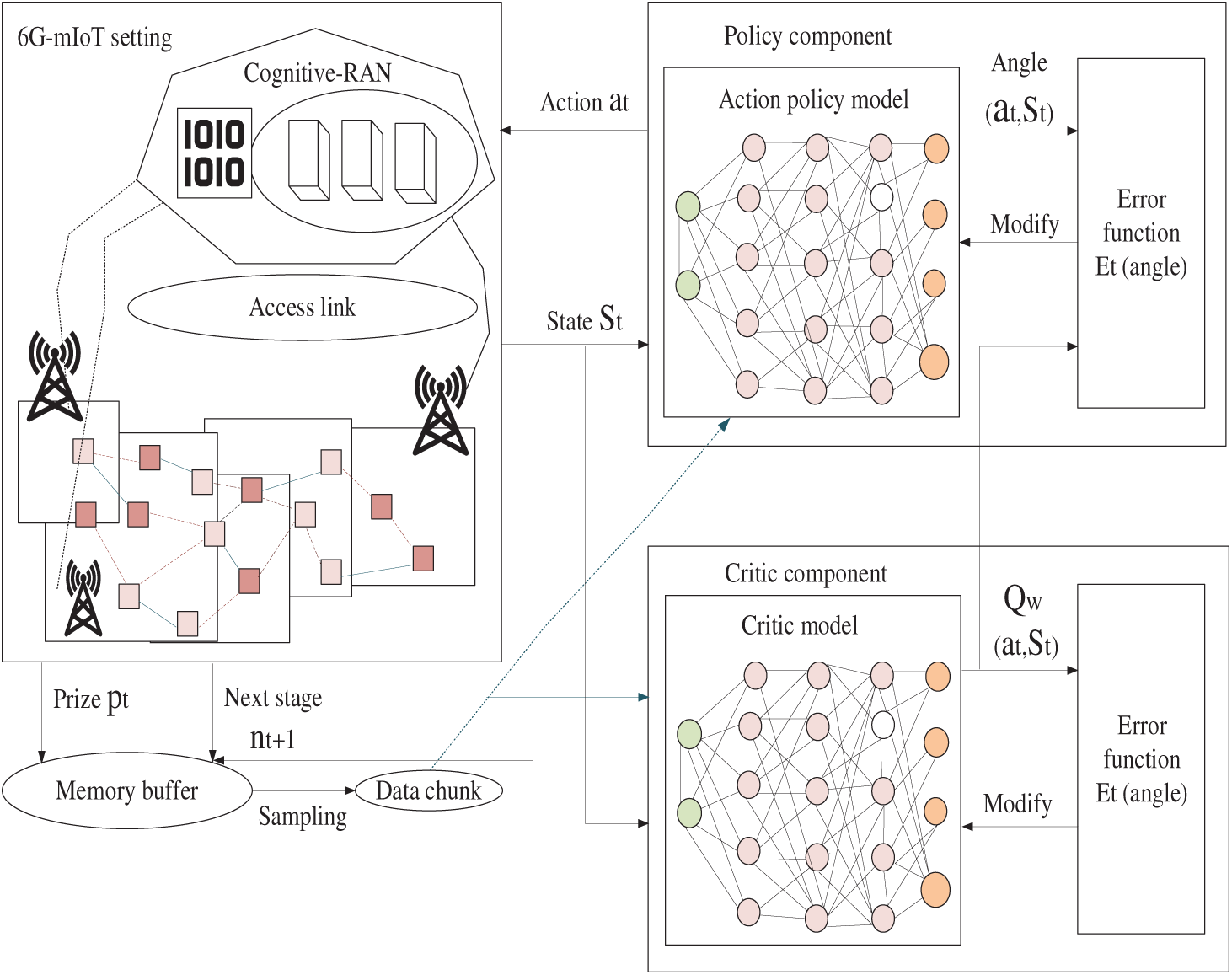

RL involves an agent interacting with its environment to learn optimal actions from the feedback, or rewards, it receives. In 6G networks, RL enables more intelligent and adaptive decision-making, with agents deployed at network nodes fine-tuning parameters to enhance QoS. RL can be viewed as lying between SL and USL: it receives no labeled targets, but reward feedback guides learning toward maximizing long-term cumulative reward. RL is well suited to tasks such as RA and performance optimization in wireless systems, and DRL architectures have been applied to a variety of network challenges.

The effectiveness of ML models depends significantly on the quality and volume of training data. Batch learning algorithms, which suit offline processing of large labeled datasets, are often limited by restricted data availability [74]. To overcome these challenges, advanced ML techniques, including Quantum ML, are emerging as key enablers for 6G, leveraging cognitive intelligence and Edge AI to deliver high-accuracy, real-time solutions. These techniques are positioned as transformative elements in future communication networks [75]. RL supports DRA and spectrum management in 6G by enabling adaptive decision-making. Q-Learning uses reward-based updates to optimize actions such as bandwidth allocation or power control, while Deep Q-Networks (DQN) approximate the action-value function with Neural Networks (NNs) to handle complex environments. MARL enables distributed optimization through cooperative or competitive interactions among network agents, such as BSs.
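The Q-Learning update can be illustrated with a tabular agent for a toy power-control problem. The two channel states, two power levels, reward values, and hyperparameters below are hypothetical assumptions chosen for clarity. The agent applies the standard update Q(s, a) <- Q(s, a) + alpha [r + gamma max_a' Q(s', a') - Q(s, a)] with epsilon-greedy exploration.

```python
import random

# Hypothetical toy model. States: 0 = good channel, 1 = bad channel.
# Actions: 0 = low power, 1 = high power. Rewards trade throughput against
# energy: high power is wasted on a good channel, low power causes outage on a bad one.
REWARD = {(0, 0): 1.0, (0, 1): 0.6, (1, 0): 0.0, (1, 1): 0.8}

def q_learning(steps=20000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0], [0.0, 0.0]]  # Q-table indexed as q[state][action]
    state = rng.randint(0, 1)
    for _ in range(steps):
        # Epsilon-greedy action selection.
        if rng.random() < epsilon:
            action = rng.randint(0, 1)
        else:
            action = max((0, 1), key=lambda a: q[state][a])
        reward = REWARD[(state, action)]
        next_state = rng.randint(0, 1)  # channel evolves independently of the action
        # Move Q(s, a) toward the bootstrapped target r + gamma * max_a' Q(s', a').
        target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state
    return q

q = q_learning()
policy = [max((0, 1), key=lambda a: q[s][a]) for s in (0, 1)]
print("greedy power level per channel state:", policy)
```

With these rewards, the learned greedy policy should use low power on the good channel and high power on the bad one. A DQN would replace the table `q` with a neural network when the state space is too large to enumerate.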

DL, a subset of ML within AI, plays a crucial role in both supervised and unsupervised settings. It enables systems to automatically learn complex patterns and relationships between inputs and outputs at multiple levels of abstraction, reducing the need for manually designed features [76]. As 6G communication technologies advance, DL is expected to impact all areas of intelligent networking by enabling real-time data collection and processing. It has already proven effective in applications such as network AD, fault diagnosis, intrusion prevention, and network configuration optimization.

An Artificial Neural Network (ANN) is a data-processing model inspired by the structure and function of the human brain, designed to learn patterns and perform tasks from observed data. Recognized as one of the core DL algorithms, an ANN uses a network of interconnected nodes, analogous to biological neurons, that can efficiently process large volumes of data. ANNs with multiple fully connected layers are commonly referred to as multi-layer perceptrons (MLPs), in which each layer contributes to feature extraction and learning. Neurons in each layer apply activation functions to introduce nonlinear transformations, and the design of these connections plays a crucial role in overall network performance. ANNs can therefore handle complex tasks and, through extensive training on large datasets, generalize well to new, unseen inputs [77].
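The layered computation described above, where each neuron applies an activation function to a weighted sum of its inputs, can be sketched as a forward pass through a small MLP. The layer sizes, weights, and helper names are hypothetical, and training (e.g., by backpropagation) is omitted.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """Fully connected layer: neuron j outputs activation(sum_i w[j][i] * x[i] + b[j])."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def mlp_forward(x, layers):
    """Propagate x through a list of (weights, biases, activation) layers."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

# Hypothetical 2-2-1 network: a ReLU hidden layer and a sigmoid output neuron.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),
    ([[1.0, 1.0]], [-1.0], sigmoid),
]
output = mlp_forward([2.0, 1.0], layers)
print(output)
```

Stacking more `(weights, biases, activation)` entries in `layers` deepens the network without changing the forward-pass code.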

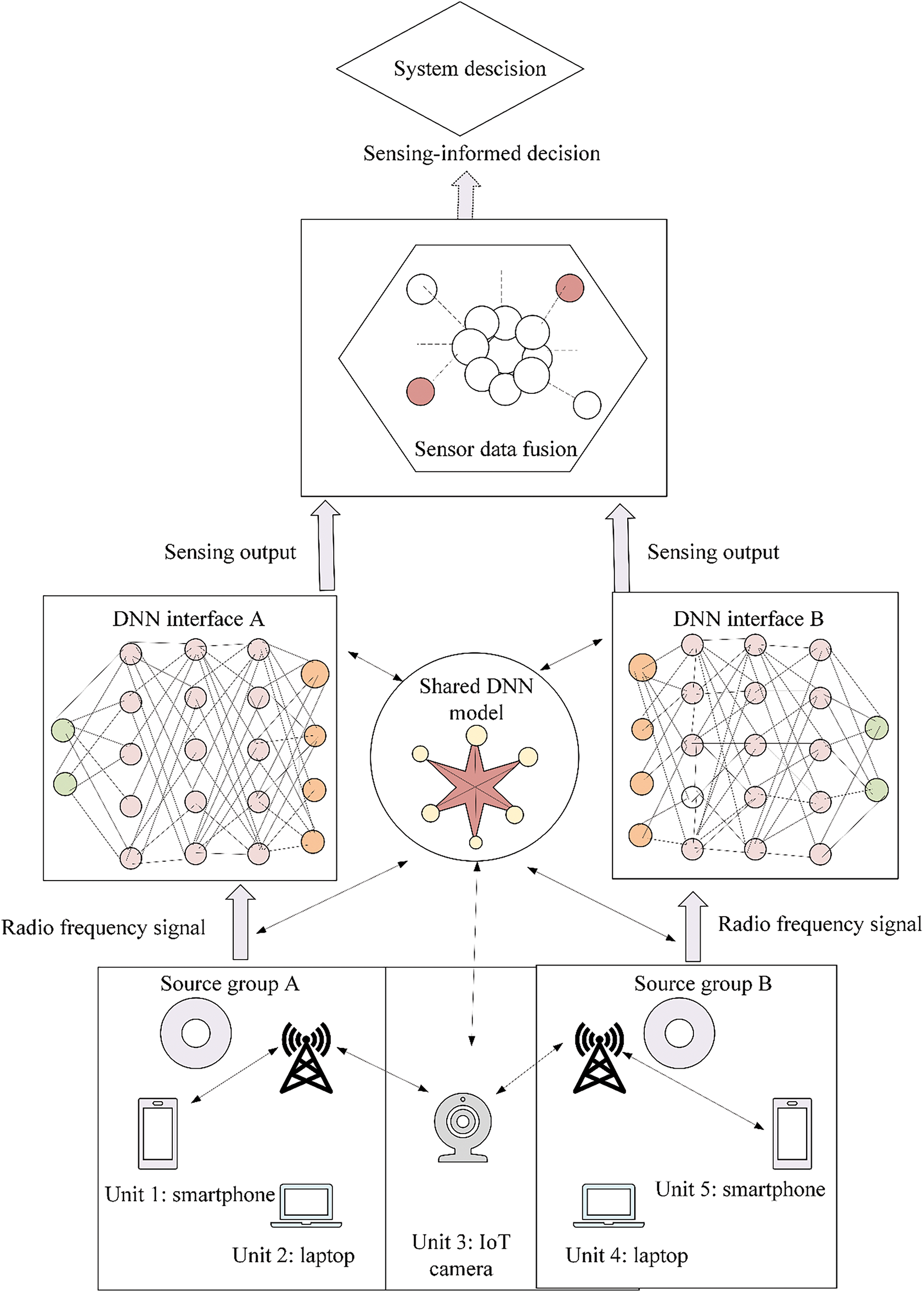

A DNN is an ANN with multiple hidden layers, designed for tasks such as classification and generalization to unseen data. Loosely inspired by the human brain, it passes information through multiple layers of interconnected neurons to interpret complex inputs [78]. Just as the brain can distinguish between different images, a DNN can be trained to recognize patterns and classify inputs such as images, speech, or handwritten characters. Input vectors (often batched in matrix form) are fed through an input layer and several hidden layers, enabling the model to learn intricate, nonlinear relationships. Owing to this multilayered structure, DNNs can handle high-level cognitive tasks that simpler linear models cannot. In 6G, DNNs are expected to enhance communication systems by enabling faster processing and improved decision-making through more advanced and adaptable network architectures.
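A classic illustration of why hidden layers matter is the XOR function, which no single linear threshold unit can represent because its two classes are not linearly separable. The sketch uses one hidden layer of two ReLU neurons with hand-set weights (hypothetical and not trained) to compute XOR exactly.

```python
def relu(z):
    return max(0.0, z)

def xor_net(x1, x2):
    # Hidden layer: two ReLU neurons with hand-chosen weights and biases.
    h1 = relu(x1 + x2)        # number of active inputs
    h2 = relu(x1 + x2 - 1.0)  # nonzero only when both inputs are 1
    # Linear output neuron combines the hidden features.
    return h1 - 2.0 * h2

for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, xor_net(x1, x2))
```

The nonlinearity in the hidden layer is what makes this possible; removing the ReLU collapses the network into a single linear function of the inputs, which cannot produce the XOR pattern.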