Open Access


REVIEW

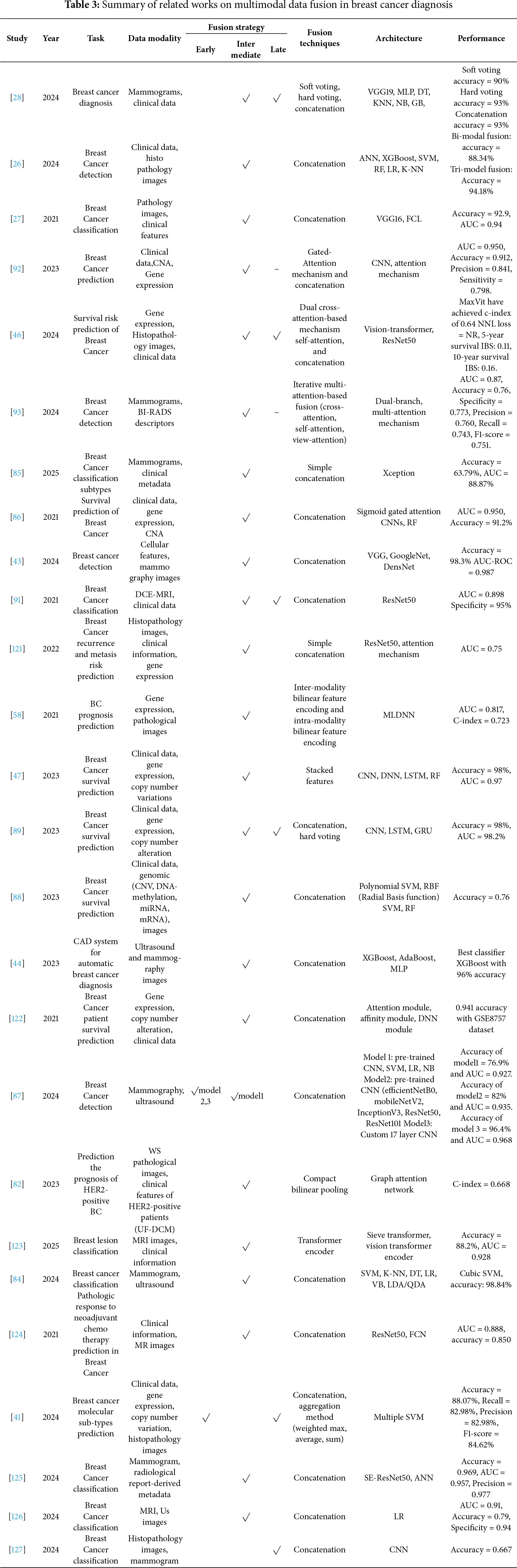

A Systematic Review of Multimodal Fusion and Explainable AI Applications in Breast Cancer Diagnosis

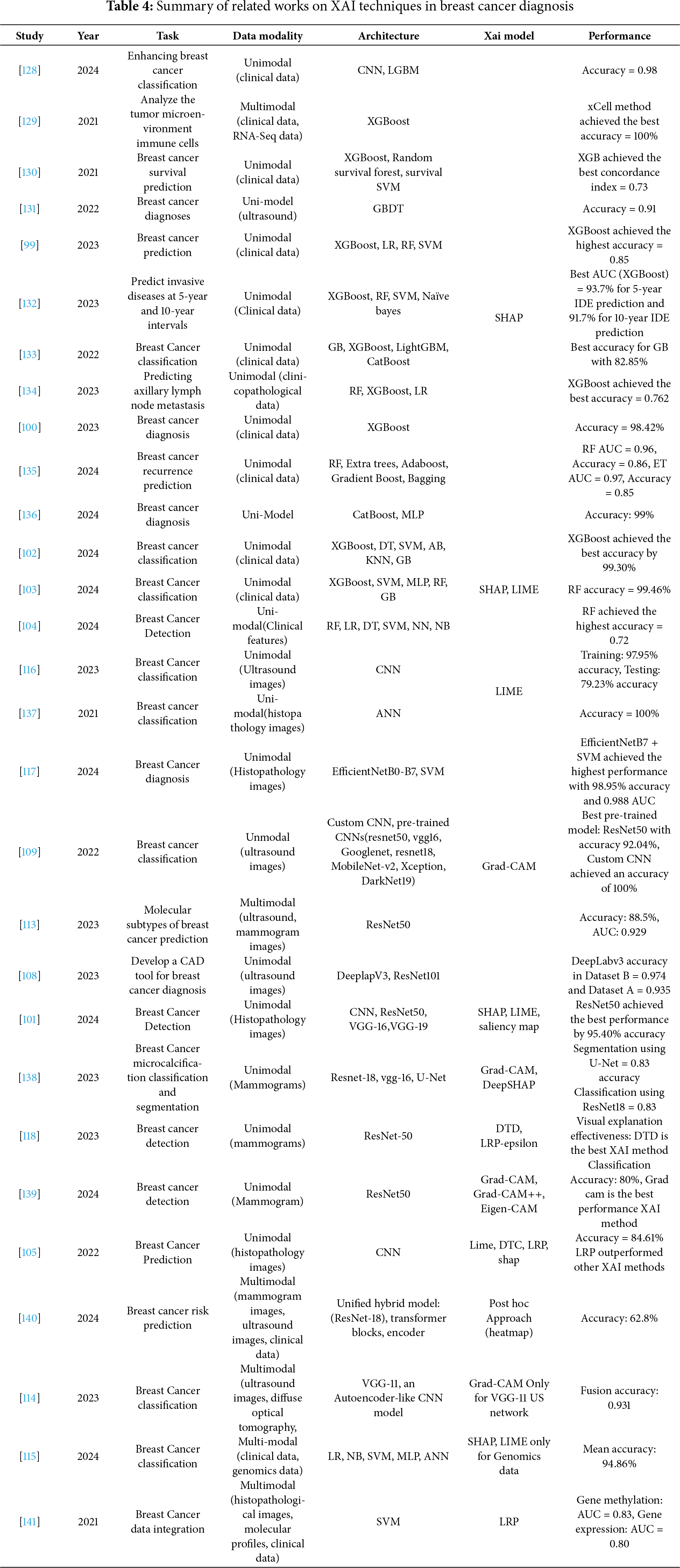

1 Department of Information Systems, College of Computer and Information Technology, Majmaah University, Majmaah, 11952, Saudi Arabia

2 Department of Information Systems, College of Computer and Information Sciences, King Saud University, Riyadh, 11451, Saudi Arabia

3 Research Chair of Pervasive and Mobile Computing and Department of Information Systems, College of Computer and Information Sciences, King Saud University, Riyadh, 11543, Saudi Arabia

* Corresponding Author: Deema Alzamil. Email:

(This article belongs to the Special Issue: Exploring the Impact of Artificial Intelligence on Healthcare: Insights into Data Management, Integration, and Ethical Considerations)

Computer Modeling in Engineering & Sciences 2025, 145(3), 2971-3027. https://doi.org/10.32604/cmes.2025.070867

Received 25 July 2025; Accepted 11 November 2025; Issue published 23 December 2025

Abstract

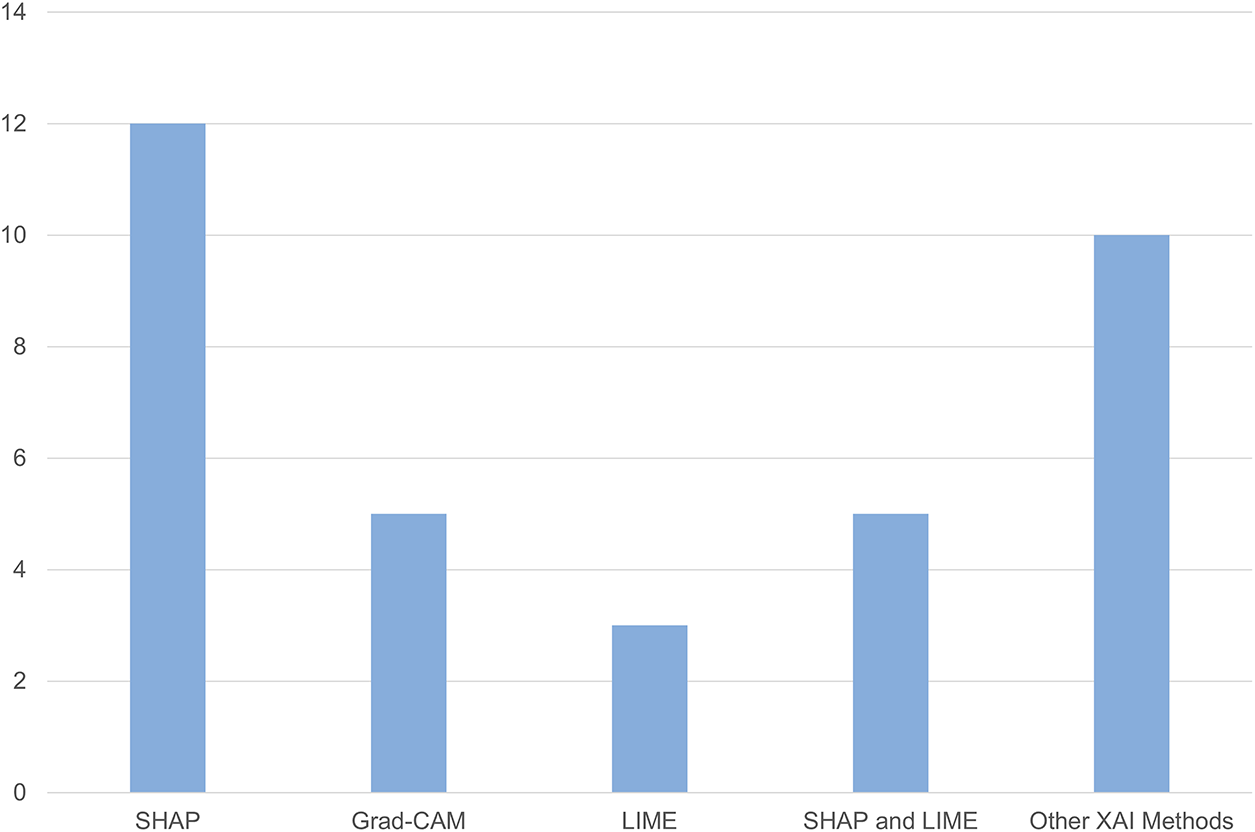

Breast cancer diagnosis relies heavily on information from diverse sources, such as mammograms, ultrasound scans, patient records, and genetic tests, but most AI tools analyze only one of these sources at a time, which limits their ability to produce accurate and comprehensive decisions. In recent years, multimodal learning has emerged, enabling the integration of heterogeneous data to improve diagnostic performance and accuracy. However, clinicians often cannot see how or why these AI tools reach their decisions, which is a significant bottleneck for their reliability and for their adoption in clinical settings. Hence, researchers are increasingly adding explainable AI (XAI) techniques that reveal how a model arrives at its output. This review investigates previous work that has employed multimodal learning and XAI for the diagnosis of breast cancer. It discusses the types of data, fusion techniques, and XAI models employed. The review followed the PRISMA guidelines and covered studies published from 2021 to April 2025. The systematic literature search resulted in 61 included studies. The review highlights a gradual increase in studies focusing on multimodal fusion and XAI, particularly in 2023–2024. Studies using multimodal data fusion achieved, on average, 5%–10% higher accuracy than studies using single-modality data, and intermediate fusion strategies and modern fusion techniques, such as cross-attention, achieved the highest accuracy and best overall performance. The review also showed that SHAP, Grad-CAM, and LIME are the techniques most often used to explain breast cancer diagnostic models. There is a clear research shift toward integrating multimodal learning and XAI techniques into breast cancer diagnostics. However, several gaps were identified, including the scarcity of public multimodal datasets, the lack of a unified explainable framework for multimodal fusion systems, and the lack of standardization in evaluating explanations.
These limitations call for future research focused on building more shared datasets and integrating multimodal data with explainable AI techniques to improve decision-making and enhance transparency.

Breast cancer persists as one of the most prevalent and life-threatening diseases affecting women across the globe. According to the World Health Organization (WHO) [1], breast cancer is the leading cause of cancer death among women worldwide, accounting for a significant percentage of global cancer fatalities. Early detection and accurate diagnosis are critical to improving survival chances and the effectiveness of treatment strategies [2]. The need for timely and reliable diagnostic methods is thus more urgent than ever. However, despite advances in medical technology, the field still faces various challenges. Traditional diagnostic methods are prone to errors, including false positives (indicating cancer when none is present) and false negatives (missing a real cancer). These errors can lead to unnecessary biopsies, missed diagnoses, delayed treatments, and, ultimately, poorer outcomes [3].

In recent years, remarkable developments in artificial intelligence (AI) and machine learning (ML) have shown great promise in addressing these limitations [4]. By leveraging the computational power of modern algorithms, these technologies can improve diagnostic accuracy and efficiency, enabling healthcare professionals to make better-informed and quicker decisions. Among the most powerful AI techniques for medical imaging is deep learning (DL), particularly convolutional neural networks (CNNs), which have proven highly effective in analyzing complex medical images such as mammograms [5]. Applying deep learning to breast cancer diagnosis opens new opportunities to improve early detection and treatment outcomes for patients in clinical settings. However, there is still an urgent need for a comprehensive, accurate diagnostic method that integrates multimodal data.

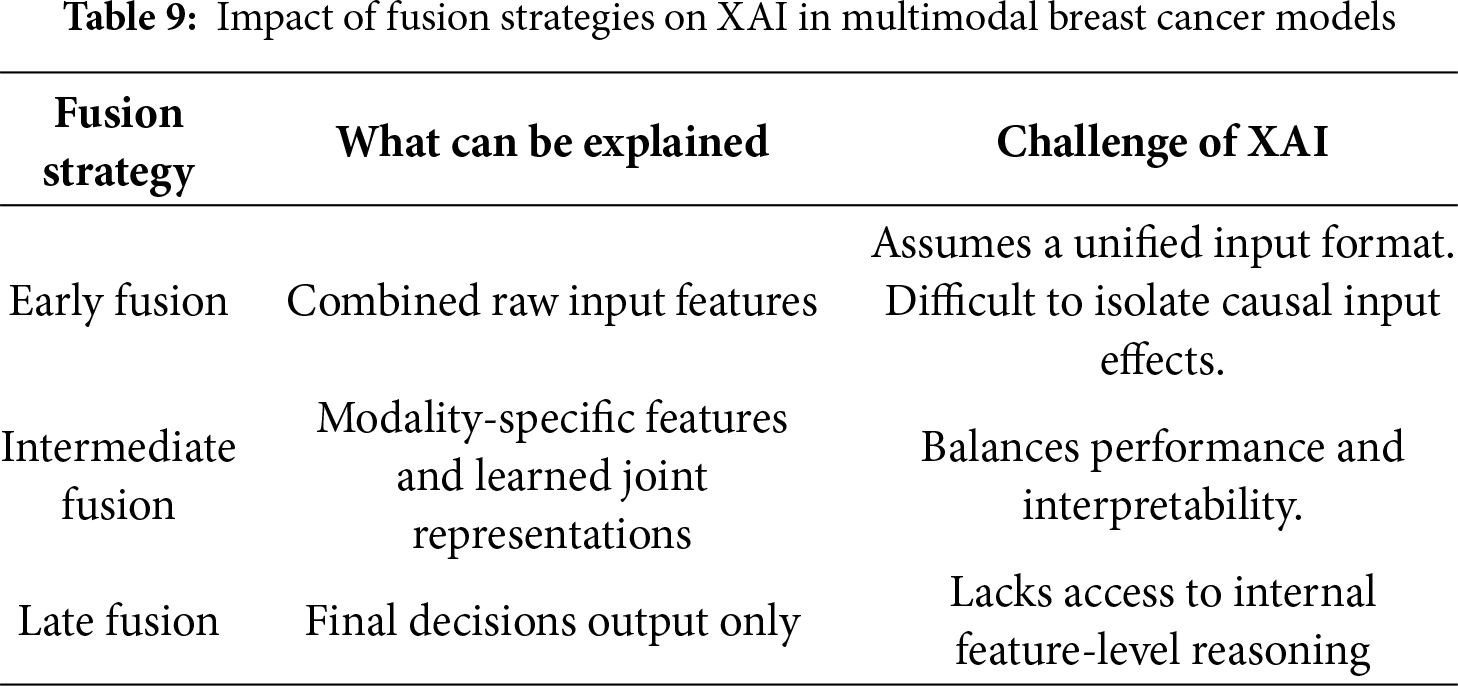

Multimodal learning has great potential to enhance diagnostic accuracy because it enables the integration of several types of data, such as mammography, ultrasound, genomic profiles, and clinical records, which has made it crucial in breast cancer diagnosis [6]. Integrating data from various sources improves diagnostic accuracy because each type of data provides different information: imaging modalities show the morphological characteristics of the tumor, clinical records provide basic medical information and background on the patient [7], and genetic profiles reveal mutations that can drive cancer. Integration can also speed diagnosis by reducing the time needed to analyze each type of data separately. Relying on a single data source limits the information available for diagnosis and can lead to errors, which underscores the importance of integrating different data types [8]. Data types can be integrated at different stages: in early fusion, the raw data are combined before entering the AI model; in intermediate fusion, each data type first learns its own features, and those features are then combined; and in late fusion, separate models are run on each data type and their final decisions are merged [9]. Intermediate fusion is the most widely used strategy in breast cancer diagnosis because it balances modality-specific learning with cross-modal interaction. Despite its advantages, multimodal learning faces challenges, such as a lack of multimodal datasets, dimensional variation across modalities, the distinct complexity of each data pattern [10], and limited explainability [11,12].
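To make the three fusion stages concrete, the following minimal pure-Python sketch contrasts where each strategy combines the modalities. Everything in it is hypothetical: the inputs, the hand-set weights, and the helper names (`encode_image`, `encode_clinical`, `late_fusion`, and so on) stand in for trained networks and are not taken from any reviewed study.

```python
import math

def sigmoid(z: float) -> float:
    """Squash a real-valued score into a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def linear(weights, x, bias=0.0):
    """Dot product plus bias: a stand-in for a trained layer."""
    return sum(w * v for w, v in zip(weights, x)) + bias

def relu(z):
    return max(0.0, z)

# Hypothetical, already-normalized inputs for one patient.
image_x = [0.8, 0.1, 0.5]   # e.g. three image-derived values
clinical_x = [0.6, 1.0]     # e.g. scaled age and a family-history flag

def early_fusion(img, clin):
    # Concatenate the inputs first; a single model sees all of them at once.
    joint = img + clin
    return sigmoid(linear([0.5, -0.2, 0.3, 0.4, 0.7], joint, bias=-1.0))

def encode_image(img):
    # Modality-specific encoder: 3 image inputs -> 2 learned features.
    return [relu(linear([1.0, 0.5, -0.3], img)),
            relu(linear([-0.2, 0.8, 0.6], img))]

def encode_clinical(clin):
    # Modality-specific encoder: 2 clinical inputs -> 1 learned feature.
    return [relu(linear([0.9, 0.4], clin))]

def intermediate_fusion(img, clin):
    # Each modality is encoded separately; the feature vectors are then joined.
    features = encode_image(img) + encode_clinical(clin)
    return sigmoid(linear([0.6, 0.3, 0.5], features, bias=-1.0))

def late_fusion(img, clin, image_weight=0.5):
    # Independent per-modality classifiers; only their probabilities are merged.
    p_img = sigmoid(linear([0.5, -0.2, 0.3], img, bias=-0.3))
    p_clin = sigmoid(linear([0.4, 0.7], clin, bias=-0.5))
    return image_weight * p_img + (1.0 - image_weight) * p_clin

for name, fuse in [("early", early_fusion),
                   ("intermediate", intermediate_fusion),
                   ("late", late_fusion)]:
    print(f"{name:>12}: p(malignant) = {fuse(image_x, clinical_x):.3f}")
```

The three functions differ only in the point at which information from the two modalities meets: at the input, at the learned-feature level, or at the output probability.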

The lack of transparency in AI models can limit their acceptance and practical deployment in clinical settings, where healthcare professionals must understand the rationale behind each diagnosis to make informed decisions [13]. Explainable AI (XAI), which aims to make DL models more transparent and interpretable, becomes crucial in this context. In practical terms, without dependable explanations that accurately reflect how an AI system operates, users may perceive it as untrustworthy, especially given the dynamic and unstable nature of real-world AI applications. Applying XAI techniques in multimodal settings can show how each input modality, and the combination of modalities, contributes to the model’s output [14].
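One way to quantify how much each modality contributes, in the spirit of SHAP, is to treat the modalities as players in a cooperative game and compute their exact Shapley values. The sketch below assumes a hypothetical table, `COALITION_OUTPUT`, of model outputs when only some modalities are available and the rest are masked; the numbers are invented for illustration. Exact enumeration over modalities is a swapped-in simplification of the feature-level approximations that SHAP tools compute, not the method of any reviewed study.

```python
from itertools import combinations
from math import factorial

MODALITIES = ("mammogram", "ultrasound", "clinical")

# Hypothetical model outputs (probability of malignancy) when only the listed
# modalities are provided and the others are masked. Illustrative values only.
COALITION_OUTPUT = {
    frozenset(): 0.30,  # baseline: no modality available
    frozenset({"mammogram"}): 0.62,
    frozenset({"ultrasound"}): 0.48,
    frozenset({"clinical"}): 0.38,
    frozenset({"mammogram", "ultrasound"}): 0.74,
    frozenset({"mammogram", "clinical"}): 0.70,
    frozenset({"ultrasound", "clinical"}): 0.55,
    frozenset({"mammogram", "ultrasound", "clinical"}): 0.81,
}

def shapley_values(players, value):
    """Exact Shapley value of each player: its marginal contribution,
    averaged over all coalitions of the other players with Shapley weights."""
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(len(others) + 1):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                total += weight * (value[s | {p}] - value[s])
        phi[p] = total
    return phi

phi = shapley_values(MODALITIES, COALITION_OUTPUT)
for modality, contribution in phi.items():
    print(f"{modality:>10}: {contribution:+.3f}")
# Efficiency property: contributions sum to (all modalities) - (baseline).
print(sum(phi.values()))
```

Exact enumeration is cheap here because three modalities give only 2^3 = 8 coalitions; with many input features the cost grows exponentially, which is why practical tools approximate it.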

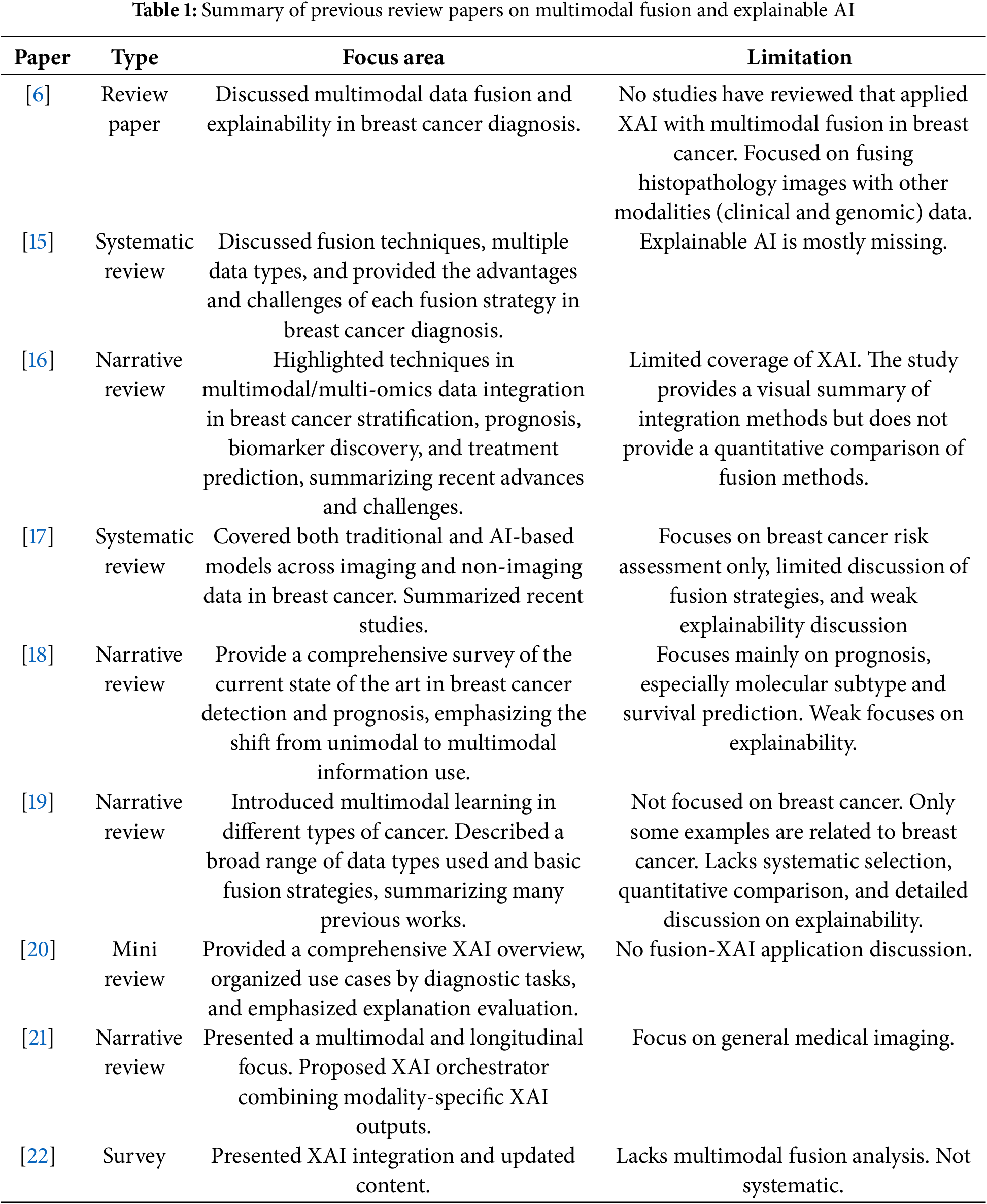

Although several review papers have discussed the use of AI in general medical imaging or in breast cancer prediction using multimodal data, they approached the topic from a specific perspective, focusing on fusion strategies, deep learning/machine learning techniques, or model performance, without addressing how explainability techniques can be applied in the context of multimodal fusion for breast cancer, thereby neglecting the importance of transparency and reliability in clinical applications. One study discussed multimodal data fusion with XAI in breast cancer theoretically, focusing on histopathology images, without analyzing any studies that implemented XAI with multimodal data fusion. Table 1 provides a comparative summary of these reviews.

This review addresses a gap in the literature: the need for a comprehensive framework that combines multimodal fusion and explainable AI in breast cancer diagnosis and examines how their interaction affects decision quality. Unlike primary research articles, this review does not propose a new model. Instead, it aims to provide a systematic, technically grounded synthesis of the current state of multimodal and XAI methods in breast cancer diagnosis, with an emphasis on trends and methodological rigor. It explores the types of data used in diagnosis, fusion strategies, the machine learning and deep learning models used, explainability techniques applied in single-modality and multimodal learning, and tools for evaluating explanations. The review highlights current limitations and indicates directions for future research.

Most current studies in breast cancer diagnosis have focused on single-modality data (such as mammograms or clinical records alone) and treat explainability as a secondary issue. Yet in breast cancer diagnosis, physicians need imaging, clinical, and other information to make decisions. In addition, explainability is critical for AI systems in healthcare, where trust, transparency, and accountability are essential.

To date, no reviews have systematically discussed studies that applied multimodal fusion with explainable AI to breast cancer diagnosis, nor have they examined how explainability was evaluated in previous studies. This review fills this gap by comprehensively exploring the intersection of multimodal data fusion and XAI in breast cancer diagnosis.

This review contributes to the following:

1. Following a systematic framework based on PRISMA standards to collect and analyze studies, ensuring transparency and accuracy, and applying QUADAS-2 to evaluate study quality and reduce the possibility of bias.

2. Providing a systematic and organized view of the basic technical components in the field of breast cancer diagnosis, including data types, AI models, fusion strategies and techniques, and XAI methods.

3. Analyzing how models, fusion strategies, and explanation techniques are operationalized in recent studies to enhance diagnostic accuracy and support clinical decision-making.

4. Comprehensive comparative analysis of performance in previous studies through summary tables and trend analysis based on data type, fusion type, and explanation techniques.

5. Highlighting the relationship between multimodal fusion strategies and explanation techniques, explaining how this relationship can be employed to improve the accuracy of models and enhance their clinical reliability.

6. An analysis of evaluation methodologies, linking explanation evaluation gaps with recognized standards, and emphasizing the lack of unified frameworks to ensure clinical reliability and reproducibility.

7. Presenting a future vision for breast cancer diagnosis research on the application of multimodal fusion and explainable AI, especially considering the progress in generative artificial intelligence technologies.

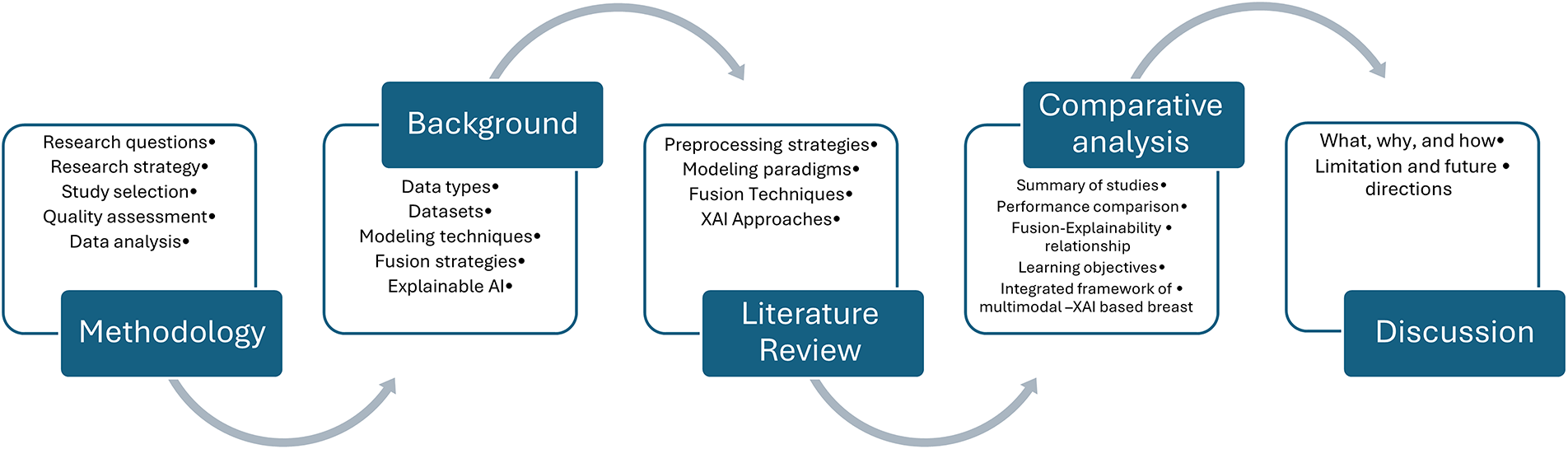

The remaining sections of this review are structured as follows: Section 2 presents the methodology of this review, including research questions, research strategy and eligibility criteria, study selection, data extraction, quality assessment, and data synthesis and analysis. Section 3 provides background on multimodal data, modeling strategies, fusion techniques, and XAI. Section 4 reviews previous studies systematically. Section 5 provides a comprehensive analysis of methods and performance. Section 6 discusses limitations and future directions, and Section 7 concludes the review. Fig. 1 illustrates the overall structure of this review.

Figure 1: Study structure overview

This methodological structure was intentionally designed to reflect current shifts in multimodal AI research driven by generative and foundation models. By systematically mapping techniques such as Transformers and hybrid XAI approaches, this review captures how modern techniques reshape technical design and evaluation in breast cancer diagnosis.

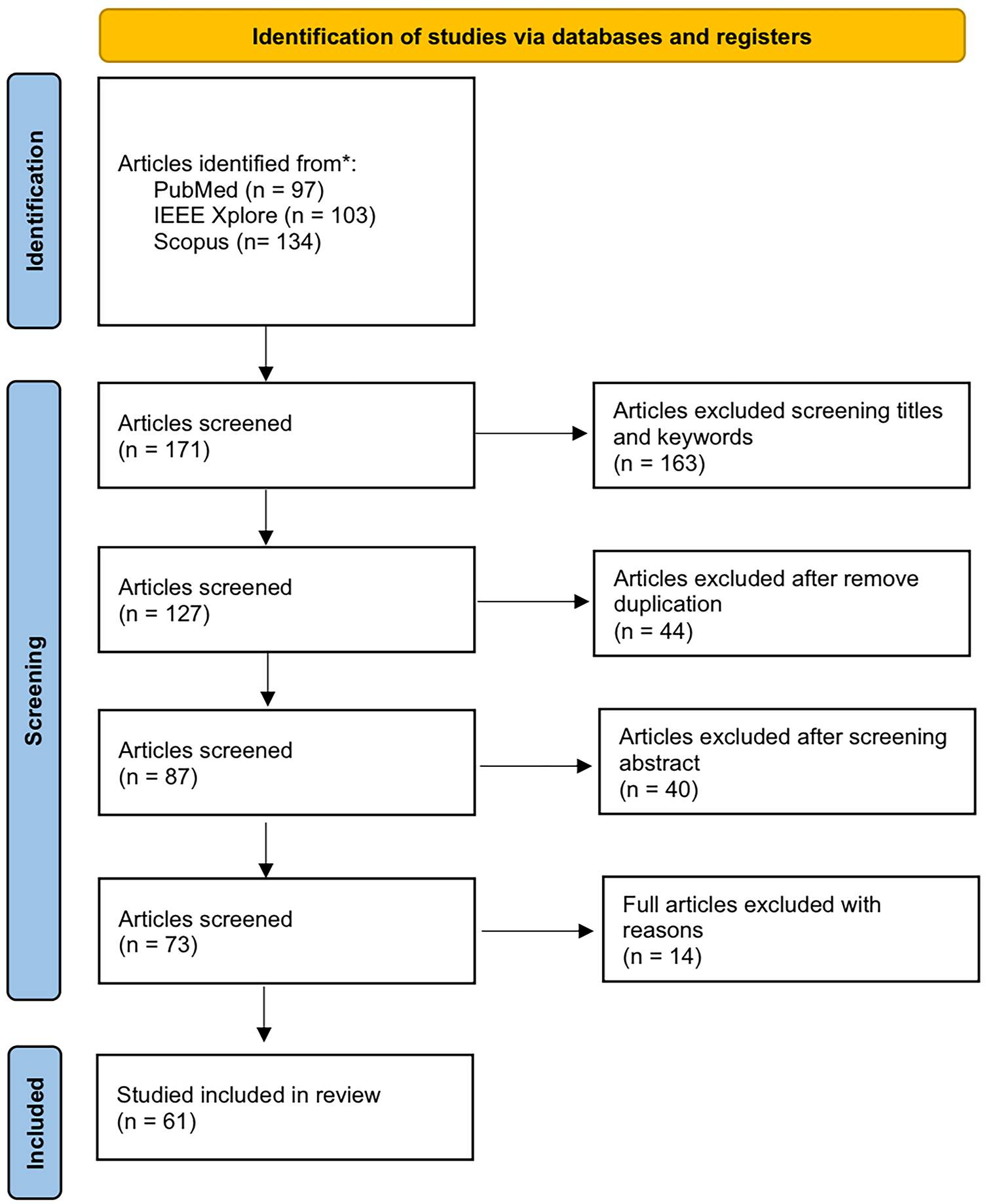

This systematic review was conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [23]. The PRISMA framework provides a structured approach to study identification, screening, eligibility assessment, and inclusion that ensures transparency and reproducibility in the review process. A PRISMA flow diagram is used to clarify the selection process, including the number of studies retrieved from databases, duplicate articles removed, and studies excluded.

A set of research questions was formulated to guide the scope and focus of this review, which explores how multimodal data fusion and explainable AI are applied in breast cancer diagnosis and identifies trends and gaps in current studies:

1. What types of multimodal data have been used in breast cancer diagnosis, and what fusion strategies have been applied?

2. What machine learning and deep learning models are commonly employed in multimodal breast cancer diagnosis systems?

3. What explainable AI methods have been applied in breast cancer diagnosis research, and to what extent are XAI methods evaluated for their interpretability?

2.2 Research Strategy and Eligibility Criteria

A comprehensive search strategy was developed to capture recent advances in multimodal fusion and explainable AI, such as Transformer architectures, cross-attention fusion, foundation models, and integrated XAI frameworks. Three main databases were selected for their coverage of medical, scientific, and technical literature: PubMed, Scopus, and IEEE Xplore. Search terms were combined with Boolean operators to maximize the retrieval of relevant articles and to capture concepts related to breast cancer, multimodal data fusion, and explainable artificial intelligence. The keywords used included “Breast Cancer,” “Cancer,” “Deep learning,” “Machine learning,” “Artificial intelligence,” “Explainable Artificial intelligence,” “Xai,” “Multimodal,” “Fusion,” “explainable,” and “Interpretable.” This review includes studies from 2021 to April 2025; the search covered titles, keywords, and abstracts. The full electronic search strategy is available in Appendix A.
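A common way to combine such keywords is to OR synonyms within a concept group and AND the groups together. The sketch below illustrates that pattern only; the grouping in `CONCEPT_GROUPS` is a hypothetical arrangement of the listed keywords, not the review's actual query, which is given in Appendix A. The `TITLE-ABS-KEY(...)` wrapper follows Scopus advanced-search syntax; other databases use different field tags.

```python
# Hypothetical grouping of the review's keywords into concept blocks.
CONCEPT_GROUPS = [
    ["breast cancer"],
    ["deep learning", "machine learning", "artificial intelligence"],
    # Fusion and XAI terms share one OR block because studies with
    # either (or both) were eligible.
    ["multimodal", "fusion", "explainable artificial intelligence",
     "XAI", "explainable", "interpretable"],
]

def or_block(terms):
    """OR together the synonyms of one concept, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"

def build_query(groups):
    """AND the concept blocks so that each hit must match every concept."""
    return " AND ".join(or_block(g) for g in groups)

query = build_query(CONCEPT_GROUPS)
print(query)
# Scopus-style restriction of matching to title, abstract, and keywords:
print(f"TITLE-ABS-KEY({query})")
```

Placing the fusion and XAI terms in a single OR block mirrors the inclusion rule that a study may use either technique; putting them in separate AND-ed blocks would instead retrieve only studies that use both.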

Eligibility Criteria

Studies analyzed in this review had to meet the following eligibility criteria: 1. The article must be published in English; 2. It must fall within the field of breast cancer diagnosis; 3. It must include multimodal data fusion, XAI techniques, or both; 4. It must report quantitative performance metrics (e.g., AUC, sensitivity, specificity); 5. It must be a peer-reviewed journal article or conference paper; and 6. It must have been published between January 2021 and April 2025. Exclusion criteria were gray literature, reviews, abstracts without evaluation, and works not centered on diagnostic applications.

The study selection process for this review followed the PRISMA guidelines. Initially, 333 articles were retrieved from three major databases (PubMed, Scopus, and IEEE Xplore); 171 articles were retained after screening titles and keywords. After duplicates were removed, 127 unique articles remained. Abstract review narrowed the selection to 87 studies, and 73 articles met the eligibility criteria after full-text review. Sixty-one eligible studies were ultimately selected based on their relevance to the review objectives, with strong inter-rater agreement (κ > 0.85). Fig. 2 illustrates the selection process based on the PRISMA flow diagram.

Figure 2: The PRISMA structure for the study selection process
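The counts reported in the selection process form a strict funnel. The short script below uses only the numbers from the text to check that they are consistent and to derive how many records were removed at each stage.

```python
# Record counts at each PRISMA stage, as reported in the text.
stages = [
    ("Records identified (PubMed, Scopus, IEEE Xplore)", 333),
    ("Retained after title/keyword screening", 171),
    ("Unique after duplicate removal", 127),
    ("Retained after abstract review", 87),
    ("Eligible after full-text review", 73),
    ("Included in the review", 61),
]

def removed_per_stage(stages):
    """Return (label, count, removed_since_previous_stage) for every stage."""
    rows = []
    prev = None
    for label, count in stages:
        removed = 0 if prev is None else prev - count
        if removed < 0:
            raise ValueError(f"count increased at stage: {label}")
        rows.append((label, count, removed))
        prev = count
    return rows

rows = removed_per_stage(stages)
for label, count, removed in rows:
    print(f"{label:<50} {count:>4}  (-{removed})")
print(f"Overall inclusion rate: {stages[-1][1] / stages[0][1]:.1%}")
```

The removals (162, 44, 40, 14, and 12 records) sum to 272, which matches 333 identified minus 61 included.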

A structured data extraction form was developed to ensure reproducibility and to enable consistent comparison across heterogeneous research settings. For each study, two reviewers independently extracted essential information, including:

Study characteristics (publication year, country).

Data modalities (mammography, MRI, histopathology, clinical or genomic data).

Fusion strategies (early, intermediate, late, or attention-based).

Machine learning/deep learning models (CNN-based frameworks, Transformer-based fusion, Hybrid CNN-Transformer, cross-attention, or MLP).

Explainable AI methods (SHAP, Grad-CAM, attention visualization).

Reported performance metrics (diagnostic accuracy, sensitivity, specificity, AUC, interpretability evaluation).

The extracted information was then utilized to construct comparative tables summarizing the following:

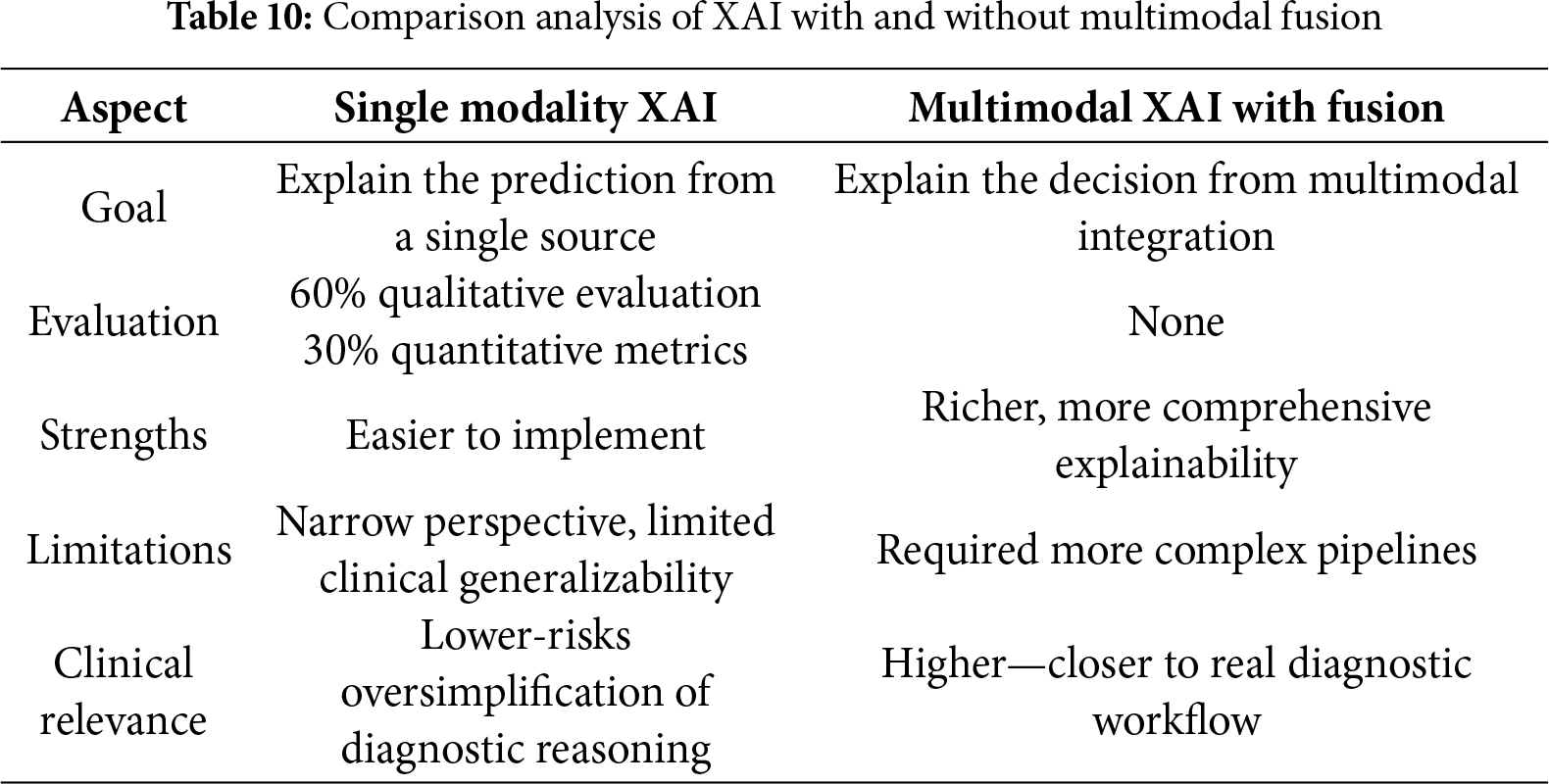

1. Reported performance of different fusion strategies and data modality combinations.

2. Conceptual and methodological comparison between single-modality XAI and multimodal XAI with fusion in terms of objectives, evaluation methods, strengths, limitations, and clinical relevance.

These tables were analyzed descriptively to identify performance trends across studies and highlight frequently adopted approaches. This approach allowed us to provide a transparent overview of how different multimodal fusion strategies and data combinations impact diagnostic performance in breast cancer applications. Rather than focusing on numerical performance alone, the resulting comparison of single and multimodal XAI emphasized how XAI contributes to model interpretability, clinical trust, and diagnostic decision support when integrated with multimodal fusion strategies. The results of these analyses are presented in the performance comparison section.

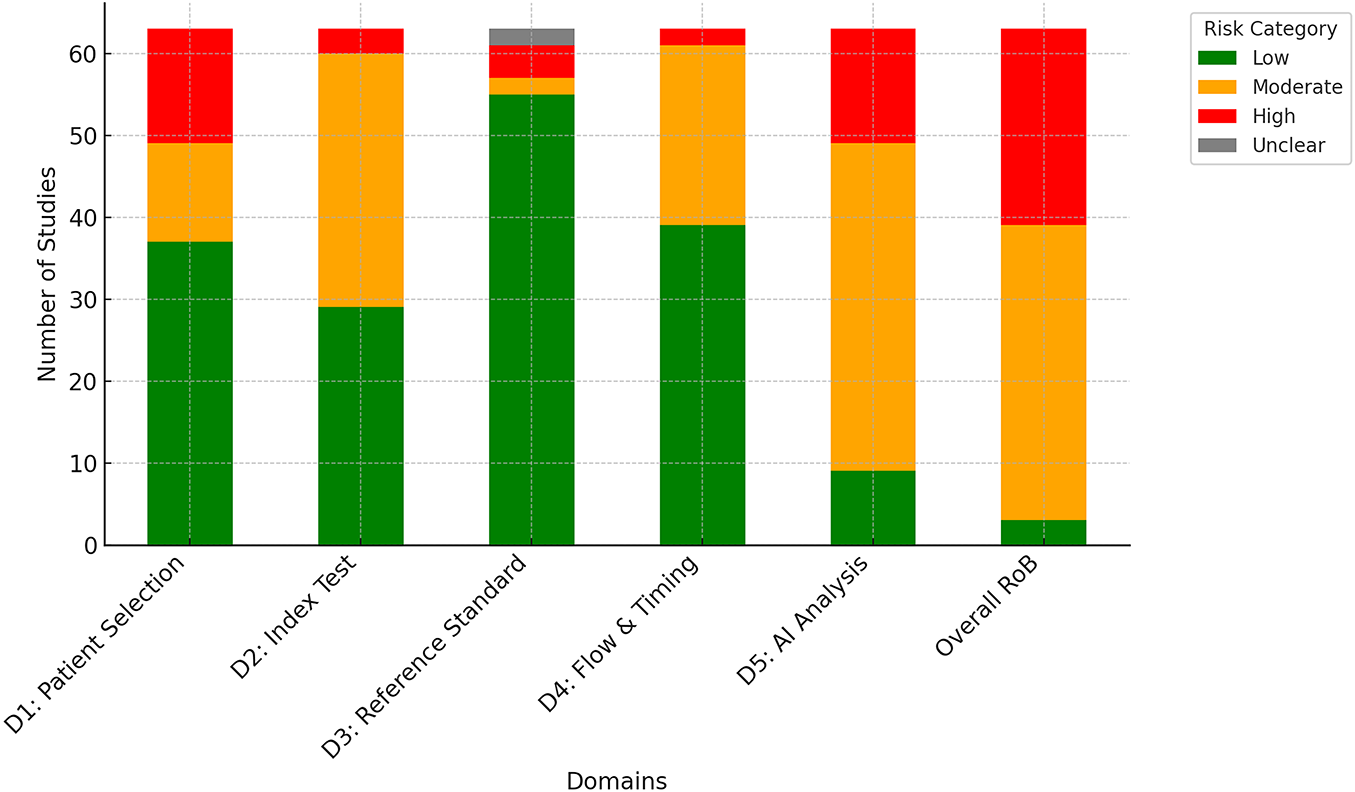

A structured risk of bias assessment was performed using the QUADAS-2 tool. Two independent reviewers (Reviewer A and Reviewer B) evaluated all 61 included studies across five domains:

1. Patient selection—representativeness and avoidance of selection bias.

2. Index test—transparency of modeling, blinding, and reproducibility.

3. Reference standard—clarity and consistency of diagnostic ground truth.

4. Flow and timing—completeness of data and avoidance of verification bias.

5. Artificial intelligence analysis—dataset diversity, external validation, and whether interpretability was systematically evaluated.

Although QUADAS-2 traditionally consists of four domains, this fifth domain, AI analysis, was added to capture risks unique to machine learning studies.

Ratings were assigned as low, moderate, high, or unclear risk. Discrepancies were resolved through consensus discussion. Inter-rater reliability was calculated using Cohen’s Kappa prior to consensus.
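Cohen's kappa corrects raw agreement for the agreement expected by chance: κ = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected from each rater's label frequencies. A minimal sketch follows; the ten ratings are hypothetical, not the reviewers' actual data. Note that unweighted kappa treats the ordered categories (low, moderate, high) as nominal; a weighted kappa is an alternative if partial disagreements, such as low vs. moderate, should count less.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(x == y for x, y in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    labels = set(freq_a) | set(freq_b)
    expected = sum(freq_a[k] * freq_b[k] for k in labels) / (n * n)
    if expected == 1.0:
        return 1.0  # degenerate case: both raters used one identical label
    return (observed - expected) / (1.0 - expected)

# Hypothetical ratings for one QUADAS-2 domain across ten studies.
a = ["low", "low", "moderate", "high", "low",
     "unclear", "low", "moderate", "low", "high"]
b = ["low", "low", "moderate", "high", "moderate",
     "unclear", "low", "moderate", "low", "high"]
print(f"kappa = {cohens_kappa(a, b):.3f}")
```

Here the raters agree on 9 of 10 studies (p_o = 0.90), chance agreement is p_e = 0.31, and κ = 0.59 / 0.69 ≈ 0.855.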

Risk of Bias Findings

The distribution of risk of bias ratings is shown in Fig. 3. Patient selection and reference standard were rated as low risk in most studies, indicating that datasets were generally selected appropriately and reference standards were clearly defined. In contrast, greater variability was observed in the flow/timing and AI analysis domains. This variability often arose from reliance on retrospective datasets without prospective validation, limited use of external validation, and insufficient transparency in explainability methods. Such gaps reduce confidence in the reproducibility and generalizability of results. Most disagreements between reviewers were minor, often between low and moderate ratings. Overall, these results highlight the need for more consistent evaluation standards in multimodal AI breast cancer research.

Figure 3: Distribution of risk of bias ratings

2.6 Data Synthesis and Analysis

A formal meta-analysis was not conducted due to the substantial heterogeneity across the included studies. The differences were evident in study design, patient cohorts, imaging and clinical modalities, model architecture, outcome measures, and evaluation metrics. Because of this variability, pooling the results quantitatively would not yield meaningful conclusions and could risk misrepresentation. Instead, a structured narrative synthesis was employed, supported by comparative tables and descriptive statistics, which allowed us to capture and discuss the diversity of findings while maintaining methodological transparency.

Studies were categorized and analyzed by:

• Temporal trends (publications per year, 2021–2025).

• Geographic distribution (country of origin, highlighting concentration in high-resource regions).

• Publication venues (distribution across biomedical, engineering, and AI-focused outlets).

• Methodological features (multimodal data types, fusion strategies, model architectures, XAI techniques).

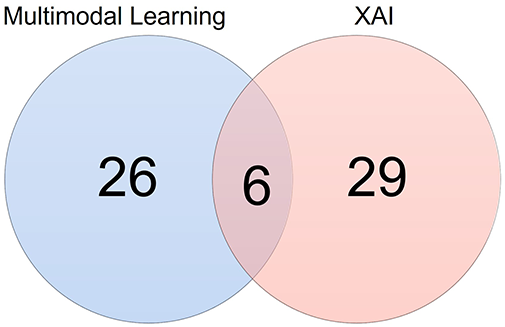

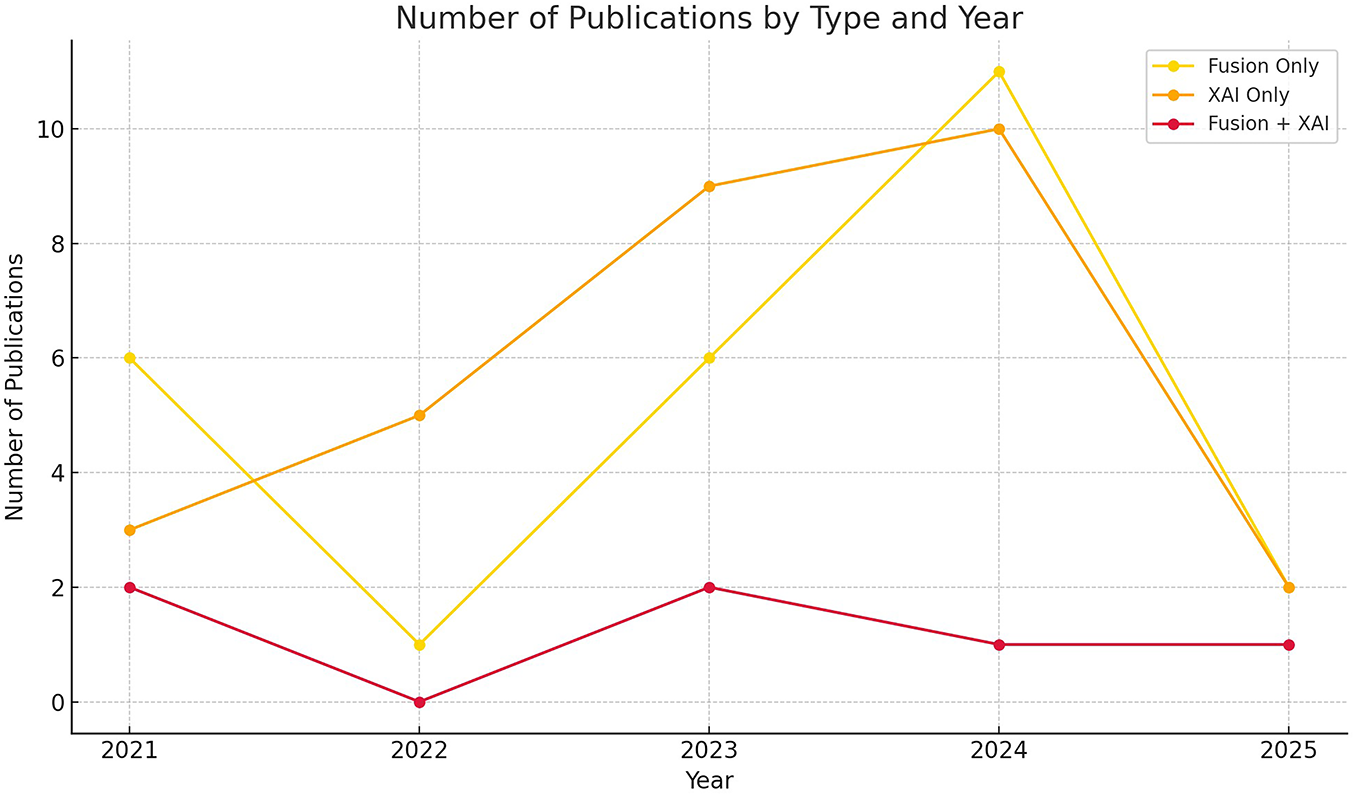

Descriptive statistics (frequency counts, heatmaps, trend plots) revealed fluctuating activity in the number of studies using data fusion techniques, peaking in 2024 with 11 publications, which indicates growing interest in using these data sources in breast cancer research. This rise may relate to improvements in modern fusion techniques such as attention, transformers, and graph fusion, which enable more flexible multimodal integration than static early fusion. At the same time, studies applying explainable AI to single-modality data increased steadily, reflecting the importance of transparency and trust in clinical AI applications. Studies combining data fusion techniques with explainable AI remain limited, with fewer publications than either of the other two categories. This highlights that the integration of XAI and fusion is still an emerging research area and warrants a comprehensive review of how explainable techniques can be integrated into multimodal diagnostic systems. Fig. 4 presents the categorization of the selected articles, and Fig. 5 presents the publication trends from 2021 to 2025 across these categories.

Figure 4: Categorization of reviewed articles in breast cancer diagnosis

Figure 5: Publication trends in breast cancer AI research from Jan 2021 to April 2025, showing the number of studies in 1) multimodal fusion without XAI, 2) XAI with unimodal models, and 3) XAI with multimodal models
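Cross-attention, one of the modern fusion techniques mentioned above, lets tokens from one modality weight information from another. The following is a minimal sketch under simplifying assumptions: hand-picked embeddings, a single attention head, and no learned query/key/value projections (identity projections are assumed). The token values and the `cross_attention` helper are hypothetical and do not reproduce any reviewed architecture.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query attends over the keys/values
    of another modality. Returns the fused vectors and the attention weights."""
    d = len(keys[0])
    fused, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of value vectors, one output dimension at a time.
        fused.append([sum(wj * v[i] for wj, v in zip(w, values))
                      for i in range(len(values[0]))])
    return fused, weights

# Hypothetical 4-d embeddings: two image-patch tokens, three clinical tokens.
image_tokens = [[0.9, 0.1, 0.0, 0.3], [0.2, 0.8, 0.5, 0.0]]
clinical_tokens = [[1.0, 0.0, 0.1, 0.2], [0.0, 1.0, 0.4, 0.0], [0.3, 0.3, 0.3, 0.3]]

# Image tokens act as queries; clinical tokens supply keys and values.
fused, attn = cross_attention(image_tokens, clinical_tokens, clinical_tokens)
for i, w in enumerate(attn):
    print(f"image token {i} -> clinical tokens:", [round(x, 3) for x in w])
```

Because the attention weights are explicit, they are sometimes visualized as an explanation of which clinical inputs each image region drew on, although such weights are not guaranteed to be faithful explanations.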

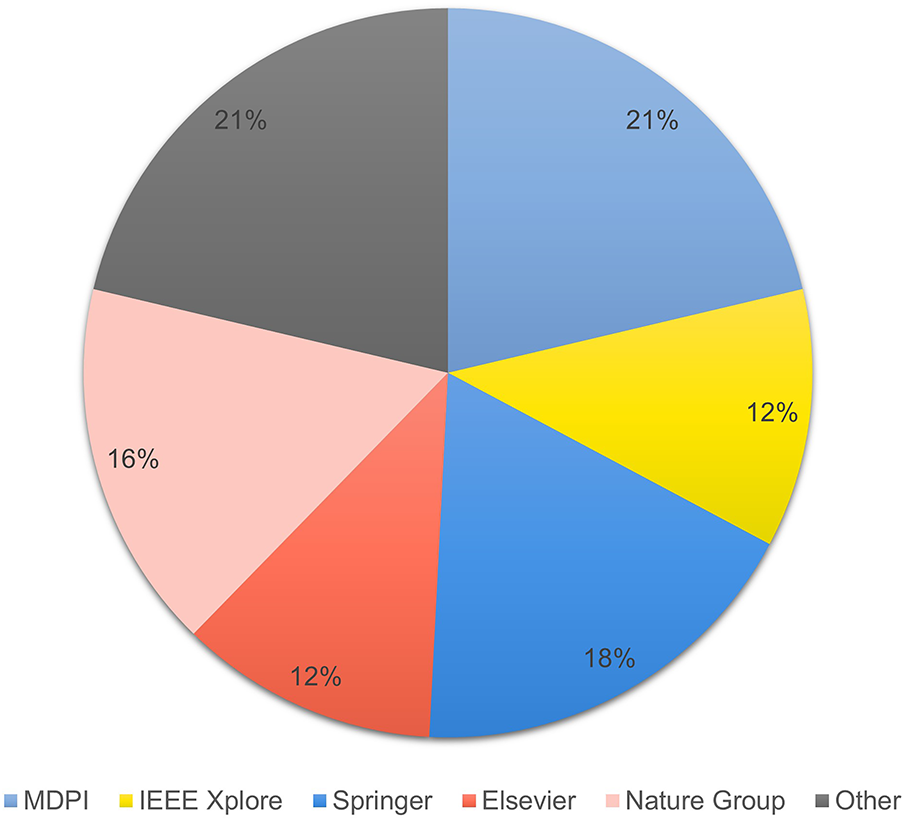

An analysis of publication sources indicates that the selected studies were published in reputable venues. Thirteen papers were published by MDPI, followed by Springer and Nature Publishing Group with 11 and 10 papers, respectively. Elsevier and IEEE Xplore published seven papers each. Each of the remaining publishers outside these main venues accounted for fewer than two papers. This distribution, spanning both medical and technical outlets, indicates that explainable artificial intelligence and multimodal integration attract interest from multidisciplinary research communities. Fig. 6 presents a pie chart showing the distribution of reviewed papers by publisher.

Figure 6: Distribution of reviewed papers by publisher

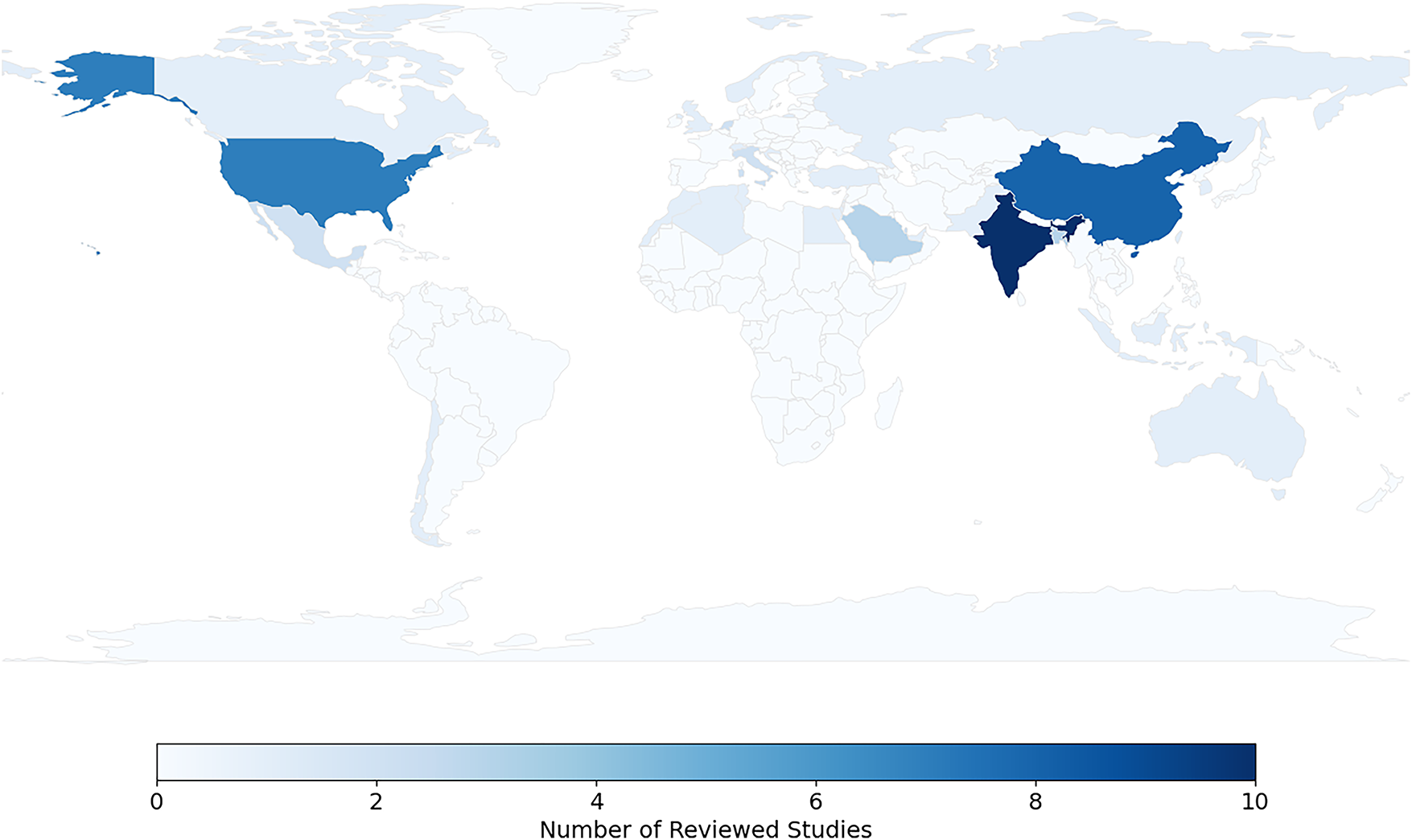

The heatmap in Fig. 7 shows the geographical distribution of the studies included in this review, which addressed breast cancer diagnosis using multimodal data and XAI techniques. India ranked first in the number of studies (11 studies), followed by China (8) and the United States of America (7). Moderate contributions were also recorded from Saudi Arabia, Bangladesh, the Netherlands, and Italy, while the contributions of countries such as Turkey, Norway, and Switzerland were limited to one study each. This distribution indicates that research efforts are concentrated in certain regions, especially Asia and North America, reflecting strong investment in AI healthcare infrastructure, and reveals research gaps in low-resource countries, which opens the way for expanded international cooperation in this area.

Figure 7: Geographic distribution of reviewed studies

Beyond descriptive statistics, a thematic synthesis identified four higher-level trends:

1. Fusion approaches—progressing toward adaptive, explainability-aware strategies. Transition from early fusion toward intermediate fusion, particularly attention-based strategies.

2. Model architectures—movement from traditional ML toward hybrid DL frameworks, including CNNs, transformers, and GNNs.

3. XAI practices—SHAP and Grad-CAM dominate, though quantitative evaluation remains rare.

4. Clinical integration—limited attention to deployment feasibility, robustness to missing modalities, and clinician trust.
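Item 3 notes that quantitative evaluation of explanations remains rare. One widely used family of checks is perturbation-based: remove features in order of their attributed importance and observe how quickly the model's score drops. Below is a minimal sketch of a deletion curve and its normalized area, assuming a hypothetical linear `model_score` and hand-made attribution vectors; it illustrates one possible faithfulness metric, not a standard adopted by the reviewed studies.

```python
def model_score(x):
    # Hypothetical linear "model": a weighted sum of feature values.
    weights = [0.5, 0.3, 0.15, 0.05]
    return sum(w * v for w, v in zip(weights, x))

def deletion_auc(x, attributions, baseline=0.0):
    """Delete features from most to least important (replace with `baseline`)
    and return the normalized area under the model-score curve.
    Lower is better: a faithful explanation removes influential features first."""
    order = sorted(range(len(x)), key=lambda i: attributions[i], reverse=True)
    current = list(x)
    scores = [model_score(current)]
    if scores[0] == 0:
        raise ValueError("initial score must be non-zero to normalize")
    for i in order:
        current[i] = baseline
        scores.append(model_score(current))
    # Trapezoidal area over len(x) unit-width steps, scaled by the initial score.
    area = sum((scores[k] + scores[k + 1]) / 2 for k in range(len(scores) - 1))
    return area / (len(x) * scores[0])

x = [1.0, 1.0, 1.0, 1.0]
faithful = [0.5, 0.3, 0.15, 0.05]    # ranks features like the true weights
unfaithful = [0.05, 0.15, 0.3, 0.5]  # reversed ranking
print(f"faithful explanation:   {deletion_auc(x, faithful):.4f}")
print(f"unfaithful explanation: {deletion_auc(x, unfaithful):.4f}")
```

For this toy model the faithful ranking gives an area of 0.3125 and the reversed ranking 0.6875, so the metric separates the two explanations as intended.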

Breast cancer is one of the most common types of cancer among women, representing a significant health and economic burden on healthcare systems. It is often not detected early, which increases its severity and reduces the chances of successful treatment. Early detection plays a crucial role in increasing survival rates. Breast cancer is diagnosed using traditional methods such as clinical examination and mammography. Although these methods are effective, they rely heavily on human expertise, which can lead to variable diagnostic errors. Artificial intelligence has brought about a qualitative shift in the field of diagnosis, improving the accuracy and speed of detection and reducing errors. Artificial intelligence supports clinical decision-making, unifies analysis methods, and helps build greater confidence in diagnostic results. This development paves the way for the use of deep learning models and multimodal fusion techniques, which are reviewed in subsequent sections.

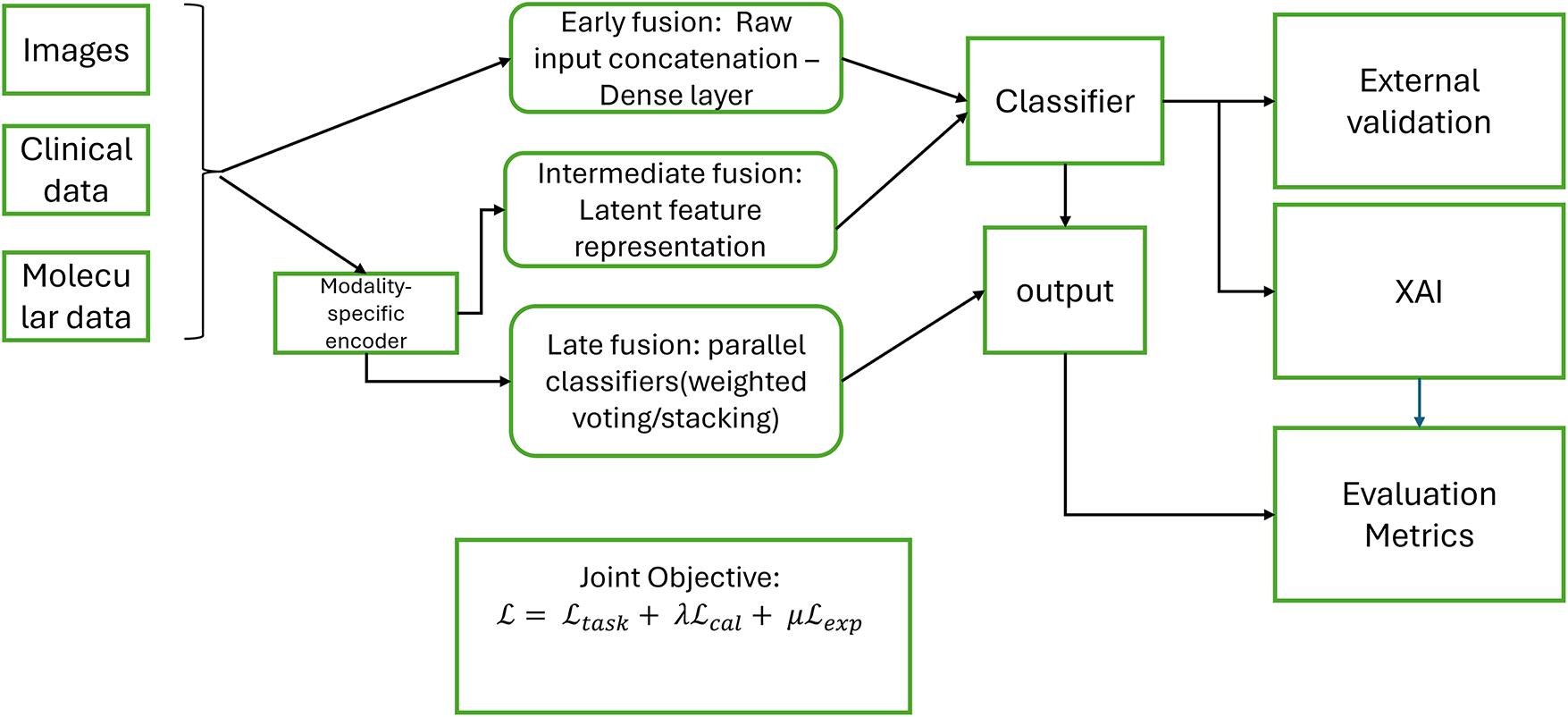

This section provides a brief technical framework for understanding breast cancer diagnosis using multimodal learning and explainable AI (XAI) methods. First, the nature and characteristics of common data in this field (mammograms, structured clinical data, report texts, and molecular/genomic data) are defined. The model families used to extract representations (CNN, Transformer, MLP, and, for text, LSTM/Transformer) are then reviewed. Next, a systematic overview of fusion strategies (early, intermediate, and late) and explanation mechanisms is provided. Finally, common metrics for evaluating performance and explanation quality are outlined, setting the stage for the comparative analysis in the following sections.

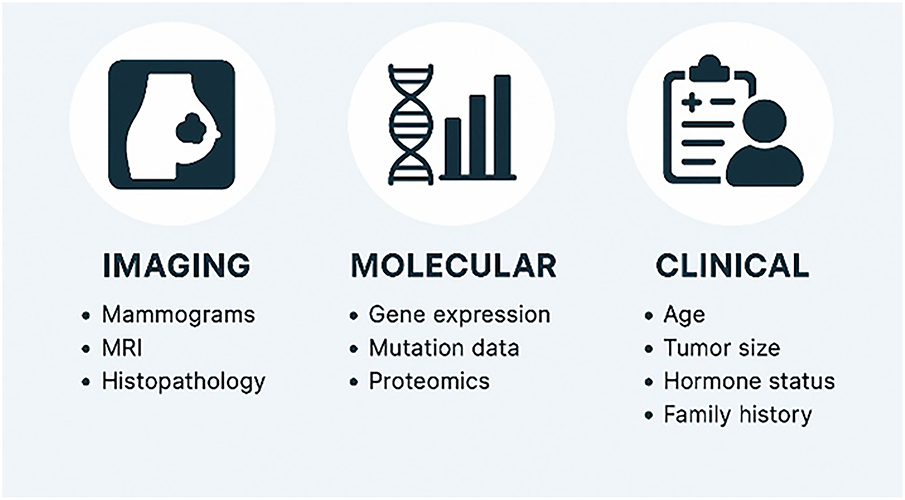

Multimodal data are datasets containing diverse information from various sources. Each type of data provides a different perspective on the information it captures about a particular entity. Examples of data include visual data, textual data, tabular or structured data, graphs, audio, or gestural data. Data in oncology are classified into three categories: clinical, molecular, and imaging [24].

• Molecular data

Molecular data contain information on genetic changes in cancer cells. These include genomics, proteomics, pathology, and radiogenomics. This type of data plays a significant role in biomedicine, providing important information for diagnosing various types of cancer at early stages [25].

• Imaging data

Imaging data are among the most important data types for diagnosing diseases, especially breast cancer. This type includes radiological imaging such as X-rays, mammograms, and magnetic resonance imaging (MRI), which help determine the shape and size of a tumor. Digital histopathology slides are also imaging data: they capture tissue samples taken during surgery or biopsy and help identify changes in cancer cells and cancer subtypes [24].

• Clinical data

These data are often readily available and include medical history, laboratory test results, medical examinations, treatments, and other information that facilitates the understanding and diagnosis of diseases [25]. They are stored in electronic health records (EHRs) in hospital databases [24].

Clinical data is the second most used data type in breast cancer data fusion [26–28]. Each modality encodes different, but ultimately complementary, information. For example, in disease diagnosis, a tumor can be detected on a mammogram, while patient records can contain vital contextual details such as family history or hormone receptor status. Combining these types of data can yield more comprehensive predictions [29]. AI models that can draw on multiple types of data are therefore needed to diagnose diseases accurately. Fig. 8 presents different types of data, both image and non-image.

Figure 8: Breast cancer data types

Breast cancer datasets vary widely in the quality of the data they provide, the number of samples available, and their accompanying technical specifications. Some datasets are limited to radiographic images, while others combine imaging with clinical or molecular information, making them more suitable for multimodal studies. These datasets also vary in their accuracy, organization, and level of documentation. This diversity helps researchers choose the dataset that best suits their research objectives, whether in classification, tumor detection, or predictive molecular profiling. These datasets are typically used to train deep learning models on mammography or histopathology images to detect tumor patterns and to analyze structured clinical records to capture patient risk factors. They also serve as standard benchmarks for evaluating model performance using metrics such as accuracy, AUC, sensitivity, and specificity.
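These benchmark metrics can be computed from a model's predictions as in the minimal sketch below. The labels and scores are hypothetical, the 0.5 decision threshold is an assumption, and AUC is computed with the rank-based (Mann-Whitney) formulation, which equals the area under the ROC curve.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels where 1 = malignant."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    return tp, tn, fp, fn

def auc_rank(y_true, scores):
    """AUC as the probability that a random malignant case scores higher
    than a random benign case (ties count as 0.5)."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical labels (1 = malignant) and model probabilities for eight lesions.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.92, 0.81, 0.40, 0.35, 0.10, 0.55, 0.20, 0.67]
threshold = 0.5  # assumed operating point
y_pred = [int(s >= threshold) for s in scores]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(f"accuracy    = {(tp + tn) / len(y_true):.3f}")
print(f"sensitivity = {tp / (tp + fn):.3f}")  # share of malignant cases detected
print(f"specificity = {tn / (tn + fp):.3f}")  # share of benign cases correctly cleared
print(f"AUC         = {auc_rank(y_true, scores):.3f}")
```

Accuracy, sensitivity, and specificity depend on the chosen threshold, whereas AUC summarizes ranking quality across all thresholds, which is one reason studies often report both.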

This section presents an overview of recent publicly accessible datasets focused on breast cancer research. These datasets are invaluable resources for researchers and healthcare professionals, providing insights and data critical for understanding breast cancer trends, developing new treatments, and improving patient care. Searches in data repositories such as Kaggle, The Cancer Imaging Archive (TCIA), and the UCI Machine Learning Repository were conducted using keywords such as “breast cancer dataset,” “multimodal,” “open access,” and “imaging and clinical.” Access to these datasets supports advancements in breast cancer studies and aids the ongoing battle against this disease.

• TCGA-BRCA (The Cancer Genome Atlas-Breast Invasive Carcinoma):

A large dataset containing diverse data for thousands of patients, including genomic data (RNA-seq, DNA methylation, mutation data), histopathology whole slide images, and clinical metadata. It is well suited to interpretability research [30].

• METABRIC (Molecular Taxonomy of Breast Cancer International Consortium):

This dataset [31] provides various data for many patients; it includes gene expression, copy number variations (CNVs), and clinical outcomes. The METABRIC dataset is ideal for prognosis modeling because of its longitudinal survival data.

• CBIS-DDSM (Curated Breast Imaging Subset of the Digital Database for Screening Mammography):

The CBIS-DDSM [32], established in 2017, is a refined and standardized subset of the DDSM dataset. This dataset includes 1644 cases (with images stored at a bit depth of 16 bits per pixel), providing mammogram images with annotated lesion masks, BI-RADS descriptors, and pathology labels (benign and malignant). It is one of the public datasets that provide mammogram images with annotated lesions.

• Wisconsin Breast Cancer Dataset (WBCD):

WBCD is a well-known tabular dataset containing 30 features computed from digitized images of fine-needle aspiration biopsies, describing cellular characteristics such as texture and perimeter. It has 569 samples, each classified as benign or malignant [33].

• Mammogram Mastery:

Version 1 of this dataset was published on Mendeley Data in April 2024. It contains 745 original mammograms of breast cancer patients and healthy controls, together with 9685 processed images augmented using cropping and rotation to enhance visual diversity. The images were collected from hospitals and clinics in Sulaymaniyah, Iraq, adding distinct demographic and regional dimensions. This dataset is an important resource in medical curricula and provides a robust database for training and testing deep learning models for breast cancer diagnosis [34].

• The Chinese Mammography Database (CMMD):

The CMMD dataset is a valuable resource for multimodal breast cancer research and for evaluating deep learning models in diagnostic tasks. It comprises 3728 high-resolution mammogram images collected from 1775 patients who underwent breast cancer screening. Each image is accompanied by essential clinical metadata, including patient age, lesion type, and molecular subtype annotations. As one of the few publicly available multimodal datasets, CMMD contributes significantly to breast cancer classification and interpretability studies [35].

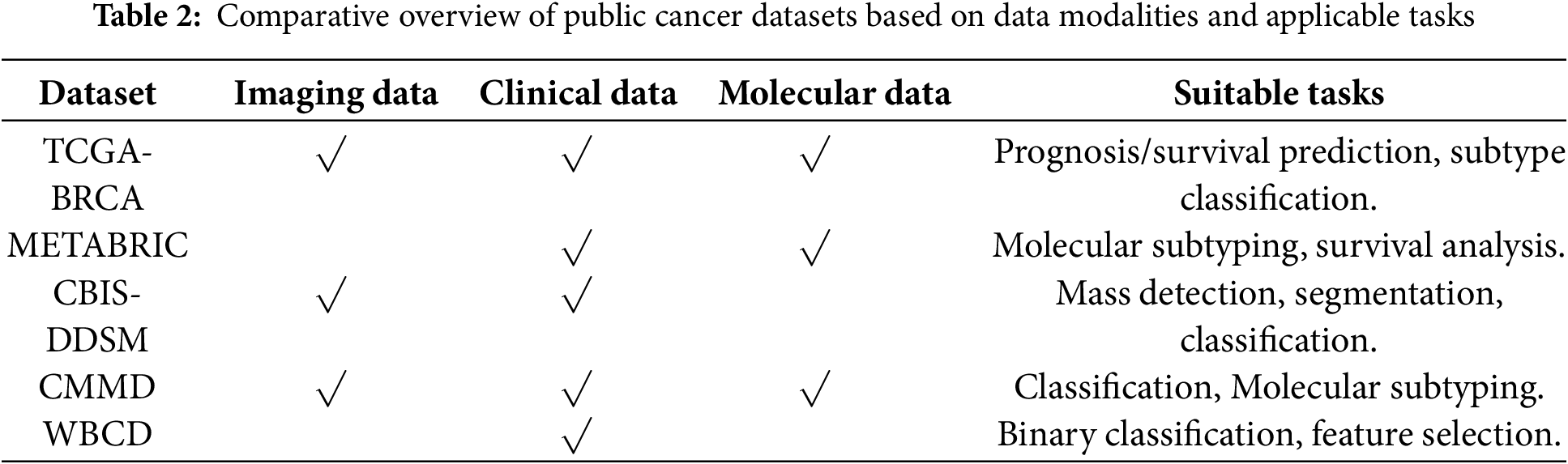

Table 2 classifies breast cancer datasets in terms of the type of data they contain and the key research tasks that can be applied to each dataset.

These datasets are widely used because they are openly accessible, which allows experiments to be conducted without institutional restrictions. Some provide multimodal information for the same patient, enabling integration approaches that mimic clinical decision-making. Their relatively large sample sizes support generalizability, and because they are derived from real-world sources, they provide a reliable basis for experimentation.

Deep learning and machine learning are branches of artificial intelligence that have gained significant importance in recent years [36]. These models have evolved into essential tools for analyzing complex medical data because they can learn accurate patterns from large amounts of data. Deep learning techniques are particularly effective with large datasets, whereas traditional machine learning techniques are often used with smaller ones [37]. Both are useful at multiple stages of the breast cancer diagnosis process: they can detect lesions on mammograms, analyze clinical indicators, and combine multiple models to improve prediction accuracy. Deep learning techniques can extract complex representations from images [38], which helps reduce the burden on human experts. These models can also support explanatory modeling and the analysis of relationships between clinical variables. They have become the basis for developing reliable diagnostic systems for breast cancer [39].

Data fusion has been combined with deep learning for several years in many fields, and deep learning-based multimodal data fusion approaches have achieved significant success in various applications [29]. This section outlines the deep learning and machine learning techniques most widely used with multimodal data in breast cancer diagnosis.

• Convolutional neural network—Image modality

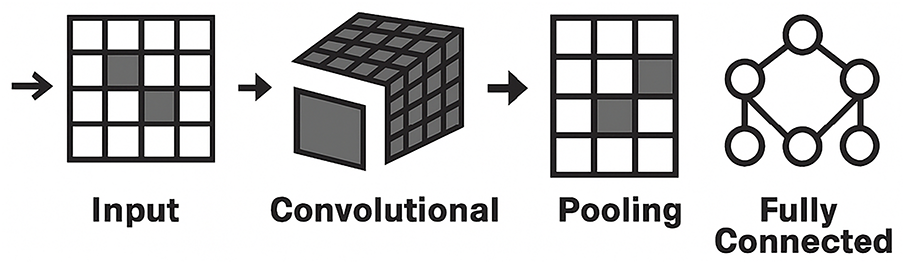

CNNs are among the most popular and widely used DL networks and have proven highly effective in medical image analysis. They are designed to automatically detect and learn features from raw image data, which makes them well suited to tasks such as breast cancer diagnosis from mammograms. Fig. 9 presents the CNN architecture: multiple convolutional layers with ReLU activations, followed by pooling layers and fully connected layers. A CNN applies filters that generate a set of linear activations, which are then passed through non-linear functions to reduce the complexity of the input data [40]. A convolutional layer scans the image with small filters to detect patterns such as edges and textures. The pooling layer then down-samples the activation map into a smaller matrix; by reducing complexity, it helps mitigate overfitting [41].

Figure 9: CNN architecture

Finally, the fully connected layer combines all the extracted features to make a prediction. AlexNet, VGGNet, and ResNet are examples of CNN variants that improve accuracy and efficiency by modifying the standard CNN architecture [42].
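To make the layer sequence above concrete, here is a minimal pure-Python sketch of one CNN forward pass (convolution, ReLU, max pooling, fully connected output) on a toy 6 × 6 image. The kernel and dense weights are hand-picked, hypothetical values rather than learned parameters; real systems would use a deep learning framework.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid 2D cross-correlation (what DL libraries call 'convolution'), stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(x: Matrix) -> Matrix:
    """Element-wise non-linearity applied to the activation map."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool2d(x: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling: each size x size window is reduced to its maximum."""
    return [
        [
            max(x[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(x[0]) - size + 1, size)
        ]
        for i in range(0, len(x) - size + 1, size)
    ]


def dense(features: List[float], weights: List[float], bias: float) -> float:
    """Fully connected output unit producing a single score."""
    return sum(f * w for f, w in zip(features, weights)) + bias


# Toy 6x6 "image": dark left half, bright right half (a vertical edge).
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
# Hypothetical, hand-set vertical-edge detector.
kernel = [[-1.0, 0.0, 1.0] for _ in range(3)]

fmap = relu(conv2d(image, kernel))   # 4x4 activation map
pooled = max_pool2d(fmap)            # 2x2 after down-sampling
flat = [v for row in pooled for v in row]
score = dense(flat, [0.25] * len(flat), bias=-1.0)
```

The edge kernel responds only where intensity changes from left to right, and pooling keeps the strongest response in each 2 × 2 window while halving each spatial dimension.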

CNNs are widely utilized to extract features from mammogram images [43], producing deep representations that capture subtle spatial patterns such as masses and calcifications, which are then combined with clinical or molecular features, improving classification accuracy.

• Multi-layer perceptron—Clinical modality

A multilayer perceptron (MLP) is used for tabular data or any task based on numerical or categorical data [42]. Because of its flexible architecture, the MLP is a fundamental building block in deep learning and is often applied to medical record classification and fraud detection [42]. Its ability to learn patterns from data depends on the number of neurons and the number of layers. An MLP comprises an input layer that receives the input features, with one neuron per feature; one or more fully connected hidden layers between the input and output layers; and an output layer. Each hidden layer receives the outputs of the previous layer and applies an activation function, which allows the model to learn patterns. The output layer produces the network's output through its own activation function.

MLP is characterized by its ability to capture non-linear relationships between clinical variables. In breast cancer diagnosis, MLP is often utilized to extract feature representations from tabular data such as age, medical history, and vital signs. In multimodal studies, MLP outputs are combined with image representations to form a common vector. MLP can also be used as a classifier in a multimodal breast cancer classification system [44].
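The following sketch, assuming hypothetical, already-normalized clinical features and hand-set weights, shows how an MLP turns a tabular record into a hidden embedding (the vector that multimodal models fuse with image features) and, when used as a classifier, a class probability.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(x: Vector, W: Matrix, b: Vector) -> Vector:
    """Fully connected layer y = W x + b (one row of W per output neuron)."""
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def mlp_forward(x: Vector, W1: Matrix, b1: Vector, W2: Matrix, b2: Vector):
    """Return (hidden embedding, probability). The embedding is what a multimodal
    model would concatenate with image features; the probability is the MLP's own
    prediction when it is used directly as a classifier."""
    hidden = relu(linear(x, W1, b1))
    logit = linear(hidden, W2, b2)[0]
    return hidden, sigmoid(logit)


# Hypothetical normalized features: [age (scaled), family history, prior biopsy].
x = [0.6, 1.0, 0.0]
W1 = [[0.5, 1.0, 0.2], [-0.3, 0.4, 0.8]]   # 3 inputs -> 2 hidden neurons
b1 = [0.0, 0.1]
W2 = [[1.2, -0.7]]                          # 2 hidden -> 1 output
b2 = [-0.5]

embedding, prob = mlp_forward(x, W1, b1, W2, b2)
```

In a trained model the weights would be learned by backpropagation; only the data flow is illustrated here.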

• Autoencoders—Fusion enhancement

An autoencoder learns to reconstruct images, text, and other unlabeled data from compressed versions of them. An autoencoder consists of three parts [45]: the encoder, which compresses the input into a lower-dimensional representation; the code (bottleneck), which holds this compressed representation; and the decoder, which reconstructs the original input at its original dimension from the code. When combining different types of data, an autoencoder can reduce the dimensionality of each modality's features before or after fusion, making the fusion process more efficient. It can also serve as a preprocessing layer that preserves the underlying information while unifying the size of the representations.
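A minimal sketch of the encoder, code, and decoder idea, assuming the simplest possible case: a linear autoencoder with a one-unit bottleneck, trained by plain gradient descent on toy 2-D features that lie on a line. Practical autoencoders are deep, non-linear, and trained with a framework; the data, initialization, learning rate, and step count are illustrative assumptions.

```python
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def reconstruct(x: Vector, enc: Vector, dec: Vector) -> Vector:
    """Encoder compresses x into a 1-D code z; decoder maps z back to input space."""
    z = dot(enc, x)               # bottleneck (compressed representation)
    return [d * z for d in dec]   # reconstruction at the original dimension


def mse(data: List[Vector], enc: Vector, dec: Vector) -> float:
    """Mean squared reconstruction error over the dataset."""
    return sum(
        sum((xh - xi) ** 2 for xh, xi in zip(reconstruct(x, enc, dec), x)) for x in data
    ) / len(data)


def train_linear_autoencoder(data: List[Vector], lr: float = 0.01,
                             steps: int = 2000) -> Tuple[Vector, Vector]:
    dim, n = len(data[0]), len(data)
    enc, dec = [0.1] * dim, [0.1] * dim          # small fixed initialization
    for _ in range(steps):
        g_enc, g_dec = [0.0] * dim, [0.0] * dim
        for x in data:
            z = dot(enc, x)
            r = [d * z - xi for d, xi in zip(dec, x)]   # reconstruction residual
            dr = dot(dec, r)
            for k in range(dim):
                g_dec[k] += 2.0 * r[k] * z / n          # dLoss/d dec_k
                g_enc[k] += 2.0 * dr * x[k] / n         # dLoss/d enc_k
        enc = [e - lr * g for e, g in zip(enc, g_enc)]
        dec = [d - lr * g for d, g in zip(dec, g_dec)]
    return enc, dec


# Toy 2-D features lying on a 1-D line, so a one-unit bottleneck suffices.
data = [[t, 2.0 * t] for t in (-1.0, -0.5, 0.5, 1.0)]
initial_loss = mse(data, [0.1, 0.1], [0.1, 0.1])
enc, dec = train_linear_autoencoder(data)
final_loss = mse(data, enc, dec)
```

Because the toy data are intrinsically one-dimensional, the reconstruction error falls close to zero; with real multimodal features, the code size trades compression against information loss.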

• Vision Transformer (ViT)—Image modality

ViT is employed as an alternative to CNNs, especially for high-resolution images. The ViT model divides the image into non-overlapping patches, which are linearly projected into embedding vectors. A positional encoding is added to each patch embedding, and the resulting sequence is passed through Transformer encoder layers comprising self-attention, layer normalization, and MLP blocks.

In the field of multi-modality fusion, ViT can be used as an image feature extractor, producing deep representations that can be combined with other types of data, enhancing the model’s predictive accuracy and improving performance in diagnostic tasks [46].
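The patch-splitting step can be sketched as follows. The function name `patchify` is ours, the input is assumed to be a single-channel image, and the sketch stops before the linear projection and positional encoding that a real ViT applies to each flattened patch.

```python
from typing import List

Matrix = List[List[float]]


def patchify(image: Matrix, patch: int) -> List[List[float]]:
    """Split an H x W image into non-overlapping patch x patch tiles, each flattened
    row-major into a vector (the input to ViT's linear patch embedding)."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    return [
        [image[i + a][j + b] for a in range(patch) for b in range(patch)]
        for i in range(0, h, patch)   # patch rows, top to bottom
        for j in range(0, w, patch)   # patch columns, left to right
    ]


# Small example: a 4x4 image split into four 2x2 patches.
img = [[float(4 * r + c) for c in range(4)] for r in range(4)]
tokens = patchify(img, 2)

# A 224x224 grayscale mammogram crop with 16x16 patches yields a 14x14 grid of tokens.
n_patches = len(patchify([[0.0] * 224 for _ in range(224)], 16))
```

The number of tokens grows quadratically with image side length, which is one reason patch size matters for high-resolution mammograms.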

• RNN/Long Short-Term Memory (LSTM)

LSTM networks are commonly used to process sequential or temporal data, such as medical records or vital signs. Through their gating mechanisms, these networks retain important information and discard unnecessary information across time steps, which makes them effective at capturing complex temporal patterns. In multimodal fusion, an LSTM extracts a temporal representation from sequential medical data, which is then combined with image features to enhance the model's predictive ability [47].
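To illustrate the gating idea, here is a scalar (hidden size 1) LSTM cell in pure Python. The parameters and the input series are hypothetical; a real model uses vector-valued gates with learned weights.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x: float, h: float, c: float,
              p: Dict[str, Tuple[float, float, float]]) -> Tuple[float, float]:
    """One step of a scalar LSTM cell; p maps each gate to (w_x, w_h, bias)."""
    def pre(gate: str) -> float:
        wx, wh, b = p[gate]
        return wx * x + wh * h + b

    i = sigmoid(pre("input"))    # how much new information to write
    f = sigmoid(pre("forget"))   # how much of the old cell state to keep
    o = sigmoid(pre("output"))   # how much of the cell state to expose
    g = math.tanh(pre("cell"))   # candidate new content
    c_new = f * c + i * g
    h_new = o * math.tanh(c_new)
    return h_new, c_new


def encode_sequence(xs: List[float], p) -> float:
    """Run the cell over a sequence and return the final hidden state: the temporal
    representation that would be fused with image features."""
    h, c = 0.0, 0.0
    for x in xs:
        h, c = lstm_step(x, h, c, p)
    return h


# Hypothetical parameters and normalized follow-up measurements.
params = {
    "input": (0.8, 0.1, 0.0),
    "forget": (0.2, 0.1, 1.0),
    "output": (0.5, 0.2, 0.0),
    "cell": (1.0, 0.3, 0.0),
}
series = [0.1, 0.4, 0.35, 0.9]
representation = encode_sequence(series, params)
```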

3.4 Fusion Strategies and Techniques

Multimodal data fusion produces a unified, information-rich representation by combining information from multiple modalities or sources for tasks such as classification and interpretation. Each modality carries different information, so fusing modalities combines the strengths of each and compensates for their individual weaknesses [29]. A single modality, such as imaging, provides specific but incomplete information, which can limit the accuracy of the decision [48]. Different modalities can describe the same object in different ways, and some tasks require a comprehensive understanding that only multiple data sources can provide. Because information from varied sources is often complementary, data fusion helps discover relationships and properties that cannot be detected using a single modality, and it improves accuracy and interpretability [29].

Fusion plays an important role in the medical field, especially in breast cancer diagnosis. Integrating radiological, clinical, and laboratory information improves classification and prediction accuracy, increases the reliability of results, and enhances interpretability. Some complex relationships between factors are not apparent when each modality is analyzed separately; multimodal fusion can reveal these relationships and interactions so that clinical decisions can be based on them. In addition, multimodal fusion can improve generalization across different populations, which enhances the quality of healthcare.

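• Early fusion: Raw or pre-processed features are directly concatenated [49]. Mathematically, this can be expressed as Eq. (1):

$$z = [x_1; x_2; \ldots; x_M], \qquad \hat{y} = f(z) \tag{1}$$

where $x_m$ is the raw or pre-processed feature vector of modality $m$, $M$ is the number of modalities, $[\,\cdot\,;\,\cdot\,]$ denotes concatenation, and $f$ is the predictive model.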

This type of fusion is fast, simple to implement, and useful when all modalities are correlated [25]. On the other hand, it is highly sensitive to differences in the nature of the data and struggles with heterogeneous data.

In breast cancer detection, early fusion can be used when the modalities are similar enough to be unified, for example, when merging mammogram images with clinical features into a single representation, which helps reveal early relationships between data types.

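• Intermediate fusion: This approach fuses the features of each modality after independent processing [25]. Each modality $m$ is first transformed by an encoder $g_m(\cdot)$, and the resulting representations are then fused, Eq. (2):

$$h_m = g_m(x_m), \qquad z = F(h_1, h_2, \ldots, h_M), \qquad \hat{y} = f(z) \tag{2}$$

where $h_m$ is the learned representation of modality $m$ and $F$ is the fusion operator (e.g., concatenation, attention, or tensor fusion).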

Intermediate fusion preserves the representation of each data modality. Because each type of data is processed and learned before fusion, it balances integration and flexibility and is suitable for diverse medical data. Although effective, it requires careful alignment between the extracted representations and consumes more computational resources. The most widely used techniques in this strategy are concatenation, attention-based fusion, cross-modal transformers, and tensor fusion. This strategy is commonly used in breast cancer diagnosis [50] because it allows each type of data to be best represented before fusion, which in turn improves prediction performance.

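• Late fusion: Each modality yields its own prediction $\hat{y}_m = f_m(x_m)$, and these predictions are aggregated into the final decision, Eq. (3):

$$\hat{y} = A(\hat{y}_1, \hat{y}_2, \ldots, \hat{y}_M) \tag{3}$$

where $f_m$ is the model trained on modality $m$ and $A$ is an aggregation rule such as averaging, majority voting, or a stacking classifier.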

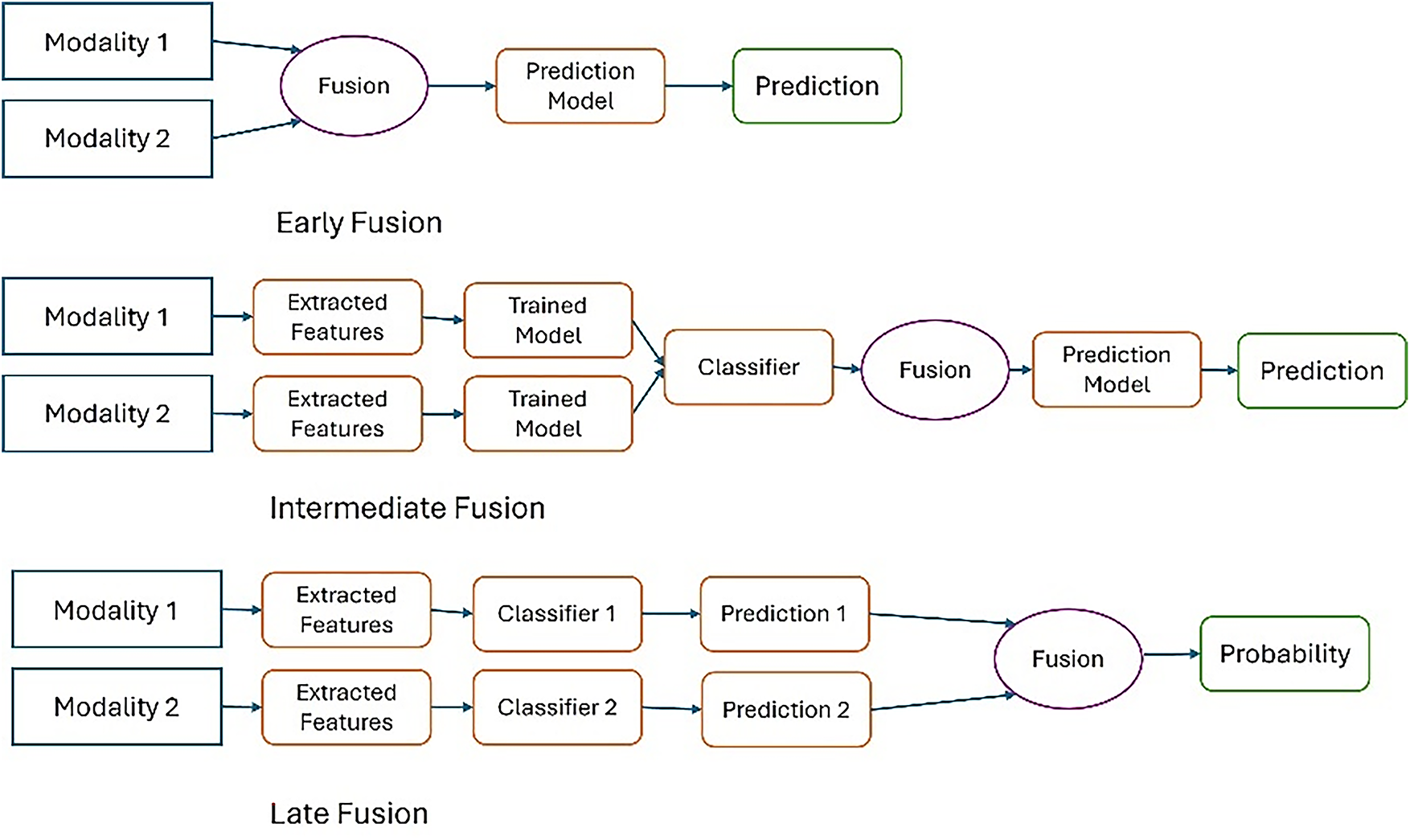

This strategy is easier to implement than the previous ones because a different model can be used for each modality, but it cannot capture interactions between modalities [51], since the final decision depends only on the combined outputs [52]. Average pooling, majority voting, and stacking classifiers are the common techniques used in late fusion. Fig. 10 illustrates how these strategies differ in where fusion occurs in the data processing pipeline. In early fusion, raw or preprocessed data are fused at the input stage, before learning. In intermediate fusion, features are first extracted from each modality and then fused at an intermediate stage of the model. In late fusion, the outputs of the sub-models are fused at the final stage, just before the final decision is made.

Figure 10: Fusion strategies in multimodal learning

This taxonomy is widely used in medical applications, especially in breast cancer diagnosis, where the appropriate fusion strategy is selected according to the type of data and its degree of heterogeneity; this choice plays a major role in the accuracy of medical models.
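The three strategies can be contrasted in one small sketch, assuming toy per-patient feature vectors and hand-set encoder weights (all hypothetical). Early fusion standardizes and concatenates the modalities, following Eq. (1) with z-scoring as an assumed preprocessing step; intermediate fusion encodes each modality separately before concatenating, following Eq. (2); and late fusion combines per-modality predictions by averaging or majority voting.

```python
from collections import Counter
from itertools import chain
from statistics import fmean, pstdev
from typing import Callable, Dict, List

Vector = List[float]


def zscore_columns(rows: List[Vector]) -> List[Vector]:
    """Standardize each feature across patients (constant columns are left centred)."""
    stats = [(fmean(col), pstdev(col) or 1.0) for col in zip(*rows)]
    return [[(v - m) / s for v, (m, s) in zip(row, stats)] for row in rows]


def early_fusion(modalities: List[List[Vector]]) -> List[Vector]:
    """Eq. (1): concatenate each patient's pre-processed vectors from all modalities."""
    normalized = [zscore_columns(m) for m in modalities]
    return [list(chain.from_iterable(parts)) for parts in zip(*normalized)]


def linear_relu(weights: List[Vector]) -> Callable[[Vector], Vector]:
    """Hypothetical modality encoder g_m: one linear layer (no bias) followed by ReLU."""
    def encode(x: Vector) -> Vector:
        return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]
    return encode


def intermediate_fusion(inputs: Dict[str, Vector],
                        encoders: Dict[str, Callable[[Vector], Vector]]) -> Vector:
    """Eq. (2): h_m = g_m(x_m) for each modality, then F = concatenation."""
    fused: Vector = []
    for name in sorted(inputs):   # fixed modality order
        fused.extend(encoders[name](inputs[name]))
    return fused


def late_fusion_average(probs: Vector, threshold: float = 0.5) -> int:
    """Late fusion: average per-modality probabilities, then threshold."""
    return int(fmean(probs) >= threshold)


def late_fusion_vote(labels: List[int]) -> int:
    """Late fusion by majority voting; ties go to the smaller label (arbitrary rule)."""
    counts = Counter(labels)
    top = max(counts.values())
    return min(label for label, c in counts.items() if c == top)


image = [[0.2, 120.0], [0.4, 80.0], [0.6, 100.0]]     # hypothetical image features
clinical = [[45.0, 1.0], [60.0, 0.0], [52.0, 1.0]]   # hypothetical age, family history
early = early_fusion([image, clinical])               # 3 patients x 4 features

encoders = {
    "image": linear_relu([[0.5, -0.2, 0.1, 0.3], [0.1, 0.4, -0.3, 0.2]]),  # 4 -> 2
    "clinical": linear_relu([[1.0, 0.5], [-0.5, 1.0], [0.3, 0.3]]),        # 2 -> 3
}
z = intermediate_fusion({"image": [0.9, 0.1, 0.4, 0.7], "clinical": [0.6, 1.0]}, encoders)

probs = [0.82, 0.40, 0.61]   # hypothetical mammogram, ultrasound, clinical models
decision_avg = late_fusion_average(probs)
decision_vote = late_fusion_vote([int(p >= 0.5) for p in probs])
```

Only the intermediate variant has separate, modality-specific parameters before the fusion point, which is what lets each modality be "best represented" before integration.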

3.4.2 Advanced Fusion Techniques

Fusion techniques define how different modalities are integrated at the representation level. Multimodal data fusion techniques are becoming increasingly significant in breast cancer detection because they can enhance diagnostic accuracy and provide comprehensive insights. By integrating various types of data and analytical methods, they provide a more robust framework for detecting and characterizing breast cancer. Advanced techniques rely on learning mechanisms such as attention and generative models, which allow the model to focus on important information and improve performance.

• Operation-based fusion

Operation-based fusion methods cannot capture the complex interaction between modalities, but they are flexible and simple [53]. These methods combine feature vectors by using simple mathematical operations such as the following:

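1. Concatenation combines the features of multiple modalities into a single representation, Eq. (4); it is a simple technique [54].

$$z = [h_1; h_2] \in \mathbb{R}^{d_1 + d_2} \tag{4}$$

where $h_1 \in \mathbb{R}^{d_1}$ and $h_2 \in \mathbb{R}^{d_2}$ are the feature vectors of the two modalities.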

This mechanism is suitable as a starting point for multimodal systems in the field of breast cancer because it does not require a complex design.

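2. Element-wise addition adds the feature vectors element by element and therefore requires them to have the same dimension, as shown in Eq. (5).

$$z = h_1 + h_2, \qquad z_i = h_{1,i} + h_{2,i}, \qquad h_1, h_2 \in \mathbb{R}^{d} \tag{5}$$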

This method is easy and fast to compute; it adds no learnable weights and simply sums the feature values. It is useful when the features are equally important. Despite its simplicity, the technique has limitations: it assumes that the vectors of all modalities lie in the same representation space, and it ignores the relative importance of each modality by treating all values equally. These limitations make it unsuitable for complex multimodal breast cancer data.

3. Element-wise multiplication multiplies the feature vectors element by element, as expressed in Eq. (6), and thereby emphasizes interactions between them.

$$z = h_1 \odot h_2, \qquad z_i = h_{1,i} \, h_{2,i} \tag{6}$$

This technique is used when the modalities contain complementary information. For example, high-density areas on a mammogram can interact with specific clinical factors, such as age, to enhance prediction accuracy. However, it is less useful when some values are small or zero, because the product suppresses the corresponding information from the other modality, and it is not suitable for heterogeneous data.
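The three operations of Eqs. (4)–(6) reduce to a few lines; the two embeddings below are hypothetical.

```python
from typing import List

Vector = List[float]


def concat(h1: Vector, h2: Vector) -> Vector:
    """Eq. (4): dimensions add up (d1 + d2); no constraint on the sizes."""
    return h1 + h2


def add(h1: Vector, h2: Vector) -> Vector:
    """Eq. (5): element-wise sum; needs equal dimensions, no learnable weights."""
    if len(h1) != len(h2):
        raise ValueError("element-wise addition needs vectors of equal length")
    return [a + b for a, b in zip(h1, h2)]


def multiply(h1: Vector, h2: Vector) -> Vector:
    """Eq. (6): Hadamard product; a zero in either vector zeroes the fused entry."""
    if len(h1) != len(h2):
        raise ValueError("element-wise multiplication needs vectors of equal length")
    return [a * b for a, b in zip(h1, h2)]


h_img = [0.5, 2.0, 0.0]    # hypothetical image embedding
h_clin = [1.0, 0.5, 3.0]   # hypothetical clinical embedding

fused_concat = concat(h_img, h_clin)
fused_add = add(h_img, h_clin)
fused_mul = multiply(h_img, h_clin)
```

The third entry illustrates the limitation noted above: the clinical value 3.0 survives addition but is erased by multiplication because the corresponding image feature is zero.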

• Attention-based fusion

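Attention is a mechanism that focuses on important regions of the input data and suppresses less important features [55]. It assigns learnable weights to inputs according to their relevance to the task and prioritizes them accordingly, as shown in Eq. (7).

$$\alpha_m = \frac{\exp(\omega^{\top} h_m)}{\sum_{k=1}^{M} \exp(\omega^{\top} h_k)}, \qquad z = \sum_{m=1}^{M} \alpha_m h_m \tag{7}$$

where ω is a trainable parameter vector, and $\alpha_m$ is the attention weight assigned to the representation $h_m$ of modality $m$.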

In this technique, features from various modalities are combined selectively. Unlike concatenation, which treats all features equally, attention-based fusion learns feature weights and combines the features according to their importance for the prediction task. Two common forms are used. Intra-modality self-attention weighs the importance of the features of the fused representation to better capture the relationships between them, while inter-modality cross-attention uses the features of one modality to direct attention in another. In breast cancer diagnosis, attention-based fusion is among the most advanced and effective fusion techniques. For example, radiographic images contain fine visual details that complement clinical information; attention allows the model to focus on the image regions most closely associated with disease while taking supporting clinical factors into account, which improves integration and increases classification accuracy.
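A minimal sketch of both attention variants, assuming pure Python and hypothetical embeddings: `modality_attention` implements the modality-level weighting of Eq. (7), and `cross_attention` implements single-head scaled dot-product cross-attention, with the learned query, key, and value projections omitted for brevity.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def softmax(scores: Vector) -> Vector:
    m = max(scores)                            # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def modality_attention(hs: List[Vector], omega: Vector) -> Tuple[Vector, Vector]:
    """Eq. (7): alpha_m = softmax over m of omega^T h_m; z = sum_m alpha_m h_m.
    All modality embeddings must share one dimension."""
    alphas = softmax([dot(omega, h) for h in hs])
    z = [sum(a * h[k] for a, h in zip(alphas, hs)) for k in range(len(hs[0]))]
    return alphas, z


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Tokens of one modality (queries) attend to tokens of another (keys/values)."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        weights = softmax([dot(q, k) * scale for k in keys])
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


# Hypothetical modality embeddings (image, clinical) and trainable vector omega.
alphas, z = modality_attention([[1.0, 0.0], [0.0, 1.0]], omega=[2.0, 0.0])

img_tokens = [[1.0, 0.0], [0.0, 1.0]]                 # queries from image patches
clin_tokens = [[0.5, 0.5], [1.0, -1.0], [0.0, 2.0]]   # keys/values from clinical data
attended = cross_attention(img_tokens, clin_tokens, clin_tokens)
```

Each output of `cross_attention` is a convex combination of the clinical value vectors, with mixing weights chosen by each image token; the weights themselves are what attention-based explanations inspect.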

• Tensor-based fusion

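Rather than merging two feature vectors linearly, tensor fusion forms their outer product, generating a multi-dimensional feature space in which every possible combination of features across modalities is explicitly modeled [53]. Because the full tensor grows multiplicatively with the feature dimensions, its weight tensor is often approximated by a low-rank decomposition. Eq. (8) expresses this fusion method.

$$\mathcal{Z} = h_1 \otimes h_2 \otimes \cdots \otimes h_M, \qquad z = \langle \mathcal{W}, \mathcal{Z} \rangle = \sum_{r=1}^{R} \prod_{m=1}^{M} \left( w_m^{(r)\top} h_m \right) \tag{8}$$

where:

• $w_m^{(r)}$ is the weight vector of modality $m$ in the $r$-th rank component, so that $\mathcal{W} = \sum_{r=1}^{R} w_1^{(r)} \otimes \cdots \otimes w_M^{(r)}$, and $z$ is one output unit of the fused representation.

• r is the index of the rank component.

• R is the rank of the tensor decomposition.

• $\top$ denotes the transpose operator, so $w_m^{(r)\top} h_m$ is the inner product between the weight vector and the modality feature vector [57].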

This process allows the network to capture richer and more complex relationships between modalities compared to simple fusion methods (such as concatenation).

This type of technique is usually used with CNN or Transformer networks. It is very suitable when the data is from multiple sources, as in the field of breast cancer (mammogram images, genomic data, and clinical data), as it is one of the techniques capable of dealing with multidimensional data [58].
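The sketch below builds the full outer-product tensor for two hypothetical modality embeddings and checks that contracting it with a rank-R weight tensor gives the same score as the low-rank form of Eq. (8), which never materializes the tensor. Weights and dimensions are illustrative assumptions.

```python
from itertools import product
from typing import List, Tuple

Vector = List[float]
Factor = Tuple[Vector, Vector]   # (w_1^(r), w_2^(r)) for one rank component


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def outer(h1: Vector, h2: Vector) -> List[Vector]:
    """Full tensor fusion for two modalities: every pairwise feature product."""
    return [[a * b for b in h2] for a in h1]


def weight_from_factors(factors: List[Factor]) -> List[Vector]:
    """W = sum_r w1_r (outer) w2_r, the rank-R weight tensor implied by the factors."""
    d1, d2 = len(factors[0][0]), len(factors[0][1])
    W = [[0.0] * d2 for _ in range(d1)]
    for w1, w2 in factors:
        for i, j in product(range(d1), range(d2)):
            W[i][j] += w1[i] * w2[j]
    return W


def full_tensor_score(W: List[Vector], Z: List[Vector]) -> float:
    """<W, Z>: contract the weight tensor with the fused d1 x d2 tensor."""
    return sum(W[i][j] * Z[i][j] for i, j in product(range(len(W)), range(len(W[0]))))


def low_rank_score(h1: Vector, h2: Vector, factors: List[Factor]) -> float:
    """Eq. (8) with M = 2: sum_r (w1_r^T h1)(w2_r^T h2), without building Z."""
    return sum(dot(w1, h1) * dot(w2, h2) for w1, w2 in factors)


h_img = [1.0, 0.5, -0.2]   # hypothetical image embedding (d1 = 3)
h_gen = [0.3, 2.0]         # hypothetical genomic embedding (d2 = 2)
factors = [([0.2, -0.1, 0.4], [1.0, 0.5]),
           ([0.0, 0.3, 0.1], [-0.2, 0.7])]   # R = 2

Z = outer(h_img, h_gen)
full = full_tensor_score(weight_from_factors(factors), Z)
low_rank = low_rank_score(h_img, h_gen, factors)
```

The low-rank form costs roughly R·(d1 + d2) multiplications per output unit instead of d1·d2, which is why it scales better as more modalities or larger embeddings are added.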

• Graph-based fusion

In this approach, each characteristic is represented as a graph node. Nodes are connected by edges based on feature similarity, and each node's weights are updated according to its significance using a model similar to a graph neural network [53]. In breast cancer diagnosis, this approach can flexibly represent the relationships between clinical factors and radiographic patterns, and it opens the way to in-depth explanations at the relationship level [59].
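A minimal sketch of the idea, assuming cosine similarity with a fixed threshold for edge construction and a single weight-free mean-aggregation step in place of a trained graph neural network; the node embeddings are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb) if na and nb else 0.0


def build_graph(nodes: List[Vector], threshold: float) -> List[List[int]]:
    """Adjacency lists: connect nodes i and j when their cosine similarity
    reaches the threshold."""
    n = len(nodes)
    return [
        [j for j in range(n) if j != i and cosine(nodes[i], nodes[j]) >= threshold]
        for i in range(n)
    ]


def message_passing(nodes: List[Vector], adj: List[List[int]]) -> List[Vector]:
    """One GNN-style update: each node becomes the mean of itself and its neighbours
    (a simplified, weight-free form of graph-convolution aggregation)."""
    out = []
    for i, h in enumerate(nodes):
        group = [h] + [nodes[j] for j in adj[i]]
        out.append([sum(v[k] for v in group) / len(group) for k in range(len(h))])
    return out


# Hypothetical node embeddings: two imaging-derived nodes and one clinical node.
nodes = [[1.0, 0.1], [0.9, 0.2], [0.0, 1.0]]
adj = build_graph(nodes, threshold=0.9)
updated = message_passing(nodes, adj)
```

The adjacency lists themselves are interpretable: they record which characteristics the model treats as related, which is the relationship-level explanation mentioned above.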

Fusion techniques are the foundation for building highly accurate and reliable multimodal diagnostic systems. Some fusion methods, such as concatenation, provide simple, easy-to-implement solutions, while advanced techniques, such as attention- and graph-based fusion, are better able to represent complex interactions between different data types. In breast cancer diagnosis, these techniques improve the quality of predictions and support clinical decisions by better integrating different data sources. Choosing the appropriate fusion technique is therefore a critical factor in the performance of medical applications.

The role of fusion techniques goes beyond improving model performance to enhancing explainability, as the fusion method largely determines the type and form of possible explanations. For example, attention techniques allow for highlighting the contribution of each type of data to the final prediction, which helps to understand decisions and increase confidence.

3.5 Explainable Artificial Intelligence

Deep learning achieved remarkable success in various fields between 2012 and 2015. However, these models are opaque, which raises concerns in sensitive fields such as healthcare and defense systems. In 2015, the Defense Advanced Research Projects Agency (DARPA) emphasized the importance of understandable and reliable AI systems, and in 2017 the agency launched its XAI program [60]. XAI is a branch of artificial intelligence designed to make machine learning models and their decisions understandable to humans [61]. The program's goal is to assist end users, such as operators and physicians, by bridging the gap between high accuracy and understandable interpretation.

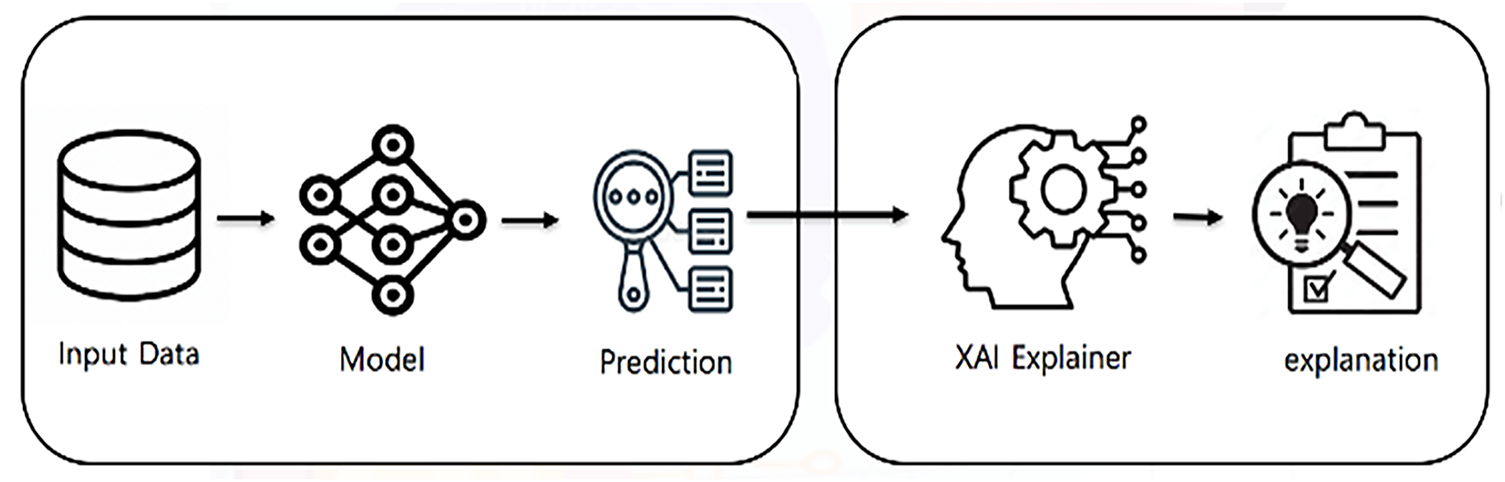

Although deep learning models have demonstrated impressive performance in tasks such as breast cancer classification, one major drawback is their lack of interpretability. Users must understand the reasoning behind a model's decision before they can trust it in critical settings. XAI techniques address this issue by providing insights into how a model makes predictions. Fig. 11 illustrates the general framework of XAI. XAI techniques enable end users to understand these systems, which in turn enhances trust and supports decision-making. The XAI pipeline consists of three core components: input data, a predictive model, and an explanation engine.

Figure 11: General framework of XAI

Explanations are essentially additional metadata provided by an AI model that illuminate a particular decision or the overall internal workings of the model [62]. The primary aim of XAI is to clarify the model's inner processes by explaining its methods, procedures, and results in a way that users can understand. XAI is therefore often referred to as the “white-box” approach [63]. In the medical field, particularly in breast cancer diagnosis, XAI plays a crucial role in establishing trust between AI systems and healthcare practitioners by helping ensure that AI algorithms are trustworthy and their decisions reliable [62]. It also increases transparency by making the inner workings of AI models understandable to radiologists. XAI can help expose bias and unfairness in AI algorithms and thus prevent discriminatory outcomes, which is especially critical in breast cancer diagnosis. High predictive accuracy alone is not enough for medical diagnosis; clinicians must also understand “why” and “how” a decision was made [64]. XAI explanations allow healthcare practitioners to assess the reliability of diagnostic models [65], and for patients, explaining the model's decisions enhances confidence and increases satisfaction with the treatment plan.

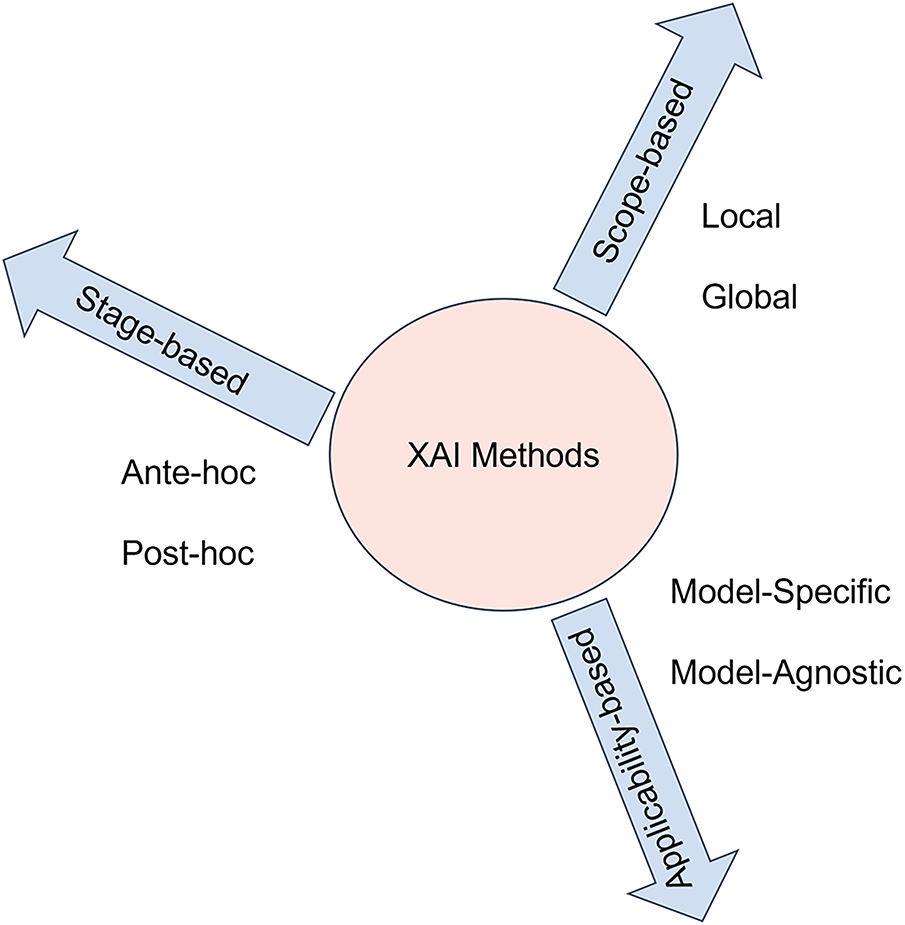

In sensitive medical applications such as breast cancer diagnosis, clinicians need to understand why a model makes a particular decision to assess its clinical reliability. Interpretation techniques support clinical decision-making and enhance transparency. Explanation methods in AI research are generally categorized by the scope of explanation, the stage of application, and model applicability [66]. Each dimension reflects a different aspect of how explanations are produced and how well they reveal the behavior of the model.

Scope-based methods focus on the extent of the explanation, based either on individual predictions or the entire model, and are divided into local and global explanation methods. Global methods provide a complete overview of the model’s behavior and focus on general rules that are learned by a model. These methods explain how the most important features affect the model in general. However, local methods work at the individual level, explaining how the model arrived at a specific prediction [67].

Stage-based explanation methods show when the explanation occurs in relation to the model’s training process. This type of explanation is divided into ante-hoc, where the model is inherently interpretable, and post-hoc, where explanations are generated after the model makes a prediction [66].

Model-applicability includes model-agnostic and model-specific methods. Model-agnostic methods are designed to be usable with any ML model and provide explanations that are not related to the internal working mechanism of the model, whereas model-specific methods rely on understanding the internal structure of the model and are specific to a particular type of model [68]. Fig. 12 presents a visual taxonomy summarizing this classification.

Figure 12: Taxonomical classification of explainable AI methods

3.5.3 Popular XAI Techniques in Breast Cancer Diagnosis

The most common XAI methods in breast cancer research are SHAP, LIME, and Grad-CAM [69].

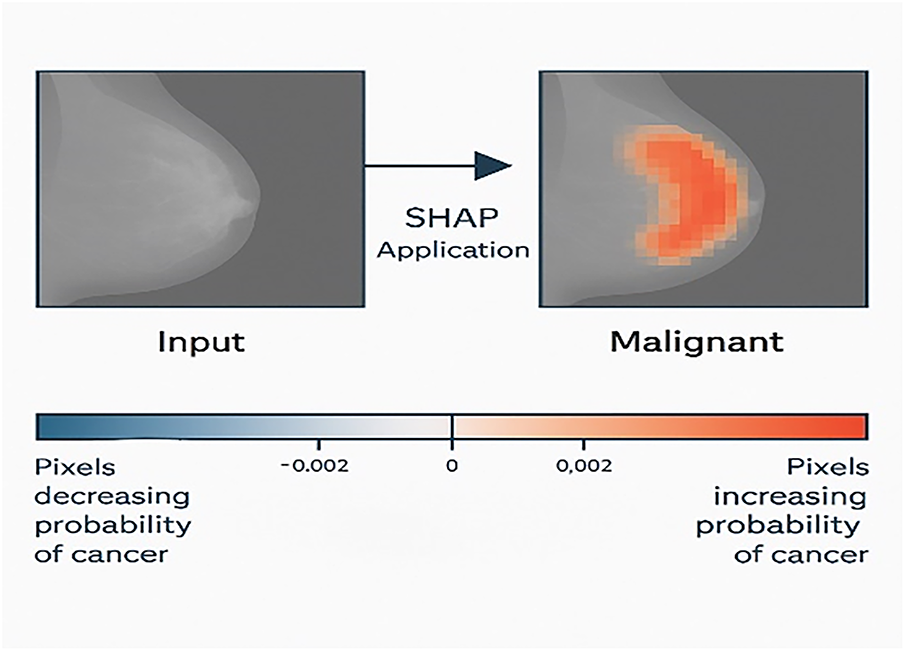

SHAP (Shapley Additive Explanations): SHAP is a post-hoc, model-agnostic, and local/global technique used in several domains, especially in the medical field. SHAP provides explanations for the impact of each feature on the final prediction of the model, based on the concept of cooperative game theory [68].

SHAP relies on the Shapley value [70], which ensures that the contribution of each feature to the prediction or decision for a particular instance is accounted for fairly [71]. In the medical field, SHAP is used to analyze the contributions of medical features to specific disease outcomes or predictions. Because it supports both local and global explanations, clinicians and researchers can examine the impact of individual features as well as the overall influence of feature combinations on disease outcomes. Fig. 13 shows a SHAP explanation of breast cancer classification.

Figure 13: SHAP explanation of breast cancer classification
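To show what a Shapley value computes, the sketch below enumerates every coalition of features for a hypothetical three-feature model. It is exact but exponential in the number of features, so in practice SHAP relies on approximations and model-specific algorithms; here, "missing" features are replaced by a baseline value, which is one of several possible value-function choices.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Set

Vector = List[float]


def shapley_values(f: Callable[[Vector], float], x: Vector, baseline: Vector) -> Vector:
    """Exact Shapley values: the weighted average of each feature's marginal
    contribution over all coalitions of the other features."""
    n = len(x)

    def value(coalition: Set[int]) -> float:
        # Features outside the coalition take their baseline value.
        return f([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def risk_model(z: Vector) -> float:
    """Hypothetical black-box model with an interaction between features 0 and 1."""
    return 0.5 * z[0] + 2.0 * z[1] - z[2] + 0.3 * z[0] * z[1]


x = [1.0, 2.0, 0.5]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(risk_model, x, baseline)
```

The interaction term (0.3 × 1.0 × 2.0 = 0.6) is split equally between features 0 and 1, and the attributions sum to the difference between the prediction and the baseline prediction (the efficiency property).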

LIME (Local Interpretable Model-Agnostic Explanations) is another popular technique in healthcare. Developed by Ribeiro and colleagues in 2016 [72], LIME explains an individual prediction of a black-box machine learning model by fitting a simpler, interpretable model around that prediction [73]. Unlike SHAP, LIME is applied to a single instance rather than to the entire dataset [70]. In medical imaging, LIME is widely used to produce visual explanations by identifying the regions that influence a decision. Its strength lies in its ability to explain the predictions of any classifier.
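A LIME-style sketch for tabular input, assuming Gaussian perturbations around the instance, an exponential distance kernel, and an unregularized weighted least-squares surrogate; the official `lime` package differs in its sampling and regression details, and all parameter values here are illustrative.

```python
import math
import random
from typing import Callable, List

Vector = List[float]


def solve(A: List[Vector], b: Vector) -> Vector:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def lime_explain(f: Callable[[Vector], float], x: Vector, n_samples: int = 500,
                 sigma: float = 0.5, kernel_width: float = 0.75, seed: int = 0) -> Vector:
    """Perturb x, weight samples by proximity to x, and fit a weighted linear
    surrogate. Returns [intercept, coef_1, ..., coef_d]."""
    rng = random.Random(seed)
    d = len(x)
    XtWX = [[0.0] * (d + 1) for _ in range(d + 1)]   # normal equations
    XtWy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, sigma) for xi in x]
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        w = math.exp(-dist2 / kernel_width ** 2)       # closer samples count more
        row = [1.0] + z                                 # intercept column
        y = f(z)
        for a in range(d + 1):
            XtWy[a] += w * row[a] * y
            for c in range(d + 1):
                XtWX[a][c] += w * row[a] * row[c]
    return solve(XtWX, XtWy)


def black_box(z: Vector) -> float:
    """Hypothetical model; linear here so that the surrogate can be checked."""
    return 1.0 + 2.0 * z[0] - 3.0 * z[1]


coefs = lime_explain(black_box, [0.5, 1.0])
```

For a non-linear model, the coefficients describe only the local behavior around the explained instance, which is exactly the "local" in LIME.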

Grad-CAM (Gradient-Weighted Class Activation Mapping): This advanced variant of Class Activation Mapping (CAM) has gained popularity with deep learning models, particularly CNNs [70]. Grad-CAM is a model-specific technique that highlights the regions of the input image that influence a specific prediction. It generates a visual heatmap for a CNN-based network using the gradients of the target-class score with respect to the feature maps of a convolutional layer [74]. Grad-CAM is widely used on mammography and histopathology images, where its heatmaps highlight the regions that contribute most to the model's decision, such as calcifications or suspicious masses. This visual interpretation allows clinicians to verify that the model is focusing on clinically relevant areas.
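The Grad-CAM computation itself is short once the activations and gradients are available. In this sketch both are supplied as hypothetical arrays; in practice, the gradients of the target-class score come from automatic differentiation in a deep learning framework.

```python
from typing import List

Matrix = List[List[float]]


def grad_cam(feature_maps: List[Matrix], gradients: List[Matrix]) -> Matrix:
    """Grad-CAM from the activations A^k of one convolutional layer and the
    gradients dy_c/dA^k of the target-class score:
    alpha_k = spatial mean of the gradients; map = ReLU(sum_k alpha_k * A^k)."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    cam = [[0.0] * w for _ in range(h)]
    for a_k, A in zip(alphas, feature_maps):
        for i in range(h):
            for j in range(w):
                cam[i][j] += a_k * A[i][j]
    # ReLU keeps only regions with a positive influence on the target class.
    return [[max(0.0, v) for v in row] for row in cam]


# Two hypothetical 2x2 feature maps and their gradients.
A1 = [[1.0, 0.0], [0.0, 0.0]]      # channel responding top-left
A2 = [[0.0, 0.0], [0.0, 2.0]]      # channel responding bottom-right
G1 = [[0.4, 0.4], [0.4, 0.4]]      # raises the class score -> alpha = 0.4
G2 = [[-0.2, -0.2], [-0.2, -0.2]]  # lowers the class score -> alpha = -0.2
cam = grad_cam([A1, A2], [G1, G2])
```

The heatmap is at the resolution of the chosen convolutional layer; for display it is usually upsampled to the input image size and normalized.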

LRP (Layer-Wise Relevance Propagation): LRP interprets deep neural network decisions by tracing the contribution of each input feature to the final prediction. Starting from the model's output, it propagates relevance backward layer by layer until the relevance is distributed over the inputs, producing a map that shows how much each input contributed to the decision [75].
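A minimal sketch of relevance propagation with the LRP-ε rule through a hypothetical two-layer ReLU network without biases (other LRP rules exist). The final check illustrates the conservation property: the input relevances sum to the output score, up to the small ε stabilizer.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]   # W[i][j]: weight from input i to output j


def forward(a: Vector, W: Matrix) -> Vector:
    """Dense layer without bias: z_j = sum_i a_i W_ij."""
    return [sum(a[i] * W[i][j] for i in range(len(a))) for j in range(len(W[0]))]


def lrp_epsilon(a: Vector, W: Matrix, R_out: Vector, eps: float = 1e-6) -> Vector:
    """Redistribute output relevance R_out to the inputs a of one dense layer:
    R_i = a_i * sum_j W_ij * R_j / (z_j + eps * sign(z_j))."""
    z = forward(a, W)
    s = [r / (zj + (eps if zj >= 0 else -eps)) for r, zj in zip(R_out, z)]
    return [a[i] * sum(W[i][j] * s[j] for j in range(len(z))) for i in range(len(a))]


# Hypothetical two-layer ReLU network with a single output score.
x = [1.0, 0.5, 2.0]
W1 = [[0.6, -0.4], [0.2, 0.9], [0.1, 0.3]]   # 3 inputs -> 2 hidden units
W2 = [[1.0], [0.5]]                           # 2 hidden units -> 1 output
hidden = [max(0.0, v) for v in forward(x, W1)]
output = forward(hidden, W2)

R_hidden = lrp_epsilon(hidden, W2, output)   # start from the prediction itself
R_input = lrp_epsilon(x, W1, R_hidden)       # relevance of each input feature
```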

Because breast cancer diagnosis relies on a variety of data types, XAI techniques must be matched to the nature of each type of data. For example, visual explanation techniques such as Grad-CAM are well suited to medical imaging data, providing heatmaps that highlight the decisive regions within the images. For structured clinical data, feature-importance methods such as SHAP and LIME are more effective at explaining the effect of each variable on the prediction. In contrast, molecular data, such as gene expression and mutations, require more complex gradient-based attribution techniques such as DeepLIFT.

3.5.4 XAI and Multimodal Fusion

In breast cancer diagnosis, data from various sources are combined to provide a comprehensive view of the disease. However, the complexity of such fusion models makes their decision-making opaque. Explanation techniques address this problem by clarifying how each modality contributes to the final decision, which reassures physicians that the model focuses on medically relevant information.

An XAI method can be applied to the output of the fusion model to explain the final prediction, which is called post-fusion explanation, or to each data modality before fusion to explain it separately, which is called pre-fusion explanation. This interaction between fusion and XAI bridges the gap between technical model development and clinical decision support.

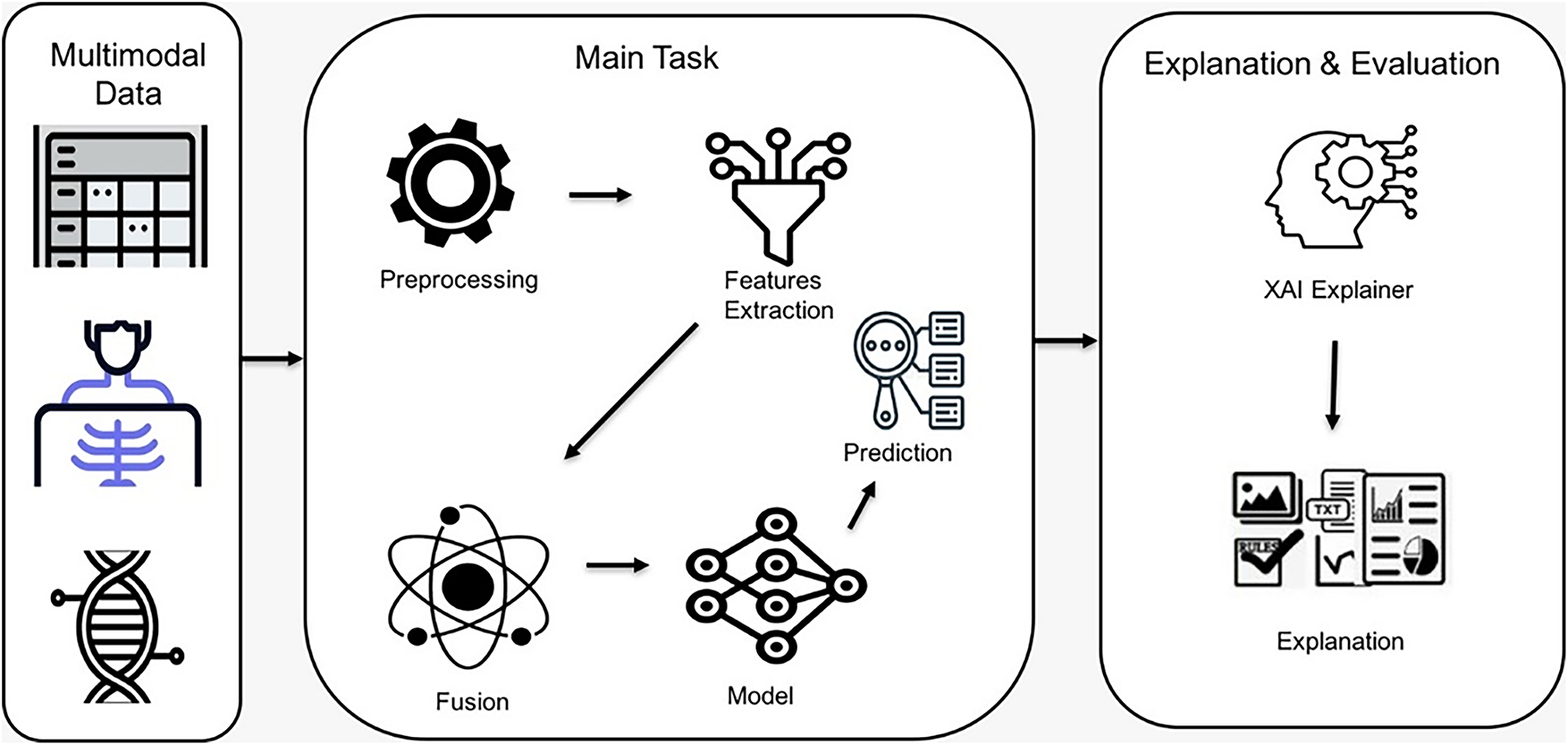

This review presents a unified conceptual model that clarifies the workflow for integrating multimodal data and applying explainable algorithms to breast cancer diagnosis. It captures the interaction among preprocessing, feature extraction, and fusion strategies, followed by prediction, explanation, and evaluation of the explanation. Fig. 14 illustrates a visual conceptual framework for multimodal breast cancer diagnosis with XAI.

Figure 14: Visual conceptual framework of multimodal breast cancer diagnosis with XAI

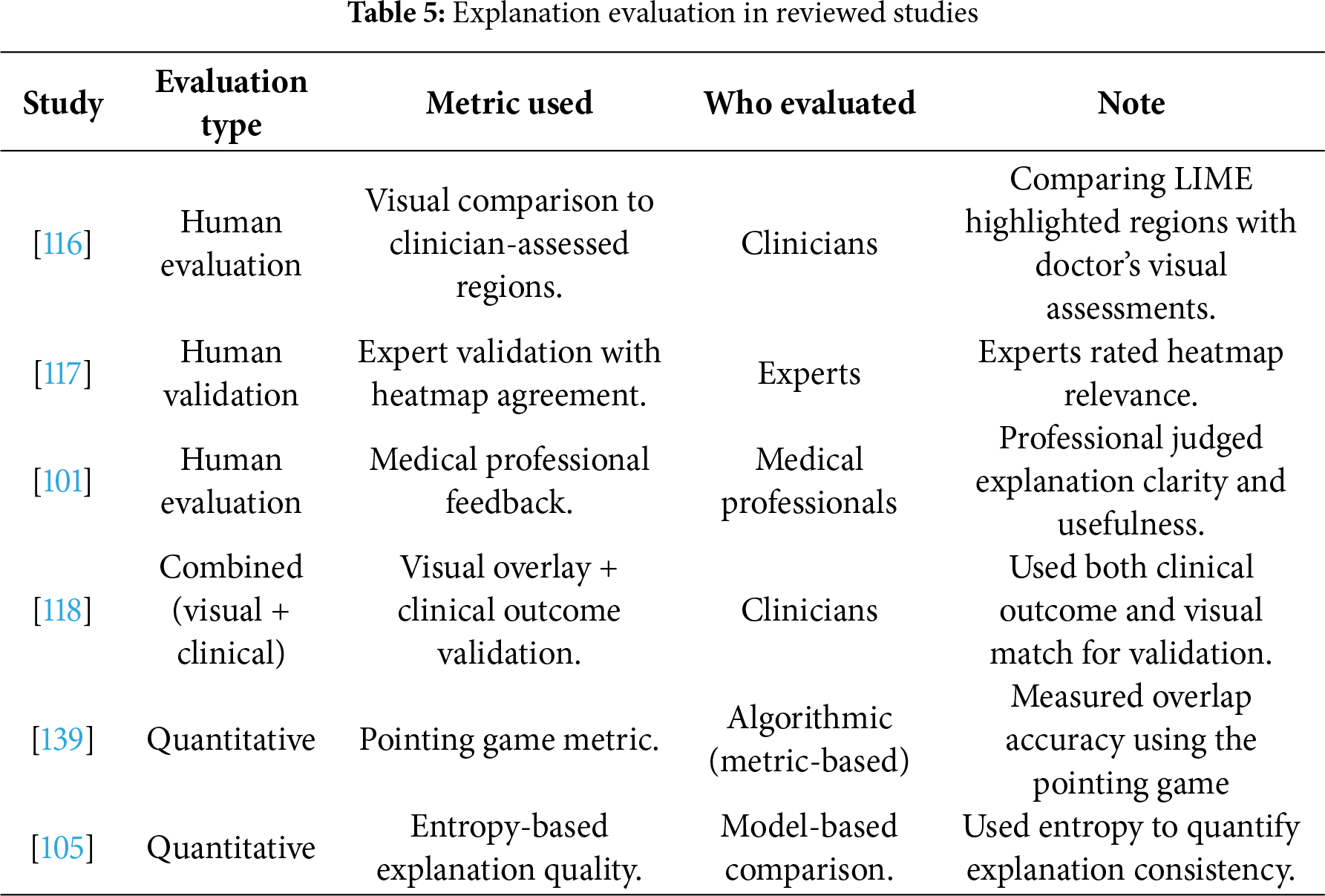

3.5.5 XAI Evaluation Techniques

Evaluation of AI explanations is a process of assessing the accuracy, reliability, and usefulness of the explanation in reflecting a model’s decision-making process [22,76]. Evaluation methods ensure that the explanation is actionable and interpretable. Evaluating explanations focuses on the following: (1) building trust, which enables users to understand and trust decisions, increasing their reliance on AI systems; (2) clinical and ethical compliance, because explanations are essential for ethical decision-making in sensitive areas; (3) model debugging, because evaluating can detect errors and biases in AI models; and (4) facilitating human–AI collaboration, enabling humans to make decisions easily through clear evaluation [76,77].

Based on the level of human involvement, evaluation methods can be categorized into evaluation with and without a user [78]. Evaluation with a user is divided into two groups: human-grounded metrics, which involve real humans performing simplified tasks that retain the essence of the target application, and application-grounded metrics, which involve real humans performing real tasks in a practical environment to determine whether the explanations achieve the desired goal [76,79]. Evaluation without a user relies on functionally grounded metrics, which assess the quality of an explanation through a computational proxy without human involvement [76]. Based on the focus of the evaluation, methods can also be classified as human-centric, which concerns the user's trust in and understanding of the explanation; model-centric, which determines how well the explanation aligns with model behavior; and task-centric, which focuses on how effectively the explanation improves task performance [20].

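Overlap-based metrics such as intersection over union (IoU), Eq. (9) [80], are employed to quantitatively evaluate the alignment between explanation maps and expert-annotated ground truth:

$$\mathrm{IoU} = \frac{|A \cap G|}{|A \cup G|} \tag{9}$$

where $A$ is the region highlighted by the explanation (e.g., a thresholded heatmap) and $G$ is the expert-annotated ground-truth region.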

A higher IoU indicates stronger overlap between predicted and actual relevant areas.

Similarly, the Dice coefficient, Eq. (10), is widely used to measure the similarity between explanation-based regions and ground-truth annotations:

$$\mathrm{Dice} = \frac{2\,|A \cap G|}{|A| + |G|} \tag{10}$$

Unlike IoU, Dice equals the harmonic mean of precision and recall, providing a more balanced evaluation when regions differ in size.

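In addition to overlap-based metrics, the Pointing Game metric, Eq. (11) [81], evaluates whether the most activated point of the explanation heatmap falls within the ground-truth region:

$$\mathrm{Acc}_{\mathrm{PG}} = \frac{\#\mathrm{Hits}}{\#\mathrm{Hits} + \#\mathrm{Misses}} \tag{11}$$

where a hit is counted when the maximum of the heatmap lies inside the ground-truth region, and a miss otherwise.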
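The three metrics of Eqs. (9)–(11) can be sketched in pure Python on binary masks and heatmaps. The 0.5 binarization threshold and the convention that two empty masks score 1.0 are assumptions of this sketch; some Pointing Game formulations also accept a hit within a small tolerance radius of the ground truth, which is omitted here.

```python
from typing import List, Tuple

Mask = List[List[int]]          # 1 = pixel inside the region
Heatmap = List[List[float]]


def binarize(heatmap: Heatmap, threshold: float = 0.5) -> Mask:
    """Turn an explanation heatmap into the highlighted region A."""
    return [[int(v >= threshold) for v in row] for row in heatmap]


def _counts(a: Mask, b: Mask) -> Tuple[int, int, int]:
    """Return |A ∩ B|, |A|, |B| for two binary masks of equal shape."""
    cells = [(p, g) for ra, rb in zip(a, b) for p, g in zip(ra, rb)]
    return sum(p & g for p, g in cells), sum(p for p, _ in cells), sum(g for _, g in cells)


def iou(a: Mask, b: Mask) -> float:
    """Eq. (9): intersection over union."""
    inter, na, nb = _counts(a, b)
    union = na + nb - inter
    return inter / union if union else 1.0


def dice(a: Mask, b: Mask) -> float:
    """Eq. (10): twice the intersection over the sum of the region sizes."""
    inter, na, nb = _counts(a, b)
    return 2 * inter / (na + nb) if na + nb else 1.0


def pointing_game(heatmaps: List[Heatmap], truths: List[Mask]) -> float:
    """Eq. (11): fraction of heatmaps whose maximum lies inside the ground truth."""
    hits = 0
    for h, gt in zip(heatmaps, truths):
        i, j = max(((r, c) for r in range(len(h)) for c in range(len(h[0]))),
                   key=lambda rc: h[rc[0]][rc[1]])
        hits += gt[i][j]
    return hits / len(heatmaps)


# Hypothetical heatmaps and an expert lesion mask.
heat = [[0.1, 0.7, 0.9], [0.2, 0.6, 0.3], [0.0, 0.1, 0.2]]
heat_miss = [[0.9, 0.1, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
gt = [[0, 1, 1], [0, 0, 0], [0, 0, 0]]
pred = binarize(heat)
```

On the same masks the two overlap scores are linked by Dice = 2·IoU / (1 + IoU), so they rank explanations identically and differ mainly in scale.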

Beyond the overlap and localization metrics of Eqs. (9)–(11), additional measures are employed. Entropy-based measures quantify the sharpness of heatmaps, while cross-method agreement is often measured using the Jensen-Shannon divergence between attribution distributions. Finally, clinician validation typically involves inter-rater reliability measured by Cohen's κ.
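These additional measures can be sketched as follows, assuming flattened, non-negative attribution maps and categorical clinician ratings (all example values hypothetical). Entropy and Jensen-Shannon divergence are computed in bits.

```python
import math
from collections import Counter
from typing import List, Sequence


def normalize(values: Sequence[float]) -> List[float]:
    """Turn a flattened, non-negative attribution map into a probability distribution."""
    total = sum(values)
    return [v / total for v in values]


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy in bits; lower values mean a sharper, more focused heatmap."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)


def js_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Jensen-Shannon divergence in bits (0 = identical, 1 = disjoint support)."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(a: Sequence[float], b: Sequence[float]) -> float:
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def cohens_kappa(r1: Sequence[str], r2: Sequence[str]) -> float:
    """Agreement between two raters, corrected for agreement expected by chance."""
    n = len(r1)
    p_o = sum(a == b for a, b in zip(r1, r2)) / n
    c1, c2 = Counter(r1), Counter(r2)
    p_e = sum(c1[k] * c2[k] for k in set(c1) | set(c2)) / n ** 2
    if p_e == 1.0:
        return 1.0   # degenerate case: both raters used one identical category
    return (p_o - p_e) / (1 - p_e)


flat_map = normalize([1.0, 1.0, 1.0, 1.0])    # maximally diffuse 4-pixel map
sharp_map = normalize([4.0, 0.0, 0.0, 0.0])   # all attribution on one pixel
rater_a = ["relevant", "relevant", "irrelevant", "relevant"]
rater_b = ["relevant", "irrelevant", "irrelevant", "relevant"]
kappa = cohens_kappa(rater_a, rater_b)
```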

Evaluating the explanation is an important step to ensure the accuracy of XAI techniques, especially in sensitive fields such as healthcare.

The field of breast cancer diagnosis has witnessed rapid developments in recent years, driven by artificial intelligence technologies, particularly multimodal fusion and explanation techniques.

This section synthesizes findings from the 61 studies in this field in terms of preprocessing techniques, learning models, fusion strategies, explanation techniques, and evaluation methods. This allows us to map not only where and when multimodal breast cancer AI research has advanced, but also how technical methods have evolved, particularly the shift from early concatenation-based fusion toward cross-attention and hybrid Transformer-based frameworks with integrated explainability.

4.1 Preprocessing Strategies in Multimodal Breast Cancer Studies

Unifying data from diverse and heterogeneous sources is a major challenge in data fusion because it requires maintaining the integrity of information without losing any important details [6]. The data preprocessing stage is essential to building integrated multimodal systems for breast cancer diagnosis. This stage helps standardize data characteristics and improve input quality before fusion. Common preprocessing techniques include denoising, normalization, encoding, data alignment, and contrast enhancement.

A 2021 study [27] addressed these challenges by integrating pathological images and structured electronic medical record (EMR) data in a richer fusion network. Using a denoising autoencoder, the researchers expanded the low-dimensional EMR data into a higher-dimensional representation, which allowed images and structured data to be combined effectively while minimizing information loss during fusion. Dimensionality management is a pivotal challenge in multimodal data fusion: high-dimensional data often lead to computational inefficiency and overfitting, so reduction techniques are needed to balance model complexity against predictive performance. The study in [26] applied principal component analysis (PCA) and autoencoders to reduce high-dimensional image features and textual data. Its findings showed that neural network and autoencoder-based methods not only reduced dimensionality but also retained essential diagnostic features, and the authors highlighted that effective dimensionality adjustment fosters robustness, helping diagnostic systems perform well across varied datasets. This study highlights the importance of dimensional considerations in advancing multimodal approaches, providing a pathway to more reliable and precise breast cancer detection frameworks.
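For the PCA step mentioned for [26], a minimal NumPy sketch via the singular value decomposition is shown below. The 512-dimensional embeddings and their five underlying factors are synthetic assumptions used only to make the example self-contained.

```python
import numpy as np


def pca_reduce(features, n_components):
    """Project (n_samples, n_features) data onto its top principal components.

    Returns the reduced features and the fraction of variance each kept component explains.
    """
    x = np.asarray(features, dtype=float)
    centered = x - x.mean(axis=0)
    # Rows of vt are the principal directions, sorted by decreasing singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    return centered @ vt[:n_components].T, explained[:n_components]


rng = np.random.default_rng(0)
# Synthetic stand-in for 512-dimensional image embeddings driven by 5 underlying factors.
latent = rng.normal(size=(100, 5))
embeddings = latent @ rng.normal(size=(5, 512)) + 0.01 * rng.normal(size=(100, 512))
reduced, ratio = pca_reduce(embeddings, n_components=5)
print(reduced.shape, round(float(ratio.sum()), 4))
```

The explained-variance ratio gives a simple criterion for choosing how many components to keep; unlike an autoencoder, PCA can only capture linear structure.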

Dimensionality reduction can help improve model generalization while preserving essential information, as in [47], which applied Minimum Redundancy Maximum Relevance (mRMR) feature selection across all data modalities; selecting the most relevant, non-redundant features reduced noise in the data and improved model performance. Preprocessing is especially critical when working with whole-slide images (WSIs), which can contain billions of pixels, and dividing WSIs into smaller patches is a common preprocessing step in multimodal fusion. The study in [82] divides WSIs into 256 × 256 patches and then selects the most informative patches based on their energy values. Similarly, [46] extracted 224 × 224 patches from manually annotated tumor regions and applied a Vision Transformer to combine the images with genetic and clinical features.
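Both selection ideas in this paragraph can be sketched with NumPy. Two substitutions are assumptions of this sketch, not the methods of [47] or [82]: the mRMR function uses absolute Pearson correlation as a cheap stand-in for mutual information (difference form, relevance minus mean redundancy), and tile "energy" is taken to be the sum of squared deviations from the tile mean, so that flat background scores low.

```python
import numpy as np


def _abs_corr(a, b):
    """|Pearson r|, treating constant vectors as uncorrelated."""
    if a.std() == 0 or b.std() == 0:
        return 0.0
    return abs(float(np.corrcoef(a, b)[0, 1]))


def mrmr_select(x, y, k):
    """Greedy mRMR (difference form): relevance minus mean redundancy.

    |Pearson r| stands in for mutual information here (a simplification).
    x: (n_samples, n_features) features pooled from all modalities; y: targets.
    """
    n_features = x.shape[1]
    relevance = np.array([_abs_corr(x[:, j], y) for j in range(n_features)])
    selected = [int(np.argmax(relevance))]
    while len(selected) < k:
        candidates = [j for j in range(n_features) if j not in selected]
        scores = [relevance[j] - np.mean([_abs_corr(x[:, j], x[:, s]) for s in selected])
                  for j in candidates]
        selected.append(candidates[int(np.argmax(scores))])
    return selected


def tile_and_rank(image, patch=256, top_k=4):
    """Split a 2-D image into non-overlapping patch x patch tiles (ragged borders
    dropped) and return the top_k tile origins ranked by 'energy', assumed here
    to be the sum of squared deviations from the tile mean."""
    h, w = image.shape[:2]
    origins = [(r, c) for r in range(0, h - patch + 1, patch)
               for c in range(0, w - patch + 1, patch)]
    energy = []
    for r, c in origins:
        tile = image[r:r + patch, c:c + patch]
        energy.append(float(np.sum((tile - tile.mean()) ** 2)))
    order = np.argsort(energy)[::-1][:top_k]
    return [origins[i] for i in order]


rng = np.random.default_rng(0)
a, b = rng.normal(size=2000), rng.normal(size=2000)
x = np.column_stack([a, a + 0.01 * rng.normal(size=2000), b, rng.normal(size=2000)])
y = a + b                          # target depends on features 0 and 2; feature 1 duplicates 0
print(mrmr_select(x, y, k=2))      # expected: feature 2 plus one of the near-duplicates 0/1

slide = np.full((600, 520), 230.0)                       # flat, bright background
slide[256:512, 0:256] += rng.normal(0, 40, (256, 256))   # one textured "tissue" tile
print(tile_and_rank(slide, patch=256, top_k=1))          # [(256, 0)]
```

The redundancy term is what stops mRMR from picking both near-duplicate features, which a pure relevance ranking would do.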

Data are often limited by cost or privacy constraints, so there is a need to synthetically increase the diversity and quantity of input samples through data augmentation [83]. This process supports the generalization of multimodal learning models and mitigates the limitations imposed by small datasets. In multimodal breast cancer classification, data augmentation plays a major role in enhancing model performance. For example, the researchers in [84] expanded their dataset from 86 original images to 1032 augmented samples by applying rotation, translation, shifting, and other augmentation techniques to mammograms and ultrasound images, which helped the classifier reach 98.84% accuracy. Similarly, [27] applied transformations such as random flips, rotations, and brightness adjustments to pathological images, producing more than 3 million samples and improving classification accuracy to 92.9%.
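A minimal NumPy sketch of the flip, rotation, translation, and brightness transformations described above is given below. Assumptions: images are square 2-D arrays scaled to [0, 1], rotations are restricted to multiples of 90 degrees, and translation wraps around the edges (`np.roll`) rather than padding. With 12 copies per image, 86 originals yield 1032 samples, the same count reported in [84], although whether [84] used a uniform per-image factor is not stated here.

```python
import numpy as np


def augment(image, rng, max_shift=10, brightness=0.1):
    """Return one randomly augmented copy of a square 2-D image with values in [0, 1]."""
    out = image
    if rng.random() < 0.5:
        out = np.fliplr(out)                          # random horizontal flip
    if rng.random() < 0.5:
        out = np.flipud(out)                          # random vertical flip
    out = np.rot90(out, k=int(rng.integers(0, 4)))    # rotation by 0/90/180/270 degrees
    dy, dx = (int(s) for s in rng.integers(-max_shift, max_shift + 1, size=2))
    out = np.roll(out, shift=(dy, dx), axis=(0, 1))   # translation (wraps around edges)
    factor = 1.0 + rng.uniform(-brightness, brightness)
    return np.clip(out * factor, 0.0, 1.0)            # brightness jitter, kept in range


def expand_dataset(images, copies_per_image, seed=0):
    """Generate copies_per_image augmented variants of every input image."""
    rng = np.random.default_rng(seed)
    return [augment(img, rng) for img in images for _ in range(copies_per_image)]


originals = [np.random.default_rng(i).random((64, 64)) for i in range(86)]
augmented = expand_dataset(originals, copies_per_image=12)
print(len(augmented))  # 86 * 12 = 1032
```

In a multimodal setting, each augmented image must stay paired with its patient's unchanged clinical record so that the modalities remain aligned.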

These preprocessing strategies play a pivotal role in harmonizing heterogeneous modalities, ensuring that the subsequent learning and fusion steps are built on clean, consistent, and representative data, which is a prerequisite for robust and explainable diagnostic models.

4.2 Modeling Paradigms in Multimodal Learning

Modeling techniques are at the heart of any multimodal system: the choice of model architecture and data representation directly affects prediction accuracy. In multimodal breast cancer diagnosis, deep learning and machine learning techniques are used for feature extraction and training. Having reviewed the theoretical foundations of these model types in the background section, this section focuses on studies that have applied them in practice to breast cancer diagnosis, highlighting the relationship between the model used and the accompanying fusion strategy.

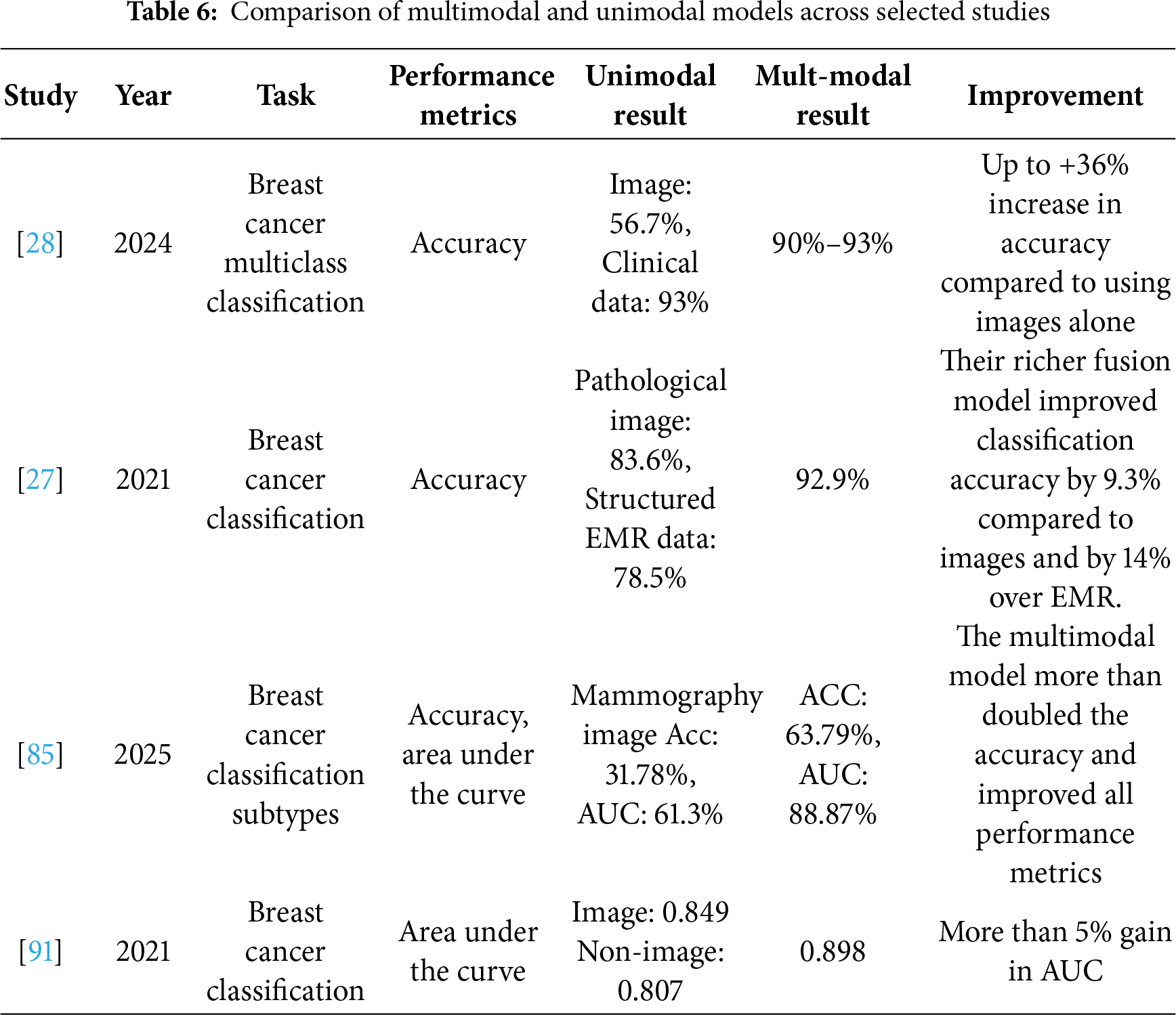

CNN-based modeling approaches

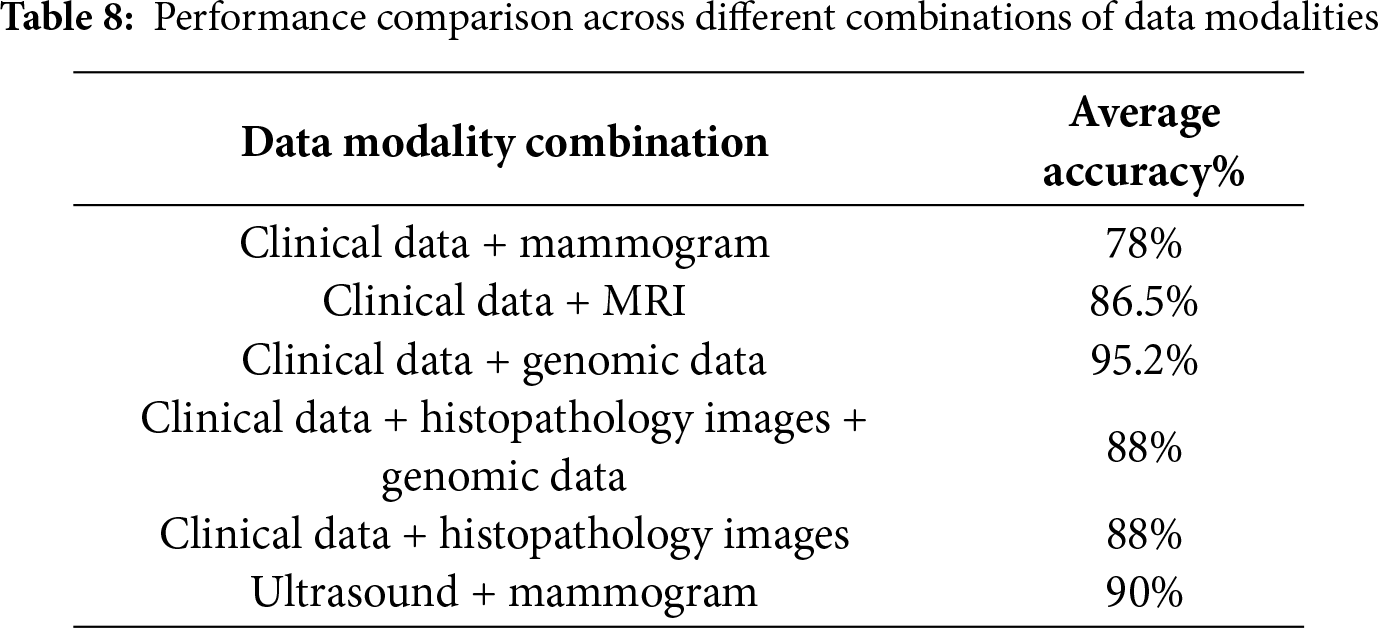

Ben Rabah et al. [85] employed a CNN-based architecture, specifically a pre-trained Xception, to extract features from images and compared the multimodal approach with a unimodal model based solely on imaging data. The multimodal CNN model achieved an AUC of 88.87% and an accuracy of 63.79%, compared with an AUC of 61.3% and an accuracy of 31.78% for the unimodal model, demonstrating the clear superiority of the multimodal approach. This comparison highlights the importance of integrating clinical features with imaging data for enhancing diagnostic accuracy and facilitating personalized treatment planning for breast cancer.

Combining multiple CNN architectures can improve performance, as demonstrated in [43]. VGG, GoogLeNet, and DenseNet were combined to extract diverse features from mammogram images in the CBIS-DDSM dataset, leveraging the strengths of the different architectures. These features were then combined with clinical data from the Wisconsin Breast Cancer Database, achieving higher diagnostic performance than many previous studies that relied on a single model.

Despite the remarkable success of CNNs in many fields, particularly medical image analysis, traditional CNNs often struggle to capture important and distinctive features in heterogeneous medical data. Attention mechanisms and gating functions can be used to optimize feature extraction, helping these architectures focus on important information and improving both model performance and interpretability. In this context, [86] proposed the Sigmoid Gated Attention Convolutional Neural Network (SiGaAtCNN), a novel enhanced CNN architecture for breast cancer survival prediction. Using SiGaAtCNN to extract features and combining them as inputs for classification improved accuracy compared with traditional CNN architectures. The authors of [87] designed a 17-layer CNN to balance model complexity, overfitting risk, and computational efficiency; this custom CNN outperformed the other models they evaluated (transfer learning and pre-trained CNNs), achieving an accuracy of 0.96.
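The general idea of a sigmoid gate can be sketched in NumPy as below. This is a generic gating unit, not the SiGaAtCNN architecture of [86], whose exact layer design is not described here; the feature width and the random weights (stand-ins for learned parameters) are assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_gate(features, w, b):
    """Scale each feature by a data-dependent gate in (0, 1).

    features: (batch, d); w: (d, d); b: (d,). In a trained network w and b are
    learned, so uninformative features can be suppressed (gate -> 0) and
    informative ones passed through (gate -> 1).
    """
    gate = sigmoid(features @ w + b)
    return features * gate, gate


rng = np.random.default_rng(0)
feats = rng.normal(size=(4, 16))              # e.g. pooled CNN features for 4 images
w = rng.normal(0.0, 0.1, size=(16, 16))       # random stand-in for learned weights
b = np.zeros(16)
gated, gate = sigmoid_gate(feats, w, b)
print(gated.shape, float(gate.min()), float(gate.max()))
```

Because each gate value is a bounded, per-feature weight, inspecting the gates offers one possible (though not guaranteed faithful) view of which features the model emphasizes.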

MLP-based modeling approaches

An MLP can serve as the classifier in a multimodal breast cancer classification system. In [44], an MLP was applied after the most important features had been extracted and combined, classifying the fused features into benign and malignant tumors alongside other classifiers such as XGBoost and AdaBoost; it proved effective at distinguishing the two classes, reinforcing the role of neural networks in clinical decision support systems. Likewise, [28] used an MLP as one of its base models to process and classify clinical data related to breast cancer risk factors. The MLP played a pivotal role in the proposed multimodal system, performing well on the clinical data alone and when its outputs were combined with CNN results using custom weights, and it helped raise the system's final classification accuracy to 93% with concatenation fusion.

Emerging architectures

In addition to common models such as CNNs and MLPs, some studies have used more advanced models to improve integration between different sources. Some have used autoencoders to compress representations; for example, [88] highlights the effectiveness of deep autoencoder-based integration by applying variational autoencoders (VAEs) to extract informative features from high-dimensional modalities (clinical records, genomic profiles, and histopathological images). After integration, these features form a unified representation for breast cancer classification. Other studies have used LSTM networks to extract temporal information from gene expression data [47], owing to their ability to retain long-term patterns; after its features were combined with those of other models and passed to a final classifier, this approach achieved an AUC of 0.97. Another study [89] used an LSTM as the final classifier on fused data and achieved an AUC of 95%.
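The two ingredients that distinguish a VAE from a plain autoencoder, the reparameterized latent sample and the KL regularizer, are sketched below in NumPy. The encoder and decoder networks are omitted, the per-modality encoder outputs are hypothetical, and concatenating the sampled codes is only one simple integration choice, not necessarily the one used in [88].

```python
import numpy as np


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps, eps ~ N(0, I) (the reparameterization trick)."""
    eps = rng.standard_normal(np.shape(mu))
    return mu + np.exp(0.5 * log_var) * eps


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=-1)


rng = np.random.default_rng(0)
latent_dim = 8
# Hypothetical per-modality encoder outputs (mu, log_var) for one patient.
encoded = {
    "clinical": (rng.normal(size=latent_dim), np.full(latent_dim, -1.0)),
    "genomic": (rng.normal(size=latent_dim), np.full(latent_dim, -1.0)),
    "histopathology": (rng.normal(size=latent_dim), np.full(latent_dim, -1.0)),
}
# One simple integration choice (an assumption): concatenate the sampled codes.
z = np.concatenate([reparameterize(mu, lv, rng) for mu, lv in encoded.values()])
kl = sum(kl_to_standard_normal(mu, lv) for mu, lv in encoded.values())
print(z.shape, round(float(kl), 3))
```

During training, the KL term is added to each modality's reconstruction loss; it pulls the latent codes toward a standard normal so that the unified representation stays compact and smooth.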

Some studies have relied on ensemble models to combine the advantages of more than one model [90]. The study in [26] used various classifiers, such as SVM (Support Vector Machine), RF (Random Forest), LR (Logistic Regression), KNN (K-Nearest Neighbors), XGBoost (Extreme Gradient Boosting), and ANN (Artificial Neural Network). The ANN achieved the highest accuracy in multimodal settings and was thus the best classifier: combining ViT, BERT, and tabular features with a denoising autoencoder reached 94.18% accuracy with the ANN. Although such models are less common in multimodal fusion, they reflect a research trend toward leveraging the structural depth of advanced models to support the performance of diagnostic systems.

Accordingly, the models used for multimodal breast cancer diagnosis vary widely with the data type. CNNs are the most common choice for image data, while MLP, SVM, RF, and XGBoost are commonly used for clinical and structured data. For high-dimensional or sequential data, studies have relied on LSTM, GRU, and Transformer-based architectures. Hybrid CNN-Transformer models have emerged for joint imaging and tabular clinical data, achieving state-of-the-art results. This reflects a broader trend in healthcare AI, where hybridization improves both predictive performance and explainability.

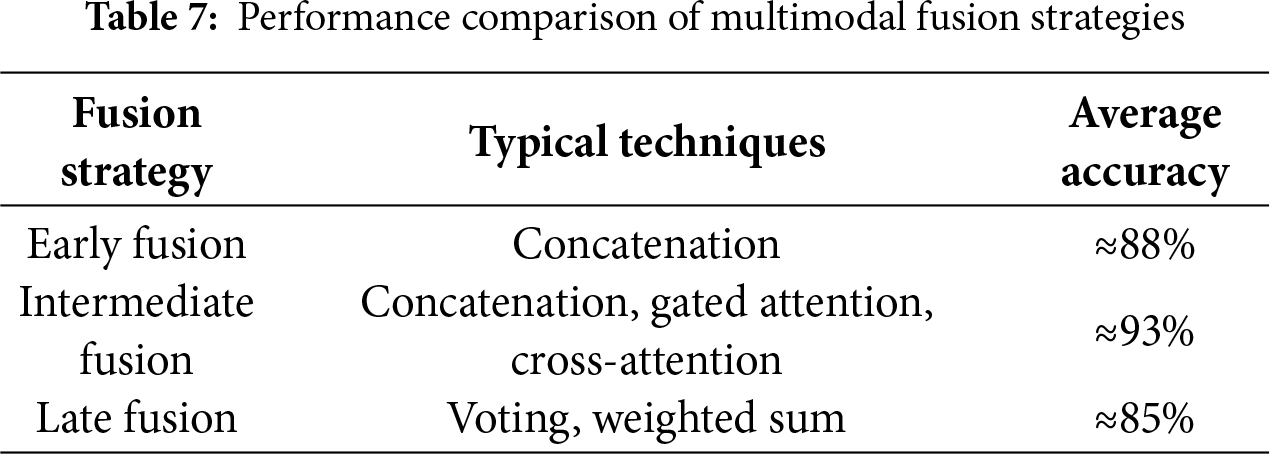

4.3 Fusion Strategies for Integrating Heterogeneous Data

Fusion strategies have become an essential element in the design of diagnostic systems, as the quality of the final representation and the accuracy of the model depend largely on how different types of data are combined. Studies have employed early, intermediate, and late fusion strategies, using either simple or advanced fusion techniques. Although operation-based techniques (concatenation, addition, multiplication) dominated earlier work, recent breast cancer research has shifted toward attention-, tensor-, and graph-based methods that enable richer interaction modeling and align closely with modern AI architectures. This section highlights the fusion techniques adopted in diagnostic systems and their impact on system performance.

The study in [41] demonstrated the superiority of early fusion over late fusion and unimodal models in predicting breast cancer molecular subtypes. It integrated gene expression, copy number variation, clinical features, and histopathological images using fusion techniques such as concatenation and aggregation, achieving an accuracy of 88.07% with a random subspace SVM ensemble (MRSVM) model. Most of the studies covered in this systematic review used concatenation as a simple fusion technique to integrate multimodal data for breast cancer diagnosis [27,28,87].

Intermediate fusion improved the model's ability to classify breast cancer subtypes in [85], which combined features extracted from mammograms and clinical data. This method made comprehensive use of the information in each modality, and the intermediate fusion strategy outperformed single-modality methods with an area under the curve (AUC) of 88.87%. Another study applying intermediate fusion is [91], which used operation-based fusion methods to integrate MRI images with clinical data to classify breast cancer. It compared concatenation, addition, and multiplication within a trainable architecture. Although all operations improved the model's performance, concatenation slightly outperformed the others with an AUC of 0.898, demonstrating that simple arithmetic operations can effectively improve accuracy when integrating diverse data.
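The three operations compared in [91] differ mainly in output shape and in how the modalities interact, as the minimal NumPy sketch below shows. The feature widths (512 image features, 10 clinical variables, shared width 64) are hypothetical, and random matrices stand in for the learned projection layers that addition and multiplication require so that both embeddings have the same width.

```python
import numpy as np


def fuse(img_feat, clin_feat, w_img, w_clin, op="concat"):
    """Intermediate fusion of two modality embeddings with a simple operation.

    Both inputs are first projected to the same width d (w_img: (d_img, d),
    w_clin: (d_clin, d)); addition and multiplication require matching shapes.
    """
    a = img_feat @ w_img     # (batch, d)
    b = clin_feat @ w_clin   # (batch, d)
    if op == "concat":
        return np.concatenate([a, b], axis=-1)   # (batch, 2d): modalities kept side by side
    if op == "add":
        return a + b                             # (batch, d): features summed per dimension
    if op == "multiply":
        return a * b                             # (batch, d): element-wise interaction
    raise ValueError(f"unknown fusion operation: {op!r}")


rng = np.random.default_rng(0)
img_feat = rng.normal(size=(4, 512))            # e.g. pooled MRI features (hypothetical width)
clin_feat = rng.normal(size=(4, 10))            # e.g. 10 clinical variables (hypothetical)
w_img = rng.normal(0, 0.05, size=(512, 64))     # random stand-ins for learned weights
w_clin = rng.normal(0, 0.3, size=(10, 64))
for op in ("concat", "add", "multiply"):
    print(op, fuse(img_feat, clin_feat, w_img, w_clin, op).shape)
```

Concatenation preserves each modality's features unchanged and leaves any interaction to later layers, whereas multiplication builds a first-order interaction directly into the fused vector.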

In [28], the authors applied intermediate and decision-level fusion strategies to improve breast cancer diagnosis. They used a CNN to extract features from mammogram images and a Multi-Layer Perceptron (MLP) to extract clinical features; these features were then concatenated, allowing the model to learn from the combined representation. For late fusion, the authors used hard and soft voting to combine the classifiers' predictions into a final decision.
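Hard and soft voting can disagree on the same predictions, as the NumPy sketch below illustrates. The three models' probabilities are made up for the example, and the optional `weights` argument is an assumed generalization in the spirit of custom model weighting, not the exact scheme of [28].

```python
import numpy as np


def hard_vote(probs):
    """probs: (n_models, n_samples, n_classes). Majority vote over each model's
    predicted class; ties go to the lowest class index."""
    votes = probs.argmax(axis=-1)                          # (n_models, n_samples)
    n_classes = probs.shape[-1]
    counts = np.stack([(votes == c).sum(axis=0) for c in range(n_classes)], axis=-1)
    return counts.argmax(axis=-1)                          # (n_samples,)


def soft_vote(probs, weights=None):
    """(Weighted) average of class probabilities across models, then argmax."""
    weights = np.ones(probs.shape[0]) if weights is None else np.asarray(weights, float)
    avg = np.tensordot(weights / weights.sum(), probs, axes=1)   # (n_samples, n_classes)
    return avg.argmax(axis=-1), avg


# One case, classes [benign, malignant]: two models lean weakly malignant,
# one model is confidently benign.
probs = np.array([
    [[0.45, 0.55]],
    [[0.45, 0.55]],
    [[0.95, 0.05]],
])
print(hard_vote(probs))          # majority of votes: malignant (1)
print(soft_vote(probs)[0])       # averaged probabilities favour benign (0)
```

Soft voting takes each model's confidence into account, so one highly confident model can outweigh two uncertain ones; hard voting discards that information.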

However, although concatenation is the most commonly used method and, as an aggregation method, does enhance model performance, it does not explicitly model the complex interactions between modalities. Advanced techniques such as cross-attention, gated fusion, co-attention, and tensor fusion can therefore be more effective at capturing the inter-relationships between different modalities.
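Cross-attention lets tokens from one modality query another. The minimal single-head NumPy sketch below uses text tokens as queries over image patch features; the token counts, widths, and random projection matrices (stand-ins for learned weights) are assumptions for illustration, not the configuration of any reviewed study.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)    # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(query_tokens, context_tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product cross-attention.

    query_tokens: (n_q, d_q), e.g. embedded text or clinical tokens
    context_tokens: (n_k, d_k), e.g. image patch features
    Returns the attended features (n_q, d_model) and the weights (n_q, n_k).
    """
    q = query_tokens @ w_q
    k = context_tokens @ w_k
    v = context_tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])    # (n_q, n_k) similarity of each query to each patch
    attn = softmax(scores, axis=-1)            # each query's weights over patches sum to 1
    return attn @ v, attn


rng = np.random.default_rng(0)
text_tokens = rng.normal(size=(3, 16))     # e.g. 3 embedded descriptors (hypothetical)
image_tokens = rng.normal(size=(49, 32))   # e.g. a 7 x 7 CNN feature map, flattened
d_model = 8
w_q = rng.normal(0, 0.25, size=(16, d_model))
w_k = rng.normal(0, 0.18, size=(32, d_model))
w_v = rng.normal(0, 0.18, size=(32, d_model))
fused, attn = cross_attention(text_tokens, image_tokens, w_q, w_k, w_v)
print(fused.shape, attn.shape)   # (3, 8) (3, 49)
```

Each row of `attn` can be reshaped to the 7 × 7 grid to show which image regions a given text token attended to, although attention weights are not guaranteed to be faithful explanations.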

Attention-based fusion is used in [46,92,93] for multimodal learning, such as fusing images with clinical data. The study in [93] proposed an iterative multi-attention mechanism to combine textual BI-RADS descriptors with mammogram features, applying cross-attention between BI-RADS and image features, self-attention within image features, and view-attention between mammogram views. This integration enables the model to iteratively refine the fused multimodal representation at multiple resolution levels. Combining the text descriptions with images improved accuracy by about 10% and raised specificity from 0.65 to 0.77. The technique was therefore not merely a combination of features but a deep fusion that raised accuracy, improved sensitivity, and emulated a doctor's reasoning. Transformer-based attention, which combines self-attention and cross-attention, is another attention-based fusion approach and is mostly used in vision-language tasks. The GPDBN framework proposed by [58] fully exploits complementary information between pathological image features and genomic data to enhance breast cancer prognosis prediction, applying a tensor-based bilinear fusion method with inter-modality and intra-modality bilinear feature-encoding modules.
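The core of tensor-based bilinear fusion is an outer product between modality embeddings. The NumPy sketch below shows a generic outer-product fusion in the style of Tensor Fusion Networks, with a constant 1 appended to each vector; it is not a reimplementation of GPDBN's inter- and intra-modality encoding modules, and the toy embeddings are assumptions.

```python
import numpy as np


def tensor_fusion(a, b):
    """Outer-product (bilinear) fusion of two batches of modality embeddings.

    a: (batch, d_a), b: (batch, d_b). Appending a constant 1 to each vector keeps
    the unimodal features in the output alongside every pairwise product a_i * b_j.
    Returns (batch, (d_a + 1) * (d_b + 1)).
    """
    ones = np.ones((a.shape[0], 1))
    a1 = np.hstack([a, ones])
    b1 = np.hstack([b, ones])
    return np.einsum("ni,nj->nij", a1, b1).reshape(a.shape[0], -1)


pathology = np.array([[2.0, 3.0]])   # toy 2-D pathology embedding
genomic = np.array([[5.0]])          # toy 1-D genomic embedding
fused = tensor_fusion(pathology, genomic)
print(fused)
```

The output contains the bilinear terms 10 and 15, the original pathology features 2 and 3, the genomic feature 5, and the constant 1. Because the fused size grows multiplicatively (two 32-dimensional embeddings already give 33 × 33 = 1089 features), low-rank and factorized bilinear variants are common in practice.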