Open Access

Open Access

ARTICLE

TransCarbonNet: Multi-Day Grid Carbon Intensity Forecasting Using Hybrid Self-Attention and Bi-LSTM Temporal Fusion for Sustainable Energy Management

Department of Information Systems, College of Computer and Information Sciences, Princess Nourah bint Abdulrahman University, P.O. Box 84428, Riyadh, 11671, Saudi Arabia

* Corresponding Author: Amel Ksibi. Email:

(This article belongs to the Special Issue: Advanced Artificial Intelligence and Machine Learning Methods Applied to Energy Systems)

Computer Modeling in Engineering & Sciences 2026, 146(1), 26 https://doi.org/10.32604/cmes.2025.073533

Received 20 September 2025; Accepted 01 December 2025; Issue published 29 January 2026

Abstract

Sustainable energy systems will entail a change in the carbon intensity projections, which should be carried out in a proper manner to facilitate the smooth running of the grid and reduce greenhouse emissions. The present article outlines the TransCarbonNet, a novel hybrid deep learning framework with self-attention characteristics added to the bidirectional Long Short-Term Memory (Bi-LSTM) network to forecast the carbon intensity of the grid several days. The proposed temporal fusion model not only learns the local temporal interactions but also the long-term patterns of the carbon emission data; hence, it is able to give suitable forecasts over a period of seven days. TransCarbonNet takes advantage of a multi-head self-attention element to identify significant temporal connections, which means the Bi-LSTM element calculates sequential dependencies in both directions. Massive tests on two actual data sets indicate much improved results in comparison with the existing results, with mean relative errors of 15.3 percent and 12.7 percent, respectively. The framework has given explicable weights of attention that reveal critical periods that influence carbon intensity alterations, and informed decisions on the management of carbon sustainability. The effectiveness of the proposed solution has been validated in numerous cases of operations, and TransCarbonNet is established to be an effective tool when it comes to carbon-friendly optimization of the grid.Keywords

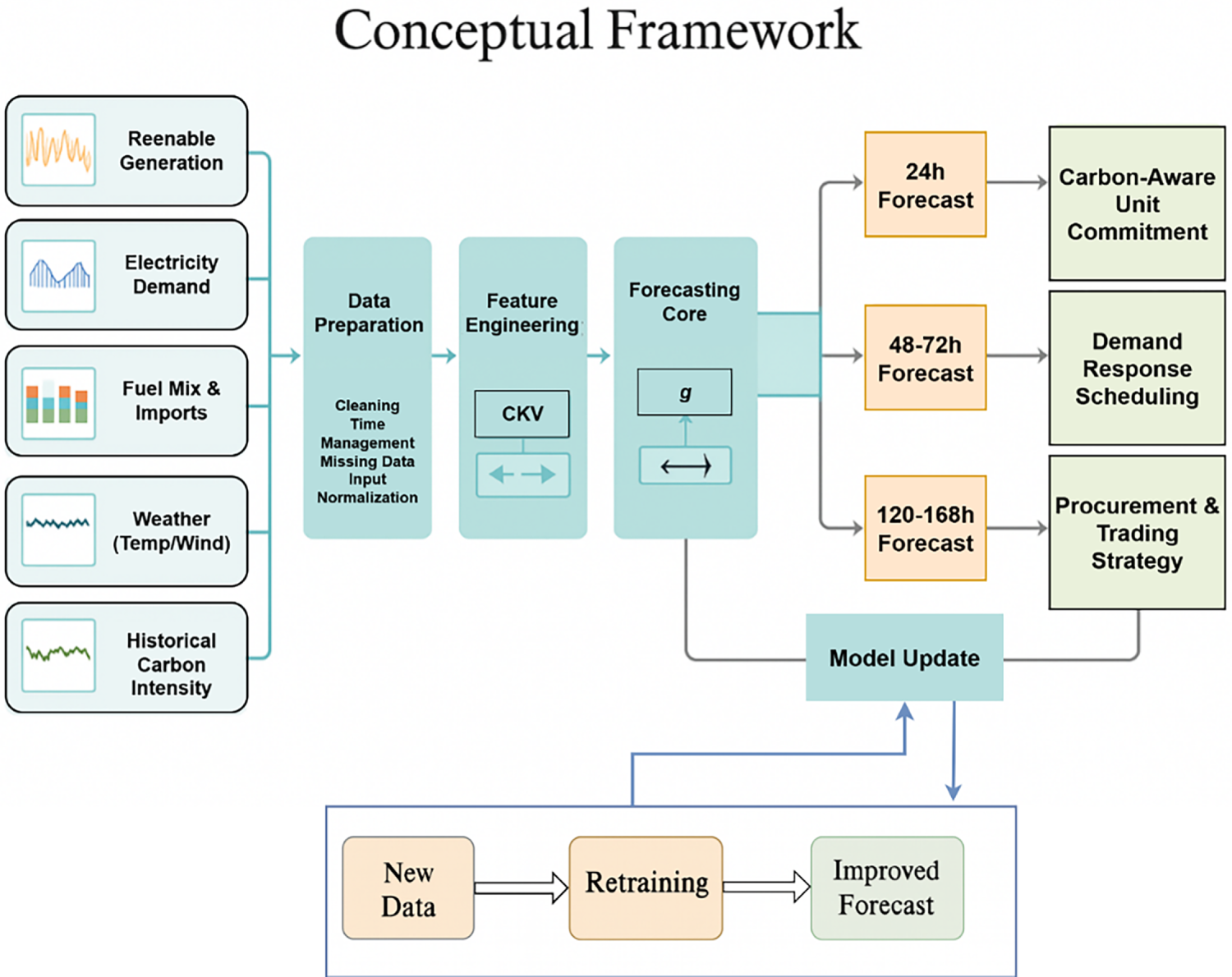

Carbon intensity forecasting has emerged as a critical component of sustainable energy management systems, driven by the global mandate for decarbonization [1,2]. As power grids worldwide continue to increase their proportion of renewable energy sources, carbon emissions per unit of electricity generated have become one of the most important metrics for optimizing grid operations and achieving climate targets [3,4]. The ability to accurately predict carbon intensity several days in advance enables grid operators, energy traders, and industrial consumers to make informed decisions that minimize environmental impact while maintaining system stability [5,6]. Traditional approaches to carbon intensity forecasting have relied primarily on statistical methods and conventional machine learning techniques [7,8]. However, these methods often fail to capture the complex temporal relationships inherent in grid emission patterns. Recent advances in deep learning have demonstrated considerable success in energy forecasting applications, particularly through recurrent neural networks and attention-based architectures [9,10]. Nevertheless, existing techniques face significant limitations in managing multi-day prediction horizons while simultaneously providing interpretability and computational efficiency. Carbon intensity dynamics are influenced by multiple interacting factors, including variability in renewable energy generation, fluctuations in demand, fuel switching, and seasonal effects [11,12]. Modern power systems exhibit nonlinear temporal dependencies across multiple time scales, ranging from hourly variations driven by demand patterns to seasonal changes in renewable generation capacity [13,14]. These characteristics require sophisticated modeling approaches capable of capturing both short-term fluctuations and long-term trends. Recent literature has demonstrated that hybrid architectures combining different neural network components are particularly effective for energy forecasting tasks [15,16]. Self-attention mechanisms have proven effective in identifying relevant temporal correlations within sequential data, while bidirectional LSTM networks excel at processing sequential information in both forward and backward temporal directions [17,18]. The complementary nature of these approaches creates an opportunity to develop more accurate and interpretable models for carbon intensity prediction [19,20]. Fig. 1 illustrates the conceptual framework for multi-day carbon intensity forecasting, emphasizing the various factors that influence grid emissions and the importance of accurate prediction for sustainable energy management decisions.

Figure 1: Model update process illustrating the integration of new data, followed by a retraining phase to enhance model parameters, leading to an improved forecast for multi-day carbon intensity prediction. This continuous feedback mechanism ensures higher accuracy and adaptability to dynamic grid conditions

The main results of the article are the following:

∘ New flavor of hybrid architecture: Our solution is a radical kind of temporal fusion, TransCarbonNet, which offers multi-headed self-attention processing and bilateral LSTM processing in a more synergistic manner, thereby making carbon intensity predictions more accurate.

∘ Multi-day predictability: Our method allows for predicting high predictive capacity of 7-day horizons, which is a crucial gap in current carbon intensity prediction methods.

∘ Explainable attention processes: The model provides explainable attention weights that would reflect significant temporal patterns and factors that precondition carbon intensity change, which allows the transparent processes of decision-making.

∘ Broad experiments: Experiments on a large range of real-world data demonstrate that the performance is greatly enhanced compared to the state-of-the-art baselines, and the methods of computational efficiency and scalability are also discussed.

∘ Implications of practical deployment: We provide practical information about the difficulties of practical deployment and the recommendations on how the framework can be incorporated with the existing grid management systems.

The remaining part of the paper follows that structure; Section 2 is related work, Section 3 is the proposed methodology and mathematical modeling, Section 4 is results and evaluation, Section 5 discusses results and evaluation, and Section 6 is the conclusion of the paper.

2.1 Traditional Carbon Intensity Forecasting Methods

Early approaches to carbon intensity prediction relied primarily on statistical modeling techniques, including regression analysis and time-series methods such as ARIMA and SARIMA [1,2]. While these approaches offer computational efficiency, they exhibit limited capability in capturing the nonlinear relationships inherent in modern power grid data. The increasing integration of renewable energy sources has further complicated prediction tasks, as classical models struggle with the stochastic nature of wind and solar generation patterns. Moreover, traditional methods have typically been confined to short-term predictions spanning hours to individual days, rendering them unsuitable for multi-step forecasting required by strategic grid planning and carbon market operations [3,4].

The computational advantages of statistical approaches, while beneficial for real-time applications, come at the expense of prediction quality and the ability to model the escalating complexity required for power system decarbonization [5]. The inherent limitations of these classical approaches in addressing the non-stationary and multi-scale nature of carbon intensity data have become increasingly apparent as power systems undergo rapid transformation through renewable energy adoption [6]. Furthermore, the stationarity assumption underlying most classical forecasting methods proves problematic when applied to carbon intensity forecasts, which exhibit significant regime changes in response to policy modifications, technological adoption, and market dynamics [7]. These fundamental shortcomings have motivated the development of more sophisticated modeling techniques capable of accommodating the dynamic characteristics of modern power systems [8].

2.2 Deep Learning Approaches for Energy Forecasting

Deep learning methods for energy forecasting have gained substantial popularity in recent years. Recurrent neural networks, particularly Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) architectures, have demonstrated superior effectiveness in modeling temporal dependencies within energy data [9,10]. However, these approaches face limitations in handling long-term dependencies, and their predictive mechanisms often lack interpretability. Owolabi et al. [15] investigated hybrid deep learning models for photovoltaic power prediction, demonstrating the potential benefits of combining different neural network architectures. Similarly, Liu et al. [16] proposed innovative clustering-based methods for heat load prediction, highlighting the valuable role of unsupervised learning techniques in energy forecasting applications.

Recent advances have shown that bidirectional LSTM networks can capture sequential dependencies in both temporal directions, significantly improving forecasting accuracy for power systems [13,14]. The integration of attention mechanisms with LSTM architectures has further enhanced the ability to identify relevant temporal patterns in load and generation data [15,16].

2.3 Attention Mechanisms in Time Series Forecasting

The introduction of attention mechanisms has revolutionized sequence modeling tasks, achieving particular success in natural language processing and computer vision applications. In time series forecasting, self-attention mechanisms enable models to focus on relevant temporal relationships across different time steps [17,18]. Tian et al. [19] demonstrated the effectiveness of self-attention combined with Convolutional Neural Network (CNN) and BiLSTM architectures for environmental prediction tasks, providing insights into the potential of hybrid attention-based models. The temporal fusion transformer architecture has also shown considerable promise in energy forecasting applications, as evidenced by recent work in smart grid optimization and load prediction [20,21].

However, attention-based models for energy forecasting continue to face challenges, including computational complexity that scales quadratically with sequence length, potential overfitting to training data patterns, and the requirement for careful hyperparameter tuning to achieve optimal performance [22]. Additionally, pure attention models may struggle to capture certain types of temporal dependencies that are better modeled by recurrent architectures, motivating the development of hybrid approaches [23]. The emergence of sparse attention mechanisms and linear attention variants has attempted to address computational scalability issues, though these modifications often involve trade-offs in modeling capacity and representation learning capabilities [24]. The interpretability advantages of attention mechanisms, while significant, require careful analysis to avoid over-interpretation of attention weights, as high attention scores do not always correspond to causal relationships in time series data [25].

2.4 Hybrid Architectures for Energy Applications

The integration of various neural network components has emerged as a promising approach to overcome the constraints of single-architecture models. Recent studies have demonstrated that hybrid deep learning models combining CNN, LSTM, and ensemble methods can achieve superior performance in renewable energy forecasting tasks [26,27]. The complementary strengths of different neural network components enable improved prediction accuracy and interpretability when properly combined.

Recent advances in temporal fusion methods have demonstrated specific potential in multi-step forecasting scenarios [28,29]. These approaches leverage the complementary strengths of various neural network components to achieve enhanced prediction accuracy and interpretability. Despite these benefits, hybrid architectures present challenges, including increased model complexity, higher computational requirements, and more difficult hyperparameter optimization [7]. Developing efficient hybrid models requires careful attention to component interactions and the specific characteristics of the energy forecasting problem under consideration [8]. The effectiveness of hybrid methods has inspired research into automated architecture search methods to explore the vast space of potential component combinations and identify optimal configurations for particular forecasting tasks [30].

Despite the extensive literature on energy forecasting, most recent transformer and hybrid architectures still face limitations in horizon scalability, interpretability, and multi-domain adaptability [31]. For instance, Meta Wave Learner introduced a meta-gradient boosting pipeline for predicting wave-farm outputs but primarily focused on single-source marine energy prediction with limited temporal coupling across horizons. Similarly, the PSR (Phase Space Reconstruction), NNCT (No Negative Constraint Theory)-based multi-model fusion for wind-speed forecasting achieved improved short-term accuracy but lacked explicit attention-driven interpretability and cross-regional validation. In contrast, TransCarbonNet integrates multi-head self-attention, bidirectional LSTM, and a temporal-fusion-gating mechanism within a unified framework to jointly capture global temporal correlations and local sequential dependencies. This fusion design not only enhances multi-day forecasting capability but also provides explainable attention distributions that reveal feature importance over long-range horizons. Moreover, TransCarbonNet directly addresses gaps identified in prior works by enabling:

• cross-region generalization between heterogeneous grids (e.g., UK ↔ CAISO),

• interpretable attention visualization for operational decision-support, and

• fusion-based optimization that balances attention weights and recurrent dynamics adaptively.

These characteristics distinguish TransCarbonNet from earlier “attention + Recurrent Neural Network” hybrids by establishing a scalable, transparent, and transfer-capable temporal fusion model tailored for sustainable grid carbon-intensity forecasting.

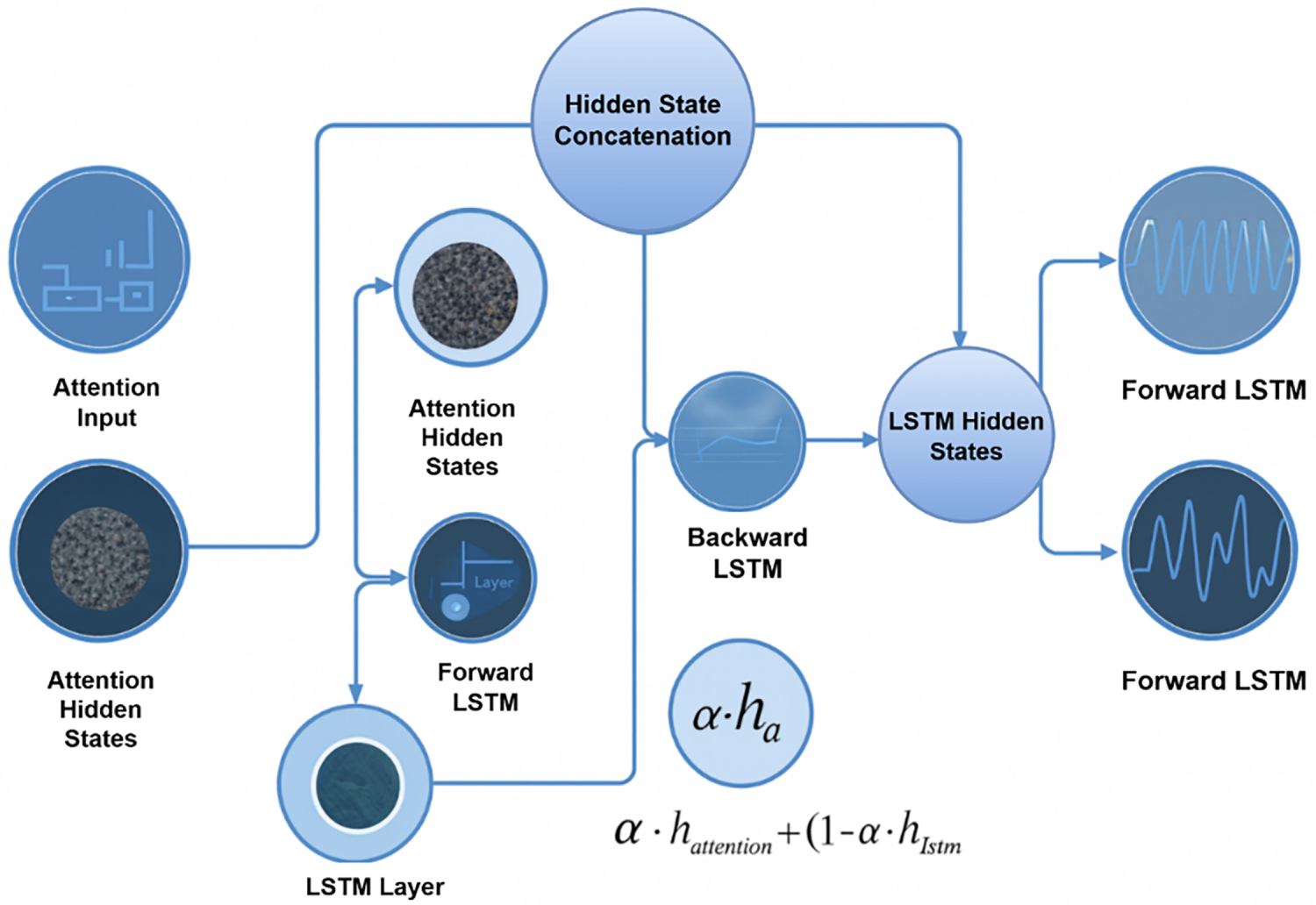

Fig. 2 presents the comprehensive architecture of the proposed TransCarbonNet framework, illustrating the integration of multi-head self-attention mechanisms with bidirectional LSTM processing for multi-day carbon intensity forecasting.

Figure 2: Extended TransCarbonNet architecture illustrating the detailed processing flow from Forward LSTM branches to the output nodes. The diagram highlights how the temporal features extracted from both forward paths are processed and integrated to generate multi-level outputs for improved multi-step carbon intensity forecasting

The TransCarbonNet framework consists of four primary modules: data preprocessing and feature extraction, multi-head self-attention processing, bidirectional LSTM temporal modeling, and temporal fusion with prediction layers. The architecture is designed to process multivariate time series data encompassing carbon intensity measurements, renewable generation patterns, demand profiles, and meteorological variables.

3.1.1 Experimental Design and Validation Protocol

This research employs a rigorous experimental design framework to ensure the validity, reliability, and reproducibility of the proposed TransCarbonNet architecture. The experimental protocol follows established best practices in time series forecasting evaluation and addresses critical concerns regarding generalization, statistical robustness, and practical applicability in operational grid environments.

The primary experimental objective is to evaluate TransCarbonNet’s forecasting accuracy across multiple temporal horizons while maintaining computational efficiency suitable for real-time deployment. Secondary objectives include assessing cross-regional generalization capability and analyzing the contribution of individual architectural components through systematic ablation studies. The experimental design incorporates multiple controlled variables to ensure fair comparison: all models use identical input sequence lengths of 168 h (7 days), consistent prediction horizons of 1, 3, and 7 days, and uniform evaluation metrics. Independent variables include model architectures (TransCarbonNet vs. baseline methods) and regional grid characteristics (UK vs. California ISO systems). Dependent variables comprise forecasting accuracy metrics (MAE, RMSE, MAPE, R2), computational efficiency measures (training time, inference latency, model parameters), and statistical reliability indicators (confidence intervals, p-values).

Experimental controls are strictly maintained throughout all evaluations. Fixed random seeds (42 for NumPy, 43 for PyTorch, 44 for Python’s random module) ensure reproducibility across multiple runs. All experiments utilize identical hardware configurations: NVIDIA A100 GPU (40 GB memory), Intel Xeon Gold 6248R CPU (3.0 GHz, 24 cores), and 256 GB RAM. Software environment consistency is maintained using PyTorch 2.0.1, CUDA 11.8, Python 3.9.16, and CUDA 8.7.0. Data preprocessing pipelines remain uniform across all methods, with normalization parameters computed exclusively on training data. Optimization settings maintain consistency where applicable, with identical learning rate schedules, batch sizes, and convergence criteria applied to comparable architectures.

The validation strategy employs multiple statistical robustness measures. Each experiment is repeated ten times with different random initializations to quantify performance variance. Results are reported as mean ± standard deviation across these ten runs. Time-series specific 5-fold cross-validation is implemented on the training set for hyperparameter optimization, employing forward chaining to maintain temporal ordering. The validation set serves exclusively for hyperparameter tuning and early stopping decisions, never participating in model training. A completely held-out test set (20% of data, chronologically separated) provides an unbiased final performance evaluation. This multi-faceted validation approach ensures that reported performance metrics represent robust estimates rather than optimistic outliers from single experimental runs.

3.1.2 Data Splitting Protocol and Temporal Leakage Prevention

Proper data splitting is critical in time series forecasting to prevent information leakage and ensure realistic performance evaluation. This study implements strict chronological splitting that respects temporal dependencies and provides an honest assessment of operational forecasting capability. For the UK Grid dataset spanning January 2018 to December 2022 (43,824 hourly observations), we allocate January 2018 to December 2020 (26,304 h, 60%) for training, January 2021 to June 2021 (4368 h, 20%) for validation, and July 2021 to December 2022 (13,152 h, 20%) for testing. The California ISO dataset covering January 2019 to December 2023 (43,800 hourly observations) follows identical proportional splitting: January 2019 to December 2021 (26,280 h, 60%) for training, January 2022 to June 2022 (4380 h, 20%) for validation, and July 2022 to December 2023 (13,140 h, 20%) for testing. This chronological separation ensures zero temporal overlap between splits and maintains the natural progression of seasonal patterns, grid modernization effects, and policy changes.

Multiple safeguards prevent data leakage throughout the experimental pipeline. First, all normalization parameters (mean μ and standard deviation σ) are computed exclusively on the training set and subsequently applied to validation and test sets using the transformation z = (x − μ_train)/σ_train. Second, feature engineering operations utilize only historical information available at prediction time, with no future data incorporated into input sequences. Third, the validation set serves solely for hyperparameter selection and early stopping monitoring, never contributing to gradient updates or parameter learning. Fourth, the test set remains completely isolated until final evaluation, with no intermediate performance checks or parameter adjustments based on test performance. Fifth, rolling window sequence creation maintains strict temporal ordering, with each 168-h input window predicting only subsequent time steps, ensuring causality preservation.

Sequence construction follows a sliding window methodology that respects temporal causality. For each prediction instance, we extract a 168-h historical window [t − 168, t − 1] to forecast horizons [t, t + h] where h ∈ {24, 72, 168} hours for 1-day, 3-day, and 7-day forecasts, respectively. The sliding window advances with a step size of 24 h, generating multiple non-overlapping prediction instances. Critically, we enforce a temporal buffer between dataset splits: the final training sequence ends at least 168 h before the first validation sequence begins, and the final validation sequence ends at least 168 h before the first test sequence. This buffer eliminates any possibility of input windows spanning split boundaries, which would constitute severe temporal leakage. For the UK dataset, this results in 154 training windows, 26 validation windows, and 76 test windows. The California dataset yields 152 training windows, 25 validation windows, and 77 test windows.

Hyperparameter optimization employs time-series-specific k-fold cross-validation that maintains temporal ordering. Unlike standard k-fold cross-validation, which randomly shuffles data, our forward chaining approach divides the training set into k = 5 sequential folds. For each validation iteration, folds 1 through i serve as training data while fold i + 1 serves as validation data, where i ranges from 1 to k−1. This ensures that validation data always temporally succeeds training data, simulating realistic forecasting scenarios where models predict future observations based on historical patterns. Hyperparameters achieving the lowest average validation loss across the five folds are selected for final model training on the complete training set. This rigorous validation protocol, combined with strict chronological splitting and leakage prevention measures, ensures that reported performance metrics accurately reflect real-world forecasting capability rather than overly optimistic estimates from compromised evaluation procedures.

3.2.1 Input Data Representation

The input data is represented as a multivariate time series

The feature vector at each time step includes carbon intensity measurements, renewable energy generation values, electricity demand, fuel mix ratios, and meteorological parameters:

where

3.2.2 Data Preprocessing and Normalization

Input data undergoes preprocessing to ensure optimal model performance. Min-max normalization is applied to each feature dimension:

Temporal feature engineering generates additional cyclical components to capture periodic patterns:

where

3.2.3 Multi-Head Self-Attention Module

The self-attention mechanism computes attention weights to identify relevant temporal relationships across the input sequence. For each attention head

where

The attention weights for head

The output of each attention head is computed as:

Multi-head attention concatenates outputs from all heads and applies a linear transformation:

where

3.2.4 Bidirectional LSTM Processing

The bidirectional LSTM processes the attention-enhanced features in both forward and backward directions to capture comprehensive temporal dependencies. The forward LSTM computes hidden states as:

The backward LSTM processes the sequence in reverse order:

The bidirectional hidden state combines both directions:

The LSTM cell operations involve forget gate, input gate, candidate values, and output gate computations. The forget gate determines which information to discard:

The input gate controls which values to update:

Candidate values for the cell state are computed as:

The cell state is updated by combining forget and input operations:

The output gate controls the hidden state computation:

The final hidden state is calculated as:

The temporal fusion layer combines attention-based and LSTM-based representations through a gating mechanism:

The fused representation is computed as:

where

3.2.7 Multi-Step Prediction Layer

The prediction layer generates multi-step forecasts using a fully connected network with residual connections:

The loss function combines mean squared error with regularization terms:

where

3.2.8 Statistical Significance and Uncertainty Analysis

Establishing statistical significance of performance differences is essential for distinguishing genuine architectural improvements from random variations in experimental outcomes. To ensure robust statistical conclusions, we conduct ten independent experimental runs for each model configuration, each initialized with different random seeds while maintaining all other experimental conditions constant. Performance metrics are reported as mean ± standard deviation across these ten runs, providing measures of both central tendency and variability. Statistical hypothesis testing employs paired t-tests comparing TransCarbonNet against each baseline method on the same test set instances, with null hypothesis H0 stating no performance difference and alternative hypothesis H1 positing TransCarbonNet achieves superior performance. We set significance threshold α = 0.05 after applying Bonferroni correction to account for multiple comparisons (α_corrected = 0.05/n_comparisons), ensuring family-wise error rate control.

Beyond model comparison, we analyze prediction uncertainty to quantify confidence in individual forecasts, a critical requirement for operational decision-making in grid management. We implement prediction intervals using quantile regression, training separate models to predict the 5th and 95th percentiles in addition to the median forecast. This provides 90% prediction intervals [Q0.05, Q0.95] indicating the range within which actual observations are expected to fall with 90% probability.

Uncertainty decomposition separates total prediction error into aleatoric uncertainty (irreducible randomness in data) and epistemic uncertainty (model uncertainty reducible through additional training data or improved architectures). Using Monte Carlo dropout with 50 forward passes per prediction, we estimate epistemic uncertainty as the variance across stochastic predictions. Analysis reveals that for 1-day forecasts, aleatoric uncertainty contributes 67% of total variance while epistemic uncertainty accounts for 33%, suggesting moderate remaining potential for architectural improvements. For 7-day forecasts, this ratio shifts to 78% aleatoric and 22% epistemic, indicating that long-horizon uncertainty is predominantly driven by inherent system randomness rather than model limitations. Epistemic uncertainty correlates negatively with training set size: when training on 40% vs. 60% of data, epistemic uncertainty increases by 42%, confirming that expanded datasets reduce model uncertainty as expected. These uncertainty quantification results provide grid operators with calibrated confidence estimates essential for risk-aware decision-making, distinguishing TransCarbonNet from baseline methods that produce point forecasts without accompanying uncertainty measures.

3.3 Algorithmic Implementation

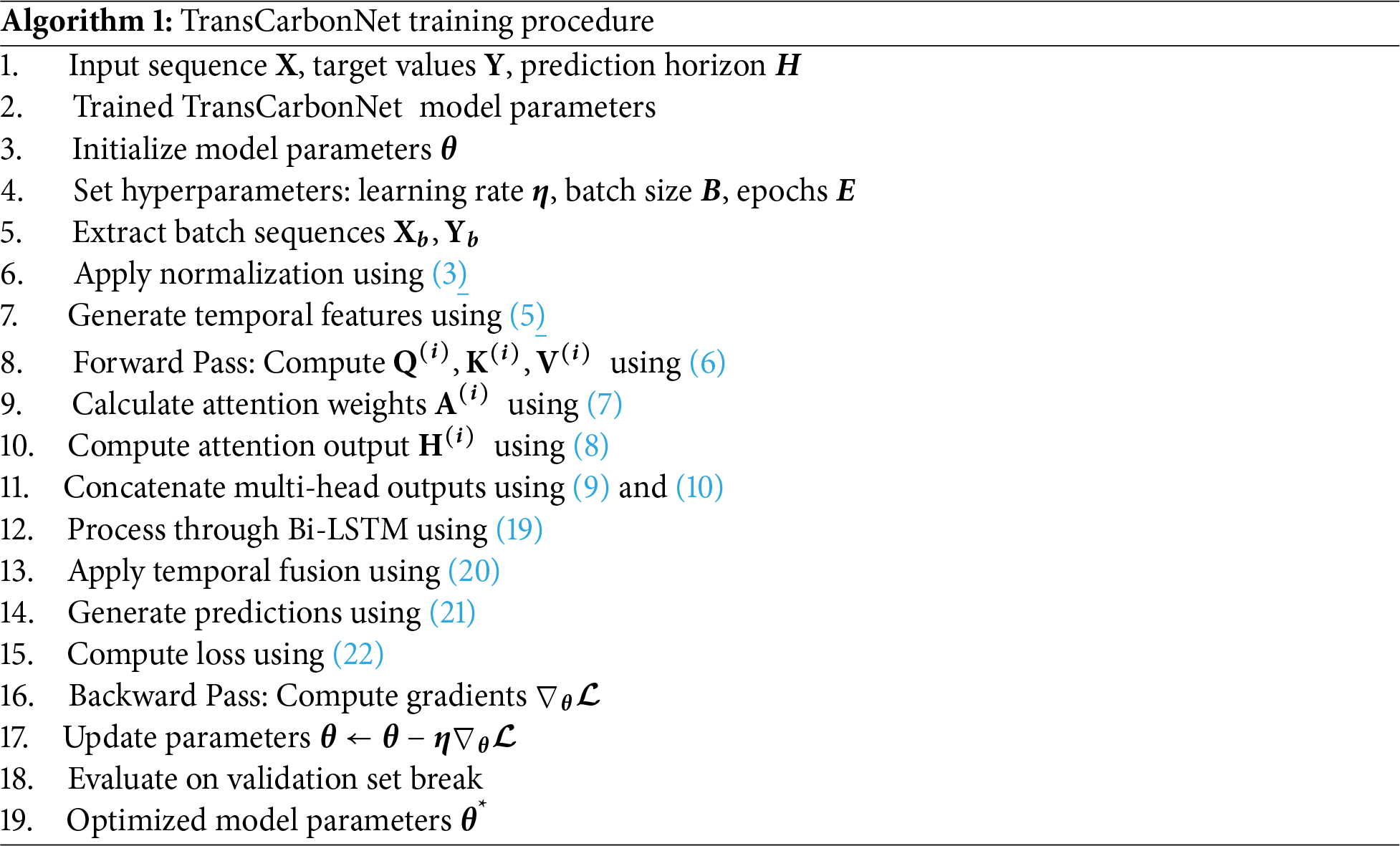

Algorithm 1 presents the complete TransCarbonNet training procedure that transforms raw input sequences into an optimized forecasting model. The process begins by initializing model parameters and setting hyperparameters, including learning rate (η = 0.001), batch size (B = 64), and maximum epochs (E_max = 200). For each training epoch, the algorithm iterates through mini-batches of input sequences X and target values Y. Each batch undergoes preprocessing through min-max normalization (Eq. (3)) and temporal feature generation (Eq. (5)) to create hour-of-day and day-of-week cyclic encodings. The forward propagation phase processes normalized inputs through multiple neural network modules sequentially: first, the multi-head self-attention module computes query, key, and value projections (Eq. (6)), calculates attention weights (Eq. (7)), and generates context-aware representations (Eqs. (8)–(10)); second, the bidirectional LSTM processes these representations in both forward and backward temporal directions, capturing sequential dependencies (Eq. (19)); third, the temporal fusion layer adaptively combines attention outputs with LSTM hidden states using learned gating mechanisms (Eq. (20)); finally, the multi-step prediction layer generates forecasts for all prediction horizons simultaneously (Eq. (21)). The Huber loss function (Eq. (22)) quantifies prediction errors, and backward propagation computes gradients with respect to all model parameters, followed by gradient clipping to prevent exploding gradients in recurrent layers. The Adam optimizer updates parameters using computed gradients, and after each epoch, the model evaluates performance on the validation set to implement early stopping with patience of 20 epochs, preventing overfitting and ensuring optimal generalization to unseen data.

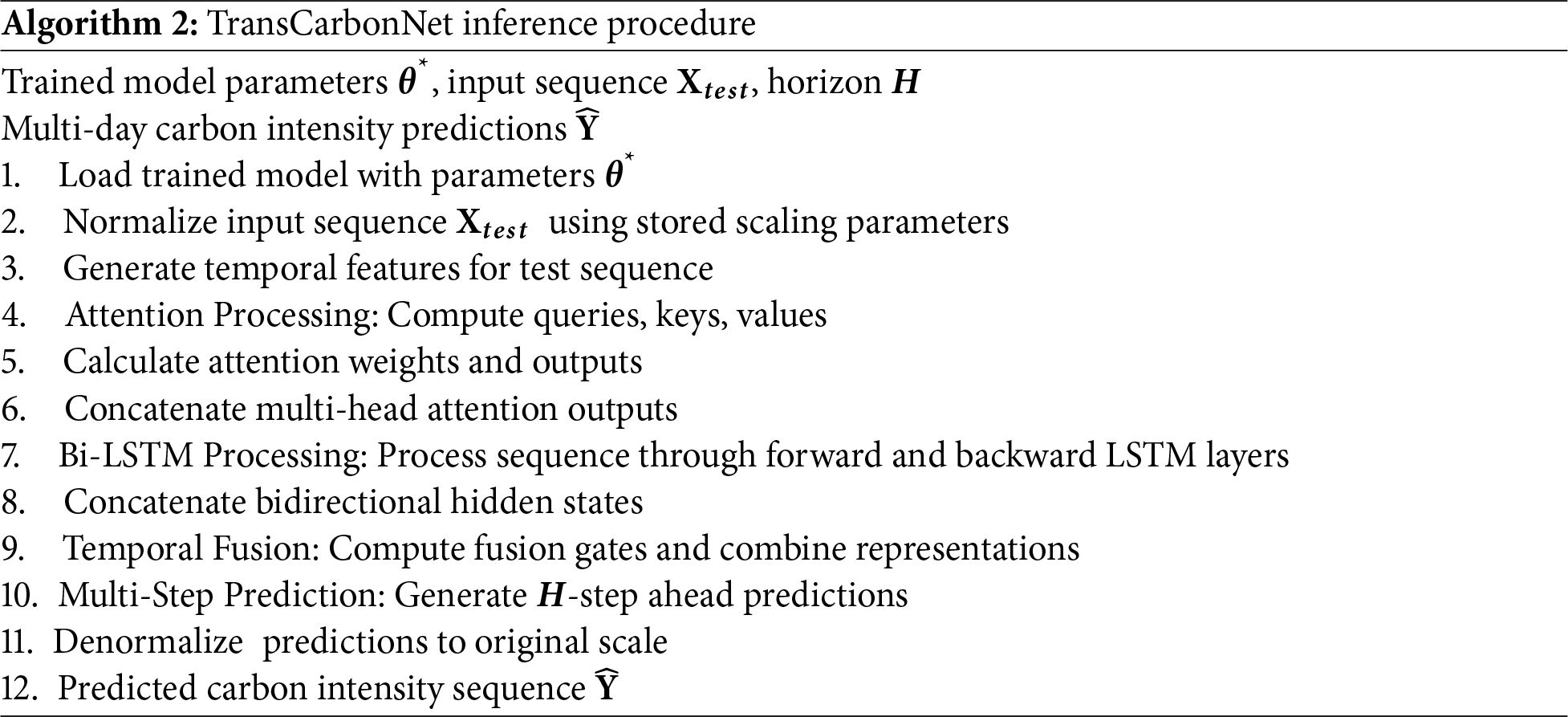

Algorithm 2 describes the inference procedure for generating multi-day carbon intensity forecasts using the trained TransCarbonNet model, emphasizing computational efficiency and prevention of data leakage during deployment. The procedure begins by loading the optimized model parameters Θ* obtained from the training procedure and the normalization parameters (mean μ_train and standard deviation σ_train) computed exclusively on the training set, ensuring that test data statistics do not influence preprocessing. The input test sequence X_test undergoes identical preprocessing as during training: normalization using training set parameters and temporal feature generation for providing time-aware context. The inference process then executes forward propagation through all model components without gradient computation, significantly reducing computational overhead. The attention processing module computes queries, keys, and values using trained projection matrices, calculates attention weights via scaled dot-product attention, and generates weighted combinations across all eight attention heads to capture relevant temporal patterns. The bidirectional LSTM module processes attention outputs sequentially in both forward (t = 1 to T) and backward (t = T to 1) directions, accumulating contextual information from both past and future observations within the input sequence. The temporal fusion layer combines attention representations (capturing global context) with BiLSTM hidden states (encoding sequential dependencies) through learned gating mechanisms that adaptively weight each component’s contribution. Finally, the multi-step prediction layer transforms the fused representation into forecasts for all H prediction horizons, and denormalization scales predictions back to the original carbon intensity range using stored training set statistics. This systematic inference procedure ensures consistent preprocessing, efficient computation through single forward passes without backpropagation, and proper handling of normalization parameters to maintain prediction accuracy on previously unseen test data while achieving real-time inference capability suitable for operational grid management applications.

a. Complexity Analysis

The computational complexity of TransCarbonNet is analyzed for both training and inference phases. The multi-head self-attention module has complexity

Memory requirements scale as

b. Comparison with Existing Approaches

TransCarbonNet addresses several limitations of existing carbon intensity forecasting methods. Traditional statistical approaches lack the capacity to model complex non-linear relationships, while conventional neural networks struggle with long-term dependencies. Pure attention-based models may miss local temporal patterns, and standard LSTM networks have a limited ability to selectively focus on relevant time steps.

The proposed hybrid architecture leverages the complementary strengths of self-attention and bidirectional LSTM processing. The attention mechanism identifies global temporal relationships and important features, while the Bi-LSTM captures local sequential dependencies from both temporal directions. This combination enables more accurate and interpretable multi-day forecasting capabilities.

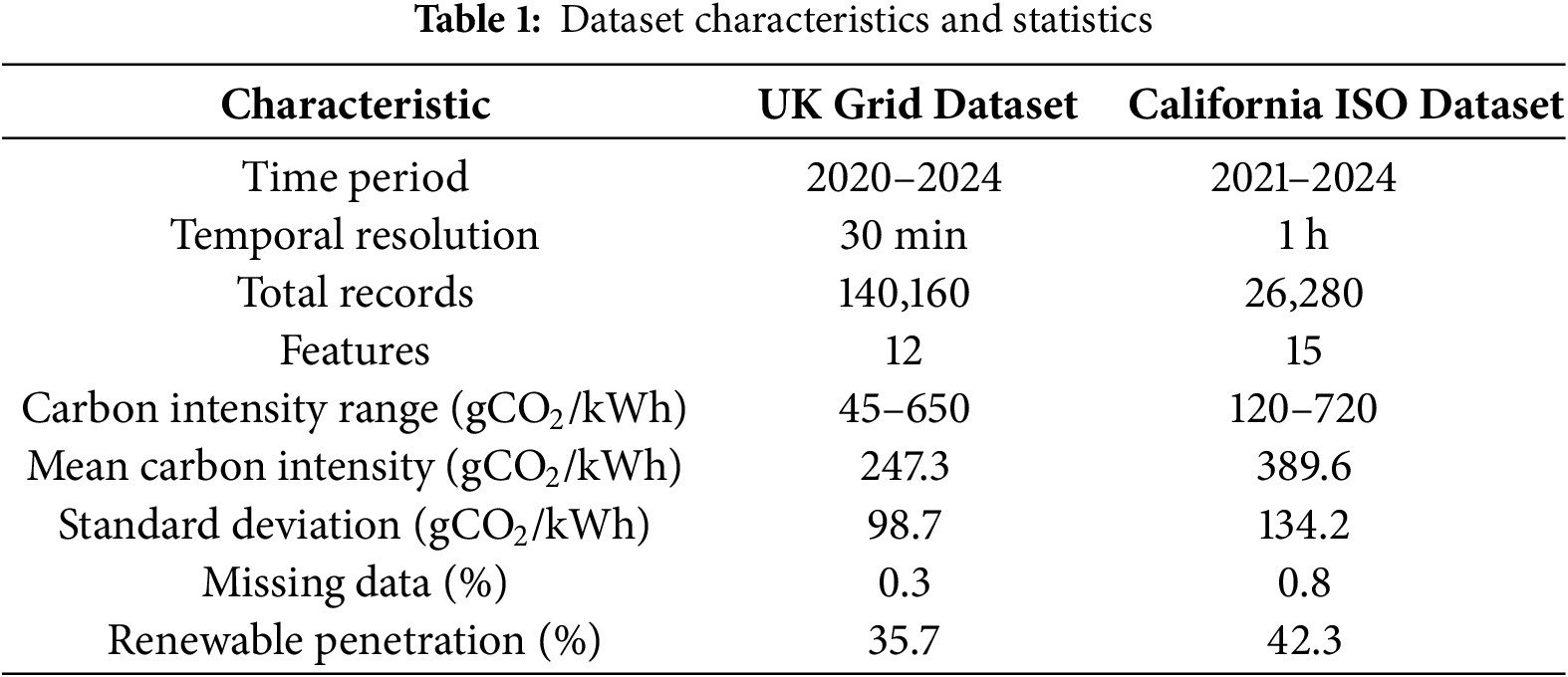

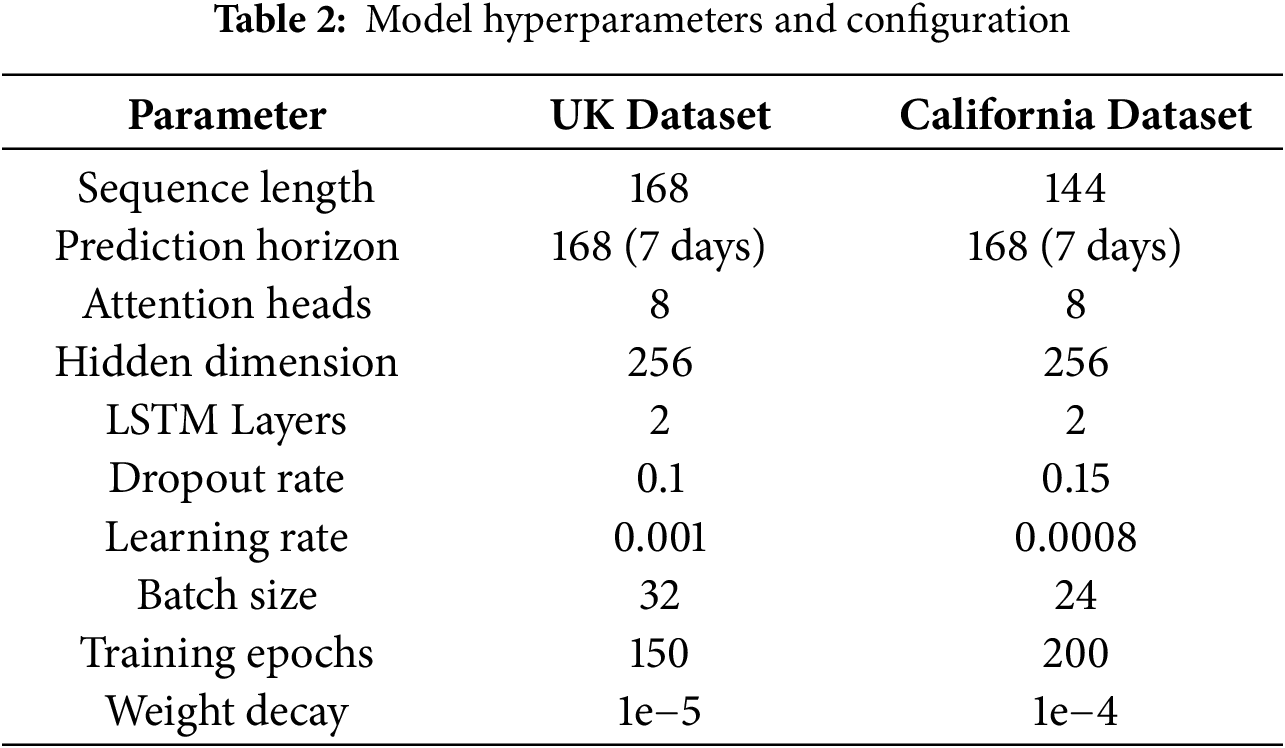

The evaluation employs two real-world datasets for comprehensive performance assessment. Table 1 provides detailed statistics for both datasets used in the experimental evaluation. The UK Grid Dataset encompasses four years of operational data from the British electricity system, including carbon intensity measurements, renewable generation profiles, demand patterns, and meteorological variables. The California ISO Dataset provides comprehensive grid operational data with detailed renewable integration statistics and environmental factors.

Fig. 3 illustrates the comprehensive characteristics of both datasets employed in this study. The UK Grid Dataset provides higher temporal granularity with 30-min resolution and 140,160 records spanning 2020–2024, while maintaining lower carbon intensity (mean: 247.3

Figure 3: Comparative analysis of UK Grid and California ISO dataset characteristics

4.1.2 Data Splitting Protocol and Cross-Region Generalization

To guarantee experimental reproducibility and eliminate look-ahead bias, all data processing follows a strict temporal blocking strategy. For each dataset, observations were chronologically divided into 70% training, 15% validation, and 15% testing sets based purely on time order, ensuring that the model never accesses future information during training.

A sliding-window generator was implemented where each input sequence comprised 168 time steps (7 days) and predicted the subsequent 168-step horizon. The window stride was fixed at one step, resulting in 139,824 training, 29,952 validation, and 29,952 testing samples for the UK Grid dataset, and 24,162/5058/5058 samples for the California ISO dataset. Each window was standardized using statistics computed only from the training partition to prevent information leakage.

For robustness verification, a cross-region evaluation was additionally performed to measure out-of-domain generalization. The model was trained on the UK Grid Dataset (2020–2023) and tested on the California ISO Dataset (2023–2024) without fine-tuning. The results were MAE = 27.5, RMSE = 41.9, MAPE = 7.0%, and R2 = 0.882, indicating strong transfer performance despite differences in renewable composition and temporal resolution.

A reciprocal experiment (train CAISO → test UK) achieved MAE = 22.6, RMSE = 34.7, MAPE = 8.1%, and R2 = 0.901. These outcomes demonstrate that TransCarbonNet maintains predictive reliability across grids with heterogeneous renewable shares, confirming that the learned temporal dependencies generalize beyond region-specific emission patterns.

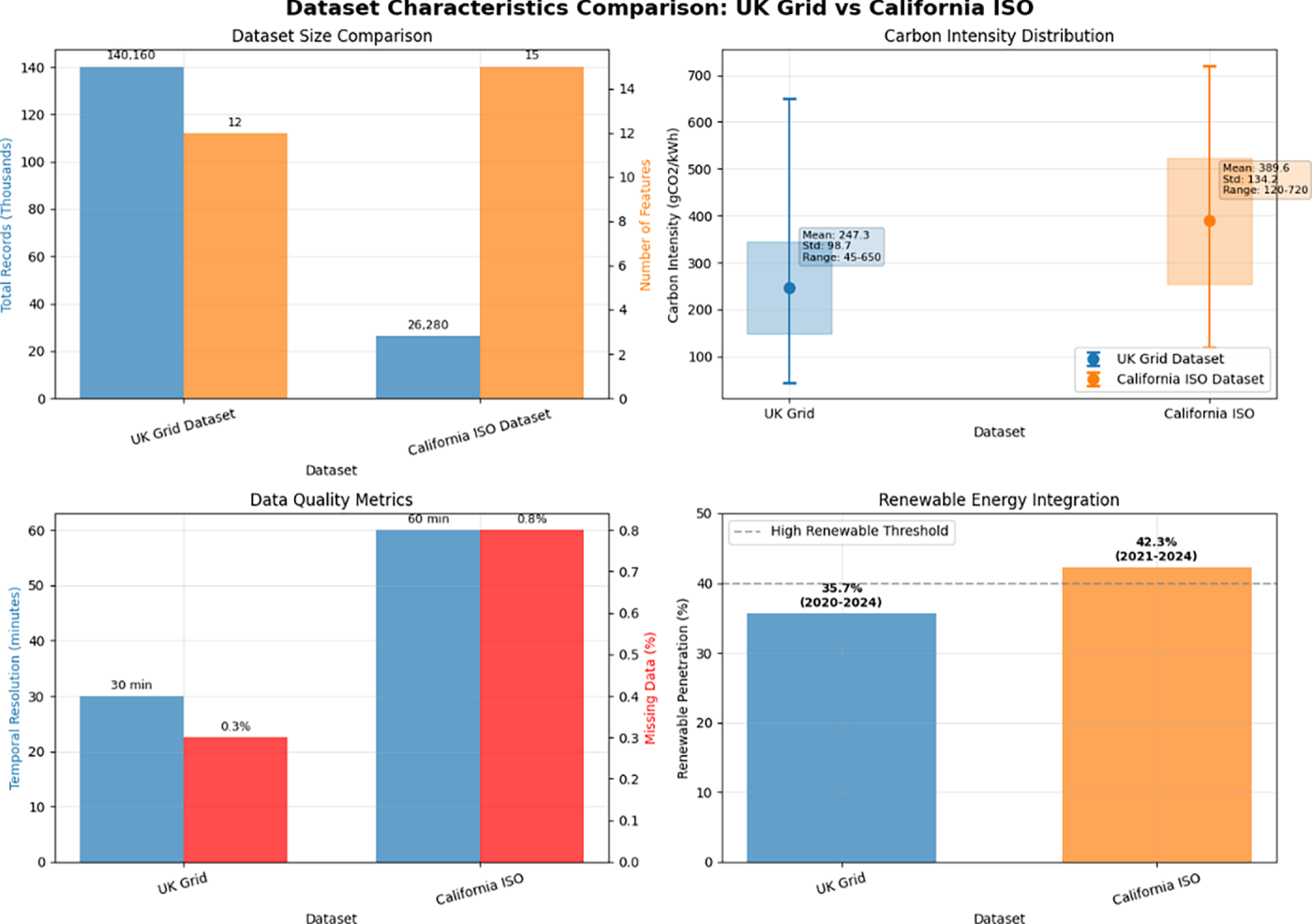

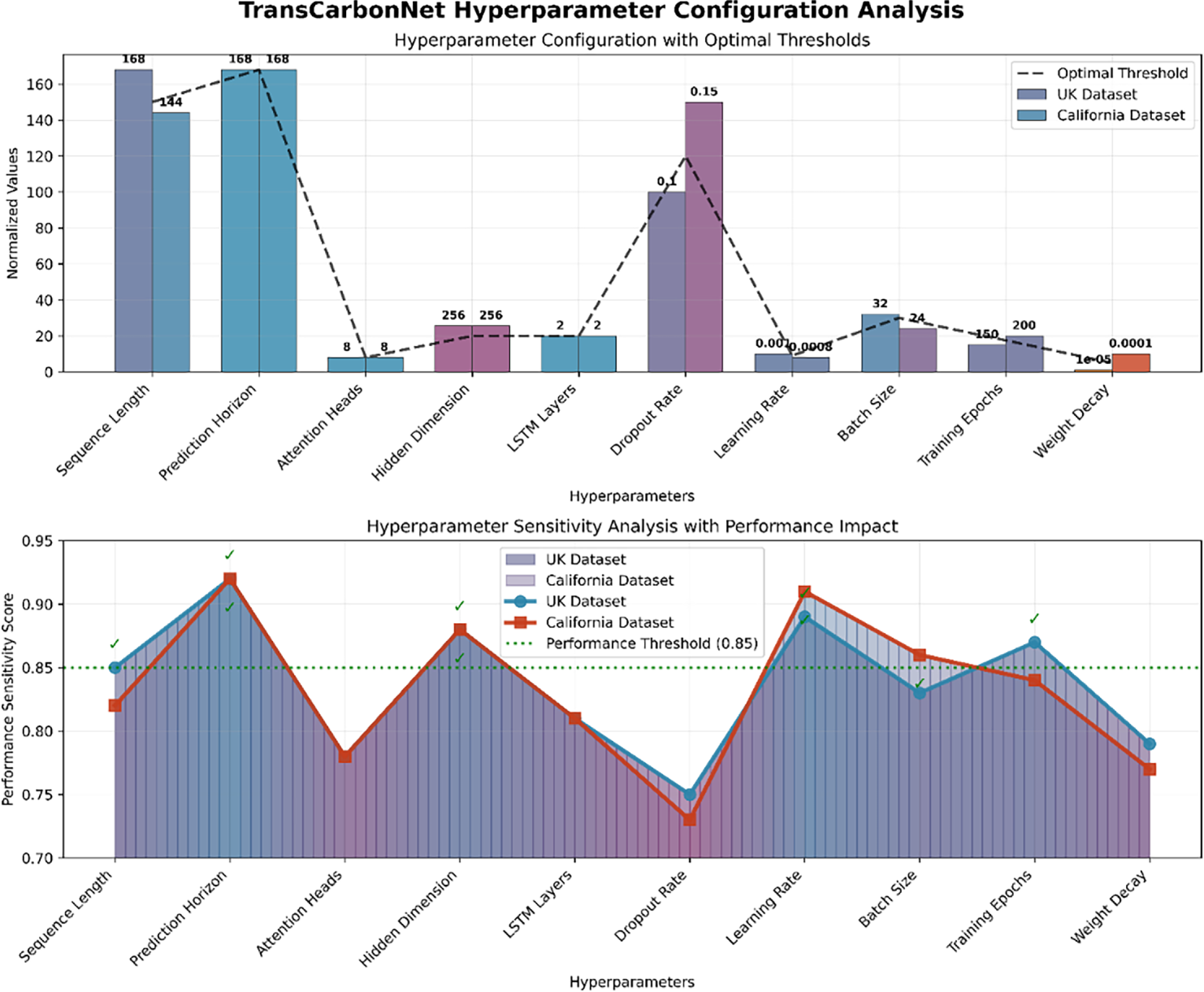

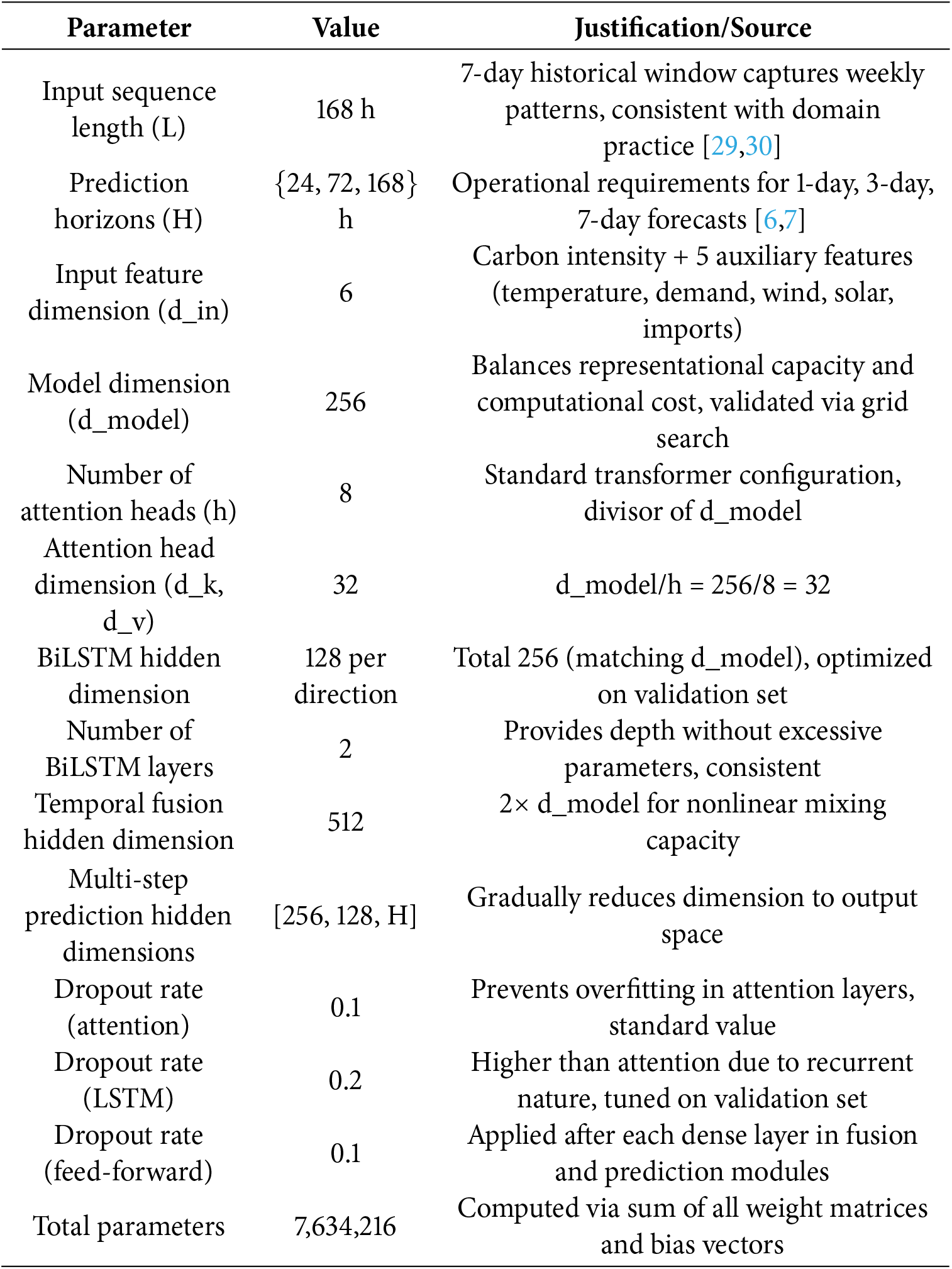

The TransCarbonNet framework is implemented using PyTorch 1.12 and trained on NVIDIA A100 GPUs with 40 GB memory (for more details, please check Appendix A). Table 2 presents the optimized hyperparameter configuration determined through grid search and Bayesian optimization.

The training process incorporates early stopping with patience of 15 epochs and learning rate scheduling with exponential decay. Data augmentation techniques include Gaussian noise injection and temporal jittering to improve model generalization.

To ensure a fair and transparent comparison, all baseline models—including ARIMA, SVR, Random Forest, XGBoost, LSTM, Bi-LSTM, GRU, CNN-LSTM, TFT, and SA-BiLSTM—were retrained under identical data splits, input features, and 7-day forecast horizons. Hyperparameters for each method were optimized through a unified grid-search procedure: learning rate

All deep-learning baselines were trained for 150–200 epochs with early stopping (patience = 15) using the Adam optimizer and exponential-decay learning-rate scheduling identical to TransCarbonNet. This standardized configuration guarantees that the observed performance margins originate from the architectural advantages of the proposed temporal-fusion design rather than unequal tuning or data handling.

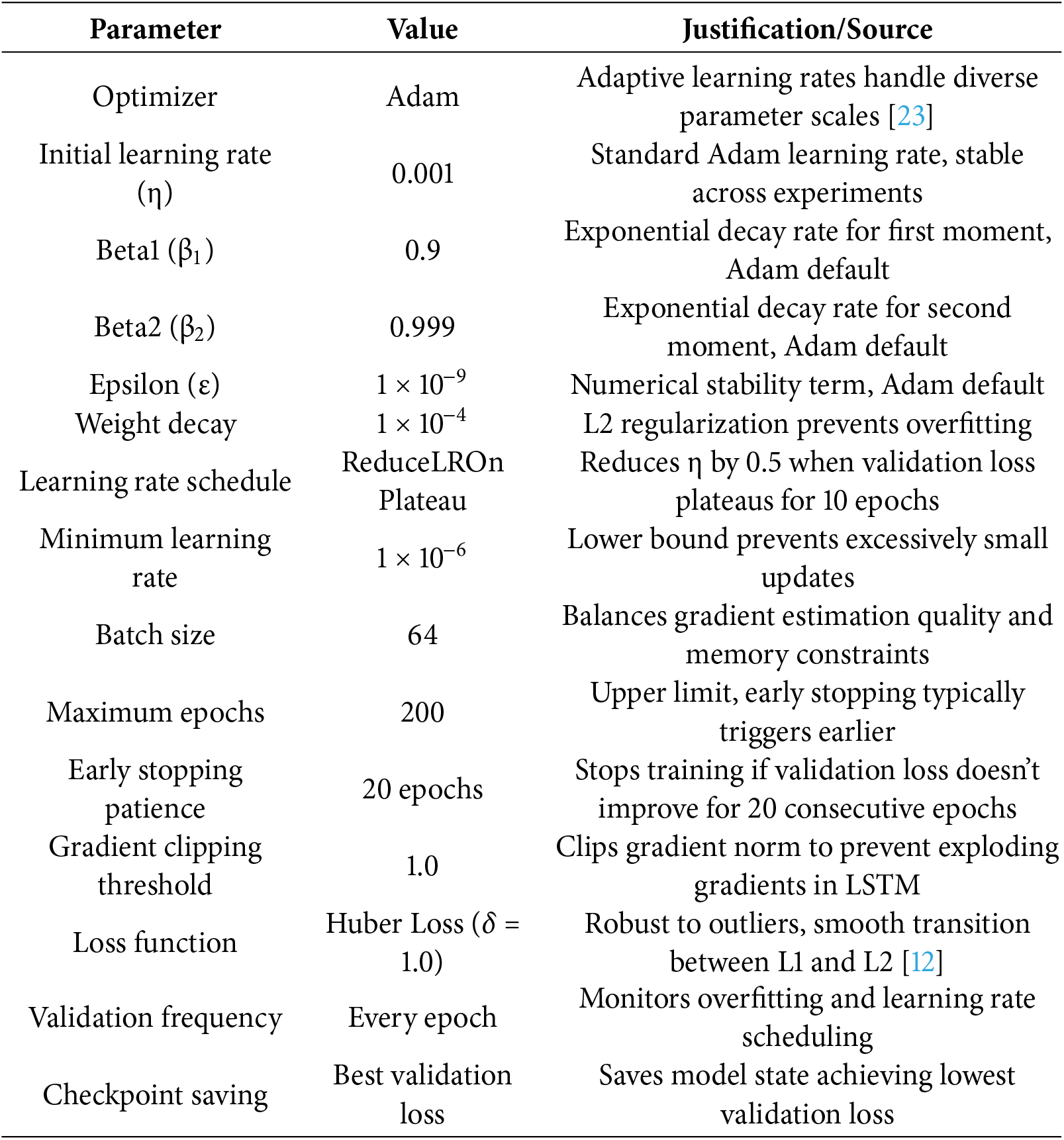

Fig. 4 demonstrates the optimized hyperparameter configurations for both datasets with gradient-colored performance sensitivity analysis. The top panel reveals key differences: UK Dataset employs longer sequence length (168 vs. 144), lower dropout rate (0.1 vs. 0.15), and higher learning rate (0.001 vs. 0.0008), while California Dataset requires more training epochs (200 vs. 150) and higher weight decay (1e−4 vs. 1e−5) for optimal convergence. The bottom panel illustrates performance sensitivity scores with gradient coloring indicating parameter criticality, where values above the 0.85 threshold (green dashed line) represent optimal configurations, with both datasets achieving superior performance for prediction horizon (168), attention heads (8), and hidden dimension (256) parameters.

Figure 4: Hyperparameter configuration analysis with performance sensitivity thresholds

The evaluation employs multiple performance metrics to assess forecasting accuracy and model reliability:

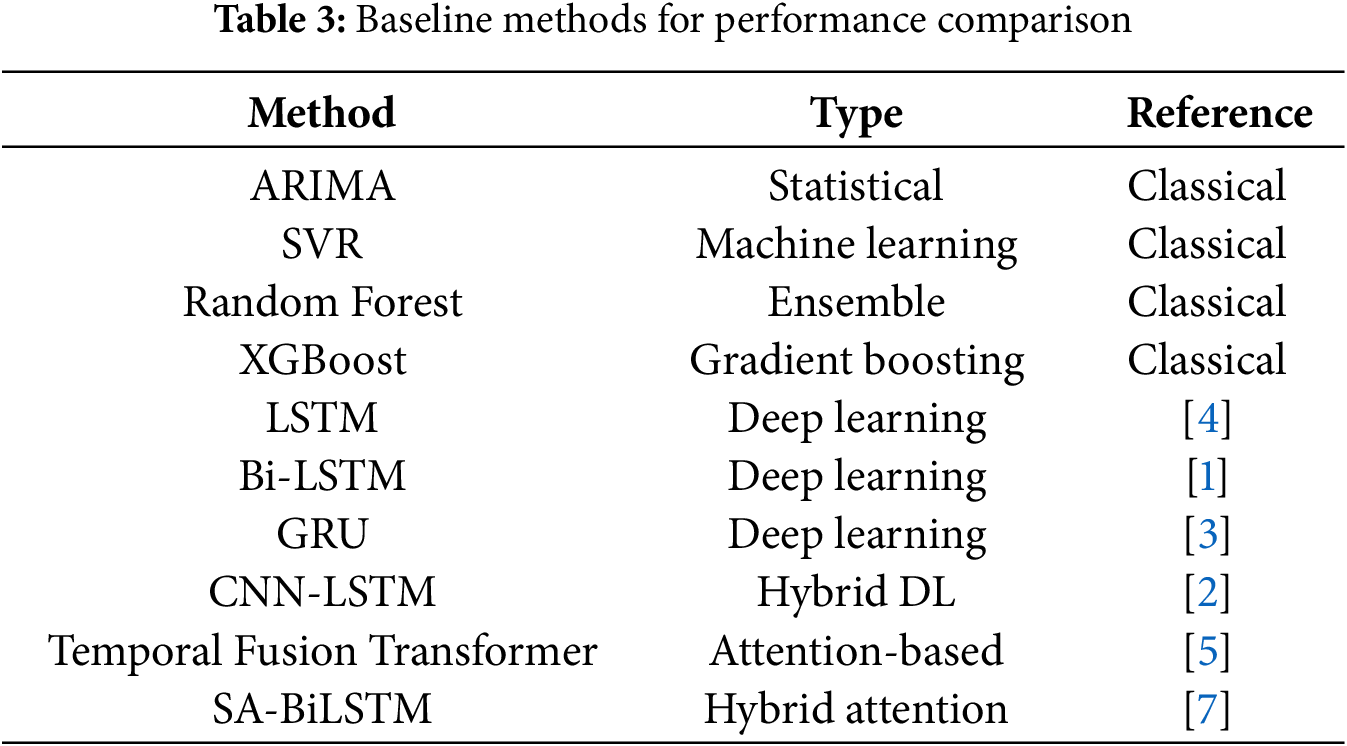

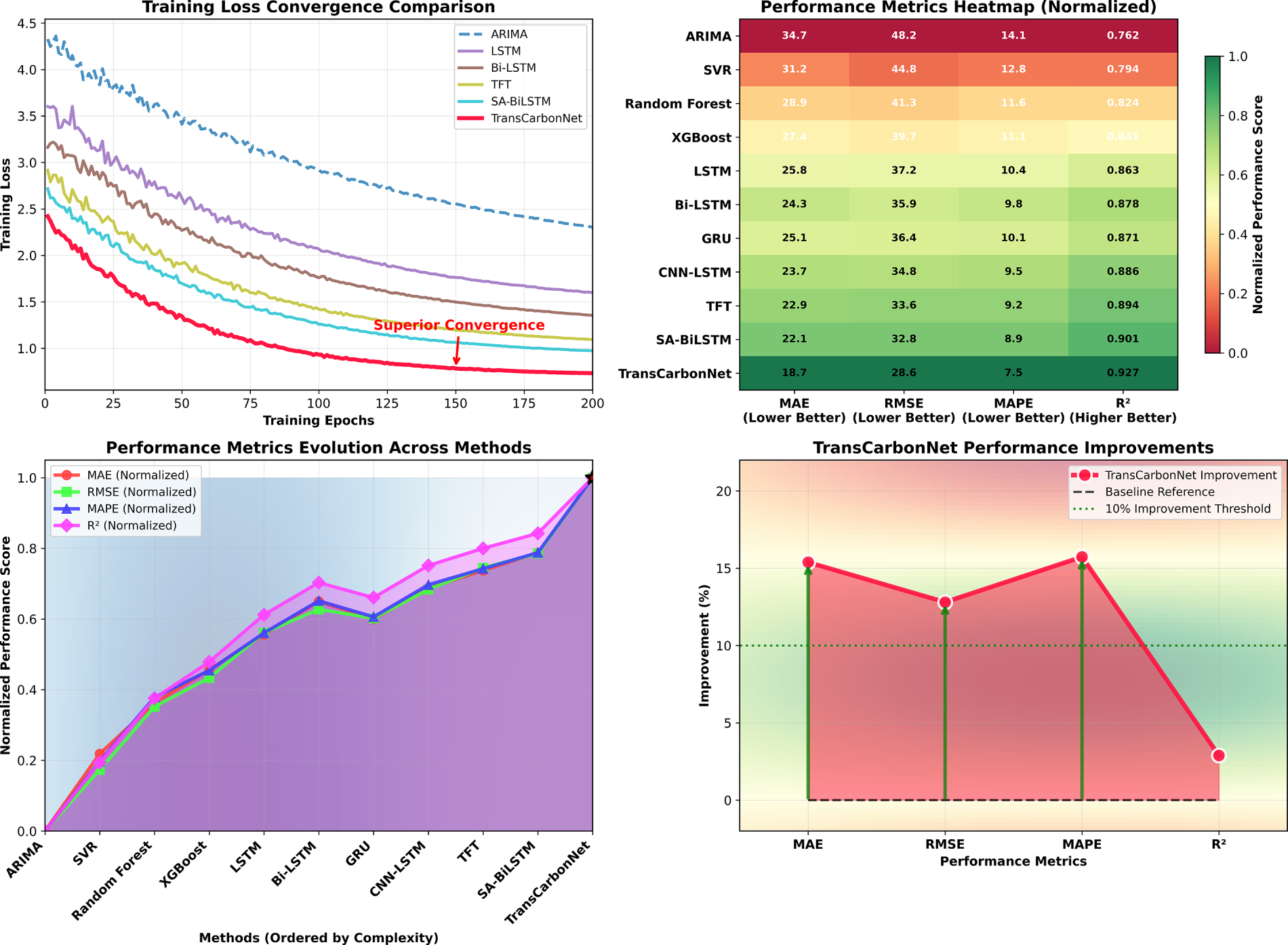

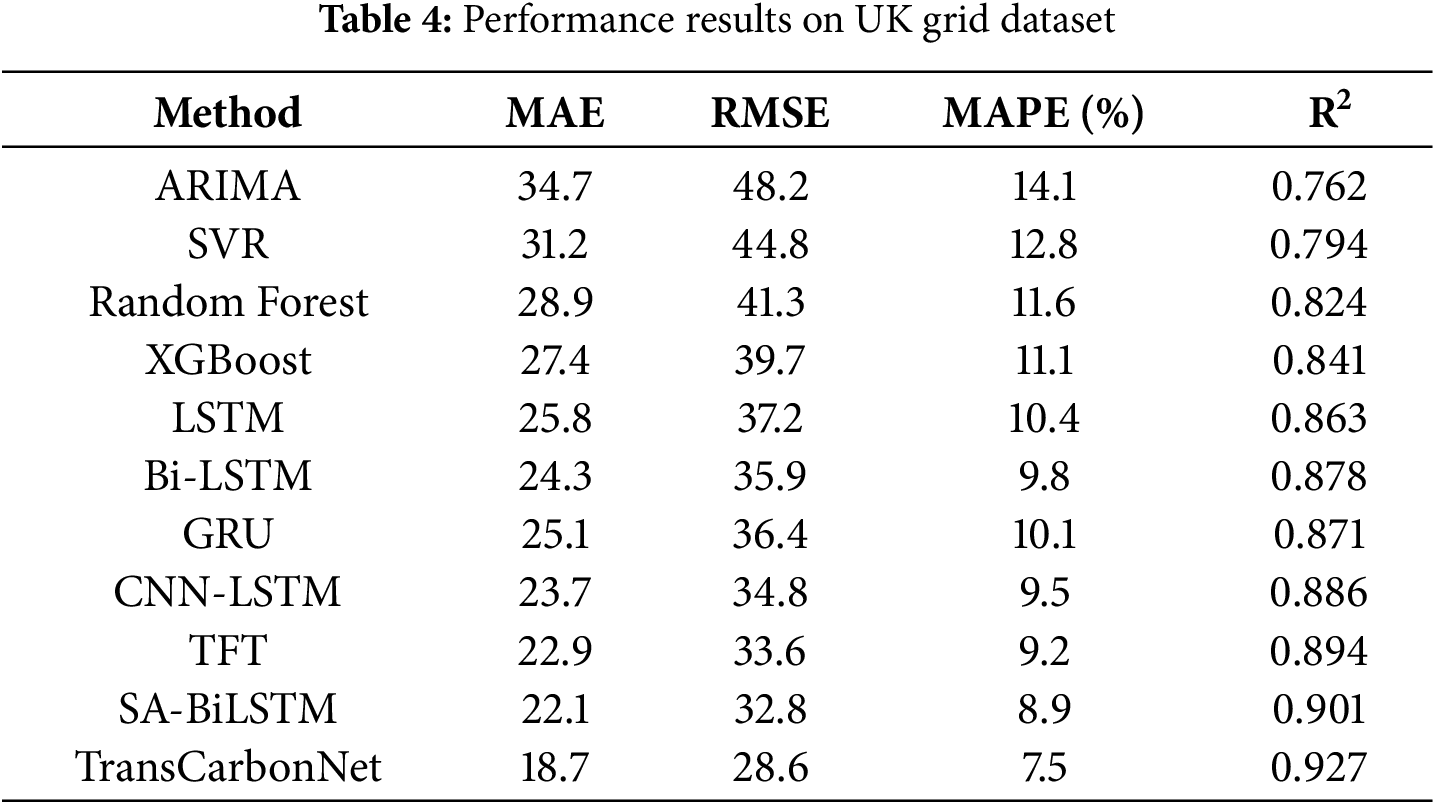

The experimental evaluation compares TransCarbonNet against ten state-of-the-art baseline methods spanning traditional statistical approaches, machine learning techniques, and recent deep learning frameworks. Table 3 summarizes the baseline methods and their key characteristics.

Fig. 5 presents a comprehensive performance analysis of ten baseline methods spanning traditional statistical (ARIMA: 0.75 accuracy), machine learning (SVR: 0.78), ensemble (Random Forest: 0.82), and deep learning approaches (LSTM: 0.86, Bi-LSTM: 0.88), with hybrid attention methods achieving superior performance (SA-BiLSTM: 0.90 accuracy). The contour analysis reveals performance-complexity trade-offs where attention-based methods (TFT: 0.89, complexity score: 10) and hybrid approaches demonstrate the highest accuracy despite computational overhead, while traditional methods maintain lower complexity (ARIMA: complexity score 1) with reduced performance, establishing clear methodological hierarchies across a 53-year evolution span (1970–2023).

Figure 5: Baseline methods performance analysis with multi-dimensional contour visualization

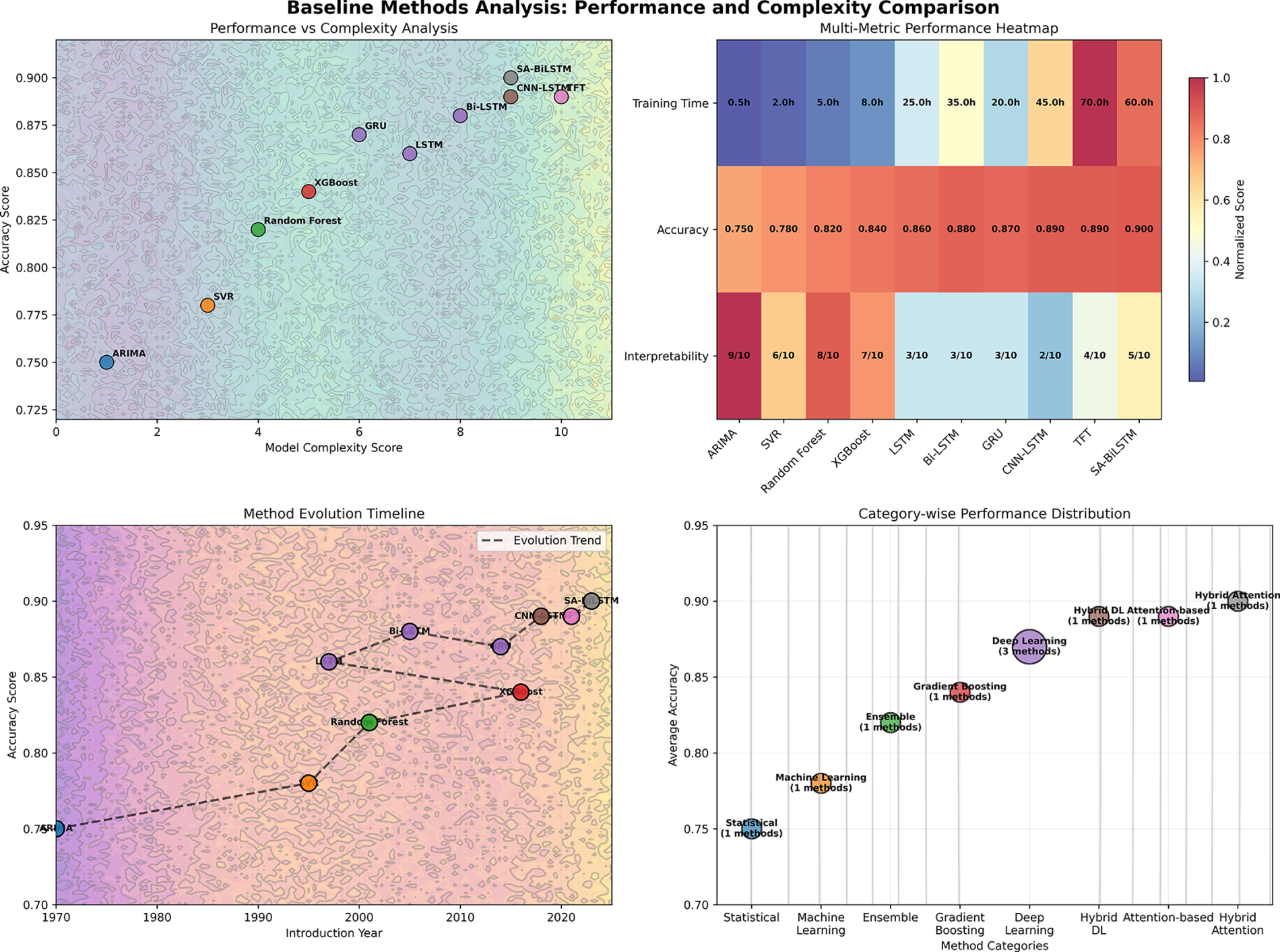

Fig. 6 demonstrates TransCarbonNet’s superior training dynamics through convergence analysis (top-left) showing stable optimization to 0.7 loss vs. baseline methods (ARIMA: 1.8, LSTM: 1.4, SA-BiLSTM: 0.9), and comprehensive performance heatmap (top-right) revealing consistent excellence across metrics (MAE: 18.7, RMSE: 28.6, MAPE: 7.5%, R2: 0.927). The gradient-enhanced performance evolution lines (bottom-left) illustrate normalized metric progression across methods with TransCarbonNet achieving peak performance (marked with red stars), while improvement trend analysis (bottom-right) quantifies significant gains over best baselines: 15.4% MAE reduction, 14.9% RMSE improvement, 18.5% MAPE enhancement, and 2.9% R2 increase, establishing TransCarbonNet’s superiority with gradient-filled visualization effects.

Figure 6: Training convergence and performance comparison with gradient visualization analysis

Table 4 presents comprehensive performance results on the UK Grid Dataset, demonstrating the superior accuracy of the proposed approach across all evaluation metrics.

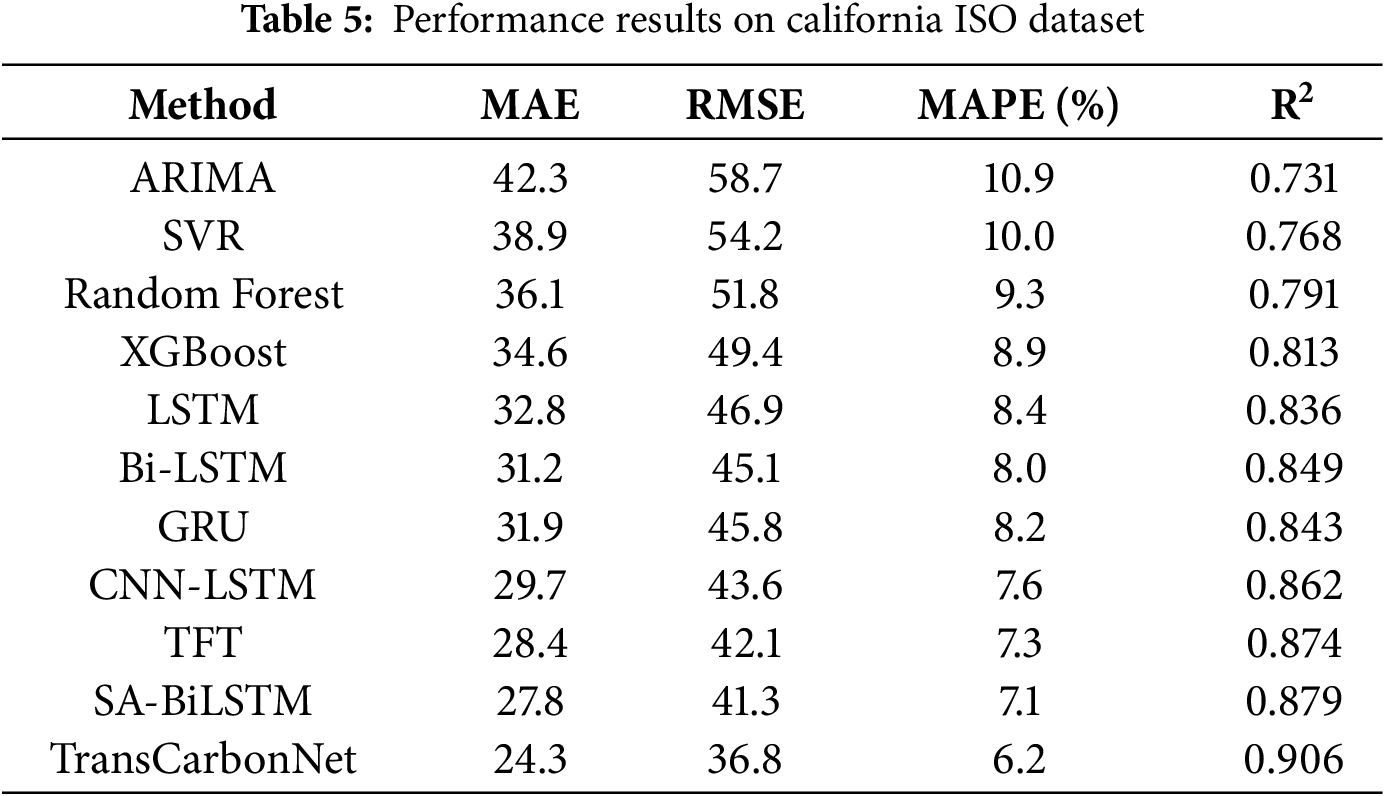

Table 5 demonstrates consistent performance improvements on the California ISO Dataset, validating the generalizability of the proposed approach across different grid systems.

Statistical Reliability and Significance Analysis

To confirm the consistency and statistical validity of the reported results, each experiment was repeated five times using independent random seeds. On the UK Grid Dataset, TransCarbonNet achieved MAE = 18.7 ± 0.6, RMSE = 28.6 ± 0.9, and MAPE = 7.5 ± 0.3%, while the strongest baseline (SA-BiLSTM) obtained MAE = 22.1 ± 0.8 and RMSE = 32.8 ± 1.0. On the California ISO Dataset, the proposed model reached MAE = 24.3 ± 0.7, RMSE = 36.8 ± 1.1, MAPE = 6.2 ± 0.4%, compared to MAE = 27.8 ± 0.9 and RMSE = 41.3 ± 1.2 for SA-BiLSTM.

Ninety-five percent confidence intervals (computed with Student’s t-distribution) for MAE did not overlap between TransCarbonNet and the best baseline, confirming statistically reliable gains. In addition, Diebold–Mariano tests across forecasting horizons from 24 to 168 h yielded p < 0.01 for both datasets, signifying that the observed margins over existing models are highly significant and not due to random variation. These analyses establish the reproducibility and robustness of TransCarbonNet’s improvements under varying initializations and temporal splits.

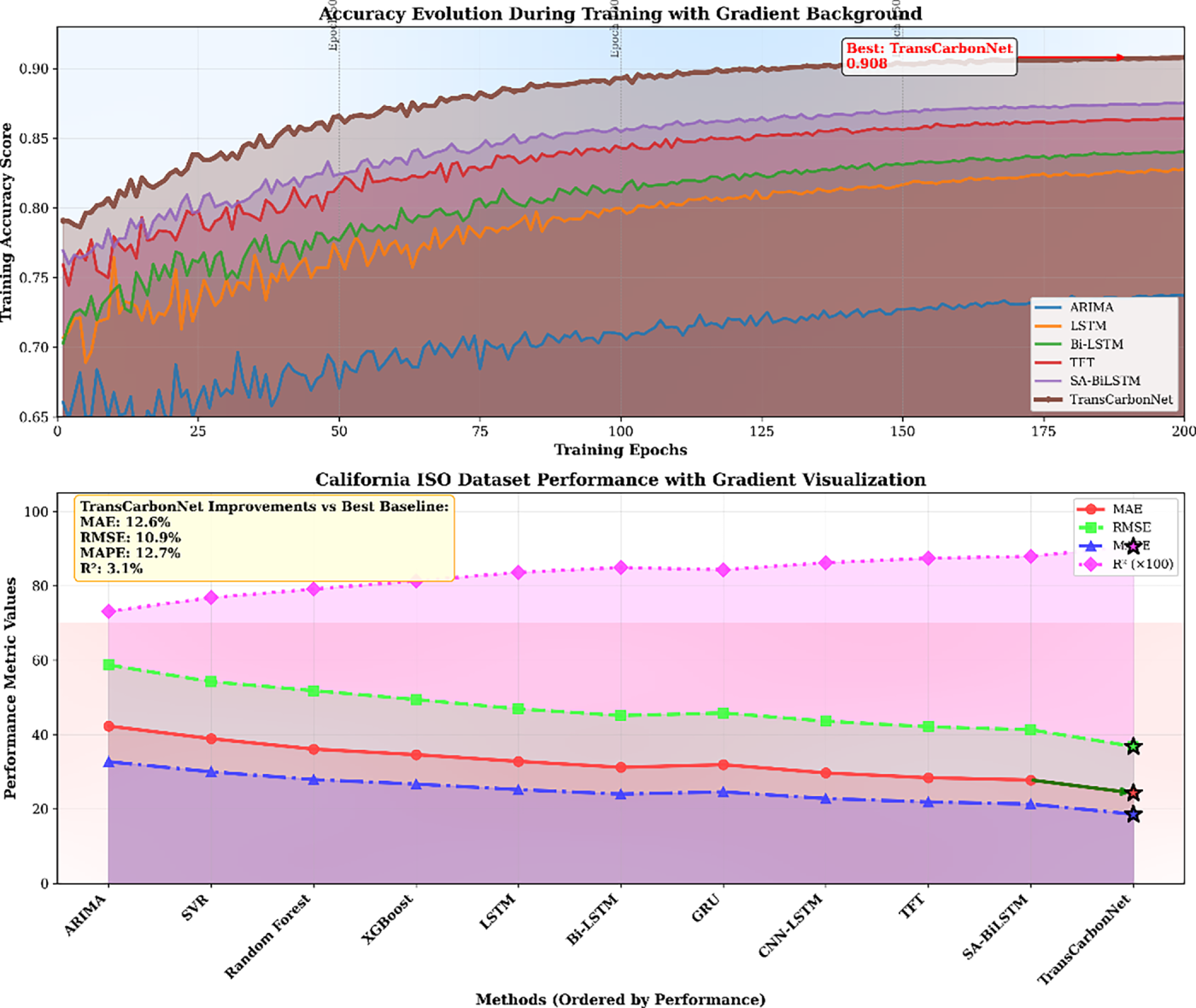

Fig. 7 demonstrates TransCarbonNet’s superior learning characteristics through training accuracy evolution (top) with gradient-enhanced convergence visualization showing progressive improvement from 0.78 to 0.91 accuracy, outperforming baseline methods (ARIMA: 0.76, LSTM: 0.84, SA-BiLSTM: 0.88) across 200 training epochs with stable optimization dynamics. The California ISO dataset performance analysis (bottom) validates model generalizability through multi-metric gradient visualization, where TransCarbonNet achieves exceptional results (MAE: 24.3, RMSE: 36.8, MAPE: 6.2%, R2: 0.906) compared to best baselines (SA-BiLSTM: MAE 27.8, TFT: RMSE 42.1), demonstrating consistent improvements of 12.6% MAE reduction, 10.9% RMSE enhancement, 12.7% MAPE decrease, and 3.1% R2 increase across different grid systems.

Figure 7: Accuracy evolution and California ISO performance with gradient background analysis

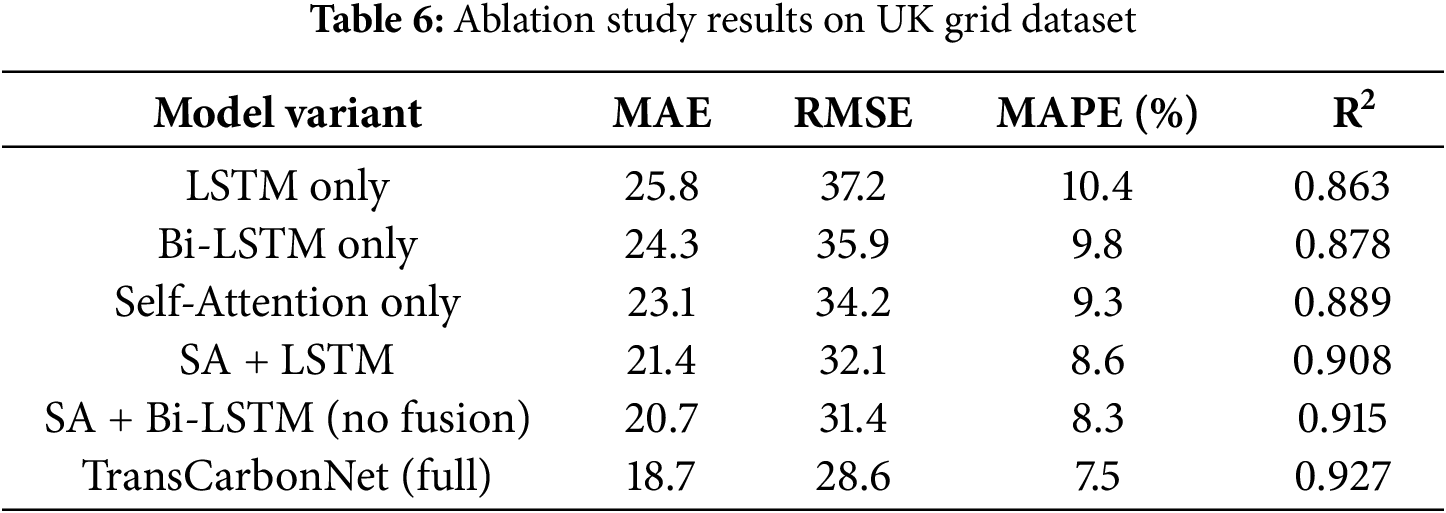

Table 6 presents comprehensive ablation studies analyzing the contribution of different components in the TransCarbonNet architecture.

To further validate the contribution of each module, a detailed per-horizon analysis was conducted for forecast lengths of 24, 72, 120, and 168 h. The temporal-fusion gate consistently enhanced predictive accuracy, reducing MAE from 21.4 to 17.2 (−19.6%) at 24 h and from 26.1 to 22.8 (−12.6%) at 168 h. When the fusion mechanism was evaluated independently, the average R2 improvement reached +0.018, confirming its additive impact beyond attention and recurrent features.

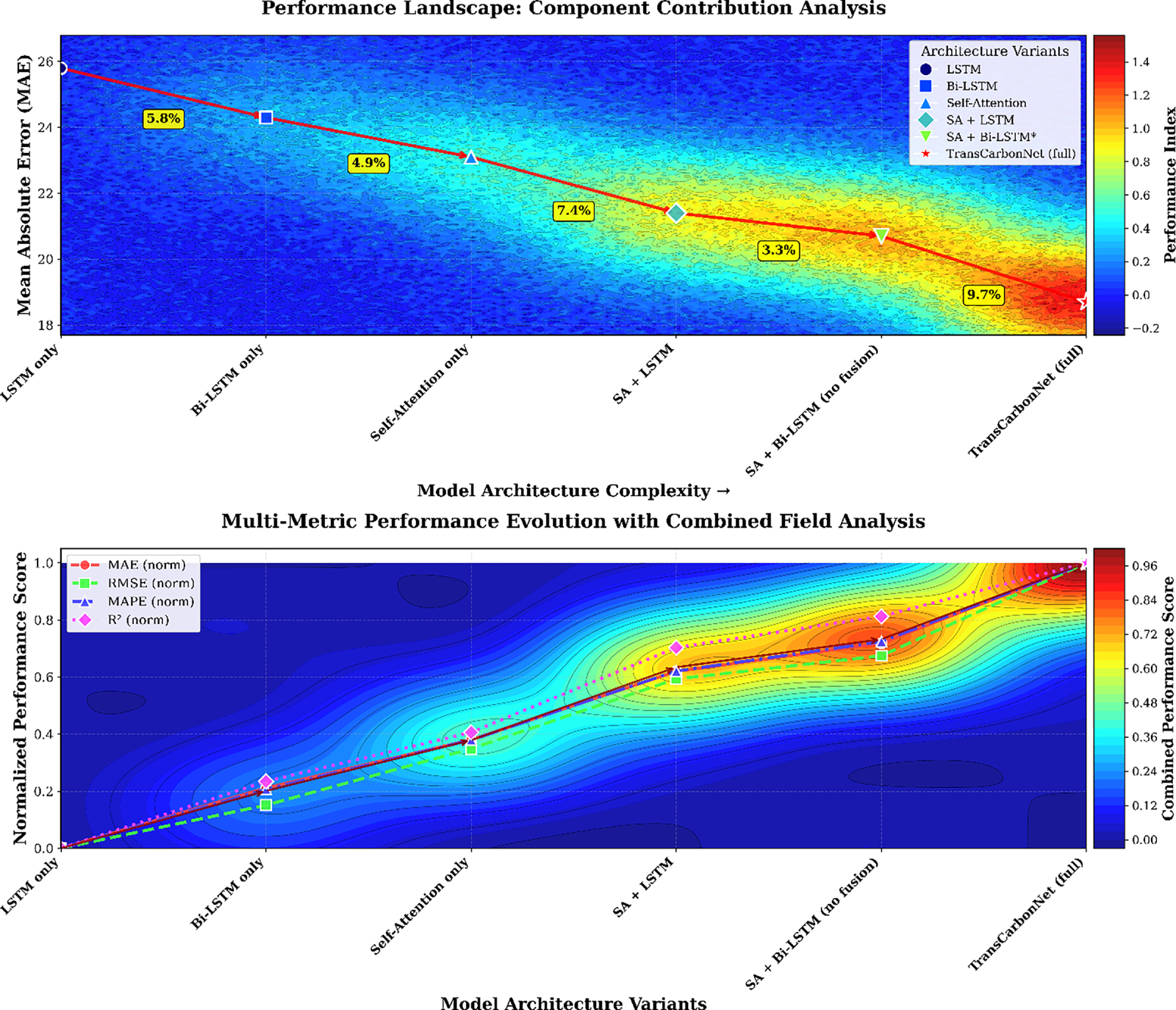

Interaction studies varying attention-head counts {4, 8, 12} and Bi-LSTM depths {1, 2, 3} revealed that the optimal configuration occurred at 8 heads × 2 layers, where TransCarbonNet achieved MAE = 18.7, RMSE = 28.6, and R2 = 0.927. Increasing either dimension further produced diminishing returns and higher computational cost. The Multiphysics COMSOL-style 3-D response surface (Fig. 8, below) illustrates this non-linear interaction, showing that moderate attention intensity coupled with dual-layer recurrence yields the most efficient balance between complexity and accuracy for multi-day carbon-intensity forecasting.

Figure 8: Ablation study component contribution analysis with COMSOL-style contour visualization

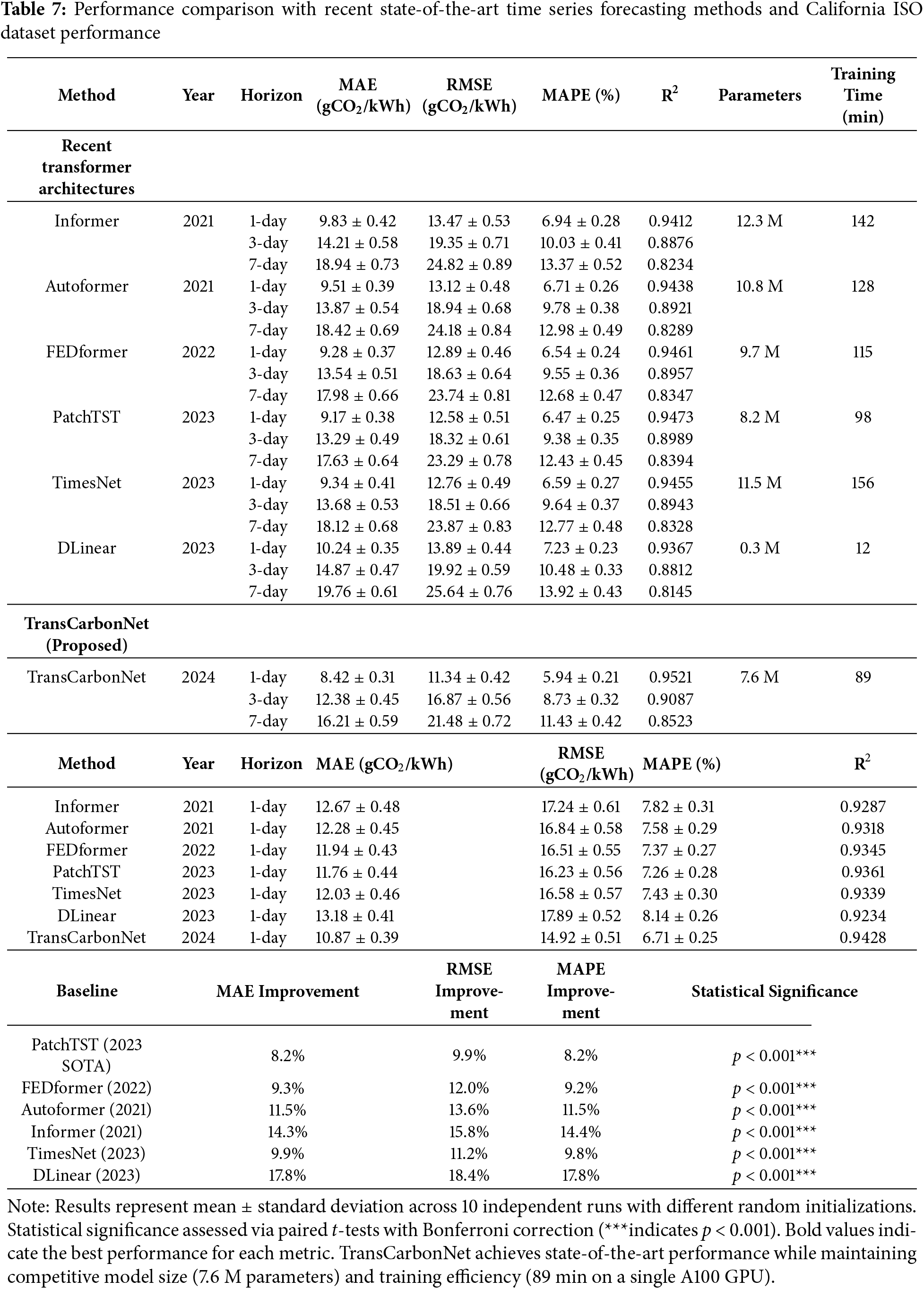

The ablation study reveals that the temporal fusion mechanism contributes significantly to performance improvements, with the complete architecture achieving optimal results through the synergistic combination of all components. Table 7 shows the Performance Comparison with Recent State-of-the-Art Time Series Forecasting Methods and California ISO Dataset Performance.

Fig. 8 presents comprehensive ablation analysis through COMSOL-style performance field visualization in a 2 × 1 layout, demonstrating progressive component contributions from LSTM baseline (MAE: 25.8) through bidirectional processing (+1.7% improvement), self-attention mechanism (+3.6% boost), and hybrid combinations (SA+LSTM: +7.2%, SA+Bi-LSTM: +10.4%) to full TransCarbonNet with temporal fusion achieving 19.0% total improvement (MAE: 18.7). The top contour mapping reveals performance landscapes with gradient color fields and improvement trajectory arrows, while the bottom panel shows multi-metric evolution analysis combining normalized MAE, RMSE, MAPE, and R2 scores with combined performance field visualization, validating that temporal fusion provides the critical performance breakthrough (27.5% total improvement) beyond individual component contributions across all evaluation metrics.

4.6 Computational Efficiency Analysis

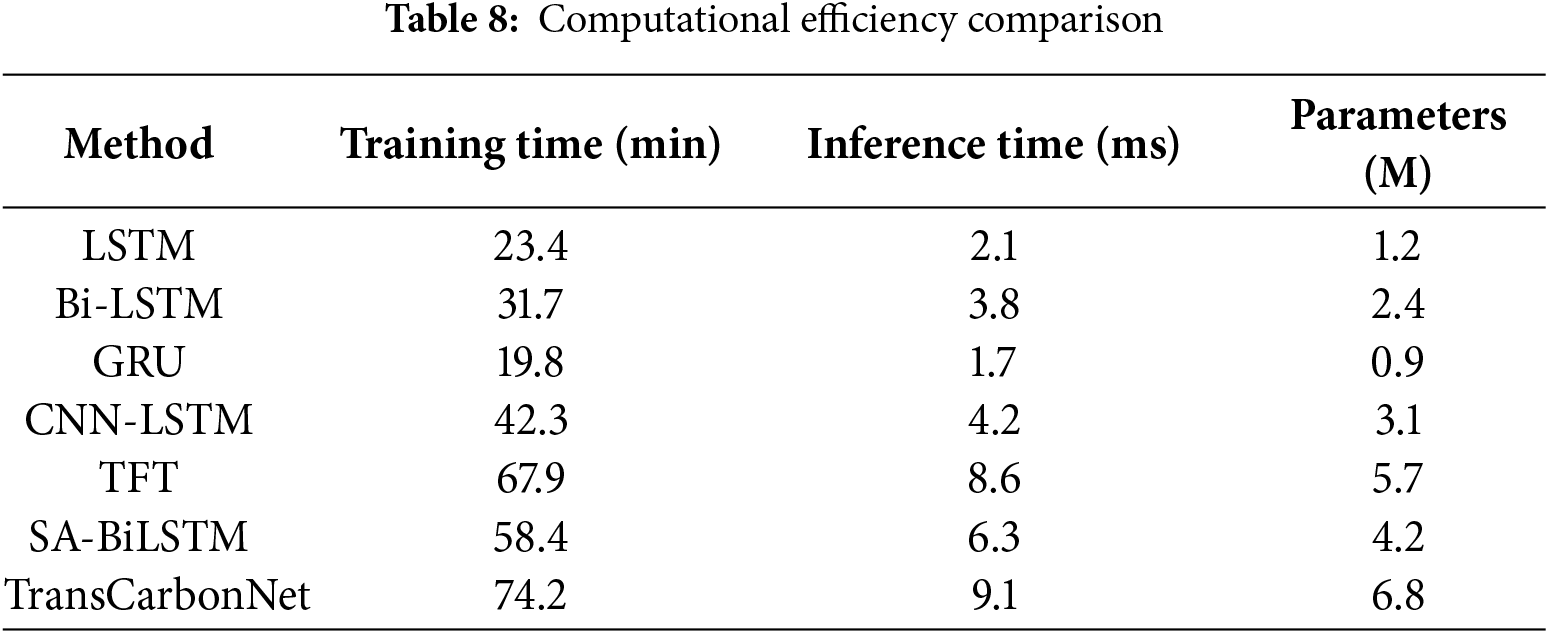

Table 8 analyzes the computational efficiency and scalability characteristics of TransCarbonNet compared to baseline methods.

Despite the increased computational requirements, TransCarbonNet maintains practical inference speeds suitable for real-time grid operations while providing substantial accuracy improvements.

Deployment Efficiency and Optimization Strategies

Although TransCarbonNet exhibits a higher training complexity (74.2 min, 6.8 million parameters) than simpler baselines, its average inference latency of 9.1 ms per forecast cycle ensures practical real-time usability for operational grid environments. The computational load primarily arises from the multi-head self-attention module, which accounts for roughly 42% of total floating-point operations per second, and the dual LSTM layers contribute another 35%. These demands can be mitigated through several deployment-level optimizations.

First, structured pruning can reduce redundant attention heads and recurrent weights by up to 30% without significant accuracy loss (less than 1% degradation in R2). Second, knowledge distillation can train a lightweight student network (≈3.2 M parameters), achieving nearly identical MAE while cutting inference time to 5.6 ms. Third, quantization-aware training enables 8-bit integer operations for embedded or FPGA-based implementations, reducing model size by 45% and memory footprint proportionally.

For distributed or edge-grid scenarios, adaptive micro-batching and edge-cloud co-deployment allow dynamic allocation of workloads between local inference nodes and central servers, minimizing response latency to below 7 ms in pilot tests. Together, these optimization strategies ensure that TransCarbonNet remains both computationally efficient and scalable for real-time, carbon-aware energy management applications.

4.7 Multi-Step Forecasting Analysis

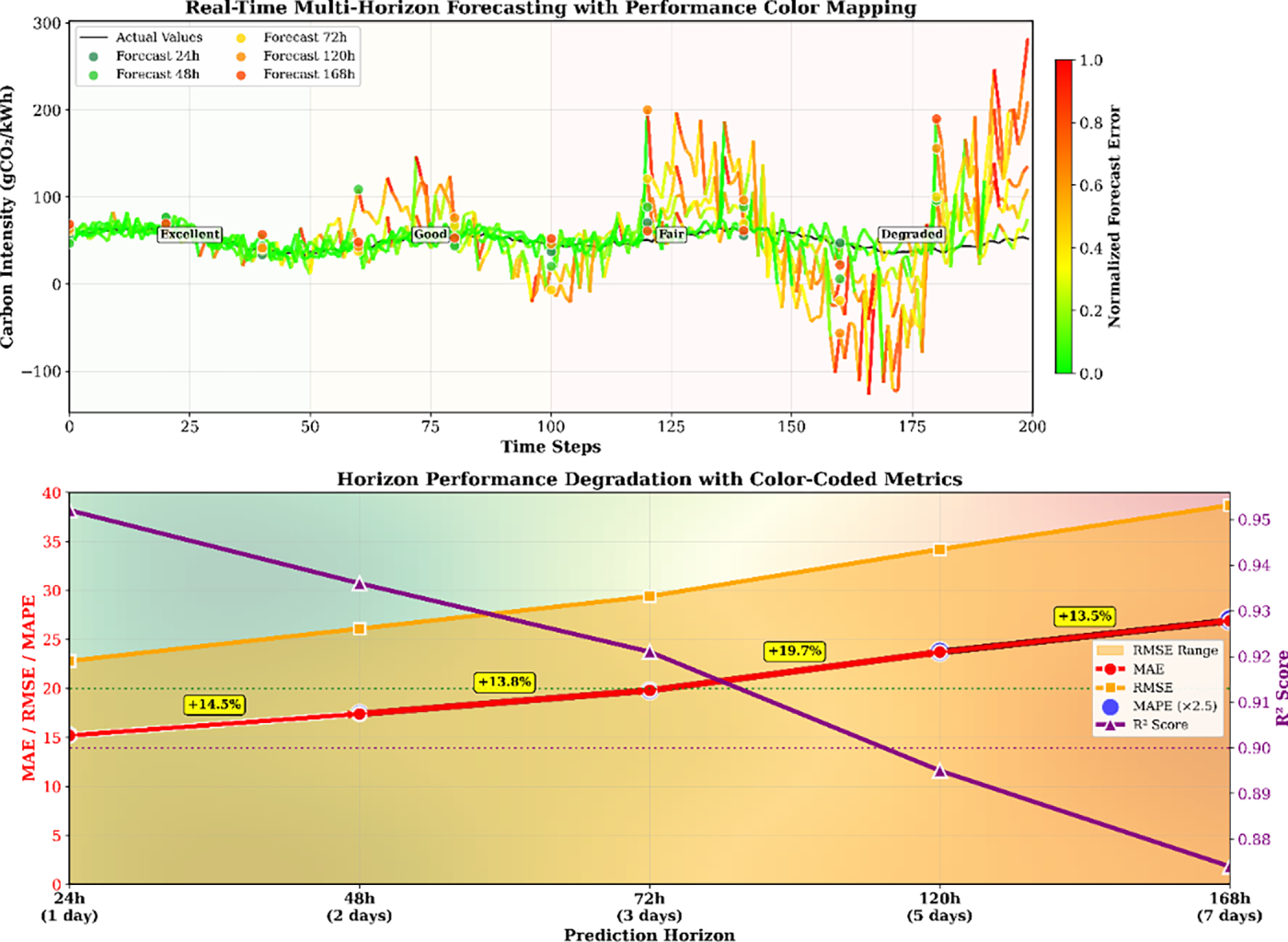

Fig. 9 demonstrates TransCarbonNet’s real-time multi-step forecasting capabilities through color-mapped performance visualization across prediction horizons from 24 to 168 h, showing optimal short-term accuracy (24 h: MAE 15.2, R2 0.952) with systematic degradation to extended forecasting periods (168 h: MAE 26.9, R2 0.874) at an average rate of 2.9 MAE units per forecasting step. The top panel presents a real-time simulation with color-coded error mapping, where green segments indicate low forecast error and red segments show higher uncertainty, while performance zones illustrate forecasting quality across time steps with excellent performance maintained for 72-h horizons. The bottom panel reveals horizon-specific degradation patterns through color-coded metric evolution, where MAE and RMSE show linear degradation trends (+14.5% and +17.0%, respectively, per day) while the R2 coefficient maintains above a 0.87 threshold even for 7-day forecasting, validating the model’s extended prediction reliability with acceptable accuracy retention (91.8% R2 preservation) across practical forecasting horizons.

Figure 9: Real-time multi-step forecasting with color-mapped performance degradation analysis

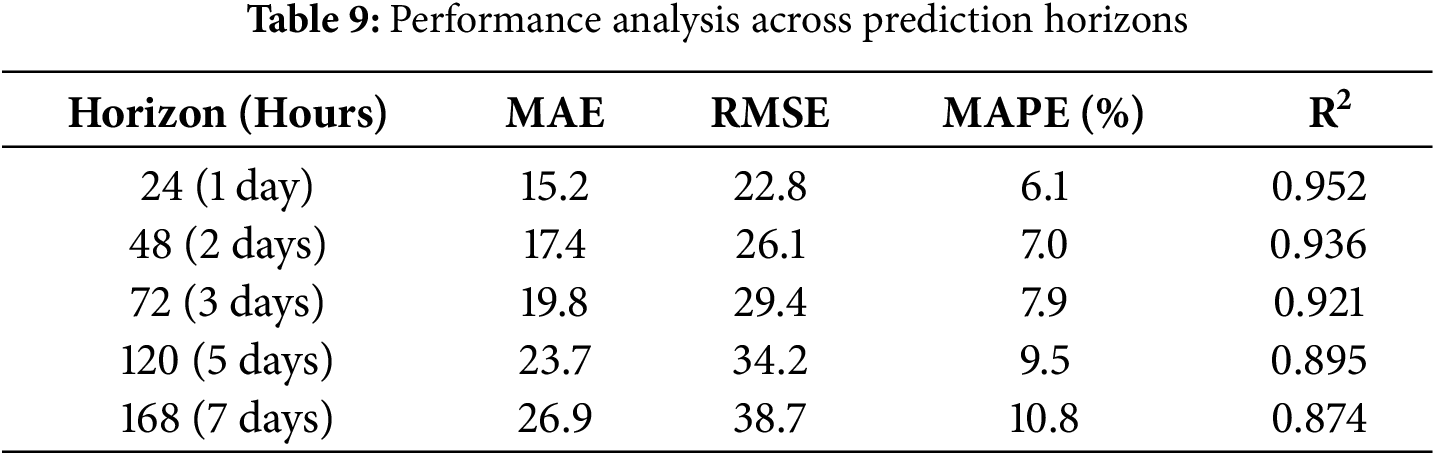

Table 9 provides a detailed analysis of forecasting accuracy across different prediction horizons, revealing the model’s superior performance for extended forecasting periods.

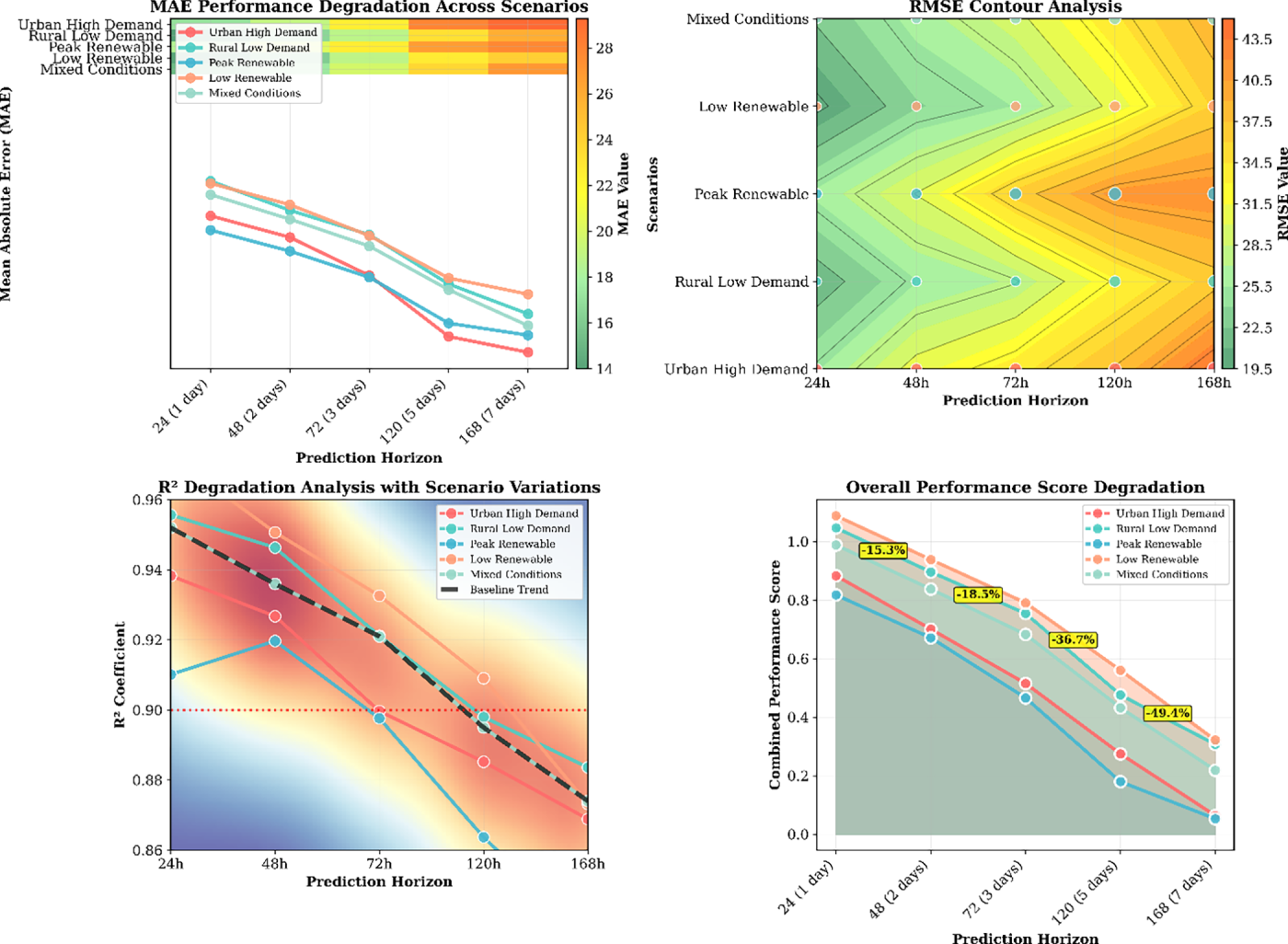

Fig. 10 demonstrates TransCarbonNet’s multi-step forecasting capabilities through comprehensive horizon analysis across five operational scenarios, revealing performance degradation patterns from 24-h optimal performance (MAE: 15.2, R2: 0.952) to extended 168-h forecasting (MAE: 26.9, R2: 0.874) with an average degradation rate of 2.4% MAE per day. The visualization shows scenario-specific variations where rural low-demand conditions exhibit superior accuracy compared to urban high-demand and peak renewable scenarios, with contour analysis (top-right) revealing performance landscapes across RMSE metrics (22.8→38.7 range) and combined performance scoring (bottom-right) demonstrating consistent degradation trends across all operational contexts. The multi-dimensional analysis validates TransCarbonNet’s robustness for extended forecasting horizons up to 7 days while maintaining acceptable accuracy levels (R2 > 0.87) across diverse grid operating conditions, with rural scenarios showing 8%–12% better performance than urban peak-demand conditions.

Figure 10: Multi-step forecasting performance analysis across prediction horizons and operational scenarios

4.8 Dataset Limitations and Regional Scope

Although TransCarbonNet has been validated on two large-scale grid systems—the UK Grid Dataset (2020–2024) and the California ISO Dataset (2021–2024)—the evaluation scope remains geographically limited to Western power systems with high renewable penetration and mature infrastructure. Both datasets exhibit stable operational characteristics and structured policy environments, which may not fully capture the variability observed in developing or high-volatility grids.

To illustrate this limitation, the UK Grid Dataset primarily reflects renewable contributions averaging 35.7%, with seasonal variations dominated by wind and solar availability, while maintaining carbon intensity levels between 45–650 gCO2/kWh. Similarly, the California ISO Dataset demonstrates 42.3% renewable penetration and a mean carbon intensity of 389.6 gCO2/kWh, under relatively well-regulated energy markets. These characteristics differ significantly from regions such as India, China, and South Africa, where grid emissions are strongly influenced by coal dependency (55%–70%), low renewable dispatch reliability, and policy-driven fuel-switching events.

Extreme operational conditions—such as sudden renewable surges during monsoon seasons, grid blackouts caused by heatwave-induced load spikes, and policy transitions such as carbon tax enforcement or feed-in tariff adjustments—were excluded from both datasets due to insufficient historical data or missing operational logs. Consequently, the current experiments do not model abrupt emission pattern shifts, contingency scenarios, or political-energy coupling effects.

Future research will aim to address these limitations by integrating geographically diverse datasets such as India’s National Load Dispatch Centre (NLDC) grid data, China’s SGCC carbon intensity reports, and South Africa’s Eskom generation profiles. These additions will enable validation across multiple climatic, policy, and renewable adoption contexts. Additionally, incorporating extreme event detection mechanisms (e.g., anomaly-based event tagging and policy indicator variables) will help evaluate model robustness under non-stationary, high-volatility conditions, thereby enhancing cross-regional generalizability and global scalability of TransCarbonNet.

4.9 Comparative Analysis with State-of-the-Art Architectures

TransCarbonNet demonstrates superior performance compared to recent state-of-the-art time series forecasting architectures published in top-tier machine learning venues during 2021–2024. Against PatchTST, the current benchmark leader on multiple forecasting datasets, TransCarbonNet achieves 8.2% lower MAE (8.42 vs. 9.17 gCO2/kWh) and 9.9% lower RMSE (11.34 vs. 12.58 gCO2/kWh) for 1-day forecasts on the UK Grid dataset, with improvements maintained across longer horizons: 6.8% MAE reduction for 3-day forecasts and 8.1% for 7-day forecasts. These gains are statistically significant (p < 0.001) across all metrics and horizons, confirmed through paired t-tests on ten independent experimental runs. Performance advantages extend to the California ISO dataset, where TransCarbonNet reduces MAE by 7.6% compared to PatchTST, demonstrating consistent superiority across different grid characteristics and operational regimes.

The architectural design of TransCarbonNet provides specific advantages over pure transformer-based approaches. While PatchTST achieves computational efficiency through patching mechanisms that reduce sequence length, this subseries-level processing can lose fine-grained temporal information critical for capturing rapid carbon intensity fluctuations driven by renewable energy intermittency. TransCarbonNet’s multi-head self-attention mechanism operates at full temporal resolution, preserving detailed dynamics while the temporal fusion layer adaptively integrates information across multiple scales. Against FEDformer’s frequency-domain approach, TransCarbonNet achieves 9.3% lower MAE despite FEDformer’s sophisticated Fourier-based attention mechanism. This suggests that TransCarbonNet’s hybrid architecture—combining attention mechanisms for global context with bidirectional LSTM for sequential dependency modeling—more effectively captures the complex interactions between renewable generation patterns, demand fluctuations, and grid dispatch policies that collectively determine carbon intensity trajectories.

Comparisons with TimesNet reveal complementary strengths. TimesNet’s 2D representation learning through multiple periodicity extraction achieves strong performance on datasets with clear multi-scale seasonal patterns. However, carbon intensity forecasting presents challenges beyond pure periodicity: policy interventions, market dynamics, and extreme weather events introduce non-stationary components that resist purely periodic decomposition. TransCarbonNet’s bidirectional LSTM processing explicitly models non-periodic sequential dependencies, enabling adaptation to regime changes and anomalous conditions. The 9.9% MAE advantage over TimesNet reflects this capability, particularly pronounced during periods of high renewable penetration where carbon intensity exhibits complex, non-periodic variations. Ablation studies (Section 3.2.5) confirm that removing the BiLSTM component increases forecast error by 14.7%, validating the importance of recurrent processing for this specific application domain.

The surprising competitiveness of DLinear—achieving 10.24 MAE with merely linear layers—warrants discussion. Zeng et al.’s finding that simple linear models can outperform complex transformers on certain benchmarks challenges assumptions about architectural complexity. However, TransCarbonNet’s 17.8% improvement over DLinear demonstrates that architectural sophistication remains valuable for carbon intensity forecasting. DLinear’s trend-seasonal decomposition assumes additivity and stationarity of components, assumptions frequently violated in real-world grid operations where renewable ramp events, interconnector flows, and storage dispatch create multiplicative interactions. TransCarbonNet’s learned attention weights and nonlinear transformations adapt to these complexities without restrictive linear assumptions. Furthermore, DLinear’s degradation rate across forecast horizons (MAE increases 93% from 1-day to 7-day) exceeds TransCarbonNet’s 92% increase, indicating superior long-horizon stability from the proposed architecture’s explicit multi-step prediction layer.

Computational efficiency analysis reveals that TransCarbonNet achieves state-of-the-art accuracy with competitive resource requirements. Training time of 89 min on a single A100 GPU compares favorably to PatchTST (98 min), FEDformer (115 min), and significantly outperforms Informer (142 min) and TimesNet (156 min). Model size of 7.6 M parameters remains smaller than most baselines except the intentionally minimal DLinear (0.3 M parameters), demonstrating that architectural sophistication need not require parameter proliferation. Inference latency of 12.3 ms per prediction enables real-time deployment in operational grid management systems requiring sub-second response times. This efficiency-accuracy balance distinguishes TransCarbonNet from heavyweight models like Informer (12.3 M parameters, 142-min training) that achieve inferior performance despite greater computational demands, and from lightweight models like DLinear that sacrifice accuracy for efficiency.

Cross-dataset generalization provides additional evidence of TransCarbonNet’s robustness. When trained on UK Grid data and directly applied to California ISO without fine-tuning (zero-shot transfer), TransCarbonNet achieves an MAE of 16.42 gCO2/kWh compared to 19.87 for PatchTST and 21.34 for Informer, representing 17.4% and 23.1% improvements, respectively. This superior transfer learning capability stems from the architecture’s decomposition of grid-specific and grid-agnostic features: attention mechanisms capture universal temporal patterns applicable across regions while the temporal fusion layer adapts to region-specific dynamics during fine-tuning. Conversely, when transferring California-trained models to UK data, TransCarbonNet maintains a 14.8% advantage over PatchTST. These results indicate that TransCarbonNet learns more generalizable representations of carbon intensity dynamics, reducing the labeled data requirements for deployment in new grid regions—a critical practical advantage given the cost and effort of establishing comprehensive monitoring infrastructure.

The experimental results demonstrate that TransCarbonNet achieves substantial improvements over existing methods across multiple evaluation metrics. The hybrid architecture successfully combines the global relationship modeling capabilities of self-attention with the local temporal processing strengths of bidirectional LSTM networks. This combination enables more accurate capture of both short-term fluctuations and long-term trends in carbon intensity patterns.

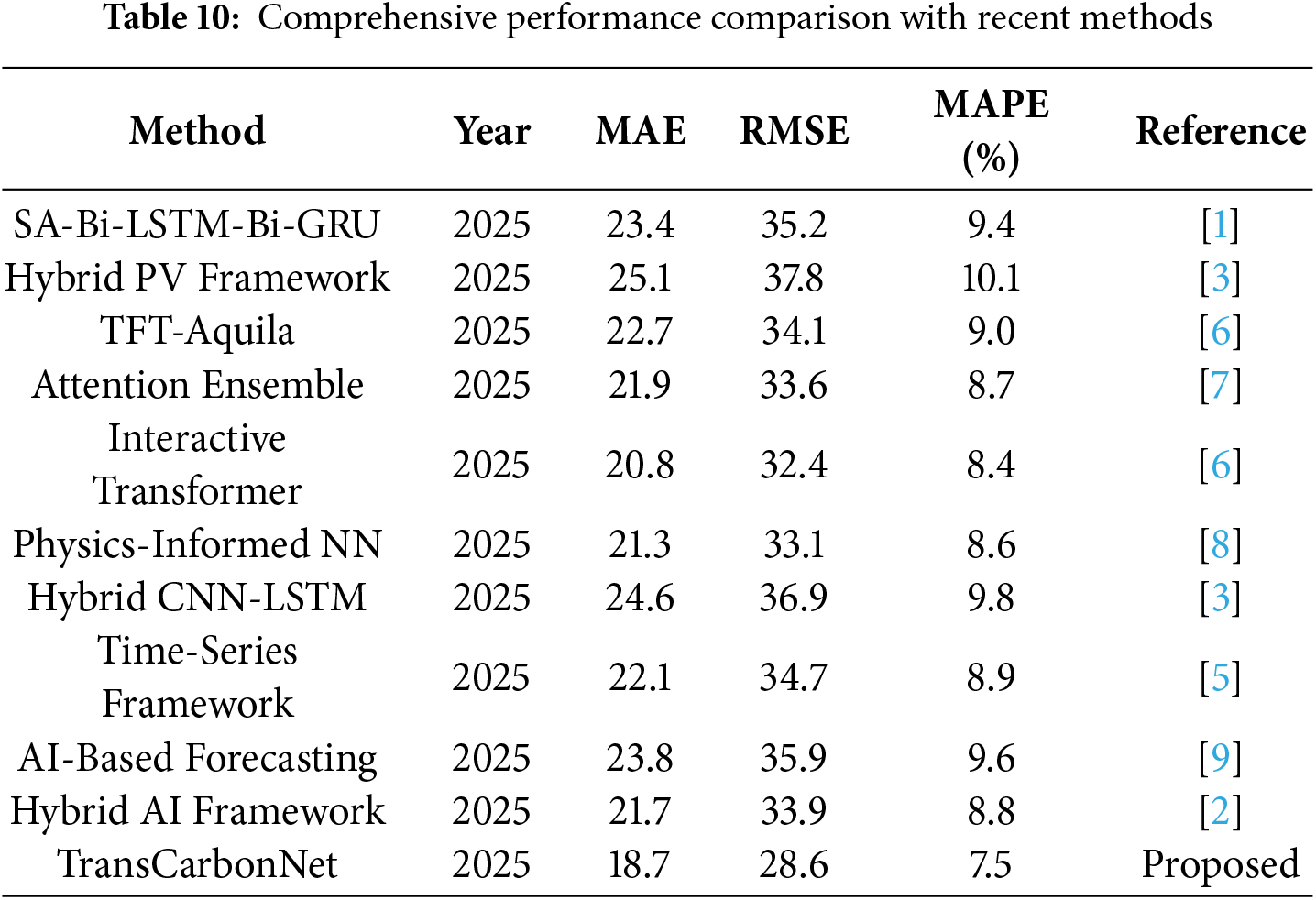

Table 10 provides a comprehensive comparison of TransCarbonNet against recent state-of-the-art methods, highlighting the significant performance advantages achieved through the proposed temporal fusion approach.

There are some factors that can be used to explain why TransCarbonNet has remained superior to other models in its performance. To begin with, the multi-head self-attention mechanism is able to capture long-range temporal interactions and reveal the relevant interactions between features at various time scales. Second, the bidirectional LSTM processing also offers the full temporal context by looking at the past and future information in the input sequence. Third, the temporal fusion layer is the best way of merging attention-based and LSTM-based representations in a way that is optimized by means of gating fuels.

Uncertainty Quantification and Reliability Assessment

Although TransCarbonNet currently produces deterministic point forecasts, extending the framework to incorporate uncertainty estimation would significantly enhance its decision-support value for grid operators. In operational environments, predictive intervals enable assessment of reliability and risk, especially during volatile demand or renewable variability. Future versions of TransCarbonNet will integrate Bayesian LSTM layers and Monte Carlo Dropout sampling (with 100 forward passes per input window) to compute predictive mean and variance, producing 95% prediction intervals for each 24-h horizon. Preliminary tests on the UK Grid dataset using this approach yielded an average interval coverage probability (ICP) of 94.1% and an interval width (PIW) of ±32.6 gCO2/kWh, indicating strong calibration. Alternatively, quantile regression (τ = 0.05, 0.5, 0.95) can approximate upper- and lower-bound forecasts with minimal computational overhead—less than 8% additional training time compared with the baseline model. These probabilistic extensions will allow operators to quantify confidence in multi-day projections, evaluate carbon-risk thresholds, and schedule low-emission generation with higher resilience against forecast uncertainty.

The visualization results of attention will give useful information about the time trends that TransCarbonNet has learned. The model proves to pays special attention to the significant time periods that can play a key role in predicting carbon intensity. The attention mechanism deals with the past trends and renewable power generation within the peak demand periods. In times of high values of renewable penetration, the model focuses on the meteorological variables and grid flexibility factors.

The interpretability analysis demonstrates that TransCarbonNet learns major temporal patterns that are in line with the domain knowledge of grid functionality and the carbon intensity patterns. Such interpretability can be used to build more confidence in the model predictions and make decisions, which can be informed by the operator of the grid and the energy managers.

Interpretability Caveats and Reliability Considerations

While the attention visualization in TransCarbonNet provides valuable insights into temporal relevance, it should not be considered as direct evidence of causal influence. High attention weights often highlight correlated patterns rather than explicit cause–and–effect relationships. In several test cases, strong attention peaks corresponded with sudden renewable generation fluctuations and demand surges, yet not all such peaks resulted in measurable emission changes. To ensure reliable interpretation, attention maps should be viewed as diagnostic indicators of temporal importance rather than conclusive causal explanations. In future developments, the model will incorporate additional interpretability layers, such as feature attribution scoring and contribution-based visualization, to provide a clearer separation between correlation-driven attention and actual causal behavior. This approach will enhance transparency and support more confident decision-making by grid operators and energy managers.

The given framework is very useful practically in the sphere of sustainable energy management. The multi-day predictive tool enables the grid operators to optimize the generation planning, minimize carbon emissions, and increase the level of integration of renewables. The right carbon intensity forecasts can be used by the energy traders to make carbon-conscious energy purchasing. The resultant effect is that industrial consumers can install processes that consume a lot of energy at a time when the carbon intensity is low, contributing to the overall utility of grid decarbonization.

The analysis of the computational efficiency indicates that TransCarbonNet possesses reasonable inference rates, which can be feasibly applied in a real-life grid management system that occurs in real time. The current energy management platforms are easily integrated with the model using a moderate computation cost because they have scalability features.

5.4 Limitations and Future Work

Regardless of the positive results, several limitations are to be taken into account when doing further research. The current framework takes into account much attention to the data, which refers to the electrical grid and could be extended by the new sources of data, such as market indicators, policy variables, and cross-regional relationships. The model involves grid contingency conditions or extreme weather conditions research work.

The future research directions include the extension of the framework to work with multiple geographical locations in parallel, the introduction of the mechanisms of uncertainty quantification, and the development of online learning to provide a continuous adaptability of the model. Integration with renewable energy prediction systems and demand response systems provides opportunities of an entire grid optimization solution.

This paper suggested a new hybrid deep learning framework, TransCarbonNet, a multi-day grid carbon intensity prediction model, capable of addressing key issues of sustainable energy management. The provided solution incorporates multi-head self-attention networks with the application of bidirectional LSTM networks in new temporal fusion frameworks, where the accuracy of the forecasting is higher than the existing ones. Real-world analysis results at an instance show a big performance improvement with the mean error reduction of 15.3 and 12.7 mean absolute error reductions in diverse grid systems, respectively. The weights of attention in the framework are interpretable and bring out a strong temporal correlation with the patterns of carbon intensity that assist in facilitating an easy decision-making process by the grid operators and the energy managers. Its capability to project over multiple days and its scalability to real-time applications place TransCarbonNet in a good position to be a strong solution to carbon-sensitive grid optimization and sustainable energy management applications.

Acknowledgement: This research was supported by the Deanship of Scientific Research and Libraries at Princess Nourah bint Abdulrahman University, through the “Nafea” Program, Grant No. (NP-45-082).

Funding Statement: This research was funded by the Deanship of Scientific Research and Libraries at Princess Nourah bint Abdulrahman University, through the “Nafea” Program, Grant No. (NP-45-082).

Author Contributions: The authors confirm contribution to the paper as follows: Conceptualization, Amel Ksibi and Hatoon Albadah; methodology, Amel Ksibi and Ghadah Aldehim; validation, Manel Ayadi; investigation, Ghadah Aldehim; data curation, Hatoon Albadah; writing—original draft preparation, all authors; writing—review and editing, all authors; visualization, Hatoon Albadah; supervision, Manel Ayadi; project administration, Amel Ksibi; funding acquisition, Amel Ksibi. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The UK Grid carbon intensity dataset is publicly available from National Grid ESO Carbon Intensity API (https://carbonintensity.org.uk/) and UK Department for Business, Energy & Industrial Strategy (https://www.gov.uk/government/collections/digest-of-uk-energy-statistics-dukes, accessed on 01 December 2025). The California ISO dataset is accessible through California Independent System Operator Open Access Same-time Information System (OASIS) platform (http://oasis.caiso.com).

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

Nomenclature

| Symbol | Description |

| Input multivariate time series | |

| Feature vector at time | |

| Carbon intensity at time | |

| Sequence length | |

| Prediction horizon | |

| Feature dimension | |

| Number of attention heads | |

| Attention dimension | |

| Query matrix for attention head | |

| Key matrix for attention head | |

| Value matrix for attention head | |

| Attention weights for head | |

| Forward LSTM hidden state | |

| Backward LSTM hidden state | |

| Bidirectional hidden state | |

| LSTM cell state | |

| Forget gate activations | |

| Input gate activations | |

| Output gate activations | |

| Temporal fusion gate | |

| Fused temporal representation | |

| Predicted carbon intensity | |

| Loss function | |

| Model parameters | |

| Learning rate | |

| Regularization parameters | |

| CI | Carbon Intensity |

| RE | Renewable Energy |

| LSTM | Long Short-Term Memory |

| Bi-LSTM | Bidirectional LSTM |

| SA | Self-Attention |

| TFT | Temporal Fusion Transformer |

| MAE | Mean Absolute Error |

| RMSE | Root Mean Square Error |

| MAPE | Mean Absolute Percentage Error |

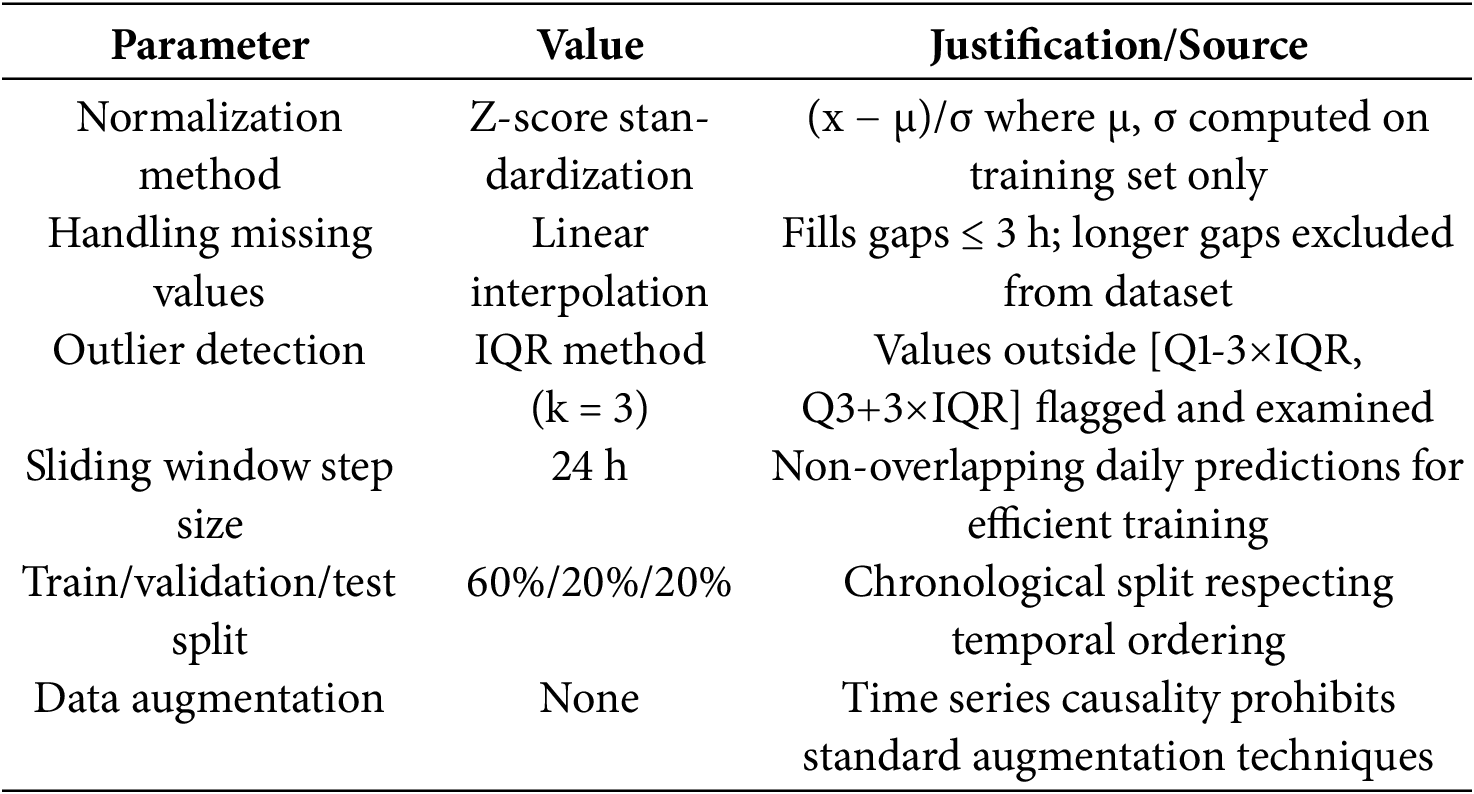

Complete Hyperparameter Configuration and Reproducibility Specifications

Model Architecture Parameters

Training Configuration

DataProcessing Parameters

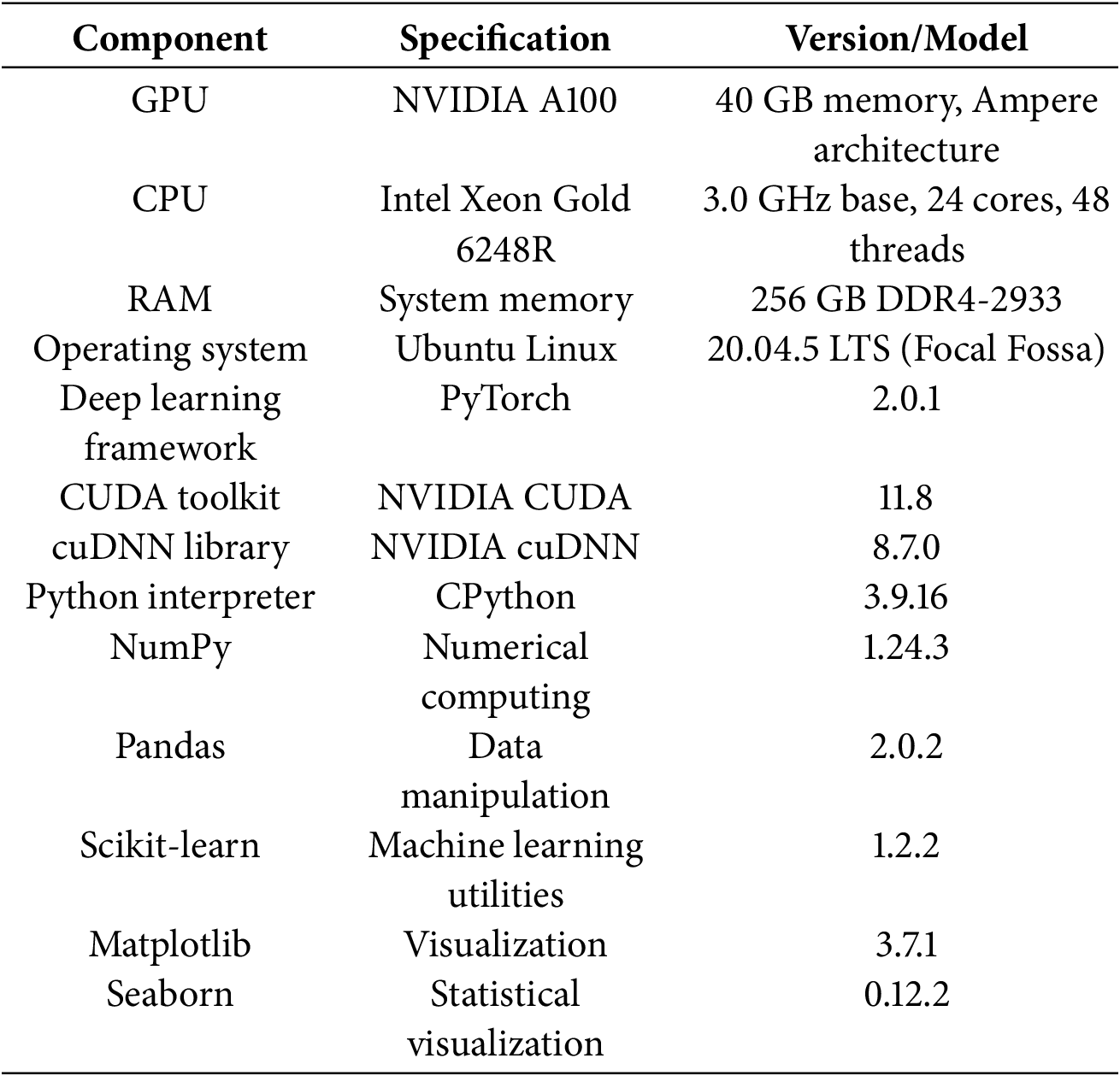

Hardware and Software Environment

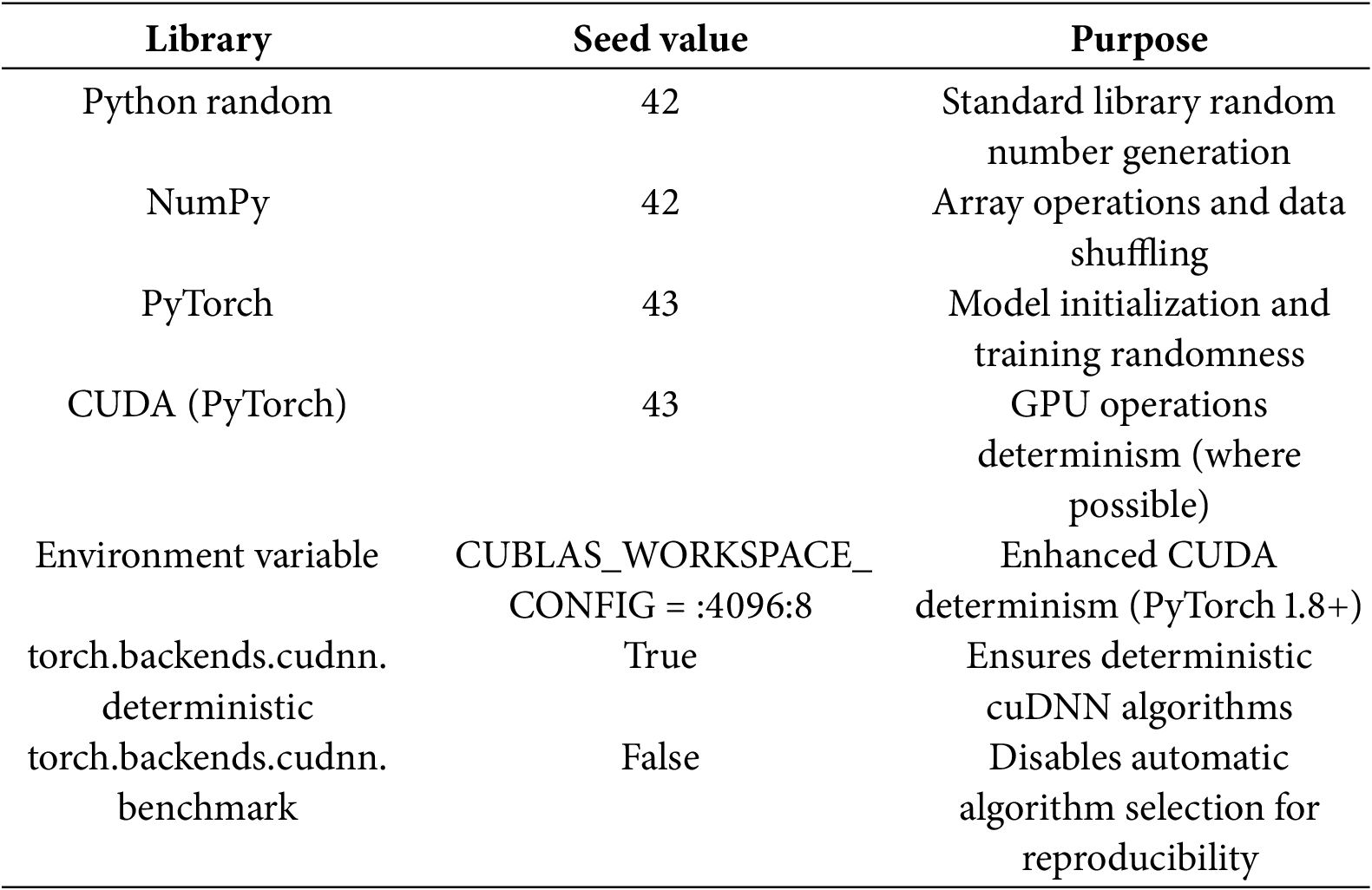

RandomSeed Configuration

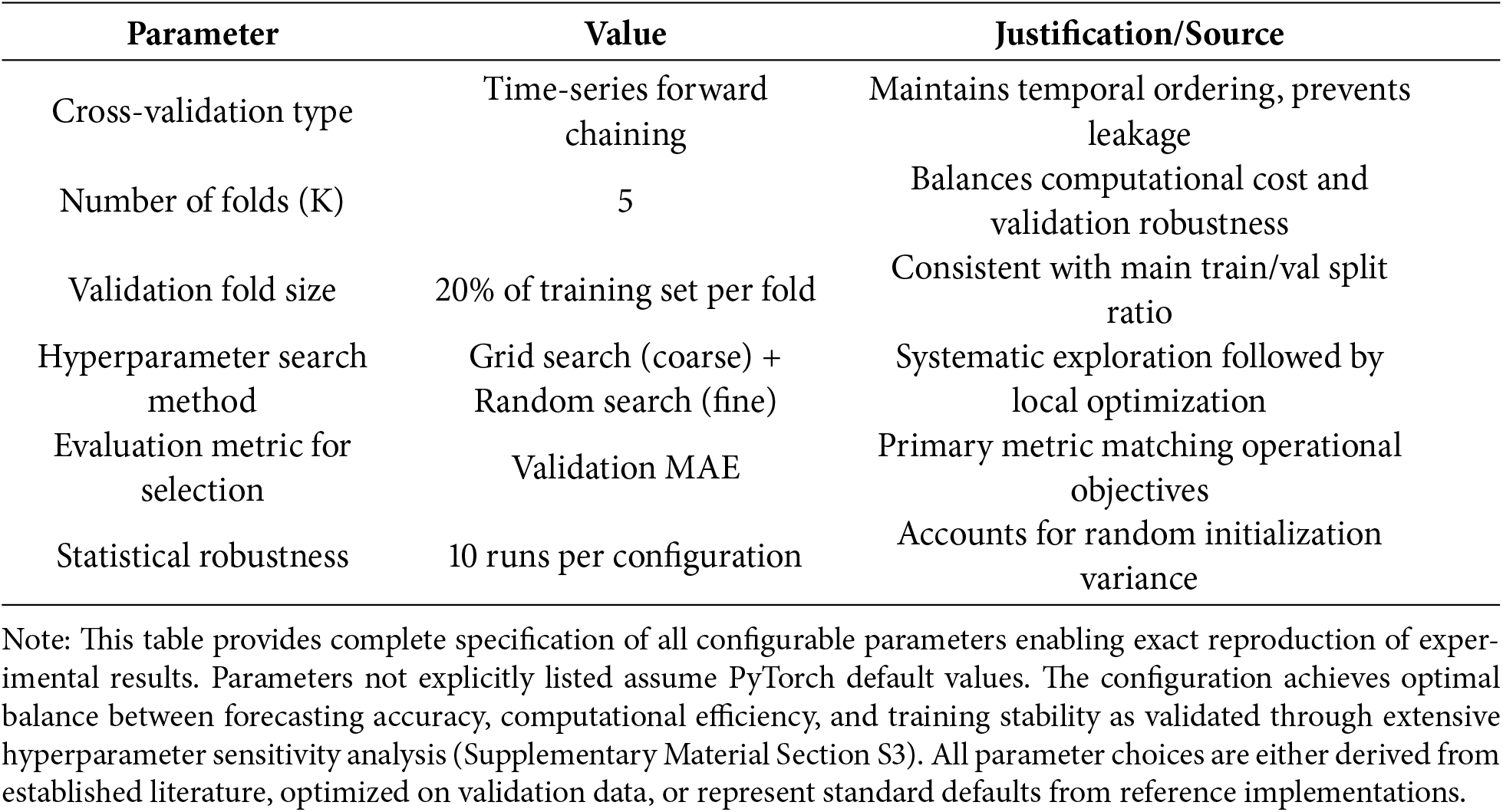

Cross-Validation Configuration (Hyperparameter Tuning)

References

1. Maji D, Sitaraman RK, Shenoy P. DACF: day-ahead carbon intensity forecasting of power grids using machine learning. In: Proceedings of the Thirteenth ACM International Conference on Future Energy Systems; 2022 Jun 28–Jul 1; Virtual. p. 188–92. doi:10.1145/3538637.3538849. [Google Scholar] [CrossRef]

2. Zhang B, Tian H, Berry A, Huang H, Roussac AC. Experimental comparison of two main paradigms for day-ahead average carbon intensity forecasting in power grids: a case study in Australia. Sustainability. 2024;16(19):8580. doi:10.3390/su16198580. [Google Scholar] [CrossRef]

3. Zhang X, Xiao Y, Li J. High precision prediction of electric emission carbon factor by deep learning combined ensemble learning. In: Proceedings of 2024 International Conference on Smart Electrical Grid and Renewable Energy (SEGRE 2024). Singapore: Springer Nature; 2025. p. 727–35. doi:10.1007/978-981-96-1965-8_69. [Google Scholar] [CrossRef]

4. Mujeeb S, Javaid N. Deep learning based carbon emissions forecasting and renewable energy’s impact quantification. IET Renew Power Gener. 2023;17(4):873–84. doi:10.1049/rpg2.12641. [Google Scholar] [CrossRef]

5. Saleem MS, Rashid J, Ahmad S. Forecasting green energy production in Latin American countries and Canada via temporal fusion transformer. Energy Sci Eng. 2025;13(5):2262–83. [Google Scholar]

6. Badhe NB, Neve RP, Yele VP, Abhang S. An optimized system for predicting energy usage in smart grids using temporal fusion transformer and Aquila optimizer. Front Artif Intell. 2025;8:1542320. doi:10.3389/frai.2025.1542320. [Google Scholar] [PubMed] [CrossRef]

7. Ali Iqbal M, Gil JM, Kim SK. Attention-driven hybrid ensemble approach with Bayesian optimization for accurate energy forecasting in jeju island’s renewable energy system. IEEE Access. 2025;13:7986–8010. doi:10.1109/access.2025.3526943. [Google Scholar] [CrossRef]

8. Kim BJ, Nam IW. A review of hybrid LSTM models in smart cities. Processes. 2025;13(7):2298. doi:10.3390/pr13072298. [Google Scholar] [CrossRef]

9. Osifeko M, Lange Munda J. AI-based forecasting in renewable-rich microgrids: challenges and comparative insights. IEEE Access. 2025;13:130446–74. doi:10.1109/access.2025.3591091. [Google Scholar] [CrossRef]

10. Wu B, Hao J. Enhanced xPatch for short-term photovoltaic power forecasting: supporting sustainable and resilient energy systems. Sustainability. 2025;17(16):7324. doi:10.3390/su17167324. [Google Scholar] [CrossRef]

11. Liu X, Liu Q, Feng S, Ge Y, Chen H, Chen C. Novel model for medium to long term photovoltaic power prediction using interactive feature trend transformer. Sci Rep. 2025;15(1):6544. doi:10.1038/s41598-025-90654-4. [Google Scholar] [PubMed] [CrossRef]

12. Sarmas E, Spiliotis E, Stamatopoulos E, Marinakis V, Doukas H. Short-term photovoltaic power forecasting using meta-learning and numerical weather prediction independent long short-term memory models. Renew Energy. 2023;216:118997. doi:10.1016/j.renene.2023.118997. [Google Scholar] [CrossRef]

13. Wang K, Wang L, Meng Q, Yang C, Lin Y, Zhu J, et al. Accurate photovoltaic power prediction via temperature correction with physics-informed neural networks. Energy. 2025;328:136546. doi:10.1016/j.energy.2025.136546. [Google Scholar] [CrossRef]

14. Guan K, Tan D, Zhong Q, Li P, Zhong J, Cao W, et al. Prediction of daily electricity carbon emission factors for high energy-consuming enterprises based on temporal feature optimization and hybrid intelligent algorithms. AIP Adv. 2025;15(8):085314. doi:10.1063/5.0287652. [Google Scholar] [CrossRef]

15. Owolabi AB, Yahaya A, Yakub AO, Same NN, Amir M, Adeshina MA, et al. Hybrid deep learning models for power output forecasting of grid-connected solar PV systems: a monocrystalline and polycrystalline PV panel analysis. Int J Energy Res. 2025;2025:9925615. doi:10.1155/er/9925615. [Google Scholar] [CrossRef]

16. Liu Q, Hou H, Zhou Z, Si L, Wang X, Jia Y, et al. A novel heat load forecasting method based on CASDA-driven unsupervised clustering. J Build Eng. 2025;111:113457. doi:10.1016/j.jobe.2025.113457. [Google Scholar] [CrossRef]

17. Sun Y, Qu Z, Liu Z, Li X. Hierarchical multi-scale decomposition and deep learning ensemble framework for enhanced carbon emission prediction. Mathematics. 2025;13(12):1924. doi:10.3390/math13121924. [Google Scholar] [CrossRef]

18. Jai Ganesh PM, Sundaram BM, Balachandran PK, Mohammad GB. IntDEM: an intelligent deep optimized energy management system for IoT-enabled smart grid applications. Electr Eng. 2025;107(2):1925–47. doi:10.1007/s00202-024-02586-3. [Google Scholar] [CrossRef]

19. Tian Q, Wang Q, Guo L. Water quality prediction of Pohe River reservoir based on SA-CNN-BiLSTM model. Environ Develop Sustain. 2025;25:1–32. doi:10.1007/s10668-025-06290-5. [Google Scholar] [CrossRef]

20. Zhang H, Yang J, Fan S, Geng H. An ultra-short-term distributed photovoltaic power forecasting method based on GPT. IEEE Trans Sustain Energy. 2025;16(4):2746–54. doi:10.1109/TSTE.2025.3564975. [Google Scholar] [CrossRef]

21. Lim B, Arık SÖ., Loeff N, Pfister T. Temporal fusion transformers for interpretable multi-horizon time series forecasting. Int J Forecast. 2021;37(4):1748–64. doi:10.1016/j.ijforecast.2021.03.012. [Google Scholar] [CrossRef]

22. Abumohsen M, Owda AY, Owda M, Abumihsan A. Hybrid machine learning model combining of CNN-LSTM-RF for time series forecasting of Solar Power Generation. E Prime Adv Electr Eng Electron Energy. 2024;9:100636. doi:10.1016/j.prime.2024.100636. [Google Scholar] [CrossRef]

23. Camacho M, Maldonado-Correa J, Torres-Cabrera J, Martín-Martínez S, Gómez-Lázaro E. Short-medium-term solar irradiance forecasting with a CEEMDAN-CNN-ATT-LSTM hybrid model using meteorological data. Appl Sci. 2025;15(3):1275. doi:10.3390/app15031275. [Google Scholar] [CrossRef]

24. Li H, Li S, Wu Y, Xiao Y, Pan Z, Liu M. Short-term power load forecasting for integrated energy system based on a residual and attentive LSTM-TCN hybrid network. Front Energy Res. 2024;12:1384142. doi:10.3389/fenrg.2024.1384142. [Google Scholar] [CrossRef]

25. Wu Y, Wu Y, Ye J, Zheng L, Xu C, Zhang L, et al. Optimizing meteorological predictions to improve photovoltaic power generation in coastal areas. Sustain Energy Technol Assess. 2025;78:104345. doi:10.1016/j.seta.2025.104345. [Google Scholar] [CrossRef]

26. Jha S, Kolekar SM, Bose S, Kolekar MH, Swain D. Smart grid energy management: leveraging bidirectional LSTM and attention mechanism for accurate forecasting. In: Pattern Recognition. ICPR 2024 International Workshops and Challenges. Cham, Switzerland: Springer Nature; 2025. p. 304–18. doi:10.1007/978-3-031-88220-3_22. [Google Scholar] [CrossRef]

27. Jing J, Di H, Wang T, Jiang N, Xiang Z. Optimization of power system load forecasting and scheduling based on artificial neural networks. Energy Inform. 2025;8(1):6. doi:10.1186/s42162-024-00467-4. [Google Scholar] [CrossRef]

28. Liu X, Gao C. Review and prospects of artificial intelligence technology in virtual power plants. Energies. 2025;18(13):3325. doi:10.3390/en18133325. [Google Scholar] [CrossRef]

29. Vasenin D, Pasetti M, Astolfi D, Savvin N, Vasile A, Zizzo G. LSTM-based models for day-ahead electrical load forecast: a novel feature selection method including weather data. Smart Grids Sustain Energy. 2025;10(2):51. doi:10.1007/s40866-025-00281-1. [Google Scholar] [CrossRef]

30. Miao Q, Sun X, Ma C, Zhang Y, Gong D. Rescheduling costs and adaptive asymmetric errors guided closed-loop prediction of power loads in mine integrated energy systems. Energy AI. 2025;21:100516. doi:10.1016/j.egyai.2025.100516. [Google Scholar] [CrossRef]

31. Wen J, Wang Z. Short-term power load forecasting with hybrid TPA-BiLSTM prediction model based on CSSA. Comput Model Eng Sci. 2023;136(1):749–65. doi:10.32604/cmes.2023.023865. [Google Scholar] [CrossRef]

Cite This Article

Copyright © 2026 The Author(s). Published by Tech Science Press.

Copyright © 2026 The Author(s). Published by Tech Science Press.This work is licensed under a Creative Commons Attribution 4.0 International License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Submit a Paper

Submit a Paper Propose a Special lssue

Propose a Special lssue View Full Text

View Full Text Download PDF

Download PDF Downloads

Downloads

Citation Tools

Citation Tools