Open Access

Open Access

REVIEW

A Comprehensive Review of Multimodal Deep Learning for Enhanced Medical Diagnostics

1 Computer Science Department, Faculty of Computers and Artificial Intelligence, Damietta University, New Damietta, 34517, Egypt

2 Faculty of Computer Science and Engineering, New Mansoura University, Dakhlia, 35516, Egypt

3 Computer Science Department, Faculty of Graduate Studies for Statistical Research, Cairo University, Giza, 12613, Egypt

* Corresponding Author: Ahmed Ismail Ebada. Email:

(This article belongs to the Special Issue: Multi-Modal Deep Learning for Advanced Medical Diagnostics)

Computers, Materials & Continua 2025, 84(3), 4155-4193. https://doi.org/10.32604/cmc.2025.065571

Received 17 March 2025; Accepted 18 June 2025; Issue published 30 July 2025

Abstract

Multimodal deep learning has emerged as a key paradigm in contemporary medical diagnostics, advancing precision medicine by enabling integration and learning from diverse data sources. The exponential growth of high-dimensional healthcare data, encompassing genomic, transcriptomic, and other omics profiles, as well as radiological imaging and histopathological slides, makes this approach increasingly important because, when examined separately, these data sources only offer a fragmented picture of intricate disease processes. Multimodal deep learning leverages the complementary properties of multiple data modalities to enable more accurate prognostic modeling, more robust disease characterization, and improved treatment decision-making. This review provides a comprehensive overview of the current state of multimodal deep learning approaches in medical diagnosis. We classify and examine important application domains, such as (1) radiology, where automated report generation and lesion detection are facilitated by image-text integration; (2) histopathology, where fusion models improve tumor classification and grading; and (3) multi-omics, where molecular subtypes and latent biomarkers are revealed through cross-modal learning. We provide an overview of representative research, methodological advancements, and clinical consequences for each domain. Additionally, we critically analyzed the fundamental issues preventing wider adoption, including computational complexity (particularly in training scalable, multi-branch networks), data heterogeneity (resulting from modality-specific noise, resolution variations, and inconsistent annotations), and the challenge of maintaining significant cross-modal correlations during fusion. These problems impede interpretability, which is crucial for clinical trust and use, in addition to performance and generalizability. Lastly, we outline important areas for future research, including the development of standardized protocols for harmonizing data, the creation of lightweight and interpretable fusion architectures, the integration of real-time clinical decision support systems, and the promotion of cooperation for federated multimodal learning. Our goal is to provide researchers and clinicians with a concise overview of the field’s present state, enduring constraints, and exciting directions for further research through this review.Keywords

The integration of deep learning and big data has significantly transformed numerous fields, including healthcare. Recent advances in machine learning, particularly deep neural networks, have enabled the extraction of high-level features from complex datasets, such as images, text, and omics data. Healthcare domains, especially medical diagnostics, have benefited from these advances in areas such as computer-aided diagnosis, biomarker discovery, and personalized treatment planning.

Unimodal systems, which rely on a single type of data (e.g., clinical text or medical imaging), often exhibit limited effectiveness and restricted applicability. They cannot fully understand the complexity of a patient’s condition and may overlook important indicators that are apparent in other modalities. For example, a chest X-ray may show anomalies, but the interpretation could be inaccurate or misleading if it is not accompanied by contextual information, such as test findings, patient history, or symptoms. Furthermore, unimodal models are typically less reliable and more vulnerable to errors or missing data in their modality. These drawbacks highlight the necessity of models that combine data from multiple sources [1]. Multimodal deep learning, on the other hand, provides a revolutionary method by combining various disparate data sources into a unified analytical framework, which results in a more thorough and sophisticated comprehension of a patient’s health. This methodology improves biomarker identification, personalized treatment planning, and diagnostic accuracy by capturing complementary information from many modalities. A multifaceted view that goes beyond the potential of individual modalities is made possible by the integration of data from clinical notes, medical imaging, genetic profiles, and sensor outputs. Multimodal learning has shown notable advancements in disease detection, prognosis, and patient outcome prediction, especially when applied to complex and multivariate situations like cardiovascular diseases and cancer. This integrative approach advances clinical decision-making and precision medicine by helping researchers and clinicians identify links and patterns that might otherwise go unnoticed [2].

Despite this potential, significant clinical obstacles highlight the need to adopt such integrative technologies. No statistics have adequately captured the complexity of human health. Genetic, environmental, and behavioral factors all play a role in diseases, including cancer, diabetes, and neurodegenerative disorders. In addition, the amount of medical data is predicted to double every 73 days, placing clinicians at risk of cognitive overload in the absence of suitable analytical assistance. Additionally, the need for customized care that accounts for each patient’s particular biological characteristics and lifestyle choices is increasing, forcing healthcare organizations to abandon the use of one-size-fits-all approaches. By making scalable, context-aware, and patient-specific modeling possible, multimodal deep learning can address these issues.

We use a structured methodology that includes a systematic literature review of peer-reviewed articles published between 2022 and 2025, focusing on works that use state-of-the-art architecture like graph neural networks and attention-based models for data fusion. In this review, we critically assessed algorithmic innovations and their clinical impact, focusing on studies that demonstrate clear performance improvements across multiple medical conditions.

The scope of this review is to provide a thorough and critical analysis of recent advancements in multimodal deep learning for medical diagnostics, as well as its methodological evolution, real-world clinical applications, and related challenges.

Deep learning methods like CNNs (convolutional neural networks) [3] and RNNs (recurrent neural networks) [4] Can efficiently combine clinical text, physiological signals, genomic information, and medical images. This enables the identification of latent associations that aid in diagnosing and treating individualized diseases. Modern advances in deep learning, such as graph neural networks [5] and attention processes [6], have created new opportunities for combining and analyzing multimodal medical data. These innovative techniques have shown promise in better capturing contextual data and complex relationships to improve healthcare and enable more personalized and efficient care. Multimodal healthcare, which is becoming a major influence in the medical field, aims to use information technology to transform clinical procedures. It has drawn a lot of interest from academics and professionals as a possible approach to tackling important disease diagnosis issues in areas with unequal access to medical resources. The emergence of multimodal healthcare addresses pressing medical challenges. First, human health is too complex to be reduced to a single test or metric. No single diagnostic method can adequately account for the complex network of genetic, environmental, and behavioral factors that contribute to cancer [7], Diabetes [8], and neurodegenerative disorders [9]. Second, there are opportunities and challenges associated with the explosion of healthcare data, which is predicted to double every few years. Clinicians are at risk of becoming overwhelmed by a sea of disparate facts if no mechanisms are in place to synthesize these data. Furthermore, in a time when people vary greatly in terms of genetic composition, lifestyle, and reactions to treatment, patients now demand more proactive and individualized care. Multimodal healthcare satisfies this need by customizing interventions to each patient’s specific profile, improving results while reducing trial and error.

Several recent reviews have discussed various facets of multimodal medical AI (Artificial Intelligence). However, their coverage is frequently limited or devoid of critical analysis. For example, Muhammad et al. [10] introduced several topics related to multimodal signal fusion for intelligent medical devices. The survey article was mainly focused on IoMT. It included several important topics about IoMT (Internet of Medical Things) applications and smart healthcare difficulties. The review highlights four main limitations: its narrow focus may exclude some fusion techniques in smart healthcare, selection bias in study criteria may distort results, findings may become outdated due to rapid technological advancements, and sensor data variability could impact the validity of synthesized insights. Amal et al. [11] presented the applications and scope of machine learning and multimodal data concerning cardiovascular healthcare. The challenges of multimodal data fusion were also briefly covered by the writers. The study identified four main limitations: (1) a stated lack of conflicts of interest, although there may still be concerns about the independence of the results, (2) limited generalizability because of the study’s narrow demographic focus, (3) potential biases from the use of genetic and electronic health data, and (4) difficulties reproducing the intricate machine learning framework, which prevents practical application. In the opinion of Stahlschmidt et al. [12] Biomedical data is becoming more and more multimodal, offering a useful source of hidden information that is inaccessible using single-modality methodologies. The complex link between the modalities can be captured by deep learning approaches, which can combine multimodal data. Transfer learning is a good approach for multimodal medical big data, according to the authors. Although it provides a comprehensive review of multimodal deep learning techniques for biological data fusion, the research has some significant drawbacks. It is limited in its applicability to performance evaluation because it excludes quantitative comparisons between approaches and rigorous benchmarking. There is little technical depth and no emphasis on algorithmic implementation or reproducibility, as well as little help in choosing suitable fusion algorithms for biomedical applications. There is only a cursory recognition of real-world deployment issues, including infrastructure, privacy, and missing data. Moreover, the evaluation might already be out of date because of how quickly the subject is evolving, particularly with the introduction of more recent architectures like transformers. Pei et al. [13] discussed the main features of medical multimodal fusion techniques, including supported medical data, diseases, target samples, and implementation performance, and examined the effectiveness of current multimodal fusion pre-training algorithms. Furthermore, this paper outlines the primary obstacles and objectives of the most recent developments in multimodal medical convergence. It has various significant drawbacks. In contrast to providing in-depth technical insights or critical assessments of the approaches offered, it emphasizes publication trends. The lack of a defined research selection technique in the report compromises reproducibility and transparency. In addition, it offers little clinical or practical viewpoints and scant attention to how models function in actual environments. Evaluation metrics are also barely mentioned, and the section on future initiatives is vague and short. Lastly, the review feels old in some ways because it ignores some of the most recent developments in multimodal learning. Recently, Shaik et al. [14] concentrated on algorithmic techniques for managing multimodal data, including rule-based systems, feature selection, natural language processing, and data fusion using machine learning and deep learning techniques. Additionally, several smart healthcare concerns were discussed. After that, they suggest a general framework for combining multimodal medical data that is consistent with the DIKW (data to information to knowledge to wisdom) paradigm. This study overemphasizes the DIKW framework, offers little technical depth, and lacks a defined review approach. It lacks a comparative study of approaches, under-represents newer developments, such as transformer models, and offers little information about clinical integration. The future directions are imprecise and high-level, limiting the paper’s practical value.

This review makes three main contributions. First, we provide a taxonomy of multimodal learning strategies based on recent architectural developments that are specific to the medical field. Second, we evaluate the application of these techniques in real-world clinical settings, ranging from genetics to radiology, emphasizing examples where they have produced quantifiable gains in diagnostic performance. Third, we point out remaining challenges and suggest future lines of studies, most with a focus on interpretability, standardization, and ethical issues, to steer the creation of reliable, implementable solutions. We hope to accomplish this by providing a unique and practical viewpoint that transcends traditional literature reviews, thereby bridging the gap between clinical utility and machine learning innovation.

The rest of this review is organized to make it easier to navigate. Section 2 provides basic information and fundamental ideas, including important architectural frameworks and unimodal and multimodal techniques in medical diagnostics. Section 3 explains the different kinds of medical data used in multimodal learning. Section 4 examines the latest deep-learning methods used in medical diagnosis. Section 5 discusses the methods used to integrate multimodal data. Section 6 demonstrates how these techniques are used in actual healthcare settings. Section 7 discusses the main issues facing multimodal deep learning is facing, and Section 8 proposes possible fixes. Lastly, Section 9 describes upcoming research avenues and new developments in this rapidly changing field.

2.1 Unimodal in Medical Diagnostics

Unimodal medical diagnostics refers to the usage of a single diagnostic or imaging technique for the evaluation and diagnosis of medical disorders. This method has been a mainstay of healthcare for many years, and various approaches offer insightful information about patient health. Many unimodal diagnostic techniques are frequently employed in clinical settings, including computed tomography (CT), magnetic resonance imaging (MRI), ultrasound, and X-rays [15]. Physicians can see inside structures and spot problems using these imaging methods without undergoing invasive treatments. These unimodal diagnostic methods’ capabilities have been greatly increased in recent years using artificial intelligence (AI) and machine learning [16]. For example, deep neural networks (DNNs) have demonstrated state-of-the-art performance in image classification tasks, offering doctors diagnostic assistance when examining medical images [17].

2.1.1 Performance and Applications

In medical diagnostics, unimodal models are frequently employed, especially for imaging-based tasks like pulmonary abnormality prediction, breast mass categorisation, and knee osteoarthritis detection. For instance, knee osteoarthritis has been diagnosed using only imaging data using deep learning models such as InceptionV3 and EfficientNetv2, which have demonstrated remarkable accuracy (up to 0.75 for 3-class severity classification) and, in certain situations, outperform more intricate multimodal models when only imaging features are considered [18]. In a similar vein, unimodal machine learning systems that use ultrasonic features have demonstrated performance in breast mass categorisation that is on par with human experts (AUC 0.82–0.84) [19].

Unimodal diagnostic models, which rely on a single data source, offer certain methodological and practical advantages, such as simplicity, lower computational cost, and easier implementation. The main advantages of these systems are their ease of use and computational effectiveness. Because these models do not require the synchronization or integration of numerous data sources, they are typically simpler to build and implement than multimodal systems [19]. Unimodal techniques are therefore frequently less computationally demanding, making them appropriate for environments with constrained computational resources. Their great performance at baseline is another asset. Unimodal models can compete with or even surpass multimodal systems in situations where the selected modality is informative, such as high-resolution imaging in radiology or unique molecular fingerprints in genomics [20]. This is particularly true for well-characterized disorders where most of the diagnostic information required is extracted by a single data type. Additionally, unimodal diagnostics are more appealing due to their practicality and clinical relevance. Most clinical workflows in use today are built on single-modality data, including histopathology slides, blood tests, or radiography pictures [21]. Therefore, unimodal models can be easily incorporated into current diagnostic workflows without requiring significant infrastructure modifications.

Although unimodal diagnostics offer many benefits, they also have certain drawbacks [22,23]. Their limited scope of knowledge is a major concern. These models might overlook supplementary information that could improve diagnostic accuracy if they only used one data modality, particularly in complicated or heterogeneous circumstances. For instance, a more thorough understanding of disease pathophysiology can be obtained by integrating imaging data with genetic or clinical information. However, limited generalizability presents another difficulty. When the modality is absent or distorted, unimodal models may not work as intended or are frequently sensitive to data quality changes. This dependence on a single source may reduce robustness, particularly in clinical settings where data are noisy or lacking. Finally, accuracy and false-positive rates are important issues. Compared to multimodal systems, which are better able to cross-validate signals across data types, unimodal biomarker-based diagnostics have been linked to increased false-positive rates and decreased precision in fields such as oncology. This restriction may cause patients to feel anxious and require needless procedures.

However, unimodal diagnostics have certain drawbacks. A more thorough diagnostic approach is frequently necessary because of the complexity of many medical diseases. As a result, multimodality imaging approaches have been developed, combining data from many imaging modalities to provide a more comprehensive view of a patient’s state [24]. Although multimodal approaches are becoming increasingly popular, continuous research is still improving unimodal diagnostic methods. For instance, single-modality diagnostic tools are becoming more sensitive and specific due to developments in quantum biosensors [25].

2.2 Multimodal in Medical Diagnostics

Multimodal medical diagnostics is a new field that integrates data from several data sources and imaging modalities to provide a more thorough understanding of patient situations and increase diagnosis accuracy. This method offers a synergistic effect in clinical diagnosis and medical research by utilizing the complementary nature of several imaging modalities and data kinds [26]. Combining many modalities, including pathological slides, radiological scans, and genomic data, enables a more comprehensive understanding of the patient’s situation. Recent developments in artificial intelligence, including deep learning-based methods for multimodal fusion, have greatly improved multimodal medical diagnostics [27]. Research has demonstrated, for example, that AI models such as GPT-4V can attain greater diagnostic accuracy when given multimodal inputs as opposed to single-modality inputs [28]. Curiously, multimodal medical diagnostics encompasses more than just conventional imaging modalities. New technologies like upconversion nanoparticles (UCNPs) are being investigated for their potential use in targeted therapies and multimodal cancer imaging [26]. Furthermore, chances to further advance precision oncology beyond genomics and conventional molecular approaches are presented by the combination of improved molecular diagnostics, radiographic and histological imaging, and coded clinical data [29]. Because of their increased precision and dependability, multimodal medical diagnostics can be considered a potential new area in healthcare. Still, there are obstacles to overcome, such as the requirement for strong image fusion algorithms, the management of partial multimodal data, and the resolution of privacy and ethical issues [30].

Multimodal architecture typically follows a structured pipeline, starting with feature extraction from several data modalities, including text, audio, pictures, and sensor inputs. This includes unstructured text (such as clinical notes and chief complaints), structured clinical information (such as laboratory findings and patient demographics), and imaging data (such as MRI, CT, PET (positron emission tomography), and ultrasound) in medical applications [31]. Word embedding models use textual analysis; however, convolutional neural networks (CNNs) are frequently used for visual and audio data [32]. For instance, combining genetic, pathological, radiological, and clinical data in the diagnosis of cancer offers a thorough description of disease phenotypes [33].

Following initial data extraction, each modality is subjected to specific preprocessing: structured and unstructured textual data are tokenized or converted into embeddings, whereas imaging data are usually normalized and segmented. The use of embedding layers in advanced deep learning architectures allows for the cooperative processing of visual and textual tokens in later stages by transforming inputs, including text and images, into a single representation space.

Data fusion is a crucial stage in multimodal pipelines that combine diverse information sources to create richer representations. There are three different levels of fusion: data-level fusion, which combines low-level features or raw data early on; feature level fusion, which incorporates modality-specific features into neural network architectures, frequently using transformer or convolutional layers; and decision-level fusion, which combines outputs from independently trained models, usually using ensemble techniques or voting strategies. Research has demonstrated that feature-level fusion often outperforms late fusion methods, especially in deep-learning models. Furthermore, other frameworks use methods like deep unfolding operators, which incorporate sparse priors and structured learning principles into the network design to include domain knowledge [34]. Feature extraction, fusion, and classification are becoming less distinct in modern multimodal systems, leading to unified designs that capture inter- and intra-modal interactions. Transformer-based models and convolutional neural networks (CNNs) are both commonly used; the latter is particularly good at joint multimodal representation learning using attention mechanisms. Complex anatomical structures and specialized designs, such as Multi ResU Net, have shown improved biomedical image segmentation performance. Furthermore, by including medical expertise at different stages of the model, knowledge-augmented networks can significantly increase diagnostic accuracy [35]. These models must be trained on extensive datasets with annotations and inputs that are synchronized across modalities. Standard criteria, including accuracy, sensitivity, and the area under the receiver operating characteristic curve (AUC), were used to assess performance, and multimodal models routinely outperformed baseline models with only one modality. The recent change toward unified multitask architectures that can manage issues like noisy data, missing modalities, and privacy concerns recent trend. Methods like hybrid secure models and deep hashing are being investigated to improve multimodal systems’ security, generalisability, and resilience [36,37].

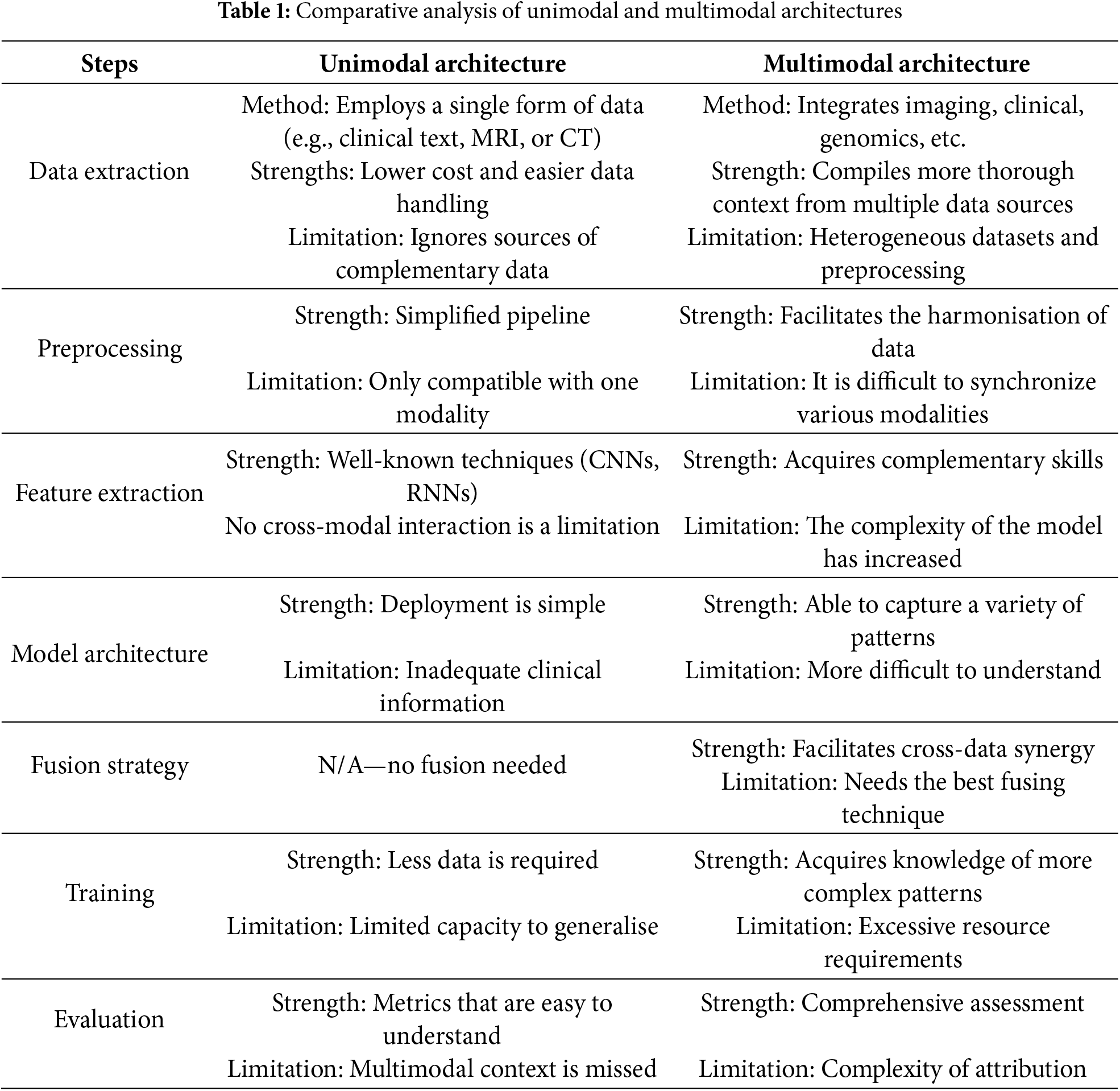

To ensure stable cross-modal associations, recent research emphasizes cross-modality techniques that align and synthesize data across modalities. Cross-modality learning, such as Cross-Modality Optimal Transport (CMOT), aligns disparate modalities into a shared latent space, enabling more reliable classification and missing modality inference in complex settings like cancer and cell-type identification [38]. Synthesis methods address data incompleteness by generating missing modalities from available ones [39]. Frameworks like MultiFuseNet, which integrate multiple screening test modalities, have proven effective in cervical dysplasia diagnosis [40], while MADDi employs cross-modal attention mechanisms for high-accuracy differentiation in Alzheimer’s disease stages [41]. Stability is further reinforced through methods like quaternion-based spatial learning and deep co-training, which improve segmentation performance across poorly annotated modalities [42,43]. Additionally, empirical approaches such as multimodally-additive function projection (EMAP) are used to determine whether improvements stem from true cross-modal interactions or dominant unimodal contributions [44], reinforcing the importance of evaluating and sustaining robust cross-modal coherence across all stages of multimodal processing. Table 1 presents a comparative analysis of unimodal and multimodal architectures, highlighting their respective strengths, limitations.

3 Overview of Medical Data Types

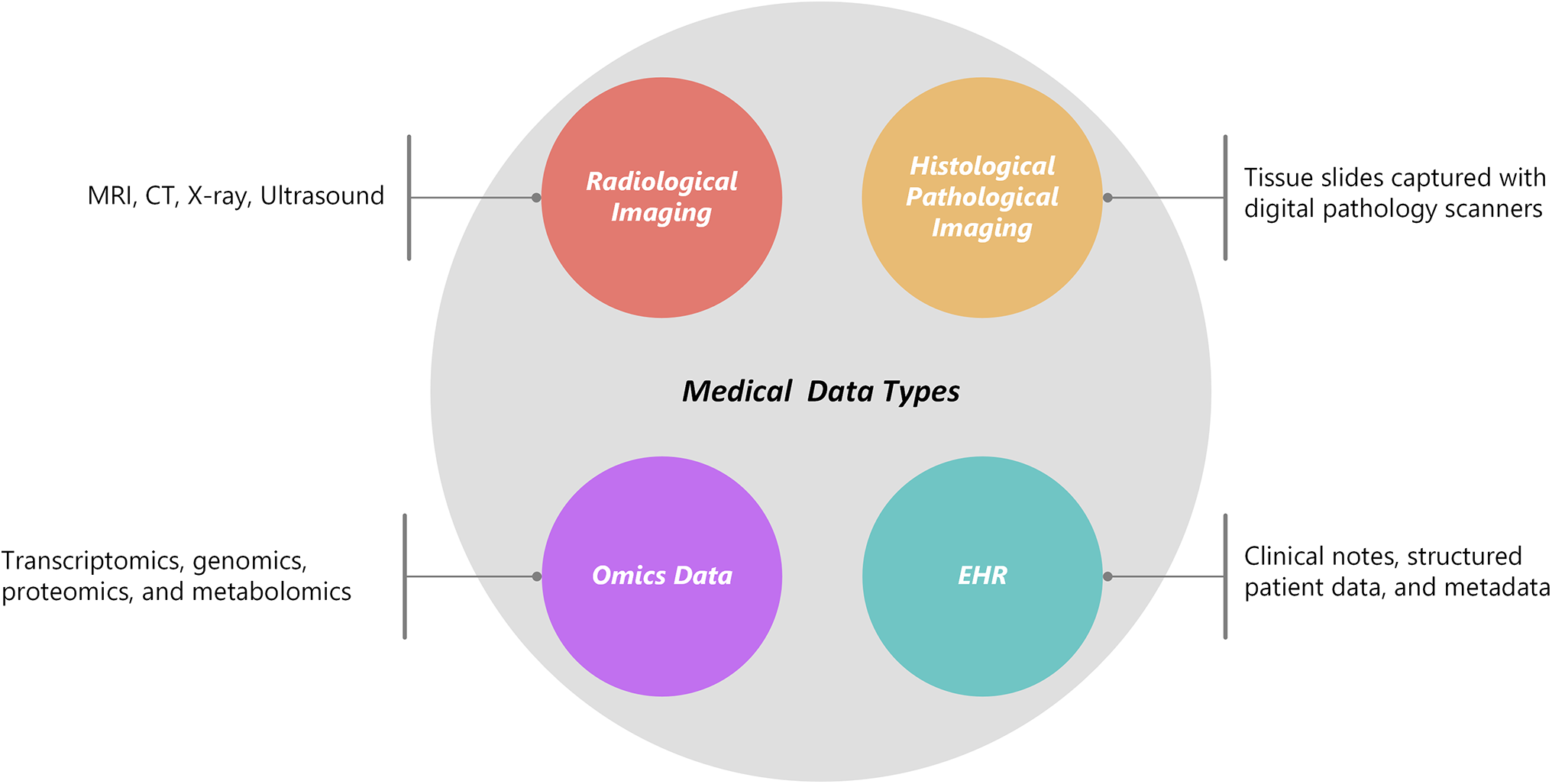

Multimodal deep learning extends these advantages by integrating data from multiple sources, including EHRs, radiological imaging, histological/pathological imaging, and omics data, as illustrated in Fig. 1. These modalities include raw, unstructured data that are unique to their formats. The data is processed using feature extraction techniques applied to medical imaging, wearable device data, and structured EHRs to generate valuable clinical insights. In the context of medical diagnostics, several major data modalities contribute to this process.

Figure 1: Medical data types

Medical 3D volumetric images are usually created from a stack of 2D slices with a specified thickness, representing a specific region of interest within the body. These individual slices can be processed and analyzed separately (as 2D images) or collectively (as 3D volumes) to extract vital information. Most medical imaging data are stored as 2D image slices in the Digital Imaging and Communications in Medicine (DICOM) format after acquisition [45]. This includes patient metadata, imaging procedure information, devices used for image acquisition, and imaging protocol settings. Radiological imaging could include MRI, CT, X-ray, or ultrasound.

X-rays are 2D grayscale radiograph pictures by nature. Five levels of attenuation can be distinguished using conventional radiography: air, fat, soft tissue, bone, and metal. The air looks dark on radiographs because most X-rays may flow through it because of its density, while much denser metal appears dazzling white because it absorbs most of the energy from the X-ray beam. The idea behind X-ray imaging is that different types of bodily tissues attenuate X-rays differently [46]. Different colors of gray are displayed for fat, soft tissue, and bone; fat is darker than soft tissue, and bone is lighter. However, X-rays are often used as a screening technique because they do not provide sufficient spatial depth information for definitive diagnosis. X-rays have been used in numerous recent studies for cardiology prediction tasks, including auxiliary conduction circuit analysis, pulmonary edema assessment [47], and cardiomegaly identification [48].

CT stands for Computed Tomography. CT scans are a common option for medical diagnostics because they provide high-resolution imaging, accessibility, affordability, and speed. The ability of ionizing radiation to differentiate soft tissues and expose patients to ionizing radiation [49]. The human body’s detailed cross-sectional images can be obtained from Computed Tomography (CT) scans [50]. These scans use radiographic projections obtained from various angles to reassemble several successive 2D slices, creating 3D picture volumes. CT is a flexible imaging method that is mostly used to find structural anomalies, to find tumors, to diagnose cardiac issues, and to image the brain for different neurological disorders. It is frequently used in cancer diagnosis [51], therapy planning [52], respiratory therapy [53], and cardiovascular research [54].

MRI facilitates tissue-specific reconstruction by measuring magnetization in both the longitudinal and transverse directions [55]. Without ionizing radiation, this method produces fine-grained images of internal structures, soft tissues, and organs. Numerous brain illnesses, such as Parkinson’s disease [56], multiple sclerosis [57], and Alzheimer’s disease [58], can be studied using this method.

Doppler techniques, which provide useful color overlays on grayscale images, are frequently used to observe blood flow and evaluate velocity [59]. Ultrasound is a preferred option for obstetrics and gynecology because of its noninvasiveness and absence of ionizing radiation [60]. And check numerous organs (liver, kidneys, etc.) [61] for possible problems. In addition, ultrasonography is crucial for monitoring the course of diseases and directing exacting surgical operations [62].

3.2 Histological/Pathological Imaging

Tissue slides were captured with digital pathology scanners. Histological and pathological imaging involves the examination of tissue samples under a microscope. These tissue slides are now digitally transformed into high-resolution images because of the development of digital pathology scanners, which provide sophisticated analysis, sharing, and storage.

3.3 Omics Data: Transcriptomics, Genomics, Proteomics, and Metabolomics

Omics technologies are a collection of high-throughput techniques used to investigate biological molecules at the system level. Proteomics analyses protein profiles, metabolomics maps small-molecule compounds, transcriptomics analyses RNA expression, and genomics decodes DNA sequences [63]. Therapeutic medications and possible risk protein biomarkers for the prevention and treatment of cancer. The proposed study [64] combined extensive genome, transcriptomics, proteomics, and metabolomics data. Thirty-six possible druggable proteins for cancer prevention were identified in subsequent investigations. Furthermore, a review of more than 3.5 million electronic health records revealed medications associated with either a higher or lower risk of cancer, providing new information for treatment approaches.

3.4 Electronic Health Records (EHRs)

EHRs are digital repositories that contain patient health data, such as imaging reports, lab findings, clinical notes, and demographic metadata. EHRs have emerged as a key component of contemporary healthcare, facilitating research, population health management, and data-driven decision-making. EHRs contain a multitude of information, both structured (such as vital signs and diagnoses) and unstructured (such as free-text notes) [65].

By aligning these heterogeneous data types, multimodal deep learning models aim to improve diagnostic accuracy and uncover complex disease mechanisms. Techniques vary widely, from early fusion (e.g., concatenating feature vectors) to late fusion (e.g., combining model output).

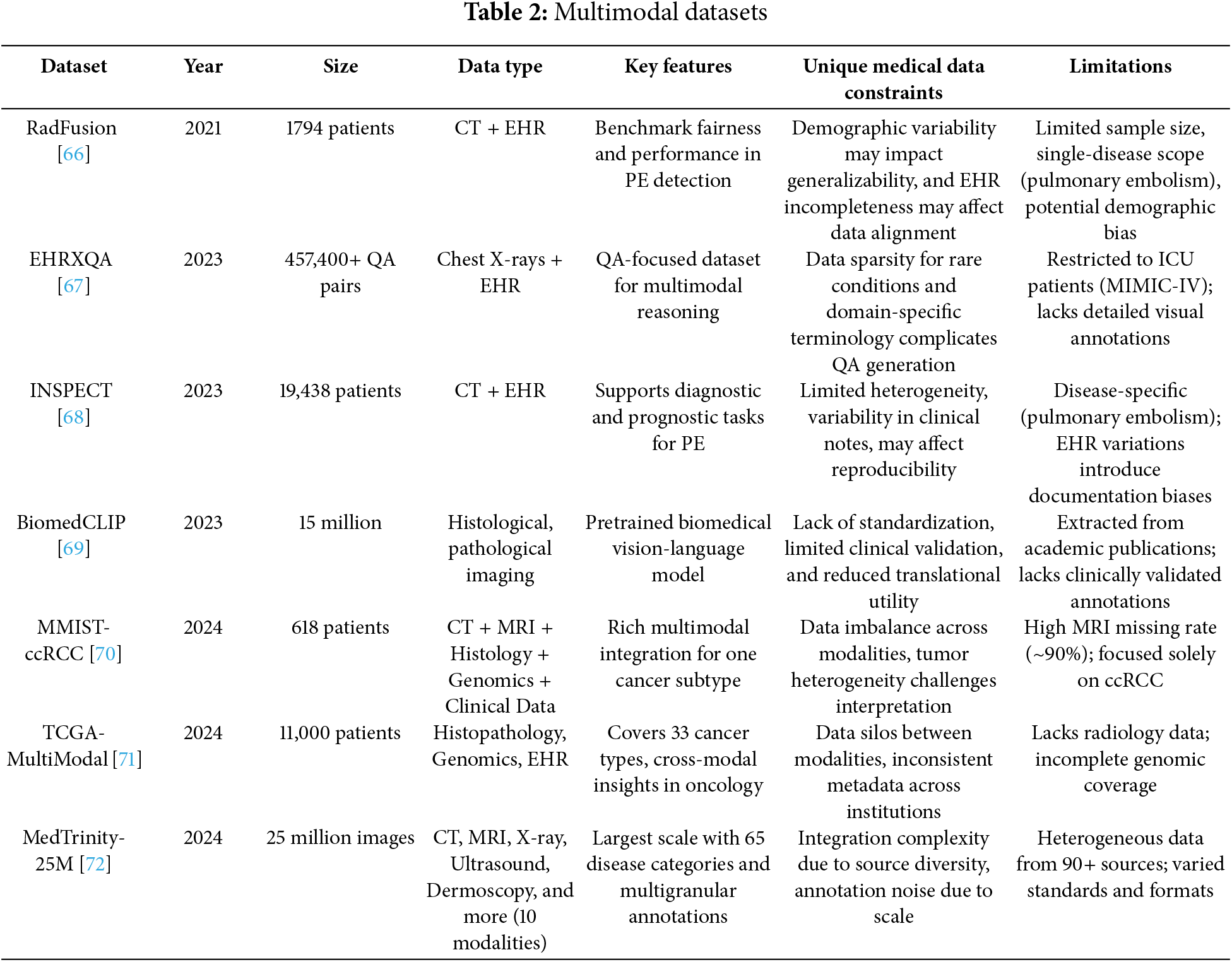

These various data types require efficient integration techniques that are becoming increasingly prevalent in contemporary multimodal datasets. Representative examples are presented in Table 2.

4 State of the Art in Deep Learning Techniques for Medical Diagnostics

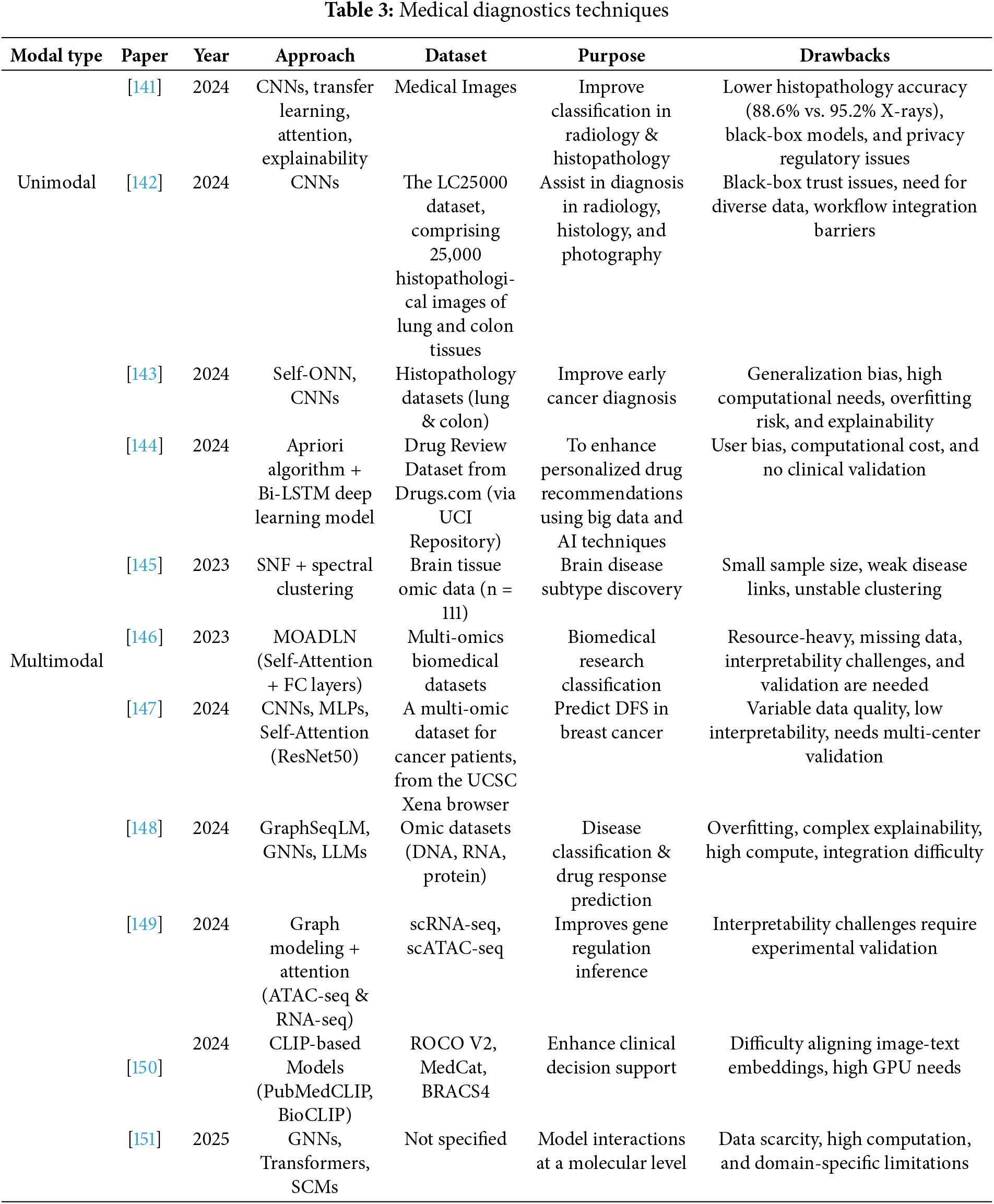

Deep learning’s amazing capacity to extract hierarchical representations directly from raw and high-dimensional data has greatly benefited healthcare research. This paradigm change has made it easier to create systems that can assess complicated medical data in a highly accurate, automated, and scalable manner. In addition to improving diagnostic and prognostic activities, deep learning’s incorporation into the healthcare industry has set the stage for intelligent decision support systems. A comparative review of previous research using deep learning in medical diagnostics is presented in Table 3, which also highlights the methodological frameworks, goals, and inherent constraints identifying the model type of each study.

Three key advantages of deep learning, automated feature extraction, scalability, and transfer learning, are primarily responsible for their efficacy in medical applications. These benefits have made deep learning architectures indispensable for handling the growing complexity of contemporary healthcare data. Automated feature extraction eliminates the need for handcrafted features and enables deep neural networks to extract important patterns from raw data input. The hierarchical structure of these models makes it easier to learn progressively more abstract representations, leading to a deeper comprehension of the underlying facts [73]. Scalability is one of deep learning’s other main advantages in the medical field. Large-scale, heterogeneous medical datasets can be used to train deep models due to the availability of high-performance computing infrastructure. This potential has sparked the creation of hybrid deep learning frameworks that can effectively handle high-dimensional data while simultaneously tackling issues like computational security, data privacy, and interoperability in medical settings [74]. Transfer learning has increased deep learning’s applicability even more, especially in scenarios with limited labeled medical data. Researchers can optimize these architectures for particular healthcare problems using models that have already been trained on sizable general-purpose datasets. This improves performance and speeds up convergence [75]. When combined, these key components, scalable model development, automated feature extraction, and the thoughtful application of transfer learning, have established deep learning as an essential instrument in medical research. The creation of next-generation medical technologies that are not only more precise and effective but also better able to adjust to the intricacies of actual clinical settings depends on these developments. Building on this fundamental summary of deep learning’s influence in healthcare, a more thorough analysis of the core architectures, such as Transformers, Recurrent Neural Networks, and Convolutional Neural Networks, is necessary to comprehend their unique contributions, advantages, and uses in the medical field.

4.1 Convolutional Neural Networks (CNN)

Deep learning has greatly improved medical diagnoses by increasing the precision and effectiveness of image processing, especially when CNNs are used. CNNs have transformed medical image analysis and diagnostics. CNNs have outperformed traditional computer-aided detection (CAD) systems in various tasks, including segmentation, object detection, and image classification [76]. CNNs can automatically learn complex image features, removing the need for manually engineered feature extraction, a major advantage over traditional machine learning techniques [77]. CNNs have been used in a variety of imaging modalities in medical diagnostics, such as MRI, CT, X-ray, and histopathology. The excellent accuracy of CNNs in analyzing medical pictures may help radiologists and physicians make more accurate and timely diagnoses [78]. CNN’s performance in image recognition tasks, as exemplified by models like AlexNet and GoogleNet, which have been successfully applied to medical images, has fueled their popularity in medical diagnostics [79]. These networks have played a key role in helping doctors make more accurate diagnoses by automating the examination of complicated medical images [80]. CT scans are used to detect and segment pelvic and omental lesions in patients with ovarian cancer [81].

More recent works have combined radiology images with text data (radiology reports) to augment understanding for Multimodality. The researchers proposed a framework [82] combines survival prediction, clinical variable selection, and 3D CNN-based feature extraction for the prognosis of renal cell cancer. It uses a deep learning model with Logistic Hazard-based loss for survival prediction, chooses clinical variables using Spearman and random forest scores, and predicts tumor ISUP grades from CT images. For best results, variable selection is fine-tuned through nine experiments. Other researchers [83] produced an improved CNN model is presented that overcomes data fusion and feature extraction restrictions to better multimodal medical image segmentation. Other studies [84] have combined MRI and CT imaging to create deep learning-based diagnostic models for osteoporosis prediction. Utilizing both unimodal and multimodal strategies. To construct the findings part of radiology reports, radiological images [4] and patient indication text in a multimodal strategy for automatic report generation were integrated using chest X-ray (CXR) imaging.

Digital pathology involves extremely high-resolution whole-slide images (WSIs). CNNs and attention-based methods have been used to localize regions of interest, with further integration of patient metadata for contextual interpretation. Researchers [85] examined the connection between Tumor mutational burden (TMB) clinical variables, gene expression, and image features by analyzing histopathological pictures, clinical data, and molecular data from The Cancer Genome Atlas (TCGA). To go beyond conventional unimodal methods for multimodal breast cancer diagnosis [86]. The researchers investigate the integration of histopathological pictures with non-image data. Enhancing diagnostic accuracy, clinician confidence, and patient involvement, the study highlights the significance of transparent AI decision-making by utilizing Explainable AI (XAI). Researchers combined genomic data [87] with histopathological images, a multimodal CNN-ensemble method for early and precise pancreatic cancer identification. The model uses feature fusion techniques, deep learning survival models, and ensemble CNNs to improve tumour segmentation, classification, and survival prediction.

Joint analyses of radiological and histological data have shown improved classification and staging results in cancer diagnostics. For instance, automated tumor detection can benefit from both imaging modalities radiology provides macroscale structural context, while histology validates microscale cellular anomalies.

It’s crucial to remember that, despite their enormous potential, CNNs have drawbacks. Large volumes of well-annotated training data are required, which can be costly and challenging to acquire in medical contexts. This is one major problem. To overcome this, transfer learning approaches have been investigated, in which CNNs that have already been trained on non-medical pictures are adjusted for particular medical tasks [88]. Researchers are also looking at self-supervised learning and transformer networks as ways to further enhance performance and lower data needs [89].

4.2 Recurrent Neural Networks (RNNs)

The use of deep learning methods, especially Recurrent Neural Networks (RNNs), has become essential for improving medical diagnosis. RNNs are skilled at handling multivariate time-series data, which is common in clinical environments like intensive care units (ICUs). This is especially true of those that use Long Short-Term Memory (LSTM) units. An innovative work [90] empirically assessed how well LSTMs can identify patterns in clinical parameters and demonstrated that they can categorize several diseases using only raw time-series data. According to their findings, LSTMs outperformed conventional machine learning models, providing a solid basis for the application of deep learning in medical diagnostics. The potential of deep learning approaches to forecasting violent episodes during patient admissions has been investigated in the field of psychiatric care. Their research demonstrated the superiority of deep learning over traditional techniques by using clinical text data stored in Electronic Health Records (EHRs) to achieve state-of-the-art predicted accuracy performance. The RNN-SURV model outperformed state-of-the-art methods in terms of the concordance index (C-index) in survival analysis, demonstrating superior performance in calculating risk scores and survival functions for individual patients [91]. RNNs have also shown promise in solving problems outside the mainstream of medical diagnosis. For example, a Modified Long Short-Term Memory (MLSTM) model has been constructed to predict new cases, fatalities, and recoveries in the COVID-19 pandemic setting, outperforming traditional LSTM and Logistic Regression models [92].

Increasingly, multimodal deep learning, which combines information from various medical sources, is being used to improve the precision and effectiveness of diagnosis. When used in combination with other deep learning models, recurrent neural networks (RNNs) are crucial for analyzing temporal and sequential medical data, thereby enhancing disease categorization and identification. RNNs are particularly good at interpreting sequential or temporal data, such as time-series signals (e.g., ECG) or dynamic imaging (e.g., ultrasound movies). When combined with other models, such as CNNs and autoencoders, RNNs improve temporal pattern identification and feature extraction, which is important for applications like video-based diagnostics, disease progression prediction, and cardiac MRI segmentation [93,94]. Reported prediction accuracies of up to 98% [95] have been achieved in image recognition and sequential data processing using hybrid frameworks that combine RNNs with CNNs and autoencoders.

Recurrent neural networks (RNNs) are a type of deep learning model that has shown remarkable efficacy in a variety of medical applications, most notably in tumor categorization and detection. The applications of these models to multimodal imaging modalities, like PET-MRI and PET-CT, have demonstrated their capacity to enhance diagnostic robustness and precision, enabling more precise and trustworthy tumor evaluations [96,97]. In addition, RNN-integrated multimodal fusion models have substantially enhanced the sensitivity and accuracy of disease recognition tasks, outperforming conventional single-modality methods. This enhancement reaches important domains, such as Alzheimer’s disease and heart disorders, where RNNs obtain high classification accuracy when combined with multimodal neuroimaging and clinical data [33]. These developments facilitate early prognosis and diagnosis, laying the groundwork for prompt clinical interventions. Multimodal deep learning frameworks have several advantages [98], such as improved accuracy, reduced diagnostic time and expense, and increased resilience to noise and adversarial attacks—all of which are critical in clinical contexts. However, there are still issues regarding enhancing model interpretability, refining data fusion techniques, and incorporating expert medical knowledge to increase diagnostic accuracy. To properly use deep learning models in medical diagnostics, these constraints must be overcome [99].

Graph Neural Networks (GNNs) have demonstrated a great deal of promise in improving medical diagnosis. GNNs are especially well-suited for integrating various medical data types because they effectively blend graph structure representations with deep learning’s outstanding prediction accuracy [100]. In cancer research, where data range across several dimensions, modalities, and resolutions—from digital histopathology slides and genetic data to screening and diagnostic imaging, this method is particularly helpful [101]. Graph Neural Networks (GNNs) are becoming a crucial tool for combining and evaluating these multimodal datasets, providing deeper insights and increased accuracy, particularly in intricate domains like neurodegenerative illnesses and oncology. Deep reinforcement learning (DRL) combined with GNNs has further increased the potential of models for use in medical diagnostics. This combination can lead to more reliable and accurate diagnostic tools by strengthening the application of GNNs and improving the formulation of DRL [102] For example, an AI-powered model that uses neural network optimization, multilevel thresholding, and image preprocessing has 92% accuracy in classifying various forms of brain tumors [103]. Incorporating protein-protein interaction networks to combine omics data with imaging features. The researchers introduced [104] the integration of multi-omics data in biomedical research using graph-based machine learning techniques, namely graph neural networks (GNNs). Multi-omics techniques, whether used at bulk or single-cell resolution, aid in finding biomarkers, predicting treatment response, and gaining a mechanistic understanding of cellular and microenvironmental processes. Multi-omics information [105], such as transcriptomics, proteomics, epigenomics, and genomics, provide a thorough understanding of cellular signaling pathways. Because they can naturally integrate and represent multi-omics data as a biologically meaningful multi-level signaling graph and interpret multi-omics data using graph node and edge ranking analysis, graph AI models, which have been widely used to analyze graph-structure datasets, are perfect for integrative multi-omics data analysis.

In summary, multimodal deep learning using GNNs is an effective approach for medical diagnostics due to its efficient integration and analysis of many kinds. This technology could improve patient outcomes by streamlining workflows and reducing interpretation time [106]. However, for a smooth transition into clinical practice, issues including data heterogeneity, model interpretability, and regulatory compliance must be resolved [107].

4.4 Generative Adversarial Networks (GANs)

Adversarial Generative Networks (GANs) have greatly improved medical imaging by producing realistic synthetic images for data augmentation, which has improved segmentation and classification, particularly for rare disorders [108,109]. They provide thorough diagnosis and personalized care by promoting multimodal analysis through image-to-image translation and cross-modality synthesis [110,111]. In clinical settings, GANs improve tasks like segmentation, reconstruction, and denoising in the diagnosis of diseases like Alzheimer’s and myocarditis [112,113]. One of the primary benefits of GANs is their capacity to produce realistic synthetic data in medical diagnostics, which can be applied to data augmentation and to solve the problem of medical imaging’s sparse datasets [114]. This is especially helpful when training AI-based computer-aided diagnostic systems because performance improvement requires multiple different data types [115]. In addition, in tasks involving image augmentation, denoising, and super-resolution, GANs have demonstrated promise. These tasks can increase picture quality and lower radiation exposure in specific imaging modalities [116]. Although GANs have made impressive strides in medical imaging applications, obstacles remain. More dependable and consistent outcomes require addressing problems such as mode collapse, non-convergence, and instability during training [117]. Additionally, it is crucial to guarantee that GANs learn the statistics essential to objective picture quality assessment and medical imaging applications [118]. As this area of study develops, GANs could transform medical diagnostics by facilitating more precise and effective picture synthesis, analysis, and interpretation in a variety of modalities [119].

Transformer-based models use embedding layers and attention processes to transform different inputs (such as text, images, and structured data) into cohesive representations for multimodal data analysis. These models provide a comprehensive comprehension of patient data by learning both intra- and intermodal interactions. For instance, models that combine laboratory results, clinical histories, and radiographs into a single diagnostic framework using visual and text tokens and bidirectional attention blocks outperform models that use just one input type or analyze modalities independently [120,121]. Interestingly, some studies have explored hybrid techniques that combine the strengths of Transformers and Convolutional Neural Networks (CNNs). For example, the HybridCTrm network outperformed fully CNN-based approaches in multimodal medical picture segmentation tasks [122]. This demonstrates that using both local and global feature representations enhances performance. Among Transformer-based designs, Vision Transformers (ViT) and other devices have demonstrated exceptional performance in jobs involving medical image interpretation tasks. For example, to overcome restrictions such as the absence of cross-modal feature interaction and local feature extraction, a unique lightweight cross-Transformer based on a cross-multiaxis mechanism has been developed for multimodal medical picture fusion [123]. An ensemble method that included the ViT and EfficientNet-V2 models outperformed the standalone models in brain tumor classification, achieving an impressive 95% accuracy [124]. Leveraging self-attention to correlate genomic markers with imaging signatures. A new deep learning model called DeepFusionCDR [125] combines drug chemical structures with multi-omics data from cell lines to forecast cancer drug responses (CDRs). For analyzing the chemical structures of drugs using transformers that are specialized in SMILES. A transformer-based deep learning model called DeePathNet [126] was developed for the processing of multi-omics data in cancer research. It combines information about cancer-specific pathways to enhance subtype identification, cancer classification, and treatment response prediction.

Thus, transformer-based multimodal deep learning techniques have demonstrated notable progress in medical diagnosis. These techniques have demonstrated promise in several applications, such as the diagnosis of Parkinson’s disease, classification of brain tumors, and detection of cancer [127]. By combining various data types and using transformer structures, these methods provide better interpretability and diagnostic accuracy, as well as the possibility of optimizing clinical operations. To fully exploit these technologies in healthcare, further study and cooperation between medical professionals and AI specialists are essential as the field develops. Enhancing early Parkinson’s disease detection through multimodal deep learning and explainable AI: insights from the PPMI database.

4.6 Autoencoders and Variational Autoencoders (VAEs)

VAEs have been effectively used in biomedical informatics applications, such as large-scale biological sequence analysis, integrated multi-omics data analytics, and medical image classification and segmentation [128]. Variational Autoencoders (VAEs) have shown great promise in medical diagnostics through improved interpretability, representation learning, and multimodal data fusion. They have made it possible to grade gliomas accurately using interpretable MRI-based characteristics, and expedited the screening process for cognitive impairment using a variety of data sources [129] and enhanced the detection of early cardiac disease by combining imaging and clinical data [130]. Additionally, VAEs addressed data scarcity by producing synthetic eye-tracking data [131] and performed better than conventional approaches in deriving strong representations from metabolomics and protein data [132,133]. Additional model improvements, like adversarial training and attention processes, improved performance in tasks involving face analysis and cancer detection [134].

4.7 Explainable AI (XAI) in Deep Learning

XAI is essential for increasing the transparency and reliability of deep learning models, particularly in high-stakes medical applications. The goal of this study was to clarify the data underlying the deep learning black-box model, thereby exposing the decision-making process [135,136]. In the healthcare industry, where every choice or judgment has associated dangers, this is especially crucial. By assisting doctors in comprehending and interpreting AI-generated data, XAI procedures can increase their trust in the technology’s dependability [137]. The development of innovative techniques for COVID-19 classification models, which offer both quantitative and qualitative visualizations to improve doctors’ comprehension and decision-making, is an example of recent developments in XAI for medical applications [138]. Researchers have also investigated XAI approaches for regression models (XAIR), which tackle the particular difficulties in comprehending predictions for continuous output [139]. Researchers have proposed frameworks for identifying XAI strategies in deep learning-based medical image analysis to advance the field. These frameworks classify methods according to certain XAI criteria and anatomical location [140]. In the end, such efforts aim to create more dependable and understandable AI-driven diagnostic tools by standardizing and enhancing the use of XAI in healthcare.

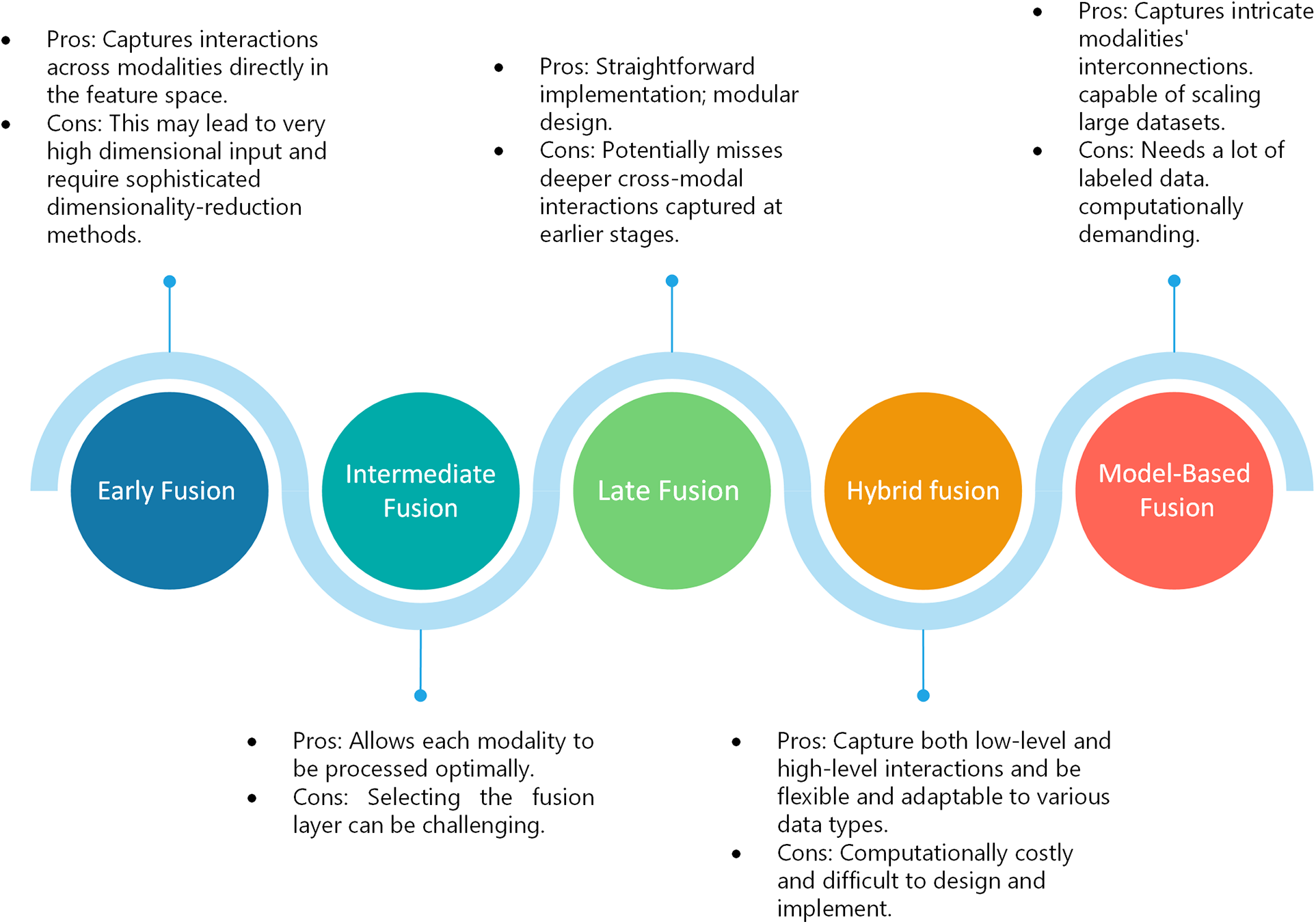

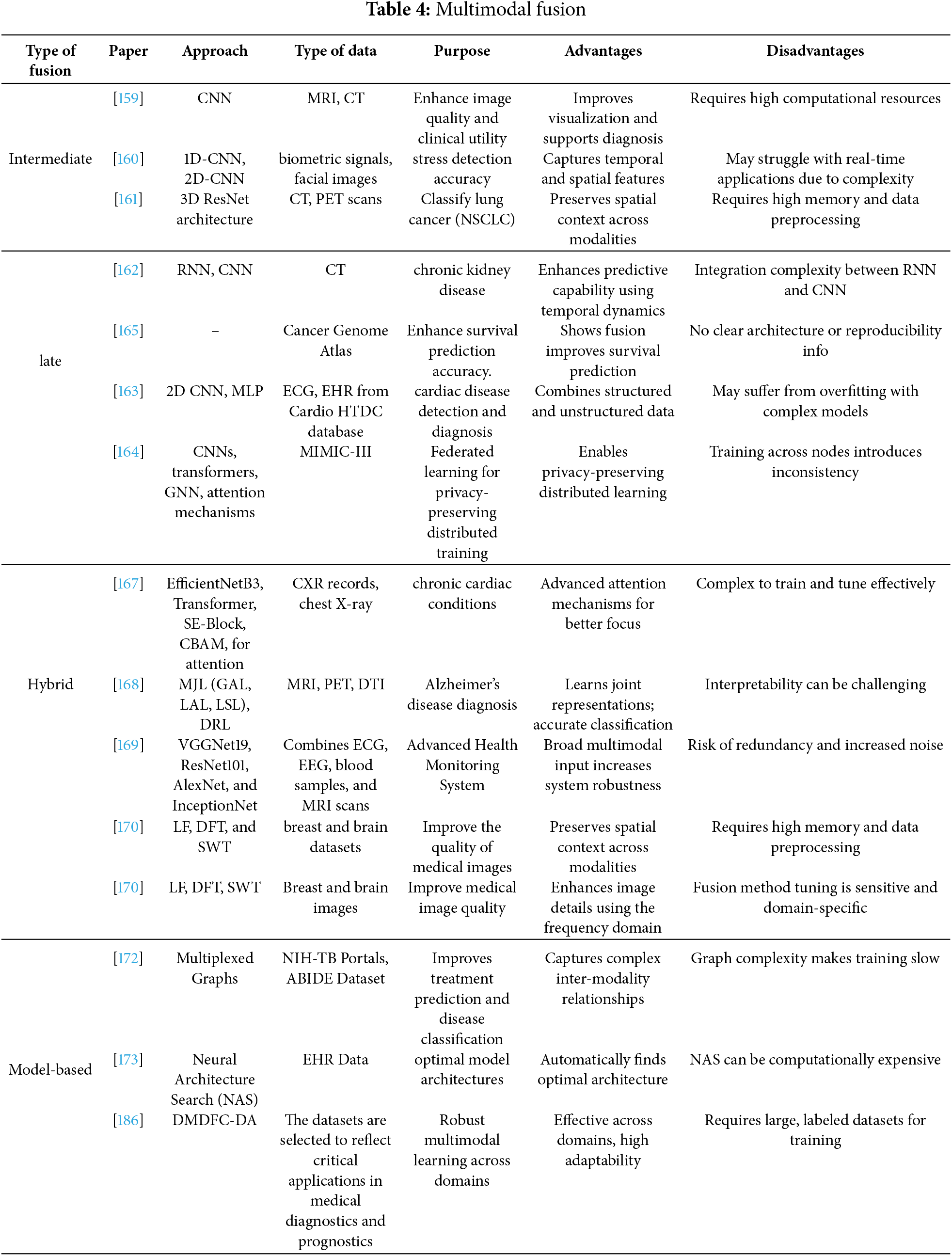

In medical diagnostics, multimodal integration combines various data types discussed in Section 3, including clinical text, imaging, molecular profiles, and structured electronic health records, to improve the accuracy of diagnosis and offer a thorough grasp of patient health [152]. These modalities [153] include radiological and histological images, omics data (e.g., proteomics, genomes), structured variables from electronic health records, unstructured clinical narratives, and even audio or video recordings of clinical conversations. Multimodal integration can be further extended in smart healthcare systems to incorporate contextual and behavioral data that reflect environmental and lifestyle factors. Combining such diverse data sources allows for uncovering hidden patterns, correlations, and interdependencies essential for identifying risk factors, predicting disease progression, enhancing treatment regimens, and implementing preventative measures. The deep learning methods described in Section 4 enable the integration of these various modalities by providing scalable frameworks for learning joint representations and facilitating end-to-end predictive modeling. Typically, integration involves modality-specific feature extraction (e.g., Random Forests for structured data, CNNs or vision transformers for images, and transformer-based encoders for clinical text), followed by fusion algorithms that align and merge the results. Table 4 classifies and summarizes contemporary fusion approaches based on their underlying processes and medical applications, while Fig. 2 illustrates the advantages and disadvantages of each fusion type (data-level, feature-level, and decision-level). In certain applications, the uncertainty present in real-world clinical data is managed through probabilistic reasoning (e.g., Bayesian models) [154]. Despite the revolutionary promise of multimodal systems, there are certain challenges, such as temporal misalignment, data heterogeneity, and the lack of standardized validation frameworks [155]. Additionally, recent research has indicated that performance improvement does not necessarily involve simply adding more modalities. For instance, ChatGPT-4V performed worse on diagnostic tasks than its text-only counterpart, despite having access to both visual and textual input [156]. Unlocking the full potential of multimodal diagnostics in clinical settings requires addressing these limitations [157]. This section describes the primary types of multimodal fusion: data-level, feature-level, hybrid fusion, decision-level, and model-based fusion, and provides a thorough analysis of each implementation strategy.

Figure 2: Multimodal fusion types

5.1 Early Fusion (Feature-Level Fusion)

Raw data or extracted features from multiple modalities are combined early.

Modality-specific networks process each input type separately, producing latent representations. These representations are merged at an intermediate layer. The study [158] proposed a thorough analysis of state-of-the-art methods and a complex classification scheme that allows for a better-informed choice of fusion strategies for biological applications, as well as an investigation of novel approaches. Kumar et al. [159] proposed a fusion technique that combines information or characteristics from many modalities to produce improved images. This is consistent with intermediate fusion, which combines the properties of modalities after they are initially processed independently. Manifold learning-based dimensionality reduction [160] was introduced in an intermediate multimodal fusion network. Using 1D-CNN and 2D-CNN, the multimodal network creates independent representations from biometric inputs and facial landmarks. A multi-stage intermediate fusion method for classifying NSCLC [161] subtypes from CT and PET images are presented. The proposed method employs voxel-wise fusion to use complementary information across different abstraction levels while maintaining spatial correlations, integrating the two modalities at different phases of feature extraction.

5.3 Late Fusion (Decision-Level Fusion)

Individual models produce modality-specific predictions or embeddings, which are combined to reach a final decision. Using the late fusion technique [162] clinical information and CT images are combined to diagnose chronic kidney disease (CKD). The model achieves accuracy comparable to that of a human expert and shows promise as a trustworthy diagnostic tool for medical practitioners by independently analyzing modalities and combining them at the decision level. To enhance the detection and diagnosis of heart disorders, Ref. [163] introduces a model that integrates 12-lead ECG imaging data and EHR data. By addressing the drawbacks of using electrocardiogram (ECG) data alone, which might not be definitive in predicting cardiac normality and abnormality, the proposed late fusion strategy aims to attain improved accuracy in the classification of cardiac diseases compared to unimodal approaches. FH-MMA [164] combines relational, sequential, and image information at the decision level using late fusion, a privacy-preserving, multimodal analytics framework. The diagnostic accuracy, computational efficiency, and scalability of FH-MMA can be significantly increased using FLE and attention methods to investigate and contrast multimodal fusion approaches, with an emphasis on late fusion [165] in the context of cancer research. The late fusion technique is used. It continuously beats unimodal models by combining data from several modalities, demonstrating the potential of multimodal fusion to enhance patient outcome forecasts. A thorough comparison of data fusion techniques in smart healthcare [166] highlights the importance of seamless integration and analysis of diverse healthcare data. They used three types of fusion: early, intermediate, and late.

Integrate early, intermediate, and late fusion techniques to exploit their advantages. The VAEs [167] provide a common latent space in which both structured and image data are represented. The squeeze-and-Excitation block (SE-Block) and Convolutional Block Attention Module (CBAM) attention processes ensure that, during fusion, the most essential aspects of both modalities are highlighted. Transformer encoders enhance the structured data representation, making it easier to integrate with picture data. The MDL-Net [168] integrates the disease-induced region-aware learning (DRL) and multi-fusion joint learning (MJL) modules to improve the early determination of brain areas linked to Alzheimer’s disease (AD) and provide an accurate and comprehensible diagnosis. The MDL-Net was created to address interpretability problems in multimodal fusion and inherent diversity among multimodal neuroimages. Improve feature representation using the latent space and local and global learning. Golcha et al. [169] introduced an enhanced health monitoring system that combines feature-level fusion and decision-level fusion to overcome the drawbacks of single-modal systems that improves patient quality of life, reduces healthcare expenses, and transforms the management of chronic diseases. A novel hybrid pre-processing method [170] called Laplacian Filter + Discrete Fourier Transform (LF + DFT) was proposed to improve medical images before fusion. This method efficiently detects significant discontinuities and adjusts image frequencies from low to high by emphasizing important details, capturing minute details, and sharpening edge details. To integrate multimodal EHR data, a hybrid fusion [171] is used, which combines early fusion, joint fusion (intermediate fusion), and late fusion. This method handles the heterogeneous nature of EHR data and enhances clinical risk prediction by utilizing the advantages of various fusion procedures.

It takes advantage of sophisticated models to implicitly merge modalities, such as transformers and graph neural networks. To overcome the difficulties associated with multimodal fusion in healthcare, the proposed model-based fusion architecture [172] uses multiplexed graphs and graph neural networks (GNNs). The proposed system provides state-of-the-art performance on benchmark and clinical datasets by adaptively modeling complicated interactions between modalities via embedding the fusion process within the GNN architecture. A Neural Architecture Search (NAS) [173] the method is presented in the AutoFM framework to automatically create the best model architectures for multimodal EHR data. This method demonstrates how model-based fusion can improve healthcare services through deep learning while reducing the dependency on manually created models.

Selecting an appropriate fusion strategy often depends on the complexity, dimensionality, and correlation structure of the modalities involved. In clinical practice, late fusion is common due to simpler model interpretability and the feasibility of using existing modality-specific analysis pipelines.

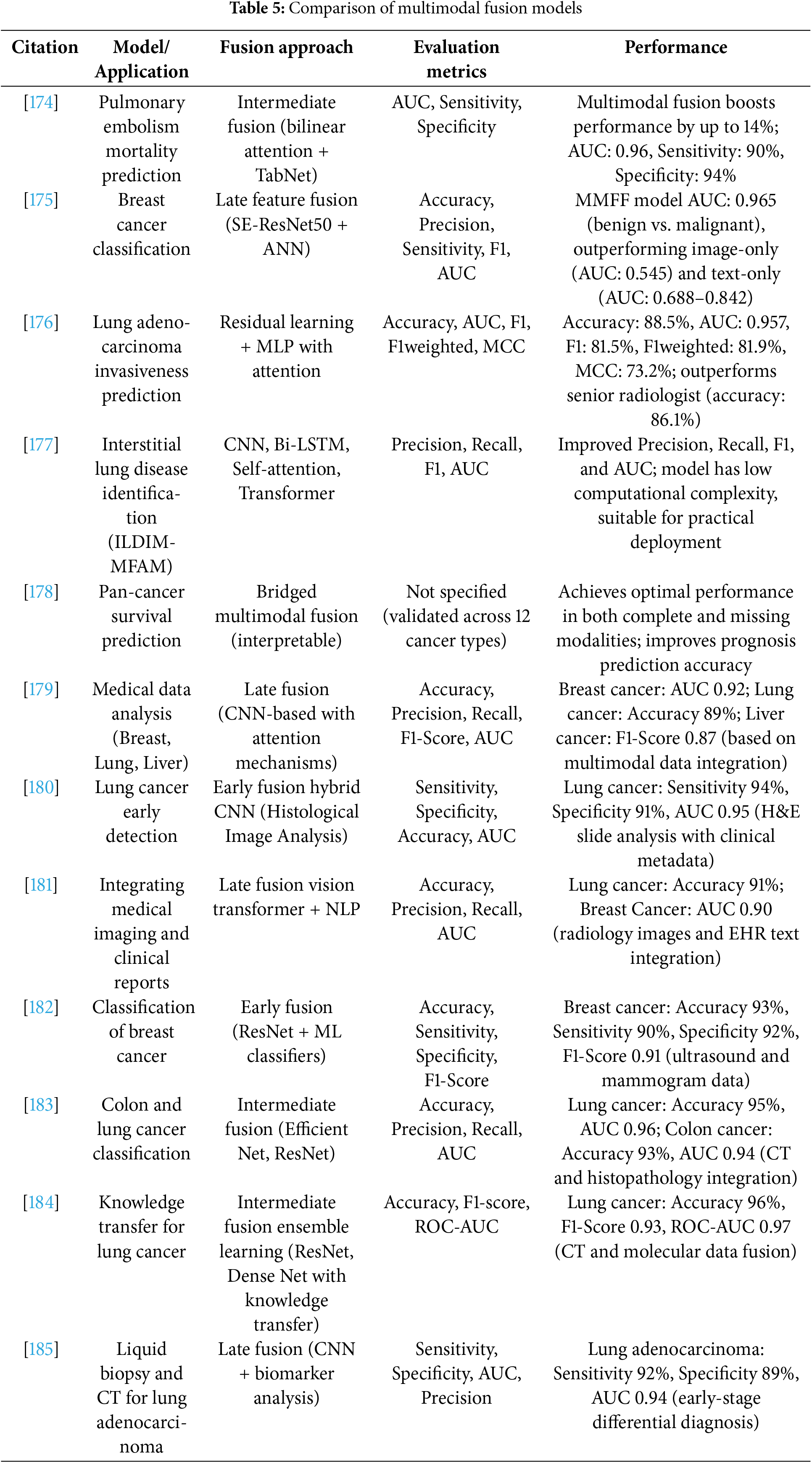

5.6 A Comparison of Recent Modern Multimodal Models (2023–2025)

Several multimodal designs are state-of-the-art (SOTA) in various medical diagnostic sectors. Table 5 presents a carefully selected collection of clinical use cases from recent studies that illustrate the real-world significance of multimodal deep learning in healthcare. These examples include various activities across diseases, such as breast cancer, lung cancer, and interstitial lung disease, including early detection, categorization, and survival prediction. Each case illustrates the increasing influence of multimodal AI in real-world medical conditions by providing the fusion method employed, performance data, and reported results. Cahan et al. [174] developed an intermediate fusion model for pulmonary embolism prognosis using TabNet and bilinear attention. It achieved an AUC of 0.96 with 90% sensitivity and 94% specificity, demonstrating performance improvement by integrating structured and picture data. For breast cancer classification, Hussain et al.’s late fusion SE-ResNet50 + ANN framework achieved an AUC of 0.965, which was significantly higher than that of unimodal baselines [175]. Similarly, Huang et al. outperformed professional radiologists with an accuracy of 88.5% and an AUC of 0.957 in their demonstration of a residual learning and Multilayer Perceptron (MLP) attention model for lung cancer invasiveness prediction [176]. The ILDIM-MFAM model for interstitial lung disease diagnosis was created by Zhong et al. [177] by combining CNNs, Bi-LSTMs, and Transformer blocks to improve the F1-score and AUC while preserving a low computational cost appropriate for clinical use. To predict pan-cancer survival, Gao et al. [178] presented an interpretable bridging fusion model that was successful in missing modality settings and validated across 12 cancer types. To further explore the adaptability of fusion, Kumar and Sharma [179] presented a late fusion CNN framework tailored for the study of liver, lung, and breast cancer, with respective AUCs of 0.92, 89% accuracy, and F1-scores of 0.87. Noaman et al. [180] applied early hybrid CNN fusion to histological pictures and clinical metadata for early lung cancer detection and obtained a sensitivity of 94%, specificity of 91%, and AUC of 0.95. In a related field, Yao et al. [181] used a late fusion model that included Vision Transformers and Natural Language Processing (NLP) modules to merge radiological imagery with EHR text. They achieved an AUC of 0.90 for breast cancer and an accuracy of 91% for lung cancer. Atrey et al. [182] achieved 93% accuracy, 90% sensitivity, and 92% specificity for breast cancer using residual neural networks and conventional machine learning classifiers in conjunction with early fusion between ultrasound and mammography data. To classify lung and colon cancers, Uddin et al. [183] developed an intermediate fusion technique based on EfficientNet and ResNet, which produced AUC scores of 0.94 and 0.96, respectively. Furthermore, Sharma et al. [184] combined ResNet and DenseNet to create a knowledge transfer-driven ensemble framework that achieved 96% accuracy and an AUC of 0.97 for the delineation of lung cancer. Lastly, Zhang et al. [185] achieved 92% sensitivity and an AUC of 0.94 for the identification of early-stage lung cancer by combining CNN-based CT imaging with liquid biopsy data in a late fusion scheme.

Collectively, these models demonstrate the rapid progress and efficacy of multimodal fusion in improving diagnostic accuracy. They demonstrated that the fusion approach (early, intermediate, or late), integration depth, and application of sophisticated architectures, such as transformers and attention mechanisms, significantly impact performance benefits. Thus, these studies indicate a substantial advancement toward high-performance, interpretable, and clinically feasible AI solutions for medical diagnostics.

Healthcare is transforming due to deep learning (DL), which enables more precise diagnosis, focused therapies, and improved patient outcomes. DL’s use extends beyond algorithmic implementation, requiring careful consideration of its potential therapeutic applications. Fig. 3 shows a variety of healthcare use cases influenced by DL techniques.

Figure 3: Healthcare application

6.1 Precision Medicine and Patient Stratification

The ability to stratify patients into clinically significant subgroups using DL frameworks to integrate multi-omic data has sped up advancements in precision medicine. For instance, tumors can be easily classified into molecularly different groups when radiological imaging and transcriptome profiles are combined. Prognosis, treatment choice, and therapeutic results are all significantly impacted by this classification.

DL-based integrative analysis of gene expression data and imaging-derived characteristics identified new biomarkers for early disease detection. In addition to being helpful for diagnosis, these biomarkers provide insight into the pathophysiology of diseases and may help direct the creation of focused treatments. However, the variety of patient data and the requirement for sizable, annotated datasets make it difficult to use these findings in clinical practice.

6.1.2 Drug Response Prediction

Multimodal DL models use data from gene expression, tumor imaging, and treatment histories to infer drug responses specific to individual patients. This method lessens the need for trial and testing treatment strategies, thereby improving the personalization of therapy. However, it is unclear whether these models can be applied to various populations and healthcare systems, emphasizing the necessity of cross-cohort validation.

6.2 Enhanced Disease Diagnosis and Prognosis

The integration and validation of data from several modalities have greatly enhanced the identification of diseases through DL, resulting in more trustworthy clinical judgments.

DL models can improve diagnostic specificity by integrating radiological imaging and histological data, especially in neurology, cardiovascular disease, and oncology. A comprehensive approach to patient health is crucial, as demonstrated by the superior performance of these cross-modal systems compared with conventional single-modality models. However, issues such as data standardization and alignment continue to exist.

6.2.2 Computer-Aided Diagnosis Systems

Multimodal computer-aided diagnosis (CAD) systems, which integrate imaging, omics data, and patient history, have demonstrated efficacy in complicated diagnostic tasks, including chronic condition management and Alzheimer’s disease progression tracking. Although these technologies are useful resources for clinical workflows, their incorporation into practical environments requires thorough validation and clinician assistance.

6.3 Personalized Healthcare and Clinical Decision Support

Clinical workflows are changing because of DL-powered systems’ customized decision support tools, which closely match unique patient profiles.

Advanced DL models can use genetic data, medical imaging, EHR metadata, and blood test results to evaluate the risk of critical health events (such as myocardial infarction or stroke). By proactively directing healthcare interventions, these risk classification technologies can lower morbidity and mortality. Nonetheless, the interpretability and openness of these models remain significant barriers to clinical implementation.

Analyzing treatment outcomes from patients with comparable multimodal profiles allows DL to be used for personalized therapeutic planning, which recommends the optimal plan of action. Although this approach improves treatment precision, it requires thorough model training on various representative datasets to prevent biases and guarantee impartiality in decision-making.

7 Challenges in Multimodal Deep Learning

Multimodal deep learning has transformative potential in industries such as healthcare, but its broad and successful implementation is hampered by several intricate issues. Data heterogeneity and quality, computational complexity, cross-modal alignment, interpretability and explainability, and privacy and ethical issues are some of the interconnected domains that these difficulties encompass, as shown in Fig. 4. Each of these problems affects the clinical applicability, generalisability, and dependability of the produced systems, in addition to making model development more difficult.

Figure 4: Multimodal challenges

7.1 Data Heterogeneity and Quality

Imaging modalities, electronic health records, and genomic sequences are only a few of the multiple sources of medical data, each with its own format, resolution, and completeness levels. For instance, confounding variability may be introduced by varied resolution in radiological imaging or batch effects in high-throughput sequencing. These discrepancies compromise the repeatability of multimodal models and increase the difficulty of data integration. This problem becomes more difficult in the absence of standard preprocessing techniques throughout organizations. In addition to technical fixes such as domain adaptation and data harmonization, overcoming these obstacles calls for cooperative efforts to create cross-institutional data standards.

An extensive amount of computational resources is available to integrate and analyze multimodal information, which frequently includes high-dimensional, large-scale data such as gigapixel histopathology images or whole-genome sequencing. When models must be trained jointly across modalities, complexity increases, which imposes a burden on processing and memory capacities. Although distributed learning frameworks and technology advancements like GPU/TPU clusters provide some respite, the discipline still lacks commonly used techniques for scalable, resource-efficient multimodal learning. The gap between research and clinical translation may grow as a result of this obstacle for organizations with inadequate computational infrastructure.

The accurate alignment of many kinds of data is one of the most technically challenging parts of multimodal learning. For example, sophisticated modeling techniques are required to align temporal EHR data with static genetic markers or transfer pixel-level information from histopathology slides to corresponding radiographic pictures. Noise from misalignment can reduce feature fusion’s efficacy and produce less-than-ideal predictions. Although robust, generalizable ways are still being investigated, recent research has explored solutions, including contrastive learning and attention mechanisms, to improve alignment.

7.4 Interpretability and Explainability

Deep learning models are frequently criticized for their lack of transparency despite their predictive capacity. This is a critical issue in the healthcare industry because clinical decision-making necessitates accountability. Clinicians’ trust and uptake of AI solutions are hampered by black-box models. The development of explainable AI (XAI) techniques, such as saliency maps, attention visualizations, and counterfactual reasoning, is therefore clinically necessary rather than just technically necessary. More reliable and context-aware XAI approaches are required because many current interpretability techniques provide little insight into multimodal interactions and frequently falter under rigorous validation.

7.5 Privacy and Ethical Considerations

The presence of sensitive personal information in multimodal healthcare data raises serious ethical and legal issues. Although adherence to regulations like the GDPR (EU) and HIPAA (US) is crucial, it complicates data sharing and model training. There are encouraging opportunities to develop ethical models using emerging privacy-preserving methods, such as safe multi-party computation, federated learning, and differential privacy. Nevertheless, these approaches provide new difficulties, such as decreased performance, communication overhead, and problems with model convergence. Utility and privacy balance remains a hot topic of ethical and technical discussion.

8 Strategies to Overcome Challenges

To successfully traverse the complexity of multimodal biological data integration, interdisciplinary cooperation, regulatory adaptability, and ongoing technical development are essential. The following tactics offer a way to overcome important constraints, with a focus on not only execution but also the justification and anticipated results of each strategy.

Effective integration of different datasets necessitates stringent harmonization methods. Different platforms or institutions’ approaches to data collection can seriously impede downstream analysis.

• Protocol standardization is essential to guaranteeing dataset comparability. Preprocessing pipelines can reduce sources of bias or technological artifacts by incorporating domain expertise.

• Advanced normalization methods, like ComBat, are very useful in omics research to address batch effects, which are systematic non-biological fluctuations that can mask real biological signals if left unchecked. These techniques improve the generalisability of the model and the reliability of the data.

8.2 Efficient Model Architectures

Model scalability becomes a critical issue when biomedical datasets increase in size and complexity.

• Techniques for compressing models, pruning, quantization, and knowledge distillation allow deep learning models to be implemented in contexts with limited resources without suffering appreciable performance degradation. These techniques also improve energy efficiency and model interpretability.

• Distributed and parallel training Architecture enables effective management of huge datasets. These systems provide iterative experimentation and hyperparameter adjustment that are frequently not feasible in single-machine situations, going beyond simple computing acceleration.

8.3 Robust Feature Alignment and Fusion

Accurate spatial and semantic alignment is essential for the successful integration of multimodal data.

• Image registration algorithms, such as ANTs and elastix, are essential for lining up anatomical features in various imaging modalities. For tasks such as morphological comparison and lesion identification, high-fidelity alignment maintains the essential spatial relations.

• Attention-based fusion methods dynamically determine the relative significance of each modality, allowing for more task-specific and sophisticated integration. In clinical settings, when not all data modalities have the same diagnostic weight, this is advantageous.

8.4 Explainable AI (XAI) Methods

The interpretability of models is essential for the transparency and trustworthiness of AI-driven healthcare decision-making.

• Tools for post hoc explanations, such as saliency maps, CAMs, and LIME, help reveal which features influence model predictions. Allows clinicians to gain insights into the reasoning process.

• Architectures with inherent interpretability, Attention-based models, for example, include transparency into the actual learning process, thereby promoting greater confidence and making regulatory adoption easier.

8.5 Privacy-Preserving Techniques

Powerful privacy-preserving procedures are required when handling sensitive medical data to adhere to legal and ethical requirements.