Submission Deadline: 31 July 2025 (closed)

Prof. Simon Fong

Email: ccfong@umac.mo

Affiliation: Department of Computer and Information Science, University of Macau, Macau, China

Research Interests: Data mining, Deep learning, AI for medical diagnosis

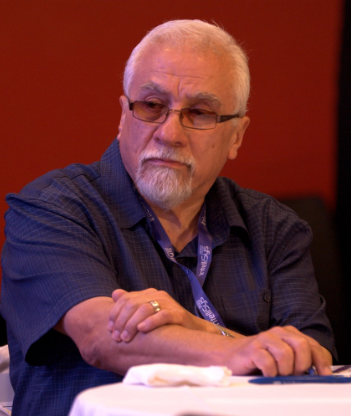

Prof. Sabah Mohammed

Email: sabah.mohammed@lakeheadu.ca

Affiliation: Department of Computer Science, Lakehead University, Thunder Bay, Canada

Research Interests: Artificial Intelligence, Generative AI, Machine Learning, Graph-Based Data Analytics, Translational Medical Informatics

Prof. Tengyue Li

Email: yb97475@um.edu.mo

Affiliation: Faculty of Information, North China University of Technology, Beijing, China

Research Interests: Artificial Intelligence, Deep Learning, Multi-modal Algorithms, Tumor Analytics

The next frontier in medical diagnostics lies in combining multi-modal deep learning with big data analytics over diverse sources such as radiology images, histological data, and multi-omic datasets (for example, transcriptomic data). This Special Issue aims to explore how these techniques can improve diagnostic accuracy, streamline data processing, and enable personalized medicine. Fusing data from different medical formats creates significant opportunities to improve patient outcomes through comprehensive analysis and cross-referencing of biological and clinical information. However, challenges such as data heterogeneity, computational complexity, and the need to establish robust cross-modal correlations must be addressed to unlock the full potential of multi-modal deep learning.

We invite submissions that focus on innovative solutions and novel frameworks in multi-modal data integration, advanced deep learning models, and their applications in real-world healthcare scenarios. The issue will cover topics such as the utilization of multi-omic data for precision medicine, the integration of radiology and histological data for accurate disease diagnosis, and AI-based models for personalized healthcare. By advancing the field of multi-modal deep learning, this Special Issue aims to shape the future of medical diagnostics and contribute to better clinical outcomes worldwide.

The topics of interest for this Special Issue include, but are not limited to:

· Multi-modal data integration techniques

· Machine learning and deep learning for radiology and histological data integration

· AI applications in precision medicine and multi-omic data

· Handling data heterogeneity in medical diagnostics

· Big data management and computational approaches in healthcare

· Personalized healthcare through multi-modal machine and deep learning

· Benchmarking and evaluation of machine learning and deep learning models

· Innovations in multi-omic data analysis for patient outcomes

· Ethical considerations and data privacy in healthcare AI

· Advancements in AI, machine learning, and deep learning for healthcare

· Integration and visualization of heterogeneous medical data

· Emerging technologies in medical imaging and diagnostics

· Machine learning and deep learning architectures for medical data analysis

· Transfer learning and domain adaptation in medical imaging

· Explainable AI, automated feature extraction, and data augmentation

· Neural network optimization, robustness, and generalization

· Comparison of machine learning and deep learning with traditional methods

· Challenges in deploying machine learning and deep learning models in clinical settings
