Open Access

Open Access

REVIEW

Implementation of Human-AI Interaction in Reinforcement Learning: Literature Review and Case Studies

1 Department of Mechanical Engineering, Iowa Technology Institute, The University of Iowa, Iowa City, IA 52242, USA

2 Talus Renewables, Inc., Austin, TX 78754, USA

3 Department of Psychological and Brain Sciences, Iowa Neuroscience Institute, The University of Iowa, Iowa City, IA 52242, USA

4 Department of Chemical and Biochemical Engineering, Iowa Technology Institute, The University of Iowa, Iowa City, IA 52242, USA

* Corresponding Author: Shaoping Xiao. Email:

(This article belongs to the Special Issue: Advances in Object Detection: Methods and Applications)

Computers, Materials & Continua 2026, 86(2), 1-62. https://doi.org/10.32604/cmc.2025.072146

Received 20 August 2025; Accepted 25 October 2025; Issue published 09 December 2025

Abstract

The integration of human factors into artificial intelligence (AI) systems has emerged as a critical research frontier, particularly in reinforcement learning (RL), where human-AI interaction (HAII) presents both opportunities and challenges. As RL continues to demonstrate remarkable success in model-free and partially observable environments, its real-world deployment increasingly requires effective collaboration with human operators and stakeholders. This article systematically examines HAII techniques in RL through both theoretical analysis and practical case studies. We establish a conceptual framework built upon three fundamental pillars of effective human-AI collaboration: computational trust modeling, system usability, and decision understandability. Our comprehensive review organizes HAII methods into five key categories: (1) learning from human feedback, including various shaping approaches; (2) learning from human demonstration through inverse RL and imitation learning; (3) shared autonomy architectures for dynamic control allocation; (4) human-in-the-loop querying strategies for active learning; and (5) explainable RL techniques for interpretable policy generation. Recent state-of-the-art works are critically reviewed, with particular emphasis on advances incorporating large language models in human-AI interaction research. To illustrate some concepts, we present three detailed case studies: an empirical trust model for farmers adopting AI-driven agricultural management systems, the implementation of ethical constraints in robotic motion planning through human-guided RL, and an experimental investigation of human trust dynamics using a multi-armed bandit paradigm. These applications demonstrate how HAII principles can enhance RL systems’ practical utility while bridging the gap between theoretical RL and real-world human-centered applications, ultimately contributing to more deployable and socially beneficial intelligent systems.Keywords

In the era of artificial intelligence (AI), its technologies have been increasingly applied across a wide range of science and engineering domains, including cyber-physical systems (CPS), materials science, hydrology, agriculture, and manufacturing [1–5]. This broad integration is largely driven by the pursuit of greater efficiency in prediction and recommendation, leveraging data-driven insights to solve complex problems. A key research goal within this context is the development of AI-driven autonomous systems capable of operating independently and making complex decisions without direct human supervision. These systems hold the potential to revolutionize industries by enabling faster responses, reducing human errors, and maintaining continuous operation in dynamic or hazardous environments [6].

Among the various AI approaches, reinforcement learning (RL) stands out as one of the most advanced and influential techniques for enabling autonomy [7]. RL empowers systems to learn complex behavior patterns through trial-and-error interactions with their environments. Unlike supervised learning, which relies on labeled data, RL is driven by reward signals that guide an intelligent agent to take actions aimed at maximizing long-term cumulative rewards. Through this iterative learning process, the agent gradually develops strategies, referred to as policies in the RL framework, to inform which actions to take under various environmental conditions.

These learned policies function as decision-making blueprints, allowing the agent to select optimal actions as circumstances change. As environments evolve or increase in complexity, RL agents continuously refine their policies to improve performance. This makes RL particularly well-suited for real-time, adaptive tasks such as autonomous driving, robotic control, and dynamic resource management [8–11]. Furthermore, RL’s capacity to manage sequential decisions under uncertainty positions it as a powerful tool for applications where long-term consequences must be carefully considered.

However, while the capabilities of autonomous systems continue to grow, they raise important ethical and philosophical questions [12]. Can machines be entrusted to make decisions solely with moral implications? Should AI systems act autonomously in scenarios involving human welfare, justice, or environmental impact? These concerns are particularly pressing given that intelligent agents, whether algorithms, robots, or other computational systems, are neither legally nor morally responsible. Unlike humans, they do not possess consciousness, intent, or empathy, and thus cannot be held accountable in traditional ways. Consequently, the responsibility for their behaviors must ultimately rest with the humans who design, develop, and deploy them. Addressing these questions is critical to ensuring that AI technologies are not only technically effective but also ethically grounded and socially responsible.

Furthermore, these ethical concerns become even more pronounced when AI-based systems operate autonomously or semi-autonomously, shifting decision-making authority from humans to machines. This transformation reshapes human-technology interaction and introduces new ethical challenges, including questions of distributive justice, risks of discrimination and exclusion, and transparency issues stemming from the perceived opacity and unpredictability of AI systems [13,14]. Building on these shifts in autonomy and cognitive capabilities, recent research highlights trust, usability, and understandability as three fundamental pillars of human-AI interaction (HAII), offering potential pathways to address these ethical challenges [15]. These aspects are closely tied to the aforementioned ethical considerations and play a pivotal role in shaping how people perceive, adopt, and collaborate with AI systems.

Trust is widely regarded as a cornerstone for effective HAII and the successful adoption of AI systems. It can be broadly defined as the willingness of individuals to accept and rely on AI-generated suggestions or decisions, and to engage in task-sharing or information exchange with these technologies. High or appropriately calibrated trust is essential: when trust is too low, users may avoid using AI systems even when those systems offer substantial benefits. Conversely, excessive or misplaced trust may lead to over-reliance and critical judgment errors. In many high-stakes domains such as healthcare, finance, autonomous driving, and customer service, distrust remains a major barrier to adoption. This often stems from the so-called “black-box” nature of AI models, which makes their internal logic opaque and their behavior difficult to anticipate or validate. As a result, users may perceive AI decisions as arbitrary or unreliable, undermining confidence in the system. On the other hand, establishing and maintaining trust can significantly accelerate AI adoption and integration. When users perceive AI systems as transparent, reliable, and aligned with their goals, they are more likely to engage with and benefit from these technologies. Ultimately, the full societal value of AI can only be realized when individuals, organizations, and communities trust these systems enough to meaningfully interact with and atop them [16].

The second pillar of HAII is usability. Even the most intelligent AI system may fail to deliver its value if it is not usable or if humans find it difficult to understand, interact with, or incorporate it into their workflow. In traditional human–machine interaction research, usability has often been assessed through interface design and task performance metrics such as efficiency, accuracy, and error rates [17]. However, in collaborative human–AI environments, the concept of usability extends well beyond interface-level interactions. It encompasses the AI system’s ability to adapt to the human partner, responding dynamically to the users’ needs, preferences, goals, and context. An AI system that can adjust its behavior, level of autonomy, or recommendations in real time contributes more effectively to human performance and decision-making. This perspective represents a shift from viewing AI as a tool to reviewing it as a teammate, a symbiotic partner working toward shared goals. Importantly, an adaptive AI that is trainable or context-aware can better align its assistance with the user’s workflow. For instance, by learning from a human’s decision-making patterns, the system can provide more timely and context-relevant support. Such alignment has been shown to not only improve team efficiency and effectiveness, but also to enhance the human user’s sense of agency and competence [18]. Recent studies confirm that working with an adaptive AI collaborator, as opposed to a static assistant, leads to higher task success and greater user satisfaction [19].

The third crucial facet of HAII is understandability, often referred to as explainability or XAI (explainable AI). This concept is deeply intertwined with the other pillars of trust and usability, as it directly influences a user’s ability to comprehend, evaluate, and confidently act on AI-generated outputs. At its core, explainability refers to an AI system’s capacity to communicate the rationale behind its decision in ways that are interpretable and meaningful to humans. This capability is particularly important given a persistent trade-off in machine learning (ML): as models become more accurate and powerful, especially in the case of deep learning (DL), they also tend to become more opaque and complex. As a result, it is difficult to discern how specific outcomes are predicted and recommended [20]. When users and stakeholders can understand the underlying reasoning behind an AI’s recommendations or actions, they are more likely to trust the system and are better equipped to verify, question, or override its decisions if necessary [21]. Indeed, one of the primary motivations for XAI is the recognition that, without such clarity, users find it difficult (if not impossible) to fully trust AI, especially given its potential for making unpredictable or inexplicable errors. Explainability thus serves as a mechanism for building confidence and ensuring accountability, helping to demystify AI processes and reinforce human oversight.

In the following section, this article will begin by reviewing pioneering works in the domain of HAII, with particular emphasis on the aforementioned three foundational pillars: trust, usability, and understandability. These elements have been widely recognized as critical to fostering effective, ethical, and sustainable collaboration between humans and AI systems. By synthesizing key contributions across disciplines, this review aims to highlight how each of these dimensions has evolved and identify existing gaps and emerging challenges in current literature. Subsequently, the focus will shift to HAII in the context of RL, a growing area of interest due to its capacity to enable adaptive, autonomous behavior. Various approaches, ranging from human-in-the-loop training and reward shaping to imitation learning, will be reviewed to explore how RL-based systems can enhance or hinder the human-AI relationship. This section aims to bridge the conceptual foundations of trust, usability, and understandability with the technical mechanisms of RL. Finally, this article will present several recent contributions from the authors’ research group, which seeks to address identified limitations and push the boundaries of current HAII frameworks. These include novel algorithmic developments to incorporate ethical constraints and trust awareness. Additionally, an experiment is designed to investigate the human trust evolution in AI systems. Collectively, these contributions aim to advance the theoretical and practical understanding of how to build AI systems that are not only intelligent but also aligned with human values, expectations, and needs.

The novel contributions of this paper include:

• Providing a comprehensive overview of foundational works on trust, usability, and understandability in HAII.

• Categorizing and reviewing five major RL-based HAII approaches, including state-of-the-art developments reported in recent publications.

• Presenting new contributions from the authors’ research group, focusing on (i) ethical constraint integration in RL, (ii) trust-aware algorithmic design, and (iii) experimental investigation of human trust evolution in AI systems.

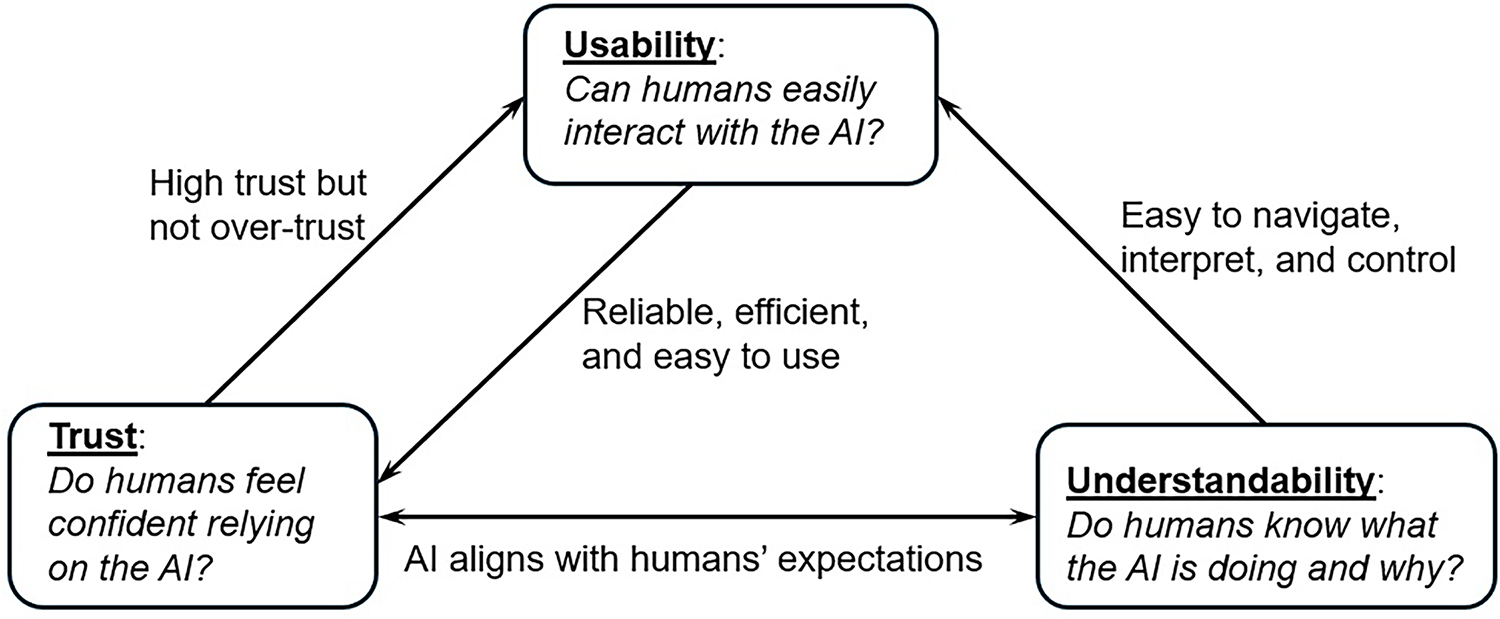

In HAII, trust, usability, and understandability form three foundational pillars that collectively determine the effectiveness and acceptance of AI systems, as illustrated in Fig. 1. Understandability enables users to grasp how and why an AI system makes decisions, fostering accurate mental models and informed oversight. Usability ensures that users can interact with the system efficiently and intuitively, minimizing friction and cognitive load. Trust governs whether users feel confident relying on the AI, influencing their willingness to adopt and engage with it. These three pillars are deeply interconnected: improvements in understandability and usability tend to promote trust, while trust can shape how users perceive the system’s usability and explanations.

Figure 1: Three foundational pillars in HAII

Previous research suggests that trust in AI emerges from a combination of human, contextual, and technology-based factors [16]. Human characteristics (e.g., a user’s general propensity to trust technology) and contextual variables (e.g., high-stakes vs. low-stakes scenarios) significantly influence the formation of trust. However, technology-based factors are often unique to AI systems. Key attributes such as the accuracy and reliability of an AI’s predictions and recommendations, its predictability and consistency over time, and its transparency and explainability in decision-making are consistently identified as critical determinants of perceived trustworthiness [22]. In particular, because modern AI, especially ML models, can exhibit unexpected or opaque behaviors, unlike knowledge-based systems, providing users with insight into how the AI works and clearly communicating its uncertainties and limitations becomes essential. For instance, users are more likely to trust an AI agent that can clearly signal its confidence level or justify its recommendations, rather than one that delivers opaque decisions without context [23]. Recent work has therefore focused on integrating features such as confidence scores, explanations, uncertainty estimates, and trustworthiness cues to foster appropriately calibrated user trust.

A central theme in human-AI trust research is the notion of trust calibration, ensuring that users’ trust levels are aligned with the AI system’s actual capabilities and performance. Miscalibrated trust can manifest as either over-trust (excessive reliance, even when unwarranted) or under-trust (unwarranted skepticism, leading to under-use). Both outcomes are problematic: over-trust may lead users to follow incorrect AI suggestions, while under-trust may cause them to dismiss valuable guidance, undermining the AI’s benefits.

One recent behavioral study found that simply labeling advice as AI-generated led participants to over-rely on it, even when it contradicted their correct judgments or the available evidence [24]. This blind trust resulted in suboptimal or harmful decisions, highlighting how over-trust can degrade decision quality and negatively affect others. To mitigate this, researchers advocate for dynamic trust calibration strategies. One promising approach involves designing AI systems to be both interpretable and uncertainty-aware, enabling users to adjust their trust based on the system’s likelihood of error. For example, Okamura and Yamada [25] demonstrated a method of adaptive trust calibration, where the system monitored user reliance patterns and delivered targeted alerts or explanations when users appeared to be over- or under-trusting. This interaction helped users recalibrate trust in line with the system’s true performance. Overall, interactive transparency features can serve as important safeguards that promote critical engagement, preventing users from uncritically deferring to AI outputs.

Usability, a core pillar of Human-AI Interaction, refers to how effectively and efficiently users can interact with an AI system to accomplish their goals with minimal friction or cognitive strain. A growing body of empirical work highlights that adaptive AI systems, which personalize their behavior based on user actions or situational context, can significantly enhance usability by improving interaction quality, reducing user workload, and increasing task efficiency. These systems adjust their responses in real time, tailoring support to user needs, which leads to more intuitive and productive human-AI collaboration. For example, one controlled study found that domain experts using an AI decision aid with explainable visual feedback (such as heatmap explanations) achieved higher accuracy than those using a non-transparent, black-box AI, improving task success rates by approximately 5%–8% [26]. Another study shows that AI teammates capable of learning from user input and dynamically adapting their guidance can reduce decision time and cognitive load, thereby streamlining the interaction process [18]. Furthermore, adaptive interfaces that provide timely, customized feedback or modulate task difficulty help keep users in a performance-optimized zone, contributing to sustained usability and satisfaction [27]. These findings underscore the importance of designing AI systems that are not only intelligent but also easy to use, responsive, and context-aware, ensuring that users can interact with them seamlessly and effectively.

Designing human-centered AI (HCAI) offers a practical solution to addressing usability challenges in HAII by aligning AI system behavior with human needs, preferences, and limitations. HCAI design emphasizes building systems that account for the capabilities and constraints of both humans and AI. This approach directly enhances usability by making AI systems more intuitive, responsive, adaptive, and aligned with human-centered goals. The development of HCAI systems puts people first, supporting human values such as rights, justice, and dignity, while also promoting goals like self-efficacy, creativity, responsibility, and social connections [28,29]. A recent review highlights the breadth of HCAI research, identifying established clusters as well as emerging areas at the intersection of HAII and ethics [30]. In addition to proposing a new definition and conceptual framework of HCAI, the review outlines key challenges and future research directions. Furthermore, Bingley and colleagues conducted a qualitative study involving AI developers and users, revealing that current HCAI guidelines and frameworks need to place greater emphasis on addressing real human needs in everyday life [31].

Understandability, also referred to as explainability, is another fundamental pillar of HAII that enables users to interpret, evaluate, and make informed decisions based on AI outputs. Broadly, there are two complementary approaches to achieving understandability: (1) building interpretable models, and (2) generating post-hoc explanations for “black-box” models [21,32–35]. Interpretable models are inherently transparent; that is, users can directly inspect their internal logic. Examples include decision trees (where the decision path can be followed step-by-step), linear regression with a limited number of features (where coefficients indicate variable importance), and rule-based or knowledge-based systems [36–39]. These models offer intrinsic explainability, meaning that the model’s structure itself explains. However, a trade-off often exists: while these models are easier to interpret, they may lack the capacity to capture complex patterns in high-dimensional data, potentially compromising performance for transparency.

In contrast, many high-performing AI models, particularly deep neural networks (DNNs), are not inherently interpretable. For these “black-box” systems, post-hoc explanation techniques are used to enhance understandability without modifying the model’s internal structure. These techniques generate explanations after a decision is made, offering insights into the model’s reasoning process. Common post-hoc methods include: (1) generating feature importance scores, which identify which input variables most influenced the output [40]; (2) providing local explanations for individual predictions, such as with LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), which use simplified surrogate models or Shapley values to attribute importance [41,42]; (3) visualizing internal activations, for example with heatmaps that highlight the image regions most relevant to a vision model’s decision [43,44]; (4) generating natural language justifications, which explain the model’s outputs in human-readable text [45,46]; and (5) constructing counterfactual explanations, which illustrate how minimal changes to input features could have altered the model’s decision [47,48]. These techniques play a vital role in making complex AI systems more transparent and comprehensible, especially for non-technical users or in domains requiring accountability.

Moreover, explainability is increasingly recognized as a cornerstone of accountable and transparent AI, particularly in high-stakes domains such as healthcare, finance, and criminal justice. In these settings, users and regulators must be able to interrogate AI decisions, asking not only what decision was made, but why and how. For instance, if an AI system recommends a treatment or a bail decision, stakeholders must receive a satisfactory justification. Without such transparency, trust erodes, and systems may fail to meet ethical or legal requirements. Recent policy developments reflect this urgency. The European Union’s General Data Protection Regulation (GDPR) has been interpreted as granting individuals a “right to explanation” for algorithmic decisions, and the EU’s Ethics Guidelines for Trustworthy AI explicitly identify transparency and explainability as core principles [49,50]. These regulatory pressures have further accelerated research in XAI, emphasizing that understandability is not merely a desirable feature but is often a prerequisite for legal compliance, ethical alignment, and public acceptance [21].

3 Reinforcement Learning and Its Extensions

Learning from interaction is a foundational principle that underlies many theories of intelligence, both biological and artificial. RL formalizes this concept as a framework for goal-directed learning through sequential interactions between an agent and its environment [7]. In this framework, the agent learns to make decisions over time by receiving feedback in the form of rewards, aiming to discover policies that maximize cumulative returns.

This section introduces the mathematical foundations of RL, focusing on the formalism of Markov Decision Processes (MDPs). We then explore several key extensions that enhance RL’s expressiveness and applicability. In particular, we examine Partially Observable Markov Decision Processes (POMDPs), which address environments where the agent lacks full observability and must instead maintain and reason over belief states. We also discuss approaches for handling temporally complex or logically structured tasks by integrating temporal logic, automata-based task representations, and related formal methods. These extensions allow RL systems to operate effectively in settings characterized by long-term dependencies, safety constraints, and structured objectives beyond scalar reward signals, bringing RL closer to the demands of real-world decision-making under uncertainty.

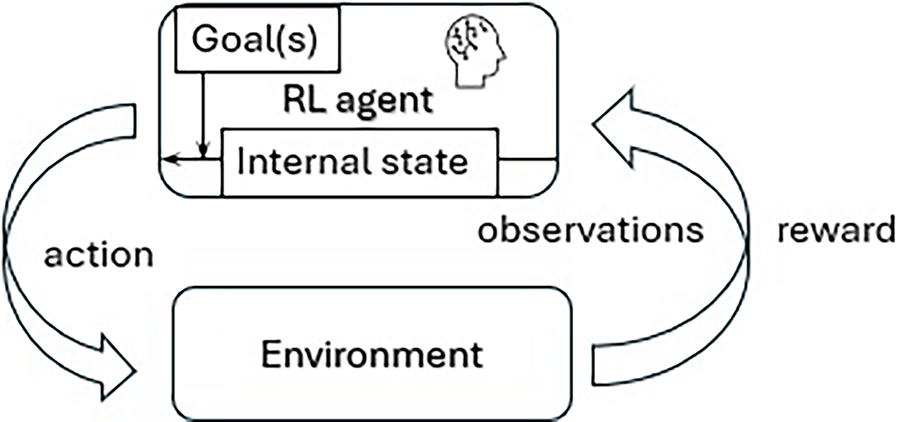

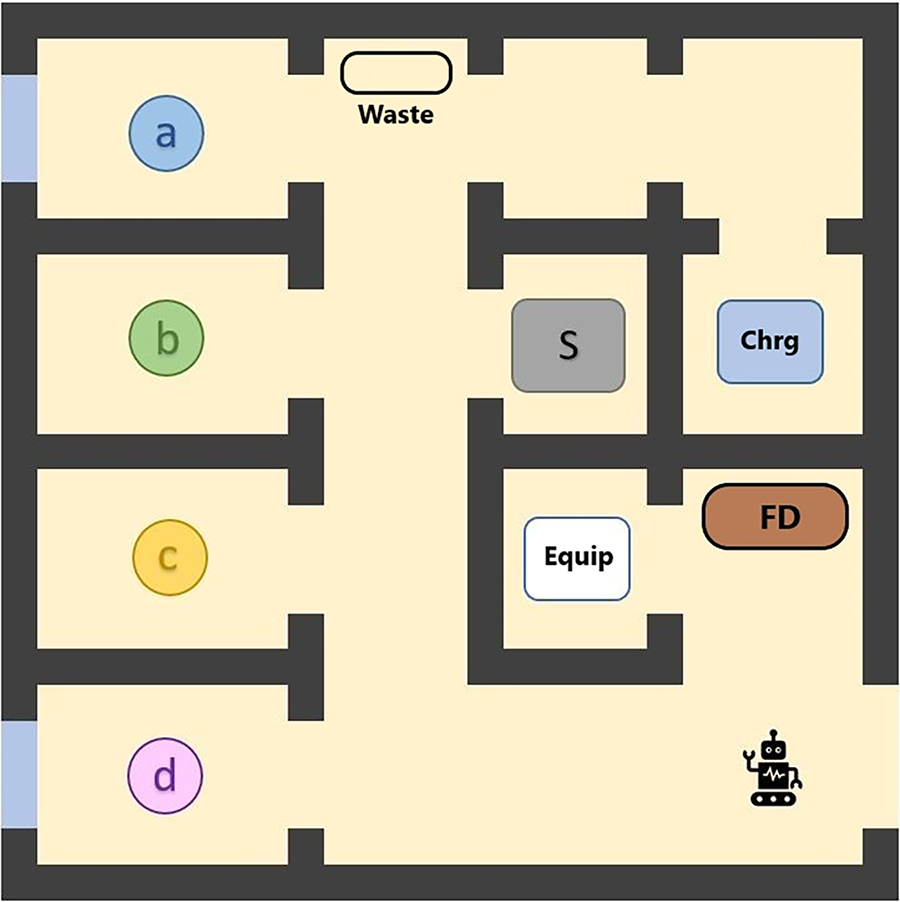

Fig. 2 illustrates the core structure of RL, which consists of five key components: the agent, the environment, the internal state, actions, and the reward function. The environment, whether physical or virtual, represents the external setting with which the agent interacts. Through this interaction, the agent receives observations that provide (possibly partial) information about the environment, which it uses to update its internal state, i.e., an internal representation or belief about the current configuration of the environment. Based on this internal state, the agent selects and executes a goal-oriented action that influences the environment. In response, the agent receives a reward signal, a scalar feedback value that evaluates the desirability of its recent behavior. This trial-and-error learning process, driven by continuous interaction and feedback, forms the foundation of RL.

Figure 2: RL agents learn from interaction with the environment

The agent’s objective is to learn a policy, a mapping from observations or states to actions, that maximizes the expected cumulative reward over time, often in the presence of uncertainty, partial observability, and delayed action effects. In conventional RL, it is typically assumed that the environment is fully observable, meaning the agent has direct access to the true state of the environment at each time step. Under this assumption, the environment-agent interaction is formally modeled using an MDP, which provides a mathematical framework for defining the states, actions, transition dynamics, and reward structure [51].

An MDP can be mathematically represented by a tuple

where S is a finite state space, A is a finite action space,

It shall be noted that a set of actions is available in each state, e.g.,

where

To numerically derive the optimal policy, two value functions are typically introduced: the state-value function

Q-learning is a value-based RL algorithm, originally introduced to estimate the Q-value function by exploring the state space of an MDP over discrete time steps [52]. It is considered a model-free method because it does not require prior knowledge of the transition probabilities (

where

Classical Q-learning assumes that both the state space (S) and action space (A) are small and discrete, enabling the use of a tabular representation to store and update Q-values for each state-action pair individually. However, in many practical applications, the state and/or action spaces are either continuous or high-dimensional, making tabular methods infeasible due to the large number of state-action pairs. To address this challenge, it is common to employ function approximation techniques, particularly DNNs, to generalize Q-value estimates across similar states and actions. A notable advancement in this direction is the deep Q-network (DQN) algorithm, which leverages DNNs to approximate the Q-function and enables RL in complex, high-dimensional environments [54].

DQN introduces the use of two DNNs to stabilize learning: an evaluation Q-network

Using the above equation, the agent records each transition as an experience tuple and stores it in a memory buffer (also known as experience replay memory) [55]. During training, the evaluation Q-network

Another major class of RL methods is policy-based methods, differing from value-based approaches, which directly optimize the policy without explicitly estimating value functions. Rather than learning a value function and then deriving a policy from it, these methods aim to update the policy parameters

where

3.2 Partially Observable Environments

Assuming a fully observable environment, MDPs have been extensively employed in robotics and autonomous systems. However, in many real-world scenarios, agents often operate with incomplete perceptual information, making it difficult to fully determine the underlying system state, i.e., the environment is partially observable. In such cases, a more general mathematical framework, POMDP, must be adopted [58].

To accommodate both static and dynamic events, a probabilistic-labeled POMDP (PL-POMDP, or simply POMDP hereafter) can be formally defined as a tuple

The set of state labels,

Over the past decade, researchers have developed a range of model-based algorithms to address POMDP problems. Many state-of-the-art solvers are capable of handling complex domains involving thousands of states [59]. One prominent class of these algorithms is Point-Based Value Iteration (PBVI), which approximates the solution to a POMDP by computing the value function over a selected, finite subset of the belief space [60,61]. Notably, a belief state represents a probability distribution over all possible system states. Following each state transition, the belief must be updated using the system’s transition and observation models. In essence, model-based approaches, including those based on value iteration, solve an equivalent MDP defined over the continuous belief space.

A commonly used alternative for handling POMDPs is the model-free approach, which does not require prior knowledge of state transition or observation probabilities. In this framework, the agent relies on a history of observations to determine actions, effectively mapping sequences of observations to decisions. To extend the DQN framework for partially observable environments, the Q function (i.e., Q-networks) is adapted to process a series of past observations rather than a single input. Specifically, let

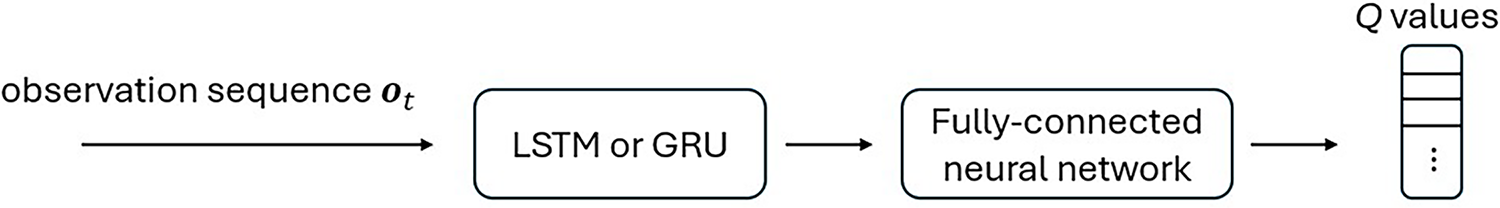

To better capture temporal dependencies in such settings, recurrent architectures like Long Short-Term Memory (LSTM) networks, Gated Recurrent Units (GRUs), or other recurrent neural network (RNN) variants are often integrated into the DQN architecture, as shown in Fig. 3 [8]. This leads to reformulated Q-networks: the evaluation network

Figure 3: RNN-based Q networks in DQN for POMDP problems

3.3 Temporal Logic and Finite-State Automaton

Linear temporal logic (LTL), a formalism within the domain of formal languages, is widely used to specify linear-time properties by describing how state labels evolve over sequential system executions [62]. LTL builds upon propositional logic, incorporating not only standard Boolean operators but also temporal ones. Two fundamental temporal operators are

here,

The meaning of LTL formulas is evaluated over infinite sequence, or words, denoted as

LTL formulas can be systematically transformed into equivalent representations in the form of automata, enabling automated verification techniques such as model checking to assess whether a system satisfies a specified temporal property [62]. One commonly used class of automata for this purpose is the Limit-Deterministic Generalized Büchi Automaton (LDGBA) [64]. LDGBAs are particularly advantageous because they strike a balance between the expressiveness of nondeterministic automata and the efficiency of deterministic ones. By converting an LTL formula into an LDGBA, it becomes possible to encode temporal tasks as automata that accept exactly the sequences (executions) satisfying the formula. This transformation enables checking whether all possible system executions satisfy the temporal specification, or identifying counterexamples when violations occur.

An LDGBA can be defined as a tuple

Given an infinite input word

3.4 Complex Tasks and Specifications in RL

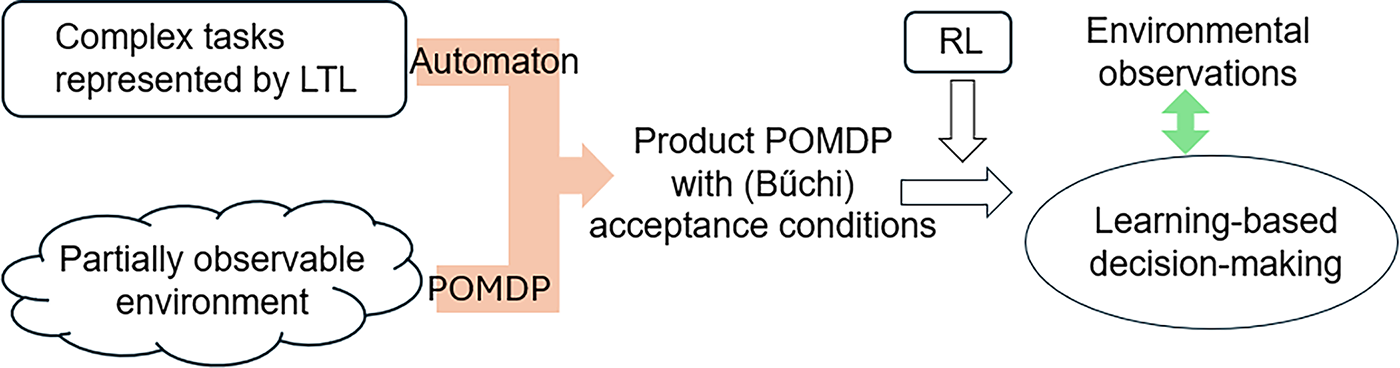

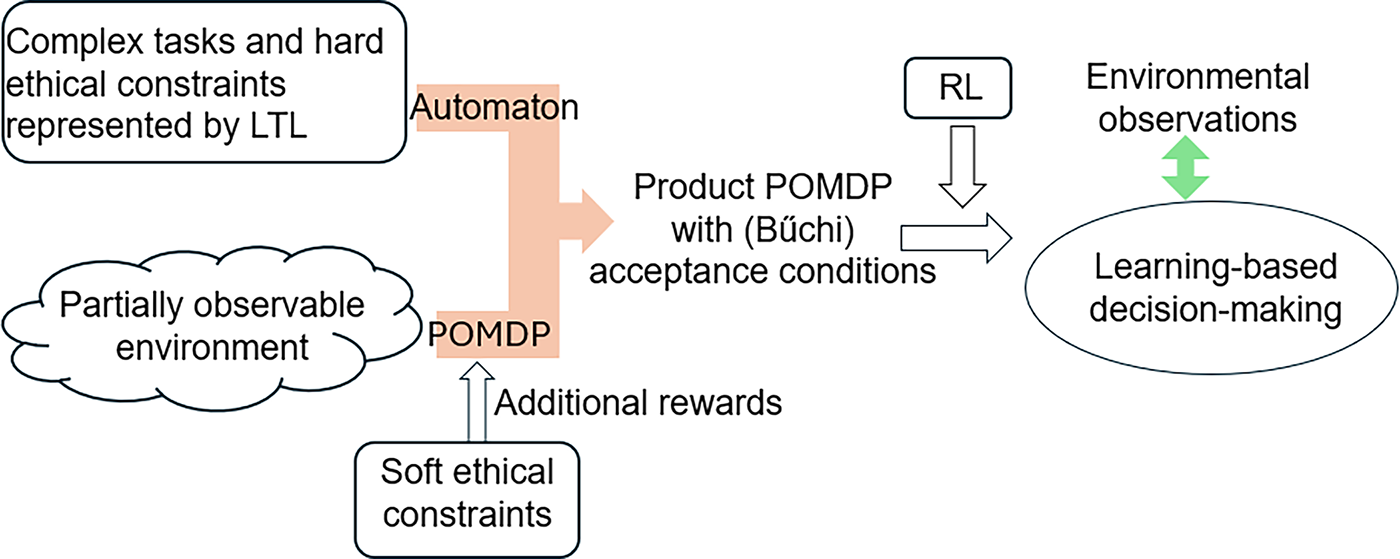

LDGBAs are frequently applied in RL and motion planning under temporal constraints by constructing the product of an MDP and an LDGBA. This product formulation enables the seamless incorporation of logic specifications into the decision-making processes for complex tasks [66–68]. Similarly, methods have been extended to construct the product of a POMDP and an LDGBA, addressing the challenges in environments with partial observability [8], as illustrated in Fig. 4.

Figure 4: The product of LDGBA and POMDP for RL problems with complex tasks

Combining a POMDP

• State Space:

• Action Space:

• Initial State:

• Probabilistic Transition Function:

where

• Reward Function: Rewards are defined over product states:

• Observation Function:

if

• Accepting Conditions: The set of accepting sets in the product POMDP is

A run on

This formulation enables evaluating whether a policy not only performs well in the environment but also satisfies the desired temporal logic objectives.

The optimal policy

3.5 Applications of Reinforcement Learning

RL has become a cornerstone of modern AI, with applications spanning a wide range of scientific, industrial, and societal domains. Its core strength lies in solving sequential decision-making problems under uncertainty, where an agent must learn effective strategies through interaction and feedback from the environment.

• Robotics and autonomous systems: In robotics, RL enables machines to learn complex motor skills such as grasping, locomotion, and manipulation without explicit programming, often outperforming traditional control methods in unstructured settings [69–71]. For autonomous systems, including self-driving vehicles, unmanned aerial vehicles (UAVs), and mobile robots, RL provides a framework for real-time decision-making, motion planning, and obstacle avoidance under uncertainty [72–74]. By leveraging simulated environments for training and transferring learned policies to the physical world, RL allows these systems to achieve robust, adaptive, and scalable performance in tasks ranging from warehouse automation to autonomous exploration.

• Energy and sustainability: RL has shown significant promise in advancing energy efficiency and sustainability by enabling intelligent decision-making in complex, resource-constrained systems. In power grid management, RL has been applied to optimize demand response, balance renewable energy integration, and improve fault detection and recovery, thereby enhancing grid stability and resilience [75–77]. In building energy systems, RL supports dynamic control of heating, cooling, and lighting to minimize energy consumption while maintaining occupant comfort [78,79]. Similarly, in renewable energy operations, RL can optimize wind farm coordination, solar panel tracking, and battery storage management to maximize output and reduce waste [80,81]. Beyond energy systems, RL has been applied to water resource management, smart irrigation, and waste reduction strategies, contributing to broader sustainability goals [82–84]. By continuously adapting policies to variable conditions such as weather patterns, consumption behavior, and resource availability, RL enables sustainable solutions that are both cost-effective and environmentally responsible.

• Healthcare and medicine: RL is increasingly applied in healthcare and medicine, where adaptive decision-making is critical for improving patient outcomes. In personalized medicine, RL has been used to optimize treatment strategies for chronic diseases such as diabetes, cancer, and sepsis by continuously adjusting drug dosages or therapy schedules based on patient responses [85–87]. In medical imaging, RL assists in automating diagnostic tasks, improving image acquisition protocols, and guiding interventions with greater precision [88,89]. Robotic surgery and rehabilitation also benefit from RL, where agents learn to perform delicate maneuvers or adapt therapy regimens to individual patient needs [90,91]. Beyond clinical care, RL contributes to hospital operations by optimizing resource allocation, scheduling, and patient flow management [92,93]. By learning from complex, uncertain, and high-dimensional health data, RL systems can support clinicians in making evidence-based, real-time decisions, thereby enhancing both efficiency and quality of care.

• Finance and economics: RL has emerged as a powerful tool in finance and economics, where decision-making under uncertainty and dynamic environments is fundamental [94]. In financial markets, RL is applied to algorithmic trading, enabling agents to learn adaptive strategies for portfolio optimization, risk management, and high-frequency trading [95]. In economic policy and macroeconomic modeling, RL has been used to simulate and evaluate interventions such as monetary policy adjustments or carbon pricing schemes, allowing exploration of long-term impacts in complex systems [96,97]. Beyond markets, RL supports operations in banking and insurance by optimizing credit scoring, fraud detection, and dynamic pricing [98,99]. Its ability to balance risk and reward in uncertain and evolving contexts makes RL particularly suited to financial and economic domains, where small improvements in decision-making can translate into significant economic value.

• Natural sciences and engineering: RL is increasingly being applied across the natural sciences and engineering to address problems characterized by complex dynamics, uncertainty, and the need for adaptive control. In environmental and climate sciences, RL has been used to optimize flood mitigation strategies and water management in agricultural systems, supporting efficient resource allocation and risk reduction under variable conditions [100,101]. In materials science and chemistry, RL guides simulations and experiments to discover novel compounds, catalysts, and metamaterials [102,103]. In engineering, RL enables intelligent control of systems ranging from adaptive traffic networks and structural health monitoring to advanced manufacturing processes [3,104]. Together, these applications demonstrate RL’s capacity to operate in high-dimensional, data-rich environments, accelerating scientific discovery while enhancing the resilience, efficiency, and sustainability of natural and engineered systems.

4 Human-AI Interaction in Reinforcement Learning

This study classifies the implementation of the HAII mechanism into RL with the following different approaches:

• Learning from human feedback (LHF): In this approach, RL agents learn by using human-provided feedback, such as scalar reward signals, preference comparisons, or evaluative judgments, to guide policy improvement and optimize behavior [105]. This learning is typically passive, as the human evaluates agent behavior after it is produced, rather than being actively queried by the agent.

• Learning from human demonstrations (LHD): This approach uses human demonstrations of desired behavior to kickstart or guide RL. Methods range from inverse RL (inferring reward functions from expert trajectories) to interactive imitation learning (where the agent may iteratively request corrective demonstrations from a human).

• Shared autonomy (SA): In this approach, human and AI agents collaboratively share control of a system. The agent assists the human by inferring goals, predicting intent, or adjusting suboptimal inputs—often using RL to learn when and how to intervene or assist.

• Human-in-the-Loop querying (HLQ): This approach allows agents to actively query a human for information, such as demonstrations, preferences, or advice, to improve learning efficiency. By selecting informative queries or asking targeted questions, the agent can reduce the total amount of human effort needed.

• Explainable RL (XRL): In this approach, the RL agent generates human-understandable explanations about its decision-making process, including why specific actions are chosen. These explanations enhance transparency and trust, enabling humans to provide better-informed feedback, corrections, or constraints.

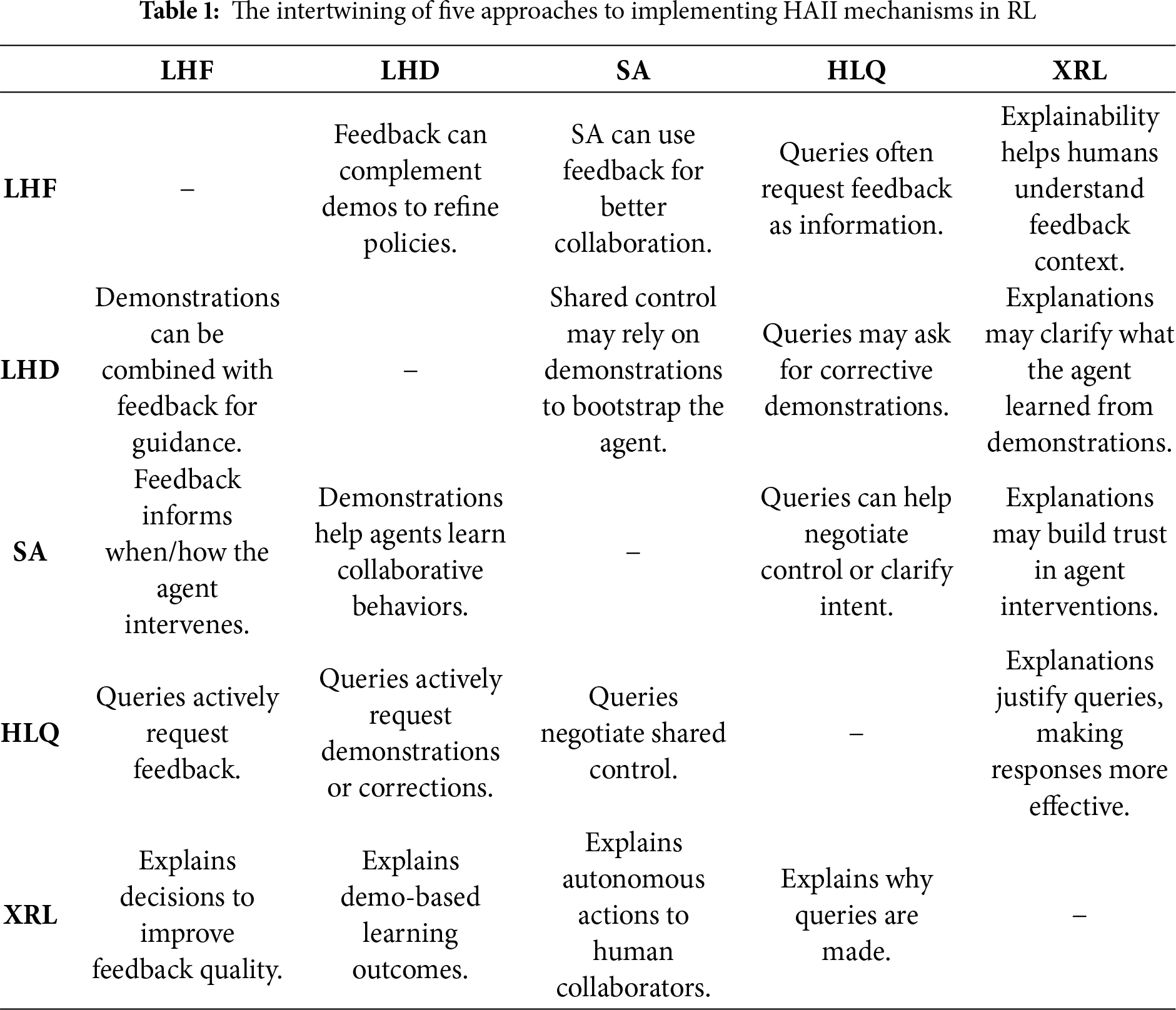

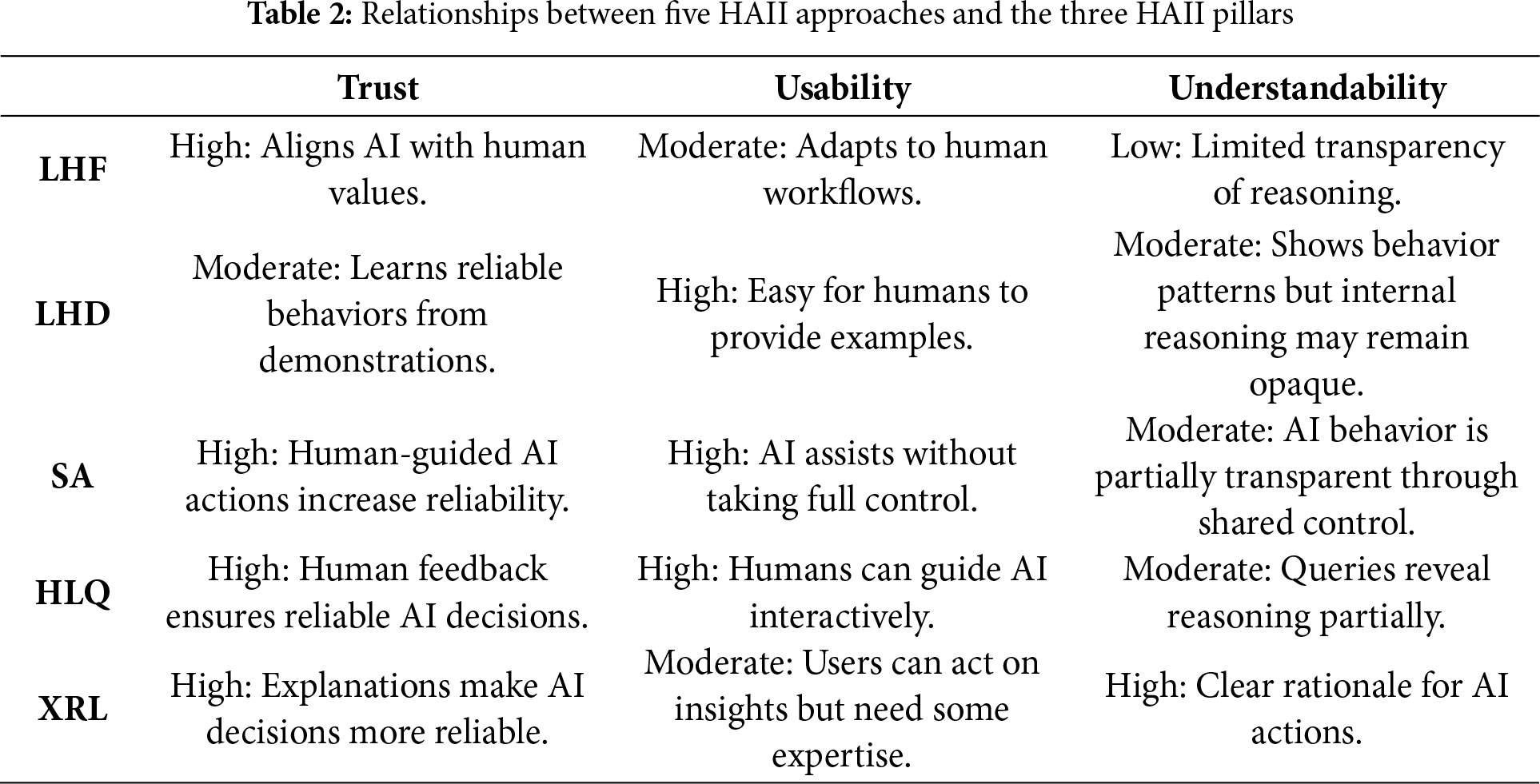

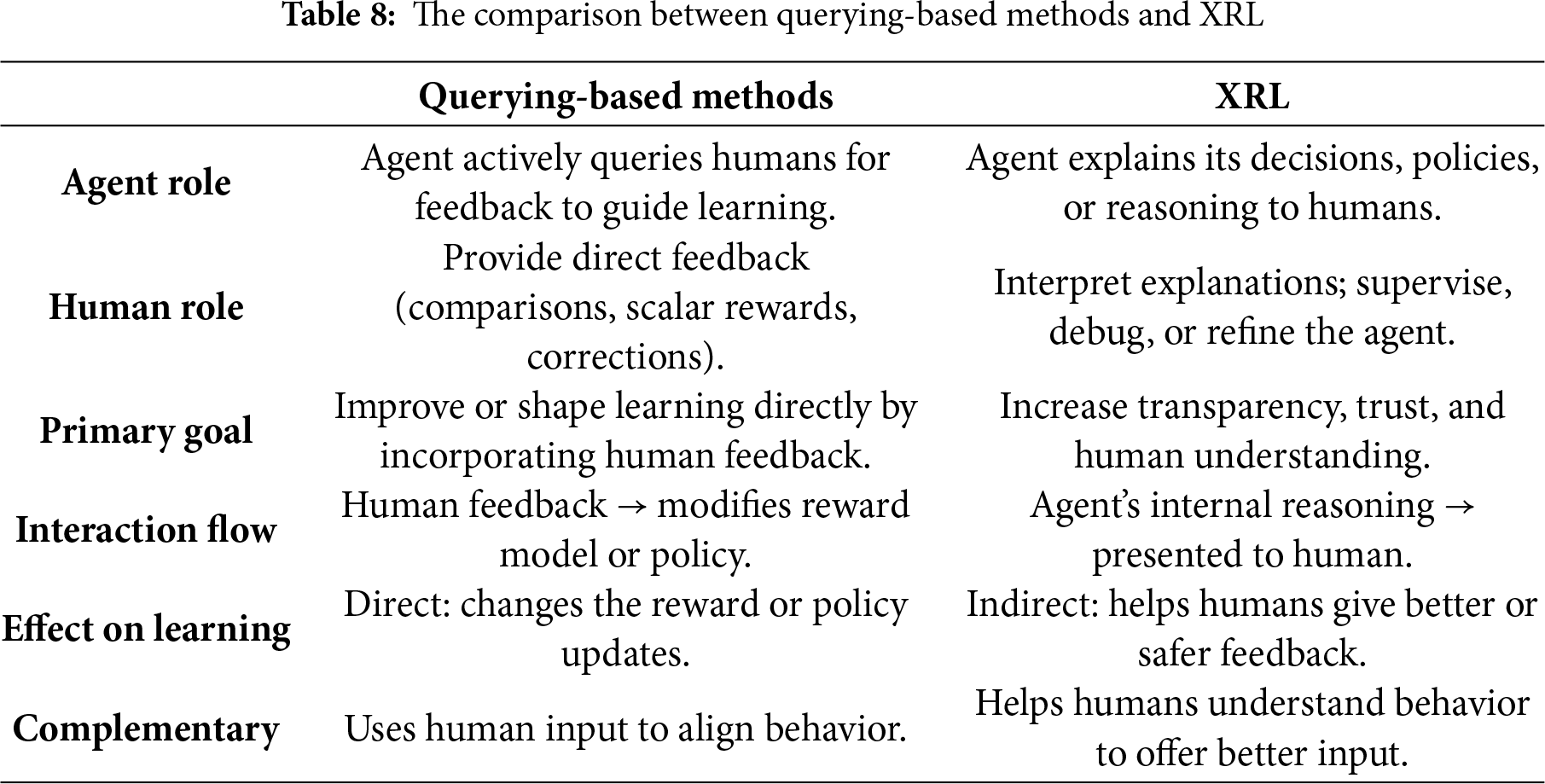

Although this study reviewed each approach individually, these approaches form a spectrum of HAII mechanisms that are often used together in the same system, as shown in Table 1. They complement each other to enable more effective, efficient, and trustworthy collaboration between humans and RL agents. Additionally, their relationships with three HAII pillars (discussed in Section 2) are illustrated in Table 2.

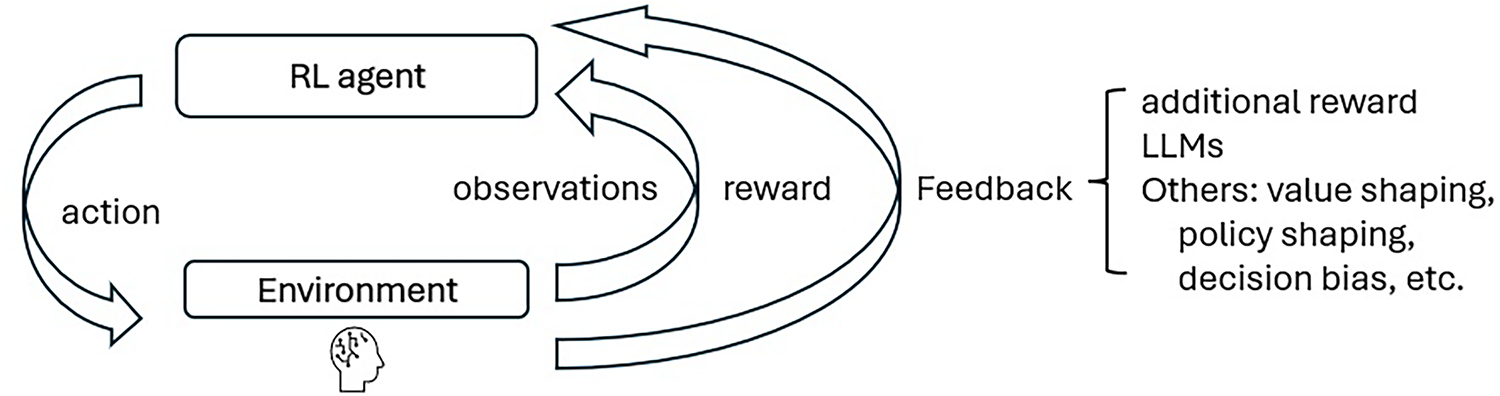

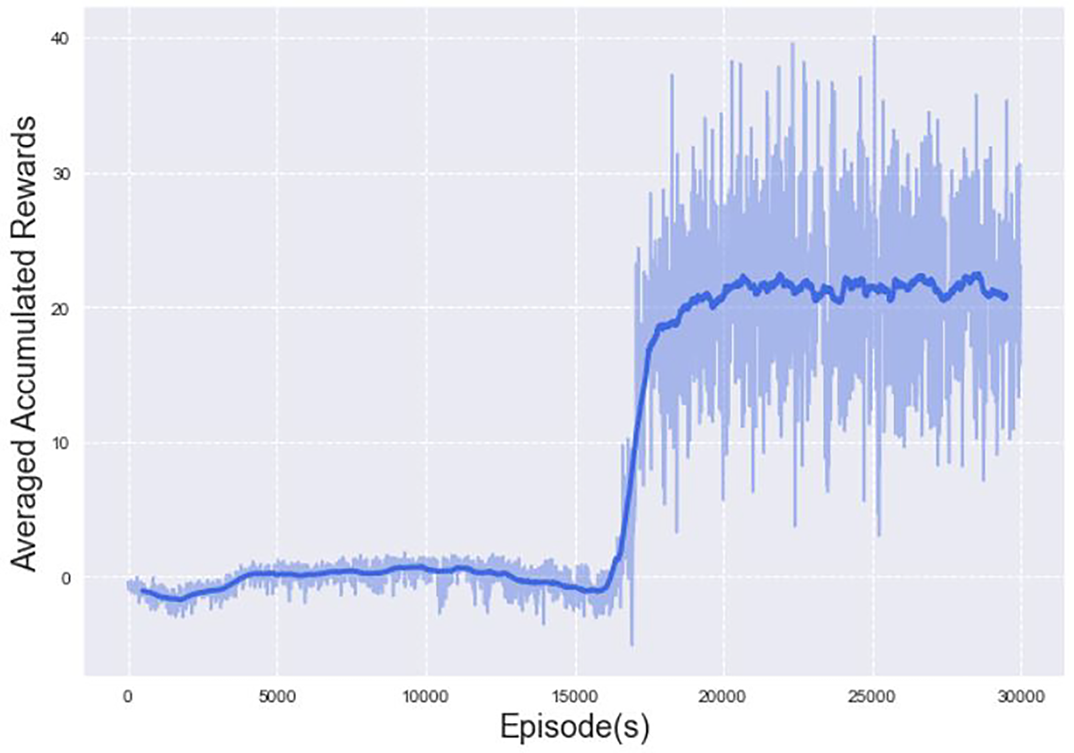

4.1 Learning from Human Feedback

Human feedback can often be combined with environmental feedback and integrated into the reward function, enabling RL agents to perform tasks that better reflect human preferences and needs. This approach is known as reward shaping [105]. In addition, recent developments have employed generative AI models, such as large language models (LLMs), to incorporate human feedback into the RL learning process [106]. Importantly, there is a distinction between direct human feedback, which reflects authentic user values and preferences, and synthetic feedback generated by LLMs, which can simulate or approximate human input at scale. While LLMs offer clear practical advantages, such as reducing annotation costs, accelerating prototyping, and enabling richer language-based interaction, they also raise ethical and methodological concerns. Synthetic feedback may unintentionally embed model biases, misrepresent user intent, or obscure accountability if used as a replacement for real human input. Thus, a critical open question is not whether LLMs can generate feedback, but how they should be used: as a complement to, rather than a replacement for, genuine human oversight. While human feedback can be used in various ways, including value shaping, policy shaping, and decision biasing, this section focuses specifically on reward shaping and the integration of feedback via LLMs, as illustrated in Fig. 5.

Figure 5: The implementation of LHF into RL

LHF directly implements the three pillars of HAII by aligning AI systems more closely with human expectations and needs. It strongly enhances trust, as human feedback allows AI to adopt behaviors that reflect users’ values, preferences, and priorities, making decisions more reliable and socially acceptable. It moderately improves usability by enabling AI systems to adapt to human workflows and practical constraints, reducing friction in interactions and facilitating adoption in real-world settings. LHF also provides partial support for understandability, as analyzing feedback signals can shed light on the factors influencing AI behavior, though full transparency of internal decision-making may remain limited. By integrating human guidance directly into learning processes, LHF helps bridge the gap between theoretically optimal AI policies and solutions that are actionable, interpretable, and widely accepted by users, demonstrating its pivotal role in human-centered AI design.

Reward shaping converts human feedback into a numerical value as a supplemental reward (either incentive or penalty) to RL agents during HAII. This additional reward is often added to the reward provided by the environment [107–109]. Consequently, the overall reward function is revised as

Ibrahim et al. comprehensively reviewed reward engineering and shaping in RL [113]. Their work highlights reward shaping as a critical technique for accelerating learning and guiding agents toward desired behaviors, particularly in environments with sparse or delayed rewards. Among various methods, potential-based reward shaping (PBRS) stands out for its strong theoretical guarantees, as it modifies the reward using a potential function to provide additional guidance without altering the optimal policy [108,114]. The paper also emphasizes the growing importance of incorporating human feedback into reward shaping, where human evaluations, preferences, or demonstrations help construct internal reward models that better align agent behavior with human intentions [115–117].

In a recent work done by Golpayegani et al., the authors applied RL to advancing sustainable manufacturing for job shop scheduling (JSS) [118]. They proposed an ontology-based adaptive reward machine to address unforeseen events in dynamic and partially observable environments. In their paper, the generation of additional rewards does not rely on explicit real-time human feedback. Instead, it is achieved automatically through an ontology-based mechanism that embeds domain expert knowledge. Particularly, the ontology encodes concepts and properties relevant to the JSS environment and labels them with positive or negative beliefs that reflect desirable or undesirable outcomes from a human perspective, such as high machine utilization or low waiting time [119]. When the RL agent observes its environment, it maps observations to high-level ontological concepts, extracts new propositional symbols, and dynamically updates the reward machine. This process creates new reward functions by maximizing properties with positive beliefs and minimizing those with negative beliefs, allowing the agent to adapt its behavior to unforeseen events.

In another study, Jeon et al. proposed a robot teaching framework using RL in which robots learn cooperative tasks by integrating real-time sentiment feedback from human trainers [120]. Using a speech-based sentiment analysis module, the system detects humans’ emotional tone, such as encouragement or frustration, and adaptively transforms this into an additional reward signal that augments the robot’s standard task-based rewards [121,122]. This human-derived reward dynamically shapes the learning process, helping robots adjust their behavior to better align with human expectations and cooperation goals. Experiments show that robots guided by sentiment-based rewards achieve faster convergence, more stable learning, and improved cooperative performance compared to purely environment-driven RL.

Recent research explores leveraging LLMs to automate or enhance reward shaping in RL. By interpreting natural language feedback, demonstrations, or preferences, LLMs can generate auxiliary rewards that guide RL agents more efficiently than manually designed reward functions [123–125]. For instance, LLMs can translate human instructions (e.g., “Avoid unsafe actions”) into quantitative shaping rewards or infer implicit preferences from textual critiques. This approach is particularly valuable in complex, real-world tasks where sparse or ambiguous environmental rewards hinder learning. However, challenges remain, such as mitigating biases in LLM-generated rewards and ensuring alignment with true objectives [126,127].

Chaudhari et al. provided a comprehensive critique of LHF as applied to LLMs, dissecting its components, challenges, and practical implications [128]. The paper systematically analyzes the LHF pipeline, from human preference data collection and reward modeling to policy optimization, highlighting key bottlenecks like reward misgeneralization, scalability limitations, and annotator biases. It contrasts with some other alternative approaches, such as direct preference optimization, adversarial training, and underscores trade-offs between alignment, diversity, and computational cost. The authors also discussed ethical concerns (e.g., amplification of harmful preferences) and proposed future directions, including hybrid methods and better human-AI collaboration frameworks. By synthesizing empirical findings and theoretical insights, this survey serves as a roadmap for improving LHF’s efficacy and reliability in LLM deployment.

A recent study introduced Text2Reward, a framework that integrates LLMs to automatically generate dense, interpretable reward functions for RL tasks from natural language task descriptions [129]. By prompting an LLM to decompose high-level goals into structured reward components (e.g., “move forward” to linear velocity reward) and synthesize them into executable code, Text2Reward eliminates the need for manual reward engineering and iteratively incorporates human feedback from users to improve reward functions. Experiments in robotic locomotion and manipulation tasks showed that policies trained with LLM-generated rewards achieve performance comparable to those trained with human-designed rewards, while also enabling real-time adjustments via natural language. The method bridges the gap between high-level intent and low-level RL implementation, making RL more accessible to non-experts.

It is worth mentioning that, in many state-of-the-art achievements, LLMs in RL act as proxies for human feedback, even without real-time or runtime HAII. For example, Sun et al. introduced an LLM-as-reward-designer paradigm where the LLM automatically shapes rewards through iterative dynamic feedback, without requiring direct human input during training [130]. The LLM evaluates agent behavior, identifies suboptimal actions, and adjusts dense/sparse rewards to guide learning, mimicking human-like reward engineering but in a scalable and automated manner. In another study, Qu et al. use LLMs to interpret environment states and agent behaviors, generating intermediate rewards that guide learning more effectively [131]. By integrating LLM-derived latent rewards, the framework improves sample efficiency and policy optimization in complex, long-horizon tasks.

Another approach included LLM-guided pretraining for RL agents, where an LLM (e.g., GPT) provides high-level task guidance to accelerate learning [132]. This approach simulates human-like feedback by critiquing agent behaviors during pretraining and provides reward-shaped advice to bootstrap RL policies. Additionally, Guo et al. presented an LLM-based framework for automated reward shaping in robotic RL, where LLMs interpret task descriptions and generate dense, semantically aligned reward functions [133]. The key innovation is a hierarchical reward decomposition: the LLM first breaks tasks into sub-skills, then designs weighted sub-rewards for each skill, dynamically adjusting their importance based on real-time agent performance. This approach also maintains interpretability by mapping LLM outputs to modular reward components.

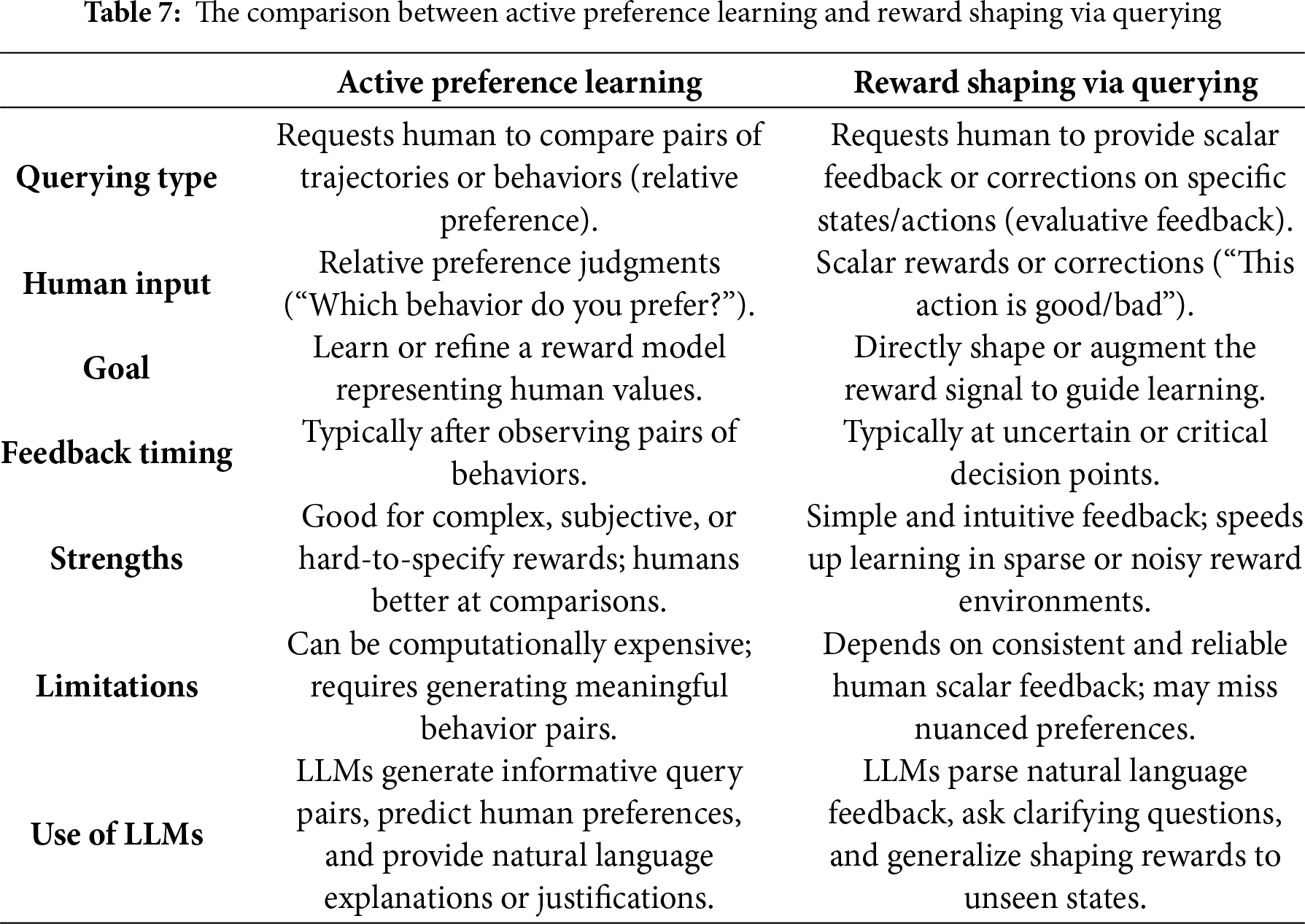

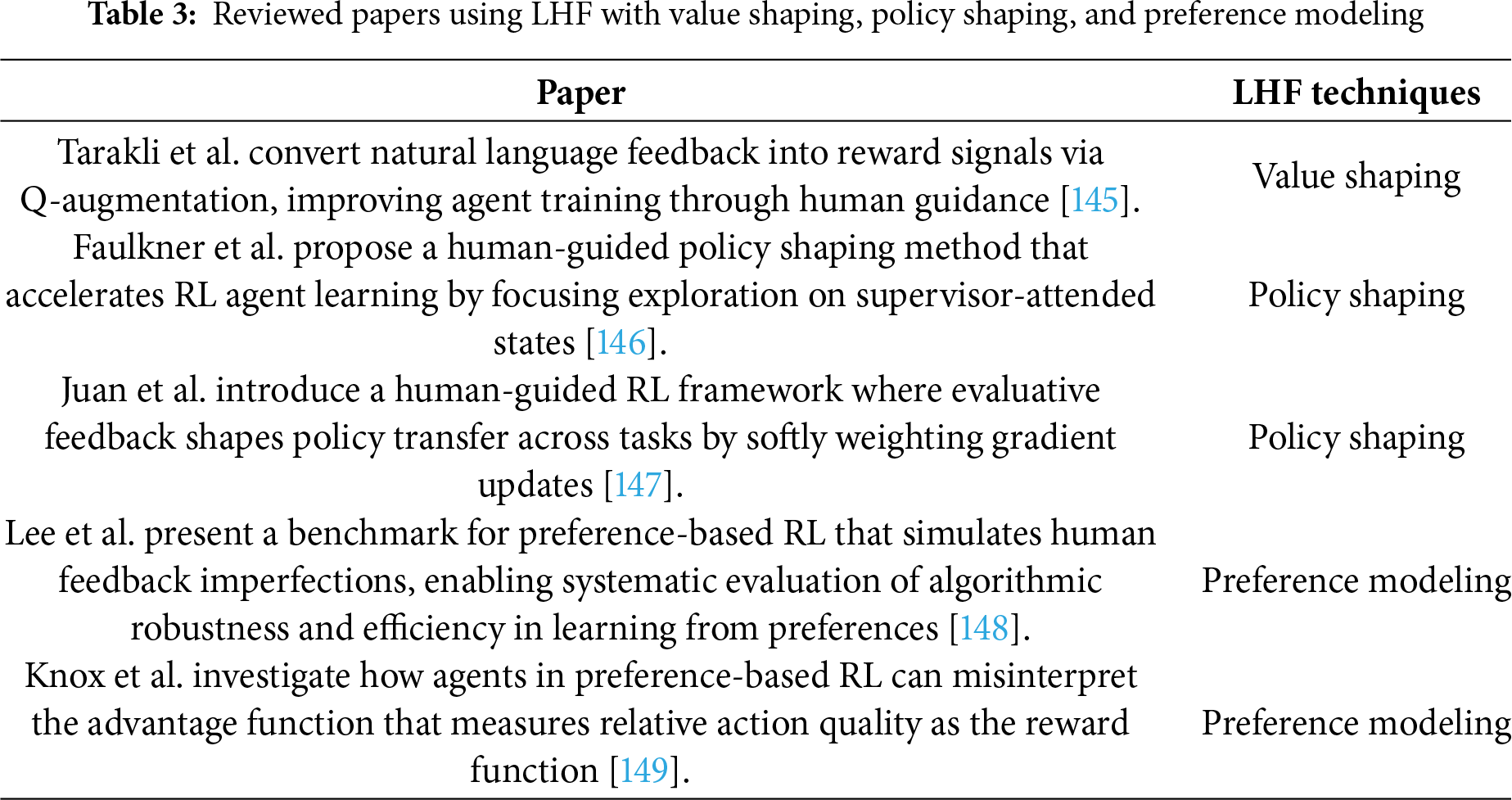

The other techniques of LHF in RL include value shaping, policy shaping, and preference modeling. Value shaping addresses the challenge of sparse or delayed rewards in RL by incorporating additional immediate feedback signals [134,135]. These signals, including human feedback, provide intermediate guidance about the relative value of actions or states, helping to bridge the temporal credit assignment problem [136]. Policy shaping incorporates human feedback by directly modifying the policy update rule in RL [137]. Model-free policy shaping is typically implemented by adding a human guidance term to the policy gradient, which biases the agent’s actions toward those preferred by the human demonstrator or critic [138]. Several model-based policy shaping can be found in [110–112,139–142]. Additionally, preference modeling is a framework for learning reward functions or policies directly from human judgments (e.g., rankings or comparisons of trajectories). Instead of requiring explicit rewards or demonstrations, the agent infers objectives from relative human preferences over pairs of state-action sequences [143,144]. Table 3 presents an overview of the articles discussed in detail subsequently.

Tarakli et al. presented a novel RL framework, ECLAIR (Evaluative Corrective Guidance Language as Reinforcement), in which an agent learns from natural language feedback instead of predefined numeric rewards [145]. The authors employed value shaping, especially Q-augmentation methods, to convert qualitative human feedback into structured reward signals, dynamically adjusting the agent’s value function to align with the trainer’s guidance [150]. By integrating LLMs with RL, the method enables more intuitive human-robot collaboration, as demonstrated in simulated environments where it improves learning efficiency and task performance over traditional RL approaches in advanced robotics.

Faulkner et al. introduced Supervisory Attention Driven Exploration (SADE), a policy shaping method where a human supervisor guides an RL agent’s exploration by directing attention to key states (e.g., via gaze or clicks) and providing shaping rewards [146]. The approach dynamically scales exploration noise using an attention mask and converts feedback into potential-based rewards, reducing sample complexity in robotic tasks (e.g., block stacking) while preserving convergence guarantees. Unlike traditional policy shaping, SADE focuses on biasing exploration rather than directly modifying actions, balancing human guidance with autonomous learning.

In another work, Juan et al. proposed a framework for policy transfer in RL using human evaluative feedback to shape exploration [147]. The method integrates human-provided evaluations into a progressive neural network, where feedback dynamically scales the policy’s gradient updates, biasing learning toward preferred behaviors without hard action overrides. By combining human guidance with multi-task RL, the approach achieves efficient policy adaptation across related tasks, demonstrating faster convergence and higher success rates compared to standard RL baselines. While not traditional policy shaping, the human feedback implicitly shapes the policy through loss function modifications, offering a flexible alternative to direct action corrections.

Lee et al. presented a comprehensive benchmark designed to evaluate and advance preference-based RL methods, which learn from human feedback rather than pre-defined rewards [148]. The authors addressed the lack of standardized evaluation in general preference-based RL approaches by introducing simulated human teachers that exhibit realistic irrationalities, including stochastic preferences, myopic decision-making, occasional mistakes, and query skipping, to better reflect real-world human input. The benchmark covers diverse tasks from locomotion to robotic manipulation, enabling systematic comparison of algorithms under varying conditions. Using this benchmark, the authors evaluated state-of-the-art methods and found that while these algorithms perform well with perfectly rational teachers, their effectiveness degrades significantly when faced with noisy or biased feedback [115]. They also pointed out that preference-based RL may have potential drawbacks if malicious users teach the bad behaviors or functionality, which raises safety and ethical issues.

Furthermore, Knox et al. investigated how RL agents, particularly those trained with preference-based LHF, can misinterpret the advantage function (which measures relative action quality) as the reward function (which measures absolute desirability) [149]. The authors showed that when agents are trained using preference comparisons, where humans rank trajectories, they often conflate advantage with reward, leading to suboptimal or unintended behaviors. Through theoretical analysis and experiments, they demonstrated that this confusion can cause agents to prioritize actions that merely outperform alternatives rather than those that are objectively best. The paper highlights implications for LHF in LLMs, where such misalignment could propagate biases or reward hacking. Proposed solutions include careful reward shaping and explicit separation of advantage and reward during training.

4.2 Learning from Human Demonstration

While reward shaping through human feedback provides an efficient means of steering agent behavior, it often captures only local preferences or corrective signals. Demonstrations complement this limitation by offering richer, trajectory-level guidance that illustrates not only what outcomes are preferred but also how to achieve them. In this way, learning from demonstration extends the strengths of feedback, providing structured examples that can anchor RL in more complex, real-world tasks.

LHD, also known as imitation learning (IL), is a subfield of ML and robotics where an agent learns to perform tasks by observing how humans (or sometimes other agents) perform them, rather than being explicitly programmed or learning solely through trial and error [151,152]. LHD supports the HAII pillars by allowing AI systems to observe and imitate human behavior. It moderately enhances trust as AI’s actions reflect demonstrated strategies and appear more reliable to users. LHD strongly improves usability, since providing demonstrations is a highly intuitive form of teaching that lowers the barrier for human-AI collaboration, especially for non-experts. It also moderately supports understandability, as observing the AI’s learned behaviors can reveal patterns aligned with human actions, though the internal decision-making process may still be difficult to fully interpret. By leveraging human demonstrations, LHD enables AI systems to adopt human-aligned strategies efficiently while maintaining practical relevance and user acceptance.

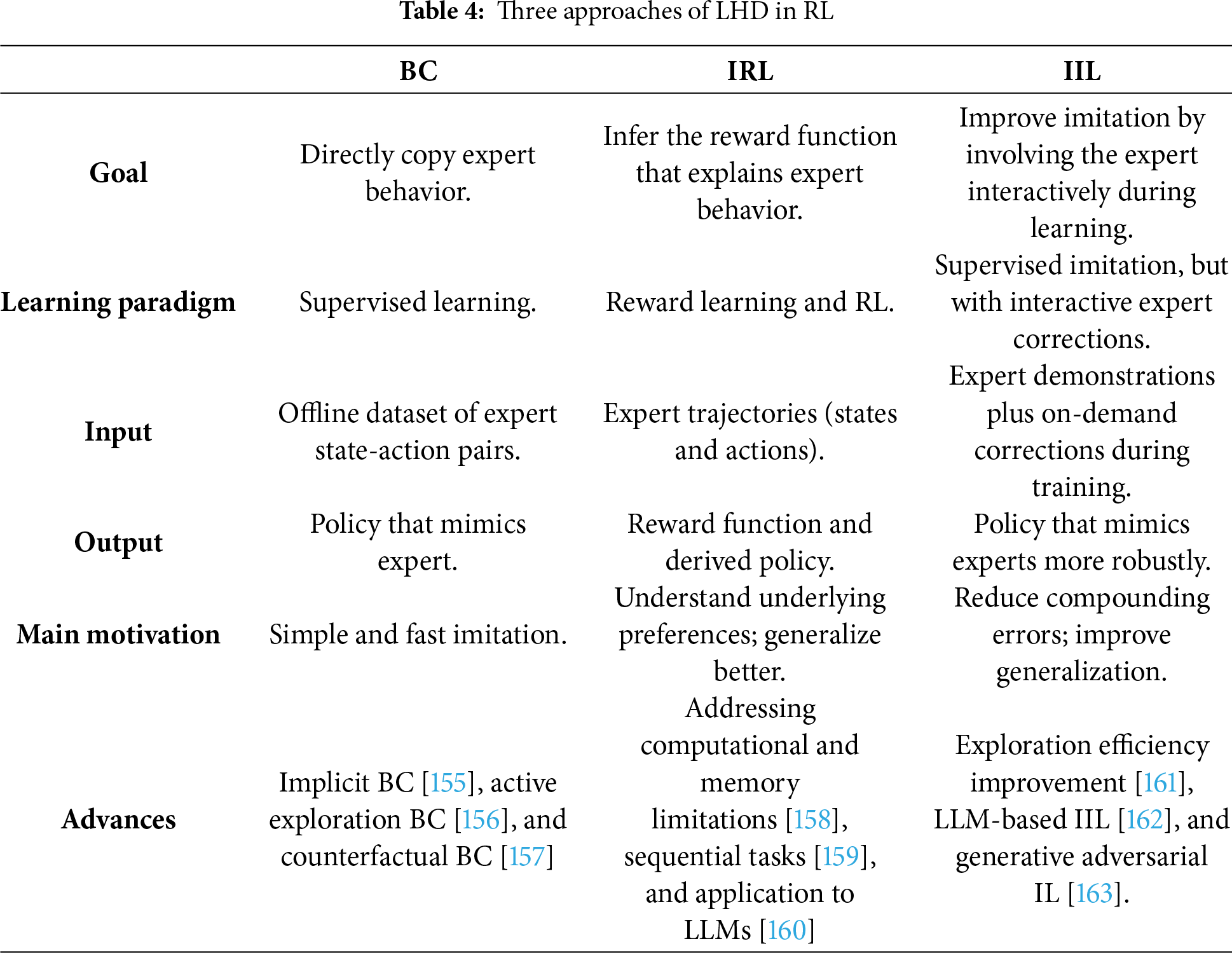

There are three common methods to incorporate LHD in RL: (1) Behavioral cloning (BC) that directly mapping states to action by supervised learning [153], (2) inverse RL (IRL) to infer the reward function based on the demonstration and use it to train the agent [154], and (3) interactive imitation learning (IIL) where the agent actively interacts with the human during learning, instead of only passively copying static demonstrations. The overview of the three approaches is listed in Table 4.

It should be noted that Zare et al. provided a comprehensive overview of IL, focusing on its evolution, methodologies, and open challenges [164]. The authors discussed the approaches of IL, such as BC, IRL, and adversarial IL, as well as emerging areas like imitation from observation [165,166]. Key challenges discussed include handling imperfect demonstrations and domain discrepancies like dynamics or viewpoint mismatches [167–169]. The paper concludes by outlining future directions, emphasizing the need for robust, scalable methods to enhance real-world applicability in domains such as autonomous driving and robotics.

BC is essentially supervised learning. Given a set of demonstrations,

That is, BC directly learn to imitate the demonstrated behavior by minimizing the difference between the agent’s chosen action and the demonstrator’s action.

Jia and Manocha presented a hybrid approach that leverages BC to initialize painting policies from human demonstrations before refining them via RL in a simulated environment [170]. This BC framework bridges the sim-to-real gap by pre-training the agent on stroke sequences, enabling efficient transfer to a real UltraArm robot equipped with a RealSense camera. By combining BC with Gaussian stroke modeling and vision-based contact force estimation (eliminating the need for physical sensors), the system achieves precise, adaptive brush control in real-world sketching, outperforming RL-only baselines in both stroke quality and policy convergence. This work highlights BC as a critical enabler for scalable and realistic robotic artistry.

Zhang et al. proposed Implicit BC (IBC) combined with Dynamic Movement Primitives (DMPs) to enhance RL for robot motion planning [155]. The authors address the inefficiency of traditional RL by leveraging human demonstrations through IBC, which avoids overfitting by penalizing deviations via an energy function rather than explicit action matching, unlike explicit BC (EBC). The framework integrates a multi-DoF DMP model to simplify the action space and a dual-buffer system (demonstration and replay) to bootstrap training. Experiments on a 6-DoF Kinova arm demonstrated that IBC-DMP achieves faster convergence, higher success rates, and better generalization in pick-and-place tasks compared to baselines such as the Deep Deterministic Policy Gradient (DDPG) method. The authors also discussed IBC’s energy-based loss and demonstration-augmented RL, bridging human expertise with RL robustness for complex motion planning.

In another study, Xu and Zhao introduced Active Exploration Behavior Cloning (AEBC), an innovative IL algorithm designed to overcome the sample inefficiency limitations of traditional BC [156]. Unlike conventional BC, which passively replicates expert demonstrations, AEBC actively enhances exploration by integrating a Gaussian Process (GP)-based dynamics model that predicts future state uncertainties. The algorithm’s novel entropy-driven exploration mechanism prioritizes high-uncertainty states during training, optimizing sample utilization through a dual objective, consisting of the standard BC’s action prediction loss and an entropy term that maximizes the log determinant of the covariance matrices of the predicted states. In other words, GP-guided active exploration avoids expensive online expert relabeling, and a hybrid loss function dynamically balances imitation and exploration. Consequently, AEBC addresses BC’ core weaknesses, compounding errors and poor sample efficiency, making it particularly suitable for real-world applications like robotics, where interactions are expensive. The authors conducted benchmark problems and found that AEBC achieved expert-level performance with much fewer interactions than traditional BC and outperformed Advantage-Weighted Regression (AWR) in sample efficiency [171].

Recently, Sagheb and Losey proposed Counterfactual Behavior Cloning (Counter-BC), a novel offline IL method that addresses the limitations of traditional BC by inferring the human’s intended policy from noisy demonstrations [157]. Unlike standard BC, which naively clones all actions, including errors, Counter-BC introduces counterfactual actions that are plausible alternatives the human might have intended but did not demonstrate perfectly. The algorithm’s core innovation is a generalized loss function that minimizes policy entropy over these counterfactuals, favoring simple, confident explanations while remaining close to the demonstrated data, all without requiring additional human labels or environment interactions. Evaluated on simulated tasks and real-world robotics, Counter-BC outperforms BC and baselines in sample efficiency and task success, particularly with noisy or heterogeneous demonstrations. Counter-BC advances BC’s practicality by interpreting what the human meant rather than what they did, bridging noisy data and robust policy learning.

Echeverria et al. introduced another novel approach, in which an offline RL method is tailored for combinatorial optimization problems like job-shop scheduling [172]. The authors addressed limitations of traditional deep RL (slow learning) and BC (poor generalization) by proposing a hybrid approach that combines reward maximization with imitation of expert solutions. The innovative contributions include modeling scheduling problems as MDPs with heterogeneous graph representations, encoding actions in edge attributes, and a new loss function balancing RL and imitation terms. The method outperforms state-of-the-art techniques on several benchmarks, demonstrating superior performance in minimizing makespan, particularly for large-scale instances. The work highlights offline RL’s potential for real-time scheduling with complex constraints and suggests future extensions to other combinatorial problems.

4.2.2 Inverse Reinforcement Learning

Although BC is computationally efficient, it faces another major limitation: the covariate shift problem [173,174]. When training, the agent learns from expert-generated state-action pairs. However, during deployment, it encounters states influenced by its actions, potentially deviating from the training distribution [175,176]. Since the agent isn’t trained to recover from these unfamiliar states, errors compound over time [177]. This makes BC particularly unreliable in safety-critical applications, such as autonomous driving, where unseen scenarios can lead to catastrophic failures [178]. One of the solutions to address covariate shifting is IRL.

A recent survey comprehensively explored IRL as an approach to infer an agent’s reward function from observed behavior rather than manually designing it for handling RL problems [179]. The paper outlines key challenges, including ambiguity in reward inference, generalization to unseen states, sensitivity to prior knowledge, and computational complexity [180,181]. It categorizes foundational methods, including maximum margin optimization, maximum entropy approaches, Bayesian learning techniques, and classification or regression-based solutions [182–185]. The survey also discusses extensions addressing imperfect or partial observations, multi-task learning, incomplete models, and nonlinear reward functions [186–188]. The paper concludes by emphasizing future directions, such as enhancing efficiency for high-dimensional spaces and adapting IRL to dynamic real-world applications like robotics and autonomous systems. Another survey can be found in [189].

Lin et al. proposed a computationally efficient IRL approach to recover reward functions from expert demonstrations while addressing the computational and memory limitations of existing methods [158]. The authors introduced three key contributions, including a simplified gradient algorithm for the reward network, a loss function based on feature expectations rather than state-visiting frequency, and an automated featurization network to process trajectories without manual intervention. The method eliminates repetitive gradient calculations and excessive storage by using streamlined feature expectations for both expert and learner trajectories. Validated on benchmarks, such as CartPole, this approach demonstrated superior performance in terms of average return, required steps, and robustness to noise, while reducing training time and memory demands compared to state-of-the-art methods [190]. The approach is particularly effective for high-dimensional, continuous-state tasks with variable-length demonstrations.

Melo and Lopes addressed the challenge of teaching sequential tasks to multiple heterogeneous IRL agents [159]. They identified conditions under which a single demonstration can effectively teach all learners (e.g., when agents share the same optimal policy) and demonstrated that significant differences in transition probabilities, discount factors, or reward features render class teaching impossible. After formalizing the problem, they proposed two novel algorithms: SplitTeach and JointTeach. SplitTeach combines group and individualized teaching to ensure all learners master the task optimally, while JointTeach minimizes teaching effort by selecting a single demonstration, albeit with potential suboptimal learning outcomes. Simulations across multiple scenarios validated the theoretical findings, demonstrating that SplitTeach guarantees perfect teaching with reduced effort compared to individualized teaching, whereas JointTeach achieves minimal effort at the cost of performance. This work bridges gaps in ML for sequential tasks, offering practical solutions for heterogeneous multi-agent settings.

Wulfmeier et al. explored the application of IRL to improve IL in LLMs [160]. Unlike traditional maximum likelihood estimation (MLE), which optimizes individual token predictions, the authors proposed an IRL-based approach that optimizes entire sequences by extracting implicit rewards from expert demonstrations. They reformulated inverse soft-Q-learning as a temporal difference-regularized extension of MLE, bridging the gap between supervised learning and sequential decision-making. Experiments on T5 and PaLM2 models demonstrated that the proposed IRL methods outperform MLE in both task performance (e.g., accuracy) and generation diversity. This paper also discusses improved robustness to dataset size, better trade-offs between diversity and performance, and potential for more aligned reward functions in LLM fine-tuning. The work positions IRL as a scalable and effective alternative to MLE for IL in language models.

In addition, recent efforts include generative adversarial imitation learning (GAIL) that uses ideas from generative adversarial networks (GANs), so the agent tries to produce behavior indistinguishable from the demonstrator, judged by a discriminator network. Ho and Ermon introduced an early work of the GAIL framework that directly learns a policy from expert demonstrations without requiring interaction with the expert or access to a reinforcement signal [191]. Unlike traditional BC or IRL, which can be inefficient or indirect, GAIL draws an analogy to GANs. It trains a policy to match the expert’s occupancy measure by optimizing a minimax objective between a discriminator (which distinguishes expert from learner actions) and a policy (which aims to fool the discriminator). The authors demonstrated that GAIL outperforms existing model-free methods, achieving near-expert performance across various high-dimensional control tasks, while being more robust to limited expert data. Another recent study by Zuo et al. proposed a GIRL approach to handle imperfect demonstrations from multiple sources [192]. The method introduces confidence scores to weight demonstrations, enabling the agent to prioritize higher-quality data. Additionally, it leverages maximum entropy RL and a reshaped reward function to enhance exploration and avoid local optima.

4.2.3 Interactive Imitation Learning

IIL is a framework where an agent learns to perform tasks by iteratively querying a human expert for corrective feedback during execution, refining its policy through interactions. Unlike BC, which passively mimics expert demonstrations without feedback, IIL actively corrects errors in real-time, improving robustness to distributional shift. In contrast to IRL, which infers a reward function from demonstrations to guide RL, IIL directly learns a policy through supervised corrections, avoiding the need for reward estimation. Thus, IIL combines the sample efficiency of supervised learning with interactive refinement, bridging the gap between BC’s simplicity and IRL’s adaptability.

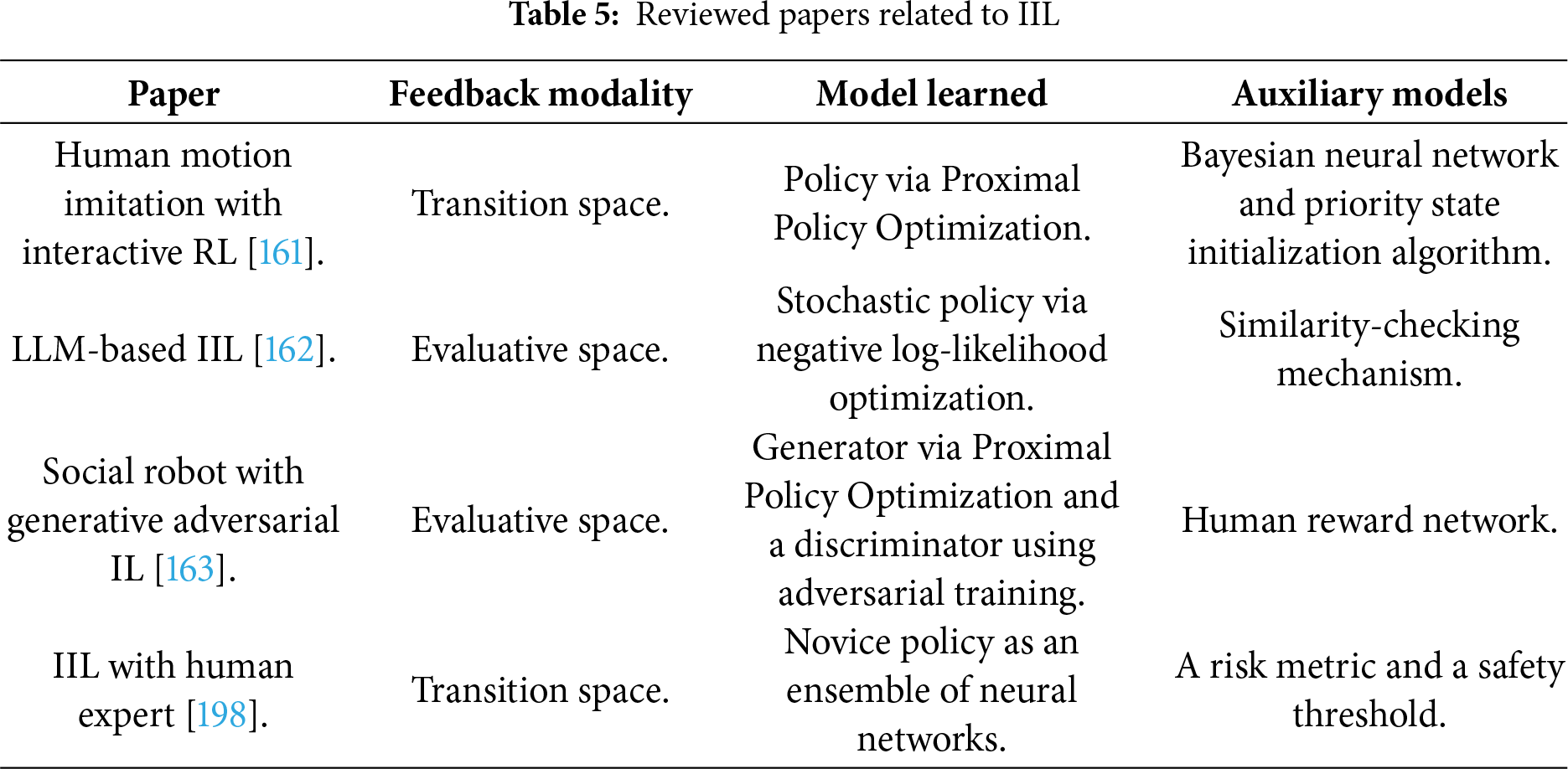

Celemin et al. conducted a survey paper on IIL in Robotics, providing a comprehensive overview of methods where human feedback is intermittently provided during robot execution to improve behavior online [193]. The authors categorized IIL approaches based on feedback modalities (evaluative vs. transition space, absolute vs. relative), the models learned (policies, state transitions, or reward functions), and auxiliary models like task features or human feedback interpretation [117,194–196]. They also discuss algorithmic properties (on/off-policy learning), interfaces for human-robot interaction, and applications in real-world robotics. The paper highlighted IIL’s advantages over traditional IL and RL, such as data efficiency and robustness, while addressing challenges like human feedback inconsistency and covariate shift. Other similar surveys can be found in [164,197]. Table 5 summarizes several IIL papers reviewed in this section.

Cai et al. proposed an interactive RL framework to accelerate human motion imitation for robots by improving exploration efficiency and reducing training time [161]. The method involves human experts labeling critical states in sampled trajectories, which are then used to train a Bayesian Neural Network (BNN) to identify and prioritize effective samples automatically. Additionally, a priority state initialization (PSI) algorithm allocates training resources based on the difficulty of initial states. Experiments demonstrate that the approach, combining human labeling termination (HLT) and PSI, significantly reduces the number of samples and training time while achieving higher imitation accuracy, compared to baseline methods.

Werner et al. introduced an IIL framework that leverages LLMs as cost-effective, automated teachers for robotic manipulation tasks [162]. The method employs a hierarchical prompting strategy to generate a task-specific policy and uses a similarity-based feedback mechanism to provide evaluative or corrective feedback during training. Evaluations on benchmark tasks demonstrated that this LLM-based IIL outperforms BC in success rates and matches the performance of a state-of-the-art IIL method using human teachers, while reducing reliance on human supervision [195]. While this approach offers a scalable, efficient alternative to human-in-the-loop training in robotics, the authors discussed the limitations, including LLMs’ restricted environmental awareness and dependence on ground-truth data, and suggested future improvements with vision-language models and real-world sensory inputs.

Recently, a method named GAILHF (Generative Adversarial Imitation LHF) was introduced to enable a social robot to learn and imitate human game strategies in real-time two-player games by combining human demonstrations and evaluative feedback [163]. This framework employs a generator-discriminator architecture, where the generator learns from human trajectories. At the same time, the discriminator distinguishes between human and robot actions, supplemented by a human reward network that predicts feedback and reduces human burden. Experiments showed the robot successfully imitated strategies and outperformed conventional IL and RL in game scores. Overall, this study demonstrates the efficacy of interactive learning for social robots and highlights the role of evaluative feedback in surpassing demonstration performance.

Kelly et al. developed HG-DAgger, an improved variant of the DAgger (Dataset Aggregation) algorithm for IIL with human experts [198]. Unlike standard DAgger, which stochastically switches control between the novice policy and the expert (potentially causing instability and degraded human performance), HG-DAgger lets the human expert take full control whenever they perceive the novice entering unsafe states, collecting corrective demonstrations only during these recovery phases. The method also learns a risk metric from the novice’s uncertainty using neural network ensembles and a safety threshold derived from human interventions. Evaluated on autonomous driving tasks, HG-DAgger outperforms DAgger and BC in sample efficiency, safety, and human-like behavior, while providing interpretable risk estimates for test-time deployment.

Feedback and demonstrations together lay the groundwork for aligning agents with human intentions: feedback supplies evaluative cues, while demonstrations encode procedural knowledge. However, both approaches are primarily offline and cannot always anticipate the uncertainties and novelties of dynamic environments. SA complements these methods by enabling real-time collaboration, allowing humans to intervene when learned strategies fall short and letting agents assist when human control alone becomes burdensome. This balance ensures adaptability beyond what either feedback or demonstrations can provide in isolation.

SA is an emerging paradigm in human-robot interaction and HAII that blends autonomous control with real-time human input to achieve better performance, safety, and user satisfaction than either fully manual or fully autonomous systems alone [199]. In this framework, the autonomous system and the human operator collaboratively share control of the task, often dynamically adjusting their respective levels of influence based on context, uncertainty, or user preference. This approach leverages the complementary strengths of humans, such as intuition, high-level reasoning, and adaptability, and machines like precision, speed, and the ability to process large volumes of sensory data. Applications of SA span a wide range of domains, including assistive robotics for people with disabilities, teleoperation in hazardous environments, and intelligent driving systems [200–202]. By facilitating seamless human-machine collaboration, SA aims to enhance task efficiency, reduce cognitive load, and improve overall user experience.

SA supports the HAII pillars by combining human input with AI assistance, creating a collaborative partnership where each agent leverages its strengths. This strongly enhances trust, as humans retain ultimate control and accountability while benefiting from AI support, leading to more reliable and acceptable outcomes. SA also strongly improves usability, since the system assists users without requiring them to relinquish control entirely, fitting naturally into human workflows. It moderately supports understandability, as users can observe and influence AI actions, gaining partial insight into its reasoning, though full transparency may still be limited. By effectively balancing human guidance with AI autonomy, SA enables efficient, user-centered decision-making that leverages the strengths of both humans and machines.

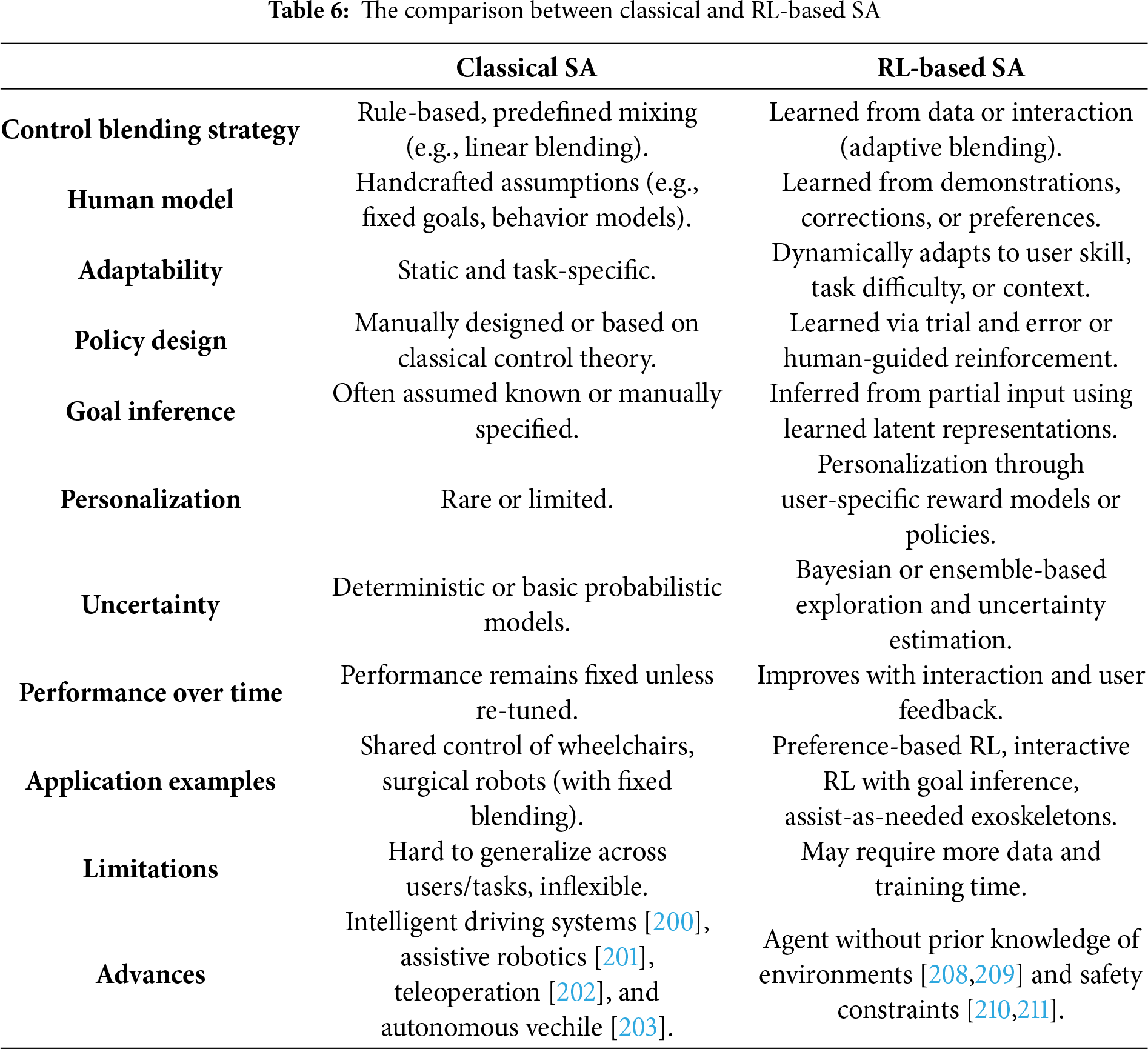

SA approaches can be broadly categorized into classical methods and learning-based methods, particularly those leveraging RL. Classical SA typically relies on manually designed control blending strategies, where the system assists the user according to predefined rules or heuristics, for instance, by combining user preferences on autonomous vehicle service attributes [203]. While these methods offer predictability and are often easier to verify for safety-critical applications, they lack flexibility and personalization and cannot easily adapt to new tasks, user preferences, or changes in the environment without manual retuning.

In contrast, RL-based SA frameworks aim to learn optimal assistance policies directly from data, often through human demonstrations, real-time feedback, or preference learning. This enables the system to dynamically infer user intent, personalize assistance based on individual skill or context, and continuously improve through interaction [204–206]. Although RL-based methods face challenges related to data efficiency, safety, and interpretability, they hold significant promise for creating more adaptive, user-centered SA systems that generalize beyond the scenarios anticipated by classical designs [207]. The comparison is summarized in Table 6.