Open Access

Open Access

ARTICLE

An Update Method of Decision Implication Canonical Basis on Attribute Granulating

1 School of Computer and Information Technology, Shanxi University, Taiyuan, Shanxi Province, 030006, China

2 Key Laboratory of Computational Intelligence and Chinese Information Processing of Ministry of Education, Shanxi University, Taiyuan, Shanxi Province, 030006, China

* Corresponding Author: Yanhui Zhai. Email:

(This article belongs to the Special Issue: Cognitive Granular Computing Methods for Big Data Analysis)

Intelligent Automation & Soft Computing 2023, 37(2), 1833-1851. https://doi.org/10.32604/iasc.2023.039553

Received 04 February 2023; Accepted 11 April 2023; Issue published 21 June 2023

Abstract

Decision implication is a form of decision knowledge representation, which is able to avoid generating attribute implications that occur between condition attributes and between decision attributes. Compared with other forms of decision knowledge representation, decision implication has a stronger knowledge representation capability. Attribute granularization may facilitate the knowledge extraction of different attribute granularity layers and thus is of application significance. Decision implication canonical basis (DICB) is the most compact set of decision implications, which can efficiently represent all knowledge in the decision context. In order to mine all decision information on decision context under attribute granulating, this paper proposes an updated method of DICB. To this end, the paper reduces the update of DICB to the updates of decision premises after deleting an attribute and after adding granulation attributes of some attributes. Based on this, the paper analyzes the changes of decision premises, examines the properties of decision premises, designs an algorithm for incrementally generating DICB, and verifies its effectiveness through experiments. In real life, by using the updated algorithm of DICB, users may obtain all decision knowledge on decision context after attribute granularization.Keywords

Formal Concept Analysis (FCA) is a data analysis and processing tool proposed by Prof. Wille [1]. FCA has been widely studied [2–5] and applied in machine learning [6–8], data mining [9,10], information retrieval [11,12], conflict analysis [13–15] and recommendation systems [16–18].

In FCA, a formal context is a two-dimensional table that reflects relationships between objects and attributes. Attribute implication is a formal representation of knowledge in formal contexts. Since the number of (attribute) implications extracted from formal context is very large, Qu et al. [19] proposed decision implication to reduce the implications that occur between condition attributes or between decision attributes. Zhai et al. [20] studied the semantical and syntactical characteristics of decision implication from a logical perspective. In particular, in the syntactical aspect, Zhai et al. [20] proposed two inference rules, namely AUGMENTATION and COMBINATION, to perform knowledge reasoning among decision implications. Zhai et al. [21] further investigated the influences of the order and number of times of applying inference rules on knowledge reasoning and designed an optimal reasoning strategy.

The number of decision implications in decision contexts, however, is still huge. Thus, Zhai et al. [22] introduced decision premise and decision implication canonical basis (DICB), and proved that DICB is a complete, non-redundant and optimal set of decision implications. In other words, DICB can not only keep all the information in decision contexts (i.e., completeness), but also contain the least number of decision implications among all complete sets of decision implications (optimality). To generate DICB efficiently, Li et al. [23] proposed a true premise-based generation algorithm for DICB, and Zhang et al. [24] proposed an incremental generation algorithm for DICB.

In fact, researchers have investigated other forms of knowledge representation and reasoning besides decision implication, such as concept rules and granular rules [25,26]. Concept rules are decision implications where both premises and consequences are intents [25], and granular rules are decision implications where both premises and consequences are granular intents [25]. Based on these works, Qin et al. [27] investigated attribute (object)-oriented decision rule acquisition; Xie et al. [28] discussed decision rule acquisition in multi-granularity decision context; Zhi et al. [29] proposed a fuzzy rule acquisition method based on granule description in fuzzy formal context; Hu et al. [30] studied rules whose premises and consequences are dual (formal) concepts and formal (dual) concepts. Zhang et al. [31] compared concept rule, granular rule and decision implication, and found that concept rule has stronger knowledge representation capability than granular rule and decision implication has stronger knowledge representation capability than granular rule and concept rule, i.e., decision implication is the strongest form of knowledge representation and reasoning on decision context. Thus, it is recommended in [21] that a knowledge representation and reasoning system based on decision implication can be constructed in applications by using DICB as the knowledge base and CON-COMBINATION and AUGMENTATION as the inference engine [21].

On the other hand, since granular computing [32] is able to solve complex problems with multi-level structures and facilitate knowledge acquisition at different levels and granulations, many granulation methods have been developed in FCA such as relational granulation [33,34], attribute granulation [35–41], and object granulation [42]. This paper mainly focuses on knowledge discovery on attribute granulating.

The existing attribute granulation methods mainly focus on how to update concept lattice after transforming attribute granulation [35], aiming at reducing the complexity of regenerating concept lattice. For example, Belohlavek et al., Wan et al. and Zou et al. proposed some algorithms to update concept lattice on attribute granulating [35–37]; Shao et al. [38] proposed an algorithm to update object(attribute)-oriented multi-granularity concept lattice.

The existing studies did not take into account knowledge discovery on attribute granulating. Because decision implication is superior to concept rule and granular rule, the paper will examine the update of decision implication on attribute granulating; in particular, since DICB can efficiently represent all the information of decision implication [22], it is sufficient to examine the update of DICB. Thus, the aim of the paper is to examine the relationship between DICBs before and after granulation and find a more efficient method to generate the DICB of the granulation context.

This paper is organized as follows. Section 2 reviews the related concepts and properties of decision implication and DICB; Section 3 introduces granulation context and discusses the updates of decision premises (the premises of DICB) on attribute granulating; Section 4 proposes an incremental method for updating DICB; Section 5 concludes the paper.

This section reviews the basic concepts and conclusions of decision implication and DICB; further details can be found in [19,20].

Definition 1 [19]: Decision context

Definition 2 [19]: Let

(1)

(2)

(3)

(4)

Proposition 1 [19]: Let

(1)

(2)

(3)

Definition 3 [20]: Let

Definition 4 [22]: Let

(1)

(2)

The set

is called the decision implication canonical basis (DICB) of

Zhai et al. [22] proved that for any decision context, DICB is complete, non-redundant, and optimal. In other words, DICB can be considered as a complete (completeness) and compact (non-redundancy and optimality) knowledge representation in decision context. Furthermore, Li et al. [23] proved that the properness of decision premise implies minimality, i.e.,

3 DICB on Attribute Granulating

This section introduces granulation context based on attribute granularity refinement [39] and studies the update of decision premises.

Definition 5: Let

It can be seen from Definition 5 that the refinement of

Example 1. A decision context for bank marketing is shown in Table 1, where

When attribute

The granulation context is shown in Table 2, where

According to [22], DICB can retain all the knowledge in decision context. Therefore, DICB after attribute granulation can also retain all the knowledge in granulation context. Furthermore, since decision premise determines DICB [22], the update of DICB can be reduced to the update of decision premises.

To simplify discussion, this paper divides the updates of decision premises into two steps, namely, the update of decision premises after deleting attribute

3.1 The Updates of Decision Premises after Deleting Attribute

After attribute

where

Because decision premise is defined by the operator

First, the following properties are given.

Proposition 2: For decision contexts

(1) If

(2) If

(3)

Proof: (1) If

(2) If

(3) It follows from (1) and (2).

In order to generate the decision premises of

(1) If

(2) If

(3) If

The following conclusion identifies the characteristics of unchanged decision premises.

Proposition 3: For decision contexts

Proof: Sufficiency: By Proposition 2, if

Necessity: Since

The following conclusion identifies the characteristics of invalid decision premises.

Proposition 4: For decision contexts

Proof: If

The following result shows that there does not exist new decision premise in

Proposition 5: For decision context

Proof: Assume that

It can be seen from Proposition 5 that decision premises from

3.2 The Updates of Decision Premise after Adding Granulation Attributes

This section examines the update of decision premise in

Similarly, we classify the changes of decision premises from

(1) If

(2) If

(3) If

In order to avoid confusion, the operation

Proposition 6: For decision contexts

(1) If

(2) If

Proof: (1) By the definitions of

(2) By (1), we have

The following conclusion identifies the characteristics of unchanged and invalid decision premises from

Proposition 7: For decision contexts

Proof: Since

Proposition 7 shows that all the decision premises of

Next, we discuss the new decision premises in

Proposition 8: If

Proof: First, we will prove that there exists

Next, suppose that more than one attribute in

By the definition of redundant decision implication, a decision implication

For any

By the discussion in Sections 3.1 and 3.2, for a decision premise

4 Algorithm for Updating DICB on Attribute Granulating

By Section 3, some decision premises of

Firstly, Definition 6 gives the definition of true premise.

Definition 6 [23]: Let

(1)

(2) For any

The following theorem shows the equivalence of the decision premise and the true premise.

Theorem 1 [23]: Let

According to Theorem 1, we can determine whether

Definition 7 defines the symbols needed to calculate true premise.

Definition 7 [23]: Let

(1)

(2) For

For

Definition 8 gives the concepts of candidate premise and candidate true premise, which are used in the process of generating true premise.

Definition 8 [23]: Let

(1) If

(2) If

Proposition 9 [23]: Let

(1) If

(2) If

By Definition 7 and Proposition 9, true premise can be identified based on

Proposition 10: Let

(1)

(2)

Proof: (1) By the definitions of

If

If

(2) By the definition of

Next, we prove that for any

Combining with

First, we prove that if

Next, we prove that if

Next, we will generate the true premises of

Proposition 11: Let

Proof: For any

At this time, we obtain the candidate true premise

Definition 9 [23]: Let

(1) If

(2) If

(3) If

Proposition 12 [23]: Let

(1)

(2)

(3)

a)

b) There exists an invalid candidate true premise

c) For any

By Definition 9 and Proposition 12, the objects in the set

The above process can be further optimized. For example, Proposition 13 shows that if

Proposition 13: Let

Proof: Let

A true premise-based incremental method can then be proposed for generating DICB under attribute granulation, as shown in Algorithm 1.

In Algorithm 1, we first generate the unchanged decision premises by the decision premises of

Algorithm 2 presents the function

Algorithm 2 generates

Algorithm 3 presents the function

Algorithm 3 first initializes

For the candidate true premise

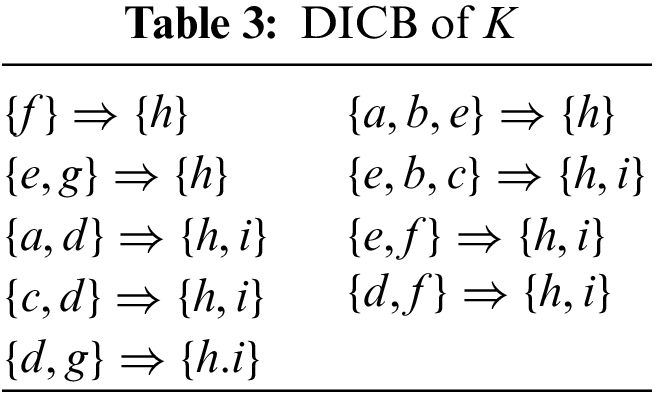

Example 2. (Continuing Example 1) For the decision context

According to Algorithm 1 (Steps 1–6), the unchanged decision premises can be computed as

Next, we calculate the true premise of

Next, we calculate the true premises of

Similarly, we can obtain the true premises of

Table 4 lists the DICB of

In order to verify the effectiveness of the proposed method, we conduct some experiments in real data sets. We selected four UCI data sets, performed preprocessing such as removing missing values and normalizing continuous values, and generated the binary data sets according to the threshold of 0.5. Since the true premise-based algorithm (MBTP) in [23] is the most efficient method for generating DICB, especially when

In the experiments, MBTP is used to directly generate the DICB of granulation context and the proposed method, called the InCremental Method (ICM), is used to generate the DICB of granulation context by updating the DICB of decision context. In order to analyze the influence of the number of granulations on the experimental results, we randomly selected about one fifth of the number of condition attributes and refined them into 2, 3 and 4 fine-grained attributes respectively.

The average consuming times of generate DICB for each refinement are summarized in Table 6.

According to Table 6, on the whole, ICM is more efficient than MBTP. For the data sets whose DICB can be generated within about one minute, such as bank8FM and credit rating, the consuming times may depend more on runtime environments than other data sets. Thus, the comparison results may be unconvincing. For the other data sets, i.e., supermarket and hypothyroid, MBTP will take about twenty minutes to generate DICB, whereas ICM only takes half the consuming time of MBTP, i.e., about ten minutes to achieve the same goal.

It should be noted that the consuming time of ICM only contains the updating time from the DICB of decision context to the DICB of granulation context, excluding the consuming time of generating DICB of decision context. Thus, the comparison results above do not imply that ICM is more efficient than MBTP in generating DICB. However, for one thing, since ICM presupposes the presence of DICB of decision context, the comparison is reasonable; for another, since in reality, there may be a continual requirement of attribute granulation, ICM will become more efficient than MBTP because the time of generating DICB of decision context is consumed once and will be balanced finally by the subsequent updating profit.

6 Conclusion and Further Works

In order to reduce the complexity of regenerating decision implications on attribute granulation, this paper stated that the update of decision implications can be accomplished by the update of DICB. Thus, the paper discussed the properties of DICB on attribute granulation and designed an incremental method for updating DICB. Experiment results show that the update of DICB is more efficient than generation from decision context.

It can also be observed that the proposed method has some limitations. For example, the proposed method only considers the refinement of one condition attribute in the update of DICB. Although the updates of multiple attributes can be completed by applying the proposed method to multiple attributes many times, it may be inefficient.

In addition, this paper only examines the update of DICB after condition attribute granulation and does not take decision attribute granulation into consideration. The results in [20,21] show that condition attributes and decision attributes in decision implications are not symmetrical, as reflected in the semantical and syntactical aspects of decision implication. For example, in the syntactical aspect, by AUGMENTATION, one can augment the premises (condition attributes) and reduce the consequences (decision attributes), implying that one cannot apply the proposed method in the paper to the case of decision attribute granulation. Therefore, it is necessary to study the update of decision implications under decision attribute granulation, not only for generating DICB but also for deeply understanding the system of decision implications.

Finally, the proposed method can also be combined with other applications [43]. For example, through attribute refinement, some low-level features in image, such as texture, can be extracted and refined into high-level features, such as texture direction and texture perimeter. By calculating a compact knowledge base such as DICB, features with more semantical information can be obtained. In other words, the information in image can be fully utilized to segment the images, thus improving the accuracy and robustness of segmentation.

Acknowledgement: This work was supported by the National Natural Science Foundation of China (Nos. 61972238, 62072294).

Funding Statement: This research was funded by the National Natural Science Foundation of China (www.nsfc.gov.cn) under Grant No. 61972238 (received by Y. H. Zhai) and No. 62072294 (received by D. Y. Li).

Conflicts of Interest:: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. R. Wille, “Restructuring lattice theory: An approach based on hierarchies of concepts,” In: I. Rival (Eds.Ordered Sets, Berlin Heidelberg: Springer, pp. 445–470, 1982. [Google Scholar]

2. H. L. Zhi and J. H. Li, “Granule description based on formal concept analysis,” Knowledge-Based Systems, vol. 104, pp. 62–73, 2016. [Google Scholar]

3. Y. H. Zhai, D. Y. Li and K. S. Qu, “Fuzzy decision implications,” Knowledge-Based Systems, vol. 37, pp. 230–236, 2013. [Google Scholar]

4. C. Carpineto and G. Romano, “Rule mining,” in Concept data analysis: Theory and applications, 1st ed., Hoboken, NJ: Wiley, pp. 155–165, 2004. [Google Scholar]

5. Y. H. Zhai, J. J. Qi, D. Y. Li, C. Zhang and W. H. Xu, “The structure theorem of three-way concept lattice,” International Journal of Approximate Reasoning, vol. 146, no. 12, pp. 157–173, 2022. [Google Scholar]

6. X. Y. Chen, J. J. Qi, X. M. Zhu, X. Wang and Z. Wang, “Unlabelled text mining methods based on two extension models of concept lattices,” International Journal of Machine Learning and Cybernetics, vol. 11, no. 2, pp. 475–490, 2020. [Google Scholar]

7. A. Houari, W. Ayadi and S. B. Yahia, “A new FCA-based method for identifying biclusters in gene expression data,” International Journal of Machine Learning and Cybernetics, vol. 9, no. 11, pp. 1879–1893, 2018. [Google Scholar]

8. W. H. Xu and W. T. Li, “Granular computing approach to two-way learning based on formal concept analysis in fuzzy datasets,” IEEE Transactions on Cybernetics, vol. 46, no. 2, pp. 366–379, 2016. [Google Scholar] [PubMed]

9. C. F. Zou, H. F. Deng, J. F. Wan, Z. R. Wang and P. Deng, “Mining and updating association rules based on fuzzy concept lattice,” Future Generation Computer Systems, vol. 82, pp. 698–706, 2018. [Google Scholar]

10. A. Mouakher and S. B. Yahia, “On the efficient stability computation for the selection of interesting formal concepts,” Information Sciences, vol. 472, pp. 15–34, 2019. [Google Scholar]

11. C. Carpineto and G. Romano, “A lattice conceptual clustering system and its application to browsing retrieval,” Machine Learning, vol. 24, no. 2, pp. 95–122, 1996. [Google Scholar]

12. W. Y. Bao, C. C. Shen and Y. Li, “Collaborative information retrieval framework based on FCA,” in 2015 12th Web Information System and Application Conf. (WISA), Jinan, China, pp. 175–178, 2015. [Google Scholar]

13. H. L. Zhi, J. J. Qi, T. Qian and R. S. Ren, “Conflict analysis under one-vote veto based on approximate three-way concept lattice,” Information Sciences, vol. 516, no. 2, pp. 316–330, 2020. [Google Scholar]

14. H. L. Zhi, J. H. Li and Y. N. Li, “Multilevel conflict analysis based on fuzzy formal contexts,” IEEE Transactions on Fuzzy Systems, vol. 30, no. 12, pp. 5128–5142, 2022. [Google Scholar]

15. G. M. Lang and Y. Y. Yao, “Formal concept analysis perspectives on three-way conflict analysis,” International Journal of Approximate Reasoning, vol. 152, no. 407, pp. 160–182, 2023. [Google Scholar]

16. C. F. Zou, D. Q. Zhang, J. F. Wan, M. M. Hassan and J. Lloret, “Using concept lattice for personalized recommendation system design,” IEEE Systems Journal, vol. 11, no. 1, pp. 305–314, 2017. [Google Scholar]

17. H. Mezni and T. Abdeljaoued, “A cloud services recommendation system based on fuzzy formal concept analysis,” Data Knowledge Engineering, vol. 116, no. 4, pp. 100–123, 2018. [Google Scholar]

18. W. Q. Huang, F. Hao, G. Y. Pang and Y. F. Sun, “Complementary context-enhanced concept lattice aware personalized recommendation,” in 2021 IEEE 20th Int. Conf. on Trust, Security and Privacy in Computing and Communications, Shenyang, China, pp. 919–926, 2021. [Google Scholar]

19. K. S. Qu, Y. H. Zhai, J. Y. Liang and M. Chen, “Study of decision implications based on formal concept analysis,” International Journal of General Systems, vol. 36, no. 2, pp. 147–156, 2007. [Google Scholar]

20. Y. H. Zhai, D. Y. Li and K. S. Qu, “Decision implications: A logical point of view,” International Journal of Machine Learning and Cybernetics, vol. 5, no. 4, pp. 509–516, 2014. [Google Scholar]

21. Y. H. Zhai, N. Jia, S. X. Zhang, D. Y. Li and W. H. Xu, “Study on deduction process and inference methods of decision implications,” International Journal of Machine Learning and Cybernetics, vol. 13, no. 7, pp. 1959–1979, 2022. [Google Scholar]

22. Y. H. Zhai, D. Y. Li and K. S. Qu, “Decision implication canonical basis: A logical perspective,” Journal of Computer System Sciences, vol. 81, no. 1, pp. 208–218, 2015. [Google Scholar]

23. D. Y. Li, S. X. Zhang and Y. H. Zhai, “Method for generating decision implication canonical basis based on true premises,” International Journal of Machine Learning and Cybernetics, vol. 8, no. 1, pp. 57–67, 2017. [Google Scholar]

24. S. X. Zhang, D. Y. Li and Y. H. Zhai, “Incremental method of generating decision implication canonical basis,” Soft Computing, vol. 26, no. 3, pp. 1067–1083, 2022. [Google Scholar]

25. J. H. Li, C. L. Mei, C. A. Kumar and X. Zhang, “On rule acquisition in decision formal contexts,” International Journal of Machine Learning and Cybernetics, vol. 4, no. 6, pp. 721–731, 2013. [Google Scholar]

26. W. Z. Wu, Y. Leung and J. S. Mi, “Granular computing and knowledge reduction in formal contexts,” IEEE Transactions on Knowledge and Data Engineering, vol. 21, no. 10, pp. 1461–1474, 2009. [Google Scholar]

27. K. Y. Qin, B. Li and Z. Pei, “Attribute reduction and rule acquisition of formal decision context based on object (property) oriented concept lattices,” International Journal of Machine Learning and Cybernetics, vol. 10, no. 10, pp. 2837–2850, 2019. [Google Scholar]

28. J. P. Xie, M. H. Yang, J. H. Li and Z. Zheng, “Rule acquisition and optimal scale selection in multi-scale formal decision contexts and their applications to smart city,” Future Generation Computer Systems, vol. 83, no. 3, pp. 564–581, 2018. [Google Scholar]

29. H. L. Zhi and Y. F. Ma, “Fuzzy association rule acquisition based on granule description,” Chinese Journal of Kunming University of Science and Technology (Natural Science), vol. 47, no. 5, pp. 61–6877, 2022. [Google Scholar]

30. Q. Hu and K. Y. Qin, “Attribute reduction and rule acquisition of formal decision context based on dual concept lattice,” in 19th Int. Conf. on Web-Based Learning and 5th Int. Symp. on Emerging Technologies for Education, Ningbo, China, pp. 117–129, 2021. [Google Scholar]

31. S. X. Zhang, D. Y. Li, Y. H. Zhai and X. P. Kang, “A comparative study of decision implication, concept rule and granular rule,” Information Sciences, vol. 508, no. 1, pp. 33–49, 2020. [Google Scholar]

32. L. A. Zadeh, “Toward a theory of fuzzy information granulation and its centrality in human reasoning and fuzzy logic,” Fuzzy Sets and Systems, vol. 90, no. 2, pp. 111–127, 1997. [Google Scholar]

33. X. P. Kang and D. Q. Miao, “A study on information granularity in formal concept analysis based on concept-bases,” Knowledge-Based Systems, vol. 105, pp. 147–159, 2016. [Google Scholar]

34. X. P. Kang, D. Y. Li and S. G. Wang, “Research on domain ontology in different granulations based on concept lattice,” Knowledge-Based Systems, vol. 27, no. 3, pp. 152–161, 2012. [Google Scholar]

35. R. Belohlavek, B. D. Baets and J. Konecny, “Granularity of attributes in formal concept analysis,” Information Sciences, vol. 260, no. 4, pp. 149–170, 2014. [Google Scholar]

36. Y. Wan and L. G. Zou, “An efficient algorithm for decreasing the granularity levels of attributes in formal concept analysis,” IEEE Access, vol. 7, pp. 11029–11040, 2019. [Google Scholar]

37. L. G. Zou, Z. P. Zhang and J. Long, “An efficient algorithm for increasing the granularity levels of attributes in formal concept analysis,” Expert Systems with Applications, vol. 46, no. 3, pp. 224–235, 2016. [Google Scholar]

38. M. W. Shao, M. M. Lv, K. Li and Z. Z. Wang, “The construction of attribute (object)-oriented multi-granularity concept lattices,” International Journal of Machine Learning and Cybernetics, vol. 11, no. 5, pp. 1017–1032, 2020. [Google Scholar]

39. L. Wei and Q. Wan, “Granular transformation and irreducible element judgment theory based on pictorial diagrams,” IEEE Transactions on Cybernetics, vol. 46, no. 2, pp. 380–387, 2016. [Google Scholar] [PubMed]

40. J. J. Qi, L. Wei and Q. Wan, “Multi-level granularity in formal concept analysis,” Granular Computing, vol. 4, no. 3, pp. 351–362, 2019. [Google Scholar]

41. Q. Hu and K. Y. Qin, “The construction of multi-granularity concept lattices,” Journal of Intelligent & Fuzzy Systems, vol. 39, no. 3, pp. 2783–2790, 2020. [Google Scholar]

42. L. K. Guo, F. P. Huang, Q. G. Li and G. Q. Zhang, “Power contexts and their concept lattices,” Discrete Mathematics, vol. 311, no. 18–19, pp. 2049–2063, 2011. [Google Scholar]

43. K. Chen, H. Che, M. F. Leung and Y. Wang, “An improved superpixel-based fuzzy C-Means method for complex picture segmentation tasks,” in 2022 14th Int. Conf. on Advanced Computational Intelligence (ICACI), Wuhan, China, pp. 231–238, 2022. [Google Scholar]

Cite This Article

Copyright © 2023 The Author(s). Published by Tech Science Press.

Copyright © 2023 The Author(s). Published by Tech Science Press.This work is licensed under a Creative Commons Attribution 4.0 International License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Submit a Paper

Submit a Paper Propose a Special lssue

Propose a Special lssue View Full Text

View Full Text Download PDF

Download PDF Downloads

Downloads

Citation Tools

Citation Tools