Open Access

ARTICLE

Spatial Multi-Presence System to Increase Security Awareness for Remote Collaboration in an Extended Reality Environment

1 Department of Game Software, Hoseo University, Asan-si, Korea

2 Department of Artificial Intelligence and Data Science, Korea Military Academy, Seoul, Korea

* Corresponding Author: Hyun Kwon. Email:

Intelligent Automation & Soft Computing 2023, 37(1), 369-384. https://doi.org/10.32604/iasc.2023.036052

Received 15 September 2022; Accepted 13 January 2023; Issue published 29 April 2023

Abstract

Enhancing participants' sense of presence is an important issue for security awareness in remote collaboration in extended reality. However, conventional methods make it difficult for users to stay aware of remote situations and to search and control remote workspaces. This study proposes a spatial multi-presence system that presents multiple spaces simultaneously and lets users explore them rapidly while performing collaborative work in an extended reality environment. The proposed system arranges and manipulates remote and personal spaces on an annular screen that is invisible to the user. The user can freely arrange remote participants and their workspaces on the annular screen. Because users can view the various spaces arranged on the annular screen at the same time, they can perform collaborative work while feeling the presence of multiple spaces, and they can also become fully immersed in a specific space. In addition, the personal spaces where users work can also be arranged on the annular screen. The performance evaluations show that users participating in remote collaborative work can visualize the spaces of multiple users simultaneously and feel their presence, thereby increasing their understanding of those spaces. Moreover, the proposed system reduces the time needed to perform tasks and to become aware of emergencies in remote workspaces.

A computer-supported cooperative work environment provides a network in which multiple users can perform collaborative work [1]. In such an environment, participants can change data values for the collaborative work while talking to each other and develop results while monitoring the changed properties [2]. Extended reality (XR) is a technology that encompasses virtual reality (VR), augmented reality (AR), and mixed reality (MR), in which VR and AR are combined, and it can provide environments suited to the situations users face [3,4]. Like VR environments, XR environments give users a sense of immersion, and like AR environments, they directly support work in the real world. Because both rely on computers, current remote work environments closely resemble collaborative work environments [5–7]. In addition, 5G networks provide very high speeds that allow ultra-high-definition XR content to be downloaded within a second, together with ultra-low latency that guarantees response times within 1 ms of a user's input. Therefore, previously unimaginable XR environments that transcend past temporal and spatial constraints can now be constructed [8–11].

In an XR environment, users can run applications in a variety of fields, such as games, computer-aided design (CAD), military simulation, training, and 3D video calls, jointly with other remote users. With the recent development of such applications, the boundary between reality and VR has blurred; for example, multiple users can gather in VR, as in a metaverse, to attend a class, play a game, or participate in a concert [12–15]. Enhancing participants' sense of presence is an important issue for security awareness in remote collaboration in extended reality [16–19]. Previous research has mainly studied visualization methods that make users aware of the real environment while they perform tasks in the XR environment. However, studies of presence in remote collaboration in the extended reality domain are lacking.

Moreover, an analysis of recent collaboration in existing non-face-to-face environments using video calls and collaborative VR shows that the collaboration process has two aspects. First, users share information about the real spaces where they are located with the other participants in the collaborative work [20,21]. Second, users follow a relatively complicated process in which they participate in the collaborative work environment while checking the status of their own work and reflecting it in the collaborative work environment [22,23]. However, when collaboration is provided in existing XR environments, users' personal spaces cannot be sufficiently identified because users enter a fixed virtual environment to communicate or work. In other cases, an environment that merges the users' respective spaces is created to implement a collaborative work environment. Even then, however, users cannot quickly become aware of, and share information about, both the collaborative work and the work in their own environments.

To address this limitation, this study proposes a spatial multi-presence system that enables users to perform collaborative work while simultaneously obtaining information about the remote spaces of the other participants and accessing their own workspaces. The proposed system creates a transparent annular cylinder that surrounds users participating in XR and provides interactions for creating, deleting, moving, immersing in, and exploring the spaces of the users participating in the collaborative work. Using the proposed system, users can dynamically construct and adjust participant spaces in the collaborative work environment as well as their personal workspaces. In a collaborative work situation, a user wearing a head-mounted display (HMD) can work while conversing with a user located in another space simply by turning his/her head. Moreover, the spaces surrounding the user can be manipulated with hand gestures.

The main contributions of this study are as follows:

• The proposed system can enhance participants' sense of presence by making them aware of multiple remote situations of collaborative work in the XR environment.

• The proposed system provides a novel space management method that enables quick search and control of remote collaborative and personal workspaces.

In XR collaborative work environments, users have attempted to use more than one environment at a time [1,2]. According to a study by Thalman et al., for users to improve their interactive performance with content in a specific real environment, they must be given a high sense of presence, one that makes the space feel similar to reality, so that they can be immersed in the environment [14]. Security awareness can be low when the images or sounds of the remote space are not transmitted properly during remote cooperative work in XR environments [16,17]. McGill et al. proposed a method that attaches a camera to the HMD so that users can view the real space while working in VR [15]. However, this method does not support collaboration. Lu et al. proposed a mixed-reality system that recognizes real space and visualizes 3D furniture to provide a high level of presence [18]. Although they demonstrated the possibility of increasing security awareness in mixed reality, remote cooperative workspaces were not considered. Personalization and adaptation can increase the sense of presence in virtual reality applications. Mourtzis et al. proposed a personalized perception method that adapts educational content to students' profiles for factory education in an extended reality environment [19]. Although they provided collaborative factory education tailored to each student's situation, remote users' security awareness was not considered.

Spatial is an XR-based work platform that enables users wearing VR or AR HMDs to jointly create and edit 3D objects while holding a remote meeting, accessing one another from the VR or AR environment to which they belong [7]. However, Spatial users can enter only a designated VR environment, and the spaces of other users cannot easily be viewed remotely. Therefore, it is difficult for users to stay aware of situations that may occur in the other party's remote environment.

Saraiji et al. used an AR environment as the base environment, configured the environments of remote collaborators as multiple layers, and made each layer semi-transparent, producing a system in which the environments are superimposed [20]. While working in their local environments, users could feel that they participated in multiple spaces simultaneously by viewing the remote users' environments semi-transparently. However, as the number of users increases, the number of superimposed remote areas also increases, reducing awareness and presence for the environment as a whole. Consequently, managing individual remote spaces and searching them in detail become challenging.

Alsereidi et al. proposed a method that synchronizes a remote camera with the user's head movement for remote collaboration in the XR environment [21]. Their approach enhanced the sense of presence in 1-on-1 remote collaboration because the user can change the remote camera's view by moving his/her head. Although synchronizing a remote camera with the user's head movement is helpful in remote collaboration, improvements are needed because searching for and controlling multiple remote workspaces is difficult when more than two users participate.

Lee et al. proposed a collaborative game-level design system that allows users to perform various collaborative tasks in real time [22]. Although they showed the possibility of complex collaboration in a remote collaborative environment, conflicts can occur because participants lack awareness of one another.

Maimore et al. proposed a method in which two users perform collaborative work by merging the appearance and space of a remote user into a single space [23]. With this method, the complexity of space merging increases significantly as the number of users participating in the collaborative work increases. In addition, interactions beyond working face to face, such as quickly switching and sharing when users work in their personal spaces, are difficult to support. The method also has difficulty conveying real-time remote situations because it takes time to reconstruct the 3D environments where remote users are located.

We discuss the design considerations of the proposed spatial multi-presence system in Section 3.1. Section 3.2 describes the details of the proposed system and algorithms. We apply the proposed method to practical scenarios in a case study in Section 3.3.

3.1 Concept of Spatial Multi-Presence System

The system proposed in this study is a simultaneous multi-presence system that enables users to feel the spaces of other participants in the XR environment simultaneously while rapidly searching those spaces and sharing information about their individual workspaces. When a user joins collaborative work, the spaces of the other participating users are arranged around the user, and the user can use his/her hands to place the desired spaces at the desired positions. By looking at the remote participants' spaces surrounding them, users sense the presence of both the participants and the spaces to which they belong at the same time.

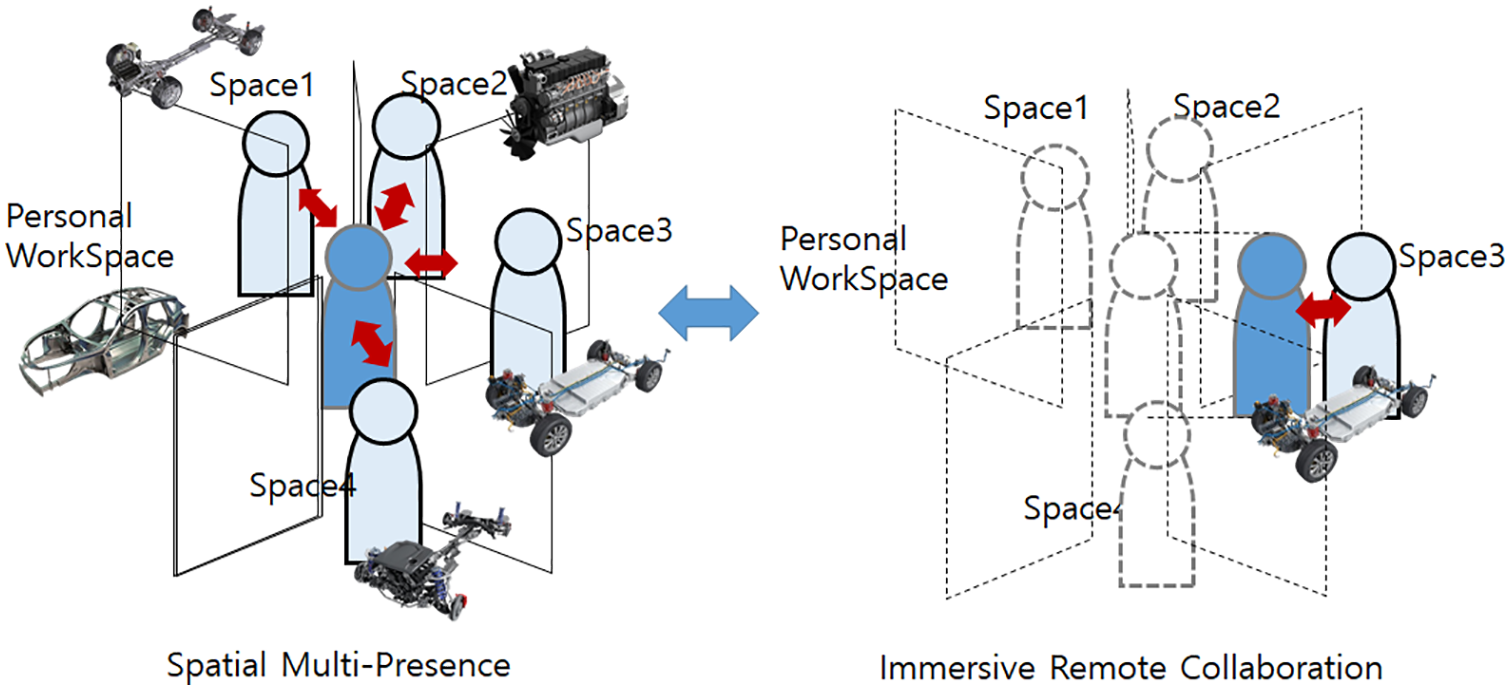

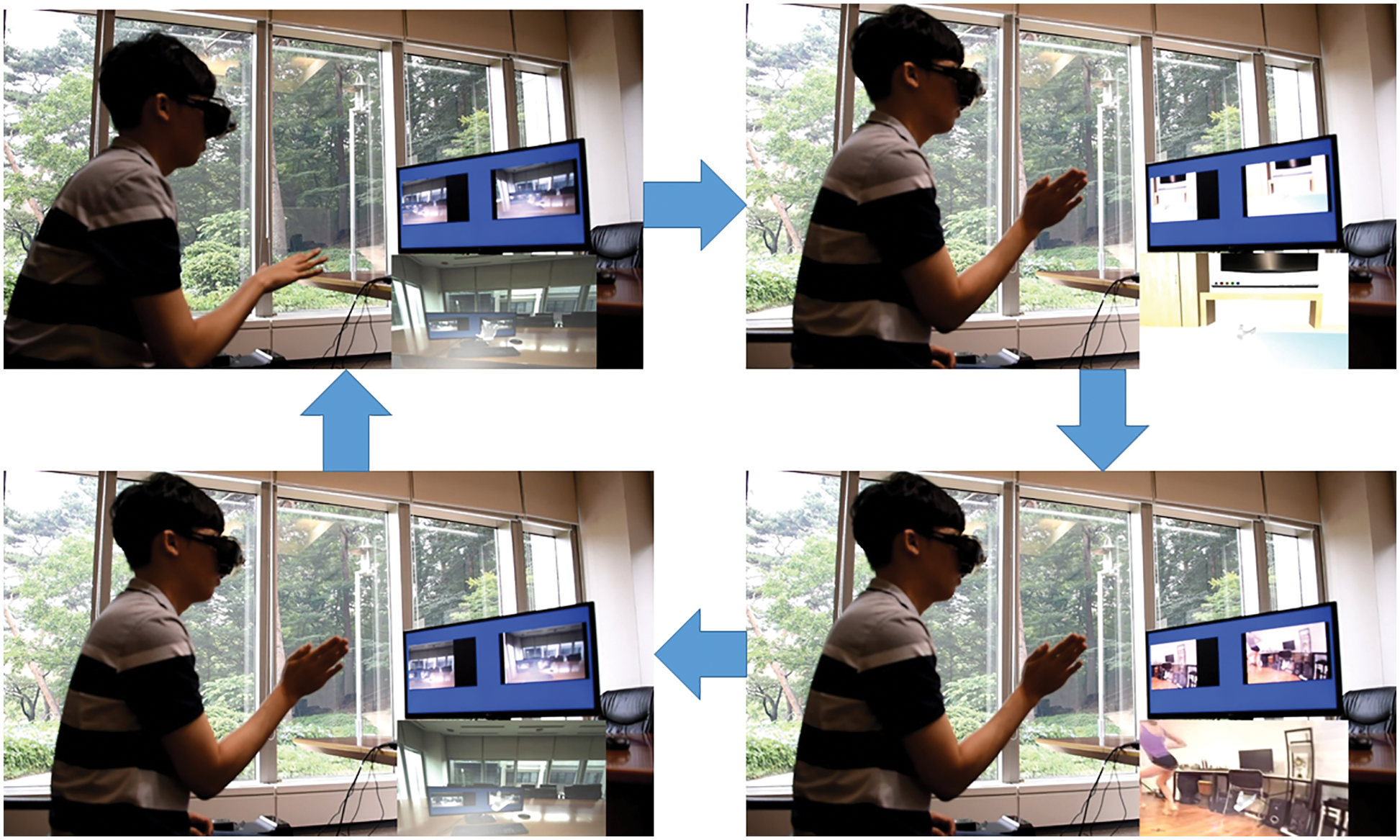

While wearing an HMD, users can perform collaborative work while feeling the simultaneous presence of multiple spaces as they look at the remote spaces surrounding them. Users can configure the number and sizes of the spaces by adjusting their arrangement order and sizes with hand gestures. As the collaborative work progresses, users can select a specific space and immerse themselves in it to work, as shown in Fig. 1, and can subsequently return to their original spaces. During immersion, the user can fully enter a participant's remote space (Space 3 in Fig. 1) and perform special tasks while viewing the work results. When users return to the multi-presence state after completing the immersive work, they can continue the collaborative work while watching the other participants. Users can also share the results in their workspace during collaborative work by sending personal work information to the other remote users.

Figure 1: Concept of the proposed scheme: dynamic changing states between spatial multi-presence and immersive remote collaboration
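The ring arrangement described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the user stands at the origin, that each remote space occupies an angular slice of a ring of fixed radius whose width the user sets by gesture, and that the slices are laid side by side and centered on the user's forward direction. `RemoteSpace`, `layout_ring`, and the 2 m radius are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RemoteSpace:
    name: str
    width_deg: float  # angular width the user has assigned to this space (hypothetical)


def normalize_yaw(deg):
    """Map any angle to the range [-180, 180)."""
    return ((deg + 180.0) % 360.0) - 180.0


def layout_ring(spaces, radius=2.0):
    """Place spaces side by side on a ring centered on the user at the origin.

    Yaw 0 is straight ahead (+z); positive yaw is to the user's right (+x).
    The whole arrangement is centered on the forward direction.
    Returns a list of (name, center_yaw_deg, x, z).
    """
    total = sum(s.width_deg for s in spaces)
    if total > 360.0:
        raise ValueError("the spaces do not fit on the ring")
    cursor = -total / 2.0  # left edge of the first slice
    placements = []
    for s in spaces:
        center = cursor + s.width_deg / 2.0
        yaw = math.radians(center)
        placements.append((s.name, normalize_yaw(center),
                           radius * math.sin(yaw), radius * math.cos(yaw)))
        cursor += s.width_deg
    return placements


ring = layout_ring([RemoteSpace("Space 1", 90), RemoteSpace("Space 2", 90),
                    RemoteSpace("Space 3", 90)])
for name, yaw, x, z in ring:
    print(f"{name}: yaw {yaw:+.0f} deg, x={x:.2f}, z={z:.2f}")
```

Resizing a space by gesture would then amount to changing its `width_deg` and recomputing the layout.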

3.2 Overall System Architecture

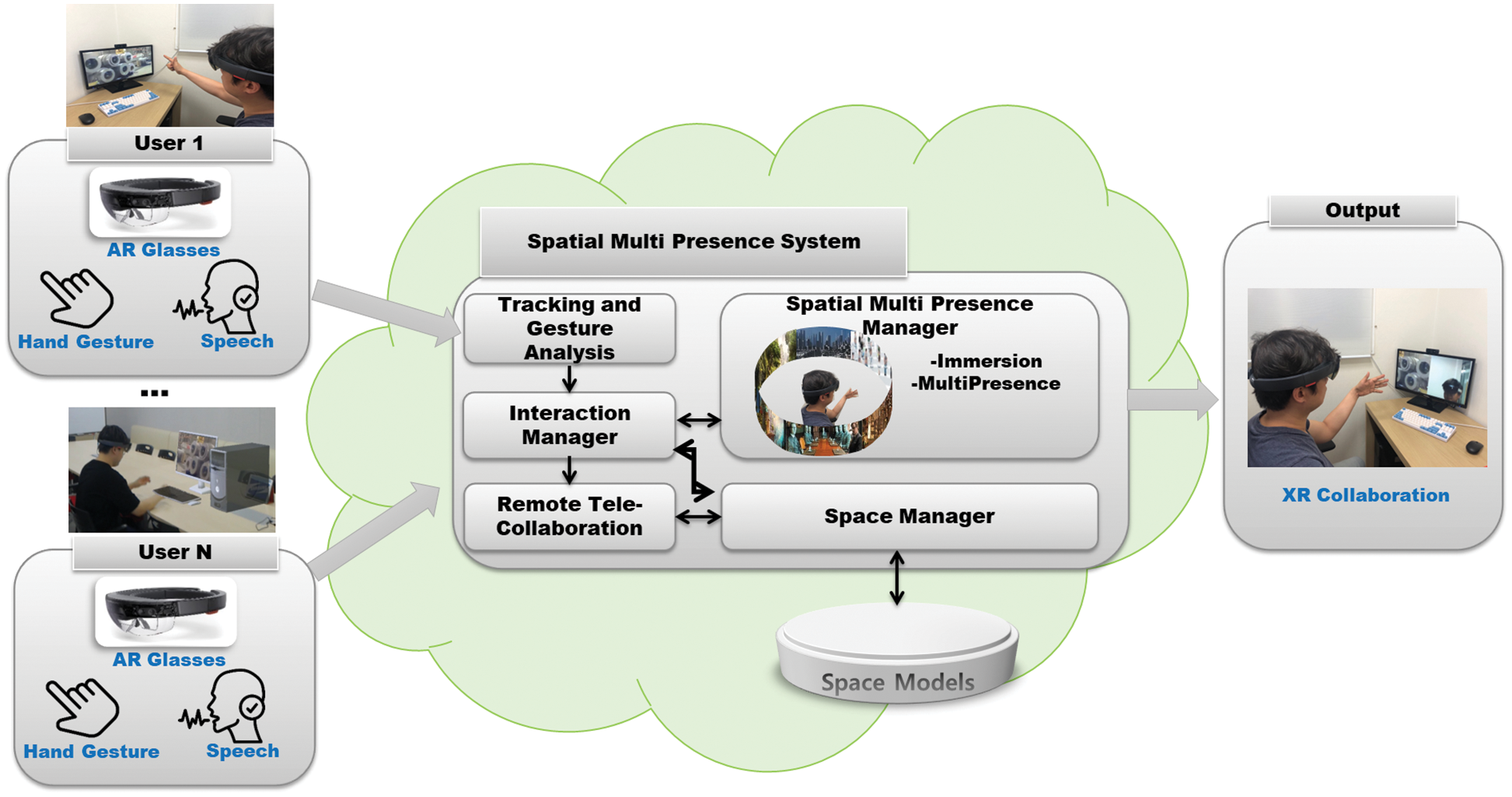

Fig. 2 shows the structure of the proposed simultaneous multi-presence system, in which a user wearing an HMD can perform personal work while simultaneously viewing users in multiple spaces and information about their remote spaces, all while collaborating in an XR environment. When the user joins collaborative work, annular spaces are arranged around the user. Users wearing HMDs can view the spaces of the other collaborators surrounding them and hold meetings while viewing the information in those spaces. Here, remote telecollaboration modules transmit the remote users' spaces captured by web cameras, providing a real-time telepresence environment. A multi-presence space manager places each remote environment in the annular space surrounding the user so that the user can feel the presence of the participants while simultaneously viewing their remote spaces. An interaction manager then lets users adjust the arrangement and sizes of the spaces through hand gestures, immerse themselves in a specific space, or return to the multi-presence state.

Figure 2: Proposed system scheme

Moreover, the personal work performed by users is managed in a personal workspace separately from synchronization in collaborative work. Additionally, users can share relevant personal work in the collaborative work environment.

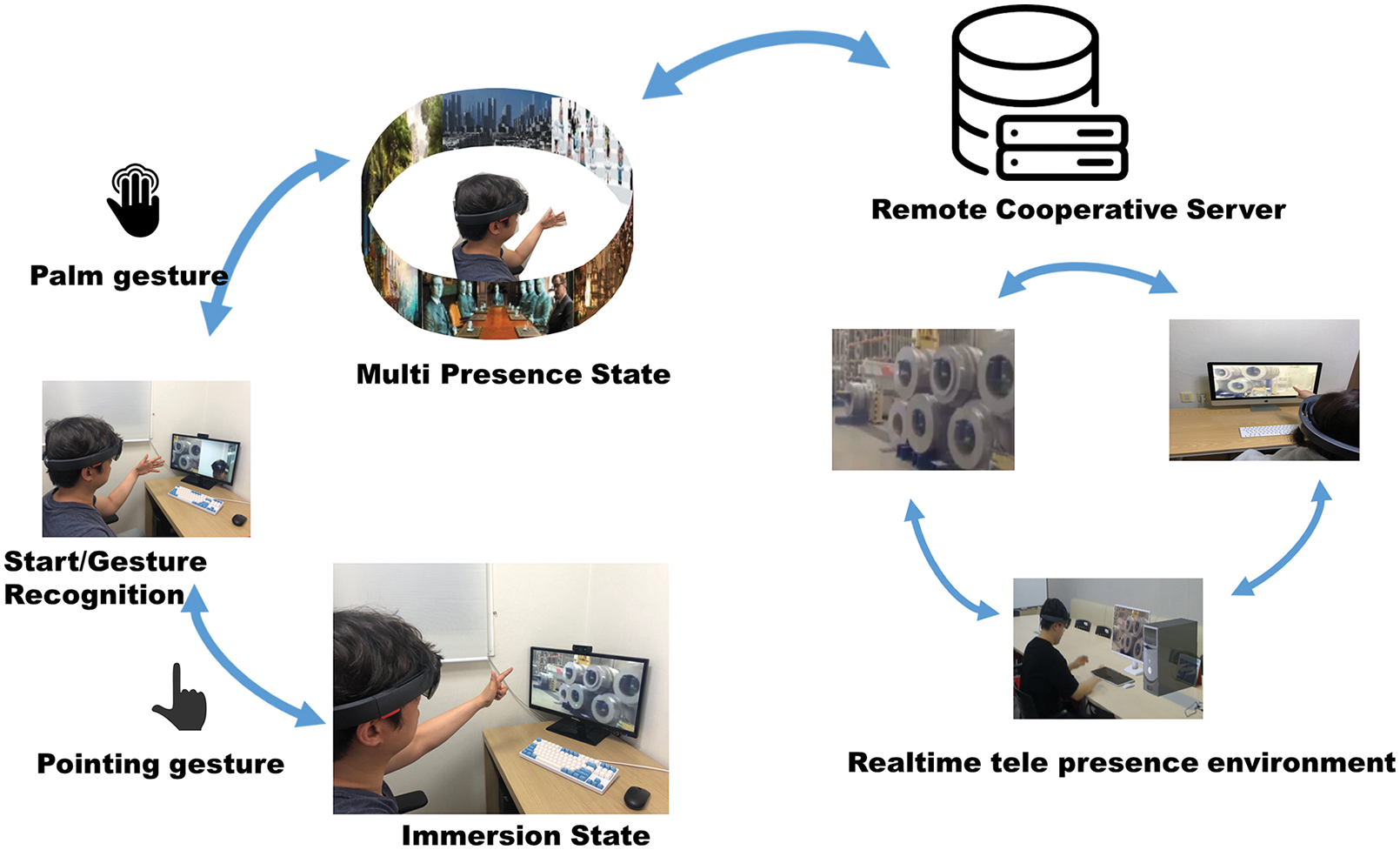

Fig. 3 shows the overall workflow of the proposed system. A user participating in XR cooperative work switches between the multi-presence and immersion states through hand gestures recognized by the system. In the example in the figure, the user works on remote factory content with two other remote participants. When the user enters the multi-presence state with a palm gesture, the system generates a multi-presence view with three remote spaces surrounding the user. Because the remote spaces surround the user in the XR environment, the user perceives the presence of all of them at once. The user can collaborate while rotating the circular space in the desired direction with a hand gesture and while recognizing situations occurring in the remote spaces. To examine the situation of a specific remote space closely, users can enter that space with a pointing gesture. They can then immerse themselves in the remote space and perform collaborative work while viewing it in 360°.

Figure 3: State of the proposed system
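A minimal sketch of the gesture-driven mode switching in Fig. 3 follows, assuming three modes (personal work, multi-presence, and immersion in one remote space). The gesture names are hypothetical string labels standing in for the output of a hand-gesture recognizer. They are configurable because the text describes entering a space with a pointing gesture here and with a clenching gesture in the description of Fig. 5. `Mode` and `InteractionManager` are illustrative names, not the authors' code.

```python
from enum import Enum, auto


class Mode(Enum):
    PERSONAL = auto()        # working in the user's own workspace P
    MULTI_PRESENCE = auto()  # remote spaces arranged on the ring around the user
    IMMERSED = auto()        # user has entered one remote space (360° view)


class InteractionManager:
    """Hypothetical mode switcher driven by recognized gesture labels."""

    def __init__(self, space_names, enter_gesture="point", return_gesture="palm"):
        self.spaces = set(space_names)
        self.enter_gesture = enter_gesture    # e.g. "point" or "clench"
        self.return_gesture = return_gesture  # open palm shows the ring
        self.mode = Mode.PERSONAL
        self.immersed_in = None

    def on_gesture(self, gesture, target=None):
        if gesture == self.return_gesture:
            # Open palm: show (or return to) the multi-presence ring.
            self.mode = Mode.MULTI_PRESENCE
            self.immersed_in = None
        elif gesture == self.enter_gesture and self.mode is Mode.MULTI_PRESENCE:
            # Entering is only possible from the ring, toward a known space.
            if target not in self.spaces:
                raise ValueError(f"unknown space: {target!r}")
            self.mode = Mode.IMMERSED
            self.immersed_in = target
        # Any other gesture leaves the state unchanged in this sketch.
        return self.mode


im = InteractionManager(["Space 1", "Space 2", "Space 3"])
print(im.on_gesture("palm"))              # Mode.MULTI_PRESENCE
print(im.on_gesture("point", "Space 3"))  # Mode.IMMERSED
print(im.on_gesture("palm"))              # Mode.MULTI_PRESENCE
```

Restricting immersion to the multi-presence state mirrors the workflow in Fig. 3, where the user first opens the ring and then selects a space from it.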

For collaborative work participants U, a set C of collaborative workspaces covering every user up to the nth participant, Un, is created. If a user performs a task in P, the user's personal workspace, the multi-presence space M from that user's point of view can be defined using Eq. (1). The multi-presence space merges only the spaces of the other participants and presents the resulting space to the user.

Thereafter, if users share the results of their workspaces in the collaborative workspace, their work is incorporated into the collaborative workspace, as shown in Eq. (2), and all users participating in the collaborative work can see the information.
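Eqs. (1) and (2) are not reproduced in this text. The sketch below encodes one plausible reading of the surrounding description, which is an assumption: if C is the set of all participants' spaces and S_i is user i's own space, Eq. (1) would give M_i = C \ {S_i}, and sharing a personal result P_i would give C' = C ∪ {P_i}. The function names and string labels are hypothetical.

```python
def multi_presence_space(collab_spaces, own_space):
    """Eq. (1) as read here: M_i = C minus {S_i}, i.e. only the other users' spaces."""
    if own_space not in collab_spaces:
        raise KeyError(own_space)
    return frozenset(collab_spaces) - {own_space}


def share_result(collab_workspace, personal_result):
    """Eq. (2) as read here: C' = C plus the shared personal result P_i."""
    return frozenset(collab_workspace) | {personal_result}


C = frozenset({"S1", "S2", "S3"})  # spaces of participants U1, U2, U3
print(sorted(multi_presence_space(C, "S1")))  # ['S2', 'S3']: what U1 sees around them
print(sorted(share_result(C, "P1:layout-draft")))
```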

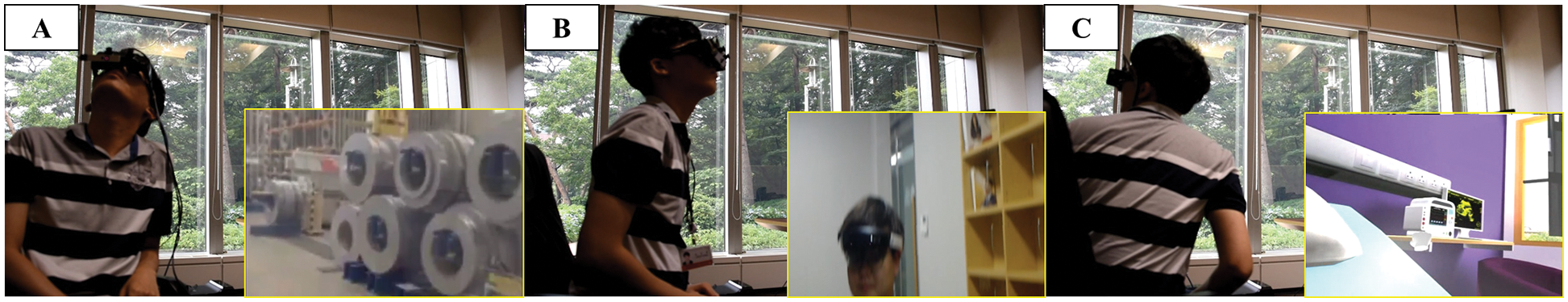

Fig. 4 shows three users performing collaborative work with the proposed system. Because the system provides the user with the simultaneous presence of two different remote spaces, as shown in Figs. 4A and 4B, the user can perceive the structure of those spaces in three dimensions. Based on this information, the user can perform simulation work suited to those spaces and then provide the results to the other users when they resume the collaborative work, as shown in Fig. 4C.

Figure 4: Multi-presence situation

If users want to change the arrangement of the surrounding spaces during collaborative work, the proposed system recognizes the user's hand gestures and rotates the spaces left or right around the user, as shown in Fig. 5. Moreover, users enter a specific remote space and immerse themselves in it with a clenching gesture, and they return to the multi-presence state with an opened-palm gesture [24].

Figure 5: Changing environment using hand gestures
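Rotating the ring and determining which space the user is facing could be sketched as below. For illustration only, it assumes n equal-width slices starting at a ring offset, a rotation of exactly one slot per left or right gesture, and head yaw in degrees (0 = straight ahead, positive = right); none of these values come from the paper, and the function names are hypothetical.

```python
def rotate_ring(offset_deg, direction, n_spaces):
    """Turn the ring by one slot (360/n degrees) to the left or right."""
    if direction not in ("left", "right"):
        raise ValueError(f"unknown direction: {direction!r}")
    step = 360.0 / n_spaces
    delta = step if direction == "right" else -step
    return (offset_deg + delta) % 360.0


def space_in_view(head_yaw_deg, offset_deg, n_spaces):
    """Index of the space whose slice contains the user's gaze direction.

    Space i occupies yaw angles [offset + i*w, offset + (i+1)*w) with w = 360/n.
    """
    width = 360.0 / n_spaces
    relative = (head_yaw_deg - offset_deg) % 360.0
    return int(relative // width)


offset = -60.0                          # space 0 is centered straight ahead
print(space_in_view(0.0, offset, 3))    # 0
offset = rotate_ring(offset, "right", 3)
print(space_in_view(0.0, offset, 3))    # 2: the space from behind-left is now ahead
```

Because `space_in_view` takes head yaw as input, the same function covers the other way of changing the view described in the text: turning one's head instead of rotating the ring.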

A collaborative work environment is constructed using the proposed system. The system synchronizes users by recognizing their locations and motions, so that they can work collaboratively in MR and XR environments, and it provides a shared office environment for collaborative work.

3.3 Applications and Case Study

We proposed a collaborative work environment for smart factories and offices to apply the proposed spatial multi-presence system. A smart factory uses cutting-edge technology to manage work processes and respond to security issues in collaboration with AI-based services or office staff. To do so, a smart factory collects and uses data from IoT sensors [19]. To identify the issues and forms of collaboration that arise in a smart factory, we surveyed professional engineers and officers of XR-related smart factory companies, such as DataReality (http://datareality.kr/) and Futuretechwin (http://www.ftwin.co.kr/). Based on the survey results, we designed a scenario as a case study.

In the XR-based remote collaboration scenario for the smart factory, there is a factory site equipped with remote sensors, such as cameras, and a site manager. The site manager generally checks and controls the factory's machines and must collaborate in real time with other participants in remote office spaces when an issue occurs. During the collaboration, participants can resolve processing issues and emergencies at the factory through dialogue and by sharing their fields of view. Fig. 6 shows an example of remote collaboration, in which participants in three physically separated spaces joined over a network, each wearing an MS HoloLens 1 and using the proposed system.

Figure 6: Case study of the XR-based remote collaboration for smart factory

To verify the utility of the proposed simultaneous multi-presence system, a performance evaluation was conducted to determine whether users participating in remote collaborative work simultaneously felt the presence of multiple spaces. MS HoloLens was used so that users could view their own spatial information while looking at remote users. The proposed system was developed using Unity 2019.4 (LTS). The experiment was conducted on a local area network supporting 100 Mbps wired and wireless connections. The server computers, which received the remote image streams, converted them into multi-presence, and sent them to the MS HoloLens devices during the experiment, were each equipped with an i7-8700 CPU (3.2 GHz, 6 cores) and an Nvidia GTX 1060 graphics card. Logitech Pro HD 1080p Stream webcams were used to show each remote environment. Three separate spaces were configured for the experiment, and each space was set up so that the participants could see the computer, webcam, and HoloLens.

To evaluate the performance of the proposed system, 30 participants aged 20 to 40 years who had experience with video conferencing and HMDs were selected. They received 10 min of training on the proposed system. For the experiment, the participants were divided into 10 teams of three. The members of each team participated from three physically separated spaces over a network, each wearing an MS HoloLens 1 and using the proposed system. Each team performed a 30-min collaborative task to understand the factory and draft improvement plans while viewing 360° video content of the factory facility. The participants' views are shown exactly as they appeared on the MS HoloLens displays they wore.

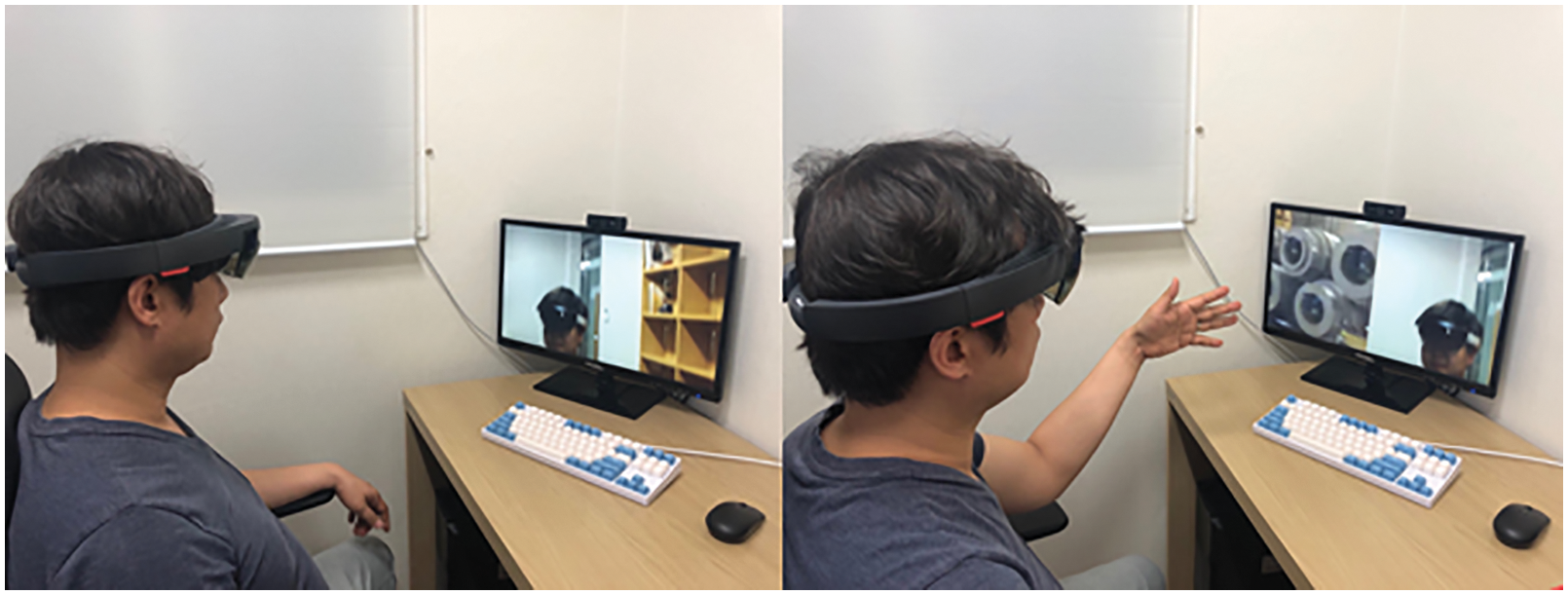

In the experiment, the users discussed improvement plans while viewing 360° video content about the factory in their workspaces. Fig. 7A shows a participant talking with another collaborator while watching him/her, and Fig. 7B shows a user working while viewing two spaces simultaneously in the multi-presence state.

Figure 7: (A) Conversation with remote participant (B) Multi-presence state with hand gesture

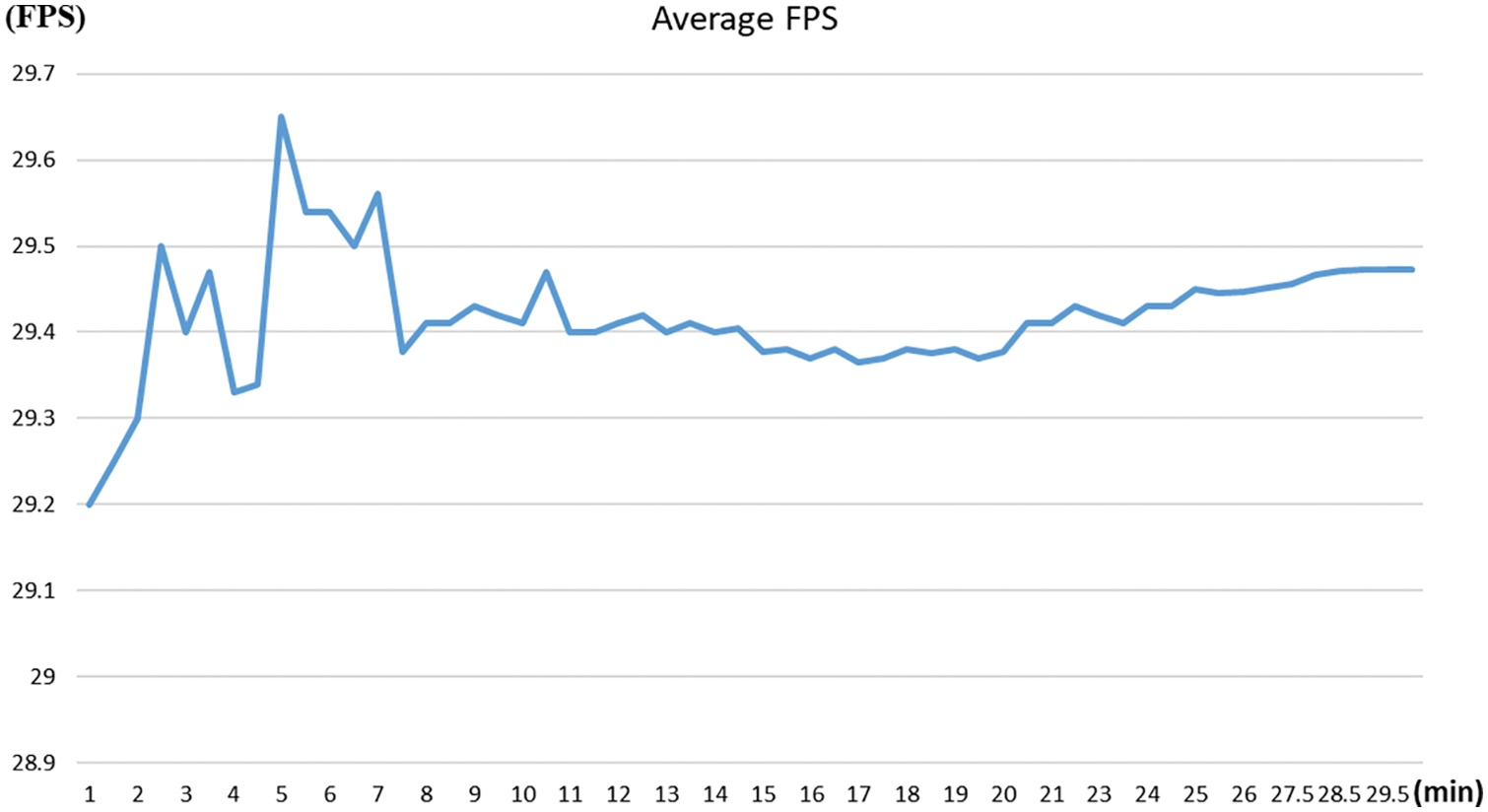

First, we measured the average rendering speed of the proposed system for each participant in the experiment. Fig. 8 shows the participants' average frames per second (FPS) over the 30-min experiment.

Figure 8: Average FPS

Because the maximum frame rate of MS HoloLens 1 was 30 FPS, the service was provided to participants at a high frame rate on average. Fig. 8 shows that the frame rate changed rapidly during the first 1–5 min of the experiment. During this period, users repeatedly switched between receiving remote spatial information over the network and converting it into multi-presence for visualization, and immersing themselves in specific spaces; the frame rate dropped slightly when video information was being matched and buffering occurred. However, the results were stable thereafter as users became accustomed to the work.
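Per-minute averages like those plotted in Fig. 8 can be computed from frame timestamps. The sketch below assumes a hypothetical log of frame presentation times in seconds since the start of the session; how the authors actually logged frames is not stated, and the sample log is invented for illustration.

```python
from collections import Counter


def per_minute_fps(frame_times_s, session_s):
    """Average frames per second within each whole minute of the session."""
    frames_per_minute = Counter(int(t // 60) for t in frame_times_s if 0 <= t < session_s)
    n_minutes = int(session_s // 60)
    # Counter returns 0 for minutes with no frames, so stalls show up as 0 FPS.
    return [frames_per_minute[m] / 60.0 for m in range(n_minutes)]


def overall_fps(frame_times_s, session_s):
    """Average FPS over the whole session."""
    return sum(1 for t in frame_times_s if 0 <= t < session_s) / session_s


# Hypothetical log: 30 FPS for the first minute, 24 FPS for the second.
times = [i / 30 for i in range(1800)] + [60 + i / 24 for i in range(1440)]
print(per_minute_fps(times, 120))  # [30.0, 24.0]
print(overall_fps(times, 120))     # 27.0
```

Averaging per minute rather than per frame interval smooths out short buffering spikes, which matches the minute-level granularity of the description above.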

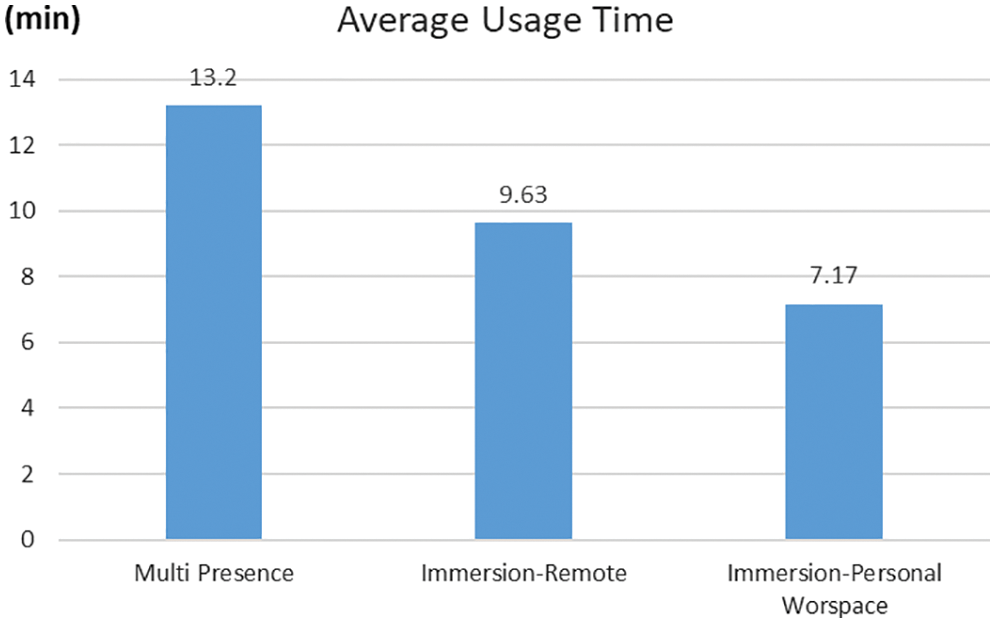

Second, we measured the average usage time of the multi-presence mode and of the general immersion modes to determine how much users relied on each during the experiment. The general immersion mode was divided into cases where the user was talking with a remote user and cases where the user was watching a video in his/her workspace. The results are shown in Fig. 9. When the three modes were measured separately, the multi-presence mode had the longest average usage time, 13.2 min. However, when the two immersion modes were combined, their average usage was 16.8 min, 3.6 min longer than that of the multi-presence mode. Interestingly, in some cases the 360° factory video used in the experiment, which was approximately 10 min long, was first viewed in an immersive state and then viewed in multi-presence because users wanted to watch the video while talking to remote users. Moreover, users preferred the multi-presence mode when talking to two users simultaneously.

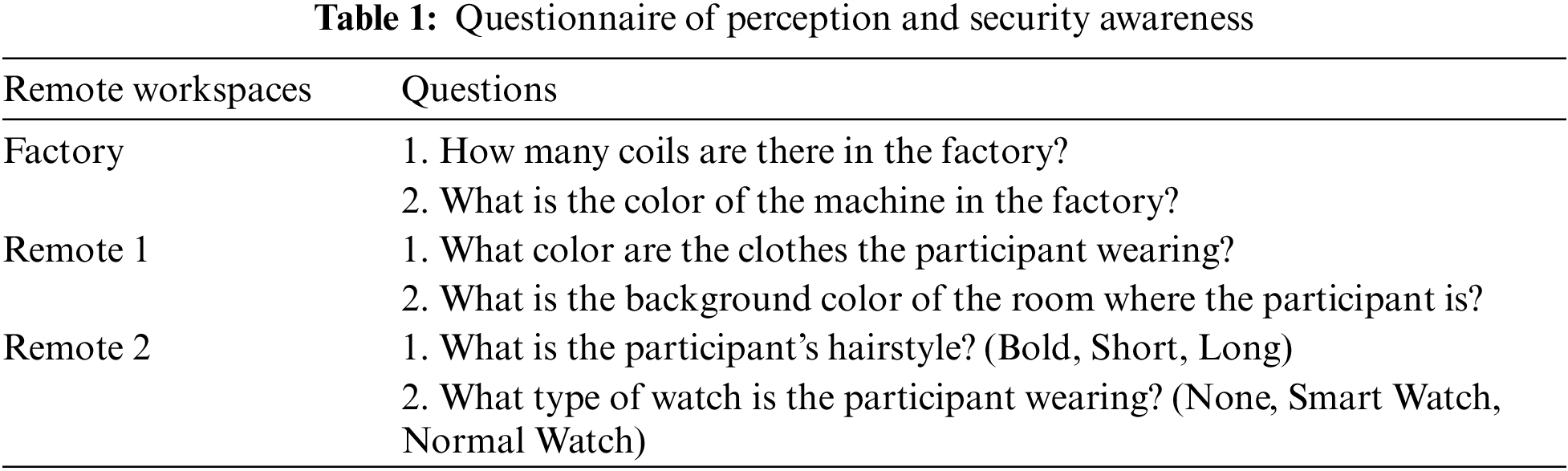

Figure 9: Average usage
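Usage times per mode, as in Fig. 9, can be derived from a log of mode switches. The sketch assumes a hypothetical, time-ordered list of (start time in seconds, mode label) events per participant, with the session ending at a known time; the mode labels are illustrative. Note that the two reported averages, 13.2 min for multi-presence and 16.8 min for the combined immersion modes, add up to 30 min, which is consistent with every minute of the 30-min session being attributed to exactly one mode.

```python
def mode_usage_minutes(events, session_end_s):
    """Total minutes spent in each mode, given time-ordered (start_s, mode) switches."""
    totals = {}
    # Each mode lasts until the next switch, or until the session ends.
    ends = [start for start, _ in events[1:]] + [session_end_s]
    for (start, mode), end in zip(events, ends):
        totals[mode] = totals.get(mode, 0.0) + (end - start) / 60.0
    return totals


log = [(0, "multi_presence"), (600, "immersion_conversation"),
       (1200, "immersion_video"), (1500, "multi_presence")]
usage = mode_usage_minutes(log, 1800)
immersion = usage["immersion_conversation"] + usage["immersion_video"]
print(usage, immersion)
```

Per-participant totals computed this way would then be averaged across participants to obtain figures like those in Fig. 9.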

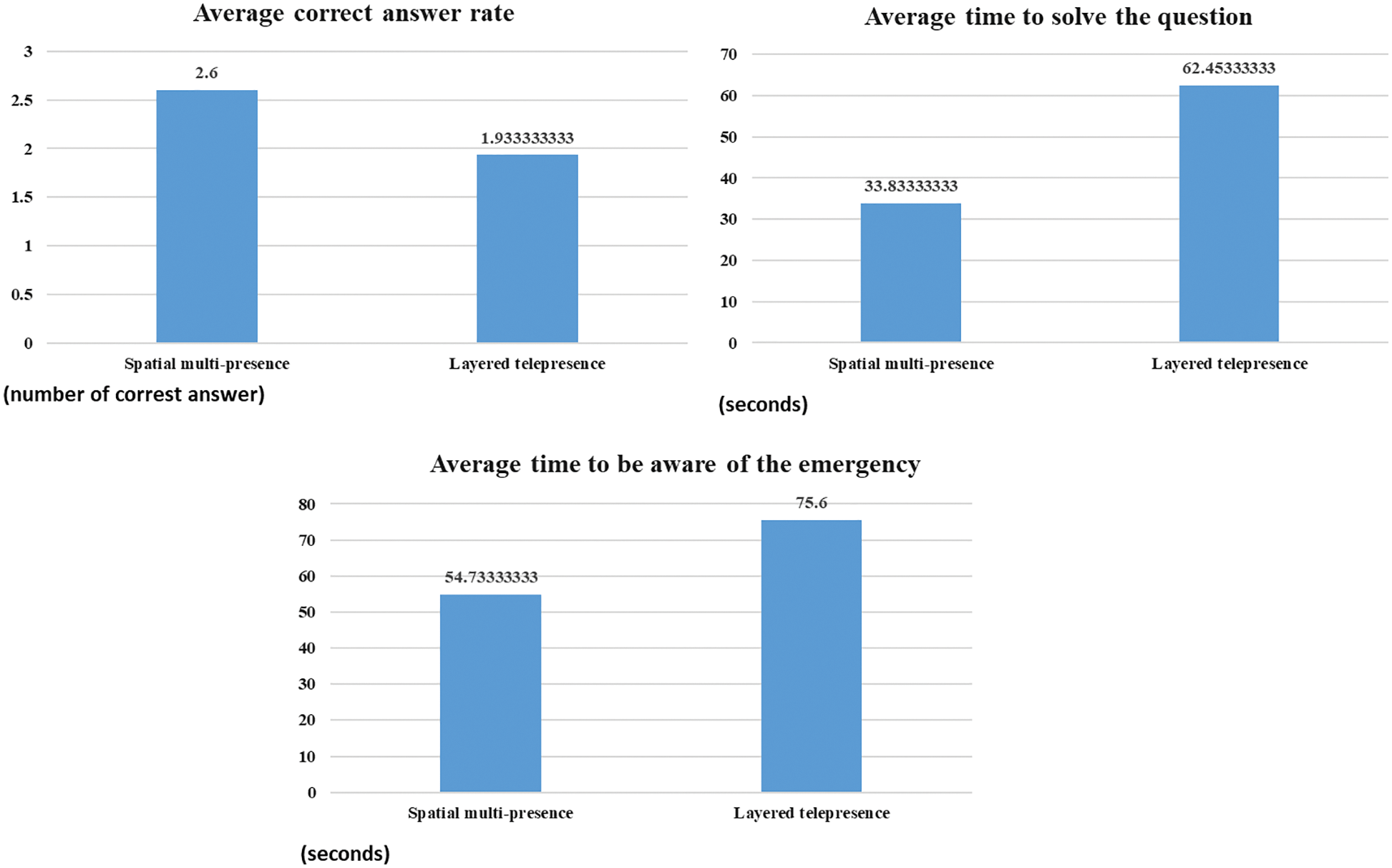

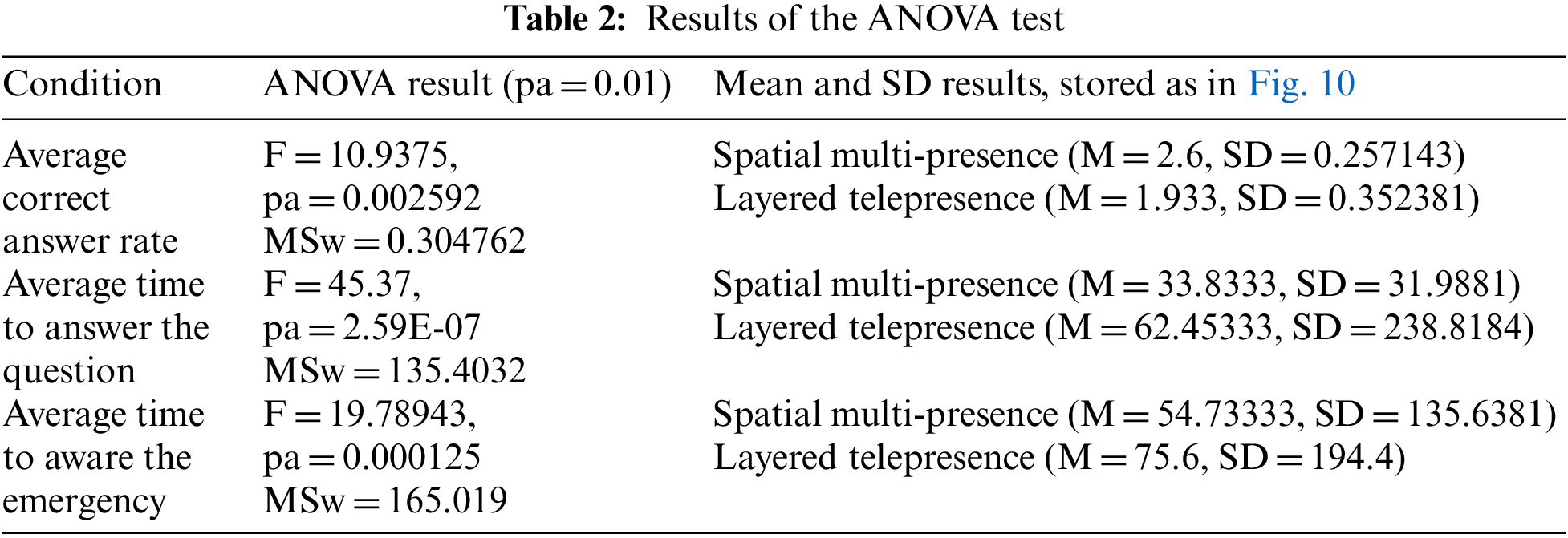

Third, we compared the proposed system with a conventional telepresence system that supports collaboration in an XR environment: layered telepresence [20]. Fifteen new participants aged 20 to 40 years working at smart factory and XR companies, including people interviewed for the case study, were selected and divided into five teams of three. Because the experiment was conducted in two different environments, the order of the environments was randomized to avoid learning effects. Participants performed two tasks. First, they were asked to check the questions posed in the three remote workspaces, as shown in Table 1, and answer them during 15 min of collaborative work. The order of the questions in each remote workspace was random, and once a question from a remote workspace had been used in one environment, a different question from that workspace was presented in the other. When a participant answered a question, the system checked whether the answer was correct and measured the time taken to answer. If the participant did not answer within 5 min, the system showed the next question. Second, smoke and fire appeared in the smart factory at random times during the first experiment, and we evaluated how quickly participants perceived the situation.
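The per-question measurements in this protocol could be scored as in the sketch below. Treating an unanswered question as incorrect and counting its time as the full 5-min limit is an assumption made for illustration; the text does not say how timeouts were scored. `Trial` and `score` are hypothetical names, and the same elapsed-time calculation would apply to the interval between smoke onset and a participant's report.

```python
from dataclasses import dataclass
from typing import Optional

TIMEOUT_S = 300.0  # the next question is shown after 5 min without an answer


@dataclass
class Trial:
    shown_at_s: float
    answered_at_s: Optional[float]  # None if the question timed out
    correct: bool


def score(trials):
    """Return (correct-answer rate, mean time to answer in seconds).

    Assumption: a timed-out question counts as incorrect and contributes TIMEOUT_S.
    """
    n_correct = 0
    times = []
    for t in trials:
        elapsed = None if t.answered_at_s is None else t.answered_at_s - t.shown_at_s
        if elapsed is None or elapsed > TIMEOUT_S:
            times.append(TIMEOUT_S)
            continue
        times.append(elapsed)
        if t.correct:
            n_correct += 1
    return n_correct / len(trials), sum(times) / len(times)


trials = [Trial(0, 60, True), Trial(100, None, False), Trial(500, 620, False)]
print(score(trials))
```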

Fig. 10 shows the results of the experiments. Participants achieved a higher average correct-answer rate with the proposed spatial multi-presence. With layered telepresence, participants gave incorrect answers because the remote workspaces overlapped one another. Participants also answered faster with the proposed spatial multi-presence, because with layered telepresence they had to adjust the transparency of the overlapping remote workspaces. Emergencies were also easier to identify with the proposed spatial multi-presence because participants could see the multiple remote workspaces simultaneously by turning their heads.

Figure 10: Results of the experiments

We performed an analysis of variance (ANOVA) on the experimental results, as summarized in Table 2. According to the ANOVA, the difference in average correct-answer rate between spatial multi-presence and layered telepresence is statistically significant (0.002592 < 0.01). Because the visualization method affects how well participants understand the remote spaces, the proposed spatial multi-presence achieved the best correct-answer rate. The ANOVA also shows that the difference in average time to answer questions is statistically significant (2.59E-07 < 0.01); searching for specific clues in remote workspaces is influenced by the sense of presence and interactivity. The difference between spatial multi-presence and layered telepresence in average time to become aware of the emergency was also significant (0.000125 < 0.01), as shown in Table 2. Thus, the proposed spatial multi-presence may reduce the time needed to become aware of situations and to search remote workspaces during remote collaboration in extended reality environments.
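For reference, the one-way ANOVA F statistic compares between-condition variance with within-condition variance. The pure-Python sketch below computes F and its degrees of freedom; the p-value would then come from the F distribution (for example, `scipy.stats.f_oneway` returns both the statistic and the p-value). It treats the conditions as independent groups, which is an assumption of this sketch, since the paper does not state whether a repeated-measures analysis was used. The sample values are toy numbers, not data from the study.

```python
from statistics import fmean


def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over independent groups."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = fmean(x for g in groups for x in g)
    group_means = [fmean(g) for g in groups]
    # Variation of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of individual observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


# Toy values for two conditions.
print(one_way_anova_f([1, 2, 3], [4, 5, 6]))  # (13.5, 1, 4)
```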

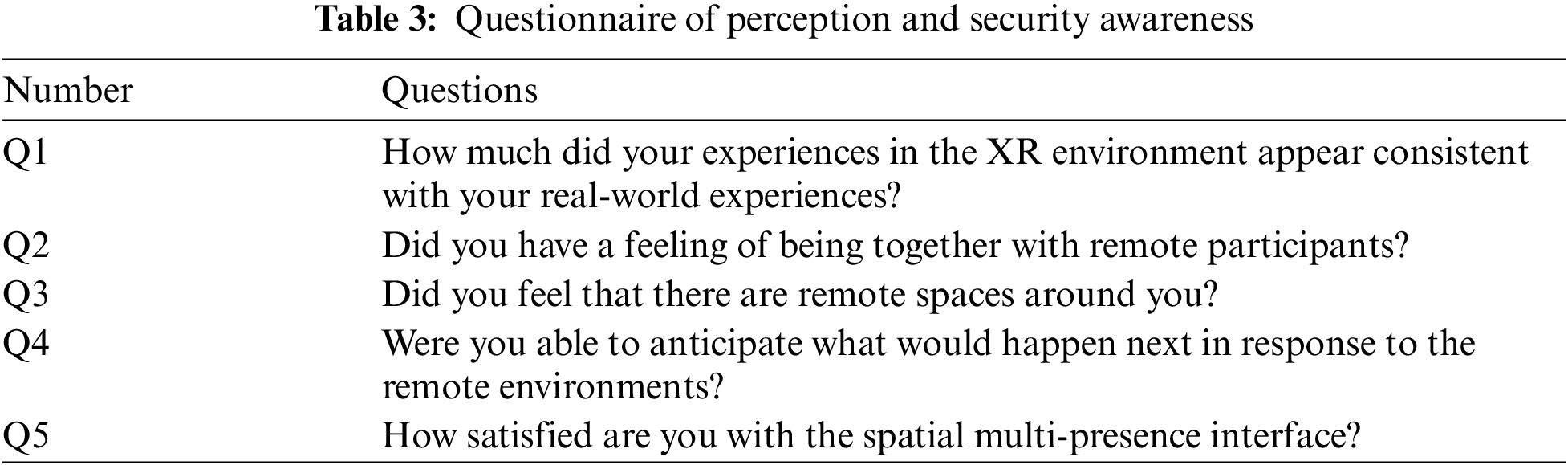

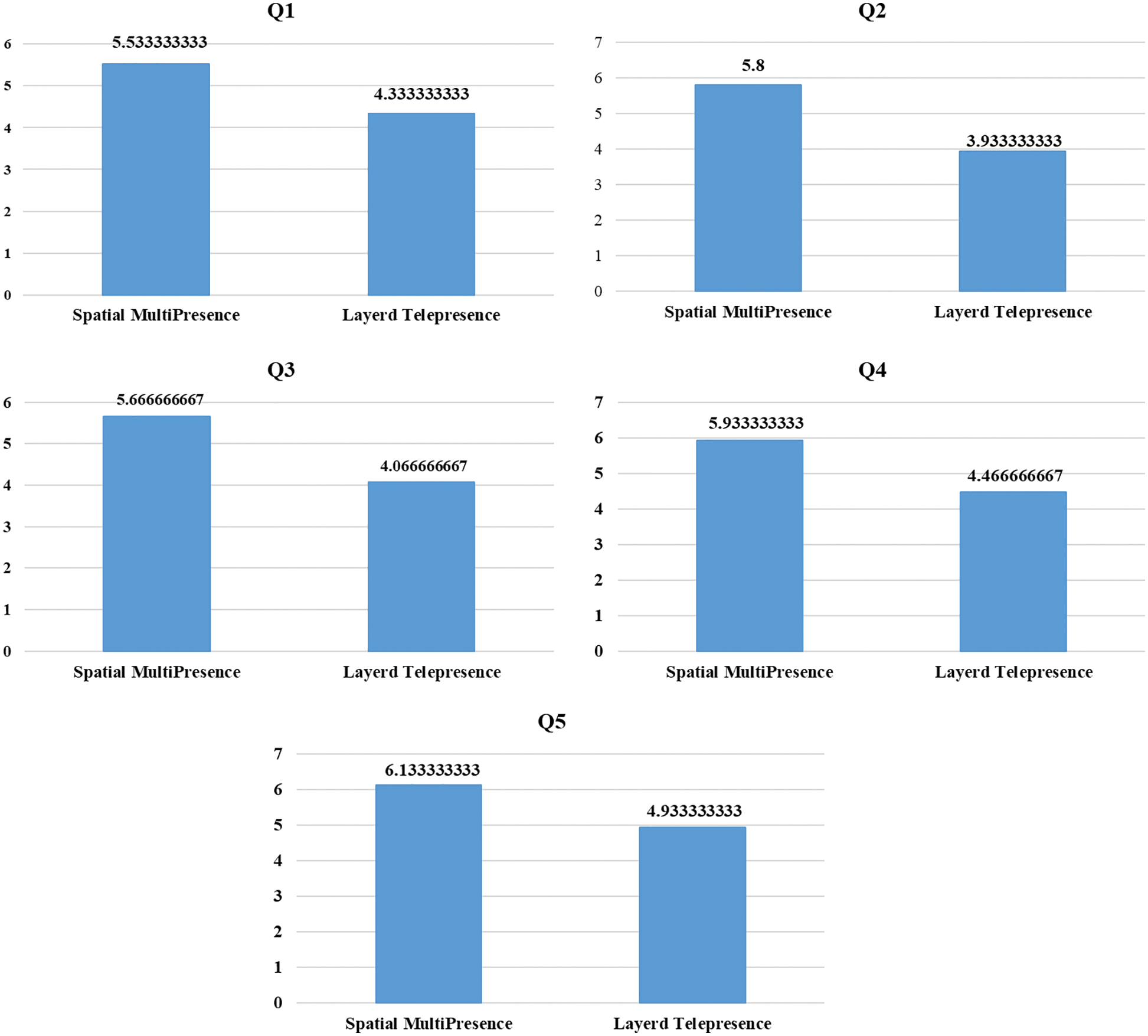

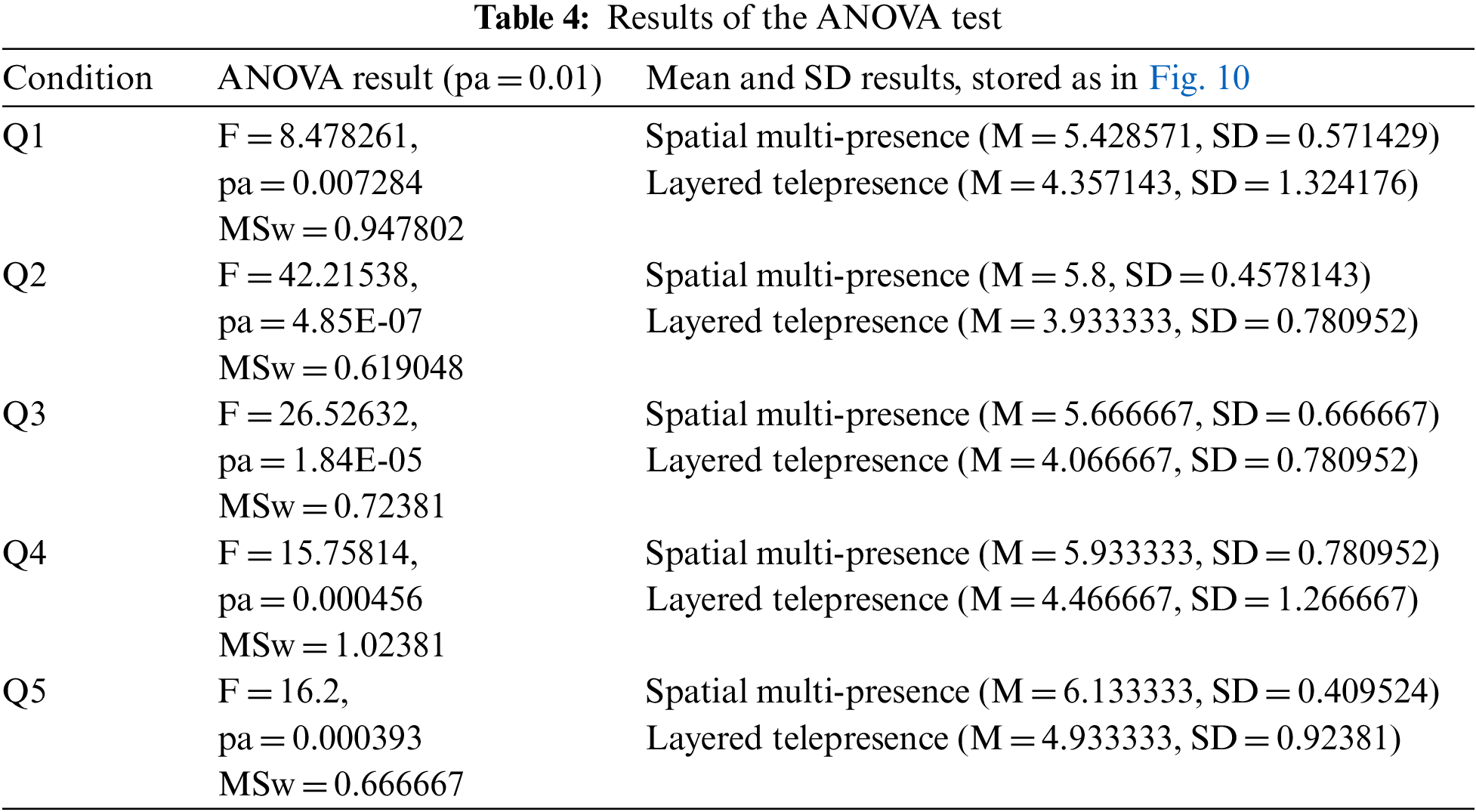

After the quantitative experiments, we conducted qualitative experiments measuring the sense of presence and security awareness. Because these measures depend closely on participants' perceptions, we designed a five-item questionnaire to verify the usability of the proposed spatial multi-presence system [25]. Three questions measured the perception of multi-presence, one measured security awareness of a remote workspace, and one measured usability, as shown in Table 3.

Fig. 11 shows the average scores for the spatial multi-presence questionnaire. According to the results of questions 1–3 (Q1–Q3), participants perceived more spatial presence with the proposed system. Whereas the proposed system places remote spaces beside the user, the layered telepresence method blends several remote spaces together, and participants perceived more spatial and social presence when the remote spaces were placed on either side of them. The results of question 4 (Q4) show that participants were aware of situations in the remote spaces in the spatial multi-presence mode. Participants were also more satisfied with the proposed spatial multi-presence system than with the layered telepresence system. We performed an ANOVA on the questionnaire results for statistical analysis; Table 4 presents the test on the average questionnaire scores of spatial multi-presence and layered telepresence. The results were statistically significant (p = 0.01).

Figure 11: Results of the evaluation of spatial multi-presence perception

After the experiment, the participants were interviewed to evaluate the proposed system qualitatively. First, when asked whether they felt presence, participants reported that they were generally confused about the space at first when using spatial multi-presence. However, they soon became accustomed to it and felt as if the remote users were in the same space as them when multiple spaces were visualized. Second, they were asked about the difference between collaborative work using multi-presence and collaborative work through existing video conferencing. Participants indicated that, compared with existing collaborative work, multi-presence-based collaborative work had advantages such as realistic communication with other users and access to more spatial information. Third, regarding the efficiency of personal workspaces, participants reported that having guaranteed individual workspaces let them check their own work and organize their thoughts during collaborative work. Fourth, participants found that spatial multi-presence made it easy to be aware of remote situations and to search for a specific target among the remote workspaces. Finally, regarding the disadvantages of multi-presence, some users complained of slight dizziness when crossing the boundary between spaces.

We conducted additional interviews with the participants about the advantages of increased security awareness in a remote collaborative work environment. According to the results, because smart factories generally use top-view cameras with video conferencing, it is difficult to feel spatial presence and to be aware of security issues in situations occurring in a remote space. Security issues in a smart factory include not only fire, as in this paper, but also the risk of injury when workers accidentally access hazardous materials, and theft. With the proposed method, users can quickly become aware of, and be notified about, various security issues that may occur out of their direct view, including those a remote user is unaware of, especially during collaboration.

This study proposed a spatial multi-presence system that supports personal work while enabling users to feel the simultaneous presence of users in remote spaces when multiple users collaborate remotely in an XR environment. Using the proposed system, users could collaborate with remote users and interact with spaces through hand gestures while wearing an HMD. The performance evaluations suggest that the system can improve on existing video conferencing and virtual-environment-based collaboration, as three users could feel the simultaneous presence of remote spaces while collaborating and could immerse themselves in a specific space when needed. Experimental results show that, compared with the conventional method, participants using the proposed spatial multi-presence recognized the situations of remote collaborative work environments about 34% more accurately, found specific problems in the collaborative environment over 84% faster, and became aware of emergencies about 38% faster. Thus, the proposed spatial multi-presence enhanced the sense of presence and awareness of remote workspaces compared with the conventional method.

In future work, we will develop an interaction model that applies interaction elements other than hand gestures, so that users can stay focused on their work while still noticing changes in other spaces when events occur simultaneously in multiple spaces. We will also research and develop an algorithm for sharing information between spaces that enables data to be flexibly transformed across multiple spaces. Finally, we will apply the proposed scheme to practical collaborative work in smart factories together with the companies that participated in the case study.

Acknowledgement: The authors thank Suhan Park (Hoseo University) for supporting the experiments of the proposed system.

Funding Statement: These results were supported by “Regional Innovation Strategy (RIS)” through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (MOE) (2021RIS-004).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. R. Johansen, Groupware: Computer Support for Business Teams. New York: Free Press, 1988.

2. C. Joslin, T. Di Giacomo and N. Magnenat-Thalmann, “Collaborative virtual environments: From birth to standardization,” IEEE Communications Magazine, vol. 42, no. 4, pp. 28–33, 2004.

3. A. Fast-Berglund, L. Gong and D. Li, “Testing and validating extended reality (xR) technologies in manufacturing,” Procedia Manufacturing, vol. 25, pp. 31–38, 2018.

4. G. Lawson, P. Herriotts, L. Malcolm, K. Gabrecht and S. Hermawati, “The use of virtual reality and physical tools in the development and validation of ease of entry and exit in passenger vehicles,” Applied Ergonomics, vol. 48, pp. 240–251, 2015.

5. H. Fuchs, A. State and J. C. Bazin, “Immersive 3D telepresence,” Computer, vol. 47, no. 7, pp. 46–52, 2014.

6. C. Johnson, B. Khadka, E. Ruiz, J. Halladay, T. Doleck et al., “Application of deep learning on the characterization of Tor traffic using time based features,” Journal of Internet Services and Information Security (JISIS), vol. 11, no. 1, pp. 64–79, 2021.

7. S. Sriworapong, A. Pyae, A. Thirasawasd and W. Keereewan, “Investigating students’ engagement, enjoyment, and sociability in virtual reality-based systems: A comparative usability study of Spatial.io, Gather.town, and Zoom,” in Proc. of Well-Being in the Information Society: When the Mind Breaks, Turku, Finland, pp. 140–157, 2022.

8. S. Shi, W. Yang, J. Zhang and Z. Chang, “Review of key technologies of 5G wireless communication system,” in Proc. of MATEC Web of Conf., Singapore, pp. 1–6, 2015.

9. M. Zuppelli, A. Carrega and M. Repetto, “An effective and efficient approach to improve visibility over network communications,” Journal of Wireless Mobile Networks, Ubiquitous Computing, and Dependable Applications (JoWUA), vol. 12, no. 4, pp. 89–108, 2021.

10. M. Leitner, M. Frank, G. Langner, M. Landauer, F. Skopik et al., “Enabling exercises, education and research with a comprehensive cyber range,” Journal of Wireless Mobile Networks, Ubiquitous Computing, and Dependable Applications (JoWUA), vol. 12, no. 4, pp. 37–61, 2021.

11. D. Pöhn and W. Hommel, “Universal identity and access management framework,” Journal of Wireless Mobile Networks, Ubiquitous Computing, and Dependable Applications (JoWUA), vol. 12, no. 1, pp. 64–84, 2021.

12. J. Q. Coburn, I. Freeman and J. L. Salmon, “A review of the capabilities of current low-cost virtual reality technology and its potential to enhance the design process,” Journal of Computing and Information Science in Engineering, vol. 17, no. 3, pp. 1–15, 2017.

13. P. P. Desai, P. N. Desai, K. D. Ajmera and K. A. Mehta, “Review paper on Oculus Rift-A virtual reality headset,” International Journal of Engineering Trends and Technology, vol. 13, no. 4, pp. 175–179, 2014.

14. D. Thalmann, J. Lee and N. M. Thalmann, “An evaluation of spatial presence, social presence and interactions with various 3D displays,” in Proc. of CASA, Singapore, pp. 197–204, 2016.

15. M. McGill, D. Boland, R. Murray-Smith and S. Brewster, “A dose of reality: Overcoming usability challenges in VR head-mounted displays,” in Proc. of CHI, Seoul, Korea, pp. 2143–2152, 2015.

16. G. Assenza, “A review of methods for evaluating security awareness initiatives,” European Journal for Security Research, vol. 5, no. 2, pp. 259–287, 2019.

17. E. M. Culpa, J. I. Mendoza, J. G. Ramirez, A. L. Yap, E. Fabian et al., “A cloud-linked ambient air quality monitoring apparatus for gaseous pollutants in urban areas,” Journal of Internet Services and Information Security (JISIS), vol. 11, no. 1, pp. 64–79, 2021.

18. Y. Lu and T. Ishida, “Implementation and evaluation of a high-presence interior layout simulation system using mixed reality,” Journal of Internet Services and Information Security (JISIS), vol. 10, no. 1, pp. 50–63, 2020.

19. D. Mourtzis, J. Angelopoulos and N. Panopoulos, “A teaching factory paradigm for personalized perception of education based on extended reality (XR),” in Proc. of CLF, Singapore, pp. 1–6, 2022.

20. M. Y. Saraiji, S. Sugimoto, C. L. Fernando, K. Minamizawa and S. Tachi, “Layered telepresence: Simultaneous multi presence experience using eye gaze based perceptual awareness blending,” in Proc. of ACM SIGGRAPH Emerging Technologies, New York, United States, pp. 1–2, 2016.

21. A. Alsereidi, Y. Iwasaki, J. Oh, V. Vimolmongkolporn, F. Kato et al., “Experiment assisting system with local augmented body (EASY-LAB) in dual presence environment,” Acta IMEKO, vol. 11, no. 3, pp. 1–6, 2022.

22. J. Lee, M. Lim, H. S. Kim and J. I. Kim, “Supporting fine-grained concurrent tasks and personal workspaces for a hybrid concurrency control mechanism in a networked virtual environment,” Presence: Teleoperators and Virtual Environments, vol. 21, no. 4, pp. 452–469, 2012.

23. A. Maimone, X. Yang, N. Dierk, M. Dou and H. Fuchs, “General-purpose telepresence with head-worn optical see-through displays and projector-based lighting,” in Proc. of IEEE VR, Lake Buena Vista, USA, pp. 1–4, 2013.

24. J. Y. Oh, J. Lee, J. H. Lee and J. H. Park, “A hand and wrist detection method for unobtrusive hand gesture interactions using HMD,” in Proc. of ICCE-Asia, Seoul, Korea, pp. 1–4, 2016.

25. B. G. Witmer and M. J. Singer, “Measuring presence in virtual environments: A presence questionnaire,” Presence, vol. 7, no. 3, pp. 225–240, 1998.


Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
