Open Access


ARTICLE

Optimal Deep Learning Model Enabled Secure UAV Classification for Industry 4.0

1 SAUDI ARAMCO Cybersecurity Chair, Networks and Communications Department, College of Computer Science and Information Technology, Imam Abdulrahman bin Faisal University, P.O. Box 1982, Dammam, 31441, Saudi Arabia

2 Department of Information Systems, College of Computer Science, King Khalid University, Abha, Saudi Arabia

3 Department of Industrial and Systems Engineering, College of Engineering, Princess Nourah bint Abdulrahman University, P.O. Box 84428, Riyadh, 11671, Saudi Arabia

4 Department of Information Technology, College of Computers and Information Technology, Taif University, Taif P.O. Box 11099, Taif, 21944, Saudi Arabia

5 Department of Information Systems, College of Computer Science, Center of Artificial Intelligence, Unit of Cybersecurity, King Khalid University, Abha, Saudi Arabia

6 Department of Computer Sciences, College of Computing and Information System, Umm Al-Qura University, Saudi Arabia

7 Department of Biomedical Engineering, College of Engineering, Princess Nourah bint Abdulrahman University, P.O. Box 84428, Riyadh, 11671, Saudi Arabia

8 Department of Mechanical Engineering, Faculty of Engineering and Technology, Future University in Egypt, New Cairo, 11835, Egypt

9 Department of Computer Science, College of Sciences and Humanities- Aflaj, Prince Sattam bin Abdulaziz University, Saudi Arabia

* Corresponding Author: Mesfer Al Duhayyim. Email:

Computers, Materials & Continua 2023, 74(3), 5349-5367. https://doi.org/10.32604/cmc.2023.033532

Received 20 June 2022; Accepted 28 September 2022; Issue published 28 December 2022

Abstract

Emerging technologies such as edge computing, Internet of Things (IoT), 5G networks, big data, Artificial Intelligence (AI), and Unmanned Aerial Vehicles (UAVs) empower Industry 4.0 with a progressive production methodology that focuses on the interaction between machines and human beings. In the literature, various authors have focused on resolving security problems in UAV communication to provide safety for vital applications. The current research article presents a Circle Search Optimization with Deep Learning Enabled Secure UAV Classification (CSODL-SUAVC) model for the Industry 4.0 environment. The CSODL-SUAVC methodology has two core objectives: secure communication via image steganography, and image classification. For secure communication, the CSODL-SUAVC method combines Multi-Level Discrete Wavelet Transformation (ML-DWT), CSO-related Optimal Pixel Selection (CSO-OPS), and signcryption-based encryption. The CSO-OPS technique selects the optimal pixel points in the cover images, and the secret images, encrypted by the signcryption technique, are embedded into the cover images. The image classification process includes three components, namely Super-Resolution using Convolutional Neural Network (SRCNN), the Adam optimizer, and a softmax classifier. The integration of the CSO-OPS algorithm and the Adam optimizer helps the model achieve maximum performance in UAV communication. The proposed CSODL-SUAVC model was experimentally validated on benchmark datasets, and the outcomes were evaluated under distinct aspects. The simulation outcomes established the superior performance of the CSODL-SUAVC model over recent approaches.

Recent technological advancements such as Artificial Intelligence (AI), edge computing, 5G, Internet of Things (IoT), and big data analytics are being incorporated into industries, bringing innovation and cognitive capabilities. These cutting-edge technologies can help industries rapidly scale up their manufacturing and delivery processes and customize their goods [1]. Such enabling technologies empower Industry 4.0 with advanced production models that enable communication between humans and machines. In the smart machinery concept, humans and machines work together, which effectively enhances human capabilities. Further, smart machinery was developed to automate processes across people and industries at the same time [2]. In Industry 4.0, automation and edge computing are deployed in a distributed and intelligent manner. The primary goal is to improve process efficiency, which, as an unintended consequence, removes the human labor cost involved in the processes [3]. Fig. 1 shows the different industrial versions.

Figure 1: Types of industrial versions

On the other hand, reducing the workforce in industries will become a major issue in the coming years, once Industry 4.0 is fully operational. Politicians and labor unions may also oppose it and push to trade off some of the advantages of Industry 4.0 in favor of employment opportunities [4,5]. However, there is no need to reverse the evolution of Industry 4.0, since process efficiency should keep improving as modern technologies are introduced. Industry 4.0 can therefore be considered a viable solution, and it will still be required after such a backlash begins [6]. Industry 4.0 is expected to provide a complete framework for automated and connected systems, ranging from individual cars to Unmanned Aerial Vehicles (UAVs), with different requirements in terms of reliability, latency, energy efficiency, and data rate. Drones, in particular, are expected to play a significant role in a wide range of scenarios in 5G and beyond-5G (6G) networks [7].

Owing to their adaptability, automation capabilities, and low cost, drones have been extensively applied to meet civilian needs in the past few years [8]. Examples include precision agriculture, power line inspection, building inspection, and wildlife conservation [9]. However, drones have a set of restrictions in terms of payload weight, size, and energy consumption, limited operating range, and endurance. These limits should not be ignored, especially when Deep Learning (DL) systems must run on board [10]. Aerial scene classification assigns aerial images captured by drones to semantic classes according to the ground objects and land-cover types they contain. Aerial image classification plays a significant role in many real-world applications such as urban planning, computer cartography, and the management of remote sensing resources [11,12]. This approach is more efficient than standard processes in most domains, especially in educational and industrial settings [13]. DL methods learn hierarchical feature representations at multiple levels of abstraction. The deep Convolutional Neural Network (CNN) is the most commonly applied DL technique [14]. It has been used successfully in countless detection and recognition tasks, achieving superior results on a number of standard datasets.

The current research article presents a Circle Search Optimization with Deep Learning Enabled Secure UAV Classification (CSODL-SUAVC) model for the Industry 4.0 environment. For secure communication, the proposed CSODL-SUAVC technique combines Multi-Level Discrete Wavelet Transformation (ML-DWT), CSO-related Optimal Pixel Selection (CSO-OPS), and signcryption-based encryption. The image classification process includes three components, namely Super-Resolution using Convolutional Neural Network (SRCNN), the Adam optimizer, and a softmax classifier. The proposed CSODL-SUAVC method was experimentally validated on benchmark datasets, and the outcomes were assessed under distinct aspects.

The study conducted in [15] provided a survey-based tutorial on the potential applications and enabling technologies of Industry 5.0. First, the researchers introduced the concept and defined Industry 5.0 from the perspectives of industrial practitioners and researchers. Next, they elaborated on its potential applications, such as cloud manufacturing, intelligent healthcare, manufacturing production, and supply chain management. In [16], the authors continuously monitored harmful gases so that people could be alerted in case of any leakage and saved from accidents. The study also discussed Industry 4.0 from the perspective of leveraging the long-range transmission of UAVs.

Bhat et al. [17] proposed the Agri-SCM-BIoT (Agriculture Supply Chain Management utilizing Blockchain and IoT) structure and discussed a classification of security threats in IoT architectures, along with existing blockchain-based defense mechanisms. In [18], the researchers presented a Machine Learning (ML)-based architecture for the rapid detection and identification of UAVs over encrypted Wi-Fi traffic. The architecture was inspired by the observation that consumer UAVs use a Wi-Fi link for control and video streaming. The study in [19] emphasized the significance of a secure drone network in preventing intrusion and interception. A hybrid ML method combining Logistic Regression (LR) and Random Forest (RF) was developed to classify the data instances with maximum effectiveness. By integrating sophisticated AI-inspired approaches with the Network of Drones (NoD) architecture, the presented method mitigated cybersecurity vulnerabilities and improved the security and protection of the NoD.

In [20], the authors proposed an autonomous Intrusion Detection System (IDS) based on a deep Convolutional Neural Network (UAV-IDS-ConvNet) that can effectively recognize malicious threats targeting UAVs. In particular, the method was evaluated on encrypted Wi-Fi traffic data collected from three widely used types of UAVs. Kumar et al. [21] presented a Secured Privacy-Preserving Framework (SP2F) for smart agriculture UAVs. The SP2F architecture has two major engines: a two-level privacy engine and a DL-based anomaly detection engine. In this framework, an SAE was employed to transform the data into a new encoded format to prevent inference attacks.

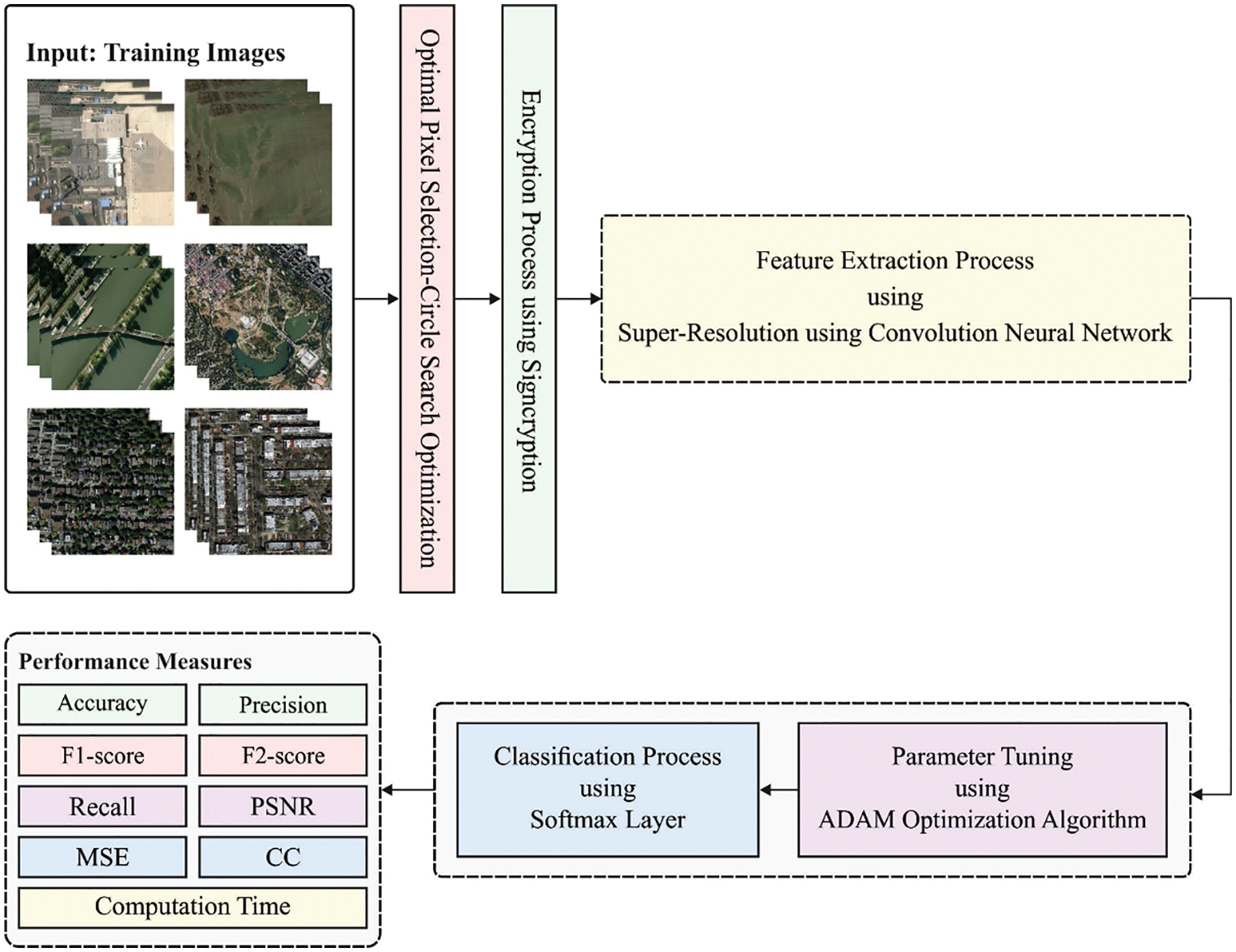

In this article, a novel CSODL-SUAVC algorithm has been developed to accomplish secure UAV classification and communication in the Industry 4.0 environment. The CSODL-SUAVC technique performs image steganography via ML-DWT, CSO-related optimal pixel selection, and a signcryption-based encryption technique. The image classification module encompasses SRCNN-based feature extraction, the Adam optimizer, and a softmax classifier. Fig. 2 depicts the block diagram of the proposed CSODL-SUAVC approach.

Figure 2: Block diagram of CSODL-SUAVC approach

3.1 Secure UAV Communication Module

To accomplish secure UAV communication, the proposed model deploys the CSO-OPS technique to select the optimal pixel points in a cover image. Then, the secret image, encrypted by the signcryption technique, is embedded onto the cover image.

RGB cover images are decomposed into Low-Low (LL), Low-High (LH), High-Low (HL), and High-High (HH) frequency sub-bands to locate the pixels used for embedding. The 2D-DWT is a prominent transform for converting an image from the spatial domain to the frequency domain [22]. An image is decomposed by applying the transform along the horizontal and vertical directions. The vertical function decomposes the images to

In Eq. (1), ‘

The co-efficient in the lower level band

In Eq. (3),
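The multi-level decomposition described above can be sketched as follows. This is a minimal, non-authoritative sketch: the recoverable text does not name the mother wavelet, so the orthonormal Haar wavelet is assumed; the image is a single channel (an RGB cover image would be processed channel by channel); and the band-naming convention (first letter = filter along rows, second = filter along columns) and all function names are our own, hypothetical choices.

```python
def haar_dwt2(img):
    """One level of the orthonormal 2D Haar DWT on a single-channel image.

    img is a list of equal-length rows; height and width must be even.
    Returns (LL, LH, HL, HH): the first letter is the filter applied along
    each row, the second the filter applied along each column.
    """
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    ll, lh, hl, hh = [], [], [], []
    for i in range(0, h, 2):
        r_ll, r_lh, r_hl, r_hh = [], [], [], []
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]          # top row of the 2x2 block
            c, d = img[i + 1][j], img[i + 1][j + 1]  # bottom row of the block
            r_ll.append((a + b + c + d) / 2)  # low-pass in both directions
            r_lh.append((a + b - c - d) / 2)  # low along rows, high along columns
            r_hl.append((a - b + c - d) / 2)  # high along rows, low along columns
            r_hh.append((a - b - c + d) / 2)  # high-pass in both directions
        ll.append(r_ll)
        lh.append(r_lh)
        hl.append(r_hl)
        hh.append(r_hh)
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2 (the 2D Haar transform is orthonormal)."""
    h, w = len(ll), len(ll[0])
    img = [[0.0] * (2 * w) for _ in range(2 * h)]
    for i in range(h):
        for j in range(w):
            s, t, u, v = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            img[2 * i][2 * j] = (s + t + u + v) / 2
            img[2 * i][2 * j + 1] = (s + t - u - v) / 2
            img[2 * i + 1][2 * j] = (s - t + u - v) / 2
            img[2 * i + 1][2 * j + 1] = (s - t - u + v) / 2
    return img


def multilevel_dwt2(img, levels):
    """Repeatedly decompose the LL band; sides must be divisible by 2**levels.

    Returns [LL_n, (LH_n, HL_n, HH_n), ..., (LH_1, HL_1, HH_1)].
    """
    details = []
    ll = img
    for _ in range(levels):
        ll, lh, hl, hh = haar_dwt2(ll)
        details.append((lh, hl, hh))
    return [ll] + details[::-1]
```

The returned list starts with the coarsest LL band, followed by the detail bands from the coarsest to the finest level, which is the same ordering used by PyWavelets' `pywt.wavedec2`.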

3.1.2 Optimal Pixel Selection Process

The CSO algorithm is applied in this stage to select the optimal pixels of the images. A circle is a simple closed curve whose points all lie at the same distance from its center [23]. The diameter is calculated as a line connecting two points on the curve that intersect at the

CSO searches for the optimal solution inside a random circle to widen the exploration of the search region. Using the center of the circle as the target point, the circumference of the circle and the angle at which the tangent line touches it are gradually reduced until the search approaches the center of the circle. Because the search may get stuck in a local solution, the angle at which the tangent line touches the point is changed randomly. The

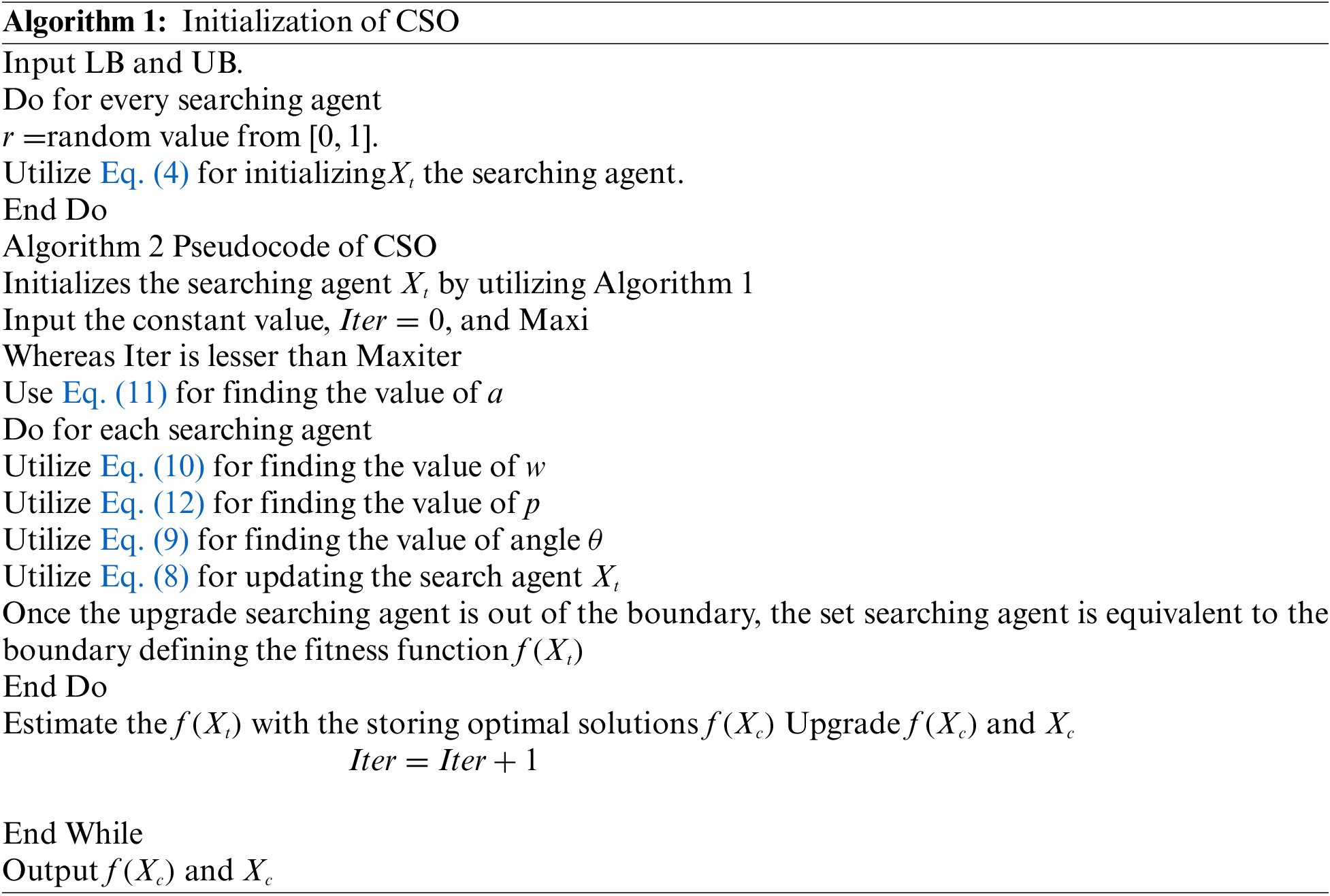

Step 1: Initialization: This is a crucial phase of CSO, in which all dimensions of every search agent must be randomized in the same way, as shown in Algorithm 1. Most existing implementations randomize the dimensions unequally, which occasionally lets the algorithm reach a better outcome by chance. The search agents are initialized between the lower (LB) and upper (UB) bounds of the search region as given below.

In Eq. (7), the random vector is represented by

Step 2: Update the positions of the search agents; the position of the search agent

In Eq. (8), angle

In this expression, rand is a random number in the range [0, 1], Iter is the current iteration count, Maxiter is the maximum number of iterations, and
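The initialization and position update can be illustrated with a minimal circle-search-style minimizer. This sketch assumes the update X ← Xc + (Xc − X)·tan(θ) described by Qais et al. [23], where the circle center Xc is the best solution found so far. The angle schedule (the quantities `a`, `p`, `w` and the switch at c·Maxiter) reflects our reading of that paper; its constants are assumptions, not the settings used for CSODL-SUAVC. For pixel selection, each agent would encode candidate pixel positions and the fitness would be a stego-quality measure; here any callable to be minimized stands in, and the function name is hypothetical.

```python
import math
import random


def circle_search(fitness, dim, lb, ub, n_agents=30, max_iter=200, c=0.8, seed=None):
    """Minimize `fitness` over [lb, ub]**dim with a circle-search-style update."""
    rng = random.Random(seed)
    # Step 1: every dimension of every agent is drawn uniformly in [lb, ub].
    agents = [[lb + rng.random() * (ub - lb) for _ in range(dim)]
              for _ in range(n_agents)]
    scores = [fitness(a) for a in agents]
    best_i = min(range(n_agents), key=scores.__getitem__)
    best, best_score = agents[best_i][:], scores[best_i]

    for it in range(1, max_iter + 1):
        frac = it / max_iter
        a = math.pi - math.pi * frac ** 2   # shrinks from about pi to 0
        p = 1 - 0.9 * frac ** 0.5           # shrinking scale for early angles
        for k in range(n_agents):
            w = a * rng.random() - a        # w lies in [-a, 0]
            # Late iterations use a fully random angle to escape local optima.
            theta = w * rng.random() if it > c * max_iter else w * p
            t = math.tan(theta)
            # Step 2: move relative to the circle center (best solution),
            # clipping each coordinate back into the search region.
            agents[k] = [min(ub, max(lb, xc + (xc - x) * t))
                         for x, xc in zip(agents[k], best)]
            scores[k] = fitness(agents[k])
            if scores[k] < best_score:
                best, best_score = agents[k][:], scores[k]
    return best, best_score
```

For example, `circle_search(lambda x: sum(v * v for v in x), 3, -5.0, 5.0, seed=1)` searches for the minimum of a 3-D sphere function. Because the best solution is only replaced by a strictly better one, the returned score never exceeds the best score of the initial population.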

Fitness function: The fitness function is used to evaluate the objective. The aim is a steganography model that maximizes the Peak Signal-to-Noise Ratio (PSNR) and minimizes the error rate, measured as the Mean Square Error (MSE); this is achieved using the following equation.

The CSO algorithm is then used to find the pixel selection that maximizes PSNR while minimizing MSE.
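The fitness equation itself is not recoverable here, so the sketch below computes only the two quantities it is built from, MSE and PSNR, for a cover image and its stego version. It assumes 8-bit images (peak value 255); how the article combines the two into a single objective is left open, and the function names are illustrative.

```python
import math


def mse(cover, stego):
    """Mean square error between two equally sized single-channel images."""
    xs = [v for row in cover for v in row]
    ys = [v for row in stego for v in row]
    if len(xs) != len(ys):
        raise ValueError("images must have the same size")
    return sum((x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def psnr(cover, stego, peak=255):
    """PSNR in dB; infinite when the images are identical."""
    err = mse(cover, stego)
    return math.inf if err == 0 else 10 * math.log10(peak ** 2 / err)
```

A CSO fitness would typically reward a high `psnr` (equivalently, a low `mse`) for the stego image produced by a candidate pixel selection.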

The proposed model uses an encryption approach to encrypt the secret images. Signcryption is a public-key cryptographic primitive that performs digital signing and encryption in a single logical step, providing both confidentiality and authenticity for the secret images. The common parameters used in the signcryption technique are denoted by ‘cp’, ‘xs’ denotes the private key of the sender ‘S’, ‘ys’ denotes the public key of the sender, and ‘yr’ denotes the public key of the receiver. Together with ‘yr’, the binding information ‘binfo’ is fed as input to the signcryption algorithm. The variable ‘binfo’ is fundamental to securing the signcryption process and consists of strings that uniquely identify the sender and the receiver, or the hash values of their public keys. The steps used to signcrypt the secret images are discussed below.

Step 1: Choose any value for ‘x’ in the range of 1 to

Step 2: The hash function is evaluated to receive the public key and ‘N’ with

Step 3: The resulting hash value is then split into two 64-bit strings, K1 and K2 (the key pair).

Step 4: The sender encrypts the message ‘m’ with the symmetric encryption algorithm ‘E’ using key K1 to obtain the ciphertext ‘c’; here,

Step 5: K2 is used in a one-way keyed hash function ‘KH’ to compute the hash of the message. Here, ‘r’ represents the 128-bit hash value of the message

Step 6: Next, the value of ‘s’ is calculated from ‘x’, the sender’s private key ‘xa’, the hash value ‘r’, and a large prime Ln as s = x/(r + xa) mod Ln.

Step 7: The values c, r, and s are sent together to the receiver as the signcrypted text ‘C’ to complete the secure communication.
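The steps above follow the structure of Zheng's signcryption scheme. The sketch below is a toy, non-authoritative illustration under stated assumptions: a tiny prime-order group (p = 2039, q = 1019, g = 4) stands in for the large prime modulus Ln of Step 6, SHA-256 in counter mode stands in for the symmetric cipher E, truncated HMAC-SHA256 stands in for the 128-bit keyed hash KH, and the binding information ‘binfo’ is omitted. The function names are hypothetical, and the parameters are far too small for real use.

```python
import hashlib
import hmac
import secrets

# Toy parameters: p = 2q + 1 with p and q prime; g = 4 generates the
# subgroup of prime order q. Real deployments need much larger values.
P, Q, G = 2039, 1019, 4


def keygen():
    x = secrets.randbelow(Q - 1) + 1   # private key in [1, q - 1]
    return x, pow(G, x, P)             # (private key, public key)


def _split_key(shared):
    # Hash the shared group element and split it into two 64-bit keys.
    k = hashlib.sha256(shared.to_bytes((P.bit_length() + 7) // 8, "big")).digest()
    return k[:8], k[8:16]


def _xor_stream(key, data):
    # Toy stream cipher: SHA-256 in counter mode XORed with the data, so
    # encryption and decryption are the same operation.
    stream = b"".join(hashlib.sha256(key + i.to_bytes(8, "big")).digest()
                      for i in range(len(data) // 32 + 1))
    return bytes(d ^ s for d, s in zip(data, stream))


def _keyed_hash(key, data):
    # 128-bit keyed hash r = KH_K2(m), here truncated HMAC-SHA256.
    return hmac.new(key, data, hashlib.sha256).digest()[:16]


def signcrypt(m, xa, yb):
    """Signcrypt bytes m with sender private key xa for receiver public key yb."""
    while True:
        x = secrets.randbelow(Q - 1) + 1           # Step 1
        k1, k2 = _split_key(pow(yb, x, P))         # Steps 2 and 3
        c = _xor_stream(k1, m)                     # Step 4
        r = _keyed_hash(k2, m)                     # Step 5
        denom = (int.from_bytes(r, "big") + xa) % Q
        if denom:                                  # inverse must exist
            s = x * pow(denom, -1, Q) % Q          # Step 6
            return c, r, s                         # Step 7


def unsigncrypt(C, ya, xb):
    """Recover m with sender public key ya and receiver private key xb."""
    c, r, s = C
    r_int = int.from_bytes(r, "big")
    # (ya * g^r)^(s * xb) = g^((xa + r) * s * xb) = g^(x * xb) = yb^x
    shared = pow(ya * pow(G, r_int, P) % P, s * xb, P)
    k1, k2 = _split_key(shared)
    m = _xor_stream(k1, c)
    if not hmac.compare_digest(_keyed_hash(k2, m), r):
        raise ValueError("signcryptext failed verification")
    return m
```

For a secret image, `m` would be its flattened pixel bytes. Unsigncryption rebuilds the same key pair from (c, r, s) and rejects any signcryptext whose keyed hash does not match the decrypted message.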

Finally, the encrypted secret image is embedded at the optimally selected pixel points of the cover image. This protects the secret image twice: it is encrypted, and it is hidden inside the stego image.
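The recoverable text does not spell out the embedding rule, so the sketch below uses plain least-significant-bit (LSB) substitution at the selected pixel positions as a hypothetical stand-in. In the full scheme, the positions would come from CSO-OPS and the payload would be the signcrypted secret image. All function names are illustrative.

```python
def bytes_to_bits(data):
    """Most-significant-bit-first bit list for a byte string."""
    return [(byte >> (7 - k)) & 1 for byte in data for k in range(8)]


def bits_to_bytes(bits):
    """Inverse of bytes_to_bits for a bit list whose length is a multiple of 8."""
    return bytes(sum(b << (7 - k) for k, b in enumerate(bits[i:i + 8]))
                 for i in range(0, len(bits), 8))


def embed_bits(cover, positions, bits):
    """Write one payload bit into the LSB of each selected pixel (a copy)."""
    if len(bits) > len(positions):
        raise ValueError("not enough selected pixels for the payload")
    stego = [row[:] for row in cover]          # leave the cover untouched
    for (i, j), bit in zip(positions, bits):
        stego[i][j] = (stego[i][j] & ~1) | bit
    return stego


def extract_bits(stego, positions, n_bits):
    """Read the payload back from the same selected positions."""
    return [stego[i][j] & 1 for (i, j) in positions[:n_bits]]
```

Each selected pixel changes by at most one gray level, which is why the choice of positions, rather than the substitution itself, drives the MSE and PSNR of the stego image.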

3.2 UAV Image Classification Module

To perform UAV image classification, the CSODL-SUAVC model carries out three sub-processes, namely SRCNN-based feature extraction, Adam optimization, and softmax classification. Several studies have applied DL techniques to image super-resolution [24]. Specifically, SRCNN directly learns an end-to-end mapping between low- and high-resolution images. The mapping is represented by a deep CNN that performs patch extraction and representation, non-linear mapping, and reconstruction. Before describing these operations, consider a single low-resolution image. The image is first up-scaled to the desired size using bicubic interpolation; the result is then referred to as the interpolated image, i.e.,

At first, patch extraction and representation are formulated as given herewith.

In Eq. (14),

Next, the nonlinear mapping is expressed as follows.

In Eq. (15),

In Eq. (16),

In Eq. (17), the number of trained instances is represented by
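For reference, the standard SRCNN formulation (as introduced by Dong et al.) of the three stages and the training loss is given below. The article's own Eqs. (14) to (17) are not recoverable here, so these symbols are an assumption: Y is the interpolated low-resolution image, X the corresponding high-resolution image, W_k and B_k the filters and biases of layer k, * denotes convolution, Θ = {W_1, W_2, W_3, B_1, B_2, B_3}, and n is the number of training samples.

$$
\begin{aligned}
F_1(\mathbf{Y}) &= \max\left(0,\; W_1 * \mathbf{Y} + B_1\right) && \text{(patch extraction and representation)}\\
F_2(\mathbf{Y}) &= \max\left(0,\; W_2 * F_1(\mathbf{Y}) + B_2\right) && \text{(non-linear mapping)}\\
F(\mathbf{Y}) &= W_3 * F_2(\mathbf{Y}) + B_3 && \text{(reconstruction)}\\
L(\Theta) &= \frac{1}{n}\sum_{i=1}^{n}\left\lVert F(\mathbf{Y}_i;\Theta) - \mathbf{X}_i\right\rVert^2 && \text{(mean squared error loss)}
\end{aligned}
$$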

The softmax (SM) classifier is used to assign class labels to the input UAV images.

It takes every input value, whether positive, negative, or zero, and converts it into a value that always lies between

whereas,
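A minimal sketch of softmax classification, assuming the final layer produces one real-valued score (logit) per scene class. Subtracting the maximum score before exponentiating avoids overflow without changing the result, because softmax is unchanged when a constant is added to every input. The function names and the class labels used in the usage note are illustrative.

```python
import math


def softmax(logits):
    """Map real-valued scores to probabilities in [0, 1] that sum to 1."""
    m = max(logits)                       # shift for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits, labels):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]
```

For example, `predict([2.0, 1.0, -1.0], ["forest", "farmland", "storage tanks"])` returns the label `"forest"`, since it has the largest score.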

To improve the performance of the SRCNN algorithm, the Adam optimizer is employed. Adam is an optimization algorithm that iteratively updates the network weights based on the training data, in place of the standard Stochastic Gradient Descent (SGD) procedure. It is well suited to problems with a large number of parameters or large amounts of data, and it is computationally efficient with modest memory requirements. Adam combines two Gradient Descent (GD) techniques, GD with momentum and Root Mean Square propagation (RMSprop) [25], and thereby inherits the strengths of both. Combining the update rules of the two methods yields the following equation.

Because the moment estimates start at zero, Adam applies a bias correction so that they remain unbiased throughout training; the name Adam stands for adaptive moment estimation. At this point, rather than the normal weighted parameters,

The Adam optimizer is used throughout training because of its high efficiency and low memory requirements.
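A minimal sketch of the standard Adam update, written for a plain list of parameters rather than SRCNN weights. The first moment is the momentum term, the second moment is the RMSprop term, and both are bias-corrected. The default hyperparameters (lr = 0.001, beta1 = 0.9, beta2 = 0.999, eps = 1e-8) are the commonly used ones, not values reported in the article, and the function name is hypothetical.

```python
import math


def adam_minimize(grad, x0, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Run `steps` Adam updates from x0, where grad(x) returns a gradient list."""
    x = list(x0)
    m = [0.0] * len(x)   # first moment (momentum)
    v = [0.0] * len(x)   # second moment (RMSprop)
    for t in range(1, steps + 1):
        g = grad(x)
        for i in range(len(x)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)   # bias correction: both moments
            v_hat = v[i] / (1 - beta2 ** t)   # start at zero
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x
```

For example, minimizing f(x) = (x − 3)² with `grad = lambda x: [2 * (x[0] - 3.0)]` and `lr=0.1` drives x towards 3. Thanks to the bias correction, the very first step already has a magnitude of about `lr`.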

In this section, the proposed CSODL-SUAVC approach was experimentally validated using the UCM [26] and AID datasets. A few sample images are shown in Fig. 3. The UCM dataset comprises 21 scene types, such as storage tanks, residential areas, farmland, and forest. Each scene type contains 100 images of 256 × 256 pixels, so the dataset has 2,100 RGB images in total, with a spatial resolution of ~0.3 m. The AID dataset covers 30 finely classified scene types. The number of RGB images per category ranges from ~220 to 440, for a total of 10,000 images. The images are 600 × 600 pixels, with resolutions of ~0.5–8 m.

Figure 3: Sample images

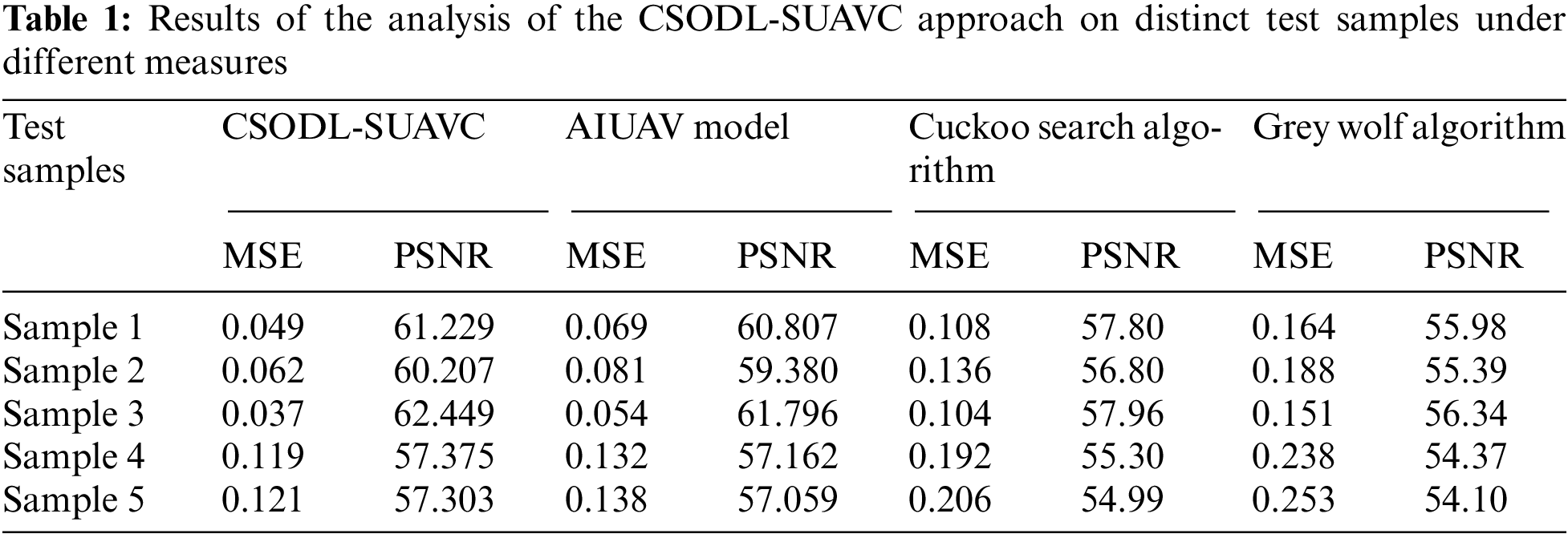

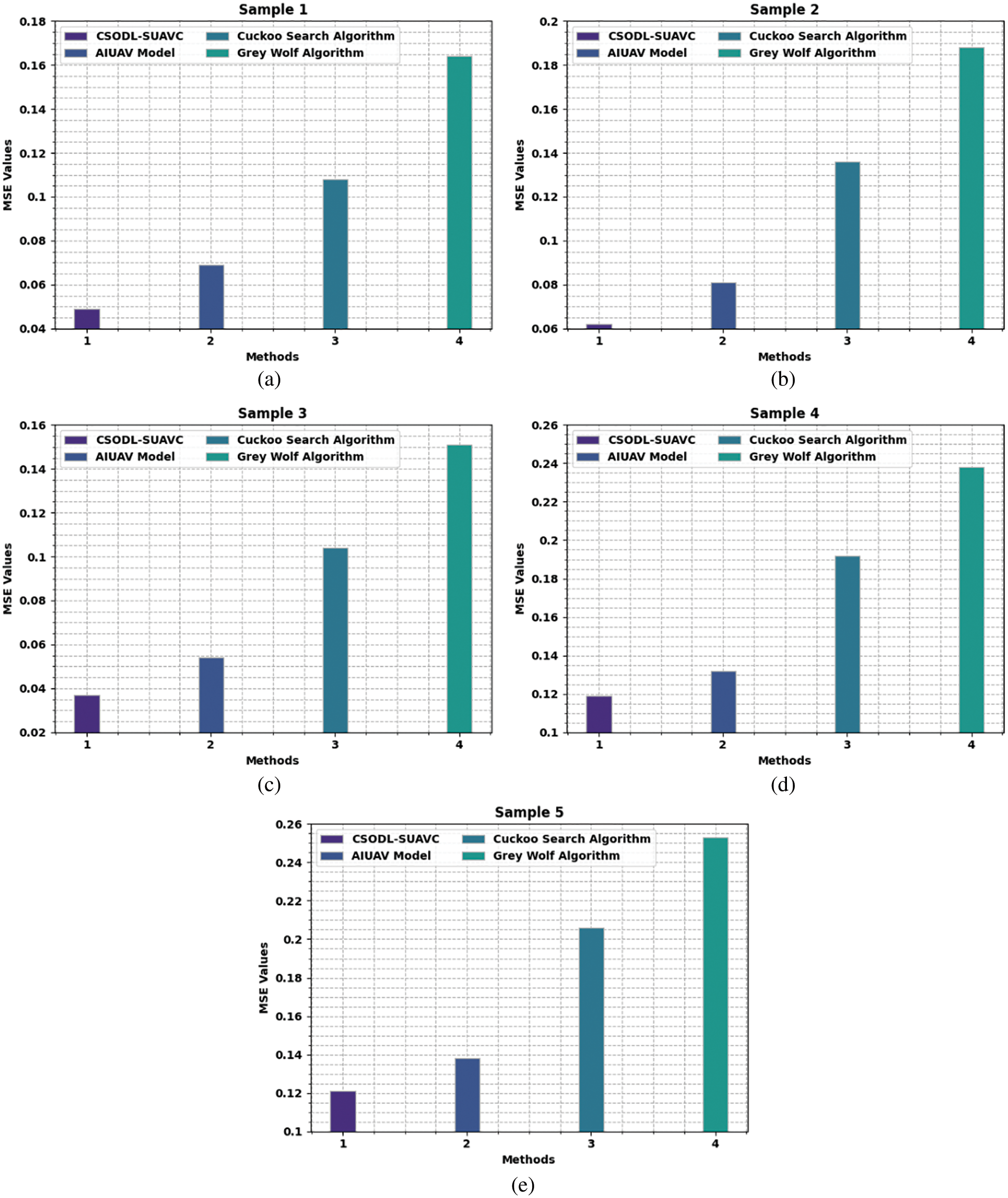

Table 1 provides the results of the proposed CSODL-SUAVC system and other existing models in terms of MSE and PSNR [27]. Fig. 4 shows the Mean Square Error (MSE) analysis of the CSODL-SUAVC system and other recent models for different samples. The figure indicates that the proposed CSODL-SUAVC method achieved the lowest MSE values. For example, in sample 1, the CSODL-SUAVC model obtained an MSE of 0.049, whereas the AI-based UAV (AIUAV), Cuckoo Search (CS), and Grey Wolf Optimization (GWO) techniques attained higher MSE values of 0.069, 0.108, and 0.164, respectively. In sample 5, the CSODL-SUAVC approach obtained an MSE of 0.121, whereas the AIUAV, CS, and GWO techniques obtained 0.138, 0.206, and 0.253, respectively.

Figure 4: MSE analysis results of CSODL-SUAVC approach (a) Sample 1, (b) Sample 2, (c) Sample 3, (d) Sample 4, and (e) Sample 5

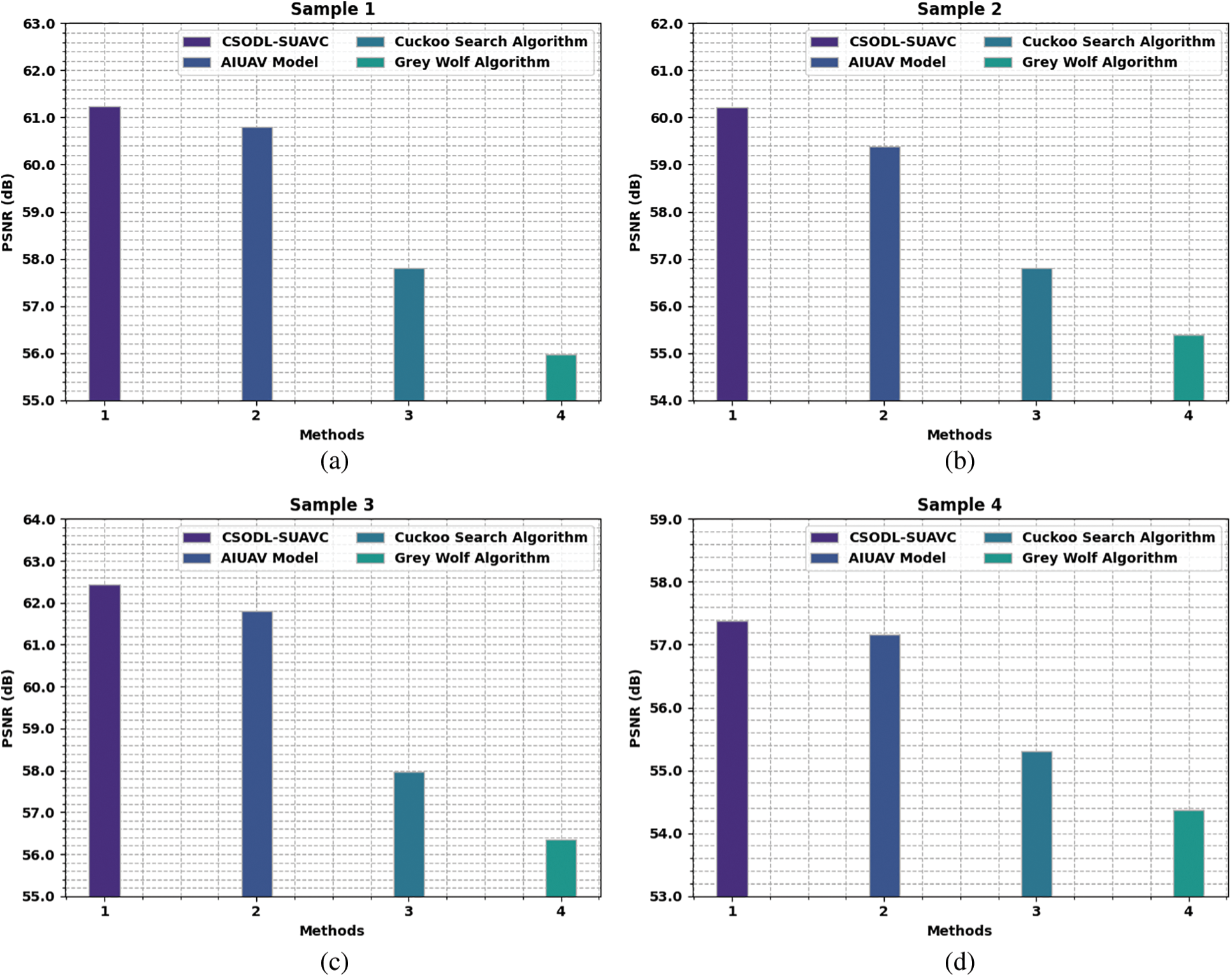

A comparative Peak Signal-to-Noise Ratio (PSNR) study was conducted on the CSODL-SUAVC model and other existing models, and the results are shown in Fig. 5. The proposed CSODL-SUAVC model achieved the highest PSNR values for every sample. For instance, in sample 1, the CSODL-SUAVC model achieved a PSNR of 61.229 dB, while the AIUAV, CS, and GWO approaches produced lower PSNR values of 60.807, 57.80, and 55.98 dB, respectively. In sample 5, the CSODL-SUAVC technique achieved a PSNR of 57.303 dB, whereas the AIUAV, CS, and GWO methodologies produced 57.059, 54.99, and 54.10 dB, respectively.
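As a quick consistency check, assuming the standard PSNR definition for 8-bit images (peak value 255, so 255² = 65025), the MSE values reported for the CSODL-SUAVC model in samples 1 and 5 reproduce its reported PSNR values:

$$
\mathrm{PSNR} = 10\log_{10}\frac{255^2}{\mathrm{MSE}},\qquad
10\log_{10}\frac{65025}{0.049} \approx 61.23~\mathrm{dB},\qquad
10\log_{10}\frac{65025}{0.121} \approx 57.30~\mathrm{dB}
$$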

Figure 5: PSNR analysis results of CSODL-SUAVC approach (a) Sample 1, (b) Sample 2, (c) Sample 3, (d) Sample 4, and (e) Sample 5

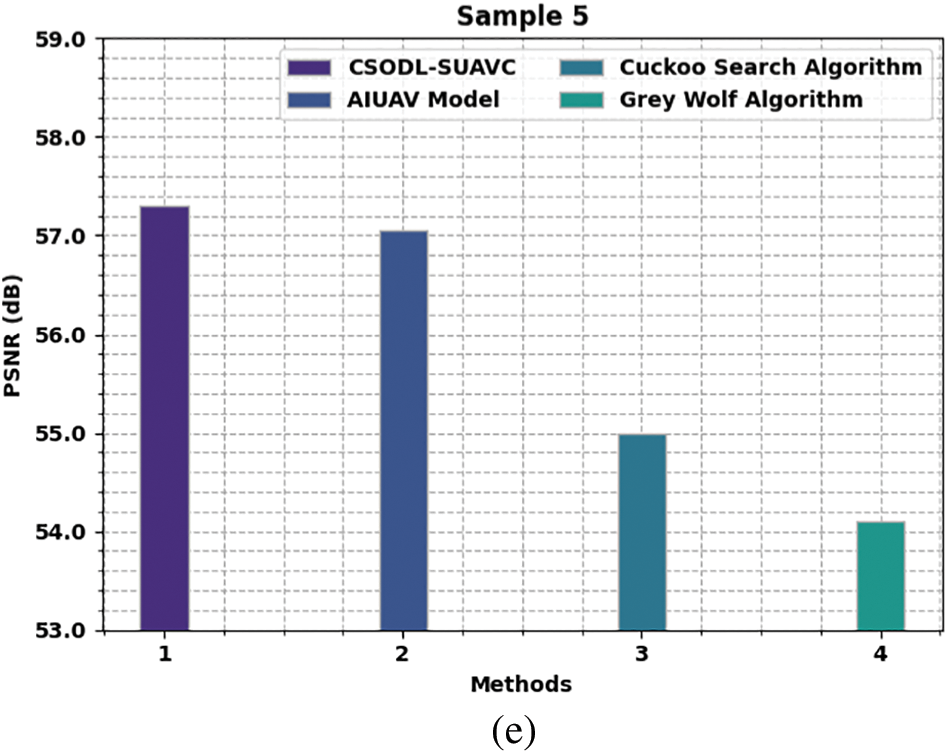

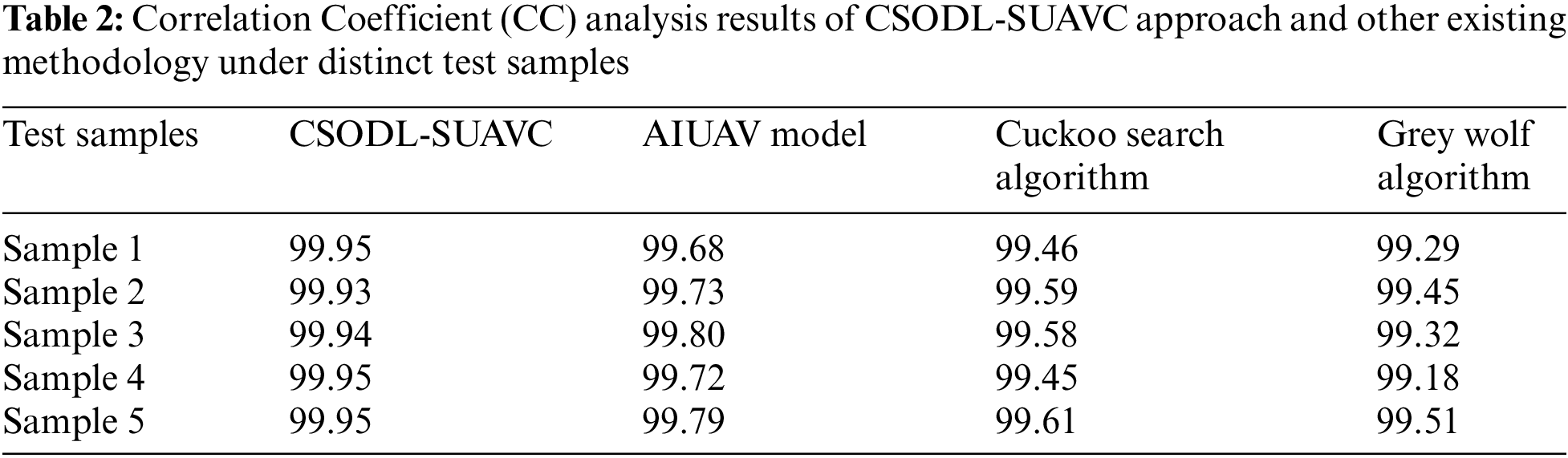

A comparative Correlation Coefficient (CC) analysis was conducted between the CSODL-SUAVC approach and other existing methodologies, and the results are illustrated in Table 2 and Fig. 6. The proposed CSODL-SUAVC approach achieved the highest CC values for all samples. For instance, in sample 1, the CSODL-SUAVC technique achieved a CC of 99.95, whereas the AIUAV, CS, and GWO algorithms achieved lower CC values of 99.68, 99.46, and 99.29, respectively. In sample 5, the CSODL-SUAVC system again achieved a CC of 99.95, whereas the AIUAV, CS, and GWO algorithms achieved 99.79, 99.61, and 99.51, respectively.
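CC is not defined in the recoverable text. Assuming it is the Pearson correlation coefficient between the cover and stego images, reported as a percentage, it can be computed as in this sketch (the function name is hypothetical).

```python
import math


def correlation_coefficient(a, b):
    """Pearson correlation between two equally sized single-channel images."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    if len(xs) != len(ys):
        raise ValueError("images must have the same size")
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant image")
    return cov / (sx * sy)
```

Multiplying the result by 100 gives values on the same scale as the CC figures above; a stego image that differs from its cover in only a few pixels by one gray level yields a value close to 100.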

Figure 6: CC analysis results of CSODL-SUAVC approach and other methodologies with distinct test samples

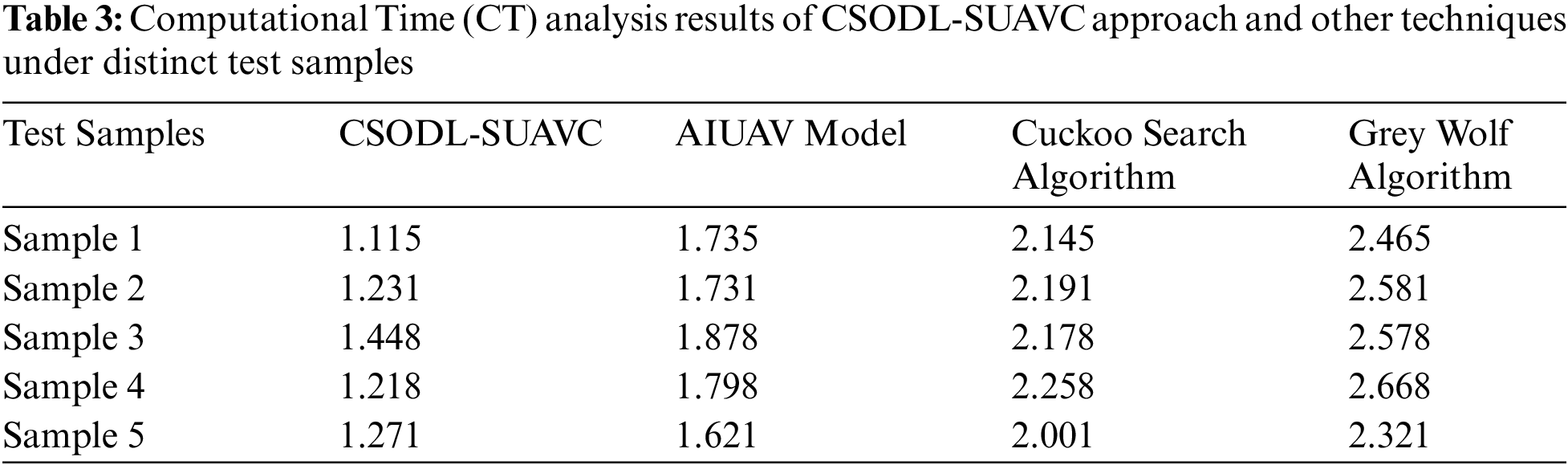

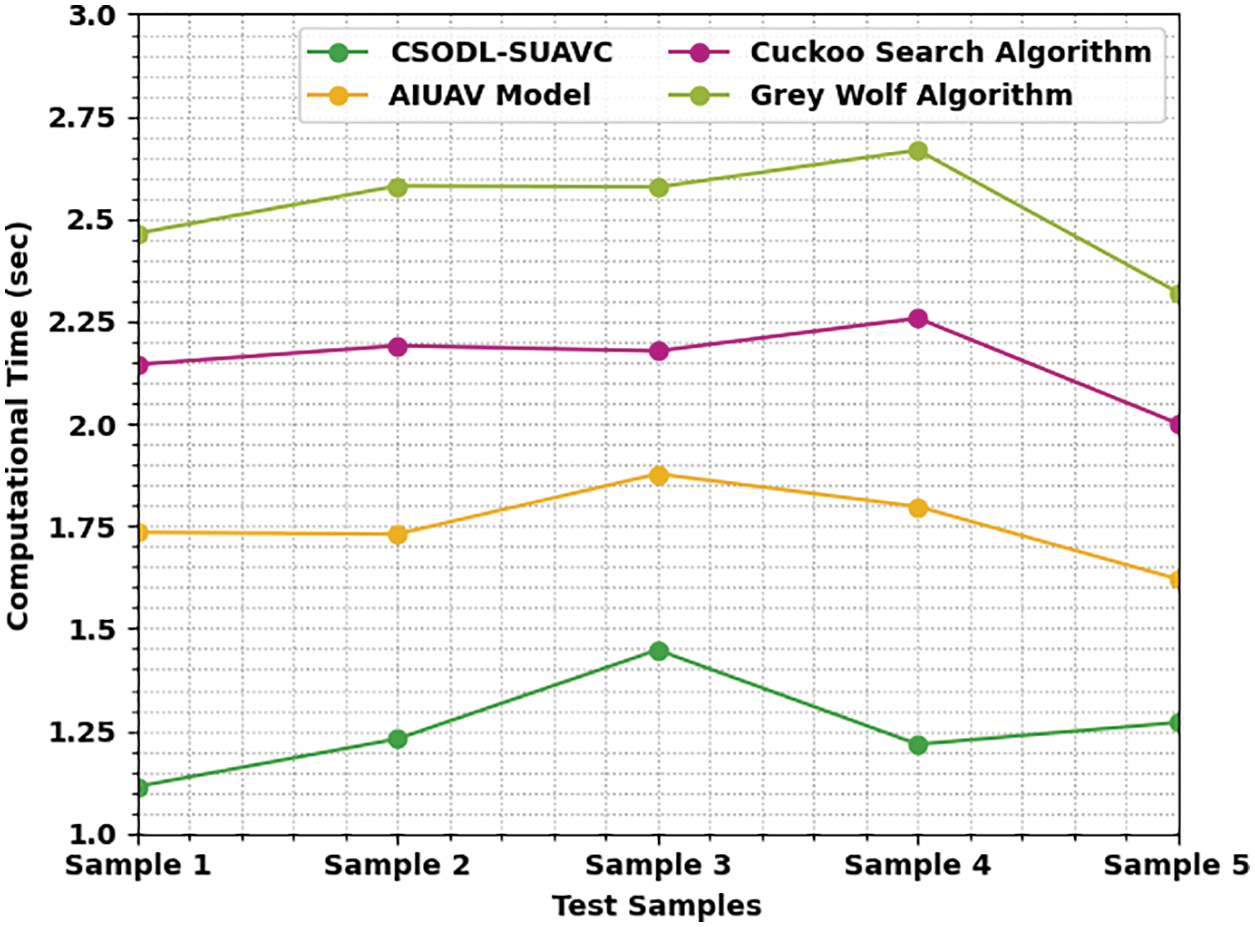

Table 3 and Fig. 7 present the Computation Time (CT) results of the proposed CSODL-SUAVC approach and other recent models for different samples. The figure shows that the proposed CSODL-SUAVC system attained the lowest CT values. For instance, in sample 1, the CSODL-SUAVC algorithm required a CT of 1.115 s, whereas the AIUAV, CS, and GWO algorithms required 1.735, 2.145, and 2.465 s, respectively. Likewise, in sample 5, the CSODL-SUAVC model required 1.271 s, whereas the AIUAV, CS, and GWO algorithms required 1.621, 2.001, and 2.321 s, respectively.

Figure 7: CT analysis results of CSODL-SUAVC approach and other techniques under distinct test samples

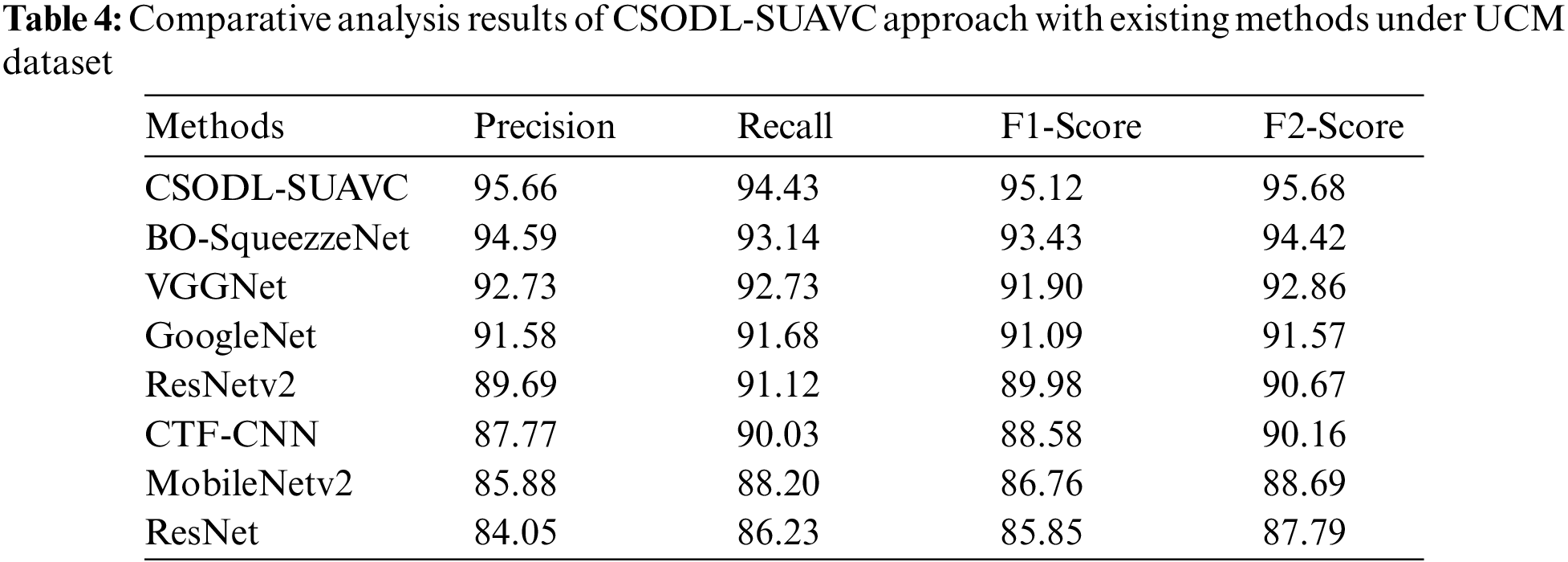

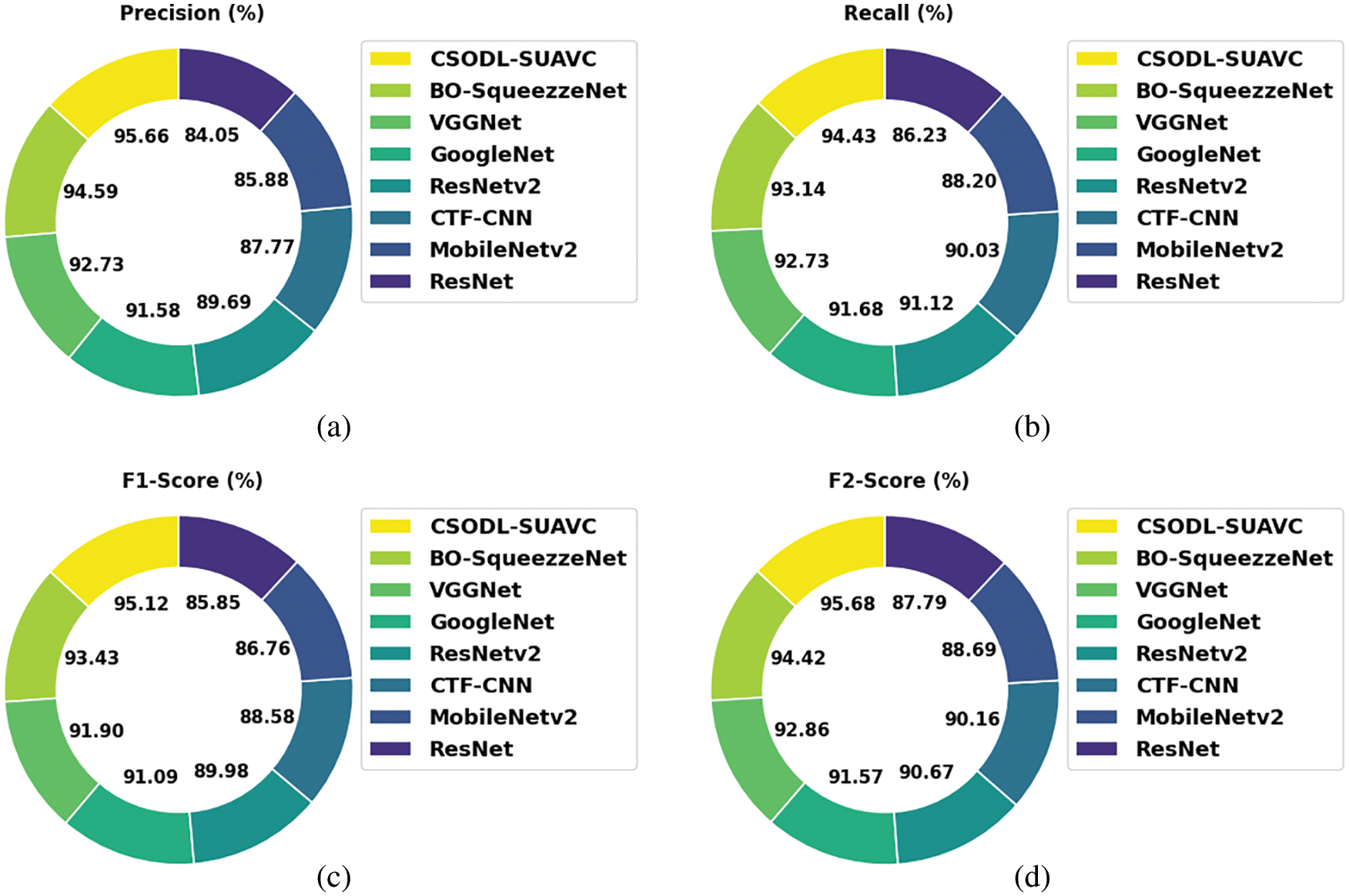

Table 4 and Fig. 8 provide an overview of the comparative analysis results of the proposed CSODL-SUAVC system on the UCM test dataset [28,29]. The results show that the CSODL-SUAVC model attains the highest classification results under the different measures. For

Figure 8: Comparative analysis results of CSODL-SUAVC approach under UCM dataset (a)

Besides, concerning

In this study, a new CSODL-SUAVC model has been developed to accomplish secure UAV communication and classification in the Industry 4.0 environment. The CSODL-SUAVC technique performs image steganography via ML-DWT, CSO-based optimal pixel selection, and signcryption-based encryption. The image classification module encompasses SRCNN-based feature extraction, the Adam optimizer, and a softmax classifier. The integration of the CSO-OPS algorithm and the Adam optimizer helps the model achieve maximum performance in UAV communication. The CSODL-SUAVC method was experimentally validated on benchmark datasets, and the outcomes were measured under distinct aspects. The simulation outcomes show the better efficiency of the CSODL-SUAVC model over recent approaches. Thus, the CSODL-SUAVC model can be applied to enable secure communication and classification in a UAV environment. In the future, hybrid DL methodologies can be applied to improve the classification performance of the CSODL-SUAVC model.

Funding Statement: The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work through the small Groups Project under grant number (168/43). Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2022R151), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. The authors would like to thank the Deanship of Scientific Research at Umm Al-Qura University for supporting this work by Grant Code: (22UQU4310373DSR59).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. D. K. Jain, Y. Li, M. J. Er, Q. Xin, D. Gupta et al., “Enabling unmanned aerial vehicle borne secure communication with classification framework for industry 5.0,” IEEE Transactions on Industrial Informatics, vol. 18, no. 8, pp. 5477–5484, 2022.

2. D. De, A. Karmakar, P. S. Banerjee, S. Bhattacharyya and J. J. Rodrigues, “BCoT: Introduction to blockchain-based internet of things for industry 4.0,” in: Blockchain Based Internet of Things, Lecture Notes on Data Engineering and Communications Technologies Book Series, vol. 112. Singapore: Springer, pp. 1–22, 2022.

3. K. Dev, K. F. Tsang and J. Corchado, “Guest editorial: The era of industry 5.0—technologies from no recognizable hm interface to hearty touch personal products,” IEEE Transactions on Industrial Informatics, vol. 18, no. 8, pp. 5432–5434, 2022.

4. P. F. Lamas, S. I. Lopes and T. M. F. Caramés, “Green IoT and edge AI as key technological enablers for a sustainable digital transition towards a smart circular economy: An industry 5.0 use case,” Sensors, vol. 21, no. 17, pp. 5745, 2021.

5. I. Abunadi, M. M. Althobaiti, F. N. Al-Wesabi, A. M. Hilal, M. Medani et al., “Federated learning with blockchain assisted image classification for clustered UAV networks,” Computers, Materials & Continua, vol. 72, no. 1, pp. 1195–1212, 2022.

6. B. Chander, S. Pal, D. De and R. Buyya, “Artificial intelligence-based internet of things for industry 4.0,” in: Artificial Intelligence-based Internet of Things Systems, Internet of Things, Cham: Springer, pp. 3–45, 2022.

7. M. A. Alohali, F. N. Al-Wesabi, A. M. Hilal, S. Goel, D. Gupta et al., “Artificial intelligence enabled intrusion detection systems for cognitive cyber-physical systems in industry 4.0 environment,” Cognitive Neurodynamics, vol. 16, no. 5, pp. 1045–1057, 2022. https://doi.org/10.1007/s10586-021-03401-5.

8. D. G. Broo, O. Kaynak and S. M. Sait, “Rethinking engineering education at the age of industry 5.0,” Journal of Industrial Information Integration, vol. 25, no. 8, pp. 100311, 2022.

9. A. M. Hilal, J. S. Alzahrani, I. Abunadi, N. Nemri, F. N. Al-Wesabi et al., “Intelligent deep learning model for privacy preserving IIoT on 6G environment,” Computers, Materials & Continua, vol. 72, no. 1, pp. 333–348, 2022.

10. A. V. L. N. Sujith, G. S. Sajja, V. Mahalakshmi, S. Nuhmani and B. Prasanalakshmi, “Systematic review of smart health monitoring using deep learning and artificial intelligence,” Neuroscience Informatics, vol. 2, no. 3, pp. 100028, 2022.

11. A. M. Hilal, M. A. Alohali, F. N. Al-Wesabi, N. Nemri, H. J. Alyamani et al., “Enhancing quality of experience in mobile edge computing using deep learning based data offloading and cyberattack detection technique,” Cluster Computing, vol. 76, no. 4, pp. 2518, 2021. https://doi.org/10.1007/s10586-021-03401-5.

12. S. A. A. Hakeem, H. H. Hussein and H. Kim, “Security requirements and challenges of 6G technologies and applications,” Sensors, vol. 22, no. 5, pp. 1969, 2022.

13. P. Porambage, G. Gur, D. P. M. Osorio, M. Liyanage and M. Ylianttila, “6G security challenges and potential solutions,” in 2021 Joint European Conf. on Networks and Communications & 6G Summit (EuCNC/6G Summit), Porto, Portugal, pp. 622–627, 2021.

14. S. Wang, M. A. Qureshi, L. M. Pechuaán, T. H. The, T. R. Gadekallu et al., “Explainable AI for B5G/6G: Technical aspects, use cases, and research challenges,” arXiv preprint arXiv:2112.04698, 2021.

15. P. K. R. Maddikunta, Q. V. Pham, B. Prabadevi, N. Deepa, K. Dev et al., “Industry 5.0: A survey on enabling technologies and potential applications,” Journal of Industrial Information Integration, vol. 26, no. 2, pp. 100257, 2022.

16. R. Sharma and R. Arya, “UAV based long range environment monitoring system with Industry 4.0 perspectives for smart city infrastructure,” Computers & Industrial Engineering, vol. 168, pp. 10806, 2022.

17. S. A. Bhat, N. F. Huang, I. B. Sofi and M. Sultan, “Agriculture-food supply chain management based on blockchain and IoT: A narrative on enterprise blockchain interoperability,” Agriculture, vol. 12, no. 1, pp. 40, 2021.

18. A. A. Fanid, M. Dabaghchian, N. Wang, P. Wang, L. Zhao et al., “Machine learning-based delay-aware UAV detection over encrypted wi-fi traffic,” in 2019 IEEE Conf. on Communications and Network Security (CNS), Washington, D.C., USA, pp. 1–7, 2019.

19. A. Aldaej, T. A. Ahanger, M. Atiquzzaman, I. Ullah and M. Yousufudin, “Smart cybersecurity framework for IoT-empowered drones: Machine learning perspective,” Sensors, vol. 22, no. 7, pp. 2630, 2022.

20. Q. A. Al-Haija and A. Al Badawi, “High-performance intrusion detection system for networked UAVs via deep learning,” Neural Computing and Applications, vol. 34, no. 13, pp. 10885–10900, 2022. https://doi.org/10.1007/s00521-022-07015-9.

21. R. Kumar, P. Kumar, R. Tripathi, G. P. Gupta, T. R. Gadekallu et al., “SP2F: A secured privacy-preserving framework for smart agricultural unmanned aerial vehicles,” Computer Networks, vol. 187, no. 2, pp. 107819, 2021.

22. K. K. Hasan, U. K. Ngah and M. F. M. Salleh, “Multilevel decomposition discrete wavelet transform for hardware image compression architectures applications,” in 2013 IEEE Int. Conf. on Control System, Computing and Engineering, Penang, Malaysia, pp. 315–320, 2013.

23. M. H. Qais, H. M. Hasanien, R. A. Turky, S. Alghuwainem, M. T. Véliz et al., “Circle search algorithm: A geometry-based metaheuristic optimization algorithm,” Mathematics, vol. 10, no. 10, pp. 1626, 2022.

24. N. Galgali, M. M. Pereira, N. K. Likitha, B. R. Madhushri, E. S. Vani et al., “Real-time image deblurring and super resolution using convolutional neural networks,” in: Emerging Research in Computing, Information, Communication and Applications, Singapore: Springer, pp. 381–394, 2022.

25. D. Bhowmik, M. Abdullah, R. Bin and M. T. Islam, “A deep face-mask detection model using DenseNet169 and image processing techniques,” Doctoral dissertation, Brac University, 2022.

26. Y. Yang and S. Newsam, “Bag-of-visual-words and spatial extensions for land-use classification,” in ACM SIGSPATIAL Int. Conf. on Advances in Geographic Information Systems (ACM GIS), 2010. [Online]. Available: http://weegee.vision.ucmerced.edu/datasets/landuse.html.

27. R. L. Ambika, Biradar and V. Burkpalli, “Encryption-based steganography of images by multiobjective whale optimal pixel selection,” International Journal of Computers and Applications, vol. 46, no. 4, pp. 1–10, 2019.

28. Y. Li, R. Chen, Y. Zhang, M. Zhang and L. Chen, “Multi-label remote sensing image scene classification by combining a convolutional neural network and a graph neural network,” Remote Sensing, vol. 12, no. 23, pp. 4003, 2020.

29. D. Yu, Q. Xu, H. Guo, C. Zhao, Y. Lin et al., “An efficient and lightweight convolutional neural network for remote sensing image scene classification,” Sensors, vol. 20, no. 7, pp. 1999, 2020.


Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
