Open Access

ARTICLE

Iris Recognition Based on Multilevel Thresholding Technique and Modified Fuzzy c-Means Algorithm

1 Computer Engineering Department, Faculty of Computers and Information Technology, University of Tabuk, Tabuk, 47512, Saudi Arabia

2 Department of Electrical Engineering, University of Tunis, CEREP, ENSIT 5 Av, Taha Hussein, 1008, Tunis, Tunisia

* Corresponding Author: Slim Ben Chaabane. Email:

Journal on Artificial Intelligence 2022, 4(4), 201-214. https://doi.org/10.32604/jai.2022.032850

Received 31 May 2022; Accepted 28 July 2022; Issue published 25 May 2023

Abstract

Biometrics is the technology of measuring characteristics of the human body. Biometric authentication currently allows secure, easy, and fast access by recognizing a person based on facial, voice, and fingerprint traits. Iris authentication is one of the most important biometric methods for identifying a person, and it has become popular in both research and practical applications. Unlike the face and hands, the iris is an internal, protected organ and is therefore less likely to be damaged. Moreover, the amount of useful information that can be collected from the iris is much greater than that available from other biometric traits. This work proposes a new iris identification model based on a multilevel thresholding technique and a modified Fuzzy c-means algorithm. The multilevel thresholding technique extracts the iris from its surroundings, such as specular reflections, eyelashes, the pupil, and the sclera. The modified Fuzzy c-means algorithm is then used to combine and classify the most useful statistical features, maximizing the accuracy of the collected information and thus yielding more accurate iris recognition. The results of the proposed model are validated using the True Success Rate (TSR) and compared with other existing models. They show the effectiveness of combining the two stages of the proposed model, the Otsu method and the modified Fuzzy c-means algorithm, on 400 tested images representing 40 people.

The process of validating a person’s identity based on his/her characteristics is called authentication. Several identification methods have been proposed to balance technological solutions against the security of sensitive information [1–3].

Biometrics is considered among the most accurate and reliable technologies for authenticating and identifying a person based on his/her biological characteristics, such as hand morphology, retina, iris, voice, DNA, fingerprints, and signatures [4].

Iris recognition is one of the safest and most accurate biometric authentication methods. Unlike the hands and face, the iris is an internal, protected organ and is therefore less likely to be damaged [5–7].

The authors in [8] proposed a new model for iris recognition using the Scale-Invariant Feature Transform (SIFT). First, SIFT features are extracted. Then, two images are matched by comparing the descriptors associated with each local extremum. Experimental results on the BioSec multimodal database demonstrate that combining SIFT with this matching approach performs significantly better than existing approaches.

Another model, based on Speeded Up Robust Features (SURF), is proposed in [9]. It extracts distinctive features from annular iris images and achieves satisfactory recognition rates. In [10], the authors developed an iris recognition system using SURF keypoints extracted from normalized and enhanced iris images, which yields high accuracy.

With the same objective, Masek [11] used the Canny edge detector and the circular Hough transform to detect the iris boundaries. Log-Gabor wavelets are used to extract features, and the Hamming distance is used for recognition. In [12], the authors developed an iris recognition system that characterizes local variations of image structures. This work builds a one-dimensional (1D) intensity signal that retains the essential local variations of the original 2D iris image. Gaussian–Hermite moments of these intensity signals are then used as distinguishing features. For classification, the cosine similarity measure and a nearest-center classifier are used.
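To make the Hamming-distance matching used in approaches such as [11] concrete, the following is a minimal sketch of a fractional Hamming distance between two binary iris codes. It assumes each code comes with a noise mask marking usable bits (for example, bits not covered by eyelids); the mask convention and the function name `fractional_hamming` are illustrative assumptions, not taken from [11].

```python
import numpy as np


def fractional_hamming(code_a, code_b, mask_a, mask_b):
    """Fraction of disagreeing bits among the bits usable in both codes.

    code_*: boolean arrays (binary iris codes).
    mask_*: boolean arrays, True = bit is usable (hypothetical convention).
    Returns 1.0 when no bit is usable in both codes (treated as 'no match').
    """
    code_a = np.asarray(code_a, dtype=bool)
    code_b = np.asarray(code_b, dtype=bool)
    valid = np.asarray(mask_a, dtype=bool) & np.asarray(mask_b, dtype=bool)
    n_valid = np.count_nonzero(valid)
    if n_valid == 0:
        return 1.0
    # XOR marks disagreeing bits; only bits valid in both codes are counted.
    disagree = np.count_nonzero((code_a ^ code_b) & valid)
    return disagree / n_valid
```

Identical codes give 0.0, while codes from unrelated irises tend toward about 0.5, so a decision threshold is placed between these two values.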

Some recent papers have used machine learning for iris recognition. For example, in [13], the authors propose a model that uses artificial neural networks for personal identification. Another paper [14] also uses neural networks for iris recognition: it extracts the eye from an image and then normalizes and enhances it. This process is applied to many images, which are collected in one dataset, and a neural network is trained on this dataset for iris classification.

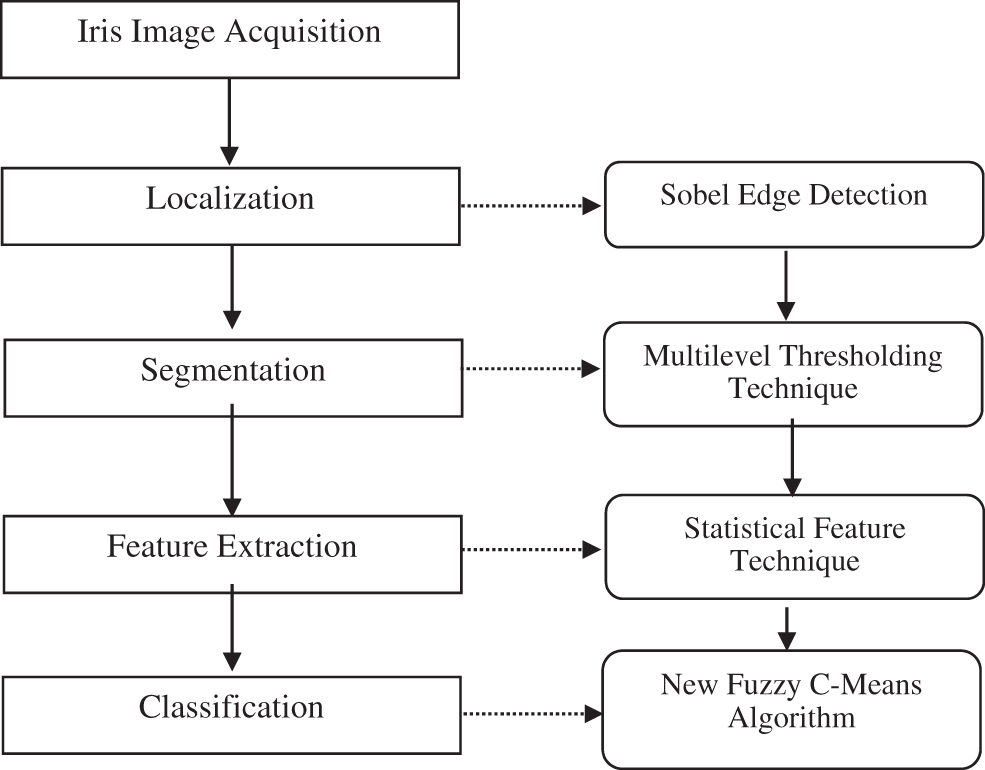

In our proposed model, the iris recognition system includes two main stages: “Iris segmentation and localization” and “Feature classification and extraction” [15]. Fig. 1 shows the steps of the methodology and the sequential processing of the proposed iris recognition system.

The iris recognition method in this work is conceptually different and explores a new strategy. Rather than refining a better-designed iris recognition model, the proposed approach explores the possibilities of combining the multilevel thresholding method with the Fuzzy c-means algorithm.

In the first step, image processing techniques are used to extract the iris from the image. Once the iris is localized, the two-stage multithreshold Otsu technique segments the image into two classes (iris and non-iris). Finally, features are extracted using a statistical features technique, and the Fuzzy c-means (FCM) classifier is used for classification.

Section 2 discusses the proposed iris recognition model. The results are presented in Section 3. Section 4 concludes the paper.

Iris recognition is a biometric technique that recognizes a person from their iris. It is an automated method for authenticating or identifying a person using iris features. In practice, iris recognition can be performed on images (photos) or video recordings of one or both irises of an individual, using mathematical pattern-recognition techniques on the complex iris patterns, which are unique, stable, and visible from some distance.

In this work, we focus on identifying people by their iris. As noted above, the proposed system is conceptually different and explores new strategies: rather than refining a better-designed iris recognition model, it proposes an alternative that combines a multilevel thresholding technique with a modified Fuzzy c-means algorithm.

The proposed iris recognition method is developed using four fundamental steps: (1) Localization, (2) segmentation, (3) encoding, and (4) iris matching/classification [15].

The iris localization step consists of detecting the iris in the eye image. The second step segments the image into two classes: iris and non-iris. The main phase of the recognition cycle is feature extraction, which extracts a feature vector for each iris identified in the second phase; accurate features lead to accurate results in the subsequent steps. The iris features are extracted from the raw images, a feature vector is built using the statistical feature technique, and the iris recognition result is finally obtained using the modified FCM technique. In the recognition phase, image features are matched and classified according to specific criteria to determine the identity of an iris by comparing it with all templates in the iris database. The flowchart in Fig. 1 depicts the stages of the proposed method.

Figure 1: The diagram of the proposed method

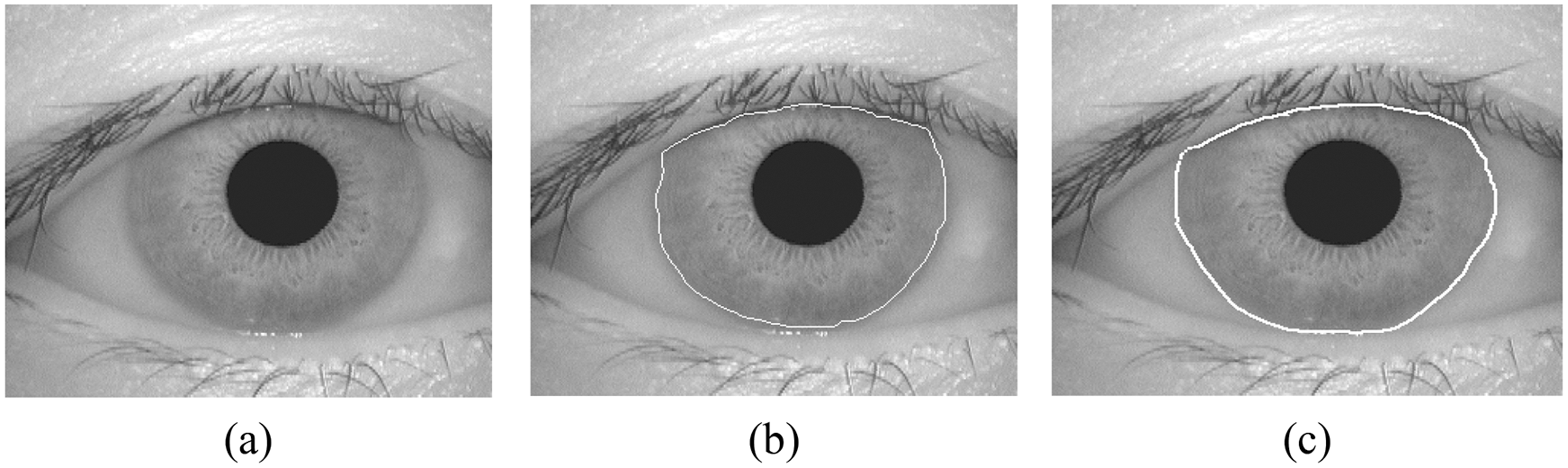

Figure 2: The localization of the iris. (a) Original image, (b) edge detection using the Sobel filter, (c) reference result

Iris localization is one of the most important steps in an iris recognition system because it determines matching accuracy. This step mainly identifies the iris boundaries, namely the inner and outer borders of the iris and the upper and lower eyelids. Sobel edge detection can be used to locate the iris in the original image. It convolves the image with two small integer-valued kernels, one horizontal and one vertical, which approximate the intensity gradient in each direction; edges are located where the resulting gradient magnitude (the steepest intensity slope) is highest. Fig. 2 presents the localization of the iris using Sobel edge detection alongside the reference edge detection.
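The Sobel step can be sketched as follows, assuming a grayscale image stored as a NumPy array. The `thresh_ratio` parameter (a pixel is marked as an edge when its gradient magnitude reaches a fraction of the maximum) is a hypothetical choice for illustration; the paper does not state how the magnitude is thresholded.

```python
import numpy as np

# Sobel kernels: SOBEL_X responds to horizontal intensity changes (vertical edges).
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def filter3x3(img, kernel):
    """Slide a 3x3 kernel over img (edge-replicated border, same output size).

    This is correlation; a true convolution flips the kernel, which only
    changes the sign of the Sobel responses, not the gradient magnitude.
    """
    img = np.asarray(img, dtype=float)
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def sobel_edges(img, thresh_ratio=0.5):
    """Return the gradient magnitude and a boolean edge map.

    thresh_ratio is hypothetical: pixels whose magnitude is at least
    thresh_ratio * max(magnitude) are marked as edges.
    """
    gx = filter3x3(img, SOBEL_X)
    gy = filter3x3(img, SOBEL_Y)
    magnitude = np.hypot(gx, gy)
    return magnitude, magnitude >= thresh_ratio * magnitude.max()
```

On a vertical step edge, only the two pixel columns on either side of the step receive a non-zero response, which is what the edge map marks.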

2.2 Multilevel Thresholding Using a Two Stage Optimization Approach

To select optimal thresholds for both unimodal and bimodal distributions, and to overcome the shortcomings of Otsu’s method in multilevel threshold selection, we used the two-stage multithreshold Otsu (TSMO) method; its general concept is presented in [16].

This technique significantly reduces the number of iterations required to compute the zeroth- and first-order cumulative moments of the classes. We developed the two-stage thresholding technique to automatically classify the eye image into the predefined categories of eye and non-eye.

At the first stage, the histogram of an image with L gray levels is divided into Mk groups, each containing Nk gray levels.

Let Ω = {

The zeroth-order cumulative moment in the qth group denoted by

where:

The first-order cumulative moment in the qth group denoted by

The optimal threshold

However, the image is divided into two classes

The numbers of the cumulative pixels (

and

In the case of bi-level thresholding (

At the second stage of multilevel thresholding technique, in order to find the optimal threshold T, Otsu’s method is applied again to the group

The optimal threshold T is defined as:

Given the optimal threshold determined automatically by the two-stage Otsu optimization approach, the IT function classifies the pixels of the input image into two opposite classes (object and background) as follows:
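The two-stage procedure can be sketched for the bilevel case as follows. This is a simplified reading of [16] under stated assumptions, not the authors' implementation: an 8-bit image (L = 256), 16 groups of 16 gray levels each (the paper's group sizes Mk and Nk are not given here), a second-stage search restricted to the gray levels of the selected group and the one after it, and object pixels taken as those above T. The function names are hypothetical.

```python
import numpy as np


def between_class_variance(p, values):
    """Otsu's between-class variance for every cut k (class 0 = bins 0..k).

    p: probability of each bin; values: gray value represented by each bin.
    """
    omega = np.cumsum(p)            # zeroth-order cumulative moment
    mu = np.cumsum(p * values)      # first-order cumulative moment
    mu_total = mu[-1]               # mean of the whole distribution
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    sigma_b[~np.isfinite(sigma_b)] = 0.0   # cuts that leave a class empty
    return sigma_b


def two_stage_otsu(image, levels=256, group_size=16):
    """Coarse Otsu over gray-level groups, then a fine Otsu search
    restricted to the gray levels around the chosen group cut."""
    if levels % group_size:
        raise ValueError("levels must be a multiple of group_size")
    hist = np.bincount(image.ravel(), minlength=levels)[:levels].astype(float)
    p = hist / hist.sum()
    n_groups = levels // group_size
    # Stage 1: M groups of N gray levels each; Otsu on the group histogram.
    p_groups = p.reshape(n_groups, group_size).sum(axis=1)
    centres = np.arange(n_groups) * group_size + (group_size - 1) / 2.0
    k = int(np.argmax(between_class_variance(p_groups, centres)))
    # Stage 2: fine search over the gray levels of groups k and k + 1 only
    # (the cumulative sums are cheap; the saving is in the restricted search).
    sigma_fine = between_class_variance(p, np.arange(levels, dtype=float))
    lo, hi = k * group_size, min((k + 2) * group_size, levels)
    return lo + int(np.argmax(sigma_fine[lo:hi]))


def apply_threshold(image, t):
    """Binary map: 1 above the threshold, 0 otherwise (polarity is an assumption)."""
    return (image > t).astype(np.uint8)
```

Because the first stage searches only 16 group bins, most of the exhaustive search is avoided; the saving grows for multilevel thresholds, where the number of threshold combinations grows combinatorially with L.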

During feature extraction, the system extracts features from the segmented image [17]. To do this, the statistical method is used to compute the most relevant statistical features and to build the attribute images [18]. Feature extraction is important because it largely determines overall system performance. Recognition systems (face recognition systems, for example) usually start with feature extraction, followed by feature selection, which increases the accuracy of the framework. Feature selection is essential because it affects the overall performance of the recognition system.

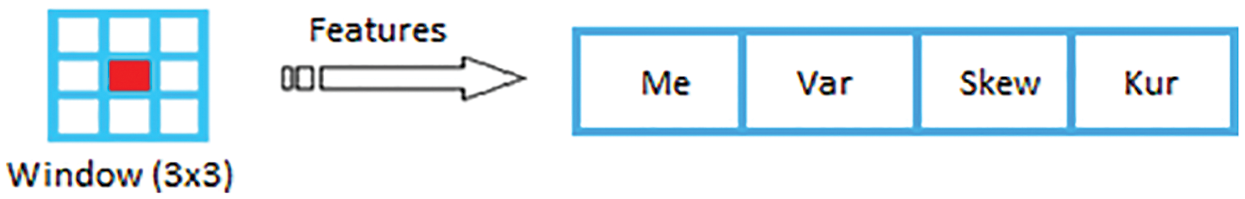

This work presents a new iris recognition technique that relies on statistical features. Such descriptive statistics are commonly used in data science: they are usually the first tool applied when examining a dataset and cover properties such as the mean, median, variance, and skewness.

In our preprocessing stage, four statistical characteristics were selected, namely the mean, standard deviation, skewness, and kurtosis, to capture the distinctiveness and variations of the features used by the iris recognition system.
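For reference, the usual population definitions of these four statistics over the n gray levels x_1, …, x_n in a window are given below. Whether the paper uses exactly these normalizations (for example n rather than n − 1, or plain rather than excess kurtosis) is an assumption.

```latex
\mu = \frac{1}{n}\sum_{j=1}^{n} x_j, \qquad
\sigma = \sqrt{\frac{1}{n}\sum_{j=1}^{n}\left(x_j-\mu\right)^{2}}, \qquad
\gamma_1 = \frac{1}{n}\sum_{j=1}^{n}\left(\frac{x_j-\mu}{\sigma}\right)^{3}, \qquad
\gamma_2 = \frac{1}{n}\sum_{j=1}^{n}\left(\frac{x_j-\mu}{\sigma}\right)^{4}
```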

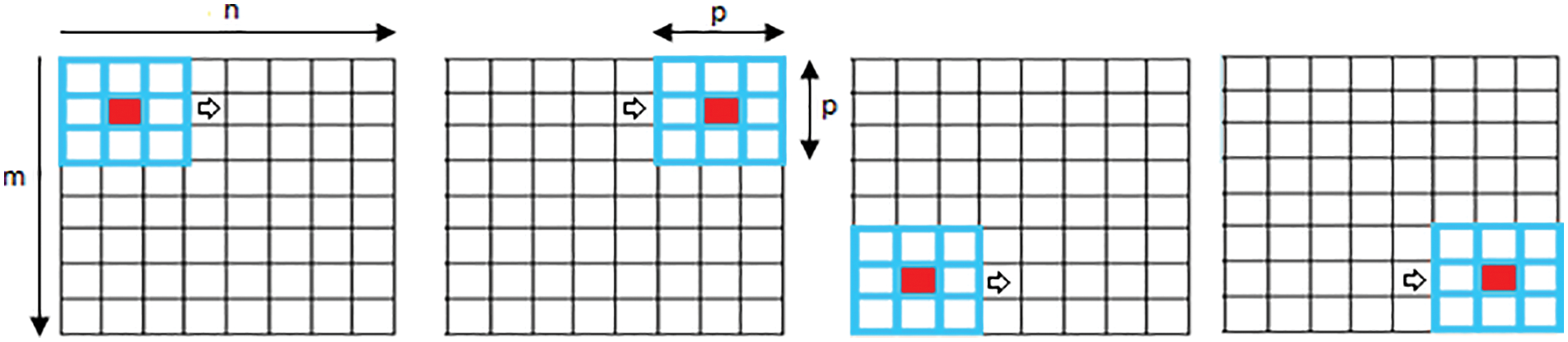

Instead of using the grayscale value of a particular pixel in the human iris median step, statistical features are computed from a sliding window (

Figure 3: The adaptive sliding window

The statistical attributes used in our application, as shown in Fig. 4, are: mean (

Figure 4: The features extraction

The calculation of the statistical features is affected by the length of the sliding window; hence, the window must be large enough to capture sufficient information from the raw image. Conversely, an excessively long window makes the computation time-consuming. A
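The per-pixel feature computation can be sketched with NumPy as follows. The window size `w=5`, the reflective padding at the image border, and the convention of setting skewness and kurtosis to zero in perfectly flat windows are assumptions, since the paper's window length is not given here; `window_features` is a hypothetical name. Each row of the returned matrix is one 1 × 4 feature vector [mean, standard deviation, skewness, kurtosis] for one pixel, in row-major pixel order.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def window_features(image, w=5):
    """Return an (H*W, 4) matrix of [mean, std, skewness, kurtosis] per pixel,
    computed over a w x w window centred on each pixel (w must be odd)."""
    if w % 2 == 0:
        raise ValueError("window size must be odd")
    img = np.asarray(image, dtype=float)
    r = w // 2
    # Reflect-pad so every pixel, including border pixels, gets a full window.
    padded = np.pad(img, r, mode="reflect")
    win = sliding_window_view(padded, (w, w))        # shape (H, W, w, w)
    mean = win.mean(axis=(2, 3))
    centred = win - mean[..., None, None]
    std = np.sqrt((centred ** 2).mean(axis=(2, 3)))  # population std
    safe = np.where(std > 0, std, 1.0)               # avoid division by zero
    z = centred / safe[..., None, None]
    # Flat windows (std == 0): skewness and kurtosis set to 0 by convention.
    skew = np.where(std > 0, (z ** 3).mean(axis=(2, 3)), 0.0)
    kurt = np.where(std > 0, (z ** 4).mean(axis=(2, 3)), 0.0)
    return np.stack([mean, std, skew, kurt], axis=-1).reshape(-1, 4)
```

A larger `w` smooths the features but costs proportionally more work per pixel, which is the trade-off described above.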

2.4 Use of Modified Fuzzy C-Means for Classification

A similarity measure is defined on the previously extracted features to quantify the difference between two irises. Many techniques are used to compare irises, including distance-based and similarity-based measures. Other methods classify the features with a single classifier, such as a support vector machine (SVM) or a Bayes classifier.

In this context, combining optimal iris feature descriptors with Fuzzy c-means appears to be a promising approach for iris recognition. Specifically, we propose replacing the pixel-value-based technique with a vector F comprising the most effective statistical features, to improve iris recognition accuracy.

The proposed image segmentation technique using the FCM algorithm combined with the statistical features can be summarized by the following steps:

Step 1: Initialization (Iteration t = 0)

Randomly initialize the matrix

Step 2: Construction of the matrix F of size

From the iteration

Step 3: Calculate the membership matrix

where Fk and vi are vectors of size 1 × 4.

Step 4: Calculate the matrix

Step 5: Convergence test: if
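The classification loop can be sketched with the standard Fuzzy c-means updates. This is plain FCM with a Euclidean distance, not the paper's modified variant, whose exact changes are not recoverable from the steps above. The fuzzifier m = 2, the tolerance `eps`, the random initialization of the membership matrix U, and the function name are assumptions; the rows of F play the role of the 1 × 4 vectors Fk and the rows of V the role of the centres vi.

```python
import numpy as np


def fuzzy_c_means(F, c, m=2.0, eps=1e-5, max_iter=300, seed=0):
    """Standard FCM on the rows of F (n x d).

    Returns U (c x n memberships, each column sums to 1) and V (c x d centres).
    """
    F = np.asarray(F, dtype=float)
    rng = np.random.default_rng(seed)
    # Step 1: random initial membership matrix, columns normalised to 1.
    U = rng.random((c, F.shape[0]))
    U /= U.sum(axis=0, keepdims=True)
    for _ in range(max_iter):
        Um = U ** m
        # Cluster centres: membership-weighted means of the feature vectors.
        V = (Um @ F) / Um.sum(axis=1, keepdims=True)
        # Euclidean distance from every centre to every feature vector (c x n).
        D = np.fmax(np.linalg.norm(F[None, :, :] - V[:, None, :], axis=2), 1e-12)
        # Membership update: u_ik = 1 / sum_j (d_ik / d_jk)^(2 / (m - 1)).
        ratio = (D[:, None, :] / D[None, :, :]) ** (2.0 / (m - 1.0))
        U_new = 1.0 / ratio.sum(axis=1)
        # Convergence test: stop when memberships change by less than eps.
        converged = np.max(np.abs(U_new - U)) < eps
        U = U_new
        if converged:
            break
    # Centres consistent with the final memberships.
    Um = U ** m
    V = (Um @ F) / Um.sum(axis=1, keepdims=True)
    return U, V
```

Each column of U holds the membership degrees of one feature vector; a hard decision is obtained by taking the cluster with the highest membership (`U.argmax(axis=0)`).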

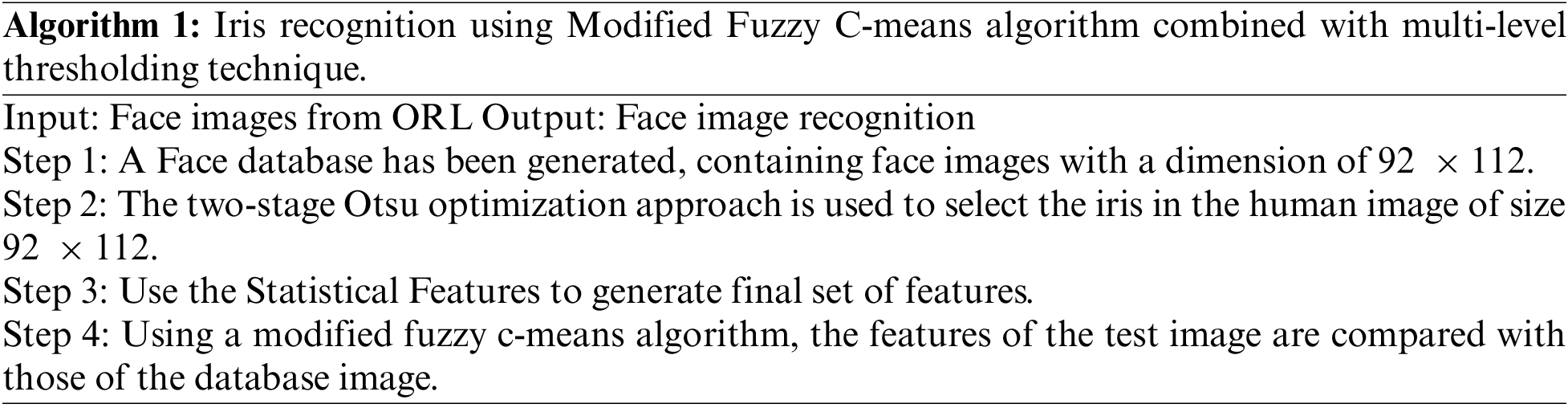

The steps of the proposed iris recognition technique, which combines the multilevel thresholding technique with the modified Fuzzy c-means algorithm, are summarized in Algorithm 1.

3 Experimental Results and Discussion

To evaluate the efficiency and accuracy of the proposed method, its results are compared with those of the existing methods described earlier. The experiments were carried out in MATLAB, version 10.

The images are stored in 8-bit grayscale format, with integer values between 0 and 255. Performance is analyzed on the CASIA iris database, a widely used, publicly available iris database that contains 756 images from 108 different individuals.

After performing the image segmentation described in Section 2.2, the homogeneous regions of each image were obtained. The original multilevel thresholding algorithm is used to handle a known number of regions in an image (iris and pupil) for image segmentation.

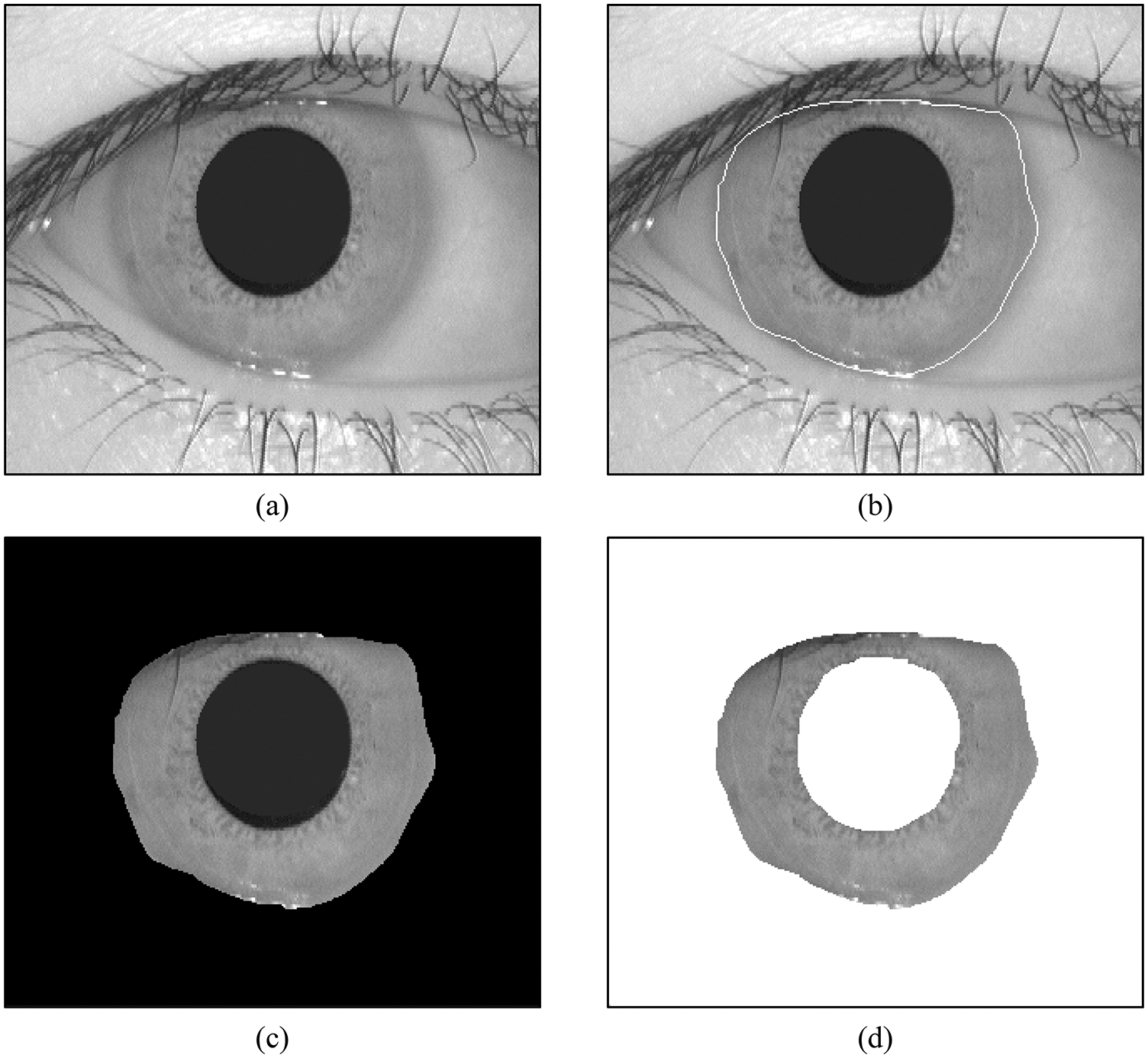

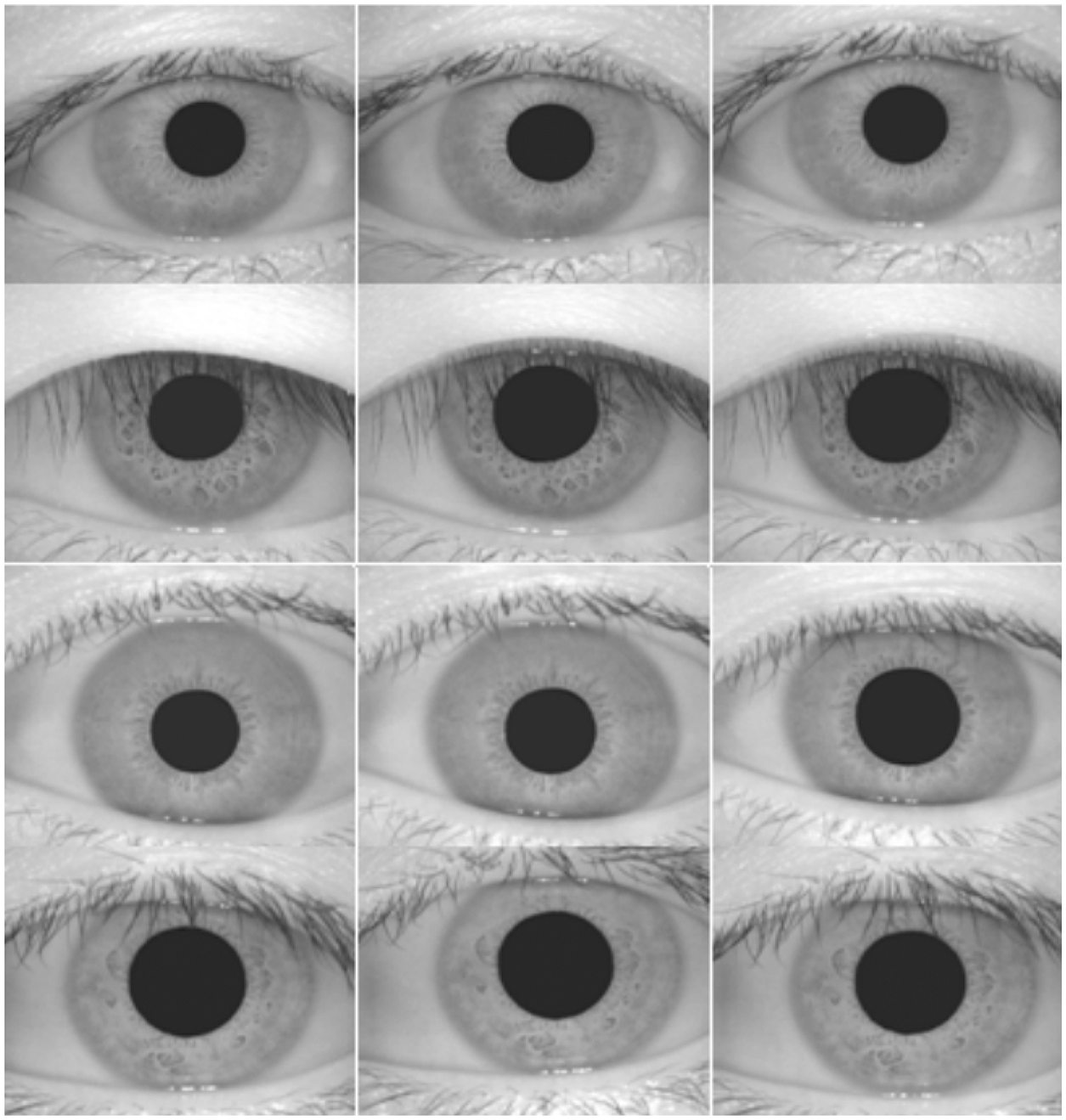

Fig. 5 shows the segmentation results for one example image: (a) is an example image from the database and (d) is its region representation. Each segmented region is characterized by the average gray level of all its pixels. The results show that the two regions were correctly segmented by optimal multilevel thresholding using the two-stage Otsu optimization approach. Accuracy is evaluated with a segmentation sensitivity criterion based on the number of correctly classified pixels, using all 756 images of the CASIA database; some sample images are shown in Fig. 6. Segmentation of the test database took 5.5 h in total, and about 1.9 s per image.

Figure 5: Image segmentation. (a) Original image. (b) Iris localization. (c) Segmented image (3 regions: Iris, Pupil, and background). (d) Segmented image (2 regions: Iris and background)

Figure 6: Example of irises of the human eye. Twelve were selected for a comparison study. The patterns are numbered from 1 through 12, starting at the upper left-hand corner. Image is from CASIA iris database (Fernando Alonso-Fernandez, 2009)

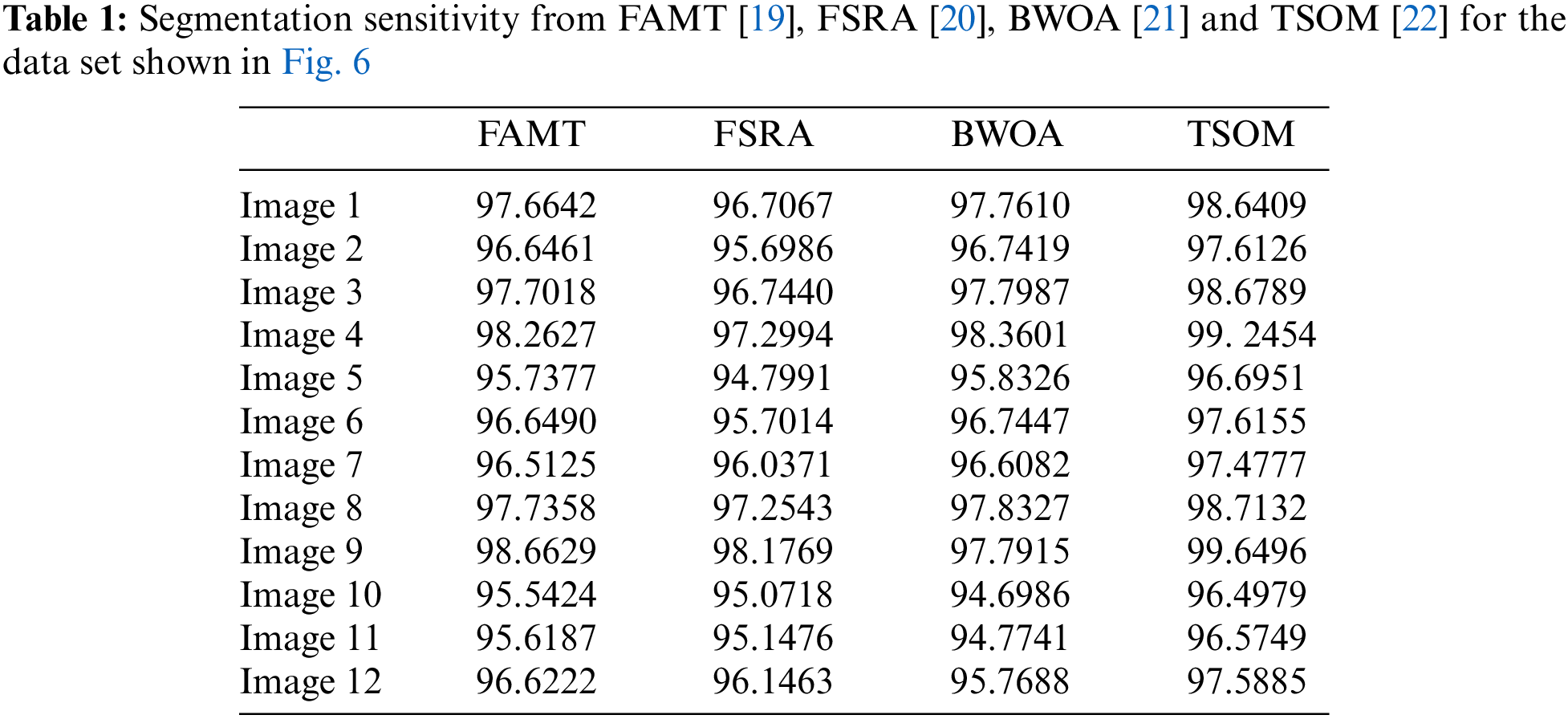

Table 1 shows the segmentation sensitivity of existing methods, FAMT [19], FSRA [20], and BWOA [22], and of the two-stage Otsu optimization approach (TSOM) [21]. According to Table 1, 31.77%, 20.44%, and 2.73% of pixels were incorrectly segmented by FAMT [19], FSRA [20], BWOA [22] and the two-stage Otsu optimization approach [21], respectively. These experimental results indicate that the multilevel thresholding technique segments more accurately than the existing methods, and that the two regions are correctly segmented by optimal multilevel thresholding using the two-stage Otsu optimization approach.

We calculated the segmentation sensitivity as follows:

where:
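Assuming the usual pixel-level definitions against a ground-truth iris mask, segmentation sensitivity is TP / (TP + FN), where TP counts iris pixels labeled as iris and FN counts iris pixels missed; the fraction of misclassified pixels (the quantity quoted for Table 1) can be computed alongside it. The function name and the mask convention (True = iris) are hypothetical.

```python
import numpy as np


def segmentation_scores(pred, truth):
    """Return (sensitivity, error_rate) for boolean masks, True = iris pixel.

    sensitivity = TP / (TP + FN); error_rate = misclassified / all pixels.
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.count_nonzero(pred & truth)    # iris pixels correctly found
    fn = np.count_nonzero(~pred & truth)   # iris pixels missed
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    error_rate = np.count_nonzero(pred != truth) / truth.size
    return sensitivity, error_rate
```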

For the overall analysis in this study, the 756 images were randomly split into training and test sets.

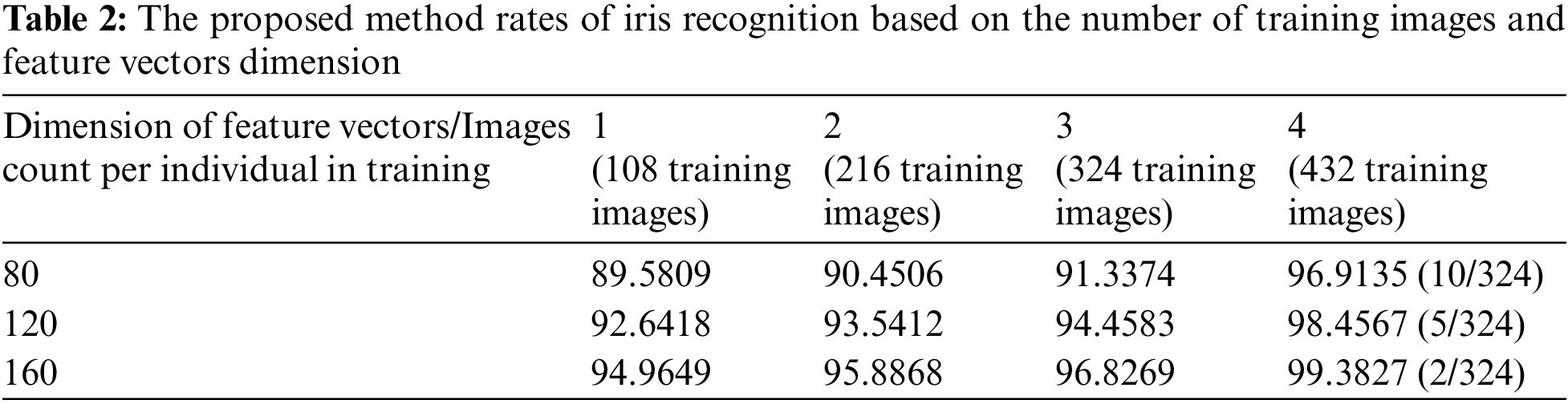

The dataset is separated into training and test sets in a 4:3 ratio: 432 images are randomly selected for training, and the remaining 324 images are used for testing. Four iris images per subject are selected for the training set used by the feature extraction method. Depending on the number of images selected per subject (one to four), the training set contains 108, 216, 324, or 432 images. For each person, irises with the same index are selected for the corresponding set.
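The per-subject split can be sketched as follows, assuming 7 images per subject (756 / 108) and, as one interpretation of the text, a fixed pool of 3 test images per subject, with the training set taken from the first 1 to 4 of the remaining image indices. The same shuffled indices are used for every subject, so irises with the same index land in the same set. The function name and seed are hypothetical.

```python
import numpy as np


def split_per_subject(n_subjects=108, images_per_subject=7, n_train=4,
                      max_train=4, seed=0):
    """Return (train, test) lists of (subject, image_index) pairs."""
    if not 1 <= n_train <= max_train < images_per_subject:
        raise ValueError("need 1 <= n_train <= max_train < images_per_subject")
    rng = np.random.default_rng(seed)
    # One random ordering of image indices, shared by all subjects.
    order = rng.permutation(images_per_subject)
    train_idx = order[:n_train]       # first n_train candidate training images
    test_idx = order[max_train:]      # images never used for training
    train = [(s, int(i)) for s in range(n_subjects) for i in train_idx]
    test = [(s, int(i)) for s in range(n_subjects) for i in test_idx]
    return train, test
```

With `n_train` from 1 to 4 this yields training sets of 108, 216, 324, and 432 images against a fixed test set of 324 images.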

To reduce the size of the training set, some statistical features are selected at random, and the M corresponding feature vectors are used to form a smaller training set. This reduces the number of operations the

To make the classification process simpler and more tractable, we use only

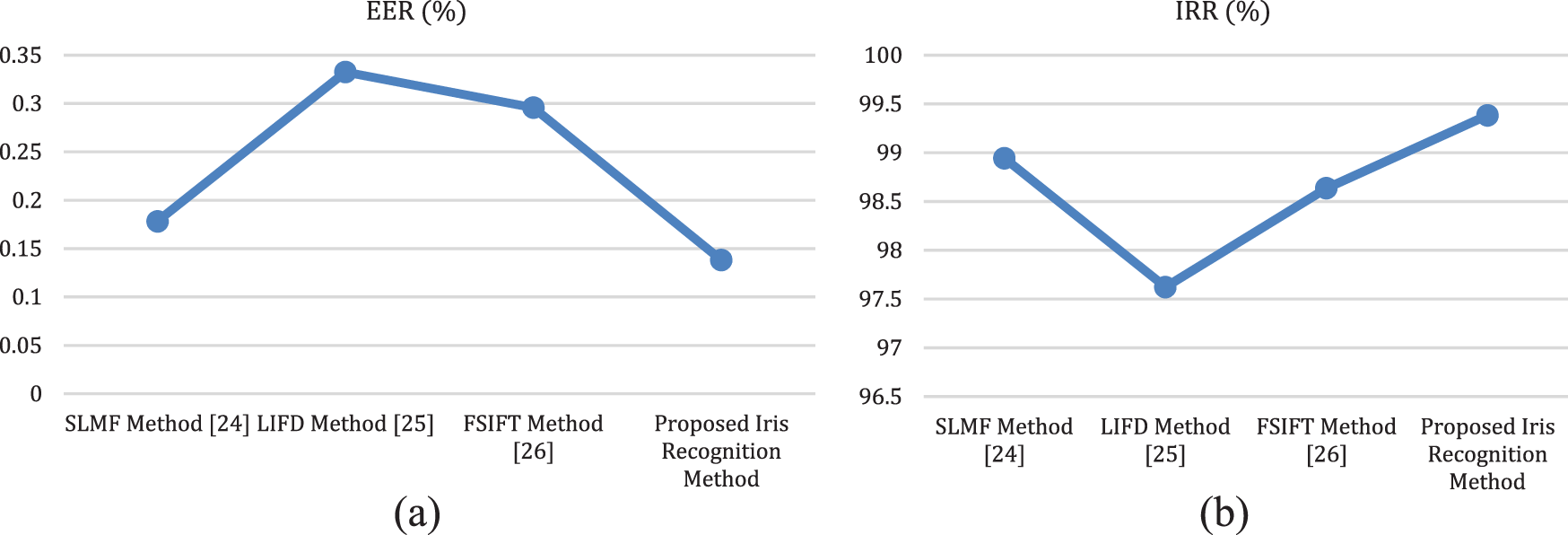

Fig. 7 and Table 2 show that increasing the number of training images improves recognition accuracy.

Figure 7: The recognition performance of different approaches on CASIA iris database

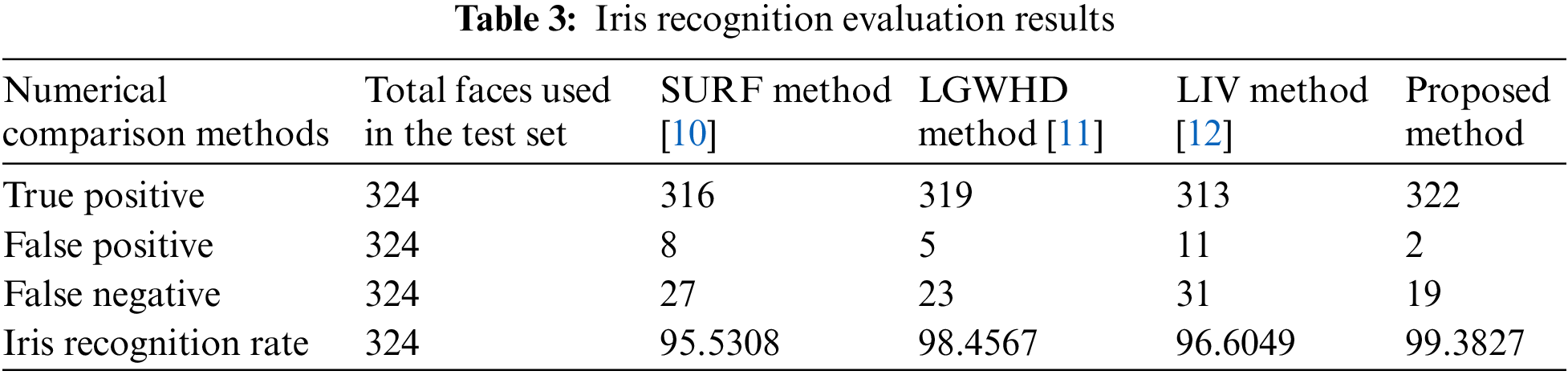

Table 3 shows that, with 432 training images (4 per individual) and 160 feature vectors, the proposed method reaches a peak recognition rate of 99.3827%.

We evaluated performance using the iris recognition rate (IRR) [23], calculated as follows:

where:
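Assuming the standard definition, IRR is the percentage of test images whose identity is recognized correctly. Under that assumption, the peak value of 99.3827% on the 324 test images corresponds to 322 correct identifications, which the short sketch below checks; the function name is hypothetical.

```python
def iris_recognition_rate(n_correct, n_total):
    """IRR in percent: correctly identified test images over all test images."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return 100.0 * n_correct / n_total


print(round(iris_recognition_rate(322, 324), 4))  # 99.3827
```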

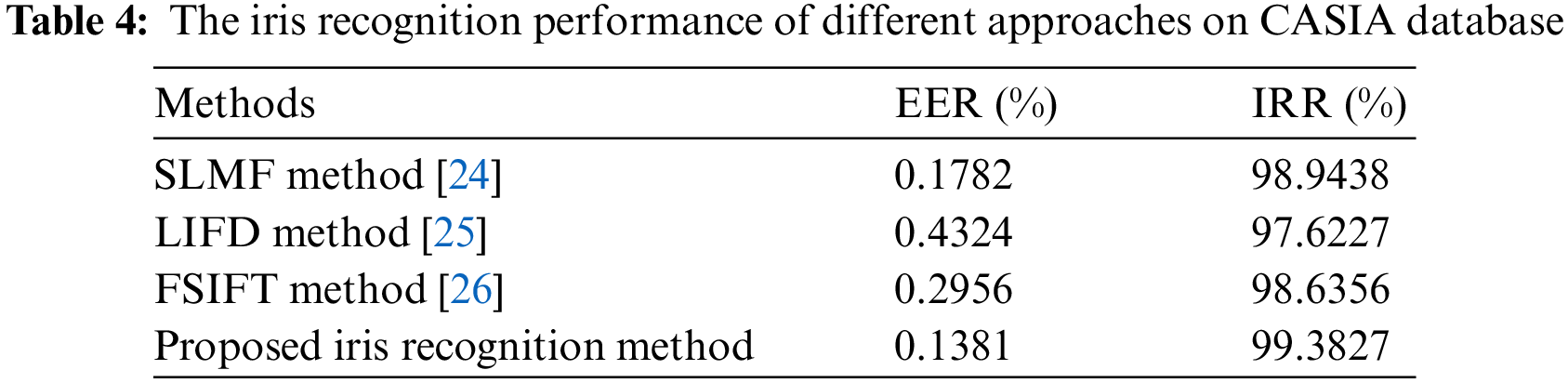

In addition, the Equal Error Rate (EER), Iris Recognition Rate (IRR) [23], True Positive (TP), False Positive (FP), and False Negative (FN) values are used for numerical comparison with current techniques from the iris recognition literature.
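The EER is the operating point at which the false acceptance rate (FAR) equals the false rejection rate (FRR). A minimal sketch, assuming similarity scores in which higher values indicate the same iris, sweeps the observed scores as thresholds and reports the point where FAR and FRR are closest; with finitely many scores this is an approximation. The function name is hypothetical.

```python
import numpy as np


def equal_error_rate(genuine, impostor):
    """Approximate EER from similarity scores (higher = more likely same iris).

    A comparison is accepted when score >= t.
    FAR(t) = accepted impostors / impostors; FRR(t) = rejected genuine / genuine.
    Returns the mean of FAR and FRR at the threshold where they are closest.
    """
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    thresholds = np.unique(np.concatenate([genuine, impostor]))
    far = np.array([np.mean(impostor >= t) for t in thresholds])
    frr = np.array([np.mean(genuine < t) for t in thresholds])
    i = int(np.argmin(np.abs(far - frr)))
    return (far[i] + frr[i]) / 2.0
```

Perfectly separated score distributions give an EER of 0; the more the genuine and impostor scores overlap, the higher the EER.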

Tables 3 and 4 show the numerical comparison on the CASIA database with the Speeded Up Robust Features (SURF) method [10], the Log-Gabor wavelets and Hamming distance method (LGWHD) [11], the local intensity variation method (LIVM) [12], supervised learning based on matching features (SLMF) [24], the local invariant feature descriptor (LIFD) [25], and the Fourier-SIFT method (FSIFT) [26]. For each individual, 57% of the samples are used for training and 43% for testing. A visual comparison of these techniques in terms of EER and IRR is shown in Fig. 7.

As shown in Fig. 7 and Table 4, the EER and IRR obtained by the proposed method are 0.1381% and 99.3827%, respectively, and the proposed method outperforms the other approaches on these numerical measures. Table 3 also shows that the false positive rate (FPR) and false negative rate (FNR) are 5.8641% and 0.6172%, respectively, indicating that the proposed method achieves a higher accuracy rate.

In this work, we have presented a new method for human iris recognition based on a multilevel thresholding technique and a modified Fuzzy c-means algorithm. In the first phase, edge detection is used to localize the iris in the original image; this step, which determines matching accuracy, mainly localizes the outer boundaries of the iris. In the second phase, the localized regions are segmented efficiently using the multilevel thresholding technique. Statistical feature analysis is then used to capture the iris characteristics essential for representation and identification. Finally, a modified Fuzzy c-means algorithm is employed for classification.

Evaluation on the CASIA database demonstrates the validity of the approach and shows that it achieves its aim of recognizing the human iris, a task that still warrants further attention. In future work, data fusion techniques will be included to fuse and aggregate data from different information sources, such as iris and fingerprint biometrics.

Acknowledgement: The author thanks the Faculty of Computers and Information Technology and the Industrial Innovation and Robotics Center, University of Tabuk, for supporting the study.

Funding Statement: This research is supported by the Faculty of Computers and Information Technology and the Industrial Innovation and Robotics Center, University of Tabuk.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. C. Otti, “Comparison of biometric identification methods,” in Int. Symp. on Applied Computational Intelligence and Informatics (SACI), Timisoara, Romania, pp. 1–7, May 2016.

2. A. Sumalatha and A. Bhujanga Rao, “Novel method of system identification,” in Int. Conf. on Electrical, Electronics, and Optimization Techniques (ICEEOT), Chennai, India, 2016.

3. C. D. Byron, A. M. Kiefer, J. Thomas, S. Patel, A. Jenkins et al., “Correction to: The authentication and repatriation of a ceremonial tsantsa to its country of origin (Ecuador),” Heritage Science, vol. 9, no. 60, pp. 1–10, 2021.

4. M. Ortega, M. G. Penedo, J. Rouco, N. Barreira and M. J. Carreira, “Retinal verification using a feature points-based biometric pattern,” EURASIP Journal on Advances in Signal Processing, vol. 9, no. 23, pp. 1–11, 2009.

5. J. R. Malgheet, N. B. Manshor and L. S. Affendey, “Iris recognition development techniques: A comprehensive review,” Complexity, vol. 2021, pp. 1–32, 2021.

6. J. G. Daugman, “How iris recognition works,” IEEE Trans. Circ. Syst. Video Technol., vol. 14, no. 1, pp. 21–30, 2004.

7. F. M. Alkoot, “A review on advances in iris recognition methods,” Int. J. Comput. Eng. Res., vol. 3, no. 1, pp. 1–9, 2012.

8. F. Alonso-Fernandez, P. Tome-Gonzalez, V. Ruiz-Albacete and J. Ortega-Garcia, “Iris recognition based on SIFT features,” in Int. Conf. on Biometrics, Identity and Security (BIdS), Paris, France, 2009.

9. H. Mehrotra, P. K. Sa and B. Majhi, “Fast segmentation and adaptive SURF descriptor for iris recognition,” Math. Comput. Model., vol. 58, no. 1–2, pp. 132–146, 2013.

10. A. I. Ismail, H. S. Ali and F. A. Farag, “Efficient enhancement and matching for iris recognition using SURF,” in National Symp. on Information Technology: Towards New Smart World (NSITNSW), Riyadh, Saudi Arabia, pp. 1–5, 2015.

11. L. Masek, “Recognition of human iris patterns for biometric identification,” The University of Western Australia, School of Computer Science and Software Engineering, vol. 4, no. 1, pp. 1–56, 2003.

12. L. Ma, T. Tan, Y. Wang and D. Zhang, “Local intensity variation analysis for iris recognition,” Pattern Recogn., vol. 37, no. 6, pp. 1287–1298, 2004.

13. K. Saminathan, D. Chithra and T. Chakravarthy, “Pair of iris recognition for personal identification using artificial neural networks,” International Journal of Computer Science Issues (IJCSI), vol. 9, no. 1, pp. 324–327, 2012.

14. R. Abiyev and K. Altunkaya, “Personal iris recognition using neural networks,” International Journal of Security and its Applications (IJSIA), vol. 2, no. 2, pp. 41–50, 2008.

15. S. V. Sheela and P. A. Vijaya, “Iris recognition methods-survey,” Int. J. Comput. Appl., vol. 3, no. 5, pp. 19–25, 2010.

16. D. Yuan Huang and C. Hung Wang, “Optimal multilevel thresholding using a two-stage Otsu optimization approach,” Pattern Recognition Letters, vol. 30, no. 3, pp. 275–284, 2009.

17. H. Mehrotra, G. S. Badrinath, B. Majhi and P. Gupta, “An efficient dual stage approach for iris feature extraction using interest point pairing,” in IEEE Workshop on Computational Intelligence in Biometrics: Theory, Algorithms, and Applications, Nashville, TN, USA, pp. 59–62, 2009.

18. W. Liu, L. Zhou and J. Chen, “Face recognition based on lightweight convolutional neural networks,” Journal of Information, vol. 12, no. 5, pp. 1–18, 2021.

19. P. S. Liao, T. S. Chen and P. C. Chung, “A fast algorithm for multi-level thresholding,” Journal of Information Science and Engineering, vol. 17, no. 5, pp. 713–727, 2001.

20. S. Arora, J. Acharya, A. Verma and P. K. Panigrahi, “Multilevel thresholding for image segmentation through a fast statistical recursive algorithm,” Pattern Recognition Letters, vol. 29, pp. 119–125, 2008.

21. D. Huang and C. Wang, “Optimal multi-level thresholding using a two-stage Otsu optimization approach,” Pattern Recognition Letters, vol. 30, no. 3, pp. 275–284, 2009.

22. A. Thaer, H. Al-Rahlawee and J. Rahebi, “Multilevel thresholding of images with improved Otsu thresholding by black widow optimization algorithm,” Multimedia Tools and Applications, vol. 80, no. 2, pp. 28217–28243, 2021.

23. W. Shen, M. Surette and R. Khanna, “Evaluation of automated biometrics-based identification and verification systems,” Proceedings of the IEEE, vol. 85, no. 9, pp. 1464–1478, 1997.

24. E. Hernandez-Garcia, A. Martin-Gonzalez and R. Legarda-Saenz, “Iris recognition using supervised learning based on matching features,” in Int. Symp. on Intelligent Computing Systems, Universidad de Chile, Santiago, Chile, pp. 44–56, 2022.

25. Q. Jin, X. Tong, P. Ma and S. Bo, “Iris recognition by new local invariant feature descriptor,” Journal of Computational Information Systems, vol. 9, no. 5, pp. 1943–1948, 2013.

26. A. Kumar and B. Majhi, “Isometric efficient and accurate Fourier-SIFT method in iris recognition system,” in Int. Conf. on Communications and Signal Processing (ICCSP), Sharjah, United Arab Emirates, pp. 809–813, 2013.


Copyright © 2022 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
