Open Access

REVIEW

Artificial Intelligence-Enabled Chatbots in Mental Health: A Systematic Review

1 International University of Tourism and Hospitality, Turkistan, Kazakhstan

2 Alem Research, Almaty, Kazakhstan

* Corresponding Author: Batyrkhan Omarov. Email:

Computers, Materials & Continua 2023, 74(3), 5105-5122. https://doi.org/10.32604/cmc.2023.034655

Received 23 July 2022; Accepted 22 September 2022; Issue published 28 December 2022

Abstract

Clinical applications of Artificial Intelligence (AI) for mental health care have experienced a meteoric rise in the past few years. AI-enabled chatbot software and applications now deliver significant medical treatments that were previously available only from experienced and competent healthcare professionals. Such initiatives, which range from “virtual psychiatrists” to “social robots” in mental health, strive to improve nursing performance and cost management, as well as to meet the mental health needs of vulnerable and underserved populations. Nevertheless, there is still a substantial gap between recent progress in AI for mental health and the widespread use of these solutions by healthcare practitioners in clinical settings. Furthermore, treatments are frequently developed without clear consideration of ethical concerns. While AI-enabled solutions show promise in the realm of mental health, further research is needed to address the ethical and social aspects of these technologies and to establish efficient research and medical practices in this innovative sector. Moreover, the current literature still lacks a formal and objective review that specifically addresses research questions from the perspectives of both developers and psychiatrists on the development of AI-enabled chatbot psychologists. Taking into account the problems outlined above, we conducted a systematic review of AI-enabled chatbots in mental health care that covers issues at the intersection of psychotherapy and artificial intelligence. In this systematic review, we pose five research questions related to the technologies used in chatbot development, the psychological disorders that can be treated using chatbots, the types of therapy enabled in chatbots, the machine learning models and techniques used in chatbot psychologists, and ethical challenges.

Presently, the worldwide mental health care system is going through challenging times. According to the World Health Organization, one in four people will be affected by mental illness at some point in their lives [1]. Mental disorders remain the leading cause of health-related economic hardship around the world [2]. Depression and anxiety are the most frequent, affecting an estimated 322 million and 264 million individuals globally, respectively [3]. Despite this growing burden, there is an acute shortage of mental health professionals worldwide (9 per 100,000 people), particularly in Southeast Asia (2.5 per 100,000 people) [4]. Although efficient and well-known therapies exist for numerous mental and neurological disorders, only half of the people affected by mental disorders receive them [1]. The main obstacles to successful and wide-ranging treatment have been identified as a lack of resources and qualified medical professionals, as well as social discrimination, stigma, and marginalization [1]. Growing public expectations are raising the bar for healthcare systems to overcome these obstacles and offer accessible, cost-effective, and evidence-based treatment to medically indigent individuals [5].

In light of these circumstances, information technology tools have been hailed as a panacea for long-standing issues such as societal stigma and the mismatch between supply and demand in the provision of mental health treatment. AI-enabled technologies are expected to offer more available, affordable, and perhaps less stigmatizing approaches than the conventional mental health treatment paradigm [6]. The lack of access to mental health treatment creates an urgent need for flexible solutions, and there has consequently been a surge of interest in developing mobile applications to complement conventional mental health therapy [7]. Although studies have found the therapeutic efficacy of these new approaches to be similar to that of traditional methods [8], the routine use and integration of such digital mental health support products has been shown to be rather low. Poor engagement and acceptance have been linked to the inability of technology to engage patients adequately and to the failure of clinical trial results to translate into better patient care in real-life conditions [9].

The resurgence of digital mental health treatments is being fueled by a renaissance in AI technologies. Conversational agents, or chatbots, are AI-enabled software systems that communicate with people in natural language through text- or voice-based interaction [10]. This technology has developed steadily and is now used in digital assistants such as Apple’s Siri, Yandex’s Alice, Amazon’s Alexa, and other virtual assistants, as well as in consumer interfaces for online shopping and banking [11]. Another application of the technology is a new kind of digital mental health service, the mental health chatbot, which has the potential to have a long-term influence on psychotherapy [12]. Automated chatbots can deliver evidence-based treatments by simulating social conversation in a fun and nonjudgmental manner, addressing problems such as low engagement, inadequate clinician availability, and stigma in mental health care [13].

A number of consumer behavior trends point to an urgent need to deepen our understanding of artificial intelligence (AI) enabled chatbots in mental health treatment. Text-based chatbots such as Ruuh and Xiaoice have gained popularity by offering private chats [14], as have AI-enabled virtual assistants on mobile phones and other devices. Statistical surveys point to an increasing willingness among consumers to receive treatment from conversational agents, and the number of users who have downloaded mental health chatbots demonstrates the growing popularity of these self-service technologies.

Despite increasing scholarly attention to this topic, studies of AI-enabled chatbot applications remain fragmented between psychological and technical specialties. Given the importance of this issue to both psychologists and AI developers, a literature review is needed to integrate previous studies, give an overview of the current state of research and applied technologies, identify existing challenges and ethical issues, and outline potential avenues for future research.

Consequently, it is critical to explain how and why patients would use a chatbot for mental health assistance, in order to overcome obstacles and improve both the delivery of AI-enabled chatbot-based psychiatric services and patient outcomes. Therefore, the main objective of this study is to investigate user response and create a theoretical model that may be used to assess the acceptability of AI-enabled chatbots for mental health assistance. Accordingly, the following research questions are proposed:

• Question 1: What kind of technologies are used in chatbot development?

• Question 2: What aspects of mental health do the chatbots target?

• Question 3: What kinds of therapy are used in chatbots in the fight against mental health problems?

• Question 4: What types of machine learning models are currently being applied for mental health?

• Question 5: To what extent do the papers describe ethical challenges or implications?

This review is organized as follows: Section 2 introduces the main concepts in chatbot development. Section 3 explains the methodology of the systematic literature review. Section 4 analyzes the 35 identified studies and answers each research question. Section 5 discusses the study’s implications, limitations, and scope for further research. Section 6 concludes the review.

Conversational agents, or chatbots, are software systems that provide a conversational user interface. They may be categorized as open-domain if they can converse with users about any subject, or task-specific if they help with a particular activity. The following are some basic principles of chatbot technology.

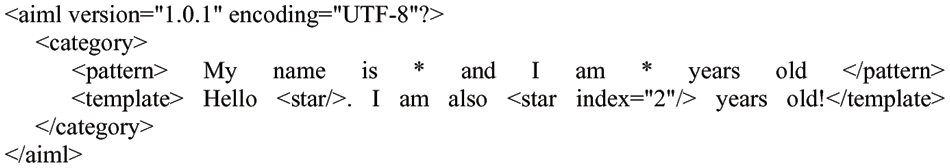

Early chatbots relied on pattern matching over sample stimulus-response blocks: sentences entered by the user serve as stimuli, and responses are produced in reaction to those stimuli [15]. ELIZA and ALICE were among the first chatbots to use this approach. However, a significant disadvantage of pattern matching was that the responses were robotic, repetitive, and impersonal. Additionally, it could not preserve prior responses, which might cause conversations to repeat themselves [16]. In the 1990s, the Artificial Intelligence Markup Language (AIML) was created. AIML is a tag-based markup language built on the Extensible Markup Language (XML) and is used to model natural language for stimulus-response dialogue systems such as conversational agents. As shown in Fig. 1, AIML is organized around categories, each composed of a user input pattern and the chatbot’s reply.
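To make the stimulus-response idea concrete, the following is a minimal Python sketch of AIML-style matching. It is not an AIML interpreter: the categories, the `respond` function, and the use of `{star}` in place of AIML’s `<star/>` tag are illustrative assumptions, and categories are tried in list order rather than by AIML’s own matching priority.

```python
import re

# Hypothetical AIML-style categories: each pairs an input pattern with a reply
# template. "*" matches one or more words; "{star}" stands in for AIML's <star/>.
CATEGORIES = [
    ("HELLO", "Hi there! How are you feeling today?"),
    ("I FEEL *", "Why do you feel {star}?"),
    ("*", "Please tell me more."),  # catch-all category, tried last
]


def normalize(text: str) -> str:
    """Uppercase the input and replace punctuation with spaces."""
    return " ".join(re.sub(r"[^\w\s]", " ", text).upper().split())


def compile_pattern(pattern: str) -> re.Pattern:
    """Turn a pattern such as 'I FEEL *' into an anchored regular expression."""
    parts = [r"(.+)" if word == "*" else re.escape(word) for word in pattern.split()]
    return re.compile("^" + " ".join(parts) + "$")


COMPILED = [(compile_pattern(p), t) for p, t in CATEGORIES]


def respond(user_input: str) -> str:
    """Return the template of the first category whose pattern matches."""
    text = normalize(user_input)
    for regex, template in COMPILED:
        match = regex.match(text)
        if match:
            star = match.group(1).lower() if match.groups() else ""
            return template.format(star=star)
    return "I don't understand."  # only reached for empty input


print(respond("I feel anxious!"))  # Why do you feel anxious?
```

Because the catch-all `*` category comes last, any input that no specific pattern matches still receives the same neutral reply, which also illustrates why purely pattern-based bots tend to sound repetitive.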

Figure 1: AIML for chatbot development

Latent Semantic Analysis (LSA) and AIML are widely used to create chatbots. LSA represents words as vectors and uses this representation to find correlations among words [17]. LSA can respond to questions that no template covers, while AIML can handle template-based questions such as greetings and general inquiries [18]. Also noteworthy is the expert system ChatScript, which, in contrast to AIML, combines a complex programming-language engine with a dialog management system.
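The sketch below illustrates, under simplifying assumptions, how LSA can back up template matching: hypothetical FAQ entries (each indexed by a few keywords) form a term-document matrix, a truncated singular value decomposition (SVD) keeps `K` latent dimensions, and a query is answered with the entry whose latent vector is closest by cosine similarity. All names and data are invented for illustration.

```python
import re

import numpy as np

SLEEP_TIP = "A regular sleep schedule and a wind-down routine may help."
ANXIETY_TIP = "Slow, paced breathing can ease feelings of anxiety."

# Hypothetical FAQ entries, each indexed by a few keywords, with a canned answer.
FAQ = [
    ("insomnia sleep night", SLEEP_TIP),
    ("sleep night tired", SLEEP_TIP),
    ("anxious panic worry", ANXIETY_TIP),
    ("panic worry fear", ANXIETY_TIP),
]

VOCAB = sorted({w for keys, _ in FAQ for w in keys.split()})
INDEX = {w: i for i, w in enumerate(VOCAB)}


def term_vector(text: str) -> np.ndarray:
    """Bag-of-words counts over the FAQ vocabulary; unknown words are ignored."""
    v = np.zeros(len(VOCAB))
    for w in re.findall(r"[a-z]+", text.lower()):
        if w in INDEX:
            v[INDEX[w]] += 1.0
    return v


# Term-document matrix A (terms x entries) and its truncated SVD A ~ U_k S_k V_k^T.
A = np.column_stack([term_vector(keys) for keys, _ in FAQ])
U, s, Vt = np.linalg.svd(A, full_matrices=False)
K = 2  # number of latent dimensions kept
U_k = U[:, :K]
DOCS = (U_k.T @ A).T  # row j: entry j projected into the latent space


def lsa_answer(query: str):
    """Return the answer of the entry closest to the query (cosine), or None."""
    q = U_k.T @ term_vector(query)
    if np.linalg.norm(q) == 0:
        return None  # nothing recognizable: hand over to a fallback reply
    sims = DOCS @ q / (np.linalg.norm(DOCS, axis=1) * np.linalg.norm(q))
    return FAQ[int(np.argmax(sims))][1]
```

With `K = 2`, the query “I feel tired.” scores the entry “insomnia sleep night” as highly as “sleep night tired”, even though the first entry never contains “tired”, because both entries fall on the same latent dimension; a plain keyword match would miss the first entry.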

RiveScript is another plain-text scripting language with a line-based syntax for building chatbots. It is free and open source, with tools available for Go, Python, Perl, and JavaScript [19]. Language recognition relies heavily on Natural Language Processing (NLP), which is used by Google and by Apple’s Siri. The ability of a system to recognize natural-language text and speech-based commands depends on two fundamental elements: natural language understanding (NLU) and natural language generation (NLG) [20].

NLU determines how a particular word or phrase should actually be interpreted, using pragmatic analysis and discourse integration to arrive at the intended meaning of the text. To produce an intelligible answer, NLG relies on text planning and text realization; in other words, language generation is responsible for forming linguistically acceptable sentences and phrases. Understanding the complexities of real human language is a major problem for NLP.

Language is ambiguous at the levels of syntax, lexis, and figurative devices such as similes and metaphors: a single word may be interpreted as a noun or a verb, the same idea can be expressed in a variety of ways, and a single input can have many interpretations, among other possibilities.

In this systematic literature review, both qualitative and quantitative research methods were applied [21,22]. The following subsections describe the article and data collection processes for the review.

3.1 Article Collection, Inclusion and Exclusion

The collection of articles started with defining a search string composed of five search terms: “Chatbot”, “Conversational Agent”, “Machine Learning”, “Deep Learning”, and “Artificial Intelligence”. Eq. (1) gives the query string used for the article search.

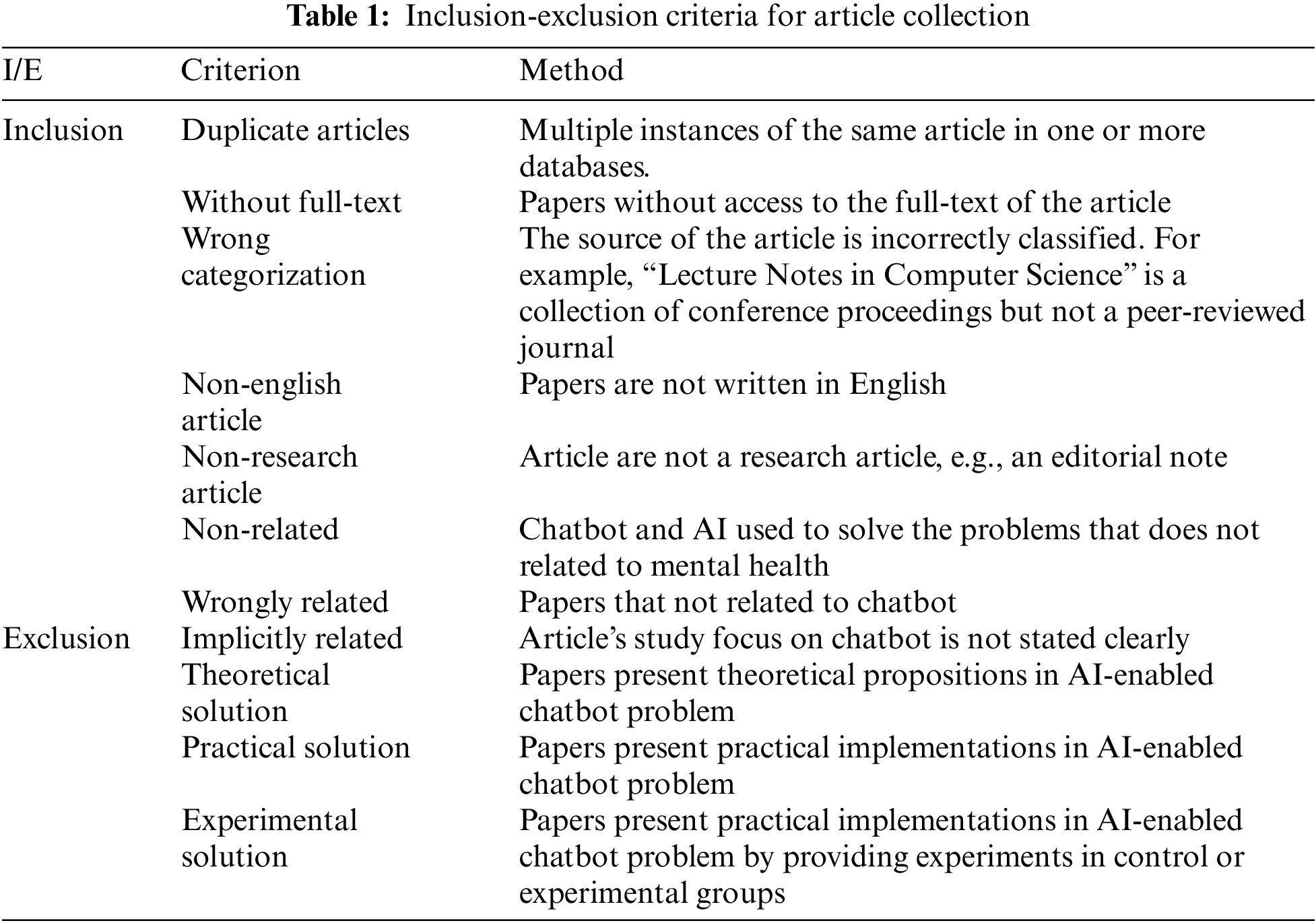

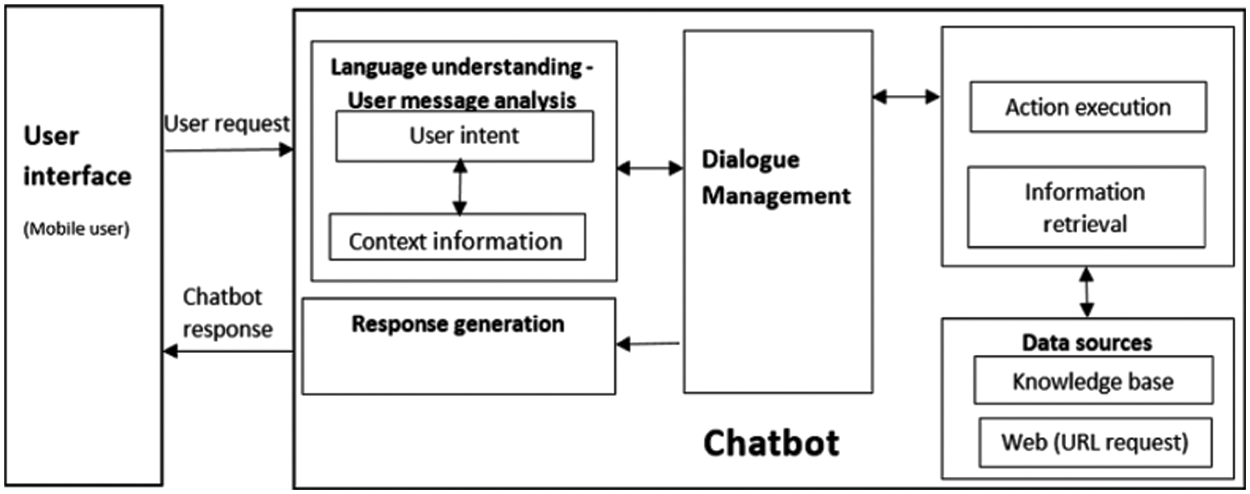
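Eq. (1) is reproduced only as an image, so its exact form is not restated here. As an illustration only, the Python sketch below assumes that the two agent terms are OR-ed together, the three method terms are OR-ed together, and the two groups are joined with AND; the actual grouping in Eq. (1) may differ, and each database uses its own field syntax.

```python
def or_group(terms):
    """Quote each term and join the group with OR inside parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*groups):
    """AND together several OR-groups of synonymous search terms."""
    return " AND ".join(or_group(g) for g in groups)


# Assumed grouping of the five search terms (Eq. (1) may group them differently).
AGENT_TERMS = ["Chatbot", "Conversational Agent"]
METHOD_TERMS = ["Machine Learning", "Deep Learning", "Artificial Intelligence"]

QUERY = build_query(AGENT_TERMS, METHOD_TERMS)
print(QUERY)
# ("Chatbot" OR "Conversational Agent") AND ("Machine Learning" OR "Deep Learning" OR "Artificial Intelligence")
```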

Seven databases were used: three technical databases, three medical databases, and the Scopus abstract and citation database. IEEE Xplore, ScienceDirect, and Springer were used to search for technical papers, while PubMed, CINAHL, and MEDLINE were used to search for papers in the medical field. Several selection rules were set: papers had to be published between January 2017 and June 2021, the search keywords had to appear in the title, abstract, or keywords, and the papers had to be published in a peer-reviewed journal. Table 1 presents the inclusion and exclusion criteria applied to the extracted papers; four inclusion and eight exclusion criteria were defined to organize the papers for further exploration. In the fast filtering stage, duplicate articles, articles without full text, miscategorized articles, non-English articles, and non-research articles were removed from the list. Next, based on an examination of titles, abstracts, and keywords, unrelated, wrongly related, and only implicitly related articles were removed. Finally, after analysis of the full texts, the papers were classified into three categories: theoretical solutions, practical solutions, and experimental solutions. Fig. 2 shows each step of article collection, inclusion, and exclusion.

Figure 2: Flowchart of the research
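The fast-filtering rules described above lend themselves to partial automation. The sketch below uses a hypothetical `Record` type with an ISO language-code field; it applies the publication window (January 2017 to June 2021), removes duplicate titles, and drops non-English, non-research, and full-text-missing records. Judgments such as wrong categorization, or relevance during title, abstract, and full-text screening, are left to human reviewers and are not modeled.

```python
from dataclasses import dataclass
from datetime import date

START, END = date(2017, 1, 1), date(2021, 6, 30)  # publication window


@dataclass(frozen=True)
class Record:
    """Hypothetical bibliographic record returned by a database search."""
    title: str
    published: date
    language: str        # ISO 639-1 code, e.g. "en"
    has_full_text: bool
    is_research: bool    # False for editorials, commentaries, and similar items


def fast_filter(records):
    """Keep in-window, English, full-text research articles, dropping duplicates."""
    seen, kept = set(), []
    for r in records:
        key = " ".join(r.title.lower().split())  # normalized title as duplicate key
        if key in seen:
            continue
        seen.add(key)
        if not START <= r.published <= END:
            continue
        if r.has_full_text and r.language == "en" and r.is_research:
            kept.append(r)
    return kept
```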

In the data collection process, the concrete data used to answer the research questions are collected. For the first research question, “What kind of technologies are used in chatbot development?”, the data of interest are programming languages for chatbot development, such as Java, Clojure, Python, C++, PHP, and Ruby; natural language understanding cloud platforms, such as DialogFlow [23], wit.ai [24], Microsoft’s Language Understanding (LUIS) [25], and Conversation AI [26]; and chatbot development technologies, such as Rasa [27], Botsify [28], Chatfuel [29], and Manychat [30].

For the second research question, “What aspects of mental health do the chatbots target?”, the data of interest are the psychological disorders that can be addressed with chatbot applications, such as depression, anxiety, panic disorder, suicidal thinking, and mood disorders.

For the third research question, “What kinds of therapy are used in chatbots or conversational agents in the fight against mental health problems?”, the data of interest are the types of therapy implemented in chatbots to address psychological disorders; examples include Cognitive Behavior Therapy (CBT) [31], Dialectical Behavior Therapy (DBT) [32], and Positive Psychology [33] methods.

For the fourth research question, “What types of ML models and applications are currently being applied for mental health?”, the data of interest are the machine learning techniques applied in chatbot development. Supervised, unsupervised, and semi-supervised models such as support vector machines (SVM), random forest (RF), decision trees (DT), k-nearest neighbours (KNN), latent Dirichlet allocation (LDA), logistic regression, and other models were examined at this stage.

For the fifth research question, “To what extent do the papers describe ethical challenges or implications?”, the data of interest are open questions and challenges that refer to ethical issues.

4 Systematic Literature Review

4.1 Question 1: What Kind of Technologies are Used in Chatbot Development?

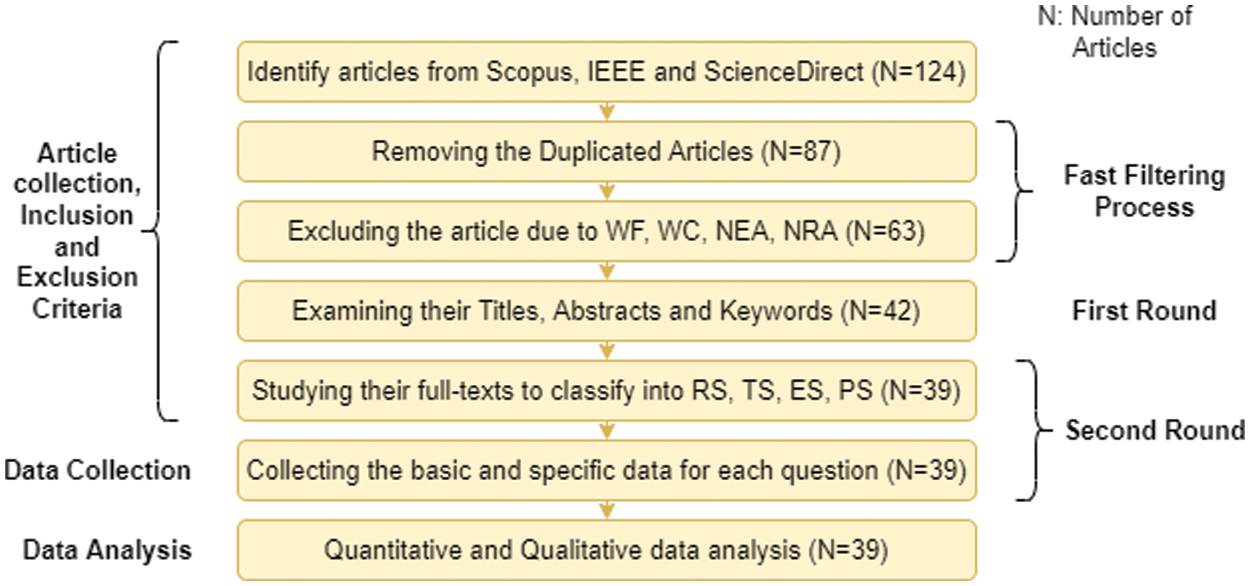

A variety of techniques are used in chatbot development [34]. Developers may choose among approaches, platforms, methodologies, and tools depending on what the chatbot is expected to offer and which class it belongs to; this classification also lets users know what to expect. The requirements for developing a chatbot include appropriate representations, a technique for producing responses, and a library of neutral responses to use when the user’s words cannot be understood [35]. A modular development approach requires first dividing the system into its component parts in accordance with the relevant standards [36]. Fig. 3 presents the overall chatbot architecture. A user initiates the process by posing a query to the chatbot, such as “What is the definition of environment?”, through a messaging platform such as WhatsApp or Facebook, or through a text- or voice-input application such as Amazon Echo [37].

Figure 3: Overall chatbot architecture

Language comprehension: when the chatbot receives a user request, the language understanding component parses it to identify the user’s intent and any relevant information (intent: “translate,” entities: [word: “environment”]) [38]. After arriving at the best feasible interpretation, the chatbot must decide what to do [39]. It might respond immediately to the new information, remember what it has learned and wait to see what happens next, ask for more background information, or ask for clarification. Once the request has been understood, the chatbot performs the necessary actions and retrieves the relevant information from its data sources, which may be a database or other websites reachable through an API [40].

Using the intent and context information retrieved by the message analysis component [41], the response generation module applies NLG [39] to produce a human-like response for the user.
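The three stages described above (language comprehension, action and data retrieval, and response generation) can be sketched in Python as follows. This is a toy, rule-based stand-in for the ML-based components of real platforms: the `define` intent, its regular expression, the `DEFINITIONS` dictionary standing in for a database or API, and the neutral-reply list are all hypothetical.

```python
import random
import re

# Hypothetical data source standing in for a database or external API.
DEFINITIONS = {"environment": "the surroundings in which a person, animal, or plant lives"}

# Library of neutral replies for input the bot cannot interpret.
NEUTRAL_REPLIES = [
    "I'm not sure I follow. Could you rephrase that?",
    "Tell me a bit more about what you mean.",
]

# One hypothetical intent; the optional named group captures the entity.
INTENTS = {
    "define": re.compile(r"\b(?:definition of|define|meaning of)(?:\s+(?P<word>[a-z]+))?"),
}


def understand(message):
    """Language comprehension: map a message to (intent, entities)."""
    text = message.lower()
    for intent, pattern in INTENTS.items():
        match = pattern.search(text)
        if match:
            return intent, {k: v for k, v in match.groupdict().items() if v}
    return None, {}


def act(intent, entities):
    """Decide what to do and fetch any data the answer needs."""
    if intent is None:
        return {"action": "fallback"}
    if "word" not in entities:
        return {"action": "clarify"}  # intent known, entity missing: ask for it
    word = entities["word"]
    return {"action": "answer", "word": word, "definition": DEFINITIONS.get(word)}


def generate(result):
    """Response generation: fill a template for the chosen action."""
    if result["action"] == "fallback":
        return random.choice(NEUTRAL_REPLIES)
    if result["action"] == "clarify":
        return "Which word would you like me to define?"
    if result["definition"] is None:
        return f"Sorry, I don't have a definition for '{result['word']}' yet."
    return f"'{result['word'].capitalize()}' means {result['definition']}."


def reply(message):
    return generate(act(*understand(message)))
```

For example, `reply("What is the definition of environment?")` returns the stored definition, `reply("Define")` asks for clarification, and an unrecognized message such as “hello” falls back to one of the neutral replies.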

A range of open-source and commercial programs can be used to create chatbots, and the range of chatbot-related solutions is still growing at an accelerating pace [42]. A chatbot may be built in one of two ways: with a dedicated chatbot development platform, or with a programming language such as C++, Python, Java, Clojure, or Ruby. Currently, there are six main NLU cloud platforms that developers may use to create NLU applications: Google DialogFlow, Facebook wit.ai, Microsoft LUIS, IBM Watson Conversation, Amazon Lex, and SAP Conversation AI [43]. All of these systems are built on machine learning; they share certain common features but differ considerably in other respects. Well-known chatbot development platforms include RASA [27], Botsify [28], Chatfuel [29], Manychat [30], Flow XO [44], Chatterbot [45], Pandorabots [42], Botkit [42], and Botlytics [42].

Chatbots may be created using a variety of technologies, and these tools take different approaches, from low-code form-based platforms to programming-language frameworks, libraries, and services [46]. Because of this diversity, and because not every tool supports every conceivable functionality, determining which tool is best for building a particular chatbot is quite challenging. Furthermore, chatbot specifications often contain unintended, tool-specific details that make the chatbot’s conceptual model difficult to discern. As a result, reasoning about, understanding, verifying, and testing chatbots independently of their implementation technology becomes difficult. Finally, some platforms are proprietary, which makes migrating chatbots nearly impossible and leads to vendor lock-in.

4.2 Question 2: What Aspects of Mental Health do the Chatbots Target?

A sizable number of papers discussed the use of machine learning to assist in the detection or diagnosis of mental health problems. Many of these studies focus on the early identification of depression or its symptoms, most often by analyzing acoustic features of speech or posts on Twitter. Further uses include identifying emotional states from mobile sensing data, phone typing dynamics [47], and biometric and accelerometer data [49], and evaluating stress from position and location data [48]. In addition, recent advances in the analysis of human-robot or human-agent interventions [50] help assess patients’ emotional and mental health condition. Questionnaire data and text analysis have also been used to automatically identify and retrieve diagnostic information from historical writings or clinical reports [51].
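As an illustration of how typing dynamics can be turned into model inputs, the sketch below summarizes a session of `(timestamp_ms, key)` events into a few features. The feature set and the `"BACKSPACE"` key name are assumptions for illustration, not the features used in [47]; in practice, such features would feed a classifier trained on labeled emotional states.

```python
from statistics import mean, pstdev


def typing_features(events):
    """Summarize a typing session of (timestamp_ms, key) events in time order.

    Needs at least two events. The features (inter-key interval statistics and
    backspace rate) are illustrative, not a validated feature set.
    """
    if len(events) < 2:
        raise ValueError("need at least two key events")
    times = [t for t, _ in events]
    intervals = [later - earlier for earlier, later in zip(times, times[1:])]
    backspaces = sum(1 for _, key in events if key == "BACKSPACE")
    return {
        "mean_interval_ms": mean(intervals),
        "sd_interval_ms": pstdev(intervals),
        "backspace_rate": backspaces / len(events),
    }


session = [(0, "h"), (180, "i"), (420, "BACKSPACE"), (900, "i")]
print(typing_features(session))
```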

The majority of the papers discussed techniques for identifying and diagnosing mental health problems, as well as for anticipating and understanding psychological health concerns. The most frequent approaches used sensor data [52], electronic health record data [53,54], or text [55] to predict future suicide risk. Other examples include the examination of suicide notes as well as later periods [56]. Individual studies have attempted to forecast outcomes such as patient stress [57], the likelihood of re-hospitalization for outpatients with severe psychological conditions [58], and manic or depressive episodes in people with bipolar disorder [59].

A small number of studies focused mostly on Reddit posts to better identify the linguistic features of mental wellness content posted in online communities. These studies used text-mining algorithms to identify helpful and harmful comments in online mental health forums [60,61].
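The specific algorithms of [60,61] are not detailed here, so the following Python sketch swaps in a simple multinomial naive Bayes classifier with add-one smoothing to show the general idea of labeling forum comments as helpful or harmful. The four training comments are invented and far too few for real use.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    return text.lower().split()


class NaiveBayes:
    """Multinomial naive Bayes text classifier with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.class_counts.items():
            total = sum(self.word_counts[label].values())
            score = math.log(n / n_docs)  # log prior
            for w in tokenize(text):
                if w in self.vocab:  # words unseen in training are skipped
                    count = self.word_counts[label][w]
                    score += math.log((count + 1) / (total + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented toy comments; real studies use thousands of annotated posts.
texts = [
    "you are not alone we are here for you",
    "talking to a therapist really helped me",
    "nobody cares just give up",
    "you are worthless and weak",
]
labels = ["helpful", "helpful", "harmful", "harmful"]
model = NaiveBayes().fit(texts, labels)
print(model.predict("we are here to help you"))  # helpful
```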

Outside these three major categories, a few isolated studies used machine learning to better understand which factors (such as psychological symptoms and contextual influences) have the greatest impact on a person’s mental health and how they relate to mental health outcomes [62]. One study described a prototype system in which the user converses with a female avatar that screens for signs of anxiety and sadness [63]. In this adaptive system, the screening questions are modified, and encouragement is offered, according to the user’s responses and emotional state.
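An adaptive screener of this kind can be sketched as a branching rule. The sketch assumes, purely for illustration, that the avatar first asks the two-item PHQ-2 (depressed mood) and GAD-2 (anxiety) screens, each item scored 0 to 3, and moves on to the longer PHQ-9 or GAD-7 only when the commonly used cutoff of 3 is reached; the source does not state which instruments the system in [63] used.

```python
PHQ2_CUTOFF = 3  # commonly used cutoff for a positive PHQ-2 screen
GAD2_CUTOFF = 3  # commonly used cutoff for a positive GAD-2 screen


def next_steps(phq2_items, gad2_items):
    """Choose follow-up modules from two 2-item screens scored 0-3 per item."""
    for items in (phq2_items, gad2_items):
        if len(items) != 2 or any(score not in (0, 1, 2, 3) for score in items):
            raise ValueError("each screen needs two item scores between 0 and 3")
    steps = []
    if sum(phq2_items) >= PHQ2_CUTOFF:
        steps.append("PHQ-9")  # ask the full depression questionnaire
    if sum(gad2_items) >= GAD2_CUTOFF:
        steps.append("GAD-7")  # ask the full anxiety questionnaire
    return steps or ["encouragement"]  # negative screens: supportive message only
```

A real system would also adapt its wording and encouragement to the detected emotional state, which this rule does not model.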

In the case of anxiety, low-quality evidence from two randomized controlled trials (RCTs) revealed no statistically significant difference in anxiety severity between chatbot and control groups. In contrast, one quasi-experiment found that using chatbots significantly reduced anxiety levels. There are two possible explanations for these conflicting results. First, pretest-posttest studies are less reliable than RCTs for assessing the effectiveness of an intervention, partly because of low internal validity and sampling error. Second, unlike the two RCTs, the conversational agent in the quasi-experiment had a virtual embodiment, enabling it to communicate with users both verbally and nonverbally [64].

A narrative synthesis of three studies found no statistically significant difference in subjective psychological wellbeing between chatbot and control groups. The inclusion of nonclinical samples in all three studies may explain this nonsignificant difference: since the participants were already in good mental health, the impact of using chatbots may be minimal.

According to a narrative synthesis of two studies, chatbots substantially reduced levels of distress. This result should be interpreted with caution, since both trials had a high risk of bias. Studies in comparable settings found similar results; for example, an RCT found that online chat therapy substantially reduced psychological distress over time [65].

Both RCTs evaluating chatbot safety concluded that virtual conversational agents are safe for use in psychological care, as no adverse events or harm were recorded when chatbots were used to treat patients with depression. However, given the high risk of bias in the two trials, these data are insufficient to establish that chatbots are safe.

4.3 Question 3: What Kinds of Therapy are Used in the Fight against Mental Problems in Chatbots or Conversational Agents?

Chatbots in Clinical Psychology and Psychotherapy. A chatbot is a computer program that mimics human interaction through a text- or voice-based conversational interface. The underlying model may range from a collection of straightforward rule-based replies and keyword screening to complex NLP algorithms [66]. Psychology and virtual conversational agents share a long history, as do the intriguing philosophical and psychological questions such agents raise: ELIZA, the first well-known chatbot, was originally designed to emulate a Rogerian psychotherapist [30]. According to recent assessments, a few dozen chatbots have been developed to treat a range of conditions, including depression, autism, and anxiety. Chatbots achieve high user satisfaction, and there is considerable early evidence of their efficacy [67].

After a brief, basic dialogue, the simplest of these chatbots can serve as virtual agents or recommendation-system interfaces, guiding users to appropriate mental health information or treatment material. While an AI agent capable of mimicking a human therapist is unlikely to arrive in the near future, more advanced AI agents with strong NLP can carry out short conversations using therapeutic techniques. Although such therapeutic chatbots are not meant to replace human therapists, they may offer their own kind of connection with users. They are available at any time, and they can be used by people who feel embarrassed or insecure about visiting a therapist.

Several advanced mental health virtual assistants have recently emerged: Woebot [68], Wysa [69], and Tess [70]. Through brief daily conversations and mood monitoring, Woebot delivers CBT to clients experiencing depressive symptoms and anxiety. After two weeks of use, the Woebot group showed a considerable decline in depression, as measured by the Patient Health Questionnaire-9 (PHQ-9), whereas the information control group, which received an e-book on depression from the NIMH, did not show a similar decline.
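Since the Woebot result above is reported on the PHQ-9, the sketch below shows how that instrument is scored: nine items, each rated from 0 (“not at all”) to 3 (“nearly every day”), are summed to a total of 0 to 27 and mapped to the standard severity bands. The example responses are invented and do not reproduce data from the Woebot trial.

```python
# Standard PHQ-9 severity bands: (upper bound of total score, label).
BANDS = [(4, "minimal"), (9, "mild"), (14, "moderate"),
         (19, "moderately severe"), (27, "severe")]


def phq9_total(items):
    """Sum nine item scores, each 0 (not at all) to 3 (nearly every day)."""
    if len(items) != 9 or any(score not in (0, 1, 2, 3) for score in items):
        raise ValueError("PHQ-9 needs nine item scores between 0 and 3")
    return sum(items)


def severity(total):
    """Map a PHQ-9 total (0-27) to its severity band."""
    for upper, label in BANDS:
        if 0 <= total <= upper:
            return label
    raise ValueError("PHQ-9 total must be between 0 and 27")


baseline = phq9_total([2, 2, 2, 2, 2, 2, 2, 2, 1])  # invented responses
follow_up = phq9_total([1, 1, 1, 1, 1, 1, 1, 1, 0])
print(baseline, severity(baseline), follow_up, severity(follow_up))
# 17 moderately severe 8 mild
```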

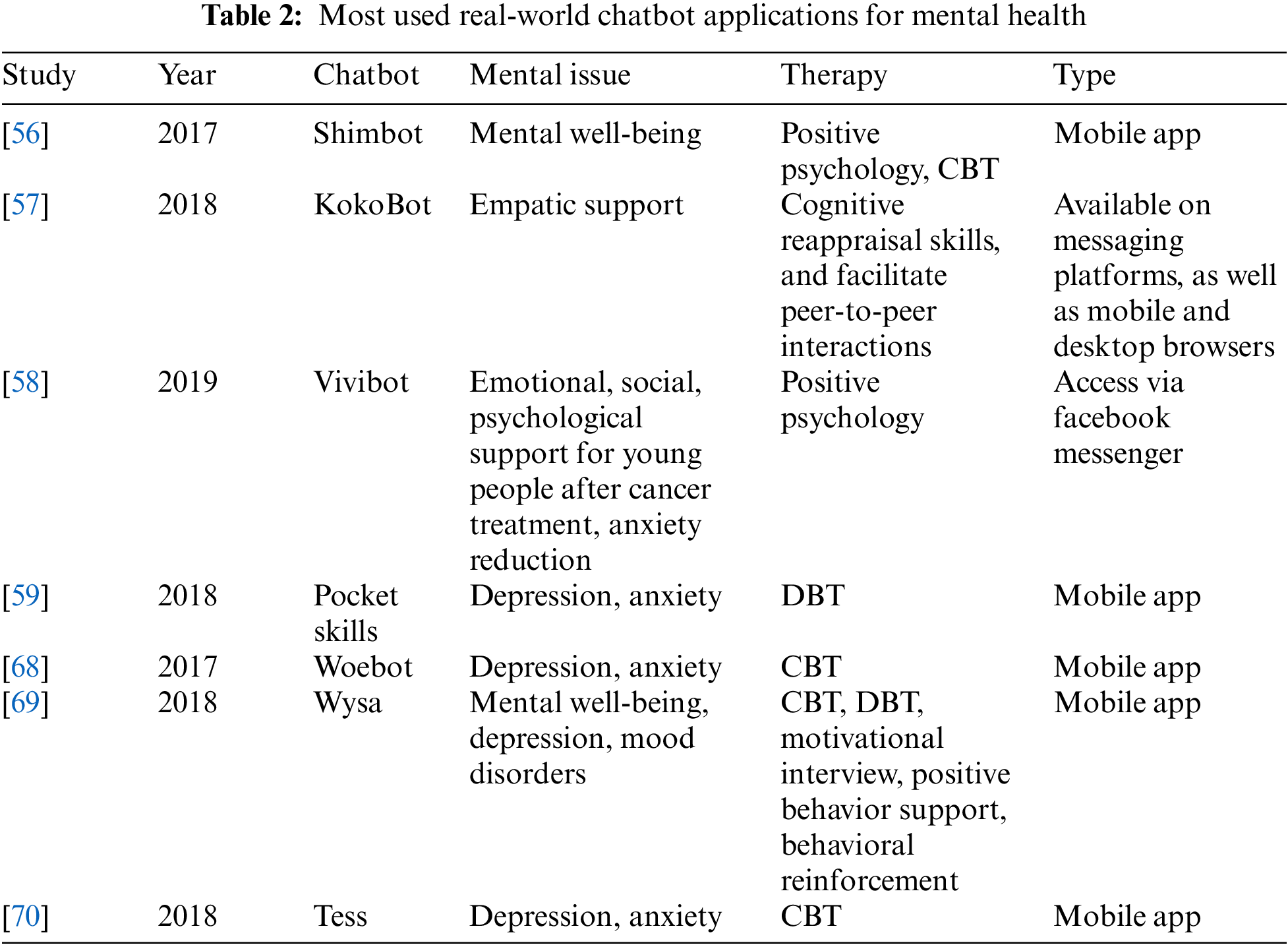

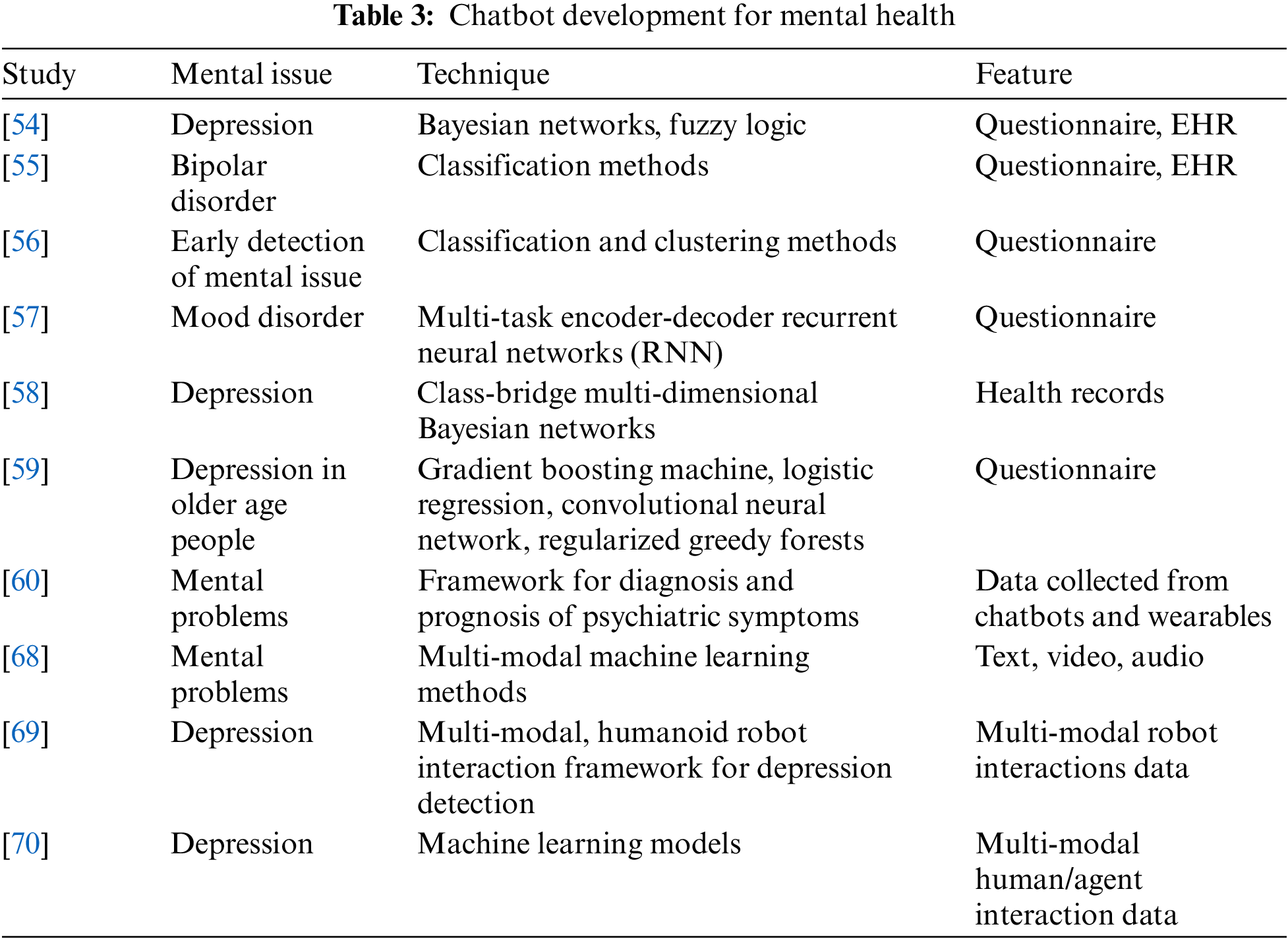

Chatbots and conversational agents for mental disorders show great promise, but more research is needed to obtain reliable results and to verify them in larger samples and over longer periods of time. Work on psychosocial chatbots will also need to address the issue of emotional and empathetic AI, in addition to the technical complexity of language processing methods. Table 2 lists the most widely used real-world chatbot applications for mental health, indicating the mental health issues they address and the therapies they apply. Table 3 describes the neural networks, machine learning techniques, and features that have been applied to mental health treatment.

Digital Interventions in Mental Health. A realistic supply-demand analysis of psychiatric services in 2020 leads to the conclusion that without additional technological advancements, treatment requirements will be difficult to fulfill. The use of digital mental health interventions (DMHI) among older patients has its own set of challenges and benefits. In an opinion piece focusing on healthcare inequities, Seifert et al. examine how new technologies may either alleviate or amplify gaps that already exist across the age spectrum, depending on knowledge, training, and public health objectives [71].

However, DMHIs do not have to replace conventional therapy completely; they may be used alongside or before regular treatment. In a study conducted in the United Kingdom, Duffy et al. used internet-based cognitive behavioural therapy (ICBT) as a step preceding a high-intensity face-to-face intervention to treat 124 individuals with severe depression and anxiety. All main outcome measures decreased significantly from baseline to the end of ICBT, and from the end of ICBT to the end of the service. The findings suggest that ICBT can be used to shorten waiting times and increase efficiency [72].

The potential of technology-enabled mental health treatment is likely best demonstrated by the virtual reality (VR) and artificial intelligence developments taking place within the wider field of DMHIs. VR has been studied for its potential therapeutic use in psychiatric disorders for almost as long as it has been used for gaming, and the number of scientific investigations into VR therapies has increased dramatically in recent years, notably for anxiety disorders. In their opinion piece, Baragash et al. analyze this trend and suggest educational, practice, and research strategies for taking full advantage of what VR treatments can offer. However, VR’s reach among patients and clinicians remains limited [73].

Radiology and pathology are two medical fields being transformed by AI and ML, and these technologies also hold the promise of tailored, low-cost therapies that can easily be scaled across medicine as a whole. What, then, are their potential strengths and weaknesses in mental health? Some recent studies investigate how AI-enabled conversational agents may influence psychological and psychiatric treatment at the levels of diagnosis, data gathering, and therapy, and propose four alternative approaches as a framework for further research and regulation.

Psychological health therapies, whether technological or not, must be documented in patient records maintained by clinical professionals and healthcare providers. According to Williams, people should have access to their medical records for the sake of empowerment and autonomy. Although the now widely used electronic medical record substantially simplifies such access, patient portals often restrict access to information on mental wellbeing, for the reasons and arguments described in that work.

Finally, DMHIs illustrate how technology can be useful in the field of psychological disorders. A comparable and equally large body of research has focused on the negative aspects of technology, including addiction, entertainment, bullying, and online impulsivity. As Baldwin et al. explore in a perspective piece, despite certain parallels, these two academic fields have largely developed independently of one another. Understanding the issues at the intersection of computer science and psychology requires cooperation between scholars on both sides.

To sum up, the studies indicate that DMHIs achieve outcomes on a par with traditional programs and offer some underserved populations a promising form of treatment; moreover, industry experts and healthcare providers are, on the whole, eager to apply the newest technological advances, such as augmented reality and cognitive computing, to the diagnosis and treatment of psychological disorders. The disadvantages of DMHIs include technical limitations, a lack of large datasets and long-term studies, and possible paradoxical outcomes in which electronic resources reinforce rather than reduce healthcare problems. Given the relatively low availability of traditional mental health treatment and the urgent need to meet rising demand, DMHIs are likely to be an integral component of any solution to treatment access issues.

4.4 Question 4: What Types of Machine Learning Techniques are Currently Being Applied for Mental Health?

Classification and supervised learning are the most common machine learning tasks and techniques. A variety of machine learning methods, including classification, regression, and clustering, can be applied to basic tasks such as detecting correlations and identifying patterns in high-dimensional datasets, reducing them to simpler, human-interpretable forms. The articles in the corpus were divided into four machine learning groups: supervised learning, unsupervised learning, semi-supervised learning, and new methods.

Unsupervised learning clusters data using mathematical methods to offer new insights. In this case, the dataset includes only inputs and no target output labels. Clustering techniques group data according to the presence or absence of similarities between data points, identifying patterns and helping to organize the data. Only two studies [68,69] used clustering, to differentiate language usage in online conversations and to extract diagnoses from psychiatric reports, respectively. However, data clustering was often used as a first step in classification (as mentioned above), to assist in feature selection or label identification when developing supervised learning models [60,71].
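To illustrate clustering as a first step before classification, the sketch below runs Lloyd’s k-means algorithm in plain Python; the resulting cluster IDs could then serve as provisional labels or features for a supervised model. The two-dimensional points are hypothetical feature vectors (for example, two text statistics per post), and the initial centroids are fixed so that the result is reproducible.

```python
import math


def assign(points, centroids):
    """Assignment step: index of the nearest centroid for every point."""
    return [min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            for p in points]


def kmeans(points, centroids, max_iter=100):
    """Lloyd's k-means from given initial centroids; returns (labels, centroids)."""
    centroids = [tuple(c) for c in centroids]
    for _ in range(max_iter):
        labels = assign(points, centroids)
        updated = []
        for j, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == j]
            # Update step: move the centroid to its members' mean (keep it if empty).
            updated.append(tuple(sum(axis) / len(members) for axis in zip(*members))
                           if members else old)
        if updated == centroids:  # converged
            break
        centroids = updated
    return assign(points, centroids), centroids


# Hypothetical 2-D feature vectors, e.g. two text statistics per forum post.
posts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labels, centers = kmeans(posts, centroids=[(0, 0), (10, 10)])
print(labels)  # [0, 0, 0, 1, 1, 1]
```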

Only one article explicitly reported using a semi-supervised learning model [73], which combined labeled and unlabeled data, and only a few studies (n = 6) reported using new approaches. Deep learning techniques have been used to provide customized suggestions for stress-management measures [68], while custom machine learning models have been employed to develop multi-class classifiers [69]. The remaining studies either applied pre-existing classifiers to newly collected data [70] or presented key ideas without using machine learning [67].
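Self-training (pseudo-labeling) is one common way to combine labeled and unlabeled data. The sketch below uses it purely for illustration and does not reproduce the model in [73]. A nearest-centroid classifier is fitted on the few labeled points. Unlabeled points that it classifies with a large enough distance margin are added with their predicted labels, and the process repeats. The feature vectors, the labels `low_risk`/`high_risk`, and the `min_margin` threshold are all hypothetical.

```python
import math


def fit_centroids(points, labels):
    """Nearest-centroid 'training': the mean feature vector of each class."""
    centroids = {}
    for label in set(labels):
        members = [p for p, l in zip(points, labels) if l == label]
        centroids[label] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids


def predict_with_margin(centroids, point):
    """Closest class, plus its distance gap to the runner-up as a crude confidence."""
    ranked = sorted((math.dist(point, c), label) for label, c in centroids.items())
    margin = ranked[1][0] - ranked[0][0] if len(ranked) > 1 else math.inf
    return ranked[0][1], margin


def self_train(labeled, labels, unlabeled, min_margin=1.0, max_rounds=10):
    """Self-training: repeatedly add confidently pseudo-labeled points."""
    labeled, labels, unlabeled = list(labeled), list(labels), list(unlabeled)
    for _ in range(max_rounds):
        centroids = fit_centroids(labeled, labels)
        still_unlabeled = []
        added = 0
        for point in unlabeled:
            label, margin = predict_with_margin(centroids, point)
            if margin >= min_margin:
                labeled.append(point)  # accept the pseudo-label
                labels.append(label)
                added += 1
            else:
                still_unlabeled.append(point)
        unlabeled = still_unlabeled
        if added == 0:
            break  # nothing confident left to add
    return fit_centroids(labeled, labels), unlabeled


# Hypothetical data: two labeled points and three unlabeled ones.
centroids, leftover = self_train(
    labeled=[(0.0, 0.0), (10.0, 10.0)],
    labels=["low_risk", "high_risk"],
    unlabeled=[(1.0, 1.0), (9.0, 9.0), (5.0, 5.0)],
)
print(predict_with_margin(centroids, (2.0, 2.0))[0])  # low_risk
print(leftover)  # [(5.0, 5.0)]
```

Ambiguous points, such as the one equidistant from both classes here, stay unlabeled rather than being forced into a class. This limits the propagation of labeling errors.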

Finally, natural language processing (NLP) of both speech and text, a field closely tied to machine learning, mostly employs unsupervised methods. For instance, numerous studies [67] used vocabulary-based and other text-mining methods [72] to extract keywords (such as anxiety, depression, or stress), topics, or idiomatic expressions from phrases. These features were then used to build classification models or to assess the semantic sentiment of human speech. A few studies, such as [54,57], have examined the acoustic and paralinguistic aspects of speech, including prosody, pitch, and speech-rate estimates.
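The following is a minimal Python sketch of lexicon-based keyword feature extraction. Each text is mapped to per-category keyword counts that could then feed a classifier or a sentiment model. The `LEXICON` word lists are hypothetical and far smaller than the validated dictionaries used in practice.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny lexicon; real studies use validated dictionaries.
LEXICON = {
    "anxiety": {"anxious", "anxiety", "worried", "panic"},
    "depression": {"depressed", "hopeless", "empty", "sad"},
    "stress": {"stress", "stressed", "overwhelmed", "pressure"},
}


def keyword_features(text):
    # Lowercase and split into alphabetic tokens (apostrophes kept, e.g. "i'm").
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tokens)
    # One feature per category: how many tokens fall in that category's word list.
    return {category: sum(counts[w] for w in words) for category, words in LEXICON.items()}


features = keyword_features("I'm so stressed and worried, I feel hopeless and anxious.")
print(features)  # {'anxiety': 2, 'depression': 1, 'stress': 1}
```

Simple counts ignore negation and context ("not anxious" still counts toward anxiety). This is one reason studies often combine such lexicon features with other text-mining methods.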

In summary, consistent with the corpus's emphasis on mental health assessment, the studies mostly used supervised machine learning methods. They tested whether, and how effectively, specific mental health behaviors, states, or disorders could be classified using newly built data models. Most unsupervised learning methods were used to support data labeling and feature selection for classification. This is consistent with the results of the clinical systematic review [73]. However, other avenues for applying ML methods, such as enabling personalization, remain unexplored.

4.5 Question 5: To What Extent do the Papers Describe Ethical Challenges or Implications?

By its very nature, psychiatric care is recognized as a field that raises unique ethical and legal issues and requires regulation. The advancement of AI, and its increasing application in a variety of fields, including psychological disorders, has made ethical scrutiny and oversight of its use necessary. Consequently, we have arrived at a crossroads where the convergence of artificial intelligence and mental health raises its own set of new questions [56].

While AI-enabled chatbots offer many potential advantages, they also raise many ethical issues that need to be addressed. One major concern is the prevention of harm. Robust investigations are required to ensure non-maleficence in therapeutic interactions and to anticipate how to deal with situations in which robots fail or behave in unanticipated ways [61]. Traditional medical devices undergo extensive risk assessment and regulatory oversight before being approved for therapeutic use. It is unclear whether AI-enabled devices, such as chatbots, conversational agents, and publicly accessible mental health apps, should be subjected to the same scrutiny.

As one of the most recent areas of psychological and psychiatric research and treatment, AI-enabled applications need guidance on design, biomedical integration, and training [64]. Existing legal and ethical frameworks are often out of step with these developments, putting them at risk of failing to provide adequate regulatory guidance. As long as "gaps" remain between the use of certain apps and established ethical standards, harm may only be addressed retrospectively [67]. To feed useful insight into AI design and development, deliberate discussion of, and reflection on, how developments in embodied AI relate to mental health treatment are necessary. Admittedly, it is difficult to foresee all the ethical challenges raised by present and future chatbot developments. Addressing them also entails developing higher-level guidance from medical and psychiatric councils on how medical professionals can acquire the skills needed to employ embodied AI in clinical practice [68,69,71].

As AI becomes more incorporated into mental health care, it is critical to consider how intelligent apps and robots may alter the current landscape of services. If embodied AI alternatives are seen as providing equivalent treatment, their availability may be used to justify removing existing services. The result could be either fewer mental health care options or options that are mainly AI-driven. If this happens, it may exacerbate current healthcare problems. As a result, it is crucial to consider AI's integration in the context of other mental health care tools. At their present stage of development, embodied AI and robots cannot fully substitute for comprehensive, multi-tiered psychological treatment. Therefore, it is critical to ensure that the integration of AI services does not serve as a pretext for eliminating multi-layered treatment of psychological disorders provided by qualified experts.

It is worth noting that users perceived medical chatbots as offering many advantages, including anonymity, convenience, and faster access to relevant information. This supports previous research suggesting that users are as willing to share emotional and factual information with a chatbot as with a human partner. Conversations with chatbots and with humans produced comparable levels of perceived understanding, disclosure intimacy, and cognitive reappraisal. This suggests that individuals psychologically interact with chatbots in the same way they do with people. A few participants in mental health settings mentioned perceived anonymity, but preferences for specific chatbot uses in healthcare contexts need further investigation. Our results support qualitative research findings on users' expectations of chatbots in terms of comprehension and preferences. Users are usually unsure what chatbots can accomplish, but they believe that this technology will improve their experience by giving instant access to relevant and useful information. They also regard the chatbot's non-judgmental nature as a unique feature. However, it has previously been pointed out that establishing rapport with a chatbot would require trust and meaningful interactions. These reasons for using chatbots need to be examined more thoroughly to determine how this technology may be safely integrated into healthcare.

As the use of AI technologies in treating psychological disorders becomes more widespread, patient motivation and engagement must be thoroughly evaluated to maximize their benefits. According to our research, many individuals have open access to medical conversational agents, yet most people with mental health concerns are still hesitant to turn to AI-enabled solutions. Therefore, intervention designers should use theory-based techniques to address user concerns. They should also provide effective and ethical services capable of narrowing the gap in healthcare and well-being. Future studies are required to determine how clinical chatbots might be effectively employed in healthcare and preventive medicine, especially by enabling individuals to take a more active role in managing their own health problems.

The main topics of this review are the technologies used to develop chatbots for mental health, the mental health problems that chatbot applications can address, the artificial intelligence methods used in chatbot applications, and the ethical problems in developing chatbot applications for mental health.

The target audience for mental health software has been identified as an underserved and vulnerable part of the population in urgent need of medical support. Consequently, the creators of these apps should treat inconsistent information and missing data with care. It is critically important that users be informed if an app was developed as part of an experiment that will end after a certain period or has already been terminated.

Designers should better understand the needs of vulnerable and underserved populations from the viewpoints of both end-users and experts. These insights go beyond the mental health problems themselves to include questions such as how people who need mental health support can benefit from these programs, when and why they should stop using them, and what this implies for their wellness. Application developers should not interpret the absence of a known detrimental impact for a single condition as evidence of no effect at all. Such an absence may not hold, or may not have been demonstrated, for digital behavior.

As with conventional learning, each individual has a preferred learning style when using digital applications. Two main advantages of a digital instrument are the ability to quantify variation in learning approaches and to adjust for it. Much remains to be learned about digital learning techniques in individual cases. Each patient's mental health state further complicates this variety in learning styles. Nevertheless, AI-based personalization is considered a central concept in the mental health app market. The extent of personalization is a further issue raised in this research. A framework for assessing mental health programs should include metrics for characteristics such as customization: how personalized the app is, how relevant it is to the patient's condition, and how this personalization affects both the targeted condition and the patient's general wellbeing.

Funding Statement: This work was supported by the grant “Development of an intellectual system prototype for online-psychological support that can diagnose and improve youth’s psycho-emotional state” funded by the Ministry of Education of the Republic of Kazakhstan. Grant No. IRN AP09259140.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. H. Pontes, B. Schivinski, C. Sindermann, M. Li, B. Becker et al., “Measurement and conceptualization of gaming disorder according to the world health organization framework: The development of the gaming disorder test,” International Journal of Mental Health and Addiction, vol. 19, no. 2, pp. 508–528, 2021.

2. Y. Ransome, H. Luan, I. Song, D. Fiellin and S. Galea, “Association of poor mental-health days with COVID-19 infection rates in the US,” American Journal of Preventive Medicine, vol. 62, no. 3, pp. 326–332, 2022.

3. R. Levant, M. Gregor and K. Alto, “Dimensionality, variance composition, and development of a brief form of the duke health profile, and its measurement invariance across five gender identity groups,” Psychology & Health, vol. 37, no. 5, pp. 658–673, 2022.

4. S. Zhang, T. Gong, H. Wang, Y. Zhao and Q. Wu, “Global, regional, and national endometriosis trends from 1990 to 2017,” Annals of the New York Academy of Sciences, vol. 1484, no. 1, pp. 90–101, 2021.

5. J. Campion, A. Javed, C. Lund, N. Sartorius, S. Saxena et al., “Public mental health: Required actions to address implementation failure in the context of COVID-19,” The Lancet Psychiatry, vol. 9, no. 2, pp. 169–182, 2022.

6. B. Williamson, K. Gulson, C. Perrotta and K. Witzenberger, “Amazon and the new global connective architectures of education governance,” Harvard Educational Review, vol. 92, no. 2, pp. 231–256, 2022.

7. A. Chan and M. Hone, “User perceptions of mobile digital apps for mental health: Acceptability and usability-an integrative review,” Journal of Psychiatric and Mental Health Nursing, vol. 29, no. 1, pp. 147–168, 2022.

8. T. Furukawa, A. Suganuma, E. Ostinelli, G. Andersson, C. Beevers et al., “Dismantling, optimising, and personalising internet cognitive behavioural therapy for depression: A systematic review and component network meta-analysis using individual participant data,” The Lancet Psychiatry, vol. 8, no. 6, pp. 500–511, 2021.

9. E. Lattie, C. Stiles-Shields and A. Graham, “An overview of and recommendations for more accessible digital mental health services,” Nature Reviews Psychology, vol. 1, no. 2, pp. 87–100, 2022.

10. J. Paay, J. Kjeldskov, E. Papachristos, K. Hansen, T. Jørgensen et al., “Can digital personal assistants persuade people to exercise,” Behaviour & Information Technology, vol. 41, no. 2, pp. 416–432, 2022.

11. K. Nirala, N. Singh and V. Purani, “A survey on providing customer and public administration based services using AI: Chatbot,” Multimedia Tools and Applications, vol. 81, no. 1, pp. 22215–22246, 2022.

12. A. Adikari, D. De Silva, H. Moraliyage, D. Alahakoon, J. Wong et al., “Empathic conversational agents for real-time monitoring and co-facilitation of patient-centered healthcare,” Future Generation Computer Systems, vol. 126, no. 1, pp. 318–329, 2022.

13. N. Kazantzis and A. Miller, “A comprehensive model of homework in cognitive behavior therapy,” Cognitive Therapy and Research, vol. 46, no. 1, pp. 247–257, 2022.

14. C. Chang, S. Kuo and G. Hwang, “Chatbot-facilitated nursing education,” Educational Technology & Society, vol. 25, no. 1, pp. 15–27, 2022.

15. R. May and K. Denecke, “Security, privacy, and healthcare-related conversational agents: A scoping review,” Informatics for Health and Social Care, vol. 47, no. 2, pp. 194–210, 2022.

16. Y. Wang, N. Zhang and X. Zhao, “Understanding the determinants in the different government AI adoption stages: Evidence of local government chatbots in China,” Social Science Computer Review, vol. 40, no. 2, pp. 534–554, 2022.

17. A. Chaves, J. Egbert, T. Hocking, E. Doerry and M. Gerosa, “Chatbots language design: The influence of language variation on user experience with tourist assistant chatbots,” ACM Transactions on Computer-Human Interaction, vol. 29, no. 2, pp. 1–38, 2022.

18. K. Wołk, A. Wołk, D. Wnuk, T. Grześ and I. Skubis, “Survey on dialogue systems including slavic languages,” Neurocomputing, vol. 477, no. 1, pp. 62–84, 2022.

19. N. Ahmad, M. Che, A. Zainal, M. Abd Rauf and Z. Adnan, “Review of chatbots design techniques,” International Journal of Computer Applications, vol. 181, no. 8, pp. 7–10, 2022.

20. A. Haghighian Roudsari, J. Afshar, W. Lee and S. Lee, “PatentNet: Multi-label classification of patent documents using deep learning based language understanding,” Scientometrics, vol. 127, no. 1, pp. 207–231, 2022.

21. L. Curry, I. Nembhard and E. Bradley, “Qualitative and mixed methods provide unique contributions to outcomes research,” Circulation, vol. 119, no. 10, pp. 1442–1452, 2009.

22. C. Pickering and J. Byrne, “The benefits of publishing systematic quantitative literature reviews for PhD candidates and other early-career researchers,” Higher Education Research & Development, vol. 33, no. 3, pp. 534–548, 2014.

23. J. Yoo and Y. Cho, “ICSA: Intelligent chatbot security assistant using text-CNN and multi-phase real-time defense against SNS phishing attacks,” Expert Systems with Applications, vol. 207, no. 1, pp. 1–12, 2022.

24. X. Pérez-Palomino, K. Rosas-Paredes and J. Esquicha-Tejada, “Low-cost gas leak detection and surveillance system for single family homes using wit.ai, raspberry pi and arduino,” International Journal of Interactive Mobile Technologies, vol. 16, no. 9, pp. 206–216, 2022.

25. L. Xu, A. Iyengar and W. Shi, “NLUBroker: A QoE-driven broker system for natural language understanding services,” ACM Transactions on Internet Technology (TOIT), vol. 22, no. 3, pp. 1–29, 2022.

26. L. Cao, “A new age of AI: Features and futures,” IEEE Intelligent Systems, vol. 37, no. 1, pp. 25–37, 2022.

27. T. Rasa and A. Laherto, “Young people’s technological images of the future: Implications for science and technology education,” European Journal of Futures Research, vol. 10, no. 1, pp. 1–15, 2022.

28. C. Abreu and P. Campos, “Raising awareness of smartphone overuse among university students: A persuasive systems approach,” Informatics, vol. 9, no. 1, pp. 1–15, 2022.

29. A. Mandayam, S. Siddesha and S. Niranjan, “Intelligent conversational model for mental health wellness,” Turkish Journal of Computer and Mathematics Education, vol. 13, no. 2, pp. 1008–1017, 2022.

30. J. Isla-Montes, A. Berns, M. Palomo-Duarte and J. Dodero, “Redesigning a foreign language learning task using mobile devices: A comparative analysis between the digital and paper-based outcomes,” Applied Sciences, vol. 12, no. 11, pp. 1–18, 2022.

31. S. Sulaiman, M. Mansor, R. Wahid and N. Azhar, “Anxiety Assistance Mobile Apps Chatbot Using Cognitive Behavioural Therapy,” International Journal of Artificial Intelligence, vol. 9, no. 1, pp. 17–23, 2022.

32. G. Simon, S. Shortreed, R. Rossom, A. Beck, G. Clarke et al., “Effect of offering care management or online dialectical behavior therapy skills training vs usual care on self-harm among adult outpatients with suicidal ideation: A randomized clinical trial,” The Journal of the American Medical Association (JAMA), vol. 327, no. 7, pp. 630–638, 2022.

33. P. Maheswari, “COVID-19 lockdown in India: An experimental study on promoting mental wellness using a chatbot during the coronavirus,” International Journal of Mental Health Promotion, vol. 24, no. 2, pp. 189–205, 2022.

34. W. Huang, K. Hew and L. Fryer, “Chatbots for language learning—are they really useful? A systematic review of chatbot-supported language learning,” Journal of Computer Assisted Learning, vol. 38, no. 1, pp. 237–257, 2022.

35. S. Divya, V. Indumathi, S. Ishwarya, M. Priyasankari and S. Devi, “A self-diagnosis medical chatbot using artificial intelligence,” Journal of Web Development and Web Designing, vol. 3, no. 1, pp. 1–7, 2018.

36. A. Følstad, T. Araujo, E. Law, P. Brandtzaeg, S. Papadopoulos et al., “Future directions for chatbot research: An interdisciplinary research agenda,” Computing, vol. 103, no. 12, pp. 2915–2942, 2021.

37. D. Rooein, D. Bianchini, F. Leotta, M. Mecella, P. Paolini et al., “aCHAT-WF: Generating conversational agents for teaching business process models,” Software and Systems Modeling, vol. 21, no. 3, pp. 891–914, 2021.

38. Y. Ouyang, C. Yang, Y. Song, X. Mi and M. Guizani, “A brief survey and implementation on refinement for intent-driven networking,” IEEE Network, vol. 35, no. 6, pp. 75–83, 2021.

39. F. Corno, L. De Russis and A. Roffarello, “From users’ intentions to if-then rules in the internet of things,” ACM Transactions on Information Systems, vol. 39, no. 4, pp. 1–33, 2021.

40. D. Chandran, “Use of AI voice authentication technology instead of traditional keypads in security devices,” Journal of Computer and Communications, vol. 10, no. 6, pp. 11–21, 2022.

41. M. Aliannejadi, H. Zamani, F. Crestani and W. Croft, “Context-aware target apps selection and recommendation for enhancing personal mobile assistants,” ACM Transactions on Information Systems, vol. 39, no. 3, pp. 1–30, 2021.

42. S. Pérez-Soler, S. Juarez-Puerta, E. Guerra and J. de Lara, “Choosing a chatbot development tool,” IEEE Software, vol. 38, no. 4, pp. 94–103, 2021.

43. P. Venkata Reddy, K. Nandini Prasad and C. Puttamadappa, “Farmer’s friend: Conversational AI bot for smart agriculture,” Journal of Positive School Psychology, vol. 6, no. 2, pp. 2541–2549, 2022.

44. J. Peña-Torres, S. Giraldo-Alegría, C. Arango-Pastrana and V. Bucheli, “A chatbot to support information needs in times of COVID,” Systems Engineering, vol. 24, no. 1, pp. 109–119, 2022.

45. R. Alsadoon, “Chatting with AI bot: Vocabulary learning assistant for Saudi EFL learners,” English Language Teaching, vol. 14, no. 6, pp. 135–157, 2021.

46. D. Di Ruscio, D. Kolovos, J. de Lara, A. Pierantonio, M. Tisi et al., “Low-code development and model-driven engineering: Two sides of the same coin?,” Software and Systems Modeling, vol. 21, no. 2, pp. 437–446, 2022.

47. X. Xu, H. Peng, M. Bhuiyan, Z. Hao, L. Liu et al., “Privacy-preserving federated depression detection from multisource mobile health data,” IEEE Transactions on Industrial Informatics, vol. 18, no. 7, pp. 4788–4797, 2021.

48. K. Crandall, “Knowledge sharing technology in school counseling: A literature review,” Journal of Computing Sciences in Colleges, vol. 37, no. 2, pp. 61–69, 2021.

49. S. Khowaja, A. Prabono, F. Setiawan, B. Yahya and S. Lee, “Toward soft real-time stress detection using wrist-worn devices for human workspaces,” Soft Computing, vol. 25, no. 4, pp. 2793–2820, 2021.

50. L. Fiorini, F. Loizzo, A. Sorrentino, J. Kim, E. Rovini et al., “Daily gesture recognition during human-robot interaction combining vision and wearable systems,” IEEE Sensors Journal, vol. 21, no. 20, pp. 23568–23577, 2021.

51. P. Amiri and E. Karahanna, “Chatbot use cases in the COVID-19 public health response,” Journal of the American Medical Informatics Association, vol. 29, no. 5, pp. 1000–1010, 2022.

52. R. Wang, B. Yang, Y. Ma, P. Wang, Q. Yu et al., “Medical-level suicide risk analysis: A novel standard and evaluation model,” IEEE Internet of Things Journal, vol. 8, no. 23, pp. 16825–16834, 2021.

53. Y. Kim, J. DeLisa, Y. Chung, N. Shapiro, S. Kolar Rajanna et al., “Recruitment in a research study via chatbot versus telephone outreach: A randomized trial at a minority-serving institution,” Journal of the American Medical Informatics Association, vol. 29, no. 1, pp. 149–154, 2022.

54. M. McKillop, B. South, A. Preininger, M. Mason and G. Jackson, “Leveraging conversational technology to answer common COVID-19 questions,” Journal of the American Medical Informatics Association, vol. 28, no. 4, pp. 850–855, 2021.

55. A. Chekroud, J. Bondar, J. Delgadillo, G. Doherty, A. Wasil et al., “The promise of machine learning in predicting treatment outcomes in psychiatry,” World Psychiatry, vol. 20, no. 2, pp. 154–170, 2022.

56. A. Darcy, A. Beaudette, E. Chiauzzi, J. Daniels, K. Goodwin et al., “Anatomy of a Woebot® (WB001): Agent guided CBT for women with postpartum depression,” Expert Review of Medical Devices, vol. 19, no. 4, pp. 287–301, 2022.

57. A. Zhang, B. Zebrack, C. Acquati, M. Roth, N. Levin et al., “Technology-assisted psychosocial interventions for childhood, adolescent, and young adult cancer survivors: A systematic review and meta-analysis,” Journal of Adolescent and Young Adult Oncology, vol. 11, no. 1, pp. 6–16, 2022.

58. M. Fleming, “Considerations for the ethical implementation of psychological assessment through social media via machine learning,” Ethics & Behavior, vol. 31, no. 3, pp. 181–192, 2021.

59. T. Zidaru, E. Morrow and R. Stockley, “Ensuring patient and public involvement in the transition to AI-assisted mental health care: A systematic scoping review and agenda for design justice,” Health Expectations, vol. 24, no. 4, pp. 1072–1124, 2021.

60. R. Boyd and H. Schwartz, “Natural language analysis and the psychology of verbal behavior: The past, present, and future states of the field,” Journal of Language and Social Psychology, vol. 40, no. 1, pp. 21–41, 2021.

61. T. Solomon, R. Starks, A. Attakai, F. Molina, F. Cordova-Marks et al., “The generational impact of racism on health: Voices from American Indian communities: Study examines the generational impact of racism on the health of American Indian communities and people,” Health Affairs, vol. 41, no. 2, pp. 281–288, 2022.

62. K. Spanhel, S. Balci, F. Feldhahn, J. Bengel, H. Baumeister et al., “Cultural adaptation of internet-and mobile-based interventions for mental disorders: A systematic review,” NPJ Digital Medicine, vol. 4, no. 1, pp. 1–18, 2021.

63. A. Dillette, A. Douglas and C. Andrzejewski, “Dimensions of holistic wellness as a result of international wellness tourism experiences,” Current Issues in Tourism, vol. 24, no. 6, pp. 794–810, 2021.

64. H. Haller, P. Breilmann, M. Schröter, G. Dobos and H. Cramer, “A systematic review and meta-analysis of acceptance-and mindfulness-based interventions for DSM-5 anxiety disorders,” Scientific Reports, vol. 11, no. 1, pp. 1–13, 2021.

65. Q. Tong, R. Liu, K. Zhang, Y. Gao, G. Cui et al., “Can acupuncture therapy reduce preoperative anxiety? A systematic review and meta-analysis,” Journal of Integrative Medicine, vol. 19, no. 1, pp. 20–28, 2021.

66. B. Omarov, S. Narynov, Z. Zhumanov, A. Gumar and M. Khassanova, “A skeleton-based approach for campus violence detection,” Computers, Materials & Continua, vol. 72, no. 1, pp. 315–331, 2022.

67. J. Torous, S. Bucci, I. Bell, L. Kessing, M. Faurholt-Jepsen et al., “The growing field of digital psychiatry: Current evidence and the future of apps, social media, chatbots, and virtual reality,” World Psychiatry, vol. 20, no. 3, pp. 318–335, 2021.

68. S. Prasad Uprety and S. Ryul Jeong, “The impact of semi-supervised learning on the performance of intelligent chatbot system,” Computers, Materials & Continua, vol. 71, no. 2, pp. 3937–3952, 2022.

69. C. Wilks, K. Gurtovenko, K. Rebmann, J. Williamson, J. Lovell et al., “A systematic review of dialectical behavior therapy mobile apps for content and usability,” Borderline Personality Disorder and Emotion Dysregulation, vol. 8, no. 1, pp. 1–13, 2021.

70. S. D’Alfonso, “AI in mental health,” Current Opinion in Psychology, vol. 36, no. 1, pp. 112–117, 2020.

71. R. Bevan Jones, P. Stallard, S. Agha, S. Rice and A. Werner-Seidler, “Practitioner review: Co-design of digital mental health technologies with children and young people,” Journal of Child Psychology and Psychiatry, vol. 61, no. 8, pp. 928–940, 2020.

72. R. Baragash, H. Al-Samarraie, L. Moody and F. Zaqout, “Augmented reality and functional skills acquisition among individuals with special needs: A meta-analysis of group design studies,” Journal of Special Education Technology, vol. 37, no. 1, pp. 74–81, 2022.

73. F. Williams, “The use of digital devices by district nurses in their assessment of service users,” British Journal of Community Nursing, vol. 27, no. 7, pp. 342–348, 2022.


Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
