Open Access

ARTICLE

Melanoma Detection Based on Hybridization of Extended Feature Space

DCSA, Panjab University, Chandigarh, 160014, India

* Corresponding Author: Shakti Kumar. Email:

Intelligent Automation & Soft Computing 2023, 37(2), 2175-2198. https://doi.org/10.32604/iasc.2023.039093

Received 10 January 2023; Accepted 11 April 2023; Issue published 21 June 2023

Abstract

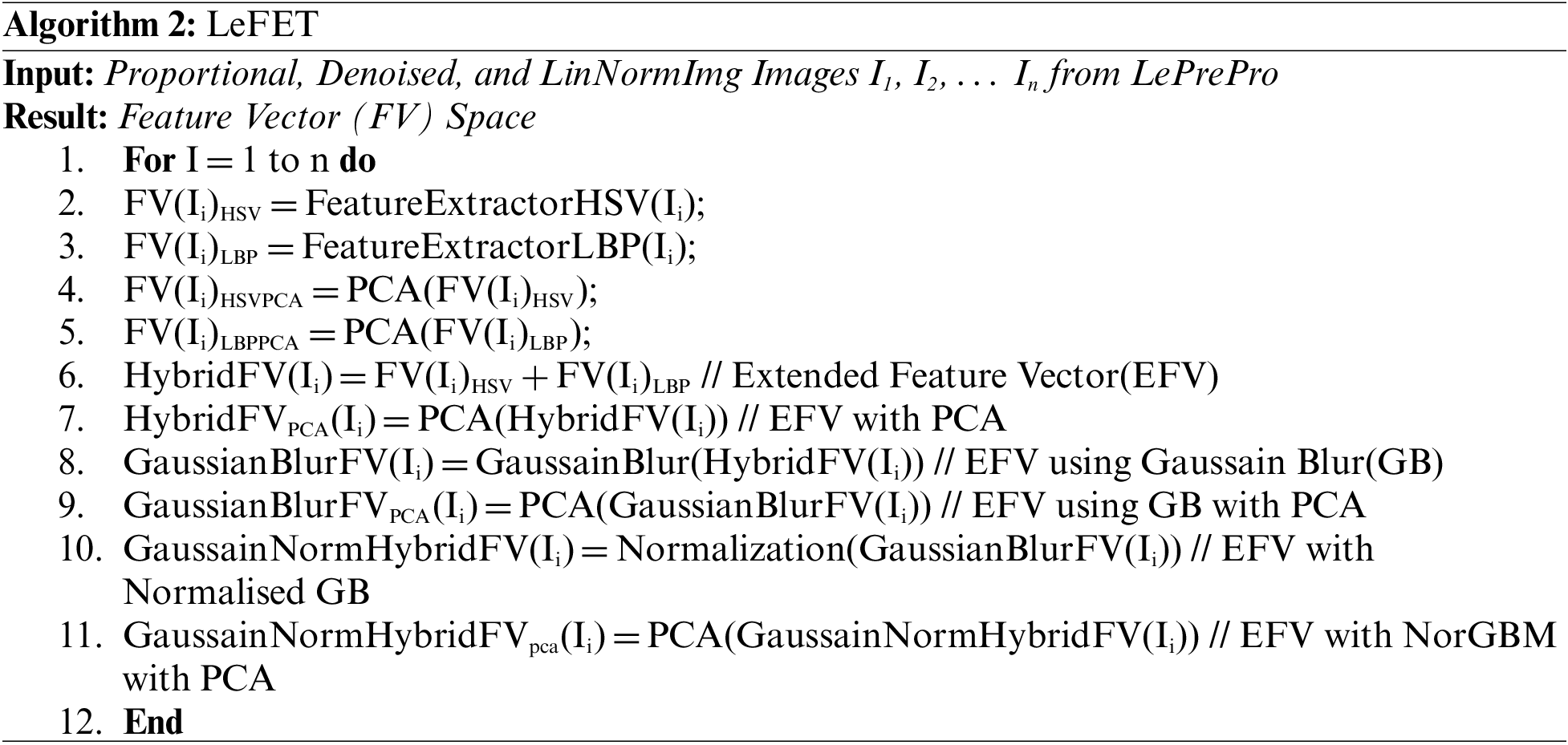

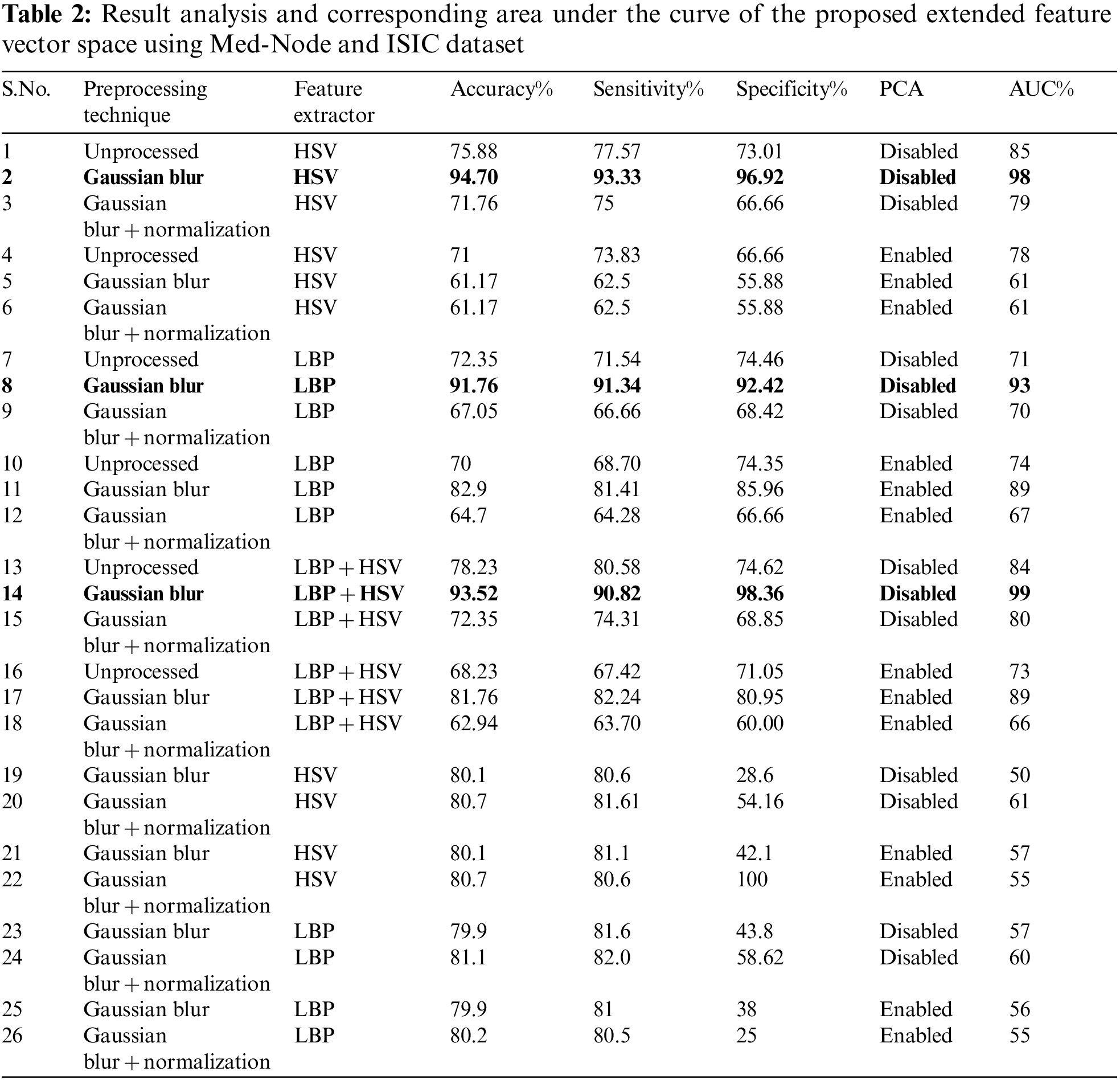

Melanoma is a treacherous form of skin cancer. This study offers a hybrid framework for the automatic classification of melanoma. An Automatic Melanoma Detection System (AMDS) identifies melanoma from the infected area of a skin image using image processing techniques. Many existing automatic melanoma detection systems are either commercial or leave room for improvement in accuracy. The research problem is to identify the best preprocessing technique, feature extractor, and classifier for melanoma detection using the publicly available Med-Node dataset. An AMDS goes through four stages: the preprocessing stage removes noise; the segmentation stage extracts the lesion from the infected skin image; the feature extraction stage determines features such as asymmetry, border, and color; and the classification stage classifies the lesion as benign or melanoma. The input image may contain impurities such as noise, uneven illumination, artifacts, and hairs. In the proposed methodology, an algorithm named LePrePro is proposed for the preprocessing stage to perform denoising and brightness-cum-contrast normalization, and another algorithm named LeFET is proposed for extending the feature vector space in the feature extraction stage using a hybrid approach. The study compares different classifiers, feature extraction methods, and data preprocessing steps of the AMDS.
In conclusion, experiments on the Med-Node and ISIC 2017 datasets showed that Gaussian blur was the best data preprocessing step, the extended feature vector combining Hue Saturation Value (HSV) and Local Binary Pattern (LBP) features was the best feature extraction method, and the ensemble bagged tree was the best classification technique. On the Med-Node dataset, the proposed automatic melanoma detection system achieved 99% Area Under the Receiver Operating Characteristic Curve (AUC), 93.52% accuracy, 90.82% sensitivity, and 98.36% specificity.

Melanoma is a treacherous form of skin cancer. In India, melanoma of the skin was estimated to account for 3916 new cases, as reported by the International Agency for Research on Cancer (IARC) [1]. Early diagnosis of melanoma lowers the death rate, so it is necessary to examine and monitor melanoma’s progress at its early stages. Dermatologists use different techniques to detect melanoma, including clinical tests and naked-eye examinations, to closely observe its progress. The most prominent factors in the analysis of melanoma are characteristics such as color, texture, and shape, as described in [2]; dermatologists use these characteristics to detect melanoma. A biopsy, which is invasive, is also used to confirm melanoma. With advances in technology, machine learning is playing a growing role in cancer detection. Computer-aided diagnosis systems such as Automatic Melanoma Detection Systems (AMDS) use image processing and pattern recognition to detect melanoma from a captured image of the lesion, and they play an important role in medical science. Such systems can serve as an alternative or a second opinion to the manual assessment by dermatologists [3,4]. They are also helpful in remote areas, where a patient can perform a skin self-examination. While processing an image captured for melanoma diagnosis, difficulties may arise from lighting conditions, noise, hair, and moisture on the skin. Preprocessing, segmentation, feature extraction, and classification are the four fundamental stages of a melanoma detection system.
Dermoscopic or macroscopic image capture introduces noise, which is removed during preprocessing through steps such as removing hair from the skin, sharpening the lesion, and resizing the image for later use. Segmentation separates the lesion region, which typically consists of pixels with similar characteristics, from the surrounding skin. The segmented region is passed to the feature extraction phase, which computes parameters such as boundary, symmetry, and color. The performance of the classification step depends directly on the extracted features. Classification distinguishes melanoma from benign lesions or nevi. This study addresses the problem of designing and implementing a hybrid system for automatic melanoma detection that effectively combines preprocessing techniques and feature extraction methods to improve the accuracy and robustness of melanoma diagnosis from lesion images. The system takes into account variations in image quality, lighting, and scale, and addresses the challenges of false positive and false negative diagnoses. The ultimate goal is to provide an efficient and reliable tool that can assist dermatologists in making prompt and accurate diagnoses and improve the prognosis for melanoma patients. The research problem is to build a more accurate and robust system that preprocesses a given lesion image and extracts features from it using a hybrid approach. It also aims to develop an efficient and effective algorithm that accurately extracts relevant features from images of skin lesions and correctly classifies them as benign or malignant (melanoma). The proposed system identifies the best classifier on the Med-Node dataset using HSV and LBP feature extractors in a hybrid approach, and it is evaluated using accuracy, sensitivity, specificity, and AUC.
The goal is to aid dermatologists in making more accurate and prompt diagnoses and ultimately to improve the prognosis for melanoma patients. The motivation for studying an automatic melanoma detection system with hybrid preprocessing and feature extraction is the significant public health issue posed by melanoma, the deadliest form of skin cancer. Early detection of melanoma is crucial for improving patient outcomes, but manual screening by dermatologists can be time-consuming and subjective, leading to diagnostic errors. The hybrid preprocessing and feature extraction technique is expected to enhance the quality of the input images by removing noise and highlighting the features relevant to melanoma detection, improving both the accuracy and the efficiency of diagnosis. An automatic system also allows for more consistent and objective diagnostic results, reducing the risk of misdiagnosis and potentially saving lives. The proposed technique, which categorizes a lesion as benign or melanoma, evaluates 3 preprocessing methods, 3 feature extraction methods, and 4 classifiers on the publicly accessible Med-Node dataset and summarizes the results through an in-depth evaluation. The key contributions of the present study are as follows:

• A novel system is provided for classifying an infected lesion as benign or melanoma.

• Two algorithms, namely LePrePro and LeFET, are proposed for the preprocessing and feature extraction stages.

• The proposed model determines the best classifier and feature extractor, together with the resulting AUC, for the publicly accessible Med-Node and ISIC datasets.

• The proposed method is applied to the various stages of the AMDS both individually and in a hybrid mode.

On the Med-Node and ISIC datasets, 99% AUC, 93.52% accuracy, 90.82% sensitivity, and 98.36% specificity are obtained by employing the proposed approach.

The remaining sections are organized as follows: Section 2 reviews the literature; Section 3 describes the materials and methods, including the dataset and methodology; Section 4 presents the discussion and analysis of the findings; and Section 5 gives the conclusions, including future work.

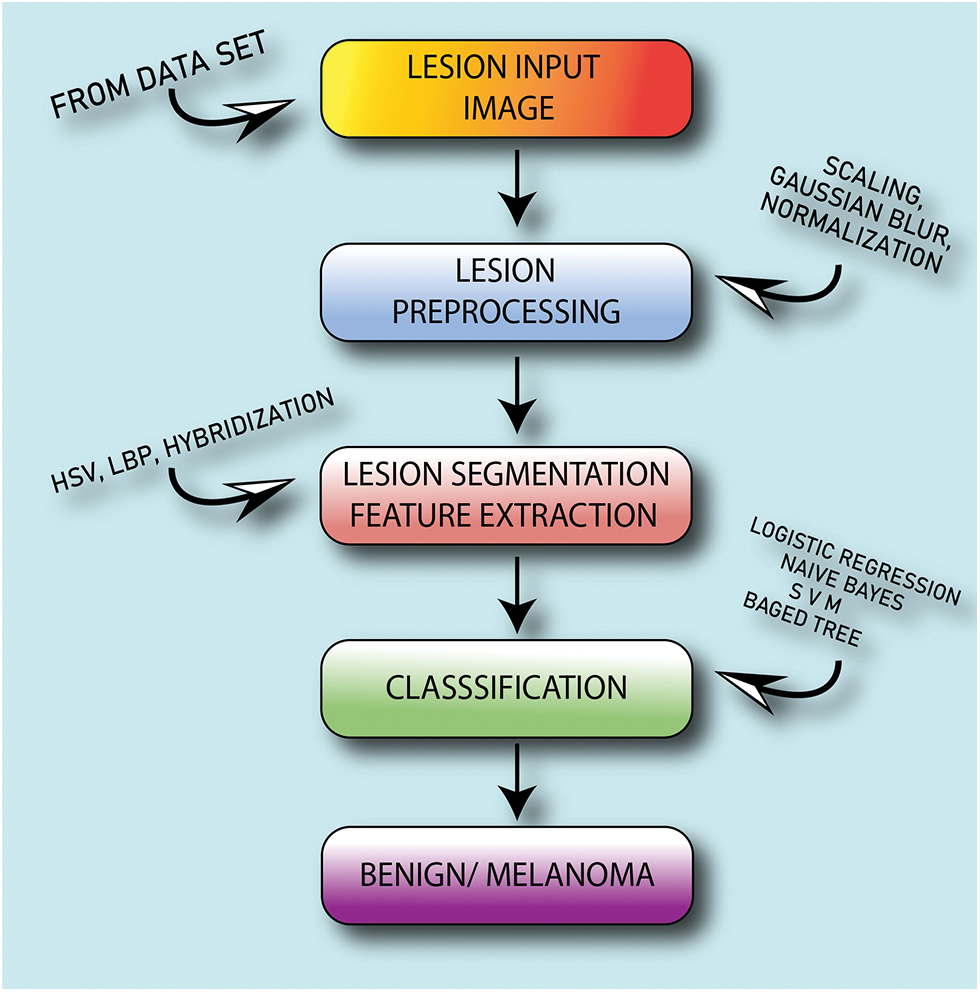

Numerous researchers in computer vision and image processing have reported a significant body of literature over roughly two decades, and many methods have been proposed to build an AMDS. An extended feature vector space based on the hybridization of features is a key step toward more accurate automatic melanoma detection. In [5], the authors proposed a symmetry and color-matching-based method to predict cancer. The method uses lesion edge detection and segmentation to calculate image symmetries and isolate benign lesions. The suspected images were kept in three classes, namely basal cell carcinoma (BCC), squamous cell carcinoma (SCC), and melanoma, and were categorized based on symmetrization and pigmented color-matching scores. In [6], the authors described a method for classifying pigmented skin lesions in dermoscopic images using texture, color, and shape features. They divided the images into sets of regions according to significant clinical properties. The extracted features were provided as input to an optimization framework and ranked according to the optimal feature subsets found. Shape features were used to extract the region of interest (ROI) from the infected area of the skin, and the border located in the lesion image was further used to extract the shape-related features of the lesion. In [7], the authors proposed two systems, one for the analysis of global lesion features and the other for the analysis of local lesion features. Their experiments showed that color features performed better than texture features for lesion classification. In [8], the authors performed image analysis for lesion segmentation and dermoscopic feature detection, and measured the performance against ground truth.
According to the authors in [9], the diversity of lesion images requires different acquisition and cleaning methods, which strongly emphasizes the need for preprocessing lesion images. The authors in [10] quantized the HSV color space in non-equal intervals to construct a one-dimensional color feature vector. In [11], the authors proposed the extraction of texture features using local binary patterns. In [12], the authors described a statistical method for reducing the variables of the data so that only essential features are considered. A modified support vector machine is proposed in [13] for better classification. The application of classification algorithms to medical image processing using logistic regression and random forest is described in [14,15]. In melanoma detection, the number of images in the melanoma class is generally small compared to the non-melanoma classes, so the dataset is imbalanced. A novel approach for dealing with imbalanced datasets is proposed in [16,17]. In [18], the authors applied a Support Vector Machine (SVM) model to an optimized set of Histogram of Oriented Gradients (HOG) based skin lesion descriptors, which formed the core of a successful Computer Aided Diagnosis (CAD) framework for melanoma skin cancer. A combination of features for lesion classification is considered in [19]. The authors in [20] proposed a cascaded and discriminative framework for the analysis of lesion images. In [21], the authors integrated deep feature information to generate the most discriminant feature vector while preserving the original feature space, and they also proposed a framework for most-discriminant feature selection and dimensionality reduction.
Similarly, multilevel feature reduction and selection are proposed in [22,23]. The authors in [24] proposed a model that improves classification accuracy by performing feature selection with the binary Harris hawks optimization algorithm. In [25], the authors proposed a blended model design to lower the variance of output predictions. Med-Node, a computer-assisted diagnosis tool with a publicly available dataset for experiments on melanoma classification, is proposed in [26]. The role of texture and color-based features in detecting melanoma is described in [27]. Neural network, logistic regression, and naïve Bayes classifiers for lesion classification are described in [28–30]. The authors in [31] focused on the use of a bagged ensemble tree classifier to improve the accuracy of melanoma classification. According to [32,33], the four stages of an automatic melanoma detection system are usually the preprocessing stage for noise removal, the segmentation stage for extracting the region of interest, the feature extraction stage for extracting the relevant features, and the classification stage for decision making. Using image processing and pattern recognition, the AMDS identifies melanoma skin cancer from an image of the affected area. Melanoma is one variety of skin cancer. Of all the malignancies of the skin, it has the highest fatality rate, and it is among the most prevalent malignancies in both young people and adults. Melanocytes are a type of cell found in the innermost layer of the epidermis, just above the dermis, and they produce the skin’s pigment or color. In melanoma, healthy melanocytes change and grow uncontrollably, resulting in the formation of a malignant tumor. The risk of melanoma is increased by the ozone layer’s reduced ability to shield against ultraviolet (UV) rays.
Additionally, increased exposure to the sun, solariums, and tanning beds can cause skin infections. Benign skin cancer lesions may be present in the epidermal layer. Similarly, a malignant lesion in the epidermis is not life-threatening until it penetrates and reaches the dermis layer. While processing an image captured for melanoma diagnosis, difficulties may arise from lighting conditions, noise, hair, and moisture on the skin. Preprocessing, segmentation, feature extraction, and classification are the four fundamental stages of the melanoma detection system. Dermoscopic or macroscopic image capture introduces noise around the lesion, which is removed during preprocessing through steps such as removing the hair surrounding the lesion, sharpening the lesion, and scaling the image for later use. Segmentation separates the lesion region, which typically consists of pixels with similar characteristics, from the surrounding skin. The segmented region is passed to the feature extraction phase, which computes parameters such as boundary, symmetry, and color. The performance of the classification step depends directly on the extracted characteristics of the lesion. The classification step differentiates melanoma from benign lesions or nevi. Fig. 1 depicts the proposed hybrid AMDS procedure in detail. Many methods are available in the literature for classifying lesions as benign or melanoma, so a comparative study of these methods is helpful for identifying the best method for melanoma classification.

Figure 1: Automatic melanoma detection system based on hybrid approach

In this study, a novel method for classifying lesions from the infected skin area is proposed, based on a hybrid, extended-feature approach within the automatic melanoma detection system shown in Fig. 1.

Noise reduction is performed in the first stage. The second stage extracts the characteristics of the lesion, including texture and color features; the third stage performs classification; and the fourth stage analyzes the results. The Med-Node dataset, consisting of images in the naevus and melanoma categories, is used for experimentation. Two novel algorithms, LePrePro and LeFET, are proposed: LePrePro applies to the lesion preprocessing stage and LeFET applies to the feature extraction stage.

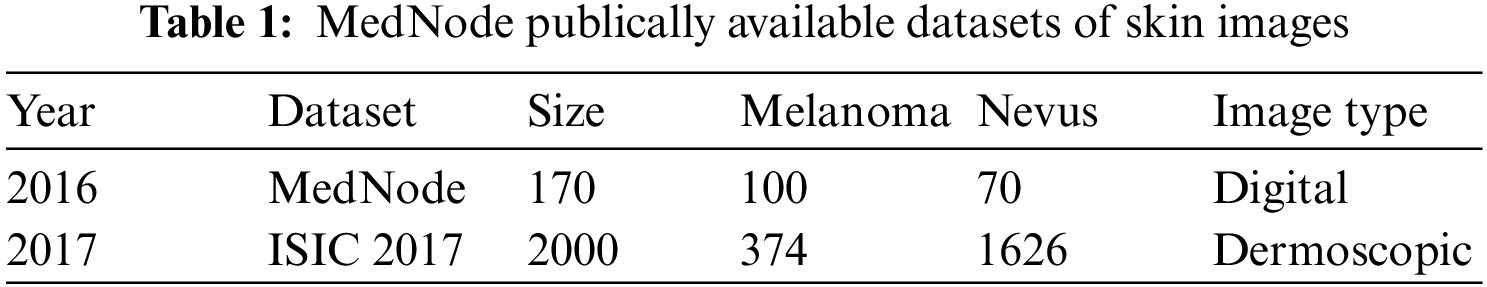

The quality of the image influences the accuracy of the system. A lesion image can be captured either dermoscopically, using a specialized dermatoscope, or macroscopically. The publicly available Med-Node and ISIC 2017 datasets are listed in Table 1 along with their image type and size.

The publicly available Med-Node and ISIC 2017 datasets are selected for the experiments. Before selecting the datasets, the following points were considered:

■ Each image must come from a different patient, except where a disease manifests itself in a clearly different way on different areas of the body, in which case more than one image from the same patient can be included in the dataset.

■ Each image needs to be clear and exposed adequately so that it may be properly annotated to be part of the dataset.

■ Each image must accurately depict the group to which it belongs. The dataset excludes uncommon clinical variants, skin conditions that have already received treatment, secondary infections, and so forth.

After consideration of the above factors, the Med-Node dataset is selected for experimentation, as it satisfies all the requirements of the proposed work and contains lesion images in the melanoma and benign categories. Med-Node is a publicly available skin cancer dataset of macroscopic images, comprising 100 nevus images and 70 melanoma images from the digital image collection of the Department of Dermatology at the University Medical Center Groningen (UMCG). A cross-validation approach is used to evaluate the system given the limited dataset size. Similarly, from the 2000 images of the ISIC dataset, we considered 755 images with ground truth, of which 608 are from the benign category and the remaining 147 are from the melanoma category.

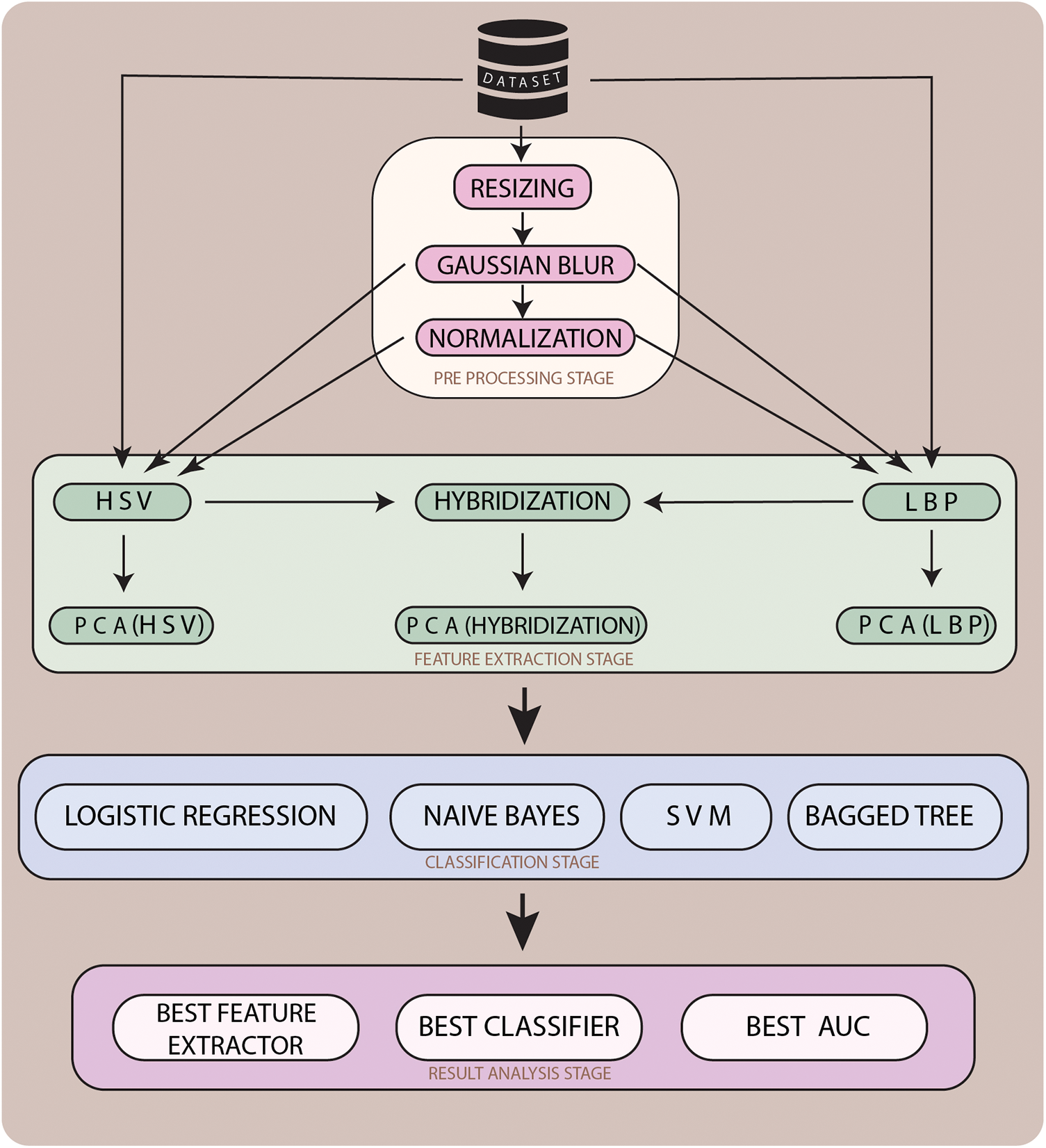

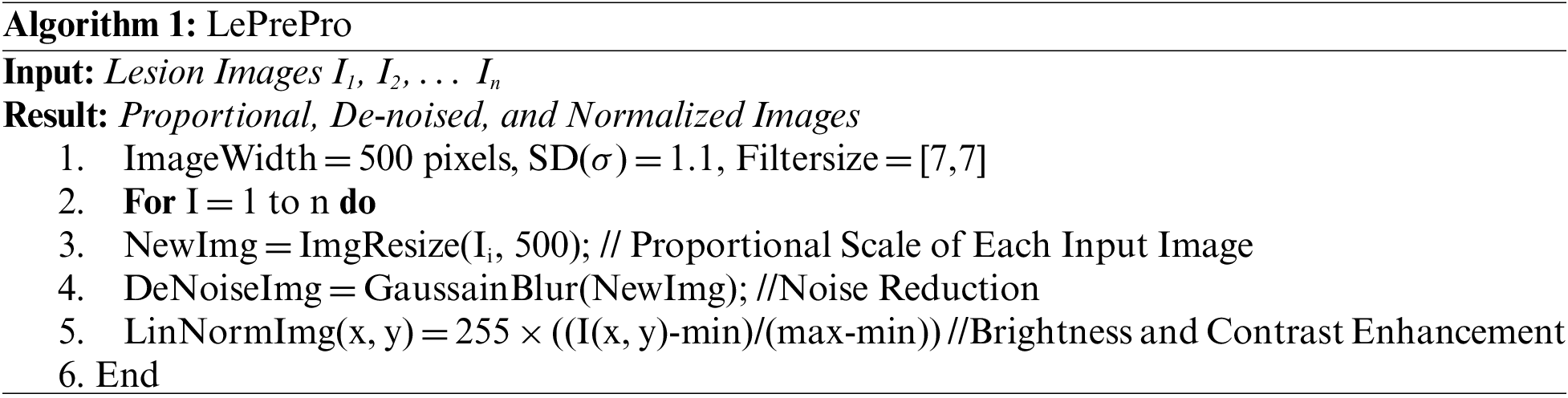

The proposed methodology uses a hybrid approach to classify lesion images as melanoma or nevus, and it evaluates the preprocessing, feature extraction, and classification procedures for lesion classification. It goes through four stages, as shown in Fig. 2. In Stage 1, Lesion Preprocessing, unnecessary artifacts are removed using the proposed LePrePro algorithm. The lesion images from the Med-Node dataset are rescaled proportionally to a width of 500 pixels and then preprocessed using Gaussian blur, with a standard deviation of 1.1 and a filter size of [7,7], and linear normalization. After preprocessing, the lesion images are kept in three categories: unprocessed images, Gaussian Blur (GB) preprocessed images, and images processed by a combination of Gaussian Blur and Normalization (GBNR). In Stage 2, Feature Extraction, the LBP and HSV feature extraction techniques are applied to the outputs of LePrePro to compute the lesion feature vector, and the LBP and HSV features are combined to extend the feature vector. In Stage 3, Lesion Classification, the lesion image is classified as nevus or melanoma using support vector machine (SVM), logistic regression (LR), naïve Bayes (NB), and bagged tree (BT) classifiers. Stage 4 analyzes the results so that the outcomes for preprocessing, feature extraction, and classification can be compared. Each stage of the proposed methodology is discussed in the following subsections.

Figure 2: Proposed hybrid approach for automatic melanoma detection system

3.2.1 Stage 1—Lesion Preprocessing

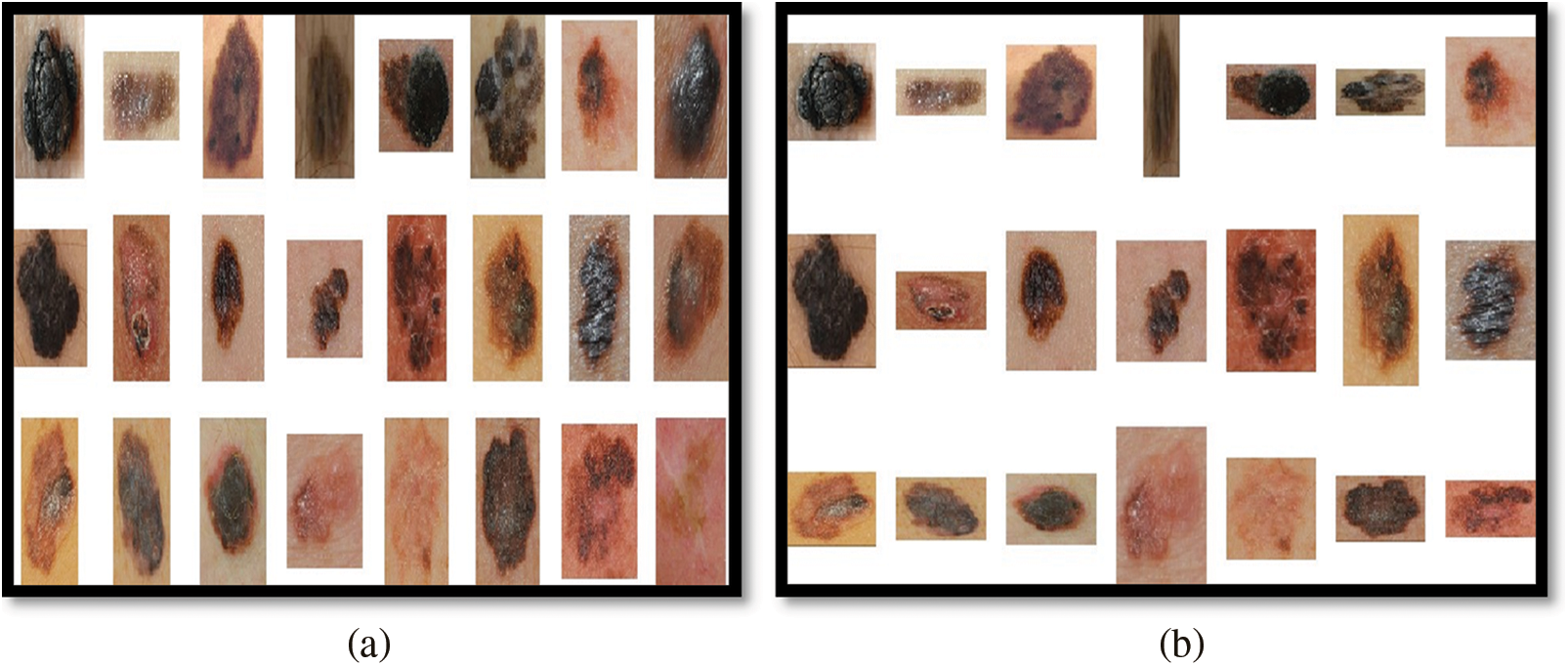

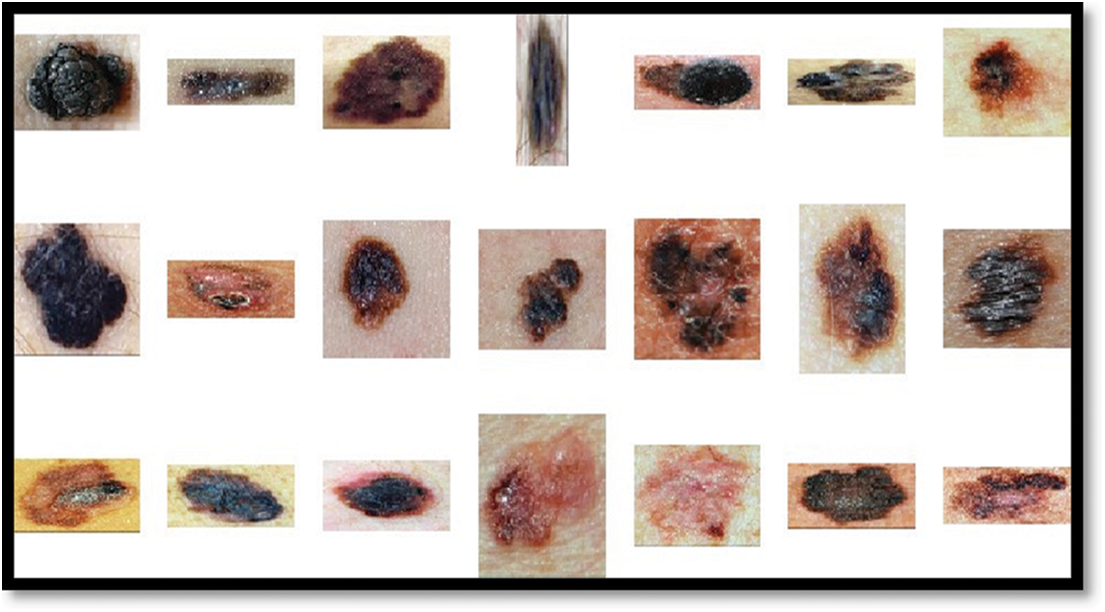

The input images that serve as the basis for the entire experiment come in a variety of resolutions and sizes, as shown in Fig. 3a. Consequently, the images must be scaled to a proportional size (500 pixels in width) and de-noised, as shown in Fig. 3b. After scaling, three different preprocessing techniques, namely Gaussian Blur, Normalization, and the combination of Gaussian Blur and Normalization, are applied to the scaled images, as shown in Fig. 4. The Gaussian Blur operation reduces noise and smooths out local variations in intensity while preserving the overall structure of the lesion image. It also reduces the size of the image data, which helps improve computational efficiency. Similarly, normalization makes the intensity values of the lesion images more consistent and reduces the impact of illumination variations. The hybrid preprocessing approach combines both operations to obtain a robust output from the preprocessing stage using the LePrePro algorithm.

Figure 3: Unprocessed and resized cum denoised melanoma images from med-node

Figure 4: Resized and denoised and normalized melanoma images to balance illumination and contrast from med-node outcomes of LePrePro

In the proposed system, de-noised and linear normalized images as well as a combination of both techniques, are considered in the preprocessing stage to evaluate the classification results of the proposed system.
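The two preprocessing operations described above can be illustrated with a minimal Python sketch. It is not the authors' LePrePro algorithm (which was implemented in MATLAB and whose listing is not reproduced here); it only shows a 7 × 7 Gaussian kernel with a standard deviation of 1.1 followed by a linear (min-max) stretch. The grayscale toy image, the clamped border handling, the output range of 0 to 255, and all function names are assumptions made for illustration.

```python
import math

def gaussian_kernel(size=7, sigma=1.1):
    """Return a size x size Gaussian kernel whose weights sum to 1."""
    half = size // 2
    raw = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            for x in range(-half, half + 1)]
           for y in range(-half, half + 1)]
    total = sum(sum(row) for row in raw)
    return [[v / total for v in row] for row in raw]

def gaussian_blur(image, kernel):
    """Blur a grayscale image (list of rows); borders are handled by
    clamping coordinates to the image edge (an assumed choice)."""
    h, w = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for dy in range(-half, half + 1):
                rr = min(max(r + dy, 0), h - 1)
                for dx in range(-half, half + 1):
                    cc = min(max(c + dx, 0), w - 1)
                    acc += image[rr][cc] * kernel[dy + half][dx + half]
            out[r][c] = acc
    return out

def linear_normalize(image, new_min=0.0, new_max=255.0):
    """Min-max stretch of intensities to [new_min, new_max]."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    if hi == lo:  # constant image: nothing to stretch
        return [[new_min for _ in row] for row in image]
    scale = (new_max - new_min) / (hi - lo)
    return [[new_min + (v - lo) * scale for v in row] for row in image]

# Hypothetical 12 x 12 grayscale patch standing in for a resized lesion image.
toy = [[(r * 9 + c * 5) % 200 + 20 for c in range(12)] for r in range(12)]
kernel = gaussian_kernel()          # 7 x 7, sigma = 1.1
gb = gaussian_blur(toy, kernel)     # "GB" variant
gbnr = linear_normalize(gb)         # "GBNR" variant
```

Keeping the blurred (`gb`) and blurred-plus-normalized (`gbnr`) outputs separate mirrors the three image categories compared in the study: unprocessed, GB, and GBNR.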

3.2.2 Stage 2—Feature Extraction

In machine learning applications, the feature extraction stage extracts relevant features for further decision-making. It is the second stage in an automatic melanoma detection system, following the preprocessing phase, and takes the preprocessing output as its input. Some of the extracted features are irrelevant or noisy, so when the number of features is large, dimensionality reduction is carried out: unnecessary, noisy, and redundant features are removed using principal component analysis (PCA). Removing unnecessary features reduces the complexity of the classification step. Each feature identified from the input image is represented as a vector.

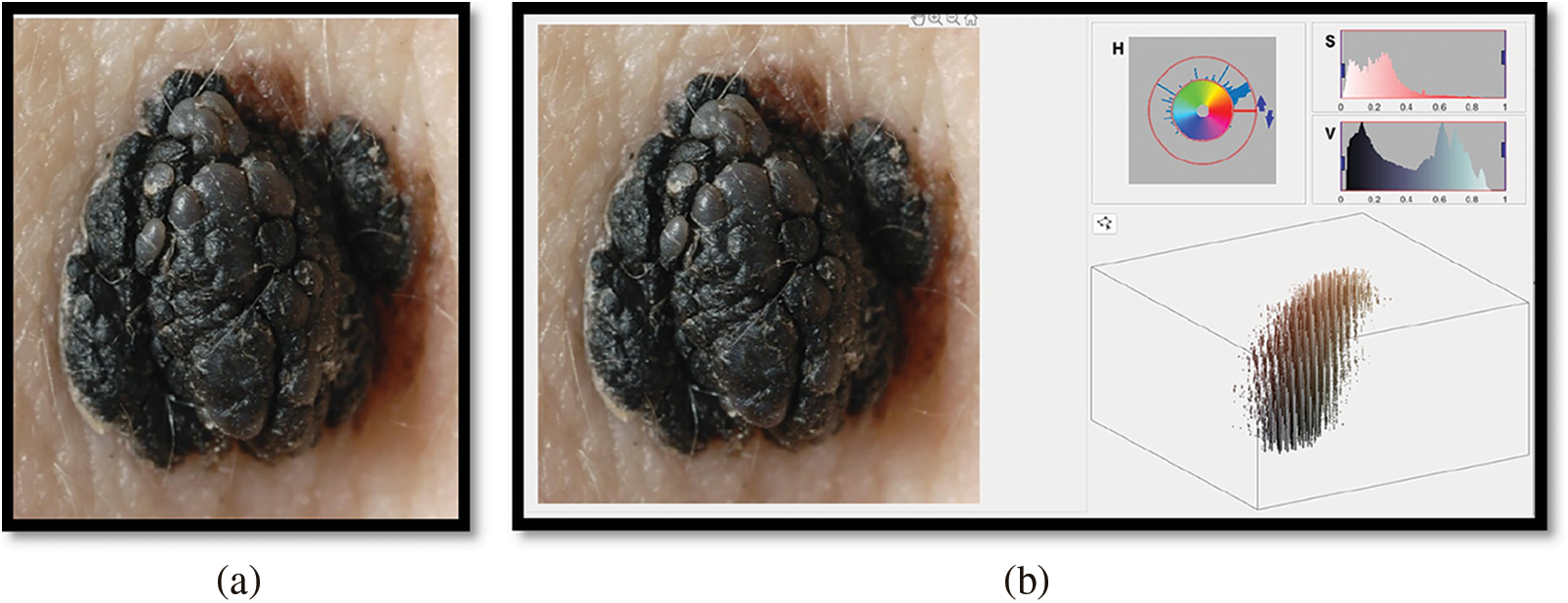

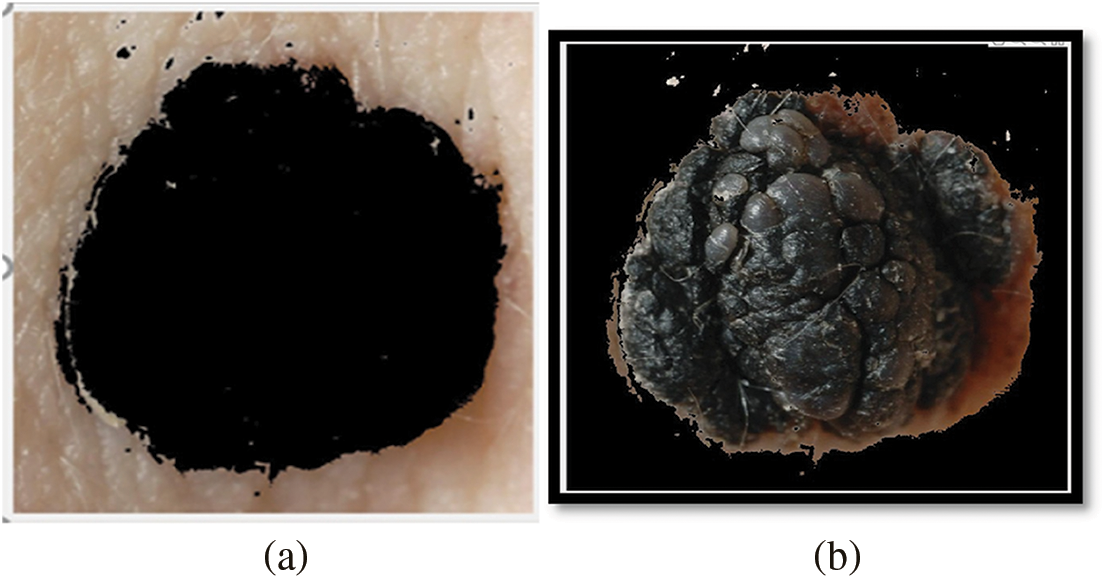

Hue-Saturation-Value (HSV) features represent the color characteristics of the lesion input image. The lesion’s Red Green Blue (RGB) input image is transformed to the HSV color space. This color transformation separates the color information from the intensity information in the lesion images of the Med-Node dataset. The feature vector obtained from the HSV color space also benefits from improved contrast and visibility of the lesion. In addition, the distance between the entropies of the lesion classes, computed in HSV, can be used for melanoma prediction: the greater the distance between the entropies, the greater the chance that the lesion is melanoma. Similarly, the Euclidean color distance in the HSV color space is helpful for melanoma detection. The RGB representation of the lesion is shown in Fig. 5a and the HSV representation in Fig. 5b. A mask can further be generated using the HSV color space, as shown in Fig. 6a, and used for boundary detection through the inversion technique, as shown in Fig. 6b. The detected boundaries are in turn helpful for classifying the lesion as benign or melanoma.

Figure 5: RGB and HSV representation melanoma images from med-node

Figure 6: Image mask generation and inverted binary mask generation using hsv color space of melanoma images from med-node
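As a rough illustration of how an HSV color descriptor can be computed, the sketch below converts RGB pixels to HSV with Python's standard `colorsys` module and builds a normalized color histogram. The bin counts (8 hue, 3 saturation, 3 value), the equal-width binning, and the function name `hsv_histogram` are assumptions for this example; the paper does not state the exact HSV feature layout used by LeFET.

```python
import colorsys

def hsv_histogram(pixels, h_bins=8, s_bins=3, v_bins=3):
    """Build a normalized HSV histogram from (R, G, B) pixels in 0..255.

    Returns a list of length h_bins * s_bins * v_bins that sums to 1.
    Equal-width bins are an assumption made for this sketch.
    """
    hist = [0.0] * (h_bins * s_bins * v_bins)
    count = 0
    for r, g, b in pixels:
        # colorsys works on floats in [0, 1] and returns h, s, v in [0, 1].
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        hi = min(int(h * h_bins), h_bins - 1)
        si = min(int(s * s_bins), s_bins - 1)
        vi = min(int(v * v_bins), v_bins - 1)
        hist[(hi * s_bins + si) * v_bins + vi] += 1.0
        count += 1
    return [x / count for x in hist] if count else hist

# Hypothetical pixels: three dark-brown lesion pixels and one skin-tone pixel.
sample_pixels = [(90, 50, 30), (95, 55, 35), (80, 45, 25), (230, 190, 170)]
color_features = hsv_histogram(sample_pixels)
```

Because hue is separated from value in this representation, a change in overall brightness mainly shifts pixels along the value bins rather than across hue bins.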

Local Binary Pattern (LBP) is a useful texture descriptor and is used to extract the texture features of the lesion image. In LBP, each pixel of the segmented image is compared with its neighbors, using the value of the current pixel as the threshold. The lesion image is divided into 8 × 8 cells, and each pixel in a cell is compared with its neighbors. A histogram is calculated for each cell, and the histograms of all cells are then combined and normalized. The main advantage of LBP in the proposed study is its robustness to monotonic gray-scale changes, which makes it well suited to varying illumination conditions. Another crucial characteristic of LBP as a feature extractor is its computational simplicity, which enables lesion image analysis in demanding real-time conditions. Due to these considerations, the proposed study uses only HSV and LBP as feature descriptors.
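The thresholding step described above can be sketched in a few lines of Python. This is a basic 8-neighbor LBP over a grayscale image with a single whole-image histogram; the per-cell histograms mentioned in the text are omitted for brevity, and the neighbor ordering, the `>=` comparison, and the function names are assumptions rather than details taken from the paper.

```python
def lbp_codes(image):
    """Compute the 8-neighbor LBP code of every interior pixel.

    image is a list of rows of gray levels. A neighbor contributes a 1 bit
    when it is greater than or equal to the center pixel.
    """
    # Clockwise neighbor offsets starting at the top-left (assumed order).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    h, w = len(image), len(image[0])
    codes = []
    for r in range(1, h - 1):
        row_codes = []
        for c in range(1, w - 1):
            center = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                if image[r + dr][c + dc] >= center:
                    code |= 1 << bit
            row_codes.append(code)
        codes.append(row_codes)
    return codes

def lbp_histogram(image):
    """Normalized 256-bin histogram of LBP codes for the whole image."""
    hist = [0.0] * 256
    n = 0
    for row_codes in lbp_codes(image):
        for code in row_codes:
            hist[code] += 1.0
            n += 1
    return [x / n for x in hist] if n else hist

# Hypothetical 6 x 6 texture patch and a brightened copy of it.
toy = [[(3 * r * r + 5 * c + r * c) % 17 for c in range(6)] for r in range(6)]
brighter = [[2 * v + 10 for v in row] for row in toy]
texture_features = lbp_histogram(toy)
```

Since each code depends only on the ordering of a pixel and its neighbors, `lbp_codes(toy)` and `lbp_codes(brighter)` are identical, which is the monotonic gray-scale robustness noted in the text.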

We extract features using HSV and LBP from three sets of lesion images: unprocessed images taken directly from the Med-Node and ISIC 2017 datasets, images preprocessed using Gaussian Blur, and images preprocessed by the combination of Gaussian Blur and normalization. We then hybridize the feature vector by combining the HSV and LBP features and repeat the same process. As the feature space is large, dimensionality reduction is also required, so Principal Component Analysis (PCA) is applied to the features extracted from HSV and LBP. In the proposed study, three feature extraction methods are evaluated to measure the effectiveness of the proposed system: HSV, LBP, and a combination of HSV and LBP. Further, the feature vector space is extended by considering the following configurations: HSV with PCA; LBP with PCA; the extended feature vector HSV + LBP with PCA; HSV + LBP with Gaussian Blur, without normalization and without PCA; HSV + LBP with Gaussian Blur, without normalization and with PCA; HSV + LBP with Gaussian Blur and normalization, without PCA; and HSV + LBP with Gaussian Blur and normalization, with PCA.

The feature extraction process in the proposed model provides novelty in terms of evaluating the effectiveness of these feature extraction methods separately as well as in combination with each other.
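The hybridization and dimensionality-reduction steps can be sketched as follows. The example concatenates a color descriptor and a texture descriptor into one extended vector and then estimates the first principal component with power iteration. This is only an illustration: the unit-sum scaling before concatenation, the use of a single component, the power-iteration method, and every function name are assumptions, since the paper does not specify how LeFET or its PCA step is configured.

```python
import math

def unit_sum(vec):
    """Scale a descriptor so that its entries sum to 1 (assumed scaling)."""
    total = float(sum(vec))
    return [v / total for v in vec] if total else [0.0 for _ in vec]

def extended_feature_vector(hsv_features, lbp_features):
    """Concatenate color and texture descriptors into one feature vector."""
    return unit_sum(hsv_features) + unit_sum(lbp_features)

def first_principal_component(rows, iterations=100):
    """Estimate the leading PCA direction of `rows` by power iteration.

    Returns (means, direction), where direction has unit length.
    """
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Asymmetric start vector to avoid trivially orthogonal initializations.
    direction = [1.0 / (j + 1) for j in range(d)]
    for _ in range(iterations):
        # Multiply by X^T X without forming the covariance matrix.
        scores = [sum(x[j] * direction[j] for j in range(d)) for x in centered]
        updated = [sum(centered[i][j] * scores[i] for i in range(n))
                   for j in range(d)]
        norm = math.sqrt(sum(u * u for u in updated))
        if norm == 0.0:
            break
        direction = [u / norm for u in updated]
    return means, direction

def project(row, means, direction):
    """Project one feature vector onto the estimated component."""
    return sum((row[j] - means[j]) * direction[j] for j in range(len(row)))

# Hypothetical descriptors for three lesions (values are made up).
lesions = [
    extended_feature_vector([4, 1, 1], [2, 6]),
    extended_feature_vector([1, 4, 1], [5, 3]),
    extended_feature_vector([1, 1, 4], [4, 4]),
]
means, direction = first_principal_component(lesions)
reduced = [project(v, means, direction) for v in lesions]
```

A full PCA would keep several components, for example by repeating the iteration on data with earlier components removed; one component is shown here only to keep the sketch short.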

To classify the lesion as melanoma or benign, the system evaluates different classifiers, including support vector machine, logistic regression, naïve Bayes, and bagged tree. The proposed system compares the results of these classifiers on the basis of accuracy, sensitivity, and specificity. The inputs to the classifiers are taken from the extended feature vector space introduced in the feature extraction stage of the proposed study.

A Support Vector Machine (SVM) defines a separating hyperplane for discriminative classification. The labeled training data are separated by an optimal hyperplane, which is then used to categorize new, unseen data. SVM is one of the most popular supervised learning algorithms and can be used for both classification and regression problems, although it is largely employed for classification. The objective of the SVM algorithm is to establish the best decision boundary that divides the n-dimensional feature space into classes, allowing new data points to be classified quickly. This optimal decision boundary is called a hyperplane. SVM selects the extreme points, or vectors, that help define the hyperplane. These extreme cases are called support vectors, which is why the approach is referred to as a “support vector machine”.

Logistic Regression is a common technique for solving binary classification problems. This kind of statistical model, also referred to as a logit model, is frequently used in predictive analytics and classification. Logistic regression estimates the likelihood of an event; since the result is a probability, the dependent variable ranges from 0 to 1.

Naive Bayes classifiers are a group of classification algorithms built on Bayes’ Theorem. They form a family of algorithms rather than a single method, all based on the assumption that every pair of features being classified is independent of each other. The number of parameters required for naive Bayes classifiers is linear in the number of variables (features/predictors) in a learning problem, making them extremely scalable. Maximum-likelihood training can be performed simply by evaluating a closed-form expression, which takes linear time, instead of using an expensive iterative approximation as is the case for many other types of classifiers.

Bagged Tree is a popular classifier. The key concept behind bagged trees is to rely on numerous decision trees rather than just one, which makes it possible to take advantage of the wisdom of multiple models. The high variance of a single decision tree is generally considered its major drawback: even a small change in the data can have a significant impact on the model and on its future predictions. Bagged trees address this by reducing variance while keeping bias roughly unchanged. The proposed system faces some challenges, including the need for more processing and computational resources to achieve high performance, and the risk of overfitting to the training data. Moreover, the proposed system is not a replacement for the dermatologist; instead, it can be used as a second opinion. Machine learning models generally face a trade-off between the quantity and quality of the dataset, so selecting a larger dataset for training and testing would require more processing power and time with the proposed methodology.
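The bagging idea behind the bagged tree classifier can be illustrated with a small Python sketch: each base model is trained on a bootstrap resample of the training data, and the ensemble predicts by majority vote. To keep the example short, the base learners are depth-1 decision trees (stumps) rather than full trees, and the toy one-feature data, the number of models, and all function names are assumptions; the study itself used MATLAB's ensemble bagged tree classifier.

```python
import random

def train_stump(X, y):
    """Fit a depth-1 tree: pick the feature, threshold, and polarity with
    the fewest training errors. Labels are 0 (benign) or 1 (melanoma)."""
    best = None
    for j in range(len(X[0])):
        for t in sorted(set(row[j] for row in X)):
            for polarity in (0, 1):
                # Predict `polarity` when x[j] > t, else the other class.
                errors = sum(
                    1 for row, label in zip(X, y)
                    if (polarity if row[j] > t else 1 - polarity) != label
                )
                if best is None or errors < best[0]:
                    best = (errors, j, t, polarity)
    _, j, t, polarity = best
    return j, t, polarity

def stump_predict(stump, row):
    j, t, polarity = stump
    return polarity if row[j] > t else 1 - polarity

def train_bagged(X, y, n_models=25, seed=0):
    """Train n_models stumps, each on a bootstrap resample of (X, y)."""
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        models.append(train_stump([X[i] for i in idx], [y[i] for i in idx]))
    return models

def bagged_predict(models, row):
    """Majority vote over the ensemble."""
    votes = sum(stump_predict(m, row) for m in models)
    return 1 if 2 * votes > len(models) else 0

# Hypothetical one-feature training set (for example, one PCA score per lesion).
X_train = [[0.1], [0.2], [0.3], [0.4], [0.6], [0.7], [0.8], [0.9]]
y_train = [0, 0, 0, 0, 1, 1, 1, 1]
ensemble = train_bagged(X_train, y_train)
```

Averaging the votes of models trained on different resamples is what dampens the sensitivity of a single tree to small changes in the training data.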

The experiments are conducted using MATLAB on an Intel(R) Core(TM) i7-10870H CPU running at 2.20 GHz with a GeForce RTX 3060 GPU and 16.0 GB of RAM. The outcomes of the proposed system are shown in Table 2, with S.No. 1 to 18 for the Med-Node dataset and S.No. 19 to 26 for the ISIC 2017 dataset. In the proposed system, we employ the metrics given in Eqs. (1) to (3) to compare the efficiency of each combination (preprocessing, feature extraction, and classification):

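Accuracy is determined by the ability with which melanoma and benign instances can be distinguished, defined as:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Sensitivity is determined by the system’s capacity to accurately identify cases of melanoma, defined as:

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{2}$$

Specificity is determined by the system’s capacity to accurately identify benign cases, defined as:

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{3}$$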

In Eqs. (1) to (3), True Positive (TP) is the number of melanoma cases correctly identified as melanoma; False Positive (FP) is the number of benign cases incorrectly identified as melanoma; True Negative (TN) is the number of benign cases correctly identified as benign; and False Negative (FN) is the number of melanoma cases incorrectly identified as benign.
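A short Python sketch of how these counts translate into the three metrics may help. It uses the standard definitions (accuracy as the share of correct predictions, sensitivity as TP over all melanoma cases, specificity as TN over all benign cases) with melanoma as the positive class; the labels and predictions are made up for illustration and the function names are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, TN, FN with `positive` standing for melanoma."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1   # benign predicted as melanoma
        elif truth == positive:
            fn += 1   # melanoma predicted as benign
        else:
            tn += 1
    return tp, fp, tn, fn

def _ratio(numerator, denominator):
    """Safe division that returns NaN when a class is absent."""
    return numerator / denominator if denominator else float("nan")

def classification_metrics(tp, fp, tn, fn):
    return {
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),
    }

# Made-up ground truth and predictions (1 = melanoma, 0 = benign).
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
counts = confusion_counts(y_true, y_pred)
scores = classification_metrics(*counts)
```

For this toy example the counts are TP = 3, FP = 1, TN = 3, and FN = 1, so all three metrics equal 0.75.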

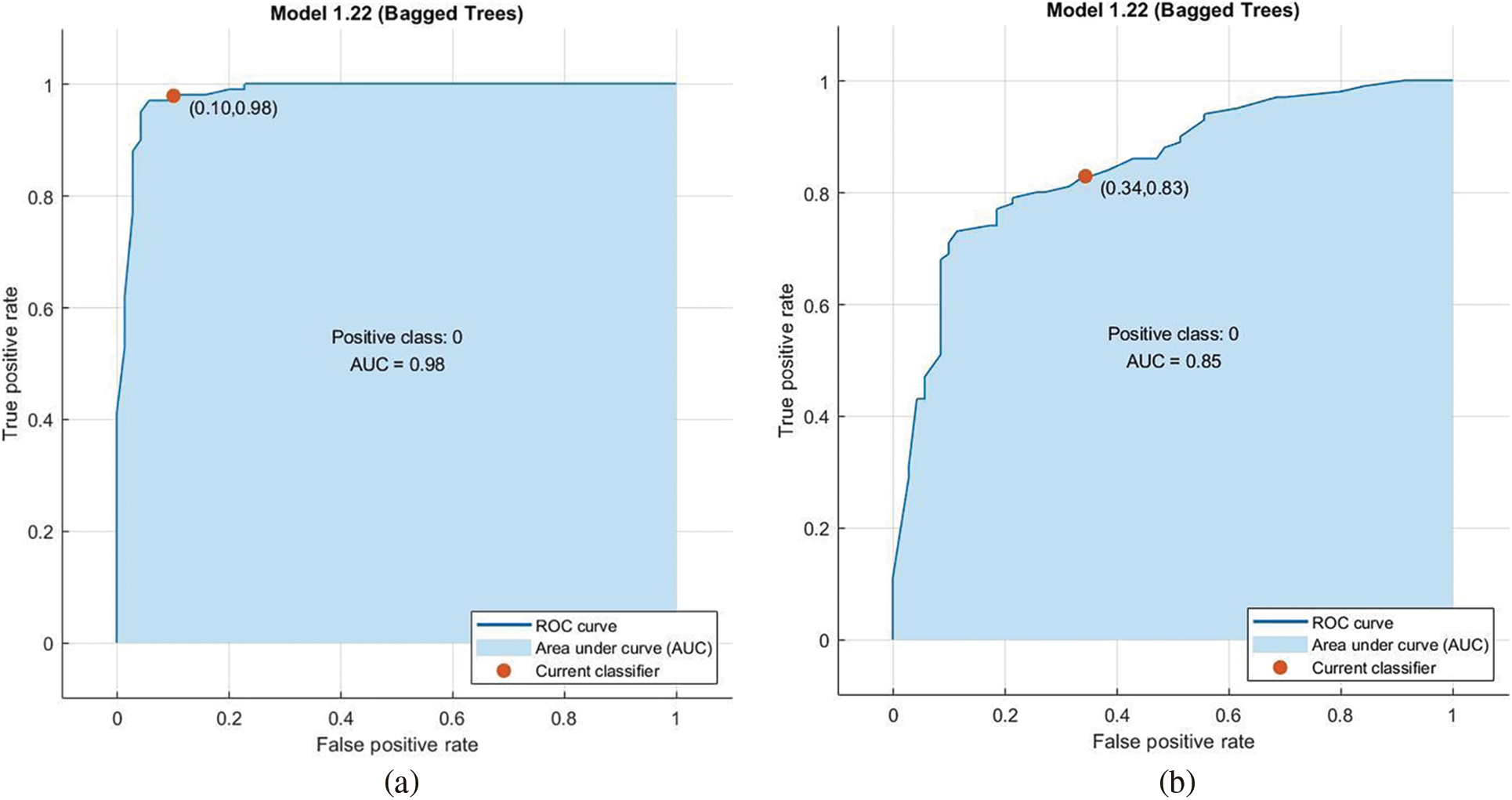

Area Under the Curve (AUC) is a higher-level metric based on the true positive rate (TPR, the same as sensitivity) and the false positive rate (FPR = FP/(FP + TN)). The AUC measures how well a classification system can distinguish between the positive class and the negative class. When considering specificity and sensitivity separately, it can be difficult to determine whether a classification system is overfit to positive samples (high sensitivity) or overfit to negative samples (low sensitivity). As a result, several classification systems report AUC as the standard metric for assessing their efficacy on both the positive and negative classes. As the classification threshold varies, different combinations of TPR and FPR trace out the Receiver Operating Characteristic (ROC) curve, and the AUC is the area under this curve. The higher the AUC value, the closer the classification approach is to a perfect prediction system.
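The AUC can be computed without drawing the ROC curve explicitly, because the area under the curve equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one (ties count as one half). The Python sketch below uses this pairwise formulation; the scores are made up, and the function name `auc_score` is hypothetical.

```python
def auc_score(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    labels: 1 for melanoma (positive), 0 for benign (negative).
    scores: classifier confidence that the sample is melanoma.
    """
    positives = [s for l, s in zip(labels, scores) if l == 1]
    negatives = [s for l, s in zip(labels, scores) if l == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # tie counts as half
    return wins / (len(positives) * len(negatives))

# Made-up classifier scores for four lesions.
example_auc = auc_score([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

In this toy case three of the four positive-negative pairs are ranked correctly, so the AUC is 0.75; a value of 1.0 would mean every melanoma case is scored above every benign case.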

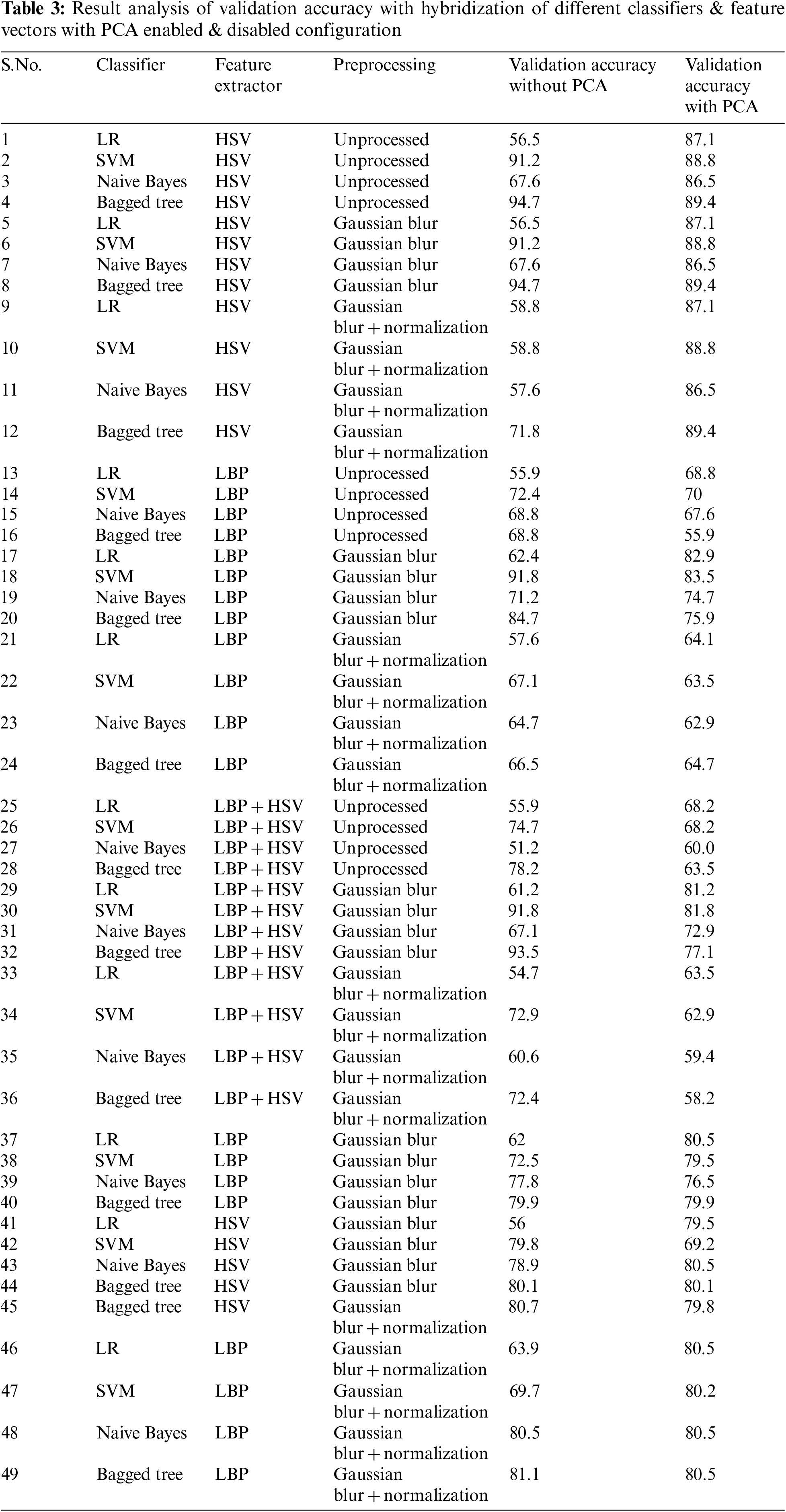

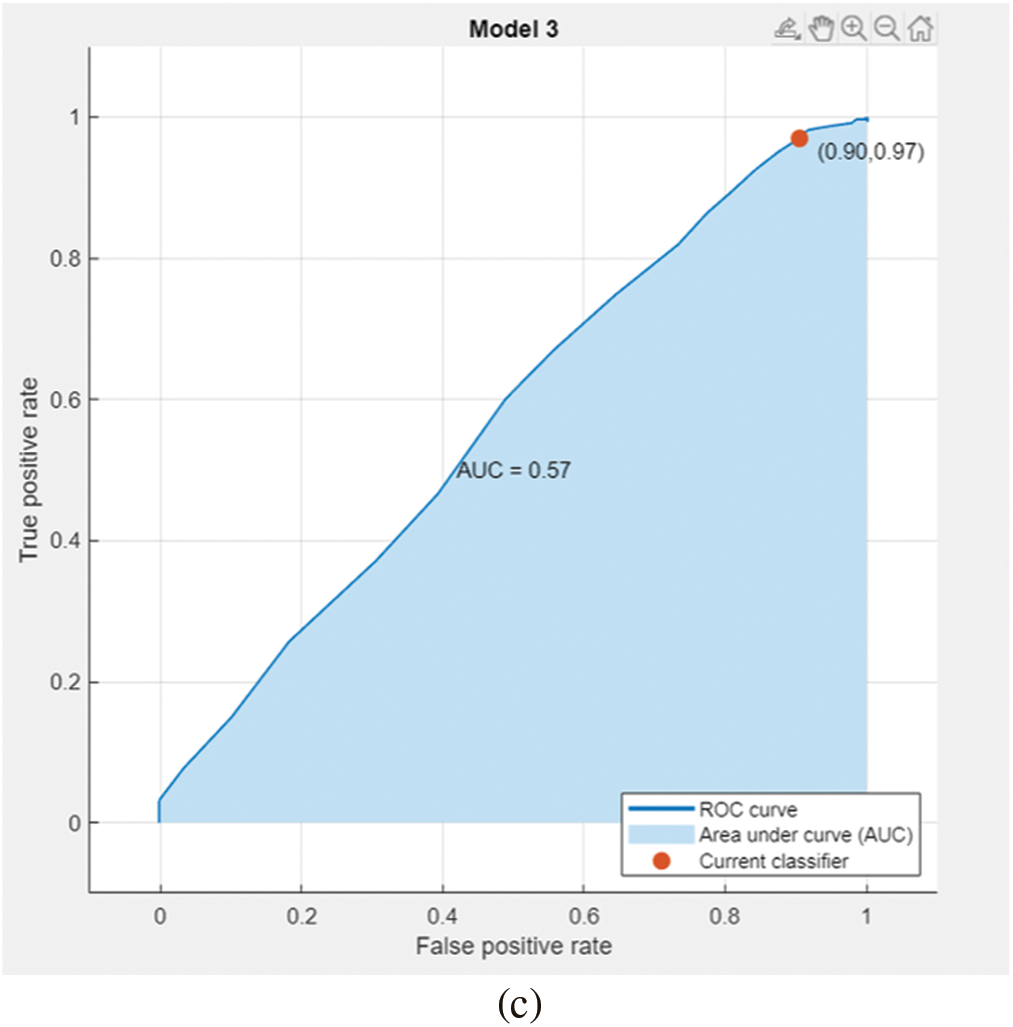

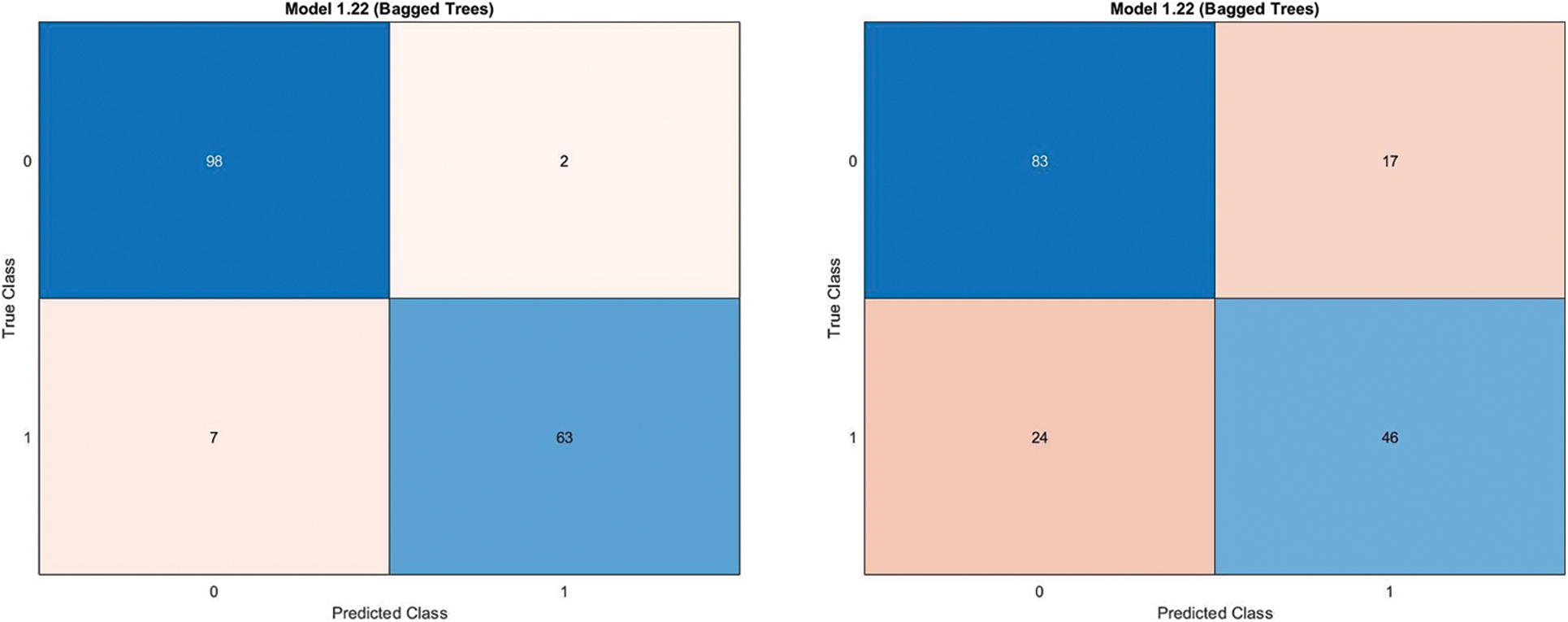

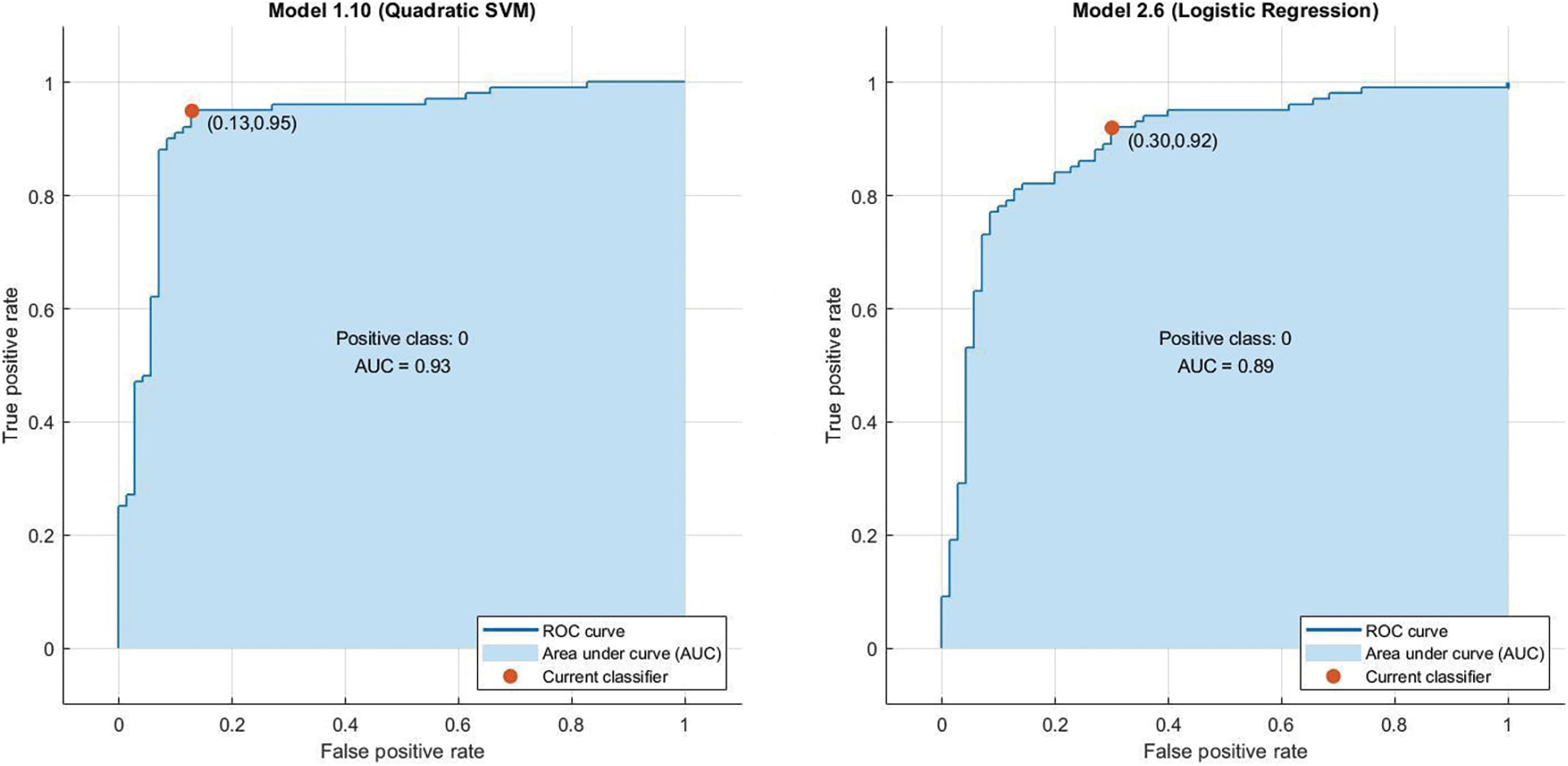

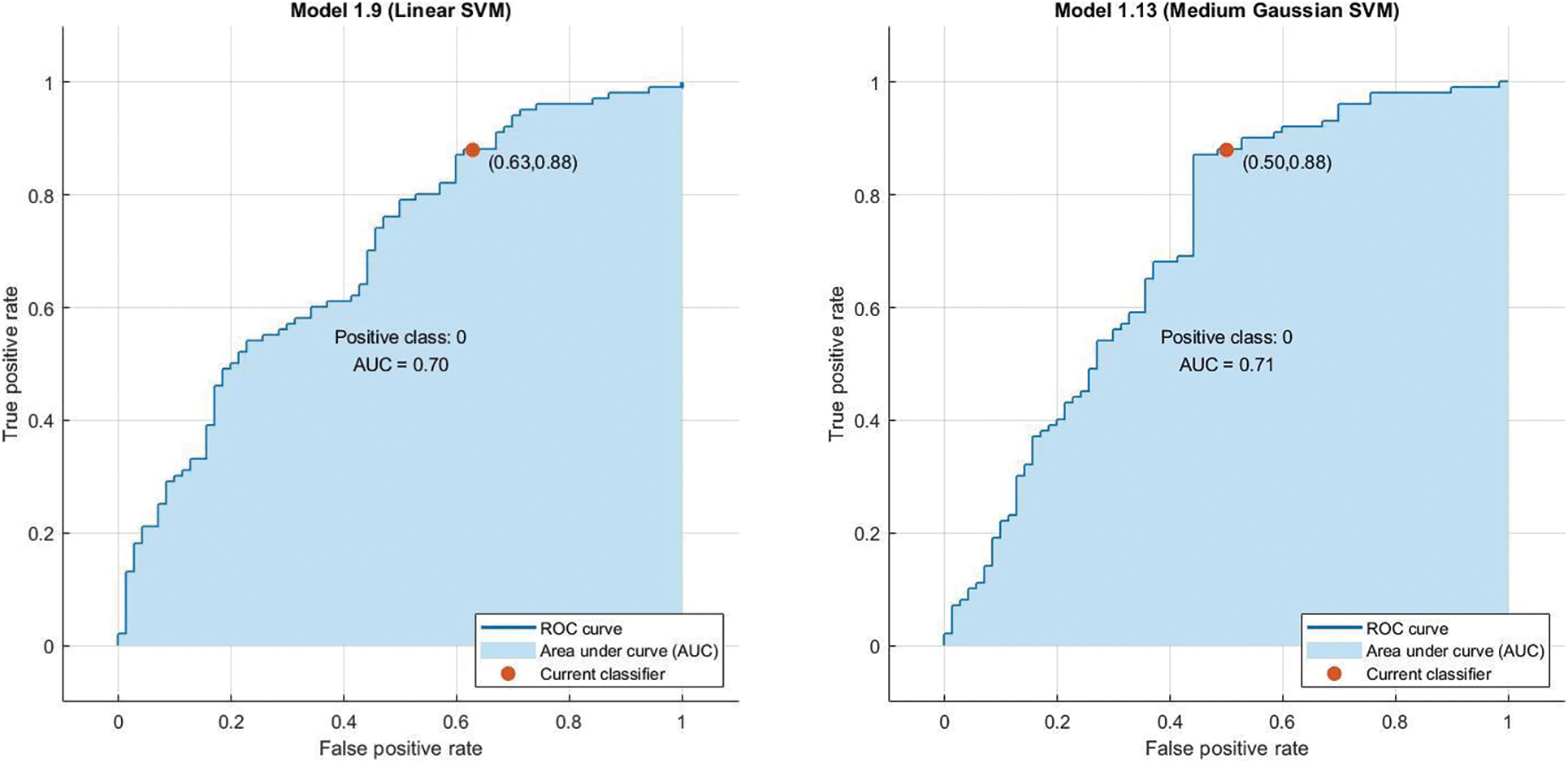

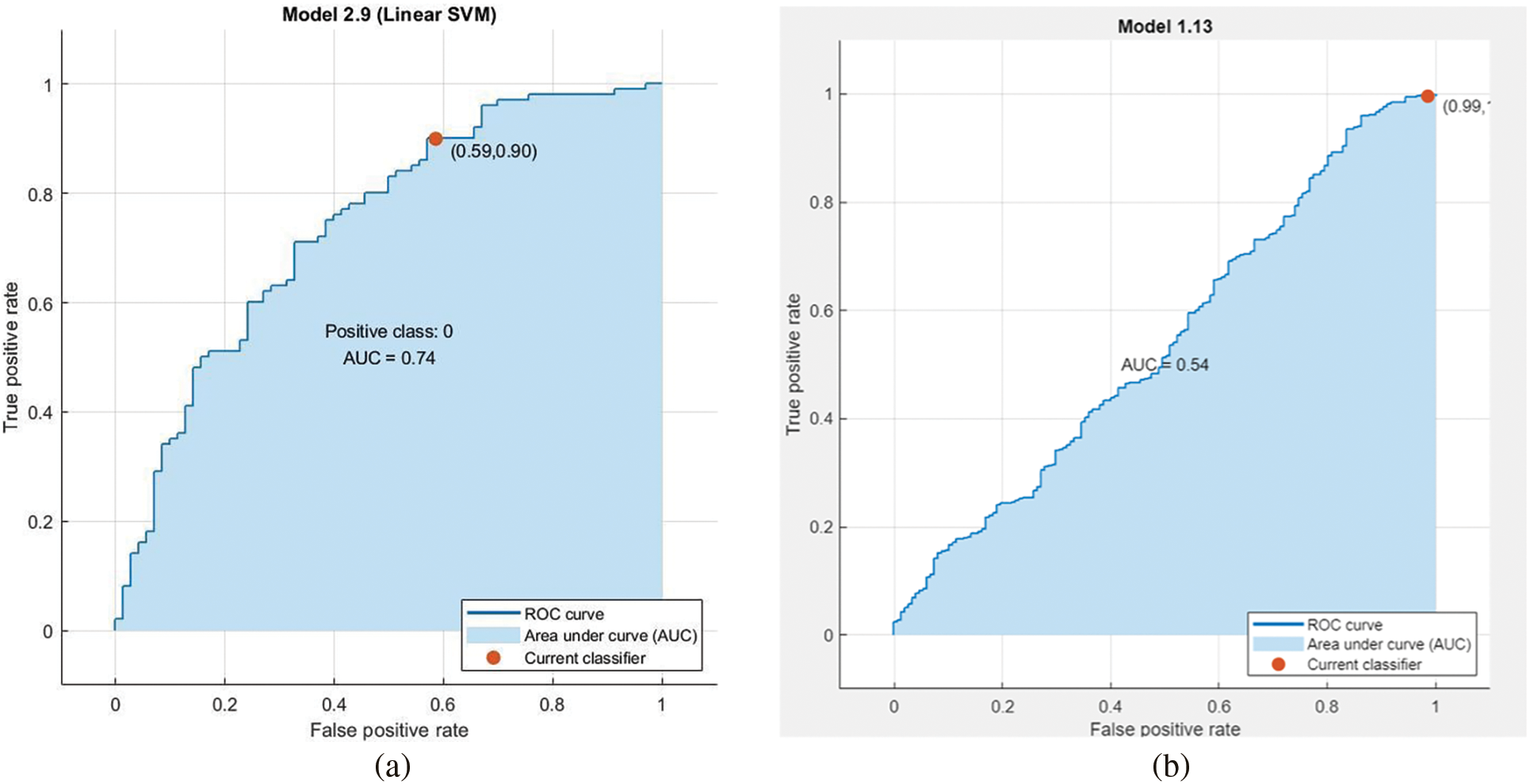

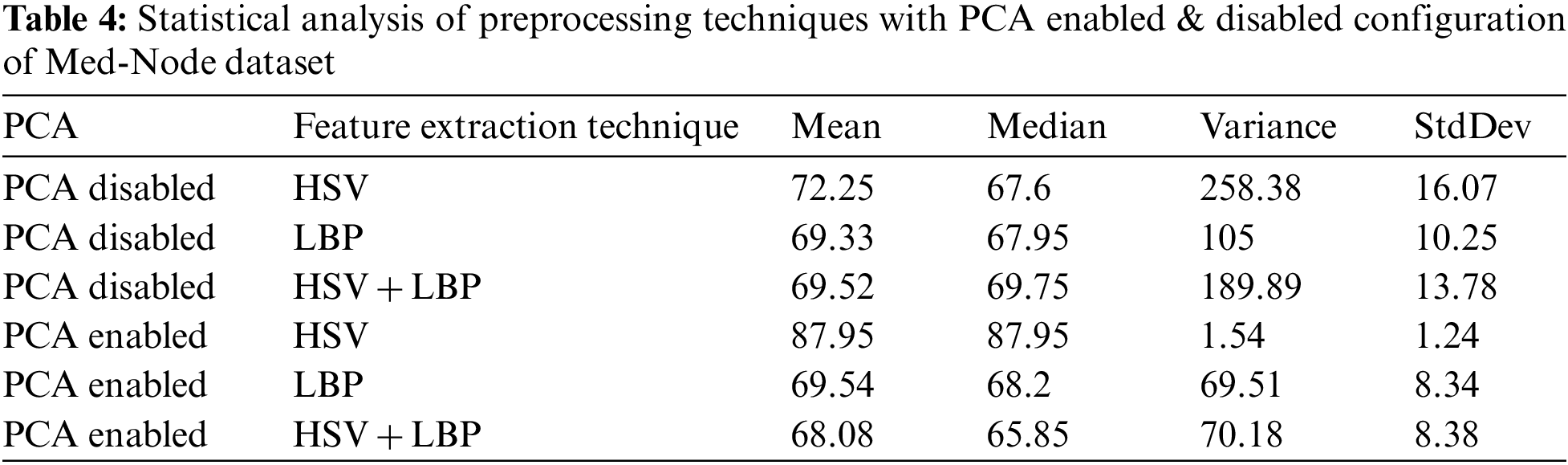

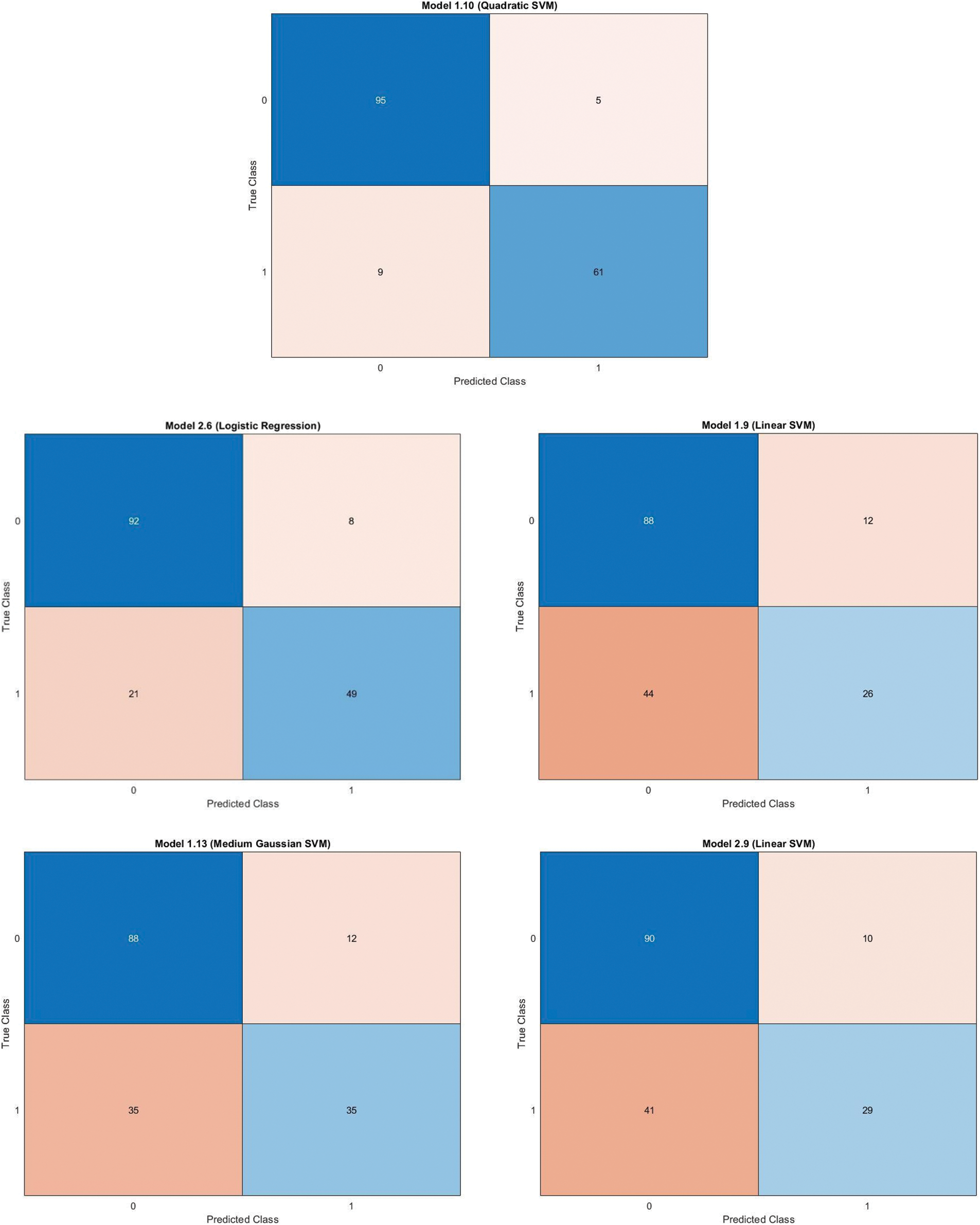

In the preprocessing stage, the proposed algorithm produces images processed with a combination of Gaussian blur and normalisation, and these images are passed to the HSV and LBP feature extractors. To extend the feature vectors, the LeFET algorithm is proposed, which makes use of HSV, LBP, HSV with PCA, LBP with PCA, HSV + LBP, and HSV + LBP with PCA. The extended feature vector space obtained from the LeFET algorithm is passed to the classifiers, including Logistic Regression (LR), Support Vector Machine (SVM), Naive Bayes Classifier, and Ensemble Bagged Tree Classifier. The results obtained from the different classifiers are given in Table 3, from S.No. 1 to 36 for the Med-Node dataset and from S.No. 37 to 49 for ISIC 2017. The proposed methodology uses 3-fold cross-validation, in which the dataset is divided into three equal-sized folds. In each of the three iterations, one fold (roughly 33.33% of the data) is held out for validation (or testing) and the remaining two folds (roughly 66.67%) are used for training, so that each fold is used for validation exactly once and for training twice. The results from the three iterations are then combined to give an overall estimate of the model’s performance. The AUC for the bagged tree classifier for Gaussian blur and unprocessed images with the HSV feature vector on the Med-Node dataset is shown in Figs. 7a and 7b. The AUC for the bagged tree classifier for Gaussian blur images with the HSV feature vector on the ISIC 2017 dataset is shown in Fig. 7c.
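The three-fold scheme described above can be sketched as a small index generator. This is an illustrative Python sketch, not the MATLAB procedure used in the study: the function name, the fixed shuffle seed, and the dataset size of 12 are assumptions, and the split is not stratified by class because the text does not say whether stratification was applied.

```python
import random


def k_fold_indices(n_samples, k=3, seed=0):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Fold sizes differ by at most one sample when n_samples is not divisible by k.
    folds = [indices[i::k] for i in range(k)]
    for held_out in range(k):
        validation = folds[held_out]
        train = [idx for j, fold in enumerate(folds) if j != held_out for idx in fold]
        yield train, validation


# Hypothetical dataset size, chosen only for illustration.
for fold_number, (train, validation) in enumerate(k_fold_indices(12, k=3), start=1):
    print(f"fold {fold_number}: {len(train)} training, {len(validation)} validation")
```

Each sample index appears in exactly one validation fold and in the training set of the other two iterations, which matches the description of every fold being validated once and trained on twice.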
The confusion matrix for the bagged tree classifier for Gaussian blur and unprocessed images with the HSV feature vector on the Med-Node dataset is shown in Fig. 8. The AUC on the Med-Node dataset for the quadratic SVM and LR classifiers for Gaussian blur with the LBP feature vector, with PCA enabled and disabled respectively, is shown in Fig. 9. The AUC on the Med-Node dataset for the linear SVM and Medium Gaussian SVM (MGSVM) classifiers, for Gaussian blur normalized and unprocessed images respectively with the LBP feature vector and PCA disabled, is shown in Fig. 10. The AUC for the linear SVM on unprocessed images with the LBP feature vector and PCA enabled on the Med-Node dataset is shown in Fig. 11a, and the AUC for the linear SVM on images processed with Gaussian blur and normalization with the LBP feature vector and PCA disabled on the ISIC dataset is shown in Fig. 11b. The best results are obtained using the Med-Node dataset. Table 4 provides the descriptive statistical measures for the feature extraction techniques used in the proposed methodology on the Med-Node dataset.
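To make the idea of a hybrid HSV + LBP feature vector concrete, the following Python sketch concatenates a per-channel HSV histogram with a histogram of basic 3x3 LBP codes. It is not the LeFET algorithm itself, whose details are given elsewhere in the paper: the bin count, the plain 8-neighbour LBP variant, the greyscale conversion, and all function names are assumptions, and the optional PCA step is omitted.

```python
import colorsys


def hsv_histogram(image, bins=4):
    """Normalised histograms of H, S and V, concatenated (3 * bins values)."""
    hist = [0.0] * (3 * bins)
    count = 0
    for row in image:
        for r, g, b in row:
            hsv = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            for channel, value in enumerate(hsv):
                # Clamp so that a value of exactly 1.0 falls in the last bin.
                index = min(int(value * bins), bins - 1)
                hist[channel * bins + index] += 1
            count += 1
    return [h / count for h in hist]


def lbp_histogram(image):
    """Normalised 256-bin histogram of basic 3x3 LBP codes on the grey image."""
    grey = [[(r + g + b) / 3.0 for r, g, b in row] for row in image]
    # Eight neighbours, visited clockwise from the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    hist = [0.0] * 256
    count = 0
    for y in range(1, len(grey) - 1):
        for x in range(1, len(grey[0]) - 1):
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                # Set the bit when the neighbour is at least as bright as the centre.
                if grey[y + dy][x + dx] >= grey[y][x]:
                    code |= 1 << bit
            hist[code] += 1
            count += 1
    return [h / count for h in hist] if count else hist


def extended_features(image):
    """Concatenate colour (HSV) and texture (LBP) descriptors into one vector."""
    return hsv_histogram(image) + lbp_histogram(image)


# Hypothetical 4x4 single-colour RGB image, used only to show the vector length.
image = [[(200, 100, 50)] * 4 for _ in range(4)]
print(len(extended_features(image)))  # 12 HSV bins + 256 LBP bins = 268
```

Concatenation keeps the colour and texture descriptors side by side, so a downstream classifier or a PCA step can weigh both kinds of evidence.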

Figure 7: AUC for bagged tree classifier for Gaussian blur and unprocessed images with HSV feature vector on Med-Node dataset in (a), (b), and ISIC 2017 dataset in (c)

Figure 8: Confusion matrix for bagged tree classifier for Gaussian blur and unprocessed images with HSV feature vector on Med-Node dataset

Figure 9: AUC for quadratic SVM and LR classifier for Gaussian blur with LBP feature vector with PCA enabled and disabled respectively on Med-Node dataset

Figure 10: AUC for linear SVM and Medium Gaussian Support Vector Machine (MGSVM) classifier for Gaussian blur normalized and unprocessed with LBP feature vector with PCA disabled respectively on Med-Node dataset

Figure 11: AUC for linear SVM unprocessed with LBP feature vector with PCA enabled on Med-Node dataset in (a) and AUC for linear SVM with normalized Gaussian blur LBP feature vector without PCA enabled on ISIC dataset in (b)

Fig. 12 shows the confusion matrices on the Med-Node dataset for, respectively: the quadratic SVM and LR classifiers for Gaussian blur with the LBP feature vector with PCA enabled and disabled; the linear SVM and Medium Gaussian Support Vector Machine (MGSVM) classifiers for Gaussian blur normalized and unprocessed images with the LBP feature vector with PCA disabled; and the linear SVM for unprocessed images with the LBP feature vector with PCA enabled.

Figure 12: Confusion matrix for quadratic SVM and LR classifier for Gaussian blur with LBP feature vector with PCA enabled and disabled, linear SVM and MGSVM classifier for Gaussian blur normalized and unprocessed with LBP feature vector with PCA disabled, and linear SVM unprocessed with LBP feature vector with PCA enabled, respectively, on Med-Node dataset

To evaluate the proposed study, Table 5 compares our hybrid methodology with existing state-of-the-art techniques for texture- and color-based feature extraction and melanoma classification on Med-Node and other publicly available datasets.

The proposed model performs an in-depth evaluation of lesion classification using a hybrid approach based on machine learning. The proposed system consists of techniques for lesion preprocessing, feature extraction, classification, and results evaluation for the detection of melanoma. Experimentation is done with three preprocessing methods, three feature extraction techniques, and four classifiers. The study makes use of the publicly available Med-Node and ISIC 2017 data sets. The experiments revealed that the best results are obtained with the Gaussian blur preprocessing technique, the hybrid HSV and LBP feature extraction technique, and the ensemble bagged tree classifier on the Med-Node data set. By including more contemporary methods for the various stages of automatic melanoma detection systems, such as Convolutional Neural Networks (CNN) and Deep Neural Networks (DNN), the suggested system can be employed for research purposes in the future. The future scope of the proposed study covers the hybridization of existing feature extractors such as HSV, LBP, Scale-Invariant Feature Transform (SIFT), and others, along with the adoption of both CNN and DNN approaches, so that the behavior of the proposed system can be further observed under various conditions in terms of time and space complexity.

Acknowledgement: This paper and the research behind it would not have been possible without the exceptional support of my supervisor, Dr. Anuj Kumar. His enthusiasm, knowledge and exacting attention to detail have been an inspiration and kept my work on track from my first idea of this manuscript to the final draft of this paper.

Funding Statement: The authors received no specific funding for this study.

Author Contributions: The authors confirm contribution to the paper as follows: Introduction: Dr. Anuj Kumar. Literature review: Dr. Anuj Kumar. Data acquisition & preprocessing: Dr. Anuj Kumar. Segmentation & feature extraction: Mr. Shakti Kumar. Material and methods: Mr. Shakti Kumar. Analysis and interpretation of results: Mr. Shakti Kumar. Draft manuscript preparation: Dr. Anuj Kumar, Mr. Shakti Kumar. All authors reviewed the results and approved the final version of the manuscript.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. “Cancer today”, “Global Cancer Observatory,” 2020. [online] Available: https://gco.iarc.fr/today/online-analysis-reemap?v=2020&mode=cancer&mode_population=continents&population=900&populations=900&key=asr&sex=0&cancer=39&type=0&statistic=5&prevalence=0&population_group=0&ages_group%5B%5D=0&ages_group%5B%5D=17&group_cancer=1&include_nmsc=0&include_nmsc_other=1&reloaded

2. H. Bhatt, V. Shah, K. Shah, R. Shah and M. Shah, “State-of-the-art machine learning techniques for melanoma skin cancer detection and classification: A comprehensive review,” Intelligent Medicine, vol. 2, 2022.

3. S. Parshionikar, R. Koshy, A. Sheikh and G. Phansalkar, “Skin cancer detection and severity prediction using computer vision and deep learning,” in Proc. of the Second Int. Conf. on Sustainable Technologies for Computational Intelligence, New York, NY, USA, Springer, pp. 295–304, 2022.

4. G. Campanella, C. Navarrete-Dechent, K. Liopyris, J. Monnier, S. Aleissa et al., “Deep learning for basal cell carcinoma detection for reflectance confocal microscopy,” Journal of Investigative Dermatology, vol. 142, no. 1, pp. 97–103, 2021.

5. M. H. Hamd, K. A. Essa and A. Mustansirya, “Skin cancer prognosis based pigment processing,” International Journal of Image Processing, vol. 7, no. 3, pp. 227, 2013.

6. M. E. Celebi, H. A. Kingravi, B. Uddin, H. Iyatomi, Y. A. Aslandogan et al., “A methodological approach to the classification of dermoscopy images,” Computerized Medical Imaging and Graphics, vol. 31, no. 6, pp. 362–373, 2007.

7. C. Barata, M. Ruela, M. Francisco, T. Mendonca and J. S. Marques, “Two systems for the detection of melanomas in dermoscopy images using texture and color features,” IEEE Systems Journal, vol. 8, no. 3, pp. 965–979, 2014.

8. D. Gutman, N. C. Codella, E. Celebi, B. Helba, M. Marchetti et al., “Skin lesion analysis toward melanoma detection: A challenge at the international symposium on biomedical imaging (ISBI) 2016, hosted by the international skin imaging collaboration (ISIC),” arXiv preprint arXiv:1605.01397, 2016.

9. P. Tschandl, C. Rosendahl and H. Kittler, “The ham10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions,” Scientific Data, vol. 5, no. 1, pp. 1–9, 2018.

10. J. Q. Ma, “Content-based image retrieval with hsv color space and texture features,” in Web Information Systems and Mining. Int. Conf. on, Shanghai, China, IEEE, pp. 61–63, 2009.

11. Z. Guo, L. Zhang and D. Zhang, “A completed modeling of local binary pattern operator for texture classification,” IEEE Transactions on Image Processing, vol. 19, no. 6, pp. 1657–1663, 2010.

12. M. Greenacre, P. J. Groenen, T. Hastie, A. I. d’Enza, A. Markos et al., “Principal component analysis,” Nature Reviews Methods Primers, vol. 2, no. 1, pp. 100, 2022.

13. M. Tanveer, T. Rajani, R. Rastogi, Y. H. Shao and M. A. Ganaie, “Comprehensive review on twin support vector machines,” Annals of Operations Research, vol. 157, pp. 1–46, 2022.

14. D. W. Hosmer Jr., S. Lemeshow and R. X. Sturdivant, “Applied logistic regression,” John Wiley & Sons, vol. 398, pp. 1–33, 2013.

15. L. Breiman, “Random forests,” Machine Learning, vol. 45, no. 1, pp. 5–32, 2001.

16. S. Hido, H. Kashima and Y. Takahashi, “Roughly balanced bagging for imbalanced data,” Statistical Analysis and Data Mining: The ASA Data Science Journal, vol. 2, no. 5–6, pp. 412–426, 2009.

17. T. M. Khoshgoftaar, M. Golawala and J. Van Hulse, “An empirical study of learning from imbalanced data using random forest,” in 19th IEEE Int. Conf. on Tools with Artificial Intelligence (ICTAI 2007), Patras, Greece, vol. 2, pp. 310–317, 2007.

18. S. Bakheet, “An SVM framework for malignant melanoma detection based on optimized HOG features,” Computation, vol. 5, no. 4, 2017. https://doi.org/10.3390/computation5010004

19. Z. She, Y. Liu and A. Damatoa, “Combination of features from skin pattern and ABCD analysis for lesion classification,” Skin Research and Technology: Official Journal of International Society for Bioengineering and the Skin (ISBS) and International Society for Digital Imaging of Skin (ISDIS) and International Society for Skin Imaging (ISSI), vol. 13, no. 1, pp. 25–33, 2007. https://doi.org/10.1111/j.1600-0846.2007.00181.x

20. S. Bakheet and A. Al-Hamadi, “A hybrid cascade approach for human skin segmentation,” British Journal of Mathematics & Computer Science, vol. 17, no. 6, pp. 1–18, 2016.

21. S. Bakheet and A. Al-Hamadi, “A discriminative framework for action recognition using f-HOL features,” Information, vol. 7, no. 4, pp. 68, 2016. https://doi.org/10.3390/info7040068

22. A. Rehman, M. A. Khan, Z. Mehmood, T. Saba, M. Sardaraz et al., “Microscopic melanoma detection and classification: A framework of pixel-based fusion and multilevel features reduction,” Microscopy Research and Technique, vol. 83, no. 4, pp. 410–423, 2020.

23. T. Akram, H. M. Lodhi, S. R. Naqvi, S. Naeem, M. Alhaisoni et al., “A multilevel features selection framework for skin lesion classification,” Human-Centric Computing and Information Sciences, vol. 10, no. 1, pp. 1–26, 2020.

24. P. Bansal, A. Vanjani, A. Mehta, J. C. Kavitha and S. Kumar, “Improving the classification accuracy of melanoma detection by performing feature selection using binary harris hawks optimization algorithm,” Soft Computing, vol. 26, no. 17, pp. 8163–8181, 2022.

25. K. Safdar, S. Akbar and S. Gull, “An automated deep learning based ensemble approach for malignant melanoma detection using dermoscopy images,” in Int. Conf. on Frontiers of Information Technology (FIT), Islamabad, Pakistan, IEEE, pp. 206–211, 2021.

26. I. Giotis, N. Molders, S. Land, M. Biehl, M. F. Jonkman et al., “MED-NODE: A computer-assisted melanoma diagnosis system using non-dermoscopic images,” Expert Systems with Applications, vol. 42, no. 19, pp. 6578–6585, 2015.

27. S. Oukil, R. Kasmi, K. Mokrani and B. García-Zapirain, “Automatic segmentation and melanoma detection based on color and texture features in dermoscopic images,” Skin Research and Technology, vol. 28, no. 2, pp. 203–211, 2022.

28. D. Popescu, M. El-Khatib, H. El-Khatib and L. Ichim, “New trends in melanoma detection using neural networks: A systematic review,” Sensors, vol. 22, no. 2, pp. 496, 2022.

29. T. Rymarczyk, E. Kozłowski, G. Kłosowski and K. Niderla, “Logistic regression for machine learning in process tomography,” Sensors, vol. 19, no. 15, pp. 3400, 2019.

30. M. A. Arasi, E. S. El-Horbaty and A. El-Sayed, “Classification of dermoscopy images using naive Bayesian and decision tree techniques,” 1st Annual Int. Conf. on Information and Sciences (AiCIS), Fallujah, Iraq, IEEE, pp. 7–12, 2018.

31. N. C. Lynn and N. War, “Melanoma classification on dermoscopy skin images using bag tree ensemble classifier,” in Int. Conf. on Advanced Information Technologies (ICAIT), Yangon, Myanmar, IEEE, pp. 120–125, 2019.

32. S. Kumar and A. Kumar, “Lesion preprocessing techniques in automatic melanoma detection system—A comparative study,” in Computer Networks and Inventive Communication Technologies, Singapore: Springer, pp. 357–368, 2022.

33. S. Kumar and A. Kumar, “Automatic melanoma detection system (AMDS): A state-of-the-art review,” in Int. Conf. on Emerging Technology Trends in Electronics Communication and Networking, Singapore, Springer, pp. 201–212, 2020.

34. K. M. Hosny, M. A. Kassem and M. M. Foaud, “Classification of skin lesions using transfer learning and augmentation with alex-net,” Public Library of Science, vol. 14, no. 5, pp. e0217293, 2019.

35. S. A. Kostopoulos, P. A. Asvestas, I. K. Kalatzis, G. C. Sakellaropoulos, T. H. Sakkis et al., “Adaptable pattern recognition system for discriminating melanocytic nevi from malignant melanomas using plain photography images from different image databases,” International Journal of Medical Informatics, vol. 105, pp. 1–10, 2017.

36. M. H. Jafari, S. Samavi, N. Karimi, S. M. Soroushmehr, K. Ward et al., “Automatic detection of melanoma using broad extraction of features from digital images,” in 38th Int. Conf. of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, pp. 1357–1360, 2016.

37. E. Nasr-Esfahani, S. Samavi, N. Karimi, S. M. Soroushmehr, M. H. Jafari et al., “Melanoma detection by analysis of clinical images using convolutional neural network,” in 38th Annual Int. Conf. of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, IEEE, pp. 1373–1376, 2016.

38. J. Premaladha and K. S. Ravichandran, “Novel approaches for diagnosing melanoma skin lesions through supervised and deep learning algorithms,” Journal of Medical Systems, vol. 40, no. 96, pp. 1–12, 2016.

39. M. Fraiwan and E. Faouri, “On the automatic detection and classification of skin cancer using deep transfer learning,” Sensors (Basel, Switzerland), vol. 22, no. 13, pp. 4963, 2022. https://doi.org/10.3390/s22134963

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
