Open Access

ARTICLE

Integration of Digital Twins and Artificial Intelligence for Classifying Cardiac Ischemia

1 Department of Mechanical and Aerospace, Brunel University London, Uxbridge, UB8 3PH, UK

2 Department of Electrical and Electronic, Brunel University London, Uxbridge, UB8 3PH, UK

* Corresponding Authors: Mohamed Ammar. Email: ; Hamed Al-Raweshidy. Email:

Journal on Artificial Intelligence 2023, 5, 195-218. https://doi.org/10.32604/jai.2023.045199

Received 20 August 2023; Accepted 19 October 2023; Issue published 29 December 2023

Abstract

Despite advances in intelligent medical care, difficulties remain. Because the cardiac system is complex to govern, designing, planning, improving, and managing it remains difficult. Oversight, including intelligent monitoring, feedback systems, and management practices, is inadequate. Current platforms cannot deliver lifelong personal health management services, patient crisis warning programmes are insufficiently accurate, and there is no frequent, direct interaction between healthcare workers and patients. Physical medical systems and intelligent information systems are not integrated. This study introduces the Advanced Cardiac Twin (ACT) model integrated with an Artificial Neural Network (ANN) to handle real-time monitoring, decision-making, and crisis prediction. THINGSPEAK is used to create an IoT platform that accepts patient sensor data, and these data sets are imported into MATLAB for display and analysis. The potential of Health Condition Tracking (HCT) was examined in a myocardial ischemia case study, in which 75% of the data formed the training set (Xt), whose feature values were supplied with the data, 15% the validation set, and 10% the test set. Training, validation, and testing accuracies were each 99.9%, and the overall accuracy was 99.9%. The proposed HCT system and ANN model gather historical and real-time data to manage and anticipate cardiac issues.

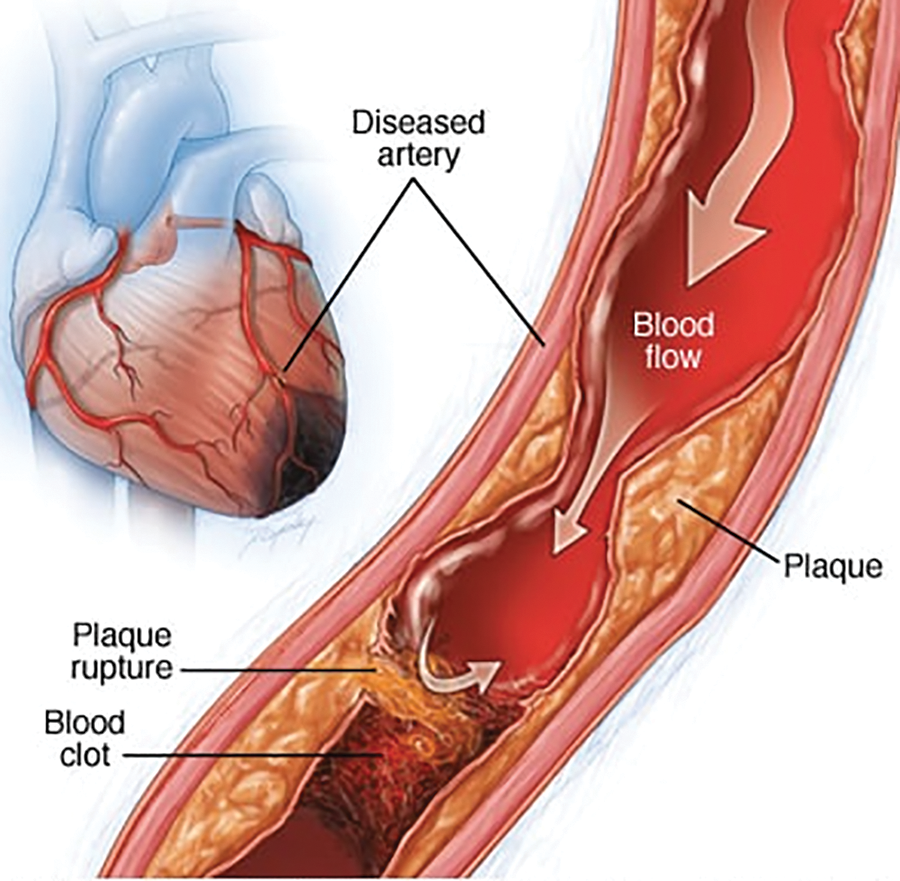

Ischemic Heart Disease (IHD) (Fig. 1) and stroke have been among the top 10 causes of death worldwide for several decades, particularly in affluent communities where people enjoy a higher standard of living. According to World Health Organization statistics, 15.2 million of the 56.9 million deaths worldwide in 2016 were caused by these two diseases [1], together accounting for 26.7% of all deaths. Cardiovascular Disease (CVD), a non-communicable disease, contributes substantially to global mortality and morbidity. Acute care and risk-factor management, supported by epidemiological research and clinical trial evidence, have often improved quality of life and survival rates across diverse settings. Nevertheless, around fifty per cent of myocardial infarction and stroke events occur in individuals who do not meet the eligibility criteria for cholesterol-lowering interventions. Consequently, expensive preventive treatments risk being administered inappropriately, and some may cause adverse outcomes without adequate justification. Optimising results in cardiology therefore requires a comprehensive understanding of the vulnerability and prognosis of individual patients [2].

Figure 1: Ischemic heart anatomy [3]

Digital Twins (DTs) were first used in healthcare for predictive maintenance and for improving medical device performance (for example, examination speed and energy consumption). DTs can also extend the lifetime of hospital assets. General Electric (GE) Healthcare's DTs improve hospital management: the company's predictive analytics technologies and AI solutions convert medical data into actionable intelligence, with the goal of helping hospitals and government organisations manage and coordinate patient care operations. GE Healthcare created a "Capacity Command Center" to improve decision-making at Johns Hopkins Hospital in Baltimore. By establishing a DT of patient pathways that forecasts patient activity and plans capacity according to demand, the hospital improves service, safety, patient experience, and volume. Siemens Healthineers created DTs to optimise the Mater Private Hospitals (MPH) in Dublin [4]. MPH was experiencing increased patient demand, growing clinical complexity, ageing infrastructure, a lack of space, longer waiting times, interruptions and delays, and rapid advances in medical technology that necessitated the purchase of additional equipment. MPH and Siemens Healthineers redesigned the radiology department using an Artificial Intelligence (AI) computer model. Medical DTs enable digital process optimisation by simulating workflows and assessing unique operational situations and architectures.

The realistic 3D simulations and descriptive and quantitative reporting of DTs allow operational scenarios to be predicted and various options for transforming care delivery to be analysed. Enabling technologies are expanding and advancing in healthcare at an unprecedented pace because they make previously unattainable applications feasible. The surge in connectivity can be attributed to Internet of Things (IoT) devices becoming less expensive and simpler to implement [5,6]. As a result of this increased connectivity, the potential applications of DTs in healthcare are becoming ever more extensive. One application with future potential is a digital twin of a person, which would allow real-time study of the body. A more practical application, already being implemented, uses digital twins to replicate the effects of various medicines. Building human DTs is a goal for the medical and clinical fields. By evaluating the physical twin's personal history and present circumstances, a human DT can reveal what is happening inside the linked physical twin's body, making it simpler to predict sickness [7].

This DT model would enable a paradigm shift in the delivery of medical therapies, away from "one-size-fits-all" towards customised treatments based on the person's "physical asset", which includes structural, physical, biological, and historical components. Precision medicine focuses on a patient's genetic, biomarker, phenotypic, physical, or psychological features [8]; patients are treated as unique individuals rather than according to a "norm" or "Standard of Care". The ancestor of the human DT is the Virtual Physiological Human (VPH), a computer model created to examine the entire human body. With a VPH, clinicians and researchers can test any medication, and in-silico clinical studies can be conducted [9]. The AnyBody Modeling System (AMS) (see https://www.anybodytech.com), which emerged from research into the VPH, shows how the human body interacts with its environment. Muscle forces, joint contact forces and moments, metabolism, tendon elasticity, and antagonistic muscle activity can all be calculated using AnyBody. A DT-virtualised physiological model could predict organ behaviour, and automated CAD analysis may assess the efficacy of individualised therapies, advancing precision medicine. Picture Archiving and Communication Systems (PACS) [9], which provide affordable storage, quick access, and the interchange of medical examinations (mainly images) from different modalities, are crucial in this field. Some organ DTs have been used in clinical practice as reliable tools for experts, while others are still being validated.

The Living Heart [10] was built by Dassault Systèmes and introduced in May 2015, and the preliminary results of its experimental testing were promising. The human respiratory system is another organ DT, produced by Oklahoma State University's CBBL [11–14]. CBBL researchers used ANSYS computational fluid dynamics simulations to explore the precision delivery of a cancer-destroying inhaler. Aerosol-delivered chemotherapeutic drugs cause harm when they "hit" healthy tissue: when the drug is widely dispersed in the aerosol, only 25% of it reaches its target in the lungs [14,15]. The CBBL built the virtual human V2.0 on the foundation of their virtual human V1.0, using CT/MRI images and the geometry of the patient's lungs. CBBL researchers then used the V2.0 DT to create a large population of human DTs (a virtual population group, or VPG) consisting of high-resolution anatomical models. The VPG allowed the researchers to analyse population and subgroup differences, boosting statistical validity. By adjusting particle sizes, inhalation flow rates, and drug placement, they could recreate various particle-transport scenarios. These simulations showed that confining the size and location of active drug particles within the aerosol (rather than distributing them widely) would boost the local deposition efficiency of the drugs to 90% [15].

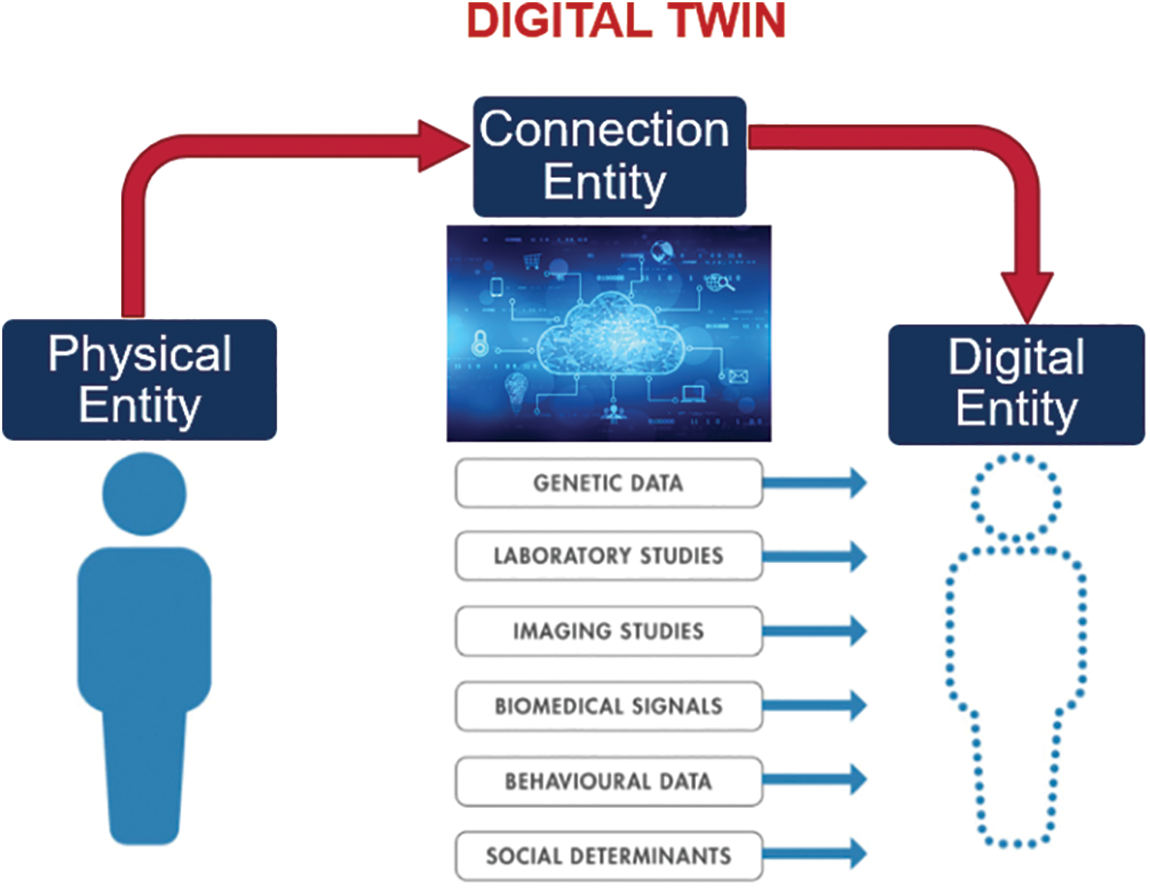

A virtual duplication model, or digital twin, is a digital representation of a physical object or device produced through simulation using finite element analysis. Digital twins can also be used to test novel concepts before the designs are put into production. NASA first used this technology in 2002 to further its primary aim of space exploration. The approach was later adopted in various industries, including oil and gas, aerospace, telecommunications, and manufacturing. This paper defines DTs as a "live digital replica that continuously simulates and connects the entire mechanical behaviour of anything". Fig. 2 shows the data flows between an existing physical object and its digital counterpart, which are fully integrated in both directions. In such a combination, the digital object may also serve as the controlling instance of the physical object. Other physical or digital objects may cause the digital object to change state, and a change in the physical object's state directly affects the digital object's state and vice versa.
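The two-way coupling described above can be illustrated with a minimal Python sketch, assuming a simple key-value state on each side. The `TwinEntity` class, the `link` helper, and the state names are hypothetical illustrations, not part of the HCT implementation; a real twin would exchange data through an IoT platform rather than through direct method calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TwinEntity:
    """One side of a twin pair: the physical object or its digital replica (hypothetical)."""
    name: str
    state: Dict[str, float] = field(default_factory=dict)
    listeners: List[Callable[[str, float], None]] = field(default_factory=list)

    def set(self, key: str, value: float) -> None:
        # Skip no-op updates so that a mirrored change does not echo back forever.
        if self.state.get(key) == value:
            return
        self.state[key] = value
        for notify in self.listeners:
            notify(key, value)


def link(physical: TwinEntity, digital: TwinEntity) -> None:
    """Couple two entities so that a state change on either side is mirrored on the other."""
    physical.listeners.append(digital.set)
    digital.listeners.append(physical.set)


heart = TwinEntity("physical heart")   # hypothetical physical side (sensors/actuators)
twin = TwinEntity("digital heart")     # hypothetical digital replica
link(heart, twin)

heart.set("heart_rate_bpm", 72.0)   # sensor reading: physical -> digital
twin.set("pacing_rate_bpm", 60.0)   # control command: digital -> physical
print(twin.state)   # {'heart_rate_bpm': 72.0, 'pacing_rate_bpm': 60.0}
print(heart.state)  # {'heart_rate_bpm': 72.0, 'pacing_rate_bpm': 60.0}
```

The equality check in `set` is one simple way to stop bidirectional synchronisation from looping: once both sides hold the same value, the echoed update is ignored.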

Figure 2: Fully automated process of the DTs

Reference [16] described the DT in healthcare as a system that automatically monitors and assists any individual in crisis, referring to it as a "defensive technology". This support remains possible even if the physical twin, the person at risk, is alone when an IHD emergency occurs. Sim&Cure simulates an aneurysm and its associated blood vessels in a patient (see https://sim-and-cure.com/). Aneurysms are bulges in an artery wall and occur in 2% of people. Certain aneurysms can result in stroke, clotting, and even death. Aneurysm repair has typically required brain surgery, whereas endovascular repair is safer and less invasive: it restores damaged arteries and lowers aneurysm pressure by using a catheter-guided implant. Selecting the ideal device size can be challenging, even for experienced surgeons. Sim&Cure's DT, which has received regulatory approval, helps surgeons choose the implant that best fits the cross-section and length of the aneurysm, maximising the quality of the repair. Once the patient has been prepared for surgery, 3D rotational angiography creates a 3D model of the aneurysm and surrounding blood vessels. Surgeons can then use this customised DT to simulate procedures and study the interaction between implants and the aneurysm; many implants can be checked in less than five minutes to optimise the procedure. Initial tests using high-quality 3D angiography data have yielded positive results [17,18], but further analysis is needed to understand the effect of device-dimension adjustments on outcomes.

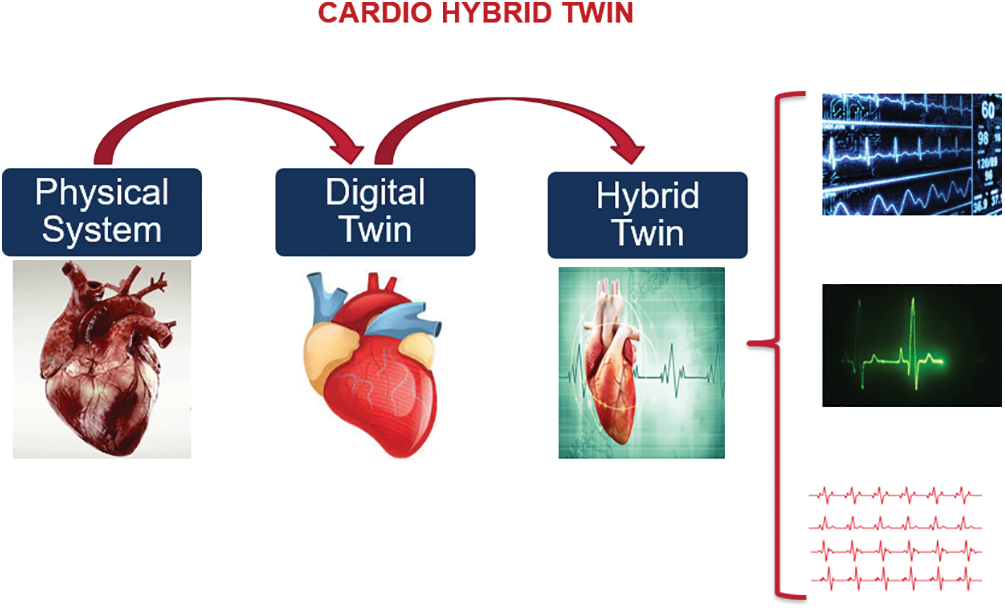

In this paper, we propose a Hybrid Cardiac Twin (HCT) as an extension of the digital twin of the human heart (Fig. 2), which analyses data within the digital twin ecosystem for healthcare and well-being. The HCT concept (Fig. 3) aims to improve patients' health and well-being by providing more accurate information about their conditions.

Figure 3: Concept of the proposed HCT

Hybrid Twins (HTs) are an extension of the DT in which otherwise isolated DT models are interlinked to recognise, foresee, and communicate suboptimal (but predictable) behaviour of the physical counterpart well before it occurs, with the aim of maximising the overall system's efficiency. HTs integrate data from various sources (such as sensors, databases, and simulations) with the DT models and apply AI analytics to achieve higher predictive capability while optimising, monitoring, and controlling the behaviour of the physical system. In this way, HTs achieve synergy among the DT models and are often realised as a collection of all related models. Technologies such as Industry 4.0, Artificial Intelligence (AI), analytics software, big data analytics, and machine learning algorithms have made collecting and analysing data much less complicated [19]. To build a live digital representation of the cardiac system, a wide variety of software packages, such as MATLAB, Simulation, and Abaqus, are used together. In the cardiac twinning context, ventricular tachycardia and fibrillation are major contributors to sudden cardiac death [20,21]. Although ischemic heart disease is the most common cause of cardiac arrhythmias, arrhythmias can arise in various clinical situations. Ischemia damages the heart because the supply of energy substrates and the removal of waste products become inadequate. Reference [22] showed that the result is a slow but persistent decline in electrical activity in the injured area, which leads to the inability of the cells to contract and, eventually, to cell death. This series of adverse events finally results in malignant arrhythmias and failure of the heart's pumping function [23].
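One common way to realise a hybrid twin, in the spirit of the description above, is to keep a first-principles DT model and learn a data-driven correction for the part of the measurements it fails to explain. The Python sketch below assumes a hypothetical one-variable physics model and synthetic sensor data, and fits the residual with ordinary least squares; it illustrates the idea only and is not the model used in this paper.

```python
from statistics import mean


def physics_model(x: float) -> float:
    """Hypothetical first-principles DT model (stand-in for, e.g., an FEA-based heart model)."""
    return 2.0 * x


def fit_linear(xs, ys):
    """Ordinary least-squares fit of ys ~ c0 + c1 * xs; returns (c0, c1)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    c1 = sxy / sxx
    return my - c1 * mx, c1


# Synthetic "sensor" observations whose true trend (1 + 2.5x) the physics model does not fully capture.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.5, 6.0, 8.5, 11.0]

# Data-driven part: learn the residual between observations and the physics prediction.
residuals = [y - physics_model(x) for x, y in zip(xs, ys)]
c0, c1 = fit_linear(xs, residuals)


def hybrid_model(x: float) -> float:
    """Hybrid twin prediction = physics-based prediction + learned residual correction."""
    return physics_model(x) + c0 + c1 * x


print(physics_model(5.0), hybrid_model(5.0))  # 10.0 13.5
```

Keeping the physics model explicit and learning only the residual means the data-driven part has less to learn, and the twin still falls back on first principles where data are sparse.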

1. Proposing a novel framework, the Hybrid Cardiac Twin (HCT), which incorporates an Artificial Neural Network (ANN) to address the challenges of real-time monitoring, accurate decision-making, and prediction in the context of cardiac crises.

2. The proposed methodology is validated through a case study on cardiac ischemia to showcase the capabilities of HCT. The model’s accuracy is assessed through Training, Validation, and Testing, resulting in accuracies of 0.99997, 0.99954, and 0.9993, respectively. The overall accuracy of 0.99986 further confirms the reliability of the proposed model.

3. The proposed HCT framework and the Artificial Neural Network (ANN) model enable the collection of historical and real-time data, which facilitates the management and prediction of present and future cardiac problems.
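Contribution 2 reports accuracies on three data subsets. The Python sketch below shows, under stated assumptions, how a 75%/15%/10% split (the ratios given in the abstract) and per-split accuracy could be computed; the labels, predictions, and seed are synthetic and hypothetical, not the study's data. It also shows that pooled accuracy over all samples equals the size-weighted mean of the per-split accuracies.

```python
import random


def split_indices(n, train_frac=0.75, val_frac=0.15, seed=0):
    """Shuffle sample indices and split them into training, validation, and test subsets."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_train = round(n * train_frac)
    n_val = round(n * val_frac)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def accuracy(y_true, y_pred):
    """Fraction of predictions equal to the true class labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Synthetic, hypothetical labels (1 = ischemia, 0 = normal) and a classifier that is wrong on 3 samples.
n = 1000
y_true = [i % 2 for i in range(n)]
y_pred = list(y_true)
for i in (10, 500, 990):
    y_pred[i] = 1 - y_pred[i]

splits = split_indices(n)
sizes = [len(s) for s in splits]
per_split = [accuracy([y_true[i] for i in s], [y_pred[i] for i in s]) for s in splits]

# Pooled ("overall") accuracy equals the size-weighted mean of the per-split accuracies.
overall = sum(a * m for a, m in zip(per_split, sizes)) / n
print(sizes)              # [750, 150, 100]
print(round(overall, 4))  # 0.997
```

Which split each misclassified sample lands in depends on the shuffle, so the per-split values vary with the seed while the pooled value does not.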

1.3 Potential and Applications of the Proposed Framework

1. Drug development: researchers can identify potential new treatments for cardiac ischemia.

2. Surgical planning: models can be used to plan surgical procedures, such as angioplasty or bypass surgery.

3. Personalised medicine: doctors can better predict how patients respond to treatment and make more informed decisions.

4. Training of medical professionals: models can be used to train medical professionals, such as cardiologists and surgeons, in diagnosing and treating cardiac ischemia.

5. Understanding disease progression: researchers can better understand the underlying mechanisms of cardiac ischemia, which can lead to new insights into the disease.

Arrhythmias may arise before hospital admission, and even when they occur in hospital, resuscitation and patient survival take precedence over studying arrhythmogenesis. The processes underlying potentially deadly ventricular arrhythmias linked with ischemic heart disease are difficult to discern through clinical examination of the people who develop them. Animal models have been developed to explore the electrophysiological changes associated with myocardial ischemic injury and how they contribute to ventricular tachycardia and fibrillation. These models were created to circumvent the limitations of clinical studies and play a crucial role in advancing mechanistic understanding. Animal model research has produced concepts and hypotheses that have been tested in clinical investigations [22,24] to improve understanding of the processes contributing to arrhythmogenesis in an ischemic environment. Myocardial Infarction (MI), a form of ischemic heart disease, occurs when the flow of oxygen-rich blood to the heart muscle is blocked, damaging the heart muscle itself. Patients with ischemic heart disease frequently do not recognise the signs of an impending attack until it is too late to obtain the right help, which may result in several negative consequences [25]. Early detection and action are therefore essential for survival, yet timely detection is difficult because it requires the patient to be checked frequently.

Because experimental models have their own limitations, it is challenging to analyse arrhythmogenic pathways thoroughly and, consequently, to develop new antiarrhythmic medications that counter the effects of ischemic heart disease. This is particularly true in the first 10 min after occlusion of the coronary artery, during the acute initial stage of ischemia. In addition, the experimental techniques currently used to record electrical behaviour at the tissue and organ levels, which took many years to establish, cannot capture events confined deep within the ventricular wall with sufficient spatial resolution [26]. In [27], a model was developed using the Philips Healthcare digital twin model as a base, and a machine learning algorithm was added to improve its analytical capability. The machine learning component uses an Artificial Neural Network (ANN) to predict the most likely value of the target variable, which captures the parameters associated with heart disease. Doctors use the target variable to gauge the model's accuracy before compiling their data and providing a diagnosis based on their observations. Many publications have applied digital twin technology to model-based systems engineering, incorporating it as a key component of Model-Based Systems Engineering (MBSE) methodology and experimentation test beds [13]. The authors also cover the benefits of combining digital twins with Internet of Things (IoT) devices and system simulations.
Reference [27] analysed the architecture, implications, and trends of digital twin products in a 2017 article and proposed an architecture for a product digital twin.

Reference [28] used big data analytics to develop more efficient techniques for product design, production, and service delivery, and drew attention to the restrictions imposed by the virtual replica and the difficulties encountered during analysis. Reference [29] focused on developing an intelligent production management system built on a digital twin and recommended preventive actions for use when dealing with complex products. Real-time management and organisation, together with gathering information on physical assembly, are essential strategies in the proposed framework and are applied on the shop floor. In [30], the authors discussed the simulation component of digital twin technology and how to create a model that assists with task design or evaluates alternative solutions. Reference [31] used Skin Model Shapes to provide a detailed reference model. The groundwork for using digital twins to drive product design was established in [28]. Reference [31] also suggested a method for predicting the remaining useful life of an offshore wind turbine, and used automatically derived attributes from a machine learning model to link the digital twin to the power control system, improving its application in power control centres. Reference [32] investigated the use of digital twin technology for automated data selection and acquisition. DTs can also build on microprocessor-controlled pacemakers, which capture, store, and transmit patient data [33].

Siemens Healthineers created DTs to optimise the Mater Private Hospitals (MPH) in Dublin [34]. MPH was experiencing increased patient demand, growing clinical complexity, ageing infrastructure, a lack of space, longer waiting times, interruptions and delays, and rapid advances in medical technology that necessitated the purchase of additional equipment. MPH and Siemens Healthineers redesigned the radiology department using an AI computer model. Medical DTs enable digital process optimisation by simulating workflows and assessing unique operational situations and architectures. The realistic 3D simulations and descriptive and quantitative reporting of DTs allow operational scenarios to be predicted and various options for transforming care delivery to be analysed. Enabling technologies are expanding and advancing in healthcare at an unprecedented pace because they make previously unattainable applications feasible. The surge in connectivity can be attributed to IoT devices becoming less expensive and simpler to implement [5,6]. As a result, the potential applications of DTs in healthcare are becoming ever more extensive. One application with future potential is a digital twin of a person, which would allow real-time study of the body; a more practical application, already being implemented, uses digital twins to replicate the effects of various medicines.

Another medical application of DTs is the planning and performance of surgical operations. In medicine, the ability to simulate different scenarios and react to them in real time is of the utmost importance because it can literally mean the difference between life and death. DTs may also assist with preventive maintenance and ongoing repairs of medical equipment. In the medical environment, DTs combined with artificial intelligence can make potentially life-saving decisions based on real-time and historical data [18,35]. DT applications overlap considerably, showing how adaptable predictive maintenance can be, from manufacturing plant machinery to patient care. Building human DTs is a goal for the medical and clinical fields. By evaluating the physical twin's personal history and present circumstances, a human DT can reveal what is happening inside the linked physical twin's body, making it simpler to predict sickness [7]. This DT model would enable a paradigm shift in the delivery of medical therapies, away from "one-size-fits-all" towards customised treatments based on the person's "physical asset", which includes structural, physical, biological, and historical components.

Precision medicine focuses on a patient's genetic, biomarker, phenotypic, physical, or psychological features [8]; patients are treated as unique individuals rather than according to a "norm" or "Standard of Care" [36,37]. The ancestor of the human DT is the VPH, a computer model created to examine the entire human body. With a VPH, clinicians and researchers can test any medication, and in-silico clinical studies can be conducted [9]. The AnyBody Modeling System (AMS), which emerged from research into the VPH, shows how the human body interacts with its environment; muscle forces, joint contact forces and moments, metabolism, tendon elasticity, and antagonistic muscle activity can all be calculated using AnyBody [38]. A DT-virtualised physiological model could predict organ behaviour, and automated CAD analysis may assess the efficacy of individualised therapies, advancing precision medicine. Picture Archiving and Communication Systems (PACS) [9], which provide affordable storage, quick access, and the interchange of medical examinations (mainly images) from different modalities, are crucial in this field. Some organ DTs have been used in clinical practice as reliable tools for experts, while others are still being validated. The Living Heart [10] was built by Dassault Systèmes and introduced in May 2015. It was the first organ DT to account for blood flow, mechanics, and electrical impulses, and the software converts a 2D scan into an accurate 3D organ model.

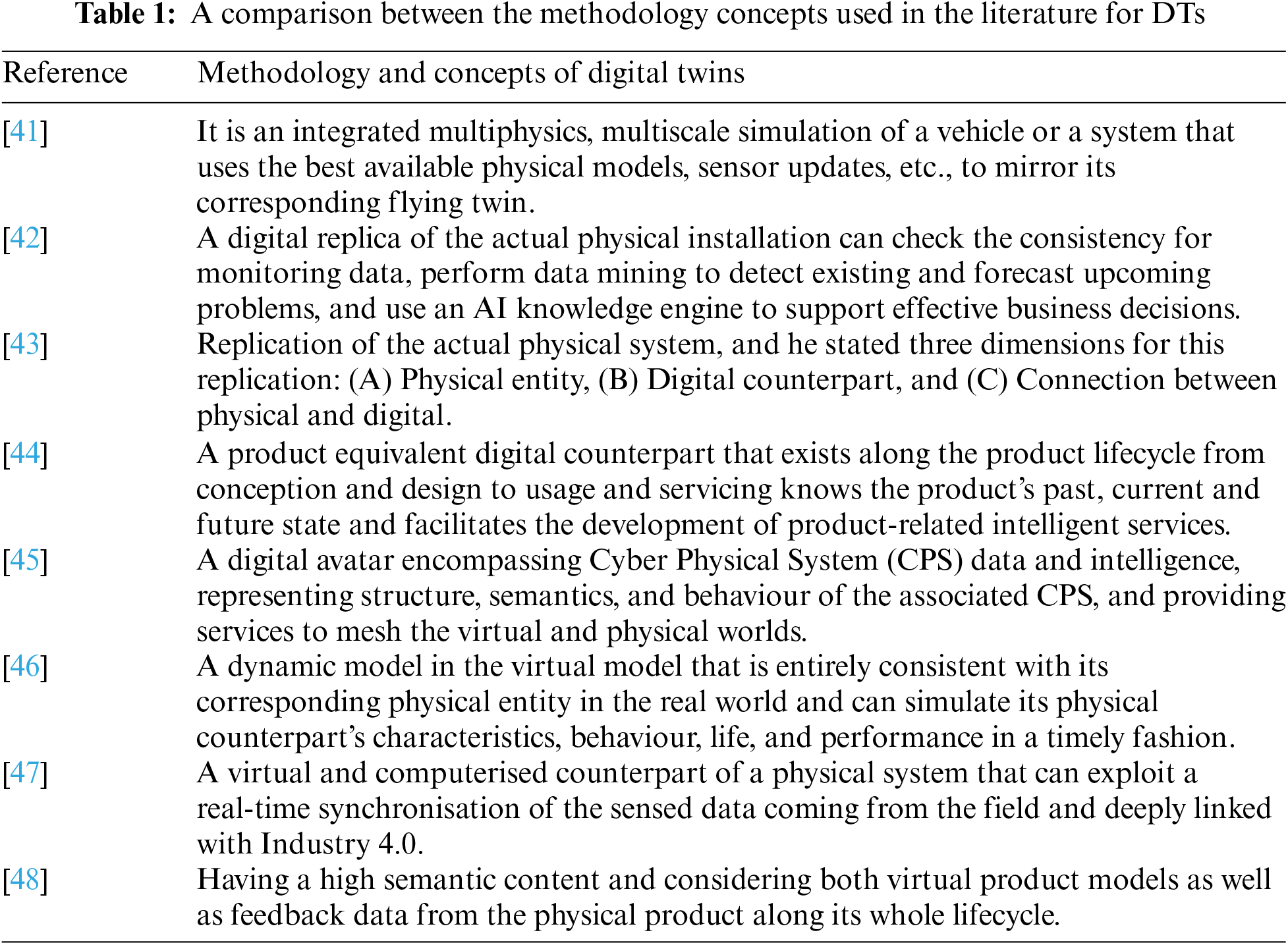

The preliminary results of the experimental testing were promising. The human respiratory system is another organ DT, produced by Oklahoma State University's CBBL [11–14]. CBBL researchers used ANSYS computational fluid dynamics simulations to explore the precision delivery of a cancer-destroying inhaler. Aerosol-delivered chemotherapeutic drugs cause harm when they "hit" healthy tissue: when the drug is widely dispersed in the aerosol, only 25% of it reaches its target in the lungs [14,15]. The remaining medication leaks into healthy tissue, causing adverse effects and lung damage. To address this issue, the authors virtualised a standing digital male in his forties with a high-resolution human respiratory system covering his conducting and respiratory zones, lobes, and body shell. This "virtual human V1.0", also known as the "individualised digital twin", enables ANSYS computational fluid dynamics simulations and subject-specific health risk assessment for in-silico occupational exposure research. Industry and academia use many different digital twin methods and concepts, depending on the type of application (Table 1). This raises the questions of whether they are all genuine digital twins and, if so, why they do not share the same concept or principles and why they are named differently. Table 1 compares these methods and concepts.

Reference [39] offered four Key Performance Indicators (KPIs) for assessing DT performance and suggested a systematic strategy for analysing the performance and flexibility of DTs. Because the suggested method and KPIs provide a quantitative estimate of DT performance, researchers and practitioners can evaluate various DT approaches and make more sensible design choices. The study illustrates the use of DTflex as a straightforward tool for swiftly evaluating different DT approaches, which may help DTs develop iteratively and become more effective in future applications. Researchers and practitioners in a variety of sectors are expected to benefit from the information and methodology presented as they compare DT approaches and DT performance, resulting in more effective DT solutions and greater re-use of existing DTs. However, [39] focuses only on evaluating the effectiveness and adaptability of DTs using certain KPIs. It does not cover other facets of DT assessment, possible drawbacks of the suggested approach, or the limitations and difficulties that could be encountered when applying it in real-world circumstances. Further study and validation may be necessary to evaluate the efficacy and suitability of the methodology in other sectors and circumstances, and the work lacks a thorough examination of the constraints of current methodologies for DT analysis and performance evaluation. The study in [40] aimed to provide a standardised design process for constructing DTs in the context of Zero-Defect Manufacturing (ZDM) across diverse domains, together with an advanced analysis and comprehensive discussion of the present status and limits of research and practice on DTs for ZDM. However, [40] did not address the obstacles or pragmatic factors that may arise when executing the suggested design process, nor did it assess or verify that process through actual case studies or real-world implementations.

2.1 Summary of the Digital Twins Methodologies in the Literature

• Designing, planning, improving, and controlling the cardiac system is still very challenging because of the system's complexity.

• There is a lack of intelligent oversight, including monitoring, feedback, and management.

• Existing systems or platforms do not provide continuous personal health management services throughout a patient’s lifecycle.

• Patient crisis warning services are not accurate enough, and routine real-time communication between healthcare providers and patients is absent.

• There is no true integration of physical medical systems with intelligent information systems.

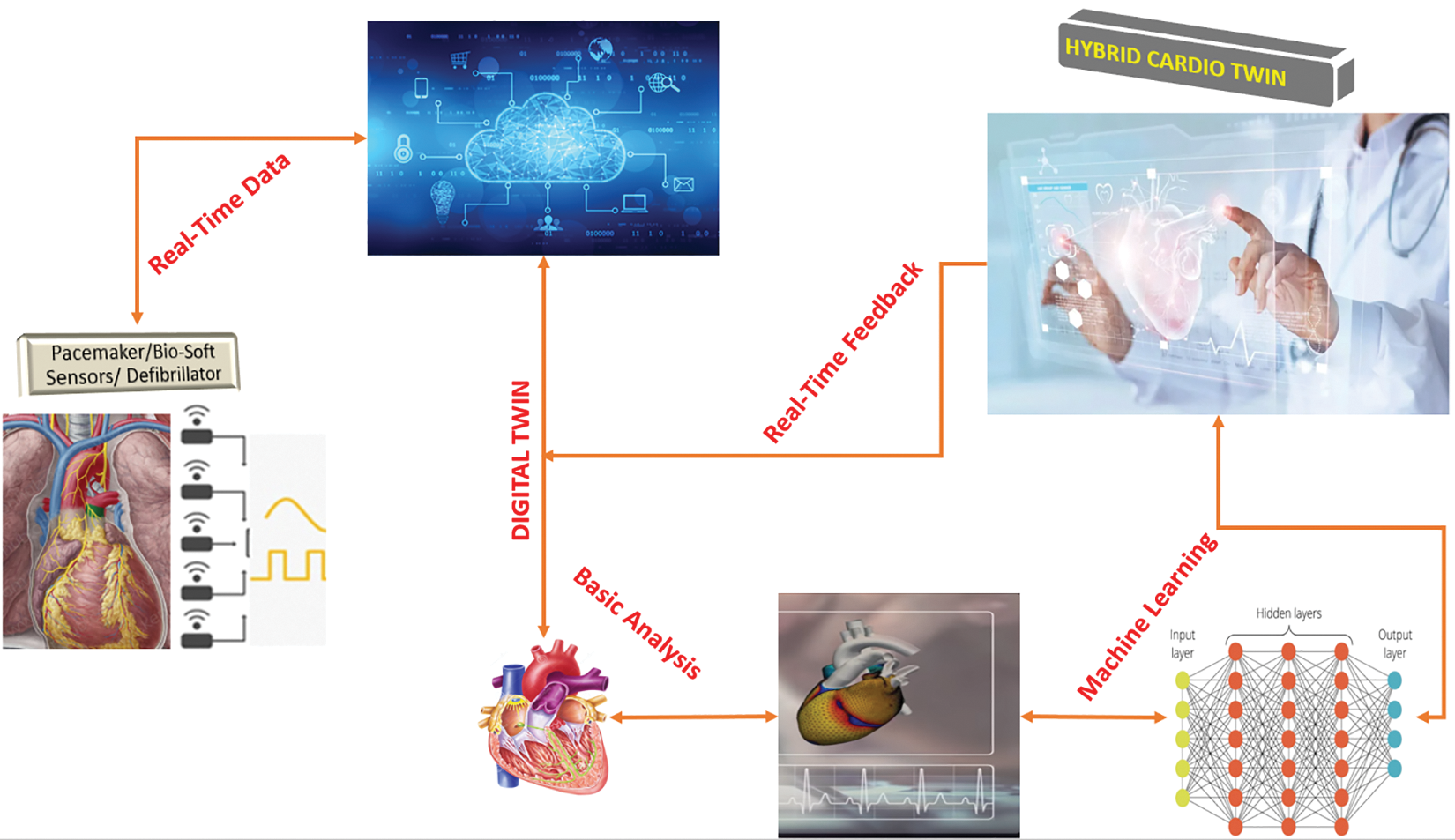

This study uses a conceptual DT framework in which a digital duplicate gathers information on various parameters, such as chest discomfort, resting blood pressure, and biosensor readings. The simulation software uses these parameters to represent the human heart digitally: a 3D method models the parts of the heart as a biophysical model of the patient's heart, which looks and behaves comparably to the actual patient's heart. Accessing real-time data requires data analytics techniques such as big data analytics and cloud computing, which also connect the digital twins of similar cases [19]. Other machine learning methods, such as decision trees, may also be used to classify patients' conditions according to the target variable. The digital twin is then used to follow the most effective strategy for the physical object, as shown in Fig. 4. Once the model has been developed using the technologies and procedures described above, the digital twin of the heart can be connected to its physical counterpart for real-time monitoring and the detection of future heart disease through predictive and prescriptive analytics. An overall accuracy of 99.9% was reached, with the ANN separating the parameters according to the accuracy achieved. The ANN algorithm was selected because it is not overly complicated and, compared with other algorithms, is simple to implement in various contexts.

Figure 4: Hybrid Twin framework with ANN
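To make the twin-to-patient link described above more concrete, the following Python sketch shows one way a virtual counterpart could mirror the latest sensor readings, keep a history of them, and flag values outside a reference range. The class name HeartTwin, the field names, and the ranges in REFERENCE_RANGES are hypothetical illustrations, not the authors' implementation and not clinical thresholds.

```python
from dataclasses import dataclass, field

# Hypothetical reference ranges for illustration only (not clinical guidance).
REFERENCE_RANGES = {
    "chol": (125.0, 200.0),  # assumed cholesterol range
    "fbs": (70.0, 100.0),    # assumed fasting blood sugar range
}


@dataclass
class HeartTwin:
    """Minimal virtual counterpart that mirrors a patient's latest readings."""
    patient_id: str
    state: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

    def sync(self, reading: dict) -> None:
        # Keep a copy of every incoming reading (historical data) and
        # overwrite the mirrored state with the new values (real-time data).
        self.history.append(dict(reading))
        self.state.update(reading)

    def deviations(self) -> dict:
        # Distance of each monitored value outside its reference range;
        # 0.0 means the value lies inside the range.
        out = {}
        for name, (low, high) in REFERENCE_RANGES.items():
            if name in self.state:
                value = self.state[name]
                out[name] = max(low - value, 0.0, value - high)
        return out
```

For example, syncing a reading of {"chol": 180.0, "fbs": 110.0} reports a deviation of 0.0 for chol and 10.0 for fbs under these assumed ranges.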

3.1 Figure 4 Procedure in Detail

1. In the initial stage, the patient undergoes surgery to implant a pacemaker and an implantable cardioverter defibrillator in the heart to monitor and record any potential cardiac abnormalities.

2. The collected data, covering all assessed characteristics, including age, gender, medical history, lifestyle factors, blood pressure, chest pain, fasting blood sugar, and other variables (Age, Sex, Chol, Restecg, Exang, CP, and Fbs), are sent to a cloud-based data store, in this case the THINGSPEAK platform.

3. The data stored on the THINGSPEAK platform are analysed in MATLAB to generate a digital replica, or virtual counterpart. MATLAB/SimScale is a cloud-based simulation program that facilitates the creation of virtual instances, which can be conveniently accessed over the internet.

4. The data are retrieved from the cloud and input into the simulation software (this step can be skipped if cloud-based simulation software is used).

5. Once the supplied parameters have been input into the simulation program, a finite element analysis is conducted to generate a digital replica of the system, sometimes called a digital twin. This Finite Element Analysis (FEA) aims to enhance the precision of the digital twin.

6. The digital twin of the heart accurately replicates the physiological movements and reflexes of the heart. Cloud computing gives the digital twin continuous access to real-time data, so any modification made to the parameters is immediately reflected in its counterpart.

7. To recognise the potential for disease, an Artificial Neural Network (ANN) may be used for classification. The ANN uses machine learning techniques to analyze the risk characteristics and assess how far these parameters depart from established norms.

8. The algorithm can retrieve the patient's past medical information and adapt its diagnostic approach accordingly. Applying this approach in the virtual heart is intended primarily to reduce the occurrence of errors.
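Steps 2 to 4 push sensor readings to a THINGSPEAK channel. A minimal Python sketch of building a channel-update request is shown below. It assumes the seven variables are mapped, in the order listed, to the channel fields field1 to field7 (this mapping and the API key are assumptions), and it targets ThingSpeak's public update endpoint, https://api.thingspeak.com/update. The sketch only constructs the URL; it does not send it.

```python
from urllib.parse import urlencode

THINGSPEAK_UPDATE_URL = "https://api.thingspeak.com/update"

# Assumed order in which the seven inputs are mapped to channel fields 1-7.
FIELDS = ["Age", "Sex", "Chol", "Fbs", "Restecg", "Exang", "CP"]


def build_update_url(api_key: str, record: dict) -> str:
    """Return a ThingSpeak channel-update URL for one patient record."""
    params = {"api_key": api_key}
    for i, name in enumerate(FIELDS, start=1):
        params[f"field{i}"] = record[name]
    return f"{THINGSPEAK_UPDATE_URL}?{urlencode(params)}"
```

Sending the request, for example with urllib.request.urlopen(url), requires a valid write API key for the channel.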

The Physikalisch Technische Bundesanstalt (PTB) Diagnostic ECG Database (PTBD), available in PhysioBank, served as the foundation for both the training and test datasets [49]. The data and factors included in this study are blood pressure, chest pain, fasting blood sugar, cholesterol, Cardiopulmonary, and other variables (Age, Sex, Chol, Restecg, Exang, CP, and Fbs). These data sets contain patient information and are divided into seven input columns:

• Age

• Sex

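• Chol (cholesterol)

• Fbs (fasting blood sugar)

• Restecg (resting electrocardiographic results)

• Exang (exercise-induced angina)

• CP (chest pain)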

The main goal is to construct a model that assesses and projects the best prospects, so that the optimal selection can be made for the post to which applicants have applied. A neural network model is created and trained in MATLAB on the simulated data to forecast each candidate's outcome from the total-score column.

Patient data are stored and organized in .xlsx files. The following code imports a patient's data into MATLAB and assigns it to a variable named data: data = readmatrix('patient01.xlsx');. The first seven columns, holding the seven requirements chosen to maximize the applicants' overall scores, were assigned to the variable X as the input data. The eighth column, containing the results, was assigned to the variable Y as the output data, and the number of observations was assigned to M. The three variables were assigned as follows: X = data(:, 1:7); Y = data(:, 8); M = length(Y);
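For readers working outside MATLAB, the same column split can be mirrored in Python. This sketch assumes the spreadsheet has been exported to a headerless CSV file, because the standard library cannot read .xlsx directly. The function names are hypothetical, and the comments give the MATLAB statement each line corresponds to.

```python
import csv
from typing import Iterable, List, Tuple


def load_patient_matrix(lines: Iterable[str]) -> List[List[float]]:
    """Parse a headerless CSV export of the patient spreadsheet into rows of floats."""
    return [[float(v) for v in row] for row in csv.reader(lines) if row]


def split_columns(data: List[List[float]]) -> Tuple[List[List[float]], List[float], int]:
    X = [row[:7] for row in data]  # MATLAB: X = data(:, 1:7);
    Y = [row[7] for row in data]   # MATLAB: Y = data(:, 8);
    M = len(Y)                     # MATLAB: M = length(Y);
    return X, Y, M
```

Usage, with a hypothetical file name: with open("patient01.csv", newline="") as f: X, Y, M = split_columns(load_patient_matrix(f)).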

The ANN model is trained on the training set; during this phase, only the training data are used to optimise the parameters. The model's parameters are the bias values and the weights of the connections between neurons. The network is exposed to the training data in order to determine the optimal values of these parameters.

In the validation phase, the model's performance is assessed on data it has not been trained on, rather than on the full data set. The hyperparameters, such as the model's architecture and internal settings, are tuned to minimise the error on the validation set. These internal settings may, for example, control regularisation; unlike the weights, they are not learned from the input-output pairs. Examples of hyperparameters are the number of neurons in the hidden layer and the total number of hidden layers. This procedure establishes the optimal hyperparameter values.

After the best hyperparameter values have been found, a held-out portion of the data is used as a test set to see how the model performs on unseen data. The validation set cannot be reused for this purpose because it has already been used to tune the hyperparameters; the test set therefore provides the estimate of the model's accuracy.

The data-division properties of the network object net are set by specifying the percentage of the data set used for training, validation, and testing: the training ratio is 75%, the validation ratio is 15%, and the test ratio is 10%.
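A random 75/15/10 division of the M observations can be sketched as below. It is analogous to the random data division MATLAB applies to fitnet networks (configured through net.divideParam.trainRatio, valRatio, and testRatio), but the function divide_rand, its fixed seed, and its rounding are assumptions and may not reproduce MATLAB's exact index sets.

```python
import random
from typing import List, Tuple


def divide_rand(m: int, train_ratio: float = 0.75, val_ratio: float = 0.15,
                test_ratio: float = 0.10, seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Randomly split observation indices 0..m-1 into train/validation/test sets."""
    if abs(train_ratio + val_ratio + test_ratio - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    idx = list(range(m))
    random.Random(seed).shuffle(idx)       # reproducible shuffle
    n_train = round(train_ratio * m)
    n_val = round(val_ratio * m)
    train = sorted(idx[:n_train])
    val = sorted(idx[n_train:n_train + n_val])
    test = sorted(idx[n_train + n_val:])   # the remainder goes to the test set
    return train, val, test
```

With M = 375, this gives 281 training, 56 validation, and 38 test observations.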

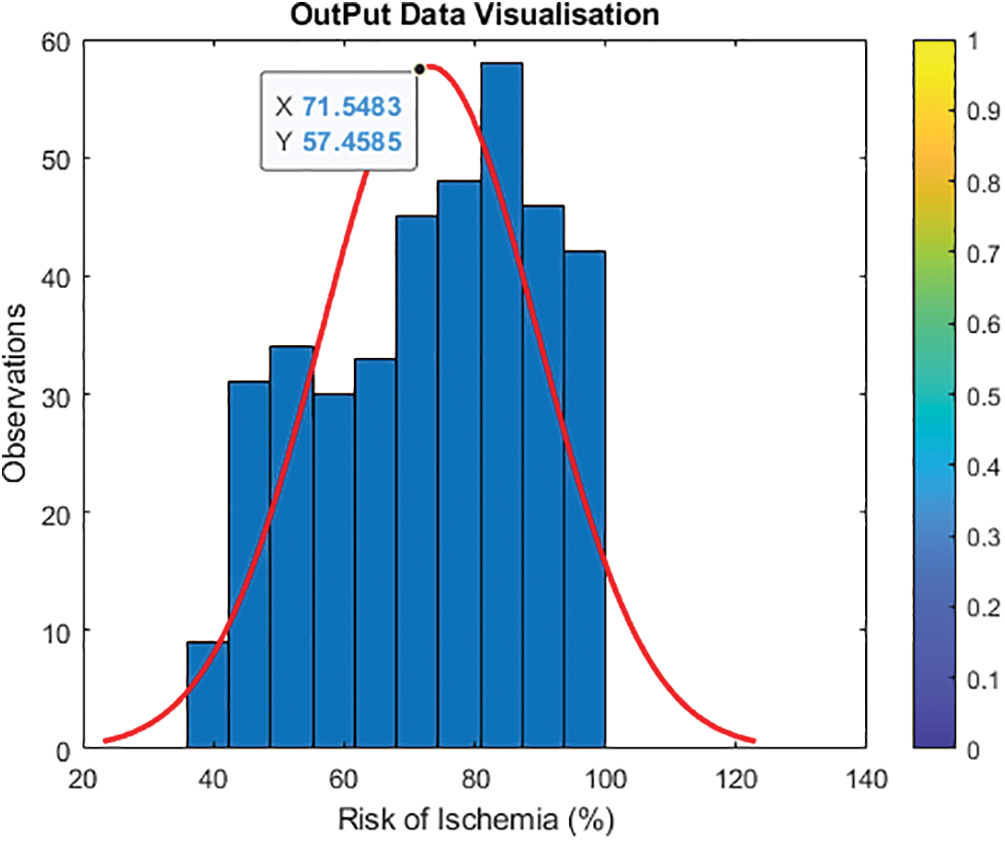

4.1 Output and Normalized Output Data Visualization

Fig. 5 shows the initial output data, with values ranging from 0 to 100. To avoid most output values sitting at zero with only a few at higher values, the output is transformed. Because the logarithm of zero is undefined, one is added to the output before taking the logarithm, giving a new output variable Y2 = log(1 + Y). Fig. 6 shows the resulting distribution, plotted with histogram(Y2, 10).

Figure 5: Visualization of the initial output data

Figure 6: Updated visualization of the output

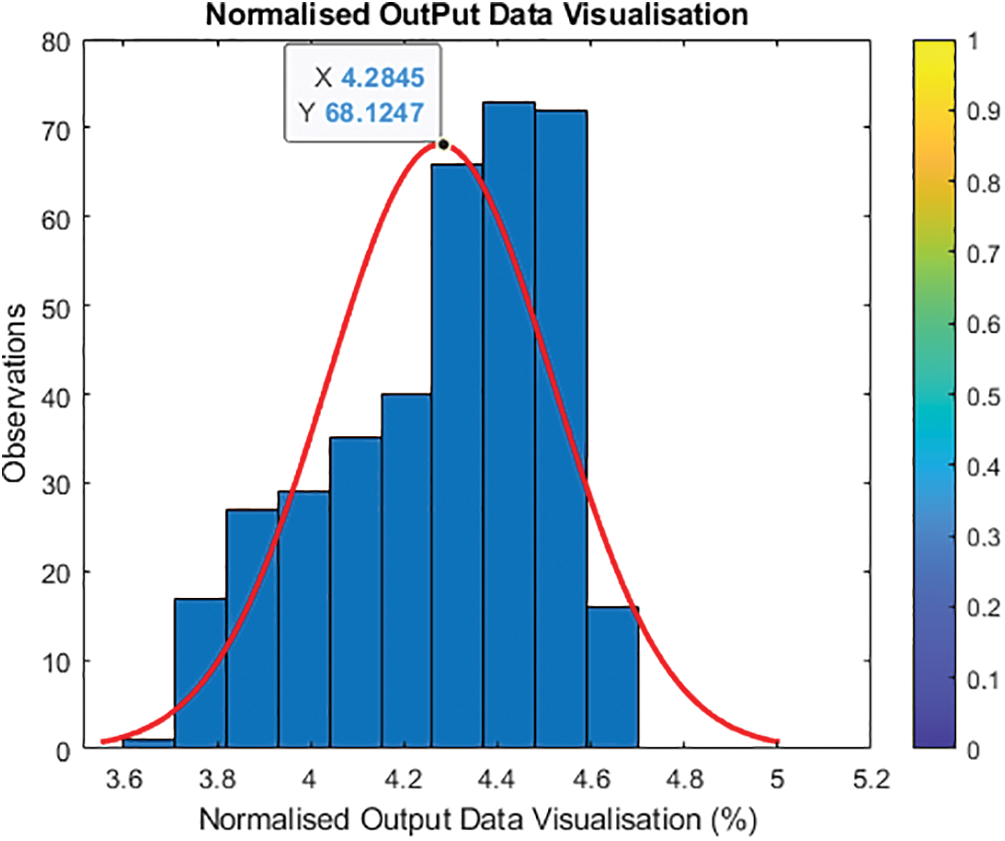

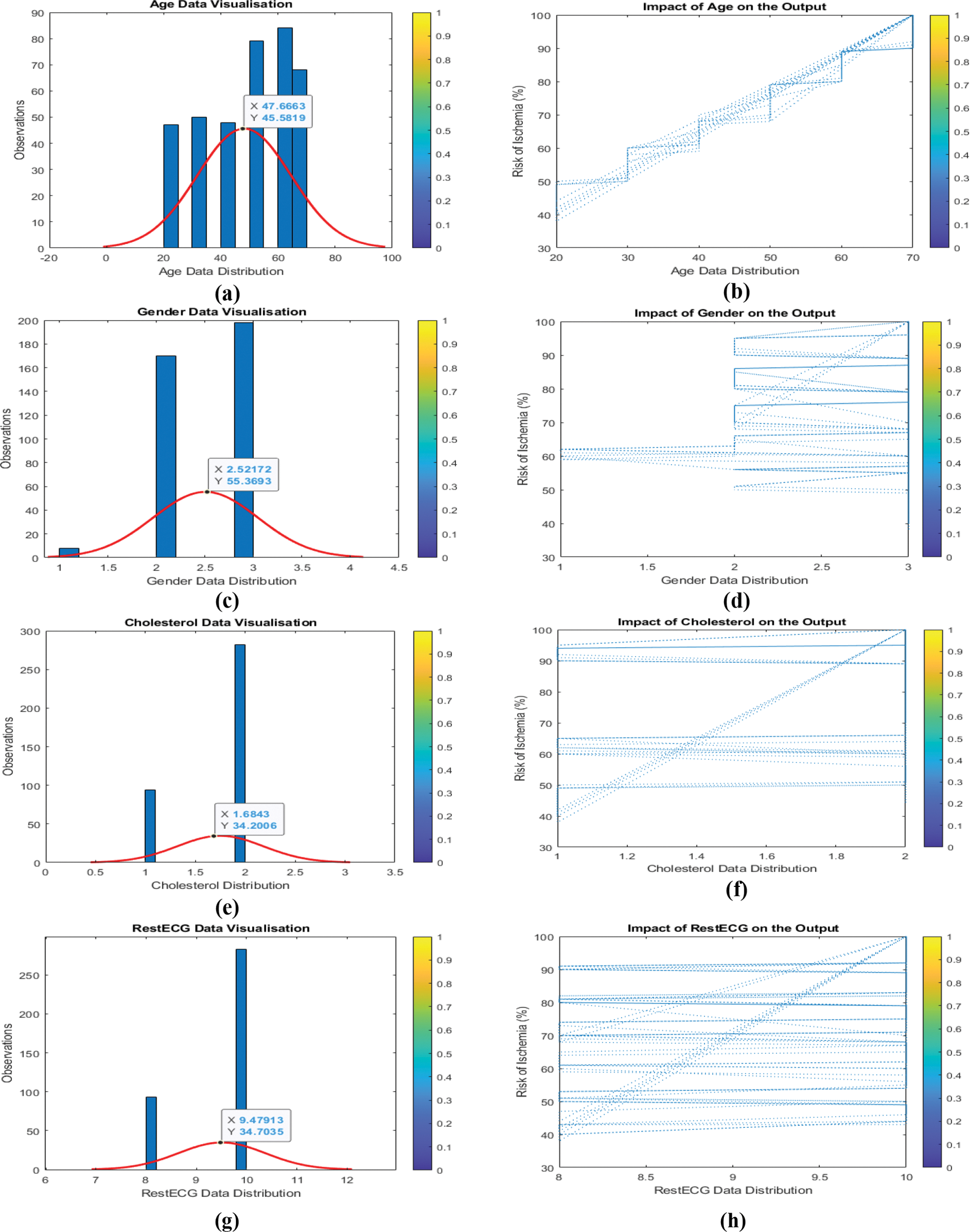

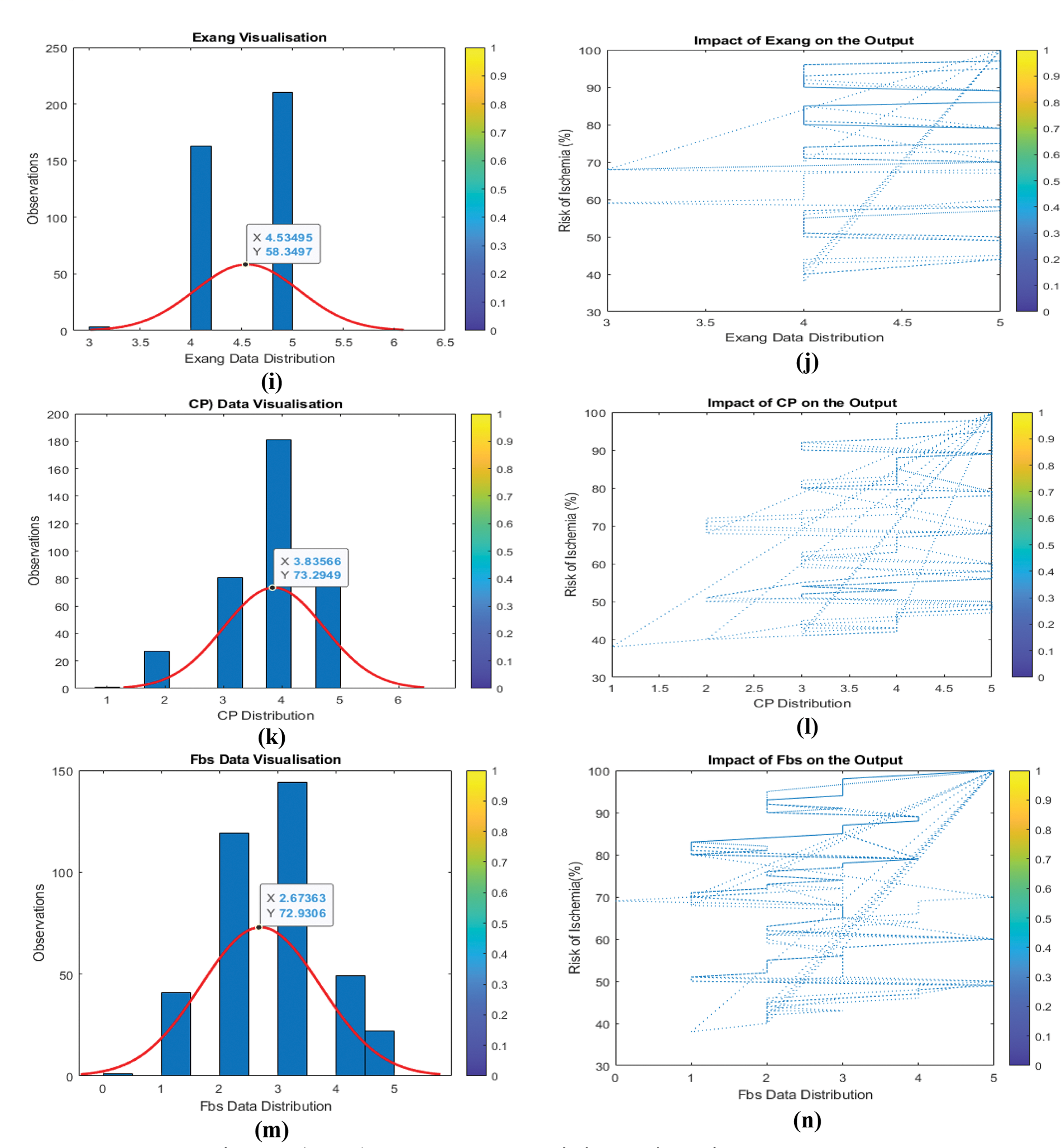
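The output transformation and the 10-bin histogram can be reproduced with the sketch below. math.log1p computes log(1 + Y) accurately even for small Y. histogram_counts is a hypothetical helper that uses equal-width bins between the minimum and maximum, so its bin edges may differ from those chosen by MATLAB's histogram.

```python
import math
from typing import List, Sequence


def log_transform(y: Sequence[float]) -> List[float]:
    # Y2 = log(1 + Y); log1p is exact for Y = 0 and accurate for small Y.
    return [math.log1p(v) for v in y]


def histogram_counts(values: Sequence[float], bins: int = 10) -> List[int]:
    """Count values in equal-width bins spanning [min, max]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # guard against a constant input
    counts = [0] * bins
    for v in values:
        k = min(int((v - lo) / width), bins - 1)  # put the maximum in the last bin
        counts[k] += 1
    return counts
```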

4.2 Normalizing the Input Variables and Their Relationship to the Output

To normalize the input variables, a loop over the inputs subtracts each input's minimum value, so that the input starts at zero, and divides the result by the input range (maximum value minus minimum value). The normalized inputs are stored as X2. The output did not need further normalization, since it had already been transformed into Y2. The input variables, however, must be normalized to fall between 0 and 1, so the new normalization for each input i is X2(:, i) = (X(:, i) - min(X(:, i))) / (max(X(:, i)) - min(X(:, i))). Linear regression is not suitable here because the input parameters do not map linearly onto the output variable. Further features could be added manually; instead, the Artificial Neural Network (ANN) is left to build them, combining what is needed into hidden-layer features and discovering a non-linear mapping between input and output.
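The min-max normalization described above can be written as follows. This is a Python sketch with X stored as a list of rows; mapping a constant column to zero is an added assumption to avoid division by zero.

```python
from typing import List, Sequence


def min_max_normalize(X: Sequence[Sequence[float]]) -> List[List[float]]:
    """Scale each column of X to [0, 1]: (x - min) / (max - min)."""
    n_cols = len(X[0])
    mins = [min(row[j] for row in X) for j in range(n_cols)]
    maxs = [max(row[j] for row in X) for j in range(n_cols)]
    X2 = []
    for row in X:
        X2.append([
            (row[j] - mins[j]) / (maxs[j] - mins[j]) if maxs[j] > mins[j] else 0.0
            for j in range(n_cols)  # constant columns are mapped to 0 (assumption)
        ])
    return X2
```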

Understanding the link between the input data and the output helps identify whether categories of input variables should be modified. Because every input variable changes concurrently, it is difficult to discern the link between the input and output variables. Since the model is trained with several input variables, the relationship between each input variable and the output is examined separately. As seen in Fig. 7 (a, b, c,…,n), the influence of the input parameters on the output is difficult to characterize and quantify. Normalizing all input variables ensures that they carry the same weight and speeds up model training, and the output Y is replaced by the transformed output Y2.

Figure 7: (a to n) Input data and their impact/relation to the output

4.3 Simulated Results for the ANN Model

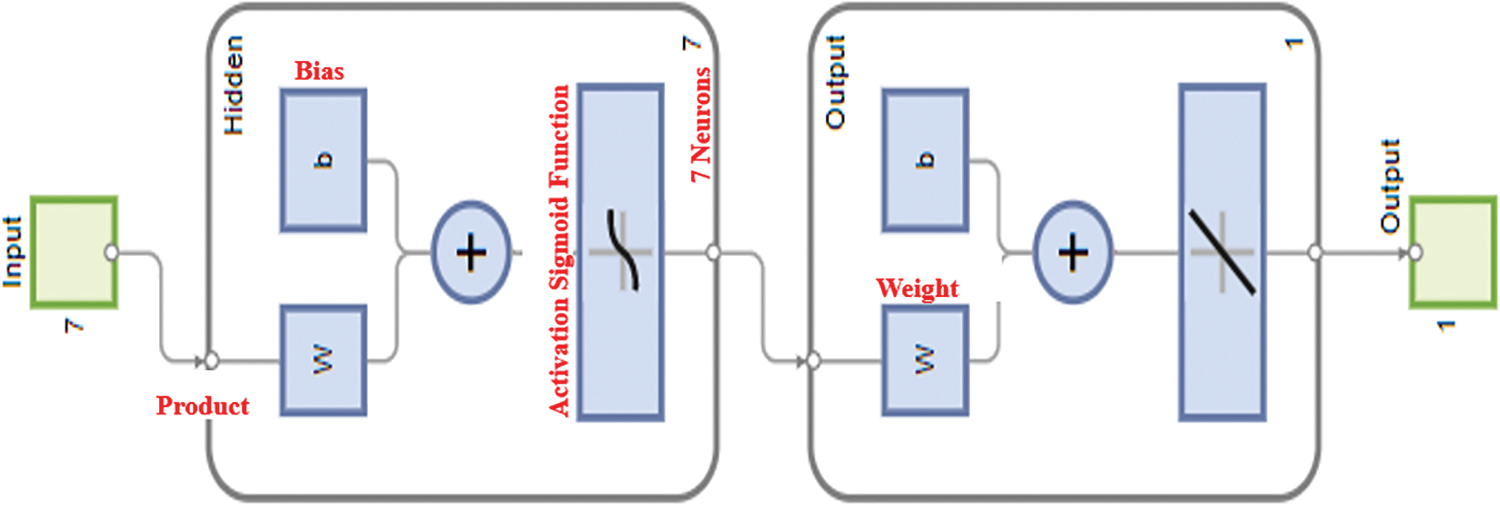

The MATLAB function fitnet can handle multiple inputs at once when training an ANN, and only the number of neurons in the hidden layer needs to be specified. First, a simple neural network (SNN) is trained: the seven input variables are fed into the network created by fitnet to produce a single output, while the hidden layer creates neurons, or new features, that combine all the inputs to predict the model's output. The network is trained with the train function, which takes the network to be trained, the normalized input (X2), and the output. Because fitnet treats each column as one observation, the data set must be arranged with variables in rows and observations in columns, which is done by transposing the matrices. Transposing X2 yields a matrix with seven rows and 375 columns, and the transposed matrices X2' and Y2' are used for training. The graphical interface for creating the ANN model is presented in Fig. 8.

Figure 8: Construction of the ANN model with seven variables input and one output
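The sketch below trains a network with the same shape as the one described: one hidden layer of tanh units (the transfer function fitnet uses by default) and a linear output. It is not fitnet: it substitutes plain full-batch gradient descent for MATLAB's default Levenberg-Marquardt training, and the learning rate, epoch count, and initialisation are illustrative assumptions. Samples are stored as rows here, so the transpose MATLAB needs is not required.

```python
import math
import random
from typing import Callable, Sequence


def train_one_hidden_layer(X: Sequence[Sequence[float]], y: Sequence[float],
                           hidden: int = 7, epochs: int = 2000,
                           lr: float = 0.05, seed: int = 1) -> Callable[[Sequence[float]], float]:
    """Fit a tanh-hidden, linear-output network by full-batch gradient descent."""
    rng = random.Random(seed)
    n_in, m = len(X[0]), len(X)
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0

    def hidden_layer(x):
        return [math.tanh(sum(w * xi for w, xi in zip(W1[k], x)) + b1[k])
                for k in range(hidden)]

    for _ in range(epochs):
        gW1 = [[0.0] * n_in for _ in range(hidden)]
        gb1, gW2, gb2 = [0.0] * hidden, [0.0] * hidden, 0.0
        for x, t in zip(X, y):
            h = hidden_layer(x)
            err = sum(w * hk for w, hk in zip(W2, h)) + b2 - t  # dLoss/dOutput for 0.5*err^2
            gb2 += err
            for k in range(hidden):
                gW2[k] += err * h[k]
                dz = err * W2[k] * (1.0 - h[k] ** 2)  # back-propagate through tanh
                gb1[k] += dz
                for j in range(n_in):
                    gW1[k][j] += dz * x[j]
        # Gradient step using the mean gradient over all samples.
        b2 -= lr * gb2 / m
        for k in range(hidden):
            W2[k] -= lr * gW2[k] / m
            b1[k] -= lr * gb1[k] / m
            for j in range(n_in):
                W1[k][j] -= lr * gW1[k][j] / m

    def predict(x: Sequence[float]) -> float:
        return sum(w * hk for w, hk in zip(W2, hidden_layer(x))) + b2

    return predict
```

The default of seven hidden units matches the architecture the text later settles on; in practice, the inputs would be the normalized X2 rows and the targets the transformed Y2 values.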

The ANN has now been developed and trained, and it is ready to accept input values and produce a predicted output. Its effectiveness is evaluated by comparing the ANN's predictions with the actual output values for the three sets: training, validation, and test. Only the observations belonging to the training set, the validation set, and the test set, respectively, are examined. Calling the trained network net on the feature values of the training set returns the model's predicted Y values for that set. Here, 75% of the data form the training set (Xt), 15% the validation set, and 10% the test set. The results are encouraging: the training error is only about 0.01 lower than the validation error, which in turn is close to the test error. Because these values are so close together, the model is not overfitting. The training error is RMSE = 0.1215 (%), and the validation error is RMSE = 0.1313 (%). Since the training error is very close to the validation error, the model performs with high accuracy and is not overfitted; its predictions are within about plus or minus 0.1%.
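The per-set errors can be computed as below. This is a sketch in which splits maps each set name to its observation indices, for example from a random 75/15/10 division. Comparing the training and validation values, such as the 0.1215 and 0.1313 reported above, is the overfitting check described in the text.

```python
import math
from typing import Dict, List, Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error between true and predicted values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def split_rmse(y_true: Sequence[float], y_pred: Sequence[float],
               splits: Dict[str, List[int]]) -> Dict[str, float]:
    """RMSE restricted to the observations of each named set."""
    return {name: rmse([y_true[i] for i in idx], [y_pred[i] for i in idx])
            for name, idx in splits.items()}
```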

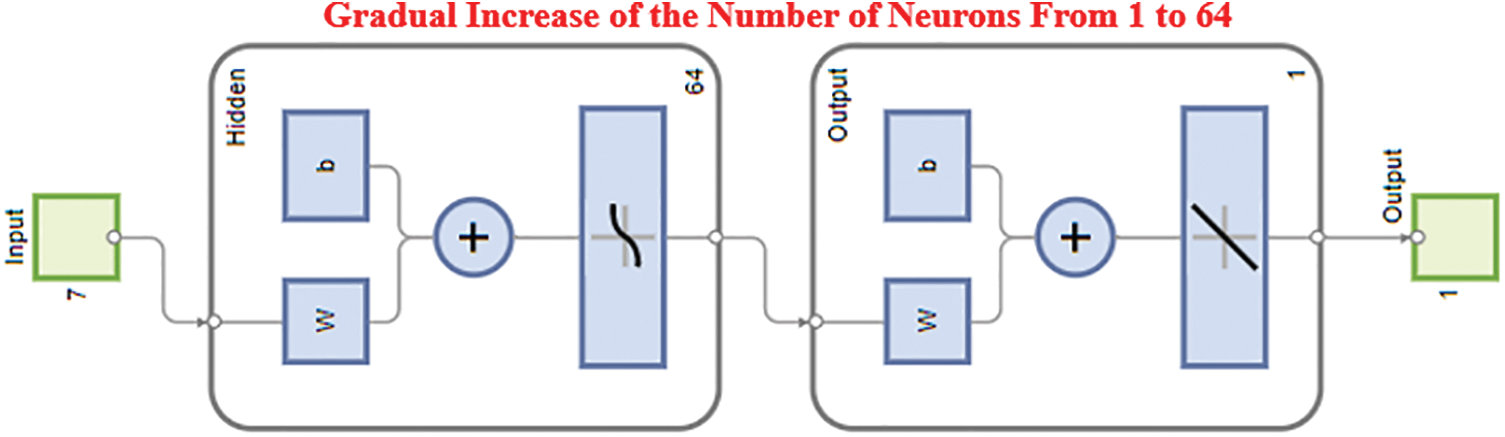

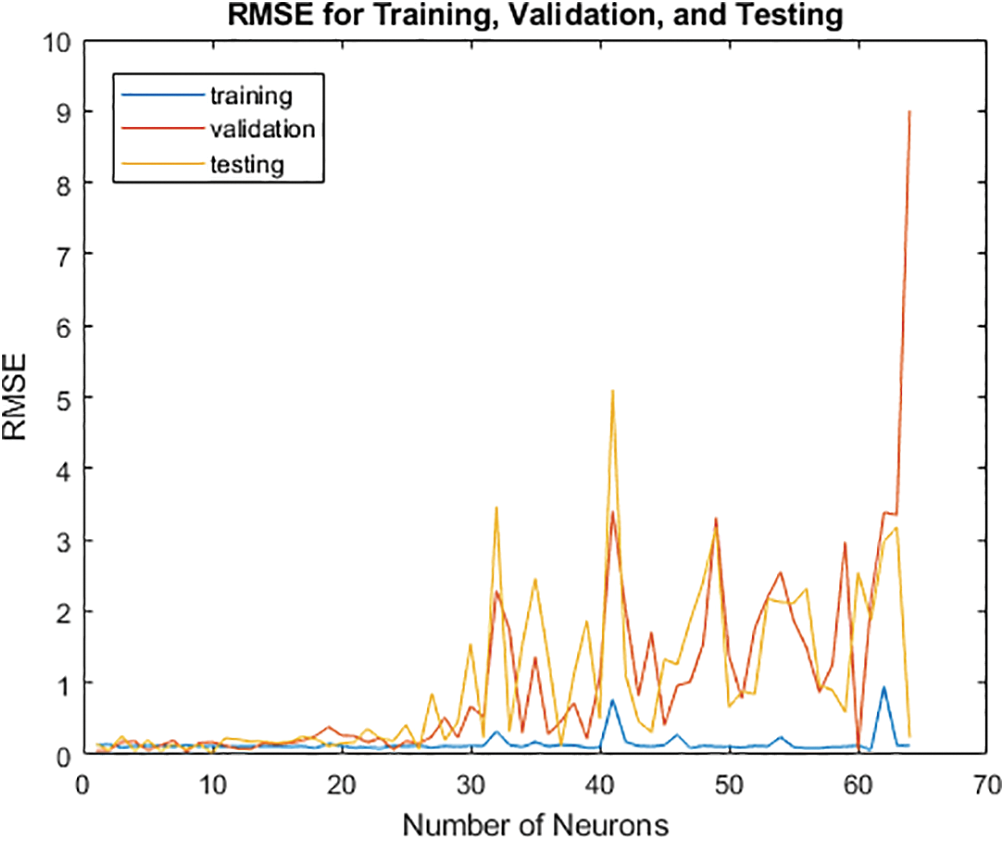

4.3.3 Optimising the Number of Neurons in the Hidden Layer

The model is trained in a loop with a varying number of neurons, and the validation Root Mean Square Error (RMSE) is studied as a function of the number of neurons. This method selects the number of neurons for the hidden layer that gives the lowest validation RMSE. Depending on the scenario, anything from one to sixty neurons may be selected. This time, the ANN is trained with the number of neurons i varying from 1 to 64. The network is created with the specified division into training, validation, and test sets (75%, 15%, and 10%), and the RMSE for each value of i is stored for subsequent visualisation. The code that follows shows how the number of neurons in the hidden layer is increased. As shown in Fig. 9, the number of neurons in the hidden layer increases continuously until it reaches 64. More information about this is provided below.

Figure 9: Gradual increase of the number of neurons from 1–60
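The neuron sweep amounts to evaluating the validation RMSE for each candidate size and keeping the minimum. In this sketch, train_and_validate is a hypothetical callback that would train a network with n hidden neurons and return its validation RMSE. Because each training run starts from random weights, averaging several runs per size is a reasonable refinement.

```python
from typing import Callable, Dict, Iterable, Tuple


def select_hidden_size(train_and_validate: Callable[[int], float],
                       sizes: Iterable[int]) -> Tuple[int, Dict[int, float]]:
    """Return the size with the lowest validation RMSE, plus all RMSEs for plotting."""
    val_rmse = {n: train_and_validate(n) for n in sizes}
    best = min(val_rmse, key=val_rmse.get)  # first size wins on ties
    return best, val_rmse
```

For example, with a toy stand-in callback lambda n: (n - 7) ** 2 + 0.1 and sizes range(1, 65), the function returns 7 together with all 64 RMSE values.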

4.3.4 ANN for Selecting the Optimal Number of Neurons

Fig. 10 shows how the optimal number of neurons for the hidden layer is determined, including the characteristics of the under- and over-fitted regions. From zero to twenty neurons, the model is under-fitted: it is excessively simple, owing to too few hidden neurons, and therefore has a significant bias. As its complexity rises from 45 to 60 neurons, the model's variance increases and its ability to generalise to unseen validation and test data decreases. Nevertheless, the model remains consistent across the change in the number of neurons and fits the training data quite well.

Figure 10: Selection of the optimal number of neurons

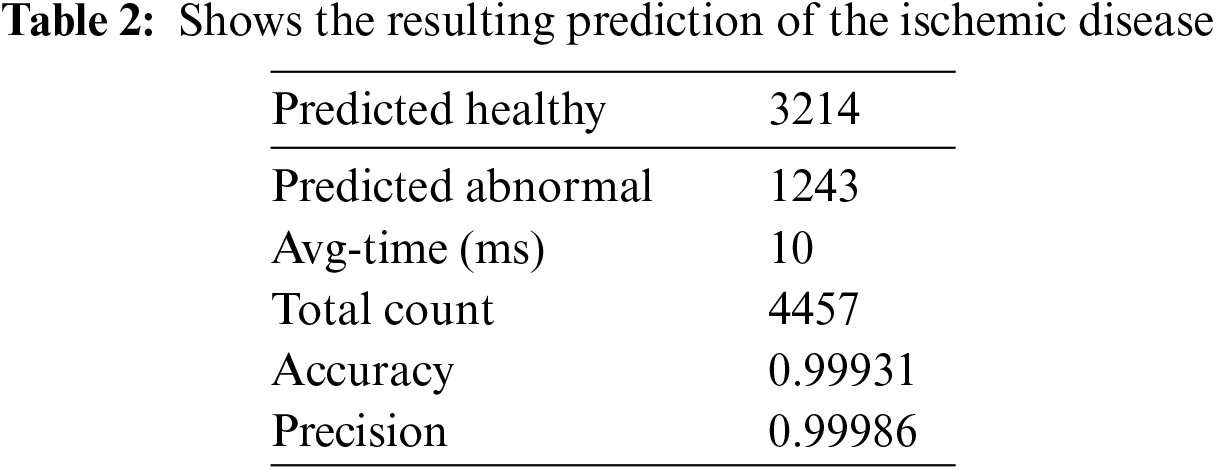

4.4 DT with ANN Model’s Prediction

The model's ideal architecture is identified from the result above, at the point where the validation RMSE is minimal. The model is then retrained by returning to the ANN training step and using seven, rather than ten, neurons in the hidden layer. The model must be trained repeatedly to produce more reliable results; the new performance is given in Table 2.

4.5 Visualization and Validation of Accuracy for the HCT

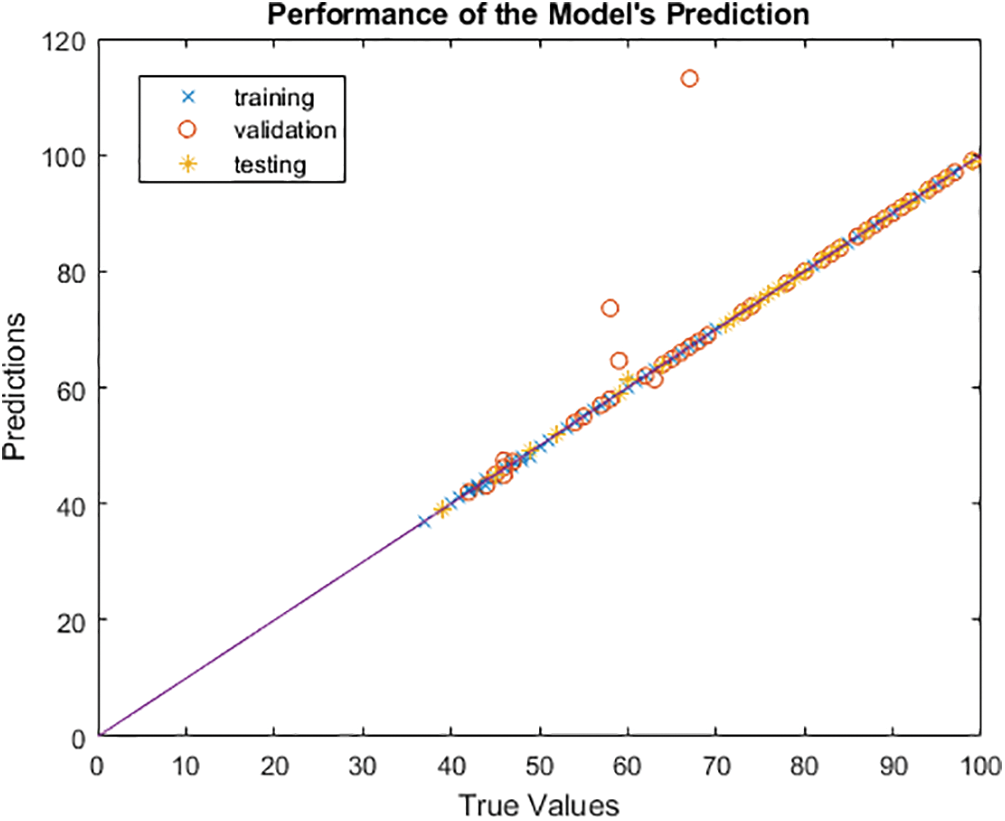

The model's accuracy is determined by how closely the predicted output values match the true values. Because the seven input parameters make the model multidimensional, the output cannot be shown as a function of the input in this case. Instead, plotting the predicted output against the actual output shows how the model performs. Using plot(YTrainTrue, YTrain, 'x'), the predicted output is displayed as a function of the actual output, with the true output on the X axis and the predicted value on the Y axis. The graph shows that the model's predicted values closely match the true values, demonstrating that the model is well suited to the prediction task. Fig. 11 depicts the near-perfect linear relationship between the predicted and actual values, which is evaluated next by linear regression.

Figure 11: Visualization and validation of the accuracy of HCT

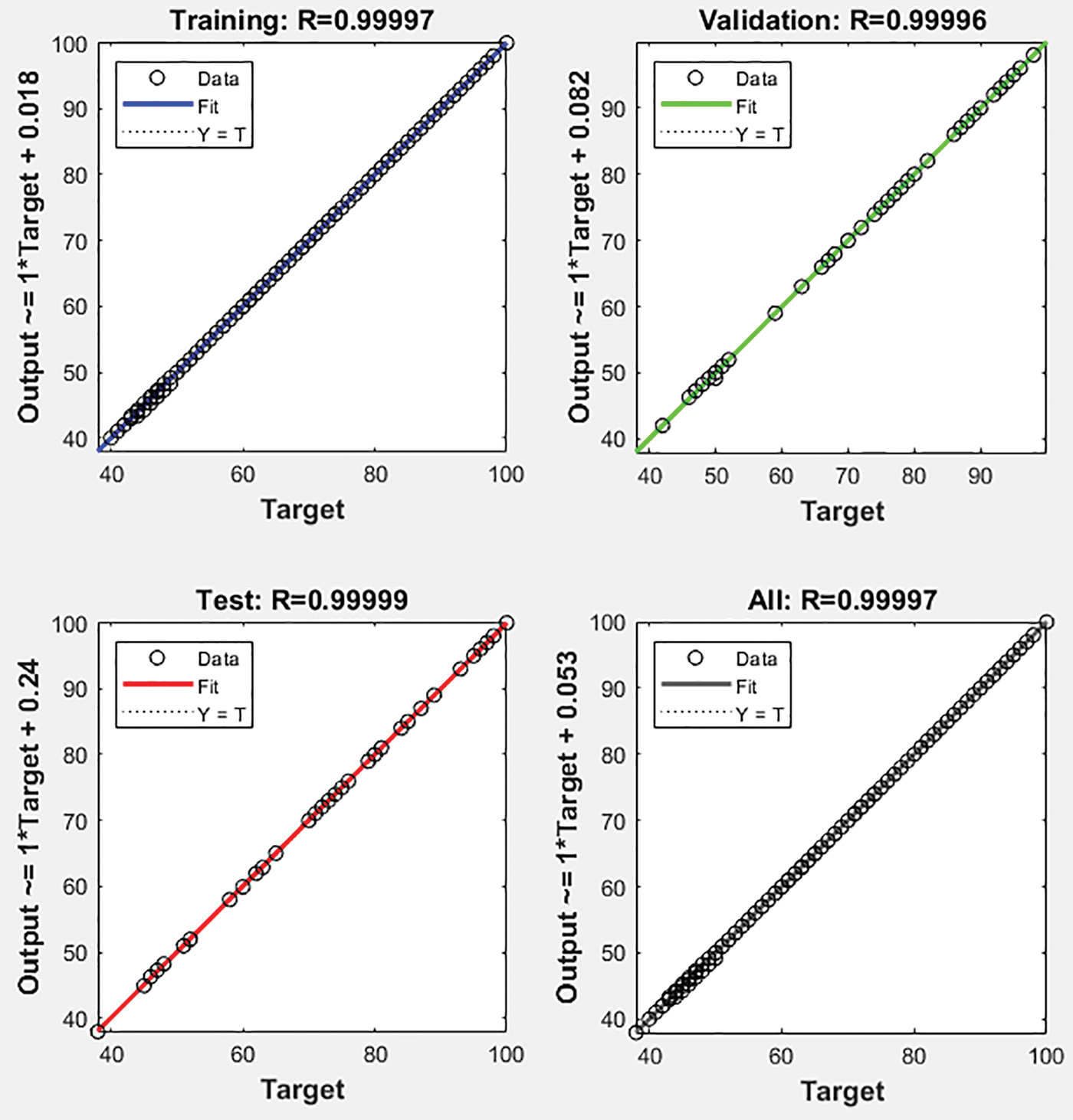

Linear regression between the predicted and actual outputs is a quick and effective statistical technique for evaluating how well a complex non-linear model fits the data. As shown in Fig. 12, the regression between the model's outputs and the targets indicates an excellent fit across the training, validation and test sets, with R = 0.99986, demonstrating the model's high reliability.

Figure 12: Regression of the accuracy of the proposed HCT model
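The quantities reported in a regression plot, namely the slope and intercept of the fitted line Output ≈ slope × Target + intercept and the correlation coefficient R, can be computed as below. This is a Python sketch of the standard least-squares formulas, not MATLAB's plotregression itself.

```python
import math
from typing import Sequence, Tuple


def regression_fit(targets: Sequence[float], outputs: Sequence[float]) -> Tuple[float, float, float]:
    """Least-squares line outputs ~ slope * targets + intercept, and Pearson R."""
    n = len(targets)
    mt = sum(targets) / n
    mo = sum(outputs) / n
    sxx = sum((t - mt) ** 2 for t in targets)
    syy = sum((o - mo) ** 2 for o in outputs)
    sxy = sum((t - mt) * (o - mo) for t, o in zip(targets, outputs))
    slope = sxy / sxx
    intercept = mo - slope * mt
    r = sxy / math.sqrt(sxx * syy)  # R = 1 means a perfect linear match
    return slope, intercept, r
```

A perfectly trained model would give slope 1, intercept 0, and R = 1; the R = 0.99986 reported above is close to that ideal.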

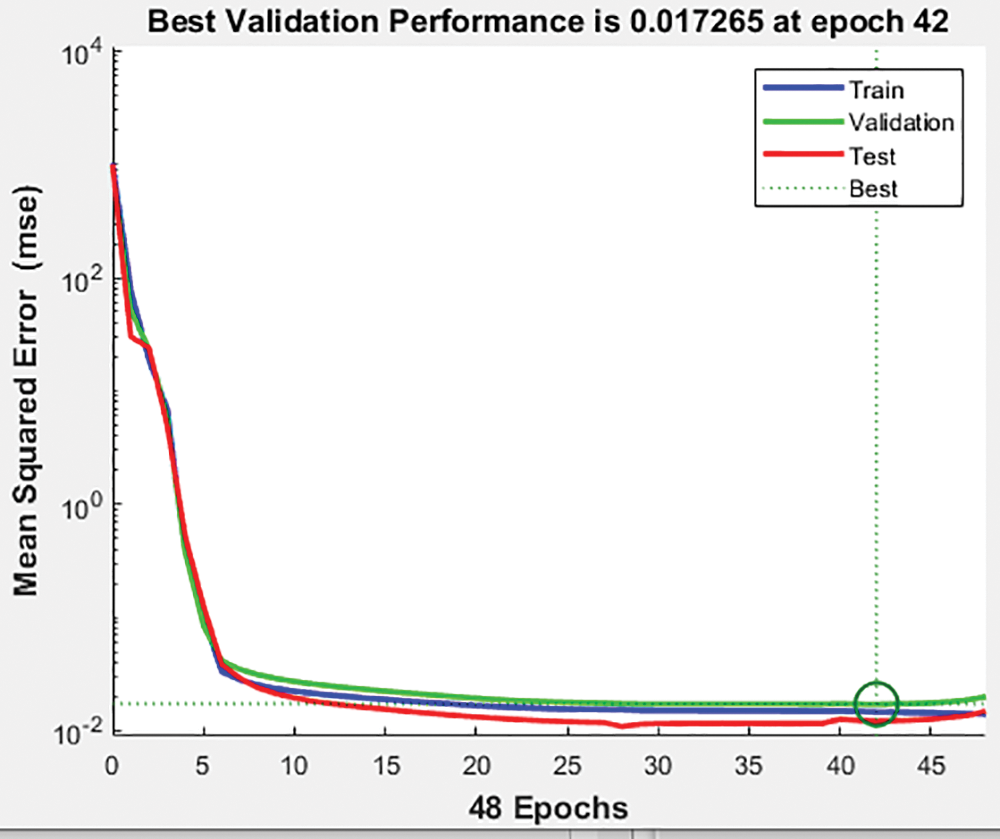

As shown in Fig. 13, the best performance of the ANN model during training, 0.01726, was reached at epoch three. The best fit for the validation and test data lies at the point marked by the green circle; the mean squared error, plotted on a logarithmic Y axis reaching up to 10^3, is shown against six epochs on the X axis.

Figure 13: Validation of the performance of the proposed HCT model
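The best-epoch marker in the performance plot follows from the early-stopping rule: training stops once the validation error has failed to improve for a fixed number of consecutive epochs (MATLAB's trainParam.max_fail, 6 by default). The sketch below applies that rule to a list of per-epoch validation errors; the function name and the 0-based epoch convention are assumptions.

```python
from typing import Sequence, Tuple


def best_validation_epoch(val_errors: Sequence[float], max_fail: int = 6) -> Tuple[int, float, int]:
    """Return (best_epoch, best_error, stop_epoch) under an early-stopping rule."""
    best_epoch, best_err = 0, val_errors[0]
    fails = 0
    for epoch, err in enumerate(val_errors):
        if err < best_err:
            best_epoch, best_err, fails = epoch, err, 0  # new best: reset the counter
        elif epoch > 0:
            fails += 1
            if fails >= max_fail:
                return best_epoch, best_err, epoch  # validation stop
    return best_epoch, best_err, len(val_errors) - 1
```

With hypothetical errors [1.0, 0.5, 0.2, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8], the best epoch is 3 and training stops at epoch 9.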

An ANN, an algorithm that learns from data, enables HCT technology to tailor medical care precisely to each patient. The model was created with the capability of gathering both real-time and historical patient data. The findings will guide medical professionals, healthcare organisations, nurses, and patients in using simulation technologies to diagnose, treat, and anticipate heart disease, and will aid in developing models that can be used to diagnose further individuals. The current focus of the Hybrid Cardiac Twin is on locating problems and finding solutions to them to assist patients. This HCT capability is one approach to solving the problem; in the future, the platform will help minimise the risk factors for ischemic heart disease and stroke and so contribute to preventing these conditions. We proposed a Hybrid Cardiac Twin (HCT) framework together with an Artificial Neural Network (ANN) to address real-time monitoring with high accuracy in decision-making and prediction of future crises. A case study of cardiac ischemia was tested to demonstrate the feasibility of HCT, with Training, Validation, and Testing accuracies of 0.99997, 0.99954, and 0.9993, respectively, and an overall accuracy of 0.99986. The proposed HCT framework and ANN model can capture historical and real-time data and manage and predict current and future cardiac conditions.

Acknowledgement: The authors would like to thank the Physikalisch Technische Bundesanstalt (PTB) Diagnostic ECG Database for making the data available on its website.

Funding Statement: The authors did not receive funding for this research study.

Author Contributions: The authors of this research paper have made equal contributions to the study from inception to completion. Each author has played a significant role in conceiving and designing the research, collecting and analysing data, interpreting results, and writing and revising the manuscript. Specifically, each author has collaboratively formulated the research objectives and hypotheses, participated equally in data collection, either through experiments, surveys, or data acquisition, conducted statistical analyses and interpreted the findings jointly, contributed equally to the drafting and revision of the manuscript, including the literature review and discussion sections, and reviewed and approved the final version of the manuscript for submission. We affirm that all authors have made substantial and equal contributions to this research and are in full agreement with the content of the manuscript.

Availability of Data and Materials: The data and materials supporting the findings of this study are available at PTB Diagnostic ECG Database v1.0.0 (physionet.org). We are committed to promoting transparency and facilitating further scientific inquiry, and we will make every effort to provide the necessary information and resources to interested parties in a timely manner.

Conflicts of Interest: The authors affirm that they have no conflicts of interest related to this research. We declare that we do not have any financial, personal, or professional interests that could potentially influence or compromise our impartiality, objectivity, or decision-making in any way. We are committed to acting in the best interests of all parties involved and upholding the highest standards of integrity and ethical conduct. Should any potential conflict of interest arise in the future, we pledge to disclose it promptly and take appropriate steps to mitigate or resolve the conflict to ensure transparency and fairness in all dealings. Our primary goal is to ensure a fair and unbiased outcome for all parties involved in this matter.

References

1. The top 10 causes of death, 2020. [Online]. Available: https://www.who.int/news-room/fact-sheets/detail/the-top-10-causes-of-death (accessed on 08/09/2022)

2. G. Coorey, G. A. Figtree, D. F. Fletcher and J. Redfern, “The health digital twin: Advancing precision cardiovascular medicine,” Nature Reviews Cardiology, vol. 18, no. 12, pp. 803–804, 2021. https://doi.org/10.1038/s41569-021-00630-4

3. Myocardial ischemia, Mayo Clinic Press. [Online]. Available: https://www.mayoclinic.org/diseases-conditions/myocardial-ischemia/symptoms-causes/syc-20375417 (accessed on 15/09/2023)

4. Siemens gives some details of “digital twin” work with SpaceX, Maserati, 2016. [Online]. Available: https://www.sme.org/technologies/articles/2016/may/siemens-gives-some-details-of-digital-twin-work-with-spacex-maserati/

5. Y. Liu, L. Zhang, Y. Yang, L. Zhou, L. Ren et al., “A novel cloud-based framework for the elderly healthcare services using digital twin,” IEEE Access, vol. 7, pp. 49088–49101, 2019. https://doi.org/10.1109/ACCESS.2019.2909828

6. M. Joordens and M. Jamshidi, “On the development of robot fish swarms in virtual reality with digital twins,” in 2018 13th Annual Conf. on System of Systems Engineering (SoSE), Paris, France, pp. 411–416, 2018. https://doi.org/10.1109/SYSOSE.2018.8428748

7. K. Bruynseels, F. S. de Sio and J. van den Hoven, “Digital twins in health care: Ethical implications of an emerging engineering paradigm,” Frontiers in Genetics, vol. 9, pp. 31, 2018. https://doi.org/10.3389/fgene.2018.00031

8. P. Cerrato and J. Halamka, “Reinventing clinical decision support: Data analytics, artificial intelligence, and diagnostic reasoning,” Reinventing Clinical Decision Support, 2020. https://doi.org/10.1201/9781003034339

9. M. Viceconti, A. Henney and E. Morley-Fletcher, “In silico clinical trials: How computer simulation will transform the biomedical industry,” International Journal of Clinical Trials, vol. 3, no. 2, pp. 37, 2016. https://doi.org/10.18203/2349-3259.IJCT20161408

10. W. P. Segars, A. I. Veress, G. M. Sturgeon and E. Samei, “Incorporation of the living heart model into the 4-D XCAT phantom for cardiac imaging research,” IEEE Transactions on Radiation and Plasma Medical Sciences, vol. 3, no. 1, pp. 54–60, 2018. https://doi.org/10.1109/TRPMS.2018.2823060

11. S. N. Makarov, G. M. Noetscher, J. Yanamadala, M. W. Piazza, S. Louie et al., “Virtual human models for electromagnetic studies and their applications,” IEEE Reviews in Biomedical Engineering, vol. 10, pp. 95–121, 2017. https://doi.org/10.1109/RBME.2017.2722420

12. Y. Feng, J. Zhao, C. Kleinstreuer, Q. Wang, J. Wang et al., “An in silico inter-subject variability study of extra-thoracic morphology effects on inhaled particle transport and deposition,” Journal of Aerosol Science, vol. 123, pp. 185–207, 2018. https://doi.org/10.1016/j.jaerosci.2018.05.010

13. X. Chen, Y. Feng, W. Zhong, B. Sun and F. Tao, “Numerical investigation of particle deposition in a triple bifurcation airway due to gravitational sedimentation and inertial impaction,” Powder Technology, vol. 323, pp. 284–293, 2018. https://doi.org/10.1016/J.POWTEC.2017.09.050

14. Y. Feng, J. Zhao, X. Chen and J. Lin, “An in silico subject-variability study of upper airway morphological influence on the airflow regime in a tracheobronchial tree,” Bioengineering, vol. 4, no. 4, pp. 90, 2017. https://doi.org/10.3390/BIOENGINEERING4040090

15. X. Chen, Y. Feng, W. Zhong and C. Kleinstreuer, “Numerical investigation of the interaction, transport and deposition of multicomponent droplets in a simple mouth-throat model,” Journal of Aerosol Science, vol. 105, pp. 108–127, 2017. https://doi.org/10.1016/j.jaerosci.2016.12.001

16. T. Erol, A. F. Mendi and D. Dogan, “The digital twin revolution in healthcare,” in 2020 4th Int. Symp. on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Istanbul, Turkey, pp. 1–7, 2020. https://doi.org/10.1109/ISMSIT50672.2020.9255249

17. J. Zhang, L. Li, G. Lin, D. Fang, Y. Tai et al., “Cyber resilience in healthcare digital twin on lung cancer,” IEEE Access, vol. 8, pp. 201900–201913, 2020. https://doi.org/10.1109/ACCESS.2020.3034324

18. N. Ayache, “AI & Healthcare: Towards a digital twin?” in 5th Int. Symp. on Multidisciplinary Computational Anatomy, Fukuoka, Japan, pp. hal-02063234, 2019.

19. G. Shukla and S. Gochhait, “Cyber security trend analysis using web of science: A bibliometric analysis,” European Journal of Molecular & Clinical Medicine, vol. 7, no. 6, 2020.

20. M. Rubart and D. P. Zipes, “Mechanisms of sudden cardiac death,” Journal of Clinical Investigation, vol. 115, no. 9, pp. 2305–2315, 2005. https://doi.org/10.1172/JCI26381

21. D. P. Zipes and H. J. J. Wellens, “Sudden cardiac death,” in Professor Hein J.J. Wellens, pp. 621–645, Springer, Dordrecht, 2000. https://doi.org/10.1007/978-94-011-4110-9_60

22. E. Carmeliet, “Cardiac ionic currents and acute ischemia: From channels to arrhythmias,” Physiological Reviews, vol. 79, no. 3, pp. 917–1017, 1999. https://doi.org/10.1152/PHYSREV.1999.79.3.917

23. A. S. Harris, A. Bisteni, R. A. Russell, J. C. Brigham and J. E. Firestone, “Excitatory factors in ventricular tachycardia resulting from myocardial ischemia; potassium a major excitant,” Science, vol. 119, no. 3085, pp. 200–203, 1954. https://doi.org/10.1126/SCIENCE.119.3085.200

24. A. L. Wit and M. J. Janse, “Experimental models of ventricular tachycardia and fibrillation caused by ischemia and infarction,” Circulation, vol. 85, no. 1 Suppl, pp. I32–I42, 1992. https://europepmc.org/article/med/1728503 (accessed on 08/09/2022)

25. E. Boersma, A. C. P. Maas, J. W. Deckers and M. L. Simoons, “Early thrombolytic treatment in acute myocardial infarction: Reappraisal of the golden hour,” The Lancet, vol. 348, no. 9030, pp. 771–775, 1996. https://doi.org/10.1016/S0140-6736(96)02514-7

26. M. Satyanarayanan, “The emergence of edge computing,” Computer, vol. 50, no. 1, pp. 30–39, 2017. https://doi.org/10.1109/MC.2017.9

27. D. Jones, C. Snider, A. Nassehi, J. Yon and B. Hicks, “Characterising the digital twin: A systematic literature review,” CIRP Journal of Manufacturing Science and Technology, vol. 29, pp. 36–52, 2020. https://doi.org/10.1016/J.CIRPJ.2020.02.002

28. F. Tao, F. Sui, A. Liu, Q. Qi, M. Zhang et al., “Digital twin-driven product design framework,” International Journal of Production Research, vol. 57, no. 12, pp. 3935–3953, 2018. https://doi.org/10.1080/00207543.2018.1443229

29. C. Zhuang, J. Liu and H. Xiong, “Digital twin-based smart production management and control framework for the complex product assembly shop-floor,” The International Journal of Advanced Manufacturing Technology, vol. 96, no. 1, pp. 1149–1163, 2018. https://doi.org/10.1007/S00170-018-1617-6

30. S. Boschert and R. Rosen, “Digital twin-the simulation aspect,” in Mechatronic Futures, pp. 59–74, Springer, Cham, 2016. https://doi.org/10.1007/978-3-319-32156-1_5

31. G. N. Schroeder, C. Steinmetz, C. E. Pereira and D. B. Espindola, “Digital twin data modeling with automationML and a communication methodology for data exchange,” IFAC-PapersOnLine, vol. 49, no. 30, pp. 12–17, 2016. https://doi.org/10.1016/J.IFACOL.2016.11.115

32. T. H. J. Uhlemann, C. Lehmann and R. Steinhilper, “The digital twin: Realizing the cyber-physical production system for Industry 4.0,” Procedia CIRP, vol. 61, pp. 335–340, 2017. https://doi.org/10.1016/J.PROCIR.2016.11.152

33. R. Sanders, R. Martin, H. Frumin and M. J. Goldberg, “Data storage and retrieval by implantable pacemakers for diagnostic purposes,” Pacing and Clinical Electrophysiology, vol. 7, no. 6, pp. 1228–1233, 1984. https://doi.org/10.1111/J.1540-8159.1984.TB05688.X

34. Digital twin | Siemens software. [Online]. Available: https://www.plm.automation.siemens.com/global/en/our-story/glossary/digital-twin/24465 (accessed on 17/08/2022)

35. Y. A. Qadri, A. Nauman, Y. Bin Zikria, A. V. Vasilakos and S. W. Kim, “The future of healthcare Internet of Things: A survey of emerging technologies,” IEEE Communications Surveys and Tutorials, vol. 22, no. 2, pp. 1121–1167, 2020. https://doi.org/10.1109/COMST.2020.2973314

36. S. Kohler, “Precision medicine—Moving away from one-size-fits-all,” Quest, vol. 14, no. 3, 2018.

37. S. Kohler, “Personalisierte medizin und globale gesundheit,” Public Health Forum, vol. 25, no. 3, pp. 244–248, 2017. https://doi.org/10.1515/PUBHEF-2017-0032

38. M. M. Rathore, S. Attique Shah, D. Shukla, E. Bentafat and S. Bakiras, “The role of AI, machine learning, and big data in digital twinning: A systematic literature review, challenges, and opportunities,” IEEE Access, vol. 9, pp. 32030–32052, 2021. https://doi.org/10.1109/ACCESS.2021.3060863

39. F. Psarommatis and G. May, “A standardized approach for measuring the performance and flexibility of digital twins,” International Journal of Production Research, vol. 61, no. 20, pp. 6923–6938, 2023. https://doi.org/10.1080/00207543.2022.2139005

40. F. Psarommatis and G. May, “A literature review and design methodology for digital twins in the era of zero defect manufacturing,” International Journal of Production Research, vol. 2023, no. 16, pp. 5723–5743, 2022. https://doi.org/10.1080/00207543.2022.2101960

41. M. Shafto, M. Conroy, R. Doyle, E. Glaessgen, C. Kemp et al., Modeling, Simulation, Information Technology & Processing Roadmap, National Aeronautics and Space Administration, pp. 1–38, 2010.

42. C. Patrone, G. Galli and R. Revetria, “A state of the art of digital twin and simulation supported by data mining in the healthcare sector,” Frontiers in Artificial Intelligence and Applications, vol. 318, pp. 605–615, 2019. https://doi.org/10.3233/FAIA190084

43. M. Grieves, “Digital twin: Manufacturing excellence through virtual factory replication,” A Whitepaper, pp. 9, 2014.

44. W. Xu, J. Cui, L. Li, B. Yao, S. Tian et al., “Digital twin-based industrial cloud robotics: Framework, control approach and implementation,” Journal of Manufacturing Systems, vol. 58, pp. 196–209, 2021. https://doi.org/10.1016/j.jmsy.2020.07.013

45. C. Weber, J. Königsberger, L. Kassner and B. Mitschang, “M2DDM—A maturity model for data-driven manufacturing,” Procedia CIRP, vol. 63, pp. 173–178, 2017. https://doi.org/10.1016/j.procir.2017.03.309

46. C. Zhuang, J. Liu and H. Xiong, “Digital twin-based smart production management and control framework for the complex product assembly shop-floor,” International Journal of Advanced Manufacturing Technology, vol. 96, no. 1–4, pp. 1149–1163, 2018. https://doi.org/10.1007/s00170-018-1617-6

47. J. Wang, L. Ye, R. X. Gao, C. Li and L. Zhang, “Digital twin for rotating machinery fault diagnosis in smart manufacturing,” International Journal of Production Research, vol. 57, no. 12, pp. 3920–3934, 2019. https://doi.org/10.1080/00207543.2018.1552032

48. B. Schleich, N. Anwer, L. Mathieu and S. Wartzack, “Shaping the digital twin for design and production engineering,” CIRP Annals, vol. 66, pp. 141–144, 2017. https://doi.org/10.1016/j.cirp.2017.04.040

49. PTB diagnostic ECG database v1.0.0, 2024. [Online]. Available: https://physionet.org/content/ptbdb/1.0.0/ (accessed on 24/09/2022)


Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
