Open Access

REVIEW

Improvement method for cervical cancer detection: A comparative analysis

1 Faculty of Electrical Engineering & Technology, Universiti Malaysia Perlis, UniCITI Alam Campus, Sungai Chuchuh, Padang Besar, Perlis, 02100, Malaysia

2 Advanced Computing (AdvCOMP), Centre of Excellence, Universiti Malaysia Perlis (UniMAP), Pauh Putra Campus, Arau, Perlis, 02600, Malaysia

3 Faculty of Electronic Engineering & Technology, Universiti Malaysia Perlis, UniCITI Alam Campus, Sungai Chuchuh, Padang Besar, Perlis, 02100, Malaysia

4 Department of Computer Technical Engineering, College of Technical Engineering, The Islamic University, Najaf, 54003, Iraq

5 Department of Pathology, Hospital Tuanku Fauziah, Kangar, Perlis, 02000, Malaysia

6 Medical Instrumentation Techniques Engineering Department, Al-Mustaqbal University College, Babylon, Hillah, 51001, Iraq

* Corresponding Author: WAN AZANI MUSTAFA. Email:

Oncology Research 2021, 29(5), 365-376. https://doi.org/10.32604/or.2022.025897

Received 04 August 2022; Accepted 28 September 2022; Issue published 10 October 2022

Abstract

Cervical cancer is a prevalent and deadly cancer that affects women all over the world. It affects about 0.5 million women annually and results in over 0.3 million fatalities. Diagnosis of this cancer was previously done manually, which could result in false positives or negatives. Researchers are still investigating how to detect cervical cancer automatically and how to evaluate Pap smear images. Hence, this paper reviews several detection methods from previous research. It covers pre-processing, the detection method framework for nucleus detection, and the performance analysis of the selected methods. Four methods based on techniques reviewed from previous studies were run through the experimental procedure using Matlab on the established Herlev dataset. The results show that the highest performance assessment metric values were obtained from Method 1: Thresholding and Trace region boundaries in a binary image, with precision 1.0, sensitivity 98.77%, specificity 98.76%, accuracy 98.77% and PSNR 25.74% for a single type of cell. Meanwhile, the average values were precision 0.99, sensitivity 90.71%, specificity 96.55%, accuracy 92.91% and PSNR 16.22%. The experimental results are then compared to the existing methods from previous studies. They show that the improvement method is able to detect the nucleus of the cell with higher performance assessment values. On the other hand, the majority of current approaches can be used with either a single or a large number of cervical cancer smear images. This study might persuade other researchers to recognize the value of some of the existing detection techniques and offer a strong approach for developing and implementing new solutions.

Cervical cancer is one of the most common gynecologic cancers and one of the most dangerous diseases for women, even though it can be treated if detected early. It forms when cells on the cervix grow abnormally. There is a large volume of published studies utilizing the Pap smear test to detect pre-cancer in the uterine cervix [1,2]. This type of cancer also remains one of the major public health challenges in several countries, especially low- and middle-income countries, because of financial and logistical issues [3]. Previous studies have reported that this cancer is the fourth most pervasive cancer type, affecting the lives of many people worldwide [4–6]. A large and growing body of literature has investigated the main cause of cervical cancer, identifying long-lasting infection with certain types of human papillomavirus (HPV), which is passed from one person to another during sex. Non-human papillomavirus-associated adenocarcinomas (NHPVAs) are uncommon uterine cervix tumors with a deceptive appearance [7–9].

HPV will affect at least half of all sexually active persons at some point in their lives, but only a small percentage of women will develop cervical cancer. The Pap smear test is the most widely used screening procedure for detecting cervical cancer. However, practitioners still perform this evaluation manually, and the results remain controversial because of concerns about the accuracy of the diagnosis in detecting cervical cancer cells. In addition, the type of cervical cell is determined by visual inspection with the naked eye. Furthermore, due to human error, this manual screening approach has a high rate of false-positive results [10].

However, far too little attention has been paid to the fact that the occurrence of cervical cancer can be effectively reduced with preventive clinical management strategies, including vaccines and regular screening examinations [11]. It has previously been observed that early diagnosis and classification of cervical lesions greatly boost the chance of successful treatment [12]. The main objective of the initial diagnosis and classification of cervical cancer is to reduce the mortality rate [13,14], since this cancer can be successfully treated when detected early. The findings from existing research recognize the critical role played by the screening test in reducing the mortality rate caused by cervical cancer.

In past years, the Pap smear test has attracted much attention and is best known as a preventive approach used in the current medical field for detecting cervical cancer [15,16]. This test demands a specialized and labor-intensive analysis of cytological preparations to trace potentially malignant cells from both the internal and external cervix surfaces. The cytopathologist analyzes the microscopic fields by screening for abnormal cells. The use of digital slide cytology imaging could be beneficial for increasing the accuracy of cytological diagnosis. Recent evidence suggests that screening for diseases, including cervical cancer, breast cancer, and colorectal cancer, using cell images from slides has been widely applied [17–19]. However, poor image quality due to uneven staining, complex backgrounds and overlapped cell clusters poses a great challenge in nuclei segmentation [20].

In addition, biomedical image processing, which entails analyzing, improving, and presenting pictures obtained via x-ray, ultrasound, MRI, and other methods, follows the same concept as biomedical signal processing. Image processing is a technique for performing operations on an image to improve it or to extract relevant information. It is a type of signal processing that takes a picture as input and produces either an image or characteristics/features associated with that image as output. Most recent attention has focused on image processing for classifying cervical cells. However, the accurate classification of Pap smear images is still being improved for better performance. It remains one of the challenging tasks in medical image processing, and its performance can be enhanced by extracting and selecting well-defined features and classifiers [21]. Computer-assisted cervical cancer screening based on automated recognition of cervical cells offers the potential to minimize errors and increase the accuracy of the test when compared to manual screening. Traditional approaches rely heavily on cell segmentation accuracy and discriminative hand-crafted feature extraction [22]. The purpose of this paper is to review recent research on automated detection methods available for the classification of cervical cancer.

Numerous studies have suggested that image pre-processing may have a dramatic positive effect on the quality of feature extraction and image analysis results [23–26]. For example, Jahan et al. [27] have described pre-processing in terms of methods such as cleaning, integration, transformation, and reduction. The main goals of data preparation are to reduce data size, establish data correlations, standardize data, remove outliers, and extract features. Before adopting Machine Learning (ML) models, the six basic steps for coping with the intended dataset must be performed. These include importing the library, importing the dataset, working with missing data in the dataset, encoding categorical data, and splitting the dataset into training and test sets, all done in a methodical way [27]. A number of studies have found that image segmentation is a common approach used in various image pre-processing applications. Medical imaging, video surveillance, and object detection are some practical applications of image segmentation. The segmentation approach is the method for automatically or semi-automatically extracting the Region of Interest (ROI) from an image [28]. Thus, it enables the suggested method to engage with the image ROI rather than pixels on a grid. The Simple Linear Iterative Clustering (SLIC) output then advances to the second stage, the Density-Based Spatial Clustering of Applications with Noise (DBSCAN) clustering algorithm, which groups similar superpixels based on their density. DBSCAN produces a clustered image, with each cluster being a nucleus candidate. There are fewer image regions to evaluate at this step, which reduces computing time and prevents a non-nucleus region from being classified as a nucleus. DBSCAN's only input parameter is a threshold, which determines clustering using a density distance function.

In a different study, an artificial intelligence accurate diagnosis solution (AIATBS) was developed to improve cervical liquid-based thin layer cell smear diagnosis according to the clinical criteria of The Bethesda System (TBS) [29]. The Darknet53 framework was used to coordinate the target detection training, and a YOLOv3 detection model was obtained. Then, an integration of XGBoost and a logical decision tree was applied to optimize the parameters provided by the learning process, creating a full cervical liquid-based cytology smear TBS diagnosis system that includes a quality control solution. The 121 characteristics from the YOLOv3 detection model, Xception classification model, Patch classification model, and nucleus segmentation model were fed into an XGBoost model for diagnostic model training. Smears were first predicted to be positive or negative for squamous intraepithelial lesions. A basic XGBoost model for squamous intraepithelial lesion TBS classification was then used to further classify the positive results. The system adapts to diverse standards, staining methods, and scanners when it comes to sample preparation.

A study by Xue et al. [30] also points toward the application of Automatic Visual Evaluation (AVE) to predict pre-cancer based on a digital image of the cervix. This approach has been seen as a low-cost means of enhancing human performance. However, taking AVE beyond proof-of-concept and into use as a functional complementary tool in visual screening poses several challenges. Making AVE robust across images recorded with several devices is one of them. A new deep learning-based clustering approach is used to see whether images taken by three different devices (a standard smartphone, a custom smartphone-based handheld device for cervical imaging, and a clinical colposcope with SLR digital camera-based imaging capability) can be distinguished from one another in terms of visual appearance/content within their respective cervix regions. Two established ImageNet pre-trained networks, ResNet50 and VGG16, are used in the study. These representative classification networks have attained excellent performance on the ImageNet dataset and are used to extract features, allowing the authors to apply the transfer learning technique. The findings and analysis show a need to design a system that reduces the variance between photos acquired from different devices. They also emphasize the importance of a vast number of training images from various sources for reliable device-independent AVE performance around the world [30].

In addition, Stacked Denoising Autoencoders (SDAEs) are applied to improve the performance of normal Stacked Autoencoders (SAE). However, when examining a larger number of input samples, SDAE's convergence takes longer, given by 2′16, 2′18, and 2′14 s, since each sample will be taken into account. The suggested h6, h8, and h4 systems add the Fine-tuned Stacked Denoising Autoencoder (FSDAE), which denoises using a minibatch of samples rather than the entire data from the supplied input images. The proposed FSOD-second GAN phase augments the collected images with segregated classes, related types, and stages to minimize overfitting in the subsequent detection and classification. Several data augmentation procedures, such as rotation, flip, shift, and zoom, have been used to increase the overall quantity of data. The input photographs were enhanced and pre-processed by resizing and cropping them to a width and height of 100 × 100 pixels and by recoloring the grayscale color channel. The final image will be a matrix with each row consisting of abnormally flattened grayscale pixels [31].

One of the more significant findings to emerge from this review is that pre-processing of images is an important phase in image processing techniques, specifically for the detection of cervical cancer cells. Although previous researchers have used several techniques, the most common method is augmentation. Augmentation increases the cardinality of the training dataset and helps avoid overfitting. Apart from that, it helps increase the accuracy of the overall convolutional network. The total number of images can be increased by applying techniques like rotation, flipping, shifting and zooming.
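The augmentation operations named above can be illustrated with a minimal sketch. It assumes a grayscale image stored as a list of pixel rows; the function names (`rotate90`, `flip_horizontal`, `shift_right`, `zoom_center`, `augment`) are hypothetical and are not taken from any of the reviewed studies, which typically rely on a deep learning framework for this step.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel intensities


def rotate90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def shift_right(img: Image, pixels: int, fill: int = 0) -> Image:
    """Shift the image right by `pixels`, padding the left edge with `fill`."""
    width = len(img[0])
    pixels = min(pixels, width)
    return [[fill] * pixels + row[: width - pixels] for row in img]


def zoom_center(img: Image) -> Image:
    """Crop the central half of the image and upscale it 2x (nearest neighbour)."""
    h, w = len(img), len(img[0])
    top, left = h // 4, w // 4
    crop = [row[left:left + w // 2] for row in img[top:top + h // 2]]
    zoomed: Image = []
    for row in crop:
        wide = [px for px in row for _ in range(2)]  # duplicate each column
        zoomed.append(wide)
        zoomed.append(list(wide))                    # duplicate each row
    return zoomed


def augment(img: Image) -> List[Image]:
    """Return the original image plus four augmented variants."""
    return [img, rotate90(img), flip_horizontal(img),
            shift_right(img, 1), zoom_center(img)]
```

Each input image yields five training samples here, which is how augmentation raises the cardinality of the training set without new acquisitions.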

Detection method based on cells/pap smear images

Deep learning has been widely investigated in computer-aided diagnostics (CAD) systems for classifying cervical Pap cells. However, deep learning may perform poorly on a multiclass classification task when the data are unevenly distributed, which is prevalent in cervical cell datasets. Rahaman et al. [32] addressed those limitations by proposing DeepCervix, a hybrid deep feature fusion (HDFF) technique. A hybrid ensemble technique comprising 15 different machine learning algorithms, such as random forest, bagging, rotation forest, and J48, is able to perform better than an individual algorithm. Various pre-trained deep learning models were trained in this study, including VGGNet, ResNet, ResNetV2, InceptionNet, InceptionResNetV2, XceptionNet, DenseNet, and NasNet. The results indicated that a combination of VGG16, VGG19, ResNet50 and XceptionNet provides the best results for this task [32].

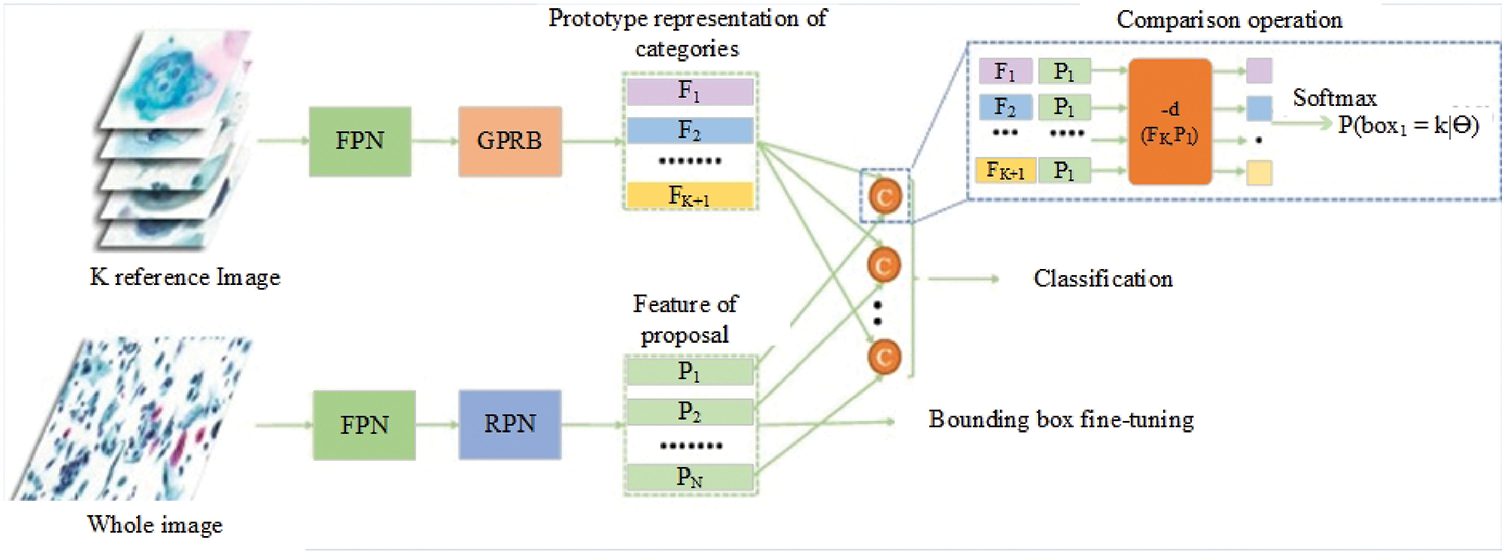

Methods for detecting cervical cancer remain an active topic in biomedical technology. Several studies in the literature address the detection of cervical cancer cells with the objective of aiding pathologists. In 2021, Cao et al. [33] proposed a novel deep learning method named attention feature pyramid network (AttFPN) for detecting abnormal cervical cells. The AttFPN method consists of two main components: an attention module mimicking the way pathologists read a cervical cytology image, and a multi-scale region-based feature fusion network guided by clinical knowledge to fuse the refined features for detecting abnormal cervical cells at different scales. The proposed method outperformed the related deep learning method of Faster R-CNN with Feature Pyramid Network (FPN) and was comparable to pathologists with 10 years of experience on an independent dataset. The findings are consistent with studies by several researchers who proposed the Faster R-CNN method for the detection of cervical cancer cells [34,35]. Besides, Tang et al. [36] proposed the comparison detector based on a proposal-based detection framework, which often consists of a backbone network for feature extraction, an RPN for generating proposals and a head for proposal classification and bounding box regression. The overall structure of the proposed comparison detector is shown in Fig. 1.

Figure 1: The overall structure of the proposed comparison detector, comprising a backbone network for feature extraction, an RPN for generating proposals and a head for proposal classification and bounding box regression [36].

Chen et al. [37] focused on improving the accuracy of cervical cell classification under resource limitations. A compact and effective model that meets the design requirements of embedded devices is built with a lightweight convolutional neural network (CNN) architecture, yielding a highly efficient model with fewer parameters and calculations. The proposed method's basic steps are as follows:

• Prepare the image samples by pre-processing the datasets.

• On the target dataset, use transfer learning to train different teacher models. First, download the already trained CNNs, then fine-tune CNNs to the target dataset. Then, based on the training results, determine the final teacher model.

• From the final teacher model, get the soft labels.

• On the target dataset, train the lightweight student CNN models using dark knowledge loss, cross-entropy loss, as well as soft and hard labels.

• Only use the traditional cross-entropy loss and hard labels to test the lightweight models on the target dataset [37].
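The combination of soft and hard labels in the student training step can be sketched as a weighted loss. This is an illustrative formulation of knowledge distillation under stated assumptions, not the exact loss of [37]: the temperature `temperature`, the weight `alpha`, the temperature-squared scaling of the soft term, and all function names are hypothetical choices.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Softmax with temperature; higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target: Sequence[float], predicted: Sequence[float],
                  eps: float = 1e-12) -> float:
    """Cross-entropy between a target distribution and predicted probabilities."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, predicted))


def distillation_loss(student_logits: Sequence[float],
                      teacher_logits: Sequence[float],
                      hard_label: int,
                      temperature: float = 4.0,   # assumed value
                      alpha: float = 0.5) -> float:  # assumed weighting
    """Weighted sum of the soft-label (teacher) loss and the hard-label loss."""
    soft_targets = softmax(teacher_logits, temperature)   # teacher soft labels
    soft_pred = softmax(student_logits, temperature)
    soft_loss = cross_entropy(soft_targets, soft_pred) * temperature ** 2
    one_hot = [1.0 if i == hard_label else 0.0
               for i in range(len(student_logits))]
    hard_loss = cross_entropy(one_hot, softmax(student_logits))
    return alpha * soft_loss + (1.0 - alpha) * hard_loss
```

Setting `alpha` to 0 reduces the expression to the traditional cross-entropy loss on hard labels, which corresponds to the evaluation setting in the last step of the list.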

A Multi-Task Network (MTN) based on Y-Net's architecture is one of the proposed methods; it performs two tasks: nuclear segmentation and classification. The network's segmentation component features an encoder-decoder structure. The fundamental feature extraction activities in the encoder are handled by efficient spatial pyramid (ESP) modules. The decoder receives the encoder's final feature representation and constructs a nuclear mask with the same spatial resolution as the input using upsampling and pyramid spatial pooling (PSP) modules. Information is shared between the encoder and the decoder through concatenated skip connections. The diagnostic component of the MTN is made up of more ESP modules, which lead to a global average pooling module and two fully connected layers. Downsampling is performed by a single convolution that halves the spatial resolution of the feature maps, and bilinear interpolation is used for upsampling. After each downsampling, upsampling, ESP, and PSP module, batch normalization and ReLU activation are applied. The modules that make up the MTN and the learning via proxy labels are described in [38].

In a different study, Diniz et al. [39] presented an efficient ensemble to classify the segmented regions (nucleus candidates) returned from the pre-processing phase. The ensemble consists of the Decision Tree (DT), Nearest Centroid (NC) and k-Nearest Neighbors (k-NN) classifiers. The findings of the study show that this ensemble method achieved the best result in terms of the F1 and recall values. With the same objective, Arya et al. [40] describe three strategies for automated segmentation of cervical cell nuclei in the presence of debris: Automated Seed Region Growing, Extended Edge Based Detection, and Modified Moving k-means. These techniques extract the area of nuclei from smear images using the morphological attributes of the nucleus. Some debris has an area that matches the nucleus of normal cells, which can cause interference and false-positive results. The research demonstrates that Modified Moving k-means is more accurate in identifying dysplastic cells in the presence of debris [40].
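How three classifiers might be combined into one ensemble can be sketched with a simple majority vote. The voting rule is an assumption, since the combination scheme of [39] is not described here; the one-split threshold rule `fit_stump` is only a stand-in for a full decision tree, and all names and the toy feature values are hypothetical.

```python
from collections import Counter
from typing import Callable, List, Sequence

Sample = Sequence[float]
Classifier = Callable[[Sample], int]


def sq_dist(a: Sample, b: Sample) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def fit_nearest_centroid(X: List[Sample], y: List[int]) -> Classifier:
    """Predict the class whose mean feature vector is closest."""
    centroids = {}
    for label in set(y):
        members = [x for x, lab in zip(X, y) if lab == label]
        centroids[label] = [sum(col) / len(members) for col in zip(*members)]
    return lambda s: min(centroids, key=lambda lab: sq_dist(s, centroids[lab]))


def fit_knn(X: List[Sample], y: List[int], k: int = 3) -> Classifier:
    """Predict the most common class among the k closest training samples."""
    def predict(s: Sample) -> int:
        nearest = sorted(zip(X, y), key=lambda pair: sq_dist(s, pair[0]))[:k]
        return Counter(lab for _, lab in nearest).most_common(1)[0][0]
    return predict


def fit_stump(X: List[Sample], y: List[int], feature: int = 0) -> Classifier:
    """One-split threshold rule for labels 0/1 (stand-in for a decision tree)."""
    mean0 = sum(x[feature] for x, lab in zip(X, y) if lab == 0) / y.count(0)
    mean1 = sum(x[feature] for x, lab in zip(X, y) if lab == 1) / y.count(1)
    threshold = (mean0 + mean1) / 2
    high_label = 1 if mean1 > mean0 else 0
    return lambda s: high_label if s[feature] > threshold else 1 - high_label


def majority_vote(classifiers: List[Classifier], s: Sample) -> int:
    """Return the label predicted by most classifiers."""
    return Counter(clf(s) for clf in classifiers).most_common(1)[0][0]


# Toy data: two hypothetical features per candidate region, 1 = nucleus, 0 = debris.
X = [(1.0, 1.0), (1.2, 0.9), (0.9, 1.1), (3.0, 3.1), (3.2, 2.9), (2.9, 3.0)]
y = [0, 0, 0, 1, 1, 1]
ensemble = [fit_stump(X, y), fit_nearest_centroid(X, y), fit_knn(X, y)]
```

With an odd number of binary classifiers, the vote cannot tie, which is one practical reason to combine three models.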

A workload-reducing algorithm has also been proposed for the analysis of cell nuclei features from Pap smear images, in which eight traditional machine learning methods were investigated to perform a hierarchical classification [41]. The classifiers involved were AdaBoost, Decision Tree (DT), Gaussian Naive-Bayes (GNB), k-Nearest Neighbors (k-NN), Multi-Layer Perceptron (MLP), Nearest Centroid (NC), Random Forest (RF), and Ridge. The hierarchical classification methodology was developed for computer-aided screening of cell lesions, with the aim of providing another view of Pap smear images based on the nuclei detection of cervical cancer. The methodology starts with the extraction of features from each nucleus segmented in the database. Region Props, Haralick's features, Local Binary Patterns (LBP), Threshold Adjacency Statistics (TAS), Zernike moments, and Gray Level Co-occurrence Matrix (GLCM) were among the algorithms used. All the programmes were written in Python, with Region Props and GLCM coming from the scikit-image package and the rest from the Mahotas package. Morphological and other features are also included in the study. The study found that hierarchical classification provided better results than classification without it. In 2021, Pirovano et al. [42] explained how to apply the suggested method (a classifier with a regression constraint) to the novel task of categorizing tiles from cytology images in the context of cervical cancer. In that paper, an attribution strategy was used to demonstrate that the model learned to discover the cells responsible for the predicted label under weak supervision. The three suggested architectures (Resnet-101 classifier, Resnet-101 Regressor and Resnet-101{Classifier + Regressor}) surpass a simple classifier and other state-of-the-art approaches for ordinal classification in terms of overall accuracy and severity prediction. Furthermore, the suggested method is successfully tuned to achieve a higher sensitivity as a tool that can help practitioners [42].

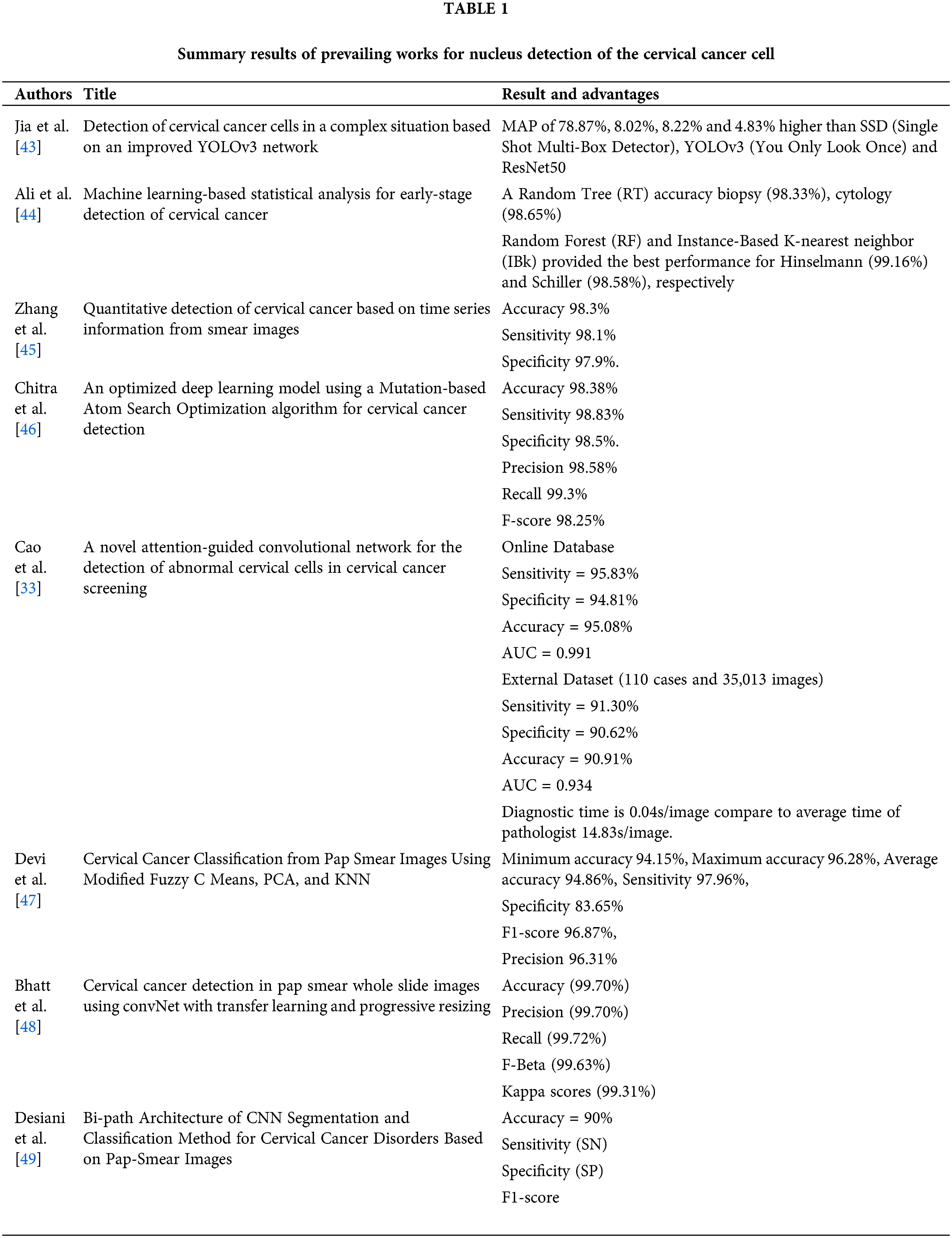

Recently, convolutional neural network-based detectors have been used to lessen the reliance on hand-crafted features and eliminate the need for segmentation. These strategies, on the other hand, tend to produce an excessive number of false-positive predictions. To resolve this issue, a global context-aware framework was created that uses an image-level classification branch and a weighted loss to incorporate global context information. A global context-aware network with soft-scale anchor matching (SSAM) is proposed to optimize the parameters. This method involves a backbone network, an image-level classification branch (ILCB) and a cervical cell detection branch. The backbone network, for which DarkNet is used, provides shared features for image-level classification and cervical cell detection. The ILCB, which is directly attached to the top of the backbone network, predicts whether an image contains abnormal cervical cells, and its prediction is paired with cell detection to filter out false-positive predictions. The cervical cell detection branch consists of a three-level FPN, and the detection head attached to each feature level of the FPN predicts where cervical cells are located and which class they belong to. Table 1 summarizes past studies related to nucleus detection.

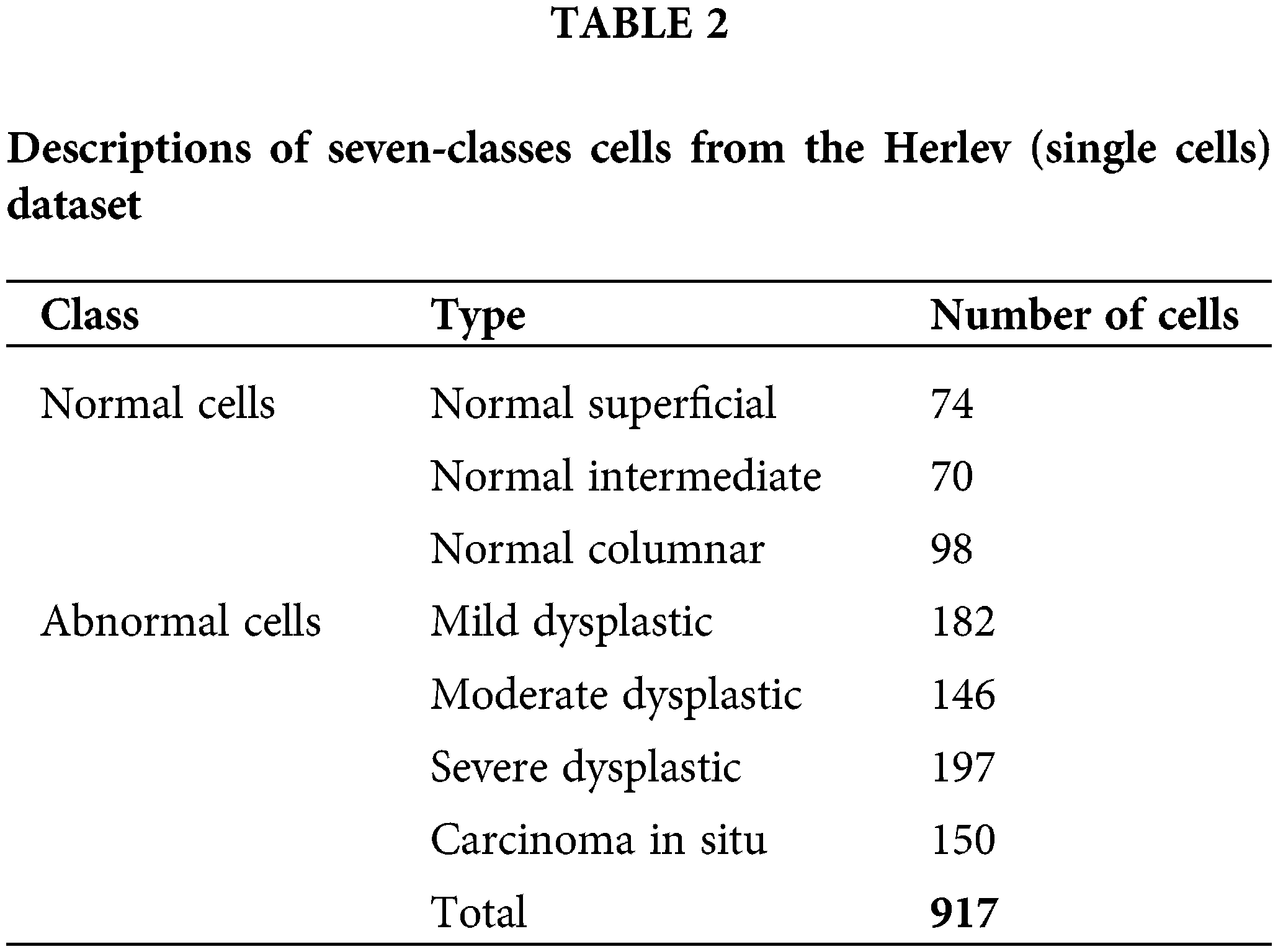

Herlev is a widely used dataset, and this image database has been used to design the detection technique. In addition, most researchers have used the Herlev image dataset to improve the design and development process. The Herlev Pap database was compiled by Herlev University Hospital (Denmark) and the Technical University of Denmark. The database contains 917 pictures manually sorted into groups by professional cytotechnicians and physicians. Superficial squamous, intermediate squamous, columnar, mild dysplasia, moderate dysplasia, severe dysplasia, and carcinoma in situ are the seven cervical cell classes in the database. In addition, various cell and nucleus properties are extracted [2].

In this study, 105 Pap smear images were used. The database falls under NiSIS, the Nature-inspired Smart Information System (EU coordination action, contract 13569), with a particular focus on the group “Nature-Inspired Data Technology”. The data is accessible over the internet (http://mde-lab.aegean.gr/index.php/downloads). Table 2 provides the details of the dataset used for the nucleus detection method. The seven types of cells are divided into normal and abnormal cells. The normal cells consist of the normal superficial, normal intermediate and normal columnar types. In contrast, the abnormal cells consist of the mild dysplastic, moderate dysplastic, severe dysplastic and carcinoma in situ types. The full dataset contains 917 images.

The experiment is done based on several approaches used by previous researchers in past studies. This study considers the method for nucleus detection for cervical cells based on pap smear test images. The dataset used is the established Herlev dataset. The image is processed based on the suggested approach for improving existing segmentation techniques such as thresholding, trace region boundary, contrast enhancement, edge detection, as well as a morphological and watershed approach using Matlab R2021a. The processed image is then compared to the ground truth using image quality assessment for performance analysis. Finally, the values calculated are compared to determine the better performance approach for nucleus detection.
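The thresholding and region-boundary idea can be sketched in a few lines. This is an illustration only and not the Matlab procedure used in the experiment: it assumes that nuclei are darker than their surroundings, uses a fixed hypothetical threshold `level`, and returns the unordered set of boundary pixels of each region rather than an ordered traced contour.

```python
from collections import deque
from typing import List, Tuple

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity


def threshold(gray: List[List[int]], level: int) -> List[List[int]]:
    """Binary mask: 1 where the pixel is darker than `level` (assumed nucleus)."""
    return [[1 if px < level else 0 for px in row] for row in gray]


def label_regions(mask: List[List[int]]) -> Tuple[List[List[int]], int]:
    """Label 4-connected foreground regions with 1..n using breadth-first search."""
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for r in range(h):
        for c in range(w):
            if mask[r][c] and not labels[r][c]:
                count += 1
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in NEIGHBOURS:
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < h and 0 <= nx < w
                                and mask[ny][nx] and not labels[ny][nx]):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count


def boundary_pixels(labels: List[List[int]], region: int) -> List[Tuple[int, int]]:
    """Pixels of `region` that touch the image border or a pixel outside the region."""
    h, w = len(labels), len(labels[0])
    result = []
    for r in range(h):
        for c in range(w):
            if labels[r][c] != region:
                continue
            for dy, dx in NEIGHBOURS:
                ny, nx = r + dy, c + dx
                if not (0 <= ny < h and 0 <= nx < w) or labels[ny][nx] != region:
                    result.append((r, c))
                    break
    return result
```

The resulting mask can then be compared with the ground-truth mask pixel by pixel for the performance analysis.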

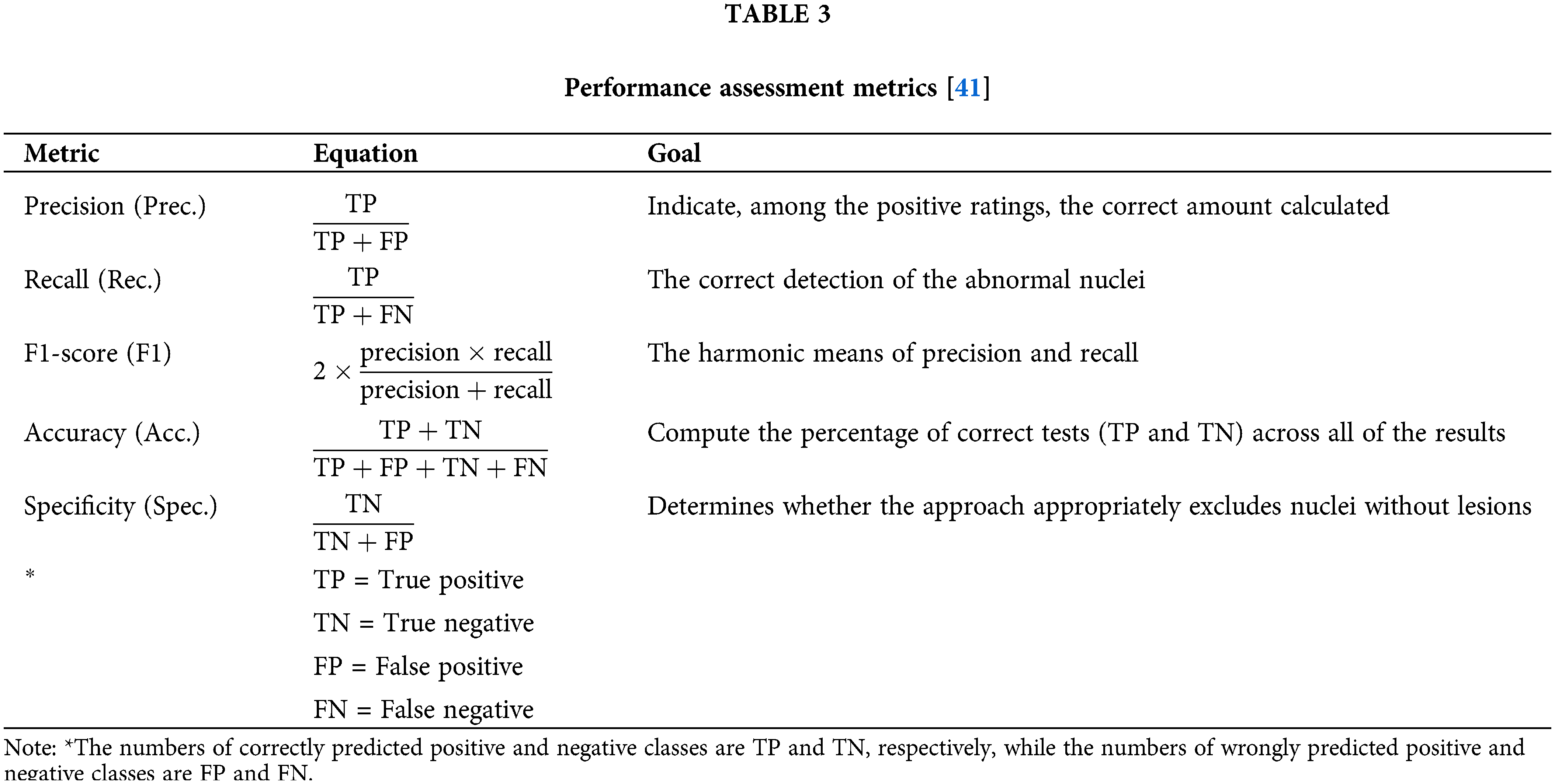

Performance analysis relies on a series of heterogeneous computer-aided tools that assess a system's performance at several levels of abstraction, which makes the task more difficult. The performance of the analysis process can be enhanced through the extraction of a common object model. Five regularly used performance metrics from the literature were used in the reviewed studies: accuracy, precision, recall, geometric mean and F1-score. These performance measures were calculated using mathematical equations [31,50–52]. Details of the performance metrics are shown in Table 3.

Furthermore, Jia et al. [53] showed that the true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN) of the confusion matrix are used to create the indicators. Accuracy, precision, sensitivity, specificity, F-Index, and negative predictive value (NPV) are common measures in biomedical segmentation. Precision is often used in conjunction with sensitivity and refers to the ratio of correctly segmented foreground pixels to all pixels segmented as foreground. Sensitivity refers to the ratio of ground-truth foreground pixels that are matched by the segmented ones.

The harmonic mean of precision and sensitivity is known as the F-Index. The NPV is the proportion of pixels predicted as background that truly belong to the background. Other metrics, such as the Dice coefficient and the Jaccard Index, provide a more comprehensive assessment of segmentation. The extracted contours are estimated fairly using Volumetric Similarity (VS). Visual accuracy (VA) is a visual evaluation of segmentation. The following is a list of the metrics referenced in [53] that lead to false-positive results. The histogram of an image in image processing usually refers to a histogram of pixel intensity values.
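The confusion-matrix indicators discussed above follow directly from the four counts. The sketch below uses the standard textbook definitions; the function name `segmentation_metrics` and the convention of returning 0.0 for an empty denominator are assumptions made for illustration.

```python
from typing import Dict


def _ratio(numerator: float, denominator: float) -> float:
    """Safe division that returns 0.0 when the denominator is zero (assumed convention)."""
    return numerator / denominator if denominator else 0.0


def segmentation_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Common segmentation metrics from the confusion-matrix counts."""
    precision = _ratio(tp, tp + fp)
    sensitivity = _ratio(tp, tp + fn)      # also called recall
    return {
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": _ratio(tn, tn + fp),
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        # Harmonic mean of precision and sensitivity (F-Index / F-measure).
        "f_measure": _ratio(2 * precision * sensitivity, precision + sensitivity),
        "npv": _ratio(tn, tn + fn),
        "dice": _ratio(2 * tp, 2 * tp + fp + fn),
        "jaccard": _ratio(tp, tp + fp + fn),
    }
```

For binary masks the Dice coefficient and the F-measure coincide, so reporting both adds no information; the Jaccard Index is always less than or equal to the Dice coefficient.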

One of the most broadly utilized performance analysis methods is qualitative analysis. Probabilistic statements about the algorithm’s performance and weaknesses are based on human visual perception [40,54].

In a study by Arya et al. [40], the first step in analyzing the results of three segmentation techniques for predicting dysplastic cervical cells in the presence of debris is extracting the region of interest (ROI). Normal cell nuclei, abnormal cell nuclei, and debris are detected in the ROI, and the area of all the objects is computed. Some debris has an area that matches the nucleus of normal cells, which could interfere with the outcome and lead to false-positive results.

Quantitative analysis is a numerical way of obtaining information on an algorithm's performance without involving any human interaction. Smear photos have a lot of debris in the background, with the nucleus and cytoplasm in the foreground. In this complex setting, the number of objects, precision, sensitivity, F-measure, specificity, accuracy and PSNR are calculated from the segmented images. These findings demonstrate the importance of validating image quality using the suggested techniques on the Pap smear dataset [40].
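As an illustration of how such values can be derived from a segmented image and its ground truth, the sketch below counts the confusion-matrix entries pixel by pixel and computes the peak signal-to-noise ratio with the standard definition, PSNR = 10 log10(MAX^2 / MSE), which yields a value in decibels. The 8-bit peak value of 255 and the function names are assumptions; the exact Matlab routines used in the experiment are not specified here.

```python
import math
from typing import List, Tuple

Image = List[List[int]]


def confusion_counts(pred: Image, truth: Image) -> Tuple[int, int, int, int]:
    """Return (TP, FP, TN, FN), treating any non-zero pixel as foreground."""
    tp = fp = tn = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def psnr(img: Image, ref: Image, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between an image and its reference."""
    n_pixels = sum(len(row) for row in ref)
    mse = sum((a - b) ** 2
              for img_row, ref_row in zip(img, ref)
              for a, b in zip(img_row, ref_row)) / n_pixels
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher PSNR means the segmented image deviates less from the ground truth, so it complements the count-based metrics rather than replacing them.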

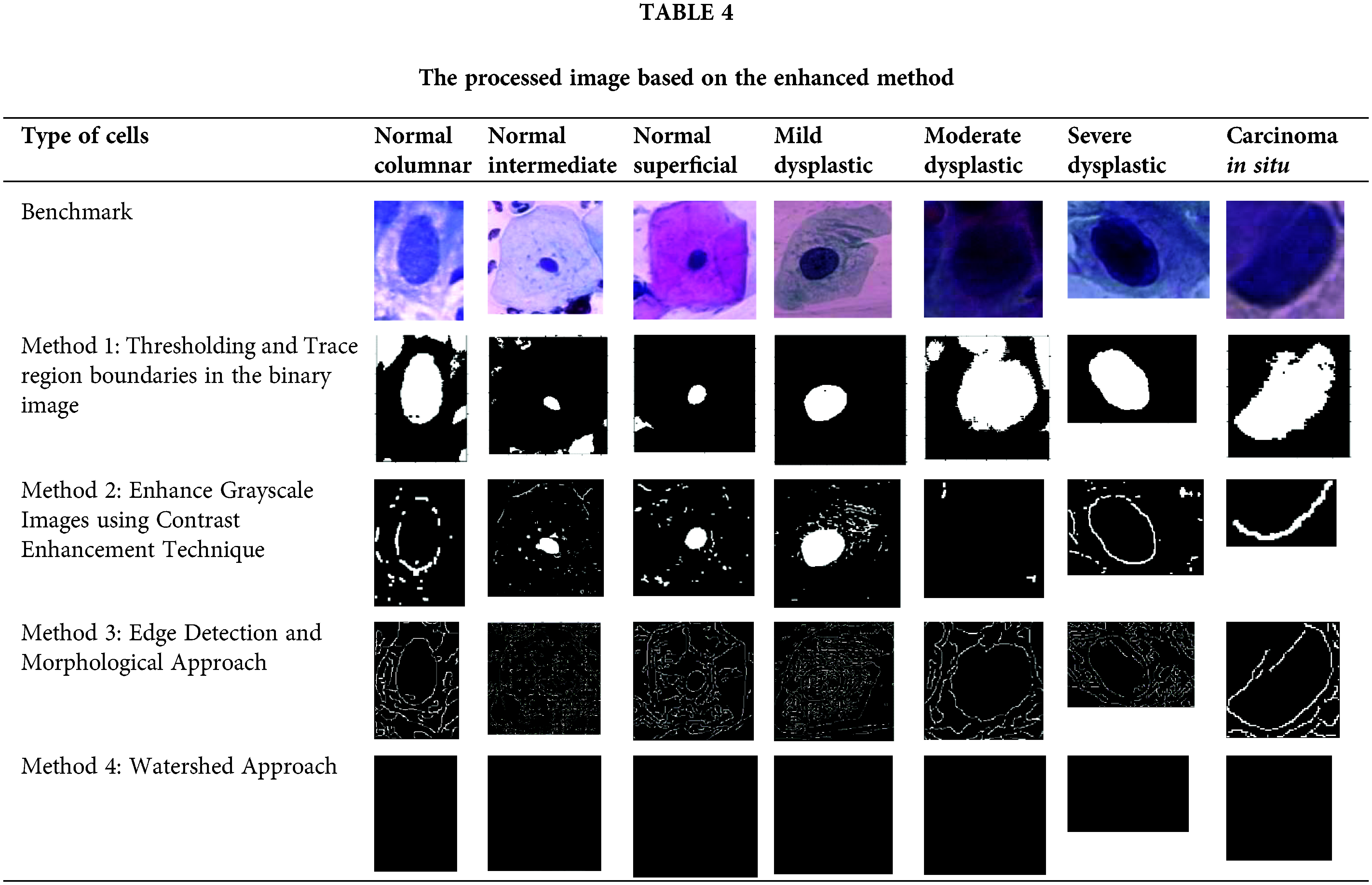

The reviewed methods, based on several segmentation techniques, have been tested using Matlab. Table 4 tabulates the processed images for different types of cervical cells using the four reviewed methods. The methods used are (1) Thresholding and trace region boundaries in the binary image, (2) Enhance grayscale images using contrast enhancement technique, (3) Edge detection and morphological approach and (4) Watershed approach. Nuclei are displayed in the final image for normal cells in the segmented images, while a blank image is formed for aberrant cells. Overall, according to the qualitative analysis, the thresholding and trace region boundaries in binary images outperform the other traditional algorithms in terms of segmentation performance, regardless of the number of objects employed [40]. Several methods have been introduced for cervical cancer detection in the area of nucleus detection. In this study, the image database was processed with each of the four reviewed methods, and the result was written as a new image. Table 4 shows that Method 1 gives favorable results compared to the other methods. The other methods are also able to detect nuclei but are limited to certain types of cells.

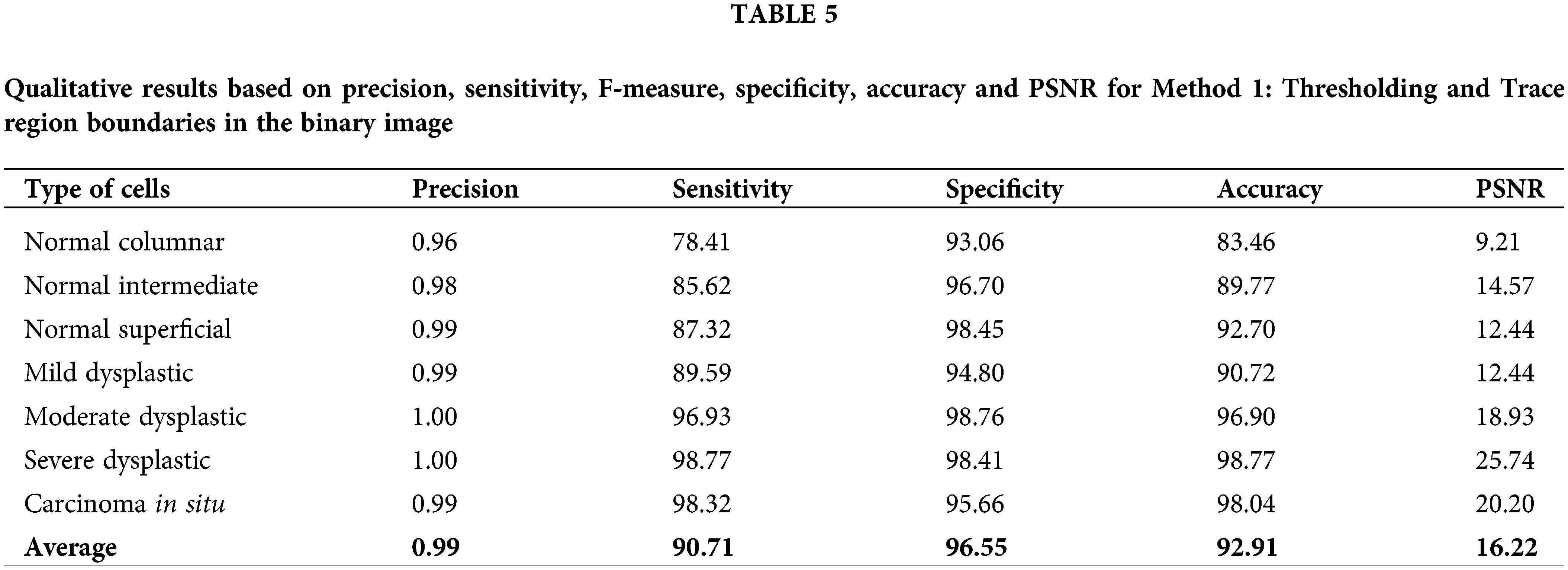

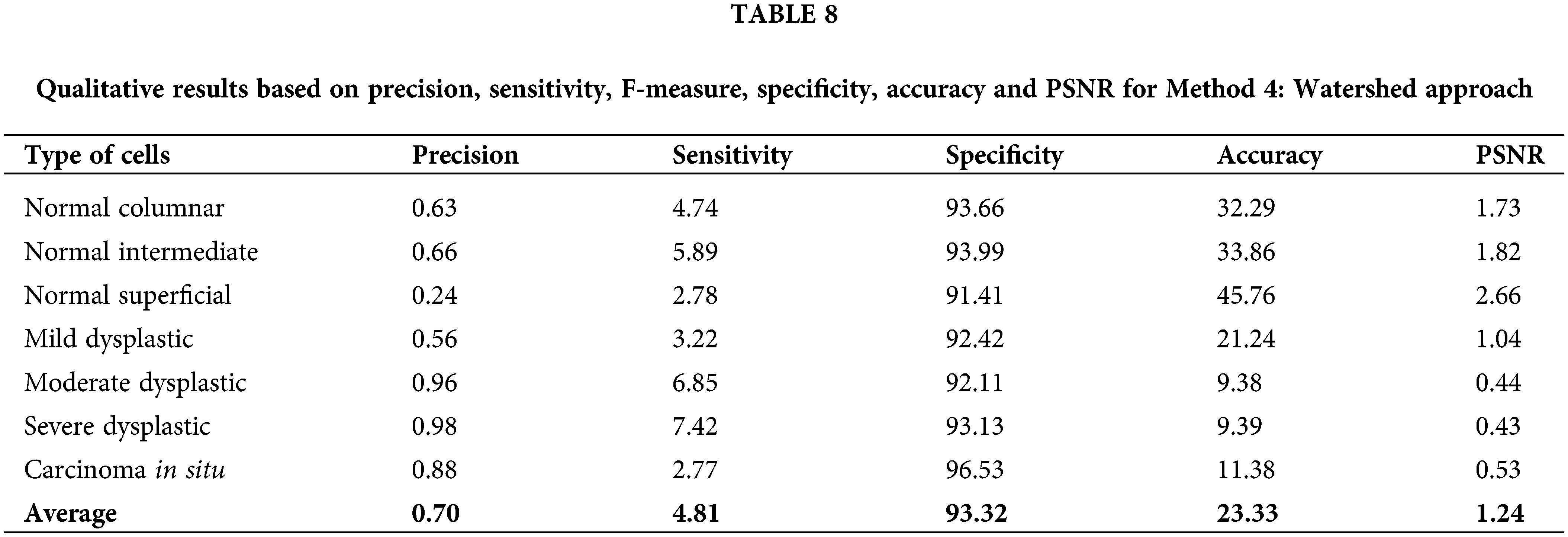

Tables 5 to 8 present the calculated values of precision, sensitivity, F-measure, specificity, accuracy and PSNR for each approach tested. Based on the results shown in Table 5, the approach of thresholding and tracing region boundaries in a binary image showed a favorable ability to detect the nucleus of cervical cancer cells for all the different types of cells. This approach is able to detect nuclei from all seven types of cells with high values of precision, sensitivity, F-measure, specificity, accuracy and PSNR. The highest values are obtained for the severe dysplastic cell, with precision 1, sensitivity 98.77%, F-measure 99.37%, specificity 98.41%, accuracy 98.77% and PSNR 25.74%. Thus, the tabulated data indicate that this method provides good image pre-processing for the nucleus detection of cervical cancer cells.

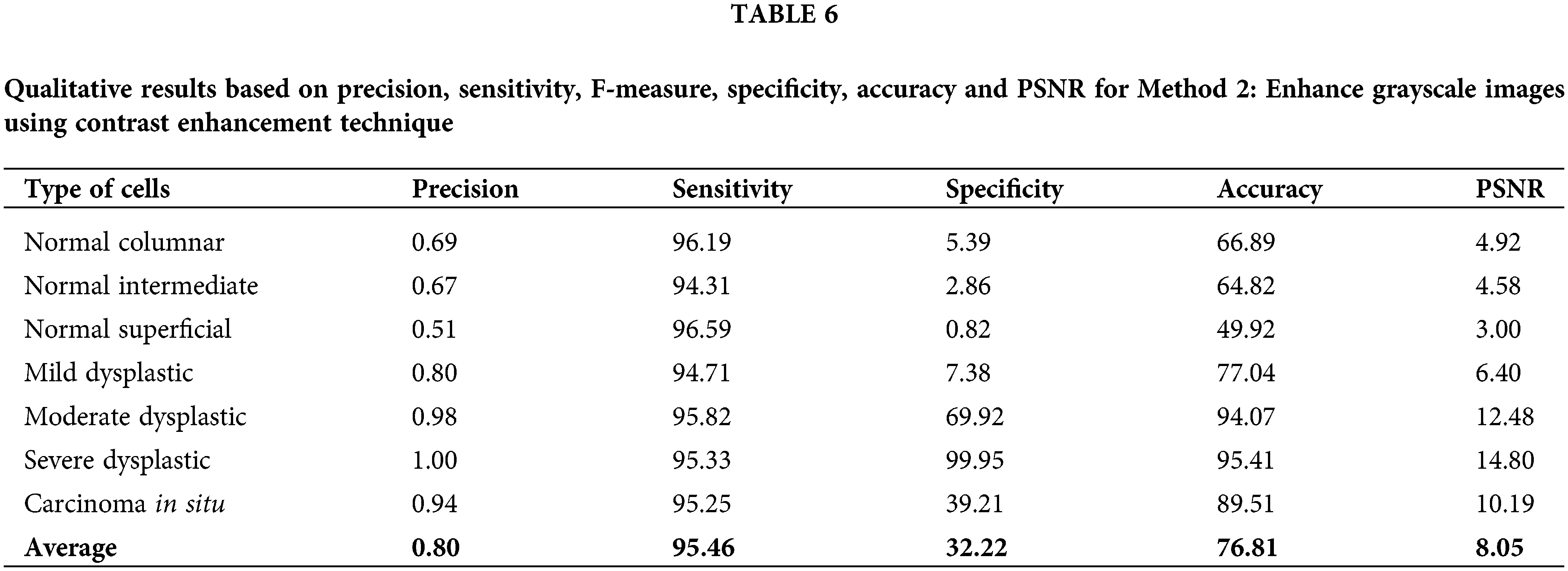

The calculated data of precision, sensitivity, F-measure, specificity, accuracy and PSNR has been recorded in Table 6 for method 2: Enhance grayscale images using contrast enhancement technique. This approach has fluctuating values for all calculated values. Values of sensitivity, F-measure and accuracy are in the high range of around 50% to 100%. The PSNR value might not be the highest compared to method 1. However, the values are still more than 3% and up to 14.80%. This method yields high values for accuracy of 95.41% and 94.07% for the cell type of moderate dysplastic and severe dysplastic, respectively.

The accuracy value is quite high and in the range of other existing techniques reviewed.
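The text does not state which contrast enhancement technique was applied in Method 2. As an illustration only, the sketch below shows linear contrast stretching, one common choice, in which the grey levels of an image are rescaled to span the full output range. The function name `stretch_contrast` and its parameters are hypothetical.

```python
def stretch_contrast(image, low=0, high=255):
    """Linearly rescale grey levels so the image spans [low, high].

    Assumption: plain min-max stretching; the paper's Method 2 may use a
    different enhancement (for example histogram equalisation).
    """
    flat = [value for row in image for value in row]
    darkest, brightest = min(flat), max(flat)
    if brightest == darkest:
        # A flat image has no contrast to stretch; return a copy unchanged.
        return [row[:] for row in image]
    scale = (high - low) / (brightest - darkest)
    return [[round(low + (value - darkest) * scale) for value in row]
            for row in image]


# A low-contrast patch whose grey levels occupy only 100..150.
patch = [[100, 120], [140, 150]]
enhanced = stretch_contrast(patch)
```

After stretching, the darkest input level maps to 0 and the brightest to 255, which widens the gap between nucleus and cytoplasm intensities before any thresholding step.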

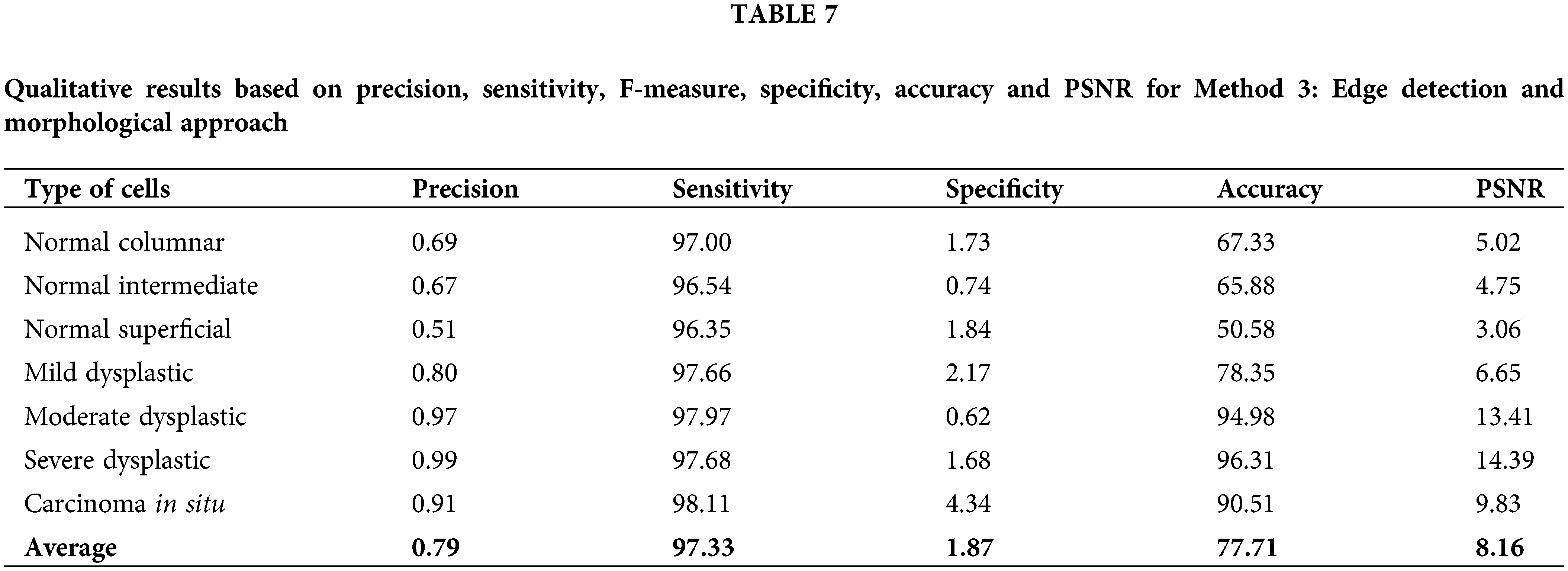

Next, for Method 3 (edge detection and morphological approach), the calculated values of precision, sensitivity, F-measure, specificity, accuracy and PSNR are recorded in Table 7. The values for this approach fluctuate across all metrics, in a pattern quite similar to that of Method 2. Sensitivity, F-measure and accuracy lie in a high range of around 50% to 100%. The PSNR values are lower than those of Method 1, but they still range from more than 3% up to 14.39%. This method yields high accuracy values of 96.31%, 94.98% and 90.52% for the severe dysplastic, moderate dysplastic and carcinoma in situ cell types, respectively. These accuracy values are quite high and within the range of the other existing techniques reviewed. This method is a better option than Method 2 but still less favorable than Method 1.
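A minimal sketch of an edge detection step followed by a morphological step is given below. The specific operators used in the experiments are not named in the text, so the Sobel gradient, the fixed edge threshold and the 3x3 morphological closing are all assumptions chosen for illustration, and the function names are hypothetical.

```python
def sobel_edges(image, threshold):
    """Mark interior pixels whose Sobel gradient magnitude reaches threshold."""
    rows, cols = len(image), len(image[0])
    edges = [[False] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = (image[r - 1][c + 1] + 2 * image[r][c + 1] + image[r + 1][c + 1]
                  - image[r - 1][c - 1] - 2 * image[r][c - 1] - image[r + 1][c - 1])
            gy = (image[r + 1][c - 1] + 2 * image[r + 1][c] + image[r + 1][c + 1]
                  - image[r - 1][c - 1] - 2 * image[r - 1][c] - image[r - 1][c + 1])
            edges[r][c] = (gx * gx + gy * gy) ** 0.5 >= threshold
    return edges


def _neighbourhood(mask, r, c):
    """Yield the 3x3 neighbourhood of (r, c); off-image cells count as False."""
    rows, cols = len(mask), len(mask[0])
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            nr, nc = r + dr, c + dc
            yield 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc]


def dilate(mask):
    """Binary dilation with a 3x3 square structuring element."""
    return [[any(_neighbourhood(mask, r, c)) for c in range(len(mask[0]))]
            for r in range(len(mask))]


def erode(mask):
    """Binary erosion with a 3x3 square structuring element."""
    return [[all(_neighbourhood(mask, r, c)) for c in range(len(mask[0]))]
            for r in range(len(mask))]


def close_mask(mask):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(mask))


# Synthetic 7x7 image: bright background with a dark 3x3 block in the centre.
image = [[200] * 7 for _ in range(7)]
for r in range(2, 5):
    for c in range(2, 5):
        image[r][c] = 50

edges = sobel_edges(image, threshold=100)
filled = close_mask(edges)
```

In this example the edge map forms a ring around the dark block with a hole at its centre, and the closing step fills that hole so the detected region becomes a solid object.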

Lastly, Method 4 (watershed approach) produced the lowest values for all calculated metrics, as shown in Table 8, although it still leaves room for improvement toward better performance. Therefore, thresholding and tracing region boundaries in binary images outperforms all of the other algorithms tested. The related qualitative analysis demonstrates good image segmentation ability [40]. The calculated values make it easier to choose a suitable option for image pre-processing when detecting the nucleus of cervical cancer cells.

Among the tested approaches for cervical cell nucleus detection, Method 1 (thresholding and tracing region boundaries in binary images) compares well with the literature. The highest values for this method are 1.00, 98.77%, 98.76%, 98.77% and 25.74% for precision, sensitivity, specificity, accuracy and PSNR, respectively. In the previous studies reviewed, the approaches used achieved precision values of 0.98 [46], 0.96 [47] and 0.99 [48]; sensitivity of 98.83% [46], 95.83% [33] and 97.96% [47]; specificity of 97.9% [45], 98.5% [46], 90.62% [33], 83.65% [47] and 99.70% [48]; and accuracy of 98.3% [45], 98.38% [46], 95.08% [33], 96.28% [47], 99.70% [48] and 90% [49]. The values obtained in the experiment for Method 1 exceed most of these previously reported values for precision, sensitivity and specificity. This shows that the method can provide good data for further classification of the cervical cancer cell type.

This paper studied and reviewed several approaches and analysis methods that have been developed to create an end-to-end framework for cervical cancer diagnosis and classification. All of the strategies proposed have been designed to work with multivariate datasets. The recommended methods have also been expanded to include determining the kind and stage of cervical cancer in addition to diagnosing it. Experiments were conducted at the training, validation, and testing stages. The results show that the highest performance assessment values were obtained with Method 1 (thresholding and tracing region boundaries in a binary image), with peak values of precision 1.0, sensitivity 98.77%, specificity 98.76%, accuracy 98.77% and PSNR 25.74% for a certain type of cell. The average values were precision 0.99, sensitivity 90.71%, F-measure 94.00%, specificity 96.55%, accuracy 92.91% and PSNR 16.22%. The experimental results were then compared with the existing methods from previous studies. They show that the improved method detects the cell nucleus with sensitivity, specificity and precision values that exceed most of those reported previously. According to the publications reviewed, the current techniques have shortcomings that result in poorer classification accuracy for specific cell types. Most existing techniques, on the other hand, work on single or multiple cervical cancer smear images. Furthermore, there is little evidence that these algorithms will work in clinical situations, and there seems to be no evidence that they will perform in clinical settings in developing countries (where 85% of cervical cancer cases occur), since competent cytologists and funds to purchase commercial segmentation software are limited. In conclusion, this research may motivate other researchers in the field to recognize the potential of some of the methodologies investigated, and it gives a solid platform for creating and implementing new approaches.

Author Contributions: Conceptualization, Wan Azani Mustafa; methodology, Wan Azani Mustafa, Nur Ain Alias and Mohd Aminuddin Jamlos; writing, Wan Azani Mustafa, Nur Ain Alias, Ahmed Alkhayyat and Khairul Shakir Ab Rahman; project administration, Wan Azani Mustafa, Ahmed Alkhayyat and Rami Q. Malik. All authors have read and agreed to the published version of the manuscript.

Availability of Data and Materials: The dataset analyzed during the current study was derived from the Herlev Database, which consists of 917 manually isolated Pap smear cells. The dataset is available on the corresponding website http://mde-lab.aegean.gr/index.php/downloads (accessed on 15 March 2022).

Ethics Approval: Not applicable.

Acknowledgement: The authors would like to thank Universiti Malaysia Perlis and the anonymous reviewers for their contributions to improving this paper.

Funding Statement: This research was supported by funding from the Ministry of Higher Education (MoHE) Malaysia under the Fundamental Research Grant Scheme (FRGS/1/2021/SKK0/UNIMAP/02/1).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Thirumurugan, P., Lavanya Devi, N. (2022). A literature survey of automated detection of cervical cancer cell in pap smear images. World Review of Science, Technology and Sustainable Development, 18(1), 74. DOI 10.1504/WRSTSD.2022.119330. [Google Scholar] [CrossRef]

2. Halim, A., Mustafa, W. A., Ahmad, W. K. W., Rahim, H. A., Sakeran, H. (2021). Nucleus detection on pap smear images for cervical cancer diagnosis: A review analysis. Oncologie, 23(1), 73–88. DOI 10.32604/Oncologie.2021.015154. [Google Scholar] [CrossRef]

3. Siegel, R. L., Miller, K. D., Fuchs, H. E., Jemal, A. (2021). Cancer statistics, 2021. CA: A Cancer Journal for Clinicians, 71(1), 7–33. DOI 10.3322/caac.21654. [Google Scholar] [CrossRef]

4. Khamparia, A., Gupta, D., Rodrigues, J. J. P. C., de Albuquerque, V. H. C. (2021). DCAVN: Cervical cancer prediction and classification using deep convolutional and variational autoencoder network. Multimedia Tools and Applications, 80(20), 30399–30415. DOI 10.1007/s11042-020-09607-w. [Google Scholar] [CrossRef]

5. Ramzan, Z., Hassan, M. A., Asif, H. M. S., Farooq, A. (2020). A machine learning-based self-risk assessment technique for cervical cancer. Current Bioinformatics, 16(2), 315–332. DOI 10.2174/1574893615999200608130538. [Google Scholar] [CrossRef]

6. Mustafa, W. A., Halim, A., Rahman, K. S. A. (2020). A narrative review: Classification of pap smear cell image for cervical cancer diagnosis. Oncologie, 22(2), 53–63. DOI 10.32604/oncologie.2020.013660. [Google Scholar] [CrossRef]

7. Negri, G., Macciocu, E., Cepurnaite, R., Kasal, A., Troncone, G. (2021). Non-human papilloma virus associated adenocarcinomas of the cervix uteri. Cytologic features and diagnostic agreement using whole slide digital cytology imaging. Diagnostic Cytopathology, 49(2), 316–321. DOI 10.1002/dc.24652. [Google Scholar] [CrossRef]

8. Soni, V. D., Soni, A. N. (2021). Cervical cancer diagnosis using convolution neural network with conditional random field. Proceedings of the 3rd International Conference on Inventive Research in Computing Applications, ICIRCA 2021, Coimbatore, Tamilnadu, pp. 1749–1754. IEEE. [Google Scholar]

9. Nisar, H., Wai, L. Y., Hong, L. S. (2018). Segmentation of overlapping cells obtained from pap smear test. 2017 IEEE Life Sciences Conference, LSC 2017, Sydney, Australia, pp. 254–257. [Google Scholar]

10. Riries, R., Winarno, Asiah, C., N., Putri, A. Y., Suksmono, A. B. et al. (2021). Cervical single cell of squamous intraepithelial lesion classification using shape features and extreme learning machine. Journal of Physics: Conference Series, 1816(1), 12081. DOI 10.1088/1742-6596/1816/1/012081. [Google Scholar] [CrossRef]

11. Lin, H., Chen, H., Wang, X., Wang, Q., Wang, L. et al. (2021). Dual-path network with synergistic grouping loss and evidence driven risk stratification for whole slide cervical image analysis. Medical Image Analysis, 69(2), 101955. DOI 10.1016/j.media.2021.101955. [Google Scholar] [CrossRef]

12. Wang, C. W., Liou, Y. A., Lin, Y. J., Chang, C. C., Chu, P. H. et al. (2021). Artificial intelligence-assisted fast screening cervical high grade squamous intraepithelial lesion and squamous cell carcinoma diagnosis and treatment planning. Scientific Reports, 11(1), 1–14. DOI 10.1038/s41598-021-95545-y. [Google Scholar] [CrossRef]

13. Chitra, B., Kumar, S. S. (2022). Recent advancement in cervical cancer diagnosis for automated screening: A detailed review. Journal of Ambient Intelligence and Humanized Computing, 13(1), 251–269. DOI 10.1007/s12652-021-02899-2. [Google Scholar] [CrossRef]

14. McDermott, A. K., McDermott, A. J., Osbaldiston, R., Lennon, R. P. (2021). Improving breast and cervical cancer screening compliance through direct physician contact in a military treatment facility: A non-randomized pilot study. Military Medicine, 186(5–6), E480–E485. DOI 10.1093/milmed/usaa436. [Google Scholar] [CrossRef]

15. Mustafa, W. A., Halim, A., Nasrudin, M. W., Rahman, K. S. A. (2022). Cervical cancer situation in Malaysia: A systematic literature review. Biocell, 46(2), 367–381. DOI 10.32604/biocell.2022.016814. [Google Scholar] [CrossRef]

16. Alquran, H., Mustafa, W. A., Qasmieh, I. A., Yacob, Y. M., Alsalatie, M. et al. (2022). Cervical cancer classification using combined machine learning and deep learning approach. Computers, Materials & Continua, 72(3), 5117–5134. DOI 10.32604/cmc.2022.025692. [Google Scholar] [CrossRef]

17. Montminy, E. M., Jang, A., Conner, M., Karlitz, J. J. (2020). Screening for colorectal cancer. Medical Clinics of North America, 104(6), 1023–1036. DOI 10.1016/j.mcna.2020.08.004. [Google Scholar] [CrossRef]

18. Narayan, A. K., Lee, C. I., Lehman, C. D. (2020). Screening for breast cancer. Medical Clinics of North America, 104(6), 1007–1021. DOI 10.1016/j.mcna.2020.08.003. [Google Scholar] [CrossRef]

19. Houston, T. (2020). Screening for lung cancer. Medical Clinics of North America, 104(6), 1037–1050. DOI 10.1016/j.mcna.2020.08.005. [Google Scholar] [CrossRef]

20. Zhao, M., Wang, H., Han, Y., Wang, X., Dai, H. N. et al. (2021). SEENS: Nuclei segmentation in pap smear images with selective edge enhancement. Future Generation Computer Systems, 114(5), 185–194. DOI 10.1016/j.future.2020.07.045. [Google Scholar] [CrossRef]

21. Shanthi, P. B., Modi, S., Hareesha, K. S., Kumar, S. (2021). Classification and comparison of malignancy detection of cervical cells based on nucleus and textural features in microscopic images of uterine cervix. International Journal of Medical Engineering and Informatics, 13(1), 1–13. DOI 10.1504/IJMEI.2021.111861. [Google Scholar] [CrossRef]

22. Liang, Y., Pan, C., Sun, W., Liu, Q., Du, Y. (2021). Global context-aware cervical cell detection with soft scale anchor matching. Computer Methods and Programs in Biomedicine, 204(4–5), 106061. DOI 10.1016/j.cmpb.2021.106061. [Google Scholar] [CrossRef]

23. Almuhaideb, S., Menai, M. E. B. (2016). Impact of pre-processing on medical data classification. Frontiers of Computer Science, 10(6), 1082–1102. DOI 10.1007/s11704-016-5203-5. [Google Scholar] [CrossRef]

24. Idri, A., Benhar, H., Fernández-Alemán, J. L., Kadi, I. (2018). A systematic map of medical data preprocessing in knowledge discovery. Computer Methods and Programs in Biomedicine, 162, 69–85. DOI 10.1016/j.cmpb.2018.05.007. [Google Scholar] [CrossRef]

25. Guo, P., Xue, Z., Mtema, Z., Yeates, K., Ginsburg, O. et al. (2020). Ensemble deep learning for cervix image selection toward improving reliability in automated cervical precancer screening. Diagnostics, 10(7), 451. DOI 10.3390/diagnostics10070451. [Google Scholar] [CrossRef]

26. Mustafa, W. A., Yazid, H., Yaacob, S. (2014). A review: Comparison between different type of filtering methods on the contrast variation retinal images. IEEE International Conference on Control System, Computing and Engineering, Penang, Malaysia, pp. 542–546. [Google Scholar]

27. Jahan, S., Islam, M. D. S., Islam, L., Rashme, T. Y., Prova, A. A. et al. (2021). Automated invasive cervical cancer disease detection at early stage through suitable machine learning model. SN Applied Sciences, 3(10), 199. DOI 10.1007/s42452-021-04786-z. [Google Scholar] [CrossRef]

28. Matias, A. V., Cerentini, A., Macarini, L. A. B., Amorim, J. G. A., Daltoé, F. P. et al. (2021). Segmentation, detection, and classification of cell nuclei on oral cytology samples stained with papanicolaou. SN Computer Science, 2, 1–15. DOI 10.1007/s42979-021-00676-8. [Google Scholar] [CrossRef]

29. Zhu, X., Li, X., Ong, K., Zhang, W., Li, W. et al. (2021). Hybrid AI-assistive diagnostic model permits rapid TBS classification of cervical liquid-based thin-layer cell smears. Nature Communications, 12, 1–12. DOI 10.1038/s41467-021-23913-3. [Google Scholar] [CrossRef]

30. Xue, Z., Guo, P., Desai, K. T., Pal, A., Ajenifuja, K. O. et al. (2021). A deep clustering method for analyzing uterine cervix images across imaging devices. Proceedings-IEEE Symposium on Computer-Based Medical Systems, Aveiro, Portugal, pp. 527–532. [Google Scholar]

31. Elakkiya, R., Subramaniyaswamy, V., Vijayakumar, V., Mahanti, A. (2021). Cervical cancer diagnostics healthcare system using hybrid object detection adversarial networks. IEEE Journal of Biomedical and Health Informatics, 2194(4), 1464–1471. DOI 10.1109/JBHI.2021.3094311. [Google Scholar] [CrossRef]

32. Rahaman, M. M., Li, C., Yao, Y., Kulwa, F., Wu, X. et al. (2021). DeepCervix: A deep learning-based framework for the classification of cervical cells using hybrid deep feature fusion techniques. Computers in Biology and Medicine, 136(1), 104649. DOI 10.1016/j.compbiomed.2021.104649. [Google Scholar] [CrossRef]

33. Cao, L., Yang, J., Rong, Z., Li, L., Xia, B. et al. (2021). A novel attention-guided convolutional network for the detection of abnormal cervical cells in cervical cancer screening. Medical Image Analysis, 73, 102197. DOI 10.1016/j.media.2021.102197. [Google Scholar] [CrossRef]

34. Li, X., Lai, T., Wang, S., Chen, Q., Yang, C. et al. (2019). Feature pyramid network. Proceedings-2019 IEEE International Conference on Parallel and Distributed Processing with Applications, Big Data and Cloud Computing, Sustainable Computing and Communications, Social Computing and Networking, ISPA/BDCloud/SustainCom/SocialCom 2019, Xiamen, China, pp. 1500–1504. [Google Scholar]

35. Wang, C., Zhong, C. (2021). Adaptive feature pyramid networks for object detection. IEEE Access, 9, 107024–107032. DOI 10.1109/ACCESS.2021.3100369. [Google Scholar] [CrossRef]

36. Liang, Y., Tang, Z., Yan, M., Chen, J., Liu, Q. et al. (2021). Comparison detector for cervical cell/clumps detection in the limited data scenario. Neurocomputing, 437(3), 195–205. DOI 10.1016/j.neucom.2021.01.006. [Google Scholar] [CrossRef]

37. Chen, W., Gao, L., Li, X., Shen, W. (2022). Lightweight convolutional neural network with knowledge distillation for cervical cells classification. Biomedical Signal Processing and Control, 71, 103177. DOI 10.1016/j.bspc.2021.103177. [Google Scholar] [CrossRef]

38. Brenes, D., Barberan, C., Hunt, B., Parra, S. G., Salcedo, M. P. et al. (2022). Multi-task network for automated analysis of high-resolution endomicroscopy images to detect cervical precancer and cancer. Computerized Medical Imaging and Graphics, 97(8), 102052. DOI 10.1016/j.compmedimag.2022.102052. [Google Scholar] [CrossRef]

39. Diniz, D. N., Vitor, R. F., Bianchi, A. G. C., Delabrida, S., Carneiro, C. M. et al. (2021). An ensemble method for nuclei detection of overlapping cervical cells. Expert Systems with Applications, 185(3), 115642. DOI 10.1016/j.eswa.2021.115642. [Google Scholar] [CrossRef]

40. Arya, M., Mittal, N., Singh, G. (2020). Three segmentation techniques to predict the dysplasia in cervical cells in the presence of debris. Multimedia Tools and Applications, 79(33–34), 24157–24172. DOI 10.1007/s11042-020-09206-9. [Google Scholar] [CrossRef]

41. Diniz, D. N., Rezende, M. T., Bianchi, A. G. C., Carneiro, C. M., Ushizima, D. M. et al. (2021). A hierarchical feature-based methodology to perform cervical cancer classification. Applied Sciences (Switzerland), 11(9), 4091. DOI 10.3390/app11094091. [Google Scholar] [CrossRef]

42. Pirovano, A., Almeida, L. G., Ladjal, S., Bloch, I., Berlemont, S. (2021). Computer-aided diagnosis tool for cervical cancer screening with weakly supervised localization and detection of abnormalities using adaptable and explainable classifier. Medical Image Analysis, 73(3), 102167. DOI 10.1016/j.media.2021.102167. [Google Scholar] [CrossRef]

43. Jia, D., He, Z., Zhang, C., Yin, W., Wu, N. et al. (2022). Detection of cervical cancer cells in complex situation based on improved YOLOv3 network. Multimedia Tools and Applications, 81(6), 8939–8961. DOI 10.1007/s11042-022-11954-9. [Google Scholar] [CrossRef]

44. Ali, M. M., Ahmed, K., Bui, F. M., Paul, B. K., Ibrahim, S. M. et al. (2021). Machine learning-based statistical analysis for early stage detection of cervical cancer. Computers in Biology and Medicine, 139(6), 104985. DOI 10.1016/j.compbiomed.2021.104985. [Google Scholar] [CrossRef]

45. Zhang, C. W., Jia, D. Y., Wu, N. K., Guo, Z. G., Ge, H. R. (2021). Quantitative detection of cervical cancer based on time series information from smear images. Applied Soft Computing, 112(3), 107791. DOI 10.1016/j.asoc.2021.107791. [Google Scholar] [CrossRef]

46. Chitra, B., Kumar, S. S. (2021). An optimized deep learning model using mutation-based atom search optimization algorithm for cervical cancer detection. Soft Computing, 25(24), 15363–15376. DOI 10.1007/s00500-021-06138-w. [Google Scholar] [CrossRef]

47. Lavanya Devi, N., Thirumurugan, P. (2021). Cervical cancer classification from pap smear images using modified fuzzy C means, PCA, and KNN. IETE Journal of Research, 68(3), 1591–1598. DOI 10.1080/03772063.2021.1997353. [Google Scholar] [CrossRef]

48. Bhatt, A. R., Ganatra, A., Kotecha, K. (2021). Cervical cancer detection in pap smear whole slide images using convNet with transfer learning and progressive resizing. PeerJ Computer Science, 7(1), 1–18. DOI 10.7717/peerj-cs.348. [Google Scholar] [CrossRef]

49. Desiani, A., Erwin, M., Suprihatin, B., Yahdin, S., Putri, A. I. et al. (2021). Bi-path architecture of CNN segmentation and classification method for cervical cancer disorders based on pap-smear images. IAENG International Journal of Computer Science, 48, 1–9. [Google Scholar]

50. Sampaio, A. F., Rosado, L., Vasconcelos, M. J. M. (2021). Towards the mobile detection of cervical lesions: A region-based approach for the analysis of microscopic images. IEEE Access, 9, 152188–152205. DOI 10.1109/ACCESS.2021.3126486. [Google Scholar] [CrossRef]

51. Starmans, M. P. A., Timbergen, M. J. M., Vos, M., Padmos, G. A., Grünhagen, D. J. et al. (2021). The WORC database: MRI and CT scans, segmentations, and clinical labels for 930 patients from six radiomics studies. medRxiv. 1–17. DOI 10.1101/2021.08.19.21262238. [Google Scholar] [CrossRef]

52. Saini, S. K., Bansal, V., Kaur, R., Juneja, M. (2020). ColpoNet for automated cervical cancer screening using colposcopy images. Machine Vision and Applications, 31, 1–15. DOI 10.1007/s00138-020-01063-8. [Google Scholar] [CrossRef]

53. Jia, D., Zhang, C., Wu, N., Guo, Z., Ge, H. (2021). Multi-layer segmentation framework for cell nuclei using improved GVF snake model, watershed, and ellipse fitting. Biomedical Signal Processing and Control, 67(7788), 102516. DOI 10.1016/j.bspc.2021.102516. [Google Scholar] [CrossRef]

54. Pal, A., Xue, Z., Befano, B., Rodriguez, A. C., Long, L. R. et al. (2021). Deep metric learning for cervical image classification. IEEE Access, 9, 53266–53275. DOI 10.1109/ACCESS.2021.3069346. [Google Scholar] [CrossRef]

Copyright © 2021 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.